- Open access

- Published: 22 January 2024

Segment anything in medical images

- Jun Ma 1,2,3

- Yuting He 4

- Feifei Li 1 (ORCID: orcid.org/0000-0002-4004-4134)

- Lin Han 5

- Chenyu You 6 (ORCID: orcid.org/0000-0001-8365-7822)

- Bo Wang 1,2,3,7,8 (ORCID: orcid.org/0000-0002-9620-3413)

Nature Communications volume 15, Article number: 654 (2024)

- Computer science

- Machine learning

- Medical imaging

Medical image segmentation is a critical component in clinical practice, facilitating accurate diagnosis, treatment planning, and disease monitoring. However, existing methods, often tailored to specific modalities or disease types, lack generalizability across the diverse spectrum of medical image segmentation tasks. Here we present MedSAM, a foundation model designed for bridging this gap by enabling universal medical image segmentation. The model is developed on a large-scale medical image dataset with 1,570,263 image-mask pairs, covering 10 imaging modalities and over 30 cancer types. We conduct a comprehensive evaluation on 86 internal validation tasks and 60 external validation tasks, demonstrating better accuracy and robustness than modality-wise specialist models. By delivering accurate and efficient segmentation across a wide spectrum of tasks, MedSAM holds significant potential to expedite the evolution of diagnostic tools and the personalization of treatment plans.

Introduction

Segmentation is a fundamental task in medical imaging analysis, which involves identifying and delineating regions of interest (ROI) in various medical images, such as organs, lesions, and tissues 1 . Accurate segmentation is essential for many clinical applications, including disease diagnosis, treatment planning, and monitoring of disease progression 2 , 3 . Manual segmentation has long been the gold standard for delineating anatomical structures and pathological regions, but this process is time-consuming, labor-intensive, and often requires a high degree of expertise. Semi- or fully automatic segmentation methods can significantly reduce the time and labor required, increase consistency, and enable the analysis of large-scale datasets 4 .

Deep learning-based models have shown great promise in medical image segmentation due to their ability to learn intricate image features and deliver accurate segmentation results across a diverse range of tasks, from segmenting specific anatomical structures to identifying pathological regions 5 . However, a significant limitation of many current medical image segmentation models is their task-specific nature. These models are typically designed and trained for a specific segmentation task, and their performance can degrade significantly when applied to new tasks or different types of imaging data 6 . This lack of generality poses a substantial obstacle to the wider application of these models in clinical practice. In contrast, recent advances in the field of natural image segmentation have witnessed the emergence of segmentation foundation models, such as segment anything model (SAM) 7 and Segment Everything Everywhere with Multi-modal prompts all at once 8 , showcasing remarkable versatility and performance across various segmentation tasks.

There is a growing demand for universal models in medical image segmentation: models that can be trained once and then applied to a wide range of segmentation tasks. Such models would not only exhibit heightened versatility in terms of model capacity but also potentially lead to more consistent results across different tasks. However, the applicability of the segmentation foundation models (e.g., SAM 7 ) to medical image segmentation remains limited due to the significant differences between natural images and medical images. Essentially, SAM is a promptable segmentation method that requires points or bounding boxes to specify the segmentation targets. This resembles conventional interactive segmentation methods 4 , 9 , 10 , 11 but SAM has better generalization ability, while existing deep learning-based interactive segmentation methods focus mainly on limited tasks and image modalities.

Many studies have applied the out-of-the-box SAM models to typical medical image segmentation tasks 12 , 13 , 14 , 15 , 16 , 17 and other challenging scenarios 18 , 19 , 20 , 21 . For example, the concurrent studies 22 , 23 conducted a comprehensive assessment of SAM across a diverse array of medical images, underscoring that SAM achieved satisfactory segmentation outcomes primarily on targets characterized by distinct boundaries. However, the model exhibited substantial limitations in segmenting typical medical targets with weak boundaries or low contrast. In congruence with these observations, we further introduce MedSAM, a refined foundation model that significantly enhances the segmentation performance of SAM on medical images. MedSAM accomplishes this by fine-tuning SAM on an unprecedented dataset with more than one million medical image-mask pairs.

We thoroughly evaluate MedSAM through comprehensive experiments on 86 internal validation tasks and 60 external validation tasks, spanning a variety of anatomical structures, pathological conditions, and medical imaging modalities. Experimental results demonstrate that MedSAM consistently outperforms the state-of-the-art (SOTA) segmentation foundation model 7 , while achieving performance on par with, or even surpassing specialist models 1 , 24 that were trained on the images from the same modality. These results highlight the potential of MedSAM as a new paradigm for versatile medical image segmentation.

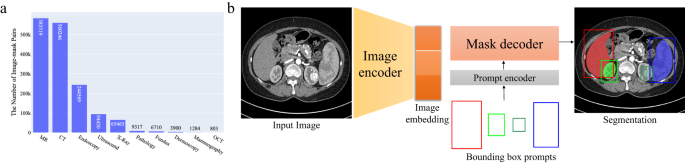

MedSAM: a foundation model for promptable medical image segmentation

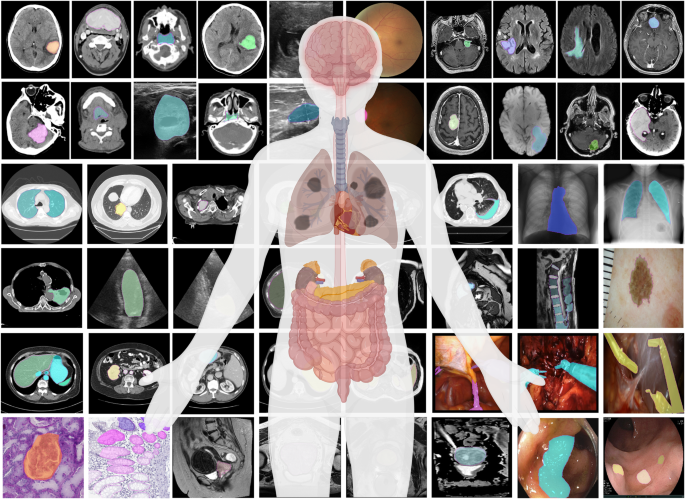

MedSAM aims to fulfill the role of a foundation model for universal medical image segmentation. A crucial aspect of constructing such a model is the capacity to accommodate a wide range of variations in imaging conditions, anatomical structures, and pathological conditions. To address this challenge, we curated a diverse and large-scale medical image segmentation dataset with 1,570,263 medical image-mask pairs, covering 10 imaging modalities, over 30 cancer types, and a multitude of imaging protocols (Fig. 1 and Supplementary Tables 1 – 4) . This large-scale dataset allows MedSAM to learn a rich representation of medical images, capturing a broad spectrum of anatomies and lesions across different modalities. Figure 2 a provides an overview of the distribution of images across different medical imaging modalities in the dataset, ranked by their total numbers. It is evident that computed tomography (CT), magnetic resonance imaging (MRI), and endoscopy are the dominant modalities, reflecting their ubiquity in clinical practice. CT and MRI images provide detailed cross-sectional views of 3D body structures, making them indispensable for non-invasive diagnostic imaging. Endoscopy, albeit more invasive, enables direct visual inspection of organ interiors, proving invaluable for diagnosing gastrointestinal and urological conditions. Despite the prevalence of these modalities, others such as ultrasound, pathology, fundus, dermoscopy, mammography, and optical coherence tomography (OCT) also hold significant roles in clinical practice. The diversity of these modalities and their corresponding segmentation targets underscores the necessity for universal and effective segmentation models capable of handling the unique characteristics associated with each modality.

The dataset covers a variety of anatomical structures, pathological conditions, and medical imaging modalities. The magenta contours and mask overlays denote the expert annotations and MedSAM segmentation results, respectively.

a The number of medical image-mask pairs in each modality. b MedSAM is a promptable segmentation method where users can use bounding boxes to specify the segmentation targets. Source data are provided as a Source Data file.

Another critical consideration is the selection of the appropriate segmentation prompt and network architecture. While the concept of fully automatic segmentation foundation models is enticing, it is fraught with challenges that make it impractical. One of the primary challenges is the variability inherent in segmentation tasks. For example, given a liver cancer CT image, the segmentation task can vary depending on the specific clinical scenario. One clinician might be interested in segmenting the liver tumor, while another might need to segment the entire liver and surrounding organs. Additionally, the variability in imaging modalities presents another challenge. Modalities such as CT and MR generate 3D images, whereas others like X-ray and ultrasound yield 2D images. These variabilities in task definition and imaging modalities complicate the design of a fully automatic model capable of accurately anticipating and addressing the diverse requirements of different users.

Considering these challenges, we argue that a more practical approach is to develop a promptable 2D segmentation model. The model can be easily adapted to specific tasks based on user-provided prompts, offering enhanced flexibility and adaptability. It is also able to handle both 2D and 3D images by processing 3D images as a series of 2D slices. Typical user prompts include points and bounding boxes and we show some segmentation examples with the different prompts in Supplementary Fig. 1 . It can be found that bounding boxes provide a more unambiguous spatial context for the region of interest, enabling the algorithm to more precisely discern the target area. This stands in contrast to point-based prompts, which can introduce ambiguity, particularly when proximate structures resemble each other. Moreover, drawing a bounding box is efficient, especially in scenarios involving multi-object segmentation. We follow the network architecture in SAM 7 , including an image encoder, a prompt encoder, and a mask decoder (Fig. 2 b). The image encoder 25 maps the input image into a high-dimensional image embedding space. The prompt encoder transforms the user-drawn bounding boxes into feature representations via positional encoding 26 . Finally, the mask decoder fuses the image embedding and prompt features using cross-attention 27 (Methods).

Quantitative and qualitative analysis

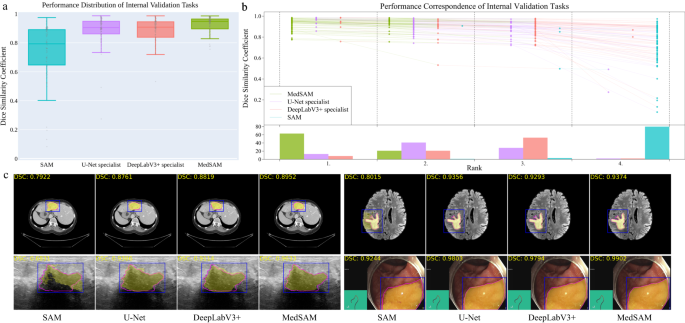

We evaluated MedSAM through both internal validation and external validation. Specifically, we compared it to the SOTA segmentation foundation model SAM 7 as well as modality-wise specialist U-Net 1 and DeepLabV3+ 24 models. Each specialized model was trained on images from the corresponding modality, resulting in 10 dedicated specialist models for each method. During inference, these specialist models were used to segment the images from corresponding modalities, while SAM and MedSAM were employed for segmenting images across all modalities (Methods). The internal validation contained 86 segmentation tasks (Supplementary Tables 5 – 8 and Fig. 2) , and Fig. 3 a shows the median dice similarity coefficient (DSC) score of these tasks for the four methods. Overall, SAM obtained the lowest performance on most segmentation tasks although it performed promisingly on some RGB image segmentation tasks, such as polyp (DSC: 91.3%, interquartile range (IQR): 81.2–95.1%) segmentation in endoscopy images. This could be attributed to SAM’s training on a variety of RGB images, and the fact that many targets in these images are relatively straightforward to segment due to their distinct appearances. The other three models outperformed SAM by a large margin, and MedSAM has a narrower distribution of DSC scores across the 86 internal validation tasks than the two groups of specialist models, reflecting the robustness of MedSAM across different tasks. We further connected the DSC scores corresponding to the same task of the four models with the podium plot in Fig. 3 b, which is complementary to the box plot. In the upper part, each colored dot denotes the median DSC achieved with the respective method on one task. Dots corresponding to identical tasks are connected by a line. In the lower part, the frequency of achieved ranks for each method is presented with bar charts. MedSAM ranked first on most tasks, surpassing the U-Net and DeepLabV3+ specialist models, which most frequently ranked second and third, respectively. In contrast, SAM ranked last in almost all tasks. Figure 3 c (and Supplementary Fig. 9) visualizes some randomly selected segmentation examples where MedSAM obtained a median DSC score, including liver tumor in CT images, brain tumor in MR images, breast tumor in ultrasound images, and polyp in endoscopy images. SAM struggles with targets that have weak boundaries, which makes it prone to under- or over-segmentation errors. In contrast, MedSAM can accurately segment a wide range of targets across various imaging conditions, achieving performance comparable to or even better than the specialist U-Net and DeepLabV3+ models.
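For readers who want to reproduce the metric, the DSC and the median/IQR summary reported above can be computed along the lines of the following minimal sketch. The function names `dice_score` and `median_iqr`, and the convention of returning 1.0 when both masks are empty, are illustrative assumptions and are not taken from the MedSAM code.

```python
import numpy as np


def dice_score(pred: np.ndarray, gt: np.ndarray) -> float:
    """Dice similarity coefficient between two binary masks, in [0, 1]."""
    pred = pred.astype(bool)
    gt = gt.astype(bool)
    denom = pred.sum() + gt.sum()
    if denom == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return float(2.0 * np.logical_and(pred, gt).sum() / denom)


def median_iqr(scores):
    """Median and (25th, 75th) percentiles of a list of per-case scores."""
    q25, q50, q75 = np.percentile(scores, [25, 50, 75])
    return float(q50), (float(q25), float(q75))


# Toy example: a 4x4 ground-truth square and a prediction shifted by one column.
gt = np.zeros((8, 8), dtype=np.uint8)
gt[2:6, 2:6] = 1
pred = np.zeros((8, 8), dtype=np.uint8)
pred[2:6, 3:7] = 1
print(dice_score(pred, gt))  # overlap is 12 pixels: 2 * 12 / (16 + 16) = 0.75
```

In practice, `dice_score` would be evaluated per case and `median_iqr` applied to the per-case scores of each task.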

a Performance distribution of 86 internal validation tasks in terms of median dice similarity coefficient (DSC) score. The center line within the box represents the median value, with the bottom and top bounds of the box delineating the 25th and 75th percentiles, respectively. Whiskers extend to 1.5 times the interquartile range. Up-triangles denote the minima and down-triangles denote the maxima. b Podium plots for visualizing the performance correspondence of 86 internal validation tasks. Upper part: each colored dot denotes the median DSC achieved with the respective method on one task. Dots corresponding to identical tasks are connected by a line. Lower part: bar charts represent the frequency of achieved ranks for each method. MedSAM ranks in the first place on most tasks. c Visualized segmentation examples on the internal validation set. The four examples are liver cancer, brain cancer, breast cancer, and polyp in computed tomography (CT), magnetic resonance imaging (MRI), ultrasound, and endoscopy images, respectively. Blue: bounding box prompts; yellow: segmentation results; magenta: expert annotations. Source data are provided as a Source Data file.

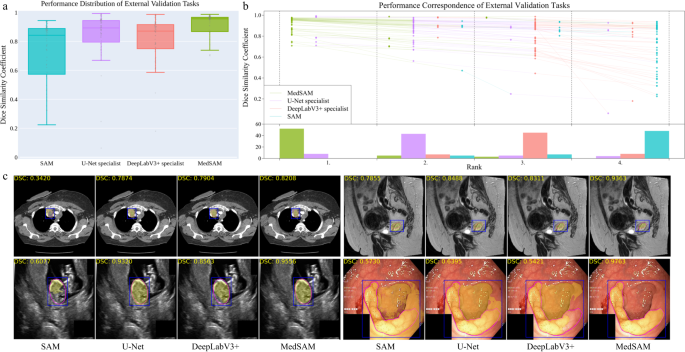

The external validation included 60 segmentation tasks, all of which either were from new datasets or involved unseen segmentation targets (Supplementary Tables 9 – 11 and Figs. 10 – 12) . Figure 4 a, b show the task-wise median DSC score distribution and their correspondence of the 60 tasks, respectively. Although SAM continued exhibiting lower performance on most CT and MR segmentation tasks, the specialist models no longer consistently outperformed SAM (e.g., right kidney segmentation in MR T1-weighted images: 90.1%, 85.3%, 86.4% for SAM, U-Net, and DeepLabV3+, respectively). This indicates the limited generalization ability of such specialist models on unseen targets. In contrast, MedSAM consistently delivers superior performance. For example, MedSAM obtained a median DSC score of 87.8% (IQR: 85.0–91.4%) on the nasopharynx cancer segmentation task, demonstrating 52.3%, 15.5%, and 22.7% improvements over SAM, the specialist U-Net, and DeepLabV3+, respectively. Significantly, MedSAM also achieved better performance in some unseen modalities (e.g., abdomen T1 Inphase and Outphase), surpassing SAM and the specialist models with improvements of up to 10%. Figure 4 c presents four randomly selected segmentation examples for qualitative evaluation, revealing that while all the methods have the ability to handle simple segmentation targets, MedSAM performs better at segmenting challenging targets with indistinguishable boundaries, such as cervical cancer in MR images (more examples are presented in Supplementary Fig. 13) . Furthermore, we evaluated MedSAM on the multiple myeloma plasma cell dataset, which represents a distinct modality and task in contrast to all previously leveraged validation tasks. Although this task had never been seen during training, MedSAM still exhibited superior performance compared to SAM (Supplementary Fig. 14) , highlighting its remarkable generalization ability.

a Performance distribution of 60 external validation tasks in terms of median dice similarity coefficient (DSC) score. The center line within the box represents the median value, with the bottom and top bounds of the box delineating the 25th and 75th percentiles, respectively. Whiskers extend to 1.5 times the interquartile range. Up-triangles denote the minima and down-triangles denote the maxima. b Podium plots for visualizing the performance correspondence of 60 external validation tasks. Upper part: each colored dot denotes the median DSC achieved with the respective method on one task. Dots corresponding to identical tasks are connected by a line. Lower part: bar charts represent the frequency of achieved ranks for each method. MedSAM ranks in the first place on most tasks. c Visualized segmentation examples on the external validation set. The four examples are the lymph node, cervical cancer, fetal head, and polyp in CT, MR, ultrasound, and endoscopy images, respectively. Source data are provided as a Source Data file.

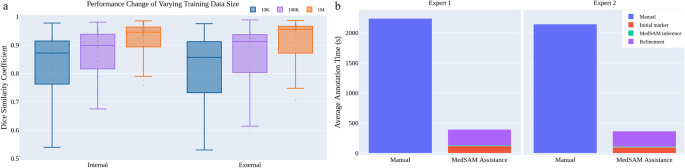

The effect of training dataset size

We also investigated the effect of varying dataset sizes on MedSAM’s performance because the training dataset size has been proven to be pivotal in model performance 28 . We additionally trained MedSAM on two smaller datasets of 10,000 (10K) and 100,000 (100K) images and compared their performance with that of the default MedSAM model. The 10K and 100K training images were uniformly sampled from the whole training set to maintain data diversity. As shown in Fig. 5 a and Supplementary Tables 12 – 14, the performance adhered to the scaling rule, where increasing the number of training images significantly improved the performance in both internal and external validation sets.

a Scaling up the training image size to one million can significantly improve the model performance on both internal and external validation sets. b MedSAM can be used to substantially reduce the annotation time cost. Source data are provided as a Source Data file.

MedSAM can improve the annotation efficiency

Furthermore, we conducted a human annotation study to assess the time cost of two pipelines (Methods). For the first pipeline, two human experts manually annotated 3D adrenal tumors in a slice-by-slice way. For the second pipeline, the experts first drew the long and short tumor axes with the linear marker (initial marker) every 3–10 slices, which is a common practice in tumor response evaluation. Then, MedSAM was used to segment the tumors based on these sparse linear annotations. Finally, the experts manually revised the segmentation results until they were satisfied. We quantitatively compared the annotation time cost between the two pipelines (Fig. 5 b). The results demonstrate that with the assistance of MedSAM, the annotation time is reduced by 82.37% and 82.95% for the two experts, respectively.

We introduce MedSAM, a deep learning-powered foundation model designed for the segmentation of a wide array of anatomical structures and lesions across diverse medical imaging modalities. MedSAM is trained on a meticulously assembled large-scale dataset comprised of over one million medical image-mask pairs. Its promptable configuration strikes an optimal balance between automation and customization, rendering MedSAM a versatile tool for universal medical image segmentation.

Through comprehensive evaluations encompassing both internal and external validation, MedSAM has demonstrated substantial capabilities in segmenting a diverse array of targets and robust generalization abilities to manage new data and tasks. Its performance not only significantly exceeds that of the existing state-of-the-art segmentation foundation model, but also rivals or even surpasses specialist models. By providing precise delineation of anatomical structures and pathological regions, MedSAM facilitates the computation of various quantitative measures that serve as biomarkers. For instance, in the field of oncology, MedSAM could play a crucial role in accelerating the 3D tumor annotation process, enabling subsequent calculations of tumor volume, which is a critical biomarker 29 for assessing disease progression and response to treatment. Additionally, MedSAM provides a successful paradigm for adapting natural image foundation models to new domains, which can be further extended to biological image segmentation 30 , such as cell segmentation in light microscopy images 31 and organelle segmentation in electron microscopy images 32 .

While MedSAM boasts strong capabilities, it does present certain limitations. One such limitation is the modality imbalance in the training set, with CT, MRI, and endoscopy images dominating the dataset. This could potentially impact the model’s performance on less-represented modalities, such as mammography. Another limitation is its difficulty in the segmentation of vessel-like branching structures because the bounding box prompt can be ambiguous in this setting. For example, arteries and veins share the same bounding box in eye fundus images. However, these limitations do not diminish MedSAM’s utility. Since MedSAM has learned rich and representative medical image features from the large-scale training set, it can be fine-tuned to effectively segment new tasks from less-represented modalities or intricate structures like vessels.

In conclusion, this study highlights the feasibility of constructing a single foundation model capable of managing a multitude of segmentation tasks, thereby eliminating the need for task-specific models. MedSAM, as the inaugural foundation model in medical image segmentation, holds great potential to accelerate the advancement of new diagnostic and therapeutic tools, and ultimately contribute to improved patient care 33 .

Dataset curation and pre-processing

We curated a comprehensive dataset by collating images from publicly available medical image segmentation datasets, which were obtained from various sources across the internet, including the Cancer Imaging Archive (TCIA) 34 , Kaggle, Grand-Challenge, Scientific Data, CodaLab, and segmentation challenges in the Medical Image Computing and Computer Assisted Intervention Society (MICCAI). All the datasets provided segmentation annotations by human experts, which have been widely used in existing literature (Supplementary Table 1 – 4) . We incorporated these annotations directly for both model development and validation.

The original 3D datasets consisted of computed tomography (CT) and magnetic resonance (MR) images in DICOM, nrrd, or mhd formats. To ensure uniformity and compatibility with developing medical image deep learning models, we converted the images to the widely used NIfTI format. Additionally, grayscale images (such as X-ray and ultrasound) as well as RGB images (including endoscopy, dermoscopy, fundus, and pathology images) were converted to the png format. Several exclusion criteria were applied to improve the dataset quality and consistency: we excluded incomplete images, segmentation targets with branching structures, inaccurate annotations, and tiny volumes. Notably, image intensities varied significantly across different modalities. For instance, CT images had intensity values ranging from -2000 to 2000, while MR images exhibited a range of 0 to 3000. In endoscopy and ultrasound images, intensity values typically spanned from 0 to 255. To facilitate stable training, we performed intensity normalization across all images, ensuring they shared the same intensity range.

For CT images, we initially normalized the Hounsfield units using typical window width and level values. The employed window width and level values for soft tissues, lung, and brain are (W:400, L:40), (W:1500, L:-160), and (W:80, L:40), respectively. Subsequently, the intensity values were rescaled to the range of [0, 255]. For MR, X-ray, ultrasound, mammography, and optical coherence tomography (OCT) images, we clipped the intensity values to the range between the 0.5th and 99.5th percentiles before rescaling them to the range of [0, 255]. Regarding RGB images (e.g., endoscopy, dermoscopy, fundus, and pathology images), if they were already within the expected intensity range of [0, 255], their intensities remained unchanged. However, if they fell outside this range, we utilized max-min normalization to rescale the intensity values to [0, 255]. Finally, to meet the model’s input requirements, all images were resized to a uniform size of 1024 × 1024 × 3. In the case of whole-slide pathology images, patches were extracted using a sliding window approach without overlaps. The patches located on boundaries were padded to this size with 0. As for 3D CT and MR images, each 2D slice was resized to 1024 × 1024, and the channel was repeated three times to maintain consistency. The remaining 2D images were directly resized to 1024 × 1024 × 3. Bi-cubic interpolation was used for resizing images, while nearest-neighbor interpolation was applied for resizing masks to preserve their precise boundaries and avoid introducing unwanted artifacts. These standardization procedures ensured uniformity and compatibility across all images and facilitated seamless integration into the subsequent stages of the model training and evaluation pipeline.
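The intensity normalization steps described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the function names, the truncating cast to `uint8`, and the fallback for constant images are assumptions, and resizing (bi-cubic for images, nearest-neighbor for masks) is omitted because it depends on the image library used.

```python
import numpy as np


def window_ct(hu: np.ndarray, width: float, level: float) -> np.ndarray:
    """Clip Hounsfield units to a window and rescale to [0, 255]."""
    lower, upper = level - width / 2.0, level + width / 2.0
    clipped = np.clip(hu.astype(np.float64), lower, upper)
    return ((clipped - lower) / (upper - lower) * 255.0).astype(np.uint8)


def percentile_normalize(img: np.ndarray, low: float = 0.5, high: float = 99.5) -> np.ndarray:
    """Clip to the [low, high] percentiles and rescale to [0, 255]."""
    lo, hi = np.percentile(img, [low, high])
    if hi <= lo:
        # Assumed fallback: a constant image carries no contrast to rescale.
        return np.zeros(img.shape, dtype=np.uint8)
    clipped = np.clip(img.astype(np.float64), lo, hi)
    return ((clipped - lo) / (hi - lo) * 255.0).astype(np.uint8)


def to_three_channels(slice_2d: np.ndarray) -> np.ndarray:
    """Repeat a grayscale slice three times along a trailing channel axis."""
    return np.repeat(slice_2d[..., None], 3, axis=-1)


# Soft-tissue window from the text (W:400, L:40), i.e. the HU range [-160, 240].
hu = np.array([[-1000.0, -160.0], [40.0, 240.0]])
print(window_ct(hu, width=400, level=40))
```

Values below the window map to 0 and values above it map to 255, so air and dense bone no longer dominate the intensity range of a soft-tissue slice.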

Network architecture

The network utilized in this study was built on transformer architecture 27 , which has demonstrated remarkable effectiveness in various domains such as natural language processing and image recognition tasks 25 . Specifically, the network incorporated a vision transformer (ViT)-based image encoder responsible for extracting image features, a prompt encoder for integrating user interactions (bounding boxes), and a mask decoder that generated segmentation results and confidence scores using the image embedding, prompt embedding, and output token.

To strike a balance between segmentation performance and computational efficiency, we employed the base ViT model as the image encoder since extensive evaluation indicated that larger ViT models, such as ViT Large and ViT Huge, offered only marginal improvements in accuracy 7 while significantly increasing computational demands. Specifically, the base ViT model consists of 12 transformer layers 27 , each comprising a multi-head self-attention block and a Multilayer Perceptron (MLP) block incorporating layer normalization 35 . Pre-training was performed using masked auto-encoder modeling 36 , followed by fully supervised training on the SAM dataset 7 . The input image (1024 × 1024 × 3) was reshaped into a sequence of flattened 2D patches with the size 16 × 16 × 3, yielding a feature size in image embedding of 64 × 64 after passing through the image encoder, which corresponds to a 16× downscaling. The prompt encoder mapped the corner points of the bounding box prompt to 256-dimensional vectorial embeddings 26 . In particular, each bounding box was represented by an embedding pair of the top-left corner point and the bottom-right corner point. To facilitate real-time user interactions once the image embedding had been computed, a lightweight mask decoder architecture was employed. It consists of two transformer layers 27 for fusing the image embedding and prompt encoding, and two transposed convolutional layers to enhance the embedding resolution to 256 × 256. Subsequently, the embedding underwent sigmoid activation, followed by bi-linear interpolations to match the input size.
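The shape arithmetic in this paragraph can be checked with a short NumPy sketch. It only illustrates the tensor sizes; it does not implement the encoder or decoder. The constant names are illustrative, and the assumption that each transposed convolution doubles the resolution is stated in the code.

```python
import numpy as np

IMAGE_SIZE = 1024  # input height and width, from the text
PATCH_SIZE = 16    # patch side length, from the text
EMBED_DIM = 256    # prompt embedding dimension, from the text

# Stand-in image; real inputs would be pre-processed medical images.
image = np.zeros((IMAGE_SIZE, IMAGE_SIZE, 3), dtype=np.float32)

grid = IMAGE_SIZE // PATCH_SIZE  # 64 patches per side
# Split height and width into (grid, patch) blocks, then flatten each patch.
patches = image.reshape(grid, PATCH_SIZE, grid, PATCH_SIZE, 3)
patches = patches.transpose(0, 2, 1, 3, 4).reshape(grid * grid, PATCH_SIZE * PATCH_SIZE * 3)
print(patches.shape)  # (4096, 768): 64 x 64 patches, each 16 * 16 * 3 values

# A bounding box (x_min, y_min, x_max, y_max) is viewed as two corner points;
# each corner would be mapped to an EMBED_DIM-dimensional vector.
box = np.array([100.0, 150.0, 400.0, 500.0])
corners = box.reshape(2, 2)  # [[x_min, y_min], [x_max, y_max]]

# Assumption: each of the two transposed convolutions doubles the resolution.
decoder_size = grid * 2 * 2
print(decoder_size)  # 256
```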

Training protocol and experimental setting

During data pre-processing, we obtained 1,570,263 medical image-mask pairs for model development and validation. For internal validation, we randomly split the dataset into 80%, 10%, and 10% as training, tuning, and validation, respectively. Specifically, for modalities where within-scan continuity exists, such as CT and MRI, and modalities where continuity exists between consecutive frames, we performed the data splitting at the 3D scan and the video level respectively, by which any potential data leak was prevented. For pathology images, recognizing the significance of slide-level cohesiveness, we first separated the whole-slide images into distinct slide-based sets. Then, each slide was divided into small patches with a fixed size of 1024 × 1024. This setup allowed us to monitor the model’s performance on the tuning set and adjust its parameters during training to prevent overfitting. For the external validation, all datasets were held out and did not appear during model training. These datasets provide a stringent test of the model’s generalization ability, as they represent new patients, imaging conditions, and potentially new segmentation tasks that the model has not encountered before. By evaluating the performance of MedSAM on these unseen datasets, we can gain a realistic understanding of how MedSAM is likely to perform in real-world clinical settings, where it will need to handle a wide range of variability and unpredictability in the data. The training and validation are independent.
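Splitting at the scan or video level, rather than at the slice or frame level, can be sketched as below. This is an illustration of the idea only: the helper `split_by_group`, the fixed seed, and the toy scan identifiers are hypothetical, and the fractions are applied to groups, so the resulting image counts are only approximately 80/10/10 when groups differ in size.

```python
import random
from collections import defaultdict


def split_by_group(samples, seed=0, fractions=(0.8, 0.1, 0.1)):
    """Split (group_id, item) pairs so that each group lands in exactly one subset."""
    groups = defaultdict(list)
    for group_id, item in samples:
        groups[group_id].append(item)

    group_ids = sorted(groups)
    random.Random(seed).shuffle(group_ids)

    n_train = int(fractions[0] * len(group_ids))
    n_tune = int(fractions[1] * len(group_ids))
    parts = {
        "train": group_ids[:n_train],
        "tune": group_ids[n_train:n_train + n_tune],
        "validation": group_ids[n_train + n_tune:],
    }
    return {name: [(g, item) for g in ids for item in groups[g]] for name, ids in parts.items()}


# Hypothetical data: 20 scans with 5 slices each.
samples = [(f"scan_{s:02d}", f"slice_{k}") for s in range(20) for k in range(5)]
splits = split_by_group(samples)
print({name: len(items) for name, items in splits.items()})
```

Because all slices of a scan share one group identifier, neighboring slices of the same patient can never appear in both the training and validation subsets.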

The model was initialized from the pre-trained SAM model with the ViT-Base image encoder. We fixed the prompt encoder since it can already encode the bounding box prompt. All the trainable parameters in the image encoder and mask decoder were updated during training. Specifically, the numbers of trainable parameters for the image encoder and mask decoder are 89,670,912 and 4,058,340, respectively. The bounding box prompt was simulated from the expert annotations with a random perturbation of 0–20 pixels. The loss function is the unweighted sum of dice loss and cross-entropy loss, which has been proven to be robust in various segmentation tasks 1 . The network was optimized by the AdamW 37 optimizer ( β 1 = 0.9, β 2 = 0.999) with an initial learning rate of 1e-4 and a weight decay of 0.01. The global batch size was 160 and data augmentation was not used. The model was trained on 20 A100 (80G) GPUs for 150 epochs and the last checkpoint was selected as the final model.
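One way to simulate a bounding box prompt from an expert mask is sketched below. The text only states that a random perturbation of 0–20 pixels was used; moving each side outward by an independent random integer and clamping to the image bounds are assumptions of this sketch, and the function name `simulate_box_prompt` is hypothetical.

```python
import numpy as np


def simulate_box_prompt(mask, max_shift=20, rng=None):
    """Return (x_min, y_min, x_max, y_max) around a binary mask, randomly enlarged."""
    rng = np.random.default_rng() if rng is None else rng
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask is empty; no bounding box can be derived")
    height, width = mask.shape
    # Assumption: each side moves outward by an independent integer in [0, max_shift].
    dx0, dy0, dx1, dy1 = rng.integers(0, max_shift + 1, size=4)
    x_min = max(0, int(xs.min()) - int(dx0))
    y_min = max(0, int(ys.min()) - int(dy0))
    x_max = min(width - 1, int(xs.max()) + int(dx1))
    y_max = min(height - 1, int(ys.max()) + int(dy1))
    return x_min, y_min, x_max, y_max


# Toy mask whose tight bounding box is (200, 300, 499, 399).
mask = np.zeros((1024, 1024), dtype=np.uint8)
mask[300:400, 200:500] = 1
box = simulate_box_prompt(mask, rng=np.random.default_rng(0))
print(box)
```

Perturbing the box during training mimics the imprecision of boxes drawn by hand, so the model does not come to rely on perfectly tight prompts.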

Furthermore, to thoroughly evaluate the performance of MedSAM, we conducted comparative analyses against both the state-of-the-art segmentation foundation model SAM 7 and specialist models (i.e., U-Net 1 and DeepLabV3+ 24 ). The training images contained 10 modalities: CT, MR, chest X-ray (CXR), dermoscopy, endoscopy, ultrasound, mammography, OCT, and pathology, and we trained the U-Net and DeepLabV3+ specialist models for each modality. There were 20 specialist models in total, and the number of corresponding training images is presented in Supplementary Table 5 . We employed nnU-Net to conduct all U-Net experiments, as it automatically configures the network architecture based on the dataset properties. To incorporate the bounding box prompt into the model, we transformed the bounding box into a binary mask and concatenated it with the image as the model input. This functionality is natively supported by nnU-Net in its cascaded pipeline, which has demonstrated increased performance in many segmentation tasks by using the binary mask as an additional channel to specify the target location. The training settings followed the default configurations of 2D nnU-Net. Each model was trained on one A100 GPU for 1000 epochs and the last checkpoint was used as the final model. The DeepLabV3+ specialist models used ResNet50 38 as the encoder. Similar to ref. 3 , the input images were resized to 224 × 224 × 3. The bounding box was transformed into a binary mask as an additional input channel to provide the object location prompt. Segmentation Models Pytorch (0.3.3) 39 was used to perform training and inference for all the modality-wise specialist DeepLabV3+ models. Each modality-wise model was trained on one A100 GPU for 500 epochs and the last checkpoint was used as the final model. During the inference phase, SAM and MedSAM were used to perform segmentation across all modalities with a single model. In contrast, the U-Net and DeepLabV3+ specialist models were each used to segment only their corresponding modality.
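The way a bounding box prompt can be passed to a prompt-free network as an extra input channel can be sketched as follows. This is only an illustration: the helper names `box_to_mask` and `add_box_channel`, the channel-first nested-list image layout, and the inclusive box coordinates are assumptions, and the actual nnU-Net and Segmentation Models Pytorch data pipelines are not reproduced here.

```python
def box_to_mask(box, height, width):
    """Rasterize an inclusive (x_min, y_min, x_max, y_max) box into a 0/1 mask."""
    x_min, y_min, x_max, y_max = box
    return [
        [1 if (x_min <= x <= x_max and y_min <= y <= y_max) else 0
         for x in range(width)]
        for y in range(height)
    ]


def add_box_channel(image_channels, box):
    """Append the box mask as an additional channel.

    `image_channels` is a channel-first list: channels x rows x columns
    (hypothetical layout chosen for this sketch).
    """
    height = len(image_channels[0])
    width = len(image_channels[0][0])
    return image_channels + [box_to_mask(box, height, width)]
```

A three-channel image therefore becomes a four-channel input, with the last channel marking where the target is expected to be.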

A task-specific segmentation model might outperform a modality-based one for certain applications. Since U-Net obtained better performance than DeepLabV3+ on most tasks, we further conducted a comparison study by training task-specific U-Net models on four representative tasks, including liver cancer segmentation in CT scans, abdominal organ segmentation in MR scans, nerve cancer segmentation in ultrasound, and polyp segmentation in endoscopy images. The experiments included both internal validation and external validation. For internal validation, we adhered to the default data splits, using them to train the task-specific U-Net models and then evaluate their performance on the corresponding validation set. For external validation, the trained U-Net models were evaluated on new datasets from the same modality or segmentation targets. In all these experiments, MedSAM was directly applied to the validation sets without additional fine-tuning. As shown in Supplementary Fig. 15 , while task-specific U-Net models often achieved great results on internal validation sets, their performance diminished significantly for external sets. In contrast, MedSAM maintained consistent performance across both internal and external validation sets. This underscores MedSAM’s superior generalization ability, making it a versatile tool in a variety of medical image segmentation tasks.

Loss function

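We used the unweighted sum of cross-entropy loss and dice loss 40 as the final loss function since it has been proven to be robust across different medical image segmentation tasks 41 . Specifically, let \(S\) and \(G\) denote the segmentation result and ground truth, respectively, and let \({s}_{i}\) and \({g}_{i}\) denote the predicted segmentation and ground truth of voxel \(i\) , respectively. \(N\) is the number of voxels in the image \(I\) . Binary cross-entropy loss is defined by

\[{L}_{BCE}=-\frac{1}{N}\sum_{i=1}^{N}\left[{g}_{i}\log {s}_{i}+(1-{g}_{i})\log (1-{s}_{i})\right],\]

and dice loss is defined by

\[{L}_{Dice}=1-\frac{2\sum_{i=1}^{N}{g}_{i}{s}_{i}}{\sum_{i=1}^{N}{({g}_{i})}^{2}+\sum_{i=1}^{N}{({s}_{i})}^{2}}.\]

The final loss \(L\) is defined by

\[L={L}_{BCE}+{L}_{Dice}.\]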
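As a worked illustration of the combined loss, the sketch below evaluates both terms on flat lists of per-voxel probabilities and labels. It assumes the standard binary cross-entropy and the squared-denominator dice formulation used in V-Net; the function names and the small `eps` stabilizer are hypothetical additions for this example and are not taken from the released training code.

```python
import math


def bce_loss(s, g, eps=1e-7):
    """Mean binary cross-entropy between probabilities s and 0/1 labels g."""
    total = 0.0
    for s_i, g_i in zip(s, g):
        s_i = min(max(s_i, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += g_i * math.log(s_i) + (1 - g_i) * math.log(1.0 - s_i)
    return -total / len(s)


def dice_loss(s, g, eps=1e-7):
    """One minus the soft dice score, with squared terms in the denominator."""
    intersection = sum(s_i * g_i for s_i, g_i in zip(s, g))
    denominator = sum(s_i ** 2 for s_i in s) + sum(g_i ** 2 for g_i in g)
    return 1.0 - 2.0 * intersection / (denominator + eps)


def total_loss(s, g):
    """Unweighted sum of the two terms."""
    return bce_loss(s, g) + dice_loss(s, g)
```

For a maximally uncertain prediction of 0.5 at every voxel against labels `[1, 0, 1, 0]`, the cross-entropy term is ln 2 and the dice term is 1/3, so the two terms contribute on a comparable scale without any weighting.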

Human annotation study

The objective of the human annotation study was to quantitatively evaluate how MedSAM can reduce the annotation time cost. Specifically, we used the recent adrenocortical carcinoma CT dataset 34 , 42 , 43 , where the segmentation target, adrenal tumor, was neither part of the training nor of the existing validation sets. We randomly sampled 10 cases, comprising a total of 733 tumor slices requiring annotations. Two human experts participated in this study, both of whom are experienced radiologists with 8 and 6 years of clinical practice in abdominal diseases, respectively. Each expert generated two groups of annotations, one with the assistance of MedSAM and one without.

In the first group, the experts manually annotated the 3D adrenal tumor in a slice-by-slice manner. Annotations by the two experts were conducted independently, with no collaborative discussions, and the time taken for each case was recorded. In the second group, annotations were generated after a one-week cooling period. The experts independently drew the long and short tumor axes as initial markers, which is a common practice in tumor response evaluation. This process was executed every 3-10 slices from the top slice to the bottom slice of the tumor. Then, we applied MedSAM to segment the tumors based on these sparse linear annotations in three steps.

Step 1. For each annotated slice, a rectangular binary mask that completely covers the linear label was generated.

Step 2. For the unlabeled slices, the rectangular binary masks were created through interpolation of the surrounding labeled slices.

Step 3. We transformed the binary masks into bounding boxes and then fed them along with the images into MedSAM to generate segmentation results.
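Steps 1 and 2 above can be sketched as follows. This is a hedged illustration, not the authors' code: the function names are hypothetical, boxes are handled directly as `(x_min, y_min, x_max, y_max)` tuples rather than as rectangular masks, and linear interpolation of the box corners between neighboring labeled slices is an assumption, since the text only says "interpolation". Step 3 (running MedSAM on each image and box) is not shown.

```python
def box_from_axes(long_axis, short_axis):
    """Smallest axis-aligned box covering both drawn axes.

    Each axis is a pair of (x, y) endpoints, e.g. ((x1, y1), (x2, y2)).
    """
    points = list(long_axis) + list(short_axis)
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (min(xs), min(ys), max(xs), max(ys))


def interpolate_boxes(labeled):
    """Fill in boxes for unlabeled slices between labeled ones.

    `labeled` maps slice index -> box. Assumption: box coordinates are
    interpolated linearly between the two nearest labeled slices.
    """
    indices = sorted(labeled)
    boxes = dict(labeled)
    for lo, hi in zip(indices, indices[1:]):
        for z in range(lo + 1, hi):
            t = (z - lo) / (hi - lo)
            boxes[z] = tuple(
                round((1 - t) * a + t * b)
                for a, b in zip(labeled[lo], labeled[hi])
            )
    return boxes
```

With axes drawn every few slices, this yields one box prompt per slice across the tumor extent, which is what keeps the expert's manual input sparse.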

All these steps were conducted automatically and the model running time was recorded for each case. Finally, human experts manually refined the segmentation results until they were satisfied. To summarize, the time cost of the second group of annotations comprised three parts: initial markers, MedSAM inference, and refinement. All the manual annotation processes were based on ITK-SNAP 44 , an open-source software designed for medical image visualization and annotation.

Evaluation metrics

We followed the recommendations in Metrics Reloaded 45 and used the dice similarity coefficient (DSC) and normalized surface distance (NSD) to quantitatively evaluate the segmentation results. DSC is a region-based segmentation metric, aiming to evaluate the region overlap between expert annotation masks and segmentation results, which is defined by

\[DSC(G,S)=\frac{2| G\cap S| }{| G| +| S| }.\]

NSD 46 is a boundary-based metric, aiming to evaluate the boundary consensus between expert annotation masks and segmentation results at a given tolerance, which is defined by

\[NSD(G,S)=\frac{| \partial G\cap {B}_{\partial S}^{(\tau )}| +| \partial S\cap {B}_{\partial G}^{(\tau )}| }{| \partial G| +| \partial S| },\]

where \({B}_{\partial G}^{(\tau )}=\{x\in {\mathbb{R}}^{3}\,| \,\exists \tilde{x}\in \partial G,\,| | x-\tilde{x}| | \le \tau \}\) and \({B}_{\partial S}^{(\tau )}=\{x\in {\mathbb{R}}^{3}\,| \,\exists \tilde{x}\in \partial S,\,| | x-\tilde{x}| | \le \tau \}\) denote the border region of the expert annotation mask and the segmentation surface at tolerance τ , respectively. In this paper, we set the tolerance τ as 2.
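Both metrics can be illustrated on small 2D binary masks. The sketch below is a simplified, brute-force version under stated assumptions: DSC is taken as twice the overlap divided by the sum of the two mask sizes, boundaries are approximated as foreground pixels with a 4-neighbor outside the mask, and distances are measured in pixel units. The function names are hypothetical, and the result may differ from the surface-distance implementation cited in the text, which works with surface elements and physical spacing.

```python
import math


def foreground(mask):
    """Set of (row, col) coordinates where the mask is non-zero."""
    return {(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v}


def dice_coefficient(g_mask, s_mask):
    g, s = foreground(g_mask), foreground(s_mask)
    if not g and not s:
        return 1.0  # convention for two empty masks (assumption)
    return 2.0 * len(g & s) / (len(g) + len(s))


def boundary(pixels):
    """Foreground pixels with at least one 4-neighbor outside the foreground."""
    return {
        (y, x) for (y, x) in pixels
        if any(n not in pixels
               for n in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)))
    }


def count_within(points, reference, tau):
    """Number of points lying within distance tau of any reference point."""
    return sum(1 for p in points if any(math.dist(p, q) <= tau for q in reference))


def normalized_surface_distance(g_mask, s_mask, tau=2.0):
    bg = boundary(foreground(g_mask))
    bs = boundary(foreground(s_mask))
    if not bg and not bs:
        return 1.0  # convention for two empty masks (assumption)
    return (count_within(bg, bs, tau) + count_within(bs, bg, tau)) / (len(bg) + len(bs))
```

Shifting a 4 × 4 square by one pixel lowers DSC to 0.75 while NSD at a tolerance of 2 pixels stays at 1.0, which shows how the boundary metric forgives small contour offsets that the overlap metric penalizes.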

Statistical analysis

To statistically analyze and compare the performance of the aforementioned four methods (MedSAM, SAM, U-Net, and DeepLabV3+ specialist models), we employed the Wilcoxon signed-rank test. This non-parametric test is well-suited for comparing paired samples and is particularly useful when the data do not meet the assumption of a normal distribution. This analysis allowed us to determine whether any method demonstrated statistically superior segmentation performance compared to the others. The Wilcoxon signed-rank test results are marked on the DSC and NSD score tables (Supplementary Tables 6–11).
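To make the paired comparison concrete, the sketch below computes the two rank sums behind the Wilcoxon signed-rank test for per-case scores of two methods. It is illustrative only: the function name is hypothetical, zero differences are dropped and tied absolute differences receive average ranks (the classic handling, assumed here), and no p-value is computed. In practice a library routine such as `scipy.stats.wilcoxon` would be used for the full test.

```python
def signed_rank_sums(scores_a, scores_b):
    """Return (W_plus, W_minus) for paired samples scores_a and scores_b."""
    # Paired differences; pairs with no difference carry no sign information.
    diffs = [a - b for a, b in zip(scores_a, scores_b) if a != b]
    ordered = sorted(diffs, key=abs)

    # Assign ranks 1..n by absolute difference, averaging over ties.
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        while j < len(ordered) and abs(ordered[j]) == abs(ordered[i]):
            j += 1
        average_rank = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        for k in range(i, j):
            ranks[k] = average_rank
        i = j

    w_plus = sum(r for r, d in zip(ranks, ordered) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, ordered) if d < 0)
    return w_plus, w_minus
```

A large imbalance between the two sums indicates that one method scores higher on most cases and by larger margins, which is the evidence the test converts into a p-value.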

Software utilized

All code was implemented in Python (3.10) using PyTorch (2.0) as the base deep learning framework. We also used several Python packages for data analysis and results visualization, including connected-components-3d (3.10.3), SimpleITK (2.2.1), nibabel (5.1.0), torchvision (0.15.2), numpy (1.24.3), scikit-image (0.20.0), scipy (1.10.1), pandas (2.0.2), matplotlib (3.7.1), opencv-python (4.8.0), ChallengeR (1.0.5), and plotly (5.15.0). Biorender was used to create Fig. 1 .

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The training and validation datasets used in this study are available in the public domain and can be downloaded via the links provided in Supplementary Tables 16 and 17 . Source data are provided with this paper in the Source Data file. We confirmed that all the image datasets in this study are publicly accessible and permitted for research purposes.

Code availability

The training script, inference script, and trained model are publicly available at https://github.com/bowang-lab/MedSAM . A permanent version is released on Zenodo 47 .

Isensee, F., Jaeger, P. F., Kohl, S. A., Petersen, J. & Maier-Hein, K. H. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat. Method. 18 , 203–211 (2021).

De Fauw, J. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat. Med. 24 , 1342–1350 (2018).

Ouyang, D. Video-based AI for beat-to-beat assessment of cardiac function. Nature 580 , 252–256 (2020).

Wang, G. Deepigeos: a deep interactive geodesic framework for medical image segmentation. In IEEE Transactions on Pattern Analysis and Machine Intelligence 41 , 1559–1572 (IEEE, 2018).

Antonelli, M. The medical segmentation decathlon. Nat. Commun. 13 , 4128 (2022).

Minaee, S. Image segmentation using deep learning: A survey. In IEEE Transactions on Pattern Analysis and Machine Intelligence 44 , 3523–3542 (IEEE, 2021).

Kirillov, A. et al. Segment anything. In IEEE International Conference on Computer Vision. 4015–4026 (IEEE, 2023).

Zou, X. et al. Segment everything everywhere all at once. In Advances in Neural Information Processing Systems (MIT Press, 2023).

Wang, G. Interactive medical image segmentation using deep learning with image-specific fine tuning. In IEEE Transactions on Medical Imaging 37 , 1562–1573 (IEEE, 2018).

Zhou, T. Volumetric memory network for interactive medical image segmentation. Med. Image Anal. 83 , 102599 (2023).

Luo, X. Mideepseg: Minimally interactive segmentation of unseen objects from medical images using deep learning. Med. Image Anal. 72 , 102102 (2021).

Deng, R. et al. Segment anything model (SAM) for digital pathology: assess zero-shot segmentation on whole slide imaging. Preprint at https://arxiv.org/abs/2304.04155 (2023).

Hu, C., Li, X. When SAM meets medical images: an investigation of segment anything model (SAM) on multi-phase liver tumor segmentation. Preprint at https://arxiv.org/abs/2304.08506 (2023).

He, S., Bao, R., Li, J., Grant, P.E., Ou, Y. Accuracy of segment-anything model (SAM) in medical image segmentation tasks. Preprint at https://doi.org/10.48550/arXiv.2304.09324 (2023).

Roy, S. et al. SAM.MD: zero-shot medical image segmentation capabilities of the segment anything model. Preprint at https://arxiv.org/abs/2304.05396 (2023).

Zhou, T., Zhang, Y., Zhou, Y., Wu, Y. & Gong, C. Can SAM segment polyps? Preprint at https://arxiv.org/abs/2304.07583 (2023).

Mohapatra, S., Gosai, A., Schlaug, G. Sam vs bet: a comparative study for brain extraction and segmentation of magnetic resonance images using deep learning. Preprint at https://arxiv.org/abs/2304.04738 (2023).

Chen, J., Bai, X. Learning to "segment anything" in thermal infrared images through knowledge distillation with a large scale dataset SATIR. Preprint at https://arxiv.org/abs/2304.07969 (2023).

Tang, L., Xiao, H., Li, B. Can SAM segment anything? when SAM meets camouflaged object detection. Preprint at https://arxiv.org/abs/2304.04709 (2023).

Ji, G.-P. et al. SAM struggles in concealed scenes–empirical study on "segment anything". Science China Information Sciences 66 , 226101 (2023).

Ji, W., Li, J., Bi, Q., Li, W., Cheng, L. Segment anything is not always perfect: an investigation of SAM on different real-world applications. Preprint at https://arxiv.org/abs/2304.05750 (2023).

Mazurowski, M. A. Segment anything model for medical image analysis: an experimental study. Med. Image Anal. 89 , 102918 (2023).

Huang, Y. et al. Segment anything model for medical images? Med. Image Anal. 92 , 103061 (2024).

Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F., Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proc. European Conference on Computer Vision . 801–818 (IEEE, 2018).

Dosovitskiy, A. et al. An image is worth 16x16 words: transformers for image recognition at scale. In: International Conference on Learning Representations (OpenReview.net, 2020).

Tancik, M. Fourier features let networks learn high frequency functions in low-dimensional domains. In Advances in Neural Information Processing Systems 33 , 7537–7547 (Curran Associates, Inc., 2020).

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems , Vol. 30 (Curran Associates, Inc., 2017).

He, B. Blinded, randomized trial of sonographer versus AI cardiac function assessment. Nature 616 , 520–524 (2023).

Eisenhauer, E. A. New response evaluation criteria in solid tumours: revised recist guideline (version 1.1). Eur. J. Cancer 45 , 228–247 (2009).

Ma, J. & Wang, B. Towards foundation models of biological image segmentation. Nat. Method. 20 , 953–955 (2023).

Ma, J. et al. The multi-modality cell segmentation challenge: towards universal solutions. Preprint at https://arxiv.org/abs/2308.05864 (2023).

Xie, R., Pang, K., Bader, G.D., Wang, B. Maester: masked autoencoder guided segmentation at pixel resolution for accurate, self-supervised subcellular structure recognition. In IEEE Conference on Computer Vision and Pattern Recognition . 3292–3301 (IEEE, 2023).

Bera, K., Braman, N., Gupta, A., Velcheti, V. & Madabhushi, A. Predicting cancer outcomes with radiomics and artificial intelligence in radiology. Nat. Rev. Clin. Oncol. 19 , 132–146 (2022).

Clark, K. The cancer imaging archive (TCIA): maintaining and operating a public information repository. J. Digit. Imaging 26 , 1045–1057 (2013).

Ba, J.L., Kiros, J.R., Hinton, G.E. Layer normalization. Preprint at https://arxiv.org/abs/1607.06450 (2016).

He, K. et al. Masked autoencoders are scalable vision learners. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition . 16000–16009 (IEEE, 2022).

Loshchilov, I., Hutter, F. Decoupled weight decay regularization. In International Conference on Learning Representations (OpenReview.net, 2019).

He, K., Zhang, X., Ren, S., Sun, J. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition . 770–778 (IEEE, 2016).

Iakubovskii, P. Segmentation models pytorch. GitHub https://github.com/qubvel/segmentation_models.pytorch (2019).

Milletari, F., Navab, N., Ahmadi, S.-A. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In International Conference on 3D Vision (3DV). 565–571 (IEEE, 2016).

Ma, J. Loss odyssey in medical image segmentation. Med. Image Anal. 71 , 102035 (2021).

Ahmed, A. Radiomic mapping model for prediction of Ki-67 expression in adrenocortical carcinoma. Clin. Radiol. 75 , 479–17 (2020).

Moawad, A.W. et al. Voxel-level segmentation of pathologically-proven Adrenocortical carcinoma with Ki-67 expression (Adrenal-ACC-Ki67-Seg) [data set]. https://doi.org/10.7937/1FPG-VM46 (2023).

Yushkevich, P.A., Gao, Y., Gerig, G. Itk-snap: an interactive tool for semi-automatic segmentation of multi-modality biomedical images. In International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) . 3342–3345 (IEEE, 2016).

Maier-Hein, L. et al. Metrics reloaded: Pitfalls and recommendations for image analysis validation. Preprint at https://arxiv.org/abs/2206.01653 (2022).

DeepMind surface-distance. https://github.com/google-deepmind/surface-distance (2018).

Ma, J. bowang-lab/MedSAM: v1.0.0. https://doi.org/10.5281/zenodo.10452777 (2023).


Acknowledgements

This work was supported by the Natural Sciences and Engineering Research Council of Canada (NSERC, RGPIN-2020-06189 and DGECR-2020-00294) and CIFAR AI Chair programs. The authors of this paper highly appreciate all the data owners for providing public medical images to the community. We also thank Meta AI for making the source code of segment anything publicly available to the community. This research was enabled in part by computing resources provided by the Digital Research Alliance of Canada.

Author information

Authors and affiliations.

Peter Munk Cardiac Centre, University Health Network, Toronto, ON, Canada

Jun Ma, Feifei Li & Bo Wang

Department of Laboratory Medicine and Pathobiology, University of Toronto, Toronto, ON, Canada

Jun Ma & Bo Wang

Vector Institute, Toronto, ON, Canada

Department of Computer Science, Western University, London, ON, Canada

Tandon School of Engineering, New York University, New York, NY, USA

Department of Electrical Engineering, Yale University, New Haven, CT, USA

Department of Computer Science, University of Toronto, Toronto, ON, Canada

UHN AI Hub, Toronto, ON, Canada


Contributions

Conceived and designed the experiments: J.M., Y.H., C.Y., B.W. Performed the experiments: J.M., Y.H., F.L., L.H., C.Y. Analyzed the data: J.M., Y.H., F.L., L.H., C.Y., B.W. Wrote the paper: J.M., Y.H., F.L., L.H., C.Y., B.W. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Correspondence to Bo Wang.

Ethics declarations

Competing interests.

The authors declare no competing interests.

Peer review

Peer review information.

Nature Communications thanks David Ouyang, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .


About this article

Cite this article.

Ma, J., He, Y., Li, F. et al. Segment anything in medical images. Nat Commun 15 , 654 (2024). https://doi.org/10.1038/s41467-024-44824-z


Received : 24 October 2023

Accepted : 05 January 2024

Published : 22 January 2024

DOI : https://doi.org/10.1038/s41467-024-44824-z





Recent Advances in Medical Image Processing

Affiliations.

- 1 Hangzhou Zhiwei Information and Technology Inc., Hangzhou, China.

- 2 Hangzhou Zhiwei Information and Technology Inc., Hangzhou, China.

- PMID: 33176311

- DOI: 10.1159/000510992

Background: Application and development of the artificial intelligence technology have generated a profound impact in the field of medical imaging. It helps medical personnel to make an early and more accurate diagnosis. Recently, the deep convolution neural network is emerging as a principal machine learning method in computer vision and has received significant attention in medical imaging. Key Message: In this paper, we will review recent advances in artificial intelligence, machine learning, and deep convolution neural network, focusing on their applications in medical image processing. To illustrate with a concrete example, we discuss in detail the architecture of a convolution neural network through visualization to help understand its internal working mechanism.

Summary: This review discusses several open questions, current trends, and critical challenges faced by medical image processing and artificial intelligence technology.

Keywords: Artificial intelligence; Convolution neural network; Deep learning; Medical imaging.

© 2020 S. Karger AG, Basel.



ORIGINAL RESEARCH article

Medical image analysis using deep learning algorithms.

- 1 The First Affiliated Hospital of Wenzhou Medical University, Wenzhou, China

- 2 Department of Cardiovascular Medicine, The First Affiliated Hospital of Zhengzhou University, Zhengzhou, China

- 3 Department of Cardiovascular Medicine, Wencheng People’s Hospital, Wencheng, China

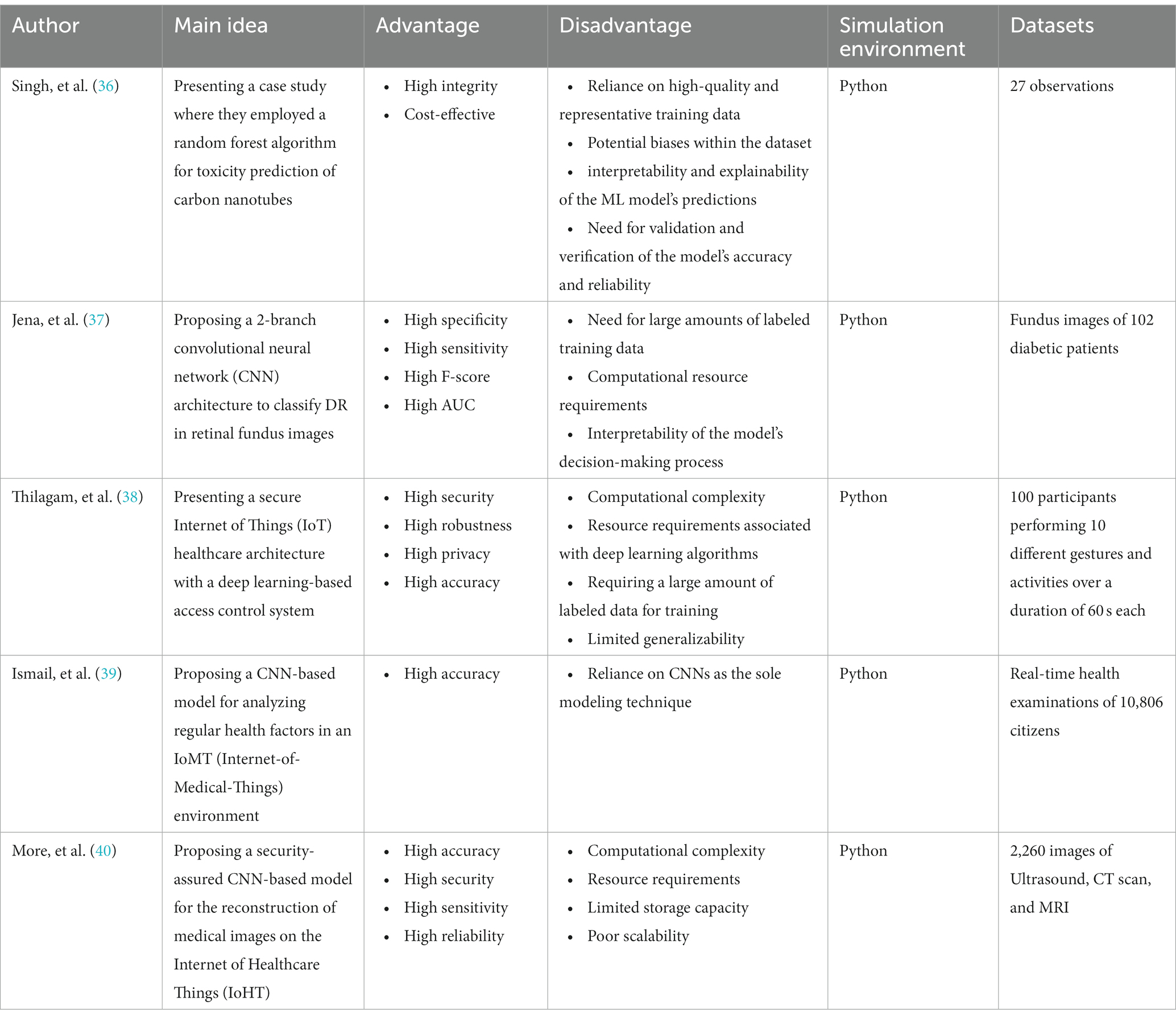

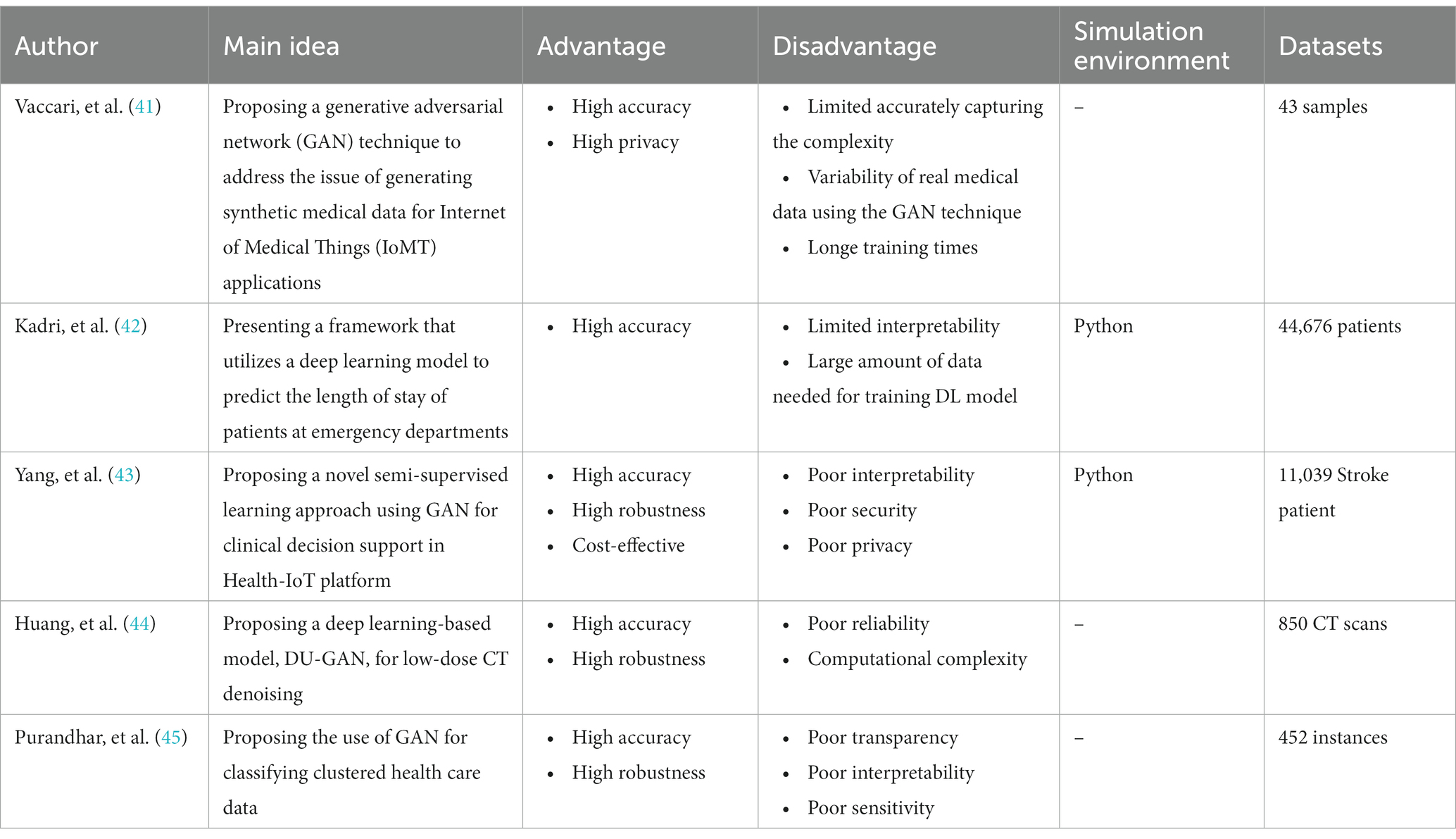

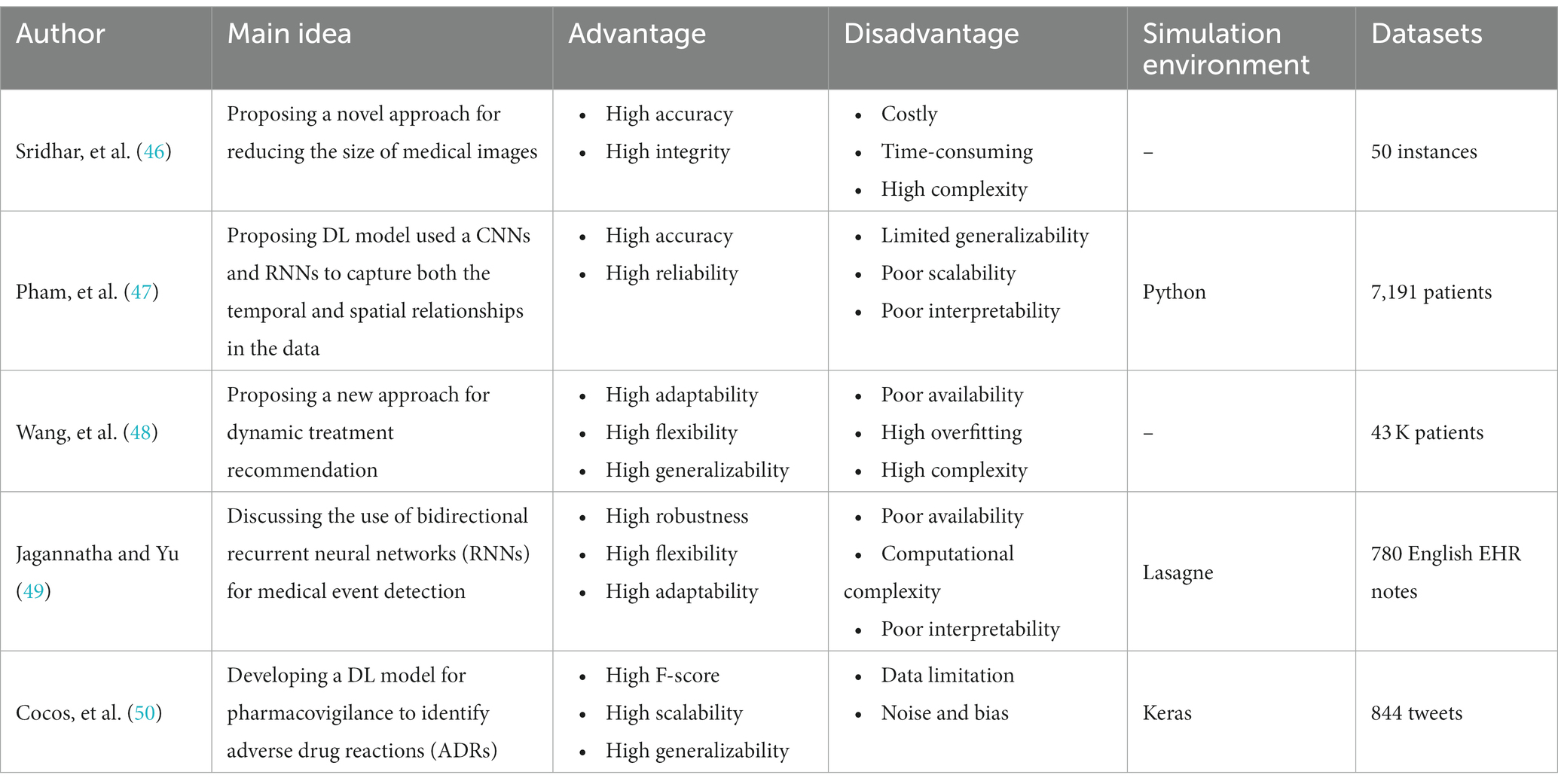

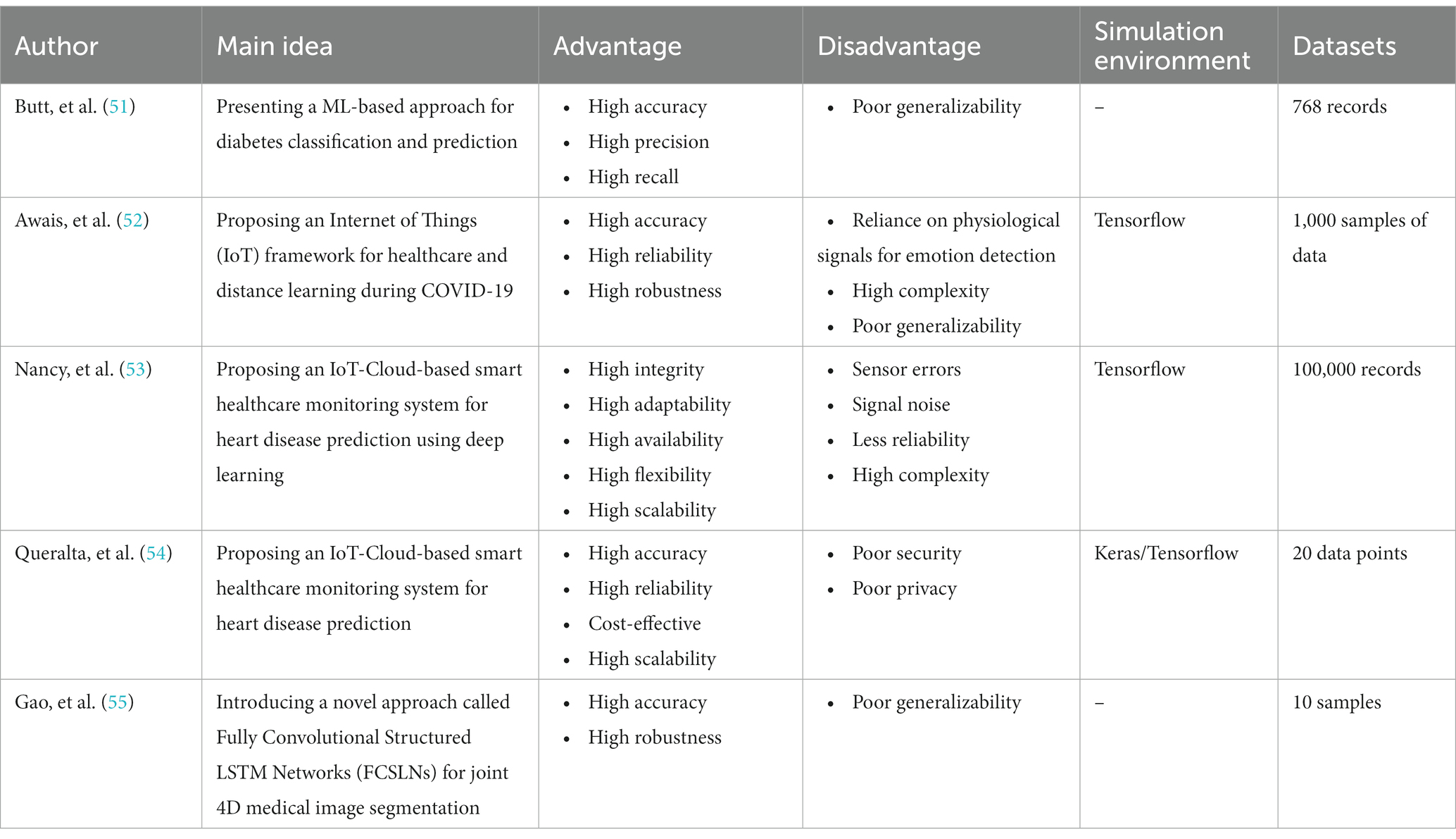

In the field of medical image analysis within deep learning (DL), the importance of employing advanced DL techniques cannot be overstated. DL has achieved impressive results in various areas, making it particularly noteworthy for medical image analysis in healthcare. The integration of DL with medical image analysis enables real-time analysis of vast and intricate datasets, yielding insights that significantly enhance healthcare outcomes and operational efficiency in the industry. This extensive review of existing literature conducts a thorough examination of the most recent DL approaches designed to address the difficulties faced in medical healthcare, particularly focusing on the use of DL algorithms in medical image analysis. Classifying the investigated papers into five categories according to their techniques, we assessed them against several critical parameters. Through a systematic categorization of state-of-the-art DL techniques, such as Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Generative Adversarial Networks (GANs), Long Short-term Memory (LSTM) models, and hybrid models, this study explores their underlying principles, advantages, limitations, methodologies, simulation environments, and datasets. Based on our results, Python was the programming language most frequently used to implement the proposed methods in the investigated papers. Notably, the majority of the scrutinized papers were published in 2021, underscoring how recent the research is. Moreover, this review highlights the latest advancements in DL techniques and their practical applications in medical image analysis, while also addressing the challenges that hinder the widespread implementation of DL in image analysis within the medical healthcare domains. These insights provide motivation for future studies aimed at advancing image analysis in medical healthcare research. The evaluation metrics employed across the reviewed articles cover a broad spectrum, including accuracy, sensitivity, specificity, F-score, robustness, computational complexity, and generalizability.

1. Introduction

Deep learning is a branch of machine learning that employs artificial neural networks comprising multiple layers to acquire and discern intricate patterns from extensive datasets ( 1 , 2 ). It has brought about a revolution in various domains, including computer vision, natural language processing, and speech recognition, among other areas ( 3 ). One of the primary advantages of deep learning is its capacity to automatically learn features from raw data, thereby eliminating the necessity for manual feature engineering ( 4 ). This makes it especially powerful in domains with large, complex datasets, where traditional machine learning methods may struggle to capture the underlying patterns ( 5 ). Deep learning has also facilitated significant advancements in various tasks, including but not limited to image and speech recognition, comprehension of natural language, and the development of autonomous driving capabilities ( 6 ). For instance, deep learning has enabled the creation of exceptionally precise computer vision systems capable of identifying objects in images and videos with unparalleled precision. Likewise, deep learning has brought about substantial enhancements in natural language processing, leading to the development of models capable of comprehending and generating language that resembles human-like expression ( 7 ). Overall, deep learning has opened up new opportunities for solving complex problems and has the potential to transform many industries, including healthcare, finance, transportation, and more.

Medical image analysis is a field of study that involves the processing, interpretation, and analysis of medical images ( 8 ). The emergence of deep learning algorithms has prompted a notable transformation in the field of medical image analysis, as they have increasingly been employed to enhance the diagnosis, treatment, and monitoring of diverse medical conditions in recent years ( 9 ). Deep learning, as a branch of machine learning, encompasses the training of algorithms to acquire knowledge from vast quantities of data. When applied to medical image analysis, deep learning algorithms possess the capability to automatically identify and categorize anomalies in various medical images, including X-rays, MRI scans, CT scans, and ultrasound images ( 10 ). These algorithms can undergo training using extensive datasets consisting of annotated medical images, where each image is accompanied by labels indicating the corresponding medical condition or abnormality ( 11 ). Once trained, the algorithm can analyze new medical images and provide diagnostic insights to healthcare professionals. The application of deep learning algorithms in medical image analysis has exhibited promising outcomes, as evidenced by studies showcasing high levels of accuracy in detecting and diagnosing a wide range of medical conditions ( 12 ). This has led to the development of various commercial and open-source software tools that leverage deep learning algorithms for medical image analysis ( 13 ). Overall, the utilization of deep learning algorithms in medical image analysis has the capability to bring about substantial enhancements in healthcare results and transform the utilization of medical imaging in diagnosis and treatment.

Medical image processing is an area of research that encompasses the creation and application of algorithms and methods to analyze and interpret medical images ( 14 ). Its primary objective is to extract meaningful information from medical images to aid in diagnosis, treatment planning, and therapeutic interventions ( 15 ). It involves various tasks such as image segmentation, image registration, feature extraction, classification, and visualization. Each imaging modality has its unique strengths and limitations, and the images produced by different modalities may require specific processing techniques to extract useful information ( 16 ). Medical image processing techniques have revolutionized the field of medicine by providing a non-invasive means to visualize and analyze the internal structures and functions of the body. They have enabled early detection and diagnosis of diseases, accurate treatment planning, and monitoring of treatment response. The use of medical image processing has significantly improved patient outcomes, reduced treatment costs, and enhanced the quality of care provided to patients.

Visual depictions of CNNs in the context of medical image analysis using DL algorithms portray a layered architecture, where initial layers capture rudimentary features like edges and textures, while subsequent layers progressively discern more intricate and abstract characteristics, allowing the network to autonomously extract pertinent information from medical images for tasks like detection, segmentation, and classification. Additionally, the visual representations of RNNs in medical image analysis involving DL algorithms illustrate a network structure adept at grasping temporal relationships and sequential patterns within images, rendering them well-suited for tasks such as video analysis or the processing of time-series medical image data. Furthermore, visual representations of GANs in medical image analysis employing DL algorithms exemplify a dual-network framework: one network, the generator, fabricates synthetic medical images, while the other, the discriminator, assesses their authenticity, facilitating the generation of lifelike images closely resembling actual medical data. Moreover, visual depictions of LSTM networks in medical image analysis with DL algorithms delineate a specialized form of recurrent neural network proficient in processing sequential medical image data by preserving long-term dependencies and learning temporal patterns crucial for tasks like video analysis and time-series image processing. Finally, visual representations of hybrid methods in medical image analysis using DL algorithms portray a combination of diverse neural network architectures, often integrating CNNs with RNNs or other specialized modules, enabling the model to harness both spatial and temporal information for a comprehensive analysis of medical images.

Case studies and real-world examples provide tangible evidence of the effectiveness and applicability of DL algorithms in various medical image analysis tasks. They underscore the potential of this technology to revolutionize healthcare by improving diagnostic accuracy, reducing manual labor, and enabling earlier interventions for patients. Several case studies and real-world applications are listed below:

1. Skin cancer detection. Case study: In Vijayalakshmi ( 17 ), a DL algorithm was trained to identify skin cancer from images of skin lesions. The algorithm demonstrated accuracy comparable to that of dermatologists, highlighting its potential as a tool for early skin cancer detection.

2. Diabetic retinopathy screening. Case study: De Fauw et al. ( 18 ), at Moorfields Eye Hospital, developed a DL system capable of identifying diabetic retinopathy from retinal images. The system was trained on a dataset of over 128,000 images and achieved a level of accuracy comparable to that of expert ophthalmologists.

3. Tumor segmentation in MRI. Case study: A study conducted by Guo et al. ( 8 ) at Massachusetts General Hospital used DL techniques to automate the segmentation of brain tumors from MRI scans. The algorithm significantly reduced the time required for tumor delineation, enabling quicker treatment planning for patients.

4. Chest X-ray analysis for tuberculosis detection. Case study: The National Institutes of Health (NIH) released a dataset of chest X-ray images for the detection of tuberculosis. Researchers have successfully applied deep learning algorithms to this dataset, achieving high accuracy in identifying TB-related abnormalities.

5. Automated bone fracture detection. Case study: Meena and Roy ( 19 ) at Stanford University developed a deep learning model capable of detecting bone fractures in X-ray images. The model demonstrated high accuracy and outperformed traditional rule-based systems in fracture detection.

In medical image analysis, ML algorithms, and DL algorithms in particular, are widely used for precise and efficient segmentation. DL approaches, especially convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have demonstrated exceptional proficiency in capturing and leveraging the spatial dependencies and symmetrical properties inherent in medical images. These algorithms enable the analysis of medical images of symmetric structures, such as organs or limbs, by leveraging their inherent symmetrical patterns. The use of DL mechanisms in medical image analysis encompasses various practical approaches, including generative adversarial networks (GANs), hybrid models, and combinations of CNNs and RNNs. The objective of this research is to provide a comprehensive overview of the uses of DL techniques in deep symmetry-based image analysis within medical healthcare. By conducting an in-depth systematic literature review (SLR), analyzing multiple studies, and exploring the properties, advantages, limitations, datasets, and simulation environments associated with different DL mechanisms, this study clarifies the present state of, and future pathways for advancing and refining, deep symmetry-based image analysis methodologies in medical healthcare. The article is structured as follows: Section 2 covers the key principles and terminology of ML/DL in medical image analysis, and Section 3 examines relevant review papers. Section 4 discusses the studied mechanisms and the tools for paper selection, while Section 5 presents the selected classification. Section 6 presents the results and comparisons, and the last section explores the remaining concerns and the conclusion.

2. Fundamental concepts and terminology

The concepts and terms related to medical image analysis using DL algorithms that are covered in this section are essential for understanding the underlying principles and techniques used in medical image analysis.

2.1. The role of image analysis in medical healthcare

The use of deep learning algorithms for image analysis has brought about a revolution in medical healthcare by enabling advanced and automated analysis of medical images ( 20 ). Deep learning methods, including Convolutional Neural Networks (CNNs), have shown remarkable performance in tasks such as image segmentation, feature extraction, and classification ( 21 ). By leveraging large amounts of annotated data, deep learning models can learn intricate patterns and relationships within medical images, supporting accurate detection, localization, and diagnosis of diseases and abnormalities. Deep learning-based image analysis allows for faster and more precise interpretation of medical images, leading to improved patient outcomes, personalized treatment planning, and efficient healthcare workflows ( 22 ). Furthermore, these algorithms have the potential to support early disease detection, assist radiologists in decision-making, and enhance medical research through the analysis of large-scale image datasets. Overall, deep learning-based image analysis is transforming medical healthcare by providing powerful tools for image interpretation, augmenting the capabilities of healthcare professionals, and enhancing patient care ( 23 ).

2.2. Medical image analysis application

Deep learning algorithms for medical image analysis have found numerous applications within the healthcare sector. Deep learning techniques, notably Convolutional Neural Networks (CNNs), have been widely employed for tasks including image segmentation, object detection, disease classification, and image reconstruction ( 24 ). In medical image analysis, these algorithms can assist in the detection and diagnosis of various conditions, such as tumors, lesions, anatomical abnormalities, and pathological changes. They can also aid in the evaluation of disease progression, treatment response, and prognosis. Deep learning models can automatically extract meaningful features from medical images, enabling efficient and accurate interpretation ( 25 ). This technology holds promise for improving clinical decision-making, improving patient outcomes, and optimizing resource allocation in healthcare settings. Moreover, deep learning algorithms can be employed for data augmentation, image registration, and multimodal fusion, supporting a comprehensive and integrated analysis of medical images obtained from various modalities. With continuous advancements in deep learning algorithms, medical image analysis is making significant progress, opening up new possibilities for precision medicine, personalized treatment planning, and advanced healthcare solutions ( 26 ).
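As a small illustration of the data augmentation mentioned above, the sketch below generates flipped and rotated variants of an image in plain Python. The choice of transformations and the function names (`flip_horizontal`, `flip_vertical`, `rotate_90_clockwise`, `augment_pair`) are assumptions made for illustration and are not drawn from the cited works; real pipelines typically add further transformations such as intensity changes or elastic deformations.

```python
from typing import List, Tuple

Image = List[List[int]]


def flip_horizontal(image: Image) -> Image:
    """Mirror the image left-to-right."""
    return [row[::-1] for row in image]


def flip_vertical(image: Image) -> Image:
    """Mirror the image top-to-bottom."""
    return [row[:] for row in image[::-1]]


def rotate_90_clockwise(image: Image) -> Image:
    """Rotate the image by 90 degrees clockwise."""
    return [list(column) for column in zip(*image[::-1])]


def augment_pair(image: Image, mask: Image) -> List[Tuple[Image, Image]]:
    """Return the original image/mask pair plus three transformed copies.

    For segmentation data, the same geometric transform must be applied
    to the image and to its mask so that the labels stay aligned.
    """
    transforms = [
        lambda x: [row[:] for row in x],  # identity (copy)
        flip_horizontal,
        flip_vertical,
        rotate_90_clockwise,
    ]
    return [(t(image), t(mask)) for t in transforms]


# Toy 2x2 image with a one-pixel "lesion" label in the top-right corner.
toy_image: Image = [[1, 2], [3, 4]]
toy_mask: Image = [[0, 1], [0, 0]]

augmented = augment_pair(toy_image, toy_mask)
for img, msk in augmented:
    print(img, msk)
```

Whether a given flip or rotation is appropriate depends on the anatomy: for roughly symmetric structures a left-right flip yields a plausible image, whereas for tasks where laterality matters it may not.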

2.3. Various aspects of medical image analysis for the healthcare sector

Medical image analysis encompasses various crucial aspects in the healthcare sector, enabling in-depth examination and diagnosis based on medical imaging data ( 27 ). Image preprocessing constitutes a crucial element, encompassing techniques like noise reduction, image enhancement, and normalization, aimed at enhancing the quality and uniformity of the images. Another essential aspect is image registration, which aligns multiple images of the same patient or acquired through different imaging modalities, enabling precise comparison and fusion of information ( 28 ). Feature extraction is another crucial step, where relevant characteristics and patterns are extracted from the images, aiding in the detection and classification of abnormalities or specific anatomical structures. Segmentation plays a vital role in delineating regions of interest, enabling precise localization and measurement of anatomical structures, tumors, or lesions ( 29 ). Finally, classification and recognition techniques are applied to differentiate normal and abnormal regions, aiding in disease diagnosis and treatment planning. Deep learning algorithms, notably Convolutional Neural Networks (CNNs), have exhibited extraordinary achievements in diverse facets of medical image analysis by acquiring complex patterns and representations from extensive datasets of medical imaging ( 30 ). However, challenges such as data variability, interpretability, and generalization across different patient populations and imaging modalities need to be addressed to ensure reliable and effective medical image analysis in healthcare applications.
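The preprocessing and segmentation steps described above can be sketched with deliberately simple classical operations. This is an illustrative sketch only: min-max scaling, a 3x3 mean filter, and a fixed intensity threshold are stand-ins chosen for brevity, the function names and the default threshold of 0.5 are assumptions, and none of this represents the learned models discussed in the text.

```python
from typing import List, Sequence

Image = List[List[float]]


def min_max_normalize(image: Sequence[Sequence[float]]) -> Image:
    """Rescale intensities to the range [0, 1]."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant image: avoid division by zero
        return [[0.0 for _ in row] for row in image]
    return [[(v - lo) / (hi - lo) for v in row] for row in image]


def mean_filter_3x3(image: Sequence[Sequence[float]]) -> Image:
    """Reduce noise by averaging each pixel with its available neighbours."""
    height, width = len(image), len(image[0])
    smoothed: Image = []
    for r in range(height):
        row = []
        for c in range(width):
            window = [
                image[rr][cc]
                for rr in range(max(0, r - 1), min(height, r + 2))
                for cc in range(max(0, c - 1), min(width, c + 2))
            ]
            row.append(sum(window) / len(window))
        smoothed.append(row)
    return smoothed


def threshold_segment(image: Sequence[Sequence[float]], threshold: float) -> List[List[int]]:
    """Label pixels at or above `threshold` as region of interest (1)."""
    return [[1 if v >= threshold else 0 for v in row] for row in image]


def region_area(mask: Sequence[Sequence[int]]) -> int:
    """Measure the segmented region as a pixel count."""
    return sum(sum(row) for row in mask)


def preprocess_and_segment(image: Sequence[Sequence[float]], threshold: float = 0.5) -> List[List[int]]:
    """Normalize, denoise, then segment (threshold value is an assumption)."""
    return threshold_segment(mean_filter_3x3(min_max_normalize(image)), threshold)


# Toy 4x4 scan: dark background on the left, bright structure on the right.
toy_scan = [[0, 0, 100, 100] for _ in range(4)]
toy_mask = preprocess_and_segment(toy_scan)
print(toy_mask, region_area(toy_mask))
```

In this ordering, normalization makes the threshold independent of the scanner's intensity scale, and smoothing before thresholding reduces the chance that isolated noisy pixels are labeled as part of the region.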

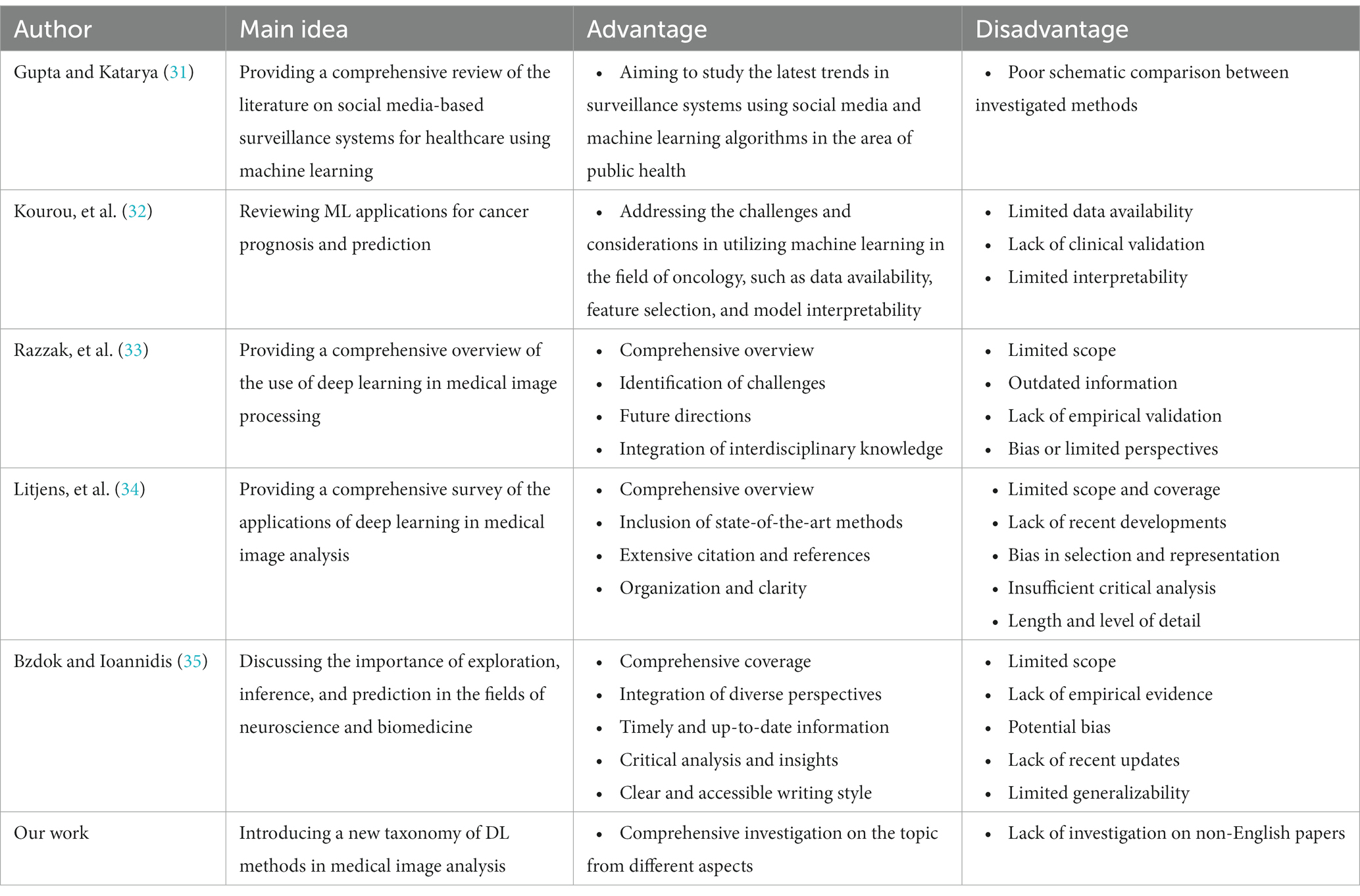

3. Relevant reviews

This section examines recent review studies related to medical image analysis using DL algorithms. The main purpose is to distinguish clearly between the principal findings of those studies and what is discussed in this paper. Owing to advances in AI technology, AI mechanisms are increasingly adopted in medical image analysis, and academia has shown heightened interest in addressing the associated challenges. Furthermore, medical image analysis can be viewed as a hierarchical framework that directs analytical capabilities toward the goals of medical healthcare. In this regard, Gupta and Katarya ( 31 ) provided a comprehensive review of the literature on social media-based surveillance systems for healthcare using machine learning. The authors analyzed 50 studies published between 2011 and 2021, covering a wide range of topics related to social media monitoring for healthcare, including disease outbreaks, adverse drug reactions, mental health, and vaccine hesitancy. The review highlighted the potential of machine learning algorithms for analyzing vast amounts of social media data and identifying relevant health-related information. The authors also identified several challenges associated with the use of social media data, such as data quality and privacy concerns, and discussed potential solutions to these challenges. They noted that social media-based surveillance systems can complement traditional surveillance methods by providing real-time data on health-related events and trends. They also suggested that machine learning algorithms can improve the accuracy and efficiency of social media monitoring by automatically filtering out irrelevant information and identifying patterns and trends in the data. Finally, the review highlighted the importance of data pre-processing and feature selection in developing effective machine learning models for social media analysis.

Similarly, Kourou et al. ( 32 ) reviewed machine learning (ML) applications for cancer prognosis and prediction. The authors started by describing the challenges of cancer treatment, highlighting the importance of personalized medicine and the role of ML algorithms in enabling it. The paper then provided an overview of different types of ML algorithms, including supervised and unsupervised learning, and discussed their potential applications in cancer prognosis and prediction. The authors presented examples of studies that have used ML algorithms for diagnosis, treatment response prediction, and survival prediction across different types of cancer. They also discussed the use of multiple data sources for ML algorithms, such as genetic data, imaging data, and clinical data. The paper concluded by addressing the challenges and limitations encountered in using ML algorithms for cancer prognosis and prediction, including concerns regarding data quality, overfitting, and interpretability. The authors proposed that ML algorithms hold significant potential for enhancing cancer treatment outcomes, but emphasized that further research is needed to optimize their application and address the associated challenges.