
Qualitative Data Analysis: Step-by-Step Guide (Manual vs. Automatic)

When we conduct qualitative research, need to explain changes in metrics, or want to understand people's opinions, we turn to qualitative data. Qualitative data is typically generated through:

- Interview transcripts

- Surveys with open-ended questions

- Contact center transcripts

- Texts and documents

- Audio and video recordings

- Observational notes

Compared to quantitative data, which captures structured information, qualitative data is unstructured and has more depth. It can answer our questions, help formulate hypotheses and build understanding.

It's important to understand the differences between quantitative data and qualitative data. Unfortunately, analyzing qualitative data is difficult. While tools like Excel, Tableau and Power BI crunch and visualize quantitative data with ease, there are only a limited number of mainstream tools for analyzing qualitative data. The majority of qualitative data analysis still happens manually.

That said, there are two new trends that are changing this. First, there are advances in natural language processing (NLP) which is focused on understanding human language. Second, there is an explosion of user-friendly software designed for both researchers and businesses. Both help automate the qualitative data analysis process.

In this post we want to teach you how to conduct a successful qualitative data analysis. There are two primary approaches: manual and automatic. We’ll guide you through the steps of a manual analysis, and look at the role that software powered by NLP can play in automating the process.

More businesses are switching to fully automated analysis of qualitative customer data because it is cheaper, faster, and can be just as accurate. Primarily, businesses purchase subscriptions to feedback analytics platforms so that they can understand customer pain points and sentiment.

We’ll take you through 5 steps to conduct a successful qualitative data analysis. Within each step we will highlight the key differences between the manual and the automated approach. Here's an overview of the steps:

The 5 steps to doing qualitative data analysis

- Gathering and collecting your qualitative data

- Connecting and organizing your qualitative data

- Coding your qualitative data

- Analyzing the qualitative data for insights

- Reporting on the insights derived from your analysis

What is Qualitative Data Analysis?

Qualitative data analysis is a process of gathering, structuring and interpreting qualitative data to understand what it represents.

Qualitative data is non-numerical and unstructured. Qualitative data generally refers to text, such as open-ended responses to survey questions or user interviews, but also includes audio, photos and video.

Businesses often perform qualitative data analysis on customer feedback. And within this context, qualitative data generally refers to verbatim text data collected from sources such as reviews, complaints, chat messages, support centre interactions, customer interviews, case notes or social media comments.

How is qualitative data analysis different from quantitative data analysis?

Understanding the differences between quantitative & qualitative data is important. When it comes to analyzing data, Qualitative Data Analysis serves a very different role to Quantitative Data Analysis. But what sets them apart?

Qualitative Data Analysis dives into the stories hidden in non-numerical data such as interviews, open-ended survey answers, or notes from observations. It uncovers the ‘whys’ and ‘hows’ giving a deep understanding of people’s experiences and emotions.

Quantitative Data Analysis on the other hand deals with numerical data, using statistics to measure differences, identify preferred options, and pinpoint root causes of issues. It steps back to address questions like "how many" or "what percentage" to offer broad insights we can apply to larger groups.

In short, Qualitative Data Analysis is like a microscope, helping us understand specific detail. Quantitative Data Analysis is like the telescope, giving us a broader perspective. Both are important, working together to decode data for different objectives.

Qualitative Data Analysis methods

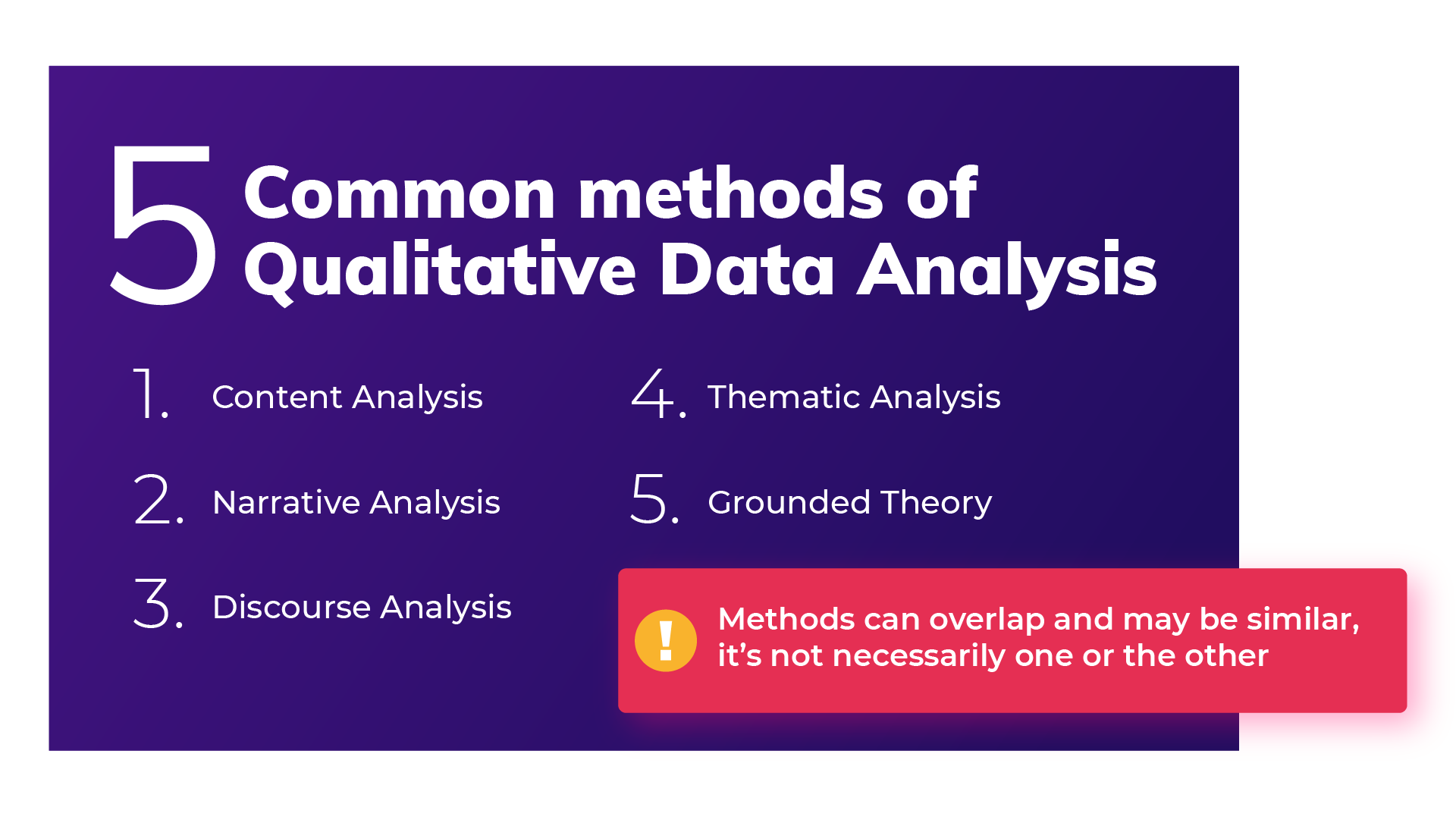

Once all the data has been captured, there are a variety of analysis techniques available and the choice is determined by your specific research objectives and the kind of data you’ve gathered. Common qualitative data analysis methods include:

Content Analysis

This is a popular approach to qualitative data analysis. Other qualitative analysis techniques may fit within the broad scope of content analysis; thematic analysis, for example, is a part of content analysis. Content analysis is used to identify the patterns that emerge from text, by grouping content into words, concepts, and themes. It is also useful for quantifying the relationships between the grouped content. The Columbia School of Public Health has a detailed breakdown of content analysis.

Narrative Analysis

Narrative analysis focuses on the stories people tell and the language they use to make sense of them. It is particularly useful in qualitative research methods where customer stories are used to get a deep understanding of customers’ perspectives on a specific issue. A narrative analysis might enable us to summarize the outcomes of a focused case study.

Discourse Analysis

Discourse analysis is used to get a thorough understanding of the political, cultural and power dynamics that exist in specific situations. The focus of discourse analysis here is on the way people express themselves in different social contexts. Discourse analysis is commonly used by brand strategists who hope to understand why a group of people feel the way they do about a brand or product.

Thematic Analysis

Thematic analysis is used to deduce the meaning behind the words people use. This is accomplished by discovering repeating themes in text. These meaningful themes reveal key insights into data and can be quantified, particularly when paired with sentiment analysis. Often, the outcome of thematic analysis is a code frame that captures themes in terms of codes, also called categories. So the process of thematic analysis is also referred to as “coding”. A common use-case for thematic analysis in companies is analysis of customer feedback.

Grounded Theory

Grounded theory is a useful approach when little is known about a subject. Grounded theory starts by formulating a theory around a single data case. This means that the theory is “grounded”: it is based on actual data rather than being entirely speculative. Additional cases can then be examined to see if they are relevant and can add to the original grounded theory.

Challenges of Qualitative Data Analysis

While Qualitative Data Analysis offers rich insights, it comes with its challenges. Each QDA method has its own hurdles. Let’s take a look at the challenges researchers and analysts might face, depending on the chosen method.

- Time and Effort (Narrative Analysis): Narrative analysis, which focuses on personal stories, demands patience. Sifting through lengthy narratives to find meaningful insights can be time-consuming and requires dedicated effort.

- Being Objective (Grounded Theory): Grounded theory, building theories from data, faces the challenges of personal biases. Staying objective while interpreting data is crucial, ensuring conclusions are rooted in the data itself.

- Complexity (Thematic Analysis): Thematic analysis involves identifying themes within data, a process that can be intricate. Categorizing and understanding themes can be complex, especially when each piece of data varies in context and structure. Thematic Analysis software can simplify this process.

- Generalizing Findings (Narrative Analysis): Narrative analysis, dealing with individual stories, makes drawing broad conclusions challenging. Extending findings from a single narrative to a broader context requires careful consideration.

- Managing Data (Thematic Analysis): Thematic analysis involves organizing and managing vast amounts of unstructured data, like interview transcripts. Managing this can be a hefty task, requiring effective data management strategies.

- Skill Level (Grounded Theory): Grounded theory demands specific skills to build theories from the ground up. Finding or training analysts with these skills poses a challenge, requiring investment in building expertise.

Benefits of qualitative data analysis

Qualitative Data Analysis (QDA) is like a versatile toolkit, offering a tailored approach to understanding your data. The benefits it offers are as diverse as the methods. Let’s explore why choosing the right method matters.

- Tailored Methods for Specific Needs: QDA isn't one-size-fits-all. Depending on your research objectives and the type of data at hand, different methods offer unique benefits. If you want emotive customer stories, narrative analysis paints a strong picture. When you want to explain a score, thematic analysis reveals insightful patterns.

- Flexibility with Thematic Analysis: Thematic analysis is like a chameleon in the toolkit of QDA. It adapts well to different types of data and research objectives, making it a top choice for many qualitative analyses.

- Deeper Understanding, Better Products: QDA helps you dive into people's thoughts and feelings. This deep understanding helps you build products and services that truly match what people want, ensuring satisfied customers.

- Finding the Unexpected: Qualitative data often reveals surprises that we miss in quantitative data. QDA offers us new ideas and perspectives that we would not have thought to look for.

- Building Effective Strategies: Insights from QDA are like strategic guides. They help businesses in crafting plans that match people’s desires.

- Creating Genuine Connections: Understanding people’s experiences lets businesses connect on a real level. This genuine connection helps build trust and loyalty, priceless for any business.

How to do Qualitative Data Analysis: 5 steps

Now we are going to show how you can do your own qualitative data analysis. We will guide you through this process step by step. As mentioned earlier, you will learn how to do qualitative data analysis manually, and also automatically using modern qualitative data and thematic analysis software.

To get the best value from the research and analysis process, it’s important to be super clear about the nature and scope of the question that’s being researched. This will help you select the data collection channels that are most likely to help you answer your question.

Whether you are a business looking to understand customer sentiment or an academic surveying a school, your approach to qualitative data analysis will be unique.

Once you’re clear, there’s a sequence to follow. And, though there are differences in the manual and automatic approaches, the process steps are mostly the same.

The use case for our step-by-step guide is a company that wants to collect and analyze customer feedback in order to improve customer experience. By analyzing the customer feedback, the company derives insights about its business and its customers. You can follow these same steps regardless of the nature of your research. Let’s get started.

Step 1: Gather your qualitative data and conduct research

The first step of qualitative research is to do data collection. Put simply, data collection is gathering all of your data for analysis. A common situation is when qualitative data is spread across various sources.

Classic methods of gathering qualitative data

Most companies use traditional methods for gathering qualitative data: conducting interviews with research participants, running surveys, and running focus groups. This data is typically stored in documents, CRMs, databases and knowledge bases. It’s important to examine which data is available and needs to be included in your research project, based on its scope.

Using your existing qualitative feedback

As it becomes easier for customers to engage across a range of different channels, companies are gathering increasingly large amounts of both solicited and unsolicited qualitative feedback.

Most organizations have now invested in Voice of Customer programs, support ticketing systems, chatbot and support conversations, emails and even customer Slack chats.

These new channels provide companies with new ways of getting feedback, and also allow the collection of unstructured feedback data at scale.

The great thing about this data is that it contains a wealth of valuable insights and that it’s already there! When you have a new question about user behavior or your customers, you don’t need to create a new research study or set up a focus group. You can find most answers in the data you already have.

Typically, this data is stored in third-party solutions or a central database, but there are ways to export it or connect to a feedback analysis solution through integrations or an API.

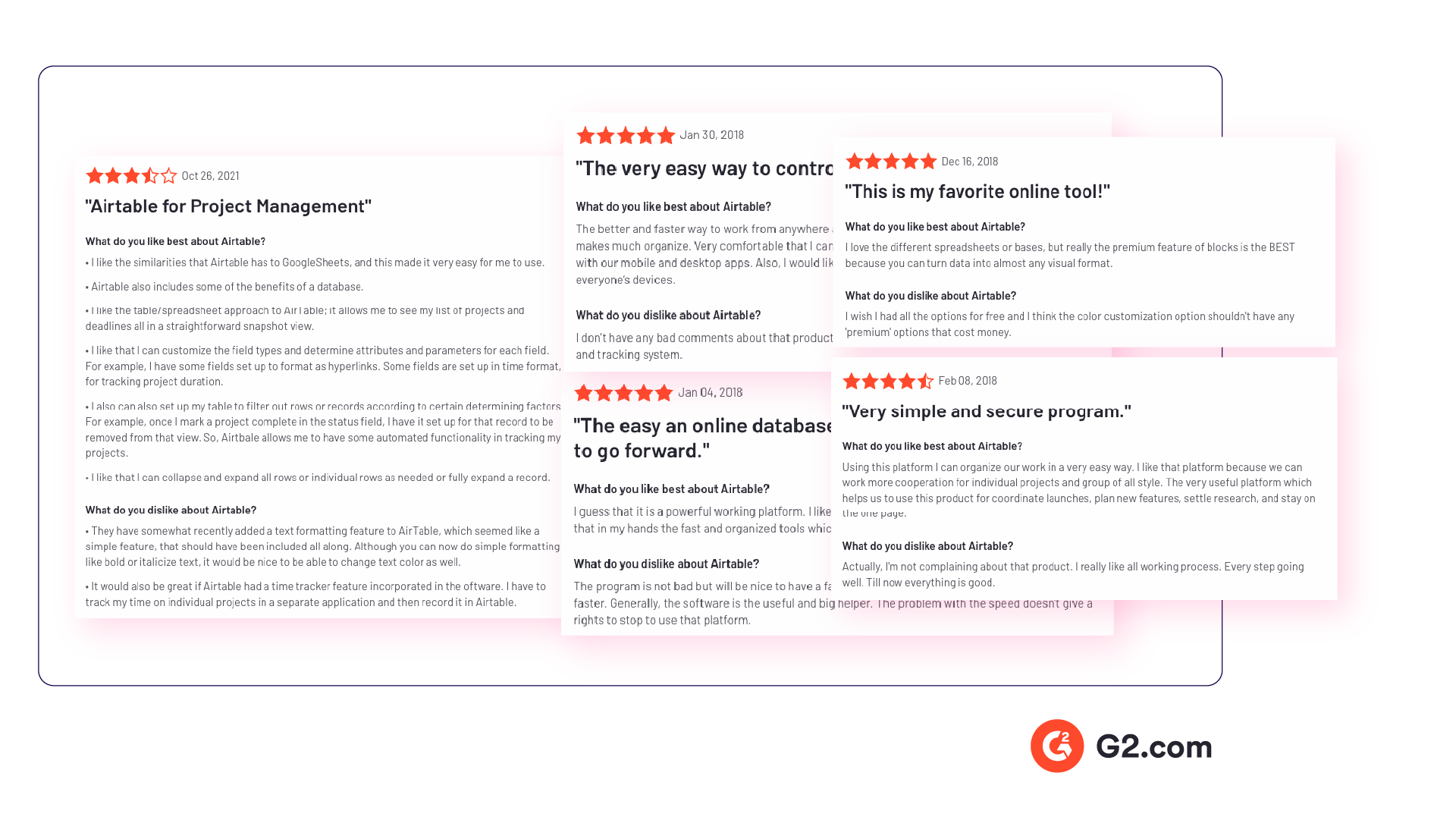

Utilize untapped qualitative data channels

There are many online qualitative data sources you may not have considered. For example, you can find useful qualitative data in social media channels like Twitter or Facebook. Online forums, review sites, and online communities such as Discourse or Reddit also contain valuable data about your customers, or research questions.

If you are considering performing a qualitative benchmark analysis against competitors - the internet is your best friend, and review analysis is a great place to start. Gathering feedback in competitor reviews on sites like Trustpilot, G2, Capterra, Better Business Bureau or on app stores is a great way to perform a competitor benchmark analysis.

Customer feedback analysis software often has integrations into social media and review sites, or you could use a solution like DataMiner to scrape the reviews.

Step 2: Connect & organize all your qualitative data

Now you have all this qualitative data, but there’s a problem: the data is unstructured. Before feedback can be analyzed and assigned any value, it needs to be organized in a single place. Why is this important? Consistency!

If all data is easily accessible in one place and analyzed in a consistent manner, you will have an easier time summarizing and making decisions based on this data.

The manual approach to organizing your data

The classic method of structuring qualitative data is to plot all the raw data you’ve gathered into a spreadsheet.

Typically, research and support teams would share large Excel sheets and different business units would make sense of the qualitative feedback data on their own. Each team collects and organizes the data in a way that best suits them, which means the feedback tends to be kept in separate silos.

An alternative, more robust solution is to store feedback in a central database, like Snowflake or Amazon Redshift.

Keep in mind that when you organize your data in this way, you are often preparing it to be imported into another piece of software. If you go the route of a database, you would need to use an API to push the feedback into the third-party software.
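Whichever storage you choose, the underlying idea is to bring every source into one consistent shape. Here is a minimal Python sketch of that idea. The `Feedback` record, the `unify` helper and the column names (`respondent`, `comment`, `customer`, `body`) are all hypothetical and stand in for whatever your own exports contain.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    source: str       # where the comment came from, e.g. "survey"
    customer_id: str  # identifier used to join with other customer data
    text: str         # the verbatim comment


def unify(survey_rows, ticket_rows):
    """Merge rows from two hypothetical exports into one consistent list."""
    unified = []
    for row in survey_rows:
        unified.append(Feedback("survey", row["respondent"], row["comment"].strip()))
    for row in ticket_rows:
        unified.append(Feedback("support_ticket", row["customer"], row["body"].strip()))
    # Drop empty comments so later coding only sees real text.
    return [f for f in unified if f.text]


# Made-up example rows, for illustration only.
survey_rows = [
    {"respondent": "c1", "comment": " Love the new dashboard "},
    {"respondent": "c2", "comment": ""},
]
ticket_rows = [{"customer": "c3", "body": "Waited two days for a reply"}]

feedback = unify(survey_rows, ticket_rows)
```

Keeping a `source` field on every record means you can still break results down by channel after everything has been merged.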

Computer-assisted qualitative data analysis software (CAQDAS)

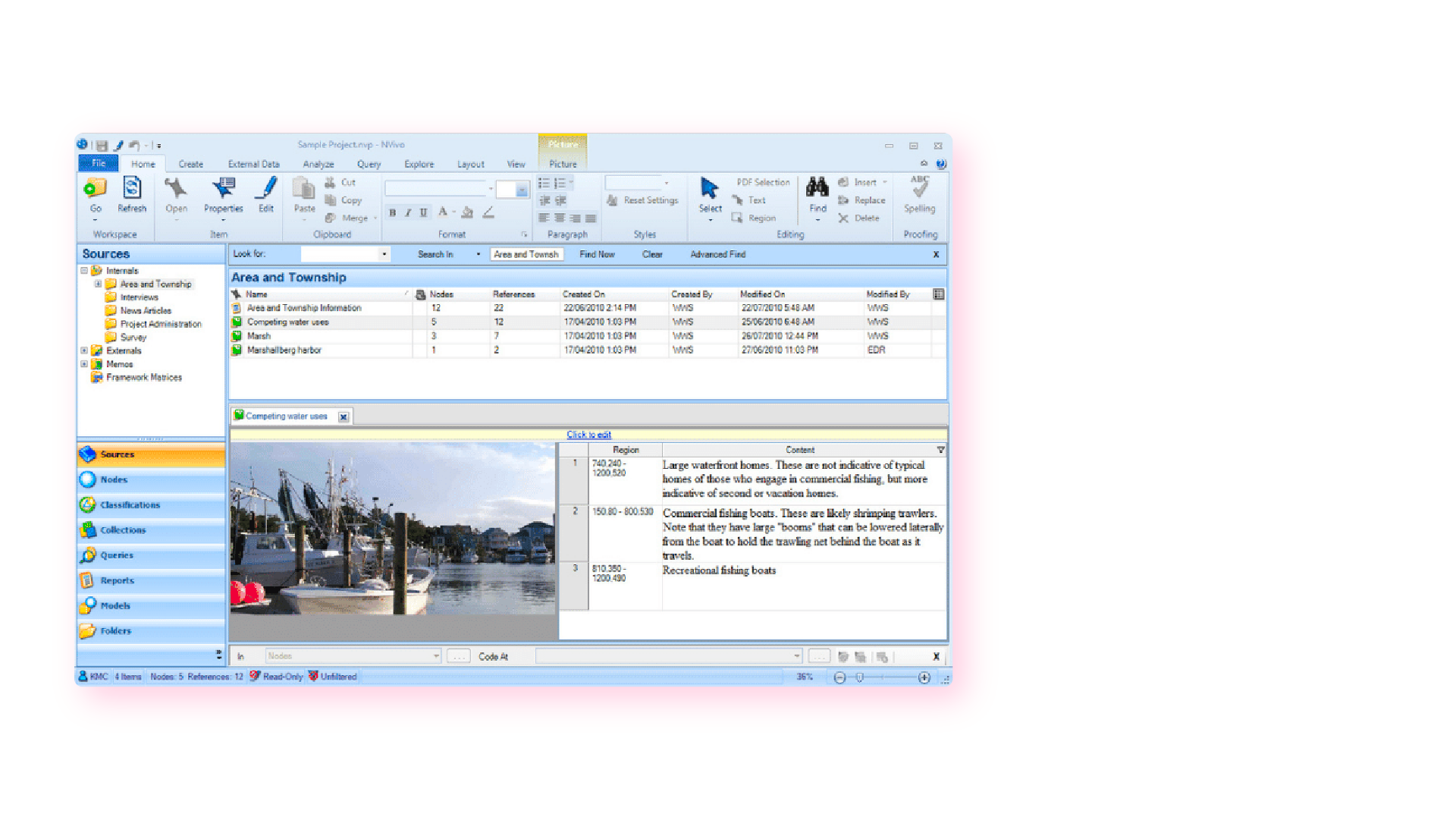

Traditionally within the manual analysis approach (but not always), qualitative data is imported into CAQDAS software for coding.

In the early 2000s, CAQDAS software was popularised by developers such as ATLAS.ti, NVivo and MAXQDA and eagerly adopted by researchers to assist with the organizing and coding of data.

The benefits of using computer-assisted qualitative data analysis software:

- Assists in the organizing of your data

- Opens you up to exploring different interpretations of your data analysis

- Allows you to share your dataset more easily and allows group collaboration (allows for secondary analysis)

However, you still need to code the data, uncover the themes and do the analysis yourself. Therefore it is still a manual approach.

Organizing your qualitative data in a feedback repository

Another solution to organizing your qualitative data is to upload it into a feedback repository where it can be unified with your other data, and made easily searchable and taggable. There are a number of software solutions that act as a central repository for your qualitative research data. Here are a couple of solutions that you could investigate:

- Dovetail: Dovetail is a research repository with a focus on video and audio transcriptions. You can tag your transcriptions within the platform for theme analysis. You can also upload your other qualitative data such as research reports, survey responses, support conversations (conversational analytics), and customer interviews. Dovetail acts as a single, searchable repository, and makes it easier to collaborate with other people around your qualitative research.

- EnjoyHQ: EnjoyHQ is another research repository with similar functionality to Dovetail. It boasts a more sophisticated search engine, but it has a higher starting subscription cost.

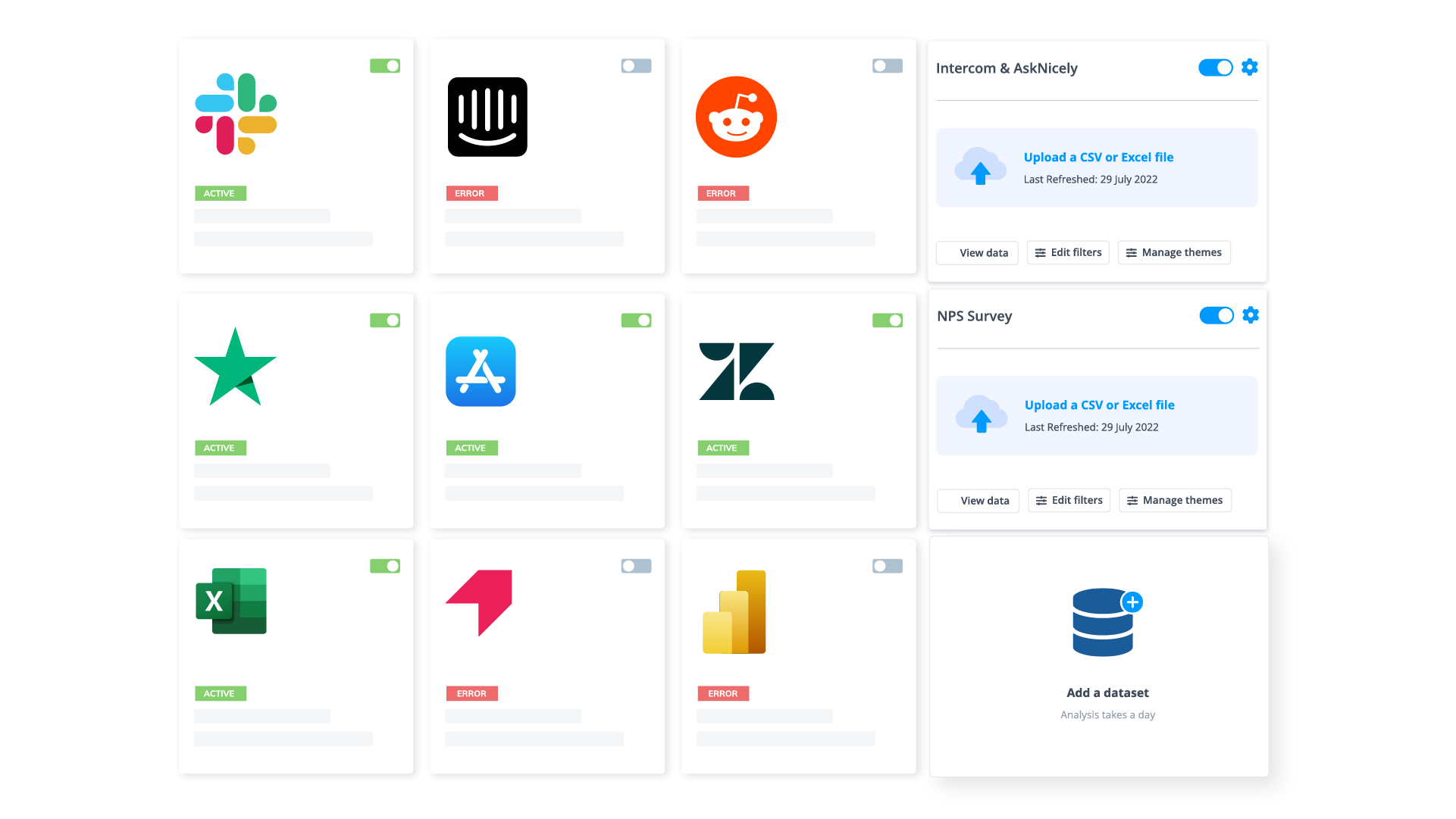

Organizing your qualitative data in a feedback analytics platform

If you have a lot of qualitative customer or employee feedback, from the likes of customer surveys or employee surveys, you will benefit from a feedback analytics platform. A feedback analytics platform is software that automates the process of both sentiment analysis and thematic analysis. Companies use the integrations offered by these platforms to directly tap into their qualitative data sources (review sites, social media, survey responses, etc.). The data collected is then organized and analyzed consistently within the platform.

If you have data prepared in a spreadsheet, it can also be imported into feedback analytics platforms.

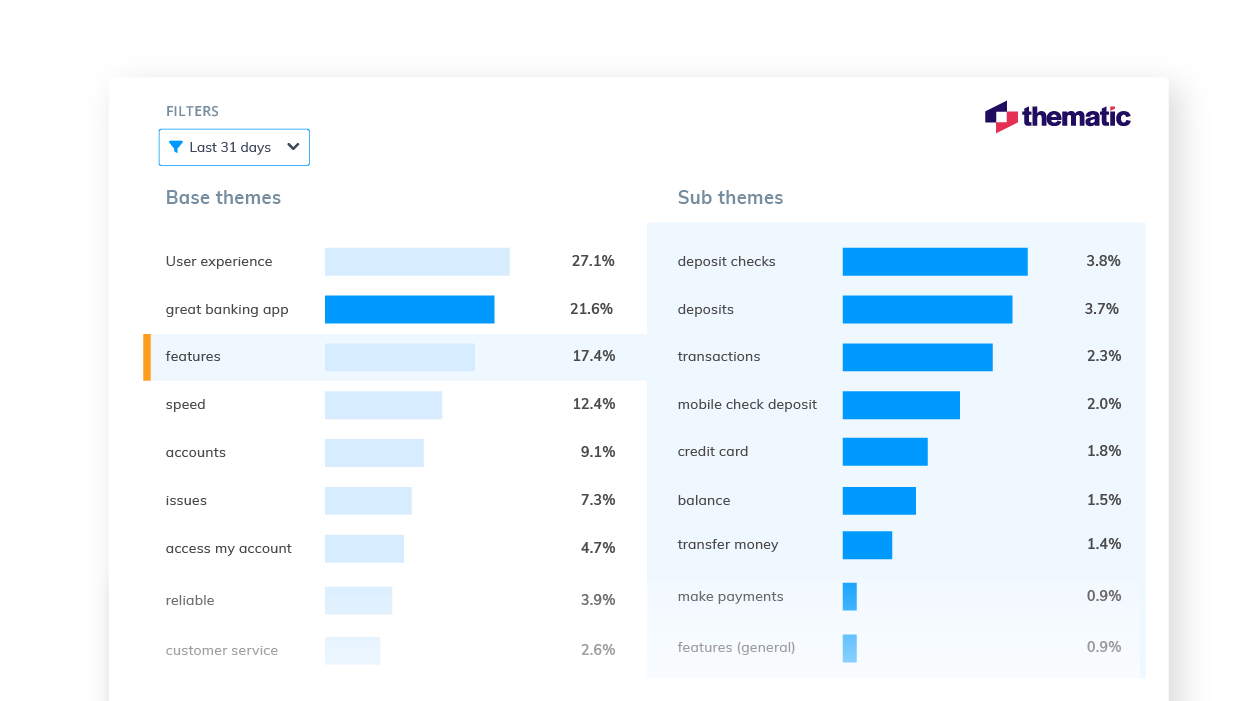

Once all this rich data has been organized within the feedback analytics platform, it is ready to be coded and themed, within the same platform. Thematic is a feedback analytics platform that offers one of the largest libraries of integrations with qualitative data sources.

Step 3: Coding your qualitative data

Your feedback data is now organized in one place, whether that is your spreadsheet, CAQDAS, feedback repository or feedback analytics platform. The next step is to code your feedback data so that you can extract meaningful insights from it in step 4.

Coding is the process of labelling and organizing your data in such a way that you can then identify themes in the data, and the relationships between these themes.

To simplify the coding process, you will take small samples of your customer feedback data, come up with a set of codes, or categories capturing themes, and label each piece of feedback, systematically, for patterns and meaning. Then you will take a larger sample of data, revising and refining the codes for greater accuracy and consistency as you go.

If you choose to use a feedback analytics platform, much of this process will be automated and accomplished for you.

The terms to describe different categories of meaning (‘theme’, ‘code’, ‘tag’, ‘category’ etc) can be confusing as they are often used interchangeably. For clarity, this article will use the term ‘code’.

To code means to identify key words or phrases and assign them to a category of meaning. “I really hate the customer service of this computer software company” would be coded as “poor customer service”.
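To make this concrete, here is a minimal Python sketch of keyword-based coding. The `CODE_KEYWORDS` mapping and the `assign_codes` function are hypothetical, and the trigger phrases are made up for illustration. Real coding relies on human judgment or on NLP rather than literal phrase matching.

```python
# Hypothetical code frame: each code has a few trigger phrases.
CODE_KEYWORDS = {
    "poor customer service": ["hate the customer service", "rude", "no reply"],
    "pricing": ["expensive", "price", "cost"],
}


def assign_codes(text):
    """Return every code whose trigger phrase appears in the text."""
    lowered = text.lower()
    return [
        code
        for code, phrases in CODE_KEYWORDS.items()
        if any(phrase in lowered for phrase in phrases)
    ]


codes = assign_codes(
    "I really hate the customer service of this computer software company"
)
print(codes)  # ['poor customer service']
```

Literal matching like this misses paraphrases ("support never got back to me") and negation, which is one reason manual coding takes careful reading.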

How to manually code your qualitative data

- Decide whether you will use deductive or inductive coding. Deductive coding is when you create a list of predefined codes, and then assign them to the qualitative data. Inductive coding is the opposite: you create codes based on the data itself. Codes arise directly from the data and you label them as you go. You need to weigh up the pros and cons of each coding method and select the most appropriate.

- Read through the feedback data to get a broad sense of what it reveals. Now it’s time to start assigning your first set of codes to statements and sections of text.

- Keep repeating the previous step, adding new codes and revising the code descriptions as often as necessary. Once everything has been coded, go through it all again to be sure there are no inconsistencies and that nothing has been overlooked.

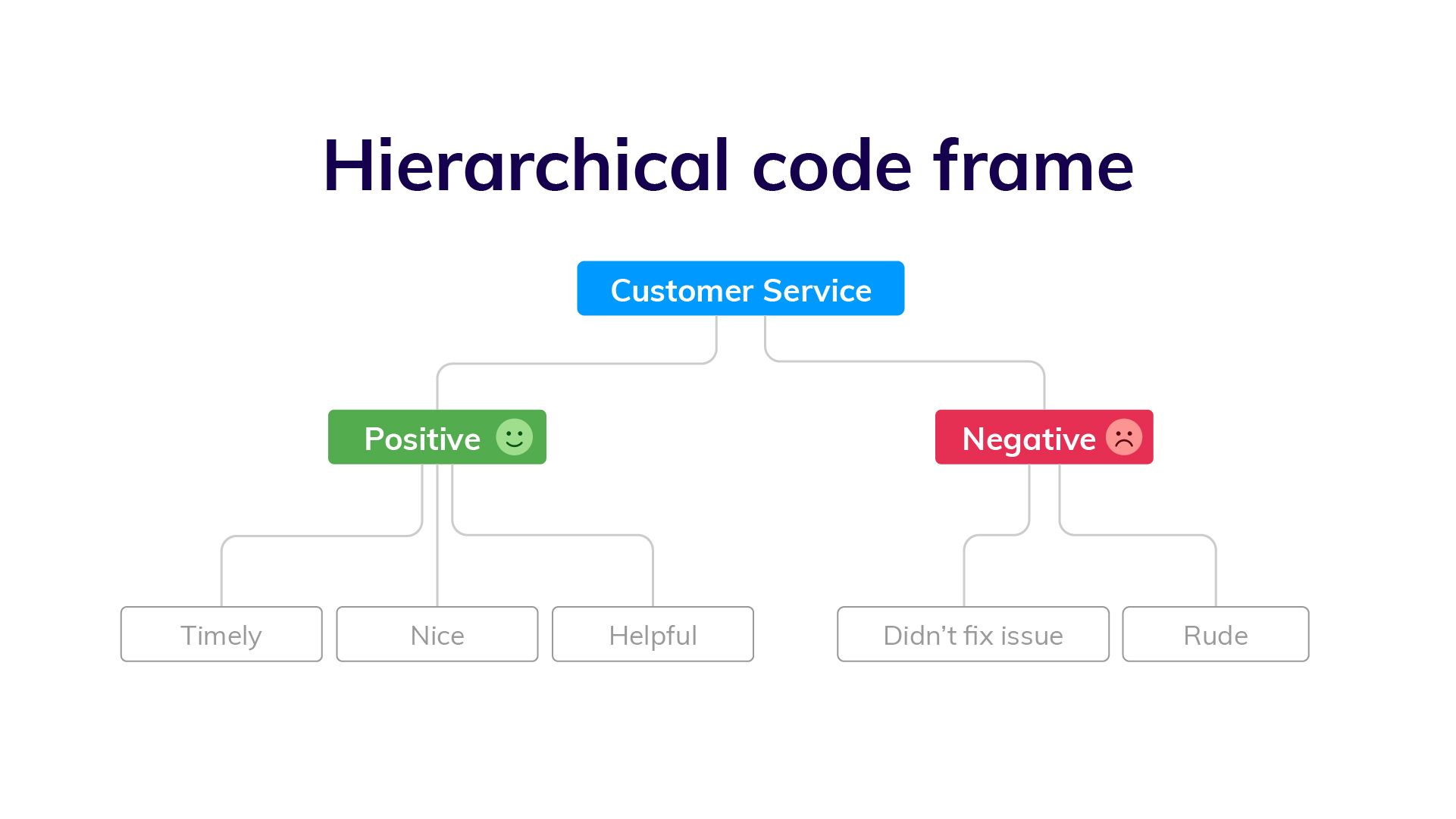

- Create a code frame to group your codes. The code frame is the organizational structure of all your codes. There are two commonly used types of code frame: flat and hierarchical. A hierarchical code frame will make it easier for you to derive insights from your analysis.

- Based on the number of times a particular code occurs, you can now see the common themes in your feedback data. This is insightful! If ‘bad customer service’ is a common code, it’s time to take action.
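The final step above, counting how often each code occurs, can be sketched in a few lines of Python. The `coded_responses` data is made up for illustration. Each inner list holds the codes assigned to one piece of feedback.

```python
from collections import Counter

# Made-up example: codes assigned to four pieces of feedback.
coded_responses = [
    ["bad customer service"],
    ["bad customer service", "pricing"],
    ["ease of use"],
    ["bad customer service"],
]

# Count each code at most once per response.
counts = Counter(code for codes in coded_responses for code in set(codes))

# Express each count as a percentage of all responses.
total = len(coded_responses)
share = {code: round(100 * n / total) for code, n in counts.most_common()}

print(share["bad customer service"])  # 75
```

Reporting a percentage of responses rather than a raw count makes results comparable between datasets of different sizes.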

We have a detailed guide dedicated to manually coding your qualitative data.

Using software to speed up manual coding of qualitative data

An Excel spreadsheet is still a popular method for coding. But various software solutions can help speed up this process. Here are some examples.

- CAQDAS / NVivo - CAQDAS software has built-in functionality that allows you to code text within their software. You may find the interface the software offers easier for managing codes than a spreadsheet.

- Dovetail/EnjoyHQ - You can tag transcripts and other textual data within these solutions. As they are also repositories you may find it simpler to keep the coding in one platform.

- IBM SPSS - SPSS is a statistical analysis software that may make coding easier than in a spreadsheet.

- Ascribe - Ascribe’s ‘Coder’ is a coding management system. Its user interface will make it easier for you to manage your codes.

Automating the qualitative coding process using thematic analysis software

In solutions which speed up the manual coding process, you still have to come up with valid codes and often apply codes manually to pieces of feedback. But there are also solutions that automate both the discovery and the application of codes.

Advances in machine learning have now made it possible to read, code and structure qualitative data automatically. This type of automated coding is offered by thematic analysis software.

Automation makes it far simpler and faster to code the feedback and group it into themes. By incorporating natural language processing (NLP) into the software, the AI looks across sentences and phrases to identify common themes and meaningful statements. Some automated solutions detect repeating patterns and assign codes to them on their own; others require you to train the AI by providing examples.
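As a toy illustration of "detecting repeating patterns", the Python sketch below counts two-word phrases that recur across responses. It is not how Thematic or any other commercial product works. The stopword list, the `bigrams` and `recurring_phrases` helpers and the sample responses are all assumptions made for this example, and real NLP systems are far more sophisticated.

```python
import re
from collections import Counter

# Tiny hypothetical stopword list; real systems use much larger ones.
STOPWORDS = {"the", "a", "is", "was", "to", "and", "of", "i", "my", "for", "very"}


def bigrams(text):
    """Return the set of adjacent word pairs in a text, ignoring stopwords."""
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]
    return set(zip(words, words[1:]))


def recurring_phrases(responses, min_count=2):
    """Find two-word phrases that appear in at least `min_count` responses."""
    counts = Counter(bg for text in responses for bg in bigrams(text))
    return {" ".join(bg): n for bg, n in counts.items() if n >= min_count}


responses = [
    "The customer service was slow",
    "Slow customer service and a confusing invoice",
    "I love the mobile app",
    "My mobile app crashes",
]
themes = recurring_phrases(responses)
print(themes)  # {'customer service': 2, 'mobile app': 2}
```

Note that no list of themes was supplied in advance: the candidate codes emerge from the data, which is the inductive idea behind automated theme discovery.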

Thematic automates the coding of qualitative feedback regardless of source. There’s no need to set up themes or categories in advance. Simply upload your data and wait a few minutes. You can also manually edit the codes to further refine their accuracy. Experiments conducted indicate that Thematic’s automated coding is just as accurate as manual coding.

Paired with sentiment analysis and advanced text analytics - these automated solutions become powerful for deriving quality business or research insights.

You could also build your own, if you have the resources!

The key benefits of using an automated coding solution

Automated analysis can often be set up fast and there’s the potential to uncover things that would never have been revealed if you had given the software a prescribed list of themes to look for.

Because the model applies a consistent rule to the data, it captures phrases or statements that a human eye might have missed.

Complete and consistent analysis of customer feedback enables more meaningful findings. Leading us into step 4.

Step 4: Analyze your data: Find meaningful insights

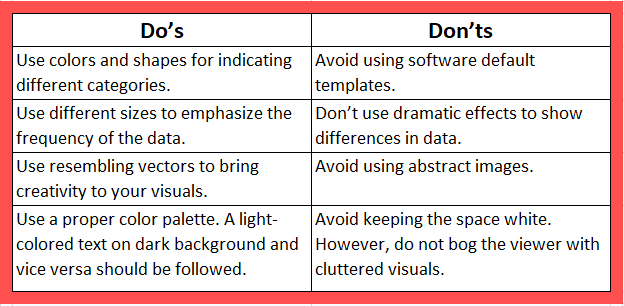

Now we are going to analyze our data to find insights. This is where we start to answer our research questions. Keep in mind that step 4 and step 5 (tell the story) have some overlap. This is because creating visualizations is part of both the analysis process and reporting.

The task of uncovering insights is to scour through the codes that emerge from the data and draw meaningful correlations from them. It is also about making sure each insight is distinct and has enough data to support it.

Part of the analysis is to establish how much each code relates to different demographics and customer profiles, and identify whether there’s any relationship between these data points.

Manually create sub-codes to improve the quality of insights

If your code frame only has one level, you may find that your codes are too broad to be able to extract meaningful insights. This is where it is valuable to create sub-codes to your primary codes. This process is sometimes referred to as meta coding.

Note: If you take an inductive coding approach, you can create sub-codes as you are reading through your feedback data and coding it.

While time-consuming, this exercise will improve the quality of your analysis. Here is an example of what sub-codes could look like.

You need to carefully read your qualitative data to create quality sub-codes. But as you can see, the depth of analysis is greatly improved. By calculating the frequency of these sub-codes you can get insight into which customer service problems you can immediately address.
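Calculating sub-code frequencies and rolling them up to their primary codes can be sketched in Python as follows. The two-level `CODE_FRAME` and the coded responses are hypothetical and only illustrate the mechanics.

```python
from collections import Counter

# Hypothetical two-level code frame: primary code -> sub-codes.
CODE_FRAME = {
    "customer service": ["slow response", "rude staff", "issue not resolved"],
    "pricing": ["too expensive"],
}
# Reverse lookup: sub-code -> primary code.
PARENT = {sub: primary for primary, subs in CODE_FRAME.items() for sub in subs}

# Made-up example: sub-codes assigned to four pieces of feedback.
coded_responses = [
    ["slow response"],
    ["slow response", "issue not resolved"],
    ["rude staff", "too expensive"],
    ["slow response"],
]

# Frequency of each sub-code, counted once per response.
sub_counts = Counter(sub for subs in coded_responses for sub in set(subs))

# Frequency of each primary code, also counted once per response.
primary_counts = Counter(
    primary
    for subs in coded_responses
    for primary in {PARENT[sub] for sub in subs}
)

print(sub_counts.most_common(1))  # [('slow response', 3)]
```

In this made-up data the primary code "customer service" appears in all four responses, but the sub-codes show that slow responses are the specific problem to address first.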

Correlate the frequency of codes to customer segments

Many businesses use customer segmentation, and you may have your own respondent segments that you can apply to your qualitative analysis. Segmentation is the practice of dividing customers or research respondents into subgroups.

Segments can be based on:

- Demographic

- And any other data type that you care to segment by

It is particularly useful to see the occurrence of codes within your segments. If one of your customer segments is considered unimportant to your business, but they are the cause of nearly all customer service complaints, it may be in your best interest to focus attention elsewhere. This is a useful insight!
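Here is a minimal Python sketch of this kind of breakdown. The segment names, the sample responses and the `segment_share` helper are hypothetical.

```python
from collections import Counter, defaultdict

# Made-up coded responses, each tagged with a customer segment.
responses = [
    {"segment": "enterprise", "codes": ["customer service"]},
    {"segment": "free tier", "codes": ["customer service", "pricing"]},
    {"segment": "free tier", "codes": ["customer service"]},
    {"segment": "enterprise", "codes": ["ease of use"]},
]

# Count code occurrences separately for each segment.
by_segment = defaultdict(Counter)
for response in responses:
    by_segment[response["segment"]].update(set(response["codes"]))


def segment_share(code):
    """Fraction of all mentions of `code` that come from each segment."""
    total = sum(counts[code] for counts in by_segment.values())
    return {
        segment: counts[code] / total
        for segment, counts in by_segment.items()
        if counts[code]
    }


cs_share = segment_share("customer service")
```

In this made-up data, two thirds of the "customer service" mentions come from the free tier segment, which is the kind of pattern the paragraph above describes.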

Manually visualizing coded qualitative data

There are formulas you can use to visualize key insights in your data. The formulas we suggest are especially useful if you are measuring a score alongside your feedback.

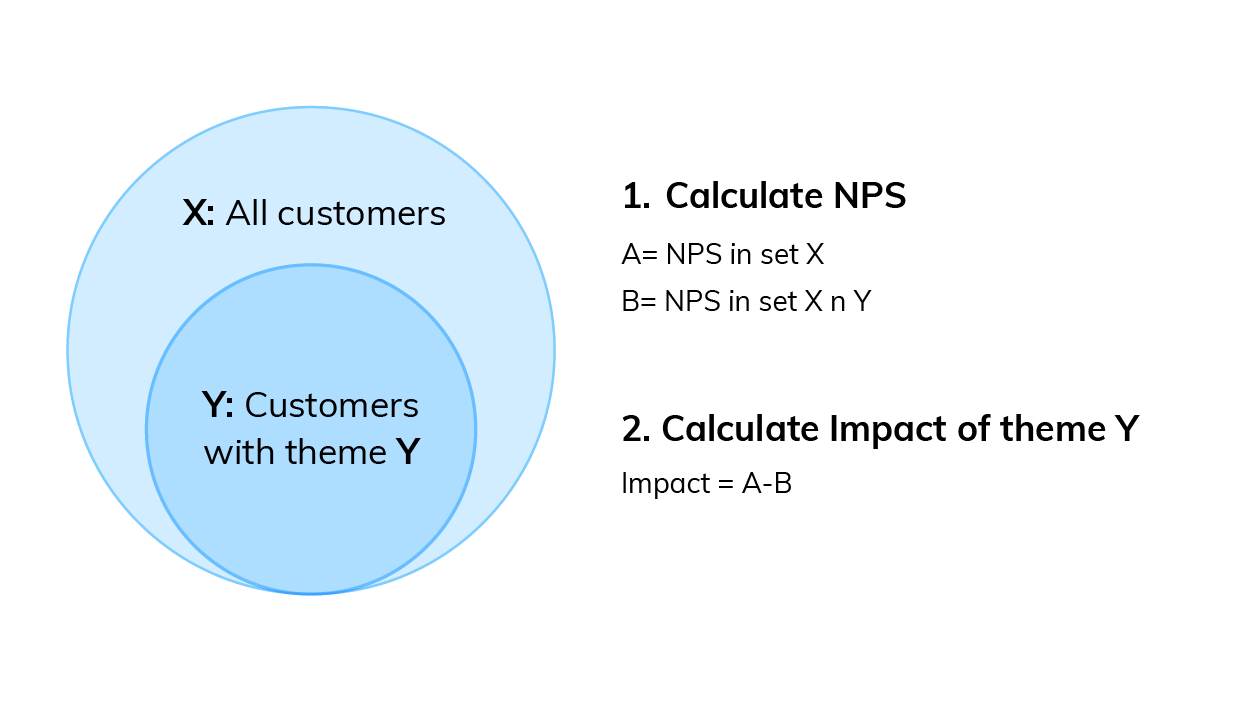

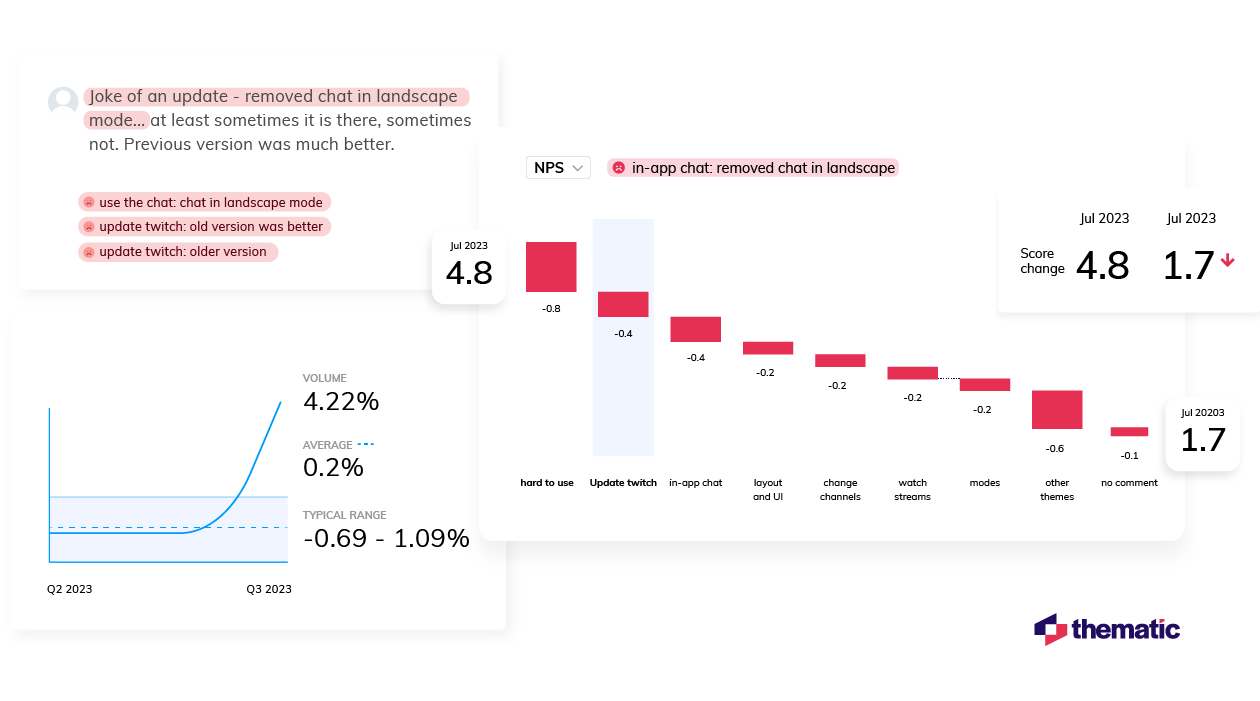

If you are collecting a metric alongside your qualitative data this is a key visualization. Impact answers the question: “What’s the impact of a code on my overall score?”. Using Net Promoter Score (NPS) as an example, first you need to:

- Calculate overall NPS (A)

- Calculate NPS in the subset of responses that do not contain that code (B)

- Subtract B from A

Then you can use this simple formula to calculate code impact on NPS.
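Following the three steps above, the impact of a code is A minus B. Here is a minimal Python sketch, assuming the standard NPS definition (percentage of promoters scoring 9-10 minus percentage of detractors scoring 0-6). The function names and the sample responses are hypothetical.

```python
def nps(scores):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def code_impact(responses, code):
    """Overall NPS (A) minus NPS of responses that lack the code (B)."""
    overall = nps([r["score"] for r in responses])
    without = nps([r["score"] for r in responses if code not in r["codes"]])
    return overall - without


# Made-up survey responses with a 0-10 score and assigned codes.
responses = [
    {"score": 10, "codes": ["ease of use"]},
    {"score": 9, "codes": []},
    {"score": 8, "codes": []},
    {"score": 3, "codes": ["customer service"]},
    {"score": 2, "codes": ["customer service"]},
]

impact = code_impact(responses, "customer service")
```

A negative impact means the score would be higher without the responses that mention the code. This sketch does not handle the edge case where every response contains the code, which would leave the "without" subset empty.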

You can then visualize this data using a bar chart.

You can download our CX toolkit - it includes a template to recreate this.

Trends over time

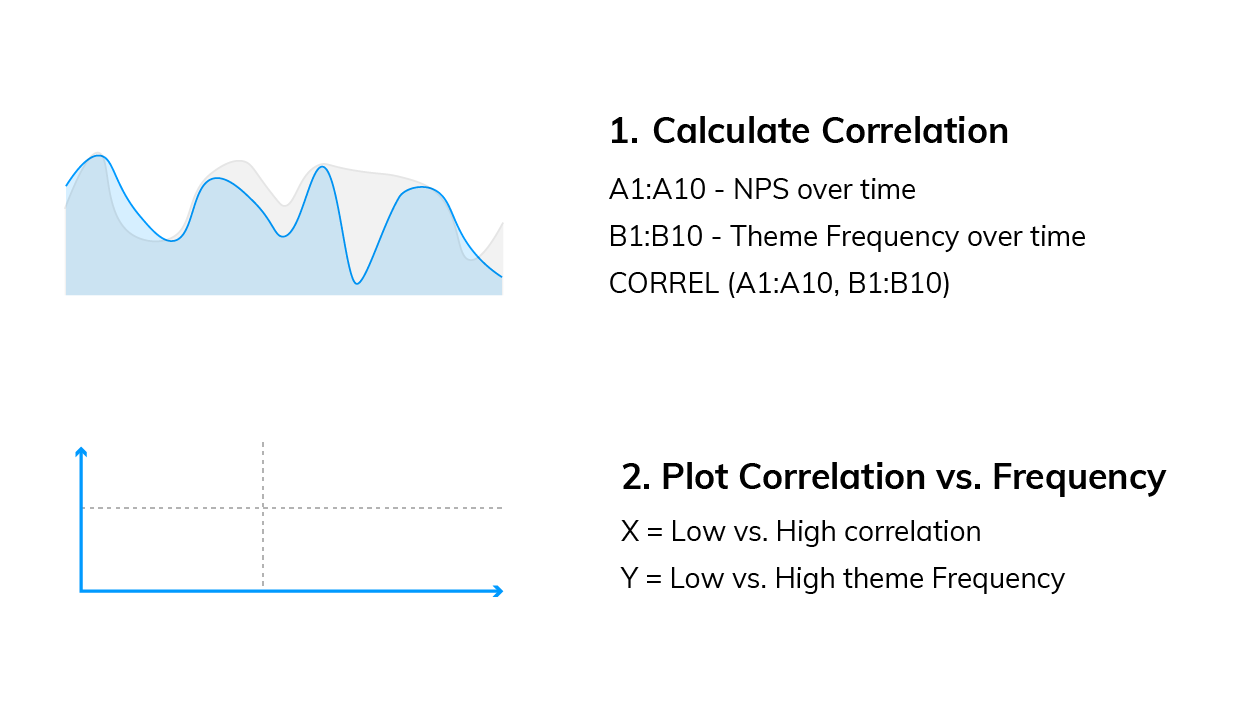

This analysis can help you answer questions like: “Which codes are linked to decreases or increases in my score over time?”

We need to compare two sequences of numbers: NPS over time and code frequency over time. Using Excel, calculate the correlation between the two sequences, which can be either positive (the more codes the higher the NPS, see picture below), or negative (the more codes the lower the NPS).

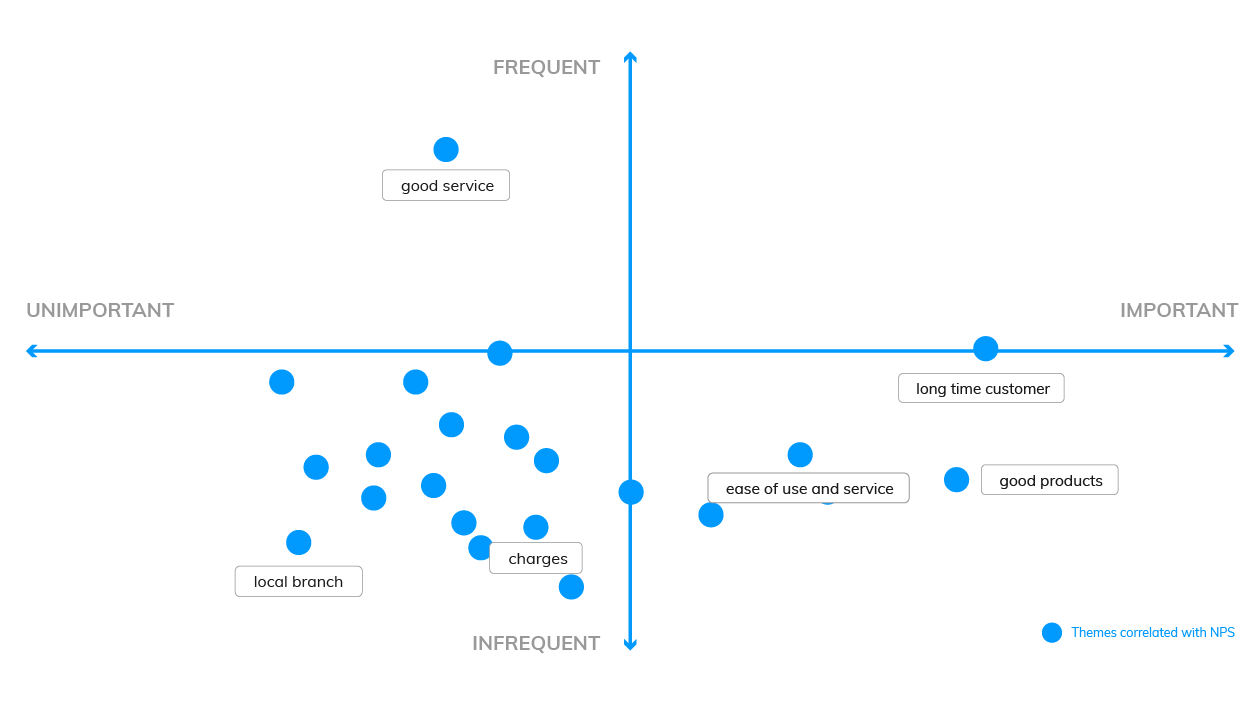
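If you prefer code to a spreadsheet, the same correlation can be computed in Python. The sketch below implements the Pearson correlation coefficient, which is also what Excel's CORREL function returns. The monthly figures are made up for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Made-up monthly series for one code.
nps_by_month = [30, 28, 25, 20, 18]
code_freq_by_month = [5, 7, 10, 14, 15]  # % of responses mentioning the code

r = pearson(nps_by_month, code_freq_by_month)
```

Here `r` is strongly negative: as the code becomes more frequent, NPS falls. Keep in mind that correlation alone does not prove the code caused the drop, and a handful of data points gives only a rough signal.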

Now you need to plot code frequency against the absolute value of code correlation with NPS. Here is the formula:

The visualization could look like this:

These are two examples, but there are more. For a third manual formula, and to learn why word clouds are not an insightful form of analysis, read our visualizations article.

Using a text analytics solution to automate analysis

Automated text analytics solutions enable codes and sub-codes to be pulled out of the data automatically. This makes it far faster and easier to identify what’s driving negative or positive results, to pick up emerging trends, and to find all manner of rich insights in the data.

Another benefit of AI-driven text analytics software is its built-in capability for sentiment analysis, which provides the emotive context behind your feedback and other qualitative textual data.

Thematic provides text analytics that goes further by allowing users to apply their expertise on business context to edit or augment the AI-generated outputs.

Since the move away from manual research is generally about reducing the human element, adding human input to the technology might sound counter-intuitive. However, this is mostly to make sure important business nuances in the feedback aren’t missed during coding. The result is a higher accuracy of analysis. This is sometimes referred to as augmented intelligence.

Step 5: Report on your data: Tell the story

The last step of analyzing your qualitative data is to report on it, to tell the story. At this point, the codes are fully developed and the focus is on communicating the narrative to the audience.

A coherent outline of the qualitative research, the findings and the insights is vital for stakeholders to discuss and debate before they can devise a meaningful course of action.

Creating graphs and reporting in PowerPoint

Typically, qualitative researchers take the tried and tested approach of distilling their report into a series of charts, tables and other visuals which are woven into a narrative for presentation in PowerPoint.

Using visualization software for reporting

With data transformation and APIs, the analyzed data can be shared with data visualisation software, such as Power BI, Tableau, Google Data Studio or Looker. Power BI and Tableau are among the most preferred options.

Visualizing your insights inside a feedback analytics platform

Feedback analytics platforms, like Thematic, incorporate visualisation tools that intuitively turn key data and insights into graphs. This removes the time consuming work of constructing charts to visually identify patterns and creates more time to focus on building a compelling narrative that highlights the insights, in bite-size chunks, for executive teams to review.

Using a feedback analytics platform with visualization tools means you don’t have to use a separate product for visualizations. You can export graphs into PowerPoint straight from the platform.

Conclusion - Manual or Automated?

There are those who remain deeply invested in the manual approach - because it’s familiar, because they’re reluctant to spend money and time learning new software, or because they’ve been burned by the overpromises of AI.

For projects that involve small datasets, manual analysis makes sense, for example when the objective is simply to answer a question like “Do customers prefer concept X to concept Y?”. If the findings are being extracted from a small set of focus groups and interviews, sometimes it’s easier to just read them.

However, as new generations come into the workplace, it’s technology-driven solutions that feel more comfortable and practical. And the merits are undeniable. Especially if the objective is to go deeper and understand the ‘why’ behind customers’ preference for X or Y. And even more especially if time and money are considerations.

The ability to collect a free flow of qualitative feedback data at the same time as the metric means AI can cost-effectively scan, crunch, score and analyze a ton of feedback from one system in one go. And time-intensive processes like focus groups, or coding, that used to take weeks, can now be completed in a matter of hours or days.

But aside from the ever-present business case to speed things up and keep costs down, there are also powerful research imperatives for automated analysis of qualitative data: namely, accuracy and consistency.

Finding insights hidden in feedback requires consistency, especially in coding. It also means catching the ‘unknown unknowns’ that can skew research findings, and steering clear of cognitive bias.

Some say that without manual data analysis researchers won’t get an accurate “feel” for the insights. However, the larger data sets are, the harder it is to sort through the feedback and organize feedback that has been pulled from different places. And the more difficult it is to stay on course, the greater the risk of drawing incorrect or incomplete conclusions.

Though the process steps for qualitative data analysis have remained pretty much unchanged since psychologist Paul Felix Lazarsfeld paved the path a hundred years ago, the impact digital technology has had on the types of qualitative feedback data and the approach to the analysis is profound.

If you want to try an automated feedback analysis solution on your own qualitative data, you can get started with Thematic.

Community & Marketing

Tyler manages our community of CX, insights & analytics professionals. Tyler's goal is to help unite insights professionals around common challenges.


Qualitative Data Coding 101

How to code qualitative data, the smart way (with examples).

By: Jenna Crosley (PhD) | Reviewed by: Dr Eunice Rautenbach | December 2020

As we’ve discussed previously, qualitative research makes use of non-numerical data – for example, words, phrases or even images and video. To analyse this kind of data, the first dragon you’ll need to slay is qualitative data coding (or just “coding” if you want to sound cool). But what exactly is coding and how do you do it?

Overview: Qualitative Data Coding

In this post, we’ll explain qualitative data coding in simple terms. Specifically, we’ll dig into:

- What exactly qualitative data coding is

- What different types of coding exist

- How to code qualitative data (the process)

- Moving from coding to qualitative analysis

- Tips and tricks for quality data coding

What is qualitative data coding?

Let’s start by understanding what a code is. At the simplest level, a code is a label that describes the content of a piece of text. For example, in the sentence:

“Pigeons attacked me and stole my sandwich.”

You could use “pigeons” as a code. This code simply describes that the sentence involves pigeons.

So, building on this, qualitative data coding is the process of creating and assigning codes to categorise data extracts. You’ll then use these codes later down the road to derive themes and patterns for your qualitative analysis (for example, thematic analysis). Coding and analysis can take place simultaneously, but it’s important to note that coding does not necessarily involve identifying themes (depending on which textbook you’re reading, of course). Instead, it generally refers to the process of labelling and grouping similar types of data to make generating themes and analysing the data more manageable.

Makes sense? Great. But why should you bother with coding at all? Why not just look for themes from the outset? Well, coding is a way of making sure your data is valid. It helps ensure that your analysis is undertaken systematically and that other researchers can review it (in the world of research, we call this transparency). In other words, good coding is the foundation of high-quality analysis.

What are the different types of coding?

Now that we’ve got a plain-language definition of coding on the table, the next step is to understand what overarching types of coding exist – in other words, coding approaches. Let’s start with the two main approaches, inductive and deductive.

With deductive coding, you, as the researcher, begin with a set of pre-established codes and apply them to your data set (for example, a set of interview transcripts). Inductive coding on the other hand, works in reverse, as you create the set of codes based on the data itself – in other words, the codes emerge from the data. Let’s take a closer look at both.

Deductive coding 101

With deductive coding, you make use of pre-established codes, which are developed before you interact with the present data. This usually involves drawing up a set of codes based on a research question or previous research. You could also use a code set from the codebook of a previous study.

For example, if you were studying the eating habits of college students, you might have a research question along the lines of

“What foods do college students eat the most?”

As a result of this research question, you might develop a code set that includes codes such as “sushi”, “pizza”, and “burgers”.

Deductive coding allows you to approach your analysis with a very tightly focused lens and quickly identify relevant data. Of course, the downside is that you could miss out on some very valuable insights as a result of this tight, predetermined focus.
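To make the idea concrete, here is a minimal Python sketch of deductive coding. It assumes a hypothetical codebook that maps each pre-established code to a few keywords, and it uses simple keyword matching as a stand-in for the researcher's judgement. The codebook contents, the function name `apply_codes` and the sample extracts are all invented for illustration.

```python
import re

# Hypothetical a priori codebook: code -> keywords that signal it.
CODEBOOK = {
    "sushi": ["sushi", "sashimi"],
    "pizza": ["pizza"],
    "burgers": ["burger", "burgers"],
}

def apply_codes(extract, codebook):
    """Return the sorted pre-established codes whose keywords appear in the extract."""
    words = set(re.findall(r"[a-z']+", extract.lower()))
    return sorted(code for code, keywords in codebook.items()
                  if words & set(keywords))

# Invented interview extracts, for illustration only.
extracts = [
    "I mostly live on pizza during exam week.",
    "We get sushi on Fridays, and sometimes a burger afterwards.",
    "Honestly, I just eat instant noodles.",
]

for extract in extracts:
    print(apply_codes(extract, CODEBOOK), "<-", extract)
```

The last extract receives no code at all, which is exactly the downside described above: anything outside the predetermined code set goes unnoticed unless you deliberately look for it.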

Inductive coding 101

But what about inductive coding? As we touched on earlier, this type of coding involves jumping right into the data and then developing the codes based on what you find within the data.

For example, if you were to analyse a set of open-ended interviews, you wouldn’t necessarily know which direction the conversation would flow. If a conversation begins with a discussion of cats, it may go on to include other animals too, and so you’d add these codes as you progress with your analysis. Simply put, with inductive coding, you “go with the flow” of the data.

Inductive coding is great when you’re researching something that isn’t yet well understood because the coding derived from the data helps you explore the subject. Therefore, this type of coding is usually used when researchers want to investigate new ideas or concepts, or when they want to create new theories.

A little bit of both… hybrid coding approaches

If you’ve got a set of codes you’ve derived from a research topic, literature review or a previous study (i.e. a deductive approach), but you still don’t have a rich enough set to capture the depth of your qualitative data, you can combine deductive and inductive methods – this is called a hybrid coding approach.

To adopt a hybrid approach, you’ll begin your analysis with a set of a priori codes (deductive) and then add new codes (inductive) as you work your way through the data. Essentially, the hybrid coding approach provides the best of both worlds, which is why it’s pretty common to see this in research.
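As a rough sketch of how a hybrid approach might be tracked, the Python below starts from a small a priori codebook and lets new codes join it as they emerge. The codes themselves are still chosen by the researcher; the script only does the bookkeeping. The starting codes, the placeholder definition text and the function name `code_extract` are assumptions made for this example.

```python
# Hypothetical starting point: a priori (deductive) codes with definitions.
codebook = {"cats": "Mentions of cats", "dogs": "Mentions of dogs"}

def code_extract(extract, assigned_codes, codebook):
    """Record the researcher's codes for one extract, adding unseen codes to the codebook."""
    new_codes = []
    for code in assigned_codes:
        if code not in codebook:
            # Inductive step: the code emerged from the data, so register it.
            codebook[code] = "Definition to be written (emerged from data)"
            new_codes.append(code)
    return {"extract": extract, "codes": list(assigned_codes), "new_codes": new_codes}

coded = [
    code_extract("My cat sleeps all day.", ["cats"], codebook),
    code_extract("The cat gets on fine with our parrot.", ["cats", "parrots"], codebook),
]

for item in coded:
    print(item["codes"], "new:", item["new_codes"])
```

Flagging which codes are new makes it easy to go back later and write proper definitions for everything that emerged inductively.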


How to code qualitative data

Now that we’ve looked at the main approaches to coding, the next question you’re probably asking is “how do I actually do it?”. Let’s take a look at the coding process, step by step.

Both inductive and deductive methods of coding typically occur in two stages: initial coding and line by line coding.

In the initial coding stage, the objective is to get a general overview of the data by reading through and understanding it. If you’re using an inductive approach, this is also where you’ll develop an initial set of codes. Then, in the second stage (line by line coding), you’ll delve deeper into the data and (re)organise it according to (potentially new) codes.

Step 1 – Initial coding

The first step of the coding process is to identify the essence of the text and code it accordingly. While there are various qualitative analysis software packages available, you can just as easily code textual data using Microsoft Word’s “comments” feature.

Let’s take a look at a practical example of coding. Assume you had the following interview data from two interviewees:

What pets do you have?

I have an alpaca and three dogs.

Only one alpaca? They can die of loneliness if they don’t have a friend.

I didn’t know that! I’ll just have to get five more.

I have twenty-three bunnies. I initially only had two, I’m not sure what happened.

In the initial stage of coding, you could assign the code of “pets” or “animals”. These are just initial, fairly broad codes that you can (and will) develop and refine later. In the initial stage, broad, rough codes are fine – they’re just a starting point which you will build onto in the second stage.

How to decide which codes to use

But how exactly do you decide what codes to use when there are many ways to read and interpret any given sentence? Well, there are a few different approaches you can adopt. The main approaches to initial coding include:

- In vivo coding

- Process coding

- Open coding

- Descriptive coding

- Structural coding

- Values coding

Let’s take a look at each of these:

In vivo coding

When you use in vivo coding, you make use of participants’ own words, rather than your interpretation of the data. In other words, you use direct quotes from participants as your codes. By doing this, you’ll avoid trying to infer meaning, instead staying as close to the original phrases and words as possible.

In vivo coding is particularly useful when your data are derived from participants who speak different languages or come from different cultures. In these cases, it’s often difficult to accurately infer meaning due to linguistic or cultural differences.

For example, English speakers typically view the future as in front of them and the past as behind them. However, this isn’t the same in all cultures. Speakers of Aymara view the past as in front of them and the future as behind them. Why? Because the future is unknown, so it must be out of sight (or behind us). They know what happened in the past, so their perspective is that it’s positioned in front of them, where they can “see” it.

In a scenario like this one, it’s not possible to derive the reason for viewing the past as in front and the future as behind without knowing the Aymara culture’s perception of time. Therefore, in vivo coding is particularly useful, as it avoids interpretation errors.

Process coding

Next up, there’s process coding, which makes use of action-based codes. Action-based codes are codes that indicate a movement or procedure. These actions are often indicated by gerunds (words ending in “-ing”) – for example, running, jumping or singing.

Process coding is useful as it allows you to code parts of data that aren’t necessarily spoken, but that are still imperative to understanding the meaning of the texts.

An example here would be if a participant were to say something like, “I have no idea where she is”. A sentence like this can be interpreted in many different ways depending on the context and movements of the participant. The participant could shrug their shoulders, which would indicate that they genuinely don’t know where the girl is; however, they could also wink, showing that they do actually know where the girl is.

Simply put, process coding is useful as it allows you to, in a concise manner, identify the main occurrences in a set of data and provide a dynamic account of events. For example, you may have action codes such as, “describing a panda”, “singing a song about bananas”, or “arguing with a relative”.

Descriptive coding

Descriptive coding aims to summarise extracts by using a single word or noun that encapsulates the general idea of the data. These words will typically describe the data in a highly condensed manner, which allows the researcher to quickly refer to the content.

Descriptive coding is very useful when dealing with data that appear in forms other than traditional text – i.e. video clips, sound recordings or images. For example, a descriptive code could be “food” when coding a video clip that involves a group of people discussing what they ate throughout the day, or “cooking” when coding an image showing the steps of a recipe.

Structural coding

Structural coding involves labelling and describing specific structural attributes of the data. Generally, it includes coding according to answers to the questions of “who”, “what”, “where”, and “how”, rather than the actual topics expressed in the data. This type of coding is useful when you want to access segments of data quickly, and it can help tremendously when you’re dealing with large data sets.

For example, if you were coding a collection of theses or dissertations (which would be quite a large data set), structural coding could be useful as you could code according to different sections within each of these documents – i.e. according to the standard dissertation structure. What-centric labels such as “hypothesis”, “literature review”, and “methodology” would help you to efficiently refer to sections and navigate without having to work through sections of data all over again.

Structural coding is also useful for data from open-ended surveys. This data may initially be difficult to code as it lacks the set structure of other forms of data (such as an interview with a strict set of questions to be answered). In this case, it would be useful to code sections of data that answer certain questions such as “who?”, “what?”, “where?” and “how?”.

Let’s take a look at a practical example. If we were to send out a survey asking people about their dogs, we may end up with a (highly condensed) response such as the following:

Bella is my best friend. When I’m at home I like to sit on the floor with her and roll her ball across the carpet for her to fetch and bring back to me. I love my dog.

In this set, we could code Bella as “who”, dog as “what”, home and floor as “where”, and roll her ball as “how”.
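One simple way to store structural codes like these is a mapping from each question to the segments that answer it. The Python below is a minimal sketch under that assumption. The dictionary layout and the helper `segments_for` are illustrative choices rather than a standard format, and the codes are still assigned by hand.

```python
response = ("Bella is my best friend. When I'm at home I like to sit on the floor "
            "with her and roll her ball across the carpet for her to fetch and "
            "bring back to me. I love my dog.")

# Structural codes assigned by the researcher, following the example above.
structural_codes = {
    "who": ["Bella"],
    "what": ["dog"],
    "where": ["home", "floor"],
    "how": ["roll her ball"],
}

def segments_for(question, codes, text):
    """Return the coded segments for a structural question, checking each appears in the text."""
    segments = codes.get(question, [])
    missing = [segment for segment in segments if segment not in text]
    if missing:
        raise ValueError(f"Segments not found in text: {missing}")
    return segments

print(segments_for("where", structural_codes, response))
```

Checking that every coded segment really occurs in the response is a cheap guard against typos when the codes are entered manually.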

Values coding

Finally, values coding involves coding that relates to the participant’s worldviews. Typically, this type of coding focuses on excerpts that reflect the values, attitudes, and beliefs of the participants. Values coding is therefore very useful for research exploring cultural values and intrapersonal and interpersonal experiences and actions.

To recap, the aim of initial coding is to understand and familiarise yourself with your data, to develop an initial code set (if you’re taking an inductive approach) and to take the first shot at coding your data. The coding approaches above allow you to arrange your data so that it’s easier to navigate during the next stage, line by line coding (we’ll get to this soon).

While these approaches can all be used individually, it’s important to remember that it’s possible, and potentially beneficial, to combine them. For example, when conducting initial coding with interviews, you could begin by using structural coding to indicate who speaks when. Then, as a next step, you could apply descriptive coding so that you can navigate to, and between, conversation topics easily. You can check out some examples of various techniques here.

Step 2 – Line by line coding

Once you’ve got an overall idea of your data, are comfortable navigating it and have applied some initial codes, you can move on to line by line coding. Line by line coding is pretty much exactly what it sounds like – reviewing your data, line by line, digging deeper and assigning additional codes to each line.

With line-by-line coding, the objective is to pay close attention to your data to add detail to your codes. For example, if you have a discussion of beverages and you previously just coded this as “beverages”, you could now go deeper and code more specifically, such as “coffee”, “tea”, and “orange juice”. The aim here is to scratch below the surface. This is the time to get detailed and specific so as to capture as much richness from the data as possible.

In the line-by-line coding process, it’s useful to code everything in your data, even if you don’t think you’re going to use it (you may just end up needing it!). As you go through this process, your coding will become more thorough and detailed, and you’ll have a much better understanding of your data as a result of this, which will be incredibly valuable in the analysis phase.

Moving from coding to analysis

Once you’ve completed your initial coding and line by line coding, the next step is to start your analysis. Of course, the coding process itself will get you in “analysis mode” and you’ll probably already have some insights and ideas as a result of it, so you should always keep notes of your thoughts as you work through the coding.

When it comes to qualitative data analysis, there are many different types of analyses (we discuss some of the most popular ones here) and the type of analysis you adopt will depend heavily on your research aims, objectives and questions. Therefore, we’re not going to go down that rabbit hole here, but we’ll cover the important first steps that build the bridge from qualitative data coding to qualitative analysis.

When starting to think about your analysis, it’s useful to ask yourself the following questions to get the wheels turning:

- What actions are shown in the data?

- What are the aims of these interactions and excerpts? What are the participants potentially trying to achieve?

- How do participants interpret what is happening, and how do they speak about it? What does their language reveal?

- What are the assumptions made by the participants?

- What are the participants doing? What is going on?

- Why do I want to learn about this? What am I trying to find out?

- Why did I include this particular excerpt? What does it represent and how?

Code categorisation

Categorisation is simply the process of reviewing everything you’ve coded and then creating code categories that can be used to guide your future analysis. In other words, it’s about creating categories for your code set. Let’s take a look at a practical example.

If you were discussing different types of animals, your initial codes may be “dogs”, “llamas”, and “lions”. In the process of categorisation, you could label (categorise) these three animals as “mammals”, whereas you could categorise “flies”, “crickets”, and “beetles” as “insects”. By creating these code categories, you will be making your data more organised, as well as enriching it so that you can see new connections between different groups of codes.
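The animal example above can be expressed as a simple lookup from code to category. The Python below is a minimal sketch. The “uncategorised” bucket and the extra “parrots” code are assumptions added to show what happens when a code has not yet been assigned a category.

```python
from collections import defaultdict

# Category assignments taken from the example above.
CODE_TO_CATEGORY = {
    "dogs": "mammals", "llamas": "mammals", "lions": "mammals",
    "flies": "insects", "crickets": "insects", "beetles": "insects",
}

def categorise(codes, code_to_category):
    """Group codes under their category; unknown codes land in 'uncategorised'."""
    grouped = defaultdict(list)
    for code in codes:
        grouped[code_to_category.get(code, "uncategorised")].append(code)
    return dict(grouped)

grouped = categorise(["dogs", "flies", "lions", "beetles", "parrots"], CODE_TO_CATEGORY)
print(grouped)
```

Anything that ends up under “uncategorised” is a prompt to revisit your categories, either by extending an existing one or by creating a new one.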

Theme identification

From the coding and categorisation processes, you’ll naturally start noticing themes. Therefore, the logical next step is to identify and clearly articulate the themes in your data set. When you determine themes, you’ll take what you’ve learned from the coding and categorisation and group it all together to develop themes. This is the part of the coding process where you’ll try to draw meaning from your data, and start to produce a narrative. The nature of this narrative depends on your research aims and objectives, as well as your research questions (sounds familiar?) and the qualitative data analysis method you’ve chosen, so keep these factors front of mind as you scan for themes.

Tips & tricks for quality coding

Before we wrap up, let’s quickly look at some general advice, tips and suggestions to ensure your qualitative data coding is top-notch.

- Before you begin coding, plan out the steps you will take and the coding approach and technique(s) you will follow to avoid inconsistencies.

- When adopting deductive coding, it’s useful to use a codebook from the start of the coding process. This will keep your work organised and will ensure that you don’t forget any of your codes.

- Whether you’re adopting an inductive or deductive approach, keep track of the meanings of your codes and remember to revisit these as you go along.

- Avoid using synonyms for codes that are similar, if not the same. This will allow you to have a more uniform and accurate coded dataset and will also help you to not get overwhelmed by your data.

- While coding, make sure that you remind yourself of your aims and coding method. This will help you to avoid directional drift, which happens when coding is not kept consistent.

- If you are working in a team, make sure that everyone has been trained and understands how codes need to be assigned.
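Several of these tips (use a codebook, track the meaning of each code, avoid synonyms) can be supported with a very small script. The Python below is a sketch only. It assumes a hypothetical codebook in which each canonical code has a definition and a set of known synonyms, and it refuses labels that are not in the codebook so that new codes are always added deliberately. The codes, definitions and function name are invented.

```python
# Hypothetical codebook: one canonical code per idea, with a definition and known synonyms.
CODEBOOK = {
    "beverages": {"definition": "Any mention of drinks",
                  "synonyms": {"drinks", "refreshments"}},
    "pets": {"definition": "Animals kept at home",
             "synonyms": {"companion animals"}},
}

def canonical_code(label, codebook):
    """Map a label to its canonical code, or raise if it is not in the codebook."""
    cleaned = label.strip().lower()
    for code, entry in codebook.items():
        if cleaned == code or cleaned in entry["synonyms"]:
            return code
    raise KeyError(f"'{label}' is not in the codebook; add it with a definition first")

print(canonical_code("Drinks", CODEBOOK))
```

In a team, sharing one such codebook gives everyone the same definitions to work from, which helps keep code assignment consistent.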



Tips for a qualitative dissertation

Veronika Williams

17 October 2017

Tips for students

This blog is part of a series for Evidence-Based Health Care MSc students undertaking their dissertations.

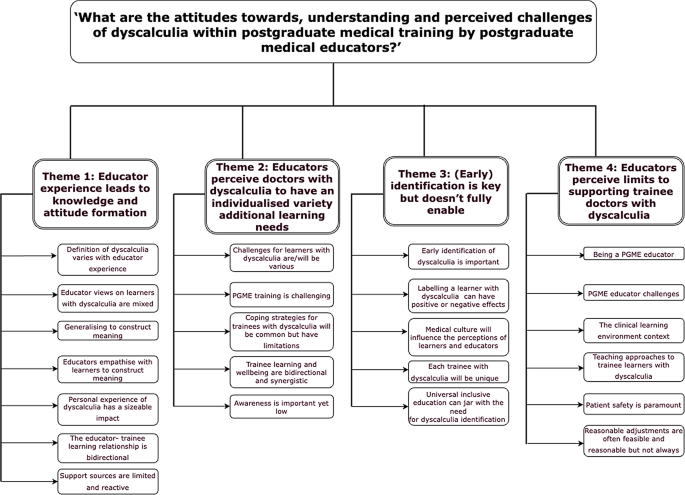

Undertaking an MSc dissertation in Evidence-Based Health Care (EBHC) may be your first hands-on experience of doing qualitative research. I chatted to Dr. Veronika Williams, an experienced qualitative researcher, and tutor on the EBHC programme, to find out her top tips for producing a high-quality qualitative EBHC thesis.

1) Make the switch from a quantitative to a qualitative mindset

It’s not just about replacing numbers with words. Doing qualitative research requires you to adopt a different way of seeing and interpreting the world around you. Veronika asks her students to reflect on positivist and interpretivist approaches: If you come from a scientific or medical background, positivism is often the unacknowledged status quo. Be open to considering there are alternative ways to generate and understand knowledge.

2) Reflect on your role

Quantitative research strives to produce “clean” data unbiased by the context in which it was generated. With qualitative methods, this is neither possible nor desirable. Students should reflect on how their background and personal views shape the way they collect and analyse their data. This will not only add to the transparency of your work but will also help you interpret your findings.

3) Don’t forget the theory

Qualitative researchers use theories as a lens through which they understand the world around them. Veronika suggests that students consider the theoretical underpinning to their own research at the earliest stages. You can read an article about why theories are useful in qualitative research here.

4) Think about depth rather than breadth

Qualitative research is all about developing a deep and insightful understanding of the phenomenon/ concept you are studying. Be realistic about what you can achieve given the time constraints of an MSc. Veronika suggests that collecting and analysing a smaller dataset well is preferable to producing a superficial, rushed analysis of a larger dataset.

5) Blur the boundaries between data collection, analysis and writing up

Veronika strongly recommends keeping a research diary or using memos to jot down your ideas as your research progresses. Not only do these add to your audit trail, these entries will help contribute to your first draft and the process of moving towards theoretical thinking. Qualitative researchers move back and forward between their dataset and manuscript as their ideas develop. This enriches their understanding and allows emerging theories to be explored.

6) Move beyond the descriptive

When analysing interviews, for example, it can be tempting to think that having coded your transcripts you are nearly there. This is not the case! You need to move beyond the descriptive codes to conceptual themes and theoretical thinking in order to produce a high-quality thesis. Veronika warns against falling into the trap of thinking writing up is, “Two interviewees said this whilst three interviewees said that”.

7) It’s not just about the average experience

When analysing your data, consider the outliers or negative cases, for example, those that found the intervention unacceptable. Although in the minority, these respondents will often provide more meaningful insight into the phenomenon or concept you are trying to study.

8) Bounce ideas

Veronika recommends sharing your emerging ideas and findings with someone else, maybe with a different background or perspective. This isn’t about getting to the “right answer” rather it offers you the chance to refine your thinking. Be sure, though, to fully acknowledge their contribution in your thesis.

9) Be selective

It can be a challenge to meet the dissertation word limit. It won’t be possible to present all the themes generated by your dataset, so focus! Use quotes from across your dataset that best encapsulate the themes you are presenting. Display additional data in the appendix. For example, Veronika suggests illustrating how you moved from your coding framework to your themes.

10) Don’t panic!

There will be a stage during analysis and write up when it seems undoable. Unlike quantitative research, which begins analysis with a clear plan, qualitative research is more of a journey. Everything will fall into place by the end. Be sure, though, to allow yourself enough time to make sense of the rich data qualitative research generates.


Frequently asked questions

How do you analyze qualitative data?

There are various approaches to qualitative data analysis, but they all share five steps in common:

- Prepare and organize your data.

- Review and explore your data.

- Develop a data coding system.

- Assign codes to the data.

- Identify recurring themes.

The specifics of each step depend on the focus of the analysis. Some common approaches include textual analysis, thematic analysis, and discourse analysis.
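As a rough illustration of the last step, the Python sketch below counts how many extracts each code appears in and keeps the ones that recur. It assumes the first four steps are already done, so each extract carries its assigned codes. The sample data, the function name and the `min_extracts` threshold are hypothetical, and a frequency count is only a starting point for identifying themes, not a substitute for interpretation.

```python
from collections import Counter

# Steps 1-4 are assumed done: each extract already carries the codes assigned to it.
coded_extracts = [
    {"id": 1, "codes": ["price", "delivery"]},
    {"id": 2, "codes": ["delivery"]},
    {"id": 3, "codes": ["support", "delivery", "price"]},
    {"id": 4, "codes": ["support"]},
]

def recurring_codes(coded_extracts, min_extracts=2):
    """Count the extracts each code appears in and keep codes that meet the threshold."""
    counts = Counter(code for item in coded_extracts for code in set(item["codes"]))
    return [(code, n) for code, n in counts.most_common() if n >= min_extracts]

for code, n in recurring_codes(coded_extracts):
    print(f"{code}: appears in {n} extracts")
```

Using `set(item["codes"])` means a code repeated within one extract is counted once, so the numbers reflect how widely a code is spread rather than how often it was typed.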

Frequently asked questions: Methodology

Attrition refers to participants leaving a study. It always happens to some extent—for example, in randomized controlled trials for medical research.

Differential attrition occurs when attrition or dropout rates differ systematically between the intervention and the control group. As a result, the characteristics of the participants who drop out differ from the characteristics of those who stay in the study. Because of this, study results may be biased.

Action research is conducted in order to solve a particular issue immediately, while case studies are often conducted over a longer period of time and focus more on observing and analyzing a particular ongoing phenomenon.

Action research is focused on solving a problem or informing individual and community-based knowledge in a way that impacts teaching, learning, and other related processes. It is less focused on contributing theoretical input, instead producing actionable input.

Action research is particularly popular with educators as a form of systematic inquiry because it prioritizes reflection and bridges the gap between theory and practice. Educators are able to simultaneously investigate an issue as they solve it, and the method is very iterative and flexible.

A cycle of inquiry is another name for action research. It is usually visualized in a spiral shape following a series of steps, such as “planning → acting → observing → reflecting.”

To make quantitative observations, you need to use instruments that are capable of measuring the quantity you want to observe. For example, you might use a ruler to measure the length of an object or a thermometer to measure its temperature.

Criterion validity and construct validity are both types of measurement validity. In other words, they both show you how accurately a method measures something.

While construct validity is the degree to which a test or other measurement method measures what it claims to measure, criterion validity is the degree to which a test can predictively (in the future) or concurrently (in the present) measure something.

Construct validity is often considered the overarching type of measurement validity. You need to have face validity, content validity, and criterion validity in order to achieve construct validity.

Convergent validity and discriminant validity are both subtypes of construct validity. Together, they help you evaluate whether a test measures the concept it was designed to measure.

- Convergent validity indicates whether a test that is designed to measure a particular construct correlates with other tests that assess the same or similar construct.

- Discriminant validity indicates whether two tests that should not be highly related to each other are indeed not related. This type of validity is also called divergent validity.

You need to assess both in order to demonstrate construct validity. Neither one alone is sufficient for establishing construct validity.

Content validity shows you how accurately a test or other measurement method taps into the various aspects of the specific construct you are researching.

In other words, it helps you answer the question: “does the test measure all aspects of the construct I want to measure?” If it does, then the test has high content validity.

The higher the content validity, the more accurate the measurement of the construct.

If the test fails to include parts of the construct, or irrelevant parts are included, the validity of the instrument is threatened, which brings your results into question.

Face validity and content validity are similar in that they both evaluate how suitable the content of a test is. The difference is that face validity is subjective, and assesses content at surface level.

When a test has strong face validity, anyone would agree that the test’s questions appear to measure what they are intended to measure.

For example, looking at a 4th grade math test consisting of problems in which students have to add and multiply, most people would agree that it has strong face validity (i.e., it looks like a math test).

On the other hand, content validity evaluates how well a test represents all the aspects of a topic. Assessing content validity is more systematic and relies on expert evaluation of each question, analyzing whether each one covers the aspects that the test was designed to cover.

A 4th grade math test would have high content validity if it covered all the skills taught in that grade. Experts (in this case, math teachers) would have to evaluate the content validity by comparing the test to the learning objectives.

Snowball sampling is a non-probability sampling method. Unlike probability sampling (which involves some form of random selection), the initial individuals selected to be studied are the ones who recruit new participants.

Because not every member of the target population has an equal chance of being recruited into the sample, selection in snowball sampling is non-random.

Snowball sampling is a non-probability sampling method, where there is not an equal chance for every member of the population to be included in the sample.

This means that you cannot use inferential statistics and make generalizations, which is often the goal of quantitative research. As such, a snowball sample is not representative of the target population and is usually a better fit for qualitative research.

Snowball sampling relies on the use of referrals. Here, the researcher recruits one or more initial participants, who then recruit the next ones.

Participants share similar characteristics and/or know each other. Because of this, not every member of the population has an equal chance of being included in the sample, giving rise to sampling bias.
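The referral mechanism can be illustrated with a small simulation. The network, the names, and the `snowball_sample` helper below are hypothetical. The sketch recruits in waves from one seed participant and shows why anyone outside the seed's social circle ("gus" here) can never enter the sample.

```python
from collections import deque

# Hypothetical referral network: who each participant is able to refer.
referrals = {
    "ana": ["ben", "cal"],
    "ben": ["dee"],
    "cal": ["dee", "eli"],
    "dee": [],
    "eli": ["fay"],
    "fay": [],
    "gus": [],  # nobody refers gus, so referrals can never reach him
}

def snowball_sample(seeds, network, max_size):
    """Recruit in waves: each recruited participant refers the next ones."""
    sample, queue = [], deque(seeds)
    seen = set(seeds)
    while queue and len(sample) < max_size:
        person = queue.popleft()
        sample.append(person)
        for contact in network.get(person, []):
            if contact not in seen:
                seen.add(contact)
                queue.append(contact)
    return sample

sample = snowball_sample(["ana"], referrals, max_size=10)
print(sample)
```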

Snowball sampling is best used in the following cases:

- If there is no sampling frame available (e.g., people with a rare disease)

- If the population of interest is hard to access or locate (e.g., people experiencing homelessness)

- If the research focuses on a sensitive topic (e.g., extramarital affairs)

The reproducibility and replicability of a study can be ensured by writing a transparent, detailed method section and using clear, unambiguous language.

Reproducibility and replicability are related terms.

- Reproducing research entails reanalyzing the existing data in the same manner.

- Replicating (or repeating) the research entails reconducting the entire analysis, including the collection of new data.

- A successful reproduction shows that the data analyses were conducted in a fair and honest manner.

- A successful replication shows that the reliability of the results is high.

Stratified sampling and quota sampling both involve dividing the population into subgroups and selecting units from each subgroup. The purpose in both cases is to select a representative sample and/or to allow comparisons between subgroups.

The main difference is that in stratified sampling, you draw a random sample from each subgroup (probability sampling). In quota sampling you select a predetermined number or proportion of units, in a non-random manner (non-probability sampling).
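The contrast can be made concrete with a short sketch. The population, the subgroup labels, and both helper functions are hypothetical. "Taking the first units encountered" is used here as one simple stand-in for non-random selection.

```python
import random

# Hypothetical population of (person_id, subgroup) pairs.
population = [(i, "student" if i % 4 == 0 else "employee") for i in range(200)]

def stratified_sample(units, per_group, seed=0):
    """Probability sampling: draw a random sample within each subgroup."""
    rng = random.Random(seed)
    groups = {}
    for unit in units:
        groups.setdefault(unit[1], []).append(unit)
    return {g: rng.sample(members, per_group) for g, members in groups.items()}

def quota_sample(units, per_group):
    """Non-probability sampling: take units as they come until each quota is full."""
    picked = {}
    for unit in units:
        bucket = picked.setdefault(unit[1], [])
        if len(bucket) < per_group:
            bucket.append(unit)
    return picked

strat = stratified_sample(population, per_group=5)
quota = quota_sample(population, per_group=5)
print([u[0] for u in quota["student"]])  # always the first students encountered
```

Both samples contain five units per subgroup, but only in the stratified sample did every member of a subgroup have the same chance of being picked.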

Purposive and convenience sampling are both sampling methods that are typically used in qualitative data collection.

A convenience sample is drawn from a source that is conveniently accessible to the researcher. Convenience sampling does not distinguish characteristics among the participants. On the other hand, purposive sampling focuses on selecting participants possessing characteristics associated with the research study.

The findings of studies based on either convenience or purposive sampling can only be generalized to the (sub)population from which the sample is drawn, and not to the entire population.

Random sampling or probability sampling is based on random selection. This means that each unit has an equal chance (i.e., equal probability) of being included in the sample.

On the other hand, convenience sampling involves stopping whoever happens to be available, which means that not everyone has an equal chance of being selected: who ends up in the sample depends on the place, time, or day you are collecting your data.

Convenience sampling and quota sampling are both non-probability sampling methods. They both use non-random criteria like availability, geographical proximity, or expert knowledge to recruit study participants.

However, in convenience sampling, you continue to sample units or cases until you reach the required sample size.

In quota sampling, you first need to divide your population of interest into subgroups (strata) and estimate their proportions (quota) in the population. Then you can start your data collection, using convenience sampling to recruit participants, until the proportions in each subgroup coincide with the estimated proportions in the population.

A sampling frame is a list of every member in the entire population. It is important that the sampling frame is as complete as possible, so that your sample accurately reflects your population.

Stratified and cluster sampling may look similar, but bear in mind that groups created in cluster sampling are heterogeneous, so the individual characteristics in the cluster vary. In contrast, groups created in stratified sampling are homogeneous, as units share characteristics.

Relatedly, in cluster sampling you randomly select entire groups and include all units of each group in your sample. However, in stratified sampling, you select some units of all groups and include them in your sample. In this way, both methods can ensure that your sample is representative of the target population.
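The selection logic of the two methods can be compared side by side. In this minimal sketch the schools, the pupils, and both helper functions are hypothetical. Cluster sampling keeps every unit from a few randomly chosen groups, while stratified sampling keeps a few randomly chosen units from every group.

```python
import random

# Hypothetical population: 4 schools (groups) with 6 pupils each.
schools = {f"school_{s}": [f"s{s}_pupil_{p}" for p in range(6)] for s in range(4)}

def cluster_sample(groups, n_groups, seed=0):
    """Randomly pick whole groups and keep every unit in them."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(groups), n_groups)
    return [unit for g in chosen for unit in groups[g]]

def stratified_sample(groups, n_per_group, seed=0):
    """Randomly pick some units from every group."""
    rng = random.Random(seed)
    return [unit for g in sorted(groups)
            for unit in rng.sample(groups[g], n_per_group)]

cluster = cluster_sample(schools, n_groups=2)           # 2 schools x 6 pupils
stratified = stratified_sample(schools, n_per_group=3)  # 4 schools x 3 pupils
print(len(cluster), len(stratified))
```

Both samples have the same size here, but the cluster sample covers only two schools while the stratified sample covers all four.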

A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.

The key difference between observational studies and experimental designs is that a well-done observational study does not influence the responses of participants, while experiments do have some sort of treatment condition applied to at least some participants by random assignment.

An observational study is a great choice for you if your research question is based purely on observations. If there are ethical, logistical, or practical concerns that prevent you from conducting a traditional experiment, an observational study may be a good choice. In an observational study, there is no interference or manipulation of the research subjects, as well as no control or treatment groups.

It’s often best to ask a variety of people to review your measurements. You can ask experts, such as other researchers, or laypeople, such as potential participants, to judge the face validity of tests.

While experts have a deep understanding of research methods, the people you’re studying can provide you with valuable insights you may have missed otherwise.

Face validity is important because it’s a simple first step to measuring the overall validity of a test or technique. It’s a relatively intuitive, quick, and easy way to start checking whether a new measure seems useful at first glance.

Good face validity means that anyone who reviews your measure says that it seems to be measuring what it’s supposed to. With poor face validity, someone reviewing your measure may be left confused about what you’re measuring and why you’re using this method.

Face validity is about whether a test appears to measure what it’s supposed to measure. This type of validity is concerned with whether a measure seems relevant and appropriate for what it’s assessing only on the surface.

Statistical analyses are often applied to test validity with data from your measures. You test convergent validity and discriminant validity with correlations to see if results from your test are positively or negatively related to those of other established tests.

You can also use regression analyses to assess whether your measure is actually predictive of outcomes that you expect it to predict theoretically. A regression analysis that supports your expectations strengthens your claim of construct validity.
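The correlation check for convergent and discriminant validity might look like the sketch below. The scores, the scale names, and the `pearson` helper are hypothetical and chosen only for illustration. With real data you would also want a larger sample and a significance test.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

# Hypothetical scores for six participants.
new_anxiety_scale = [12, 18, 9, 22, 15, 20]
established_anxiety_scale = [14, 19, 10, 24, 15, 21]  # same construct
shoe_size = [42, 38, 41, 40, 39, 43]                  # unrelated construct

# Convergent validity: expect a strong positive correlation.
convergent_r = pearson(new_anxiety_scale, established_anxiety_scale)
# Discriminant validity: expect a correlation close to zero.
discriminant_r = pearson(new_anxiety_scale, shoe_size)

print(f"convergent r = {convergent_r:.2f}, discriminant r = {discriminant_r:.2f}")
```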

When designing or evaluating a measure, construct validity helps you ensure you’re actually measuring the construct you’re interested in. If you don’t have construct validity, you may inadvertently measure unrelated or distinct constructs and lose precision in your research.

Construct validity is often considered the overarching type of measurement validity, because it covers all of the other types. You need to have face validity, content validity, and criterion validity to achieve construct validity.

Construct validity is about how well a test measures the concept it was designed to evaluate. It’s one of four types of measurement validity, which include construct validity, content validity, face validity, and criterion validity.

There are two subtypes of construct validity.

- Convergent validity: The extent to which your measure corresponds to measures of related constructs

- Discriminant validity: The extent to which your measure is unrelated or negatively related to measures of distinct constructs

Naturalistic observation is a valuable tool because of its flexibility, external validity, and suitability for topics that can’t be studied in a lab setting.

The downsides of naturalistic observation include its lack of scientific control, ethical considerations, and potential for bias from observers and subjects.

Naturalistic observation is a qualitative research method where you record the behaviors of your research subjects in real-world settings. You avoid interfering with or influencing anything in a naturalistic observation.