
What is the acceptable similarity in a mathematics PhD dissertation when checking by Turnitin?

I have checked the originality of my PhD thesis in mathematics using Turnitin. The similarity was 31%. Is this percentage acceptable by most committees?

- mathematics

- plagiarism-checker

- I would imagine that this would vary from university to university. – user21984 Commented Oct 10, 2014 at 10:45

- Provided that we are speaking about the ratings provided by automated tools (somewhat implied by the "similarity"): the answer should probably be that it does not matter. Any single copied paragraph that is beyond coincidence is a reason for rejecting the thesis. At the same time, for a thesis, someone should definitely check every potential case of plagiarism that an automated tool flags. Otherwise the department would be using the automated checking tool in a plainly wrong way. If the rating is "90% plagiarism" but all cases found by the tool are false positives, then this should be fine. – DCTLib Commented Oct 10, 2014 at 11:08

- A different (but similar) question, with good answers and comments, is asked here: What is the range of percentage similarity of plagiarism for a review article? – enthu Commented Oct 10, 2014 at 11:14

- Surely you know whether or not you've plagiarised. If you have, you'll be removing that plagiarism before you submit, regardless of the Turnitin score. So why are you checking your own thesis with Turnitin? – 410 gone Commented Oct 10, 2014 at 14:19

- @FranckDernoncourt: I do not think anybody will need a link to Turnitin. See also this Meta discussion. – Wrzlprmft ♦ Commented Oct 10, 2014 at 16:53

5 Answers

Is this percentage acceptable by most committees?

This is the wrong question to be asking, since academic decisions are not made based on a numerical measure of similarity from a computer program. The purpose of this software is to flag suspicious cases for humans to examine more carefully. It will identify passages that appear similar to other writings, but it can't decide whether that constitutes plagiarism.

For example, part of your thesis might be based on previous papers you have written. In some circumstances, it may be reasonable to copy text from these papers. (You need to check that your advisor approves and that it doesn't conflict with any university regulations or the publishing agreement with the publisher.) Of course you would need to cite the papers and clearly indicate the overlap. It's not plagiarism if you do that, but Turnitin doesn't understand what you've written well enough to distinguish it from plagiarism. So it's possible that Turnitin would flag lots of suspicious sections, but that your committee would look at them and see that everything is cited appropriately.

If you haven't committed any plagiarism, then you don't need to worry about this at all. If you genuinely write everything yourself (or carefully quote and cite anything you didn't write), then there's no way you could accidentally write something that looks like proof of plagiarism. There's just too much possible variation, and the probability of matching someone else's words by chance is negligible. The worst case scenario is that Turnitin flags something due to algorithmic limitations or a poor underlying model, but human review shows that it is not actually worrisome. (Nobody trusts Turnitin more than they trust their own judgment.)

I'll assume you aren't aware of having committed plagiarism, but is it possible to do so without knowing it? Unfortunately, the answer is yes if you have certain bad writing habits. For example, it's dangerous to write while you have another reference open in front of you to compare with. Even if you don't copy anything verbatim, it's easy to write something that's just an adaptation of the original source (perhaps rewording sentences or rearranging things slightly, but clearly based on the original).

If that's what worries you, then you should take a look at the most suspicious passages found by Turnitin. If they look like an adaptation of another source, then it's worth rewriting them. If they don't, then maybe Turnitin is worrying you unnecessarily.

But in any case a plagiarism finding won't just come down to a percentage of similarity. Any percentage greater than 0 is too much for actual plagiarism, and no percentage is too high if it reflects limitations of the software rather than actual plagiarism.

- Something that may be different for math papers is that many definitions are standard enough that the wording is almost exactly the same in all papers. I would not try to get "creative" with the definition of a complete metric space, for example. – Sasho Nikolov Commented Oct 24, 2014 at 22:31

TurnItIn uses a complicated algorithm to determine whether a piece of text within a larger body of work matches something in its database. TurnItIn is limited to open access sources and therefore has huge gaps in what it can detect. Further, while TurnItIn can in some cases exclude things like references and quotes from the similarity index, it sometimes fails to. Overall, when my department's academic misconduct committee looks at TurnItIn reports, we essentially ignore the overall similarity index. We do not ignore it completely, because it guides how we examine the document further.

We employ 4 different strategies based on whether the similarity index is 0, between 1 and 20 percent, between 20 and 40 percent, or over 40 percent. A piece of work with a similarity index of 0 is pretty rare and generally means that students have manipulated the document so that TurnItIn cannot process it (e.g., if a paper is converted to an image file and then converted to a pdf, there is no text for TurnItIn to analyse). A similarity index below 20 percent can arise from work that contains no plagiarism, with the similarity coming from quotes, references, and small meaningless sentences. The key here is "meaningless". For example, there are only so many ways of saying "we did a t-test between the two groups", and it is reasonable to assume that someone else has used exactly the same wording. However, a piece of work with a similarity index below 20 percent can also include a huge amount of plagiarised material. A similarity index between 20 and 40 percent generally means there is a problem, unless a large portion of text that should have been skipped was not (e.g., block quotes, reference lists, or appendices of common tables). A similarity index in excess of 40 percent is almost always problematic.
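The four-way triage described above can be written as a small lookup. This is a sketch of one department's practice as the answer states it. The function name `triage_band` is hypothetical, and because the answer does not pin down which band the exact values 20 and 40 fall into, that boundary handling is an assumption.

```python
def triage_band(similarity_index: float) -> str:
    """Map an overall similarity index (0-100) to one of four review strategies.

    Band edges follow the answer; assigning exactly 20 and 40 to the middle
    band is an assumption made for this sketch.
    """
    if not 0 <= similarity_index <= 100:
        raise ValueError("similarity index must be between 0 and 100")
    if similarity_index == 0:
        # Usually means there was no analysable text (e.g. an image-only pdf).
        return "zero: check whether the file contained analysable text at all"
    if similarity_index < 20:
        # Can be harmless quotes/references, but can still hide plagiarism.
        return "low: may be quotes and references, but can still hide plagiarism"
    if similarity_index <= 40:
        return "middle: likely a problem unless excludable text was not skipped"
    return "high: almost always problematic"


print(triage_band(31))
```

For the 31% in the question, `triage_band(31)` lands in the middle band. Per the answer, the band only decides how closely the report is examined; it does not decide whether misconduct occurred.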

You really should not depend on the overall similarity index. First and foremost, you should depend on your own adherence to good academic practice. If you have followed good academic practice, there really is no need for TurnItIn. If you want to use the TurnItIn report, look at what is being matched and ask yourself why it is matching. If it found something you "accidentally" cut and pasted, or "inadvertently" did not reword appropriately, fix it and use that as a wake-up call to improve your academic practice. If everything it finds is a properly attributed quote, a common table (or questionnaire, etc.), or a reference, then there is no problem.

I have some familiarity with Turnitin, though that was way back in undergrad. The thing about similarity engines is that they aren't perfect.

It's important to consider exactly how Turnitin describes itself on its FAQ.

What does TurnItIn actually do?

Turnitin determines if text in a paper matches text in any of the Turnitin databases. By itself, Turnitin does not detect or determine plagiarism — it just detects matching text to help instructors determine if plagiarism has occurred. Indeed, the text in the student’s paper that is found to match a source may be properly cited and attributed.

When we were testing Turnitin in high school (probably a decade ago) with a short writing prompt (~page or two) with a single source, the entire class ended up getting 15 to 20% similarity score, because not only did our sources match, but our quotes matched. No surprise there, really.

Now, consider how large Turnitin's database has grown. If this FAQ is to be trusted, you're comparing your paper to more than 80 thousand journals.

Turnitin’s proprietary software then compares the paper’s text to a vast database of 12+ billion pages of digital content (including archived internet content that is no longer available on the live web) as well as over 110 million papers in the student paper archive, and 80,000+ professional, academic and commercial journals and publications. We’re adding new content through new partnerships all the time. For example, our partner CrossRef boasts 500-plus members that include publishers such as Elsevier and the IEEE, and has already added hundreds of millions of pages of new content to our database.

If I recall correctly, you can see exactly where your paper has similarity with others, so you can pull that up.

Sources of Similarity

My bet is that your paper cites papers almost identically to how another paper cites theirs. The great benefit of commonplace citing techniques like APA and MLA is that they're consistent.

If you cite, for example, the general APA format from Purdue, and someone else cites it, they're going to match at almost 100%.

Angeli, E., Wagner, J., Lawrick, E., Moore, K., Anderson, M., Soderlund, L., & Brizee, A. (2010, May 5). General format. Retrieved from http://owl.english.purdue.edu/owl/resource/560/01/

The chance of you citing a paper that has never been cited before anywhere in the scientific literature is, let's face it, probably 0%. Someone out there has cited your sources at some point. With the reference list sometimes making up as much as 10% of a paper's length, that's an easy portion of the score we can knock out.

The other portion likely has to do with the vernacular that is used to describe a situation. Let's go with the following statement, written entirely off the top of my head.

Java is an object-oriented programming language.

Pretty simple statement, and true enough that it has been mentioned 260,000 times already, in that exact wording.

An exact-phrase check of that statement would show 100% similarity. And if you check it more loosely (i.e., remove the quotation marks from the search), you get several million hits.

Does that mean I plagiarized? Nope. Would TurnItIn flag it? Definitely. Consider how often everyday people greet each other with "How was your weekend?" Are we plagiarizing each other's greetings? Nope. We pick up common ways of using language so that we understand each other, and that shows in papers, where we describe confidence intervals, methodologies, and processes the same way.

Perhaps even more terrifying, when it comes to the similarity score, is that it will likely rate the following two statements as similar:

Statement 1

The double helix of DNA was first discovered by the combined efforts of Watson and Crick. Watson and Crick would later get a Nobel Prize for their efforts.

Statement 2

The double helix of DNA was not first discovered by the combined efforts of Watson and Crick, but by Franklin. Watson and Crick would later get a Nobel Prize for her efforts.

Two very similar sentences: 80-90% similar word-wise. Meaning-wise? Completely different. That's why the human element is required: when we read them, we can tell that the two statements tell entirely different stories. These small sets of similar wording add up quite quickly. A 30% similarity in your case, given the level of research probably done in your field and the number of sources you have probably cited (100+?), is unlikely to be anything to fret about in this day and age.
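The "80-90% similarity word-wise" figure can be reproduced with two generic word-level measures. Turnitin's actual matching algorithm is proprietary and is not either of these. The helper names below are illustrative assumptions: a set-based Jaccard score and the order-aware ratio from Python's standard `difflib.SequenceMatcher`.

```python
import re
from difflib import SequenceMatcher

STATEMENT_1 = (
    "The double helix of DNA was first discovered by the combined efforts of "
    "Watson and Crick. Watson and Crick would later get a Nobel Prize for their efforts."
)
STATEMENT_2 = (
    "The double helix of DNA was not first discovered by the combined efforts of "
    "Watson and Crick, but by Franklin. Watson and Crick would later get a Nobel "
    "Prize for her efforts."
)


def words(text: str) -> list[str]:
    """Lower-case word tokens; punctuation is dropped."""
    return re.findall(r"[a-z]+", text.lower())


def jaccard(a: list[str], b: list[str]) -> float:
    """Shared distinct words divided by all distinct words (ignores order)."""
    sa, sb = set(a), set(b)
    return len(sa & sb) / len(sa | sb)


def sequence_ratio(a: list[str], b: list[str]) -> float:
    """difflib's 2*M/T ratio over the two word sequences (order-aware)."""
    return SequenceMatcher(None, a, b).ratio()


w1, w2 = words(STATEMENT_1), words(STATEMENT_2)
print(f"Jaccard (word sets): {jaccard(w1, w2):.2f}")              # Jaccard (word sets): 0.81
print(f"Sequence ratio (word order): {sequence_ratio(w1, w2):.2f}")  # Sequence ratio (word order): 0.90
```

The order-aware ratio sits at the top of the 80-90% range and the set-based score near the bottom. Neither measure notices that Statement 2 credits Franklin rather than Watson and Crick.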

- Nice analysis, but I disagree with your conclusions. Do you have any evidence that "30% similarity is unlikely to be anything to fret about in this day and age"? I haven't calculated the numbers for my department, although it might be worth doing, but I would estimate that over 3/4 of the cases of academic misconduct I have seen have an overall similarity index of less than 30%. Harder for me to estimate is the percentage of work with a similarity index in excess of 30 percent that did not involve academic misconduct. – StrongBad Commented Oct 10, 2014 at 14:23

- @StrongBad I mean in his case, not in general, sorry D: I'm sure if we really wanted to, we could definitely break TurnItIn by forceful plagiarism at a <10% rating, and I know students will likely do that. I'll edit it to reflect that. – Compass Commented Oct 10, 2014 at 14:25

From my experience with Ithenticate (the version of turnitin for journals and conference proceedings), I'd say that 30% similarity most likely indicates significant plagiarism or self-plagiarism (recycling of text.) I would certainly investigate further to understand exactly where the similar text was coming from.

If the similar text is taken from sources written by other authors, then I would investigate further by reading the text carefully and comparing it with the sources. There are certainly false alarms raised by this type of software. For example, common phrases like "Without loss of generality, we can assume that..." and "Partial differential equation boundary value problem" will be flagged. Standard definitions are also commonly flagged. However, if I see long narrative paragraphs with significant copying, that's clearly plagiarism.

It's traditional at many universities to staple together a bunch of papers and call it a dissertation. Conversely, it's also very common to slightly rewrite chapters of a dissertation and turn them into papers. Either way, this is "text recycling."

Now that text recycling can be easily detected, commercial publishers are cracking down on it for a variety of reasons. First, the publisher might get sued for copyright violation if the holder of the copyright on the previously published text objects. A different objection is that the material shouldn't be published because it isn't original. As a result, text recycling between two published papers (in conference proceedings or journal articles) is rapidly becoming a thing of the past. This has upset many academics who have made a habit of reusing text from one paper to the next. Some feel that if the reused text is from a methods section or literature review, then the copying is harmless. Publishers typically take a harder line.

The situation with dissertations is somewhat different. In one direction, journals have always been willing to accept papers that are substantially based on dissertation chapters with minimal rewriting. Since the student usually retains copyright on the thesis itself, there's no particular problem with copyright violation. Since dissertations traditionally weren't widely distributed, publishers didn't care that the material had been "previously published." I don't really expect this to change much in the near future.

In the other direction, there are two issues: First, will the publisher of journal articles object to reuse of the text in the dissertation as a copyright violation? You'd need to check with the publisher. Second, will the university be willing to accept a dissertation (and perhaps publish it through Proquest or its own online dissertation web site) that contains material that has been separately published? That really depends on the policy of your university and the particular opinions of your advisor and committee.

I have used websites in the past to help with similar content. They give you a report of what was found online and help you remove or reword the similar content, so you don't have to worry about your document being marked as plagiarism.

- He doesn't have to worry about the document being marked as plagiarism, no matter what a program says. – Austin Henley Commented Oct 24, 2014 at 17:57


Learning task-specific similarity


iThenticate – Similarity check for researchers


Why and what?

Maastricht University endorses the principles of scientific integrity and therefore provides services to check for the similarity between documents. Separate services are provided for research and educational purposes.

Every UM-affiliated researcher can use this service. Ithenticate – provided by TurnItIn – compares your submitted work to millions of articles and other published works and billions of webpages.

Check a manuscript / PhD thesis

This tool is for research purposes only!

Not for educational purposes

The Similarity Check Service is not intended for educational purposes (e.g., checking master’s theses for plagiarism). Please use Turnitin Originality instead (available through the digital learning environment Canvas). Turnitin Originality is tailored to the specific requirements for educational purposes.

The maximum number of submissions for these services is adapted to their respective purposes.

Support & Contact

In case you are in doubt about which similarity check service to use for a particular purpose, please contact us so we can find a suitable solution for you while guaranteeing the sustainable availability of the services for all UM scholars.

Plagiarism and how to prevent it

Plagiarism is using someone else’s work or findings without stating the source and thereby implying that the work is your own. When using previously established ideas that add pertinent information in a research paper, every researcher should be cautious not to fall into the trap of sloppy referencing or even plagiarism.

Plagiarism is not confined to papers, articles or books; it can take many forms (for more information, see this infographic by iThenticate).

The Similarity Check Service can help you to prevent only one type of plagiarism: verbatim plagiarism, and only if the source is part of the corpus.

The software does not automatically detect plagiarism; it provides insight into the amount of similarity in the text between the uploaded document and other sources in the corpus of the software. This does not mean this part of the text is viewed as plagiarism in your specific field. For instance, the methods section in some subfields follows very common wording, which could lead to a match. If there are instances where the submission’s content is similar to the content in the database, it will be flagged for review and should be evaluated by you.
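The point that the service finds only verbatim overlap, and only with sources in its corpus, can be illustrated with a toy matcher. The sketch below is a generic word n-gram ("shingle") comparison, not iThenticate's proprietary algorithm. The function name `similarity_report`, the 5-word window, and the sample texts are all assumptions made for illustration.

```python
import re


def words(text: str) -> list[str]:
    """Lower-case word tokens with punctuation removed."""
    return re.findall(r"[a-z0-9]+", text.lower())


def shingles(tokens: list[str], n: int) -> set[tuple[str, ...]]:
    """All runs of n consecutive tokens."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def similarity_report(document: str, corpus: dict[str, str], n: int = 5) -> tuple[float, dict[str, int]]:
    """Return (percentage of document words matched, matched words per source).

    A document word counts as matched if it lies inside an n-word run that
    also occurs verbatim in some corpus source. Text whose source is absent
    from the corpus can never match.
    """
    doc = words(document)
    covered = [False] * len(doc)
    per_source: dict[str, int] = {}
    for name, text in corpus.items():
        source_runs = shingles(words(text), n)
        hit = [False] * len(doc)
        for i in range(len(doc) - n + 1):
            if tuple(doc[i:i + n]) in source_runs:
                for j in range(i, i + n):
                    hit[j] = True
        if any(hit):
            per_source[name] = sum(hit)
            covered = [c or h for c, h in zip(covered, hit)]
    overall = 100 * sum(covered) / len(doc) if doc else 0.0
    return overall, per_source


document = (
    "Without loss of generality we can assume that the sequence is bounded. "
    "Our new lemma then follows from a short compactness argument."
)
corpus = {
    "paper_A": "Without loss of generality we can assume that f is continuous.",
    "paper_B": "An unrelated abstract about graph colouring.",
}
overall, per_source = similarity_report(document, corpus)
print(f"{overall:.1f}% {per_source}")  # 36.4% {'paper_A': 8}
```

In this example, the stock phrase "Without loss of generality we can assume that" produces an 8-word match, which is about 36% of this very short document, even though it is standard wording rather than copying. Removing `paper_A` from the corpus drops the score to 0%, regardless of where the text actually came from.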

How to use the service

1. Getting started

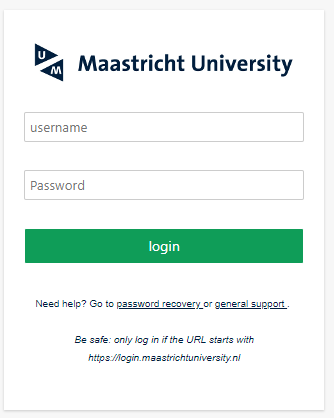

Go to iThenticate and enter your UM username and password in the appropriate fields. Select ‘login’.

2. First-time user

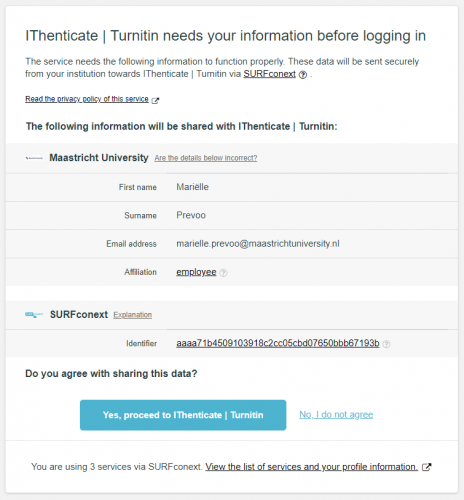

As a first-time user, you will then have to check your personal information and declare that you agree to the Terms and conditions.

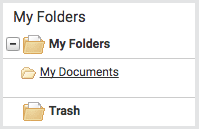

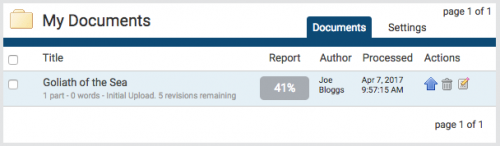

3. My Folders and My Documents

iThenticate will provide you with a folder group My Folders and a folder within that group titled My Documents.

From the My Documents folder, you will be able to submit a document by selecting the Submit a document link.

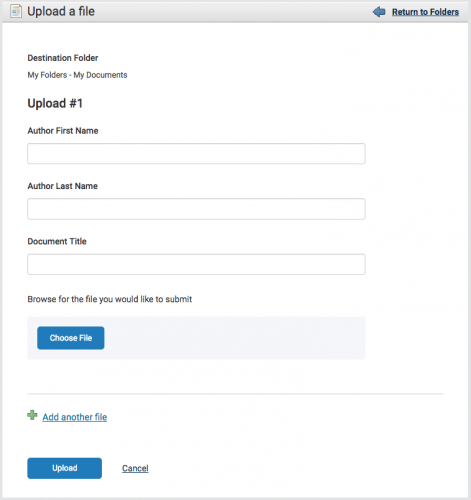

4. Upload a file

On the Upload a file page, enter the authorship details and the document title. Select Choose File and locate the file on your device.

Select the Add another file link to add another file. You can add up to ten files before submitting. Select Upload to upload the document(s).

5. Similarity Report

To view the Similarity Report for the paper, select the similarity score in the Report column. It usually takes a couple of minutes for a report to generate.

Finding your way around

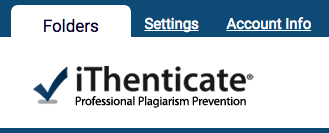

The main navigation bar at the top of the screen has three tabs: Folders, Settings, and Account Info. Upon logging in, you will automatically land on the folders page.

Folders

This is the main area of iThenticate. From the folders page, you will be able to upload, manage and view documents.

Settings

The settings page contains configuration options for the iThenticate interface.

Account Info

The account information page contains the user profile and account usage.

Options for exclusion

There can be various reasons why you may want to exclude certain sources that your document is compared to or certain parts of your document in the similarity check. You can specify options for exclusion in the Folder settings.

If you choose to exclude ‘small matches’, you will be asked to specify the minimum number of words that you want to be shown as a match.

If you choose to exclude ‘small sources’, you will be asked to specify a minimum number of words or a minimum match percentage.

Once you click Update Settings, the settings will be applied to the particular folder.
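The two exclusion options can be pictured as filters applied before the overall percentage is computed. This is a minimal sketch under stated assumptions. The `Match` record, the function `apply_exclusions`, and the rule that matched passages never overlap (so their word counts can simply be summed) are hypothetical and are not part of iThenticate's interface. The service asks for a minimum number of words or a minimum percentage for small sources, and this sketch accepts either.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Match:
    """One matching passage (hypothetical record, not an iThenticate type)."""
    source: str      # where the matching text was found
    word_count: int  # length of the matching passage in words


def apply_exclusions(
    matches: list[Match],
    document_words: int,
    min_match_words: Optional[int] = None,
    min_source_words: Optional[int] = None,
    min_source_percent: Optional[float] = None,
) -> tuple[list[Match], float]:
    """Return the matches that survive the filters and the resulting percentage.

    Assumes matched passages do not overlap, so word counts can be summed.
    """
    # 'Exclude small matches': drop individual passages below the word minimum.
    if min_match_words is not None:
        matches = [m for m in matches if m.word_count >= min_match_words]

    # 'Exclude small sources': total the remaining words per source ...
    totals: dict[str, int] = {}
    for m in matches:
        totals[m.source] = totals.get(m.source, 0) + m.word_count

    # ... and drop every source below the word or percentage minimum.
    def source_is_kept(source: str) -> bool:
        words = totals[source]
        if min_source_words is not None and words < min_source_words:
            return False
        if min_source_percent is not None and 100 * words / document_words < min_source_percent:
            return False
        return True

    kept = [m for m in matches if source_is_kept(m.source)]
    overall = 100 * sum(m.word_count for m in kept) / document_words
    return kept, overall


matches = [
    Match("journal_X", 40),
    Match("journal_X", 6),
    Match("web_page_Y", 5),
    Match("thesis_Z", 12),
]
print(apply_exclusions(matches, 1000)[1])                                           # 6.3
print(apply_exclusions(matches, 1000, min_match_words=8)[1])                        # 5.2
print(apply_exclusions(matches, 1000, min_match_words=8, min_source_percent=2)[1])  # 4.0
```

The order of the two filters matters in this sketch. Short passages are removed first, so a source can fall below the small-source threshold only after some of its passages have been excluded.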

Manuals & training videos

iThenticate provides a range of up-to-date manuals and instructions, which you can consult on its own website.

You can also use these training videos to learn how to use the service.

Please be aware that the information in these manuals and videos about logging in and account settings does not apply to UM users of this service.

How to read the similarity report

The similarity report provides the percentage of similarity between the submitted document and content in the iThenticate database. This is the type of report that you will use most often for a similarity check.

It is perfectly natural for a submitted document to match against sources in the database, for example if you have used quotes.

The similarity score simply makes you aware of potential problem areas in the submitted document. These areas should then be reviewed to make sure there is no sloppy referencing or plagiarism.

iThenticate should be used as part of a larger process, in order to determine if a match between your submitted document and content in the database is or is not acceptable.

This video shows how to read the various reports

This video shows how the Document viewer works

Academic Integrity and Plagiarism

Everyone involved in teaching and research at Maastricht University shares in the responsibility for maintaining academic integrity (see Scientific Integrity). All academic staff at UM are expected to adhere to the general principles of professional academic practice at all times.

Adhering to those principles also includes preventing sloppy referencing or plagiarism in your publications.

Additional information on how to avoid plagiarism can also be found in the Copyright portal of the library.

Sources used

iThenticate compares the submitted work to 60 million scholarly articles, books, and conference proceedings from 115,000 scientific, technical, and medical journals; 114 million published works from journals, periodicals, magazines, encyclopedias, and abstracts; and 68 billion current and archived web pages.

Checking PhD theses

The similarity check service (iThenticate) can be used by doctoral candidates or their supervisors to assess the work. Find out about the level of similarity with other publications and incorrect referencing before you send (parts of) the thesis to the Assessment Committee, a publisher or send in the thesis for deposit in the UM repository.

We kindly request that you submit the whole thesis as one document (i.e., not per chapter) and only once, to avoid unnecessary use of the maximum number of submissions; our contract provides a limited number of checks.

iThenticate FAQ

Contact & Support

For questions or information, use the web form to contact a library specialist.

Ask Your Librarian - Contact a library specialist

Learning Task-Specific Similarity

The right measure of similarity between examples is important in many areas of computer science. In particular it is a critical component in example-based learning methods. Similarity is commonly defined in terms of a conventional distance function, but such a definition does not necessarily capture the inherent meaning of similarity, which tends to depend on the underlying task. We develop an algorithmic approach to learning similarity from examples of what objects are deemed similar according to the task-specific notion of similarity at hand, as well as optional negative examples. Our learning algorithm constructs, in a greedy fashion, an encoding of the data. This encoding can be seen as an embedding into a space, where a weighted Hamming distance is correlated with the unknown similarity. This allows us to predict when two previously unseen examples are similar and, importantly, to efficiently search a very large database for examples similar to a query.

This approach is tested on a set of standard machine learning benchmark problems. The model of similarity learned with our algorithm provides an improvement over standard example-based classification and regression. We also apply this framework to problems in computer vision: articulated pose estimation of humans from single images, articulated tracking in video, and matching image regions subject to generic visual similarity.
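The abstract's central object is a binary encoding compared with a weighted Hamming distance, and it can be sketched in a few lines. This is only an illustration under assumptions. Each bit is taken to be a threshold on one input dimension, in the spirit of the per-dimension discretization described for SSC in Chapter 3. The thresholds and weights are made up rather than learned greedily from similar pairs. The search is a plain linear scan, not the fast indexing the thesis targets. All names are hypothetical.

```python
from typing import Sequence

# Hypothetical bits: (dimension, threshold, weight). In the thesis such bits are
# learned from examples of similar (and optionally dissimilar) pairs; here they
# are simply fixed by hand.
Bit = tuple[int, float, float]
BITS: list[Bit] = [(0, 0.5, 1.0), (0, 1.5, 0.5), (1, 0.0, 2.0)]


def encode(x: Sequence[float], bits: list[Bit] = BITS) -> tuple[int, ...]:
    """Binary code: one bit per (dimension, threshold) pair."""
    return tuple(1 if x[dim] > threshold else 0 for dim, threshold, _ in bits)


def weighted_hamming(c1: tuple[int, ...], c2: tuple[int, ...], bits: list[Bit] = BITS) -> float:
    """Sum of the weights of the bits on which two codes disagree."""
    return sum(weight for (_, _, weight), b1, b2 in zip(bits, c1, c2) if b1 != b2)


def most_similar(query: Sequence[float], database: list[Sequence[float]], bits: list[Bit] = BITS) -> int:
    """Index of the database item whose code is closest to the query's code.

    A linear scan for clarity; for a very large database the codes would be
    precomputed and indexed instead of being recomputed and scanned.
    """
    q = encode(query, bits)
    codes = [encode(x, bits) for x in database]
    return min(range(len(database)), key=lambda i: weighted_hamming(q, codes[i], bits))


database = [(0.2, -1.0), (1.0, 0.3), (2.0, 0.5)]
print(most_similar((0.9, 0.8), database))  # 1
```

The query encodes to `(1, 0, 1)`. The second database item has the same code (distance 0). The third differs only on the low-weight bit (distance 0.5), and the first differs on two bits (distance 3.0).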

Thesis chapters

- Front matter (of little scientific interest)

- Chapter 1: Introduction

This chapter defines some technical concepts, most importantly the notion of similarity we want to model, and provides a brief overview of the contributions of the thesis.

- Chapter 2: Background

Among the topics covered in this chapter: example-based classification and regression, previous work on learning distances and (dis)similarities (such as MDS), and algorithms for fast search and retrieval, with emphasis on locality sensitive hashing (LSH).

- Chapter 3: Learning embeddings that reflect similarity

- Similarity sensitive coding (SSC). This algorithm discretizes each dimension of the data into zero or more bits. Each dimension is considered independently of the rest.

- Boosted SSC. A modification of SSC in which the code is constructed by greedily collecting discretization bits, thus removing the independence assumption.

The underlying idea of all three algorithms is the same: build an embedding that, based on training examples of similar pairs, maps two similar objects close to each other (with high probability). At the same time, the objective controls for "spread": the probability that two arbitrary objects (in particular a dissimilar pair, if examples of such pairs are available) are close in the embedding space should be low.

This chapter also reports the results of evaluating the proposed algorithms on seven benchmark data sets from the UCI and Delve repositories.

- Chapter 4: Articulated pose estimation

An application of the ideas developed in previous chapters to the problem of pose estimation: inferring the articulated body pose (e.g. the 3D positions of key joints, or values of joint angles) from a single, monocular image containing a person.

- Chapter 5: Articulated tracking

In a tracking scenario, a sequence of views, rather than a single view of a person, is available. The motion provides additional cues, which are typically used in a probabilistic framework. In this chapter we show how similarity-based algorithms have been used to improve accuracy and speed of two articulated tracking systems: a general motion tracker and a motion-driven animation system focusing on swing dancing.

- Chapter 6: Learning image patch similarity

An important notion of similarity that is naturally conveyed by examples is the visual similarity of image regions. In this chapter we focus on a particular definition of such similarity, namely invariance under rotation and slight shift. We show how the machinery developed in Chapter 3 allows us to improve matching performance for two popular representations of image patches.

- Chapter 7: Conclusions

- Bibliography
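Chapter 3 states two goals: similar pairs should land close together, while arbitrary or dissimilar pairs should rarely do so. Together these suggest a simple check on held-out pairs. The sketch below is an illustrative evaluation, not the thesis's training objective. The codes, the radius, and the function name `pair_rates` are assumptions.

```python
from typing import Callable, Sequence

Code = tuple[int, ...]
Pair = tuple[Code, Code]


def hamming(c1: Code, c2: Code) -> int:
    """Number of bit positions where the two codes differ."""
    return sum(b1 != b2 for b1, b2 in zip(c1, c2))


def pair_rates(
    similar: Sequence[Pair],
    dissimilar: Sequence[Pair],
    distance: Callable[[Code, Code], float],
    radius: float,
) -> tuple[float, float]:
    """Share of similar pairs within `radius` (should be high) and share of
    dissimilar pairs within `radius` (the "spread" term, should be low)."""
    close_similar = sum(distance(a, b) <= radius for a, b in similar) / len(similar)
    close_dissimilar = sum(distance(a, b) <= radius for a, b in dissimilar) / len(dissimilar)
    return close_similar, close_dissimilar


similar = [((1, 0, 1, 1), (1, 0, 1, 0)), ((0, 0, 1, 1), (0, 0, 1, 1)), ((1, 1, 0, 0), (0, 1, 0, 1))]
dissimilar = [((1, 0, 1, 1), (0, 1, 0, 0)), ((0, 0, 1, 1), (1, 0, 1, 1)), ((1, 1, 0, 0), (0, 0, 1, 1))]
print(pair_rates(similar, dissimilar, hamming, radius=1))
```

With radius 1, two of the three similar pairs (distances 1 and 0) and one of the three dissimilar pairs (distance 1) fall within the radius, which gives rates of 2/3 and 1/3. A better embedding would push the first number up and the second down.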

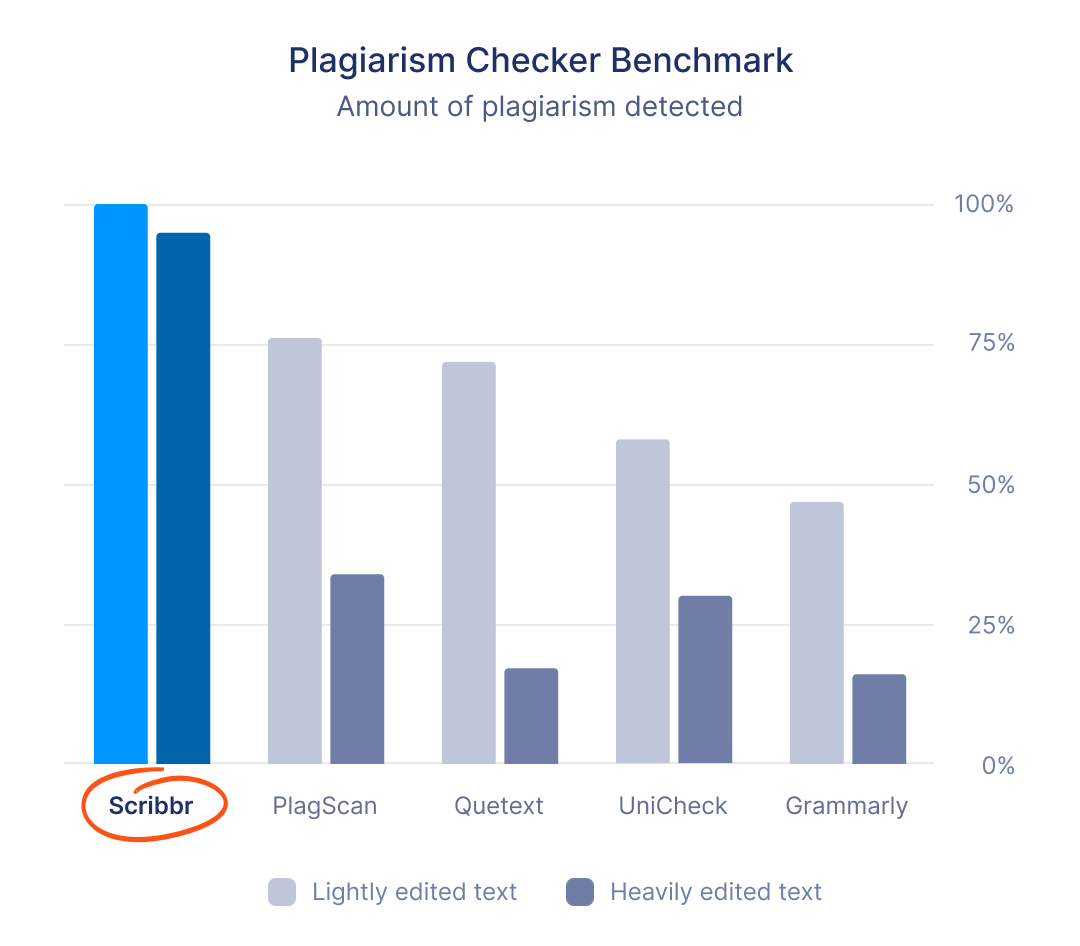

Scribbr Plagiarism Checker

Plagiarism checker software for students who value accuracy.

Extensive research shows that Scribbr's plagiarism checker, in partnership with Turnitin, detects plagiarism more accurately than other tools, making it the no. 1 choice for students.

How Scribbr detects plagiarism better

Powered by leading plagiarism checking software

Scribbr is an authorized partner of Turnitin, a leader in plagiarism prevention. Its software detects everything from exact word matches to synonym swapping.

Access to exclusive content databases

Your submissions are compared to the world’s largest content database, covering 99 billion webpages, 8 million publications, and over 20 languages.

Comparison against unpublished works

You can upload your previous assignments, referenced works, or a classmate’s paper or essay to catch (self-)plagiarism that is otherwise difficult to detect.

The Scribbr Plagiarism Checker is perfect for you if you:

- Are a student writing an essay or paper

- Value the confidentiality of your submissions

- Prefer an accurate plagiarism report

- Want to compare your work against publications

This tool is not for you if you:

- Prefer a free plagiarism checker despite a less accurate result

- Are a copywriter, SEO, or business owner


Trusted by students and academics worldwide

University applicants

Ace your admissions essay to your dream college.

Compare your admissions essay to billions of web pages, including other essays.

- Avoid having your essay flagged or rejected for accidental plagiarism.

- Make a great first impression on the admissions officer.

Submit your assignments with confidence.

Detect plagiarism using software similar to what most universities use.

- Spot missing citations and improperly quoted or paraphrased content.

- Avoid grade penalties or academic probation resulting from accidental plagiarism.

Take your journal submission to the next level.

Compare your submission to millions of scholarly publications.

- Protect your reputation as a scholar.

- Get published by the journal of your choice.

Happiness guarantee

Scribbr’s services are rated 4.9 out of 5 based on 13,360 reviews. We aim to make you just as happy. If not, we’re happy to refund you!

Privacy guarantee

Your submissions will never be added to our content database, and you’ll never get a 100% match at your academic institution.

Price per document


Prices are per check, not a subscription

- Turnitin-powered plagiarism checker

- Access to 99.3B web pages & 8M publications

- Comparison to private papers to avoid self-plagiarism

- Downloadable plagiarism report

- Live chat with plagiarism experts

- Private and confidential

Volume pricing available for institutions. Get in touch.

Request volume pricing

Institutions interested in buying more than 50 plagiarism checks can request a discounted price. Please fill in the form below.

Depending on the size of your request, you will be contacted by a representative of either Scribbr or Turnitin.

You don't need a plagiarism checker, right?

You would never copy-and-paste someone else’s work, you’re great at paraphrasing, and you always keep a tidy list of your sources handy.

But what about accidental plagiarism? It’s more common than you think! Maybe you paraphrased a little too closely, or forgot that last citation or set of quotation marks.

Even if you did it by accident, plagiarism is still a serious offense. You may fail your course, or be placed on academic probation. The risks just aren’t worth it.

Scribbr & academic integrity

Scribbr is committed to protecting academic integrity. Our plagiarism checker software, Citation Generator, proofreading services, and free Knowledge Base content are designed to help educate and guide students in avoiding unintentional plagiarism.

We make every effort to prevent our software from being used for fraudulent or manipulative purposes.

Ask our team

Want to contact us directly? No problem. We are always here for you.

- Email [email protected]

- Start live chat

- Call +1 (510) 822-8066

- WhatsApp +31 20 261 6040

Frequently asked questions

No, the Self-Plagiarism Checker does not store your document in any public database.

In addition, you can delete all your personal information and documents from the Scribbr server as soon as you’ve received your plagiarism report.

Scribbr’s Plagiarism Checker is powered by elements of Turnitin’s Similarity Checker, namely the plagiarism detection software and the Internet Archive and Premium Scholarly Publications content databases.

The add-on AI detector is powered by Scribbr’s proprietary software.

Extensive testing proves that Scribbr’s plagiarism checker is one of the most accurate plagiarism checkers on the market in 2022.

The software detects everything from exact word matches to synonym swapping. It also has access to a full range of source types, including open- and restricted-access journal articles, theses and dissertations, websites, PDFs, and news articles.

At the moment we do not offer a monthly subscription for the Scribbr Plagiarism Checker. This means you won’t be charged on a recurring basis – you only pay for what you use. We believe this provides you with the flexibility to use our service as frequently or infrequently as you need, without being tied to a contract or recurring fee structure.

You can find an overview of the prices per document here:

| Document size | Price per check |
|---|---|
| Small document (up to 7,500 words) | $19.95 |
| Normal document (7,500-50,000 words) | $29.95 |
| Large document (50,000+ words) | $39.95 |
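Here is a minimal sketch of how the per-document pricing above works out. The Python function below maps a word count to the listed one-off price. The function names, the constants, and the handling of the boundary cases are assumptions. The table lists 7,500 words in both the small and normal tiers, and 50,000 words in both the normal and large tiers. This sketch places exactly 7,500 words in the small tier and exactly 50,000 words in the normal tier.

```python
# Listed one-off prices (USD) per check.
# Assumed boundaries: exactly 7,500 words -> small, exactly 50,000 words -> normal.
SMALL_MAX_WORDS = 7_500
NORMAL_MAX_WORDS = 50_000
PRICES_USD = {"small": 19.95, "normal": 29.95, "large": 39.95}


def document_tier(word_count: int) -> str:
    """Classify a document into the price tiers listed in the table."""
    if word_count < 0:
        raise ValueError("word_count must be non-negative")
    if word_count <= SMALL_MAX_WORDS:
        return "small"
    if word_count <= NORMAL_MAX_WORDS:
        return "normal"
    return "large"


def price_per_check(word_count: int) -> float:
    """Price of a single check; each check is paid separately (no subscription)."""
    return PRICES_USD[document_tier(word_count)]


# A 60,000-word dissertation checked twice: a draft and the final version.
total = round(2 * price_per_check(60_000), 2)
print(total)  # 79.9
```

Prices are per check rather than a subscription. Checking a draft and then a final version of the same long document therefore costs two large-document fees.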

Please note that we can’t give refunds if you bought the plagiarism check thinking it was a subscription service, as communication around this policy is clear throughout the order process.

Your document will be compared to the world’s largest and fastest-growing content database, containing over:

- 99.3 billion current and historical webpages.

- 8 million publications from more than 1,700 publishers such as Springer, IEEE, Elsevier, Wiley-Blackwell, and Taylor & Francis.

Note: Scribbr does not have access to Turnitin’s global database with student papers. Only your university can add and compare submissions to this database.

Scribbr’s plagiarism checker offers complete support for 20 languages, including English, Spanish, German, Arabic, and Dutch.

The add-on AI Detector and AI Proofreader are only available in English.

The complete list of supported languages:

If your university uses Turnitin, the result will be very similar to what you see at Scribbr.

The only possible difference is that your university may compare your submission to a private database containing previously submitted student papers. Scribbr does not have access to these private databases (and neither do other plagiarism checkers).

To cater to this, we have the Self-Plagiarism Checker at Scribbr. Just upload any document you used and start the check. You can repeat this as often as you like with all your sources. With your Plagiarism Check order, you get a free pass to use the Self-Plagiarism Checker. Simply upload them to your similarity report and let us do the rest!

Your writing stays private. Your submissions to Scribbr are not published in any public database, so no other plagiarism checker (including those used by universities) will see them.

Open Access Theses and Dissertations


Advanced research and scholarship. Theses and dissertations, free to find, free to use.



Recent Additions

See all of this week’s new additions.

About OATD.org

OATD.org aims to be the best possible resource for finding open access graduate theses and dissertations published around the world. Metadata (information about the theses) comes from over 1100 colleges, universities, and research institutions. OATD currently indexes 7,042,247 theses and dissertations.

About OATD (our FAQ).

Visual OATD.org

We’re happy to present several data visualizations to give an overall sense of the OATD.org collection by country of publication, language, and field of study.

You may also want to consult these sites to search for other theses:

- Google Scholar

- NDLTD, the Networked Digital Library of Theses and Dissertations. NDLTD provides information and a search engine for electronic theses and dissertations (ETDs), whether they are open access or not.

- ProQuest Theses and Dissertations (PQDT), a database of dissertations and theses, whether they were published electronically or in print, and mostly available for purchase. Access to PQDT may be limited; consult your local library for access information.


Research Repository

UK Doctoral Thesis Metadata from EThOS

The datasets in this collection comprise snapshots in time of metadata descriptions of hundreds of thousands of PhD theses awarded by UK Higher Education institutions aggregated by the British Library's EThOS service. The data is estimated to cover around 98% of all PhDs ever awarded by UK Higher Education institutions, dating back to 1787.

Previous versions of the datasets are restricted to ensure the most accurate version of metadata is available for download. Please contact [email protected] if you require access to an older version.

Collection Details

| Year Published | Date Added | Visibility |
|---|---|---|
| 2023 | 2023-11-27 | Public |
| 2023 | 2023-05-12 | Public |
| 2022 | 2022-10-14 | Public |
| 2022 | 2022-04-12 | Public |
| 2021 | 2021-09-03 | Public |
| 2015 | 2021-03-08 | Public |
| 2021 | 2021-02-09 | Public |
| 2020 | 2020-07-24 | Public |
| 2020 | 2020-02-11 | Public |
| 2019 | 2019-12-12 | Public |


Open Access Theses and Dissertations (OATD)

OATD.org provides open access graduate theses and dissertations published around the world. Metadata (information about the theses) comes from over 1100 colleges, universities, and research institutions. OATD currently indexes 6,654,285 theses and dissertations.

Turnitin Access To Plagiarism Check For GMS Students

Turnitin is an online plagiarism checking tool that compares your work with existing online publications.

To gain access:

- Request access to the Plagiarism-Check Blackboard site

- Access to this site will be continuous throughout your time in GMS

How it works:

- Upload your papers to Blackboard Learn to check their similarity index

- Submit multiple versions of papers, take-home assignments, theses or dissertations, and rewrite text as needed

Extended Directions:

- You will be asked to give your name, email, BUID, the submission type (i.e., Dissertation, Thesis, or Paper), and your GMS program affiliation.

- After verifying you are not a robot, you should be sent to a new page with a notification saying “We have received your request to be added to the plagiarism check Blackboard Learn site.” It will take some time for your request to be approved (at least 10 minutes, possibly 24 hours). The next time you log in to Blackboard, you should see the entry “GMS Plagiarism Check” under ‘My Courses’ in the right-hand column.

- Then click on “>> View/Complete” (the first time you use Turnitin, it will ask you to agree to the user agreement). If you have never submitted a document before, there should be a submit button. If you have submitted before, you will need to click the resubmit button to submit your new or revised document. You may get a warning that resubmitting will replace your earlier submission. It also reminds you that you can only upload 3 documents in 24 hours.

- You can browse to find the document on your computer, then click ‘upload’.

- You will get a message saying it may take up to 2 minutes to load.

- Once the document is loaded be sure to click on ‘confirm’

- You should get a congratulatory message. (You will also get an email confirming the successful submission.)

- Click on the link to return to submission list

- You will likely see that your document’s ‘similarity’ index is ‘processing.’ The algorithm may take a few hours to run, so you will need to check back to see when it has finished.

- Press “view” on the far right to see the submitted manuscript in Turnitin’s Feedback Studio, which has some interesting automation features.

- Alternatively, you can download the results with the arrow icon on the far right of the display.

- NOTE: Students cannot submit more than 3 documents in a 24-hour period. Please plan accordingly or use an alternative resource such as Turnitin Draft Coach via Google Docs.

- It is recommended that you remove the bibliography/references prior to submission to Turnitin.

- Because Turnitin cannot scan images and figures, these can also be removed prior to the check. You must manually check images and figures for plagiarism and potential copyright violations.

- Published manuscripts should be removed from your thesis or dissertation prior to submission to Turnitin, assuming that they have already been analyzed by Turnitin. If they have not been, they should be included. Unpublished manuscripts should be included in your submission to Turnitin.
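The three-uploads-per-24-hours rule can be planned around with a small sketch. The directions do not say whether the limit uses a rolling window or calendar days. The Python code below assumes a rolling 24-hour window. `can_submit` and `next_allowed` are hypothetical planning helpers and are not part of Blackboard or Turnitin.

```python
from datetime import datetime, timedelta
from typing import List

MAX_UPLOADS = 3               # limit stated in the GMS directions
WINDOW = timedelta(hours=24)  # assumption: rolling window, not calendar days


def uploads_in_window(previous: List[datetime], now: datetime) -> List[datetime]:
    """Uploads that still count against the limit at time `now`, oldest first."""
    return sorted(t for t in previous if timedelta(0) <= now - t < WINDOW)


def can_submit(previous: List[datetime], now: datetime) -> bool:
    """True if one more upload at `now` stays within the assumed limit."""
    return len(uploads_in_window(previous, now)) < MAX_UPLOADS


def next_allowed(previous: List[datetime], now: datetime) -> datetime:
    """Earliest time another upload would be accepted under the assumed rule."""
    recent = uploads_in_window(previous, now)
    if len(recent) < MAX_UPLOADS:
        return now
    # Enough of the oldest counted uploads must age out to free one slot.
    return recent[len(recent) - MAX_UPLOADS] + WINDOW


# Three uploads on one day: 09:00, 13:00 and 17:00.
first = datetime(2024, 1, 1, 9, 0)
uploads = [first, first + timedelta(hours=4), first + timedelta(hours=8)]
evening = first + timedelta(hours=11)
print(can_submit(uploads, evening))    # False
print(next_allowed(uploads, evening))  # 2024-01-02 09:00:00
```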

How to Interpret Your Score Report

- Similarity index, Similarity by Source, Internet Sources, Publications, & Student Papers

- Generally, the similarity index should be less than 20%.

- A match on a commonly used definition may be acceptable.

- Did it identify methods or protocols from your lab? These will need to be rewritten.

- Did it identify matches to publications or text from the internet? Again, these sentences, paragraphs, or sections will need to be rewritten.

- If you have any questions interpreting the Turnitin report please reach out to your faculty mentors.

- Once you have made edits it is important to resubmit the document for a final check.

- The final Turnitin report should be submitted to your mentor and first reader for approval.

- Having problems with Turnitin? Reach out to Dr. Theresa Davies for assistance ([email protected]).
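Here is a rough sketch that makes the 20% guideline concrete. It assumes the similarity index is simply the share of the submission's words that fall inside matched passages, and that matches do not overlap. Turnitin's actual scoring is not described here. The `Match` class, the source labels and the word counts are hypothetical. The guidance above still applies: the percentage only flags the document. Each match must be read to decide whether it is a common definition, a lab protocol that needs rewriting, or a genuine problem.

```python
from dataclasses import dataclass
from typing import List

GUIDELINE_MAX_PERCENT = 20.0  # "generally ... less than 20%" per the GMS guidance


@dataclass
class Match:
    source: str  # hypothetical label: "Internet", "Publication" or "Student paper"
    words: int   # submission words covered by this match (matches assumed disjoint)


def similarity_index(total_words: int, matches: List[Match]) -> float:
    """Assumed definition: percentage of the submission's words inside matched passages."""
    if total_words <= 0:
        raise ValueError("total_words must be positive")
    return 100.0 * sum(m.words for m in matches) / total_words


def summarize(total_words: int, matches: List[Match]) -> str:
    """One-line summary; the largest match is a natural place to start reviewing."""
    index = similarity_index(total_words, matches)
    verdict = "below" if index < GUIDELINE_MAX_PERCENT else "at or above"
    # The percentage only flags the report; each match still has to be read.
    by_size = sorted(matches, key=lambda m: m.words, reverse=True)
    largest = by_size[0].source if by_size else "none"
    return f"{index:.1f}% ({verdict} the 20% guideline); largest match: {largest}"


# Bibliography already removed, as the directions recommend.
matches = [Match("Publication", 1200), Match("Internet", 450), Match("Student paper", 150)]
print(summarize(20_000, matches))
# 9.0% (below the 20% guideline); largest match: Publication
```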


EBSCO Open Dissertations

EBSCO Open Dissertations makes electronic theses and dissertations (ETDs) more accessible to researchers worldwide. The free portal is designed to benefit universities and their students and make ETDs more discoverable.

Increasing Discovery & Usage of ETD Research

EBSCO Open Dissertations is a collaboration between EBSCO and BiblioLabs to increase traffic and discoverability of ETD research. You can join the movement and add your theses and dissertations to the database, making them freely available to researchers everywhere while increasing traffic to your institutional repository.

EBSCO Open Dissertations extends the work started in 2014, when EBSCO and the H.W. Wilson Foundation created American Doctoral Dissertations which contained indexing from the H.W. Wilson print publication, Doctoral Dissertations Accepted by American Universities, 1933-1955. In 2015, the H.W. Wilson Foundation agreed to support the expansion of the scope of the American Doctoral Dissertations database to include records for dissertations and theses from 1955 to the present.

How Does EBSCO Open Dissertations Work?

Your ETD metadata is harvested via OAI and integrated into EBSCO’s platform, where pointers send traffic to your IR.

EBSCO integrates this data into their current subscriber environments and makes the data available on the open web via opendissertations.org.
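OAI harvesting of repository metadata is normally done with OAI-PMH, the Open Archives Initiative Protocol for Metadata Harvesting. The sketch below shows one harvesting round trip in Python, from the harvester's side. It builds a `ListRecords` request for Dublin Core (`oai_dc`) metadata. It then extracts the titles and the `resumptionToken` that points to the next page. The source does not describe EBSCO's own harvester. The repository base URL and the sample response are made up. The network call is left out so the parsing runs offline.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple
from urllib.parse import urlencode

OAI = "{http://www.openarchives.org/OAI/2.0/}"
DC = "{http://purl.org/dc/elements/1.1/}"


def list_records_url(base_url: str, resumption_token: Optional[str] = None) -> str:
    """Build an OAI-PMH ListRecords request for unqualified Dublin Core records."""
    if resumption_token:
        # In OAI-PMH, resumptionToken is an exclusive argument (only `verb` accompanies it).
        params = {"verb": "ListRecords", "resumptionToken": resumption_token}
    else:
        params = {"verb": "ListRecords", "metadataPrefix": "oai_dc"}
    return f"{base_url}?{urlencode(params)}"


def parse_page(xml_text: str) -> Tuple[List[str], Optional[str]]:
    """Return the dc:title values and the next-page token (None when the list is complete)."""
    root = ET.fromstring(xml_text)
    titles = [el.text or "" for el in root.iter(f"{DC}title")]
    token_el = root.find(f"{OAI}ListRecords/{OAI}resumptionToken")
    token = token_el.text if token_el is not None and token_el.text else None
    return titles, token


# Hypothetical repository endpoint and a trimmed, made-up response.
BASE = "https://repository.example.edu/oai"
SAMPLE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListRecords>
    <record><metadata>
      <oai_dc:dc xmlns:oai_dc="http://www.openarchives.org/OAI/2.0/oai_dc/"
                 xmlns:dc="http://purl.org/dc/elements/1.1/">
        <dc:title>An example dissertation</dc:title>
      </oai_dc:dc>
    </metadata></record>
    <resumptionToken>page-2</resumptionToken>
  </ListRecords>
</OAI-PMH>"""

print(list_records_url(BASE))
titles, token = parse_page(SAMPLE)
print(titles, token)
print(list_records_url(BASE, token))
```

A harvester repeats the request with each new token until the response carries an empty `resumptionToken`. At that point `parse_page` returns `None` for the token.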


Citation analysis of Ph.D. theses with data from Scopus and Google Books

- Open access

- Published: 24 October 2021

- Volume 126, pages 9431–9456 (2021)


- Paul Donner (ORCID: orcid.org/0000-0001-5737-8483)

5492 Accesses

5 Citations

9 Altmetric

Explore all metrics

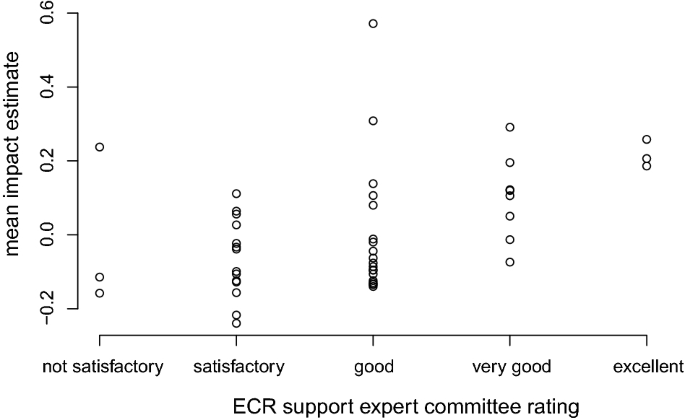

This study investigates the potential of citation analysis of Ph.D. theses to obtain valid and useful early career performance indicators at the level of university departments. For German theses from 1996 to 2018 the suitability of citation data from Scopus and Google Books is studied and found to be sufficient to obtain quantitative estimates of early career researchers’ performance at departmental level in terms of scientific recognition and use of their dissertations as reflected in citations. Scopus and Google Books citations complement each other and have little overlap. Individual theses’ citation counts are much higher for those awarded a dissertation award than others. Departmental level estimates of citation impact agree reasonably well with panel committee peer review ratings of early career researcher support.
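The abstract states that department-level citation estimates agree reasonably well with peer review ratings. It does not say, at this point, how that agreement was measured. One standard way to quantify agreement between two orderings of departments is Spearman's rank correlation. The Python sketch below computes it, averaging ranks for ties. The department values and the 1-5 rating scale are hypothetical.

```python
from statistics import mean
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical: mean citations per thesis vs. panel rating (1-5) for four departments.
citations = [2.1, 0.8, 3.5, 1.2]
ratings = [4, 2, 5, 2]
print(round(spearman(citations, ratings), 3))  # 0.949
```

Ranks are used instead of raw values because panel ratings are ordinal. The function raises `ZeroDivisionError` if either input is constant.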


Introduction

In this article we present a study on the feasibility of Ph.D. thesis citation analysis and its potential for studies of early career researchers (ECR) and for the rigorous evaluation of university departments. The context is the German national research system. Its characteristics are a very high ratio of graduating Ph.D.’s to available open job positions in academia, a distinct national-language publication tradition in the social sciences and humanities, and a slowly unfolding change from a traditional apprenticeship-type Ph.D. system to a graduate-school-type system. The first nationwide census in Germany reported 152,300 registered active doctoral students (Vollmar 2019). In the same year, 28,404 doctoral students passed their exams in Germany (Statistisches Bundesamt 2018). Both universities and science and higher education policy attach high value to doctoral training and consider it a core task of the university system. For this reason, doctoral student performance also plays an important role in institutional assessment systems.

While there is currently no national scale research assessment implemented in Germany, all German federal states have introduced formula-based partial funding allocation systems for universities. In most of these, the number of Ph.D. candidates is a well-established indicator. Most universities also partially distribute funds internally by similar systems. Such implementations can be seen as incomplete as they do not take into account the actual research output of Ph.D. candidates. In this contribution we investigate if citation analysis of doctoral theses is feasible on a large scale and can conceptually and practically serve as a complement to current operationalizations of ECR performance. For this purpose we study the utility of two citation data sources, Scopus and Google Books. We analyze the obtained citation data at the level of university departments within disciplines.
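The Python sketch below shows one simple way to combine the two citation sources and aggregate the result at department level. It assumes that each citing document carries an identifier that can be compared across Scopus and Google Books. Real data would need careful matching to achieve that. The `Thesis` record, the identifiers, and the plain mean per (discipline, department) are illustrative choices, not the study's actual procedure.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, Iterable, Set, Tuple


@dataclass
class Thesis:
    discipline: str
    department: str
    scopus: Set[str] = field(default_factory=set)        # hypothetical citing-document IDs
    google_books: Set[str] = field(default_factory=set)  # same ID space assumed


def combined_citations(t: Thesis) -> int:
    """Union of both sources, so a citing work found in both counts once."""
    return len(t.scopus | t.google_books)


def department_means(theses: Iterable[Thesis]) -> Dict[Tuple[str, str], float]:
    """Mean combined citation count per (discipline, department)."""
    groups = defaultdict(list)
    for t in theses:
        groups[(t.discipline, t.department)].append(combined_citations(t))
    return {key: float(mean(counts)) for key, counts in groups.items()}


def overlap_share(theses: Iterable[Thesis]) -> float:
    """Fraction of all distinct citations that appear in both sources."""
    theses = list(theses)
    both = sum(len(t.scopus & t.google_books) for t in theses)
    total = sum(combined_citations(t) for t in theses)
    return both / total if total else 0.0


theses = [
    Thesis("History", "Uni A", {"s1"}, {"g1", "g2"}),
    Thesis("History", "Uni A", set(), {"g3"}),
    Thesis("History", "Uni B", {"s2", "x1"}, {"x1", "g4"}),
]
print(department_means(theses))  # {('History', 'Uni A'): 2.0, ('History', 'Uni B'): 3.0}
print(round(overlap_share(theses), 3))  # 0.143
```

Taking the union rather than the sum of the two counts avoids double-counting. A low `overlap_share` is what "complement each other and have little overlap" would look like in such data.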

Doctoral studies

The doctoral studies phase can theoretically be conceived as a status transition period. It comprises a status passage process from apprentice to formally acknowledged researcher and colleague in the social context of a scientific community (Laudel and Gläser 2008). Footnote 1 The published doctoral thesis and its public defense are manifest proof of the fulfilment of the degree criterion of independent scientific contribution, marking said transition. The scientific community, rather than the specific organization, collectively sets the goals and standards of work in the profession, and experienced members of a community of peers judge and grade the doctoral work upon completion. Footnote 2 Yet the specific organization also plays a very important role. The Ph.D. project and dissertation are closely associated with the hosting university, as it is this organization that provides the environmental means to conduct the Ph.D. research: at a bare minimum the supervision by professors and experienced researchers, but often also formal employment with salary, workspace and facilities. And it is also the department (Fakultät) which formally confers the degree after passing the thesis review and defense.

As a rule, it is a formal requirement of doctoral studies that the Ph.D. candidates make substantial independent scientific contributions and publish the results. The Ph.D. thesis is a published scientific work and can be read and cited by other researchers. The extent to which other researchers make use of these results is reflected in citations to the work and is in principle amenable to bibliometric citation analysis (Kousha and Thelwall 2019). Citation impact of theses can be seen as a proxy of the recognition of the utility and relevance of the doctoral research results by other researchers. Theses are often not published in an established venue and are hence absent from the usual channels of communication of the research front. This is especially true in journal-oriented fields, whereas in book-oriented fields, publication of theses through scholarly publishers is common. In what follows, we address this challenge by investigating the presence of dissertation citations in data sources hitherto not sufficiently considered for this purpose.

Research contribution of early career researchers and performance evaluation in Germany

Almost all universities in Germany are predominantly tax-funded and the consumption of these public resources necessitates a certain degree of transparency to establish and maintain the perceived legitimacy of the higher education and research system. Consequently, universities and their subdivisions are increasingly subjected to evaluations. The pressure to participate in evaluation exercises, or in some cases the bureaucratic directive to do so by the responsible political actors, in turn, derives from demands of the public, which holds political actors accountable for the responsible spending of resources appropriated from net tax payers. Because the training of Ph.D. candidates is undisputedly a core task of universities, it is commonly implemented as an important component or dimension in university research evaluation.

While there is no official established national-scale research evaluation exercise in Germany (Hinze et al. 2019), the assessment of ECR performance plays a very important role in the evaluation and funding of universities and in the systems of performance-based funding within universities. In the following paragraphs we will show this with several examples while critically discussing some inadequacies of the extant operationalizations of the ECR performance dimensions, thereby substantiating the case for more research into the affordances of Ph.D. thesis citation analysis.

The Council of Science and Humanities (Wissenschaftsrat) has conducted four pilot studies for national-scale evaluations of disciplines in universities and research institutes (Forschungsrating). While the exercises were utilized to test different modalities Footnote 3, they all followed a basic template of informed peer review by appointed expert committees along a number of prescribed performance dimensions. The evaluation results did not have any serious funding allocation or restructuring consequences for the units. In all exercises, the dimension of support for early career researchers played a prominent role next to such dimensions as research quality, impact/effectivity, efficiency, and transfer of knowledge into society. Footnote 4 In all four exercises, the dimension was operationalized with a combination of quantitative and qualitative criteria.

As the designation ‘support for early career researchers’ suggests, the focus was primarily on the support structures and provisions that the assessed units offered, but the outcomes or successes of these support environments also played a role. Yet, some of the applied indicators are more in line with a construct such as the performance, or success, of the ECRs themselves. These are first appointments of graduates to professorships, scholarships or fellowships of ECRs (if granted from outside the assessed unit), and awards. Footnote 5 As for the difference between the concept of the efforts expended for ECRs and the concept of the performance of ECRs, it appears to be implied that the efforts cause the performance, but this is far from self-evident. There may well be extensive support programs without realized benefits, or ECRs achieving great success despite a lack of support structures. For this implied causal connection to be accepted, its mechanism should first be worked out and articulated and then be empirically validated, which was not the case in the Forschungsrating evaluation exercises. Footnote 6

No bibliometric data on Ph.D. theses was employed in the Forschungsrating exercises (Wissenschaftsrat 2007, 2008, 2011, 2012). However, it stands to reason that citation analysis of theses might provide a valuable complementary tool if a sounder operationalization of the dimension of the performance of ECRs is to be established in similar future assessments. As for the publications of ECRs besides doctoral theses, these have been included in the other dimensions in which publications were used as criteria without special consideration. Footnote 7

There is a further area of university evaluation in which a performance indicator of ECRs, namely the absolute number of Ph.D. graduates over a specific time period, is an important component. At the time of writing, systems of partial funding allocation from ministries to states’ universities are well established across all German federal states. In these systems, universities within a state compete with one another for a modest part of the total budget based on fixed formulas relating performance to money. The performance-based funding systems, different for each state, all include ‘research’ among their dimensions. Within it, the number of graduated Ph.D.’s is the second most important indicator after the third-party funding acquired by universities (Wespel and Jaeger 2015). In direct consequence, similar systems have also found widespread application to distribute funds across departments within universities (Jaeger 2006; Niggemann 2020). These systems differ across universities. If only the number of completed Ph.D.’s is used as an indicator, then the quality of the research of the graduates does not matter in such systems. It is conceivable that graduating as many Ph.D.’s as possible becomes prioritized at the expense of the quality of ECR research and training.
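The state formulas differ and are not spelled out here. The incentive described above can still be illustrated with a generic indicator-share model. Each university's share of each indicator is multiplied by that indicator's weight, and the summed result is multiplied by the budget. The weights (0.6 for third-party funding, 0.4 for Ph.D. graduates) and all figures in the Python sketch below are hypothetical. They only respect the stated ordering, in which third-party funding matters more than Ph.D. counts.

```python
from typing import Dict

Indicators = Dict[str, Dict[str, float]]  # indicator -> university -> value


def allocate(budget: float, indicators: Indicators, weights: Dict[str, float]) -> Dict[str, float]:
    """Generic indicator-share formula: budget * sum_k w_k * (x_uk / sum_v x_vk)."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    universities = {u for values in indicators.values() for u in values}
    shares = {u: 0.0 for u in universities}
    for name, weight in weights.items():
        values = indicators[name]
        total = sum(values.values())
        if total <= 0:
            raise ValueError(f"indicator {name!r} has no positive total")
        for u in universities:
            shares[u] += weight * values.get(u, 0.0) / total
    return {u: budget * s for u, s in shares.items()}


# Hypothetical weights: third-party funding counts more than Ph.D. graduates.
WEIGHTS = {"third_party_funding": 0.6, "phd_graduates": 0.4}
data = {
    "third_party_funding": {"A": 30.0, "B": 10.0},  # e.g. million EUR
    "phd_graduates": {"A": 100.0, "B": 100.0},
}
before = allocate(10_000_000, data, WEIGHTS)

# B doubles its graduate count; nothing about thesis quality enters the formula.
data["phd_graduates"]["B"] = 200.0
after = allocate(10_000_000, data, WEIGHTS)
print(round(before["B"]), round(after["B"]))  # 3500000 4166667
```

University B's allocation rises purely because its graduate count rises. The formula has no term for the quality of those theses, which is the gap the text points out.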

A working group tasked by the Federal Ministry of Education and Research to work out an indicator model for monitoring the situation of early career researchers in Germany proposed to consider the citation impact of publications as an indicator of outcomes (Projektgruppe Indikatorenmodell 2014). Under the heading of “quality of Ph.D.: disciplinary acceptance and possibility of transfer”, the authors acknowledge that citation analysis of Ph.D. theses is possible in principle. However, citation counts do not directly measure scientific quality but rather the level of response to, and reuse of, publications (impact). Moreover, it is stated that the literature of the social sciences and humanities is not covered well in citation indexes and that theses are generally not indexed as primary documents (p. 136). Nevertheless, this approach is not to be rejected out of hand. Rather, it is recommended that the prospects of thesis citation analysis be empirically studied to judge its suitability (p. 137).

Another motivation for the present study was the finding of the National Report on Junior Scholars that even though “[j]unior scholars make a telling contribution to developing scientific and social insights and to innovation” (p. 3), the “contribution made by junior scholars to research and knowledge sharing is difficult to quantify in view of the available data” (Consortium for the National Report on Junior Scholars 2017, p. 19).

To sum up, the foregoing discussion establishes (1) that there is a theoretically underdeveloped evaluation practice in the area of ECR support and performance, and (2) that a need for better early career researcher performance indicators on the institutional level has been suggested to science policy actors. This gives occasion to explore which, if any, contribution bibliometrics can make to a valid and practically useful assessment.

Prior research

Citation analysis of dissertation theses

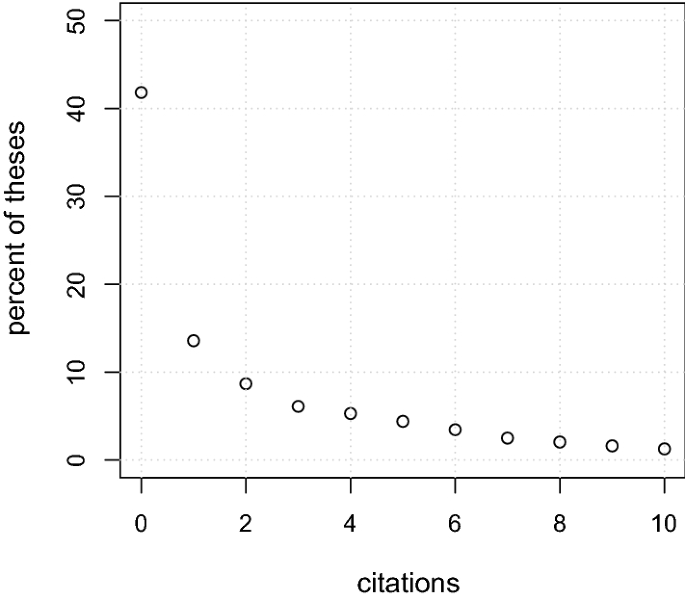

There are few publications on citation analysis of Ph.D. theses as the cited documents, as opposed to studies of the documents cited in theses, of which there are plenty. Yoels (1974) studied citations to dissertations in American journals in optics, political science (one journal each), and sociology (two journals) from the 1955 to 1969 volumes. In each case, several hundred citations in total to all Ph.D. theses combined were found. These showed a notable concentration on Ph.D.’s originating in departments of high prestige, a possible first hint of differential research performance reflected in Ph.D. thesis citations. Non-US dissertations were cited only in optics. Author self-citations were very common, especially in optics and political science. While citations peaked in the periods of 1–2 or 3–5 years after the Ph.D. was awarded, theses continued to be cited to some degree as much as 10 years later. According to Larivière et al. (2008), dissertations only account for a very small fraction of cited references in the Web of Science database. The impact of individual theses was not investigated. This study searched the cited references using keywords for theses plus filtering, an approach that may not discover all dissertation citations. Kousha and Thelwall (2019) investigated Google Scholar citation counts and Mendeley reader counts for a large set of American dissertations from 2013 to 2017 sourced from ProQuest. This study did not take into account Google Books. Of these dissertations, 20% had one or more citations (2013: over 30%, 2017: over 5%), while 16% had at least one Mendeley reader. Average citation counts were comparatively high in the arts, social sciences, and humanities, and low in science, technology, and biomedical subjects. The authors evaluated the citation data quality and found that 97% of the citations of a sample of 646 were correct. As for the publication type of the citing documents, the majority were journal articles (56%), remarkably many were other dissertations (29%), and only 6% of citations originated from books. This suggests that Google Books might be a relevant citation data source instead of, or in addition to, Google Scholar.

More research has been conducted into the citation impact of thesis-related journal publications. Hay (1985) found that for the special case of a small sample from UK human geography research, papers based on Ph.D. thesis work accrued more citations than papers by established researchers. In a recent study of refereed journal publications based on US psychology Ph.D. theses, Evans et al. (2018) found that they were cited on average 16 times after 10 years. The citation impact of journal articles to which Ph.D. candidates contributed (but not of dissertations) has only been studied on a large scale for the Canadian province of Québec (Larivière 2012). The impact of journal papers with Ph.D. candidates’ contribution was contrasted with all other papers with Québec authors in the Web of Science database. The impact of these papers, quantified as average of relative citations, was close to that of the comparison groups in three of four broad subject areas. It can therefore be tentatively assumed that the impact of doctoral candidates’ papers was on par with that of their more experienced colleagues. The area with a notable difference between groups was arts and humanities. There, coverage of publication output in the database was less comprehensive because a lot of research is published in monographs, and presumably many papers were written in French, another reason for lower coverage.
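The bibliometric literature commonly defines the "average of relative citations" (ARC) used in the Québec comparison as follows. The exact citation window and field classification of Larivière (2012) are not given here, so treat this as the general form rather than that study's precise specification. Let $c_i$ be the citations received by paper $i$, and let $\bar{c}_{f(i),y(i)}$ be the mean citations of all database papers in the same field $f$ and publication year $y$. For a group $G$ of papers:

```latex
\mathrm{RC}_i = \frac{c_i}{\bar{c}_{f(i),\,y(i)}},
\qquad
\mathrm{ARC}_G = \frac{1}{|G|} \sum_{i \in G} \mathrm{RC}_i
```

A value of 1 means the group is cited at the database average for its fields and years. For example, take a paper with 10 citations in a field-year averaging 5, which gives $\mathrm{RC} = 2$, and a paper with 2 citations where the average is 4, which gives $\mathrm{RC} = 0.5$. The pair then has $\mathrm{ARC} = (2 + 0.5)/2 = 1.25$.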

While these papers are not concerned with citations to dissertations, they do suggest that the research of Ph.D.’s is as impactful as that of their more established colleagues. To the best of our knowledge, no large-scale study has been conducted on the citation impact of German theses, either at the level of individual works or at the level of university departments. As we so far have scant information on the citation impact of dissertation theses, the current study aims to fill this gap with a large-scale investigation of citations received by German Ph.D. theses in Scopus and Google Books.

Causes for department-level performance differences

As we wish to investigate performance differences between university departments within disciplines as reflected by thesis citations, we next review the literature on plausible reasons for such performance differences, which could in turn produce differences in thesis citation impact. We do not consider individual-level reasons for performance differences such as ability, intrinsic motivation, perseverance, and commitment.

One possible reason for cross-department performance differences is the mutual selectivity of Ph.D. project applicants and Ph.D. project supervisors. Where prospective Ph.D. candidates have some choice between the departments at which they might register, and prospective supervisors have some choice between the applicants they might accept, both sides will, out of self-interest, seek to optimize their outcomes given their particular constraints. That is, applicants will opt for the department most promising for their future careers, while supervisors or selection committees, and thus departments, will attempt to select the most promising candidates, perhaps those whom they judge most likely to contribute positively to their research agenda. Both sides can take into account a variety of criteria, such as departmental reputation or candidates’ prior performance. This is part of the normal, constant social process of mutual evaluation in science. In this case, however, the mutual evaluation does not take place between peers, that is, individuals of equal scientific social status. Rather, the situation is characterized by status inequality (superior-inferior, i.e. professor-applicant). Consequently, an applicant may well apply to her or his preferred department and supervisor, but the supervisor or the selection committee makes the acceptance decision. In practice, however, such situations are subject to many constraints. For example, as described above, the current evaluation regime rewards the sheer quantity of completed Ph.D.’s.

Once the choices are made, Ph.D. candidates at different departments can face quite different environments, more or less conducive to research performance (which, insofar as candidates were aware of them and able to judge them, they would have taken into consideration, as mentioned). For instance, some departments might have access to important equipment and resources while others do not. Local practices may also differ in how much time employed candidates have for their Ph.D. project as opposed to their expected participation in their groups’ research, teaching, and other duties (Hesli and Lee 2011).

Ph.D. candidates may benefit from the support, experience, and stimulation of highly accomplished supervisors. Experienced and engaged supervisors teach explicit and tacit knowledge and can serve as role models. Long and McGinnis (1985) found that the performance of mentors was associated with the publication and citation counts of Ph.D.’s. In particular, citations were predicted by collaboration with the mentor and by the mentor’s own prior citation counts. Mentors’ eminence had only a weak positive effect on the publication output of Ph.D.’s who actively collaborated with them. Similarly, Hilmer and Hilmer (2007) report that advisors’ publication productivity is associated with candidates’ publication counts. However, any department has multiple professors or other supervisors, which causes variation within departments if the department rather than the supervisor is used as the predictor variable. Between departments, it is then the concentration of highly accomplished supervisors that may cause differences. Beyond immediate supervisors, a more or less supportive research environment can offer opportunities for learning, cooperation, or access to personal networks. For example, Kim and Karau (2009) found that support from faculty, through the development of research skills, led to higher publication productivity of management Ph.D. candidates. Local work culture and local expectations of performance may also elicit behavioral adjustment (Allison and Long 1990).

In summary, prior research shows that there are several reasons to expect department-level differences in Ph.D. research quality (and in its reproduction and reinforcement), which might be reflected in thesis citation impact. Note, however, that the present study cannot shed light on which particular factors are associated with Ph.D. performance in terms of citation impact. It is limited to testing whether there are any department-level differences on this measure.

Citation counts and scientific impact of dissertation theses

We have argued above that citation analysis of theses could be a complementary tool for the quantitative assessment of university departments in terms of the research performance of early career researchers. Hence, it needs to be established that citation counts of dissertations are in fact associated with a conception of research impact.

As outlined by Hemlin (1996), “[t]he idea [of citation analysis] is that the more cited an author or a paper is by others, the more attention it has received. This attention is interpreted as an indicator of the importance, the visibility, or the impact of the researcher or the paper in the scientific community. Whether citation measures also express research quality is a highly debated issue.” Hemlin reviewed a number of studies of the relationship between citations and research quality but was not able to reach a definite conclusion: “it is possible that citation analysis is an indicator of scientific recognition, usefulness and, to some unknown extent, quality.” Researchers cite for a variety of reasons, not only or primarily to indicate the quality of the cited work (Aksnes et al. 2019; Bornmann and Daniel 2008). Nevertheless, work that is cited usually has some importance for the citing work. Even citations classified in citation behavior studies as ‘perfunctory’ or ‘persuasive’ are not made randomly. On the contrary, for a citation to persuade anyone, the content of the cited work needs to be convincing rather than ephemeral, irrelevant, or immaterial. Citation counts are thus a direct measure of the utility, influence, and importance of publications for further research (Martin and Irvine 1983, sec. 6). Therefore, as a measure of scientific impact, citation counts have face validity: they measure the concept itself, though noisily. The same cannot be said for research quality.

Highly relevant for the topic of the present study are the early citation impact validation studies by Nederhof and van Raan (1987, 1989). These studies examined differences in the citation impact of publications produced during the doctoral studies of physics and chemistry Ph.D. holders, comparing those awarded the distinction ‘cum laude’ for their dissertation, based on the quality of the research, with other graduates without this distinction (cum laude: 12% of n = 237 in chemistry, 13% of n = 138 in physics). In physics, “[c]ompared to non-cumlaudes, cumlaudes received more than twice as many citations overall for their publications, which were all given by scientists outside their alma mater” (Nederhof and van Raan 1987, p. 346). In fact, differences in the citation impact of papers between the groups are already apparent before graduation, that is, before the cum laude distinction is conferred on the basis of the dissertation. Interestingly, “[a]fter graduation, citation rates of cumlaudes even decline to the level of non-cumlaudes” (p. 347), leading the authors to suggest that “the quality of the research project, and not the quality of the particular graduate is the most important determinant of both productivity and impact figures. A possible scenario would be that some PhD graduates are choosen carefully by their mentors to do research in one of the usually rare very promising, interesting and hot research topics currently available. Most others are engaged in relatively less interesting and promising graduate research projects” (p. 348). The results in chemistry are very similar: “Large difference in impact and productivity favor cumlaudes three to 2 years before graduation, differences which decrease in the following years, although remaining significant. [...] Various sceptics have claimed that bibliometric measures based on citations are generally invalid. The present data do not offer any support for this stance. Highly significant differences in impact and productivity were obtained between two groups distinguished on a measure of scientific quality based on peer review (the cum laude award)” (Nederhof and van Raan 1989, p. 434).

In Germany, a system of four passing marks and one failing mark is commonly used. The better the referees judge the thesis, the higher the mark. Studies investigating the association between the level of the mark and the citation impact of theses or thesis-associated publications are still lacking. The closest are studies on medical doctoral theses from Charité. Oestmann et al. (2015) provide a correlational study of medical doctoral degree marks (averages of thesis and oral exam marks) and the publications associated with the theses from one institution, Charité University Medicine Berlin. Their data for 1992–2014 show a longitudinal decrease in the incidence of the third-best mark and an increase in the second-best mark. For samples from three years (1998, 2004, 2008) for which publication data were collected, an association between the level of the mark and publication productivity was detected: both the chance of publishing any peer-reviewed articles and the number of articles increase with the level of the mark. The study was extended by Chuadja (2021) with publication data for 2015 graduates. It was found that the time to graduation covaries with the level of the mark. For 2015 graduates, the average 5-year Journal Impact Factors of thesis-associated publications increase with the level of the graduation mark, in the sense that theses awarded better marks produced publications in journals with higher Impact Factors. Although these findings say little about the actual association between thesis research quality and citation impact, they are suggestive enough to motivate more research into this relationship.
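Because marks take only a few ordered values and many theses share the same mark, a rank correlation that handles ties is one natural way to quantify an association between mark level and citation counts. The sketch below computes Spearman's rho as the Pearson correlation of average ranks. It is illustrative only and not necessarily the method of Oestmann et al. (2015) or Chuadja (2021); the coding of mark levels (1 to 4, higher number = better passing mark) and the sample values are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the average ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    if vx == 0 or vy == 0:
        return float("nan")  # undefined if either variable is constant
    return cov / (vx * vy) ** 0.5


# Hypothetical mark levels (4 = best passing mark) and thesis citation counts.
mark_level = [4, 3, 3, 2, 1]
citations = [12, 5, 7, 1, 0]
print(round(spearman(mark_level, citations), 3))  # 0.975
```

Averaging ranks over ties matters here: with only four passing marks, most theses share a mark with many others.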

Research questions

The following research questions will be addressed:

How often are individual Ph.D. theses cited in the journal and book literature?

Does Google Books contain sufficient additional citation data to warrant its inclusion as an additional data source alongside established data sources?

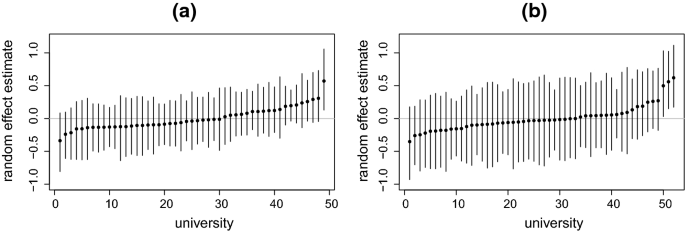

Can differences between universities within a discipline explain some of the variability in citation counts?

Are there noteworthy differences in Ph.D. thesis citation impact on the institutional level within disciplines?

Are the citation counts of Ph.D. theses associated with their scientific quality?

To test whether or not dissertation citation impact is a suitable indicator of departmental Ph.D. performance, citation data for theses need to be collected, aggregated, and studied for associations with other relevant indicators, such as doctorate conferrals, drop-out rates, graduate employability, thesis awards, or graduates’ subjective appraisals of their programs. As a first step towards a better understanding of Ph.D. performance, we conducted a study on citation sources for dissertations. The present study is restricted to dissertations in monograph form. These also include monographs that are based on material published as articles. However, to assess the complete scientific impact of a Ph.D. project, it is necessary to also include the impact of papers produced in the context of the project, both for cumulative (publication-based) theses and for theses published only in monograph form. For this reason, the results presented below should be interpreted with due caution, as we do not claim that the data are complete.
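The aggregation step, and the question of whether universities explain part of the variability in citation counts within a discipline, can be illustrated with a minimal sketch. It groups theses by (discipline, university) and computes eta squared, the between-university share of the total sum of squares within one discipline. The record keys and the choice of eta squared are assumptions for illustration, not the procedure of this study; because citation counts are typically highly skewed, a real analysis would likely call for count or multilevel models instead.

```python
from collections import defaultdict


def department_summary(theses):
    """Per (discipline, university): number of theses, mean citations, share cited."""
    groups = defaultdict(list)
    for t in theses:
        groups[(t["discipline"], t["university"])].append(t["citations"])
    summary = {}
    for key, cites in groups.items():
        n = len(cites)
        summary[key] = {
            "n": n,
            "mean_citations": sum(cites) / n,
            "share_cited": sum(1 for c in cites if c > 0) / n,
        }
    return summary


def between_share(theses, discipline):
    """Eta squared within one discipline: between-university sum of squares
    divided by the total sum of squares of thesis citation counts."""
    by_uni = defaultdict(list)
    for t in theses:
        if t["discipline"] == discipline:
            by_uni[t["university"]].append(t["citations"])
    values = [c for cites in by_uni.values() for c in cites]
    if not values:
        return float("nan")  # no theses for this discipline
    grand = sum(values) / len(values)
    ss_total = sum((c - grand) ** 2 for c in values)
    ss_between = sum(
        len(cs) * (sum(cs) / len(cs) - grand) ** 2 for cs in by_uni.values()
    )
    return ss_between / ss_total if ss_total > 0 else 0.0


# Hypothetical records: two universities in one discipline.
theses = [
    {"discipline": "chemistry", "university": "A", "citations": 4},
    {"discipline": "chemistry", "university": "A", "citations": 6},
    {"discipline": "chemistry", "university": "B", "citations": 0},
    {"discipline": "chemistry", "university": "B", "citations": 2},
]
print(department_summary(theses))
print(between_share(theses, "chemistry"))  # 0.8
```

In this toy example, 80% of the citation variance lies between the two universities; real data would need far larger samples before such a share could be interpreted.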

Dissertations’ bibliographical data