1.1: Statements and Conditional Statements

- Ted Sundstrom

- Grand Valley State University via ScholarWorks @Grand Valley State University

Much of our work in mathematics deals with statements. In mathematics, a statement is a declarative sentence that is either true or false but not both. A statement is sometimes called a proposition . The key is that there must be no ambiguity. To be a statement, a sentence must be true or false, and it cannot be both. So a sentence such as "The sky is beautiful" is not a statement since whether the sentence is true or not is a matter of opinion. A question such as "Is it raining?" is not a statement because it is a question and is not declaring or asserting that something is true.

Some sentences that are mathematical in nature often are not statements because we may not know precisely what a variable represents. For example, the equation 2\(x\)+5 = 10 is not a statement since we do not know what \(x\) represents. If we substitute a specific value for \(x\) (such as \(x\) = 3), then the resulting equation, 2\(\cdot\)3 +5 = 10 is a statement (which is a false statement). Following are some more examples:

- There exists a real number \(x\) such that 2\(x\)+5 = 10. This is a statement because either such a real number exists or such a real number does not exist. In this case, this is a true statement since such a real number does exist, namely \(x\) = 2.5.

- For each real number \(x\), \(2x +5 = 2 \left( x + \dfrac{5}{2}\right)\). This is a statement since either the sentence \(2x +5 = 2 \left( x + \dfrac{5}{2}\right)\) is true when any real number is substituted for \(x\) (in which case, the statement is true) or there is at least one real number that can be substituted for \(x\) and produce a false statement (in which case, the statement is false). In this case, the given statement is true.

- Solve the equation \(x^2 - 7x +10 =0\). This is not a statement since it is a directive. It does not assert that something is true.

- \((a+b)^2 = a^2+b^2\) is not a statement since it is not known what \(a\) and \(b\) represent. However, the sentence, “There exist real numbers \(a\) and \(b\) such that \((a+b)^2 = a^2+b^2\)” is a statement. In fact, this is a true statement since there are such real numbers. For example, if \(a=1\) and \(b=0\), then \((a+b)^2 = a^2+b^2\).

- Compare the statement in the previous item to the statement, “For all real numbers \(a\) and \(b\), \((a+b)^2 = a^2+b^2\)." This is a false statement since there are values for \(a\) and \(b\) for which \((a+b)^2 \ne a^2+b^2\). For example, if \(a=2\) and \(b=3\), then \((a+b)^2 = 5^2 = 25\) and \(a^2 + b^2 = 2^2 +3^2 = 13\).
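The last two items can be checked on specific values with a few lines of Python. This is only a sketch: the helper name `square_of_sum_splits` is a hypothetical name chosen for illustration, and the two test pairs are the ones used in the text.

```python
def square_of_sum_splits(a, b):
    """Return True when (a + b)^2 equals a^2 + b^2 for these specific values."""
    return (a + b) ** 2 == a ** 2 + b ** 2

# A single pair that works makes the "there exist" statement true.
witness_works = square_of_sum_splits(1, 0)

# A single pair that fails makes the "for all" statement false.
counterexample_works = square_of_sum_splits(2, 3)

print(witness_works)         # True
print(counterexample_works)  # False
```

One successful pair settles an existence claim, while one failing pair settles a "for all" claim. No finite number of successful pairs could establish the "for all" version.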

Progress Check 1.1: Statements

Which of the following sentences are statements? Do not worry about determining whether a statement is true or false; just determine whether each sentence is a statement or not.

- 2\(\cdot\)7 + 8 = 22.

- \((x-1) = \sqrt{x + 11}\).

- \(2x + 5y = 7\).

- There are integers \(x\) and \(y\) such that \(2x + 5y = 7\).

- There are integers \(x\) and \(y\) such that \(23x + 27y = 52\).

- Given a line \(L\) and a point \(P\) not on that line, there is a unique line through \(P\) that does not intersect \(L\).

- \((a + b)^3 = a^3 + 3a^2b + 3ab^2 + b^3\).

- \((a + b)^3 = a^3 + 3a^2b + 3ab^2 + b^3\) for all real numbers \(a\) and \(b\).

- The derivative of \(f(x) = \sin x\) is \(f' (x) = \cos x\).

- Does the equation \(3x^2 - 5x - 7 = 0\) have two real number solutions?

- If \(ABC\) is a right triangle with right angle at vertex \(B\), and if \(D\) is the midpoint of the hypotenuse, then the line segment connecting vertex \(B\) to \(D\) is half the length of the hypotenuse.

- There do not exist three integers \(x\), \(y\), and \(z\) such that \(x^3 + y^2 = z^3\).

How Do We Decide If a Statement Is True or False?

In mathematics, we often establish that a statement is true by writing a mathematical proof. To establish that a statement is false, we often find a so-called counterexample. (These ideas will be explored later in this chapter.) So mathematicians must be able to discover and construct proofs. In addition, once the discovery has been made, the mathematician must be able to communicate this discovery to others who speak the language of mathematics. We will be dealing with these ideas throughout the text.

For now, we want to focus on what happens before we start a proof. One thing that mathematicians often do is to make a conjecture beforehand as to whether the statement is true or false. This is often done through exploration. The role of exploration in mathematics is often difficult because the goal is not to find a specific answer but simply to investigate. Following are some techniques of exploration that might be helpful.

Techniques of Exploration

- Guesswork and conjectures . Formulate and write down questions and conjectures. When we make a guess in mathematics, we usually call it a conjecture.

For example, if someone makes the conjecture that \(\sin(2x) = 2 \sin(x)\), for all real numbers \(x\), we can test this conjecture by substituting specific values for \(x\). One way to do this is to choose values of \(x\) for which \(\sin(x)\) is known. Using \(x = \frac{\pi}{4}\), we see that

\(\sin(2(\frac{\pi}{4})) = \sin(\frac{\pi}{2}) = 1,\) and

\(2\sin(\frac{\pi}{4}) = 2(\frac{\sqrt2}{2}) = \sqrt2\).

Since \(1 \ne \sqrt2\), these calculations show that this conjecture is false. However, if we do not find a counterexample for a conjecture, we usually cannot claim the conjecture is true. The best we can say is that our examples indicate the conjecture is true. As an example, consider the conjecture that

If \(x\) and \(y\) are odd integers, then \(x + y\) is an even integer.

We can do lots of calculations, such as \(3 + 7 = 10\) and \(5 + 11 = 16\), and find that every time we add two odd integers, the sum is an even integer. However, it is not possible to test every pair of odd integers, and so we can only say that the conjecture appears to be true. (We will prove that this statement is true in the next section.)

- Use of prior knowledge. This also is very important. We cannot start from square one every time we explore a statement. We must make use of our acquired mathematical knowledge. For the conjecture that \(\sin (2x) = 2 \sin(x)\), for all real numbers \(x\), we might recall that there are trigonometric identities called “double angle identities.” We may even remember the correct identity for \(\sin (2x)\), but if we do not, we can always look it up. We should recall (or find) that for all real numbers \(x\), \[\sin(2x) = 2 \sin(x)\cos(x).\]

- We could use this identity to argue that the conjecture “for all real numbers \(x\), \(\sin (2x) = 2 \sin(x)\)” is false, but if we do, it is still a good idea to give a specific counterexample as we did before.

- Cooperation and brainstorming . Working together is often more fruitful than working alone. When we work with someone else, we can compare notes and articulate our ideas. Thinking out loud is often a useful brainstorming method that helps generate new ideas.
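The kind of numerical exploration described above can be sketched in Python. The function names and the tolerance are assumptions made for this illustration, and floating-point comparison only approximates the exact values used in the text.

```python
import math

def conjecture_holds_at(x):
    """Check the conjecture sin(2x) = 2 sin(x) at one value of x."""
    return math.isclose(math.sin(2 * x), 2 * math.sin(x), abs_tol=1e-12)

def identity_holds_at(x):
    """Check the double angle identity sin(2x) = 2 sin(x) cos(x) at one value of x."""
    return math.isclose(math.sin(2 * x), 2 * math.sin(x) * math.cos(x), abs_tol=1e-12)

# x = 0 happens to agree with the conjecture, but x = pi/4 is a counterexample.
print(conjecture_holds_at(0.0))          # True
print(conjecture_holds_at(math.pi / 4))  # False

# The identity agrees at every sample we try, which supports it but does not prove it.
samples = [k * math.pi / 12 for k in range(-24, 25)]
print(all(identity_holds_at(x) for x in samples))  # True

# Every pair of odd integers between -99 and 99 has an even sum (evidence, not proof).
odds = range(-99, 100, 2)
odd_sums_are_even = all((x + y) % 2 == 0 for x in odds for y in odds)
print(odd_sums_are_even)  # True
```

The single failure at \(x = \frac{\pi}{4}\) is conclusive, whereas the thousands of successful checks for odd sums only suggest that the conjecture is true.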

Progress Check 1.2: Explorations

Use the techniques of exploration to investigate each of the following statements. Can you make a conjecture as to whether the statement is true or false? Can you determine whether it is true or false?

- \((a + b)^2 = a^2 + b^2\), for all real numbers \(a\) and \(b\).

- There are integers \(x\) and \(y\) such that \(2x + 5y = 41\).

- If \(x\) is an even integer, then \(x^2\) is an even integer.

- If \(x\) and \(y\) are odd integers, then \(x \cdot y\) is an odd integer.

Conditional Statements

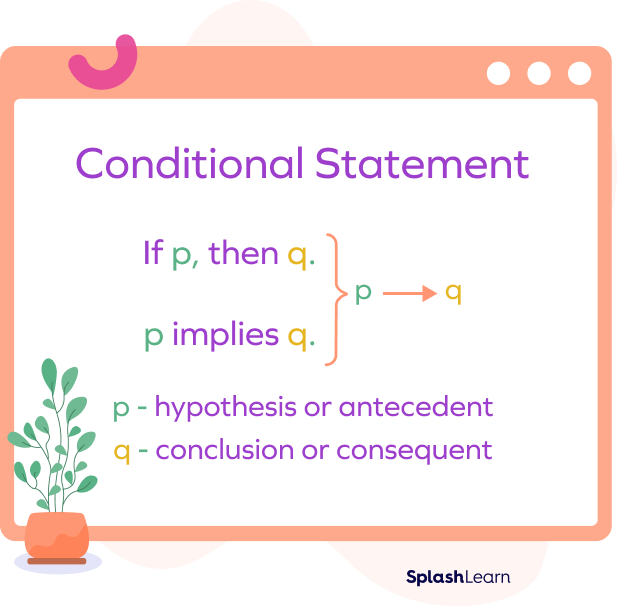

One of the most frequently used types of statements in mathematics is the so-called conditional statement. Given statements \(P\) and \(Q\), a statement of the form “If \(P\) then \(Q\)” is called a conditional statement . It seems reasonable that the truth value (true or false) of the conditional statement “If \(P\) then \(Q\)” depends on the truth values of \(P\) and \(Q\). The statement “If \(P\) then \(Q\)” means that \(Q\) must be true whenever \(P\) is true. The statement \(P\) is called the hypothesis of the conditional statement, and the statement \(Q\) is called the conclusion of the conditional statement. Since conditional statements are probably the most important type of statement in mathematics, we give a more formal definition.

A conditional statement is a statement that can be written in the form “If \(P\) then \(Q\),” where \(P\) and \(Q\) are sentences. For this conditional statement, \(P\) is called the hypothesis and \(Q\) is called the conclusion.

Intuitively, “If \(P\) then \(Q\)” means that \(Q\) must be true whenever \(P\) is true. Because conditional statements are used so often, a symbolic shorthand notation is used to represent the conditional statement “If \(P\) then \(Q\).” We will use the notation \(P \to Q\) to represent “If \(P\) then \(Q\).” When \(P\) and \(Q\) are statements, it seems reasonable that the truth value (true or false) of the conditional statement \(P \to Q\) depends on the truth values of \(P\) and \(Q\). There are four cases to consider:

- \(P\) is true and \(Q\) is true.

- \(P\) is false and \(Q\) is true.

- \(P\) is true and \(Q\) is false.

- \(P\) is false and \(Q\) is false.

The conditional statement \(P \to Q\) means that \(Q\) is true whenever \(P\) is true. It says nothing about the truth value of \(Q\) when \(P\) is false. Using this as a guide, we define the conditional statement \(P \to Q\) to be false only when \(P\) is true and \(Q\) is false, that is, only when the hypothesis is true and the conclusion is false. In all other cases, \(P \to Q\) is true. This is summarized in Table 1.1, which is called a truth table for the conditional statement \(P \to Q\). (In Table 1.1, T stands for “true” and F stands for “false.”)

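Table 1.1: Truth Table for \(P \to Q\)

| \(P\) | \(Q\) | \(P \to Q\) |
|---|---|---|
| T | T | T |
| T | F | F |
| F | T | T |
| F | F | T |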
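The definition of \(P \to Q\) given above can be sketched in Python. The function name `implies` is a hypothetical helper (Python has no built-in implication operator); it encodes the rule that the conditional is false only when the hypothesis is true and the conclusion is false.

```python
from itertools import product

def implies(p, q):
    """Truth value of 'If p then q': false only when p is true and q is false."""
    return (not p) or q

# Enumerate the four possible truth combinations for P and Q.
rows = [(p, q, implies(p, q)) for p, q in product([True, False], repeat=2)]

for p, q, result in rows:
    print(f"P={p!s:5}  Q={q!s:5}  P->Q={result}")
```

Writing the conditional as `(not p) or q` is one common way to express the same convention. It yields true in both rows where the hypothesis is false.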

The important thing to remember is that the conditional statement \(P \to Q\) has its own truth value. It is either true or false (and not both). Its truth value depends on the truth values for \(P\) and \(Q\), but some find it a bit puzzling that the conditional statement is considered to be true when the hypothesis \(P\) is false. We will provide a justification for this through the use of an example.

Example 1.3:

Suppose that I say

“If it is not raining, then Daisy is riding her bike.”

We can represent this conditional statement as \(P \to Q\) where \(P\) is the statement, “It is not raining” and \(Q\) is the statement, “Daisy is riding her bike.”

Although it is not a perfect analogy, think of the statement \(P \to Q\) as being false to mean that I lied and think of the statement \(P \to Q\) as being true to mean that I did not lie. We will now check the truth value of \(P \to Q\) based on the truth values of \(P\) and \(Q\).

- Suppose that both \(P\) and \(Q\) are true. That is, it is not raining and Daisy is riding her bike. In this case, it seems reasonable to say that I told the truth and that \(P \to Q\) is true.

- Suppose that \(P\) is true and \(Q\) is false or that it is not raining and Daisy is not riding her bike. It would appear that by making the statement, “If it is not raining, then Daisy is riding her bike,” that I have not told the truth. So in this case, the statement \(P \to Q\) is false.

- Now suppose that \(P\) is false and \(Q\) is true or that it is raining and Daisy is riding her bike. Did I make a false statement by stating that if it is not raining, then Daisy is riding her bike? The key is that I did not make any statement about what would happen if it was raining, and so I did not tell a lie. So we consider the conditional statement, “If it is not raining, then Daisy is riding her bike,” to be true in the case where it is raining and Daisy is riding her bike.

- Finally, suppose that both \(P\) and \(Q\) are false. That is, it is raining and Daisy is not riding her bike. As in the previous situation, since my statement was \(P \to Q\), I made no claim about what would happen if it was raining, and so I did not tell a lie. So the statement \(P \to Q\) cannot be false in this case and so we consider it to be true.

Progress Check 1.4: Explorations with Conditional Statements

1 . Consider the following sentence:

If \(x\) is a positive real number, then \(x^2 + 8x\) is a positive real number.

Although the hypothesis and conclusion of this conditional sentence are not statements, the conditional sentence itself can be considered to be a statement as long as we know what possible numbers may be used for the variable \(x\). From the context of this sentence, it seems that we can substitute any positive real number for \(x\). We can also substitute 0 for \(x\) or a negative real number for \(x\) provided that we are willing to work with a false hypothesis in the conditional statement. (In Chapter 2, we will learn how to be more careful and precise with these types of conditional statements.)

(a) Notice that if \(x = -3\), then \(x^2 + 8x = -15\), which is negative. Does this mean that the given conditional statement is false?

(b) Notice that if \(x = 4\), then \(x^2 + 8x = 48\), which is positive. Does this mean that the given conditional statement is true?

(c) Do you think this conditional statement is true or false? Record the results for at least five different examples where the hypothesis of this conditional statement is true.

2 . “If \(n\) is a positive integer, then \(n^2 - n +41\) is a prime number.” (Remember that a prime number is a positive integer greater than 1 whose only positive factors are 1 and itself.) To explore whether or not this statement is true, try using (and recording your results) for \(n = 1\), \(n = 2\), \(n = 3\), \(n = 4\), \(n = 5\), and \(n = 10\). Then record the results for at least four other values of \(n\). Does this conditional statement appear to be true?

Further Remarks about Conditional Statements

Suppose that Ed has exactly $52 in his wallet. The following four statements will use the four possible truth combinations for the hypothesis and conclusion of a conditional statement.

- If Ed has exactly $52 in his wallet, then he has $20 in his wallet. This is a true statement. Notice that both the hypothesis and the conclusion are true.

- If Ed has exactly $52 in his wallet, then he has $100 in his wallet. This statement is false. Notice that the hypothesis is true and the conclusion is false.

- If Ed has $100 in his wallet, then he has at least $50 in his wallet. This statement is true regardless of how much money he has in his wallet. In this case, the hypothesis is false and the conclusion is true.

- If Ed has $100 in his wallet, then he has at least $80 in his wallet. This statement is true regardless of how much money he has in his wallet. In this case, the hypothesis is false and the conclusion is false.

This is admittedly a contrived example but it does illustrate that the conventions for the truth value of a conditional statement make sense. The message is that in order to be complete in mathematics, we need to have conventions about when a conditional statement is true and when it is false.

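Because a conditional statement is false in only one case, a single example can show that it is false. Recall the following conditional statement from Progress Check 1.4:

If \(n\) is a positive integer, then \((n^2 - n + 41)\) is a prime number.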

Perhaps for all of the values you tried for \(n\), \((n^2 - n + 41)\) turned out to be a prime number. However, if we try \(n = 41\), we get \[n^2 - n + 41 = 41^2 - 41 + 41 = 41^2.\] So in the case where \(n = 41\), the hypothesis is true (41 is a positive integer) and the conclusion is false (\(41^2\) is not prime). Therefore, 41 is a counterexample for this conjecture and the conditional statement “If \(n\) is a positive integer, then \((n^2 - n + 41)\) is a prime number” is false. There are other counterexamples (such as \(n = 42\), \(n = 45\), and \(n = 50\)), but only one counterexample is needed to prove that the statement is false.
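A search for counterexamples to this conjecture can be sketched in Python. The helper names `is_prime` and `counterexamples` are hypothetical, and trial division is used only because it is simple. It is not an efficient primality test.

```python
def is_prime(m):
    """Trial-division primality test for a positive integer m."""
    if m < 2:
        return False
    d = 2
    while d * d <= m:
        if m % d == 0:
            return False
        d += 1
    return True

def counterexamples(limit):
    """Positive integers n <= limit for which n^2 - n + 41 is not prime."""
    return [n for n in range(1, limit + 1) if not is_prime(n * n - n + 41)]

print(counterexamples(40))  # []
print(counterexamples(50))  # [41, 42, 45, 50]
```

The first forty values all produce primes, which shows how misleading a modest amount of exploration can be. The search agrees with the counterexamples named in the text.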

- Although one example can be used to prove that a conditional statement is false, in most cases, we cannot use examples to prove that a conditional statement is true. For example, in Progress Check 1.4, we substituted values for \(x\) for the conditional statement “If \(x\) is a positive real number, then \(x^2 + 8x\) is a positive real number.” For every positive real number used for \(x\), we saw that \(x^2 + 8x\) was positive. However, this does not prove the conditional statement to be true because it is impossible to substitute every positive real number for \(x\). So, although we may believe this statement is true, to be able to conclude it is true, we need to write a mathematical proof. Methods of proof will be discussed in Section 1.2 and Chapter 3.

Progress Check 1.5: Working with a Conditional Statement

The following statement is a true statement, which is proven in many calculus texts.

If the function \(f\) is differentiable at \(a\), then the function \(f\) is continuous at \(a\).

Using only this true statement, is it possible to make a conclusion about the function in each of the following cases?

- It is known that the function \(f\), where \(f(x) = \sin x\), is differentiable at 0.

- It is known that the function \(f\), where \(f(x) = \sqrt[3]{x}\), is not differentiable at 0.

- It is known that the function \(f\), where \(f(x) = |x|\), is continuous at 0.

- It is known that the function \(f\), where \(f(x) = \dfrac{|x|}{x}\), is not continuous at 0.

Closure Properties of Number Systems

The primary number system used in algebra and calculus is the real number system. We usually use the symbol \(\mathbb{R}\) to stand for the set of all real numbers. The real numbers consist of the rational numbers and the irrational numbers. The rational numbers are those real numbers that can be written as a quotient of two integers (with a nonzero denominator), and the irrational numbers are those real numbers that cannot be written as a quotient of two integers. That is, a rational number can be written in the form of a fraction, and an irrational number cannot be written in the form of a fraction. Some common irrational numbers are \(\sqrt2\), \(\pi\) and \(e\). We usually use the symbol \(\mathbb{Q}\) to represent the set of all rational numbers. (The letter \(\mathbb{Q}\) is used because rational numbers are quotients of integers.) There is no standard symbol for the set of all irrational numbers.

Perhaps the most basic number system used in mathematics is the set of natural numbers . The natural numbers consist of the positive whole numbers such as 1, 2, 3, 107, and 203. We will use the symbol \(\mathbb{N}\) to stand for the set of natural numbers. Another basic number system that we will be working with is the set of integers . The integers consist of zero, the positive whole numbers, and the negatives of the positive whole numbers. If \(n\) is an integer, we can write \(n = \dfrac{n}{1}\). So each integer is a rational number and hence also a real number.

We will use the letter \(\mathbb{Z}\) to stand for the set of integers. (The letter \(\mathbb{Z}\) is from the German word, \(Zahlen\), for numbers.) Three of the basic properties of the integers are that the set \(\mathbb{Z}\) is closed under addition, the set \(\mathbb{Z}\) is closed under multiplication, and the set \(\mathbb{Z}\) is closed under subtraction. This means that

- If \(x\) and \(y\) are integers, then \(x + y\) is an integer;

- If \(x\) and \(y\) are integers, then \(x \cdot y\) is an integer; and

- If \(x\) and \(y\) are integers, then \(x - y\) is an integer.

Notice that these so-called closure properties are defined in terms of conditional statements. This means that if we can find one instance where the hypothesis is true and the conclusion is false, then the conditional statement is false.

Example 1.6: Closure

- In order for the set of natural numbers to be closed under subtraction, the following conditional statement would have to be true: If \(x\) and \(y\) are natural numbers, then \(x - y\) is a natural number. However, since 5 and 8 are natural numbers and \(5 - 8 = -3\), which is not a natural number, this conditional statement is false. Therefore, the set of natural numbers is not closed under subtraction.

- We can use the rules for multiplying fractions and the closure rules for the integers to show that the rational numbers are closed under multiplication. If \(\dfrac{a}{b}\) and \(\dfrac{c}{d}\) are rational numbers (so \(a\), \(b\), \(c\), and \(d\) are integers and \(b\) and \(d\) are not zero), then \(\dfrac{a}{b} \cdot \dfrac{c}{d} = \dfrac{ac}{bd}.\) Since the integers are closed under multiplication, we know that \(ac\) and \(bd\) are integers and since \(b \ne 0\) and \(d \ne 0\), \(bd \ne 0\). Hence, \(\dfrac{ac}{bd}\) is a rational number and this shows that the rational numbers are closed under multiplication.
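The two parts of this example can be explored with a short Python sketch. The helper names `is_natural` and `find_closure_counterexample` are hypothetical, and the search covers only a small finite sample. It can therefore refute a closure claim but can never prove one.

```python
from fractions import Fraction
from itertools import product

def is_natural(x):
    """True when x is a positive whole number (a natural number)."""
    return isinstance(x, int) and x >= 1

def find_closure_counterexample(elements, op, belongs):
    """Return the first pair (x, y) whose result op(x, y) leaves the set, or None."""
    for x, y in product(elements, repeat=2):
        if not belongs(op(x, y)):
            return (x, y)
    return None

naturals = range(1, 11)  # a small sample of natural numbers

# Subtraction: the search stops at the first pair whose difference is not natural.
print(find_closure_counterexample(naturals, lambda x, y: x - y, is_natural))  # (1, 1)
print(is_natural(5 - 8))  # False, the counterexample used in the text

# Addition: no counterexample in the sample (evidence only, not a proof).
print(find_closure_counterexample(naturals, lambda x, y: x + y, is_natural))  # None

# The multiplication rule for fractions, checked on sample integers a, b, c, d.
rule_holds = all(
    Fraction(a, b) * Fraction(c, d) == Fraction(a * c, b * d)
    for a, c in product(range(-3, 4), repeat=2)
    for b, d in product([1, 2, 3, 5], repeat=2)
)
print(rule_holds)  # True
```

The search reports the pair (1, 1) rather than the pair 5 and 8 from the text simply because it is the first failure found. Any single failing pair is enough.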

Progress Check 1.7: Closure Properties

Answer each of the following questions.

- Is the set of rational numbers closed under addition? Explain.

- Is the set of integers closed under division? Explain.

- Is the set of rational numbers closed under subtraction? Explain.

Exercises

- Which of the following sentences are statements? (a) \(3^2 + 4^2 = 5^2.\) (b) \(a^2 + b^2 = c^2.\) (c) There exist integers \(a\), \(b\), and \(c\) such that \(a^2 + b^2 = c^2.\) (d) If \(x^2 = 4\), then \(x = 2.\) (e) For each real number \(x\), if \(x^2 = 4\), then \(x = 2.\) (f) For each real number \(t\), \(\sin^2t + \cos^2t = 1.\) (g) \(\sin x < \sin (\frac{\pi}{4}).\) (h) If \(n\) is a prime number, then \(n^2\) has three positive factors. (i) \(1 + \tan^2 \theta = \sec^2 \theta.\) (j) Every rectangle is a parallelogram. (k) Every even natural number greater than or equal to 4 is the sum of two prime numbers.

- Identify the hypothesis and the conclusion for each of the following conditional statements. (a) If \(n\) is a prime number, then \(n^2\) has three positive factors. (b) If \(a\) is an irrational number and \(b\) is an irrational number, then \(a \cdot b\) is an irrational number. (c) If \(p\) is a prime number, then \(p = 2\) or \(p\) is an odd number. (d) If \(p\) is a prime number and \(p \ne 2\), then \(p\) is an odd number. (e) If \(p \ne 2\) or \(p\) is an even number, then \(p\) is not prime.

- Determine whether each of the following conditional statements is true or false. (a) If 10 < 7, then 3 = 4. (b) If 7 < 10, then 3 = 4. (c) If 10 < 7, then 3 + 5 = 8. (d) If 7 < 10, then 3 + 5 = 8.

- Determine the conditions under which each of the following conditional sentences will be a true statement. (a) If a + 2 = 5, then 8 < 5. (b) If 5 < 8, then a + 2 = 5.

- Let \(P\) be the statement “Student X passed every assignment in Calculus I,” and let \(Q\) be the statement “Student X received a grade of C or better in Calculus I.” (a) What does it mean for \(P\) to be true? What does it mean for \(Q\) to be true? (b) Suppose that Student X passed every assignment in Calculus I and received a grade of B-, and that the instructor made the statement \(P \to Q\). Would you say that the instructor lied or told the truth? (c) Suppose that Student X passed every assignment in Calculus I and received a grade of C-, and that the instructor made the statement \(P \to Q\). Would you say that the instructor lied or told the truth? (d) Now suppose that Student X did not pass two assignments in Calculus I and received a grade of D, and that the instructor made the statement \(P \to Q\). Would you say that the instructor lied or told the truth? (e) How are Parts (5b), (5c), and (5d) related to the truth table for \(P \to Q\)?

- Theorem. If \(f\) is a quadratic function of the form \(f(x) = ax^2 + bx + c\) and \(a < 0\), then the function \(f\) has a maximum value when \(x = \dfrac{-b}{2a}\). Using only this theorem, what can be concluded about the functions given by the following formulas? (a) \(g (x) = -8x^2 + 5x - 2\) (b) \(h (x) = -\dfrac{1}{3}x^2 + 3x\) (c) \(k (x) = 8x^2 - 5x - 7\) (d) \(j (x) = -\dfrac{71}{99}x^2 +210\) (e) \(f (x) = -4x^2 - 3x + 7\) (f) \(F (x) = -x^4 + x^3 + 9\)

- Theorem. If \(f\) is a quadratic function of the form \(f(x) = ax^2 + bx + c\) and \(ac < 0\), then the function \(f\) has two \(x\)-intercepts.

Using only this theorem, what can be concluded about the functions given by the following formulas? (a) \(g (x) = -8x^2 + 5x - 2\) (b) \(h (x) = -\dfrac{1}{3}x^2 + 3x\) (c) \(k (x) = 8x^2 - 5x - 7\) (d) \(j (x) = -\dfrac{71}{99}x^2 +210\) (e) \(f (x) = -4x^2 - 3x + 7\) (f) \(F (x) = -x^4 + x^3 + 9\)

- Theorem A. If \(f\) is a cubic function of the form \(f (x) = x^3 - x + b\) and \(b > 1\), then the function \(f\) has exactly one \(x\)-intercept. Following is another theorem about \(x\)-intercepts of functions: Theorem B. If \(f\) and \(g\) are functions with \(g (x) = k \cdot f (x)\), where \(k\) is a nonzero real number, then \(f\) and \(g\) have exactly the same \(x\)-intercepts.

Using only these two theorems and some simple algebraic manipulations, what can be concluded about the functions given by the following formulas? (a) \(f (x) = x^3 -x + 7\) (b) \(g (x) = x^3 + x +7\) (c) \(h (x) = -x^3 + x - 5\) (d) \(k (x) = 2x^3 + 2x + 3\) (e) \(r (x) = x^4 - x + 11\) (f) \(F (x) = 2x^3 - 2x + 7\)

- (a) Is the set of natural numbers closed under division? (b) Is the set of rational numbers closed under division? (c) Is the set of nonzero rational numbers closed under division? (d) Is the set of positive rational numbers closed under division? (e) Is the set of positive real numbers closed under subtraction? (f) Is the set of negative rational numbers closed under division? (g) Is the set of negative integers closed under addition?

Explorations and Activities

- Exploring Propositions. In Progress Check 1.2, we used exploration to show that certain statements were false and to make conjectures that certain statements were true. We can also use exploration to formulate a conjecture that we believe to be true. For example, if we calculate successive powers of 2 \((2^1, 2^2, 2^3, 2^4, 2^5, \ldots)\) and examine the units digits of these numbers, we could make the following conjectures (among others):

  - If \(n\) is a natural number, then the units digit of \(2^n\) must be 2, 4, 6, or 8.

  - The units digits of the successive powers of 2 repeat according to the pattern “2, 4, 8, 6.”

  (a) Is it possible to formulate a conjecture about the units digits of successive powers of 4 \((4^1, 4^2, 4^3, 4^4, 4^5, \ldots)\)? If so, formulate at least one conjecture.

  (b) Is it possible to formulate a conjecture about the units digit of numbers of the form \(7^n - 2^n\), where \(n\) is a natural number? If so, formulate a conjecture in the form of a conditional statement in the form “If \(n\) is a natural number, then ... .”

  (c) Let \(f (x) = e^{2x}\). Determine the first eight derivatives of this function. What do you observe? Formulate a conjecture that appears to be true. The conjecture should be written as a conditional statement in the form, “If \(n\) is a natural number, then ... .”
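The exploration of units digits of powers of 2 described in this activity can be sketched in Python. The function name `units_digits` is a hypothetical helper; it relies on Python's built-in three-argument `pow`, which computes a power modulo a number.

```python
def units_digits(base, count):
    """Units digits of base^1, base^2, ..., base^count."""
    return [pow(base, n, 10) for n in range(1, count + 1)]

digits = units_digits(2, 12)
print(digits)  # [2, 4, 8, 6, 2, 4, 8, 6, 2, 4, 8, 6]

# Both conjectures from the text agree with this sample (evidence, not proof).
print(set(digits) <= {2, 4, 6, 8})       # True
print(digits == [2, 4, 8, 6] * 3)        # True
```

The same helper can be called with a different base to gather data for the other parts of the activity.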

A hypothesis is a proposition that is consistent with known data, but has been neither verified nor shown to be false.

In statistics, a hypothesis (sometimes called a statistical hypothesis) refers to a statement on which hypothesis testing will be based. Particularly important statistical hypotheses include the null hypothesis and alternative hypothesis .

In symbolic logic , a hypothesis is the first part of an implication (with the second part being known as the predicate ).

In general mathematical usage, "hypothesis" is roughly synonymous with " conjecture ."

Cite this as:

Weisstein, Eric W. "Hypothesis." From MathWorld --A Wolfram Web Resource. https://mathworld.wolfram.com/Hypothesis.html

Published 2008 Revised 2019

Understanding Hypotheses

'What happens if ... ?' to 'This will happen if'

The experimentation of children continually moves on to the exploration of new ideas and the refinement of their world view of previously understood situations. This description of the playtime patterns of young children very nicely models the concept of 'making and testing hypotheses'. It follows this pattern:

- Make some observations. Collect some data based on the observations.

- Draw a conclusion (called a 'hypothesis') which will explain the pattern of the observations.

- Test out your hypothesis by making some more targeted observations.

So, we have

- A hypothesis is a statement or idea which gives an explanation to a series of observations.

Sometimes, following observation, a hypothesis will clearly need to be refined or rejected. This happens if a single contradictory observation occurs. For example, suppose that a child is trying to understand the concept of a dog. He reads about several dogs in children's books and sees that they are always friendly and fun. He makes the natural hypothesis in his mind that dogs are friendly and fun . He then meets his first real dog: his neighbour's puppy who is great fun to play with. This reinforces his hypothesis. His cousin's dog is also very friendly and great fun. He meets some of his friends' dogs on various walks to playgroup. They are also friendly and fun. He is now confident that his hypothesis is sound. Suddenly, one day, he sees a dog, tries to stroke it and is bitten. This experience contradicts his hypothesis. He will need to amend the hypothesis. We see that

- Gathering more evidence/data can strengthen a hypothesis if it is in agreement with the hypothesis.

- If the data contradicts the hypothesis then the hypothesis must be rejected or amended to take into account the contradictory situation.

- A contradictory observation can cause us to know for certain that a hypothesis is incorrect.

- Accumulation of supporting experimental evidence will strengthen a hypothesis but will never let us know for certain that the hypothesis is true.

In short, it is possible to show that a hypothesis is false, but impossible to prove that it is true!

Whilst we can never prove a scientific hypothesis to be true, there will be a certain stage at which we decide that there is sufficient supporting experimental data for us to accept the hypothesis. The point at which we make the choice to accept a hypothesis depends on many factors. In practice, the key issues are

- What are the implications of mistakenly accepting a hypothesis which is false?

- What are the cost / time implications of gathering more data?

- What are the implications of not accepting in a timely fashion a true hypothesis?

For example, suppose that a drug company is testing a new cancer drug. They hypothesise that the drug is safe with no side effects. If they are mistaken in this belief and release the drug then the results could have a disastrous effect on public health. However, running extended clinical trials might be very costly and time consuming. Furthermore, a delay in accepting the hypothesis and releasing the drug might also have a negative effect on the health of many people.

In short, whilst we can never achieve absolute certainty with the testing of hypotheses, in order to make progress in science or industry decisions need to be made. There is a fine balance to be made between action and inaction.

Hypotheses and mathematics

So where does mathematics enter into this picture? In many ways, both obvious and subtle:

- A good hypothesis needs to be clear, precisely stated and testable in some way. Creation of these clear hypotheses requires clear general mathematical thinking.

- The data from experiments must be carefully analysed in relation to the original hypothesis. This requires the data to be structured, operated upon, prepared and displayed in appropriate ways. The levels of this process can range from simple to exceedingly complex.

Very often, the situation under analysis will appear to be complicated and unclear. Part of the mathematics of the task will be to impose a clear structure on the problem. The clarity of thought required will actively be developed through more abstract mathematical study. Those without sufficient general mathematical skill will be unable to perform an appropriate logical analysis.

Using deductive reasoning in hypothesis testing

There is often confusion between the ideas surrounding proof, which is mathematics, and making and testing an experimental hypothesis, which is science. The difference is rather simple:

- Mathematics is based on deductive reasoning: a proof is a logical deduction from a set of clear inputs.

- Science is based on inductive reasoning: hypotheses are strengthened or rejected based on an accumulation of experimental evidence.

Of course, to be good at science, you need to be good at deductive reasoning, although experts at deductive reasoning need not be mathematicians. Detectives, such as Sherlock Holmes and Hercule Poirot, are such experts: they collect evidence from a crime scene and then draw logical conclusions from the evidence to support the hypothesis that, for example, Person M. committed the crime. They use this evidence to create sufficiently compelling deductions to support their hypotheses beyond reasonable doubt. The key word here is 'reasonable'. There is always the possibility of creating an exceedingly outlandish scenario to explain away any hypothesis of a detective or prosecution lawyer, but judges and juries in courts eventually make the decision that the probability of such eventualities is 'small' and the chance of the hypothesis being correct 'high'.

- If a set of data is normally distributed with mean 0 and standard deviation 0.5 then there is a 97.7% certainty that a measurement will not exceed 1.0.

- If the mean of a sample of data is 12, how confident can we be that the true mean of the population lies between 11 and 13?
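The first statement above can be checked numerically. The following is a minimal sketch using Python's standard library; the variable names are illustrative and not part of the original article.

```python
from statistics import NormalDist

# Measurements modelled as normal with mean 0 and standard deviation 0.5,
# as in the first statement above.
measurement = NormalDist(mu=0.0, sigma=0.5)

# Probability that a single measurement does not exceed 1.0,
# which lies two standard deviations above the mean.
prob_not_exceeding = measurement.cdf(1.0)

print(f"P(X <= 1.0) = {prob_not_exceeding:.3f}")
```

The second statement cannot be evaluated in the same way without further information, such as the sample size and the spread of the data.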

It is at this point that making and testing hypotheses becomes a true branch of mathematics. This mathematics is difficult, but fascinating and highly relevant in the information-rich world of today.

To read more about the technical side of hypothesis testing, take a look at What is a Hypothesis Test?

You might also enjoy reading the articles on statistics on the Understanding Uncertainty website

This resource is part of the collection Statistics - Maths of Real Life


9.1: Introduction to Hypothesis Testing


- Kyle Siegrist

- University of Alabama in Huntsville via Random Services

Basic Theory

Preliminaries.

As usual, our starting point is a random experiment with an underlying sample space and a probability measure \(\P\). In the basic statistical model, we have an observable random variable \(\bs{X}\) taking values in a set \(S\). In general, \(\bs{X}\) can have quite a complicated structure. For example, if the experiment is to sample \(n\) objects from a population and record various measurements of interest, then \[ \bs{X} = (X_1, X_2, \ldots, X_n) \] where \(X_i\) is the vector of measurements for the \(i\)th object. The most important special case occurs when \((X_1, X_2, \ldots, X_n)\) are independent and identically distributed. In this case, we have a random sample of size \(n\) from the common distribution.

The purpose of this section is to define and discuss the basic concepts of statistical hypothesis testing . Collectively, these concepts are sometimes referred to as the Neyman-Pearson framework, in honor of Jerzy Neyman and Egon Pearson, who first formalized them.

A statistical hypothesis is a statement about the distribution of \(\bs{X}\). Equivalently, a statistical hypothesis specifies a set of possible distributions of \(\bs{X}\): the set of distributions for which the statement is true. A hypothesis that specifies a single distribution for \(\bs{X}\) is called simple ; a hypothesis that specifies more than one distribution for \(\bs{X}\) is called composite .

In hypothesis testing , the goal is to see if there is sufficient statistical evidence to reject a presumed null hypothesis in favor of a conjectured alternative hypothesis . The null hypothesis is usually denoted \(H_0\) while the alternative hypothesis is usually denoted \(H_1\).

An hypothesis test is a statistical decision ; the conclusion will either be to reject the null hypothesis in favor of the alternative, or to fail to reject the null hypothesis. The decision that we make must, of course, be based on the observed value \(\bs{x}\) of the data vector \(\bs{X}\). Thus, we will find an appropriate subset \(R\) of the sample space \(S\) and reject \(H_0\) if and only if \(\bs{x} \in R\). The set \(R\) is known as the rejection region or the critical region . Note the asymmetry between the null and alternative hypotheses. This asymmetry is due to the fact that we assume the null hypothesis, in a sense, and then see if there is sufficient evidence in \(\bs{x}\) to overturn this assumption in favor of the alternative.

An hypothesis test is a statistical analogy to proof by contradiction, in a sense. Suppose for a moment that \(H_1\) is a statement in a mathematical theory and that \(H_0\) is its negation. One way that we can prove \(H_1\) is to assume \(H_0\) and work our way logically to a contradiction. In an hypothesis test, we don't prove anything of course, but there are similarities. We assume \(H_0\) and then see if the data \(\bs{x}\) are sufficiently at odds with that assumption that we feel justified in rejecting \(H_0\) in favor of \(H_1\).

Often, the critical region is defined in terms of a statistic \(w(\bs{X})\), known as a test statistic , where \(w\) is a function from \(S\) into another set \(T\). We find an appropriate rejection region \(R_T \subseteq T\) and reject \(H_0\) when the observed value \(w(\bs{x}) \in R_T\). Thus, the rejection region in \(S\) is then \(R = w^{-1}(R_T) = \left\{\bs{x} \in S: w(\bs{x}) \in R_T\right\}\). As usual, the use of a statistic often allows significant data reduction when the dimension of the test statistic is much smaller than the dimension of the data vector.

The ultimate decision may be correct or may be in error. There are two types of errors, depending on which of the hypotheses is actually true.

Types of errors:

- A type 1 error is rejecting the null hypothesis \(H_0\) when \(H_0\) is true.

- A type 2 error is failing to reject the null hypothesis \(H_0\) when the alternative hypothesis \(H_1\) is true.

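Similarly, there are two ways to make a correct decision: we could reject \(H_0\) when \(H_1\) is true or we could fail to reject \(H_0\) when \(H_0\) is true. The possibilities are summarized in the following table:

| Decision | \(H_0\) is true | \(H_1\) is true |
| --- | --- | --- |
| Reject \(H_0\) | Type 1 error | Correct decision |
| Fail to reject \(H_0\) | Correct decision | Type 2 error |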

Of course, when we observe \(\bs{X} = \bs{x}\) and make our decision, either we will have made the correct decision or we will have committed an error, and usually we will never know which of these events has occurred. Prior to gathering the data, however, we can consider the probabilities of the various errors.

If \(H_0\) is true (that is, the distribution of \(\bs{X}\) is specified by \(H_0\)), then \(\P(\bs{X} \in R)\) is the probability of a type 1 error for this distribution. If \(H_0\) is composite, then \(H_0\) specifies a variety of different distributions for \(\bs{X}\) and thus there is a set of type 1 error probabilities.

The maximum probability of a type 1 error, over the set of distributions specified by \( H_0 \), is the significance level of the test or the size of the critical region.

The significance level is often denoted by \(\alpha\). Usually, the rejection region is constructed so that the significance level is a prescribed, small value (typically 0.1, 0.05, 0.01).

If \(H_1\) is true (that is, the distribution of \(\bs{X}\) is specified by \(H_1\)), then \(\P(\bs{X} \notin R)\) is the probability of a type 2 error for this distribution. Again, if \(H_1\) is composite then \(H_1\) specifies a variety of different distributions for \(\bs{X}\), and thus there will be a set of type 2 error probabilities. Generally, there is a tradeoff between the type 1 and type 2 error probabilities. If we reduce the probability of a type 1 error, by making the rejection region \(R\) smaller, we necessarily increase the probability of a type 2 error because the complementary region \(S \setminus R\) is larger.

The extreme cases can give us some insight. First consider the decision rule in which we never reject \(H_0\), regardless of the evidence \(\bs{x}\). This corresponds to the rejection region \(R = \emptyset\). A type 1 error is impossible, so the significance level is 0. On the other hand, the probability of a type 2 error is 1 for any distribution defined by \(H_1\). At the other extreme, consider the decision rule in which we always reject \(H_0\) regardless of the evidence \(\bs{x}\). This corresponds to the rejection region \(R = S\). A type 2 error is impossible, but now the probability of a type 1 error is 1 for any distribution defined by \(H_0\). In between these two worthless tests are meaningful tests that take the evidence \(\bs{x}\) into account.

If \(H_1\) is true, so that the distribution of \(\bs{X}\) is specified by \(H_1\), then \(\P(\bs{X} \in R)\), the probability of rejecting \(H_0\), is the power of the test for that distribution.

Thus the power of the test for a distribution specified by \( H_1 \) is the probability of making the correct decision.
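The tradeoff between the two error probabilities can be illustrated with a small numerical sketch. Everything specific here is an assumption made for illustration and is not taken from the text: the data are a normal sample of size 25 with known standard deviation 1, the hypotheses are the simple pair \(H_0: \mu = 0\) versus \(H_1: \mu = 0.5\), and the rejection region is "sample mean exceeds \(c\)".

```python
from statistics import NormalDist

SIGMA, N = 1.0, 25           # assumed known standard deviation and sample size
STD_ERR = SIGMA / N ** 0.5   # standard deviation of the sample mean


def error_probabilities(c, mu0=0.0, mu1=0.5):
    """Return (type 1, type 2) error probabilities for the rule
    'reject H0 when the sample mean exceeds c'."""
    type1 = 1.0 - NormalDist(mu0, STD_ERR).cdf(c)  # P(reject H0) when H0 is true
    type2 = NormalDist(mu1, STD_ERR).cdf(c)        # P(fail to reject) when H1 is true
    return type1, type2


# Raising c shrinks the rejection region: type 1 error falls, type 2 error rises.
for c in (0.1, 0.2, 0.3, 0.4):
    type1, type2 = error_probabilities(c)
    print(f"c={c:.1f}  type 1={type1:.3f}  type 2={type2:.3f}  power={1 - type2:.3f}")
```

In this sketch the power at \(\mu = 0.5\) is simply one minus the type 2 error probability, in line with the definition above.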

Suppose that we have two tests, corresponding to rejection regions \(R_1\) and \(R_2\), respectively, each having significance level \(\alpha\). The test with region \(R_1\) is uniformly more powerful than the test with region \(R_2\) if \[ \P(\bs{X} \in R_1) \ge \P(\bs{X} \in R_2) \text{ for every distribution of } \bs{X} \text{ specified by } H_1 \]

Naturally, in this case, we would prefer the first test. Often, however, two tests will not be uniformly ordered; one test will be more powerful for some distributions specified by \(H_1\) while the other test will be more powerful for other distributions specified by \(H_1\).

If a test has significance level \(\alpha\) and is uniformly more powerful than any other test with significance level \(\alpha\), then the test is said to be a uniformly most powerful test at level \(\alpha\).

Clearly a uniformly most powerful test is the best we can do.

\(P\)-value

In most cases, we have a general procedure that allows us to construct a test (that is, a rejection region \(R_\alpha\)) for any given significance level \(\alpha \in (0, 1)\). Typically, \(R_\alpha\) decreases (in the subset sense) as \(\alpha\) decreases.

The \(P\)-value of the observed value \(\bs{x}\) of \(\bs{X}\), denoted \(P(\bs{x})\), is defined to be the smallest \(\alpha\) for which \(\bs{x} \in R_\alpha\); that is, the smallest significance level for which \(H_0\) is rejected, given \(\bs{X} = \bs{x}\).

Knowing \(P(\bs{x})\) allows us to test \(H_0\) at any significance level for the given data \(\bs{x}\): If \(P(\bs{x}) \le \alpha\) then we would reject \(H_0\) at significance level \(\alpha\); if \(P(\bs{x}) \gt \alpha\) then we fail to reject \(H_0\) at significance level \(\alpha\). Note that \(P(\bs{X})\) is a statistic . Informally, \(P(\bs{x})\) can often be thought of as the probability of an outcome as or more extreme than the observed value \(\bs{x}\), where extreme is interpreted relative to the null hypothesis \(H_0\).
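As a concrete sketch of this definition, consider an assumed right-tailed test based on a standard normal test statistic \(z\), with rejection regions \(R_\alpha = \{z \ge z_{1-\alpha}\}\) that shrink as \(\alpha\) decreases. The observed value `z_obs` below is hypothetical. For such a test the smallest \(\alpha\) that rejects is the upper tail probability of the observed statistic.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def in_rejection_region(z, alpha):
    """True when z falls in R_alpha = {z >= upper alpha quantile}."""
    return z >= STD_NORMAL.inv_cdf(1.0 - alpha)


def p_value(z):
    """Smallest alpha for which z is in R_alpha: the upper tail probability."""
    return 1.0 - STD_NORMAL.cdf(z)


z_obs = 1.8  # hypothetical observed test statistic
p = p_value(z_obs)
print(f"P-value = {p:.4f}")

# Rejecting at level alpha is the same as P-value <= alpha.
for alpha in (0.10, 0.05, 0.01):
    decision = "reject H0" if p <= alpha else "fail to reject H0"
    print(f"alpha={alpha:.2f}: {decision}")
```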

Analogy with Justice Systems

There is a helpful analogy between statistical hypothesis testing and the criminal justice system in the US and various other countries. Consider a person charged with a crime. The presumed null hypothesis is that the person is innocent of the crime; the conjectured alternative hypothesis is that the person is guilty of the crime. The test of the hypotheses is a trial with evidence presented by both sides playing the role of the data. After considering the evidence, the jury delivers the decision as either not guilty or guilty. Note that innocent is not a possible verdict of the jury, because it is not the point of the trial to prove the person innocent. Rather, the point of the trial is to see whether there is sufficient evidence to overturn the null hypothesis that the person is innocent in favor of the alternative hypothesis that the person is guilty. A type 1 error is convicting a person who is innocent; a type 2 error is acquitting a person who is guilty. Generally, a type 1 error is considered the more serious of the two possible errors, so in an attempt to hold the chance of a type 1 error to a very low level, the standard for conviction in serious criminal cases is beyond a reasonable doubt.

Tests of an Unknown Parameter

Hypothesis testing is a very general concept, but an important special class occurs when the distribution of the data variable \(\bs{X}\) depends on a parameter \(\theta\) taking values in a parameter space \(\Theta\). The parameter may be vector-valued, so that \(\bs{\theta} = (\theta_1, \theta_2, \ldots, \theta_k)\) and \(\Theta \subseteq \R^k\) for some \(k \in \N_+\). The hypotheses generally take the form \[ H_0: \theta \in \Theta_0 \text{ versus } H_1: \theta \notin \Theta_0 \] where \(\Theta_0\) is a prescribed subset of the parameter space \(\Theta\). In this setting, the probabilities of making an error or a correct decision depend on the true value of \(\theta\). If \(R\) is the rejection region, then the power function \( Q \) is given by \[ Q(\theta) = \P_\theta(\bs{X} \in R), \quad \theta \in \Theta \] The power function gives a lot of information about the test.

The power function satisfies the following properties:

- \(Q(\theta)\) is the probability of a type 1 error when \(\theta \in \Theta_0\).

- \(\max\left\{Q(\theta): \theta \in \Theta_0\right\}\) is the significance level of the test.

- \(1 - Q(\theta)\) is the probability of a type 2 error when \(\theta \notin \Theta_0\).

- \(Q(\theta)\) is the power of the test when \(\theta \notin \Theta_0\).
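These properties can be seen in a simple case. The sketch below assumes, purely for illustration, a normal sample of size \(n\) with known standard deviation and the right-tailed test of \(H_0: \mu \le \mu_0\) versus \(H_1: \mu \gt \mu_0\) that rejects when the standardized sample mean exceeds the upper \(\alpha\) quantile. The function name and default values are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def power_function(mu, mu0=0.0, sigma=1.0, n=25, alpha=0.05):
    """Q(mu) = P_mu(reject H0) for the assumed right-tailed z-test."""
    z_crit = STD_NORMAL.inv_cdf(1.0 - alpha)   # critical value of the test
    shift = (mu - mu0) / (sigma / n ** 0.5)    # true mean in standard-error units
    return 1.0 - STD_NORMAL.cdf(z_crit - shift)


# For mu <= mu0 the value is a type 1 error probability; its maximum,
# attained at mu = mu0, is the significance level. For mu > mu0 it is the power.
for mu in (-0.4, -0.2, 0.0, 0.2, 0.5):
    print(f"Q({mu:+.1f}) = {power_function(mu):.4f}")
```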

If we have two tests, we can compare them by means of their power functions.

Suppose that we have two tests, corresponding to rejection regions \(R_1\) and \(R_2\), respectively, each having significance level \(\alpha\). The test with rejection region \(R_1\) is uniformly more powerful than the test with rejection region \(R_2\) if \( Q_1(\theta) \ge Q_2(\theta)\) for all \( \theta \notin \Theta_0 \).
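As an illustration, assume a normal model with known standard deviation and the hypotheses \(H_0: \mu = \mu_0\) versus \(H_1: \mu \gt \mu_0\). The sketch below compares two tests with the same significance level, a right-tailed z-test and a two-sided z-test, in terms of the standardized shift \((\mu - \mu_0)/(\sigma/\sqrt{n})\). The setup and all names are assumptions chosen for the example.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()
ALPHA = 0.05


def q_right_tailed(shift):
    """Power of the test that rejects when z exceeds the upper ALPHA quantile."""
    z_crit = STD_NORMAL.inv_cdf(1.0 - ALPHA)
    return 1.0 - STD_NORMAL.cdf(z_crit - shift)


def q_two_sided(shift):
    """Power of the test that rejects when |z| exceeds the upper ALPHA/2 quantile."""
    z_crit = STD_NORMAL.inv_cdf(1.0 - ALPHA / 2.0)
    upper = 1.0 - STD_NORMAL.cdf(z_crit - shift)   # P(z > z_crit)
    lower = STD_NORMAL.cdf(-z_crit - shift)        # P(z < -z_crit)
    return upper + lower


# Check the ordering on a grid of positive shifts (alternatives mu > mu0).
shifts = [0.25 * k for k in range(1, 17)]
right_tailed_dominates = all(q_right_tailed(d) >= q_two_sided(d) for d in shifts)
print("right-tailed test at least as powerful on the grid:", right_tailed_dominates)
```

A grid check like this is only suggestive; it does not prove the inequality for every alternative.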

Most hypothesis tests of an unknown real parameter \(\theta\) fall into three special cases:

Suppose that \( \theta \) is a real parameter and \( \theta_0 \in \Theta \) a specified value. The tests below are respectively the two-sided test , the left-tailed test , and the right-tailed test .

- \(H_0: \theta = \theta_0\) versus \(H_1: \theta \ne \theta_0\)

- \(H_0: \theta \ge \theta_0\) versus \(H_1: \theta \lt \theta_0\)

- \(H_0: \theta \le \theta_0\) versus \(H_1: \theta \gt \theta_0\)

Thus the tests are named after the conjectured alternative. Of course, there may be other unknown parameters besides \(\theta\) (known as nuisance parameters ).

Equivalence Between Hypothesis Test and Confidence Sets

There is an equivalence between hypothesis tests and confidence sets for a parameter \(\theta\).

Suppose that \(C(\bs{x})\) is a \(1 - \alpha\) level confidence set for \(\theta\). The following test has significance level \(\alpha\) for the hypothesis \( H_0: \theta = \theta_0 \) versus \( H_1: \theta \ne \theta_0 \): Reject \(H_0\) if and only if \(\theta_0 \notin C(\bs{x})\)

By definition, \(\P[\theta \in C(\bs{X})] = 1 - \alpha\). Hence if \(H_0\) is true so that \(\theta = \theta_0\), then the probability of a type 1 error is \(\P[\theta_0 \notin C(\bs{X})] = \alpha\).

Equivalently, we fail to reject \(H_0\) at significance level \(\alpha\) if and only if \(\theta_0\) is in the corresponding \(1 - \alpha\) level confidence set. In particular, this equivalence applies to interval estimates of a real parameter \(\theta\) and the common tests for \(\theta\) given above .

In each case below, the confidence interval has confidence level \(1 - \alpha\) and the test has significance level \(\alpha\).

- Suppose that \(\left[L(\bs{X}), U(\bs{X})\right]\) is a two-sided confidence interval for \(\theta\). Reject \(H_0: \theta = \theta_0\) versus \(H_1: \theta \ne \theta_0\) if and only if \(\theta_0 \lt L(\bs{X})\) or \(\theta_0 \gt U(\bs{X})\).

- Suppose that \(L(\bs{X})\) is a confidence lower bound for \(\theta\). Reject \(H_0: \theta \le \theta_0\) versus \(H_1: \theta \gt \theta_0\) if and only if \(\theta_0 \lt L(\bs{X})\).

- Suppose that \(U(\bs{X})\) is a confidence upper bound for \(\theta\). Reject \(H_0: \theta \ge \theta_0\) versus \(H_1: \theta \lt \theta_0\) if and only if \(\theta_0 \gt U(\bs{X})\).
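The two-sided case can be sketched numerically. The example assumes a normal sample with known standard deviation, so that the confidence interval is the usual z-interval for the mean. The summary values below are hypothetical.

```python
from statistics import NormalDist


def two_sided_interval(xbar, sigma, n, alpha):
    """1 - alpha confidence interval for the mean (known sigma assumed)."""
    half_width = NormalDist().inv_cdf(1.0 - alpha / 2.0) * sigma / n ** 0.5
    return xbar - half_width, xbar + half_width


def reject_by_interval(theta0, xbar, sigma, n, alpha):
    """Reject H0: theta = theta0 when theta0 lies outside the interval."""
    lower, upper = two_sided_interval(xbar, sigma, n, alpha)
    return theta0 < lower or theta0 > upper


def reject_by_z_test(theta0, xbar, sigma, n, alpha):
    """Reject H0: theta = theta0 when |z| exceeds the upper alpha/2 quantile."""
    z = (xbar - theta0) / (sigma / n ** 0.5)
    return abs(z) > NormalDist().inv_cdf(1.0 - alpha / 2.0)


xbar, sigma, n, alpha = 10.3, 2.0, 40, 0.05  # hypothetical summary data
for theta0 in (9.0, 9.8, 10.0, 10.9, 11.5):
    same = reject_by_interval(theta0, xbar, sigma, n, alpha) == \
        reject_by_z_test(theta0, xbar, sigma, n, alpha)
    print(f"theta0={theta0}: decisions agree = {same}")
```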

Pivot Variables and Test Statistics

Recall that confidence sets of an unknown parameter \(\theta\) are often constructed through a pivot variable , that is, a random variable \(W(\bs{X}, \theta)\) that depends on the data vector \(\bs{X}\) and the parameter \(\theta\), but whose distribution does not depend on \(\theta\) and is known. In this case, a natural test statistic for the basic tests given above is \(W(\bs{X}, \theta_0)\).


Hypothesis Testing


A hypothesis test is a statistical inference method used to test the significance of a proposed (hypothesized) relation between population parameters and their corresponding sample estimators. In other words, hypothesis tests are used to determine whether there is enough evidence in a sample to support a hypothesis about the entire population.

The test considers two hypotheses: the null hypothesis , which is a statement meant to be tested, usually something like "there is no effect" with the intention of proving this false, and the alternate hypothesis , which is the statement meant to stand after the test is performed. The two hypotheses must be mutually exclusive ; moreover, in most applications, the two are complementary (one being the negation of the other). The test works by comparing the \(p\)-value to the level of significance (a chosen target). If the \(p\)-value is less than or equal to the level of significance, then the null hypothesis is rejected.

When analyzing data, often only samples of a limited size can be handled efficiently, while the population itself may be very large or may follow a continuous distribution. Conclusions about the population therefore rest on statistics computed from samples. The method of hypothesis testing gives an advantage over simply guessing which distribution or which parameter values the data follow.

Definitions and Methodology

Hypothesis test and confidence intervals.

In statistical inference, properties (parameters) of a population are analyzed by sampling data sets. Given assumptions on the distribution, i.e. a statistical model of the data, certain hypotheses can be deduced from the known behavior of the model. These hypotheses must be tested against sampled data from the population.

The null hypothesis (denoted \(H_0\)) is a statement that is assumed to be true. If the null hypothesis is rejected, then there is enough evidence (statistical significance) to accept the alternate hypothesis (denoted \(H_1\)). Before doing any test for significance, both hypotheses must be clearly stated and must be mutually exclusive statements.

Rejecting the null hypothesis when it is true is called a type I error; its probability is denoted \(\alpha\). Failing to reject the null hypothesis when it is false is called a type II error; its probability is denoted \(\beta\). Also, \(\alpha\) is known as the significance level, and \(1-\beta\) is known as the power of the test.

| Decision | \(H_0\) is true | \(H_0\) is false |
| --- | --- | --- |
| Reject \(H_0\) | Type I error | Correct decision |
| Fail to reject \(H_0\) | Correct decision | Type II error |

The test statistic is the standardized value computed from the sampled data under the assumption that the null hypothesis is true, for a chosen particular test. These tests depend on the statistic to be studied and the distribution it is assumed to follow, e.g. the population mean following a normal distribution.

The \(p\)-value is the probability of observing a test statistic at least as extreme as the one obtained, in the direction of the alternate hypothesis, given that the null hypothesis is true.

The critical value is the value of the assumed distribution of the test statistic beyond which the null hypothesis is rejected; it is chosen so that the probability of making a type I error equals the significance level \(\alpha\).

Methodologies: Given an estimator \(\hat \theta\) of a population parameter \(\theta\), computed from a sample \(\mathcal{S}\), a test statistic following a probability distribution \(P(T)\), and a significance level \(\alpha\), define \(H_0\) and \(H_1\) and compute the observed test statistic \(t^*\).

- \(p\)-value Approach (most prevalent): Find the \(p\)-value using \(t^*\) (right-tailed). If the \(p\)-value is at most \(\alpha,\) reject \(H_0\). Otherwise, fail to reject \(H_0\).

- Critical Value Approach: Find the critical value \(t_\alpha\) solving the equation \(P(T\geq t_\alpha)=\alpha\) (right-tailed). If \(t^*>t_\alpha\), reject \(H_0\). Otherwise, fail to reject \(H_0\).

Note: Failing to reject \(H_0\) only means inability to accept \(H_1\), and it does not mean to accept \(H_0\).
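The two approaches can be sketched side by side for a right-tailed test. The sketch assumes that the test statistic follows a standard normal distribution under \(H_0\); the function name and the returned dictionary are illustrative choices, not part of the original method description.

```python
from statistics import NormalDist


def right_tailed_test(t_star, alpha, null_dist=NormalDist()):
    """Apply both approaches to a right-tailed test.

    null_dist is the assumed distribution of the test statistic under H0.
    """
    p_value = 1.0 - null_dist.cdf(t_star)            # P(T >= t*) under H0
    critical_value = null_dist.inv_cdf(1.0 - alpha)  # solves P(T >= t_alpha) = alpha
    return {
        "p_value": p_value,
        "critical_value": critical_value,
        "reject_by_p_value": p_value <= alpha,
        "reject_by_critical_value": t_star > critical_value,
    }


result = right_tailed_test(t_star=2.0, alpha=0.05)  # hypothetical statistic
print(result)
```

Apart from the boundary case where \(t^*\) equals the critical value exactly, the two approaches lead to the same decision.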

Assume a normally distributed population has recorded cholesterol levels with various statistics computed. From a sample of 100 subjects in the population, the sample mean was 214.12 mg/dL (milligrams per deciliter), with a sample standard deviation of 45.71 mg/dL. Perform a hypothesis test, with significance level 0.05, to test if there is enough evidence to conclude that the population mean is larger than 200 mg/dL.

Hypothesis Test

We will perform a hypothesis test using the \(p\)-value approach with significance level \(\alpha=0.05:\)

- Define \(H_0\): \(\mu=200\).

- Define \(H_1\): \(\mu>200\).

- Since our values are normally distributed, the test statistic is \(z^*=\frac{\bar X - \mu_0}{\frac{s}{\sqrt{n}}}=\frac{214.12 - 200}{\frac{45.71}{\sqrt{100}}}\approx 3.09\).

- Using a standard normal distribution, we find that our \(p\)-value is approximately \(0.001\).

- Since the \(p\)-value is at most \(\alpha=0.05,\) we reject \(H_0\).

Therefore, we can conclude that the test shows sufficient evidence to support the claim that \(\mu\) is larger than \(200\) mg/dL.
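The arithmetic of this example can be reproduced with a short script. This is a minimal sketch using only the Python standard library; the variable names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

# Summary statistics from the example above.
n, xbar, s = 100, 214.12, 45.71
mu0, alpha = 200.0, 0.05

# Test statistic under H0: mu = 200.
z_star = (xbar - mu0) / (s / sqrt(n))

# Right-tailed p-value from the standard normal distribution.
p_value = 1.0 - NormalDist().cdf(z_star)

reject_h0 = p_value <= alpha
print(f"z* = {z_star:.2f}, p-value = {p_value:.4f}, reject H0: {reject_h0}")
```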

If the sample size is smaller, the normal and \(t\)-distributions behave differently, so the \(t\)-distribution should be used. In the next example, the question itself also calls for a two-tailed test instead.

Assume a population's cholesterol levels are recorded and various statistics are computed. From a sample of 25 subjects, the sample mean was 214.12 mg/dL (milligrams per deciliter), with a sample standard deviation of 45.71 mg/dL. Perform a hypothesis test, with significance level 0.05, to test if there is enough evidence to conclude that the population mean is not equal to 200 mg/dL.

Hypothesis Test

We will perform a hypothesis test using the \(p\)-value approach with significance level \(\alpha=0.05\) and the \(t\)-distribution with 24 degrees of freedom:

- Define \(H_0\): \(\mu=200\).

- Define \(H_1\): \(\mu\neq 200\).

- Using the \(t\)-distribution, the test statistic is \(t^*=\frac{\bar X - \mu_0}{\frac{s}{\sqrt{n}}}=\frac{214.12 - 200}{\frac{45.71}{\sqrt{25}}}\approx 1.54\).

- Using a \(t\)-distribution with 24 degrees of freedom, we find that our \(p\)-value is approximately \(2(0.068)=0.136\). We have multiplied by two since this is a two-tailed argument, i.e. the mean can be smaller than or larger than \(200\).

- Since the \(p\)-value is larger than \(\alpha=0.05,\) we fail to reject \(H_0\).

Therefore, the test does not show sufficient evidence to support the claim that \(\mu\) is not equal to \(200\) mg/dL.
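This calculation can also be checked numerically. Python's standard library has no \(t\)-distribution, so the sketch below integrates the \(t\) density with Simpson's rule to approximate the tail probability. The helper functions are written for this illustration only; a statistics package would normally be used in their place.

```python
from math import gamma, pi, sqrt


def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    coef = gamma((df + 1) / 2) / (sqrt(df * pi) * gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def t_upper_tail(t, df, steps=2000):
    """Approximate P(T > t) for t >= 0 using Simpson's rule on [0, t]."""
    h = t / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - total * h / 3  # by symmetry, P(T > 0) = 0.5


# Summary statistics from the example above.
n, xbar, s = 25, 214.12, 45.71
mu0, alpha = 200.0, 0.05

t_star = (xbar - mu0) / (s / sqrt(n))
p_value = 2 * t_upper_tail(abs(t_star), df=n - 1)  # two-tailed

reject_h0 = p_value <= alpha
print(f"t* = {t_star:.2f}, p-value = {p_value:.3f}, reject H0: {reject_h0}")
```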

The complement of the rejection region of a two-tailed hypothesis test (with significance level \(\alpha\)) for a population parameter \(\theta\) corresponds to a confidence interval (with confidence level \(1-\alpha\)) for the population parameter \(\theta\). If the hypothesized value of \(\theta\) falls inside the confidence interval, then the test fails to reject the null hypothesis (with \(p\)-value greater than \(\alpha\)). Otherwise, if the hypothesized value does not fall in the confidence interval, then the null hypothesis is rejected in favor of the alternate (with \(p\)-value at most \(\alpha\)).



Hypothesis: a statement that could be true, which might then be tested.

Example: Sam has a hypothesis that "large dogs are better at catching tennis balls than small dogs". We can test that hypothesis by having hundreds of different sized dogs try to catch tennis balls.

Sometimes the hypothesis won't be tested, it is simply a good explanation (which could be wrong). Conjecture is a better word for this.

Example: you notice the temperature drops just as the sun rises. Your hypothesis is that the sun warms the air high above you, which rises up and then cooler air comes from the sides.

Note: when someone says "I have a theory" they should say "I have a hypothesis", because in mathematics a theory is actually well proven.

9.1 Null and Alternative Hypotheses

The actual test begins by considering two hypotheses . They are called the null hypothesis and the alternative hypothesis . These hypotheses contain opposing viewpoints.

H 0 , the null hypothesis: a statement of no difference between sample means or proportions or no difference between a sample mean or proportion and a population mean or proportion. In other words, the difference equals 0.

H a , the alternative hypothesis: a claim about the population that is contradictory to H 0 and what we conclude when we reject H 0 .

Since the null and alternative hypotheses are contradictory, you must examine evidence to decide if you have enough evidence to reject the null hypothesis or not. The evidence is in the form of sample data.

After you have determined which hypothesis the sample supports, you make a decision. There are two options for a decision: reject H 0 if the sample information favors the alternative hypothesis, or do not reject H 0 (decline to reject H 0 ) if the sample information is insufficient to reject the null hypothesis.

Mathematical Symbols Used in H 0 and H a :

H 0 always has a symbol with an equal in it. H a never has a symbol with an equal in it. The choice of symbol depends on the wording of the hypothesis test. However, be aware that many researchers use = in the null hypothesis, even with > or < as the symbol in the alternative hypothesis. This practice is acceptable because we only make the decision to reject or not reject the null hypothesis.

Example 9.1

- H 0 : No more than 30 percent of the registered voters in Santa Clara County voted in the primary election. p ≤ 0.30

- H a : More than 30 percent of the registered voters in Santa Clara County voted in the primary election. p > 0.30

A medical trial is conducted to test whether or not a new medicine reduces cholesterol by 25 percent. State the null and alternative hypotheses.

Example 9.2

We want to test whether the mean GPA of students in American colleges is different from 2.0 (out of 4.0). The null and alternative hypotheses are the following:

- H 0 : μ = 2.0

- H a : μ ≠ 2.0

We want to test whether the mean height of eighth graders is 66 inches. State the null and alternative hypotheses. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : μ __ 66

- H a : μ __ 66

Example 9.3

We want to test if college students take fewer than five years to graduate from college, on the average. The null and alternative hypotheses are the following:

- H 0 : μ ≥ 5

- H a : μ < 5

We want to test if it takes fewer than 45 minutes to teach a lesson plan. State the null and alternative hypotheses. Fill in the correct symbol ( =, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : μ __ 45

- H a : μ __ 45

Example 9.4

An article on school standards stated that about half of all students in France, Germany, and Israel take advanced placement exams and a third of the students pass. The same article stated that 6.6 percent of U.S. students take advanced placement exams and 4.4 percent pass. Test if the percentage of U.S. students who take advanced placement exams is more than 6.6 percent. State the null and alternative hypotheses.

- H 0 : p ≤ 0.066

- H a : p > 0.066

On a state driver’s test, about 40 percent pass the test on the first try. We want to test if more than 40 percent pass on the first try. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : p __ 0.40

- H a : p __ 0.40

Collaborative Exercise

Bring to class a newspaper, some news magazines, and some internet articles. In groups, find articles from which your group can write null and alternative hypotheses. Discuss your hypotheses with the rest of the class.


This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute Texas Education Agency (TEA). The original material is available at: https://www.texasgateway.org/book/tea-statistics . Changes were made to the original material, including updates to art, structure, and other content updates.

Access for free at https://openstax.org/books/statistics/pages/1-introduction

- Authors: Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Statistics

- Publication date: Mar 27, 2020

- Location: Houston, Texas

- Book URL: https://openstax.org/books/statistics/pages/1-introduction

- Section URL: https://openstax.org/books/statistics/pages/9-1-null-and-alternative-hypotheses

© Jan 23, 2024 Texas Education Agency (TEA). The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


5.2 - Writing Hypotheses

The first step in conducting a hypothesis test is to write the hypothesis statements that are going to be tested. For each test you will have a null hypothesis (\(H_0\)) and an alternative hypothesis (\(H_a\)).

When writing hypotheses there are three things that we need to know: (1) the parameter that we are testing, (2) the direction of the test (non-directional, right-tailed, or left-tailed), and (3) the value of the hypothesized parameter.

- At this point we can write hypotheses for a single mean (\(\mu\)), paired means (\(\mu_d\)), a single proportion (\(p\)), the difference between two independent means (\(\mu_1-\mu_2\)), the difference between two proportions (\(p_1-p_2\)), a simple linear regression slope (\(\beta\)), and a correlation (\(\rho\)).

- The research question will give us the information necessary to determine if the test is two-tailed (e.g., "different from," "not equal to"), right-tailed (e.g., "greater than," "more than"), or left-tailed (e.g., "less than," "fewer than").

- The research question will also give us the hypothesized parameter value. This is the number that goes in the hypothesis statements (i.e., \(\mu_0\) and \(p_0\)). For the difference between two groups, regression, and correlation, this value is typically 0.

Hypotheses are always written in terms of population parameters (e.g., \(p\) and \(\mu\)). The tables below display all of the possible hypotheses for the parameters that we have learned thus far. Note that the null hypothesis always includes the equality (i.e., =).
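The three ingredients above (parameter, direction, hypothesized value) can be sketched as a small helper. This is only an illustration: the function name `write_hypotheses`, the direction labels, and the example value 50 are assumptions made for this sketch, not part of the course material.

```python
# Hypothetical helper: the name, the direction labels, and the example
# value are illustrative assumptions, not from the source text.
ALTERNATIVE_SYMBOL = {
    "two-tailed": "≠",
    "right-tailed": ">",
    "left-tailed": "<",
}


def write_hypotheses(parameter, direction, value):
    """Return (null, alternative) statements; the null always uses '='."""
    alt_symbol = ALTERNATIVE_SYMBOL[direction]
    null = f"H0: {parameter} = {value}"
    alternative = f"Ha: {parameter} {alt_symbol} {value}"
    return null, alternative


# Illustrative research question: "Is the population mean different from 50?"
h0, ha = write_hypotheses("mu", "two-tailed", 50)
print(h0)
print(ha)
```

The null statement is built with "=" in every case, matching the convention stated above that the null hypothesis always includes the equality.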

Hypothesis Testing

Hypothesis testing is a tool for making statistical inferences about a population from sample data. It tests an assumption about the population and determines how likely the observed data would be if that assumption were true, within a given standard of accuracy. Hypothesis testing provides a way to judge whether the results of an experiment reflect a real effect or could be due to chance alone.

A null hypothesis and an alternative hypothesis are set up before performing the hypothesis testing. This helps to arrive at a conclusion regarding the sample obtained from the population. In this article, we will learn more about hypothesis testing, its types, steps to perform the testing, and associated examples.

What is Hypothesis Testing in Statistics?

Hypothesis testing uses sample data from the population to draw useful conclusions regarding the population probability distribution. It tests an assumption made about the data using different types of hypothesis testing methodologies. A hypothesis test results in either rejecting or not rejecting the null hypothesis.

Hypothesis Testing Definition

Hypothesis testing can be defined as a statistical tool that is used to identify if the results of an experiment are meaningful or not. It involves setting up a null hypothesis and an alternative hypothesis. These two hypotheses will always be mutually exclusive. This means that if the null hypothesis is true then the alternative hypothesis is false and vice versa. An example of hypothesis testing is setting up a test to check if a new medicine is more effective against a disease.

Null Hypothesis

The null hypothesis is a concise mathematical statement that is used to indicate that there is no difference between two possibilities. In other words, there is no difference between certain characteristics of data. This hypothesis assumes that the outcomes of an experiment are based on chance alone. It is denoted as \(H_{0}\). Hypothesis testing is used to conclude if the null hypothesis can be rejected or not. Suppose an experiment is conducted to check if girls are shorter than boys at the age of 5. The null hypothesis will say that they are the same height.

Alternative Hypothesis

The alternative hypothesis is an alternative to the null hypothesis. It is used to show that the observations of an experiment are due to some real effect. It states that there is a real difference between the two possibilities being compared and can be denoted as \(H_{1}\) or \(H_{a}\). For the above-mentioned example, the alternative hypothesis would be that girls are shorter than boys at the age of 5.

Hypothesis Testing P Value

In hypothesis testing, the p value is used to indicate whether the results obtained after conducting a test are statistically significant or not. It is the probability, computed under the assumption that the null hypothesis is true, of obtaining a result at least as extreme as the one observed. This value is always a number between 0 and 1. The p value is compared to an alpha level, \(\alpha\), also called the significance level. The alpha level is the acceptable risk of incorrectly rejecting the null hypothesis, and it is usually chosen between 1% and 5%. If the p value is less than or equal to \(\alpha\), the null hypothesis is rejected.
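As a rough illustration of comparing a p value with \(\alpha\), the sketch below converts a z test statistic into a p value using the standard normal distribution from Python's standard library. The function name `p_value_from_z`, the tail labels, and the statistic 2.0 are assumptions chosen for the example.

```python
from statistics import NormalDist


def p_value_from_z(z, tail="right"):
    """p value of a z statistic under the standard normal distribution.

    The function name and the tail labels are illustrative assumptions.
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    if tail == "right":
        return 1.0 - std_normal.cdf(z)
    if tail == "left":
        return std_normal.cdf(z)
    if tail == "two":
        return 2.0 * (1.0 - std_normal.cdf(abs(z)))
    raise ValueError(f"unknown tail: {tail!r}")


alpha = 0.05                              # significance level
p = p_value_from_z(2.0, tail="right")     # made-up test statistic
reject_null = p <= alpha
print(f"p value = {p:.4f}, reject H0: {reject_null}")
```

The same statistic gives a p value twice as large in a two-tailed test, because both tails count as "at least as extreme".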

Hypothesis Testing Critical region

All sets of values that lead to rejecting the null hypothesis lie in the critical region. Furthermore, the value that separates the critical region from the non-critical region is known as the critical value.

Hypothesis Testing Formula

Depending upon the type of data available and the size, different types of hypothesis testing are used to determine whether the null hypothesis can be rejected or not. The hypothesis testing formula for some important test statistics are given below:

- z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\). \(\overline{x}\) is the sample mean, \(\mu\) is the population mean, \(\sigma\) is the population standard deviation and n is the size of the sample.

- t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\). s is the sample standard deviation.

- \(\chi ^{2} = \sum \frac{(O_{i}-E_{i})^{2}}{E_{i}}\). \(O_{i}\) is the observed value and \(E_{i}\) is the expected value.

We will learn more about these test statistics in the upcoming section.
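The three formulas above translate almost directly into code. The following is a minimal sketch using only the standard library; the function names and the sample numbers are made up for illustration.

```python
import math


def z_statistic(sample_mean, pop_mean, pop_sd, n):
    """z = (x_bar - mu) / (sigma / sqrt(n)), population sd known."""
    return (sample_mean - pop_mean) / (pop_sd / math.sqrt(n))


def t_statistic(sample_mean, pop_mean, sample_sd, n):
    """t = (x_bar - mu) / (s / sqrt(n)), sample sd used instead of sigma."""
    return (sample_mean - pop_mean) / (sample_sd / math.sqrt(n))


def chi_square_statistic(observed, expected):
    """Sum of (O_i - E_i)^2 / E_i over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Illustrative inputs only (not taken from the article).
z = z_statistic(sample_mean=52, pop_mean=50, pop_sd=10, n=25)
t = t_statistic(sample_mean=52, pop_mean=50, sample_sd=8, n=16)
chi2 = chi_square_statistic(observed=[18, 22], expected=[20, 20])
print(z, t, chi2)
```

The z and t functions differ only in which standard deviation is passed in; what changes in practice is the distribution used to find the critical value.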

Types of Hypothesis Testing

Selecting the correct test for performing hypothesis testing can be confusing. These tests are used to determine a test statistic on the basis of which the null hypothesis can either be rejected or not rejected. Some of the important tests used for hypothesis testing are given below.

Hypothesis Testing Z Test

A z test is a method of hypothesis testing that is used for a large sample size (n ≥ 30). It is used to determine whether there is a difference between the population mean and the sample mean when the population standard deviation is known. It can also be used to compare the means of two samples. The z test statistic is computed as follows:

- One sample: z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\).

- Two samples: z = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{\sigma_{1}^{2}}{n_{1}}+\frac{\sigma_{2}^{2}}{n_{2}}}}\).
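The two-sample formula can be sketched as follows. The function name, the default hypothesized difference of 0, and the summary statistics are illustrative assumptions; the calculation itself follows the formula above.

```python
import math


def two_sample_z(mean1, mean2, sd1, sd2, n1, n2, hypothesized_diff=0.0):
    """z statistic for the difference between two sample means.

    sd1 and sd2 are the (known) population standard deviations.
    """
    standard_error = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    return ((mean1 - mean2) - hypothesized_diff) / standard_error


# Made-up summary statistics for two large samples.
z = two_sample_z(mean1=105, mean2=100, sd1=15, sd2=20, n1=100, n2=100)
print(z)
```

Under the usual null hypothesis that the two population means are equal, \(\mu_{1}-\mu_{2}\) is 0, which is why the sketch defaults `hypothesized_diff` to 0.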

Hypothesis Testing t Test

The t test is another method of hypothesis testing, used for a small sample size (n < 30). It also compares the sample mean with the population mean, but here the population standard deviation is not known and the sample standard deviation is used in its place. The means of two samples can also be compared using the t test. The formulas are given as follows:

- One sample: t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\).

- Two samples: t = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{s_{1}^{2}}{n_{1}}+\frac{s_{2}^{2}}{n_{2}}}}\).

Hypothesis Testing Chi Square

The Chi square test is a hypothesis testing method that is used to check whether the variables in a population are independent or not. It is used when the test statistic is chi-squared distributed.
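A test of independence on a contingency table might be sketched as below. Several things here are assumptions beyond the text above: the expected count for each cell is taken as (row total × column total) / grand total, the degrees of freedom as (rows − 1)(columns − 1), the observed counts are invented, and 3.841 is the standard chi-square table value for \(\alpha\) = 0.05 with 1 degree of freedom.

```python
def chi_square_independence(table):
    """Return (chi-square statistic, degrees of freedom) for a 2-D table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected

    df = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, df


# Invented 2x2 table of observed counts.
observed = [[30, 10],
            [20, 40]]
chi2, df = chi_square_independence(observed)

CRITICAL_VALUE = 3.841  # table value for alpha = 0.05, df = 1
reject_independence = chi2 > CRITICAL_VALUE
print(f"chi-square = {chi2:.3f}, df = {df}, reject H0: {reject_independence}")
```

The critical value is hard-coded because the Python standard library has no chi-square distribution; a statistics package would normally supply it.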

One Tailed Hypothesis Testing

One tailed hypothesis testing is done when the rejection region is only in one direction. It can also be known as directional hypothesis testing because the effects can be tested in one direction only. This type of testing is further classified into the right tailed test and left tailed test.

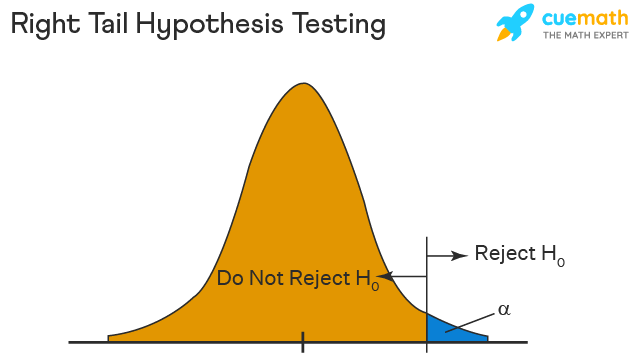

Right Tailed Hypothesis Testing

The right tail test is also known as the upper tail test. This test is used to check whether the population parameter is greater than some value. The null and alternative hypotheses for this test are given as follows:

\(H_{0}\): The population parameter is ≤ some value

\(H_{1}\): The population parameter is > some value.

If the test statistic has a greater value than the critical value, then the null hypothesis is rejected.

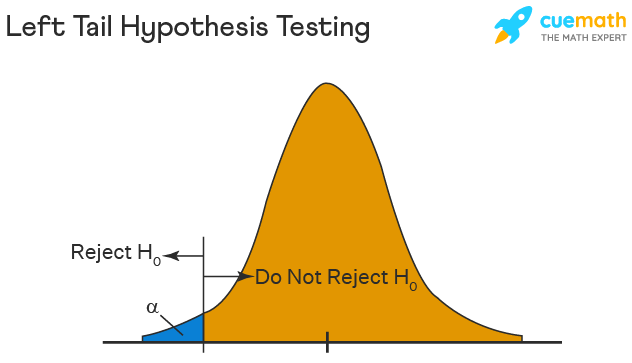

Left Tailed Hypothesis Testing

The left tail test is also known as the lower tail test. It is used to check whether the population parameter is less than some value. The hypotheses for this hypothesis testing can be written as follows:

\(H_{0}\): The population parameter is ≥ some value

\(H_{1}\): The population parameter is < some value.

The null hypothesis is rejected if the test statistic has a value less than the critical value.

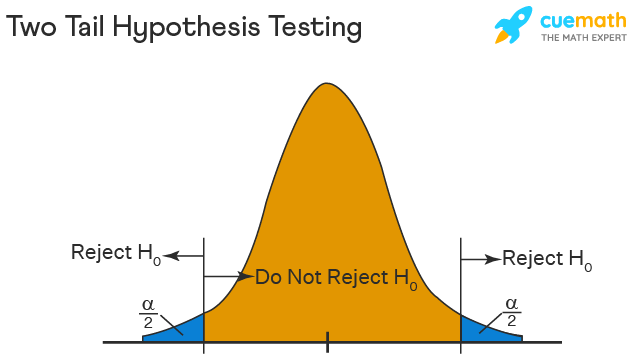

Two Tailed Hypothesis Testing

In this hypothesis testing method, the critical region lies on both sides of the sampling distribution. It is also known as a non-directional hypothesis testing method. The two-tailed test is used to determine whether the population parameter is different from some value. The hypotheses can be set up as follows:

\(H_{0}\): the population parameter = some value

\(H_{1}\): the population parameter ≠ some value

The null hypothesis is rejected if the test statistic falls in either tail, that is, if its absolute value is greater than the critical value.
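The rejection rules for right-tailed, left-tailed, and two-tailed tests can be put side by side in one sketch. It assumes a z test, so critical values come from the standard normal distribution; the function name `reject_null` and the tail labels are illustrative.

```python
from statistics import NormalDist


def reject_null(z, alpha, tail):
    """Decide whether a z statistic falls in the critical region.

    Assumes a z test; the name and tail labels are illustrative.
    """
    std_normal = NormalDist()
    if tail == "right":
        # Critical region: the upper tail with area alpha.
        return z > std_normal.inv_cdf(1 - alpha)
    if tail == "left":
        # Critical region: the lower tail with area alpha.
        return z < std_normal.inv_cdf(alpha)
    if tail == "two":
        # Critical region: both tails, each with area alpha / 2.
        return abs(z) > std_normal.inv_cdf(1 - alpha / 2)
    raise ValueError(f"unknown tail: {tail!r}")


print(reject_null(1.8, 0.05, "right"))  # compared with about 1.645
print(reject_null(1.8, 0.05, "two"))    # compared with about 1.96
```

The same statistic can be significant in a one-tailed test and not in a two-tailed test, because the two-tailed critical value is further from zero.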

Hypothesis Testing Steps

Hypothesis testing can be easily performed in five simple steps. The most important step is to correctly set up the hypotheses and identify the right method for hypothesis testing. The basic steps to perform hypothesis testing are as follows:

- Step 1: Identify whether the test is left-tailed, right-tailed, or two-tailed, and set up the null hypothesis accordingly.

- Step 2: Set up the alternative hypothesis.

- Step 3: Choose the correct significance level, \(\alpha\), and find the critical value.

- Step 4: Calculate the correct test statistic (z, t or \(\chi^{2}\)) and p-value.

- Step 5: Compare the test statistic with the critical value or compare the p-value with \(\alpha\) to arrive at a conclusion. In other words, decide if the null hypothesis is to be rejected or not.

Hypothesis Testing Example

The best way to solve a problem on hypothesis testing is by applying the 5 steps mentioned in the previous section. Suppose a researcher claims that the mean weight of men is greater than 100 kg, with a population standard deviation of 15 kg. A sample of 30 men has a mean weight of 112.5 kg. Using hypothesis testing, check if there is enough evidence to support the researcher's claim. The confidence level is given as 95%.

Step 1: This is an example of a right-tailed test. Set up the null hypothesis as \(H_{0}\): \(\mu\) = 100.

Step 2: The alternative hypothesis is given by \(H_{1}\): \(\mu\) > 100.

Step 3: As this is a one-tailed test, \(\alpha\) = 100% - 95% = 5%. This can be used to determine the critical value.

1 - \(\alpha\) = 1 - 0.05 = 0.95

0.95 gives the required area under the curve. Now using a normal distribution table, the area 0.95 is at z = 1.645. A similar process can be followed for a t-test. The only additional requirement is to calculate the degrees of freedom given by n - 1.

Step 4: Calculate the z test statistic. The z test applies because the sample size is 30 and the population standard deviation is given, along with the sample and population means.

z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\).

\(\mu\) = 100, \(\overline{x}\) = 112.5, n = 30, \(\sigma\) = 15

z = \(\frac{112.5-100}{\frac{15}{\sqrt{30}}}\) = 4.56

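Step 5: Conclusion. As 4.56 > 1.645, the test statistic lies in the critical region, so the null hypothesis is rejected. There is enough evidence to support the researcher's claim.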
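The arithmetic in this example can be checked with a short script. It uses the numbers given in the problem and the standard normal distribution from Python's standard library. The variable names are illustrative, and the p value is an extra check that the worked solution does not compute.

```python
import math
from statistics import NormalDist

# Values taken from the example above.
mu_0 = 100.0    # hypothesized population mean under H0 (kg)
x_bar = 112.5   # sample mean (kg)
sigma = 15.0    # population standard deviation (kg)
n = 30          # sample size
alpha = 0.05    # 100% - 95%

# Step 3: critical value for a right-tailed test.
critical_value = NormalDist().inv_cdf(1 - alpha)

# Step 4: z test statistic and its right-tail p value.
z = (x_bar - mu_0) / (sigma / math.sqrt(n))
p_value = NormalDist().cdf(-z)  # upper-tail area, using symmetry

# Step 5: compare the statistic with the critical value.
reject_h0 = z > critical_value
print(f"z = {z:.2f}, critical value = {critical_value:.3f}, reject H0: {reject_h0}")
```

Comparing `p_value` with `alpha` leads to the same decision as comparing `z` with the critical value.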

Hypothesis Testing and Confidence Intervals

Confidence levels are closely linked to hypothesis testing because the alpha level can be determined from a given confidence level. Suppose the confidence level is given as 95%. Subtract the confidence level from 100%. This gives 100 - 95 = 5% or 0.05. This is the alpha value, and in a one-tailed test the whole of it lies in a single tail. In a two-tailed test, \(\alpha\) is split equally between the two tails, so the area in each tail is 0.05 / 2 = 0.025.
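For a 95% confidence level and a z test, the tail areas and the corresponding cut-offs work out roughly as follows. The critical values are the usual standard normal table values, quoted here for illustration.

\[
1 - 0.95 = 0.05, \qquad z_{0.05} \approx 1.645 \ \text{(one tail of area 0.05)}, \qquad z_{0.025} \approx 1.96 \ \text{(two tails of area 0.025 each)}
\]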

Important Notes on Hypothesis Testing

- Hypothesis testing is a technique that is used to verify whether the results of an experiment are statistically significant.

- It involves the setting up of a null hypothesis and an alternate hypothesis.

- Three types of tests covered here are the z test, t test, and chi square test.

- Hypothesis testing can be classified as right tail, left tail, and two tail tests.

Examples on Hypothesis Testing

- Example 1: The average weight of a dumbbell in a gym is 90 lbs. However, a physical trainer believes that the average weight might be higher. A random sample of 5 dumbbells has an average weight of 110 lbs and a standard deviation of 18 lbs. Using hypothesis testing, check if the physical trainer's claim can be supported at a 95% confidence level.

  Solution: As the sample size is less than 30 and only the sample standard deviation is available, the t-test is used.

  \(H_{0}\): \(\mu\) = 90, \(H_{1}\): \(\mu\) > 90

  \(\overline{x}\) = 110, \(\mu\) = 90, n = 5, s = 18, \(\alpha\) = 0.05

  Using the t-distribution table with n - 1 = 4 degrees of freedom, the critical value is 2.132.

  t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\) = \(\frac{110-90}{\frac{18}{\sqrt{5}}}\) = 2.484

  As 2.484 > 2.132, the null hypothesis is rejected.

  Answer: There is enough evidence to support the claim that the average weight of the dumbbells is greater than 90 lbs.
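The numbers in Example 1 can be verified with a few lines of code. The critical value 2.132 is copied from the example's t-table lookup rather than computed, because the Python standard library has no t distribution. The variable names are illustrative.

```python
import math

# Values taken from Example 1.
mu_0 = 90.0     # hypothesized mean weight (lbs)
x_bar = 110.0   # sample mean (lbs)
s = 18.0        # sample standard deviation (lbs)
n = 5           # sample size

degrees_of_freedom = n - 1
t = (x_bar - mu_0) / (s / math.sqrt(n))

T_CRITICAL = 2.132  # table value quoted in the example (alpha = 0.05, right-tailed)
reject_h0 = t > T_CRITICAL
print(f"t = {t:.3f}, df = {degrees_of_freedom}, reject H0: {reject_h0}")
```

With a statistics package available, the critical value could be looked up from the t distribution with 4 degrees of freedom instead of being hard-coded.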