How to Develop a Questionnaire for Research

Last Updated: December 4, 2022 Fact Checked

This article was co-authored by Alexander Ruiz, M.Ed. Alexander Ruiz is an Educational Consultant and the Educational Director of Link Educational Institute, a tutoring business based in Claremont, California that provides customizable educational plans, subject and test prep tutoring, and college application consulting. With over a decade and a half of experience in the education industry, Alexander coaches students to increase their self-awareness and emotional intelligence while building skills and working toward higher education. He holds a BA in Psychology from Florida International University and an MA in Education from Georgia Southern University. There are 13 references cited in this article, which can be found at the bottom of the page. This article has been fact-checked, ensuring the accuracy of any cited facts and confirming the authority of its sources. This article has been viewed 588,152 times.

A questionnaire is a technique for collecting data in which a respondent provides answers to a series of questions. [1] Developing a questionnaire that collects the data you want takes effort and time. However, by taking a step-by-step approach to questionnaire development, you can come up with an effective means to collect data that will answer your unique research question.

Designing Your Questionnaire

- Come up with a research question. It can be one question or several, but this should be the focal point of your questionnaire.

- Develop one or several hypotheses that you want to test. The questions that you include on your questionnaire should be aimed at systematically testing these hypotheses.

- Dichotomous question: this is a question that will generally be a “yes/no” question, but may also be an “agree/disagree” question. It is the quickest and simplest question to analyze, but is not a highly sensitive measure.

- Open-ended questions: these questions allow the respondent to respond in their own words. They can be useful for gaining insight into the feelings of the respondent, but can be a challenge when it comes to analysis of data. It is recommended to use open-ended questions to address the issue of “why.” [2]

- Multiple choice questions: these questions consist of three or more mutually exclusive categories and ask for a single answer or several answers. [3] Multiple choice questions allow for easy analysis of results, but may not include the answer the respondent wants to give.

- Rank-order (or ordinal) scale questions: this type of question asks your respondent to rank items or choose items in a particular order from a set. For example, it might ask your respondents to order five things from least to most important. This type of question forces discrimination among alternatives, but does not address the issue of why the respondent made these discriminations. [4]

- Rating scale questions: these questions allow the respondent to assess a particular issue based on a given dimension. You can provide a scale that gives an equal number of positive and negative choices, for example, ranging from “strongly agree” to “strongly disagree.” [5] These questions are very flexible, but also do not answer the question “why.”

- Write questions that are succinct and simple. You should not be writing complex statements or using technical jargon, as it will only confuse your respondents and lead to incorrect responses.

- Ask only one question at a time. This will help avoid confusion.

- Asking questions such as these usually requires you to anonymize or encrypt the demographic data you collect.

- Determine if you will include an answer such as “I don’t know” or “Not applicable to me.” While these can give your respondents a way of not answering certain questions, providing these options can also lead to missing data, which can be problematic during data analysis.

- Put the most important questions at the beginning of your questionnaire. [7] This can help you gather important data even if you sense that your respondents may be becoming distracted by the end of the questionnaire.

- Only include questions that are directly useful to your research question. [9] A questionnaire is not an opportunity to collect all kinds of information about your respondents.

- Avoid asking redundant questions. This will frustrate those who are taking your questionnaire.

- Consider if you want your questionnaire to collect information from both men and women. Some studies will only survey one sex.

- Consider including a range of ages in your target demographic. For example, you can consider young adults to be 18-29 years old, adults to be 30-54 years old, and mature adults to be 55+. Providing an age range will help you get more respondents than limiting yourself to a specific age.

- Consider what else would make a person a target for your questionnaire. Do they need to drive a car? Do they need to have health insurance? Do they need to have a child under 3? Make sure you are very clear about this before you distribute your questionnaire.

- Consider an anonymous questionnaire. You may not want to ask for names on your questionnaire. This is one step you can take to protect privacy. However, it is often possible to figure out a respondent’s identity using other demographic information (such as age, physical features, or zip code).

- Consider de-identifying your respondents. Give each questionnaire (and thus, each respondent) a unique number or word, and only refer to them using that new identifier. Shred any personal information that can be used to determine identity.

- Remember that you do not need to collect much demographic information to be able to identify someone. People may be wary of providing this information, so you may get more respondents by asking fewer demographic questions (if it is possible for your questionnaire).

- Make sure you destroy all identifying information after your study is complete.
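The de-identification step above can be sketched in a few lines of Python. This is only a minimal illustration, assuming each response is stored as a dictionary with a hypothetical "name" field; the function name `deidentify` and the field names are made up for the example and are not part of any survey tool.

```python
import secrets

def deidentify(responses):
    """Split responses into de-identified records and a separate ID-to-name key.

    `responses` is a list of dicts with a hypothetical "name" field plus answers.
    """
    records, key = [], {}
    for response in responses:
        respondent_id = secrets.token_hex(4)  # random 8-character identifier
        while respondent_id in key:           # regenerate on the rare collision
            respondent_id = secrets.token_hex(4)
        key[respondent_id] = response["name"]
        # Copy every field except the name into the de-identified record.
        answers = {k: v for k, v in response.items() if k != "name"}
        records.append({"respondent_id": respondent_id, **answers})
    return records, key

records, key = deidentify([
    {"name": "Respondent One", "q1": "yes"},
    {"name": "Respondent Two", "q1": "no"},
])
print(records)  # analysis data: identifiers and answers only
```

In this sketch the `key` mapping is the only link back to a person, so it would be stored apart from the analysis data and destroyed once the study is complete. Remember that other demographic fields left in the records may still identify someone.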

Writing Your Questionnaire

- My name is Jack Smith and I am one of the creators of this questionnaire. I am part of the Department of Psychology at the University of Michigan, where I am focusing on the development of cognition in infants.

- I’m Kelly Smith, a 3rd year undergraduate student at the University of New Mexico. This questionnaire is part of my final exam in statistics.

- My name is Steve Johnson, and I’m a marketing analyst for The Best Company. I’ve been working on questionnaire development to determine attitudes surrounding drug use in Canada for several years.

- I am collecting data regarding the attitudes surrounding gun control. This information is being collected for my Anthropology 101 class at the University of Maryland.

- This questionnaire will ask you 15 questions about your eating and exercise habits. We are attempting to make a correlation between healthy eating, frequency of exercise, and incidence of cancer in mature adults.

- This questionnaire will ask you about your recent experiences with international air travel. There will be three sections of questions that will ask you to recount your recent trips and your feelings surrounding these trips, as well as your travel plans for the future. We are looking to understand how a person’s feelings surrounding air travel impact their future plans.

- Be aware that if you are collecting information for a university or for publication, you may need to check in with your institution’s Institutional Review Board (IRB) for permission before beginning. Most research universities have a dedicated IRB staff, and their information can usually be found on the school’s website.

- Remember that transparency is best. It is important to be honest about what will happen with the data you collect.

- Include an informed consent form if necessary. Note that you cannot guarantee confidentiality, but you will make all reasonable attempts to ensure that you protect their information. [12]

- Time yourself taking the survey. Then consider that it will take some people longer than you, and some people less time than you.

- Provide a time range instead of a specific time. For example, it’s better to say that a survey will take between 15 and 30 minutes than to say it will take 15 minutes and have some respondents quit halfway through.

- Use this as a reason to keep your survey concise! You will feel much better asking people to take a 20 minute survey than you will asking them to take a 3 hour one.

- Incentives can attract the wrong kind of respondent. You don’t want to incorporate responses from people who rush through your questionnaire just to get the reward at the end. This is a danger of offering an incentive. [13]

- Incentives can encourage people to respond to your survey who might not have responded without a reward. This is a situation in which incentives can help you reach your target number of respondents. [14]

- Consider the strategy used by SurveyMonkey. Instead of paying respondents directly, they donate 50 cents to a charity of the respondent’s choice each time a respondent fills out a survey. They feel that this lessens the chances that a respondent will fill out a questionnaire out of pure self-interest. [15]

- Consider entering each respondent into a drawing for a prize if they complete the questionnaire. You can offer a $25 gift card to a restaurant, a new iPod, or a ticket to a movie. This makes it less tempting to respond to your questionnaire for the incentive alone, but still offers the chance of a pleasant reward.

- Always proofread. Check for spelling, grammar, and punctuation errors.

- Include a title. This is a good way for your respondents to understand the focus of the survey as quickly as possible.

- Thank your respondents. Thank them for taking the time and effort to complete your survey.

Distributing Your Questionnaire

- Was the questionnaire easy to understand? Were there any questions that confused you?

- Was the questionnaire easy to access? (Especially important if your questionnaire is online).

- Do you feel the questionnaire was worth your time?

- Were you comfortable answering the questions asked?

- Are there any improvements you would make to the questionnaire?

- Use an online site, such as SurveyMonkey.com. This site allows you to write your own questionnaire with their survey builder, and provides additional options such as the option to buy a target audience and use their analytics to analyze your data. [19]

- Consider using the mail. If you mail your survey, always make sure you include a self-addressed stamped envelope so that the respondent can easily mail their responses back. Make sure that your questionnaire will fit inside a standard business envelope.

- Conduct face-to-face interviews. This can be a good way to ensure that you are reaching your target demographic and can reduce missing information in your questionnaires, as it is more difficult for a respondent to avoid answering a question when you ask it directly.

- Try using the telephone. While this can be a more time-effective way to collect your data, it can be difficult to get people to respond to telephone questionnaires.

- Make your deadline reasonable. Giving respondents up to 2 weeks to answer should be more than sufficient. Anything longer and you risk your respondents forgetting about your questionnaire.

- Consider providing a reminder. A week before the deadline is a good time to provide a gentle reminder about returning the questionnaire. Include a replacement of the questionnaire in case it has been misplaced by your respondent. [20]
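The deadline and reminder advice above amounts to simple date arithmetic. Here is a small sketch, assuming a two-week response window and a reminder one week before the deadline; the function name `schedule` and its parameters are hypothetical and chosen only for illustration.

```python
from datetime import date, timedelta

def schedule(send_date, response_window_days=14, reminder_lead_days=7):
    """Return the response deadline and the reminder date for a questionnaire."""
    deadline = send_date + timedelta(days=response_window_days)
    reminder = deadline - timedelta(days=reminder_lead_days)
    return {"deadline": deadline, "reminder": reminder}

plan = schedule(date(2024, 3, 1))
print("Reminder on:", plan["reminder"].isoformat())
print("Deadline on:", plan["deadline"].isoformat())
```

Both intervals are parameters so that a shorter or longer window can be tried without changing the logic.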

- ↑ https://www.questionpro.com/blog/what-is-a-questionnaire/

- ↑ https://www.hotjar.com/blog/open-ended-questions/

- ↑ https://www.questionpro.com/a/showArticle.do?articleID=survey-questions

- ↑ https://surveysparrow.com/blog/ranking-questions-examples/

- ↑ https://www.lumoa.me/blog/rating-scale/

- ↑ http://www.sciencebuddies.org/science-fair-projects/project_ideas/Soc_survey.shtml

- ↑ http://www.monash.edu.au/lls/hdr/design/2.4.3.html

- ↑ http://www.fao.org/docrep/W3241E/w3241e05.htm

- ↑ http://managementhelp.org/businessresearch/questionaires.htm

- ↑ https://www.surveymonkey.com/mp/survey-rewards/

- ↑ http://www.ideafit.com/fitness-library/how-to-develop-a-questionnaire

- ↑ https://www.surveymonkey.com/mp/take-a-tour/?ut_source=header

About This Article

To develop a questionnaire for research, identify the main objective of your research to act as the focal point for the questionnaire. Then, choose the type of questions that you want to include, and come up with succinct, straightforward questions to gather the information that you need to answer your questions. Keep your questionnaire as short as possible, and identify a target demographic who you would like to answer the questions. Remember to make the questionnaires as anonymous as possible to protect the privacy of the person answering the questions.

Writing Survey Questions

Perhaps the most important part of the survey process is the creation of questions that accurately measure the opinions, experiences and behaviors of the public. Accurate random sampling will be wasted if the information gathered is built on a shaky foundation of ambiguous or biased questions. Creating good measures involves both writing good questions and organizing them to form the questionnaire.

Questionnaire design is a multistage process that requires attention to many details at once. Designing the questionnaire is complicated because surveys can ask about topics in varying degrees of detail, questions can be asked in different ways, and questions asked earlier in a survey may influence how people respond to later questions. Researchers are also often interested in measuring change over time and therefore must be attentive to how opinions or behaviors have been measured in prior surveys.

Surveyors may conduct pilot tests or focus groups in the early stages of questionnaire development in order to better understand how people think about an issue or comprehend a question. Pretesting a survey is an essential step in the questionnaire design process to evaluate how people respond to the overall questionnaire and specific questions, especially when questions are being introduced for the first time.

For many years, surveyors approached questionnaire design as an art, but substantial research over the past forty years has demonstrated that there is a lot of science involved in crafting a good survey questionnaire. Here, we discuss the pitfalls and best practices of designing questionnaires.

Question development

There are several steps involved in developing a survey questionnaire. The first is identifying what topics will be covered in the survey. For Pew Research Center surveys, this involves thinking about what is happening in our nation and the world and what will be relevant to the public, policymakers and the media. We also track opinion on a variety of issues over time so we often ensure that we update these trends on a regular basis to better understand whether people’s opinions are changing.

At Pew Research Center, questionnaire development is a collaborative and iterative process where staff meet to discuss drafts of the questionnaire several times over the course of its development. We frequently test new survey questions ahead of time through qualitative research methods such as focus groups, cognitive interviews, pretesting (often using an online, opt-in sample), or a combination of these approaches. Researchers use insights from this testing to refine questions before they are asked in a production survey, such as on the Center’s American Trends Panel (ATP).

Measuring change over time

Many surveyors want to track changes over time in people’s attitudes, opinions and behaviors. To measure change, questions are asked at two or more points in time. A cross-sectional design surveys different people in the same population at multiple points in time. A panel, such as the ATP, surveys the same people over time. However, it is common for the set of people in survey panels to change over time as new panelists are added and some prior panelists drop out. Many of the questions in Pew Research Center surveys have been asked in prior polls. Asking the same questions at different points in time allows us to report on changes in the overall views of the general public (or a subset of the public, such as registered voters, men or Black Americans), or what we call “trending the data”.

When measuring change over time, it is important to use the same question wording and to be sensitive to where the question is asked in the questionnaire to maintain a similar context as when the question was asked previously (see question wording and question order for further information). All of our survey reports include a topline questionnaire that provides the exact question wording and sequencing, along with results from the current survey and previous surveys in which we asked the question.

The Center’s transition from conducting U.S. surveys by live telephone interviewing to an online panel (around 2014 to 2020) complicated some opinion trends, but not others. Opinion trends that ask about sensitive topics (e.g., personal finances or attending religious services) or that elicited volunteered answers (e.g., “neither” or “don’t know”) over the phone tended to show larger differences than other trends when shifting from phone polls to the online ATP. The Center adopted several strategies for coping with changes to data trends that may be related to this change in methodology. If there is evidence suggesting that a change in a trend stems from switching from phone to online measurement, Center reports flag that possibility for readers to try to head off confusion or erroneous conclusions.

Open- and closed-ended questions

One of the most significant decisions that can affect how people answer questions is whether the question is posed as an open-ended question, where respondents provide a response in their own words, or a closed-ended question, where they are asked to choose from a list of answer choices.

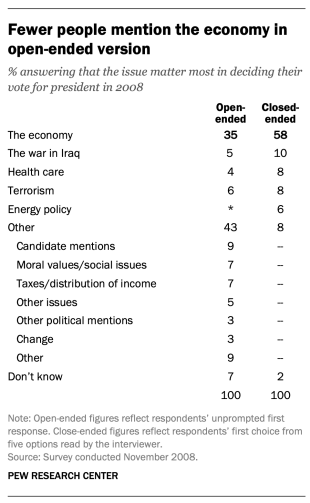

For example, in a poll conducted after the 2008 presidential election, people responded very differently to two versions of the question: “What one issue mattered most to you in deciding how you voted for president?” One was closed-ended and the other open-ended. In the closed-ended version, respondents were provided five options and could volunteer an option not on the list.

When explicitly offered the economy as a response, more than half of respondents (58%) chose this answer; only 35% of those who responded to the open-ended version volunteered the economy. Moreover, among those asked the closed-ended version, fewer than one-in-ten (8%) provided a response other than the five they were read. By contrast, fully 43% of those asked the open-ended version provided a response not listed in the closed-ended version of the question. All of the other issues were chosen at least slightly more often when explicitly offered in the closed-ended version than in the open-ended version. (Also see “High Marks for the Campaign, a High Bar for Obama” for more information.)

Researchers will sometimes conduct a pilot study using open-ended questions to discover which answers are most common. They will then develop closed-ended questions based on that pilot study that include the most common responses as answer choices. In this way, the questions may better reflect what the public is thinking or how they view a particular issue, and may bring to light issues that the researchers were not aware of.

When asking closed-ended questions, the choice of options provided, how each option is described, the number of response options offered, and the order in which options are read can all influence how people respond. One example of the impact of how categories are defined can be found in a Pew Research Center poll conducted in January 2002. When half of the sample was asked whether it was “more important for President Bush to focus on domestic policy or foreign policy,” 52% chose domestic policy while only 34% said foreign policy. When the category “foreign policy” was narrowed to a specific aspect – “the war on terrorism” – far more people chose it; only 33% chose domestic policy while 52% chose the war on terrorism.

In most circumstances, the number of answer choices should be kept to a relatively small number – just four or perhaps five at most – especially in telephone surveys. Psychological research indicates that people have a hard time keeping more than this number of choices in mind at one time. When the question is asking about an objective fact and/or demographics, such as the religious affiliation of the respondent, more categories can be used. In fact, they are encouraged to ensure inclusivity. For example, Pew Research Center’s standard religion questions include more than 12 different categories, beginning with the most common affiliations (Protestant and Catholic). Most respondents have no trouble with this question because they can expect to see their religious group within that list in a self-administered survey.

In addition to the number and choice of response options offered, the order of answer categories can influence how people respond to closed-ended questions. Research suggests that in telephone surveys respondents more frequently choose items heard later in a list (a “recency effect”), and in self-administered surveys, they tend to choose items at the top of the list (a “primacy” effect).

Because of concerns about the effects of category order on responses to closed-ended questions, many sets of response options in Pew Research Center’s surveys are programmed to be randomized to ensure that the options are not asked in the same order for each respondent. Rotating or randomizing means that questions or items in a list are not asked in the same order to each respondent. Answers to questions are sometimes affected by questions that precede them. By presenting questions in a different order to each respondent, we ensure that each question gets asked in the same context as every other question the same number of times (e.g., first, last or any position in between). This does not eliminate the potential impact of previous questions on the current question, but it does ensure that this bias is spread randomly across all of the questions or items in the list. For instance, in the example discussed above about what issue mattered most in people’s vote, the order of the five issues in the closed-ended version of the question was randomized so that no one issue appeared early or late in the list for all respondents. Randomization of response items does not eliminate order effects, but it does ensure that this type of bias is spread randomly.
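To make the idea of randomized answer order concrete, here is a minimal Python sketch. It is not the Center’s survey software; the option labels are hypothetical placeholders, and the function names are made up for this example. Each simulated respondent gets an independently shuffled copy of the list, and the tally shows how often each option lands in first position.

```python
import random
from collections import Counter

# Hypothetical placeholder labels; substitute the real answer choices.
OPTIONS = ["Option A", "Option B", "Option C", "Option D", "Option E"]

def randomized_options(options, rng):
    """Return a shuffled copy of the answer choices for one respondent."""
    shuffled = list(options)  # copy so the master list keeps its order
    rng.shuffle(shuffled)
    return shuffled

def first_position_counts(options, n_respondents, seed=0):
    """Count how often each option is shown first across simulated respondents."""
    rng = random.Random(seed)
    return Counter(randomized_options(options, rng)[0] for _ in range(n_respondents))

print(first_position_counts(OPTIONS, 5000))
```

With enough respondents, each option should appear first about equally often. That is the sense in which any order effect is spread across the options rather than removed.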

Questions with ordinal response categories – those with an underlying order (e.g., excellent, good, only fair, poor OR very favorable, mostly favorable, mostly unfavorable, very unfavorable) – are generally not randomized because the order of the categories conveys important information to help respondents answer the question. Generally, these types of scales should be presented in order so respondents can easily place their responses along the continuum, but the order can be reversed for some respondents. For example, in one of Pew Research Center’s questions about abortion, half of the sample is asked whether abortion should be “legal in all cases, legal in most cases, illegal in most cases, illegal in all cases,” while the other half of the sample is asked the same question with the response categories read in reverse order, starting with “illegal in all cases.” Again, reversing the order does not eliminate the recency effect but distributes it randomly across the population.
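For ordinal scales, the equivalent of randomization is showing the scale forward to some respondents and reversed to others. The sketch below uses the abortion response categories quoted above. The function name and the coin-flip assignment are assumptions for illustration only; a coin flip gives roughly, not exactly, half of respondents each order.

```python
import random

SCALE = [
    "legal in all cases",
    "legal in most cases",
    "illegal in most cases",
    "illegal in all cases",
]

def scale_for_respondent(rng):
    """Return the scale in forward order for about half of respondents, reversed for the rest."""
    if rng.random() < 0.5:
        return list(SCALE)
    return list(reversed(SCALE))

rng = random.Random(42)
for _ in range(3):
    print(scale_for_respondent(rng))
```

The categories always stay in a meaningful sequence, so respondents can still place themselves along the continuum. Only the direction changes.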

Question wording

The choice of words and phrases in a question is critical in expressing the meaning and intent of the question to the respondent and ensuring that all respondents interpret the question the same way. Even small wording differences can substantially affect the answers people provide.


An example of a wording difference that had a significant impact on responses comes from a January 2003 Pew Research Center survey. When people were asked whether they would “favor or oppose taking military action in Iraq to end Saddam Hussein’s rule,” 68% said they favored military action while 25% said they opposed military action. However, when asked whether they would “favor or oppose taking military action in Iraq to end Saddam Hussein’s rule even if it meant that U.S. forces might suffer thousands of casualties,” responses were dramatically different; only 43% said they favored military action, while 48% said they opposed it. The introduction of U.S. casualties altered the context of the question and influenced whether people favored or opposed military action in Iraq.

There has been a substantial amount of research to gauge the impact of different ways of asking questions and how to minimize differences in the way respondents interpret what is being asked. The issues related to question wording are more numerous than can be treated adequately in this short space, but below are a few of the important things to consider:

First, it is important to ask questions that are clear and specific and that each respondent will be able to answer. If a question is open-ended, it should be evident to respondents that they can answer in their own words and what type of response they should provide (an issue or problem, a month, number of days, etc.). Closed-ended questions should include all reasonable responses (i.e., the list of options is exhaustive) and the response categories should not overlap (i.e., response options should be mutually exclusive). Further, it is important to discern when it is best to use forced-choice closed-ended questions (often denoted with a radio button in online surveys) versus “select-all-that-apply” lists (or check-all boxes). A 2019 Center study found that forced-choice questions tend to yield more accurate responses, especially for sensitive questions. Based on that research, the Center generally avoids using select-all-that-apply questions.
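For numeric response categories such as age brackets, the “exhaustive” and “mutually exclusive” requirements can be checked mechanically. The sketch below is one simple way to do that, assuming whole-number, inclusive ranges. The function name and the example brackets are hypothetical, and the check only compares neighbouring ranges after sorting.

```python
def check_ranges(ranges, lowest, highest):
    """Check that inclusive integer (low, high) ranges cover lowest..highest
    with no gaps and no overlaps. Returns a list of problems (empty if none)."""
    problems = []
    ordered = sorted(ranges)
    if ordered[0][0] > lowest:
        problems.append(f"gap before {ordered[0][0]}")
    # Compare each range with the next one in sorted order.
    for (low_a, high_a), (low_b, high_b) in zip(ordered, ordered[1:]):
        if low_b <= high_a:
            problems.append(f"overlap between {low_a}-{high_a} and {low_b}-{high_b}")
        elif low_b > high_a + 1:
            problems.append(f"gap between {high_a} and {low_b}")
    if ordered[-1][1] < highest:
        problems.append(f"gap after {ordered[-1][1]}")
    return problems

# A respondent aged exactly 30 would fit two categories here.
print(check_ranges([(18, 30), (30, 54), (55, 120)], 18, 120))
```

An empty list means every value in the target range falls into exactly one category. Categories that are not numeric still need a careful read by a person.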

It is also important to ask only one question at a time. Questions that ask respondents to evaluate more than one concept (known as double-barreled questions) – such as “How much confidence do you have in President Obama to handle domestic and foreign policy?” – are difficult for respondents to answer and often lead to responses that are difficult to interpret. In this example, it would be more effective to ask two separate questions, one about domestic policy and another about foreign policy.

In general, questions that use simple and concrete language are more easily understood by respondents. It is especially important to consider the education level of the survey population when thinking about how easy it will be for respondents to interpret and answer a question. Double negatives (e.g., do you favor or oppose not allowing gays and lesbians to legally marry) or unfamiliar abbreviations or jargon (e.g., ANWR instead of Arctic National Wildlife Refuge) can result in respondent confusion and should be avoided.

Similarly, it is important to consider whether certain words may be viewed as biased or potentially offensive to some respondents, as well as the emotional reaction that some words may provoke. For example, in a 2005 Pew Research Center survey, 51% of respondents said they favored “making it legal for doctors to give terminally ill patients the means to end their lives,” but only 44% said they favored “making it legal for doctors to assist terminally ill patients in committing suicide.” Although both versions of the question are asking about the same thing, the reaction of respondents was different. In another example, respondents have reacted differently to questions using the word “welfare” as opposed to the more generic “assistance to the poor.” Several experiments have shown that there is much greater public support for expanding “assistance to the poor” than for expanding “welfare.”

We often write two versions of a question and ask half of the survey sample one version of the question and the other half the second version. Thus, we say we have two forms of the questionnaire. Respondents are assigned randomly to receive either form, so we can assume that the two groups of respondents are essentially identical. On questions where two versions are used, significant differences in the answers between the two forms tell us that the difference is a result of the way we worded the two versions.
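The split-form design described above can be sketched as two small functions: one that randomly assigns respondents to forms, and one that compares the share giving a particular answer on each form. The comparison uses a standard pooled two-proportion z statistic, which is an assumption of this sketch rather than the Center’s stated method. It ignores survey weights and design effects, and all counts shown are hypothetical.

```python
import math
import random

def assign_forms(respondent_ids, rng):
    """Randomly split respondents into two forms of (nearly) equal size."""
    shuffled = list(respondent_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"form_1": shuffled[:half], "form_2": shuffled[half:]}

def two_proportion_z(yes_1, n_1, yes_2, n_2):
    """z statistic for the difference between two independent proportions (pooled variance)."""
    p_1, p_2 = yes_1 / n_1, yes_2 / n_2
    pooled = (yes_1 + yes_2) / (n_1 + n_2)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_1 + 1 / n_2))
    return (p_1 - p_2) / standard_error

forms = assign_forms(range(1000), random.Random(7))
print(len(forms["form_1"]), len(forms["form_2"]))

# Hypothetical counts of respondents who favored a policy on each form.
z = two_proportion_z(yes_1=340, n_1=500, yes_2=215, n_2=500)
print(round(z, 2))
```

A z value whose absolute size exceeds about 1.96 is conventionally read as a significant difference at the 5% level. Because assignment was random, such a difference can reasonably be attributed to the wording.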

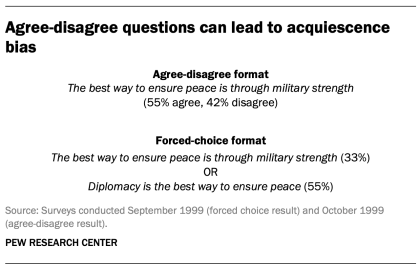

One of the most common formats used in survey questions is the “agree-disagree” format. In this type of question, respondents are asked whether they agree or disagree with a particular statement. Research has shown that, compared with the better educated and better informed, less educated and less informed respondents have a greater tendency to agree with such statements. This is sometimes called an “acquiescence bias” (since some kinds of respondents are more likely to acquiesce to the assertion than are others). This behavior is even more pronounced when there’s an interviewer present, rather than when the survey is self-administered. A better practice is to offer respondents a choice between alternative statements. A Pew Research Center experiment with one of its routinely asked values questions illustrates the difference that question format can make. Not only does the forced choice format yield a very different result overall from the agree-disagree format, but the pattern of answers between respondents with more or less formal education also tends to be very different.

One other challenge in developing questionnaires is what is called “social desirability bias.” People have a natural tendency to want to be accepted and liked, and this may lead people to provide inaccurate answers to questions that deal with sensitive subjects. Research has shown that respondents understate alcohol and drug use, tax evasion and racial bias. They also may overstate church attendance, charitable contributions and the likelihood that they will vote in an election. Researchers attempt to account for this potential bias in crafting questions about these topics. For instance, when Pew Research Center surveys ask about past voting behavior, it is important to note that circumstances may have prevented the respondent from voting: “In the 2012 presidential election between Barack Obama and Mitt Romney, did things come up that kept you from voting, or did you happen to vote?” The choice of response options can also make it easier for people to be honest. For example, a question about church attendance might include three of six response options that indicate infrequent attendance. Research has also shown that social desirability bias can be greater when an interviewer is present (e.g., telephone and face-to-face surveys) than when respondents complete the survey themselves (e.g., paper and web surveys).

Lastly, because slight modifications in question wording can affect responses, identical question wording should be used when the intention is to compare results to those from earlier surveys. Similarly, because question wording and responses can vary based on the mode used to survey respondents, researchers should carefully evaluate the likely effects on trend measurements if a different survey mode will be used to assess change in opinion over time.

Question order

Once the survey questions are developed, particular attention should be paid to how they are ordered in the questionnaire. Surveyors must be attentive to how questions early in a questionnaire may have unintended effects on how respondents answer subsequent questions. Researchers have demonstrated that the order in which questions are asked can influence how people respond; earlier questions can unintentionally provide context for the questions that follow (these effects are called “order effects”).

One kind of order effect can be seen in responses to open-ended questions. Pew Research Center surveys generally ask open-ended questions about national problems, opinions about leaders and similar topics near the beginning of the questionnaire. If closed-ended questions that relate to the topic are placed before the open-ended question, respondents are much more likely to mention concepts or considerations raised in those earlier questions when responding to the open-ended question.

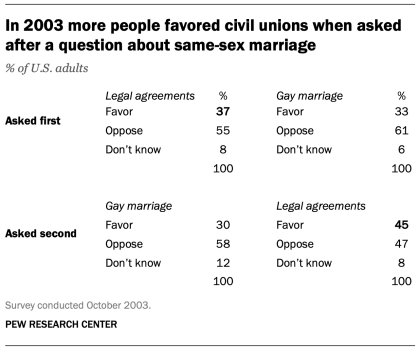

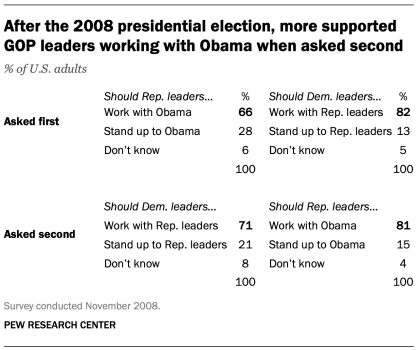

For closed-ended opinion questions, there are two main types of order effects: contrast effects (where the order results in greater differences in responses), and assimilation effects (where responses are more similar as a result of their order).

An example of a contrast effect can be seen in a Pew Research Center poll conducted in October 2003, a dozen years before same-sex marriage was legalized in the U.S. That poll found that people were more likely to favor allowing gays and lesbians to enter into legal agreements that give them the same rights as married couples when this question was asked after one about whether they favored or opposed allowing gays and lesbians to marry (45% favored legal agreements when asked after the marriage question, but 37% favored legal agreements without the immediate preceding context of a question about same-sex marriage). Responses to the question about same-sex marriage, meanwhile, were not significantly affected by its placement before or after the legal agreements question.

Another experiment embedded in a December 2008 Pew Research Center poll also resulted in a contrast effect. When people were asked “All in all, are you satisfied or dissatisfied with the way things are going in this country today?” immediately after having been asked “Do you approve or disapprove of the way George W. Bush is handling his job as president?”, 88% said they were dissatisfied, compared with only 78% without the context of the prior question.

Responses to presidential approval remained relatively unchanged whether national satisfaction was asked before or after it. A similar finding occurred in December 2004 when both satisfaction and presidential approval were much higher (57% were dissatisfied when Bush approval was asked first vs. 51% when general satisfaction was asked first).

Several studies also have shown that asking a more specific question before a more general question (e.g., asking about happiness with one’s marriage before asking about one’s overall happiness) can result in a contrast effect. Although some exceptions have been found, people tend to avoid redundancy by excluding the more specific question from the general rating.

Assimilation effects occur when responses to two questions are more consistent or closer together because of their placement in the questionnaire. We found an example of an assimilation effect in a Pew Research Center poll conducted in November 2008 when we asked whether Republican leaders should work with Obama or stand up to him on important issues and whether Democratic leaders should work with Republican leaders or stand up to them on important issues. People were more likely to say that Republican leaders should work with Obama when the question was preceded by the one asking what Democratic leaders should do in working with Republican leaders (81% vs. 66%). However, when people were first asked about Republican leaders working with Obama, fewer said that Democratic leaders should work with Republican leaders (71% vs. 82%).

The order in which questions are asked is of particular importance when tracking trends over time. As a result, care should be taken to ensure that the context is similar each time a question is asked. Modifying the context of the question could call into question any observed changes over time (see measuring change over time for more information).

A questionnaire, like a conversation, should be grouped by topic and unfold in a logical order. It is often helpful to begin the survey with simple questions that respondents will find interesting and engaging. Throughout the survey, an effort should be made to keep the survey interesting and not overburden respondents with several difficult questions right after one another. Demographic questions such as income, education or age should not be asked near the beginning of a survey unless they are needed to determine eligibility for the survey or for routing respondents through particular sections of the questionnaire. Even then, it is best to precede such items with more interesting and engaging questions. One virtue of survey panels like Pew Research Center’s American Trends Panel (ATP) is that demographic questions usually only need to be asked once a year, not in each survey.
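The ordering guidance above (group by topic, keep eligibility items early, leave other demographics for the end) can be expressed as a simple sort. The sketch below is only one possible encoding: the `Question` class, the `kind` labels and the example items are hypothetical and are not taken from any real questionnaire.

```python
from dataclasses import dataclass


@dataclass
class Question:
    text: str
    topic: str
    kind: str  # "screener", "opinion", or "demographic" (hypothetical labels)


def order_questions(questions):
    """Screeners first, then substantive questions grouped by topic, demographics last."""
    rank = {"screener": 0, "opinion": 1, "demographic": 2}
    # Remember when each topic first appears so topic groups keep the author's order.
    topic_order = {}
    for q in questions:
        topic_order.setdefault(q.topic, len(topic_order))
    # sorted() is stable, so questions within one topic keep their original order.
    return sorted(questions, key=lambda q: (rank[q.kind], topic_order[q.topic]))


draft = [
    Question("What is your age?", "background", "demographic"),
    Question("How satisfied are you with local parks?", "parks", "opinion"),
    Question("Are you a resident of the city?", "eligibility", "screener"),
    Question("How would you rate local roads?", "roads", "opinion"),
    Question("How often do you visit a local park?", "parks", "opinion"),
]

ordered = order_questions(draft)
for q in ordered:
    print(f"[{q.kind}/{q.topic}] {q.text}")
```

A sort like this only handles the mechanical part of ordering. Whether an earlier question creates a contrast or assimilation effect for a later one still has to be judged, and ideally tested, by the researcher.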


ABOUT PEW RESEARCH CENTER Pew Research Center is a nonpartisan fact tank that informs the public about the issues, attitudes and trends shaping the world. It conducts public opinion polling, demographic research, media content analysis and other empirical social science research. Pew Research Center does not take policy positions. It is a subsidiary of The Pew Charitable Trusts.

Copyright 2024 Pew Research Center


Survey Research – Types, Methods, Examples


Survey Research

Definition:

Survey Research is a quantitative research method that involves collecting standardized data from a sample of individuals or groups through the use of structured questionnaires or interviews. The data collected is then analyzed statistically to identify patterns and relationships between variables, and to draw conclusions about the population being studied.

Survey research can be used to answer a variety of questions, including:

- What are people’s opinions about a certain topic?

- What are people’s experiences with a certain product or service?

- What are people’s beliefs about a certain issue?

Survey Research Methods

Survey Research Methods are as follows:

- Telephone surveys: A survey research method where questions are administered to respondents over the phone, often used in market research or political polling.

- Face-to-face surveys: A survey research method where questions are administered to respondents in person, often used in social or health research.

- Mail surveys: A survey research method where questionnaires are sent to respondents through mail, often used in customer satisfaction or opinion surveys.

- Online surveys: A survey research method where questions are administered to respondents through online platforms, often used in market research or customer feedback.

- Email surveys: A survey research method where questionnaires are sent to respondents through email, often used in customer satisfaction or opinion surveys.

- Mixed-mode surveys: A survey research method that combines two or more survey modes, often used to increase response rates or reach diverse populations.

- Computer-assisted surveys: A survey research method that uses computer technology to administer or collect survey data, often used in large-scale surveys or data collection.

- Interactive voice response surveys: A survey research method where respondents answer questions through a touch-tone telephone system, often used in automated customer satisfaction or opinion surveys.

- Mobile surveys: A survey research method where questions are administered to respondents through mobile devices, often used in market research or customer feedback.

- Group-administered surveys: A survey research method where questions are administered to a group of respondents simultaneously, often used in education or training evaluation.

- Web-intercept surveys: A survey research method where questions are administered to website visitors, often used in website or user experience research.

- In-app surveys: A survey research method where questions are administered to users of a mobile application, often used in mobile app or user experience research.

- Social media surveys: A survey research method where questions are administered to respondents through social media platforms, often used in social media or brand awareness research.

- SMS surveys: A survey research method where questions are administered to respondents through text messaging, often used in customer feedback or opinion surveys.

- IVR surveys: A survey research method where questions are administered to respondents through an interactive voice response system, often used in automated customer feedback or opinion surveys.

- Mixed-method surveys: A survey research method that combines both qualitative and quantitative data collection methods, often used in exploratory or mixed-method research.

- Drop-off surveys: A survey research method where respondents are provided with a survey questionnaire and asked to return it at a later time or through a designated drop-off location.

- Intercept surveys: A survey research method where respondents are approached in public places and asked to participate in a survey, often used in market research or customer feedback.

- Hybrid surveys: A survey research method that combines two or more survey modes, data sources, or research methods, often used in complex or multi-dimensional research questions.

Types of Survey Research

There are several types of survey research that can be used to collect data from a sample of individuals or groups. The main types are as follows:

- Cross-sectional survey: A type of survey research that gathers data from a sample of individuals at a specific point in time, providing a snapshot of the population being studied.

- Longitudinal survey: A type of survey research that gathers data from the same sample of individuals over an extended period of time, allowing researchers to track changes or trends in the population being studied.

- Panel survey: A type of longitudinal survey research that tracks the same sample of individuals over time, typically collecting data at multiple points in time.

- Epidemiological survey: A type of survey research that studies the distribution and determinants of health and disease in a population, often used to identify risk factors and inform public health interventions.

- Observational survey: A type of survey research that collects data through direct observation of individuals or groups, often used in behavioral or social research.

- Correlational survey: A type of survey research that measures the degree of association or relationship between two or more variables, often used to identify patterns or trends in data.

- Experimental survey: A type of survey research that involves manipulating one or more variables to observe the effect on an outcome, often used to test causal hypotheses.

- Descriptive survey: A type of survey research that describes the characteristics or attributes of a population or phenomenon, often used in exploratory research or to summarize existing data.

- Diagnostic survey: A type of survey research that assesses the current state or condition of an individual or system, often used in health or organizational research.

- Explanatory survey: A type of survey research that seeks to explain or understand the causes or mechanisms behind a phenomenon, often used in social or psychological research.

- Process evaluation survey: A type of survey research that measures the implementation and outcomes of a program or intervention, often used in program evaluation or quality improvement.

- Impact evaluation survey: A type of survey research that assesses the effectiveness or impact of a program or intervention, often used to inform policy or decision-making.

- Customer satisfaction survey: A type of survey research that measures the satisfaction or dissatisfaction of customers with a product, service, or experience, often used in marketing or customer service research.

- Market research survey: A type of survey research that collects data on consumer preferences, behaviors, or attitudes, often used in market research or product development.

- Public opinion survey: A type of survey research that measures the attitudes, beliefs, or opinions of a population on a specific issue or topic, often used in political or social research.

- Behavioral survey: A type of survey research that measures actual behavior or actions of individuals, often used in health or social research.

- Attitude survey: A type of survey research that measures the attitudes, beliefs, or opinions of individuals, often used in social or psychological research.

- Opinion poll: A type of survey research that measures the opinions or preferences of a population on a specific issue or topic, often used in political or media research.

- Ad hoc survey: A type of survey research that is conducted for a specific purpose or research question, often used in exploratory research or to answer a specific research question.

Types Based on Methodology

Based on methodology, surveys are divided into two types: quantitative survey research and qualitative survey research.

Quantitative survey research is a method of collecting numerical data from a sample of participants through the use of standardized surveys or questionnaires. The purpose of quantitative survey research is to gather empirical evidence that can be analyzed statistically to draw conclusions about a particular population or phenomenon.

In quantitative survey research, the questions are structured and pre-determined, often utilizing closed-ended questions, where participants are given a limited set of response options to choose from. This approach allows for efficient data collection and analysis, as well as the ability to generalize the findings to a larger population.

Quantitative survey research is often used in market research, social sciences, public health, and other fields where numerical data is needed to make informed decisions and recommendations.

Qualitative survey research is a method of collecting non-numerical data from a sample of participants through the use of open-ended questions or semi-structured interviews. The purpose of qualitative survey research is to gain a deeper understanding of the experiences, perceptions, and attitudes of participants towards a particular phenomenon or topic.

In qualitative survey research, the questions are open-ended, allowing participants to share their thoughts and experiences in their own words. This approach allows for a rich and nuanced understanding of the topic being studied, and can provide insights that are difficult to capture through quantitative methods alone.

Qualitative survey research is often used in social sciences, education, psychology, and other fields where a deeper understanding of human experiences and perceptions is needed to inform policy, practice, or theory.
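The practical difference between closed-ended and open-ended questions shows up as soon as responses are stored. The following is a minimal sketch under assumed names (`SurveyQuestion`, `record`) with made-up example answers: closed-ended answers are checked against a fixed option list and can be counted directly, while open-ended answers are kept as free text for later coding.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class SurveyQuestion:
    prompt: str
    options: list = field(default_factory=list)  # empty list marks an open-ended question

    @property
    def is_closed_ended(self):
        return len(self.options) > 0

    def record(self, answer):
        """Return a cleaned answer, rejecting values outside a closed-ended option set."""
        if self.is_closed_ended and answer not in self.options:
            raise ValueError(f"{answer!r} is not one of the response options")
        return answer.strip()


# Hypothetical items and answers, for illustration only.
closed = SurveyQuestion(
    "How often do you use the internet per day?",
    ["Less than 1 hour", "1-3 hours", "3-5 hours", "5-7 hours", "More than 7 hours"],
)
open_ended = SurveyQuestion("Any additional comments or suggestions?")

closed_answers = [closed.record(a) for a in ["1-3 hours", "3-5 hours", "1-3 hours"]]
open_answers = [open_ended.record(a) for a in ["  Shorter surveys, please. ", "None"]]

# Closed-ended answers can be tallied directly; open-ended text needs coding first.
tally = Counter(closed_answers)
print(tally.most_common())
print(open_answers)
```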

Data Analysis Methods

There are several Survey Research Data Analysis Methods that researchers may use, including:

- Descriptive statistics: This method is used to summarize and describe the basic features of the survey data, such as the mean, median, mode, and standard deviation. These statistics can help researchers understand the distribution of responses and identify any trends or patterns.

- Inferential statistics: This method is used to make inferences about the larger population based on the data collected in the survey. Common inferential statistical methods include hypothesis testing, regression analysis, and correlation analysis.

- Factor analysis: This method is used to identify underlying factors or dimensions in the survey data. This can help researchers simplify the data and identify patterns and relationships that may not be immediately apparent.

- Cluster analysis: This method is used to group similar respondents together based on their survey responses. This can help researchers identify subgroups within the larger population and understand how different groups may differ in their attitudes, behaviors, or preferences.

- Structural equation modeling: This method is used to test complex relationships between variables in the survey data. It can help researchers understand how different variables may be related to one another and how they may influence one another.

- Content analysis: This method is used to analyze open-ended responses in the survey data. Researchers may use software to identify themes or categories in the responses, or they may manually review and code the responses.

- Text mining: This method is used to analyze text-based survey data, such as responses to open-ended questions. Researchers may use software to identify patterns and themes in the text, or they may manually review and code the text.
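As a small illustration of the first method in the list, descriptive statistics for a single rating item can be computed with Python's standard library. The ratings below are invented for the example, and a real analysis would typically also handle missing answers and survey weights.

```python
import statistics
from collections import Counter

# Hypothetical responses to a 5-point satisfaction item (1 = very poor, 5 = excellent).
ratings = [4, 5, 3, 4, 2, 5, 4, 3, 4, 1]

summary = {
    "mean": statistics.mean(ratings),
    "median": statistics.median(ratings),
    "mode": statistics.mode(ratings),
    "stdev": statistics.stdev(ratings),  # sample standard deviation
}

# Frequency distribution: how many respondents chose each rating.
frequencies = Counter(ratings)

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
for rating in sorted(frequencies):
    print(f"rating {rating}: {frequencies[rating]} responses")
```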

Applications of Survey Research

Here are some common applications of survey research:

- Market Research: Companies use survey research to gather insights about customer needs, preferences, and behavior. These insights are used to create marketing strategies and develop new products.

- Public Opinion Research: Governments and political parties use survey research to understand public opinion on various issues. This information is used to develop policies and make decisions.

- Social Research: Survey research is used in social research to study social trends, attitudes, and behavior. Researchers use survey data to explore topics such as education, health, and social inequality.

- Academic Research: Survey research is used in academic research to study various phenomena. Researchers use survey data to test theories, explore relationships between variables, and draw conclusions.

- Customer Satisfaction Research: Companies use survey research to gather information about customer satisfaction with their products and services. This information is used to improve customer experience and retention.

- Employee Surveys: Employers use survey research to gather feedback from employees about their job satisfaction, working conditions, and organizational culture. This information is used to improve employee retention and productivity.

- Health Research: Survey research is used in health research to study topics such as disease prevalence, health behaviors, and healthcare access. Researchers use survey data to develop interventions and improve healthcare outcomes.

Examples of Survey Research

Here are some real-world examples of survey research:

- COVID-19 Pandemic Surveys: Since the outbreak of the COVID-19 pandemic, surveys have been conducted to gather information about public attitudes, behaviors, and perceptions related to the pandemic. Governments and healthcare organizations have used this data to develop public health strategies and messaging.

- Political Polls During Elections: During election seasons, surveys are used to measure public opinion on political candidates, policies, and issues in real-time. This information is used by political parties to develop campaign strategies and make decisions.

- Customer Feedback Surveys: Companies often use real-time customer feedback surveys to gather insights about customer experience and satisfaction. This information is used to improve products and services quickly.

- Event Surveys: Organizers of events such as conferences and trade shows often use surveys to gather feedback from attendees in real-time. This information can be used to improve future events and make adjustments during the current event.

- Website and App Surveys: Website and app owners use surveys to gather real-time feedback from users about the functionality, user experience, and overall satisfaction with their platforms. This feedback can be used to improve the user experience and retain customers.

- Employee Pulse Surveys: Employers use real-time pulse surveys to gather feedback from employees about their work experience and overall job satisfaction. This feedback is used to make changes in real-time to improve employee retention and productivity.

Purpose of Survey Research

The purpose of survey research is to gather data and insights from a representative sample of individuals. Survey research allows researchers to collect data quickly and efficiently from a large number of people, making it a valuable tool for understanding attitudes, behaviors, and preferences.

Here are some common purposes of survey research:

- Descriptive Research: Survey research is often used to describe characteristics of a population or a phenomenon. For example, a survey could be used to describe the characteristics of a particular demographic group, such as age, gender, or income.

- Exploratory Research: Survey research can be used to explore new topics or areas of research. Exploratory surveys are often used to generate hypotheses or identify potential relationships between variables.

- Explanatory Research: Survey research can be used to explain relationships between variables. For example, a survey could be used to determine whether there is a relationship between educational attainment and income.

- Evaluation Research: Survey research can be used to evaluate the effectiveness of a program or intervention. For example, a survey could be used to evaluate the impact of a health education program on behavior change.

- Monitoring Research: Survey research can be used to monitor trends or changes over time. For example, a survey could be used to monitor changes in attitudes towards climate change or political candidates over time.

When to use Survey Research

There are certain circumstances where survey research is particularly appropriate. Here are some situations where survey research may be useful:

- When the research question involves attitudes, beliefs, or opinions: Survey research is particularly useful for understanding attitudes, beliefs, and opinions on a particular topic. For example, a survey could be used to understand public opinion on a political issue.

- When the research question involves behaviors or experiences: Survey research can also be useful for understanding behaviors and experiences. For example, a survey could be used to understand the prevalence of a particular health behavior.

- When a large sample size is needed: Survey research allows researchers to collect data from a large number of people quickly and efficiently. This makes it a useful method when a large sample size is needed to ensure statistical validity.

- When the research question is time-sensitive: Survey research can be conducted quickly, which makes it a useful method when the research question is time-sensitive. For example, a survey could be used to understand public opinion on a breaking news story.

- When the research question involves a geographically dispersed population: Survey research can be conducted online, which makes it a useful method when the population of interest is geographically dispersed.

How to Conduct Survey Research

Conducting survey research involves several steps that need to be carefully planned and executed. Here is a general overview of the process:

- Define the research question: The first step in conducting survey research is to clearly define the research question. The research question should be specific, measurable, and relevant to the population of interest.

- Develop a survey instrument: The next step is to develop a survey instrument. This can be done using various methods, such as online survey tools or paper surveys. The survey instrument should be designed to elicit the information needed to answer the research question, and should be pre-tested with a small sample of individuals.

- Select a sample: The sample is the group of individuals who will be invited to participate in the survey. The sample should be representative of the population of interest, and the size of the sample should be sufficient to ensure statistical validity.

- Administer the survey: The survey can be administered in various ways, such as online, by mail, or in person. The method of administration should be chosen based on the population of interest and the research question.

- Analyze the data: Once the survey data is collected, it needs to be analyzed. This involves summarizing the data using statistical methods, such as frequency distributions or regression analysis.

- Draw conclusions: The final step is to draw conclusions based on the data analysis. This involves interpreting the results and answering the research question.
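The sampling step in the list above can be made concrete with a short sketch. It assumes a simple random sample from a complete list of the population, uses the usual normal-approximation formulas for a proportion, and ignores the finite population correction and any weighting. The function names and the population of 10,000 IDs are hypothetical.

```python
import math
import random


def draw_simple_random_sample(population, n, seed=0):
    """Draw n distinct members of the population, each equally likely to be chosen."""
    rng = random.Random(seed)
    return rng.sample(population, n)


def margin_of_error(p, n, z=1.96):
    """Approximate margin of error for a proportion p at about 95% confidence."""
    return z * math.sqrt(p * (1 - p) / n)


def required_sample_size(margin, p=0.5, z=1.96):
    """Smallest n whose margin of error is at most `margin` (p=0.5 is the worst case)."""
    return math.ceil(z**2 * p * (1 - p) / margin**2)


population = list(range(10_000))  # hypothetical list of respondent IDs
sample = draw_simple_random_sample(population, 1_000)

moe = margin_of_error(0.5, len(sample))
needed = required_sample_size(0.03)
print(f"Sample of {len(sample)}: margin of error about {moe:.3f}")
print(f"Respondents needed for a 3-point margin: {needed}")
```

Choosing `p = 0.5` gives the most conservative sample size, which is why it is a common default when the true proportion is unknown.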

Advantages of Survey Research

There are several advantages to using survey research, including:

- Efficient data collection: Survey research allows researchers to collect data quickly and efficiently from a large number of people. This makes it a useful method for gathering information on a wide range of topics.

- Standardized data collection: Surveys are typically standardized, which means that all participants receive the same questions in the same order. This ensures that the data collected is consistent and reliable.

- Cost-effective: Surveys can be conducted online, by mail, or in person, which makes them a cost-effective method of data collection.

- Anonymity: Participants can remain anonymous when responding to a survey. This can encourage participants to be more honest and open in their responses.

- Easy comparison: Surveys allow for easy comparison of data between different groups or over time. This makes it possible to identify trends and patterns in the data.

- Versatility: Surveys can be used to collect data on a wide range of topics, including attitudes, beliefs, behaviors, and preferences.

Limitations of Survey Research

Here are some of the main limitations of survey research:

- Limited depth: Surveys are typically designed to collect quantitative data, which means that they do not provide much depth or detail about people’s experiences or opinions. This can limit the insights that can be gained from the data.

- Potential for bias: Surveys can be affected by various biases, including selection bias, response bias, and social desirability bias. These biases can distort the results and make them less accurate.

- Limited validity: Surveys are only as valid as the questions they ask. If the questions are poorly designed or ambiguous, the results may not accurately reflect the respondents’ attitudes or behaviors.

- Limited generalizability: Survey results are only generalizable to the population from which the sample was drawn. If the sample is not representative of the population, the results may not be generalizable to the larger population.

- Limited ability to capture context: Surveys typically do not capture the context in which attitudes or behaviors occur. This can make it difficult to understand the reasons behind the responses.

- Limited ability to capture complex phenomena: Surveys are not well-suited to capture complex phenomena, such as emotions or the dynamics of interpersonal relationships.

Following is an example of a Survey Sample:

Welcome to our Survey Research Page! We value your opinions and appreciate your participation in this survey. Please answer the questions below as honestly and thoroughly as possible.

1. What is your age?

- A) Under 18

- G) 65 or older

2. What is your highest level of education completed?

- A) Less than high school

- B) High school or equivalent

- C) Some college or technical school

- D) Bachelor’s degree

- E) Graduate or professional degree

3. What is your current employment status?

- A) Employed full-time

- B) Employed part-time

- C) Self-employed

- D) Unemployed

4. How often do you use the internet per day?

- A) Less than 1 hour

- B) 1-3 hours

- C) 3-5 hours

- D) 5-7 hours

- E) More than 7 hours

5. How often do you engage in social media per day?

6. Have you ever participated in a survey research study before?

7. If you have participated in a survey research study before, how was your experience?

- A) Excellent

- E) Very poor

8. What are some of the topics that you would be interested in participating in a survey research study about?

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

9. How often would you be willing to participate in survey research studies?

- A) Once a week

- B) Once a month

- C) Once every 6 months

- D) Once a year

10. Any additional comments or suggestions?

Thank you for taking the time to complete this survey. Your feedback is important to us and will help us improve our survey research efforts.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Questionnaire Design | Methods, Question Types & Examples

Published on 6 May 2022 by Pritha Bhandari. Revised on 10 October 2022.

A questionnaire is a list of questions or items used to gather data from respondents about their attitudes, experiences, or opinions. Questionnaires can be used to collect quantitative and/or qualitative information.

Questionnaires are commonly used in market research as well as in the social and health sciences. For example, a company may ask for feedback about a recent customer service experience, or psychology researchers may investigate health risk perceptions using questionnaires.

Table of contents

- Questionnaires vs surveys

- Questionnaire methods

- Open-ended vs closed-ended questions

- Question wording

- Question order

- Step-by-step guide to design

- Frequently asked questions about questionnaire design

A survey is a research method where you collect and analyse data from a group of people. A questionnaire is a specific tool or instrument for collecting the data.

Designing a questionnaire means creating valid and reliable questions that address your research objectives, placing them in a useful order, and selecting an appropriate method for administration.

But designing a questionnaire is only one component of survey research. Survey research also involves defining the population you’re interested in, choosing an appropriate sampling method, administering questionnaires, data cleaning and analysis, and interpretation.

Sampling is important in survey research because you’ll often aim to generalise your results to the population. For externally valid results, gather data from a sample that represents the range of views in the population. There will always be some differences between the population and the sample, but minimising these will help you avoid sampling bias.
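As a rough illustration of drawing a sample, the sketch below uses simple random sampling, which is only one of several probability sampling methods and is an assumption here rather than a recommendation from this guide. The sampling frame of 1,000 respondent IDs and the function name `draw_simple_random_sample` are hypothetical.

```python
import random


def draw_simple_random_sample(population_ids, sample_size, seed=None):
    """Return `sample_size` distinct IDs chosen uniformly at random."""
    population = list(population_ids)
    if sample_size > len(population):
        raise ValueError("sample_size cannot exceed the population size")
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(population, sample_size)


# Hypothetical sampling frame: respondent IDs 1 to 1000.
frame = range(1, 1001)
invited = draw_simple_random_sample(frame, 50, seed=42)
```

Every ID in the frame has the same chance of being invited, which reduces the risk of sampling bias, although it does nothing about bias that arises later if some invited people do not respond.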


Questionnaires can be self-administered or researcher-administered. Self-administered questionnaires are more common because they are easy to implement and inexpensive, but researcher-administered questionnaires allow deeper insights.

Self-administered questionnaires

Self-administered questionnaires can be delivered online or in paper-and-pen formats, in person or by post. All questions are standardised so that all respondents receive the same questions with identical wording.

Self-administered questionnaires can be:

- Cost-effective

- Easy to administer for small and large groups

- Anonymous and suitable for sensitive topics

But they may also be:

- Unsuitable for people with limited literacy or verbal skills

- Susceptible to nonresponse bias (most people invited may not complete the questionnaire)

- Biased towards people who volunteer because impersonal survey requests often go ignored

Researcher-administered questionnaires

Researcher-administered questionnaires are interviews that take place by phone, in person, or online between researchers and respondents.

Researcher-administered questionnaires can:

- Help you ensure the respondents are representative of your target audience

- Allow clarifications of ambiguous or unclear questions and answers

- Have high response rates because it’s harder to refuse an interview when personal attention is given to respondents

But researcher-administered questionnaires can be limiting in terms of resources. They are:

- Costly and time-consuming to perform

- More difficult to analyse if you have qualitative responses

- Likely to contain experimenter bias or demand characteristics

- Likely to encourage social desirability bias in responses because of a lack of anonymity

Your questionnaire can include open-ended or closed-ended questions, or a combination of both.

Using closed-ended questions limits your responses, while open-ended questions enable a broad range of answers. You’ll need to balance these considerations with your available time and resources.

Closed-ended questions

Closed-ended, or restricted-choice, questions offer respondents a fixed set of choices to select from. Closed-ended questions are best for collecting data on categorical or quantitative variables.

Categorical variables can be nominal or ordinal. Quantitative variables can be interval or ratio. Understanding the type of variable and level of measurement means you can perform appropriate statistical analyses for generalisable results.

Examples of closed-ended questions for different variables

Nominal variables include categories that can’t be ranked, such as race or ethnicity. This includes binary or dichotomous categories.

It’s best to include categories that cover all possible answers and are mutually exclusive. There should be no overlap between response items.
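For numeric response brackets, both requirements can be checked mechanically. The sketch below is a minimal example, assuming inclusive whole-number brackets that fall inside a known range; the function name `check_brackets` and the example brackets (hours per day) are hypothetical.

```python
def check_brackets(brackets, lowest, highest):
    """Report overlaps and gaps in inclusive integer response brackets.

    `brackets` is a list of (low, high) pairs, both ends inclusive, and is
    assumed to lie within the range `lowest`..`highest`.
    """
    problems = []
    covered_up_to = lowest - 1  # highest value covered so far
    for low, high in sorted(brackets):
        if low <= covered_up_to:
            problems.append(f"overlap: {low}-{high} repeats values up to {covered_up_to}")
        elif low > covered_up_to + 1:
            problems.append(f"gap: {covered_up_to + 1}-{low - 1} is not covered")
        covered_up_to = max(covered_up_to, high)
    if covered_up_to < highest:
        problems.append(f"gap: {covered_up_to + 1}-{highest} is not covered")
    return problems


# Hypothetical brackets for "hours per day" (0 to 24).
overlapping = [(0, 1), (1, 3), (3, 5), (5, 7), (7, 24)]  # shared boundaries
clean = [(0, 0), (1, 2), (3, 4), (5, 6), (7, 24)]

issues_in_overlapping = check_brackets(overlapping, 0, 24)
issues_in_clean = check_brackets(clean, 0, 24)
```

An empty list means every value in the range belongs to exactly one bracket. Non-numeric categories still need a manual review, since overlap between labels is a matter of meaning rather than arithmetic.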

In binary or dichotomous questions, you’ll give respondents only two options to choose from.

- White

- Black or African American

- American Indian or Alaska Native

- Asian

- Native Hawaiian or Other Pacific Islander

Ordinal variables include categories that can be ranked. Consider how wide or narrow a range you’ll include in your response items, and their relevance to your respondents.

Likert-type questions collect ordinal data using rating scales with five or seven points.

When you have four or more Likert-type questions, you can treat the composite data as quantitative data on an interval scale. Intelligence tests, psychological scales, and personality inventories use multiple Likert-type questions to collect interval data.
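One common way to build such a composite is to average a respondent's ratings across the items. The sketch below assumes a five-point scale and, as an extra assumption not stated above, flips any negatively worded (reverse-keyed) items first so that a high score always points in the same direction. The item names and the function `composite_score` are hypothetical.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # assumed five-point rating scale


def composite_score(responses, reverse_keyed=()):
    """Average one respondent's Likert-type items into a composite score.

    `responses` maps item name -> rating. Items named in `reverse_keyed`
    are flipped (1 becomes 5, 2 becomes 4, and so on) before averaging.
    """
    adjusted = []
    for item, rating in responses.items():
        if not SCALE_MIN <= rating <= SCALE_MAX:
            raise ValueError(f"{item}: rating {rating} is outside the scale")
        if item in reverse_keyed:
            rating = SCALE_MIN + SCALE_MAX - rating
        adjusted.append(rating)
    return mean(adjusted)


# Hypothetical answers to four items; q3 is negatively worded.
answers = {"q1": 4, "q2": 5, "q3": 2, "q4": 4}
score = composite_score(answers, reverse_keyed={"q3"})  # (4 + 5 + 4 + 4) / 4 = 4.25
```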

With interval or ratio data, you can apply strong statistical hypothesis tests to address your research aims.

Pros and cons of closed-ended questions

Well-designed closed-ended questions are easy to understand and can be answered quickly. However, you might still miss important answers that are relevant to respondents. An incomplete set of response items may force some respondents to pick the closest alternative to their true answer. These types of questions may also miss out on valuable detail.

To solve these problems, you can make questions partially closed-ended, and include an open-ended option where respondents can fill in their own answer.

Open-ended questions

Open-ended, or long-form, questions allow respondents to give answers in their own words. Because there are no restrictions on their choices, respondents can answer in ways that researchers may not have otherwise considered. For example, respondents may want to answer ‘multiracial’ for the question on race rather than selecting from a restricted list.

- How do you feel about open science?

- How would you describe your personality?

- In your opinion, what is the biggest obstacle to productivity in remote work?

Open-ended questions have a few downsides.

They require more time and effort from respondents, which may deter them from completing the questionnaire.

For researchers, understanding and summarising responses to these questions can take a lot of time and resources. You’ll need to develop a systematic coding scheme to categorise answers, and you may also need to involve other researchers in data analysis for high reliability.
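When two researchers code the same open-ended answers, their agreement can be summarised with a statistic such as Cohen's kappa, which corrects raw agreement for the agreement expected by chance. Choosing kappa is an assumption here; the guide does not name a specific reliability measure. The category labels and coder data below are made up for illustration.

```python
from collections import Counter


def cohens_kappa(codes_a, codes_b):
    """Cohen's kappa for two coders who categorised the same answers."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both coders must rate the same non-empty set of answers")
    n = len(codes_a)
    # Proportion of answers on which the two coders agree.
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # Agreement expected by chance, from each coder's category frequencies.
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both coders used one identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned to six answers by two coders.
coder_1 = ["cost", "time", "cost", "other", "time", "cost"]
coder_2 = ["cost", "time", "time", "other", "time", "cost"]
kappa = cohens_kappa(coder_1, coder_2)  # 17/23, roughly 0.74
```

A value near 1 suggests the coding scheme is being applied consistently, while a low value usually means the category definitions need to be tightened before the full dataset is coded.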

Question wording can influence your respondents’ answers, especially if the language is unclear, ambiguous, or biased. Good questions need to be understood by all respondents in the same way (reliable) and measure exactly what you’re interested in (valid).

Use clear language

You should design questions with your target audience in mind. Consider their familiarity with your questionnaire topics and language and tailor your questions to them.

For readability and clarity, avoid jargon or overly complex language. Don’t use double negatives because they can be harder to understand.

Use balanced framing

Respondents often answer in different ways depending on the question framing. Positive frames are interpreted as more neutral than negative frames and may encourage more socially desirable answers.

Use a mix of both positive and negative frames to avoid bias, and ensure that your question wording is balanced wherever possible.

Unbalanced questions focus on only one side of an argument. Respondents may be less likely to oppose the question if it is framed in a particular direction. It’s best practice to provide a counterargument within the question as well.

Avoid leading questions

Leading questions guide respondents towards answering in specific ways, even if that’s not how they truly feel, by explicitly or implicitly providing them with extra information.

It’s best to keep your questions short and specific to your topic of interest.

- The average daily work commute in the US takes 54.2 minutes and costs $29 per day. Since 2020, working from home has saved many employees time and money. Do you favour flexible work-from-home policies even after it’s safe to return to offices?

- Experts agree that a well-balanced diet provides sufficient vitamins and minerals, and multivitamins and supplements are not necessary or effective. Do you agree or disagree that multivitamins are helpful for balanced nutrition?

Keep your questions focused

Ask about only one idea at a time and avoid double-barrelled questions. Double-barrelled questions ask about more than one item at a time, which can confuse respondents.

This question could be difficult to answer for respondents who feel strongly about the right to clean drinking water but not high-speed internet. They might answer only about the topic they feel passionate about or provide a neutral answer instead, but neither of these options captures their true answer.

Instead, you should ask two separate questions to gauge respondents’ opinions.

- Strongly Agree

- Agree

- Undecided

- Disagree

- Strongly Disagree

Do you agree or disagree that the government should be responsible for providing high-speed internet to everyone?

You can organise the questions logically, with a clear progression from simple to complex. Alternatively, you can randomise the question order between respondents.

Logical flow

Using a logical flow to your question order means starting with simple questions, such as behavioural or opinion questions, and ending with more complex, sensitive, or controversial questions.

The question order that you use can significantly affect responses by priming respondents in specific directions. Question order effects, or context effects, occur when earlier questions influence the responses to later questions, reducing the validity of your questionnaire.

While demographic questions are usually unaffected by order effects, questions about opinions and attitudes are more susceptible to them.

- How knowledgeable are you about Joe Biden’s executive orders in his first 100 days?

- Are you satisfied or dissatisfied with the way Joe Biden is managing the economy?

- Do you approve or disapprove of the way Joe Biden is handling his job as president?

It’s important to minimise order effects because they can be a source of systematic error or bias in your study.

Randomisation

Randomisation involves presenting individual respondents with the same questionnaire but with different question orders.

When you use randomisation, order effects will be minimised in your dataset. But a randomised order may also make it harder for respondents to process your questionnaire. Some questions may need more cognitive effort, while others are easier to answer, so a random order could require more time or mental capacity for respondents to switch between questions.
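A minimal sketch of per-respondent randomisation is shown below. It assumes each respondent has a unique ID and that you want to be able to reproduce each person's order later for analysis; the study seed, question IDs, and function name `question_order_for` are hypothetical.

```python
import random


def question_order_for(respondent_id, question_ids, study_seed=2022):
    """Return a shuffled copy of `question_ids` for one respondent.

    Seeding with the study seed plus the respondent ID means the same
    respondent always gets the same order, so it can be reconstructed.
    """
    rng = random.Random(f"{study_seed}-{respondent_id}")
    order = list(question_ids)  # copy so the master list stays untouched
    rng.shuffle(order)
    return order


# Hypothetical question IDs for a five-item questionnaire.
question_ids = ["Q1", "Q2", "Q3", "Q4", "Q5"]
order_for_r17 = question_order_for("R17", question_ids)
```

Recording the order each respondent saw also lets you test for order effects afterwards. If some questions must stay together or come last, such as sensitive items, you could shuffle only within blocks instead of across the whole questionnaire.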

Follow this step-by-step guide to design your questionnaire.

Step 1: Define your goals and objectives

The first step of designing a questionnaire is determining your aims.

- What topics or experiences are you studying?

- What specifically do you want to find out?

- Is a self-report questionnaire an appropriate tool for investigating this topic?

Once you’ve specified your research aims, you can operationalise your variables of interest into questionnaire items. Operationalising concepts means turning them from abstract ideas into concrete measurements. Every question needs to address a defined need and have a clear purpose.

Step 2: Use questions that are suitable for your sample

Create appropriate questions by taking the perspective of your respondents. Consider their language proficiency and available time and energy when designing your questionnaire.

- Are the respondents familiar with the language and terms used in your questions?

- Would any of the questions insult, confuse, or embarrass them?

- Do the response items for any closed-ended questions capture all possible answers?

- Are the response items mutually exclusive?

- Do the respondents have time to respond to open-ended questions?

Consider all possible options for responses to closed-ended questions. From a respondent’s perspective, a lack of response options reflecting their point of view or true answer may make them feel alienated or excluded. In turn, they’ll become disengaged or inattentive to the rest of the questionnaire.

Step 3: Decide on your questionnaire length and question order

Once you have your questions, make sure that the length and order of your questions are appropriate for your sample.