What Is a Good H-Index? H-Index Required for an Academic Position

In the academic world, the h-index score stands as a pivotal metric, gauging the impact and breadth of a researcher’s work. Understanding what constitutes a good h-index is crucial for academics at all stages, from budding PhD students to seasoned professors.

This article looks into the h-index, exploring what scores are considered impressive across various disciplines and career stages.

- PhD Student: An h-index between 1 and 5 is typical for PhD students nearing the end of their program, reflecting their early stage in academic publishing.

- Postdoc and Assistant Professor: Early career researchers like postdoctoral fellows or assistant professors often find an h-index around 5 to 10 impressive, indicating a solid start in their respective fields.

- Associate Professor: At this more advanced stage, an h-index of 10 or more is generally expected, reflecting a consistent record of impactful research.

- Full Professor: For full professors, an h-index of 15 or higher is often seen, indicating a long and impactful career in research and academia.

How To Calculate Your H-Index Score?

In the academic world, the h-index score is a critical metric, essentially acting like a report card for scholars.

The h-index is a measure of a researcher’s productivity and impact. It was designed to capture both the number of papers published and the number of citations each paper receives.

Now that you know what an h-index score is, you may wonder how to find your own. Platforms like Google Scholar and Web of Science can help.

They track your number of publications and the number of times those publications are cited, crunching these numbers into your h-index.

This number can vary based on the field and years of research experience. A full professor might be expected to have a higher h-index, reflecting more years of impactful research.

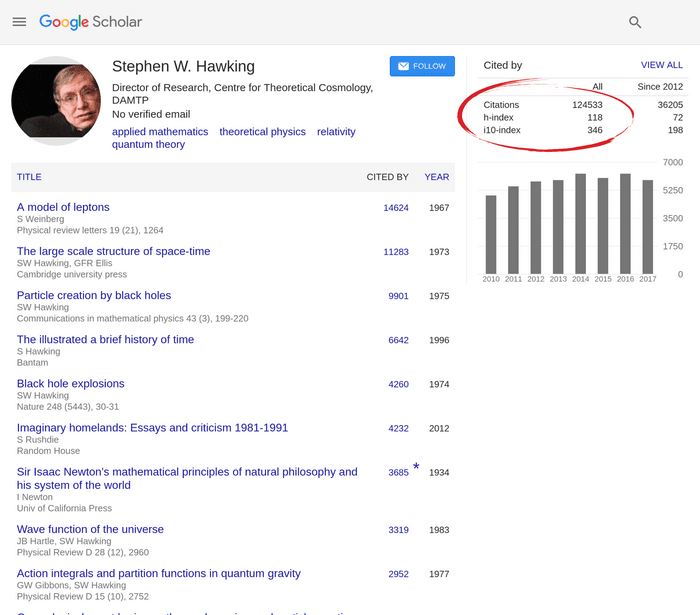

Google Scholar

To find out your h-index score from Google Scholar, you can follow the steps below:

- Create a Google Scholar Profile: If you don’t already have one, go to Google Scholar and create a profile. Fill in your academic details and affiliations.

- Add Publications: Ensure all your research publications are listed in your profile. You can add them manually or import them if they are already available on Google Scholar.

- Verify Your Publications: Make sure the publications listed are indeed yours, as publications from other authors with similar names sometimes appear.

- Check the Citations Section: Once your profile is complete and updated, look for the ‘Citations’ section on your profile page. This is usually located at the top and easy to spot.

- Find Your H-Index: In the Citations section, you will see your h-index listed among other citation metrics like the total number of citations and the i10-index.

Web Of Science

To find out your h-index score from Web Of Science, you can follow the steps below:

- Access Web of Science: Go to the Web of Science website. Access may require an institutional login, depending on your affiliation.

- Search for Your Name: Use the author search function to find your publications. Search with variations of your name if you’ve published under different names or initials.

- Create a Citation Report: Once your publications are listed, select them and create a citation report. This option is typically found above the list of your publications.

- View Your H-Index: In the citation report, your h-index will be displayed. This number is calculated based on the total number of papers you’ve published and the number of citations each paper has received.

What H-Index Is Considered Good For A PhD Student?

For a PhD student, the world of academic metrics can be daunting, especially when it comes to the h-index, a measure that intertwines the number of publications with their citation impact.

So, what h-index score should you, as a PhD student, aim for?

A “good” h-index can vary based on your field of study and the stage of your PhD program.

Generally, for PhD students, a lower h-index is expected and completely normal. You’re just beginning your journey in academic publishing.

An h-index between 1 and 5 might be typical for students nearing the end of their PhD. An h-index of 3, for example, means you have 3 publications that have each been cited at least 3 times.

Your h-index can be calculated using tools like Google Scholar or Web of Science. These platforms track your published papers and the number of citations each receives.

As a PhD student, your focus should be on publishing quality research in reputable journals, as this will gradually increase your h-index.

Remember, while a higher h-index is beneficial for future academic positions, it’s not the only metric that matters. Your research’s quality, relevance, and impact in your field are equally important. A single highly influential paper might open more doors than several less impactful ones.

What Is a Good H-Index for an Academic Position?

When you are applying for an academic position, your h-index can be as crucial as your research itself. This metric, a blend of productivity and impact, is often scrutinized by hiring committees.

But what number should you aim for? A good h-index varies by field and career stage.

PostDoc, Assistant Professors

For early career researchers, like postdoctoral fellows or assistant professors, an h-index around 5 to 10 is often impressive.

It shows you’ve made a mark in your field, with a number of papers that have been cited at least that many times.

Associate Professor, Full Professor

In more senior roles, such as a tenured associate professor or full professor, expectations rise.

Here, an h-index of 10 or 15 might be the minimum, with higher numbers not uncommon.

This single number, while important, doesn’t tell the whole story. A young researcher might have a lower h-index simply due to less time in the field. Moreover, some fields tend to have higher citation rates, which can inflate h-index scores.

It’s wise to keep an eye on your h-index, especially if you’re eyeing:

- Competitive academic positions

- Research funding

- Collaboration opportunities

Improving your h-index involves not just publishing papers, but ensuring they are of high quality and relevance, increasing the likelihood of citations.

In sum, a good h-index is one that matches your career stage and field, reflecting both the quantity and impact of your work. However, it’s not the sole measure of your worth as a researcher.

The breadth and depth of your contributions, beyond just citation counts, also paint a vivid picture of your academic and scientific impact.

What Factors Influence Your H-Index Score?

Your h-index score is influenced by several key factors:

- Number of Publications: The more papers you publish, the greater the potential for citations. It’s a numbers game, but quality over quantity should be your mantra. High-caliber papers in respected journals often garner more attention and citations.

- Citations Per Publication: Your h-index relies heavily on how often your papers are cited. Even if you have a plethora of publications, your h-index won’t shine if they’re seldom cited.

- Years of Research Experience: A young researcher might have a lower h-index than a full professor, who has had more time to build their citation record.

- Research Field: The h-index varies widely across disciplines. Fields with rapid publication and citation rates, like the biomedical sciences, often see higher h-index scores than, say, the humanities. So, a good h-index in one field might be considered low in another.

- Access to Research Collaborations: Collaborations can boost your h-index. Working with other researchers can increase the visibility and citation potential of your papers. However, too many authors on a single paper might dilute the perceived contribution of each.

Remember, while a high h-index can be indicative of a significant academic impact, it’s not the sole measure of your scientific worth. It’s a good idea to give your h-index some consideration, but also focus on the broader spectrum of your academic contributions.

How To Increase H-Index Score?

Increasing your h-index, a metric reflecting the impact and productivity of your academic work, is a strategic goal for many researchers.

This single number, representing the intersection of the quantity of your publications and their citation impact, can play a pivotal role in securing research grants and academic positions.

To boost your h-index, focus on publishing quality research in well-regarded journals. A paper published in a respected journal is more likely to be cited, and each citation nudges your h-index upwards.

For example, if you’re an assistant professor with an h-index of 5, aiming for journals with high visibility in your field can help you reach a higher h-index, making you more competitive for positions like associate or full professor.

Collaboration is another key strategy. Co-authoring with established researchers can increase the reach and citation potential of your papers.

This, however, comes with a caveat: the more authors on a paper, the more diluted your perceived contribution might be. Aim for a balance in co-authorship.

Active engagement in the academic community also matters. Increase citations on your work by:

- Presenting at conferences

- Networking

- Promoting your work on platforms like Google Scholar or Web of Science

Remember, the h-index varies by field and career stage. A good h-index for a young researcher might be 10, while more senior academics might aim for higher numbers. Using databases like Google Scholar, you can track your number of cited publications and calculate your h-index.

While a higher h-index can bolster your academic profile, it’s not the sole indicator of your scholarly worth; a low h-index score is not a dealbreaker in many cases. It’s wise to consider it alongside other measures of your academic and scientific impact.

Good H-Index Score May Vary

A good h-index score is relative, varying across academic fields and career stages. While it offers a valuable snapshot of a researcher’s impact and productivity, it’s important to view it as one part of a larger picture.

Aspiring for a higher h-index should go hand in hand with maintaining the quality and relevance of research. Ultimately, the h-index is a useful tool, but it’s the depth and innovation of your work that truly define your academic legacy.

Dr Andrew Stapleton has a Masters and PhD in Chemistry from the UK and Australia. He has many years of research experience and has worked as a Postdoctoral Fellow and Associate at a number of Universities. Although having secured funding for his own research, he left academia to help others with his YouTube channel all about the inner workings of academia and how to make it work for you.


2024 © Academia Insider


What Is a Good H-Index Required for an Academic Position?

Posted by Rene Tetzner | Sep 3, 2021 | Career Advice for Academics, How To Get Published

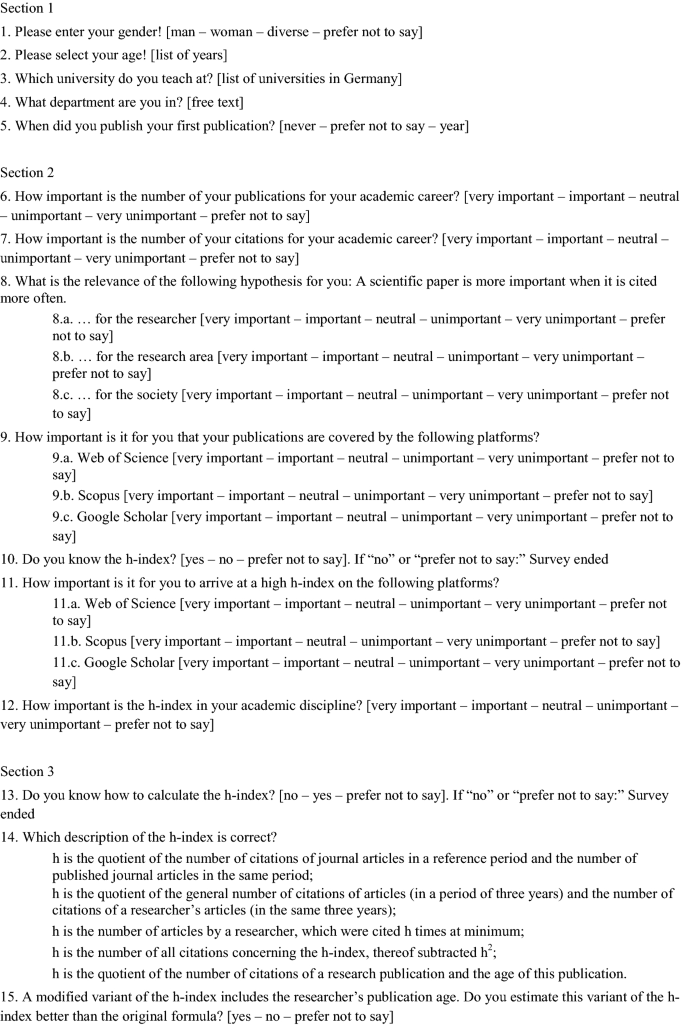

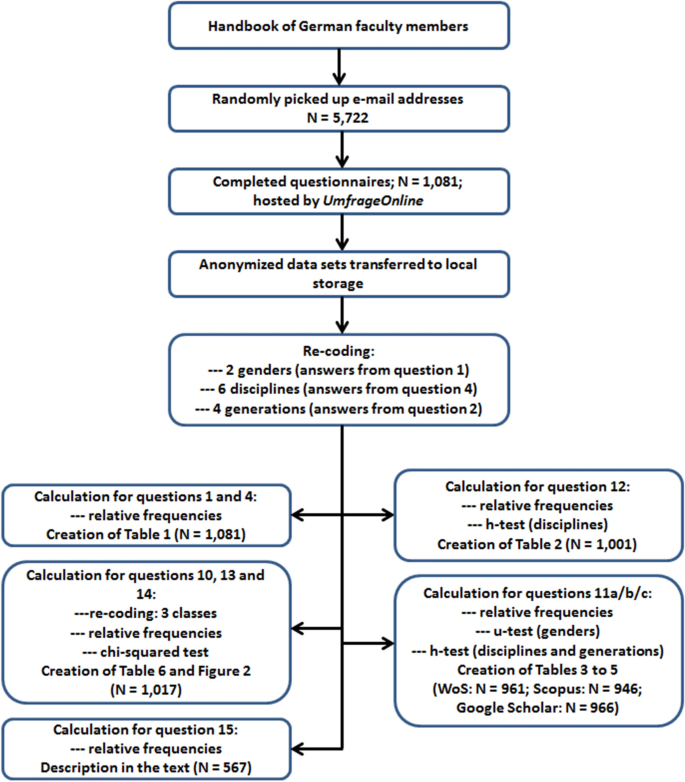

Metrics are important. Even scholars who may not entirely agree with the ways in which academic and scientific impact is currently measured and used cannot deny that metrics play a significant role in determining who receives research grants, employment offers and desirable promotions. The h-index is only one among various kinds of metrics now applied to the research-based writing of professional scholars, but it is an increasingly significant one. Introduced by the physicist Jorge Hirsch in a paper published in 2005, the h-index was designed to assess the quantity and quality of a scientist’s contributions and predict his or her productivity and influence in the coming years. However, its use and importance have quickly expanded beyond physics and the sciences into a wide variety of disciplines and fields of study. If you are applying for a scientific or academic position, hoping for a promotion or in need of research funding, it will therefore be wise to give your h-index score some consideration, but within reason. In some fields, the h-index and other forms of metrics play a very small part if any in hiring and funding, and there are still many other means used by hiring and funding committees to assess scholarly contributions.

The h-index is considered preferable to metrics that measure only a researcher’s number of publications or the number of times those publications have been cited. This is because it combines the two, considering both publications and citations to arrive at a particular value. A scholar who has five publications that have each been cited at least five times has an h-index of 5, whereas a scholar with ten publications that have each been cited at least ten times has an h-index of 10. Publication and citation patterns differ markedly across disciplines and fields of study, and the expectations of hiring and funding bodies vary depending on the level and type of position and the kind and size of research project, so it is impossible to say exactly what might be considered an acceptable or competitive h-index in a given situation. H-index scores between 3 and 5 seem common for new assistant professors, scores between 8 and 12 fairly standard for promotion to the position of tenured associate professor, and scores between 15 and 20 about right for becoming a full professor. Be aware, however, that these are gross generalisations and actual figures vary enormously among disciplines and fields: there are, for instance, many full professors, deans and chancellors with very low h-index scores, and an exceptional young researcher with an h-index of 10 or 15 might conceivably still be working on a post doctorate.

As a general rule in many fields, an h-index that matches the number of years a scholar has been working in the field is a respectable score. Hirsch in fact suggested that the h-index be used in conjunction with a scholar’s active research time to arrive at what is known as Hirsch’s individual m. It is calculated by dividing a scientist’s h-index by the number of years that have passed since the first publication, with a score of 1 being very good indeed, 2 being outstanding and 3 truly exceptional. This means that if you have published at least one well-cited document each year since your first publication – a decent textual output by any measure – you are among a successful group of scholars, and if you have published two or three times that number of well-cited documents over the same period of time, you are among the intellectual superstars of your discipline and probably of your time. To put this into perspective, from what I can find online it looks like Stephen Hawking has a score of about 1.6 by this calculation. If you can approach a hiring committee or funding body with anything close to that, you are certainly going to be a serious contender in the competition.
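The division described above can be sketched in a few lines of Python. This is only an illustration: the function name `m_quotient` and its parameters are hypothetical, and it assumes that "years since the first publication" is measured as a simple difference between calendar years.

```python
def m_quotient(h_index: int, first_publication_year: int, current_year: int) -> float:
    """Divide an h-index by the years elapsed since the first publication.

    Assumption: elapsed time is the plain difference between calendar years.
    """
    years_active = current_year - first_publication_year
    if years_active <= 0:
        raise ValueError("current_year must be later than first_publication_year")
    return h_index / years_active


# Hypothetical scholar: h-index of 12, first paper in 2015, evaluated in 2025.
example_m = m_quotient(12, 2015, 2025)
print(round(example_m, 2))
```

By the thresholds quoted above, a value around 1 would count as very good, so this hypothetical scholar would be slightly above that mark.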

The h-index as a measure of both the quantity and quality of scholarly achievement is considered quite reliable and robust, so it has proved incredibly popular and is now applied not only to individual researchers, but also to research groups and projects, to scholarly journals and publishers, to academic and scientific departments, to entire universities and even to entire countries. As with all metrics, however, the h-index is subject to a number of biases and limitations, so there are significant problems associated with relying solely on h-index scores when making important research and career decisions. The h-index does not, for example, account for publications with citation numbers far above a researcher’s h-index or distinguish any difference between publications with a single author or many. Older publications are counted exactly as more recent ones are and older scholars benefit, whether they have published anything new in years or not. Neither the length of a publication nor the nature of each citation (positive or negative) is considered, so those measures of quantity and quality are not part of the picture. Early career researchers who take the time to delve deeply into an important problem and eventually produce an excellent article and scholars at any stage in their careers who dedicate time to teaching or practical applications of research will have lower scores than those who crank out mediocre articles based on uninteresting research that is nonetheless cited by their colleagues. Finally, the databases from which the h-index and other metrics are determined vary in the types of documents they consider and the fields of study they include, so the same scholar will not receive the same h value across all of them, and accurate comparison across fields and disciplines is impossible.

These and other problems have generated a number of adjustments that are rather similar to Hirsch’s individual m, which, as discussed above, considers a scholar’s active research time in relation to his or her h-index. The g-index gives greater weight to publications whose citation counts exceed a researcher’s h value; the hi index corrects for the number of authors; the hc index corrects for the age of publications, with recent citations earning more counts; and the c-index considers collaboration distance between the author of a publication and the authors citing it. Solutions for comparison between disciplines and fields have included dividing the h-index scores of scholars by the h-index averages in their respective fields to arrive at results that can be compared, but defining fields can be tricky, and larger fields of study with more researchers naturally generate more citations. The databases used for scholarly metrics are constantly upgrading and broadening their inclusiveness to render metrics like the h-index more truly representative of a researcher’s actual productivity and impact, so the accuracy and consistency of these tools are likely to continue improving. However, no new numbers or calculations can add what all of these metrics lack, and that is research content – the valuable and unique content that makes the publication of research a worthy task in the first place.
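To make the g-index concrete, here is a minimal Python sketch. It assumes the commonly cited definition, which the text above does not spell out: the g-index is the largest number g such that the g most-cited publications together have at least g² citations. This variant is capped at the number of publications, and the function name `g_index` is hypothetical.

```python
def g_index(citations):
    """Largest g such that the top g papers together have at least g*g citations.

    Assumption: this follows the commonly cited definition and caps g at the
    number of papers; other variants exist.
    """
    ranked = sorted(citations, reverse=True)
    total = 0
    g = 0
    for rank, cites in enumerate(ranked, start=1):
        total += cites  # running sum of citations for the top `rank` papers
        if total >= rank * rank:
            g = rank
    return g


# Illustrative citation counts for eight papers.
print(g_index([50, 15, 12, 10, 8, 7, 5, 1]))
```

Because the running total includes the 50 citations of the top paper, a single highly cited publication lifts the g-index, which is the extra weight the text refers to.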

Committees gathered to hire or promote faculty or to select the recipients of research grants rarely rely solely on metrics when making their decisions. If they are doing their jobs properly, they combine what they can gather from metrics with other information about candidates and their scholarly impact. They do not just notice how many times the papers of candidates have been cited; they read those papers and consider their content, and they pay attention to the other activities of the scholars they are considering. This wider perspective is appropriate for an applicant as well, so if you are polishing your CV, putting together a grant application or preparing for a job interview, look over your own unique achievements with a kindly yet critical eye and consider them in direct relation to what the job posting or grant regulations indicate is wanted. If you happen to have a wonderful h-index score or any other impressive metrics, by all means flaunt them, and if you fear that a low h value will compromise your career aspirations, do what you can to have your publications with lower citation counts read and used more often, update your profiles on the relevant databases, and publish the type of document sure to garner citations in your field, such as a review article.

Do keep in mind, however, that hiring and funding committees are often looking for far more than large numbers of highly cited publications. Admittedly, they rarely balk at them, but universities are also seeking excellent teachers, advisors and administrators, so play up those skills and any related experience you have, and remember that financial supporters of research may be keen to fund scholars who can successfully manage and complete projects, even and perhaps especially if part of the training they offer younger researchers means that their students tend to publish most of the results. Finally, an active online presence in your field established through sharing your research via blogs, professional platforms and social media might not garner the same respect as formal publications, but it can count for a great deal when many universities are working to increase their online activities and funding bodies working to democratise the publication of the research they support. Generally speaking, committees considering applications will be even more likely to google the names of candidates and applicants than to look up the metrics associated with them, so assume that both will be done and ensure that what can be found shares excellent research content and leaves a desirable professional impression of you and your work.



Maximizing your research identity and impact


h-index for researchers: definition

- The h-index is a measure used to indicate the impact and productivity of a researcher based on how often his/her publications have been cited.

- The physicist Jorge E. Hirsch provides the following definition for the h-index: A scientist has index h if h of his/her $N_p$ papers have at least h citations each, and the other ($N_p - h$) papers have no more than h citations each. (Hirsch, JE (15 November 2005) PNAS 102 (46) 16569-16572)

- The h-index is based on the highest number of papers written by the author that have had at least the same number of citations.

- A researcher with an h-index of 6 has published six papers that have been cited at least six times by other scholars. This researcher may have published more than six papers, but only six of them have been cited six or more times.

Whether or not an h-index is considered strong, weak or average depends on the researcher's field of study and how long they have been active. The h-index of an individual should be considered in the context of the h-indices of equivalent researchers in the same field of study.

h-index for journals

Definition: The h-index of a publication is the largest number h such that at least h articles in that publication were cited at least h times each. For example, a journal with an h-index of 20 has published 20 articles that have been cited 20 or more times.

Available from:

- SJR (Scimago Journal & Country Rank)

Whether or not an h-index is considered strong, weak or average depends on the discipline the journal covers and how long it has published. The h-index of a journal should be considered in the context of the h-indices of other journals in similar disciplines.

h-index for institutions

Definition: The h-index of an institution is the largest number h such that at least h articles published by researchers at the institution were cited at least h times each. For example, if an institution has an h-index of 200, its researchers have published 200 articles that have been cited 200 or more times.

Available from: exaly

Computing your own h-index

In a spreadsheet, list the number of times each of your publications has been cited by other scholars.

Sort the spreadsheet in descending order by the number of times each publication is cited. Then count down the list until you reach the last article whose position in the list is less than or equal to its number of citations. That position is your h-index.

| Article | Times Cited | Note |
|---|---|---|
| 1 | 50 | |
| 2 | 15 | |
| 3 | 12 | |
| 4 | 10 | |
| 5 | 8 | |
| 6 | 7 | => h-index is 6 |
| 7 | 5 | |
| 8 | 1 | |
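The spreadsheet procedure above can also be sketched in Python. This is a minimal illustration rather than the method any particular database uses, and the function name `h_index` is hypothetical. It sorts the citation counts in descending order and keeps the last position whose citation count is at least as large as the position itself.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # this paper still has at least as many citations as its rank
        else:
            break  # every later paper has even fewer citations
    return h


# Citation counts taken from the example table above.
print(h_index([50, 15, 12, 10, 8, 7, 5, 1]))  # prints 6
```

Article 6 has 7 citations, which is at least 6, while article 7 has only 5, so the count stops at 6.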

Ways to increase your h-index

How to successfully boost your h-index (enago academy, 2019)

Limitations of the h-index

Glänzel, Wolfgang. On the Opportunities and Limitations of the H-index. 2006

Variations of the h-index

- h5-index: the h-index based upon data from the last 5 years.

- i-10 index: the number of articles by an author that have at least ten citations. The i-10 index was created by Google Scholar.

- m-index: used to compare researchers with different lengths of publication history. It is computed as $m = h / y$, where $h$ is the h-index and $y$ is the number of years since the author’s first publication.
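As a small illustration of the i-10 index described above, the following Python sketch counts the publications with at least ten citations. The function name `i10_index` and the sample data are hypothetical.

```python
def i10_index(citations):
    """Count the publications that have at least ten citations."""
    return sum(1 for cites in citations if cites >= 10)


# Illustrative citation counts for eight papers.
print(i10_index([50, 15, 12, 10, 8, 7, 5, 1]))  # prints 4
```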

Using Scopus to find a researcher's h-index

Additional resources for finding a researcher's h-index

Web of Science Core Collection or Web of Science All Databases

- Perform an author search

- Create a citation report for that author.

- The h-index will be listed in the report.

Set up your author profile in the following resources. Each resource will compute your h-index. Your h-index may vary since each of these sites collects data from different resources.

- Google Scholar Citations: computes h-index based on publications and cited references in Google Scholar.

- Researcher ID: computes h-index based on publications and cited references in the last 20 years of Web of Science.


- Last Updated: Nov 15, 2023 11:59 AM

- URL: https://libraryguides.missouri.edu/researchidentity

Course blog for INFO 2040/CS 2850/Econ 2040/SOC 2090

The H-Index: good or bad?

Anyone working in academia is well aware of the ubiquity of h-indexes. To many professors and graduate students, the h-index is perhaps the most widely used metric in determining the influence of one’s work. This single number is used to convey the influence you have had in your research career, is pivotal to career advancement, and is used in part to determine the relative influence of different academic institutions. Given the ubiquity and power of such an index in the academic sphere, we must pause for a second and ask, is this actually the best method for ranking the merit of different scientists? Have we perhaps learned better alternatives for ranking publications within our own course?

First off, I shall define the h-index:

The h-index of an author, h, is the largest number x such that there are x articles published by the author which have at least x references. In other words, h is the maximum number of publications by a scientist that were cited at least h times.

As can be seen, this metric (developed by Jorge Eduardo Hirsch of UCSD in 2005) is used to measure the quality and quantity of a researcher’s work. The inventor, Hirsch himself, proposes that after 20 years of research, an h-index of 20 is good, 40 is outstanding, and 60 is exceptional. It is an indicator that a researcher is reliable, consistently engaged in meaningful science and has publications that are largely adopted. However, time and time again, the h-index has proved ineffective to honour the importance of scientific endeavours.

First, consider the young and exceptional scientist. If in their short career they have published 2 great papers, each with thousands of citations, their h-index of 2 is no better than that of another scientist who has worked for 20 years and published 20 papers, only 2 of which have 2 citations each. There is an implicit ageism in the h-index that works against the interests of meritocracy.

Second, consider a scientist Y who is consistently published by the best journals. The h-index does not discriminate between the authority of different hubs, and the achievement of being published in a great journal is treated as equal to being published in the worst one. The h-index does not take into account the fact that some citations are more impressive than others, and more indicative of meaningful work. It is not fair to treat every referrer with the same sense of credibility.

Third, the h-index encourages authors to produce less important publications that would enhance their index, because the h-index can never exceed the number of articles an author has published. For instance, I could compartmentalise my research into 4 different research papers for a better h-index, even though the ideas might be better expressed in a single research paper. This creates a culture in academia of prioritising quantity: publishing more papers to convey influence, instead of focusing on the quality and merit of the science itself.

I could not help but pause and think, have we learned a better model in our Networks class? Could we not provide a better score than the H-Index?

I came across a most thought-provoking article from PLOS, a non-profit tech and medicine publisher of open-access journals:

The Pagerank-Index: Going beyond Citation Counts in Quantifying Scientific Impact of Researchers

https://journals.plos.org/plosone/article?id=10.1371/journal.pone.013479

They propose using the page-rank algorithm (as discussed in class) to rank publications in the citation network. Each node gets a value after this process, which can then be distributed to each author, and the summation of all page-rank values is obtained for every author. This can then be compared to all other author values to form the percentile.

The advantage of doing so is that PageRank can compare the sources of information and determine which references are more trustworthy. As discussed in lectures, PageRank is calculated recursively: the rank of a page depends on the ranks of all pages that link to it. Each page spreads its vote equally among all its out-links. If a page is linked to by many highly ranked pages, it achieves a high rank.

Here, not all citations are equal, and a publication is important if it is pointed to by other important publications. This is the beauty of PageRank, an elegant solution which we have covered in our course.
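To make the idea concrete, here is a minimal sketch of PageRank run over a small citation network, followed by a per-author sum. It is an illustration under stated assumptions, not the method of the PLOS article: the damping factor of 0.85, the even split of a paper's score among its co-authors, and the names `pagerank`, `author_scores`, `citations` and `authors_of` are all hypothetical choices made for this example.

```python
from collections import defaultdict


def pagerank(citations, damping=0.85, iterations=100):
    """Power-iteration PageRank on a citation graph.

    `citations` maps each paper to the list of papers it cites.
    The damping value is an assumed, commonly used default.
    """
    papers = set(citations)
    for cited in citations.values():
        papers.update(cited)
    n = len(papers)
    rank = {p: 1.0 / n for p in papers}

    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / n for p in papers}
        for paper in papers:
            out_links = citations.get(paper, [])
            if out_links:
                # Each paper spreads its vote equally among the papers it cites.
                share = damping * rank[paper] / len(out_links)
                for cited in out_links:
                    new_rank[cited] += share
            else:
                # A paper that cites nothing spreads its vote over all papers.
                share = damping * rank[paper] / n
                for p in papers:
                    new_rank[p] += share
        rank = new_rank
    return rank


def author_scores(rank, authors_of):
    """Sum paper scores per author, splitting each paper evenly (an assumption)."""
    scores = defaultdict(float)
    for paper, authors in authors_of.items():
        for author in authors:
            scores[author] += rank[paper] / len(authors)
    return dict(scores)


# Hypothetical toy network: p1 and p2 both cite p3.
citations = {"p1": ["p3"], "p2": ["p3"], "p3": []}
authors_of = {"p1": ["alice"], "p2": ["bob"], "p3": ["carol", "alice"]}
rank = pagerank(citations)
scores = author_scores(rank, authors_of)
```

In this toy network p3 ends up with the highest rank because both other papers point to it, and alice outscores bob because she shares credit for p3. Ranking the authors by score and converting to percentiles would be the final step described above.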

In this case, we make the scientific world more meritocratic. We give the potential to young authors to be taken seriously, if they have already produced valuable works. Further, we give credence to researchers that are being published in amazing scientific journals over mediocre ones. We could also implement a variant of HITS to achieve similar outcomes, and there are a myriad of strategies we have learned in class that could create a fairer academic environment.

In conclusion, the H-index should be forgotten! Let the academic world move forward, and benefit from the might of Networks and Google’s innovation. After all, Google Scholar is one of the most ubiquitous users of the h-index, and the company itself could lead the way by reverting back to their own early innovations! Let us use the PageRank algorithm to evaluate scientific research in a fair manner!

November 13, 2020 | category: Uncategorized


Measuring academic impact: An author-level metric: the H-index


An author-level metric: the h-index

The H-index, proposed by physicist J.E. Hirsch (hence the H) in 2005, is a way to measure the individual academic output of a researcher. "A scientist has index h if h of his or her Np papers have at least h citations each and the other (Np-h) papers have ≤h citations each" (Hirsch, 2005).

To determine the H-index of a researcher you need the list of that researcher's publications and the number of citations each publication received. Sort the list by number of citations in descending order, so that the most-cited publication is number 1. The H-index is the last position in the list at which the number of citations is still greater than or equal to the position number.
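The sort-and-count procedure can be sketched in a few lines of Python. This is only an illustration: the function name `h_index` and the sample citation counts are made up for the example, and real citation counts would have to come from a source such as Scopus, Web of Science or Google Scholar.

```python
def h_index(citation_counts):
    """Return the h-index for a list of per-publication citation counts."""
    # Rank publications from most cited to least cited.
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for position, citations in enumerate(ranked, start=1):
        if citations >= position:
            h = position  # this publication still has at least `position` citations
        else:
            break  # all later publications have even fewer citations
    return h


print(h_index([10, 8, 5, 4, 3]))  # 4
print(h_index([25, 8, 5, 3, 3]))  # 3
```

In the first call the fourth paper has 4 citations but the fifth has only 3, so the index is 4. In the second call one very highly cited paper does not help: only three papers have at least 3 citations each.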

Example of an H-graph in Scopus

The H-index has become a popular performance indicator, probably because its calculation is easy to understand, and because it takes into account both productivity (the number of publications) and academic impact (the number of citations received). The H-index of an author is also easy to find: it takes only a few mouse clicks in Scopus, Web of Science and Google Scholar (but be aware, that's the 'quick and dirty approach').

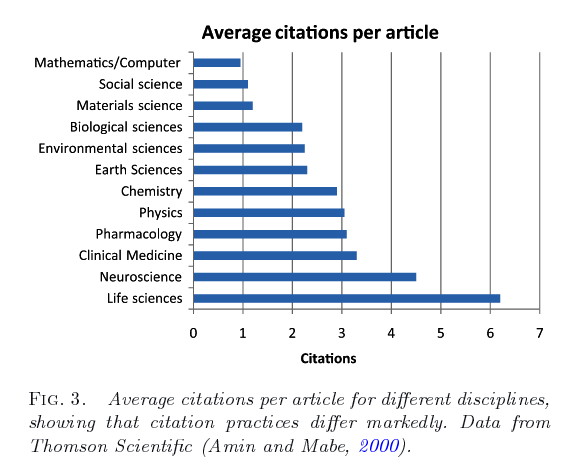

However, the H-index has various weak points. An easy example: the H-index doesn’t take into account the age of the researcher, which makes it unfair to use the H-index to compare two researchers at different stages of their research career. A professor at the end of his career has published more than a PhD student and has had more time to get cited. There are also examples of researchers changing disciplines, taking their high H-index with them to a discipline with generally lower H-indexes. Because of differences in publication cultures between disciplines, you can’t compare the H-index of researchers from different disciplines.

Examples of researchers changing disciplines

From astrophysics to economics: Titus Galama. His Google Scholar Profile shows his H-index based on all his publications.

From psychology to media & communication: Jeroen Jansz. His Google Scholar Profile also shows his H-index based on all his publications.

Hirsch, J. E. (2005). An index to quantify an individual's scientific research output. Proceedings of the National Academy of Sciences of the United States of America, 102 (46), 16569-16572. https://doi.org/10.1073/pnas.0507655102

Which H-index?

To create the list of publications and the number of citations received you can use different sources. The most used sources are Scopus, Web of Science and Google Scholar. The source you use determines the H-index you find for a particular researcher, because the ‘publication universes’ of the sources are different. For example, Google Scholar also indexes university repositories, so citations in students’ theses are counted as well.

The example below shows the general picture: the H-index based on publications in Web of Science is lower than the H-index based on publications in Scopus; the H-index in Google Scholar is by far the highest.

An example: Rutger Engels, Professor Developmental Psychopathology at ESSB and former rector magnificus of the Erasmus University Rotterdam.

* In Web of Science we had to combine and clean several author records. In Scopus the Scopus Author Profile was used, in Google Scholar the Google Scholar Citation Profile was used.

Our advice: When you are looking for your own H-index or the H-index of another researcher, don’t rely on easily-available numbers. The basis of the H-index is the publications list: you have to check whether the publications in the list you use are indeed of the researcher and whether publications might be missing. Always give information about the source used and use, when possible, multiple sources.

Determining the H-index in Web of Science, Scopus and Google Scholar

In these handouts you can find the steps to determine the H-index of a researcher in:

- The H-index in Google Scholar

- The H-index in Scopus

- The H-index in Web of Science

- YOU in databases: Academic profiling How can you make sure that your publications can be found easily in citation databases such as Scopus, Web of Science and Google Scholar? By managing your author profiles, such as Web of Science ResearcherID, ORCID iD, Google Scholar Citations profile or Scopus Author ID.

- EUR ORCID LibGuide Practical information on how to register for an ORCID iD, how to add publication data and other information to your ORCID record and how to use your ORCID iD in other systems.

- Research Evaluation and Assessment Service (REAS) A team of bibliometric practitioners of the University Library can give advice on how to evaluate academic impact and to create understanding of responsible metrics. Click on the header 'Contact the REAS team'.


- Last Updated: Mar 27, 2024 6:24 PM

- URL: https://libguides.eur.nl/informationskillsimpact


Assessing Article and Author Influence

Finding an Author's H-Index

The H-Index: A Brief Guide

This page provides an overview of the H-Index, an attempt to measure the research impact of a scholar. The topics include:

What is the H-Index?

How is the h-index computed, factors to bear in mind.

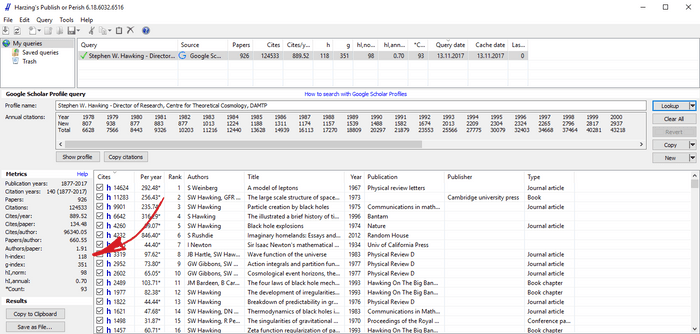

- Using Harzing's Publish or Publish to Assess the H-Index

Using Web of Science to Assess the H-Index

- H-Index Video

Contemporary H-Index

Selected further reading.

The h-index, created by Jorge E. Hirsch in 2005, is an attempt to measure the research impact of a scholar. In his 2005 article Hirsch put forward "an easily computable index, h, which gives an estimate of the importance, significance, and broad impact of a scientist's cumulative research contributions." He believed "that this index may provide a useful yardstick with which to compare, in an unbiased way, different individuals competing for the same resource when an important evaluation criterion is scientific achievement." There has been much controversy over the value of the h-index, in particular whether its merits outweigh its weaknesses. There has also been much debate concerning the optimal methodology to use in assessing the index. In locating someone's h-index a number of methodologies/databases may be used. Two major ones are ISI's Web of Science and the free Harzing's Publish or Perish which uses Google Scholar data.

An h-index of 20 signifies that a scientist has published 20 articles each of which has been cited at least 20 times. Sometimes the h-index is, arguably, misleading. For example, if a scholar's works have received, say, 10,000 citations he may still have an h-index of only 12, as only 12 of his papers have been cited at least 12 times. This can happen when one of his papers has been cited thousands and thousands of times. So, to have a high h-index one must have published a large number of well-cited papers. There have been instances of Nobel Prize winners in scientific fields who have a relatively low h-index. This is due to them having published one or a very small number of extremely influential papers and maybe numerous other papers that were not so important and, consequently, not well cited.

- As citation practices/patterns can vary quite widely across disciplines, it is not advisable to use h-index scores to assess the research impact of personnel in different disciplines.

- The h-index is not very widely used in the Arts and Humanities.

- H-index scores can vary widely depending on the methodology/database used. This is because different methodologies draw upon different citation data. When comparing different people’s h-index it’s essential to use the same methodology.

- The h-index does not distinguish the relative contributions of authors in multi-author articles.

- The h-index may vary significantly depending on how long the scholar has been publishing and on the number of articles they’ve published. Older, more prolific scholars will tend to have a higher h-index than younger, less productive ones.

- The h-index can never decrease. This, at times, can be a problem as it does not indicate the decreasing productivity and influence of a scholar.

Using Harzing's Publish or Perish to Assess the H-Index

Publish or Perish utilizes data from Google Scholar. Its software may be downloaded from the Publish or Perish website. A person's h-index located through Publish or Perish is often higher than the same person's index located by means of ISI's Web of Science. This is primarily because the Google Scholar data utilized by Publish or Perish includes a much wider range of sources, e.g. working papers, conference papers, technical reports etc., than does Web of Science. It has often been observed that Web of Science may sometimes produce a more authoritative h-index than Publish or Perish. This tends to be more likely in certain disciplines in the Arts, Humanities and Social Sciences.

After you've launched the application, click on "Author impact" on top. Enter the author's name as initial and surname enclosed with quotation marks, e.g. "S Helluy". Then click "Lookup" (top right). You'll see a screen with a listing of S. Helluy's works arranged by number of citations. Above this listing is a smaller panel where one may see the h-index score of 17:

Publish or Perish uses Google Scholar data and these data occasionally split a single paper into multiple entries. This is usually due to incorrect or sloppy referencing of a paper by others, which causes Google Scholar to believe that the referenced works are different. However, you can merge duplicate records in the Publish or Perish results list. You do this by dragging one item and dropping it onto another; the resulting item has a small "double document" icon as illustrated below:

- Marnett, Alan (2010). "H-Index: What It Is and How to Find Yours".

- Harzing, Anne-Wil (2008). "Reflections on the H-Index".

- Hirsch, J. E. (15 November 2005). "An index to quantify an individual's scientific research output". PNAS 102 (46): 16569–16572.

- Petersen, A. M., Stanley, H. E., and Succi, S. (2011). "Statistical Regularities in the Rank-Citation Profile of Scientists". Nature Scientific Reports 181: 1–7.

- Williams, Antony (2011). "Calculating my H Index With Free Available Tools".

If you are using Clarivate's Web of Science database to assess an h-index, it is important to remember that Web of Science uses only those citations in the journals listed in Web of Science. However, a scholar’s work may be published in journals not covered by Web of Science. It is not possible to add these to the database’s citation report, so they do not count towards the h-index. Also, Web of Science only includes citations to journal articles (no books, chapters, working papers etc.). Moreover, Web of Science’s coverage of journals in the Social Sciences and the Humanities is relatively sparse. This is especially so for the Humanities.

Select the option "Cited Reference Search" (on top). Enter the person’s last name and first initial followed by an asterisk, e.g. Helluy S*. If the person always uses a second first name, include the second initial followed by an asterisk, e.g. Franklin KT*.

If other authors have the same name, it’s important that you omit their articles. You can use the check boxes to the left of each article to remove individual items that are not by the author you are searching. The “Refine Results” column on the left can also help by limiting to relevant “Organizations – Enhanced”, by “Research Areas”, by “Publication Years”.

When you've determined that all the articles in the list are by the author you're searching for, S. Helluy, click on “Create Citation Report” on the right. The h-index for S. Helluy will be displayed as well as other citation stats.

Notice the two bar charts that graph the number of items published each year and the number of citations received each year.

If you wish to see how the person's h-index has changed over a time period you can use the drop-down menus below to specify a range of years. Web of Science will then re-calculate the h-index using only those articles added for those particular years.

Contending that Hirsch's H-Index does not take into account the "age" of an article, Sidiropoulos et al. (2006) came up with a modification, i.e. the Contemporary H-Index. They argued that though some older scholars may have been "inactive" for a long period, their h-index may still be high since the h-index cannot decline. This may be considered as somewhat unfair to older, senior scholars who continue to produce (if one has published a lot and already has a high h-index it is more and more difficult to increase the index). It may also be seen as unfair to younger brilliant scholars who have had time only to publish a small number of significant articles and consequently have only a low h-index. Hirsch's h-index, it is argued, doesn't distinguish between the different productivity/citations of these different kinds of scholars. The solution of Sidiropoulos et al. is to give weightings to articles according to the year in which they're published. For example, "for an article published during the current year, its citations account four times. For an article published 4 year ago, its citations account only one time. For an article published 6 year ago, its citations account 4/6 times, and so on. This way, an old article gradually loses its 'value', even if it still gets citations." Thus, more emphasis is given to recent articles thereby favoring the h-index of scholars who are actively publishing.

One of the easiest ways to obtain someone's contemporary h-index, or "hc-index", is to use Harzing's Publish or Perish software.
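As a rough illustration of the age weighting quoted above, the sketch below multiplies each article's citation count by 4 divided by its age in years, and then applies the usual h-index rule to the weighted scores. It is a sketch under assumptions, not the exact published definition or the Publish or Perish implementation: treating current-year articles as age 1 (so that they count four times) is a choice made here to match the quoted examples, the published formula may differ in detail, and the function name and sample data are hypothetical.

```python
def contemporary_h_index(papers, current_year, gamma=4.0):
    """Age-weighted h-index sketch.

    `papers` is a list of (publication_year, citation_count) pairs.
    Assumed weighting: gamma / age, with current-year papers treated as age 1.
    """
    scores = []
    for publication_year, citations in papers:
        age = max(current_year - publication_year, 1)
        scores.append(gamma * citations / age)

    # Same rule as the ordinary h-index, applied to the weighted scores.
    scores.sort(reverse=True)
    hc = 0
    for position, score in enumerate(scores, start=1):
        if score >= position:
            hc = position
        else:
            break
    return hc


# Four 40-year-old papers with 20 citations each: ordinary h-index 4,
# but each weighted score is 4 * 20 / 40 = 2, so the contemporary index is 2.
old_papers = [(1984, 20), (1984, 20), (1984, 20), (1984, 20)]
print(contemporary_h_index(old_papers, current_year=2024))  # 2
```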


- Last Updated: Mar 20, 2024 11:33 AM


How to find your h-index on Google Scholar

- How to calculate your h-index using Google Scholar

- The name says it all: get more insights using Harzing's "Publish or Perish"

- Can you trust the h-index calculated with Google Scholar?

- Frequently asked questions about finding your h-index on Google Scholar

- Related articles

Google Scholar is a search engine with a special focus on academic papers and patents. It's limited in functionality compared to the major academic databases Scopus and Web of Science, but it is free, and you will easily find your way around because it is like doing a search on Google.

While Scopus and Web of Science limit their analyses to published journal articles, conference proceedings, and books, Google Scholar uses the entire internet as its source of data. As a result, the h-index reported by Google Scholar tends to be higher than the one found in the other databases.

➡️ What is the h-index?

Google Scholar can automatically calculate your h-index; you just need to set up a profile first. By default, Google Scholar profiles are public - allowing others to find you and see your publications and h-index. However, if you don't want to have such a public web presence, you can un-tick the "make my profile public" box on the final page of setting up your profile.

Once you have set up your profile, the h-index will be displayed in the upper right corner. Besides the classic h-index, Google also reports an i10-index along with the h-index. The i10-index is a simple measurement that shows how many of the author's papers have 10 or more citations.
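The i10-index is simple enough to express in one line. The following sketch only illustrates the counting rule described above; the function name and the sample citation counts are made up for the example.

```python
def i10_index(citation_counts):
    """Count how many papers have 10 or more citations."""
    return sum(1 for citations in citation_counts if citations >= 10)


print(i10_index([25, 10, 9, 0]))  # 2
```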

Google Scholar also has a special author search, where you can look up the author profiles of others. It will, however, only show results for scholars with public profiles, as well as those of historical scientists like Albert Einstein.

Google Scholar's extensive database might list publications that most academics would not include in an h-index analysis. So it might be useful to vet the papers before calculating the h-index. Scopus and Web of Science offer such functionality to some extent, but for Google Scholar it's not possible to do right in your browser. However, there is a free desktop application called Publish or Perish that allows you to do just that. It's available on Windows, and with some effort, you can also run it on macOS and Linux.

In order to check an author's h-index with Publish or Perish go to "Query > New Google Scholar Profile Query". Enter the scholar's name in the search box and click lookup. A window will open with potential matches. After selecting a scholar, the program will query Google Scholar for citation data and populate a list of papers, and present summary statistics on the right of this list. The list is particularly helpful because it can be used to exclude false positives.

In addition to the standard h-index, Publish or Perish also calculates Egghe's g-index, along with normalized and annual individual h-indexes. You can read more about how these are calculated in the Publish or Perish manual.

As illustrated in Stephen Hawking's Google Scholar h-index and also noted by others, the h-index in Google Scholar tends to be higher than in Scopus or Web of Science. This discrepancy is mainly attributed to the use of different data sources.

While Google Scholar grabs citation information from all over the internet, Scopus and Web of Science restrict their data sources to classic academic sources. Each approach is valid on its own. One could say that Google Scholar's h-index is more up-to-date as it also includes "early citations" from pre-prints before the article is actually published in an academic journal.

Also, with the rise of "altmetrics", there is generally a trend to measure the resonance of academic papers outside the strict academic world. However, since Google Scholar's approach is fully automatic and not subject to any review, it can also be manipulated rather easily.

For example, you could upload false scholarly papers that give unsupported citation credit, or add papers to the Google Scholar profile that were not even authored by the person in question. Yes, there is room for improvement, but Google Scholar's h-index is a great free alternative to subscription-based databases.

You can learn how to calculate your h-index using Scopus and Web of Science below:

➡️ How to use Scopus to calculate your h-index

➡️ How to use Web of Science to calculate your h-index

An h-index is a rough summary measure of a researcher’s productivity and impact. Productivity is quantified by the number of papers, and impact by the number of citations the researcher's publications have received.

Even though Scopus needs to crunch millions of citations to find the h-index, the look-up is pretty fast. Read our guide How to calculate your h-index using Scopus for further instructions.

Web of Science is a database that has compiled millions of articles and citations. This data can be used to calculate all sorts of bibliographic metrics including an h-index. Read our guide How to use Web of Science to calculate your h-index for further instructions.

The h-index is not something that needs to be calculated on a daily basis, but it's good to know where you are for several reasons. First, climbing the h-index ladder is something worth celebrating. But more importantly, the h-index is one of the measures funding agencies or the university's hiring committee calculate when you apply for a grant or a position. Given the often huge number of applications, the h-index is calculated in order to rank candidates and apply a pre-filter.

An h-index is calculated as the number of papers with a citation number ≥ h. An h-index of 3 hence means that the author has published at least three articles, of which each has been cited at least three times.

Measuring your research impact: H-Index


Other H-Index Resources

- An index to quantify an individual's scientific research output This is the original paper by J.E. Hirsch proposing and describing the H-index.

H-Index in Web of Science

The Web of Science uses the H-Index to quantify research output by measuring author productivity and impact.

H-Index = number of papers (h) with a citation number ≥ h.

Example: a scientist with an H-Index of 37 has 37 papers cited at least 37 times.

Advantages of the H-Index:

- Allows for direct comparisons within disciplines

- Measures quantity and impact by a single value.

Disadvantages of the H-Index:

- Does not give an accurate measure for early-career researchers

- Calculated by using only articles that are indexed in Web of Science. If a researcher publishes an article in a journal that is not indexed by Web of Science, the article as well as any citations to it will not be included in the H-Index calculation.

Tools for measuring H-Index:

- Web of Science

- Google Scholar

This short clip helps to explain the limitations of the H-Index for early-career scientists:


- Last Updated: Dec 7, 2022 1:18 PM

- URL: https://guides.library.cornell.edu/impact


Why I love the H-index

The H-index – a small number with a big impact. First introduced by Jorge E. Hirsch in 2005, it is a relatively simple way to calculate and measure the impact of a scientist (Hirsch, 2005). It divides opinion. You either love it or hate it. I happen to think the H-index is a superb tool to help assess scientific impact. Of course, people are always favourable towards metrics that make them look good. So let’s get this out into the open now, my H-index is 44 (I have 44 papers with at least 44 citations) and, yes, I’m proud of it! But my love of the H-index stems from a much deeper obsession with citations.

As an impressionable young graduate student, I saw my PhD supervisor regularly check his citations. A citation to a paper means that someone used your work or thought it was relevant to mention in the context of their own work. If a paper was never cited, and perhaps therefore also little read, was it worth doing the research in the first place? I still remember the excitement of the first citation I ever received and I still enjoy seeing new citations roll in.

The H index: what does it mean, how is it calculated and used?

The H-index measures the maximum number of papers N you have, all of which have at least N citations. So if you have 3 papers with at least 3 citations, but you don’t have 4 papers with at least 4 citations then your H-index is 3. Obviously, the H-index can only increase if you keep publishing papers and they are cited. But the higher your H-index gets, the harder it is to increase it.

One of the ways in which I use the H-index is when making tenure recommendations. By placing the candidate within the context of the H-indices of their departmental peers, I can judge the scientific output of the candidate within the context of the host institution. This is useful because it can be difficult to understand what is required at different host institutions from around the world. It would be negligent to only look at the H-index and so I use a range of other metrics as well, together with good old-fashioned scientific judgement of their contributions from reading their application and papers.

The m value

One of those extra metrics I use was also introduced by Hirsch, and is called m (Hirsch, 2005). M measures the slope or rate of increase of the H-index over time and is, in my view, a greatly underappreciated measure. To calculate the m-value, take the researcher's H-index and divide by the number of years since their first publication. This measure helps to normalise between those at the early or twilight stages of their career. As Hirsch did for physicists, I broadly categorise people in the field of computational biology according to their m-value in the table below. The boundaries correspond exactly to those used by Hirsch.

So post-docs with an m-value of greater than three are future science superstars and highly likely to have a stratospheric rise. If you can find one, hire them immediately!

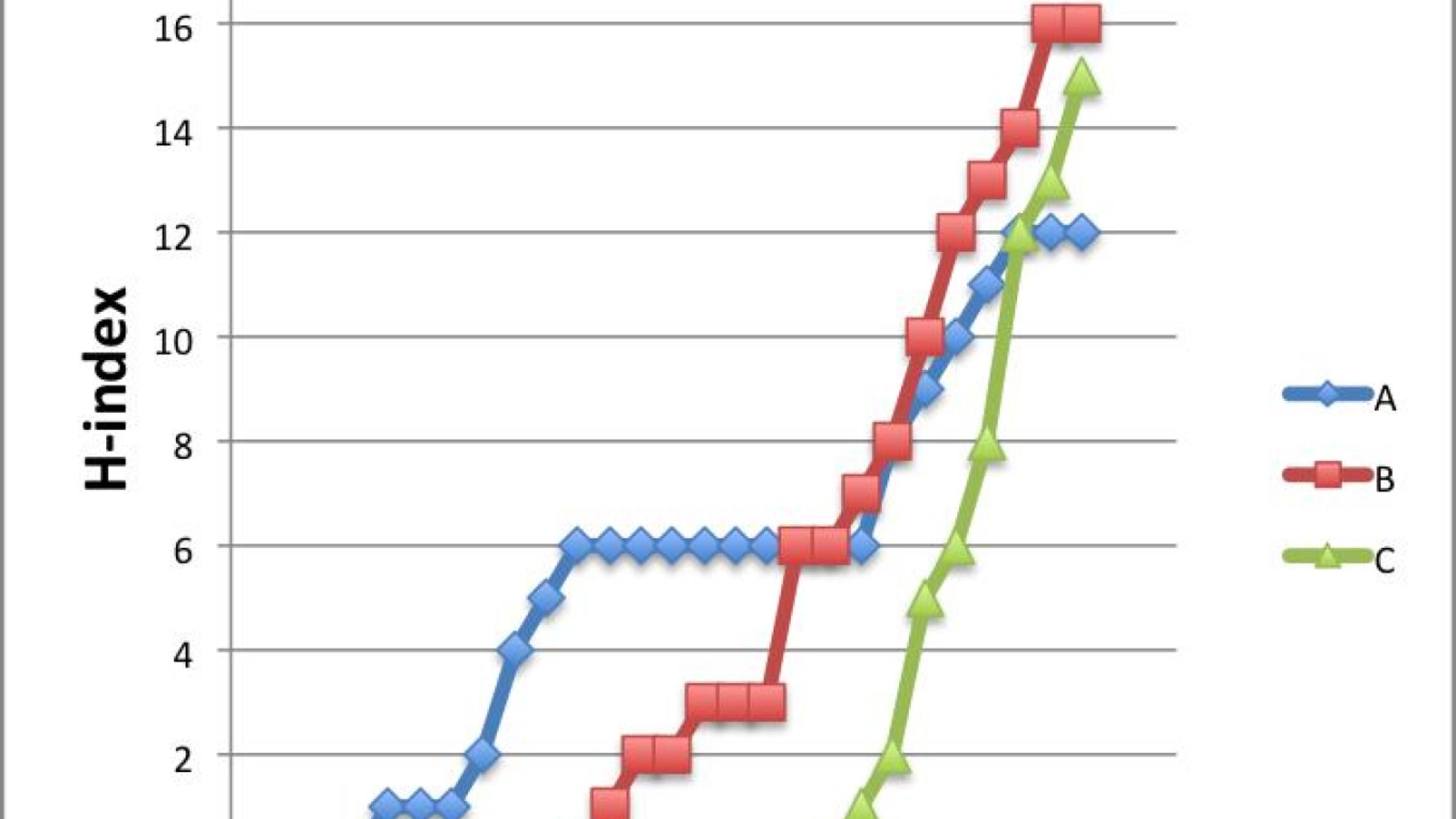

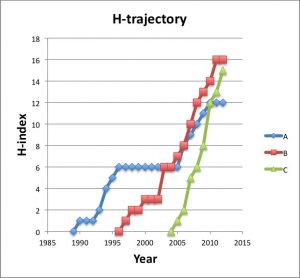
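The m-value calculation described above amounts to a single division. The sketch below is only an illustration: the function name, the guard against a zero-length career and the sample years are assumptions made for the example.

```python
def m_value(h_index, first_publication_year, current_year):
    """H-index divided by the number of years since the first publication."""
    years_active = current_year - first_publication_year
    if years_active <= 0:
        raise ValueError("first publication must be before the current year")
    return h_index / years_active


# Hypothetical researcher: H-index of 12, first paper published 24 years ago.
print(m_value(12, first_publication_year=2000, current_year=2024))  # 0.5
```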

The H-trajectory

The graph below shows the growth of the H-index for three scientists – A, B and C – who respectively have an H-index of 12, 15 and 16. I call these curves a researcher’s H-trajectory.

If we calculate their m-value, then we find that A has a value of 0.5, B has 0.94 and C a value of 1.67. So while each of these researchers has a similar H-index, their likelihood of future growth can be predicted based on past performance. Recently, Daniel Acuna and colleagues presented a sophisticated prediction of future H-index using several features, such as number of publications and the number in top journals (Acuna et al. 2012).
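An H-trajectory of this kind can be computed by recalculating the H-index at the end of each year, counting only the citations received up to that year. The following is a minimal sketch under assumptions: the input format (one list of citing years per paper), the function name and the sample data are hypothetical, and real data would have to be exported from a citation database and cleaned first.

```python
def h_trajectory(papers, start_year, end_year):
    """Return {year: H-index at the end of that year}.

    `papers` is a list with one entry per paper; each entry is the list of
    years in which that paper received a citation.
    """
    trajectory = {}
    for year in range(start_year, end_year + 1):
        # Citations each paper has accumulated up to and including this year.
        counts = sorted(
            (sum(1 for cited_year in cited_years if cited_year <= year)
             for cited_years in papers),
            reverse=True,
        )
        h = 0
        for position, count in enumerate(counts, start=1):
            if count >= position:
                h = position
            else:
                break
        trajectory[year] = h
    return trajectory


# Hypothetical researcher with three papers.
papers = [[2019, 2020, 2021], [2020, 2021], [2021]]
print(h_trajectory(papers, 2019, 2021))  # {2019: 1, 2020: 1, 2021: 2}
```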

As any serious citation gazer knows, the H-index has numerous potential problems. For example, researcher A, who spent time in industry, has fewer publications; the H-index of people with names in non-English alphabets or with very common names can be difficult to calculate correctly; and different fields have widely differing authorship, publication and citation patterns. But even considering all these problems, I believe the H-index is here to stay. My experience is that ranking scientists by H-index and m-value correlates very well with my own personal judgements about the impact of scientists that I know and indeed with the positions that those scientists hold in universities around the world.

Alex Bateman is currently a computational biologist at the Wellcome Trust Sanger Institute where he has led the Pfam database project. On November 1st, he takes up a new role as Head of Protein Sequence Resources at the EMBL-European Bioinformatics Institute (EMBL-EBI).

J.E. Hirsch (2005). An index to quantify an individual’s scientific research output. Proc. Natl. Acad. Sci. 102, 16569-16572.

D.E. Acuna, S. Allesina & K.P. Kording (2012). Predicting scientific success. Nature 489, 201-202.

The problem with the H factor is that it is, to a considerable extent, a measure of how old you are. The m index is supposed to correct this but it can distort things by assuming a linearity that just isn’t there in the development of a scientist.

The alternative I propose is the H5Y factor. It is the H factor, but calculated only on citations received in the past five years. This equalizes the playing field and my guess is that it is a much better predictor of performance for the next five years than H or m. Who cares what you have published thirty years ago? (unless it is still being cited, of course!)

I agree with Constantin, and would add that the m-index is particularly unfair to those who take early career breaks, since it takes several years before the penalty of having a gap after their first few papers starts to become trivially small.

Interestingly, Google Scholar’s “My Citations” pages (e.g. see my profile link for a not-so-random example!) do calculate what Constantin proposes, an H-index computed from the last five years’ citations, though they don’t call it H5Y. Personally, though I agree that this is better than H, I still think it’s a rather biased measure of quality, which more strongly reflects quantity or length of active career.

Funnily enough, I think Google are already using a better measure (which they call H5), but only for their journal rankings, not their author profiles; see e.g. scholar.google.co.uk/citations?view_op=top_venues. This measure is the H-index for work published in the last five years, rather than just cited in the last five years.
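The difference between the two five-year variants discussed in these comments can be sketched as follows. This is an illustration only: the data layout (a publication year plus a list of citation years per paper), the function names, and the exact window boundaries are assumptions, not how Google Scholar computes its figures.

```python
from typing import Dict, List


def h_index(citation_counts: List[int]) -> int:
    """Largest h such that h papers each have at least h citations."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def h_cited_in_window(papers: List[Dict], current_year: int, window: int = 5) -> int:
    """'H5Y' style: all papers count, but only citations received in the window."""
    first_year = current_year - window + 1
    counts = [
        sum(1 for year in paper["citation_years"] if first_year <= year <= current_year)
        for paper in papers
    ]
    return h_index(counts)


def h_published_in_window(papers: List[Dict], current_year: int, window: int = 5) -> int:
    """'H5' style: only papers published in the window count, with all their citations."""
    first_year = current_year - window + 1
    counts = [
        len(paper["citation_years"])
        for paper in papers
        if first_year <= paper["published"] <= current_year
    ]
    return h_index(counts)


# Hypothetical publication record.
papers = [
    {"published": 1995, "citation_years": [2010, 2011, 2012]},  # old, still cited
    {"published": 2000, "citation_years": [2001, 2002, 2003]},  # old, no longer cited
    {"published": 2010, "citation_years": [2011, 2012]},
    {"published": 2011, "citation_years": [2012]},
]

print(h_cited_in_window(papers, current_year=2012))      # 2
print(h_published_in_window(papers, current_year=2012))  # 1
```

In this made-up record the 1995 paper still contributes to the cited-in-window figure because it is still being cited, but it is excluded from the published-in-window figure.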

I think it would be great if Google Citations profiles showed H5 for authors, but frustratingly, Google’s FAQ indicates that they are opposed to adding new metrics: http://scholar.google.com/intl/en/scholar/citations.html#citations. But perhaps Scopus, ResearcherID, Academia.edu, ResearchGate or similar will add H5 in the future…

Most of the H-trajectory plots that I have created for active scientists do show quite a linear trend. I only showed three in my graph above, but researcher A was the only significant deviation that I found. Creating these H-trajectory plots was not as easy as I thought it was going to be. Downloading the full citation data is time-consuming given the limits imposed by Scopus and ISI. I also found that the underlying data for citations was not nearly as clean as I expected.

I agree that it is important to be able to take account of career breaks so that we do not penalise researchers unfairly. Being able to plot the H-trajectory might help spot these. But as I mentioned in the article, these metrics should only be used as part of a wider evaluation of an individual’s outputs. I tend to agree with the Google view that a proliferation of metrics could create more confusion than it resolves. But H5-like measures seem like another reasonable way to normalise out the length-of-career issue.

I find Google Scholar far better than ISI. It is updated more regularly and gives better representation to publications in non-English journals. I would choose it over others to calculate any sort of index.

Ok, but why would the h-index given by an online calculator ever be higher than the number of publications?
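By Hirsch's definition it cannot be: h is the largest number such that h papers each have at least h citations, so it is capped by the number of papers. A calculator reporting more than that would point to a problem with its input data rather than with the metric. A minimal sketch, with a hypothetical function name and made-up citation counts:

```python
def h_index(citation_counts):
    """Largest h such that h papers each have at least h citations."""
    ranked = sorted(citation_counts, reverse=True)
    # Once a paper's citation count drops below its rank, every later paper does too,
    # so counting the papers that pass the test gives h directly.
    return sum(1 for rank, count in enumerate(ranked, start=1) if count >= rank)


print(h_index([10, 8, 5, 4, 3]))  # 4: four papers with at least 4 citations each
print(h_index([50, 40, 30]))      # 3: capped by the number of papers
```

However heavily the three papers in the second example are cited, the result cannot go above 3.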

I agree with Alex. I had the same experience, whether recruiting post-docs and young group leaders or evaluating tenure (and even, in one case, the head of a large institute). After 3, 10, 20 or 30 years of research, the h-index and m numbers are very good for evaluating not only brilliance at one point, but also steady success. You do not hire the genius who had only one magic paper and nothing else significant. The likelihood that the magic happens again is very low. You have to compare with peers, though. Having been an experimental neuroscientist and a computational modeller, I know that the citation patterns are quite different. However, when using the H-index to compare people, we are generally in a situation where we compare similar scientists.

All that, of course, is a way to quickly sort candidates into A, B or C lists and to uncover potential problems (100 publications and an h-index of 10). After that step, you need to evaluate the candidates more attentively, using interviews etc. But interestingly, you very rarely read the publications. In the first screen you have too many of them, and in the second you do not need them anymore.

(and I am “excellent” yeah! Not “stellar” though. One delusion I have to get rid of 😉 )

Interesting logic exercises. What about superstars who translate their work into patents/products and cannot publish due to company confidentiality, company goals, etc? Patents are not cited anywhere close to publications. Organic journals often have low impact factors and low citation rates as the animal studies in higher impact journals always overshadow the original synthetic papers. A good friend of mine has an H-index of only 7 but has designed a blockbuster drug (and several other promising leads)–I would trade my inflated H-index (product of a hot, speculative field) in a minute to have his stock options–oh and that drug that helps tens of thousands every day. Sorry to burst that bubble, H-indexers. Used to be a believer but I have now seen the light. Yep–and I would also take that “flash in the pan” invention of PCR (and the Nobel) over 50 years of high citations. One flash can have a greater impact than a thousand scientists over a thousand years.

Thanks for the helpful discussion. I just googled “what is a good h-index” and yours was the first thing to come up. I think as with any single statistic it has limitations, but overall is a decent reflection of output, especially for comparison with similar applicants for a position.

I’d convert your m index to h-index/(FTE years working since first paper), which would take account of breaks and part-time working. This would particularly help women remain competitive in the context of extended periods of part-time working. So my 10 years since 1st paper would turn into 10-1-(5*0.6) = 6, so my m index is 12/6 = 2 instead of 12/10. Woo!
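The adjustment above can be sketched as a small calculation. The function names are hypothetical, and reading 10-1-(5*0.6) as one year fully away plus five years in which 0.6 of each year was not worked is an assumption about the commenter's numbers.

```python
def fte_years(calendar_years, time_off):
    """Years actually worked: calendar years minus time not worked.

    `time_off` is a list of (years, fraction_not_worked) pairs.
    """
    lost = sum(years * fraction for years, fraction in time_off)
    return calendar_years - lost


def m_value(h_index, years):
    """H-index divided by a career length in years."""
    return h_index / years


# Assumed reading of the comment: 10 calendar years, 1 year fully off,
# and 5 years with 0.6 of each year not worked.
worked = fte_years(10, [(1, 1.0), (5, 0.6)])

print(worked)               # 6.0
print(m_value(12, worked))  # 2.0, FTE-adjusted
print(m_value(12, 10))      # 1.2, unadjusted
```

The only change from the usual m is the denominator, so any convention for counting FTE would need to be applied the same way to everyone being compared.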

Anyway I don’t think anything will stop me checking my citations obsessively and google citations is the easiest place I’ve found to keep my publications organised.

Amen. Coming from someone who was stuck too long in a company in which publishing in the open domain was a big no-no. H-index is one number, but it is not _the_ number. Neither are Google’s variations, and so forth. For example, IQ is another number; it has its uses, but it clearly isn’t _the_ number either. Me not like metrics so much.

I like the m-value, but it has the unfortunate effect of penalising the early starter. For example, someone who publishes a paper from their Hons thesis may be penalised by 3-4 years in the denominator producing their m-value when compared with someone starting publishing in the third or fourth year of their PhD. So I would take Kate’s idea further, and use FTE as THE denominator when calculating m, instead of years since first paper. This could include time spent as a PhD student, or not, as long as it was standardised.

Google Scholar gives exactly this statistic under the standard h-value.

One problem with the H or m index can be how many people are actively involved in the research in a particular field. For example, there are only ~186 laboratories in the whole world working on my previous field, Candida albicans. But right now I am working on cancer biology. A huge number of people are working in that field, hence the h-index will increase dramatically.

I think that is a good suggestion. It is important to take account of career breaks when judging people’s scientific output. It’s not perfect to just subtract the break length or some combination of time. Even during a career break your pre-break papers will still be cited, potentially increasing your H-index. But to a first approximation what you suggest makes good sense. It would be interesting to look at the H-trajectories of people who have taken a career break to see how it affects growth of H-index.

Yes, that is a good point. Publishing a paper during your degree should be seen as a strong positive indicator in my opinion and, as you say, should not penalise the person. OK, so let’s use years of FTE employment as the denominator.

It is best to use the H-index only for comparing people within the same field. I’m not sure that moving field is any guarantee of increasing H-index, but it will be easier for your H-index to grow in the larger field. I guess the smart thing to do is to start in cancer biology then move to the specialist field 😉

I don’t like the h-index when it is used to rank journals, as it basically gives a statistic about the best papers in that journal. For example, if Nature has a 5-year h-index of 300, it only says something about those 300 papers and nothing about the thousands of other papers they published. Because of that, PLOS ONE has a very high h-index, I think ranked top 30, but that just reflects the number of elite papers being published there, not the tens of thousands of junk papers it publishes.

My problem with the h-index for individuals is that it does not differentiate first author papers from contributing author papers. A tech could be put on 50 high impact papers over 5-6 years for technical contributions because he is in a super high impact lab. However, a postdoc in such a lab would have far fewer papers because he would be focusing on making first author papers. In the end, the tech would have a higher h-index. Is that a fair assessment? Also, that tech could be a postdoc in name but doing tech work. Would such a technician postdoc have an advantage with employers who only look at h-index?

The extreme scenarios given to discount the H-index can be absurd: a) A tech having 50 papers! I do not know a tech that is put on 50 papers in 5 years in any lab. If such a tech exists, then he/she is a superstar tech and needs to be celebrated. b) Why penalize someone who publishes in their PhD with an m index? Well, you forget that if someone publishes early, then their h-index will increase because their papers will start collecting citations early, so even if the denominator is increased by a few years, isn’t the numerator also increased? c) In chemistry, there was a table of the top 500, based on h-index. All on that table were superstars by other metrics, and all the recent Nobel prize winners were on that list. There was not a single name on that list who was not famous. I agree that one cannot use the h-index to differentiate between an h of, say, 15 and 20. But if someone has an h of, say, 60 and the other has 30, there is usually night and day between them. The h is here to stay.

None of the above addresses key weaknesses of the h-index – self citation and citation rings.

If you work in large collaborations and projects it is *easy* for *many* people to rack up large numbers of citations (and h-indices) by citing each other’s papers and by simply appearing on lots of papers for which they have done little work. At the very least I believe citations should be a conserved quantity: one citation is one citation, and if it is to a paper with 100 authors then it should not add 1 citation to *each* of those authors’ records, it should add 0.01 (or some other agreed fraction dictated by author order such that Sigma (fraction) = 1).

Then self-citations, whether in the form of you citing your own paper or of any paper on which a co-author appears citing that paper, should not count.

This would cut many h-indices down to size and be a much truer reflection of an individual’s contribution.

What’s your normalised (by number of authors) h-index, excluding self-citations?
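The normalisation proposed in this comment can be sketched as follows. The data layout and function name are hypothetical, credit is split equally among authors (the comment also allows an author-order weighting), and a citation is treated as a self-citation when the citing paper shares any author with the cited one.

```python
def normalised_h_index(papers):
    """H-index on per-author citation credit, excluding self-citations.

    Each paper is a dict with "authors" (list of names, at least one) and
    "cited_by" (list of author lists, one per citing paper).
    """
    scores = []
    for paper in papers:
        authors = set(paper["authors"])
        # Drop citations from any paper sharing an author with this one.
        external = [citing for citing in paper["cited_by"] if not authors & set(citing)]
        # Equal split: each citation is worth 1 / (number of authors).
        scores.append(len(external) / len(authors))
    ranked = sorted(scores, reverse=True)
    return sum(1 for rank, score in enumerate(ranked, start=1) if score >= rank)


# Hypothetical record for author "A".
papers = [
    {"authors": ["A"], "cited_by": [["X"], ["Y"], ["A", "Z"]]},                    # 2 / 1 = 2.0
    {"authors": ["A", "B"], "cited_by": [["X"], ["Y"], ["Z"], ["W"], ["B"]]},      # 4 / 2 = 2.0
    {"authors": ["A", "B", "C", "D"], "cited_by": [["X"], ["Y"], ["Z"]]},          # 3 / 4 = 0.75
]

print(normalised_h_index(papers))  # 2
```

With raw counts (3, 5 and 3 citations) the same record would give an H-index of 3, so in this made-up case the normalisation lowers it by one.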

Our lab publishes over 40 papers a year and has three techs contributing to almost every paper for technical work. They have a higher h than the postdocs.

But what is worse are PIs who do no work and don’t even read the paper but are still on the author list… apparently a common occurrence for high energy physics consortia.

There should be a metric that weighs author position. Typically the first author does all the work. So first authorship and final authorship should carry more weight than other authorships. Personally, I think a name appearing after the third author and before the final author should not carry any weight.