- Published: 29 May 2014

Points of significance

Designing comparative experiments

Martin Krzywinski & Naomi Altman

Nature Methods volume 11, pages 597–598 (2014)


- Research data

- Statistical methods

Good experimental designs limit the impact of variability and reduce sample-size requirements.


In a typical experiment, the effect of different conditions on a biological system is compared. Experimental design is used to identify data-collection schemes that achieve sensitivity and specificity requirements despite biological and technical variability, while keeping time and resource costs low. In the next series of columns we will use statistical concepts introduced so far and discuss design, analysis and reporting in common experimental scenarios.

In experimental design, the researcher-controlled independent variables whose effects are being studied (e.g., growth medium, drug and exposure to light) are called factors. A level is a subdivision of the factor and measures the type (if categorical) or amount (if continuous) of the factor. The goal of the design is to determine the effect and interplay of the factors on the response variable (e.g., cell size). An experiment that considers all combinations of $N$ factors, each with $n_i$ levels, is a factorial design of type $n_1 \times n_2 \times \dots \times n_N$. For example, a 3 × 4 design has two factors with three and four levels, respectively, and examines all 12 combinations of factor levels. We will review statistical methods in the context of a simple experiment to introduce concepts that apply to more complex designs.
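As a small illustration of the 3 × 4 example, the sketch below enumerates every condition of a factorial design. The factor names and level labels are hypothetical and chosen only for this example.

```python
from itertools import product

# Hypothetical factors and levels for a 3 x 4 design (illustrative names only).
factors = {
    "growth_medium": ["M1", "M2", "M3"],
    "drug_dose": ["none", "low", "mid", "high"],
}

# A factorial design uses every combination of one level per factor.
conditions = list(product(*factors.values()))

for medium, dose in conditions:
    print(medium, dose)

print(len(conditions), "conditions in total")
```

The number of conditions is the product of the level counts, which is why adding factors or levels quickly increases the cost of a full factorial experiment.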

Suppose that we wish to measure the cellular response to two different treatments, A and B, measured by fluorescence of an aliquot of cells. This is a single-factor (treatment) design with three levels (untreated, A and B). We will assume that the fluorescence (in arbitrary units) of an aliquot of untreated cells has a normal distribution with $\mu = 10$ and that the real effect sizes of treatments A and B are $d_A = 0.6$ and $d_B = 1$ (A increases response by 6% to 10.6 and B by 10% to 11). To simulate variability owing to biological variation and measurement uncertainty (e.g., in the number of cells in an aliquot), we will use $\sigma = 1$ for the distributions. For all tests and calculations we use $\alpha = 0.05$.

We start by assigning samples of cell aliquots to each level ( Fig. 1a ). To improve the precision (and power) in measuring the mean of the response, more than one aliquot is needed 1 . One sample will be a control (considered a level) to establish the baseline response, and capture biological and technical variability. The other two samples will be used to measure response to each treatment. Before we can carry out the experiment, we need to decide on the sample size.

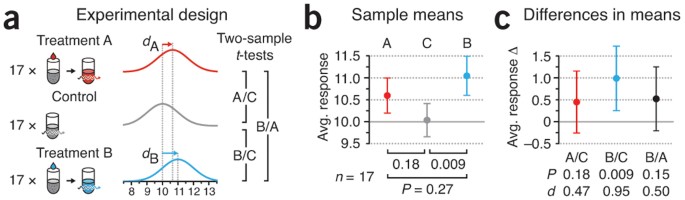

(a) Two treated samples (A and B) with $n = 17$ are compared to a control (C) with $n = 17$ and to each other using two-sample t-tests. (b) Simulated means and P values for samples in a. Values are drawn from normal populations with $\sigma = 1$ and mean response of 10 (C), 10.6 (A) and 11 (B). (c) The preferred reporting method of results shown in b, illustrating difference in means with CIs, P values and effect size, $d$. All error bars show 95% CI.

We can draw on our earlier discussion of power 1 to suggest $n$. How large an effect size ($d$) do we wish to detect and at what sensitivity? Arbitrarily small effects can be detected with a large enough sample size, but this makes for a very expensive experiment. We will need to balance our decision based on what we consider to be a biologically meaningful response and the resources at our disposal. If we are satisfied with an 80% chance (the lowest power we should accept) of detecting a 10% change in response, which corresponds to the real effect of treatment B ($d_B = 1$), the two-sample t-test requires $n = 17$. At this $n$ value, the power to detect $d_A = 0.6$ is 40%. Power is easily computed with software; typical inputs are the difference in means ($\Delta\mu$), the standard deviation estimate ($\sigma$), $\alpha$ and the number of tails (we recommend always using two-tailed calculations).
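The quoted power values can be checked approximately by simulation. The sketch below is not the authors' calculation: it is a Monte Carlo estimate using only the Python standard library, and it assumes a tabulated two-tailed critical value of Student's t for 32 degrees of freedom (2.0369), which is valid only for $n = 17$ per group. The function names, seed and number of simulations are arbitrary choices.

```python
import random
from statistics import mean, variance

# Assumed table value: two-tailed 5% critical t for 2 * (17 - 1) = 32 d.f.
T_CRIT_DF32 = 2.0369


def two_sample_t(x, y):
    """Equal-variance two-sample t statistic for mean(y) - mean(x)."""
    n, m = len(x), len(y)
    pooled_var = ((n - 1) * variance(x) + (m - 1) * variance(y)) / (n + m - 2)
    return (mean(y) - mean(x)) / (pooled_var * (1 / n + 1 / m)) ** 0.5


def simulated_power(effect, n=17, mu=10.0, sigma=1.0, n_sim=4000, seed=1):
    """Fraction of simulated experiments in which |t| exceeds the critical value."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sim):
        control = [rng.gauss(mu, sigma) for _ in range(n)]
        treated = [rng.gauss(mu + effect * sigma, sigma) for _ in range(n)]
        if abs(two_sample_t(control, treated)) > T_CRIT_DF32:
            hits += 1
    return hits / n_sim


power_b = simulated_power(1.0)  # treatment B, d = 1
power_a = simulated_power(0.6)  # treatment A, d = 0.6
print(f"estimated power for d = 1.0: {power_b:.2f}")
print(f"estimated power for d = 0.6: {power_a:.2f}")
```

The estimates should land near the 80% and 40% figures in the text, with some Monte Carlo noise. Dedicated power-analysis software gives the values directly from the noncentral t distribution.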

Based on the design in Figure 1a, we show the simulated sample means and their 95% confidence intervals (CIs) in Figure 1b. The 95% CI captures the mean of the population 95% of the time; we recommend using it to report precision. Our results show a significant difference between B and control (referred to as B/C, P = 0.009) but not for A/C (P = 0.18). Paradoxically, testing B/A does not return a significant outcome (P = 0.15). Whenever we perform more than one test we should adjust the P values 2 . As we only have three tests, the adjusted B/C P value is still significant, $P' = 3P = 0.028$. Although commonly used, the format used in Figure 1b is inappropriate for reporting our results: sample means, their uncertainty and P values alone do not present the full picture.
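The adjustment used here multiplies each P value by the number of tests, which is the Bonferroni correction. A minimal sketch follows, using the rounded P values quoted in the text. The function name is hypothetical. With the rounded input 0.009 the adjusted B/C value comes out as 0.027; the 0.028 reported above presumably reflects the unrounded P value, which is an assumption on our part.

```python
def bonferroni(p_values):
    """Multiply each P value by the number of tests, capping the result at 1."""
    m = len(p_values)
    return {name: min(1.0, m * p) for name, p in p_values.items()}


# Rounded P values as quoted in the text.
p_raw = {"B/C": 0.009, "A/C": 0.18, "B/A": 0.15}
p_adj = bonferroni(p_raw)

for name, p in p_adj.items():
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{name}: adjusted P = {p:.3f} ({verdict})")
```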

A more complete presentation of the results ( Fig. 1c ) combines the magnitude with uncertainty (as CI) in the difference in means. The effect size, d , defined as the difference in means in units of pooled standard deviation, expresses this combination of measurement and precision in a single value. Data in Figure 1c also explain better that the difference between a significant result (B/C, P = 0.009) and a nonsignificant result (A/C, P = 0.18) is not always significant (B/A, P = 0.15) 3 . Significance itself is a hard boundary at P = α , and two arbitrarily close results may straddle it. Thus, neither significance itself nor differences in significance status should ever be used to conclude anything about the magnitude of the underlying differences, which may be very small and not biologically relevant.

CIs explicitly show how close we are to making a positive inference and help assess the benefit of collecting more data. For example, the CIs of A/C and B/C closely overlap, which suggests that at our sample size we cannot reliably distinguish between the response to A and B (Fig. 1c). Furthermore, given that the CI of A/C just barely crosses zero, it is possible that A has a real effect that our test failed to detect. More information about our ability to detect an effect can be obtained from a post hoc power analysis, which assumes that the observed effect is the same as the real effect (normally unknown), and uses the observed difference in means and pooled variance. For A/C, the difference in means is 0.48 and the pooled s.d. is $s_p = 1.03$, which yields a post hoc power of 27%; we have little power to detect this difference. Other than increasing sample size, how could we improve our chances of detecting the effect of A?
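The quantities recommended for reporting can be computed from the summary statistics quoted for A/C (difference in means 0.48, pooled s.d. 1.03, n = 17 per sample). This is a sketch under the assumption of an equal-variance two-sample t-test and a tabulated critical value for 32 degrees of freedom; the function name is hypothetical.

```python
# Assumed table value: two-tailed 5% critical t for 32 d.f. (n = 17 per sample).
T_CRIT_DF32 = 2.0369


def summarize_difference(diff, pooled_sd, n, t_crit=T_CRIT_DF32):
    """Effect size, t statistic and 95% CI for a difference of two sample means."""
    se = pooled_sd * (2 / n) ** 0.5  # standard error of the difference
    half_width = t_crit * se
    return {
        "d": diff / pooled_sd,       # effect size in units of pooled s.d.
        "t": diff / se,
        "ci": (diff - half_width, diff + half_width),
    }


ac = summarize_difference(diff=0.48, pooled_sd=1.03, n=17)
print(f"effect size d = {ac['d']:.2f}")
print(f"t = {ac['t']:.2f}")
print(f"95% CI = ({ac['ci'][0]:.2f}, {ac['ci'][1]:.2f})")
```

The interval includes zero, which agrees with the nonsignificant A/C result, while the effect size shows that the observed difference is about half a standard deviation.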

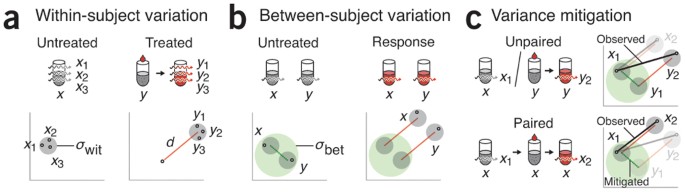

Our ability to detect the effect of A is limited by variability in the difference between A and C, which has two random components. If we measure the same aliquot twice, we expect variability owing to technical variation inherent in our laboratory equipment and variability of the sample over time (Fig. 2a). This is called within-subject variation, $\sigma_{\mathrm{wit}}$. If we measure two different aliquots with the same factor level, we also expect biological variation, called between-subject variation, $\sigma_{\mathrm{bet}}$, in addition to the technical variation (Fig. 2b). Typically there is more biological than technical variability ($\sigma_{\mathrm{bet}} > \sigma_{\mathrm{wit}}$). In an unpaired design, the use of different aliquots adds both $\sigma_{\mathrm{wit}}$ and $\sigma_{\mathrm{bet}}$ to the measured difference (Fig. 2c). In a paired design, which uses the paired t-test 4 , the same aliquot is used and the impact of biological variation ($\sigma_{\mathrm{bet}}$) is mitigated (Fig. 2c). If differences in aliquots ($\sigma_{\mathrm{bet}}$) are appreciable, variance is markedly reduced (to within-subject variation) and the paired test has higher power.

(a) Limits of measurement and technical precision contribute to $\sigma_{\mathrm{wit}}$ (gray circle) observed when the same aliquot is measured more than once. This variability is assumed to be the same in the untreated and treated condition, with effect $d$ on aliquot $x$ and $y$. (b) Biological variation gives rise to $\sigma_{\mathrm{bet}}$ (green circle). (c) Paired design uses the same aliquot for both measurements, mitigating between-subject variation.

The link between σ bet and σ wit can be illustrated by an experiment to evaluate a weight-loss diet in which a control group eats normally and a treatment group follows the diet. A comparison of the mean weight after a month is confounded by the initial weights of the subjects in each group. If instead we focus on the change in weight, we remove much of the subject variability owing to the initial weight.

If we write the total variance as $\sigma^2 = \sigma_{\mathrm{wit}}^2 + \sigma_{\mathrm{bet}}^2$, then the variance of the observed quantity in Figure 2c is $2\sigma^2$ for the unpaired design but $2\sigma^2(1 - \rho)$ for the paired design, where $\rho = \sigma_{\mathrm{bet}}^2 / \sigma^2$ is the correlation coefficient (intraclass correlation). The relative difference is captured by $\rho$ of two measurements on the same aliquot, which must be included because the measurements are no longer independent. If we ignore $\rho$ in our analysis, we will overestimate the variance and obtain overly conservative P values and CIs. In the case where there is no additional variation between aliquots, there is no benefit to using the same aliquot: measurements on the same aliquot are uncorrelated ($\rho = 0$) and variance of the paired test is the same as the variance of the unpaired. In contrast, if there is no variation in measurements on the same aliquot except for the treatment effect ($\sigma_{\mathrm{wit}} = 0$), we have perfect correlation ($\rho = 1$). Now, the difference measurement derived from the same aliquot removes all the noise; in fact, a single pair of aliquots suffices for an exact inference. Practically, both sources of variation are present, and it is their relative size, reflected in $\rho$, that determines the benefit of using the paired t-test.
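The two variance expressions can be checked numerically. The sketch below assumes a simple additive model in which each measurement is an aliquot-specific offset (between-subject s.d.) plus independent measurement noise (within-subject s.d.); a constant treatment effect would shift the difference but not its variance, so it is omitted. Function and variable names are illustrative.

```python
import random
from statistics import variance


def difference_variances(rho, sigma2=1.0, n_pairs=20000, seed=2):
    """Sample variance of paired and unpaired differences for a given rho."""
    rng = random.Random(seed)
    sd_bet = (rho * sigma2) ** 0.5        # between-subject s.d.
    sd_wit = ((1 - rho) * sigma2) ** 0.5  # within-subject s.d.

    def measure(aliquot_offset):
        # One measurement = aliquot offset + independent technical noise.
        return aliquot_offset + rng.gauss(0, sd_wit)

    paired, unpaired = [], []
    for _ in range(n_pairs):
        a = rng.gauss(0, sd_bet)  # offset of one aliquot
        b = rng.gauss(0, sd_bet)  # offset of a different aliquot
        paired.append(measure(a) - measure(a))    # same aliquot measured twice
        unpaired.append(measure(a) - measure(b))  # two different aliquots
    return variance(paired), variance(unpaired)


paired_var, unpaired_var = difference_variances(rho=0.5)
print(f"paired:   {paired_var:.2f}  (theory 2*sigma^2*(1 - rho) = 1.0)")
print(f"unpaired: {unpaired_var:.2f}  (theory 2*sigma^2 = 2.0)")
```

In the paired case the aliquot offset cancels in the difference, leaving only twice the within-subject variance, which equals $2\sigma^2(1 - \rho)$.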

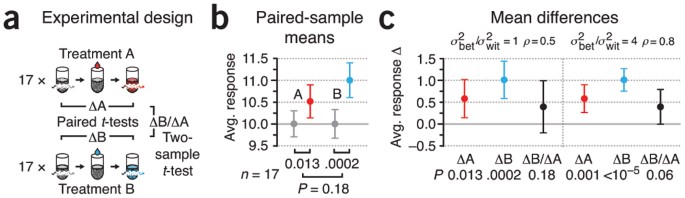

We can see the improved sensitivity of the paired design (Fig. 3a) in decreased P values for the effects of A and B (Fig. 3b versus Fig. 1b). With the between-subject variance mitigated, we now detect an effect for A (P = 0.013) and an even lower P value for B (P = 0.0002) (Fig. 3b). Testing the difference between ΔA and ΔB requires the two-sample t-test because we are testing different aliquots, and this still does not produce a significant result (P = 0.18). When reporting paired-test results, sample means (Fig. 3b) should never be shown; instead, the mean difference and confidence interval should be shown (Fig. 3c). The reason for this comes from our discussion above: the benefit of pairing comes from reduced variance because $\rho > 0$, something that cannot be gleaned from Figure 3b. We illustrate this in Figure 3c with two different sample simulations with the same sample mean and variance but different correlation, achieved by changing the relative amount of $\sigma_{\mathrm{bet}}^2$ and $\sigma_{\mathrm{wit}}^2$. When the component of biological variance is increased, $\rho$ is increased from 0.5 to 0.8, total variance in difference in means drops and the test becomes more sensitive, reflected by the narrower CIs. We are now more certain that A has a real effect and have more reason to believe that the effects of A and B are different, evidenced by the lower P value for ΔB/ΔA from the two-sample t-test (0.06 versus 0.18; Fig. 3c). As before, P values should be adjusted with multiple-test correction.

(a) The same $n = 17$ sample is used to measure the difference between treatment and background ($\Delta A = A_{\mathrm{after}} - A_{\mathrm{before}}$, $\Delta B = B_{\mathrm{after}} - B_{\mathrm{before}}$), analyzed with the paired t-test. Two-sample t-test is used to compare the difference between responses (ΔB versus ΔA). (b) Simulated sample means and P values for measurements and comparisons in a. (c) Mean difference, CIs and P values for two variance scenarios, $\sigma_{\mathrm{bet}}^2 / \sigma_{\mathrm{wit}}^2$ of 1 and 4, corresponding to $\rho$ of 0.5 and 0.8. Total variance was fixed: $\sigma_{\mathrm{bet}}^2 + \sigma_{\mathrm{wit}}^2 = 1$. All error bars show 95% CI.

The paired design is a more efficient experiment. Fewer aliquots are needed: 34 instead of 51, although now 68 fluorescence measurements need to be taken instead of 51. If we assume $\sigma_{\mathrm{wit}} = \sigma_{\mathrm{bet}}$ ($\rho = 0.5$; Fig. 3c), we can expect the paired design to have a power of 97%. This power increase is highly contingent on the value of $\rho$. If $\sigma_{\mathrm{wit}}$ is appreciably larger than $\sigma_{\mathrm{bet}}$ (i.e., $\rho$ is small), the power of the paired test can be lower than for the two-sample variant. This is because total variance remains relatively unchanged ($2\sigma^2(1 - \rho) \approx 2\sigma^2$) while the critical value of the test statistic can be markedly larger (particularly for small samples) because the number of degrees of freedom is now $n - 1$ instead of $2(n - 1)$. If the ratio of $\sigma_{\mathrm{bet}}^2$ to $\sigma_{\mathrm{wit}}^2$ is 1:4 ($\rho = 0.2$), the paired test power drops from 97% to 86%.
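The dependence of paired-test power on the correlation can be explored with a simulation. This sketch assumes the same additive model (aliquot offset plus measurement noise, total variance 1), an effect of 1 as for treatment B, n = 17 and a tabulated two-tailed critical t value for 16 degrees of freedom (2.1199). It is an approximate check, not the authors' calculation, and the names and seed are arbitrary.

```python
import random
from statistics import mean, stdev

# Assumed table value: two-tailed 5% critical t for n - 1 = 16 d.f.
T_CRIT_DF16 = 2.1199


def paired_power(rho, effect=1.0, n=17, sigma2=1.0, n_sim=4000, seed=3):
    """Monte Carlo power of the paired t-test for a given correlation rho."""
    rng = random.Random(seed)
    sd_bet = (rho * sigma2) ** 0.5
    sd_wit = ((1 - rho) * sigma2) ** 0.5
    hits = 0
    for _ in range(n_sim):
        diffs = []
        for _ in range(n):
            aliquot = rng.gauss(10.0, sd_bet)               # aliquot baseline
            before = aliquot + rng.gauss(0, sd_wit)          # untreated reading
            after = aliquot + effect + rng.gauss(0, sd_wit)  # treated reading
            diffs.append(after - before)
        t = mean(diffs) / (stdev(diffs) / n ** 0.5)
        if abs(t) > T_CRIT_DF16:
            hits += 1
    return hits / n_sim


power_rho_05 = paired_power(rho=0.5)
power_rho_02 = paired_power(rho=0.2)
print(f"rho = 0.5: estimated power {power_rho_05:.2f}")
print(f"rho = 0.2: estimated power {power_rho_02:.2f}")
```

The estimates should fall close to the 97% and 86% values quoted above, within Monte Carlo error.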

To analyze experimental designs that have more than two levels, or additional factors, a method called analysis of variance is used. This generalizes the t -test for comparing three or more levels while maintaining better power than comparing all sets of two levels. Experiments with two or more levels will be our next topic.

1. Krzywinski, M.I. & Altman, N. Nat. Methods 10, 1139–1140 (2013).

2. Krzywinski, M.I. & Altman, N. Nat. Methods 11, 355–356 (2014).

3. Gelman, A. & Stern, H. Am. Stat. 60, 328–331 (2006).

4. Krzywinski, M.I. & Altman, N. Nat. Methods 11, 215–216 (2014).

Author information

Authors and affiliations.

Martin Krzywinski is a staff scientist at Canada's Michael Smith Genome Sciences Centre.

Naomi Altman is a Professor of Statistics at The Pennsylvania State University.

Ethics declarations

Competing interests.

The authors declare no competing financial interests.


About this article

Cite this article.

Krzywinski, M., Altman, N. Designing comparative experiments. Nat Methods 11 , 597–598 (2014). https://doi.org/10.1038/nmeth.2974


Published : 29 May 2014

Issue Date : June 2014

DOI : https://doi.org/10.1038/nmeth.2974




NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

Velentgas P, Dreyer NA, Nourjah P, et al., editors. Developing a Protocol for Observational Comparative Effectiveness Research: A User's Guide. Rockville (MD): Agency for Healthcare Research and Quality (US); 2013 Jan.

Developing a Protocol for Observational Comparative Effectiveness Research: A User's Guide.


Chapter 2 Study Design Considerations

Til Stürmer, MD, MPH, PhD and M. Alan Brookhart, PhD.

The choice of study design often has profound consequences for the causal interpretation of study results. The objective of this chapter is to provide an overview of various study design options for nonexperimental comparative effectiveness research (CER), with their relative advantages and limitations, and to provide information to guide the selection of an appropriate study design for a research question of interest. We begin the chapter by reviewing the potential for bias in nonexperimental studies and the central assumption needed for nonexperimental CER—that treatment groups compared have the same underlying risk for the outcome within subgroups definable by measured covariates (i.e., that there is no unmeasured confounding). We then describe commonly used cohort and case-control study designs, along with other designs relevant to CER such as case-cohort designs (selecting a random sample of the cohort and all cases), case-crossover designs (using prior exposure history of cases as their own controls), case–time controlled designs (dividing the case-crossover odds ratio by the equivalent odds ratio estimated in controls to account for calendar time trends), and self-controlled case series (estimating the immediate effect of treatment in those treated at least once). Selecting the appropriate data source, patient population, inclusion/exclusion criteria, and comparators are discussed as critical design considerations. We also describe the employment of a “new user” design, which allows adjustment for confounding at treatment initiation without the concern of mixing confounding with selection bias during followup, and discuss the means of recognizing and avoiding immortal-time bias, which is introduced by defining the exposure during the followup time versus the time prior to followup. 
The chapter concludes with a checklist for the development of the study design section of a CER protocol, emphasizing the provision of a rationale for study design selection and the need for clear definitions of inclusion/exclusion criteria, exposures (treatments), outcomes, confounders, and start of followup or risk period.

- Introduction

The objective of this chapter is to provide an overview of various study design options for nonexperimental comparative effectiveness research (CER), with their relative advantages and limitations. Of the multitude of epidemiologic design options, we will focus on observational designs that compare two or more treatment options with respect to an outcome of interest in which treatments are not assigned by the investigator but according to routine medical practice. We will not cover experimental or quasi-experimental designs, such as interrupted time series, 1 designed delays, 2 cluster randomized trials, individually randomized trials, pragmatic trials, or adaptive trials. These designs also have important roles in CER; however, the focus of this guide is on nonexperimental approaches that directly compare treatment options.

The choice of study design often has profound consequences for the causal interpretation of study results that are irreversible in many settings. Study design decisions must therefore be considered even more carefully than analytic decisions, which often can be changed and adapted at later stages of the research project. Those unfamiliar with nonexperimental design options are thus strongly encouraged to involve experts in the design of nonexperimental treatment comparisons, such as epidemiologists, especially ones familiar with comparing medical treatments (e.g., pharmacoepidemiologists), during the planning stage of a CER study and throughout the project. In the planning stage of a CER study, researchers need to determine whether the research question should be studied using nonexperimental or experimental methods (or a combination thereof, e.g., two-stage RCTs). 3 - 4 Feasibility may determine whether an experimental or a nonexperimental design is most suitable, and situations may arise where neither approach is feasible.

- Issues of Bias in Observational CER

In observational CER, the exposures or treatments are not assigned by the investigator but rather by mechanisms of routine practice. Although the investigator can (and should) speculate on the treatment assignment process or mechanism, the actual process will be unknown to the investigator. The nonrandom nature of treatment assignment leads to the major challenge in nonexperimental CER studies, that of ensuring internal validity. Internal validity is defined as the absence of bias; biases may be broadly classified as selection bias, information bias, and confounding bias. Epidemiology has advanced our thinking about these biases for more than 100 years, and many papers have been published describing the underlying concepts and approaches to bias reduction. For a comprehensive description and definition of these biases, we suggest the book Modern Epidemiology. 5 Ensuring a study's internal validity is a prerequisite for its external validity or generalizability. The limited generalizability of findings from randomized controlled trials (RCTs), such as to older adults or to patients with comorbidities or comedications, is one of the major drivers for the conduct of nonexperimental CER.

The central assumption needed for nonexperimental CER is that the treatment groups compared have the same underlying risk for the outcome within subgroups definable by measured covariates. Until recently, this “no unmeasured confounding” assumption was deemed plausible only for unintended (usually adverse) effects of medical interventions, that is, for safety studies. The assumption was considered to be less plausible for intended effects of medical interventions (effectiveness) because of intractable confounding by indication. 6 - 7 Confounding by indication leads to higher propensity for treatment or more intensive treatment in those with the most severe disease. A typical example would be a study on the effects of beta-agonists on asthma mortality in patients with asthma. The association between treatment (intensity) with beta-agonists and asthma mortality would be confounded by asthma severity. The direction of the confounding by asthma severity would tend to make the drug look bad (as if it is “causing” mortality). The study design challenge in this example would not be the confounding itself, but the fact that it is hard to control for asthma severity because it is difficult to measure precisely. Confounding by frailty has been identified as another potential bias when assessing preventive treatments in population-based studies, particularly those among older adults. 8 - 11 Because frail persons (those close to death) are less likely to be treated with a multitude of preventive treatments, 8 frailty would lead to confounding, which would bias the association between preventive treatments and outcomes associated with frailty (e.g., mortality). Since the bias would be that the untreated cohort has a higher mortality irrespective of the treatment, this would make the drug's effectiveness look too good. Here again the crux of the problem is that frailty is hard to control for because it is difficult to measure.
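Confounding by indication can be illustrated with a toy simulation in which the drug has no effect at all. All probabilities below are invented for illustration: severe disease makes both treatment and death more likely, so the crude comparison makes the drug look harmful, while comparisons within severity strata recover the absence of an effect. This only works here because severity is measured perfectly, which is exactly what the text notes is hard in practice.

```python
import random


def simulate(n=100_000, seed=4):
    """Return counts[severe][treated] = [deaths, persons] for a null drug effect."""
    rng = random.Random(seed)
    counts = {s: {t: [0, 0] for t in (0, 1)} for s in (0, 1)}
    for _ in range(n):
        severe = int(rng.random() < 0.30)
        # Sicker patients are more likely to be treated (hypothetical values).
        treated = int(rng.random() < (0.80 if severe else 0.20))
        # Death depends on severity only; treatment has no effect by design.
        died = int(rng.random() < (0.20 if severe else 0.02))
        counts[severe][treated][0] += died
        counts[severe][treated][1] += 1
    return counts


def risk(deaths, persons):
    return deaths / persons


counts = simulate()

# Crude risk ratio, ignoring severity.
crude_risk = {
    t: risk(sum(counts[s][t][0] for s in (0, 1)),
            sum(counts[s][t][1] for s in (0, 1)))
    for t in (0, 1)
}
crude_rr = crude_risk[1] / crude_risk[0]

# Risk ratios within each severity stratum.
stratum_rr = {s: risk(*counts[s][1]) / risk(*counts[s][0]) for s in (0, 1)}

print(f"crude risk ratio: {crude_rr:.2f}")
print(f"risk ratio, non-severe: {stratum_rr[0]:.2f}")
print(f"risk ratio, severe:     {stratum_rr[1]:.2f}")
```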

- Basic Epidemiologic Study Designs

The general principle of epidemiologic study designs is to compare the distribution of the outcome of interest in groups characterized by the exposure/treatment/intervention of interest. The association between the exposure and outcome is then assessed using measures of association. The causal interpretation of these associations is dependent on additional assumptions, most notably that the risk for the outcome is the same in all treatment groups compared (before they receive the respective treatments), also called exchangeability. 12 - 13 Additional assumptions for a causal interpretation, starting with the Hill criteria, 14 are beyond the scope of this chapter, although most of these are relevant to many CER settings. For situations where treatment effects are heterogeneous, see chapter 3 .

The basic epidemiologic study designs are usually defined by whether study participants are sampled based on their exposure or outcome of interest. In a cross-sectional study, participants are sampled independent of exposure and outcome, and prevalence of exposure and outcome are assessed at the same point in time. In cohort studies, participants are sampled according to their exposures and followed over time for the incidence of outcomes. In case-control studies, cases and controls are sampled based on the outcome of interest, and the prevalence of exposure in these two groups is then compared. Because the cross-sectional study design usually does not allow the investigator to define whether the exposure preceded the outcome, one of the prerequisites for a causal interpretation, we will focus on cohort and case-control studies as well as some more advanced designs with specific relevance to CER.

Definitions of some common epidemiologic terms are presented in Table 2.1 . Given the space constraints and the intended audience, these definitions do not capture all nuances.

Table 2.1. Definition of epidemiologic terms.

Cohort Study Design

Description.

Cohorts are defined by their exposure at a certain point in time (baseline date) and are followed over time after baseline for the occurrence of the outcome. For the usual study of first occurrence of outcomes, cohort members with the outcome prevalent at baseline need to be excluded. Cohort entry (baseline) is ideally defined by a meaningful event (e.g., initiation of treatment; see the section on new user design) rather than convenience (prevalence of treatment), although this may not always be feasible or desirable.

The main advantage of the cohort design is that it has a clear timeline separating potential confounders from the exposure and the exposure from the outcome. Cohorts allow the estimation of actual incidence (risk or rate) in all treatment groups and thus the estimation of risk or rate differences. Cohort studies allow investigators to assess multiple outcomes from given treatments. The cohort design is also easy to conceptualize and readily compared to the RCT, a design with which most medical researchers are very familiar.
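Because a cohort yields the actual risk in each treatment group, both absolute and relative measures can be computed directly. A minimal sketch follows, with hypothetical counts and a hypothetical function name, and ignoring censoring and confounding adjustment.

```python
def cohort_measures(events_treated, n_treated, events_comparator, n_comparator):
    """Risks, risk difference and risk ratio from cumulative cohort counts."""
    risk_treated = events_treated / n_treated
    risk_comparator = events_comparator / n_comparator
    return {
        "risk_treated": risk_treated,
        "risk_comparator": risk_comparator,
        "risk_difference": risk_treated - risk_comparator,
        "risk_ratio": risk_treated / risk_comparator,
    }


# Hypothetical example: 30 events among 1,000 initiators of drug X
# versus 50 events among 1,000 initiators of drug Y.
measures = cohort_measures(30, 1000, 50, 1000)
for name, value in measures.items():
    print(f"{name}: {value:.3f}")
```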

Limitations

If participants need to be recruited and followed over time for the incidence of the outcome, the cohort design quickly becomes inefficient when the incidence of the outcome is low. This limitation has led to the widespread use of case-control designs (see below) in pharmacoepidemiologic studies using large automated databases. With the IT revolution over the past 10 years, lack of efficiency is rarely, if ever, a reason not to implement a cohort study even in the largest health care databases if all the data have already been collected.

Important Considerations

Patients can only be excluded from the cohort based on information available at start of followup (baseline). Any exclusion of cohort members based on information accruing during followup, including treatment changes, has a strong potential to introduce bias. The idea to have a “clean” treatment group usually introduces selection bias, such as by removing the sickest, those with treatment failure, or those with adverse events, from the cohort. The fundamental principle of the cohort is the enumeration of people at baseline (based on inclusion and exclusion criteria) and reporting losses to followup for everyone enrolled at baseline.

Clinical researchers may also be tempted to assess the treatments during the same time period the outcome is assessed (i.e., during followup) instead of prior to followup. Another fundamental of the cohort design is, however, that the exposure is assessed prior to the assessment of the outcome, thus limiting the potential for incorrect causal inference if the outcome also influences the likelihood of exposure. This general principle also applies to time-varying treatments for which the followup time needs to start anew after treatment changes rather than from baseline.

Cadarette et al. 15 employed a cohort design to investigate the comparative effectiveness of four alternative treatments to prevent osteoporotic fractures. The four cohorts were defined by the initiation of the four respective treatments (the baseline date). Cohorts were followed from baseline to the first occurrence of a fracture at various sites. To minimize bias, statistical analyses adjusted for risk factors for fractures assessed at baseline. As discussed, the cohort design provided a clear timeline, differentiating exposure from potential confounders and the outcomes.

Case-Control Study Design

Nested within an underlying cohort, the case-control design identifies all incident cases that develop the outcome of interest and compares their exposure history with the exposure history of controls sampled at random from everyone within the cohort still at risk for developing the outcome of interest. Given proper sampling of controls from the risk set, the estimation of the odds ratio in a case-control study is a computationally more efficient way to estimate the otherwise identical incidence rate ratio in the underlying cohort.
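For an unmatched analysis, the exposure odds ratio and an approximate confidence interval can be computed from a 2 × 2 table. The sketch below uses the standard log-odds (Woolf) standard error; the counts are hypothetical, and a matched design would call for a conditional analysis instead.

```python
from math import exp, log, sqrt


def odds_ratio(exposed_cases, unexposed_cases, exposed_controls,
               unexposed_controls, z=1.96):
    """Exposure odds ratio with an approximate 95% CI on the log scale."""
    a, b, c, d = exposed_cases, unexposed_cases, exposed_controls, unexposed_controls
    estimate = (a * d) / (b * c)
    se_log = sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = exp(log(estimate) - z * se_log)
    upper = exp(log(estimate) + z * se_log)
    return estimate, lower, upper


# Hypothetical counts: 40 of 100 cases exposed, 200 of 800 controls exposed.
or_estimate, or_lower, or_upper = odds_ratio(40, 60, 200, 600)
print(f"OR = {or_estimate:.2f} (95% CI {or_lower:.2f} to {or_upper:.2f})")
```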

The oversampling of persons with the outcome increases efficiency compared with the full underlying cohort. As outlined above, this efficiency advantage is of minor importance in many CER settings. Efficiency is of major importance, however, if additional data (e.g., blood levels, biologic materials, validation data) need to be collected. It is straightforward to assess multiple exposures, although this will quickly become very complicated when implementing a new user design.

The case-control study is difficult to conceptualize. Some researchers do not understand, for example, that matching does not control for confounding in a case-control study, whereas it does in a cohort study. 16 Unless additional information from the underlying cohort is available, risk or rate differences cannot be estimated from case-control studies. Because the timing between potential confounders and the treatments is often not taken into account, current implementations of the case-control design assessing confounders at the index date rather than prior to treatment initiation will be biased when controlling for covariates that may be affected by prior treatment. Thus, implementing a new user design with proper definition of confounders will often be difficult, although not impossible. If information on treatments needs to be obtained retrospectively, such as from an interview with study participants identified as cases and controls, there is the potential that treatments will be assessed differently for cases and controls, which will lead to bias (often referred to as recall bias).

Controls need to be sampled from the “risk set,” i.e., all patients from the underlying cohort who remain at risk for the outcome at the time a case occurs. Sampling of controls from all those who enter the cohort (i.e., at baseline) may lead to biased estimates of treatment effects if treatments are associated with loss to followup or mortality. Matching on confounders can improve the efficiency of estimation of treatment effects, but does not control for confounding in case-control studies. Matching should only be considered for strong risk factors for the outcome; however, the often small gain in efficiency must be weighed against the loss of the ability to estimate the effect of the matching variable on the outcome (which could, for example, be used as a positive control to show content validity of an outcome definition). 17 Matching on factors strongly associated with treatment often reduces efficiency of case-control studies (overmatching). Generally speaking, matching should not routinely be performed in case-control studies but be carefully considered ideally after some study of the expected efficiency gains. 16 , 18

Martinez et al. 19 conducted a case-control study employing a new-user design. The investigators compared venlafaxine with other antidepressants with respect to the risk of sudden cardiac death or near death. An existing cohort of new users of antidepressants was identified. (“New users” were defined as subjects without a prescription for the medication in the year prior to cohort entry.) Nested within the underlying cohort, cases and up to 30 randomly selected matched controls were identified. Potential controls were assigned an “index date” corresponding to the same followup time to event as the matched case. Controls were only sampled from the “risk set.” That is, controls had to be at risk for the outcome on their index date, thus ensuring that bias was not introduced via the sampling scheme.
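The risk-set sampling described above can be illustrated with a minimal Python sketch. The function name, the cohort layout (a dictionary mapping a subject identifier to followup time and case status), and the handling of ties are assumptions made for illustration; they are not taken from Martinez et al. or from any specific software package.

```python
import random


def sample_risk_set_controls(cohort, n_controls, seed=0):
    """Sample controls for each case from its risk set.

    cohort: dict mapping subject id -> (followup_time, is_case), where
        followup_time is the time of the event (cases) or of censoring.
    n_controls: maximum number of controls to draw per case.
    Returns a dict mapping case id -> list of sampled control ids.
    """
    rng = random.Random(seed)
    matched = {}
    for case_id, (t_case, is_case) in cohort.items():
        if not is_case:
            continue
        # Risk set: subjects still under followup and event-free at t_case.
        # Subjects who become cases later remain eligible until their event.
        # Simplifying assumption: subjects whose followup ends exactly at
        # t_case are excluded.
        risk_set = [pid for pid, (t, _) in cohort.items()
                    if pid != case_id and t > t_case]
        k = min(n_controls, len(risk_set))
        # Each sampled control receives the case's followup time as index date.
        matched[case_id] = rng.sample(risk_set, k)
    return matched
```

Sampling from everyone who entered the cohort would correspond to dropping the `t > t_case` condition, which is the scheme the text warns can bias estimates when treatment is associated with loss to followup or mortality.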

Case-Cohort Study Design

In the case-cohort design, cohorts are defined as in a cohort study, and all cohort members are followed for the incidence of the outcomes. Additional information required for analysis (e.g., blood levels, biologic materials for genetic analyses) is collected for a random sample of the cohort and for all cases. (Note that the random sample may contain cases.) This sampling needs to be accounted for in the analysis, 20 but otherwise this design offers all the advantages and possibilities of a cohort study. The case-cohort design is intended to increase efficiency compared with the nested case-control design when selecting participants for whom additional information needs to be collected or when studying more than one outcome.

- Other Epidemiological Study Designs Relevant to CER

Case-Crossover Design

Faced with the problem of selecting adequate controls in a case-control study of triggers of myocardial infarction, Maclure proposed using the prior exposure history of cases as their own controls. 21 For this study design, only patients with the outcome (cases) who have discrepant exposures during the case and the control period contribute information. A feature of this design is that it is self-controlled, which removes the confounding effect of any characteristic of subjects that is stable over time (e.g., genetics). For CER, this property of the case-crossover design is a major advantage, because measures of stable confounding factors (to address confounding) are not needed. The initial reason for developing the case-crossover design, that is, its ability to assess triggers of outcomes (or immediate, reversible effects of, e.g., treatments on outcomes), may also have specific advantages for CER. The case-crossover design is thought to be appropriate for studying acute effects of transient exposures.

While the case-crossover design has been developed to compare exposed with unexposed periods rather than compare two active treatment periods, it may still be valuable for certain CER settings. This would include situations in which patients switch between two similar treatments without stopping treatment. Often such switching would be triggered by health events, which could cause within-person confounding, but when the causes of switching are unrelated to health events (e.g., due to changes in health plan drug coverage), within-person estimates of effect from crossover designs could be unbiased. More work is needed to evaluate the potential to implement the case-crossover design in the presence of treatment gaps (neither treatment) or of more than two treatments that need to be compared.

Exactly as in a case-control study, the first step is to identify all cases with the outcome and assess the prevalence of exposure during a brief time window before the outcome occurred. Instead of sampling controls, we create a separate observation for each case that contains all the same variables except for the exposure, which is defined for a different time period. This “control” time period has the same length as the case period and needs to be carefully chosen to take, for example, seasonality of exposures into account. The dataset is then analyzed as an individually matched case-control study.
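As a minimal sketch of the matched analysis described above, assume one control window per case and a binary exposure. Under those assumptions the conditional (matched-pair) odds ratio reduces to the ratio of the two kinds of discordant cases. The function name and input layout are hypothetical.

```python
def case_crossover_odds_ratio(windows):
    """Matched-pair odds ratio for a case-crossover dataset.

    windows: iterable of (exposed_in_case_window, exposed_in_control_window)
        booleans, one pair per case.
    Only cases with discrepant exposure contribute to the estimate.
    """
    windows = list(windows)
    case_only = sum(1 for in_case, in_control in windows
                    if in_case and not in_control)
    control_only = sum(1 for in_case, in_control in windows
                       if in_control and not in_case)
    if control_only == 0:
        raise ValueError("no cases exposed only in the control window")
    return case_only / control_only
```

Cases exposed in both windows or in neither drop out of the calculation, which is why the design is often not very efficient.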

The major advantages of the case-crossover design are that no controls need to be selected, that short-term reversible effects can be assessed, that varying the intervals used to define treatment can inform about the time window of the effect, and that all factors that are stable over time, even unmeasured ones, are controlled for. The design can also be easily added to any case-control study with little (if any) cost.

Because only cases with discrepant exposure histories contribute information to the analysis, the case-crossover design is often not very efficient. This may not be a major issue if the design is used in addition to the full case-control design. While the design avoids confounding by factors that are stable over time, it can still be confounded by factors that vary over time. The possibility of time-varying conditions leading to changes in treatment and increasing the risk for the outcome (i.e., confounding by indication) would need to be carefully considered in CER studies.

The causal interpretation changes from the effect of treatment versus no treatment on the outcome to the short-term effect of treatment in those treated. Thus, it can be used to assess the effects of adherence/persistence with treatment on outcomes in those who have initiated treatment. 22

Case-Time Controlled Design

One of the assumptions behind the case-crossover design is that the prevalence of exposure stays constant over time in the population studied. While plausible in many settings, this assumption may be violated in dynamic phases of therapies (after market introduction or safety alerts). To overcome this problem, Suissa proposed the case–time controlled design. 23 This approach divides the case-crossover odds ratio by the equivalent odds ratio estimated in controls. Greenland has criticized this design because it can reintroduce confounding, thus detracting from one of the major advantages of the case-crossover design. 24

This study design tries to adjust for calendar time trends in the prevalence of treatments that can introduce bias in the case-crossover design. To do so, the design uses controls as in a case-control design but estimates a case-crossover odds ratio (i.e., within individuals) in these controls. The case-crossover odds ratio (in cases) is then divided by the case-crossover odds ratio in controls.
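The division described above can be sketched in a few lines of Python. The sketch assumes one case window and one control window per subject and a binary exposure, so that each within-person odds ratio is a ratio of discordant counts. All names are hypothetical.

```python
def discordant_odds_ratio(windows):
    """Within-person odds ratio from (case_window, control_window) pairs."""
    windows = list(windows)
    later_only = sum(1 for later, earlier in windows if later and not earlier)
    earlier_only = sum(1 for later, earlier in windows if earlier and not later)
    if earlier_only == 0:
        raise ValueError("no subjects exposed only in the control window")
    return later_only / earlier_only


def case_time_control_odds_ratio(case_windows, control_windows):
    """Case-crossover odds ratio in cases divided by the same ratio in controls.

    The ratio in controls is meant to capture the calendar-time trend in
    treatment prevalence; dividing by it removes that trend from the
    estimate obtained in cases.
    """
    return (discordant_odds_ratio(case_windows)
            / discordant_odds_ratio(control_windows))
```

If treatment prevalence is stable over time, the odds ratio in controls is expected to be close to 1 and the result is close to the plain case-crossover estimate.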

This design is the same as the case-crossover design (with the caveat outlined by Greenland) with the additional advantage of not being dependent on the assumption of no temporal changes in the prevalence of the treatment.

The need for controls removes the initial motivation for the case-crossover design and adds complexity. The control for the time trend can introduce confounding, although the magnitude of this problem for various settings has not been quantified.

Self-Controlled Case-Series Design

Some of the concepts of the case-crossover design have also been adapted to cohort studies. This design, called self-controlled case-series, 25 shares most of the advantages with the case-crossover design but requires additional assumptions.

As with the case-crossover design, the self-controlled case-series design estimates the immediate effect of treatment in those treated at least once. It is similarly dependent on cases that have changes in treatment during a defined period of observation time. This observation time is divided into treated person-time, a washout period of person-time, and untreated person-time. A conditional Poisson regression is used to estimate the incidence rate ratio within individuals. A SAS macro is available with software to arrange the data and to run the conditional Poisson regression. 26 - 27
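To make the within-individual estimation concrete, the following Python sketch fits the simplest possible version of the model: one binary treatment, no age or calendar-time effects, and washout person-time excluded beforehand. Under these assumptions, conditioning on each person's total number of events means each event falls in treated time with probability ρ·e/(ρ·e + u), where e and u are that person's treated and untreated person-time and ρ is the incidence rate ratio. The score equation is solved by bisection. This is an illustration only and is not the SAS macro cited above; all names are hypothetical.

```python
import math


def sccs_rate_ratio(people, lo=1e-6, hi=1e6, iterations=200):
    """Conditional maximum likelihood estimate of the incidence rate ratio.

    people: list of (treated_time, untreated_time, events_treated,
        events_untreated), one tuple per case, each with positive total
        observation time.
    """
    observed = sum(x_t for _, _, x_t, _ in people)
    total = sum(x_t + x_u for _, _, x_t, x_u in people)
    if observed == 0 or observed == total:
        raise ValueError("need events in both treated and untreated time")

    def expected_treated_events(rho):
        # Expected number of events in treated time given each person's
        # total event count and the candidate rate ratio rho.
        return sum((x_t + x_u) * rho * e / (rho * e + u)
                   for e, u, x_t, x_u in people)

    # expected_treated_events is increasing in rho, so bisect on a log scale.
    for _ in range(iterations):
        mid = math.sqrt(lo * hi)
        if expected_treated_events(mid) < observed:
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)
```

People with no treated person-time add nothing to either side of the score equation, which mirrors the statement that the design depends on cases with changes in treatment during the observation period.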

The self-controlled design controls for factors that are stable over time. The cohort design, using all the available person-time information, has the potential to increase efficiency compared with the case-crossover design. The design was originally proposed for rare adverse events in vaccine safety studies for which it seems especially well suited.

The need for repeated events or, alternatively, a rare outcome, and the apparent need to assign person-time for treatment even after the outcome of interest occurs, limit the applicability of the design in many CER settings. The assumption that the outcome does not affect treatment will often be implausible. Furthermore, the design precludes the study of mortality as an outcome. The reason treatment information after the outcome is needed is not obvious to us, and this issue needs further study. More work is needed to understand the relationship of the self-controlled case-series with the case-crossover design and to delineate relative advantages and limitations of these designs for specific CER settings.

- Study Design Features

Study Setting

One of the first decisions with respect to study design is consideration of the population and data source(s) from which the study subjects will be identified. Usually, the general population or a population-based approach is preferred, but selected populations (e.g., a drug/device or disease registry) may offer advantages such as availability of data on covariates in specific settings. Availability of existing data and their scope and quality will determine whether a study can be done using existing data or whether additional new data need to be collected. (See chapter 8 for a full discussion of data sources.) Researchers should start with a definition of the treatments and outcomes of interest, as well as the predictors of outcome risk potentially related to choice of treatments of interest (i.e., potential confounders). Once these have been defined, availability and validity of information on treatments, outcomes, and confounders in existing databases should be weighed against the time and cost involved in collecting additional or new data. This process is iterative insofar as availability and validity of information may inform the definition of treatments, outcomes, and potential confounders. We need to point out that we do not make the distinction between retrospective and prospective studies here because this distinction does not affect the validity of the study design. The only difference between these general options of how to implement a specific study design lies in the potential to influence what kind of data will be available for analysis.

Inclusion and Exclusion Criteria

Every CER study should have clearly defined inclusion and exclusion criteria. The definitions need to include details about the study time period and dates used to define these criteria. Great care should be taken to use uniform periods to define these criteria for all subjects. If this cannot be achieved, then differences in periods between treatment groups need to be carefully evaluated because such differences have the potential to introduce bias. Inclusion and exclusion criteria need to be defined based on information available at baseline, and cannot be updated based on accruing information during followup. (See the discussion of immortal time below.)

Inclusion and exclusion criteria can also be used to increase the internal validity of non-experimental studies. Consider an example in which an investigator suspects that an underlying comorbidity is a confounder of the association under study. A diagnostic code with a low sensitivity but a high specificity for the underlying comorbidity exists (i.e., many subjects with the comorbidity aren't coded; however, for patients who do have the code, nearly all have the comorbidity). In this example, the investigator's ability to control for confounding by the underlying comorbidity would be hampered by the low sensitivity of the diagnostic code (as there are potentially many subjects with the comorbidity that are not coded). In contrast, restricting the study population to those with the diagnostic code removes confounding by the underlying condition due to the high specificity of the code.
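The reasoning in this example can be checked with a short calculation. The sketch below uses hypothetical values for prevalence, sensitivity, and specificity (none come from the source) and computes two quantities: the proportion of coded subjects who truly have the comorbidity, and the proportion of uncoded subjects who have it anyway.

```python
def code_performance(prevalence, sensitivity, specificity):
    """Return (ppv, prevalence_among_uncoded) for a diagnostic code.

    ppv: proportion of subjects with the code who have the comorbidity.
    prevalence_among_uncoded: proportion of subjects without the code who
        nevertheless have the comorbidity.
    """
    true_pos = prevalence * sensitivity
    false_neg = prevalence * (1 - sensitivity)
    false_pos = (1 - prevalence) * (1 - specificity)
    true_neg = (1 - prevalence) * specificity
    ppv = true_pos / (true_pos + false_pos)
    prevalence_among_uncoded = false_neg / (false_neg + true_neg)
    return ppv, prevalence_among_uncoded


# Hypothetical inputs: 20% prevalence, 30% sensitivity, 99% specificity.
ppv, missed = code_performance(0.20, 0.30, 0.99)
```

With inputs like these, most coded subjects have the comorbidity, so a population restricted to coded subjects is nearly homogeneous with respect to it. The uncoded group still contains a substantial share of comorbid subjects, so adjusting for the code in the full population leaves residual confounding.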

It should be noted that inclusion and exclusion criteria also affect the generalizability of results. If in doubt, potential benefits in internal validity will outweigh any potential reduction in generalizability.

Choice of Comparators

Both confounding by indication and confounding by frailty may be strongest and most difficult to adjust for when comparing treated with untreated persons. One way to reduce the potential for confounding is to compare the treatment of interest with a different treatment for the same indication or an indication with a similar potential for confounding. 28 A comparator treatment within the same indication is likely to reduce the potential for bias from both confounding by indication and confounding by frailty. This opens the door to using nonexperimental methods to study intended effects of medical interventions (effectiveness). Comparing different treatment options for a given patient (i.e., the same indication) is at the very core of CER. Thus both methodological and clinical relevance considerations lead to the same principle for study design.

Another beneficial aspect of choosing an active comparator group composed of a treatment alternative for the same indication is the identification of the point in time when the treatment decision is made, so that all subjects may start followup at the same time, “synchronizing” both the timeline and the point at which baseline characteristics are measured. This reduces the potential for various sources of confounding and selection bias, including by barriers to treatment (e.g., frailty). 8 , 29 A good source for active comparator treatments is the current treatment guidelines for the condition of interest.

- Other Study Design Considerations

New-User Design

It has long been realized that the biologic effects of treatments may change over time since initiation. 30 Guess used the observed risk of angioedema after initiation of angiotensin-converting enzyme inhibitors, which is orders of magnitude higher in the first week after initiation compared with subsequent weeks, 31 to make the point. Nonbiologic changes of treatment effects over time since initiation may also be caused by selection bias. 8 , 29 , 32 For example, Dormuth et al. 32 examined the relationship between adherence to statin therapy (more adherent vs. less adherent) and a variety of outcomes thought to be associated with and not associated with statin use. The investigators found that subjects classified as more adherent were less likely to experience negative health outcomes unlikely to be caused by statin treatment.

Poor health, for example frailty, is also associated with nonadherence in RCTs 33 and thus those adhering to randomized treatment will appear to have better outcomes, including those adhering to placebo. 33 This selection bias is most pronounced for mortality, 34 but extends to a wide variety of outcomes, including accidents. 31 The conventional prevalent-user design is thus prone to suffer from both confounding and selection bias. While confounding by measured covariates can usually be addressed by standard epidemiologic methods, selection bias cannot. An additional problem of studying prevalent users is that covariates that act as confounders may also be influenced by prior treatment (e.g., blood pressure, asthma severity, CD4 count); in such a setting, necessary control for these covariates to address confounding will introduce bias because some of the treatment effect is removed.

The new-user design 6 , 30 - 31 , 35 - 36 is the logical solution to the problems resulting from inclusion of persons who are persistent with a treatment over prolonged periods because researchers can adjust for confounding at initiation without the concern of selection bias during followup. Additionally, the new-user approach avoids the problem of confounders potentially being influenced by prior treatment, and provides approaches for structuring comparisons that are free of selection bias, such as first-treatment-carried-forward or intention-to-treat approaches. These and other considerations are covered in further detail in chapter 5. In addition, the new-user design offers a further advantage in anchoring the time scale for analysis at “time since initiation of treatment” for all subjects under study. Advantages and limitations of the new-user design are clearly outlined in the paper by Ray. 36 Limitations include the reduction in sample size leading to reduced precision of treatment effect estimates and the potential to lead to a highly selected population for treatments often used intermittently (e.g., pain medications). 37 Given the conceptual advantages of the new-user design to address confounding and selection bias, it should be the default design for CER studies; deviations should be argued for and their consequences discussed.

Immortal-Time Bias

While the term “immortal-time bias” was introduced by Suissa in 2003, 38 the underlying bias introduced by defining the exposure during the followup time rather than before followup was first outlined by Gail. 39 Gail noted that the survival advantage attributed to getting a heart transplant in two studies enrolling cohorts of potential heart transplant recipients was a logical consequence of the study design. The studies compared survival in those who later got a heart transplant with those who did not, starting from enrollment (getting on the heart transplant list). As one of the conditions to get a heart transplant is survival until the time of surgery, this survival time prior to the exposure classification (heart transplant or not) should not be attributed to the heart transplant and is described as “immortal.” Any observed survival advantage in those who received transplants cannot be clearly ascribed to the intervention if time prior to the intervention is included because of the bias introduced by defining the exposure at a later point during followup. Suissa 38 showed that a number of pharmacoepidemiologic studies assessing the effectiveness of inhaled corticosteroids in chronic obstructive pulmonary disease were also affected by immortal-time bias. While immortal person-time and the corresponding bias are introduced whenever exposures (treatments) are defined during followup, immortal-time bias can also be introduced by exclusion of patients from cohorts based on information accrued after the start of followup, i.e., based on changes in treatment or exclusion criteria during followup.

It should be noted that both the new-user design and the use of comparator treatments reduce the potential for immortal-time bias. These design options are no guarantee against immortal-time bias, however, unless the corresponding definitions of cohort inclusion and exclusion criteria are based exclusively on data available at start of followup (i.e., at baseline). 40
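The mechanism behind immortal-time bias can be illustrated by classifying the same person-time in two ways. The sketch below uses a small made-up cohort and hypothetical names. With `time_varying=False`, all followup of ever-treated subjects is counted as treated, including the time before treatment started. With `time_varying=True`, person-time before treatment start is counted as untreated.

```python
def mortality_rates(patients, time_varying):
    """Return (rate_treated, rate_untreated) as deaths per unit person-time.

    patients: list of (followup_end, died, treatment_start), with time
        measured from enrollment and treatment_start None if never treated.
    Assumes at least some person-time in each group.
    """
    person_time = {"treated": 0.0, "untreated": 0.0}
    deaths = {"treated": 0, "untreated": 0}
    for end, died, start in patients:
        ever_treated = start is not None and start < end
        if not ever_treated:
            person_time["untreated"] += end
            deaths["untreated"] += int(died)
        elif time_varying:
            # Time before treatment start belongs to the untreated group.
            person_time["untreated"] += start
            person_time["treated"] += end - start
            deaths["treated"] += int(died)
        else:
            # Naive classification: pre-treatment ("immortal") time is
            # wrongly credited to the treated group.
            person_time["treated"] += end
            deaths["treated"] += int(died)
    return (deaths["treated"] / person_time["treated"],
            deaths["untreated"] / person_time["untreated"])


# Made-up cohort: (days of followup, died, day treatment started or None).
cohort = [
    (100, True, 60),
    (200, False, 100),
    (30, True, None),
    (50, True, None),
    (120, False, None),
]
naive = mortality_rates(cohort, time_varying=False)
corrected = mortality_rates(cohort, time_varying=True)
```

In this made-up cohort the naive classification yields a lower mortality rate in the treated than in the untreated group, whereas correct assignment of pre-treatment person-time reverses the direction of the comparison.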

This chapter provides an overview of advantages and limitations of various study designs relevant to CER. It is important to realize that many see the cohort design as more valid than the case-control design. Although the case-control design may be more prone to potential biases related to control selection and recall in ad hoc studies, if a case-control study is nested within an existing cohort (e.g., based within a large health care database), its validity is equivalent to that of the cohort study, provided that the controls are sampled appropriately and the confounders are assessed during the relevant time period (i.e., before the treatments). Because the cohort design is generally easier to conceptualize, implement, and communicate, and because computational efficiency will not be a real limitation in most settings, the cohort design will be preferred when data have already been collected. The cohort design has the added advantage that absolute risks or incidence rates can be estimated; risk or incidence rate differences can therefore be estimated as well, which has specific advantages as outlined above. While we would always recommend including an epidemiologist in the early planning phase of a CER study, an experienced epidemiologist would be a prerequisite outside of these basic designs.

Some additional study designs have not been discussed. These include hybrid designs such as two-stage studies, 41 validation studies, 42 ecologic designs arising from natural experiments, interrupted time series, adaptive designs, and pragmatic trials. Many of the issues that will be discussed in the following chapters about ways to deal with treatment changes (stopping, switching, and augmenting) also will need to be addressed in pragmatic trials because their potential to introduce selection bias will be the same in both experimental and nonexperimental studies.

Knowledge of study designs and design options is essential to increase internal and external validity of nonexperimental CER studies. An appropriate study design is a prerequisite to reduce the potential for bias. Biases introduced by suboptimal study design cannot usually be removed during the statistical analysis phase. Therefore, the choice of an appropriate study design is at least as important as, if not more important than, the approach to statistical analysis.

Checklist: Guidance and key considerations for study design for an observational CER protocol

| Guidance | Key Considerations | Check |
|---|---|---|
| Provide a rationale for study design choice and describe key design features. | | □ |
| Define start of followup (baseline). | | □ |
| Define inclusion and exclusion criteria at start of followup (baseline). | | □ |
| Define exposure (treatments) of interest at start of followup. | | □ |
| Define outcome(s) of interest. | | □ |
| Define potential confounders. | | □ |

Developing a Protocol for Observational Comparative Effectiveness Research: A User’s Guide is copyrighted by the Agency for Healthcare Research and Quality (AHRQ). The product and its contents may be used and incorporated into other materials on the following three conditions: (1) the contents are not changed in any way (including covers and front matter), (2) no fee is charged by the reproducer of the product or its contents for its use, and (3) the user obtains permission from the copyright holders identified therein for materials noted as copyrighted by others. The product may not be sold for profit or incorporated into any profitmaking venture without the expressed written permission of AHRQ.

- Cite this Page Stürmer T, Brookhart MA. Study Design Considerations. In: Velentgas P, Dreyer NA, Nourjah P, et al., editors. Developing a Protocol for Observational Comparative Effectiveness Research: A User's Guide. Rockville (MD): Agency for Healthcare Research and Quality (US); 2013 Jan. Chapter 2.

The promise and pitfalls of comparative research design in the study of migration

Irene Bloemraad, The promise and pitfalls of comparative research design in the study of migration, Migration Studies , Volume 1, Issue 1, March 2013, Pages 27–46, https://doi.org/10.1093/migration/mns035

This article contends that our ability to study migration is significantly enhanced by carefully conceived comparative research designs. Comparing and contrasting a small number of cases (meaningful, complex structures, institutions, collectives, and/or configurations of events) is a creative strategy of analytical elaboration through research design. As such, comparative migration studies are characterized by their research design and conceptual focus on cases, not by a particular type of data. I outline some reasons why scholars should engage in comparison and discuss some challenges in doing so. I survey major comparative strategies in migration research, including between groups, places, time periods, and institutions, and I highlight how decisions about case selection are part and parcel of theory-building and theory evaluation. Comparative research design involves a decision over what to compare (what is the general class of ‘cases’ in a study) and how to compare, a choice about the comparative logics that drive the selection of specific cases.

Migration as a field of study rests on an often unarticulated comparison: scholars assume that there is something unique and noteworthy about the experiences of those who migrate compared with those who do not.

For some researchers, the comparative distinction is sociological, in the broadest sense of the term. Migrants are socialized in one particular economic, cultural, religious, political, and social milieu, but through migration they enter into a new social space. Geographic movement provides a lens on individuals’ and groups’ ability to adapt to new contexts, on locals’ reactions to newcomers, and on changes in social systems as locals and migrants interact. Whether they stay or move on, migrants embody the analytical lens of Simmel’s ‘stranger’: ‘his position in this group is determined, essentially, by the fact that he has not belonged to it from the beginning, that he imports qualities into it, which do not and cannot stem from the group itself’ (1950: 402). Migration also has repercussions on those ‘left behind’; we can compare places that experience migration with those that do not.

For other scholars, the legitimacy of migration as a field of study lies in implicit or explicit comparisons of people who have distinct legal, political, and administrative statuses. This approach justifies the conventional distinction between internal migration, which often involves the sociological dynamics above, and international migration. International migration implicates rights and legal status as people cross the borders of sovereign nation-states. 1 The comparative question—often an assumption—is whether and how migration status affects communities and individuals. Increasingly, researchers have an interest in both the sociological and political dimensions of migration as well as how the two might be mutually constitutive (e.g. Menjívar and Abrego 2012 ).

This article contends that our ability to study migration, either in a sociological or political sense, is significantly enhanced through the use of carefully theorized comparative research designs. For my purposes, comparative migration research entails the systematic analysis of a relatively small number of cases. ‘Cases’ are conceptualized and theorized as meaningful, complex structures, institutions, collectives, and/or configurations of events ( Ragin 1997 ). Instead of primarily or only focusing on individuals as the unit of analysis, comparative migration research compares and contrasts migrant groups, organizations, geographical areas, time periods, and so forth. The goal is to examine how structures, cultures, processes, norms, or institutions affect outcomes through the combination and intersection of causal mechanisms. Comparison is employed as a creative strategy of analytical elaboration through research design. 2

Comparative migration studies are characterized by their research design and the conceptual focus on cases, not by a particular type of data or method. 3 Comparative migration studies use the full breadth of evidence commonly employed by academic researchers, from in-depth interview data to mass survey responses, and from documentary materials to observations in the field. The type of evidence can vary within or across comparative migration projects.

Understood as an approach to research design, comparative migration studies require that decisions about case selection and comparison become part and parcel of theory-building and theory evaluation. This involves both a decision over what to compare and how to compare. What to compare entails decisions about the general class of ‘cases’ in a study: are we interested in migrant groups, immigrant-receiving countries, both, or something else altogether? Decisions about how to compare lead to the selection of specific cases: Sudanese migrants or Colombians? Seoul or San Francisco? Case selection involves a choice about the comparative logics that will drive the analysis as well as the type of conversation the researcher wants to have with existing theory. Comparative migration studies can help break down artificial distinctions between ‘theorizing’ and ‘research design’ to elucidate how each can build on and improve the other.

In what follows, I examine both what migration researchers compare, from groups to time periods, and how to compare. First, however, I outline some of the reasons why scholars should engage in comparison. I also discuss some of the challenges of doing so; in some instances, engaging in comparisons is more costly than helpful. My arguments are animated by a conviction that more migration studies should employ comparison, but that it must be done with careful thought as to what, how, and why we compare.

Comparison is compelling because it reminds us that social phenomena are not fixed or ‘natural’. Through comparison we can de-center what is taken for granted in a particular time or place after we learn that something was not always so, or that it is different elsewhere, or for other people. A well-chosen comparative study can challenge conventional wisdom or show how existing academic theories might be wrong.

Testing or disconfirming theory is not enough, however. Finding one case that does not fit a general model only disproves a theory if a scholar takes a deterministic approach: every time X occurs, then Y will follow, or every time A and B interact, we see outcome C. Most social scientists instead operate with a probabilistic approach ( Lieberson 1992 ) to explanation: we believe theories to be generally correct, but we allow room for some variation rather than expect the theory to always provide exact predictions across people, places, and time. For example, a social capital theory of migration posits that, once started, it is highly likely that migration will continue through social networks. We do not, however, expect that all people with social ties to migrants will themselves become migrants. If one family member does not migrate, most scholars will not conclude that social network models are categorically disproven.

The strength of a comparative research design consequently also rests on its ability to foster concept-building, theory-building, and the identification of causal mechanisms. The case that does not fit standard models pushes the researcher to reconsider existing frameworks, for example by theorizing a sub-category or class of cases that are exceptions to the model, or challenging the conventional wisdom about how we should even conceptualize a ‘case’. Perhaps the homogeneous category of ‘migrant’ is inappropriate to a particular issue, and scholars should instead identify distinct classes of migrants: official refugees as distinct from family-sponsored migrants, or sojourners as compared with permanent immigrants. In delineating such categories, the scholar is forced to theorize why and how such distinctions matter. Should we distinguish between ‘traditional’ Anglo-settler immigrant nations, former colonial immigrant nations, and other highly industrialized immigrant-receiving countries? If so, why? The very conceptualization of a case is a serious analytical exercise.

Detailed attention to a few cases also permits more careful process-tracing and the identification of causal mechanisms that come together to produce social phenomena (Ragin 1987, 1997; FitzGerald 2012). In this, a case-oriented comparison offers an advantage over standard statistical analyses of many data points. Most inferential statistics in the social sciences can establish correlation but only very rarely do they provide strong evidence on causation. Rather, they confirm or refute the theoretical expectations of causal theories. Statistics tell us, for example, that refugees in the USA are more likely to take out citizenship than economic or family-sponsored migrants, even if we control for socio-economic background and key demographic variables. Such regression models cannot, however, explain whether inter-group differences stem from the particular experience of and motivations for migration or from the greater state funding and voluntary sector support for integration offered to refugees compared with other migrants. Excavating causal processes requires careful attention to cases.

For this reason, comparison is not very useful when the goal is merely to ‘increase the N’, that is, when it is an exercise in expanding the number of observations without considering how they advance the project. This is a pitfall that ensnares many young (and sometimes not so young) researchers. Those doing in-depth interviewing might feel that interviewing fifty migrants is inherently better than interviewing forty. This may be true if the ten additional people represent a particular type of experience or a category of individuals that could nuance an evolving argument. Put in Becker’s (1992) formulation, what are these ten people a case of? For example, do they hold a distinct legal status? If the goal of ten additional interviews is merely to increase confidence in the generalizability of results, however, additional interviews will contribute little if selection is not based on probability sampling. 4 Increasing your ‘N’ in this situation involves more work but limited analytical payoff.

Comparison is most productive when it does analytical weight-lifting. In prior research, I wanted to study how government policies influence immigrants’ political incorporation (Bloemraad 2006). Rather than study different ideal-typical national models of integration or nationhood, as in contrasts between ‘civic’ France and ‘ethnic’ Germany popular in the 1990s, I wanted to know whether and how policy could affect immigrants in more similar countries, such as the USA and Canada. I asked, why were levels of citizenship among immigrants in Canada so much higher than in the USA? To address concerns that observed differences were due only to different immigration policies and migration streams, I focused on Portuguese immigrants, a group with very similar characteristics and migration trajectories on either side of the forty-ninth parallel. Comparing the same group in two countries served as an analytical strategy to move beyond theories centered on the resources and motivations of migrants. With a ‘control’ for migrant origins, I could focus on the mechanisms by which government policy trickled down to affect decisions about citizenship and influenced the creation of a civic infrastructure amenable to political incorporation. 5

In the same project, comparison served a second analytical purpose with the addition of another migrant group, the Vietnamese. They were added as a case of an official refugee group that, in the USA, receives government support more akin to the policies in Canada than to the laissez-faire treatment of most economic and family-sponsored migrants in the USA. Critics of my argument—namely, the argument that Canadian integration and multiculturalism policies facilitate citizenship acquisition and political engagement—could reasonably argue that political integration might be driven by a host of other US–Canada variations, from welfare state differences to distinct electoral politics. But the Portuguese case suggested some key causal mechanisms: Canadian policies helped fund community-based organizations, provided services and advanced symbolic politics of legitimacy, all of which facilitated political integration. The logic of the argument suggested that if migrant groups in the USA received similar government assistance, they would more closely resemble compatriots in Canada. By expanding the comparison to two groups in two countries, I could evaluate whether the mechanisms identified in the first comparison held in a second. 6

Additional comparisons are costly, however. The more things you compare—whether types of people, immigrant groups, organizations, neighborhoods, cities, countries, or time periods—the more background knowledge you need and the more time and resources you must invest to collect and analyze data. Introducing additional comparisons into an immigration-related project frequently entails significant costs in time and money due to the distances involved and the multilingual and multicultural skills needed, and they raise thorny challenges of access and communication. Such costs must be weighed against the advantages of a well-chosen comparison.

Comparison without careful forethought can leave researchers open to criticism when peers and reviewers fear that an expansion of cases undermines the attention paid to any one case and, by implication, the quality of the data. Such critiques are particularly likely to be directed at those using historical methods or ethnography; both approaches privilege deep engagement and expertise on a particular nexus of time and place, whether historical or contemporary. Multi-sited ethnographic fieldwork, for instance, can face objections that the study of additional places comes at the expense of deep, local knowledge, or that uneven engagement with different communities undermines the researcher’s ability to do systematic comparison (Fitzgerald 2006: 4; Boccagni 2012). 7 From a purely practical viewpoint, a researcher may only have twelve months of sabbatical leave or eighteen months to do dissertation fieldwork. Dividing that time between two or more sites reduces time spent in any one place.

The costliness of comparison applies across data types, even though ethnographers and historians may be subject to greater criticism given the norms of their method. A survey researcher who wants to poll additional people, in multiple locations or across multiple immigrant groups, also faces hard choices, though arguably more over financial resources than time. Fielding additional surveys, translating survey instruments, hiring bilingual interviewers, and doing probability sampling on immigrant populations are all very expensive endeavors. Given, for instance, resources to sample 800 people from two distinct language groups or 500 individuals from three groups, a researcher needs to theorize and justify why increasing the number of comparative cases is worth decreasing the number of people sampled within each group and in the project overall. All scholars, but especially students of migration, need to think very hard about why comparison makes sense. There needs to be some conceptual or theoretical purpose.
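The trade-off in the survey example can be made concrete with a rough calculation. The sketch below is illustrative only and rests on assumptions that are not in the text: it reads the two budgets as 800 and 500 respondents per group, treats each group as a simple random sample (rarely true of immigrant populations), and uses the textbook 95 per cent margin of error for a proportion at its most conservative value, p = 0.5. The function names are hypothetical.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion.

    Assumes simple random sampling, an idealization for
    surveys of immigrant populations.
    """
    return z * math.sqrt(p * (1 - p) / n)

def summarize(groups, per_group):
    """Total respondents and within-group margin of error for a design."""
    return {
        "total": groups * per_group,
        "moe": round(margin_of_error(per_group), 4),
    }

# Assumed reading of the two budgets: the figures are per group.
designs = {
    "two groups of 800": (2, 800),
    "three groups of 500": (3, 500),
}

for label, (groups, per_group) in designs.items():
    print(label, summarize(groups, per_group))
```

Under these assumptions, adding the third group lowers the total sample and widens the within-group margin of error by roughly one percentage point. Whether that loss of precision is acceptable depends on the conceptual purpose the third case serves.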

Because academics continuously build on prior research, there is always an inherent comparison between a particular study and the research or thinking that has come before. I term this the external comparative placement of a project vis-à-vis the existing literature. Both novice and experienced researchers need to ask the question, ‘What is the theoretical and substantive edge of my project in relation to others?’ The answer to this question is usually laid out in an author’s discussion of the literature and justification of methods.

In this sense, even a single case study can be ‘comparative’ to the extent that a scholar compares his or her case with existing research. Such comparisons often occur in studying a new immigrant group or a research site—a city, a neighborhood, a church—that does not fit the general pattern. Analytically, such a comparison can stretch or modify an existing theory, as Burawoy (1998) recommends in his extended case method. Alternatively, the single, anomalous case can generate new theories and ideas. Either as an extension or challenge to existing findings and theories, the conversation between the new empirical study and the established literature entails a comparative logic.

Such single case studies are not, however, formal comparative studies in the sense outlined here. I reserve the term ‘comparison’ for a specific comparative design embedded in the research project. Such comparisons can take a variety of forms, but they all involve a choice about what, exactly, should be compared. What constitutes a ‘case’? I first discuss the two comparisons most common in migration studies, between migrant groups and between geographic areas, and then I consider some additional comparisons.

2.1 Comparing migrant groups

In the USA, comparative migration studies traditionally contrast different migrant groups in the same geographical location, be it a city or the country as a whole. In their review of immigration research published between 1990 and 2004, Eric Fong and Elic Chan (2008) find that only 14 per cent of studies conducted by US researchers focused on immigrants in general, while 86 per cent focused on particular groups. 8 Milton Gordon’s (1964) classic theorizing on assimilation draws on a comparison of four groups distinguished by race and religion: blacks, Puerto Ricans, European-origin Catholics, and Jews. More recently, Kasinitz et al.’s (2008) study of immigrant assimilation compares the social, political, and economic integration of second-generation young adults and native-born peers across seven ethno-racial groups in New York City.

Migration researchers in the USA overwhelmingly assume that national origin matters. Empirically, this assumption often finds support. Even if it turns out that national origin does not matter, this ‘non-finding’ is viewed as significant because of the general expectation, within the academic field and among the public, that it should matter. The focus on national origin stems, in part, from Americans’ longstanding concern over race relations in the USA. It might also reflect taken-for-granted ways of thinking about belonging and identity in the US context, norms that scholars sometimes adopt without reflection. 9

While comparing groups defined by national origin, ethnicity, or race is ‘natural’ in the USA, in France the state and many researchers have explicitly rejected race or ethnicity as a social category. Instead, other categories, such as class and, increasingly, religious background, are taken for granted as the way people should be conceptualized, grouped together, and compared. For example, in an analysis of educational outcomes similar in style to some of the results reported by Kasinitz et al., French scholar Patrick Simon (2003) adopts American practice by identifying and comparing groups by national origin and generational status in order to evaluate educational trajectories. Simon justifies and theorizes the comparative cases explicitly because, as he notes, ‘the sheer concept of second generation for a long time seemed utterly nonsensical’ for French observers, given the emphasis on common French citizenship and the traditional comparison between citizens and foreigners (2003: 1092). At the same time, Simon departs from standard US practice by bringing in a class dimension when he uses the children of working-class French parents as another comparative case rather than using all ‘majority French’ individuals as the reference point.

Scholars are well advised to take a metaphorical step back and think carefully about why and how they think migrant group comparisons matter. Comparing migrant groups is a theoretical and conceptual choice about what sorts of factors are consequential for a particular outcome of interest. Thus, the decision to compare the educational aspirations or outcomes of different migrant groups rests on the assumption that national origin has some inherent meaning for migrants, or for others. 10 This is not always the case, since national origin can act as a proxy for something else, such as religion, or homeland economic system, or some other factor. If national origin is a proxy for something else, scholars should consider constructing their ‘cases’ in a different way. Individual immigrants can be grouped into analytical ‘cases’ by various characteristics other than national origin, such as by social class, gender, generation, legal status, or other socially relevant categories. For example, rather than comparing two national origin groups as proxies for high- or low-skilled migrants, perhaps a direct class-based comparison with less regard to migrant origins is preferable. Researchers should be attentive to the inherent cognitive biases of their discipline or their society when deciding what sort of cases to compare.

2.2 Geographic comparisons: nation-states