
Introduction

This publication follows a first book (Évaluation : fondements, controverses, perspectives) published at the end of 2021 by Editions Science et Bien Commun (ESBC) with the support of the Laboratory for interdisciplinary evaluation of public policies (LIEPP), compiling a series of excerpts from fundamental and contemporary texts in evaluation (Delahais et al. 2021). Although part of that book is dedicated to the diversity of paradigmatic approaches, we chose not to go into a detailed presentation of methods on the grounds that this would at least merit a book of its own. This is the purpose of the present volume. This publication is part of LIEPP’s collective project in two ways: through the articulation between research and evaluation, and through the dialogue between quantitative and qualitative methods.

Methods between research and evaluation

Most definitions of programme evaluation [1] articulate three dimensions, described by Alkin and Christie as the three branches of the “evaluation theory tree” (Alkin and Christie 2012). These are the mobilisation of research methods (evaluation is based on systematic empirical investigation), the role of values in providing criteria for judging the intervention under study, and the focus on the usefulness of the evaluation.

The use of systematic methods of empirical investigation is therefore one of the foundations of evaluation practice. This is how evaluation in the sense of evaluative research differs from the mere subjective judgement that the term ‘evaluation’ in its common sense may otherwise denote (Suchman 1967). Evaluation is first and foremost an applied research practice, and as such, it has borrowed a whole series of investigative techniques, both quantitative and qualitative, initially developed in basic research (e.g. questionnaires, quantitative analyses on databases, experimental methods, semi-structured interviews, observations, case studies, etc.). Beyond the techniques, the borrowing also concerns the methods of analysis and the conception of research designs. Despite this strong methodological link, evaluation does not boil down to a research practice (Wanzer 2021). This is suggested by the other two dimensions identified earlier (the concern for values and utility). In fact, the development of programme evaluation has given rise to a plurality of practices by a variety of public and private actors (public administration, consultants, NGOs, etc.), practices within which methodological issues are not necessarily central and where methodological rigour varies greatly.

At the same time, the practice of evaluation has remained weakly and very unevenly institutionalised in the university (Cox 1990), where it suffers in particular from a frequent devaluation of applied research practices, suspicions of complacency towards commissioners, and difficulties linked to its interdisciplinary nature (see below) (Jacob 2008). Thus, although it has developed its journals and professional conferences, evaluation is still the subject of very few doctoral programmes and dedicated recruitments. Practised to varying degrees by different academic disciplines (public health, economics and development are now particularly involved), and sometimes described as ‘transdisciplinary’ in terms of its epistemological scope (Scriven 1993), evaluation is still far from being an academic discipline in the institutional sense of the term. From an epistemological point of view, this non- (or weak) disciplinarisation of evaluation is to be welcomed. The fact remains, however, that it leads to weaknesses. One consequence is a frequent lack of training for researchers in evaluation: particularly concerning the non-methodological dimensions of this practice (questions of values and utility), but also concerning certain approaches more specifically derived from evaluation practice.

Indeed, while evaluation has largely borrowed from social science methods, it has also fostered a number of methodological innovations. For example, the use of experimental methods first took off in the social sciences in the context of evaluation, initially in education in the 1920s and then in social policy, health and other fields from the 1960s onwards (Campbell and Stanley 1963). The link with medicine brought about by the borrowing of the model of the clinical trial (the notions of ‘trial’ and ‘treatment’ having thus been transposed to evaluation) then favoured the transfer from the medical sciences to evaluation of another method, systematic literature reviews, which consists in adopting a systematic protocol to search for existing publications on (a) given evaluative question(s) and to draw up a synthesis of their contributions (Hong and Pluye 2018; Belaid and Ridde 2020). Without being the only place where it is deployed, programme evaluation has also made a major contribution to the development and theorising of mixed methods, which consist of articulating qualitative and quantitative techniques in the same research (Baïz and Revillard 2022; Greene, Benjamin and Goodyear 2001; Burch and Heinrich 2016; Mertens 2017). Similarly, because of its central concern with the use of knowledge, evaluation has been a privileged site for the development of participatory research and its theorisation (Brisolara 1998; Cousins and Whitmore 1998; Patton 2018).

While these methods (experimental methods, systematic literature reviews, mixed methods, participatory research) are immediately applicable to fields other than programme evaluation, other methodological approaches and tools have been more specifically developed for this purpose [2] . This is particularly the case of theory-based evaluation (Weiss 1997; Rogers and Weiss 2007), encompassing a variety of approaches (realist evaluation, contribution analysis, outcome harvesting, etc.) which will be described below (Pawson and Tilley 1997; Mayne 2012; Wilson-Grau 2018). Apart from a few disciplines in which they are more widespread, such as public health or development (Ridde and Dagenais 2009; Ridde et al. 2020), these approaches are still little known to researchers who have undergone traditional training in research methods, including those who may be involved in evaluation projects.

A dialogue therefore needs to be renewed between evaluation and research: reciprocating the initial borrowing of research methods by evaluation, a greater diversity of basic research circles would now benefit from a better knowledge of the specific methods and approaches derived from the practice of evaluation. This is one of the vocations of LIEPP, which promotes a strengthening of exchanges between researchers and evaluation practitioners. Since 2020, LIEPP has been organising a monthly seminar on evaluation methods and approaches (METHEVAL), alternating presentations by researchers and practitioners, and bringing together a diverse audience [3]. This is also one of the motivations behind the book Evaluation: Foundations, Controversies, Perspectives, published in 2021, which aimed in particular to make researchers aware of the non-methodological aspects of evaluation (Delahais et al. 2021). This publication completes the process by facilitating the appropriation of approaches developed in evaluation such as theory-based evaluation, realist evaluation, contribution analysis and outcome harvesting.

Conversely, LIEPP believes that evaluation would benefit from being more open to methodological tools more frequently used in basic research and with which it tends to be less familiar, particularly because of the targeting of questions at the scale of the intervention. In fact, evaluation classically takes as its object an intervention or a programme, usually on a local, regional or national scale, and within a sufficiently targeted questioning perimeter to allow conclusions to be drawn regarding the consequences of the intervention under study. By talking about policy evaluation rather than programme evaluation in the strict sense, our aim is to include the possibility of reflection on a more macro scale in both the geographical and temporal sense, by integrating reflections on the historicity of public policies, on the arrangement of different interventions in a broader policy context (a welfare regime, for example), and by relying more systematically on international comparative approaches. Evaluation, in other words, must be connected to policy analysis – an ambition already stated in the 1990s by the promoters of an “ évaluation à la française ” (Duran, Monnier, and Smith 1995; Duran, Erhel, and Gautié 2018). This is made possible, for example, by comparative historical analysis and macro-level comparisons presented in this book. Another important implication of programme evaluation is that the focus is on the intervention under study. By shifting the focus, many basic research practices can provide very useful insights in a more prospective way, helping to understand the social problems targeted by the interventions. All the thematic research conducted in the social sciences provides very useful insights for evaluation in this respect (Rossi, Lipsey, and Freeman 2004). 
Among the methods presented in this book, experimental approaches such as laboratory experimentation or testing, which are not necessarily focused on interventions as such, help to illustrate this more prospective contribution of research to evaluation.

A dialogue between qualitative and quantitative approaches

By borrowing its methods from the social sciences, policy evaluation has also inherited the associated methodological and epistemological controversies. Although there are many calls for reconciliation, although evaluation is more likely to emphasise its methodological pragmatism (the evaluative question guides the choice of methods), and although it has been a driving force in the development of mixed methods, in practice, in evaluation as in research, the dialogue between quantitative and qualitative traditions (especially in their epistemological dimension) is not always simple.

Articulating different disciplinary and methodological approaches to evaluate public policies is the founding ambition of LIEPP. The difficulties of this dialogue, particularly on an epistemological level (opposition between positivism and constructivism), were identified at the creation of the laboratory (Wasmer and Musselin 2013). Over the years, LIEPP has worked to overcome these obstacles by organizing a more systematic dialogue between different methods and disciplines in order to enrich evaluation: through the development of six research groups co-led by researchers from different disciplines, through projects carried out by interdisciplinary teams, but also through the regular discussion of projects from one discipline or family of methods by specialists from other disciplines or methods. It is also through these exchanges that the need for didactic material to facilitate the understanding of quantitative methods by specialists in qualitative methods, and vice-versa, has emerged. This mutual understanding is becoming increasingly difficult in a context of growing technicisation of methods. This book responds to this need, drawing heavily on the group of researchers open to interdisciplinarity and to the dialogue between methods that has been built up at LIEPP over the years: among the 25 authors of this book, nine are affiliated to LIEPP and eight others have had the opportunity to present their research at seminars organised by LIEPP.

This book has therefore been conceived as a means of encouraging a dialogue between methods, both within LIEPP and beyond. The aim is not necessarily to promote the development of mixed-methods research, although the strengths of such approaches are described (Part III). It is first of all to promote mutual understanding between the different methodological approaches, to ensure that practitioners of qualitative methods understand the complementary contribution of quantitative methods, their scope and their limits, and vice versa. In doing so, the approach also aims to foster greater reflexivity in each methodological practice, through a greater awareness of what one method is best suited for and the issues for which other methods are more relevant. While avoiding excessive technicality, the aim is to get to the heart of how each method works in order to understand concretely what it allows and what it does not allow. We are betting that this practical approach will help to overcome certain obstacles to dialogue between methods linked to major epistemological oppositions (positivism versus constructivism, for example) which are not necessarily central in everyday research practice. For students and non-academic audiences (particularly among policymakers or NGOs who may have recourse to programme evaluations), the aim is also to promote a more global understanding of the contributions and limitations of the various methods.

Far from claiming to be exhaustive, the book aims to present some examples of three main families of methods or approaches: quantitative methods, qualitative methods, and mixed methods and cross-sectional approaches in evaluation [4] . In what follows, we present the general organisation of the book and the different chapters, integrating them into a more global reflection on the distinction between quantitative and qualitative approaches.

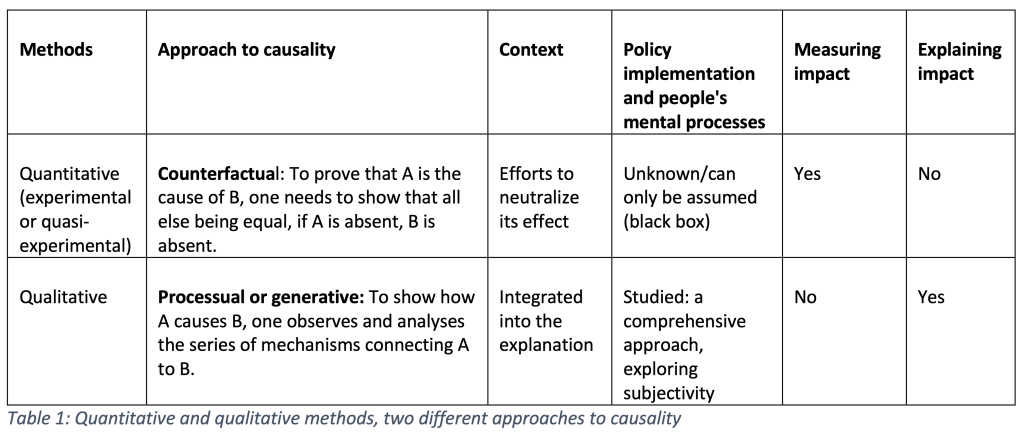

At a very general level, quantitative and qualitative methods are distinguished by the density and breadth of the type of information they produce: whereas quantitative methods can produce limited information on a large number of cases, qualitative methods provide denser, contextualised information on a limited number of cases. But beyond these descriptive characteristics, the two families of methods also tend to differ in their conception of causality. This is a central issue for policy evaluation which, without being restricted to this question [5], was founded on investigating the impact of public interventions: to what extent can a given observed change be attributed to the effect of a given intervention? This is a causal question: can a cause-and-effect relationship be established between the intervention and the observed change? To understand the complementary contributions of quantitative and qualitative methods for evaluation, it is therefore important to understand the different ways in which they tend to address this central question of causality.

Quantitative methods

Experimental and quasi-experimental quantitative methods are based on a counterfactual view of causality: to prove that A causes B, it must be shown that, all other things being equal, if A is absent, B is absent (Woodward 2003). Applied to the evaluation of policy impact, this logic invites us to prove that an intervention causes a given impact by showing that in the absence of this intervention, all other things being equal, this impact does not occur (Desplatz and Ferracci 2017). The whole difficulty then consists of approximating as best as possible these ‘all other things being equal’ situations: what would have happened in the absence of the intervention, all other characteristics of the situation being identical? It is this desire to compare situations with and without intervention ‘all other things being equal’ that gave rise to the development of experimental methods in evaluation (Campbell and Stanley 1963; Rossi, Lipsey, and Freeman 2004).

Most experiments conducted in policy evaluation are field experiments, in the sense that they study the intervention in situ, as it is actually implemented. Randomised controlled trials (RCTs) (see Chapter 1) compare an experimental group (receiving the intervention) with a control group, aiming for equivalence of characteristics between the two groups by randomly assigning participants to one or the other group. This type of approach is particularly well suited to interventions that are otherwise referred to as ‘experiments’ in public policy (Devaux-Spatarakis 2014). These are interventions that public authorities launch in a limited number of territories or organisations to test their effects [6], thus allowing for the possibility of control groups. When this type of direct experimentation is not possible, evaluators can resort to several quasi-experimental methods, aiming to reconstitute comparison groups from already existing situations and data (thus without manipulating reality, unlike experimental protocols) (Fougère and Jacquemet 2019). The difference-in-differences method uses a time marker at which one of the two groups studied receives the intervention and the other does not, and measures the impact of the intervention by comparing how the results of each group change between the periods before and after this time (see Chapter 2). Regression discontinuity (see Chapter 3) reconstructs a target group and a control group by comparing the situations on either side of an eligibility threshold set by the policy under study (e.g. eligibility for the intervention at a given age, income threshold, etc.). Finally, matching methods (see Chapter 4) consist of comparing the situations of beneficiaries of an intervention with those of non-beneficiaries with the most similar characteristics.
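
To make the logic of the difference-in-differences comparison concrete, the following minimal sketch computes the estimate from four sets of outcomes. It is an illustration under stated assumptions, not the procedure presented in Chapter 2: the function name `diff_in_diff` and all the figures are hypothetical, and the calculation only has a causal interpretation if the two groups would have followed parallel trends in the absence of the intervention.

```python
from statistics import mean


def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Change in the treated group minus change in the comparison group."""
    treated_change = mean(treated_after) - mean(treated_before)
    control_change = mean(control_after) - mean(control_before)
    return treated_change - control_change


# Hypothetical outcome values (e.g. an employment score), invented for illustration.
treated_before = [10.0, 12.0, 11.0]
treated_after = [15.0, 17.0, 16.0]
control_before = [9.0, 11.0, 10.0]
control_after = [11.0, 13.0, 12.0]

estimate = diff_in_diff(treated_before, treated_after, control_before, control_after)
print(estimate)  # treated change (5.0) minus control change (2.0) = 3.0
```

Subtracting the change observed in the comparison group removes the part of the before/after difference that would have occurred anyway, which a simple before/after comparison within the treated group cannot do.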

In addition to these methods, which are based on real-life data, other quantitative impact assessment approaches are based on computer simulations or laboratory experiments. Microsimulation (see Chapter 5 ), the development of which has been facilitated by improvements in computing power, consists of estimating ex ante the expected impact of an intervention by taking into consideration a wide variety of data relating to the targeted individuals and simulating changes in their situation (e.g. ageing, changes in the labour market, fiscal policies, etc.). It also allows for a refined ex post analysis of the diversity of effects of a given policy on the targeted individuals. Policy evaluation can also rely on laboratory experiments (see Chapter 6 ), which make it possible to accurately measure the behaviour of individuals and, in particular, to uncover unconscious biases. Such analyses can, for example, be very useful in helping to design anti-discrimination policies, as part of an ex ante evaluation process. It is also in the context of reflection on these policies that testing methods (see Chapter 7 ) have been developed, making it possible to measure discrimination by sending fictitious applications in response to real offers (for example, job offers). But evaluation also seeks to measure the efficiency of interventions, beyond their impact. This implies comparing the results obtained with the cost of the policy under study and with those of alternative policies, in a cost-efficiency analysis approach (see Chapter 8 ).

Qualitative methods

While they are also compatible with counterfactual approaches, qualitative methods are more likely to support a generative or processual conception of causality (Maxwell 2004; 2012; Mohr 1999). Following this logic, causality is inferred, not from relations between variables, but from the analysis of the processes through which it operates. While the counterfactual approach establishes whether A causes B, the processual approach shows how (through what series of mechanisms) A causes B, through observing the empirical manifestations of these causal mechanisms that link A and B. In so doing, it goes beyond the behaviourist logic which, in counterfactual approaches, conceives the intervention according to a stimulus-response mechanism, the intervention itself then constituting a form of black box. Qualitative approaches break down the intervention into a series of processes that contribute to producing (or preventing) the desired result: this is the general principle of theory-based evaluations (presented in the third part of this book in Chapter 20 as they are also compatible with quantitative methods). This finer scale analysis is made possible by focusing on a limited number of cases, which are then studied in greater depth using different qualitative techniques. Particular attention is paid to the contexts, as well as to the mental processes and the logic of action of the people involved in the intervention (agents responsible for its implementation, target groups), in a comprehensive approach (Revillard 2018). Unlike quantitative methods, qualitative methods cannot measure the impact of a public policy; they can, however, explain it (and its variations according to context), but also answer other evaluative questions such as the relevance or coherence of interventions. 
Table 1 summarises these ideal-typical differences between quantitative and qualitative methods: it is important to specify that we are highlighting here the affinities of a given family of methods with a given approach to causality and a given consideration of processes and context, but this is an ideal-typical distinction which is far from exhausting the actual combinations in terms of methods and research designs.

The most emblematic qualitative research technique is probably direct observation or ethnography, coming from anthropology, which consists of directly observing the social situation being studied in the field (see Chapter 9 ). A particularly engaging method, direct observation is very effective in uncovering all the intermediate policy processes that contribute to producing its effects, as well as in distancing official discourse through the direct observation of interactions. The semi-structured interview (see Chapter 10 ) is another widely used qualitative research technique, which consists of a verbal interaction solicited by the researcher with a research participant, based on a grid of questions used in a very flexible manner. The interview aims both to gather information and to understand the experience and worldview of the interviewee. This method can also be used in a more collective setting, in the form of focus groups (see Chapter 11 ) or group interviews (see Chapter 12 ). As Ana Manzano points out in her chapter on focus groups, the terminologies for these group interview practices vary. Our aim in publishing two chapters on these techniques is not to rigidify the distinction but to provide two complementary views on these frequently used methods.

Although case studies (see Chapter 13 ) can use a variety of qualitative, quantitative and mixed methods, they are classically part of a qualitative research tradition because of their connection to anthropology. They allow interventions to be studied in context and are particularly suited to the analysis of complex interventions. Several case studies can be combined in the evaluation of the same policy; the way in which they are selected is then decisive. Process tracing (see Chapter 14 ), which relies mainly but not exclusively on qualitative enquiry techniques, focuses on the course of the intervention in a particular case, seeking to trace how certain actions led to others. The evaluator then acts as a detective looking for the “fingerprints” left by the mechanisms of change. The approach makes it possible to establish under what conditions, how and why an intervention works in a particular case. Finally, comparative historical analysis combines the two fundamental methodological tools of social science, comparison and history, to help explain large-scale social phenomena (see Chapter 15 ). It is particularly useful for reporting on the definition of public policies.

Mixed methods and cross-cutting approaches in evaluation

The third and final part of the book brings together a series of chapters on the articulation between qualitative and quantitative methods as well as on cross-cutting approaches that are compatible with a diversity of methods. Policy evaluation has played a driving role in the formalisation of the use of mixed methods, leading in particular to the distinction between different strategies for linking qualitative and quantitative methods (sequential exploratory, sequential explanatory or convergent design) (see Chapter 16 ). Even when the empirical investigation mobilises only one type of method, it benefits from being based on a systematic mixed methods literature review. While the practice of systematic literature reviews was initially developed to synthesise results from randomised controlled trials, this practice has diversified over the years to include other types of research (Hong and Pluye 2018). The particularity of systematic mixed methods literature reviews is that they include quantitative, qualitative and mixed studies, making it possible to answer a wider range of evaluative questions (see Chapter 17 ).

Having set out this general framework on mixed methods and reviews, the following chapters present six cross-cutting approaches. The first two, macro-level comparisons and qualitative comparative analysis (QCA), tend to be drawn from basic research practices, while the other four (theory-based evaluation, realist evaluation, contribution analysis, outcome harvesting) are drawn from the field of evaluation. Macro-level comparisons (see Chapter 18) consist of exploiting variations and similarities between large entities of analysis (e.g. states or regions) for explanatory purposes: for example, to explain differences between large social policy models, or the influence of a particular family policy configuration on women’s employment rate. Qualitative comparative analysis (QCA) is a mixed method which consists in translating qualitative data into a numerical format in order to systematically analyse which configurations of factors produce a given result (see Chapter 19). Based on an alternative, configurational conception of causality, it is useful for understanding why the same policy may lead to certain changes in some circumstances and not in others.
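
The idea of translating cases into a numerical format and grouping them by configuration can be sketched as follows. This is a simplified illustration of the truth-table step of a crisp-set (binary) QCA only, not the full method described in Chapter 19: it omits the logical minimisation that usually follows, and the condition names, cases and function name `truth_table` are all hypothetical.

```python
from collections import defaultdict


def truth_table(cases, conditions, outcome):
    """Group cases by configuration of binary conditions and report, for each
    configuration, the number of cases and the share showing the outcome."""
    rows = defaultdict(lambda: [0, 0])  # configuration -> [n cases, n with outcome]
    for case in cases:
        config = tuple(case[c] for c in conditions)
        rows[config][0] += 1
        rows[config][1] += case[outcome]
    return {
        config: {"n": n, "consistency": with_outcome / n}
        for config, (n, with_outcome) in rows.items()
    }


# Hypothetical cases: 1 = condition (or outcome) present, 0 = absent.
cases = [
    {"funding": 1, "local_support": 1, "success": 1},
    {"funding": 1, "local_support": 1, "success": 1},
    {"funding": 1, "local_support": 0, "success": 0},
    {"funding": 1, "local_support": 0, "success": 1},
    {"funding": 0, "local_support": 1, "success": 0},
    {"funding": 0, "local_support": 0, "success": 0},
]

table = truth_table(cases, ["funding", "local_support"], "success")
for config, row in sorted(table.items(), reverse=True):
    print(config, row)
```

In this invented data set, only the configuration combining both conditions is always associated with the outcome, which illustrates the configurational reasoning: it is the combination of factors, rather than any single factor, that is examined.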

Developed in response to the limitations of experimental and quasi-experimental approaches to understanding how an intervention produces its impacts, theory-based evaluation consists of opening the ‘black box’ of public policy by breaking down the different stages of the causal chain linking the intervention to its final results (see Chapter 20). The following chapters fall broadly within this family of evaluation approaches. Realist evaluation (see Chapter 21) conceives of public policies as interventions that produce their effects through mechanisms that are only triggered in specific contexts. By uncovering context-mechanism-outcome (CMO) configurations, this approach makes it possible to establish for whom, how and under what circumstances an intervention works. Particularly suited to complex interventions, contribution analysis (see Chapter 22) involves the progressive formulation of ‘contribution claims’ in a process involving policy stakeholders, and then testing these claims systematically using a variety of methods. Outcome harvesting (see Chapter 23) starts from a broad understanding of observable changes, and then traces whether and how the intervention may have played a role in producing them. Finally, the last chapter is devoted to an innovative approach to evaluation, based on the concept of cultural safety initially developed in nursing science (see Chapter 24). Cultural safety aims to ensure that the evaluation takes place in a ‘safe’ manner for stakeholders, and in particular for the minority communities targeted by the intervention under study, i.e. that the evaluation process avoids reproducing mechanisms of domination (aggression, denial of identity, etc.) linked to structural inequalities. To this end, various participatory techniques are used at all stages of the evaluation. This chapter is thus an opportunity to emphasise the importance of participatory dynamics in evaluation, also highlighted in several other contributions.

A didactic and illustrated presentation

To facilitate reading and comparison between methods and approaches, each chapter is organised according to a common outline based on five main questions:

1) What does this method/approach consist of?

2) How is it useful for policy evaluation?

3) An example of the use of this method/approach;

4) What are the criteria for judging the quality of the use of this method/approach?

5) What are the strengths and limitations of this method/approach compared to others?

The book is published directly in two languages (French and English) in order to facilitate its dissemination. The contributions were initially written in one or the other language according to the preference of the authors, then translated and revised (where possible) by them. A bilingual glossary is available below to facilitate the transition from one language to the other.

The examples used cover a wide range of public policy areas, studied in a variety of contexts: pensions in Italy, weather and climate information in Senegal, minimum wage in New Jersey, reception in public services in France, child development in China, the fight against smoking among young people in the United Kingdom, health financing in Burkina Faso, the impact of a summer school on academic success in the United States, soft skills training in Belgium, the development of citizen participation to improve public services in the Dominican Republic, a nutrition project in Bangladesh, universal health coverage in six African countries, etc. The many examples presented in the chapters illustrate the diversity and current vitality of evaluation research practices.

Far from claiming to be exhaustive, this publication is an initial summary of some of the most widely used methods. The collection is intended to be enriched by means of publications over time in the open access collection of LIEPP methods briefs [7] .

Cited references

Alkin, Marvin. and Christie, Christina. 2012. ‘An Evaluation Theory Tree’. In Evaluation Roots , edited by Marvin Alkin and Christina Christie. London: Sage. https://doi.org/10.4135/9781412984157.n2 .

Baïz, Adam. and Revillard, Anne. 2022. Comment Articuler Les Méthodes Qualitatives et Quantitatives Pour Évaluer L’impact Des Politiques Publiques? Paris: France Stratégie. https://www.strategie.gouv.fr/publications/articuler-methodes-qualitatives-quantitatives-evaluer-limpact-politiques-publiques .

Belaid, Loubna. and Ridde, Valéry. 2020. ‘Une Cartographie de Quelques Méthodes de Revues Systématiques’. Working Paper CEPED N°44 .

Brisolara, Sharon. 1998. ‘The History of Participatory Evaluation and Current Debates in the Field’. New Directions for Evaluation, (80): 25–41. https://doi.org/10.1002/ev.1115 .

Burch, Patricia. and Heinrich, Carolyn J.. 2016. Mixed Methods for Policy Research and Program Evaluation . Los Angeles: Sage.

Campbell, Donald T.. and Stanley, Julian C.. 1963. ‘Experimental and Quasi-Experimental Designs for Research’. In Handbook of Research on Teaching . Houghton Mifflin Company.

Cousins, J. Bradley. and Whitmore, Elizabeth. 1998. ‘Framing Participatory Evaluation’. New Directions for Evaluation, (80): 5–23.

Cox, Gary. 1990. ‘On the Demise of Academic Evaluation’. Evaluation and Program Planning, 13(4): 415–19.

Delahais, Thomas. 2022. ‘Le Choix Des Approches Évaluatives’. In L’évaluation En Contexte de Développement: Enjeux, Approches et Pratiques , edited by Linda Rey, Jean Serge Quesnel, and Vénétia Sauvain, 155–80. Montréal: JFP/ENAP.

Delahais, Thomas, Devaux-Spatarakis, Agathe, Revillard, Anne, and Ridde, Valéry. eds. 2021. Evaluation: Fondements, Controverses, Perspectives . Québec: Éditions science et bien commun. https://scienceetbiencommun.pressbooks.pub/evaluationanthologie/ .

Desplatz, Rozenn. and Ferracci, Marc. 2017. Comment Évaluer l’impact Des Politiques Publiques? Un Guide à l’usage Des Décideurs et Praticiens . Paris: France Stratégie.

Devaux-Spatarakis, Agathe. 2014. ‘L’expérimentation “telle Qu’elle Se Fait”: Leçons de Trois Expérimentations Par Assignation Aléatoire’. Formation Emploi, (126): 17–38.

Duran, Patrice. and Erhel, Christine. and Gautié, Jérôme. 2018. ‘L’évaluation des politiques publiques’. Idées Économiques et Sociales, 193(3): 4–5.

Duran, Patrice. and Monnier, Eric. and Smith, Andy. 1995. ‘Evaluation à La Française: Towards a New Relationship between Social Science and Public Action’. Evaluation, 1(1): 45–63. https://doi.org/10.1177/135638909500100104 .

Fougère, Denis. and Jacquemet, Nicolas. 2019. ‘Causal Inference and Impact Evaluation’. Economie et Statistique, (510-511-512): 181–200. https://doi.org/10.24187/ecostat.2019.510t.1996 .

Greene, Jennifer C. and Benjamin, Lehn. and Goodyear, Leslie. 2001. ‘The Merits of Mixing Methods in Evaluation’. Evaluation, 7(1): 25–44.

Hong, Quan Nha. and Pluye, Pierre. 2018. ‘Systematic Reviews: A Brief Historical Overview’. Education for Information, (34): 261–76.

Jacob, Steve. 2008. ‘Cross-Disciplinarization: A New Talisman for Evaluation?’ American Journal of Evaluation, 29(2): 175–94. https://doi.org/10.1177/1098214008316655 .

Mathison, Sandra. 2005. Encyclopedia of Evaluation . London: Sage.

Maxwell, Joseph A. 2004. ‘Using Qualitative Methods for Causal Explanation’. Field Methods, 16(3): 243–64. https://doi.org/10.1177/1525822X04266831 .

———. 2012. A Realist Approach for Qualitative Research . London: Sage.

Mayne, John. 2012. ‘Contribution Analysis: Coming of Age?’ Evaluation, 18(3): 270–80. https://doi.org/10.1177/1356389012451663 .

Mertens, Donna M. 2017. Mixed Methods Design in Evaluation . Thousand Oaks: Sage. Evaluation in Practice Series. https://doi.org/10.4135/9781506330631 .

Mohr, Lawrence B. 1999. ‘The Qualitative Method of Impact Analysis’. American Journal of Evaluation, 20(1): 69–84. https://doi.org/10.1177/109821409902000106 .

Newcomer, Kathryn E. and Hatry, Harry P. and Wholey, Joseph S. 2015. Handbook of Practical Program Evaluation. Hoboken: Wiley.

Patton, Michael Q. 1997. Utilization-Focused Evaluation . 3rd ed. Thousand Oaks: Sage.

———. 2015. Qualitative Research and Evaluation Methods: Integrating Theory and Practice . London: Sage.

———. 2018. Utilization-Focused Evaluation . London: Sage.

Pawson, Ray. and Tilley, Nicholas. 1997. Realistic Evaluation . London: Sage.

Revillard, Anne. 2018. Quelle place pour les méthodes qualitatives dans l’évaluation des politiques publiques? Paris: LIEPP Working Paper n°81.

Ridde, Valéry. and Dagenais, Christian. 2009. Approches et Pratiques En Évaluation de Programme . Montréal: Presses de l’Université de Montréal.

Ridde, Valéry. and Dagenais, Christian (eds). 2020. Évaluation Des Interventions de Santé Mondiale: Méthodes Avancées . Québec: Éditions science et bien commun.

Rogers, Patricia J. and Weiss, Carol H. 2007. ‘Theory-Based Evaluation: Reflections Ten Years on: Theory-Based Evaluation: Past, Present, and Future’. New Directions for Evaluation, (114): 63–81. https://doi.org/10.1002/ev.225 .

Rossi, Peter H. and Lipsey, Mark W. and Freeman, Howard E. 2004. Evaluation: A Systematic Approach. London: Sage.

Scriven, Michael. 1993. ‘Hard-Won Lessons in Program Evaluation.’ New Directions for Program Evaluation , (58): 5–48.

Suchman, Edward A. 1967. Evaluative Research. Principles and Practice in Public Service and Social Action Programs . New York: Russell Sage Foundation.

Wanzer, Dana L. 2021. ‘What Is Evaluation? Perspectives of How Evaluation Differs (or Not) From Research’. American Journal of Evaluation, 42(1): 28–46. https://doi.org/10.1177/1098214020920710 .

Wasmer, Etienne. and Musselin, Christine. 2013. Évaluation Des Politiques Publiques: Faut-Il de l’interdisciplinarité? Paris: LIEPP Methodological discussion paper n°2.

Weiss, Carol H. 1997. ‘Theory-Based Evaluation: Past, Present, and Future’. New Directions for Evaluation (76): 41–55. https://doi.org/10.1002/ev.1086 .

———. 1998. Evaluation: Methods for Studying Programs and Policies . Upper Saddle River, NJ: Prentice-Hall.

Wilson-Grau, Ricardo. 2018. Outcome Harvesting: Principles, Steps, and Evaluation Applications . IAP.

Woodward, James. 2003. Making Things Happen: A Theory of Causal Explanation. Oxford Studies in Philosophy of Science. New York: Oxford University Press.

- For example, Michael Patton's definition of evaluation as "the systematic collection of information about the activities, characteristics, and outcomes of programs to make judgements about the program, improve program effectiveness, and/or inform decisions about future programming" (Patton 1997, 23).

- As opposed to methods in the sense of methodological tools, approaches are situated in "a kind of in-between between theory and practice" (Delahais 2022), by embodying certain paradigms. In evaluation, some may be very methodologically oriented, but others may be more concerned with values, with the use of results, or with social justice (ibid.).

- The programme and resources from previous sessions of this seminar are available online: https://www.sciencespo.fr/liepp/fr/content/cycle-de-seminaires-methodes-et-approches-en-evaluation-metheval.html

- In doing so, it complements other methodological resources available in handbooks (Ridde and Dagenais 2009; Ridde et al. 2020; Newcomer, Hatry, and Wholey 2015; Mathison 2005; Weiss 1998; Patton 2015) or online: for example the Methods excellence network ( https://www.methodsnet.org/ ), or in the field of evaluation, the resources compiled by the OECD ( https://www.oecd.org/fr/cad/evaluation/keydocuments.htm ), the UN's Evalpartners network ( https://evalpartners.org/ ), or in France the methodological guides of the Institut des politiques publiques ( https://www.ipp.eu/publications/guides-methodologiques-ipp/ ) and the Société coopérative et participative (SCOP) Quadrant Conseil ( https://www.quadrant-conseil.fr/ressources/evaluation-impact.php#/ ).

- Evaluation also looks at, for example, the relevance, coherence, effectiveness, efficiency or sustainability of interventions. See OECD DAC Network on Development Evaluation (EvalNet): https://www.oecd.org/dac/evaluation/daccriteriaforevaluatingdevelopmentassistance.htm

- Unfortunately, these government initiatives are far from being systematically and rigorously evaluated.

- LIEPP Methods briefs: https://www.sciencespo.fr/liepp/en/publications.html#LIEPP%20methods%20briefs

Policy Evaluation: Methods and Approaches Copyright © by Anne Revillard is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License, except where otherwise noted.


King's College London

Applied Public Policy PhD/MPhil: key information

The Policy Institute works to solve society’s challenges with evidence and expertise, combining the rigour of an academic department with the agility of a consultancy and the connectedness of a think tank.

The institute has a reputation for conducting impactful research that shapes media and public debate and the policy environment. This PhD Programme in Applied Public Policy will train the next generation of researchers to conduct this kind of impact-focused public policy research. The programme offers you the opportunity to study public policy as it is happening in the real world. It will offer opportunities to explore how policy can be influenced, and whether interventions in public policy are effective.

The Policy Institute is based in the Faculty of Social Science and Public Policy (SSPP), which seeks to understand global, social, technological, and economic transformations and to inform these through education and research.

Applied Public Policy doctoral students are supervised by academic staff in the Policy Institute. We recommend that prospective students read through the PI webpages to find their preferred research area and potential supervisors.

Course detail

The PhD in Applied Public Policy focuses on training researchers to study public policy as it is happening in the real world. It offers opportunities to explore how policy can be influenced, and whether interventions in public policy are effective.

PhD students will learn the use of causal methods in statistics and econometrics, including randomised controlled trials and quasi-experimental designs, to study social policy questions, including in education, child and adult safeguarding, health and social care, youth employment, criminology and justice, mental health and wellbeing, and behavioural economics.

Alongside this, doctoral students will receive comprehensive training and supervision in the underlying theories in public policy, economics, sociology, and other relevant fields to their domain(s) of study.

This kind of research has clear policy impacts and is also increasingly valuable from an academic perspective: randomised trials, quasi-experimental methods, multi-methods studies, and behavioural economics are used across a widening range of fields and published in high-impact journals in economics, psychology, public policy, social policy, health and care research, and political science. Doctoral students will be equipped to critically examine the use and appropriateness of these methods.

Alongside quantitative methods, doctoral students will also use qualitative methods, including interviews, focus groups, and ethnography, as well as deliberative methods (such as citizens’ assemblies), both as a method of research for studying public policy and as a tool for designing and conducting it.

Head of group/division

Professor Michael Sanders and Dr Kate Bancroft

Candidates can also email [email protected] .


- Fees or Funding

UK Tuition Fees 2023/24

Full time tuition fees: £6,540 per year

Part time tuition fees: £3,270 per year

International Tuition Fees 2023/24

Full time tuition fees: £24,360 per year

Part time tuition fees: £12,180 per year

UK Tuition Fees 2024/25

Full time tuition fees: £6,936 per year

Part time tuition fees: £3,468 per year

International Tuition Fees 2024/25

Full time tuition fees: £26,070 per year

Part time tuition fees: £13,035 per year

These tuition fees may be subject to additional increases in subsequent years of study, in line with King's terms and conditions

- Study environment

Base campus

Strand Campus

Located on the north bank of the River Thames, the Strand Campus houses King's College London's arts and sciences faculties.

Study Environment

Each student has two supervisors and meets regularly with both, though typically more frequently with the primary supervisor. Students are encouraged to attend relevant research seminars at King’s and elsewhere and to participate in the wide variety of conferences and events on offer at King’s.

The Policy Institute offers training opportunities for students, for example through the institute’s Methods Club, Evaluation seminars, training focused on analytical coding and a wide variety of research fora. They will also be able to participate in training from across the LISS Doctoral Training Partnership.

Impact will be at the centre of the students’ experience in the Policy Institute, and they will be able to attend and be part of events featuring the Institute’s visiting faculty, who include current and former ministers and senior officials including the current Cabinet Secretary.

Alongside this, students will benefit from the Institute’s close connections with other academic departments, being able to attend regular seminar series across King’s.

Postgraduate training

Students graduating from this programme will be employable in a range of academic, research, and applied roles. Previous graduates of this programme’s predecessors have gone on to work in the civil service; to pursue academic careers including postdoctoral studies at the European University Institute and Harvard’s Kennedy School of Government; and to work in the government’s network of What Works Centres.


Evaluation Institute for Public Health

The Evaluation Institute for Public Health seeks to advance evaluation science, scholarship, and practice through research, training, and consultation. By making evaluation science a core component of public health infrastructure we strive to improve the performance of public health and related human service organizations.

The Evaluation Institute serves three distinct constituencies:

- public sector organizations at the county, state or federal level;

- organizations that manage health care services such as hospitals, primary care centers or long-term care centers; and

- community-based nonprofit organizations.

Primary Activities of the Evaluation Institute

Institute staff offer educational programs via formal University courses; short-term institutes regionally, nationally, and internationally; independent study either in Pittsburgh or on site; and Web-based instruction. A 15-credit certificate in evaluation is available to graduate students and others who meet the admission requirements. We also offer the Evaluation Fellows Program. Every three years the Institute invites a director of human service organizations to study evaluation science. Fellows are expected to design and conduct an evaluation study within their organization. They often team with a public health doctoral student. The Fellows Program is designed to increase the use of scientific evaluation. Past fellows include John Zanaradelli, executive director (chief executive officer), Asbury Heights; and Joni Schwager, executive director, Staunton Farm Foundation.

The Institute contributes to the further development of the theoretical framework and methods used in scientific evaluation studies. The Institute is particularly interested in the improvement of “mixed method” strategies for evaluation, the development of statistical designs and analytic tools, and the application of behavioral intervention theories for rigorous testing.

Institute staff use the most advanced scientific methods for the conduct of community health status assessments.

The Institute is committed to helping agencies develop the infrastructure and capacity to design and conduct their own evaluation studies, including designing information systems, training staff in evaluation methods, identifying financial resources, and creating an organizational culture that values evaluation.

The conduct of scientific evaluation studies, and the process by which such studies are planned, is being strongly modified by advances in medical devices, telecommunications, and information system technologies.

Our faculty and staff use the full range of evaluation design options including experimental, mixed methods, case study, and community-based participatory research.

Community Needs Assessment: Institute staff apply a variety of methods to assess the health status, utilization patterns, barriers to obtaining health care, and unmet need for services of populations of interest.

Qualitative/Ethnographic Data Collection Analysis and Community-Based Participatory Research: These methods are employed in formative evaluation studies and as a basis for planning and implementing behavioral interventions. Also, qualitative methods are essential to understanding individual and community decision making about seeking preventive, emergency, and acute medical care.

Institute staff adhere to the American Evaluation Association’s Standards for the Conduct of Evaluation Studies. The standards describe guidelines for utility, feasibility, accuracy, and propriety in evaluation research.

Practicum Opportunities

View practicum opportunities with a wide range of organizations and programs. Specific contact information and details are included in current and past opportunities posted here. Contact Thistle Elias with questions about practicum expectations.


The Center for Education Policy, Applied Research and Evaluation (CEPARE) is devoted to promoting evidence-based decision making from the school house to the state house.

CEPARE provides assistance to school districts, agencies, organizations, and university faculty by conducting independent research, evaluation, and policy studies.

In addition, CEPARE co-directs the Maine Education Policy Research Institute (MEPRI), an entity jointly funded by the Maine State Legislature and the University of Maine System to conduct studies on Maine education policy and the public education system for the Maine Legislature.

CEPARE’s mission is to provide independent, nonpartisan research to inform education policy and practice, and to systematically identify, analyze, and continually evaluate education strategies that significantly improve education outcomes for students in the context of fiscal realities.

CORE BELIEFS: We believe that

- Public Education must be guided by the moral imperative to offer every child a first-rate education, and equity in public education must be a central and driving consideration in education policy and in education practice.

- Education initiatives are too often driven by opinion and conjecture; rigorous education research is needed to systematically assess the impacts of education initiatives over time.

- Greater sharing of research across disciplines and geographic divisions and through collaborations and partnerships among stakeholder groups invested in education will promote an increased understanding of which education strategies are most beneficial in differing circumstances across the many divergent learning settings.

- Ongoing systematic evaluation should be part of all education enterprises.

- Research and evaluation are powerful tools for improving policy and practice.

Presentation of Center for Program and Policy Evaluation (CPPE), Moscow 2008

Aug 23, 2014


About the Center of Program and Policy Evaluation.

Share Presentation

- berlin university

- methodological recommendations

- sociological studies

- national associations evaluation standards

- evaluation andeconomic analysis directorate

- evaluation society

Policy Analysis and Program Evaluation

Policy Analysis and Program Evaluation Lecture 15 – Administrative Processes in Government Stages of the Policy Process Problem Definition and Agenda Setting. Key actors: policy entrepreneurs, elected executives, interest groups Secondary actors: public administrators, legislators

1.39k views • 44 slides

Office of Governmentwide Policy Center for Policy Evaluation (CPE)

Office of Governmentwide Policy Center for Policy Evaluation (CPE). February 4, 2009. Part 1: Background Methodology Changes for 2009 Evaluation Criteria and Scoring 2008 Results 2009 Schedule. Part 2: Website Policy Review Tool Sections Submission Process System flow Emails

623 views • 52 slides

Center for Research and Policy Making

Center for Research and Policy Making. Local Economic Development of Gostivar municipality- Collapse of the industry, emigrants key for surviving . History of Gostivar. Gostivar is mentioned for the first time in Middle Ages In XVII century Gostivar became center of Gorni Polog

362 views • 23 slides

CENTER FOR DRUG EVALUATION

CENTER FOR DRUG EVALUATION. AND RESEARCH. LIST OF CONTENTS. INTRODUCTION ABOUT CDER DRUG INFORMATION REGULATORY GUIDANCE CDER CALENDER SPECIFIC AUDIENCES CDER ARCHIVES POSSIBLE QUESTIONS REFERENCES. DEPARTMENT OF HEALTH AND HUMAN SERVICES. FOOD AND DRUG ADMINISTRATION (FDA).

627 views • 40 slides

Center for Biologics Evaluation and Research

Center for Biologics Evaluation and Research. Applying Regulatory Science to Advance Development of Innovative, Safe and Effective Biologic Products Carolyn A. Wilson, Ph.D. Associate Director for Research. CBER Our Vision. Innovative Technology Advancing Public Health

324 views • 18 slides

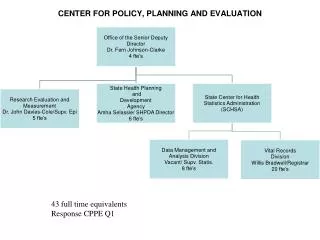

CENTER FOR POLICY, PLANNING AND EVALUATION

CENTER FOR POLICY, PLANNING AND EVALUATION. 43 full time equivalents Response CPPE Q1.

24 views • 1 slides

Population Policy and Program Monitoring and Evaluation

2. POPCOM and PIDS Project Reports. Herrin, A. N., 2002, Population Policy in the Philippines, 1969-2002".Orbeta, A. C., Jr. et al., 2002, Review of the Population Program: 1986-2002".Racelis, R. H. and A. N. Herrin, 2003, Philippine Population Management Program (PPMP) Expenditures, 1998 a

574 views • 43 slides

Department of State Program Evaluation Policy Overview

Department of State Program Evaluation Policy Overview. Spring 2013. Overview of Policy and Status. Policy was approved by Secretary on March 1, 2012 This is the first State Department Policy

312 views • 14 slides

POLICY AND PROGRAM EVALUATION

POLICY AND PROGRAM EVALUATION. Consession schools in colombia Cecilia Maria Velez Felipe Barrera Octubre 15 2010. OUTLINE. Evaluation The program : Consession Shools The evaluation General consideration about evaluation methods . 1. Evaluation.

326 views • 10 slides

University of Washington Center for Teaching and Policy

Tackling turnaround: a tale of two principals The Education trust national conference November 4, 2010. Bradley S. Portin University of Washington, Bothell. University of Washington Center for Teaching and Policy with generous support from The Wallace Foundation.

213 views • 6 slides

Graduate Diploma in Public Policy and Program Evaluation

Graduate Diploma in Public Policy and Program Evaluation. IPDET ORIENTATION June 13, 2012. Our “DPE” Program. Program Objectives Key Features Target Markets Annual In-take Course Progression Qualifications Application Process. Program Objectives. To support the CES CE designation

323 views • 16 slides

Office of Governmentwide Policy Center for Policy Evaluation

Office of Governmentwide Policy Center for Policy Evaluation. January 9, 2008. BECKY RHODES Deputy Associate Administrator. Agenda. Background Purpose Methodology Website Program Review Tool Follow-up activities. Background and Mission. Outgrowth of OMB PART evaluation

585 views • 41 slides

Policy and Program Evaluation

Policy and Program Evaluation. Logical Framework What is Program Evaluation Case 1: Indonesia PQEP Case 2: Honduras Educatodos Case 3: School Quality Program Summing Up. Logical Framework.

1.51k views • 83 slides

Center for Vaccine Ethics and Policy

Civil Society in Decision Making for Immunization Policies: the example of National Immunization Technical Advisory Groups (NITAGs). Center for Vaccine Ethics and Policy Global Vaccines 202X: Access, Equity, Ethics Philadelphia, USA, 3rd May 2011. About the SIVAC Initiative.

383 views • 15 slides

Center for Biologics Evaluation and Research. Applying Regulatory Science to Advance Development of Innovative, Safe and Effective Biologic Products. Carolyn A. Wilson, Ph.D. Associate Director for Research. CBER Mission.

435 views • 24 slides

Population Policy and Program Monitoring and Evaluation. Alejandro N. Herrin and Aniceto C. Orbeta, Jr. Baguio City June 26, 2003. POPCOM and PIDS Project Reports. Herrin, A. N., 2002, “Population Policy in the Philippines, 1969-2002”.

738 views • 53 slides

CENTER FOR BIOLOGICS EVALUATION AND RESEARCH

CENTER FOR BIOLOGICS EVALUATION AND RESEARCH. By: Wysteria Hart Sahar Mohammadi. Who is CBER?. Governed by: Section 351 of the Public Health Service Act Sections of the Food Drug and Cosmetics Act. What is CBER?. Regulate biologic products Safe for use Effective

326 views • 14 slides

Population Policy and Program Monitoring and Evaluation. Alejandro N. Herrin and Aniceto C. Orbeta, Jr. May 29, 2003. POPCOM and PIDS Project Reports. Herrin, A. N., 2002, “Population Policy in the Philippines, 1969-2002”.

608 views • 49 slides

Population Policy and Program Monitoring and Evaluation. Alejandro N. Herrin May 13, 2003. POPCOM and PIDS Project Reports. Herrin, A. N., 2002, “Population Policy in the Philippines, 1969-2002”. Orbeta, A. C., Jr. et al., 2002, “Review of the Population Program: 1986-2002”.

564 views • 37 slides

Center of Policy and Evaluation

Center of Policy and Evaluation. Mrs. Joddy Perkins Garner, Deputy Center of Policy and Evaluation (MTC) Office of Travel, Transportation and Asset Management GSA EXPO 2010. Agenda. Background Methodology Evaluation Criteria and Scoring 2009 Results

398 views • 23 slides

Program and policy evaluation

Program and policy evaluation. PPAS4200 February 1 st , 2012. Program evaluation.

404 views • 14 slides

Mental Health Evaluation Activities of the VA Program Evaluation and Resource Center

Mental Health Evaluation Activities of the VA Program Evaluation and Resource Center. Prepared for Institute of Medicine, Committee Evaluating VA Mental Health Services June 5, 2014. PERC History.

303 views • 18 slides

- Open access

- Published: 02 September 2024

“I am there just to get on with it”: a qualitative study on the labour of the patient and public involvement workforce

- Stan Papoulias (ORCID: orcid.org/0000-0002-7891-0923) &

- Louca-Mai Brady

Health Research Policy and Systems volume 22, Article number: 118 (2024)


Background

Workers tasked with specific responsibilities around patient and public involvement (PPI) are now routinely part of the organizational landscape for applied health research in the United Kingdom. Even as the National Institute for Health and Care Research (NIHR) has had a pioneering role in developing a robust PPI infrastructure for publicly funded health research in the United Kingdom, considerable barriers remain to embedding substantive and sustainable public input in the design and delivery of research. Notably, researchers and clinicians report a tension between funders’ orientation towards deliverables and the resources and labour required to embed public involvement in research. These and other tensions require further investigation.

Methods

This was a qualitative study with participatory elements. Using purposive and snowball sampling and attending to regional and institutional diversity, we conducted 21 semi-structured interviews with individuals holding NIHR-funded formal PPI roles across England. Interviews were analysed through reflexive thematic analysis with coding and framing presented and adjusted through two workshops with study participants.

Results

We generated five overarching themes which signal a growing tension between expectations put on staff in PPI roles and the structural limitations of these roles: (i) the instability of support; (ii) the production of invisible labour; (iii) PPI work as more than a job; (iv) accountability without control; and (v) delivering change without changing.

Conclusions

The NIHR PPI workforce has enabled considerable progress in embedding patient and public input in research activities. However, the role has led not to a resolution of the tension between performance management priorities and the labour of PPI, but rather to its displacement and – potentially – its intensification. We suggest that the expectation to “deliver” PPI hinges on a paradoxical demand to deliver a transformational intervention that is fundamentally divorced from any labour of transformation. We conclude that ongoing efforts to transform health research ecologies so as to better respond to the needs of patients will need to grapple with the force and consequences of this paradoxical demand.


Introduction – the labour of PPI

The inclusion of patients, service users and members of the public in the design, delivery and governance of health research is increasingly embedded in policy internationally, as partnerships with the beneficiaries of health research are seen to increase its relevance, acceptability and implementability. In this context, a growing number of studies have sought to evaluate the impact of public participation on research, including identifying the barriers and facilitators of good practice [1, 2, 3, 4, 5, 6, 7, 8]. Some of this inquiry has centred on power, control and agency. Attention has been drawn, for example, to the scarcity of user or community-led research and to the low status of experiential knowledge in the hierarchies of knowledge production guiding evidence-based medicine [9]. Such hierarchies, authors have argued, constrain the legitimacy that the experiential knowledge of patients can achieve within academic-led research [10], may block the possibility of equitable partnerships such as those envisioned in co-production [11] and may function as a pull back against more participatory or emancipatory models of research [12, 13, 14]. In this way, patient and public inclusion in research may become less likely to aim towards inclusion of public and patient-led priorities, acting instead as a kind of “handmaiden” to research, servicing and validating institutionally pre-defined research goals [15, 16, 17].

Research on how public participation-related activities function as a form of labour within a research ecosystem, however, is scarce [18]. In this paper, we examine the labour of embedding such participation, with the aim of understanding how such labour fits within the regimes of performance management underpinning current research systems. We argue that considering this “fit” is crucial for a broader understanding of the implementation of public participation and therefore its potential impact on research delivery. To this end, we present findings from a UK study of the labour of an emerging professional cadre: “patient and public involvement” leads, managers and co-ordinators (henceforth PPI, the term routinely used for public participation in the United Kingdom). We concentrate specifically on staff working on research partnerships and centres funded by the National Institute for Health and Care Research (NIHR). This focus on the NIHR is motivated by the organization’s status as the centralized research and development arm of the National Health Service (NHS), with an important role in shaping health research systems in the United Kingdom since 2006. NIHR explicitly installed PPI in research as a foundational part of its mission and is currently considered a global leader in the field [19]. We contend that exploring the labour of this radically under-investigated workforce is crucial for understanding what we see as the shifting tensions – outlined in later sections – that underpin the key policy priority of embedding patients as collaborators in applied health research.
To contextualize our study, we first consider how the requirement for PPI in research relates to the overall policy rationale underpinning the organizational mission of the NIHR as the NHS’s research arm, then consider existing research on tensions identified in efforts to embed PPI in a health system governed through regimes of performance management and finally articulate the ways in which dedicated PPI workers’ responsibilities have been developed as a way to address these tensions.

The NIHR as a site of “reformed managerialism”

The NIHR was founded in 2006 with the aim of centralizing and rationalizing NHS research and development activities. Its foundation instantiated the then Labour government’s efforts to strengthen and consolidate health research in the UK while also tackling some of the problems associated with the earlier introduction of new public management (NPM) principles in the governance of public services. NPM had been introduced in the UK public sector by Margaret Thatcher’s government, in line with similar trends in much of the Global North [ 20 ]. The aim was to curb what the Conservatives saw as excesses in both public spending and professional autonomy. NPM consisted in management techniques adapted from the private sector: in the NHS this introduction was formalized via the 1990 National Health Service and Community Care Act, which created an internal market for services, with local authorities purchasing services from local health providers (NHS Trusts) [ 21 ]; top-down management control; an emphasis on cost-efficiency; a focus on targets and outputs over process; an intensification of metrics for performance management; and a positioning of patients and the public as consumers of health services with a right to choose [ 22 , 23 ]. In the context of the NHS, cost-efficiency meant concentrating on services and on research which would have the greatest positive impact on population health while preventing research waste [ 24 ]. By the mid-1990s, however, considerable criticism had been directed towards this model, including concerns that NPM techniques resulted in silo-like operations and public sector fragmentation, which limited the capacity for collaboration between services essential for effective policy. Importantly, there was also a sense that an excessive managerialism had resulted in a disconnection of public services from public and civic aims, that is, from the values, voices and interests of the public [ 25 , 26 ].

In this context, the emergence of the NIHR can be contextualized through the succeeding Labour government’s much publicized reformed managerialism, announced in their 1997 white paper “The New NHS: Modern, Dependable” [ 27 ]. Here, the reworking of NPM towards “network governance” meant that the silo-like effects of competition and marketization were to be attenuated through a turn to cross-sector partnerships and a renewed attention to quality standards and to patients’ voices [ 28 ]. It has been argued, however, that the new emphasis on partnerships did not undermine the dominance of performance management, while the investment in national standards for quality and safety resulted in an intensified metricization, with the result that this reform may have been more apparent than real, amounting to “NPM with a human face” [ 29 , 30 , 31 ]. Indeed, the NIHR can be seen as an exemplary instantiation of this model: as a centralized commissioner of research for the NHS, the NIHR put in place reporting mechanisms and performance indicators to ensure transparent and cost-efficient use of funds, with outputs and impact measured, managed and ranked [ 24 ]. At the same time, the founding document of the NIHR, Best Research for Best Health, articulates the redirection of such market-oriented principles towards a horizon of public good and patient benefit. The document firmly and explicitly positioned patients and the public as both primary beneficiaries of and important partners in the delivery of health research. People (patients) were to be placed “at the centre of a research system that focuses on quality, transparency and value for money” [ 32 ], a mission implemented through the installation of “structures and mechanisms to facilitate increased involvement of patients and the public in all stages of NHS Research & Development” [ 33 ]. This involvement would be supported by the advisory group INVOLVE, a key part of the new centralized health research system. 
INVOLVE, which had started life in 1996 as Consumers in NHS Research, funded by the Department of Health, testified to the Labour administration’s investment in championing “consumer” involvement in NHS research as a means of increasing research relevance [ 34 ]. The foundation of the NIHR then exemplified the beneficent alignment of NPM with public benefit, represented through the imaginary of a patient-centred NHS, performing accountability to the consumers/taxpayers through embedding PPI in all its activities. In this context, “public involvement” functioned as the lynchpin through which such alignment could be effected.

PPI work and the “logic of deliverables”: a site of tension

Existing research on the challenges of embedding PPI has typically focussed on the experiences of academics tasked with doing so within university research processes. For example, Pollard and Evans, in a 2013 paper, argue that undertaking PPI work in mental health research can be arduous, emotionally taxing and time consuming, and as such, can be in tension with expectations for cost-efficient and streamlined delivery of research outputs [ 35 ]. Similarly, Papoulias and Callard found that the “logic of deliverables” governing research funding can militate against undertaking PPI or even constitute PPI as “out of sync” with research timelines [ 36 ]. While recent years have seen a deepening operationalization of PPI in the NIHR and beyond, there are indications that this process, rather than removing these tensions, may have recast them in a different form. For example, when PPI is itself set up as a performance-based obligation, researchers, faced with the requirement to satisfy an increasing number of such obligations, may either engage in “surface-level spectacles” to impress the funder while eschewing the long-term commitment necessary for substantive and ongoing PPI, or altogether refuse to undertake PPI, relegating the responsibility to others [ 37 , 38 ]. Such refusals may then contribute to a sharpening of workplace inequalities: insofar as PPI work is seen as “low priority” for more established academic staff, it can be unevenly distributed within research organizations, with precariously employed junior researchers and women typically assigned PPI responsibilities with the assumption that they possess the “soft skills” necessary for these roles [ 39 ].

Notably, the emergence of a dedicated PPI workforce is intended as a remedy for this tension by providing support, expertise and ways of negotiating the challenges associated with undertaking PPI responsibilities. In the NIHR, this workforce is part of a burgeoning infrastructure for public involvement which includes national standards, training programmes, payment guidelines, reporting frameworks and impact assessments [ 40 , 41 , 42 , 43 , 44 , 45 ]. By 2015, an INVOLVE review of PPI activities during the first 10 years of the NIHR attested to “a frenzy of involvement activity…across the system”, including more than 200 staff in PPI-related roles [ 40 ]. As NIHR expectations regarding PPI have become more extensive, responsibilities of PPI workers have proliferated, with INVOLVE organizing surveys and national workshops to identify their skills and support needs [ 41 , 42 ]. In 2019, the NIHR mandated the inclusion of a “designated PPI lead” in all funding applications, listing an extensive and complex roster of responsibilities. These now included delivery and implementation of long-term institutional strategies and objectives, thus testifying to the assimilation of involvement activities within the roster of “performance-based obligations” within research delivery systems [ 43 ]. Notably, however, this formalization of PPI responsibilities is ambiguous: the website states that the role “should be a budgeted and resourced team member” and that they should have “the relevant skills, experience and authority”, but it does not specify whether this should be a researcher with skills in undertaking PPI or indeed someone hired specifically for their skills in PPI, that is, a member of the PPI workforce. Equally, the specifications, skills and support needs, which have been brought together into a distinct role, have yet to crystallize into a distinct career trajectory.

Case studies and evaluations of PPI practice often reference the skills and expertise required in leading and managing PPI. Chief among them are relational and communication skills: PPI workers have been described as “brokers” who mediate and enable learning between research and lay spaces [ 44 , 45 ]; skilled facilitators enabling inclusive practice [ 46 , 47 , 48 ]; “boundary spanners” navigating the complexities of bridging researchers with public contributors and undertaking community engagement through ongoing relational work [ 49 ]. While enumerating the skillset required for PPI work, some of these studies have identified a broader organizational devaluation of PPI workers: Brady and colleagues write of PPI roles as typically underfunded with poor job security, which undermines the continuity necessary for generating trust in PPI work [ 46 ], while Mathie and colleagues report that many PPI workers describe their work as “invisible”, a term which the authors relate to the sociological work on women’s labour (particularly housework and care labour) which is unpaid and rendered invisible insofar as it is naturalized as “care” [ 50 ]. Research on the neighbouring role of public engagement professionals in UK universities, which has been more extensive than that on PPI roles, can be instructive in fleshing out some of these points: public engagement professionals (PEPs) are tasked with mediating between academics and various publics in the service of a publicly accountable university. In a series of papers on the status of PEPs in university workplaces, Watermeyer and colleagues argue that, since public engagement labour is relegated to non-academic forms of expertise which lack recognition, PEPs’ efforts in boundary spanning do not confer prestige. This lack of prestige can, in effect, function as a “boundary block” obstructing PEPs’ work [ 51 , 52 ]. 
Furthermore, like Mathie and Brady, Watermeyer and colleagues also argue that the relational and facilitative nature of engagement labour constitutes such labour as feminized and devalued, with PEPs also reporting that their work remains invisible to colleagues and institutional audit instruments alike [ 50 , 53 ].

The present study seeks to explore further these suggestions that PPI labour, like that of public engagement professionals, lacks recognition and is constituted as invisible. However, we maintain that there are significant differences between the purpose and moral implications of involvement and engagement activities. PPI constitutes an amplification of the moral underpinnings of engagement policies: while public engagement seeks to showcase the public utility of academic research, public involvement aims to directly contribute to optimizing and personalizing healthcare provision by minimizing research waste, ensuring that treatments and services tap into the needs of patient groups, and delivering the vision of a patient-centred NHS. Therefore, even as PPI work may be peripheral to other auditable research activities, it is nevertheless central to the current rationale for publicly funded research ecosystems: by suturing performance management and efficiency metrics onto a discourse of public benefit, such work constitutes the moral underpinnings of performance management in health research systems. Therefore, an analysis of the labour of the dedicated PPI workforce is crucial for understanding how this suturing of performance management and “public benefit” works over the conjured figures of patients in need of benefit. This issue lies at the heart of our research study.

Our interview study formed the first phase of a multi-method qualitative inquiry into the working practices of NIHR-funded PPI leads. While PPI lead posts are in evidence in most NIHR-funded research, we decided to focus on NIHR infrastructure funding specifically: these are 5-year grants absorbing a major tranche of NIHR funds (over £600 million annually in 2024). They function as “strategic investments” embodying the principles outlined in Best Research for Best Health: they are awarded to research organizations and NHS Trusts for the purposes of developing and consolidating capacious environments for early stage and applied clinical research, including building a research delivery workforce and embedding a regional infrastructure of partnerships with industry, the third sector and patients and communities [ 55 ]. We believe that understanding the experience of the PPI workforce funded by these grants may give better insights into NIHR’s ecosystem and priorities, since they are specifically set up to support the development of sustainable partnerships and embed the translational pipeline into clinical practice.

The study used purposive sampling with snowball elements. In 2020–2021, we mapped all 72 NIHR infrastructure grants, identified the PPI teams working in each of these using publicly available information (found on the NIHR website and the websites and PPI pages of every organization awarded infrastructure grants) and sent out invitation emails to all teams. Where applicable, we also sent invitations to mailing lists of PPI-lead national networks connected to these grants. Inclusion criteria were that potential participants should have oversight roles, and/or be tasked with cross-programme/centre responsibilities, meaning that their facilitative and strategy building roles should cover the entirety of activities funded by one (and sometimes more than one) NIHR infrastructure grant or centre, including advisory roles over most or all research projects associated with the centre or grant, and that they had worked in this or a comparable environment for 2 years.