Variables in Research – Definition, Types and Examples

Variables in Research

Definition:

In Research, Variables refer to characteristics or attributes that can be measured, manipulated, or controlled. They are the factors that researchers observe or manipulate to understand the relationship between them and the outcomes of interest.

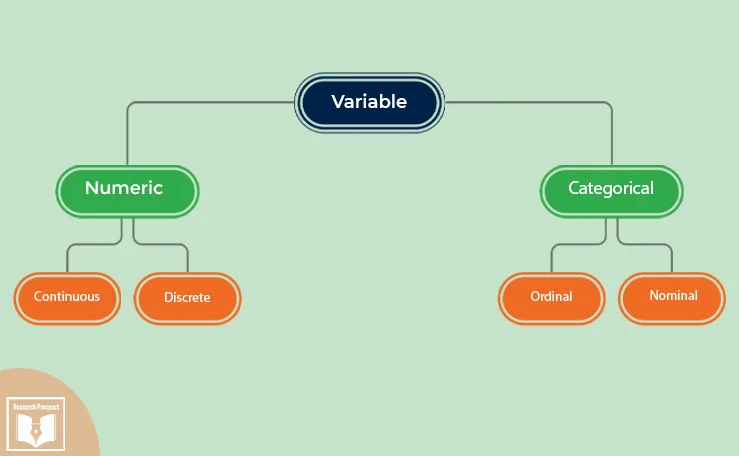

Types of Variables in Research

Types of Variables in Research are as follows:

Independent Variable

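This is the variable that the researcher manipulates or, in non-experimental studies, selects as the presumed cause. It is also known as the predictor variable, as it is used to predict changes in the dependent variable. Examples include dosage and treatment type, which can be manipulated directly, and age and gender, which cannot be manipulated but are often treated as independent (predictor) variables.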

Dependent Variable

This is the variable that is measured or observed to determine the effects of the independent variable. It is also known as the outcome variable, as it is the variable that is affected by the independent variable. Examples of dependent variables include blood pressure, test scores, and reaction time.

Confounding Variable

This is a variable that influences both the independent variable and the dependent variable, and can therefore distort the apparent relationship between them. It is not the focus of the study but could bias the results if it is not accounted for. For example, in a study on the effects of a new drug on a disease, the patient’s age could be a confounding variable if older patients both have more severe symptoms and are less likely to be given the drug.

Mediating Variable

This is a variable that explains the relationship between the independent variable and the dependent variable. It is a variable that comes in between the independent and dependent variables and is affected by the independent variable, which then affects the dependent variable. For example, in a study on the relationship between exercise and weight loss, the mediating variable could be metabolism, as exercise can increase metabolism, which can then lead to weight loss.

Moderator Variable

This is a variable that affects the strength or direction of the relationship between the independent variable and the dependent variable. It is a variable that influences the effect of the independent variable on the dependent variable. For example, in a study on the effects of caffeine on cognitive performance, the moderator variable could be age, as older adults may be more sensitive to the effects of caffeine than younger adults.

Control Variable

This is a variable that is held constant or controlled by the researcher to ensure that it does not affect the relationship between the independent variable and the dependent variable. Control variables are important to ensure that any observed effects are due to the independent variable and not to other factors. For example, in a study on the effects of a new teaching method on student performance, the control variables could include class size, teacher experience, and student demographics.

Continuous Variable

This is a variable that can take on any value within a certain range. Continuous variables can be measured on a scale and are often used in statistical analyses. Examples of continuous variables include height, weight, and temperature.

Categorical Variable

This is a variable that can take on a limited number of values or categories. Categorical variables can be nominal or ordinal. Nominal variables have no inherent order, while ordinal variables have a natural order. Examples of categorical variables include gender, race, and educational level.

Discrete Variable

This is a variable that can only take on specific values. Discrete variables are often used in counting or frequency analyses. Examples of discrete variables include the number of siblings a person has, the number of times a person exercises in a week, and the number of students in a classroom.

Dummy Variable

This is a variable that takes on only two values, typically 0 and 1, and is used to represent categorical variables in statistical analyses. Dummy variables are often used when a categorical variable cannot be used directly in an analysis. For example, in a study on the effects of gender on income, a dummy variable could be created, with 0 representing female and 1 representing male.
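As a minimal sketch of dummy coding, the snippet below turns a categorical variable into 0/1 indicator columns. A variable with k categories needs k - 1 dummy variables, because the omitted reference category is represented by all zeros. The function name `dummy_code` and the education data are hypothetical and used only for illustration.

```python
def dummy_code(values, reference):
    """Return one 0/1 indicator column per non-reference category."""
    categories = sorted(set(values) - {reference})
    return {
        f"is_{category}": [1 if v == category else 0 for v in values]
        for category in categories
    }


# Hypothetical data; "high_school" is chosen as the reference category.
education = ["high_school", "college", "graduate", "college"]
dummies = dummy_code(education, reference="high_school")
print(dummies)
# {'is_college': [0, 1, 0, 1], 'is_graduate': [0, 0, 1, 0]}
```

With only two categories, as in the gender example above, this reduces to a single 0/1 column.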

Extraneous Variable

This is any variable other than the independent variable that is not of interest in the study but can still affect the dependent variable. Extraneous variables can lead to erroneous conclusions if left uncontrolled; they can be controlled through random assignment or statistical techniques.

Latent Variable

This is a variable that cannot be directly observed or measured, but is inferred from other variables. Latent variables are often used in psychological or social research to represent constructs such as personality traits, attitudes, or beliefs.

Moderator-mediator Variable

This is a variable that acts as both a moderator and a mediator: it changes the strength or direction of the relationship between the independent and dependent variables, and it also transmits part of the independent variable’s effect to the dependent variable. Such variables typically appear in complex statistical models.

Variables Analysis Methods

There are different methods to analyze variables in research, including:

- Descriptive statistics: This involves analyzing and summarizing data using measures such as mean, median, mode, range, standard deviation, and frequency distribution. Descriptive statistics are useful for understanding the basic characteristics of a data set.

- Inferential statistics: This involves making inferences about a population based on sample data. Inferential statistics use techniques such as hypothesis testing, confidence intervals, and regression analysis to draw conclusions from data.

- Correlation analysis: This involves examining the relationship between two or more variables. Correlation analysis can determine the strength and direction of the relationship between variables, and can be used to make predictions about future outcomes, although a correlation on its own does not establish causation.

- Regression analysis: This involves modelling the relationship between one or more independent variables and a dependent variable. Regression analysis can be used to predict the value of the dependent variable from the values of the independent variables, and to test whether the relationship is statistically significant.

- Factor analysis: This involves identifying patterns and relationships among a large number of variables. Factor analysis can be used to reduce the complexity of a data set and identify underlying factors or dimensions.

- Cluster analysis: This involves grouping observations into clusters based on their similarity across variables. Cluster analysis can be used to identify patterns or segments within a data set, and can be useful for market segmentation or customer profiling.

- Multivariate analysis: This involves analyzing multiple variables simultaneously. Multivariate analysis can be used to understand complex relationships between variables, and can be useful in fields such as social science, finance, and marketing.
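The first and third methods in the list above can be sketched in a few lines using only the Python standard library. The data are made up, and `pearson_r` is a hypothetical helper written out by hand so that the formula for the correlation coefficient is visible.

```python
import statistics


def pearson_r(x, y):
    """Pearson correlation: covariance scaled by both standard deviations."""
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical observations for five people.
hours_exercise = [1, 2, 3, 4, 5]
resting_heart_rate = [80, 76, 74, 70, 65]

# Descriptive statistics summarize one variable at a time.
print(statistics.mean(resting_heart_rate))    # 73
print(statistics.median(resting_heart_rate))  # 74

# Correlation describes how two variables move together.
r = pearson_r(hours_exercise, resting_heart_rate)
print(round(r, 2))  # -0.99
```

A value of r close to -1 indicates a strong negative linear relationship in this toy data set; it says nothing about why the two variables move together.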

Examples of Variables

- Age: This is a continuous variable that represents the age of an individual in years.

- Gender: This is a categorical variable that represents the biological sex of an individual and can take on values such as male and female.

- Education level: This is a categorical variable that represents the level of education completed by an individual and can take on values such as high school, college, and graduate school.

- Income: This is a continuous variable that represents the amount of money earned by an individual in a year.

- Weight: This is a continuous variable that represents the weight of an individual in kilograms or pounds.

- Ethnicity: This is a categorical variable that represents the ethnic background of an individual and can take on values such as Hispanic, African American, and Asian.

- Time spent on social media: This is a continuous variable that represents the amount of time an individual spends on social media in minutes or hours per day.

- Marital status: This is a categorical variable that represents the marital status of an individual and can take on values such as married, divorced, and single.

- Blood pressure: This is a continuous variable that represents the force of blood against the walls of arteries, measured in millimeters of mercury.

- Job satisfaction: This variable represents an individual’s level of satisfaction with their job. When measured with a Likert scale it is strictly ordinal, although summed or averaged scale scores are often treated as continuous.

Applications of Variables

Variables are used in many different applications across various fields. Here are some examples:

- Scientific research: Variables are used in scientific research to understand the relationships between different factors and to make predictions about future outcomes. For example, scientists may study the effects of different variables on plant growth or the impact of environmental factors on animal behavior.

- Business and marketing: Variables are used in business and marketing to understand customer behavior and to make decisions about product development and marketing strategies. For example, businesses may study variables such as consumer preferences, spending habits, and market trends to identify opportunities for growth.

- Healthcare: Variables are used in healthcare to monitor patient health and to make treatment decisions. For example, doctors may use variables such as blood pressure, heart rate, and cholesterol levels to diagnose and treat cardiovascular disease.

- Education: Variables are used in education to measure student performance and to evaluate the effectiveness of teaching strategies. For example, teachers may use variables such as test scores, attendance, and class participation to assess student learning.

- Social sciences: Variables are used in social sciences to study human behavior and to understand the factors that influence social interactions. For example, sociologists may study variables such as income, education level, and family structure to examine patterns of social inequality.

Purpose of Variables

Variables serve several purposes in research, including:

- To provide a way of measuring and quantifying concepts: Variables help researchers measure and quantify abstract concepts such as attitudes, behaviors, and perceptions. By assigning numerical values to these concepts, researchers can analyze and compare data to draw meaningful conclusions.

- To help explain relationships between different factors: Variables help researchers identify and explain relationships between different factors. By analyzing how changes in one variable affect another variable, researchers can gain insight into the complex interplay between different factors.

- To make predictions about future outcomes: Variables help researchers make predictions about future outcomes based on past observations. By analyzing patterns and relationships between different variables, researchers can make informed predictions about how different factors may affect future outcomes.

- To test hypotheses: Variables help researchers test hypotheses and theories. By collecting and analyzing data on different variables, researchers can test whether their predictions are accurate and whether their hypotheses are supported by the evidence.

Characteristics of Variables

Characteristics of Variables are as follows:

- Measurement: Variables can be measured using different scales, such as nominal, ordinal, interval, or ratio scales. The scale used to measure a variable determines which statistical analyses can be applied.

- Range: Variables have a range of values that they can take on. The set of possible values can be finite, such as the number of students in a class, or infinite, such as the possible values of a continuous variable like temperature.

- Variability: Variables can have different levels of variability, which refers to the degree to which the values of the variable differ from each other. Variables with high variability have widely spread values, while variables with low variability have values that are more similar to each other.

- Validity and reliability: Variables should be both valid and reliable to ensure accurate and consistent measurement. Validity refers to the extent to which a variable measures what it is intended to measure, while reliability refers to the consistency of the measurement when it is repeated, for example over time.

- Directionality: Some relationships between variables have directionality, meaning that the relationship is not symmetrical: one variable is presumed to influence the other. For example, in a study of the relationship between smoking and lung cancer, smoking is the independent variable and lung cancer is the dependent variable.
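The point about measurement scales can be illustrated with a short sketch that picks a summary statistic according to the scale of the variable. The `Scale` enum and `summarize` function are hypothetical names, and the mapping shown (mode for nominal, median for ordinal, mean and standard deviation for interval or ratio) is a common textbook convention rather than a fixed rule.

```python
from enum import Enum
import statistics


class Scale(Enum):
    NOMINAL = "nominal"
    ORDINAL = "ordinal"
    INTERVAL = "interval"
    RATIO = "ratio"


def summarize(values, scale):
    """Return a summary that is meaningful for the given measurement scale."""
    if scale is Scale.NOMINAL:
        # Categories have no order, so only the most frequent value makes sense.
        return {"mode": statistics.mode(values)}
    if scale is Scale.ORDINAL:
        # Order is meaningful but distances are not; median_low returns a real data value.
        return {"median": statistics.median_low(values)}
    # Interval and ratio scales support arithmetic on the values.
    return {"mean": statistics.mean(values), "stdev": statistics.stdev(values)}


print(summarize(["single", "married", "married"], Scale.NOMINAL))  # {'mode': 'married'}
print(summarize([1, 2, 2, 3, 5], Scale.ORDINAL))                   # {'median': 2}
print(summarize([20.0, 22.0, 24.0], Scale.INTERVAL))               # {'mean': 22.0, 'stdev': 2.0}
```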

Advantages of Variables

Here are some of the advantages of using variables in research:

- Control: Variables allow researchers to control the effects of external factors that could influence the outcome of the study. By manipulating and controlling variables, researchers can isolate the effects of specific factors and measure their impact on the outcome.

- Replicability: Variables make it possible for other researchers to replicate the study and test its findings. By defining and measuring variables consistently, other researchers can conduct similar studies to validate the original findings.

- Accuracy: Variables make it possible to measure phenomena accurately and objectively. By defining and measuring variables precisely, researchers can reduce bias and increase the accuracy of their findings.

- Generalizability: Variables allow researchers to generalize their findings to larger populations. By measuring well-defined variables in a sample that is representative of the population, researchers can draw conclusions that are applicable to a broader range of individuals.

- Clarity: Variables help researchers to communicate their findings more clearly and effectively. By defining and categorizing variables, researchers can organize and present their findings in a way that is easily understandable to others.

Disadvantages of Variables

Here are some of the main disadvantages of using variables in research:

- Simplification: Variables may oversimplify the complexity of real-world phenomena. By breaking down a phenomenon into variables, researchers may lose important information and context, which can affect the accuracy and generalizability of their findings.

- Measurement error: Variables rely on accurate and precise measurement, and measurement error can affect the reliability and validity of research findings. The use of subjective or poorly defined variables can also introduce measurement error into the study.

- Confounding variables: Confounding variables are factors outside the focus of the study that affect the relationship between the variables of interest. If confounding variables are not measured and accounted for, they can distort or obscure that relationship.

- Limited scope: Variables are defined by the researcher, and the scope of the study is therefore limited by the researcher’s choice of variables. This can lead to a narrow focus that overlooks important aspects of the phenomenon being studied.

- Ethical concerns: The selection and measurement of variables may raise ethical concerns, especially in studies involving human subjects. For example, using variables that are related to sensitive topics, such as race or sexuality, may raise concerns about privacy and discrimination.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Types of Variables in Research | Definitions & Examples

Published on 19 September 2022 by Rebecca Bevans. Revised on 28 November 2022.

In statistical research, a variable is defined as an attribute of an object of study. Choosing which variables to measure is central to good experimental design.

You need to know which types of variables you are working with in order to choose appropriate statistical tests and interpret the results of your study.

You can usually identify the type of variable by asking two questions:

- What type of data does the variable contain?

- What part of the experiment does the variable represent?

Table of contents

- Types of data: quantitative vs categorical variables

- Parts of the experiment: independent vs dependent variables

- Other common types of variables

- Frequently asked questions about variables

Data is a specific measurement of a variable – it is the value you record in your data sheet. Data is generally divided into two categories:

- Quantitative data represents amounts.

- Categorical data represents groupings.

A variable that contains quantitative data is a quantitative variable; a variable that contains categorical data is a categorical variable. Each of these types of variable can be broken down into further types.

Quantitative variables

When you collect quantitative data, the numbers you record represent real amounts that can be added, subtracted, divided, etc. There are two types of quantitative variables: discrete and continuous.

Categorical variables

Categorical variables represent groupings of some kind. They are sometimes recorded as numbers, but the numbers represent categories rather than actual amounts of things.

There are three types of categorical variables: binary, nominal, and ordinal variables.

*Note that sometimes a variable can work as more than one type! An ordinal variable can also be used as a quantitative variable if the scale is numeric and doesn’t need to be kept as discrete integers. For example, star ratings on product reviews are ordinal (1 to 5 stars), but the average star rating is quantitative.
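The star-rating case can be sketched as follows. The ratings are invented for illustration; the point is that the same numbers can be tallied as ordered categories or averaged as a quantity.

```python
from collections import Counter
import statistics

# Hypothetical product reviews, each a rating from 1 to 5 stars.
star_ratings = [5, 4, 4, 3, 5, 1]

# Treated as ordinal: count how many reviews fall into each ordered category.
distribution = Counter(star_ratings)
print(distribution[5], distribution[4], distribution[2])  # 2 2 0

# Treated as quantitative: the average no longer has to be a whole number.
average_rating = statistics.mean(star_ratings)
print(round(average_rating, 2))  # 3.67
```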

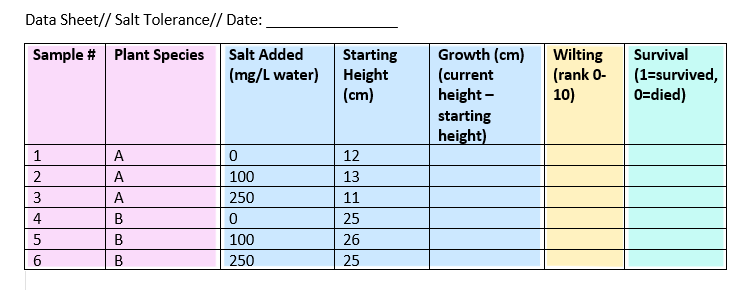

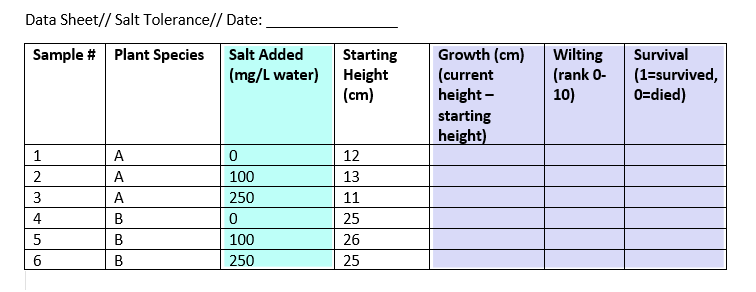

Example data sheet

To keep track of your salt-tolerance experiment, you make a data sheet where you record information about the variables in the experiment, like salt addition and plant health.

To gather information about plant responses over time, you can fill out the same data sheet every few days until the end of the experiment. This example sheet is colour-coded according to the type of variable: nominal, continuous, ordinal, and binary.
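One way to keep variable types explicit in such a data sheet is to give each column a type that matches its role. The sketch below is only an illustration: the field names, units, and values are hypothetical and are not taken from the original sheet.

```python
from dataclasses import dataclass
from enum import IntEnum


class PlantHealth(IntEnum):
    """Ordinal variable: categories with a meaningful order."""
    POOR = 1
    FAIR = 2
    GOOD = 3


@dataclass
class Observation:
    species: str          # nominal: unordered category (hypothetical field)
    salt_added_g: float   # continuous: measured amount (hypothetical unit)
    health: PlantHealth   # ordinal: ranked category
    survived: bool        # binary: yes/no outcome


sheet = [
    Observation("tomato", 0.0, PlantHealth.GOOD, True),
    Observation("tomato", 5.0, PlantHealth.FAIR, True),
    Observation("pepper", 10.0, PlantHealth.POOR, False),
]

# Ordinal values can be ranked, so sorting by health is meaningful.
worst_first = sorted(sheet, key=lambda row: row.health)
print([row.health.name for row in worst_first])  # ['POOR', 'FAIR', 'GOOD']
```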

Experiments are usually designed to find out what effect one variable has on another – in our example, the effect of salt addition on plant growth.

You manipulate the independent variable (the one you think might be the cause) and then measure the dependent variable (the one you think might be the effect) to find out what this effect might be.

You will probably also have variables that you hold constant (control variables) in order to focus on your experimental treatment.

In this experiment, we have one independent and three dependent variables.

The other variables in the sheet can’t be classified as independent or dependent, but they do contain data that you will need in order to interpret your dependent and independent variables.

What about correlational research?

When you do correlational research, the terms ‘dependent’ and ‘independent’ don’t apply, because you are not trying to establish a cause-and-effect relationship.

However, there might be cases where one variable clearly precedes the other (for example, rainfall leads to mud, rather than the other way around). In these cases, you may call the preceding variable (i.e., the rainfall) the predictor variable and the following variable (i.e., the mud) the outcome variable.

Once you have defined your independent and dependent variables and determined whether they are categorical or quantitative, you will be able to choose the correct statistical test.

But there are many other ways of describing variables that help with interpreting your results. Some useful types of variable are listed below.

A confounding variable is closely related to both the independent and dependent variables in a study. An independent variable represents the supposed cause, while the dependent variable is the supposed effect. A confounding variable is a third variable that influences both the independent and dependent variables.

Failing to account for confounding variables can cause you to wrongly estimate the relationship between your independent and dependent variables.
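A small worked example may make this concrete. The counts below are invented: age group influences both who receives a treatment and who recovers, while the treatment itself has no effect within either age group. Comparing recovery rates without accounting for age nevertheless suggests a benefit. The helper names are hypothetical.

```python
# (age_group, treated, n_patients, n_recovered) -- made-up counts
counts = [
    ("young", True, 8, 6),
    ("young", False, 4, 3),
    ("old", True, 4, 1),
    ("old", False, 8, 2),
]


def recovery_rate(rows):
    """Share of patients who recovered across the given rows."""
    total = sum(n for _, _, n, _ in rows)
    recovered = sum(r for _, _, _, r in rows)
    return recovered / total


def effect(rows):
    """Recovery rate of treated patients minus that of untreated patients."""
    treated = [row for row in rows if row[1]]
    untreated = [row for row in rows if not row[1]]
    return recovery_rate(treated) - recovery_rate(untreated)


# Ignoring age: treated patients appear to recover more often.
naive = effect(counts)
print(round(naive, 3))  # 0.167

# Comparing within each age group: the apparent effect disappears.
by_age = {g: effect([row for row in counts if row[0] == g]) for g in ("young", "old")}
print(by_age)  # {'young': 0.0, 'old': 0.0}
```

Stratifying by the confounder, as done here, is only one way to account for it; random assignment and statistical adjustment are others.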

Discrete and continuous variables are two types of quantitative variables:

- Discrete variables represent counts (e.g., the number of objects in a collection).

- Continuous variables represent measurable amounts (e.g., water volume or weight).

You can think of independent and dependent variables in terms of cause and effect: an independent variable is the variable you think is the cause, while a dependent variable is the effect.

In an experiment, you manipulate the independent variable and measure the outcome in the dependent variable. For example, in an experiment about the effect of nutrients on crop growth:

- The independent variable is the amount of nutrients added to the crop field.

- The dependent variable is the biomass of the crops at harvest time.

Defining your variables, and deciding how you will manipulate and measure them, is an important part of experimental design.
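For the nutrient example, the relationship between the independent and dependent variable could be estimated with a simple least-squares line. This is a sketch with made-up numbers; `fit_line` is a hypothetical helper, and a real analysis would also assess uncertainty and model fit.

```python
def fit_line(x, y):
    """Ordinary least squares for one predictor: returns (slope, intercept)."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Independent variable: nutrients added (hypothetical units per plot).
nutrients = [0, 10, 20, 30, 40]
# Dependent variable: crop biomass at harvest (hypothetical units).
biomass = [2.0, 2.9, 4.1, 5.0, 6.0]

slope, intercept = fit_line(nutrients, biomass)
print(round(slope, 3), round(intercept, 2))  # 0.101 1.98
```

The slope estimates how much the dependent variable changes for each additional unit of the independent variable.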

Bevans, R. (2022, November 28). Types of Variables in Research | Definitions & Examples. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/research-methods/variables-types/

2.2: Concepts, Constructs, and Variables

- Anol Bhattacherjee

- University of South Florida via Global Text Project

We discussed in Chapter 1 that although research can be exploratory, descriptive, or explanatory, most scientific research tends to be of the explanatory type in that it searches for potential explanations of observed natural or social phenomena. Explanations require development of concepts or generalizable properties or characteristics associated with objects, events, or people. While objects such as a person, a firm, or a car are not concepts, their specific characteristics or behavior such as a person’s attitude toward immigrants, a firm’s capacity for innovation, and a car’s weight can be viewed as concepts.

Knowingly or unknowingly, we use different kinds of concepts in our everyday conversations. Some of these concepts have been developed over time through our shared language. Sometimes, we borrow concepts from other disciplines or languages to explain a phenomenon of interest. For instance, the idea of gravitation borrowed from physics can be used in business to describe why people tend to “gravitate” to their preferred shopping destinations. Likewise, the concept of distance can be used to explain the degree of social separation between two otherwise collocated individuals. Sometimes, we create our own concepts to describe a unique characteristic not described in prior research. For instance, technostress is a new concept referring to the mental stress one may face when asked to learn a new technology.

Concepts may also have progressive levels of abstraction. Some concepts such as a person’s weight are precise and objective, while other concepts such as a person’s personality may be more abstract and difficult to visualize. A construct is an abstract concept that is specifically chosen (or “created”) to explain a given phenomenon. A construct may be a simple concept, such as a person’s weight, or a combination of a set of related concepts such as a person’s communication skill, which may consist of several underlying concepts such as the person’s vocabulary, syntax, and spelling. The former instance (weight) is a unidimensional construct, while the latter (communication skill) is a multi-dimensional construct (i.e., it consists of multiple underlying concepts). The distinction between constructs and concepts is clearer in multi-dimensional constructs, where the higher order abstraction is called a construct and the lower order abstractions are called concepts. However, this distinction tends to blur in the case of unidimensional constructs.

Constructs used for scientific research must have precise and clear definitions that others can use to understand exactly what they mean and what they do not mean. For instance, a seemingly simple construct such as income may refer to monthly or annual income, before-tax or after-tax income, and personal or family income, and is therefore neither precise nor clear. There are two types of definitions: dictionary definitions and operational definitions. In the more familiar dictionary definition, a construct is often defined in terms of a synonym. For instance, attitude may be defined as a disposition, a feeling, or an affect, and affect in turn is defined as an attitude. Such circular definitions are not particularly useful in scientific research for elaborating the meaning and content of a construct. Scientific research requires operational definitions that define constructs in terms of how they will be empirically measured. For instance, the operational definition of a construct such as temperature must specify whether we plan to measure temperature on the Celsius, Fahrenheit, or Kelvin scale. A construct such as income should be defined in terms of whether we are interested in monthly or annual income, before-tax or after-tax income, and personal or family income. One can imagine that constructs such as learning, personality, and intelligence can be quite hard to define operationally.

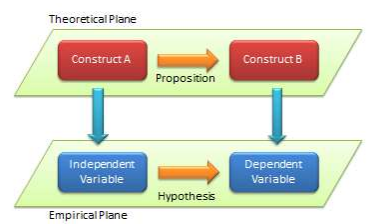

A term frequently associated with, and sometimes used interchangeably with, a construct is a variable. Etymologically speaking, a variable is a quantity that can vary (e.g., from low to high, negative to positive, etc.), in contrast to constants that do not vary (i.e., remain constant). However, in scientific research, a variable is a measurable representation of an abstract construct. As abstract entities, constructs are not directly measurable, and hence, we look for proxy measures called variables. For instance, a person’s intelligence is often measured as his or her IQ (intelligence quotient) score, which is an index generated from an analytical and pattern-matching test administered to people. In this case, intelligence is a construct, and IQ score is a variable that measures the intelligence construct. Whether IQ scores truly measure one’s intelligence is anyone’s guess (though many believe that they do), and depending on how well it measures intelligence, the IQ score may be a good or a poor measure of the intelligence construct. As shown in Figure 2.1, scientific research proceeds along two planes: a theoretical plane and an empirical plane. Constructs are conceptualized at the theoretical (abstract) plane, while variables are operationalized and measured at the empirical (observational) plane. Thinking like a researcher implies the ability to move back and forth between these two planes.

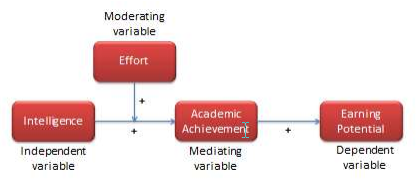

Depending on their intended use, variables may be classified as independent, dependent, moderating, mediating, or control variables. Variables that explain other variables are called independent variables, those that are explained by other variables are dependent variables, those that are explained by independent variables while also explaining dependent variables are mediating variables (or intermediate variables), and those that influence the relationship between independent and dependent variables are called moderating variables. As an example, if we state that higher intelligence causes improved learning among students, then intelligence is an independent variable and learning is a dependent variable. There may be other extraneous variables that are not pertinent to explaining a given dependent variable, but may have some impact on the dependent variable. These variables must be controlled for in a scientific study, and are therefore called control variables.

To understand the differences between these variable types, consider the example shown in Figure 2.2. If we believe that intelligence influences (or explains) students’ academic achievement, then a measure of intelligence such as an IQ score is an independent variable, while a measure of academic success such as grade point average is a dependent variable. If we believe that the effect of intelligence on academic achievement also depends on the effort invested by the student in the learning process (i.e., between two equally intelligent students, the student who puts in more effort attains higher academic achievement than one who puts in less effort), then effort becomes a moderating variable. Incidentally, one may also view effort as an independent variable and intelligence as a moderating variable. If academic achievement is viewed as an intermediate step to higher earning potential, then earning potential becomes the dependent variable for the independent variable academic achievement, and academic achievement becomes the mediating variable in the relationship between intelligence and earning potential. Hence, variables are defined as independent, dependent, moderating, or mediating based on the nature of their association with each other. The overall network of relationships between a set of related constructs is called a nomological network (see Figure 2.2). Thinking like a researcher requires not only being able to abstract constructs from observations, but also being able to mentally visualize a nomological network linking these abstract constructs.
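The difference between moderation and mediation can be sketched numerically. The snippet assumes simple linear relationships, and every coefficient is invented purely for illustration. Moderation is represented by an interaction term, so the effect of IQ on grade point average changes with effort. Mediation is represented by a chain of two effects whose product gives the indirect effect, a common convention for linear models.

```python
def predicted_gpa(iq, effort, b0=0.5, b_iq=0.01, b_effort=0.1, b_interaction=0.002):
    """Linear model with an IQ x effort interaction (hypothetical coefficients)."""
    return b0 + b_iq * iq + b_effort * effort + b_interaction * iq * effort


def iq_slope(effort, b_iq=0.01, b_interaction=0.002):
    """Change in predicted GPA per IQ point at a given effort level."""
    return b_iq + b_interaction * effort


# Moderation: the same one-point IQ difference matters more when effort is higher.
print(iq_slope(effort=0))  # 0.01
print(iq_slope(effort=5))  # 0.02


def indirect_effect(a, b):
    """Mediation: effect transmitted through the mediator (product of paths)."""
    return a * b


# Hypothetical paths: intelligence -> achievement (a), achievement -> earnings (b).
print(indirect_effect(a=0.5, b=4.0))  # 2.0
```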

Research Variables 101

Independent variables, dependent variables, control variables and more

By: Derek Jansen (MBA) | Expert Reviewed By: Kerryn Warren (PhD) | January 2023

If you’re new to the world of research, especially scientific research, you’re bound to run into the concept of variables, sooner or later. If you’re feeling a little confused, don’t worry – you’re not the only one! Independent variables, dependent variables, confounding variables – it’s a lot of jargon. In this post, we’ll unpack the terminology surrounding research variables using straightforward language and loads of examples.

Overview: Variables In Research

What (exactly) is a variable?

The simplest way to understand a variable is as any characteristic or attribute that can experience change or vary over time or context – hence the name “variable”. For example, the dosage of a particular medicine could be classified as a variable, as the amount can vary (i.e., a higher dose or a lower dose). Similarly, gender, age or ethnicity could be considered demographic variables, because each person varies in these respects.

Within research, especially scientific research, variables form the foundation of studies, as researchers are often interested in how one variable impacts another, and the relationships between different variables. For example:

- How someone’s age impacts their sleep quality

- How different teaching methods impact learning outcomes

- How diet impacts weight (gain or loss)

As you can see, variables are often used to explain relationships between different elements and phenomena. In scientific studies, especially experimental studies, the objective is often to understand the causal relationships between variables. In other words, the role of cause and effect between variables. This is achieved by manipulating certain variables while controlling others – and then observing the outcome. But, we’ll get into that a little later…

The “Big 3” Variables

Variables can be a little intimidating for new researchers because there are a wide variety of variables, and oftentimes, there are multiple labels for the same thing. To lay a firm foundation, we’ll first look at the three main types of variables, namely:

- Independent variables (IV)

- Dependent variables (DV)

- Control variables

What is an independent variable?

Simply put, the independent variable is the “cause” in the relationship between two (or more) variables. In other words, when the independent variable changes, it has an impact on another variable.

For example:

- Increasing the dosage of a medication (Variable A) could result in better (or worse) health outcomes for a patient (Variable B)

- Changing a teaching method (Variable A) could impact the test scores that students earn in a standardised test (Variable B)

- Varying one’s diet (Variable A) could result in weight loss or gain (Variable B).

It’s useful to know that independent variables can go by a few different names, including explanatory variables (because they explain an event or outcome) and predictor variables (because they predict the value of another variable). Terminology aside though, the most important takeaway is that independent variables are assumed to be the “cause” in any cause-effect relationship. As you can imagine, these types of variables are of major interest to researchers, as many studies seek to understand the causal factors behind a phenomenon.

What is a dependent variable?

While the independent variable is the “cause”, the dependent variable is the “effect” – or rather, the affected variable. In other words, the dependent variable is the variable that is assumed to change as a result of a change in the independent variable.

Keeping with the previous example, let’s look at some dependent variables in action:

- Health outcomes (DV) could be impacted by dosage changes of a medication (IV)

- Students’ scores (DV) could be impacted by teaching methods (IV)

- Weight gain or loss (DV) could be impacted by diet (IV)

In scientific studies, researchers will typically pay very close attention to the dependent variable (or variables), carefully measuring any changes in response to hypothesised independent variables. This can be tricky in practice, as it’s not always easy to reliably measure specific phenomena or outcomes – or to be certain that the actual cause of the change is in fact the independent variable.

As the adage goes, correlation is not causation. In other words, just because two variables have a relationship doesn’t mean that it’s a causal relationship – they may just happen to vary together. For example, you could find a correlation between the number of people who own a certain brand of car and the number of people who have a certain type of job. Just because the number of people who own that brand of car and the number of people who have that type of job are correlated, it doesn’t mean that owning that brand of car causes someone to have that type of job or vice versa. The correlation could, for example, be caused by another factor such as income level or age group, which would affect both car ownership and job type.
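
To see how a shared cause can produce a correlation on its own, here is a minimal simulation sketch. Everything in it is assumed for illustration: the probabilities (0.8 and 0.2), the sample size, the seed and the variable names are made up, and car ownership and job type are generated so that neither influences the other. Only income level affects both.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


rng = random.Random(42)  # fixed seed so the run is repeatable
n = 20_000
high_income, owns_car, has_job = [], [], []
for _ in range(n):
    z = rng.random() < 0.5   # hypothetical income level (the shared cause)
    p = 0.8 if z else 0.2    # made-up probabilities, driven by income only
    high_income.append(z)
    owns_car.append(1 if rng.random() < p else 0)
    has_job.append(1 if rng.random() < p else 0)

overall_r = pearson(owns_car, has_job)


def r_within(level):
    """Correlation between car and job among people at one income level."""
    cars = [c for c, z in zip(owns_car, high_income) if z == level]
    jobs = [j for j, z in zip(has_job, high_income) if z == level]
    return pearson(cars, jobs)


r_high = r_within(True)
r_low = r_within(False)
print(f"overall: {overall_r:.2f}, high income: {r_high:.2f}, low income: {r_low:.2f}")
```

In a run like this, the overall correlation is clearly positive, yet it all but disappears once you compare people at the same income level, which is what you would expect if income is doing all the work.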

To confidently establish a causal relationship between an independent variable and a dependent variable (i.e., X causes Y), you’ll typically need an experimental design, where you have complete control over the environment and the variables of interest. But even so, this doesn’t always translate into the “real world”. Simply put, what happens in the lab sometimes stays in the lab!

As an alternative to pure experimental research, correlational or “quasi-experimental” research (where the researcher cannot manipulate the variables of interest or randomly assign participants) can be done on a much larger scale more easily, allowing one to understand specific relationships in the real world. These types of studies also assume some causality between independent and dependent variables, but it’s not always clear. So, if you go this route, you need to be cautious in terms of how you describe the impact and causality between variables and be sure to acknowledge any limitations in your own research.

What is a control variable?

In an experimental design, a control variable (or controlled variable) is a variable that is intentionally held constant to ensure it doesn’t have an influence on any other variables. As a result, this variable remains unchanged throughout the course of the study. In other words, it’s a variable that’s not allowed to vary – tough life 🙂

As we mentioned earlier, one of the major challenges in identifying and measuring causal relationships is that it’s difficult to isolate the impact of variables other than the independent variable. Simply put, there’s always a risk that there are factors beyond the ones you’re specifically looking at that might be impacting the results of your study. So, to minimise the risk of this, researchers will attempt (as best possible) to hold other variables constant. These factors are then considered control variables.

Some examples of variables that you may need to control include:

- Temperature

- Time of day

- Noise or distractions

Which specific variables need to be controlled for will vary tremendously depending on the research project at hand, so there’s no generic list of control variables to consult. As a researcher, you’ll need to think carefully about all the factors that could vary within your research context and then consider how you’ll go about controlling them. A good starting point is to look at previous studies similar to yours and pay close attention to which variables they controlled for.

Of course, you won’t always be able to control every possible variable, and so, in many cases, you’ll just have to acknowledge their potential impact and account for them in the conclusions you draw. Every study has its limitations, so don’t get fixated or discouraged by troublesome variables. Nevertheless, always think carefully about the factors beyond what you’re focusing on – don’t make assumptions!

Other types of variables

As we mentioned, independent, dependent and control variables are the most common variables you’ll come across in your research, but they’re certainly not the only ones you need to be aware of. Next, we’ll look at a few “secondary” variables that you need to keep in mind as you design your research.

- Moderating variables

- Mediating variables

- Confounding variables

- Latent variables

Let’s jump into it…

What is a moderating variable?

A moderating variable is a variable that influences the strength or direction of the relationship between an independent variable and a dependent variable. In other words, moderating variables affect how much (or how little) the IV affects the DV, or whether the IV has a positive or negative relationship with the DV (i.e., moves in the same or opposite direction).

For example, in a study about the effects of sleep deprivation on academic performance, gender could be used as a moderating variable to see if there are any differences in how men and women respond to a lack of sleep. In such a case, one may find that gender has an influence on how much students’ scores suffer when they’re deprived of sleep.

It’s important to note that while moderators can have an influence on outcomes, they don’t necessarily cause them; rather they modify or “moderate” existing relationships between other variables. This means that it’s possible for two different groups with similar characteristics, but different levels of moderation, to experience very different results from the same experiment or study design.
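
A quick way to picture moderation is to fit the IV-DV relationship separately for each level of the moderator and compare the slopes. The sketch below does this with invented numbers: the scores, the group labels `group_a` and `group_b`, and the `ols_slope` helper are all hypothetical and are not taken from any real study.

```python
def ols_slope(x, y):
    """Least-squares slope of y on x (simple linear regression)."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


# Hours of sleep lost (IV) and test scores (DV) for two levels of a moderator.
# These numbers are made up purely to illustrate the idea.
hours_lost = [0, 1, 2, 3, 4]
scores = {
    "group_a": [80, 78, 76, 74, 72],
    "group_b": [80, 75, 70, 65, 60],
}

slopes = {group: ols_slope(hours_lost, s) for group, s in scores.items()}
moderation_gap = slopes["group_b"] - slopes["group_a"]

for group, slope in slopes.items():
    print(f"{group}: {slope:+.1f} points per hour of sleep lost")
print(f"difference in slopes: {moderation_gap:+.1f}")
```

Here the IV hurts scores in both groups, but the size of the effect differs by group. In a real analysis this difference in slopes is usually tested with an interaction term in a regression model rather than by eyeballing two separate fits.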

What is a mediating variable?

Mediating variables are often used to explain the relationship between the independent and dependent variable(s). For example, if you were researching the effects of age on job satisfaction, then education level could be considered a mediating variable, as it may explain why older people have higher job satisfaction than younger people – they may have more experience or better qualifications, which lead to greater job satisfaction.

Mediating variables also help researchers understand how different factors interact with each other to influence outcomes. For instance, if you wanted to study the effect of stress on academic performance, then coping strategies might act as a mediating factor by influencing both stress levels and academic performance simultaneously. For example, students who use effective coping strategies might be less stressed but also perform better academically due to their improved mental state.

In addition, mediating variables can provide insight into causal relationships between two variables by helping researchers determine whether changes in one factor directly cause changes in another – or whether there is an indirect relationship between them mediated by some third factor(s). For instance, if you wanted to investigate the impact of parental involvement on student achievement, you would need to consider family dynamics as a potential mediator, since it could influence both parental involvement and student achievement simultaneously.
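
For readers who like to see the arithmetic, here is a rough sketch of how direct and indirect effects are often separated, assuming a simple linear setup with an independent variable X, a mediator M and a dependent variable Y. The data are simulated, the coefficients 0.6, 0.5 and 0.2 are arbitrary, and the helper names are hypothetical. It uses the common "product of coefficients" idea: the indirect effect is the X-to-M slope multiplied by the M-to-Y slope (holding X fixed).

```python
import random


def mean(values):
    return sum(values) / len(values)


def slope(x, y):
    """Slope from regressing y on a single predictor x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def two_predictor_slopes(x1, x2, y):
    """Slopes from regressing y on x1 and x2 together (normal equations)."""
    m1, m2, my = mean(x1), mean(x2), mean(y)
    c1 = [v - m1 for v in x1]
    c2 = [v - m2 for v in x2]
    cy = [v - my for v in y]
    s11 = sum(a * a for a in c1)
    s22 = sum(b * b for b in c2)
    s12 = sum(a * b for a, b in zip(c1, c2))
    s1y = sum(a * t for a, t in zip(c1, cy))
    s2y = sum(b * t for b, t in zip(c2, cy))
    det = s11 * s22 - s12 ** 2
    return (s1y * s22 - s2y * s12) / det, (s2y * s11 - s1y * s12) / det


rng = random.Random(0)  # fixed seed; all values below are simulated
x = [rng.gauss(0, 1) for _ in range(500)]
m = [0.6 * xi + rng.gauss(0, 1) for xi in x]                         # X -> M
y = [0.5 * mi + 0.2 * xi + rng.gauss(0, 1) for xi, mi in zip(x, m)]  # M -> Y, plus a direct X -> Y path

total = slope(x, y)                       # total effect of X on Y
a = slope(x, m)                           # effect of X on the mediator
direct, b = two_predictor_slopes(x, m, y) # direct effect of X, and effect of M, on Y
indirect = a * b                          # effect of X that travels through M

print(f"total={total:.3f} direct={direct:.3f} indirect={indirect:.3f}")
```

With ordinary least squares fitted on the same sample, the total effect equals the direct effect plus the indirect effect, so the split shows how much of the relationship runs through the mediator. It says nothing on its own about whether the causal ordering X, then M, then Y is correct; that has to come from theory and study design.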

What is a confounding variable?

A confounding variable (also known as a third variable or lurking variable) is an extraneous factor that can influence the relationship between two variables being studied. Specifically, for a variable to be considered a confounding variable, it needs to meet two criteria:

- It must be correlated with the independent variable (this can be causal or not)

- It must have a causal impact on the dependent variable (i.e., influence the DV)

Some common examples of confounding variables include demographic factors such as gender, ethnicity, socioeconomic status, age, education level, and health status. In addition to these, there are also environmental factors to consider. For example, air pollution could confound the impact of the variables of interest in a study investigating health outcomes.

Naturally, it’s important to identify as many confounding variables as possible when conducting your research, as they can heavily distort the results and lead you to draw incorrect conclusions. So, always think carefully about what factors may have a confounding effect on your variables of interest and try to manage these as best you can.

What is a latent variable?

Latent variables are unobservable factors that can influence the behaviour of individuals and explain certain outcomes within a study. They’re also known as hidden or underlying variables, and what makes them rather tricky is that they can’t be directly observed or measured. Instead, latent variables must be inferred from other observable data points such as responses to surveys or experiments.

For example, in a study of mental health, the variable “resilience” could be considered a latent variable. It can’t be directly measured, but it can be inferred from measures of mental health symptoms, stress, and coping mechanisms. The same applies to a lot of concepts we encounter every day – for example:

- Emotional intelligence

- Quality of life

- Business confidence

- Ease of use

One way in which we overcome the challenge of measuring the immeasurable is latent variable models (LVMs). An LVM is a type of statistical model that describes a relationship between observed variables and one or more unobserved (latent) variables. These models allow researchers to uncover patterns in their data that may not have been visible before because of their complexity and interrelatedness with other variables. Those patterns can then inform new hypotheses about cause-and-effect relationships among those same variables. Powerful stuff, we say!
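
The sketch below is not a latent variable model, just a small simulation of the idea behind one: several noisy observed indicators share a single hidden cause, and combining them recovers that hidden cause better than any one indicator does. The loading of 0.7, the three indicators, the seed and all names are assumptions made for illustration, and the "true" latent scores are only available here because the data are simulated.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


rng = random.Random(7)  # fixed seed so the run is repeatable
n = 2000
loading = 0.7                           # how strongly each indicator reflects the latent variable
noise_sd = math.sqrt(1 - loading ** 2)  # keeps each indicator at unit variance

# The hidden trait (e.g. "resilience"); never observed in a real study.
latent = [rng.gauss(0, 1) for _ in range(n)]

# Three observed indicators, each a noisy reflection of the same latent trait.
indicators = [
    [loading * t + rng.gauss(0, noise_sd) for t in latent]
    for _ in range(3)
]

# A simple composite: the average of the indicators for each person.
composite = [sum(values) / 3 for values in zip(*indicators)]

single_r = [pearson(ind, latent) for ind in indicators]
composite_r = pearson(composite, latent)

print("single indicators:", [round(r, 2) for r in single_r])
print("composite:", round(composite_r, 2))
```

Proper LVMs such as factor analysis or structural equation models go further by estimating the loadings from the data instead of assuming them, but the underlying logic is the same.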

Let’s recap

In the world of scientific research, there’s no shortage of variable types, some of which have multiple names and some of which overlap with each other. In this post, we’ve covered some of the popular ones, but remember that this is not an exhaustive list.

To recap, we’ve explored:

- Independent variables (the “cause”)

- Dependent variables (the “effect”)

- Control variables (the variable that’s not allowed to vary)


Types of Variables – A Comprehensive Guide

Published by Carmen Troy on August 14th, 2021, Revised on October 26, 2023

A variable is any qualitative or quantitative characteristic that can change and have more than one value, such as age, height, weight, gender, etc.

Before conducting research, it’s essential to know what needs to be measured or analysed and choose a suitable statistical test to present your study’s findings.

In most cases, you can do it by identifying the key issues/variables related to your research’s main topic.

Example: If you want to test whether the hybridisation of plants harms the health of people, you can use key variables like agricultural techniques, type of soil, environmental factors, types of pesticides used, the process of hybridisation, type of yield obtained after hybridisation, type of yield without hybridisation, etc.

Variables are broadly categorised into:

- Independent variables

- Dependent variables

- Control variables

Independent Vs. Dependent Vs. Control Variable

The research includes finding ways:

- To change the independent variables.

- To prevent the controlled variables from changing.

- To measure the dependent variables.

Note: The terms dependent and independent are not applicable in correlational research, as this is not a controlled experiment and the researcher doesn’t have control over the variables. Instead, the association between two or more variables is measured. If one variable affects another, they are called the predictor variable and the outcome variable.

Example: Correlation between investment (predictor variable) and profit (outcome variable)

Types of Variables Based on the Types of Data

Data refers to the information and statistics gathered for the analysis of a research topic. Data is broadly divided into two categories:

Quantitative/Numerical data is associated with the aspects of measurement, quantity, and extent.

Categorical data is associated with groupings.

A qualitative variable consists of qualitative data, and a quantitative variable consists of quantitative data.

Quantitative Variable

The quantitative variable is associated with measurement, quantity, and extent, such as how many. It is analysed using statistical, mathematical, and computational techniques applied to numerical data such as percentages and statistics. The research is typically conducted on a large population.

Example: Find out the weight of students of the fifth standard studying in government schools.

The quantitative variable can be further categorised into continuous and discrete.

Categorical Variable

The categorical variable includes measurements that vary in categories such as names but not in terms of rank or degree. It means one level of a categorical variable cannot be considered better or greater than another level.

Example: Gender, brands, colors, zip codes

The categorical variable is further categorised into three types:

Note: Sometimes, an ordinal variable also acts as a quantitative variable. Ordinal data has an order, but the intervals between scale points may be uneven.

Example: Numbers on a rating scale represent the reviews’ rank or range from below average to above average. However, it also represents a quantitative variable showing how many stars and how much rating is given.

Other Types of Variables

It’s important to understand the difference between dependent and independent variables and know whether they are quantitative or categorical to choose the appropriate statistical test.

There are many other types of variables to help you differentiate and understand them.

Frequently Asked Questions

What are the 10 types of variables in research?

The 10 types of variables in research are:

- Independent

- Confounding

- Categorical

- Extraneous

What is an independent variable?

An independent variable, often termed the predictor or explanatory variable, is the variable manipulated or categorized in an experiment to observe its effect on another variable, called the dependent variable. It’s the presumed cause in a cause-and-effect relationship, determining if changes in it produce changes in the observed outcome.

What is a variable?

In research, a variable is any attribute, quantity, or characteristic that can be measured or counted. It can take on various values, making it “variable.” Variables can be classified as independent (manipulated), dependent (observed outcome), or control (kept constant). They form the foundation for hypotheses, observations, and data analysis in studies.

What is a dependent variable?

A dependent variable is the outcome or response being studied in an experiment or investigation. It’s what researchers measure to determine the effect of changes in the independent variable. In a cause-and-effect relationship, the dependent variable is presumed to be influenced or caused by the independent variable.

What is a variable in programming?

In programming, a variable is a symbolic name for a storage location that holds data or values. It allows data storage and retrieval for computational operations. Variables have types, like integer or string, determining the nature of data they can hold. They’re fundamental in manipulating and processing information in software.
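
As a small illustration of the programming sense of the word, the Python sketch below binds two names to values of different types and then updates one of them. The names `dosage_mg` and `treatment` are hypothetical and chosen only to echo the research examples in this guide.

```python
# A variable is a name bound to a stored value.
dosage_mg = 50          # holds an integer
treatment = "placebo"   # holds a string

# The stored value can be read and replaced during the program's run.
dosage_mg = dosage_mg + 25

# Each value has a type that determines what operations make sense for it.
variable_types = {
    name: type(value).__name__
    for name, value in {"dosage_mg": dosage_mg, "treatment": treatment}.items()
}

print(dosage_mg)
print(variable_types)
```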

What is a control variable?

A control variable in research is a factor that’s kept constant to ensure that it doesn’t influence the outcome. By controlling these variables, researchers can isolate the effects of the independent variable on the dependent variable, ensuring that other factors don’t skew the results or introduce bias into the experiment.

What is a controlled variable in science?

In science, a controlled variable is a factor that remains constant throughout an experiment. It ensures that any observed changes in the dependent variable are solely due to the independent variable, not other factors. By keeping controlled variables consistent, researchers can maintain experiment validity and accurately assess cause-and-effect relationships.

How many independent variables should an investigation have?

Ideally, an investigation should have one independent variable to clearly establish cause-and-effect relationships. Manipulating multiple independent variables simultaneously can complicate data interpretation.

However, in advanced research, experiments with multiple independent variables (factorial designs) are used, but they require careful planning to understand interactions between variables.
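
To get a feel for why factorial designs need careful planning, the sketch below lists every condition in a hypothetical 2 x 3 design. The two factors and their levels are invented for illustration. Each added factor multiplies the number of conditions that need participants.

```python
from itertools import product

# Hypothetical independent variables and their levels.
factors = {
    "teaching_method": ["lecture", "discussion"],
    "time_of_day": ["morning", "afternoon", "evening"],
}

# A full factorial design crosses every level of every factor.
conditions = [
    dict(zip(factors, levels))
    for levels in product(*factors.values())
]

print(f"{len(conditions)} conditions:")
for condition in conditions:
    print(condition)
```

Two factors with two and three levels already give six conditions. Adding a third factor with three levels would give eighteen.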

Independent and Dependent Variables

This guide discusses how to identify independent and dependent variables effectively and incorporate their description within the body of a research paper.

A variable can be anything you might aim to measure in your study, whether in the form of numerical data or reflecting complex phenomena such as feelings or reactions. Dependent variables change due to the other factors measured, especially if a study employs an experimental or semi-experimental design. Independent variables are stable: they are both presumed causes and conditions in the environment or milieu being manipulated.

Identifying Independent and Dependent Variables

Even though the definitions of the terms independent and dependent variables may appear to be clear, in the process of analyzing data resulting from actual research, identifying the variables properly might be challenging. Here is a simple rule that you can apply at all times: the independent variable is what a researcher changes, whereas the dependent variable is affected by these changes. To illustrate the difference, a number of examples are provided below.

- The purpose of Study 1 is to measure the impact of different plant fertilizers on how many fruits apple trees bear. Independent variable: plant fertilizers (chosen by researchers). Dependent variable: fruits that the trees bear (affected by choice of fertilizers).

- The purpose of Study 2 is to find an association between living in close vicinity to hydraulic fracturing sites and respiratory diseases. Independent variable: proximity to hydraulic fracturing sites (a presumed cause and a condition of the environment). Dependent variable: the percentage/likelihood of suffering from respiratory diseases.

Confusion is possible in identifying independent and dependent variables in the social sciences. When considering psychological phenomena and human behavior, it can be difficult to distinguish between cause and effect. For example, the purpose of Study 3 is to establish how tactics for coping with stress are linked to the level of stress-resilience in college students. Even though it is feasible to speculate that these variables are interdependent, the following factors should be taken into account in order to clearly define which variable is dependent and which is independent.

- The dependent variable is usually the objective of the research. In the study under examination, the levels of stress resilience are being investigated.

- The independent variable precedes the dependent variable. The chosen stress-related coping techniques help to build resilience; thus, they occur earlier.

Writing Style and Structure

Usually, the variables are first described in the introduction of a research paper and then in the method section. No strict guidelines for approaching the subject exist; however, academic writing demands that the researcher make clear and concise statements. It is only reasonable not to leave readers guessing which of the variables is dependent and which is independent. The description should reflect the literature review, where both types of variables are identified in the context of the previous research. For instance, in the case of Study 3, a researcher would have to provide an explanation as to the meaning of stress resilience and coping tactics.

In properly organizing a research paper, it is essential to outline and operationalize the appropriate independent and dependent variables. Moreover, the paper should differentiate clearly between independent and dependent variables. Finding the dependent variable is typically the objective of a study, whereas independent variables reflect influencing factors that can be manipulated. Distinguishing between the two types of variables in social sciences may be somewhat challenging as it can be easy to confuse cause with effect. Academic format calls for the author to mention the variables in the introduction and then provide a detailed description in the method section.

Organizing Your Social Sciences Research Paper

Introduction

Before beginning your paper, you need to decide how you plan to design the study.

The research design refers to the overall strategy and analytical approach that you have chosen in order to integrate, in a coherent and logical way, the different components of the study, thus ensuring that the research problem will be thoroughly investigated. It constitutes the blueprint for the collection, measurement, and interpretation of information and data. Note that the research problem determines the type of design you choose, not the other way around!

De Vaus, D. A. Research Design in Social Research. London: SAGE, 2001; Trochim, William M.K. Research Methods Knowledge Base. 2006.

General Structure and Writing Style

The function of a research design is to ensure that the evidence obtained enables you to effectively address the research problem logically and as unambiguously as possible. In social sciences research, obtaining information relevant to the research problem generally entails specifying the type of evidence needed to test the underlying assumptions of a theory, to evaluate a program, or to accurately describe and assess meaning related to an observable phenomenon.

With this in mind, a common mistake made by researchers is that they begin their investigations before they have thought critically about what information is required to address the research problem. Without attending to these design issues beforehand, the overall research problem will not be adequately addressed and any conclusions drawn will run the risk of being weak and unconvincing. As a consequence, the overall validity of the study will be undermined.

The length and complexity of describing the research design in your paper can vary considerably, but any well-developed description will achieve the following:

- Identify the research problem clearly and justify its selection, particularly in relation to any valid alternative designs that could have been used,

- Review and synthesize previously published literature associated with the research problem,

- Clearly and explicitly specify hypotheses [i.e., research questions] central to the problem,

- Effectively describe the information and/or data which will be necessary for an adequate testing of the hypotheses and explain how such information and/or data will be obtained, and

- Describe the methods of analysis to be applied to the data to determine whether the hypotheses are supported.

The research design is usually incorporated into the introduction of your paper. You can obtain an overall sense of what to do by reviewing studies that have utilized the same research design [e.g., using a case study approach]. This can help you develop an outline to follow for your own paper.

NOTE: Use the SAGE Research Methods Online and Cases and the SAGE Research Methods Videos databases to search for scholarly resources on how to apply specific research designs and methods. The Research Methods Online database contains links to more than 175,000 pages of SAGE publisher's book, journal, and reference content on quantitative, qualitative, and mixed research methodologies. Also included is a collection of case studies of social research projects that can be used to help you better understand abstract or complex methodological concepts. The Research Methods Videos database contains hours of tutorials, interviews, video case studies, and mini-documentaries covering the entire research process.

Creswell, John W. and J. David Creswell. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. 5th edition. Thousand Oaks, CA: Sage, 2018; De Vaus, D. A. Research Design in Social Research. London: SAGE, 2001; Gorard, Stephen. Research Design: Creating Robust Approaches for the Social Sciences. Thousand Oaks, CA: Sage, 2013; Leedy, Paul D. and Jeanne Ellis Ormrod. Practical Research: Planning and Design. Tenth edition. Boston, MA: Pearson, 2013; Vogt, W. Paul, Dianna C. Gardner, and Lynne M. Haeffele. When to Use What Research Design. New York: Guilford, 2012.

Action Research Design

Definition and Purpose

The essentials of action research design follow a characteristic cycle whereby initially an exploratory stance is adopted, where an understanding of a problem is developed and plans are made for some form of interventionary strategy. Then the intervention is carried out [the "action" in action research], during which time pertinent observations are collected in various forms. The new interventional strategies are carried out, and this cyclic process repeats, continuing until a sufficient understanding of [or a valid implementation solution for] the problem is achieved. The protocol is iterative or cyclical in nature and is intended to foster deeper understanding of a given situation, starting with conceptualizing and particularizing the problem and moving through several interventions and evaluations.

What do these studies tell you?

- This is a collaborative and adaptive research design that lends itself to use in work or community situations.

- Design focuses on pragmatic and solution-driven research outcomes rather than testing theories.

- When practitioners use action research, it has the potential to increase the amount they learn consciously from their experience; the action research cycle can be regarded as a learning cycle.

- Action research studies often have direct and obvious relevance to improving practice and advocating for change.

- There are no hidden controls or preemption of direction by the researcher.

What these studies don't tell you?

- It is harder to conduct than conventional research because the researcher takes on the responsibility of advocating for change as well as researching the topic.

- Action research is much harder to write up because it is less likely that you can use a standard format to report your findings effectively [i.e., data is often in the form of stories or observation].

- Personal over-involvement of the researcher may bias research results.

- The cyclic nature of action research, which pursues the twin outcomes of action [e.g., change] and research [e.g., understanding], makes it time-consuming and complex to conduct.

- Advocating for change usually requires buy-in from study participants.

Coghlan, David and Mary Brydon-Miller. The Sage Encyclopedia of Action Research. Thousand Oaks, CA: Sage, 2014; Efron, Sara Efrat and Ruth Ravid. Action Research in Education: A Practical Guide. New York: Guilford, 2013; Gall, Meredith. Educational Research: An Introduction. Chapter 18, Action Research. 8th ed. Boston, MA: Pearson/Allyn and Bacon, 2007; Gorard, Stephen. Research Design: Creating Robust Approaches for the Social Sciences. Thousand Oaks, CA: Sage, 2013; Kemmis, Stephen and Robin McTaggart. “Participatory Action Research.” In Handbook of Qualitative Research. Norman Denzin and Yvonna S. Lincoln, eds. 2nd ed. (Thousand Oaks, CA: SAGE, 2000), pp. 567-605; McNiff, Jean. Writing and Doing Action Research. London: Sage, 2014; Reason, Peter and Hilary Bradbury. Handbook of Action Research: Participative Inquiry and Practice. Thousand Oaks, CA: SAGE, 2001.

Case Study Design

A case study is an in-depth study of a particular research problem rather than a sweeping statistical survey or comprehensive comparative inquiry. It is often used to narrow down a very broad field of research into one or a few easily researchable examples. The case study research design is also useful for testing whether a specific theory and model actually apply to phenomena in the real world. It is a useful design when not much is known about an issue or phenomenon.

- Approach excels at bringing us to an understanding of a complex issue through detailed contextual analysis of a limited number of events or conditions and their relationships.

- A researcher using a case study design can apply a variety of methodologies and rely on a variety of sources to investigate a research problem.

- Design can extend experience or add strength to what is already known through previous research.

- Social scientists, in particular, make wide use of this research design to examine contemporary real-life situations and provide the basis for the application of concepts and theories and the extension of methodologies.

- The design can provide detailed descriptions of specific and rare cases.

- A single case or a small number of cases offers little basis for establishing reliability or for generalizing the findings to a wider population of people, places, or things.

- Intense exposure to the study of a case may bias a researcher's interpretation of the findings.

- Design does not facilitate assessment of cause and effect relationships.

- Vital information may be missing, making the case hard to interpret.

- The case may not be representative or typical of the larger problem being investigated.

- If a case is selected because it represents a very unusual or unique phenomenon or problem for study, then your interpretation of the findings can only apply to that particular case.

Case Studies. Writing@CSU. Colorado State University; Anastas, Jeane W. Research Design for Social Work and the Human Services. Chapter 4, Flexible Methods: Case Study Design. 2nd ed. New York: Columbia University Press, 1999; Gerring, John. “What Is a Case Study and What Is It Good for?” American Political Science Review 98 (May 2004): 341-354; Greenhalgh, Trisha, editor. Case Study Evaluation: Past, Present and Future Challenges. Bingley, UK: Emerald Group Publishing, 2015; Mills, Albert J., Gabrielle Durepos, and Elden Wiebe, editors. Encyclopedia of Case Study Research. Thousand Oaks, CA: SAGE Publications, 2010; Stake, Robert E. The Art of Case Study Research. Thousand Oaks, CA: SAGE, 1995; Yin, Robert K. Case Study Research: Design and Methods. Applied Social Research Methods Series, no. 5. 3rd ed. Thousand Oaks, CA: SAGE, 2003.

Causal Design

Causality studies may be thought of as understanding a phenomenon in terms of conditional statements in the form, “If X, then Y.” This type of research is used to measure what impact a specific change will have on existing norms and assumptions. Most social scientists seek causal explanations that reflect tests of hypotheses. Causal effect (nomothetic perspective) occurs when variation in one phenomenon, an independent variable, leads to or results, on average, in variation in another phenomenon, the dependent variable.

Conditions necessary for determining causality:

- Empirical association -- a valid conclusion is based on finding an association between the independent variable and the dependent variable.

- Appropriate time order -- to conclude that causation was involved, one must see that cases were exposed to variation in the independent variable before variation in the dependent variable.

- Nonspuriousness -- a relationship between two variables that is not due to variation in a third variable.

- Causality research designs assist researchers in understanding why the world works the way it does by establishing a causal link between variables and by eliminating other possible explanations.

- Replication is possible.

- There is greater confidence the study has internal validity due to the systematic subject selection and equity of groups being compared.

- Not all relationships are causal! The possibility always exists that, by sheer coincidence, two unrelated events appear to be related [e.g., Punxsutawney Phil could accurately predict the duration of winter for five consecutive years but, the fact remains, he's just a big, furry rodent].

- Conclusions about causal relationships are difficult to determine due to a variety of extraneous and confounding variables that exist in a social environment. This means causality can only be inferred, never proven.

- If two variables are correlated, the cause must come before the effect. However, even though two variables might be causally related, it can sometimes be difficult to determine which variable comes first and, therefore, to establish which variable is the actual cause and which is the actual effect.
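The nonspuriousness condition and the warning about confounding variables can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: the data are synthetic, the names `simulate`, `pearson`, and `partial_corr` are hypothetical helpers, and the first-order partial correlation is one common way (not the only one) to check whether an association survives once a third variable is held constant. Here X and Y never influence each other; both are driven by a third variable Z.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def partial_corr(x, y, z):
    """First-order partial correlation of x and y, controlling for z."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def simulate(n=5000, seed=0):
    """Synthetic data (an assumption for illustration): z drives both x and y."""
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(n)]
    x = [zi + rng.gauss(0, 1) for zi in z]  # x depends on z only
    y = [zi + rng.gauss(0, 1) for zi in z]  # y depends on z only, never on x
    return x, y, z


x, y, z = simulate()
raw_r = pearson(x, y)               # empirical association between x and y
adjusted_r = partial_corr(x, y, z)  # association after controlling for z

print(f"raw correlation:     {raw_r:.2f}")
print(f"partial correlation: {adjusted_r:.2f}")
```

In this setup the raw correlation is clearly positive, while the partial correlation is close to zero: the empirical association condition is met, but the relationship is spurious because it disappears once the third variable is taken into account.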

Beach, Derek and Rasmus Brun Pedersen. Causal Case Study Methods: Foundations and Guidelines for Comparing, Matching, and Tracing. Ann Arbor, MI: University of Michigan Press, 2016; Bachman, Ronet. The Practice of Research in Criminology and Criminal Justice. Chapter 5, Causation and Research Designs. 3rd ed. Thousand Oaks, CA: Pine Forge Press, 2007; Brewer, Ernest W. and Jennifer Kuhn. “Causal-Comparative Design.” In Encyclopedia of Research Design. Neil J. Salkind, editor. (Thousand Oaks, CA: Sage, 2010), pp. 125-132; Causal Research Design: Experimentation. Anonymous SlideShare Presentation; Gall, Meredith. Educational Research: An Introduction. Chapter 11, Nonexperimental Research: Correlational Designs. 8th ed. Boston, MA: Pearson/Allyn and Bacon, 2007; Trochim, William M.K. Research Methods Knowledge Base. 2006.

Cohort Design

Often used in the medical sciences, but also found in the applied social sciences, a cohort study generally refers to a study conducted over a period of time involving members of a population who are united by some commonality or similarity and from which the subject or representative member comes. Using a quantitative framework, a cohort study notes statistical occurrence within a specialized subgroup, united by the same or similar characteristics that are relevant to the research problem being investigated, rather than studying statistical occurrence within the general population. Using a qualitative framework, cohort studies generally gather data using methods of observation. Cohorts can be either "open" or "closed."

- Open Cohort Studies [dynamic populations, such as the population of Los Angeles] involve a population that is defined just by the state of being a part of the study in question (and being monitored for the outcome). Dates of entry into and exit from the study are individually defined; therefore, the size of the study population is not constant. In open cohort studies, researchers can only calculate rate-based data, such as incidence rates and variants thereof.

- Closed Cohort Studies [static populations, such as patients entered into a clinical trial] involve participants who enter into the study at one defining point in time and where it is presumed that no new participants can enter the cohort. Given this, the number of study participants remains constant (or can only decrease).

- The use of cohorts is often mandatory because a randomized controlled study may be unethical. For example, you cannot deliberately expose people to asbestos; you can only study its effects on those who have already been exposed. Research that measures risk factors often relies upon cohort designs.

- Because cohort studies measure potential causes before the outcome has occurred, they can demonstrate that these “causes” preceded the outcome, thereby avoiding the debate as to which is the cause and which is the effect.

- Cohort analysis is highly flexible and can provide insight into effects over time and related to a variety of different types of changes [e.g., social, cultural, political, economic, etc.].

- Either original data or secondary data can be used in this design.

- In cases where a comparative analysis of two cohorts is made [e.g., studying the effects of one group exposed to asbestos and one that has not], a researcher cannot control for all other factors that might differ between the two groups. These factors are known as confounding variables.

- Cohort studies can end up taking a long time to complete if the researcher must wait for the conditions of interest to develop within the group. This also increases the chance that key variables change during the course of the study, potentially impacting the validity of the findings.

- Due to the lack of randomization in the cohort design, its external validity is lower than that of study designs where the researcher randomly assigns participants.
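The distinction between open and closed cohorts drawn above affects which frequency measures can be computed. The following is a minimal sketch, assuming made-up follow-up records and hypothetical names (`Participant`, `incidence_rate`, `cumulative_incidence`). In an open cohort, participants contribute different amounts of follow-up time, so the outcome is expressed as a rate per unit of person-time. In a closed cohort, everyone enters at the same point, so the proportion of the starting group that develops the outcome can also be reported.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    entry: float     # time the participant entered the study (years)
    exit: float      # time of outcome, dropout, or end of follow-up (years)
    had_event: bool  # whether the outcome of interest occurred


def incidence_rate(participants):
    """Events per unit of person-time; suitable for open (dynamic) cohorts."""
    person_time = sum(p.exit - p.entry for p in participants)
    events = sum(p.had_event for p in participants)
    return events / person_time


def cumulative_incidence(participants):
    """Proportion of the starting group with the outcome; closed cohorts only."""
    events = sum(p.had_event for p in participants)
    return events / len(participants)


# Hypothetical open cohort: staggered entry and exit times.
open_cohort = [
    Participant(0, 4, False),
    Participant(1, 3, True),
    Participant(2, 5, False),
    Participant(0, 1, True),
]

# Hypothetical closed cohort: everyone enters at time 0.
closed_cohort = [
    Participant(0, 5, False),
    Participant(0, 2, True),
    Participant(0, 5, False),
    Participant(0, 5, False),
]

print(f"open cohort incidence rate: {incidence_rate(open_cohort):.2f} per person-year")
print(f"closed cohort cumulative incidence: {cumulative_incidence(closed_cohort):.2f}")
```

With these illustrative records, the open cohort accumulates 10 person-years and 2 events (a rate of 0.20 per person-year), and 1 of the 4 closed-cohort members develops the outcome (a cumulative incidence of 0.25).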

Healy, P. and D. Devane. “Methodological Considerations in Cohort Study Designs.” Nurse Researcher 18 (2011): 32-36; Glenn, Norval D, editor. Cohort Analysis. 2nd edition. Thousand Oaks, CA: Sage, 2005; Levin, Kate Ann. “Study Design IV: Cohort Studies.” Evidence-Based Dentistry 7 (2003): 51–52; Payne, Geoff. “Cohort Study.” In The SAGE Dictionary of Social Research Methods. Victor Jupp, editor. (Thousand Oaks, CA: Sage, 2006), pp. 31-33; Study Design 101. Himmelfarb Health Sciences Library. George Washington University, November 2011; Cohort Study. Wikipedia.

Cross-Sectional Design

Cross-sectional research designs have three distinctive features: no time dimension; a reliance on existing differences rather than change following intervention; and the selection of groups based on existing differences rather than random allocation. The cross-sectional design can only measure differences between or from among a variety of people, subjects, or phenomena rather than a process of change. As such, researchers using this design can only employ a relatively passive approach to making causal inferences based on findings.

- Cross-sectional studies provide a clear 'snapshot' of the outcome and the characteristics associated with it, at a specific point in time.

- Unlike an experimental design, where there is an active intervention by the researcher to produce and measure change or to create differences, cross-sectional designs focus on studying and drawing inferences from existing differences between people, subjects, or phenomena.

- Entails collecting data at and concerning one point in time. While longitudinal studies involve taking multiple measures over an extended period of time, cross-sectional research is focused on finding relationships between variables at one moment in time.

- Groups identified for study are purposely selected based upon existing differences in the sample rather than seeking random sampling.

- Cross-sectional studies can use data from a large number of subjects and, unlike observational studies, are not geographically bound.

- Can estimate prevalence of an outcome of interest because the sample is usually taken from the whole population.

- Because cross-sectional designs generally use survey techniques to gather data, they are relatively inexpensive and take up little time to conduct.

- Finding people, subjects, or phenomena to study that are very similar except in one specific variable can be difficult.