7.3: Presenting Quantitative Data Graphically

- David Lippman

- Pierce College via The OpenTextBookStore

Quantitative, or numerical, data can also be summarized into frequency tables.

A teacher records scores on a 20-point quiz for the 30 students in his class. The scores are:

19 20 18 18 17 18 19 17 20 18 20 16 20 15 17 12 18 19 18 19 17 20 18 16 15 18 20 5 0 0

These scores could be summarized into a frequency table by grouping like values:

\(\begin{array}{|c|c|} \hline \textbf { Score } & \textbf { Frequency } \\ \hline 0 & 2 \\ \hline 5 & 1 \\ \hline 12 & 1 \\ \hline 15 & 2 \\ \hline 16 & 2 \\ \hline 17 & 4 \\ \hline 18 & 8 \\ \hline 19 & 4 \\ \hline 20 & 6 \\ \hline \end{array}\)
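The grouping step above can be sketched in a few lines of Python. This is only an illustration of the counting, using the 30 quiz scores listed earlier; the variable names are our own.

```python
from collections import Counter

# The 30 quiz scores from the example above.
scores = [19, 20, 18, 18, 17, 18, 19, 17, 20, 18, 20, 16, 20, 15, 17,
          12, 18, 19, 18, 19, 17, 20, 18, 16, 15, 18, 20, 5, 0, 0]

# Count how many times each distinct score occurs.
frequency = Counter(scores)

# Print the frequency table in increasing order of score.
print("Score Frequency")
for score in sorted(frequency):
    print(f"{score:>5} {frequency[score]:>9}")
```

Only scores that actually occur appear in the table, which is why the rows jump from 0 to 5 to 12.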

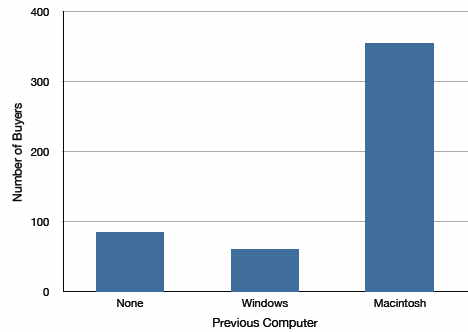

Using this table, it would be possible to create a standard bar chart from this summary, like we did for categorical data:

However, since the scores are numerical values, this chart doesn’t really make sense; the first and second bars are five values apart, while the later bars are only one value apart. It would be more correct to treat the horizontal axis as a number line. This type of graph is called a histogram.

A histogram is like a bar graph, but the horizontal axis is a number line.

For the values above, a histogram would look like:

Notice that in the histogram, a bar represents the values on the horizontal axis from the value at the left-hand side of the bar up to, but not including, the value at the right-hand side of the bar. Some people choose to have bars start at ½ values to avoid this ambiguity.

Unfortunately, not a lot of common software packages can correctly graph a histogram. About the best you can do in Excel or Word is a bar graph with no gap between the bars and spacing added to simulate a numerical horizontal axis.

If we have a large number of widely varying data values, creating a frequency table that lists every possible value as a category would lead to an exceptionally long frequency table, and probably would not reveal any patterns. For this reason, it is common with quantitative data to group data into class intervals.

Class Intervals

Class intervals are groupings of the data. In general, we define class intervals so that:

- Each interval is equal in size. For example, if the first class contains values from 120-129, the second class should include values from 130-139.

- We have somewhere between 5 and 20 classes, typically, depending upon the number of data values we’re working with.

Suppose that we have collected weights from 100 male subjects as part of a nutrition study. For our weight data, we have values ranging from a low of 121 pounds to a high of 263 pounds, giving a total span of 263-121 = 142. We could create 7 intervals with a width of around 20, 14 intervals with a width of around 10, or somewhere in between. Often we have to experiment with a few possibilities to find something that represents the data well. Let us try using an interval width of 15. We could start at 121, or at 120 since it is a nice round number.

\(\begin{array}{|c|c|} \hline \textbf { Interval } & \textbf { Frequency } \\ \hline 120-134 & 4 \\ \hline 135-149 & 14 \\ \hline 150-164 & 16 \\ \hline 165-179 & 28 \\ \hline 180-194 & 12 \\ \hline 195-209 & 8 \\ \hline 210-224 & 7 \\ \hline 225-239 & 6 \\ \hline 240-254 & 2 \\ \hline 255-269 & 3 \\ \hline \end{array}\)
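As a rough sketch of how the interval boundaries in this table arise, the Python below generates equal-width classes from a starting value until the largest observation is covered. The helper name `class_intervals` is hypothetical, and the code assumes whole-number data so that each class ends one unit before the next begins.

```python
def class_intervals(high, width, start):
    """Return (lower, upper) bounds of equal-width classes from start up to high.

    Assumes whole-number data, so a class of width 15 starting at 120
    covers 120 through 134 inclusive.
    """
    intervals = []
    lower = start
    while lower <= high:
        intervals.append((lower, lower + width - 1))
        lower += width
    return intervals


# Weights range from 121 to 263; start at the round number 120, width 15.
weight_classes = class_intervals(high=263, width=15, start=120)
for lower, upper in weight_classes:
    print(f"{lower}-{upper}")
```

With these settings the sketch yields ten classes, from 120-134 through 255-269, which falls inside the suggested range of 5 to 20 classes.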

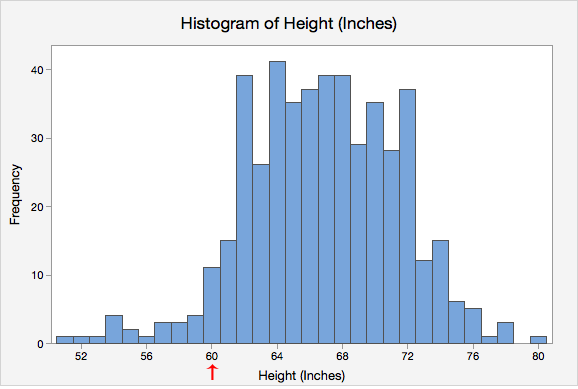

A histogram of this data would look like:

In many software packages, you can create a graph similar to a histogram by putting the class intervals as the labels on a bar chart.

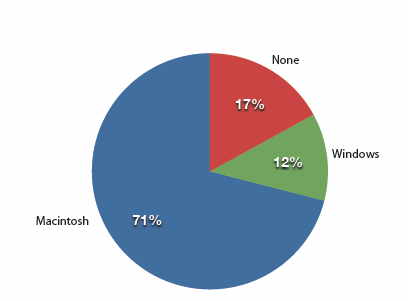

Other graph types such as pie charts are possible for quantitative data. The usefulness of different graph types will vary depending upon the number of intervals and the type of data being represented. For example, a pie chart of our weight data is difficult to read because of the quantity of intervals we used.

Try it Now 3

The total cost of textbooks for the term was collected from 36 students. Create a histogram for this data.

$140 $160 $160 $165 $180 $220 $235 $240 $250 $260 $280 $285

$285 $285 $290 $300 $300 $305 $310 $310 $315 $315 $320 $320

$330 $340 $345 $350 $355 $360 $360 $380 $395 $420 $460 $460

Using class intervals of width 55, we can group our data into six intervals:

\(\begin{array}{|l|r|} \hline \textbf { cost interval } & \textbf { Frequency } \\ \hline \$ 140-194 & 5 \\ \hline \$ 195-249 & 3 \\ \hline \$ 250-304 & 9 \\ \hline \$ 305-359 & 12 \\ \hline \$ 360-414 & 4 \\ \hline \$ 415-469 & 3 \\ \hline \end{array}\)

We can use the frequency distribution to generate the histogram.

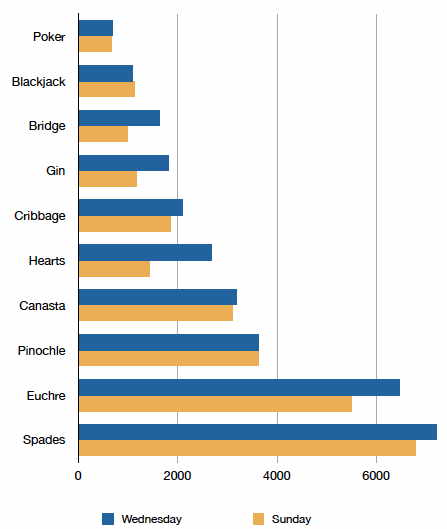
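To check the frequency distribution for this exercise, the counting can be sketched in Python. The starting value of 140 and width of 55 come from the table; the text-based bars are just a stand-in for the drawn histogram.

```python
# Textbook costs (in dollars) for the 36 students.
costs = [140, 160, 160, 165, 180, 220, 235, 240, 250, 260, 280, 285,
         285, 285, 290, 300, 300, 305, 310, 310, 315, 315, 320, 320,
         330, 340, 345, 350, 355, 360, 360, 380, 395, 420, 460, 460]

start, width, n_classes = 140, 55, 6

# Integer division maps each cost to the index of its class interval.
counts = [0] * n_classes
for cost in costs:
    counts[(cost - start) // width] += 1

# Print one row per interval with a bar of '#' marks as a rough histogram.
for i, count in enumerate(counts):
    lower = start + i * width
    upper = lower + width - 1
    print(f"${lower}-{upper}: {'#' * count} ({count})")
```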

When collecting data to compare two groups, it is desirable to create a graph that compares quantities.

The data below came from a task in which the goal is to move a computer mouse to a target on the screen as fast as possible. On 20 of the trials, the target was a small rectangle; on the other 20, the target was a large rectangle. Time to reach the target was recorded on each trial.

\(\begin{array}{|c|c|c|} \hline \begin{array}{c} \textbf { Interval } \\ \textbf { (milliseconds) } \end{array} & \begin{array}{c} \textbf { Frequency } \\ \textbf { small target } \end{array} & \begin{array}{c} \textbf { Frequency } \\ \textbf { large target } \end{array} \\ \hline 300-399 & 0 & 0 \\ \hline 400-499 & 1 & 5 \\ \hline 500-599 & 3 & 10 \\ \hline 600-699 & 6 & 5 \\ \hline 700-799 & 5 & 0 \\ \hline 800-899 & 4 & 0 \\ \hline 900-999 & 0 & 0 \\ \hline 1000-1099 & 1 & 0 \\ \hline 1100-1199 & 0 & 0 \\ \hline \end{array}\)

One option to represent this data would be a comparative histogram or bar chart, in which bars for the small target group and large target group are placed next to each other.

Frequency polygon

An alternative representation is a frequency polygon. A frequency polygon starts out like a histogram, but instead of drawing a bar, a point is placed at the midpoint of each interval at a height equal to the frequency. Typically the points are connected with straight lines to emphasize the distribution of the data.

This graph makes it easier to see that reaction times were generally shorter for the larger target, and that the reaction times for the smaller target were more spread out.
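The points of a frequency polygon can be computed directly from the frequency table. The sketch below does this for the mouse-target data; it assumes the midpoint of an interval is the average of its listed endpoints (so 300-399 gives 349.5), which is one common convention rather than something the text specifies.

```python
# Class intervals (milliseconds) and frequencies from the table above.
intervals = [(300, 399), (400, 499), (500, 599), (600, 699), (700, 799),
             (800, 899), (900, 999), (1000, 1099), (1100, 1199)]
small_target = [0, 1, 3, 6, 5, 4, 0, 1, 0]
large_target = [0, 5, 10, 5, 0, 0, 0, 0, 0]

# Assumed convention: midpoint = average of the listed endpoints.
midpoints = [(lower + upper) / 2 for lower, upper in intervals]

# Each polygon is the list of (midpoint, frequency) points, joined in order.
small_points = list(zip(midpoints, small_target))
large_points = list(zip(midpoints, large_target))

for (x, small), (_, large) in zip(small_points, large_points):
    print(f"{x:>7.1f}  small={small:<2}  large={large}")
```

Connecting each list of points with straight lines gives the two polygons being compared.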

2.4 Representing the Relationship Between Two Quantitative Variables

December 29, 2022

Athena_Codes

Jed Quiaoit

In a bivariate quantitative data set, we often have two sets of quantitative data that are related or dependent in some way. One of the variables, referred to as the "independent" or "explanatory" (x) variable, is thought to have an effect on the other variable, which is referred to as the "dependent" or "response" (y) variable. The explanatory variable is often used to explain or predict the value of the response variable.

For example, in a study examining the relationship between age and blood pressure, age might be the explanatory variable and blood pressure the response variable. In this case, the value of the explanatory variable (age) might be used to predict the value of the response variable (blood pressure).

What is a Scatterplot?

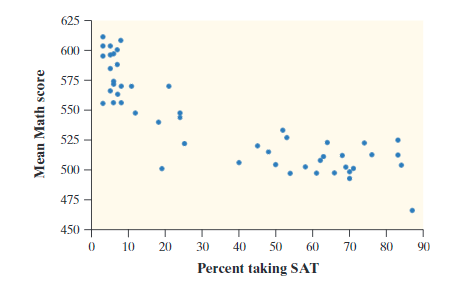

We can organize this data into a scatterplot, which is a graph of the paired data. The explanatory (independent) variable goes on the horizontal axis (also called the x-axis), and the response (dependent) variable goes on the vertical axis. Here are two examples:

Both images courtesy of: Starnes, Daren S. and Tabor, Josh. The Practice of Statistics—For the AP Exam, 5th Edition. Cengage Publishing.

Describing Scatterplots

When given a scatterplot, we are often asked to describe it. In AP Statistics, there are four things graders are looking for when you are asked to describe a scatterplot, or describe the correlation in a scatterplot: form, direction, strength, and unusual features.

The form of a scatterplot refers to the general shape of the plotted points on the graph. A scatterplot may have a linear form, in which the points form a straight line, or a curved form, in which the points follow a curved pattern. The form of a scatterplot can be useful for understanding the relationship between the two variables and for identifying patterns or trends in the data. ✊

For example, a scatterplot with a linear form indicates that the response variable changes at a roughly constant rate as the explanatory variable increases. A scatterplot with a curved form indicates a nonlinear relationship between the two variables, such as a quadratic relationship, where the pattern of the points does not follow a straight line.

In the scatterplot above, Graph 1 is best described as curved, while Graph 2 is obviously linear.

The direction of the scatterplot is the general trend that you see when going left to right. Graph 1 is decreasing as the values of the response variable tend to go down from left to right while graph 2 is increasing as the values of the response variable tend to go up from left to right. ➡️

In a linear model, the direction of the relationship between two variables is often described in terms of positive or negative correlation. Positive correlation means that as one variable increases, the other variable also tends to increase. Negative correlation means that as one variable increases, the other variable tends to decrease.

The slope of the line that fits the data can be used to determine the direction of the correlation. If the slope is positive, the correlation is positive, and if the slope is negative, the correlation is negative.

For example, consider a linear model that shows the relationship between age and height. If the slope of the line is positive, it indicates that as age increases, height tends to increase as well. This would indicate a positive correlation between age and height. On the other hand, if the slope of the line is negative, it would indicate a negative correlation between age and height, where an increase in age is associated with a decrease in height.
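The link between the sign of the slope and the sign of the correlation can be checked numerically. The sketch below computes the least-squares slope and the correlation coefficient from the same sums; the age and height values are made-up illustrative numbers, not data from the text.

```python
from math import sqrt


def slope_and_correlation(xs, ys):
    """Return (least-squares slope, correlation coefficient r) for paired data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    # Both quantities share the numerator sxy, so they always share its sign.
    return sxy / sxx, sxy / sqrt(sxx * syy)


# Hypothetical data: ages in years and heights in centimeters.
ages = [2, 4, 6, 8, 10]
heights = [86, 102, 115, 128, 138]

slope, r = slope_and_correlation(ages, heights)
print(f"slope = {slope:.2f} cm per year, r = {r:.3f}")
```

Because the slope and r have the same numerator and positive denominators, a positive slope always goes with a positive correlation, and a negative slope with a negative one.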

The strength of a scatterplot describes how closely the points fit a certain model, and it can be strong, moderate, or weak. How we figure this out numerically is covered in the next section, on correlation and the correlation coefficient. In our case, Graph 1 shows a moderate correlation while Graph 2 shows a strong correlation. 🥋

Unusual Features

Lastly, we have to discuss unusual features on a scatterplot. The two types you should know are clusters and outliers, which are similar to their single-variable counterparts. 👽

Clusters are groups of points that are close together on the scatterplot. They may indicate that there are subgroups or patterns within the data that are different from the overall trend.

Outliers are points that are far from the other points on the scatterplot and may indicate unusual or unexpected values in the data. Outliers can be caused by errors in data collection or measurement, or they may indicate a genuine difference in the population being studied.

It's important to consider unusual features on a scatterplot when analyzing the data, as they can influence the interpretation of the relationship between the two variables and the results of statistical analyses.

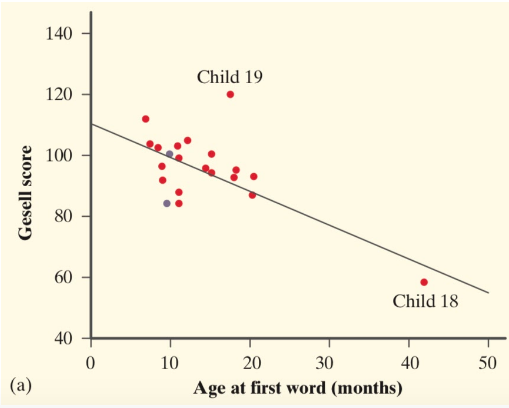

Describe the scatterplot in context of the problem.

Courtesy of Starnes, Daren S. and Tabor, Josh. The Practice of Statistics—For the AP Exam, 5th Edition. Cengage Publishing.

A sample answer may look like this: "In the scatterplot above, we see that it appears to follow a linear pattern. It also shows a negative correlation since the Gesell score seems to decrease as the age at first word increases. The correlation appears to be moderate, since there are some points that follow the pattern exactly, while others seem to break apart from the pattern. The data appears to have one cluster with an outlier at Child 19, because the predicted Gesell Score for Child 19 (value at line) has a large discrepancy from the actual Gesell score (value at point). Also, the data has an influential point that is a high leverage point with Child 18 because it heavily influences the negative correlation of the data set."

Notice that this response is IN CONTEXT of the problem. This is a great way to maximize your credit on the AP Statistics exam.

Side Note: Outliers, Influential Points, and (High) Leverage Points

Source: Cambridge University Press

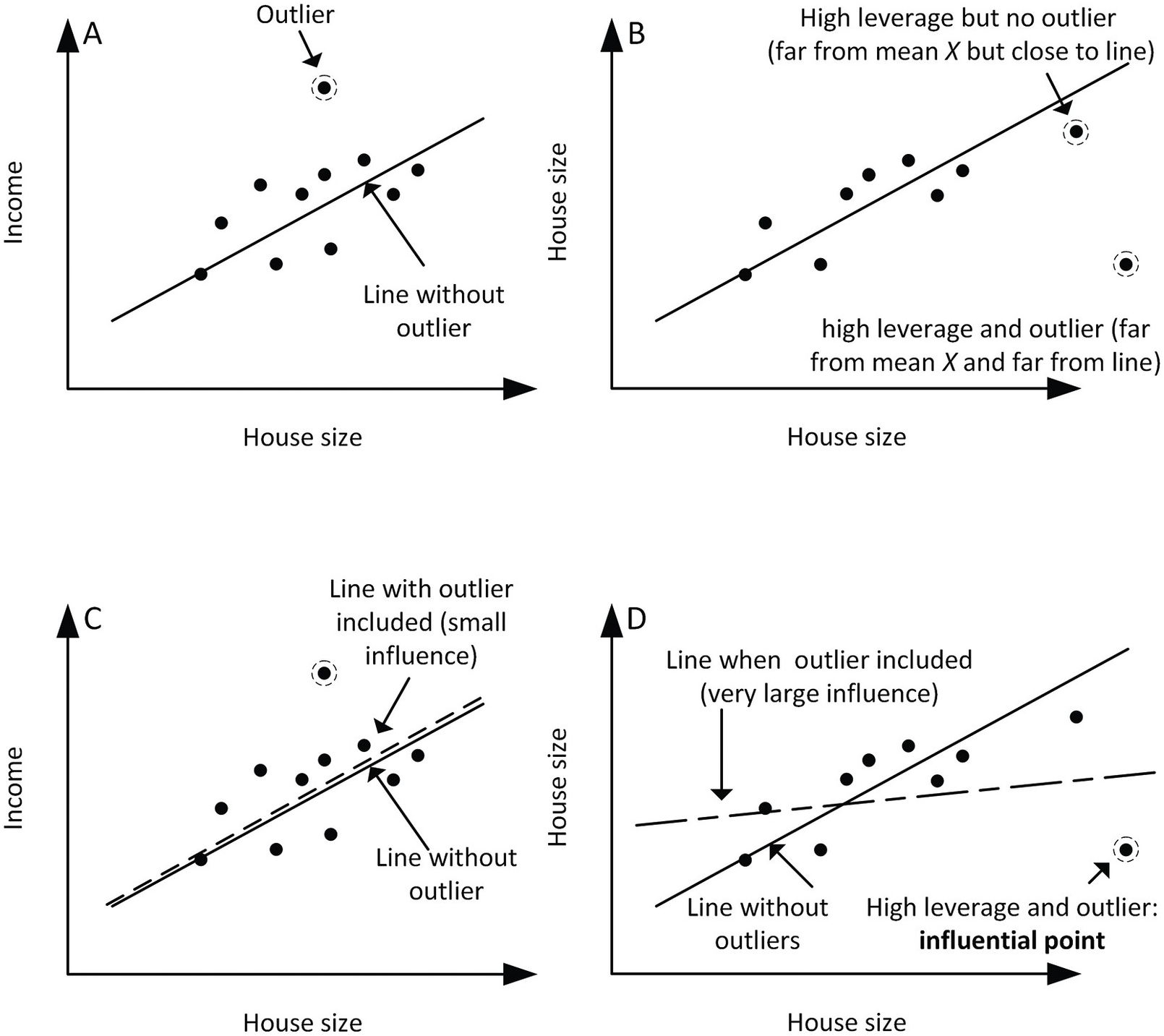

After going through the example problem above, the biggest question you might have in mind is: What's the difference between outliers, influential points, and high leverage points, given that they all greatly impact scatterplot trends and correlations (to be discussed in depth in the next section)? 🪞

An outlier is a data point that is significantly different from the rest of the data in a dataset. Outliers can have a significant impact on the results of statistical analyses and can potentially distort the overall pattern of the data.

An influential point is a data point whose presence has a significant impact on the regression line or the fitted model: removing it would noticeably change the slope, intercept, direction, or curvature of the fit. An influential point may or may not be an outlier.

A high leverage point is a data point whose value of the explanatory variable is far from the rest of the x-values, either unusually large or unusually small. High leverage points can have a large influence on the fitted model, and they can be detected by examining the leverage values for each data point. High leverage points may or may not be outliers.

In summary, outliers are data points that are significantly different from the rest of the data, influential points are data points that have a significant impact on the fitted model, and high leverage points are data points whose explanatory-variable value is far from the others and that can therefore have a large influence on the fitted model.
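For a regression with a single explanatory variable, the leverage of each point has a simple closed form, h = 1/n + (x - x̄)² / Σ(x - x̄)², which depends only on the x-values. The sketch below applies it to made-up ages at first word; the data and the helper name `leverages` are illustrative assumptions, not values from the example above.

```python
def leverages(xs):
    """Leverage of each point in a simple (one-predictor) linear regression."""
    n = len(xs)
    mean_x = sum(xs) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    # Leverage grows with the squared distance of x from the mean of the x-values.
    return [1 / n + (x - mean_x) ** 2 / sxx for x in xs]


# Hypothetical explanatory values: age at first word, in months.
ages_at_first_word = [8, 9, 10, 10, 11, 12, 15, 42]

h = leverages(ages_at_first_word)
for age, leverage in zip(ages_at_first_word, h):
    print(f"age {age:>2}: leverage {leverage:.3f}")

highest = ages_at_first_word[h.index(max(h))]
print("highest leverage at age", highest)
```

Leverage ignores the response variable entirely, which is why a high leverage point need not be an outlier: if its y-value falls close to the line, it simply sits far out along the existing trend.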

Key Terms to Review

Correlation Coefficient

Explanatory Variable

High Leverage Points

Negative Correlation

Positive Correlation

Scatterplot

© 2024 Fiveable Inc. All rights reserved.

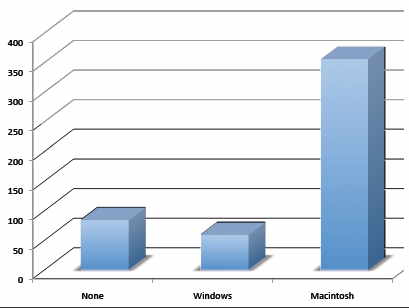

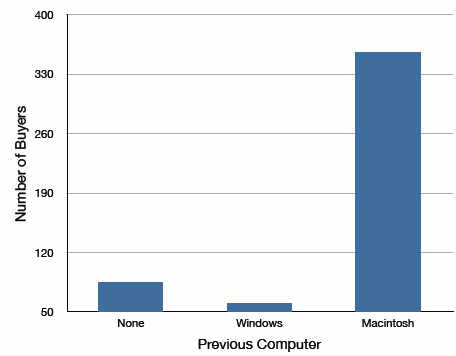

Graphical Representation of Data

Graphical representation of data is a visual way of presenting numerical data that helps in analyzing and communicating quantitative information. A graph is a kind of chart in which data are plotted as variables against coordinate axes. This makes it easy to see how much one variable changes in response to a change in another. Graphical representation of data is done through different mediums such as lines, plots, and diagrams. Let us learn more about graphical representation of data and its different types, and solve a few examples.

Definition of Graphical Representation of Data

A graphical representation is a visual representation of data or statistics-based results using graphs, plots, and charts. This kind of representation is more effective for understanding and comparing data than a tabular form. Graphical representation helps to quantify, sort, and present data in a way that is simple to understand for a larger audience. Graphs make it possible to study the relationship between two variables through both time series and frequency distributions. The data obtained from different surveys is turned into a graphical representation by the use of symbols, such as lines on a line graph, bars on a bar chart, or slices of a pie chart. This visual representation helps in clarity, comparison, and understanding of numerical data.

Representation of Data

The word data comes from the Latin word datum, which means something given. The numerical figures collected through a survey are called data and can be represented in two forms: tabular form and visual form through graphs. Once the data is collected through observation, it is arranged, summarized, and classified, and finally represented in the form of a graph. There are two kinds of data: quantitative and qualitative. Quantitative data is numerical and can be either discrete or continuous, whereas qualitative data is descriptive or categorical and cannot be measured numerically.

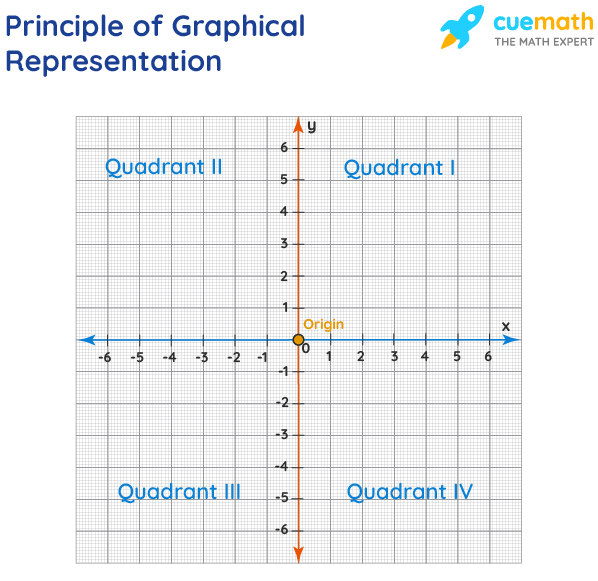

Principles of Graphical Representation of Data

The principles of graphical representation are algebraic. In a graph, there are two lines known as the axes or coordinate axes. These are the X-axis and Y-axis. The horizontal axis is the X-axis and the vertical axis is the Y-axis. They are perpendicular to each other and intersect at O, the point of origin. On the right side of the origin, the X-axis has positive values, and on the left side, it has negative values. In the same way, the Y-axis has positive values above the origin and negative values below it. The x-axis and y-axis, intersecting at the origin, divide the plane into four parts, which are called Quadrant I, Quadrant II, Quadrant III, and Quadrant IV. This form of representation is seen in a frequency distribution, which can be represented by five methods, namely the histogram, smoothed frequency graph, pie diagram or pie chart, cumulative frequency graph or ogive, and frequency polygon.

Advantages and Disadvantages of Graphical Representation of Data

Listed below are some advantages and disadvantages of using a graphical representation of data:

- It improves the way of analyzing and learning as the graphical representation makes the data easy to understand.

- It can be used in almost all fields from mathematics to physics to psychology and so on.

- It is easy to understand for its visual impacts.

- It displays a large data set as a whole at a glance.

- It is mainly used in statistics to determine the mean, median, and mode for different data.

The main disadvantage of graphical representation of data is that it takes a lot of effort as well as resources to find the most appropriate data and then represent it graphically.

Rules of Graphical Representation of Data

While presenting data graphically, there are certain rules that need to be followed. They are listed below:

- Suitable Title: The title of the graph should be appropriate and indicate the subject of the presentation.

- Measurement Unit: The measurement unit in the graph should be mentioned.

- Proper Scale: A proper scale needs to be chosen to represent the data accurately.

- Index: For better understanding, provide an index (legend) that explains the colors, shades, lines, and designs used in the graph.

- Data Sources: The source of the data should be given at the bottom of the graph wherever necessary.

- Simple: The construction of a graph should be easily understood.

- Neat: The graph should be visually neat in terms of size and font to read the data accurately.

Uses of Graphical Representation of Data

The main use of a graphical representation of data is understanding and identifying the trends and patterns in the data. It helps in analyzing large quantities of data, comparing two or more data sets, making predictions, and making firm decisions. The visual display of data also helps in avoiding confusion and overlapping of information. Graphs such as line graphs and bar graphs display two or more data sets clearly for easy comparison. This is important in communicating our findings to others and in our own understanding and analysis of the data.

Types of Graphical Representation of Data

Data is represented in different types of graphs such as plots, pie charts, and diagrams.

Examples on Graphical Representation of Data

Example 1 : A pie chart is divided into 3 parts with the angles measuring as 2x, 8x, and 10x respectively. Find the value of x in degrees.

Solution: We know that the sum of all angles in a pie chart is 360º.

⇒ 2x + 8x + 10x = 360º

⇒ 20x = 360º

⇒ x = 360º/20

⇒ x = 18º

Therefore, the value of x is 18º.
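The same calculation can be sketched in Python, which also gives the three individual angles. The list `parts` holds the coefficients 2, 8, and 10 from the problem statement.

```python
# Coefficients of x for the three slices of the pie chart.
parts = [2, 8, 10]

# All slices together make a full circle of 360 degrees.
x = 360 / sum(parts)
angles = [part * x for part in parts]

print("x =", x)
print("angles =", angles)
```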

Example 2: Ben is trying to read the plot given below. His teacher has given him stem and leaf plot worksheets. Can you help him answer the questions? i) What is the mode of the plot? ii) What is the mean of the plot? iii) Find the range.

Solution: i) The mode is the number that appears most often in the data. Leaf 4 occurs twice on the plot against stem 5.

Hence, mode = 54

ii) The sum of all data values is 12 + 14 + 21 + 25 + 28 + 32 + 34 + 36 + 50 + 53 + 54 + 54 + 62 + 65 + 67 + 83 + 88 + 89 + 91 = 958

To find the mean, we have to divide the sum by the total number of values.

Mean = Sum of all data values ÷ 19 = 958 ÷ 19 = 50.42

iii) Range = the highest value - the lowest value = 91 - 12 = 79
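These three answers can be double-checked with Python's standard `statistics` module, using the 19 values read off the stem and leaf plot in part ii).

```python
from statistics import mean, mode

# The 19 data values listed in part ii) of the solution.
values = [12, 14, 21, 25, 28, 32, 34, 36, 50, 53,
          54, 54, 62, 65, 67, 83, 88, 89, 91]

data_mode = mode(values)                  # most frequent value
data_mean = round(mean(values), 2)        # sum divided by the number of values
data_range = max(values) - min(values)    # highest value minus lowest value

print("mode =", data_mode)
print("mean =", data_mean)
print("range =", data_range)
```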

FAQs on Graphical Representation of Data

What is Graphical Representation?

Graphical representation is a form of visually displaying data through various methods like graphs, diagrams, charts, and plots. It helps in sorting, visualizing, and presenting data in a clear manner through different types of graphs. Statistics mainly use graphical representation to show data.

What are the Different Types of Graphical Representation?

The different types of graphical representation of data are:

- Stem and leaf plot

- Scatter diagrams

- Frequency Distribution

Is Graphical Representation Used for Numerical Data?

Yes, graphical representations display numerical data that has been accumulated through various surveys and observations. A chart is one method of presenting such numerical data. There are different kinds of charts, such as pie charts, bar graphs, and line graphs, that help in clearly showcasing the data.

What is the Use of Graphical Representation of Data?

Graphical representation of data is useful in clarifying, interpreting, and analyzing data by plotting points and drawing line segments, surfaces, and other geometric forms or symbols.

What are the Ways to Represent Data?

Tables, charts, and graphs are all ways of representing data, and they can be used for two broad purposes. The first is to support the collection, organization, and analysis of data as part of the process of a scientific study.

What is the Objective of Graphical Representation of Data?

The main objective of representing data graphically is to display information visually that helps in understanding the information efficiently, clearly, and accurately. This is important to communicate the findings as well as analyze the data.

Modern Data Visualization with R

Chapter 5 Bivariate Graphs

One of the most fundamental questions in research is “What is the relationship between A and B?”. Bivariate graphs display the relationship between two variables. The type of graph will depend on the measurement level of each variable (categorical or quantitative).

5.1 Categorical vs. Categorical

When plotting the relationship between two categorical variables, stacked, grouped, or segmented bar charts are typically used. A less common approach is the mosaic chart (Section 9.5).

In this section, we will look at automobile characteristics contained in the mpg dataset that comes with the ggplot2 package. It provides fuel efficiency data for 38 popular car models in 1999 and 2008 (see Appendix A.6).

5.1.1 Stacked bar chart

Let’s examine the relationship between automobile class and drive type (front-wheel, rear-wheel, or 4-wheel drive) for the automobiles in the mpg dataset.

Figure 5.1: Stacked bar chart

From Figure 5.1, we can see, for example, that the most common vehicle is the SUV. All 2seater cars are rear-wheel drive, while most, but not all, SUVs are 4-wheel drive.

Stacked is the default, so the last line could have also been written as geom_bar().

5.1.2 Grouped bar chart

Grouped bar charts place bars for the second categorical variable side-by-side. To create a grouped bar plot use the position = "dodge" option.

Figure 5.2: Side-by-side bar chart

Notice that all minivans are front-wheel drive. By default, zero count bars are dropped and the remaining bars are made wider. This may not be the behavior you want. You can modify this using the position = position_dodge(preserve = "single") option.

Figure 5.3: Side-by-side bar chart with zero count bars retained

Note that this option is only available in the later versions of ggplot2.

5.1.3 Segmented bar chart

A segmented bar plot is a stacked bar plot where each bar represents 100 percent. You can create a segmented bar chart using the position = "fill" option.

Figure 5.4: Segmented bar chart

This type of plot is particularly useful if the goal is to compare the percentage of a category in one variable across each level of another variable. For example, the proportion of front-wheel drive cars goes up as you move from compact, to midsize, to minivan.

5.1.4 Improving the color and labeling

You can use additional options to improve color and labeling. In the graph below:

- factor modifies the order of the categories for the class variable and both the order and the labels for the drive variable

- scale_y_continuous modifies the y-axis tick mark labels

- labs provides a title and changes the labels for the x and y axes and the legend

- scale_fill_brewer changes the fill color scheme

- theme_minimal removes the grey background and changes the grid color

Figure 5.5: Segmented bar chart with improved labeling and color

Each of these functions is discussed more fully in the section on Customizing graphs (see Section 11).

Next, let’s add percent labels to each segment. First, we’ll create a summary dataset that has the necessary labels.

Next, we’ll use this dataset and the geom_text function to add labels to each bar segment.

Figure 5.6: Segmented bar chart with value labeling

Now we have a graph that is easy to read and interpret.

5.1.5 Other plots

Mosaic plots provide an alternative to stacked bar charts for displaying the relationship between categorical variables. They can also provide more sophisticated statistical information. See Section 9.5 for details.

5.2 Quantitative vs. Quantitative

The relationship between two quantitative variables is typically displayed using scatterplots and line graphs.

5.2.1 Scatterplot

The simplest display of two quantitative variables is a scatterplot, with each variable represented on an axis. Here, we will use the Salaries dataset described in Appendix A.1. First, let’s plot experience (yrs.since.phd) vs. academic salary (salary) for college professors.

Figure 5.7: Simple scatterplot

As expected, salary tends to rise with experience, but the relationship may not be strictly linear. Note that salary appears to fall off after about 40 years of experience.

The geom_point function has options that can be used to change the

- color - point color

- size - point size

- shape - point shape

- alpha - point transparency. Transparency ranges from 0 (transparent) to 1 (opaque), and is a useful parameter when points overlap.

The functions scale_x_continuous and scale_y_continuous control the scaling on x and y axes respectively.

We can use these options and functions to create a more attractive scatterplot.

Figure 5.8: Scatterplot with color, transparency, and axis scaling

Again, see Customizing graphs (Section 11) for more details.

5.2.1.1 Adding best fit lines

It is often useful to summarize the relationship displayed in the scatterplot, using a best fit line. Many types of lines are supported, including linear, polynomial, and nonparametric (loess). By default, 95% confidence limits for these lines are displayed.

Figure 5.9: Scatterplot with linear fit line

Clearly, salary increases with experience. However, there seems to be a dip at the right end: professors with the most experience earn lower salaries. A straight line does not capture this non-linear effect. A line with a bend will fit better here.

A polynomial regression line provides a fit line of the form \[\hat{y} = \beta_{0} +\beta_{1}x + \beta_{2}x^{2} + \beta_{3}x^{3} + \beta_{4}x^{4} + \dots\]

Typically either a quadratic (one bend) or cubic (two bends) line is used. It is rarely necessary to use a higher order (>3) polynomial. Adding a quadratic fit line to the salary dataset produces the following result.

Figure 5.10: Scatterplot with quadratic fit line

Finally, a smoothed nonparametric fit line can often provide a good picture of the relationship. The default in ggplot2 is a loess line, which stands for locally weighted scatterplot smoothing (Cleveland 1979).

Figure 5.11: Scatterplot with nonparametric fit line

You can suppress the confidence bands by including the option se = FALSE.

Here is a complete (and more attractive) plot.

Figure 5.12: Scatterplot with nonparametric fit line

5.2.2 Line plot

When one of the two variables represents time, a line plot can be an effective method of displaying the relationship. For example, the code below displays the relationship between time (year) and life expectancy (lifeExp) in the United States between 1952 and 2007. The data comes from the gapminder dataset (Appendix A.8).

Figure 5.13: Simple line plot

It is hard to read individual values in the graph above. In the next plot, we’ll add points as well.

Figure 5.14: Line plot with points and labels

Time dependent data is covered in more detail under Time series (Section 8). Customizing line graphs is covered in Customizing graphs (Section 11).

5.3 Categorical vs. Quantitative

When plotting the relationship between a categorical variable and a quantitative variable, a large number of graph types are available. These include bar charts using summary statistics, grouped kernel density plots, side-by-side box plots, side-by-side violin plots, mean/sem plots, ridgeline plots, and Cleveland plots. Each is considered in turn.

5.3.1 Bar chart (on summary statistics)

In previous sections, bar charts were used to display the number of cases by category for a single variable (Section 4.1.1) or for two variables (Section 5.1). You can also use bar charts to display other summary statistics (e.g., means or medians) on a quantitative variable for each level of a categorical variable.

For example, the following graph displays the mean salary for a sample of university professors by their academic rank.

Figure 5.15: Bar chart displaying means

We can make it more attractive with some options. In particular, the factor function modifies the labels for each rank, the scale_y_continuous function improves the y-axis labeling, and the geom_text function adds the mean values to each bar.

Figure 5.16: Bar chart displaying means

The vjust parameter in the geom_text function controls vertical justification and nudges the text above the bars. See Annotations (Section 11.7) for more details.

One limitation of such plots is that they do not display the distribution of the data - only the summary statistic for each group. The plots below correct this limitation to some extent.

5.3.2 Grouped kernel density plots

One can compare groups on a numeric variable by superimposing kernel density plots (Section 4.2.2) in a single graph.

Figure 5.17: Grouped kernel density plots

The alpha option makes the density plots partially transparent, so that we can see what is happening under the overlaps. Alpha values range from 0 (transparent) to 1 (opaque). The graph makes clear that, in general, salary goes up with rank. However, the salary range for full professors is very wide.

5.3.3 Box plots

A boxplot displays the 25th percentile, median, and 75th percentile of a distribution. The whiskers (vertical lines) capture roughly 99% of a normal distribution, and observations outside this range are plotted as points representing outliers (see the figure below).

Figure 5.18: Side-by-side boxplots
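The "roughly 99%" figure can be checked numerically. The sketch below is in Python rather than the book's R, and it assumes the whiskers follow the common 1.5 times IQR rule (the default for ggplot2's geom_boxplot, although this section does not state it). The function names box_stats and normal_coverage are made up for illustration.

```python
from statistics import NormalDist, quantiles

def box_stats(values, k=1.5):
    """Quartiles, whisker ends and outliers under the k * IQR rule (assumed)."""
    q1, median, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence, upper_fence = q1 - k * iqr, q3 + k * iqr
    inside = [v for v in values if lower_fence <= v <= upper_fence]
    outliers = [v for v in values if v < lower_fence or v > upper_fence]
    return {
        "q1": q1, "median": median, "q3": q3,
        # Whiskers stop at the most extreme observations inside the fences
        "whisker_low": min(inside), "whisker_high": max(inside),
        "outliers": outliers,
    }

def normal_coverage(k=1.5):
    """Share of a normal distribution lying between the two fences."""
    z = NormalDist()
    q3 = z.inv_cdf(0.75)
    upper_fence = q3 + k * (2 * q3)   # the IQR of a standard normal is 2 * q3
    return z.cdf(upper_fence) - z.cdf(-upper_fence)

print(round(normal_coverage(), 3))   # 0.993
```

With k = 1.5 the fences sit about 2.7 standard deviations from the mean, which is where the "roughly 99%" comes from.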

Notched boxplots provide an approximate method for visualizing whether groups differ. Although not a formal test, if the notches of two boxplots do not overlap, there is strong evidence (95% confidence) that the medians of the two groups differ (McGill, Tukey, and Larsen 1978).

Figure 5.19: Side-by-side notched boxplots

In the example above, all three groups appear to differ.

One of the advantages of boxplots is that the width is usually not meaningful. This allows you to compare the distribution of many groups in a single graph.

5.3.4 Violin plots

Violin plots are similar to kernel density plots, but are mirrored and rotated 90°.

Figure 5.20: Side-by-side violin plots

A violin plot captures more of a distribution’s shape than a boxplot, but does not indicate the median or the middle 50% of the data. A useful variation is to superimpose boxplots on violin plots.

Figure 5.21: Side-by-side violin/box plots

Be sure to set the width parameter in geom_boxplot so that the boxplots fit within the violin plots. You may need to play around with this in order to find a value that works well. Since geoms are layered, it is also important for the geom_boxplot function to appear after the geom_violin function. Otherwise the boxplots will be hidden beneath the violin plots.

5.3.5 Ridgeline plots

A ridgeline plot (also called a joyplot) displays the distribution of a quantitative variable for several groups. Ridgeline plots are similar to kernel density plots with vertical faceting, but take up less room. They are created with the ggridges package.

Using the mpg dataset, let’s plot the distribution of city driving miles per gallon by car class.

Figure 5.22: Ridgeline graph with color fill

I’ve suppressed the legend here because it’s redundant (the distributions are already labeled on the y-axis). Unsurprisingly, pickup trucks have the poorest mileage, while subcompacts and compact cars tend to achieve the best ratings. However, there is a very wide range of gas mileage scores for these smaller cars.

Note that the possible overlap of distributions is the trade-off for a more compact graph. If the overlap is severe, you can add transparency using geom_density_ridges(alpha = n), where n ranges from 0 (transparent) to 1 (opaque). See the package vignette (https://cran.r-project.org/web/packages/ggridges/vignettes/introduction.html) for more details.

5.3.6 Mean/SEM plots

A popular method for comparing groups on a numeric variable is a mean plot with error bars. Error bars can represent standard deviations, standard errors of the means, or confidence intervals. In this section, we’ll calculate all three, but only plot means and standard errors to save space.

The resulting dataset is given below.

Figure 5.23: Mean plots with standard error bars

Although we plotted error bars representing the standard error, we could have plotted standard deviations or 95% confidence intervals. Simply replace se with sd or error in the aes option.
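To make the three kinds of error bar concrete, here is a small language-neutral sketch in Python (not the book's R code). The salary values are made up for illustration, and the column names n, mean, sd, se and ci are assumptions. The confidence interval uses a normal quantile as a large-sample approximation; with small groups a t quantile would give wider intervals.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def summarize(groups, level=0.95):
    """Mean, SD, SE and an approximate CI half-width for each group."""
    z = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for 95%
    rows = {}
    for name, values in groups.items():
        n = len(values)
        sd = stdev(values)    # sample standard deviation
        se = sd / sqrt(n)     # standard error of the mean
        rows[name] = {"n": n, "mean": mean(values), "sd": sd,
                      "se": se, "ci": z * se}
    return rows

# Hypothetical salaries (in thousands of dollars), for illustration only
example = {
    "AsstProf": [78, 80, 82, 85],
    "Prof": [100, 120, 140, 160],
}
summary = summarize(example)
for rank, row in summary.items():
    print(rank, round(row["mean"], 2), round(row["se"], 2))
```

An error bar then runs from mean minus the chosen quantity to mean plus that quantity. Within a group, sd is always the widest of the three and se the narrowest.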

We can use the same technique to compare salary across rank and sex. (Technically, this is not bivariate since we’re plotting rank, sex, and salary, but it seems to fit here.)

Figure 5.24: Mean plots with standard error bars by sex

Unfortunately, the error bars overlap. We can dodge the horizontal positions a bit to overcome this.

Figure 5.25: Mean plots with standard error bars (dodged)

Finally, let’s add some options to make the graph more attractive.

Figure 5.26: Mean/se plot with better labels and colors

This is a graph you could publish in a journal.

5.3.7 Strip plots

The relationship between a grouping variable and a numeric variable can also be displayed with a scatter plot. For example:

Figure 5.27: Categorical by quantitative scatterplot

These one-dimensional scatterplots are called strip plots. Unfortunately, overprinting of points makes interpretation difficult. The relationship is easier to see if the points are jittered, that is, if a small random number is added to each y-coordinate. To jitter the points, replace geom_point with geom_jitter.

Figure 5.28: Jittered plot

It is easier to compare groups if we use color.

Figure 5.29: Fancy jittered plot

The option legend.position = "none" is used to suppress the legend (which is not needed here). Jittered plots work well when the number of points is not overly large. Here, we can not only compare groups, but see the salaries of each individual faculty member. As a college professor myself, I want to know who is making more than $200,000 on a nine-month contract!

Finally, we can superimpose boxplots on the jitter plots.

Figure 5.30: Jitter plot with superimposed box plots

Several options were added to create this plot.

For the boxplot

- size = 1 makes the lines thicker

- outlier.color = "black" makes outliers black

- outlier.shape = 1 specifies circles for outliers

- outlier.size = 3 increases the size of the outlier symbol

For the jitter

- alpha = 0.5 makes the points more transparent

- width = .2 decreases the amount of jitter (.4 is the default)

Finally, the x and y axes are reversed using the coord_flip function (i.e., the graph is turned on its side).

Before moving on, it is worth mentioning the geom_boxjitter function provided in the ggpol package. It creates a hybrid boxplot: half boxplot, half scatterplot.

Figure 5.31: Using geom_boxjitter

Choose the approach that you find most useful.

5.3.8 Cleveland Dot Charts

Cleveland plots are useful when you want to compare each observation on a numeric variable, or compare a large number of groups on a numeric summary statistic. For example, say that you want to compare the 2007 life expectancy for Asian countries using the gapminder dataset.

Figure 5.32: Basic Cleveland dot plot

Comparisons are usually easier if the y-axis is sorted.

Figure 5.33: Sorted Cleveland dot plot

The difference in life expectancy between countries like Japan and Afghanistan is striking.

Finally, we can use options to make the graph more attractive by removing unnecessary elements, like the grey background panel and horizontal reference lines, and adding a line segment connecting each point to the y axis.

Figure 5.34: Fancy Cleveland plot

This last plot is also called a lollipop graph (I wonder why?).


Perspect Behav Sci, v.45(1); 2022 Mar

Quantitative Techniques and Graphical Representations for Interpreting Results from Alternating Treatment Design

Rumen Manolov

1 Department of Social Psychology and Quantitative Psychology, University of Barcelona, Passeig de la Vall d'Hebron 171, 08035 Barcelona, Spain

René Tanious

2 Methodology of Educational Sciences Research Group, KU Leuven - University of Leuven, Leuven, Belgium

Patrick Onghena

Associated data.

The data used for the illustrations are available from https://osf.io/ks4p2/

Multiple quantitative methods for single-case experimental design data have been applied to multiple-baseline, withdrawal, and reversal designs. The advanced data analytic techniques historically applied to single-case design data are primarily applicable to designs that involve clear sequential phases such as repeated measurement during baseline and treatment phases, but these techniques may not be valid for alternating treatment design (ATD) data where two or more treatments are rapidly alternated. Some recently proposed data analytic techniques applicable to ATD are reviewed. For ATDs with random assignment of condition ordering, Edgington’s randomization test is one type of inferential statistical technique that can complement descriptive data analytic techniques for comparing data paths and for assessing the consistency of effects across blocks in which different conditions are being compared. In addition, several recently developed graphical representations are presented, alongside the commonly used time series line graph. The quantitative and graphical data analytic techniques are illustrated with two previously published data sets. Apart from discussing the potential advantages provided by each of these data analytic techniques, barriers to applying them are reduced by disseminating open access software to quantify or graph data from ATDs.

Alternating treatment design (ATD) is a single-case experimental design (SCED), characterized by a rapid and frequent alternation of conditions (Barlow & Hayes, 1979; Kratochwill & Levin, 1980) that can be used to compare two (or more) different treatments, or a control and a treatment condition. An ATD can be understood as a type of “multielement design” (see Hammond et al., 2013; Kennedy, 2005; Riley-Tillman et al., 2020; see Barlow & Hayes, 1979, for a discussion), but it is important to mention two potential distinctions. On the one hand, the term “multielement design” is employed when an ATD is used for test-control pairwise functional analysis methodology (Hagopian et al., 1997; Hall et al., 2020; Hammond et al., 2013; Iwata et al., 1994). On the other hand, a multielement design can be used for assessing contextual variables and ATD for assessing interventions (Ledford et al., 2019). Previous publications on best practices for applying ATD recommend a minimum of five data points per condition, and limiting consecutive repeated exposure to two sessions of any one condition (What Works Clearinghouse, 2020; Wolery et al., 2018). The rapid alternation between conditions distinguishes ATDs from other SCEDs, which are characterized by more consecutive repeated measurements for the same condition (Onghena & Edgington, 2005).

In relation to the previously mentioned distinguishing features of ATDs, it is important to adequately identify under what conditions this design is most useful and should be recommended to applied researchers. ATDs are applicable to reversible behaviors (Wolery et al., 2018 ) that are sensitive to interventions that can be introduced and removed fast, prior to maintenance and generalization phases of treatment analyses. Thus, for nonreversible behaviors, an AB (Michiels & Onghena, 2019 ), a multiple-baseline and/or a changing-criterion design can be used (Ledford et al., 2019 ), whereas for reversible behaviors and interventions that require more time to demonstrate a treatment effect (or for an effect to wear off), an ABAB design is typically recommended.

ATD can be useful for applied researchers for several reasons. First, an ATD can be used to compare the efficiency of different interventions (Holcombe et al., 1994 ), instead of only comparing a baseline to an intervention condition. Second, an ATD enables researchers to perform, in a brief period of time, several attempts to demonstrate whether one condition is superior to the other. This rapid alternation of conditions is useful to reduce the threat of history because it decreases the likelihood that confounding external events occur exactly at the same time as the conditions change (Petursdottir & Carr, 2018 ). This rapid alternation is also useful to reduce the threat of maturation, which usually entails a gradual process (Petursdottir & Carr, 2018 ), because the total duration of the ATD study is likely to be shorter when conditions change rapidly and the same condition is not in place for many consecutive measurements. Third, an ATD entailing a random determination of the sequence of conditions further increases the level of internal validity and makes the design equivalent to medical N-of-1 trials, which also entail block randomization and are considered Level-1 empirical evidence for treatment effectiveness for individual cases (Howick et al., 2011 ). The use of randomization when determining the alternating sequence has been recommended (Barlow & Hayes, 1979 ; Horner & Odom, 2014 ; Kazdin, 2011 ) and is relatively common: Manolov and Onghena ( 2018 ) report 51% and Tanious and Onghena ( 2020 ) report 59% of the ATD studies use randomization in the design. The fact that randomization is not always used limits the data analysis options available to the investigator. In the following paragraphs, we refer to different options for determining the condition sequence for ATDs. It is important to note that the way in which the sequence is determined affects the number of options available for data analysis.

Among the possibilities for a random determination for condition ordering, a completely randomized design (Onghena & Edgington, 2005 ) entails that the conditions are randomly alternated without any restriction, but this could lead to problematic sequences such as AAAAABBBBB or AAABBBBBAA. Given that such sequences do not allow for a rapid alternation of conditions, other randomization techniques are more commonly used to select the ordering of conditions. In particular, a “random alternation with no condition repeating until all have been conducted” (Wolery et al., 2018 , p. 304) describes block randomization (Ledford, 2018 ) or a randomized block design (Onghena & Edgington, 2005 ), in which all conditions are grouped in blocks and the order of conditions within each block is determined at random. For instance, sequences such as AB-BA-BA-AB-BA and BA-AB-BA-BA-AB can be obtained. A randomly determined sequence arising from an ATD with block randomization is equivalent to the N-of-1 trials used in the health sciences (Guyatt et al., 1990 ; Krone et al., 2020 ; Nikles & Mitchell, 2015 ), in which several random-order blocks are referred to as multiple crossovers. Another option is to use “random alternation with no more than two consecutive sessions in a single condition” (Wolery et al., 2018 , p. 304). Such an ATD with restricted randomization could lead to a sequence such as ABBABAABAB or AABABBABBA, with the latter being impossible when using block randomization. An alternative procedure for determining the sequence is through counterbalancing (Barlow & Hayes, 1979 ; Kennedy, 2005 ), which is especially relevant if there are multiple conditions and participants. Counterbalancing enables different ordering of the conditions to be present for different participants. For instance, the sequence could be ABBABAAB for participant 1 and BAABABBA for participant 2.
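The three randomization schemes described above can be sketched in a few lines of Python. This is an illustration only, not the software used by the authors; the function names are hypothetical, and the restricted scheme is implemented by simple rejection sampling (redrawing until no condition appears more than twice in a row), which is one of several possible ways to do it.

```python
import random

def completely_randomized(n_per_condition, rng):
    """Shuffle n A's and n B's with no restriction on the ordering."""
    seq = ["A"] * n_per_condition + ["B"] * n_per_condition
    rng.shuffle(seq)
    return "".join(seq)

def block_randomized(n_blocks, rng):
    """Each block holds both conditions once, in random order."""
    return "-".join(rng.choice(["AB", "BA"]) for _ in range(n_blocks))

def max_run(seq):
    """Length of the longest run of identical consecutive conditions."""
    longest = run = 1
    for prev, cur in zip(seq, seq[1:]):
        run = run + 1 if cur == prev else 1
        longest = max(longest, run)
    return longest

def restricted_randomized(n_per_condition, rng, limit=2):
    """Redraw a completely randomized sequence until no run exceeds `limit`."""
    while True:
        seq = completely_randomized(n_per_condition, rng)
        if max_run(seq) <= limit:
            return seq

rng = random.Random(1)   # fixed seed only to make the sketch reproducible
print(completely_randomized(5, rng))
print(block_randomized(5, rng))
print(restricted_randomized(5, rng))
```

The helper max_run also shows why AAAAABBBBB is problematic (its longest run is 5), whereas ABBABAABAB respects the limit of two consecutive sessions.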

Aims and Organization

In the remaining sections of this manuscript, the emphasis is placed on data analysis options for ATD data. In particular, we illustrate the use of several quantitative techniques as complements to (rather than substitutes for) visual analysis. Quantifications are highlighted in relation to the importance of increasing the objectivity of the assessment of intervention effectiveness (Cox & Friedel, 2020 ; Laraway et al., 2019 ), reducing difficulties with accurately identifying clear differences between ATD data paths (Kranak et al., 2021 ), and making ATD results more likely to meet the requirements for including the data in meta-analyses (Onghena et al., 2018 ). The descriptive quantifications of differences in treatment effects and the inferential techniques (i.e., a randomization test) are applicable to both ATDs with block randomization and restricted randomization. However, the quantifications for assessing the consistency of effects across blocks are only applicable to ATDs with block randomization assignment for the conditions. The analytical options the current manuscript focuses on are scattered across several texts published since 2018. This article is aimed at providing behavior analysts with additional data analytic options, using freely available web-based software.

In the following text, we first discuss visual analysis, several descriptive quantitative techniques, and one inferential statistical technique. Next, we provide potential advantages for the proposed quantifications that complement visual inspection of graphed ATD data. Third, in order to enhance the applicability of the techniques and to make possible the replication of the results presented, we describe several existing software options for data analysis. Finally, we illustrate these quantitative data analytic techniques with two previously published ATD data sets.

Data Analysis Options for Alternating Treatment Design

Visual analysis.

Visual inspection has long been the first choice for investigators (Barlow et al., 2009 ; Sidman, 1960 ). The data analysis focuses on the degree to which the data path for one condition is differentiable from (and clearly superior to) the data path for the other condition (Ledford et al., 2019 ). The data paths are represented by lines connecting sessions within each condition of the ATD. Thus, visual analysis assesses the magnitude and consistency of the separation between conditions (Horner & Odom, 2014 ), also referred to as differentiation (Riley-Tillman et al., 2020 ) between the data paths (e.g., whether they cross or not and what is the vertical distance between them). This comparison usually incorporates consistency and level or magnitude of the difference in the dependent variable across the treatment conditions (Ledford et al., 2019 ).

Descriptive Data Analytic Techniques

The main strengths and limitations of the descriptive data analytic techniques reviewed are presented in Table 1. Examples of their use are provided in the section entitled “Illustrations and Comparison of the Results,” including a graphical representation of most of these techniques. In Table 1, we also refer to the particular figure that represents an application of a technique.

Summary of the main features of several data analytic techniques applicable to alternating treatments designs

Comparing Data Paths

Quantifying the difference between the data paths entails using observed behavior via direct measurement and linearly interpolated values. The linearly interpolated values are the specific locations within a data path for one condition; they lie between session data points from that condition. The interpolated data points represent the value that hypothetically would have been obtained for a given condition if it had taken place on a given measurement occasion; however, in the ATD, the alternative treatment condition is imposed instead.

One approach to comparing two or more data paths is to use the visual structured criterion (VSC; Lanovaz et al., 2019 ). The comparison is performed ordinally, that is, considering only whether one condition is superior to the other; it does not measure the degree of superiority (unlike the quantification described in the following paragraph). In particular, the VSC first quantifies the number of comparisons (measurement sessions) for which one condition is superior. Afterwards, the VSC compares this quantity to the cut-off points empirically derived by Lanovaz et al. ( 2019 ) for detecting superiority greater than one expected by chance.

A comparison involving actual and linearly interpolated values (abbreviated as ALIV, Manolov & Onghena, 2018 ) assesses the magnitude of effect, by focusing on the average distance between the data paths. Complementary to the visual structured criterion, ALIV quantifies the magnitude of the separation between data paths.
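The idea of comparing actual and linearly interpolated values can be illustrated with a simplified Python sketch. It is not the published ALIV implementation: it averages the difference B minus A over every session at which both data paths have an actual or interpolated value, and the function names are hypothetical. The data are the six measurements (6, 8, 9, 7, 5, 7) and the sequence ABBAAB that the article uses in its randomization-test example.

```python
def interpolate(path, t):
    """Linearly interpolate a data path [(session, value), ...] at session t.

    Returns None when t lies outside the sessions covered by the path.
    """
    for (t0, y0), (t1, y1) in zip(path, path[1:]):
        if t0 <= t <= t1:
            return y0 + (y1 - y0) * (t - t0) / (t1 - t0)
    return None

def mean_path_distance(path_a, path_b):
    """Average of (B - A) over sessions where both paths have a value."""
    sessions = sorted({t for t, _ in path_a} | {t for t, _ in path_b})
    diffs = []
    for t in sessions:
        a, b = interpolate(path_a, t), interpolate(path_b, t)
        if a is not None and b is not None:
            diffs.append(b - a)
    return sum(diffs) / len(diffs)

# Sequence ABBAAB with measurements 6, 8, 9, 7, 5, 7
path_a = [(1, 6), (4, 7), (5, 5)]   # sessions run under condition A
path_b = [(2, 8), (3, 9), (6, 7)]   # sessions run under condition B
print(round(mean_path_distance(path_a, path_b), 3))   # 2.0
```

Sessions 1 and 6 drop out of the comparison because only one data path is defined there; no interpolation is possible before a path's first point or after its last.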

Assessment of Level and Trend

Comparing data paths is common in visual analysis of graphed SCED data, and in many ways relies on implicit use of interpolated values between sessions for each data path. In addition to visual comparison, a quantification using only the obtained (observed) measurements may be preferable to a quantification using the interpolated values from the ALIV. A possible quantification using only observed values is the “average difference between successive observations” (ADISO; Manolov & Onghena, 2018 ). As suggested by Ledford et al. ( 2019 ), measurements from one condition are compared to adjacent measurements of the other condition. The calculations focus on level, whereas potential distinct trends are quantified via increasing or decreasing differences between adjacent values. For an ATD with block randomization of condition ordering, it is straightforward to perform the comparisons within blocks. However, a substantial limitation arises when ADISO is used for ATD data with restricted randomization because the analyst would have to decide exactly how to segment the alternation sequence (i.e., which comparisons to perform). With different segmentations, the quantification of the difference between conditions can lead to different results. The recommendation is to segment the sequence in such a way that it allows for the maximum number of possible comparisons (e.g., segment AABBABBAABBA as AABB-AB-BA-AB-BA and not as AAB-BA-BBAA-BBA). In cases where different segmentations lead to the same number of comparisons (e.g., BAABAABABABB can be segmented as BAA-BA-AB-AB-ABB and BA-AB-AAB-AB-ABB), a sensitivity analysis comparing the results across different segmentations is warranted.
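For an ATD with block randomization, the within-block comparison using only observed values can be sketched as follows. This is a minimal illustration of the idea behind ADISO, not the authors' software: it assumes blocks of exactly two sessions (one A, one B), and the function name is hypothetical. The data reuse the article's short example (ABBAAB; 6, 8, 9, 7, 5, 7).

```python
def block_differences(sequence, values):
    """B minus A within each consecutive pair of sessions (one block each)."""
    diffs = []
    for i in range(0, len(sequence), 2):
        block = dict(zip(sequence[i:i + 2], values[i:i + 2]))
        if set(block) != {"A", "B"}:
            raise ValueError("each block must contain A and B exactly once")
        diffs.append(block["B"] - block["A"])
    return diffs

diffs = block_differences("ABBAAB", [6, 8, 9, 7, 5, 7])
average_difference = sum(diffs) / len(diffs)
print(diffs, average_difference)   # [2, 2, 2] 2.0
```

The list of block differences is also what one would inspect for trend: differences that grow or shrink across blocks suggest that the data paths are diverging or converging. For restricted randomization the segmentation would have to be supplied by the analyst, which is exactly the limitation noted above.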

Taking into Account the Variability within Conditions

In ATD research, the measures of variability within a condition commonly reported are the (1) range and (2) standard deviation (Manolov & Onghena, 2018 ). Beyond reporting these values, the “visual aid and objective rule” (VAIOR, Manolov & Vannest, 2019 ) also includes the degree of variability within conditions. VAIOR assesses whether the data from one condition are superior to the data from the other condition, with the latter being summarized by a trend line and a variability band . The trend line is fitted by applying the Theil-Sen method (Vannest et al., 2012 ) to the data obtained in one condition (usually the baseline condition or another reference condition). The Theil-Sen method is a robust (i.e., resistant to outliers) technique based on finding the median of the slopes of all possible trend lines connecting all values pairwise. The variability band is constructed on the basis of the median absolute deviations from the median, which is a measure of scatter that is also resistant to outliers. The assessment in VAIOR focuses on whether the data from a given condition exceed the variability band. Similar to the visual structured criterion, a dichotomous decision is reached regarding whether there is sufficient evidence for the superiority of one condition over another with the degree of variability within each condition affecting this determination.
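The two robust ingredients named above, the Theil-Sen slope and the median absolute deviation, are easy to compute directly. The Python sketch below is illustrative only. The intercept (the median of y minus slope times x) and the way the band is placed around the trend line are assumptions made for this example; the exact VAIOR rule should be taken from Manolov and Vannest (2019).

```python
from itertools import combinations
from statistics import median

def theil_sen(x, y):
    """Slope = median of all pairwise slopes; intercept = median offset."""
    slopes = [(y[j] - y[i]) / (x[j] - x[i])
              for i, j in combinations(range(len(x)), 2) if x[j] != x[i]]
    slope = median(slopes)
    intercept = median(yi - slope * xi for xi, yi in zip(x, y))
    return slope, intercept

def mad(values):
    """Median absolute deviation from the median."""
    center = median(values)
    return median(abs(v - center) for v in values)

def above_band(x_ref, y_ref, x_new, y_new):
    """Flag new points lying above trend + MAD (band placement is assumed)."""
    slope, intercept = theil_sen(x_ref, y_ref)
    half_width = mad(y_ref)
    return [y > slope * x + intercept + half_width
            for x, y in zip(x_new, y_new)]

# One wild value (100) barely moves the robust estimates
print(theil_sen([1, 2, 3, 4, 5], [2, 4, 6, 8, 100]))   # (2.0, 0.0)
print(mad([2, 4, 6, 8, 100]))                          # 2
```

An ordinary least-squares line fitted to the same five points would be pulled strongly toward the outlier, which is why a resistant method is attractive for short and noisy single-case series.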

Consistency of Effects when Comparing Conditions

When analyzing SCED data, the consistency of the data within the same condition and the consistency of effects are two crucial aspects for establishing a functional relation between the independent variable, which causes the observed change (if any), and the dependent variable (Lane et al., 2017; Maggin et al., 2018). Two different approaches can be used for quantifying the consistency of effects for data obtained following an ATD with block randomization. The first, called “consistency of effects across blocks” (CEAB), is based on variance partitioning (Manolov et al., 2020): the total variance is divided into variance explained by the intervention effect, variance attributed to differences between blocks, and residual or interaction variance. The total variance is the sum of the squared deviations between any value and the mean of all values. The explained variance basically reflects the squared differences between the mean in each condition and the mean of all values, regardless of the condition in which they were obtained. The variance attributed to the blocks reflects the squared differences between the mean of the values from each block (mixing both conditions being compared) and the mean of all values. The residual or interaction variance represents the lack of consistency of the effect across blocks because the difference between conditions is larger in some blocks than others. The smaller the residual or interaction variability, the more consistent the effect was across blocks. In the context of this data analytic technique, several graphical representations are also suggested to facilitate interpreting the CEAB (Manolov et al., 2020), as shown in the section entitled “Illustrations and Comparison of the Results.”
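The variance partitioning can be made concrete with a short Python sketch. It assumes two conditions and one measurement per condition in every block, works with sums of squares, and obtains the residual (interaction) part by subtraction; it illustrates the decomposition described here rather than reproducing the CEAB software, and the function name is hypothetical.

```python
def partition_variance(blocks):
    """Split the total sum of squares for blocks given as (a, b) pairs."""
    k = len(blocks)
    values = [v for pair in blocks for v in pair]
    grand = sum(values) / len(values)
    mean_a = sum(a for a, _ in blocks) / k
    mean_b = sum(b for _, b in blocks) / k

    total = sum((v - grand) ** 2 for v in values)
    # Each condition mean is based on k values
    condition = k * ((mean_a - grand) ** 2 + (mean_b - grand) ** 2)
    # Each block mean is based on 2 values
    block = sum(2 * ((a + b) / 2 - grand) ** 2 for a, b in blocks)
    residual = total - condition - block
    return {"total": total, "condition": condition,
            "block": block, "residual": residual}

# Same effect (+2) in every block: no residual variability
print(partition_variance([(6, 8), (7, 9), (5, 7)]))
# Effects of +2, 0 and +4: the inconsistency shows up as residual
print(partition_variance([(6, 8), (7, 7), (5, 9)]))
```

In the first data set the total of 10 splits into 6 (condition) and 4 (blocks) with nothing left over. In the second, with the same condition means, 4 of the 10 units end up in the residual, reflecting an effect that varies from block to block.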

Another approach is based on a graphical representation called the modified Brinley plot (Blampied, 2017 ) in which the measurements in one condition are plotted (on the Y-axis) against the measurements in the other condition (on the X-axis). A single data point represents the block. For designs that have phases (e.g., a multiple-baseline design or an ABAB design), each point represents the mean of a phase for a condition, with baseline means represented on the X-axis and adjacent intervention phase means on the Y-axis. A diagonal line (slope = 1, intercept = 0) shows the absence of difference or the equality between conditions. If all points are above the diagonal line, there is consistent superiority of treatment over baseline (assuming a high score represents improvement). If all points are below the diagonal then the treatment made behavior worse. The consistency in the magnitude of the effect across blocks is assessed in relation to the degree to which the points are close to a parallel diagonal line marking the average difference between conditions. If the slope is not equal to 1.0, then the interpretation is a bit more complex but quite revealing. If, for example, the treatment works best when baseline values are low, then data points on the left end of the graph will be farther above the baseline than points on the right end.

The calculation is actually a mean absolute percentage error, computed when comparing different conditions, which is why this data analytical technique is abbreviated MAPEDIFF (Manolov & Tanious, 2020). Thus, the modified Brinley plot can be used to represent visually the outcome of the specific comparisons performed between measurements (in an ATD with block randomization) or between phases (in a multiple-baseline or an ABAB design). It also enables checking whether the direction of the difference is consistently in favor of one of the conditions, whether this difference is of sufficient magnitude for all comparisons (in case a meaningful cut-off point is available), whether treatment efficacy depends on baseline levels, and whether this difference is consistent across all comparisons.
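The reading of a modified Brinley plot can be mimicked numerically. The Python sketch below treats each block as a point (x = condition A, y = condition B), counts points relative to the diagonal y = x, and measures each point's vertical distance from the parallel line y = x + mean difference. It is only an illustration of the plot's logic with a hypothetical function name; it does not compute MAPEDIFF, whose exact formula is not given in this section.

```python
def brinley_summary(blocks):
    """Summarize (A, B) points against the diagonal and its parallel line."""
    diffs = [b - a for a, b in blocks]
    mean_diff = sum(diffs) / len(diffs)
    return {
        "above": sum(d > 0 for d in diffs),    # B higher than A
        "on": sum(d == 0 for d in diffs),      # no difference
        "below": sum(d < 0 for d in diffs),    # B lower than A
        "mean_difference": mean_diff,          # offset of the parallel line
        "distance_from_parallel": [abs(d - mean_diff) for d in diffs],
    }

print(brinley_summary([(6, 8), (7, 7), (5, 9)]))
```

For these three hypothetical blocks, two points lie above the diagonal and one on it, and the distances of 0, 2 and 2 from the parallel line indicate that the effect is not of the same size in every block.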

In both cases, the consistency of effects can be conceptualized as the degree to which variability of the effects observed in the different blocks are comparable to the average of these effects across blocks. Nonetheless, we prefer to separate the assessment of variability (usually assessed within each condition separately, before exploring whether there is a difference in variability across conditions), from the assessment of consistency of effects (which necessarily entails a comparison across conditions). These separate assessments are well-aligned with the recommendations for performing visual analysis (Lane et al., 2017 ; Ledford et al., 2019 ; Maggin et al., 2018 ).

Inferential Data Analytical Techniques

In the following section we refer to randomization tests as an inferential technique based on a stochastic element in the design (i.e., the use of randomization for determining the alternation sequence for conditions). In fact, randomization tests are historically the first statistical option proposed for ATD (Edgington, 1967; Kratochwill & Levin, 1980) and several studies using ATD have applied this analytical option (Weaver & Lloyd, 2019). However, despite the frequent use of randomization of condition assignment, randomization tests are not yet commonly applied to SCED data (Manolov & Onghena, 2018). The aim of the current section is to justify and encourage both the use of randomization of condition presentation and the employment of randomization tests as an inferential analytical tool, as well as to describe their main features. Other inferential techniques, based on random sampling, are not discussed here. The interested reader is referred to regression-based procedures for model-based inference (Onghena, 2020). In particular, these techniques allow modeling the average level of the measurements in each condition and, if desired, the trends. The readings suggested for regression-based options in the SCED context are Moeyaert et al. (2014), Shadish et al. (2013), and Solmi et al. (2014), whereas for options in the context of N-of-1 trials Krone et al. (2020) and Zucker et al. (2010) can be consulted.

What is Gained by Using Randomization of Condition Ordering

Randomization can address threats to internal validity and increase the scientific credibility of the results of a study, including SCED studies (Edgington, 1996; Kratochwill & Levin, 2010; Tate et al., 2013). For ATDs, alternating the sequence randomly makes it less likely that external events are systematically associated with the exact moments in which conditions change. Randomization, along with counterbalancing, has also been suggested for decreasing condition sequencing effects, i.e., the possibility that one condition consistently precedes the other condition (Horner & Odom, 2014; Kennedy, 2005). The usefulness of randomization for addressing threats to internal validity is likely the reason for the original introduction of ATDs as discussed by Barlow and Hayes (1979).

The inclusion of randomization of condition ordering in the design also allows the investigator to use a specific analytical technique called randomization tests (Edgington, 1967, 1975). Randomization tests are applicable across different kinds of SCEDs (Craig & Fisher, 2019; Heyvaert & Onghena, 2014; Kratochwill & Levin, 2010), as long as there is randomization in the design, such as the random assignment of conditions to measurement occasions (Edgington, 1980; Levin et al., 2019). Randomization tests are also flexible in the selection of a test statistic according to the type of effect expected (Heyvaert & Onghena, 2014). In particular, the test statistic can be defined according to whether the effect is expected to be a change in level or in slope (Levin et al., 2020), and whether the change is expected to be immediate or delayed (Levin et al., 2017; Michiels & Onghena, 2019). The test statistic is just the computation of a specific measure of the difference between conditions that is of interest to the researcher, for which a p-value will be obtained. Owing to the presence of randomization in condition ordering, there is no need to refer to any theoretical sampling distribution that would require random sampling. The test statistic is usually the mean difference actually obtained, due to its frequent use as a summary measure in ATD (Manolov & Onghena, 2018). Any aspect of the observed data (e.g., level, trend, overlap) or any effect size or quantification (e.g., ALIV; Manolov, 2019) can be used as a test statistic. To conduct the analysis, the test statistic is computed for the actual (obtained) alternation sequence (for instance, ABBAAB). Then the same test statistic is computed for all possible alternation sequences. In particular, the measurements obtained (e.g., 6, 8, 9, 7, 5, 7) maintain their order as they cannot be placed elsewhere due to the likely presence of autocorrelation in the data (Shadish & Sullivan, 2011).
What changes in each possible alternation sequence, from which the actual alternation sequence was selected at random, are the labels, which denote the treatment conditions. Thus, when constructing the randomization distribution, other possible orderings/labels such as ABABAB and ABABBA are assigned to each measurement in its original sequence (6, 8, 9, 7, 5, 7) and the test statistic is computed according to these labels. The randomization distribution is constructed by computing the test statistic for all possible alternation sequences, whose number is 2^k when there are k blocks or pairs of conditions and, for each block, a random selection is performed regarding which condition is first and which is second (Onghena & Edgington, 2005 ). The actually obtained test statistic is compared to the test statistics computed for all possible alternation sequences under the randomization scheme (these are called “pseudostatistics,” because they are computed for alternating sequences that did not actually take place, but are ones that could possibly occur). If an increase in the target behavior is desired, the p -value is the proportion of pseudostatistics as large as or larger than the actual test statistic. As an alternative, if a decrease is the aim of the intervention, the p -value is the proportion of pseudostatistics as small as or smaller than the actual test statistic.
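The procedure just described can be sketched in a few lines of Python. This is only an illustrative sketch, not the implementation used by the software cited in this article. It assumes block randomization of pairs (AB or BA within each block), takes B to be the intervention condition, and uses the mean difference B minus A as the test statistic. The function names are hypothetical, and the measurements and the actual sequence are the ones used as an example in the text.

```python
from itertools import product
from statistics import mean


def mean_difference(data, labels):
    """Test statistic: mean of condition B minus mean of condition A."""
    a = [x for x, lab in zip(data, labels) if lab == "A"]
    b = [x for x, lab in zip(data, labels) if lab == "B"]
    return mean(b) - mean(a)


def block_sequences(k):
    """Yield all 2^k alternation sequences made of k blocks, each AB or BA."""
    for blocks in product(("AB", "BA"), repeat=k):
        yield "".join(blocks)


def randomization_test(data, actual, increase_expected=True):
    """Return the observed statistic, the pseudostatistics, and the p-value.

    The measurements keep their original order; only the labels change.
    """
    k = len(data) // 2
    observed = mean_difference(data, actual)
    pseudo = [mean_difference(data, seq) for seq in block_sequences(k)]
    if increase_expected:
        extreme = sum(1 for s in pseudo if s >= observed)
    else:
        extreme = sum(1 for s in pseudo if s <= observed)
    return observed, pseudo, extreme / len(pseudo)


# Example values taken from the text; B as the intervention is an assumption.
data = [6, 8, 9, 7, 5, 7]
observed, pseudo, p_value = randomization_test(data, "ABBAAB")
print(observed, len(pseudo), p_value)
```

With three blocks there are 2^3 = 8 possible sequences. For these measurements the observed mean difference is 2, which is the largest of the eight values, so the one-sided p -value is 1/8 = 0.125. This also shows that, with only three blocks, the smallest attainable p -value is 0.125.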

As an additional strength, although their use requires random ordering of conditions for each participant, randomization tests are free from the assumptions of random sampling of participants from a population, normality or independence of the data (Dugard et al., 2012 ; Edgington & Onghena, 2007 ). This is important, because in the SCED context it cannot be assumed that either the individual or their behavior were sampled at random. Moreover, the data are autocorrelated and not necessarily normally distributed (Pustejovsky et al., 2019 ; Shadish & Sullivan, 2011 ; Solomon, 2014 ). Finally, when using a randomization test, missing data can be handled effectively in a straightforward way by randomizing a missing-data marker, as if it were just another observed value, when obtaining the value of the test statistic for all possible random assignments (De et al., 2020 ). There is no specific limitation that the use of randomization of condition ordering entails because it is also possible to combine randomization and counterbalancing (e.g., see Edgington & Onghena, 2007 , ch. 6). This could occur, for instance, when determining the sequence at random for participant 1 (e.g., ABABBAAB) and counterbalancing for participant 2 (i.e., BABAABBA).
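As a minimal sketch of the combination mentioned above, the sequence for one participant can be drawn at random block by block and then mirrored for a second participant. The function names are hypothetical and block randomization of pairs is assumed. The fixed sequence below is the one given as an example in the text.

```python
import random


def random_block_sequence(k, rng):
    """Draw k blocks at random, each block being AB or BA."""
    return "".join(rng.choice(("AB", "BA")) for _ in range(k))


def counterbalance(sequence):
    """Swap the condition labels so that every A becomes B and vice versa."""
    return sequence.translate(str.maketrans("AB", "BA"))


# Sequence for participant 1 as given in the text, mirrored for participant 2.
participant_1 = "ABABBAAB"
participant_2 = counterbalance(participant_1)
print(participant_1, participant_2)

# In an actual study, the first sequence would be drawn at random instead.
drawn = random_block_sequence(4, random.Random())
print(drawn, counterbalance(drawn))
```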

Interpreting the p-Value

The null hypothesis is that there is no effect of the intervention and thus the measurements obtained would have been the same under any of the possible randomizations (Jacobs, 2019 ), and in the ATD case, under any of the possible random sequences. The p -value quantifies the probability of obtaining a difference between conditions as large as, or larger than, the actually observed difference, conditional on there being no difference between the conditions. A small p -value entails that the difference observed is unlikely if the null hypothesis is true. Hence, either we observed an unlikely event or it is not true that the intervention is ineffective. If we don’t believe in unlikely events then our conclusion is tentatively that the intervention is effective, but a statistically significant result does not show the actual probability that the intervention is superior to another treatment or baseline.

In addition, it should be noted that p -values should not be interpreted in isolation. Other analytical methods, such as visual analysis and clinical significance measures, as well as assessment of social validity should be considered as well. We do not suggest that a p -value is the only way for tentatively inferring a substantial treatment effect, because the assessment of the presence of a functional relation is usually performed via visual analysis of graphed data (Maggin et al., 2018 ), especially in terms of the consistency of the effects (Ledford et al., 2019 ). However, the p -value based on the presence of randomization in the design is an objective quantification, which is valid thanks to the use of the randomization of condition ordering as it was actually implemented during the study.

Assessing Intervention Effectiveness: Beyond p-Values

A randomization test is not to be applied arbitrarily (Gigerenzer, 2004 ), nor is it free of interpretation from the researcher (see Perone, 1999 ). In fact, the researcher chooses a priori the method for choosing the condition ordering at random that is the most reasonable (e.g., block randomization vs. restricted randomization; Manolov, 2019 ) and which test statistic to use according to the expected effects (change in level or change in trend, immediate or delayed), in relation to the six data aspects emphasized by Kratochwill et al. ( 2013 ). Moreover, the researcher is encouraged to use other data analytic outcomes besides the p -value because other sources of data analysis are not discarded or disregarded when interpreting a p -value. In terms of inferential quantifications, confidence intervals are important for informing about the precision of estimates (Wilkinson & The Task Force on Statistical Inference, 1999 ) and they can be constructed based on randomization test inversion (Michiels et al., 2017 ). The visual representation of the data should always be inspected, and the individual values can be analyzed. The researchers can, and must, still seek the possible causes of specific outlier measurements according to their knowledge about the client, the context, and the target behavior. Finally, maintenance, generalization, and any subjective opinion expressed by the client or significant others can be considered, along with normative data (if available), to assess the social validity of the results (Horner et al., 2005 ; Kazdin, 1977 ).

The Need for Quantifications Complementing Visual Analysis

Visual and quantitative analyses should be used in conjunction.

The quantifications illustrated are not suggested as replacements for the visual inspection of graphed data. They should rather be understood as complementary. Such complements are necessary for several reasons. First, visual and quantitative analyses can achieve different goals. Visual analysis is used to shape an inductive and dynamic approach to identifying the factors controlling the target behavior (Johnson & Cook, 2019 ; Ledford et al., 2019 ), or to conduct response-guided experimentation (Ferron et al., 2017 ). For such purposes, visual analysis enables the researcher to remain in close contact with the data (Fahmie & Hanley, 2008 ; Perone, 1999 ). Quantifications used for a summative purpose can complement this by providing objective and easily communicable results that can be aggregated across participants, avoiding subjectivity and potential confirmation bias in visual analysis (Laraway et al., 2019 ). Such quantification facilitates the analysis of multiple data sets, making it easier than inspecting each one of them separately (Kranak et al., 2021 ). In addition, quantifications can be used to integrate the results across studies via meta-analysis (Jenson et al., 2007 ; Onghena et al., 2018 ), which is important considering the need for examining the external validity of treatment results. The complementarity between visual and quantitative analyses can be illustrated by data analytic techniques such as ALIV (Manolov & Onghena, 2018 ), which was developed to quantify exactly the same aspect that is visually evaluated: the degree of separation between data paths. A separation or differentiation may be so large that it is easy to identify via visual inspection (Perone, 1999 ), but a quantification can still be useful for communicating and aggregating the results via meta-analysis of SCED data.

Quantifications Commonly Accompany Visual Analysis

When presenting visual analysis, it is common to refer to visual aids (e.g., trend lines, which are based on quantitative methods) and descriptive quantifications, such as means and overlap indices (Lane & Gast, 2014 ; Ninci, 2019 ). In addition, probabilities (such as the ones arising from a null hypothesis test) have also been suggested as tools for aiding visual analysts: see the dual criteria (Fisher et al., 2003 ), which are commonly recommended and tested in the context of visual analysis (Falligant et al., 2020 ; Lanovaz et al., 2017 ; Wolfe et al., 2018 ).

Why Quantifications Are Useful

Quantifications can help mitigate some of the potential problems associated with visual inspection, such as insufficient interrater agreement (Ninci et al., 2015 ) or the fact that the graphical features of the plot can affect the result of the visual inspection (Dart & Radley, 2017 ; Kinney, 2020 ; Radley et al., 2018 ). A quantitative analysis requires several decisions to be made, which leads to “researcher degrees of freedom” (Hantula, 2019 ; Simmons et al., 2011 ) that can potentially affect the results. However, once an appropriate specific quantitative method is chosen, it yields the same result regardless of how the data are graphed.

Some of the quantifications illustrated in this article (i.e., Manolov et al., 2020 ; Manolov & Tanious, 2020 ) refer to an issue that is critical for SCEDs: replication (Kennedy, 2005 ; Sidman, 1960 ; Wolery et al., 2010 ; see also the special issue of Perspectives on Behavior Science on the “replication crisis”: Hantula, 2019 ) and the consistency of results across replications (Ledford, 2018 ; Maggin et al., 2018 ). Because p -values in the classical null hypothesis significance testing approach do not provide information about the replicability of an effect (Branch, 2014 ; Killeen, 2005 ), we consider it important to highlight quantifications that focus on the consistency of effects across replications.

Some Quantifications that are Easy to Understand and to Use

Applied researchers are likely to be more familiar with visual analysis and prefer avoiding the steep learning curve required for specialized skills such as advanced statistical analysis. However, most of the quantifications described in the current text are straightforward and intuitive. For instance, ALIV is simply a quantification of the distance between data paths, whereas ADISO is a quantification of the average difference between successive measurements. Likewise, a randomization test entails the calculation of a test statistic (e.g., mean difference between conditions) for the actual alternation sequence as compared with all possible alternation sequences that could have been obtained according to the randomization scheme. There is no need to assume a hypothetical sampling distribution with a normal distribution of data points. Simple quantifications, like the ones illustrated here, are more likely to be used by applied researchers 3 who are typically more familiar with visual inspection of graphically depicted data. Moreover, the quantifications illustrated here are implemented in intuitive and user-friendly software that is available for free (e.g., https://tamalkd.shinyapps.io/scda/ and https://manolov.shinyapps.io/ATDesign/ ).

Open Access Software for Data Analysis

List of software.

The current section provides a list of software that can be used when analyzing ATD data. All software listed, except for the Microsoft Excel macro for randomization tests ( https://ex-prt.weebly.com/; Gafurov & Levin, 2020 ), are user-friendly and freely available websites that do not require that the user has any specific program installed.

- Choosing an alternation sequence at random (i.e., designing the study) and performing a randomization test for data analysis (Heyvaert & Onghena, 2014 ; Levin et al., 2012 ; Onghena & Edgington, 1994 , 2005 ): https://tamalkd.shinyapps.io/scda and https://ex-prt.weebly.com/ .

- Comparing data paths via ALIV (Manolov & Onghena, 2018 ; with the possibility of obtaining a p -value for ALIV on the basis of randomization test, Manolov, 2019 ) and also as a basis for the visual structured criterion (Lanovaz et al., 2019 ): https://manolov.shinyapps.io/ATDesign .

- Comparing adjacent data points using ADISO (Manolov & Onghena, 2018 ): https://manolov.shinyapps.io/ATDesign .

- Visual aid and objective rule (VAIOR; Manolov & Vannest, 2019 ) for complementing visual analysis, using Theil-Sen trend and a variability band: https://manolov.shinyapps.io/TrendMAD .

- Assessment of consistency on the basis of variance partitioning (Manolov et al., 2020 ): https://manolov.shinyapps.io/ConsistencyRBD .

- Assessment of consistency in relation to the modified Brinley plot—MAPESIM and MAPEDIFF (Manolov & Tanious, 2020 ): https://manolov.shinyapps.io/Brinley .

Data Files to Use