The Case of the Killer Robot

The cast of characters.

The case of the Killer Robot by Richard Epstein

Sep 17, 2014

The case of the Killer Robot by Richard Epstein. As retold by Netiva Caftori NEIU Oct. 2004. When software engineering and computer ethics collide. The news. A robot operator, Bart Matthews, was killed by his robot, Robbie CX30, while at work.

Presentation Transcript

The case of the Killer Robot by Richard Epstein. As retold by Netiva Caftori, NEIU, Oct. 2004

When software engineering and computer ethics collide

The news • A robot operator, Bart Matthews, was killed by his robot, Robbie CX30, while at work. • A programmer, Randy Samuels, who wrote the faulty code was indicted for manslaughter. • Silicon Techtronics is Randy’s employer.

What really happened? • The robot malfunctioned & crushed its operator to death • Blood all over • Decapitated head • Num-lock key light not on • Numeric key pad bloody

Who is at fault? • The programmer who made a careless mistake? • The designer? • The robotics division? • The company? • The operator of the robot? • The robot?

Robbie CX10 & CX20 were experimental. • The robotics division chief, Johnson, put pressure on the project manager Reynolds to finish the project by January. • Johnson hired 20 new programmers in June against Reynolds’ will, by shifting resources. Robbie CX30 had to succeed. • Johnson only knew manufacturing hardware. One cannot speed software coding by adding more programmers. • Robbie CX30 was a step ahead in sophistication. • Johnson: Perfect software is an oxymoron.

Programmer personality • Randy’s home page: freedom for programmers • A hacker type • Enormous stress • Programmer was a prima donna: could not accept criticism or his own fallibility • Helpful but arrogant • Under pressure to finish project on time

A good programming team • Needs to have a whole array of personality types including: • An interaction-oriented person • Someone who keeps the peace and helps move things in a positive direction • Task-oriented people, but not all • A democratic team • Egoless programming

Team dynamics • The project was controversial from the beginning • Jan Anderson, a programmer, was fired after she attacked project manager Reynolds on his decision to use the waterfall methodology versus the prototype methodology. • Reynolds was replacing a dead project manager as a cost-saving measure instead of hiring someone from outside the company. He had never worked with robotics before.

More explanations • The earlier Robbie models processed transactions. Robbie CX30 was to interact with its operator, therefore the interface cannot be designed as an afterthought. Thus, a prototyping model is preferable where the users can use a prototype robot while in the design stage.

Software development life-cycle: Waterfall methodology • Requirements • Analysis • Design • Coding • Testing • Implementation • Maintenance • No standard practices

Quality control or quality assurance • Dynamic testing • Static testing • Unit testing: Black and white box testing • Integration testing • Prof Silber attested that test results were inconsistent with actual killer code test results
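The difference between black box and white box unit testing on the slide above can be illustrated with a minimal sketch. The function `clamp_speed` and its speed limit are hypothetical and are not taken from the Killer Robot case; they only stand in for a small unit under test. Black box cases are chosen from the stated behaviour alone, while white box cases are chosen by reading the code so that every branch and boundary is exercised.

```python
def clamp_speed(requested: int, limit: int = 100) -> int:
    """Hypothetical unit under test: cap a requested speed to the range [0, limit]."""
    if requested < 0:
        return 0
    if requested > limit:
        return limit
    return requested


def run_black_box_tests() -> bool:
    """Cases derived from the stated behaviour only, without reading the code."""
    return (
        clamp_speed(50) == 50        # an ordinary value passes through unchanged
        and clamp_speed(250) == 100  # a value above the limit is capped
        and clamp_speed(-5) == 0     # a negative value is raised to zero
    )


def run_white_box_tests() -> bool:
    """Cases chosen by reading the code so each branch and boundary is run."""
    return (
        clamp_speed(-1) == 0              # first branch: requested < 0
        and clamp_speed(0) == 0           # boundary that skips the first branch
        and clamp_speed(101) == 100       # second branch: requested > limit
        and clamp_speed(100) == 100       # boundary that reaches the final return
        and clamp_speed(7, limit=5) == 5  # second branch with a non-default limit
    )


if __name__ == "__main__":
    print("black box passed:", run_black_box_tests())
    print("white box passed:", run_white_box_tests())
```

Both kinds of test are dynamic testing in the slide's sense, because they run the code; static testing would inspect the source without executing it.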

When is the software good enough? • Errors can happen in any stage • Design stage: 2 alternatives: Waterfall & prototyping • Testing • User interface • The complexity of the task of building real-world software • Enormous stress

The company, Sili-Tech: two environments • Worker-friendly: Chip Creek facility. Prevent repetitive strain injuries. Special training for employees. Well designed workstations. Frequent breaks. • Worker-unfriendly: Silicon Valley plant. Workers criticized for novelties. No exercises or training encouraged. RSI frequent. More compensation claims.

Lawsuits • The wife of the murdered operator. • The indicted programmer himself. • More possible indictments.

Why was Randy Samuels indicted? • Sili-Tech promised to deliver robots that would cause no bodily harm. • So Randy was not legally responsible for the death of the robot operator. • Waterson, the president of Sili-Tech has contributed large sums to the re-election of judge McMurdock, who indicted Randy. • Functional requirements specify the behavior of the robot under exceptional conditions. Operator intervention may be needed. • Exceptional conditions were not mentioned in training of operators.

The characters: Waterson, Johnson, Reynolds, Randy, CX30, Jan, Cindy, Bart, Prof Gritty, Prof Silber

E-mail was not secure at Sili-Tech. It was found that: • Cindy Yardley faked the test results of the Killer code • Johnson told Cindy that the robot was safe and all would lose their jobs if the robot were not shipped on time • Randy stole some of his software, but admitted he had bugs in his Killer code

Ethical issues • Email privacy • Professional ethics: delivering bug-free software • Plagiarism-intellectual property • Honor confidentiality • ACM code of ethics

Five ethical tests: by Kallman & Grillo • The mom test: would you tell your mother? • The TV test: would you tell your story on national TV? • The smell test: does it smell bad? • The other person’s shoes test: would you like it if done to you? • The market test: would your action make a good sales pitch?

- More by User

The Case Of The Killer Robot - Praxis

Learning Outcomes. After this Lecture you should be able to:Identify the particular Ethical issues that the Case of the Killer Robot raisesAppreciate some of the Ethics of software developmentRealise that you need to consider your own views on these issues as a potential software developer. Current Situation.

2.19k views • 28 slides

The killer angels by michael shaara

The killer angels by michael shaara. Historical fiction Dramatizes the Battle of Gettysburg, Pennsylvania July 1 - 3, 1863 the most pivotal battle of the American civil war (1861-1865) The first major southern loss. GEneral Robert E. Lee. Commander, Army of Virginia

1.48k views • 16 slides

Attack of the Killer courses

Attack of the Killer courses. How course taking patterns affect retention. Jaclyn Cameron. Research Analyst DePaul University Chicago, IL Presented at National Symposium on Student Retention CSRDE 4 th Annual Conference September 29 th -October 1 st 2008. The Inspiration.

398 views • 22 slides

The Adventure of the Killer Python

ebook telling the story of some young divers and their mission to find lost treasure.

265 views • 8 slides

The Silent Killer

The Silent Killer. Welcome to Kenya. 40.5 million people. (CIA, 2012). The Silent Killer. Hypertension. (APHRC, 2010). (CDC, 2012). 12% of Kenyans are hypertensive Over 10% of Kenyan deaths are due to heart disease Urbanization is causing a rise in non-communicable disease.

527 views • 17 slides

The KILLER WHALE

The KILLER WHALE. BY LOGAN. WHY IS THE KILLER WHALE ENDANGERED?. HUNTED FOR MEAT. KILLER WHALE’S BODY. 16 TO 33 FEET LONG HOLD BREATH LONG TIME BIG FIN EVERY OCEAN BUT ARTIC 1500 TO 1600 POUNDS. WHAT IS BEING DONE TO PROTECT THE KILLER WHALE. HUNTING BANNED ILLEGAL TO HAVE MEAT

267 views • 5 slides

Progression of the Epstein-Barr Virus

Progression of the Epstein-Barr Virus. Role of the Latent Membrane Protein 1 (LMP1) in activating the PI3K/Akt Pathway Presented by Mitchell Cadet.

455 views • 19 slides

THE KILLER LIGER

THE KILLER LIGER. Brought to you by http://www.ligerworld.com LIGERWORLD.COM CONTAINS ABSOLUTE INFORMATION ABOUT LIGERS. THE KILLER LIGER. Before going into this incident there are few things that need to know about ligers Ligers are the biggest cats on earth.

238 views • 7 slides

The Zodiac Killer

The Zodiac Killer. By: Reed Fujan. Zodiac. Serial Killer One of the great unsolved serial killers. Police investigated over 2,500 potential suspects. Roamed parts of Northern California. Between December 1968 and 1969.

734 views • 10 slides

The Killer Angles

The Killer Angles. Born in 1928 in New Jersey Served as a paratrooper Was a boxer, policeman, and a writer Wrote more than 70 short stories, The Broken Place, The Herald, For Love of the Game, ect . died in 1988 at age 59. Michael Shaara. Southern generals . Northern Generals .

565 views • 41 slides

ERR Robot By WRC (Windsor Richard and Catherine)

ERR Robot By WRC (Windsor Richard and Catherine). Objectives. To build a robot to follow a path, and stop at the end. To use the same robot to push cans of different sizes to each side, and complete the course.

194 views • 8 slides

Pills of the Future By Richard Perry

Pills of the Future By Richard Perry. Nanoparticles That Can Be Taken Orally. Researchers from MIT have developed a new type of nanoparticle that can be delivered orally and absorbed through the digestive tract, allowing patients to simply take a pill instead of receiving injections. Benefits.

169 views • 9 slides

Evolving Killer Robot Tanks

Evolving Killer Robot Tanks. Jacob Eisenstein. Why Must We Fight?. Giving the people what they want. Essence of embodiment: Moving around and surviving in a hostile environment. Real creatures…. Tank fighting simulator Human players code tanks in Java ~4000 tank control programs online

288 views • 16 slides

The Zodiac Killer. By: Bianca Peluso and Urszula Karwowska. Who is the Zodiac Killer?. One of the greatest unsolved serial killer mysteries Operated in Northern California during the late 1960s and early 1970s

3.18k views • 13 slides

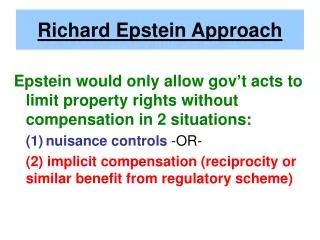

Richard Epstein Approach

Richard Epstein Approach. Epstein would only allow gov’t acts to limit property rights without compensation in 2 situations: (1) nuisance controls -OR- (2) implicit compensation (reciprocity or similar benefit from regulatory scheme). Richard Epstein Approach.

524 views • 38 slides

The Zodiac Killer. To: Ms. Ross By: Jana Mackinnon October 14,2014. Maps Of Where He Murdered….

1.33k views • 6 slides

Richard Trenton Chase Vampire Killer of Sacramento

Richard Trenton Chase Vampire Killer of Sacramento. California v. Richard Trenton Chase. ATTENTION!!!. Man slaughter Cannibalism Rape Decapitation Slaying of babies and toddlers DISCRETION IS ADVISED!!!. Objectives. Name two drugs that Chase heavily abused.

749 views • 33 slides

The Killer Angles. Born in 1928 in New Jersey Served as a paratrooper Was a boxer, policeman, and a writer Wrote more than 70 short stories, The Broken Place, The Herald, For Love of the Game, ect . died in 1988 at age 59. Michael Shaara. Southern generals. Northern Generals.

522 views • 41 slides

The Killer Clown

The Killer Clown. John Wayne Gacy. By: Julie McDonald. Childhood. Born in Chicago Illinois on March 17, 1942 Troubled relationship with his father Physically abusive Alcoholic

290 views • 13 slides

The Epstein - Barr Virus

The Epstein - Barr Virus. An introduction to one of the world’s most common viruses Presented by: Mary Shvarts. Shown here, Type 1 and Type 2 Downy cell, the common name for EBV infected lymphocytes. General Information on EBV. Known as EBV, it is one of the best studied viruses.

535 views • 10 slides

“The Silent Killer”

“The Silent Killer”. By: Cierra. Silent Killer. High blood pressure or Hypertension is called silent killer because it regularly it has no symptoms. Some are Irregular heartbeat Chest Pains Nose-bleeds. High Blood Pressure.

356 views • 10 slides

The Gout Killer

Gout is a form of arthritis caused by excess uric acid in the bloodstream. The symptoms of gout are due to the formation of uric acid crystals in the joints and the body's response to them. Gout most classically affects the joint in the base of the big toe.

81 views • 7 slides

Featured Topics

Featured series.

A series of random questions answered by Harvard experts.

Explore the Gazette

Read the latest.

One way to help big groups of students? Volunteer tutors.

Footnote leads to exploration of start of for-profit prisons in N.Y.

Should NATO step up role in Russia-Ukraine war?

Harvard Law School’s Bonnie Docherty attended the U.N. General Assembly where the first-ever resolution on “killer robots” was adopted.

Courtesy of Bonnie Docherty

‘Killer robots’ are coming, and U.N. is worried

Human rights specialist lays out legal, ethical problems of military weapons systems that attack without human guidance

Harvard Staff Writer

Long the stuff of science fiction, autonomous weapons systems, known as “killer robots,” are poised to become a reality, thanks to the rapid development of artificial intelligence.

In response, international organizations have been intensifying calls for limits or even outright bans on their use. The U.N. General Assembly in November adopted the first-ever resolution on these weapons systems, which can select and attack targets without human intervention.

To shed light on the legal and ethical concerns they raise, the Gazette interviewed Bonnie Docherty, lecturer on law at Harvard Law School’s International Human Rights Clinic (IHRC), who attended some of the U.N. meetings. Docherty is also a senior researcher in the Arms Division of Human Rights Watch. This interview has been condensed and edited for length and clarity.

What exactly are killer robots? To what extent are they a reality?

Killer robots, or autonomous weapons systems to use the more technical term, are systems that choose a target and fire on it based on sensor inputs rather than human inputs. They have been under development for a while but are rapidly becoming a reality. We are increasingly concerned about them because weapons systems with significant autonomy over the use of force are already being used on the battlefield.

What are those? Where have they been used?

It’s a little bit of a fine line about what counts as a killer robot and what doesn’t. Some systems that were used in Libya and others that have been used in [the ethnic and territorial conflict between Armenia and Azerbaijan over] Nagorno-Karabakh show significant autonomy in the sense that they can operate on their own to identify a target and to attack.

They’re called loitering munitions, and they are increasingly using autonomy that allows them to hover above the battlefield and wait to attack until they sense a target. Whether systems are considered killer robots depends on specific factors, such as the degree of human control, but these weapons show the dangers of autonomy in military technology.

What are the ethical concerns posed by killer robots?

The ethical concerns are very serious. Delegating life-and-death decisions to machines crosses a red line for many people. It would dehumanize violence and boil down humans to numerical values.

There’s also a serious risk of algorithmic bias, where discriminating against people based on race, gender, disability, and so forth is possible because machines may be intentionally programmed to look for certain criteria or may unintentionally become biased. There’s ample evidence that artificial intelligence can become biased. We in the human-rights community are very concerned about this being used in machines that are designed to kill.

What are the legal concerns?

There are also very serious legal concerns, such as the inability for machines to distinguish soldiers from civilians. They’re going to have particular trouble doing so in a climate where combatants mingle with civilians.

Even if the technology can overcome that problem, they lack human judgment. That is important for what’s called the proportionality test, where you’re weighing whether civilian harm is greater than military advantage.

That test requires a human to make an ethical and legal decision. That’s a judgment that cannot be programmed into a machine because there are an infinite number of situations that happen on the battlefield. And you can’t program a machine to deal with an infinite number of situations.

There is also concern about the lack of accountability.

We’re very concerned about the use of autonomous weapons systems falling in an accountability gap because, obviously, you can’t hold the weapon system itself accountable.

It would also be legally challenging and arguably unfair to hold an operator responsible for the actions of a system that was operating autonomously.

There are also difficulties with holding weapons manufacturers responsible under tort law. There is wide concern among states and militaries and other people that these autonomous weapons could fall through a gap in responsibility.

We also believe that the use of these weapons systems would undermine existing international criminal law by creating a gap in the framework; it would create something that’s not covered by existing criminal law.

There have been efforts to ban killer robots, but they have been unsuccessful so far. Why is that?

There are certain countries who oppose any action to address the concerns these weapons raise — Russia in particular. Some countries, such as the U.S., the U.K., and so forth, have supported nonbinding rules. We believe that a binding treaty is the only answer to dealing with such grave concerns.

Most of the countries that have sought either nonbinding rules or no action whatsoever are those that are in the process of developing the technology and clearly don’t want to give up the option to use it down the road.

There could be several reasons why it has been challenging to ban these weapons systems. These are weapons systems that are in development as we speak, unlike landmines and cluster munitions that had already existed for a while when they were banned. We could show documented harm with landmines and cluster munitions, and that is a factor that moves people to action — when there’s already harm.

In the case of blinding lasers, it was a pre-emptive ban [to ensure they will be used only on optical equipment, not on military personnel] so that is a good parallel for autonomous weapons systems, although these weapons systems are a much broader category. There’s also a different political climate right now. Worldwide, there is a much more conservative political climate, which has made disarmament more challenging.

What are your thoughts on the U.S. government’s position?

We believe they fall short of what a solution should be. We think that we need legally binding rules that are much stronger than what the U.S. government is proposing and that they need to include prohibitions of certain kinds of autonomous weapons systems, and they need to be obligations, not simply recommendations.

There was a recent development at the U.N. in the decade-long effort to ban these weapons systems.

The disarmament committee, the U.N. General Assembly’s First Committee on Disarmament and International Security, adopted in November by a wide margin —164 states in favor and five states against — a resolution calling on the U.N. secretary-general to gather the opinions of states and civil society on autonomous weapons systems.

Although it seems like a small step, it’s a crucial step forward. It changes the center of the discussion to the General Assembly from the Convention on Conventional Weapons (CCW), where progress has been very slow and has been blocked by Russia and other states. The U.N. General Assembly (UNGA) includes more states and operates by voting rather than consensus.

Many states, over 100, have said that they support a new treaty that includes prohibitions and regulations on autonomous weapons systems. That combined with the increased use of these systems in the real world have converged to drive action on the diplomatic front.

The secretary-general has said that by 2026 he would like to see a new treaty. A treaty emerging from the UNGA could consider a wider range of topics such as human rights, law, ethics, and not just be limited to humanitarian law. We’re very hopeful that this will be a game-shifter in the coming years.

What would an international ban on autonomous weapons systems entail, and how probable is it that this will happen soon?

We are calling for a treaty that has three parts to it. One is a ban on autonomous weapons systems that lack meaningful human control. We are also calling for a ban on autonomous weapons systems that target people because they raise concerns about discrimination and ethical challenges. The third prong is that we’re calling for regulations on all other autonomous weapons systems to ensure that they can only be used within a certain geographic or temporal scope. We’re optimistic that states will adopt such a treaty in the next few years.

An autonomous robot may have already killed people – here’s how the weapons could be more destabilizing than nukes

Professor of English, Macalester College

Disclosure statement

James Dawes does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

An updated version of this article was published on Dec. 20, 2021.

Autonomous weapon systems – commonly known as killer robots – may have killed human beings for the first time ever last year, according to a recent United Nations Security Council report on the Libyan civil war. History could well identify this as the starting point of the next major arms race, one that has the potential to be humanity’s final one.

Autonomous weapon systems are robots with lethal weapons that can operate independently, selecting and attacking targets without a human weighing in on those decisions. Militaries around the world are investing heavily in autonomous weapons research and development. The U.S. alone budgeted US$18 billion for autonomous weapons between 2016 and 2020.

Meanwhile, human rights and humanitarian organizations are racing to establish regulations and prohibitions on such weapons development. Without such checks, foreign policy experts warn that disruptive autonomous weapons technologies will dangerously destabilize current nuclear strategies, both because they could radically change perceptions of strategic dominance, increasing the risk of preemptive attacks, and because they could become combined with chemical, biological, radiological and nuclear weapons themselves.

As a specialist in human rights with a focus on the weaponization of artificial intelligence, I find that autonomous weapons make the unsteady balances and fragmented safeguards of the nuclear world – for example, the U.S. president’s minimally constrained authority to launch a strike – more unsteady and more fragmented.

Lethal errors and black boxes

I see four primary dangers with autonomous weapons. The first is the problem of misidentification. When selecting a target, will autonomous weapons be able to distinguish between hostile soldiers and 12-year-olds playing with toy guns? Between civilians fleeing a conflict site and insurgents making a tactical retreat?

The problem here is not that machines will make such errors and humans won’t. It’s that the difference between human error and algorithmic error is like the difference between mailing a letter and tweeting. The scale, scope and speed of killer robot systems – ruled by one targeting algorithm, deployed across an entire continent – could make misidentifications by individual humans like a recent U.S. drone strike in Afghanistan seem like mere rounding errors by comparison.

Autonomous weapons expert Paul Scharre uses the metaphor of the runaway gun to explain the difference. A runaway gun is a defective machine gun that continues to fire after a trigger is released. The gun continues to fire until ammunition is depleted because, so to speak, the gun does not know it is making an error. Runaway guns are extremely dangerous, but fortunately they have human operators who can break the ammunition link or try to point the weapon in a safe direction. Autonomous weapons, by definition, have no such safeguard.

Importantly, weaponized AI need not even be defective to produce the runaway gun effect. As multiple studies on algorithmic errors across industries have shown, the very best algorithms – operating as designed – can generate internally correct outcomes that nonetheless spread terrible errors rapidly across populations.

For example, a neural net designed for use in Pittsburgh hospitals identified asthma as a risk-reducer in pneumonia cases; image recognition software used by Google identified African Americans as gorillas; and a machine-learning tool used by Amazon to rank job candidates systematically assigned negative scores to women.

The problem is not just that when AI systems err, they err in bulk. It is that when they err, their makers often don’t know why they did and, therefore, how to correct them. The black box problem of AI makes it almost impossible to imagine morally responsible development of autonomous weapons systems.

The proliferation problems

The next two dangers are the problems of low-end and high-end proliferation. Let’s start with the low end. The militaries developing autonomous weapons now are proceeding on the assumption that they will be able to contain and control the use of autonomous weapons. But if the history of weapons technology has taught the world anything, it’s this: Weapons spread.

Market pressures could result in the creation and widespread sale of what can be thought of as the autonomous weapon equivalent of the Kalashnikov assault rifle: killer robots that are cheap, effective and almost impossible to contain as they circulate around the globe. “Kalashnikov” autonomous weapons could get into the hands of people outside of government control, including international and domestic terrorists.

High-end proliferation is just as bad, however. Nations could compete to develop increasingly devastating versions of autonomous weapons, including ones capable of mounting chemical, biological, radiological and nuclear arms. The moral dangers of escalating weapon lethality would be amplified by escalating weapon use.

High-end autonomous weapons are likely to lead to more frequent wars because they will decrease two of the primary forces that have historically prevented and shortened wars: concern for civilians abroad and concern for one’s own soldiers. The weapons are likely to be equipped with expensive ethical governors designed to minimize collateral damage, using what U.N. Special Rapporteur Agnes Callamard has called the “myth of a surgical strike” to quell moral protests. Autonomous weapons will also reduce both the need for and risk to one’s own soldiers, dramatically altering the cost-benefit analysis that nations undergo while launching and maintaining wars.

Asymmetric wars – that is, wars waged on the soil of nations that lack competing technology – are likely to become more common. Think about the global instability caused by Soviet and U.S. military interventions during the Cold War, from the first proxy war to the blowback experienced around the world today. Multiply that by every country currently aiming for high-end autonomous weapons.

Undermining the laws of war

Finally, autonomous weapons will undermine humanity’s final stopgap against war crimes and atrocities: the international laws of war. These laws, codified in treaties reaching as far back as the 1864 Geneva Convention, are the international thin blue line separating war with honor from massacre. They are premised on the idea that people can be held accountable for their actions even during wartime, that the right to kill other soldiers during combat does not give the right to murder civilians. A prominent example of someone held to account is Slobodan Milosevic, former president of the Federal Republic of Yugoslavia, who was indicted on charges of crimes against humanity and war crimes by the U.N.’s International Criminal Tribunal for the Former Yugoslavia.

But how can autonomous weapons be held accountable? Who is to blame for a robot that commits war crimes? Who would be put on trial? The weapon? The soldier? The soldier’s commanders? The corporation that made the weapon? Nongovernmental organizations and experts in international law worry that autonomous weapons will lead to a serious accountability gap.

To hold a soldier criminally responsible for deploying an autonomous weapon that commits war crimes, prosecutors would need to prove both actus reus and mens rea, Latin terms describing a guilty act and a guilty mind. This would be difficult as a matter of law, and possibly unjust as a matter of morality, given that autonomous weapons are inherently unpredictable. I believe the distance separating the soldier from the independent decisions made by autonomous weapons in rapidly evolving environments is simply too great.

The legal and moral challenge is not made easier by shifting the blame up the chain of command or back to the site of production. In a world without regulations that mandate meaningful human control of autonomous weapons, there will be war crimes with no war criminals to hold accountable. The structure of the laws of war, along with their deterrent value, will be significantly weakened.

A new global arms race

Imagine a world in which militaries, insurgent groups and international and domestic terrorists can deploy theoretically unlimited lethal force at theoretically zero risk at times and places of their choosing, with no resulting legal accountability. It is a world where the sort of unavoidable algorithmic errors that plague even tech giants like Amazon and Google can now lead to the elimination of whole cities.

In my view, the world should not repeat the catastrophic mistakes of the nuclear arms race. It should not sleepwalk into dystopia.

Killer Robot Arms: A Case-Study in Brain–Computer Interfaces and Intentional Acts

- Published: 06 April 2018

- Volume 28, pages 775–785 (2018)

- David Gurney

I use a hypothetical case study of a woman who replaces her biological arms with prostheses controlled through a brain–computer interface to explore how a BCI might interpret and misinterpret intentions. I define pre-veto intentions and post-veto intentions and argue that a failure of a BCI to differentiate between the two could lead to some troubling legal and ethical problems.

Adapted from Mason and Birch ( 2003 )

Many bioethicists have addressed the issue of whether one can truly separate therapeutic uses and enhancement uses into distinct categories. I will not retread that ground here. Rather, I will take the common-sense approach that certain uses of BCI fall clearly into the category of therapies, while other uses fall clearly into the category of enhancements. For example, a therapeutic use of BCI would be using the technology to enable someone who does not have the ability to speak to communicate through a computerized speech-generating device. On the other hand, using BCI to enable someone with fully functional limbs to operate a robotic arm capable of lifting two tons would qualify as enhancement. Whether or not the distinction between therapy and enhancement can be made in all cases is not germane to my argument in this paper. See Kass et al. (2003, p. 15) for a concise overview of the therapy versus enhancement debate.

Also, it doesn’t seem that all intentions must be conscious. Mele (2008, p. 3) writes: “The last time you signaled for a turn in your car, were you conscious of an intention to do that?” The only circumstance in which we would think that signaling was not intentional is if we genuinely felt surprise at the signal, either because we hit the signal by accident or because we felt that something outside of our control had forced us to signal.

Note that I say that these intentions arise spontaneously but that we are consciously aware of them. This is distinct from something like unconsciously turning a signal in the note above. In that case, the intention to signal for a turn is neither conscious nor spontaneous; rather, it is part of a larger plan to get somewhere. In the case of pre-veto intentions, it can be said that the intentions are formed unconsciously, i.e., spontaneously, yet we are still consciously aware of them having formed.

There is some research indicating that pre-veto intentions may result in action occurring before we are even aware that the pre-veto intention has been formed. See Mele (2008, pp. 1–12) for an overview. For my purposes, I will be assuming that there are at least some cases where we form pre-veto intentions and have the opportunity to veto them.

This distinction in responsibility is tracked by law, which provides greater punishment for murder committed with deliberation (first-degree murder) than for murder committed without deliberation, in the heat of the moment (manslaughter or second-degree murder, depending on the jurisdiction).

I.e., the scenarios I will present are plausible given current trends in BCI technology.

See Glannon (2007, p. 142): “Those with chips implanted in their brains can think about executing a bodily movement, and that thought alone can cause the movement…. But forming an intention or plan and executing it are two separate mental acts…. One can form an intention to act but not execute that intention… by changing one’s mind at the last moment”.

Ariz. Rev. Stat. Ann. § 13-1103(a)(2).

Ariz. Rev. Stat. Ann. § 13-1104(a)(1).

There are other questions that might be raised, such as whether the manufacturer of the BCI device bears any responsibility for the outcome here. For example, in Human Values, Ethics, and Design, Friedman and Kahn (2003) argue that human–computer interface designers have an obligation to implement these technologies in an ethical manner. As such, designers of human–computer interfaces must take human values into account when designing systems. While this topic could form its own paper using the same case study presented above, it is outside the scope of the issue presented here.

Ariz. Rev. Stat. Ann. § 13-201.

Circumstances where a defendant can be said to have had the mens rea but not the actus reus are usually those where the defendant has attempted to commit some crime but been unsuccessful in doing so, e.g., attempted murder.

Ariz. Rev. Stat. Ann. § 13-105(2).

Id. (10)(a).

The Oxford English Dictionary (2nd ed.). (1989). Oxford, England: Oxford University Press.

Bratman, M. (1984). Two faces of intention. Philosophical Review, 93(3), 375–405.

Friedman, B., & Kahn, P., Jr. (2003). Human values, ethics, and design. In J. Jacko & A. Sears (Eds.), The human–computer interaction handbook (pp. 1177–1201). Mahwah, NJ: Lawrence Erlbaum Associates Inc.

Gardener, J. (2012, December 18). Paralyzed mom controls robotic arm using her thoughts. ABC News. Retrieved from http://new.yahoo.com.

Glannon, W. (2007). Bioethics and the brain. New York, NY: Oxford University Press.

Kass, L., et al. (2003). Beyond therapy: Biotechnology and the pursuit of happiness. Washington, DC: President’s Council on Bioethics.

Klose, C. (2007). Connections that count: Brain–computer interface enables the profoundly paralyzed to communicate. NIH Medline Plus, 2(3), 20–21.

Long, J., Li, Y., Yu, T., et al. (2012). Hybrid brain–computer interface to control the direction and speed of a simulated or real wheelchair. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 20(5), 720–729.

Mason, S., & Birch, G. (2003). A general framework for brain–computer interface design. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 11(1), 70–85.

Mele, A. (2008). Proximal intentions, intention-reports, and vetoing. Philosophical Psychology, 21(1), 1–14.

Sunny, T. D., Aparna, T., Neethu, P., et al. (2016). Robotic arm with brain–computer interface. Procedia Technology, 24, 1089–1096.

Vallabhaneni, A., Wong, T., & He, B. (2005). Brain–computer interface. In B. He (Ed.), Neuronal engineering (pp. 85–121). New York: Springer.

van de Laar, B., Gurkok, H., & Plass-Oude Bos, D. (2013). Experiencing BCI control in a popular computer game. IEEE Transactions on Computational Intelligence and AI in Games, 5(2), 176–184.

Wan, W. (2017, November 15). New robotic hand named after Luke Skywalker helps amputee touch and feel again. Washington Post. Retrieved from http://www.washingtonpost.com.

Author information

Authors and affiliations.

James E. Rogers College of Law, University of Arizona, 1145 N. Mountain Avenue, Tucson, AZ, 85719, USA

David Gurney

Corresponding author

Correspondence to David Gurney .

About this article

Gurney, D. Killer Robot Arms: A Case-Study in Brain–Computer Interfaces and Intentional Acts. Minds & Machines 28, 775–785 (2018). https://doi.org/10.1007/s11023-018-9462-9

Received: 29 November 2017

Accepted: 03 April 2018

Published: 06 April 2018

Issue Date: December 2018

DOI: https://doi.org/10.1007/s11023-018-9462-9

- Brain–computer interface

COMMENTS

Killer Robot Case Study Reference. Killer robot case study. BCS Code of Conduct. (1) OEC - Silicon Techtronics Employee Admits Faking Software Tests. 2018. ...

The list of topics which are being investigated for Assignment 1 all relate to the case study. "The Case of the Killer Robot" (henceforth to be abbreviated to CKR) was originally conceived by Richard Epstein in 1989 as a teaching aid. He subsequently made the materials freely available on the WWW for use by other academics and there are ...

The Case of the Killer Robot is a detailed scenario that combines elements of software engineering and computer ethics. The scenario consists of fictitious articles that touch on specific issues in software engineering and computer ethics. The articles discuss topics such as programmer psychology, team dynamics, user interfaces, software ...

Presentation Transcript. 1. The Case Of The Killer Robot - Praxis: The Smoking Gun. Mike Wicks, 8th November 2005. 3. Current Situation: Randy Samuels is indicted on charges of manslaughter. Samuels was formerly employed as a programmer at Silicon Techtronics Inc. The charge involves the death of Bart Matthews, who was killed last May by an ...

The Case of the Killer Robot This scenario is intended to raise issues of computer ethics and software engineering. The people and institutions involved in this scenario are entirely fictitious (except for references to Carnegie Mellon and Purdue universities and to the venerable computer scientists Ben Shneiderman and Jim Foley).

The Case of the Killer Robot. Richard G. Epstein, West Chester University of PA, West Chester, PA 19383. The case of the killer robot consists of seven newspaper articles, one journal article and one magazine interview. This scenario is intended to raise issues in computer ethics and in software engineering.

Case of the Killer Robot. Author(s): Richard G. Epstein. Richard G. Epstein, West Chester University of Pennsylvania. Mike Melamed, CWRU 2000. The Case of the Killer Robot is a detail ...

The "Case of the Killer Robot" is a computer ethics scenario that explores issues in software engineering and computer ethics. It is in two parts. Part I is the scenario itself. It uses the waterfall software process as a skeletal framework for presenting factors that played a role in the death of a robot operator.

Third, The Case of the Killer Robot (Epstein, 1994a, 1994b) articles are used as a case study on ethics in software engineering. The case study consists of nine articles and is about 70 ...

Mar 26, 2021. The Case of the Killer Robot tells a fictional story of Robbie CX30, a robot programmed to automate an assembly line, which ended up taking the life of its operator, Bart Matthews. The story particularly tackles specific issues in software engineering and computer ethics such as software process models, team dynamics, user ...

The Case of the Killer Robot consists of newspaper articles, a journal article, and a magazine interview. This scenario is intended to raise issues of computer ethics and software engineering. The people and institutions involved in this scenario are entirely fictitious (except for references to Carnegie Mellon and Purdue

The following article provides the rationale behind the design of computer ethics scenarios such as this one and suggests how they can be used in other courses: Epstein, Richard G. "The Use of Computer Ethics Scenarios in Software Engineering Education: The Case of the Killer Robot." Software Engineering Education: Proceedings of the 7th SEI ...

The Case of the Killer Robot is a detailed scenario that combines elements of software ... She recounted, "Ray gave us a big multimedia presentation, with slides. Author(s): Richard G. Epstein ...

The "Killer Robot" Project Mired in Controversy Right from Start, the Case of the Virtual Epidemic, and Varieties of Teamwork Experience. Partial table of contents: PRINT MEDIA. Robot Kills Operator in Grisly Accident. McMurdock Promises Justice in "Killer Robot" Case. "Killer Robot" Developers Worked Under Enormous Stress. "Killer Robot" Programmer Was Prima Donna, Co-Workers Claim.

Long the stuff of science fiction, autonomous weapons systems, known as "killer robots," are poised to become a reality, thanks to the rapid development of artificial intelligence. In response, international organizations have been intensifying calls for limits or even outright bans on their use. The U.N. General Assembly in November adopted ...

An updated version of this article was published on Dec. 20, 2021. Autonomous weapon systems - commonly known as killer robots - may have killed human beings for the first time ...

In August, the "Case of the Killer Robot" was a 100-page computer ethics scenario. Now, in December, it is nearly 200 pages, and at least four more articles are planned. The expanded version ...

The case studies show that the two historical processes display most of the advantageous factors, whereas the current process on LAWS displays some while lacking others. Ban on blinding laser weapons. ... Blinding is cruel; mines maim civilians. In the case of LAWS, the term "killer robots" aims at simplification as well. But the term is a ...
