Have a language expert improve your writing

Run a free plagiarism check in 10 minutes, generate accurate citations for free.

- Knowledge Base

Methodology

- Random Assignment in Experiments | Introduction & Examples

Random Assignment in Experiments | Introduction & Examples

Published on March 8, 2021 by Pritha Bhandari . Revised on June 22, 2023.

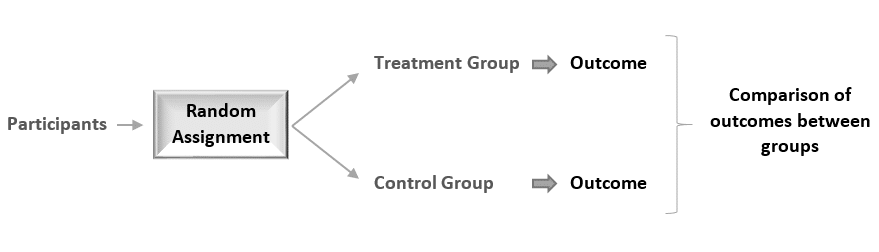

In experimental research, random assignment is a way of placing participants from your sample into different treatment groups using randomization.

With simple random assignment, every member of the sample has a known or equal chance of being placed in a control group or an experimental group. Studies that use simple random assignment are also called completely randomized designs .

Random assignment is a key part of experimental design . It helps you ensure that all groups are comparable at the start of a study: any differences between them are due to random factors, not research biases like sampling bias or selection bias .

Table of contents

Why does random assignment matter, random sampling vs random assignment, how do you use random assignment, when is random assignment not used, other interesting articles, frequently asked questions about random assignment.

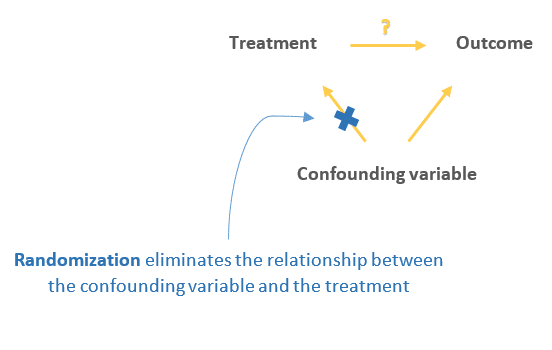

Random assignment is an important part of control in experimental research, because it helps strengthen the internal validity of an experiment and avoid biases.

In experiments, researchers manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. To do so, they often use different levels of an independent variable for different groups of participants.

This is called a between-groups or independent measures design.

You use three groups of participants that are each given a different level of the independent variable:

- a control group that’s given a placebo (no dosage, to control for a placebo effect ),

- an experimental group that’s given a low dosage,

- a second experimental group that’s given a high dosage.

Random assignment to helps you make sure that the treatment groups don’t differ in systematic ways at the start of the experiment, as this can seriously affect (and even invalidate) your work.

If you don’t use random assignment, you may not be able to rule out alternative explanations for your results.

- participants recruited from cafes are placed in the control group ,

- participants recruited from local community centers are placed in the low dosage experimental group,

- participants recruited from gyms are placed in the high dosage group.

With this type of assignment, it’s hard to tell whether the participant characteristics are the same across all groups at the start of the study. Gym-users may tend to engage in more healthy behaviors than people who frequent cafes or community centers, and this would introduce a healthy user bias in your study.

Although random assignment helps even out baseline differences between groups, it doesn’t always make them completely equivalent. There may still be extraneous variables that differ between groups, and there will always be some group differences that arise from chance.

Most of the time, the random variation between groups is low, and, therefore, it’s acceptable for further analysis. This is especially true when you have a large sample. In general, you should always use random assignment in experiments when it is ethically possible and makes sense for your study topic.

Prevent plagiarism. Run a free check.

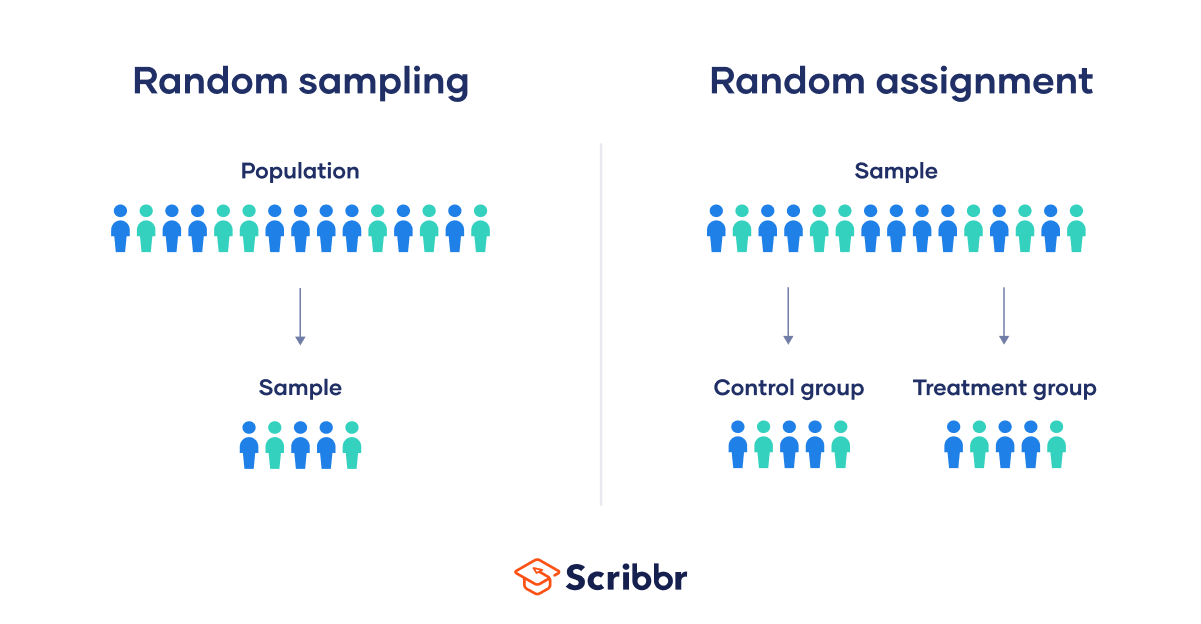

Random sampling and random assignment are both important concepts in research, but it’s important to understand the difference between them.

Random sampling (also called probability sampling or random selection) is a way of selecting members of a population to be included in your study. In contrast, random assignment is a way of sorting the sample participants into control and experimental groups.

While random sampling is used in many types of studies, random assignment is only used in between-subjects experimental designs.

Some studies use both random sampling and random assignment, while others use only one or the other.

Random sampling enhances the external validity or generalizability of your results, because it helps ensure that your sample is unbiased and representative of the whole population. This allows you to make stronger statistical inferences .

You use a simple random sample to collect data. Because you have access to the whole population (all employees), you can assign all 8000 employees a number and use a random number generator to select 300 employees. These 300 employees are your full sample.

Random assignment enhances the internal validity of the study, because it ensures that there are no systematic differences between the participants in each group. This helps you conclude that the outcomes can be attributed to the independent variable .

- a control group that receives no intervention.

- an experimental group that has a remote team-building intervention every week for a month.

You use random assignment to place participants into the control or experimental group. To do so, you take your list of participants and assign each participant a number. Again, you use a random number generator to place each participant in one of the two groups.

To use simple random assignment, you start by giving every member of the sample a unique number. Then, you can use computer programs or manual methods to randomly assign each participant to a group.

- Random number generator: Use a computer program to generate random numbers from the list for each group.

- Lottery method: Place all numbers individually in a hat or a bucket, and draw numbers at random for each group.

- Flip a coin: When you only have two groups, for each number on the list, flip a coin to decide if they’ll be in the control or the experimental group.

- Use a dice: When you have three groups, for each number on the list, roll a dice to decide which of the groups they will be in. For example, assume that rolling 1 or 2 lands them in a control group; 3 or 4 in an experimental group; and 5 or 6 in a second control or experimental group.

This type of random assignment is the most powerful method of placing participants in conditions, because each individual has an equal chance of being placed in any one of your treatment groups.

Random assignment in block designs

In more complicated experimental designs, random assignment is only used after participants are grouped into blocks based on some characteristic (e.g., test score or demographic variable). These groupings mean that you need a larger sample to achieve high statistical power .

For example, a randomized block design involves placing participants into blocks based on a shared characteristic (e.g., college students versus graduates), and then using random assignment within each block to assign participants to every treatment condition. This helps you assess whether the characteristic affects the outcomes of your treatment.

In an experimental matched design , you use blocking and then match up individual participants from each block based on specific characteristics. Within each matched pair or group, you randomly assign each participant to one of the conditions in the experiment and compare their outcomes.

Sometimes, it’s not relevant or ethical to use simple random assignment, so groups are assigned in a different way.

When comparing different groups

Sometimes, differences between participants are the main focus of a study, for example, when comparing men and women or people with and without health conditions. Participants are not randomly assigned to different groups, but instead assigned based on their characteristics.

In this type of study, the characteristic of interest (e.g., gender) is an independent variable, and the groups differ based on the different levels (e.g., men, women, etc.). All participants are tested the same way, and then their group-level outcomes are compared.

When it’s not ethically permissible

When studying unhealthy or dangerous behaviors, it’s not possible to use random assignment. For example, if you’re studying heavy drinkers and social drinkers, it’s unethical to randomly assign participants to one of the two groups and ask them to drink large amounts of alcohol for your experiment.

When you can’t assign participants to groups, you can also conduct a quasi-experimental study . In a quasi-experiment, you study the outcomes of pre-existing groups who receive treatments that you may not have any control over (e.g., heavy drinkers and social drinkers). These groups aren’t randomly assigned, but may be considered comparable when some other variables (e.g., age or socioeconomic status) are controlled for.

Here's why students love Scribbr's proofreading services

Discover proofreading & editing

If you want to know more about statistics , methodology , or research bias , make sure to check out some of our other articles with explanations and examples.

- Student’s t -distribution

- Normal distribution

- Null and Alternative Hypotheses

- Chi square tests

- Confidence interval

- Quartiles & Quantiles

- Cluster sampling

- Stratified sampling

- Data cleansing

- Reproducibility vs Replicability

- Peer review

- Prospective cohort study

Research bias

- Implicit bias

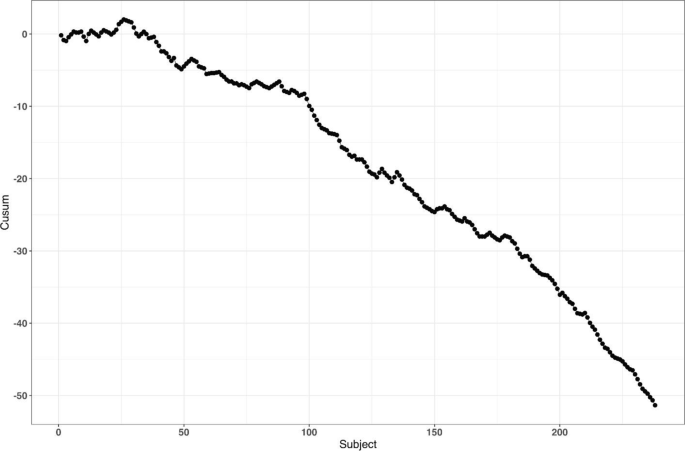

- Cognitive bias

- Placebo effect

- Hawthorne effect

- Hindsight bias

- Affect heuristic

- Social desirability bias

In experimental research, random assignment is a way of placing participants from your sample into different groups using randomization. With this method, every member of the sample has a known or equal chance of being placed in a control group or an experimental group.

Random selection, or random sampling , is a way of selecting members of a population for your study’s sample.

In contrast, random assignment is a way of sorting the sample into control and experimental groups.

Random sampling enhances the external validity or generalizability of your results, while random assignment improves the internal validity of your study.

Random assignment is used in experiments with a between-groups or independent measures design. In this research design, there’s usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable.

In general, you should always use random assignment in this type of experimental design when it is ethically possible and makes sense for your study topic.

To implement random assignment , assign a unique number to every member of your study’s sample .

Then, you can use a random number generator or a lottery method to randomly assign each number to a control or experimental group. You can also do so manually, by flipping a coin or rolling a dice to randomly assign participants to groups.

Cite this Scribbr article

If you want to cite this source, you can copy and paste the citation or click the “Cite this Scribbr article” button to automatically add the citation to our free Citation Generator.

Bhandari, P. (2023, June 22). Random Assignment in Experiments | Introduction & Examples. Scribbr. Retrieved August 12, 2024, from https://www.scribbr.com/methodology/random-assignment/

Is this article helpful?

Pritha Bhandari

Other students also liked, guide to experimental design | overview, steps, & examples, confounding variables | definition, examples & controls, control groups and treatment groups | uses & examples, get unlimited documents corrected.

✔ Free APA citation check included ✔ Unlimited document corrections ✔ Specialized in correcting academic texts

- Open access

- Published: 16 August 2021

A roadmap to using randomization in clinical trials

- Vance W. Berger 1 ,

- Louis Joseph Bour 2 ,

- Kerstine Carter 3 ,

- Jonathan J. Chipman ORCID: orcid.org/0000-0002-3021-2376 4 , 5 ,

- Colin C. Everett ORCID: orcid.org/0000-0002-9788-840X 6 ,

- Nicole Heussen ORCID: orcid.org/0000-0002-6134-7206 7 , 8 ,

- Catherine Hewitt ORCID: orcid.org/0000-0002-0415-3536 9 ,

- Ralf-Dieter Hilgers ORCID: orcid.org/0000-0002-5945-1119 7 ,

- Yuqun Abigail Luo 10 ,

- Jone Renteria 11 , 12 ,

- Yevgen Ryeznik ORCID: orcid.org/0000-0003-2997-8566 13 ,

- Oleksandr Sverdlov ORCID: orcid.org/0000-0002-1626-2588 14 &

- Diane Uschner ORCID: orcid.org/0000-0002-7858-796X 15

for the Randomization Innovative Design Scientific Working Group

BMC Medical Research Methodology volume 21 , Article number: 168 ( 2021 ) Cite this article

30k Accesses

40 Citations

14 Altmetric

Metrics details

Randomization is the foundation of any clinical trial involving treatment comparison. It helps mitigate selection bias, promotes similarity of treatment groups with respect to important known and unknown confounders, and contributes to the validity of statistical tests. Various restricted randomization procedures with different probabilistic structures and different statistical properties are available. The goal of this paper is to present a systematic roadmap for the choice and application of a restricted randomization procedure in a clinical trial.

We survey available restricted randomization procedures for sequential allocation of subjects in a randomized, comparative, parallel group clinical trial with equal (1:1) allocation. We explore statistical properties of these procedures, including balance/randomness tradeoff, type I error rate and power. We perform head-to-head comparisons of different procedures through simulation under various experimental scenarios, including cases when common model assumptions are violated. We also provide some real-life clinical trial examples to illustrate the thinking process for selecting a randomization procedure for implementation in practice.

Restricted randomization procedures targeting 1:1 allocation vary in the degree of balance/randomness they induce, and more importantly, they vary in terms of validity and efficiency of statistical inference when common model assumptions are violated (e.g. when outcomes are affected by a linear time trend; measurement error distribution is misspecified; or selection bias is introduced in the experiment). Some procedures are more robust than others. Covariate-adjusted analysis may be essential to ensure validity of the results. Special considerations are required when selecting a randomization procedure for a clinical trial with very small sample size.

Conclusions

The choice of randomization design, data analytic technique (parametric or nonparametric), and analysis strategy (randomization-based or population model-based) are all very important considerations. Randomization-based tests are robust and valid alternatives to likelihood-based tests and should be considered more frequently by clinical investigators.

Peer Review reports

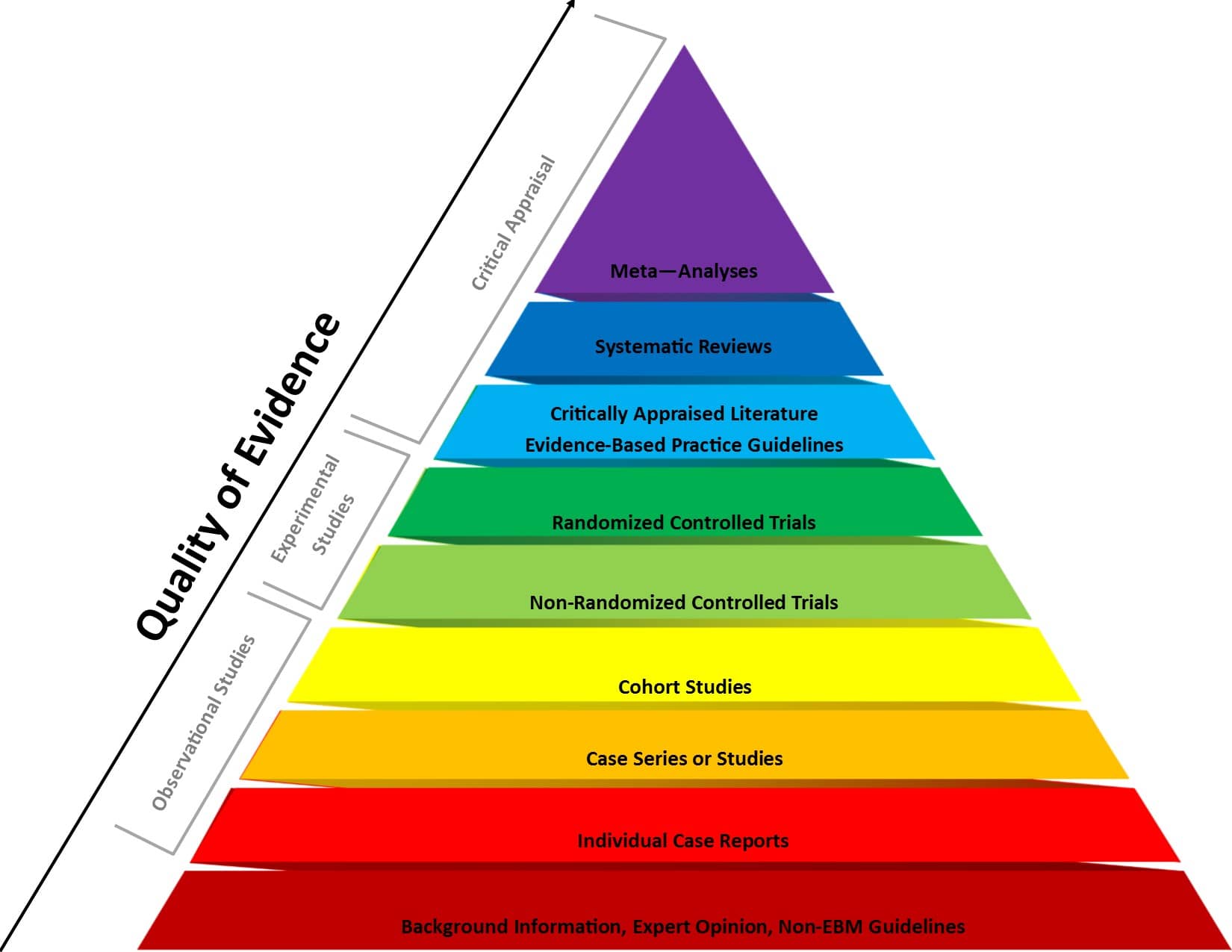

Various research designs can be used to acquire scientific medical evidence. The randomized controlled trial (RCT) has been recognized as the most credible research design for investigations of the clinical effectiveness of new medical interventions [ 1 , 2 ]. Evidence from RCTs is widely used as a basis for submissions of regulatory dossiers in request of marketing authorization for new drugs, biologics, and medical devices. Three important methodological pillars of the modern RCT include blinding (masking), randomization, and the use of control group [ 3 ].

While RCTs provide the highest standard of clinical evidence, they are laborious and costly, in terms of both time and material resources. There are alternative designs, such as observational studies with either a cohort or case–control design, and studies using real world evidence (RWE). When properly designed and implemented, observational studies can sometimes produce similar estimates of treatment effects to those found in RCTs, and furthermore, such studies may be viable alternatives to RCTs in many settings where RCTs are not feasible and/or not ethical. In the era of big data, the sources of clinically relevant data are increasingly rich and include electronic health records, data collected from wearable devices, health claims data, etc. Big data creates vast opportunities for development and implementation of novel frameworks for comparative effectiveness research [ 4 ], and RWE studies nowadays can be implemented rapidly and relatively easily. But how credible are the results from such studies?

In 1980, D. P. Byar issued warnings and highlighted potential methodological problems with comparison of treatment effects using observational databases [ 5 ]. Many of these issues still persist and actually become paramount during the ongoing COVID-19 pandemic when global scientific efforts are made to find safe and efficacious vaccines and treatments as soon as possible. While some challenges pertinent to RWE studies are related to the choice of proper research methodology, some additional challenges arise from increasing requirements of health authorities and editorial boards of medical journals for the investigators to present evidence of transparency and reproducibility of their conducted clinical research. Recently, two top medical journals, the New England Journal of Medicine and the Lancet, retracted two COVID-19 studies that relied on observational registry data [ 6 , 7 ]. The retractions were made at the request of the authors who were unable to ensure reproducibility of the results [ 8 ]. Undoubtedly, such cases are harmful in many ways. The already approved drugs may be wrongly labeled as “toxic” or “inefficacious”, and the reputation of the drug developers could be blemished or destroyed. Therefore, the highest standards for design, conduct, analysis, and reporting of clinical research studies are now needed more than ever. When treatment effects are modest, yet still clinically meaningful, a double-blind, randomized, controlled clinical trial design helps detect these differences while adjusting for possible confounders and adequately controlling the chances of both false positive and false negative findings.

Randomization in clinical trials has been an important area of methodological research in biostatistics since the pioneering work of A. Bradford Hill in the 1940’s and the first published randomized trial comparing streptomycin with a non-treatment control [ 9 ]. Statisticians around the world have worked intensively to elaborate the value, properties, and refinement of randomization procedures with an incredible record of publication [ 10 ]. In particular, a recent EU-funded project ( www.IDeAl.rwth-aachen.de ) on innovative design and analysis of small population trials has “randomization” as one work package. In 2020, a group of trial statisticians around the world from different sectors formed a subgroup of the Drug Information Association (DIA) Innovative Designs Scientific Working Group (IDSWG) to raise awareness of the full potential of randomization to improve trial quality, validity and rigor ( https://randomization-working-group.rwth-aachen.de/ ).

The aims of the current paper are three-fold. First, we describe major recent methodological advances in randomization, including different restricted randomization designs that have superior statistical properties compared to some widely used procedures such as permuted block designs. Second, we discuss different types of experimental biases in clinical trials and explain how a carefully chosen randomization design can mitigate risks of these biases. Third, we provide a systematic roadmap for evaluating different restricted randomization procedures and selecting an “optimal” one for a particular trial. We also showcase application of these ideas through several real life RCT examples.

The target audience for this paper would be clinical investigators and biostatisticians who are tasked with the design, conduct, analysis, and interpretation of clinical trial results, as well as regulatory and scientific/medical journal reviewers. Recognizing the breadth of the concept of randomization, in this paper we focus on a randomized, comparative, parallel group clinical trial design with equal (1:1) allocation, which is typically implemented using some restricted randomization procedure, possibly stratified by some important baseline prognostic factor(s) and/or study center. Some of our findings and recommendations are generalizable to more complex clinical trial settings. We shall highlight these generalizations and outline additional important considerations that fall outside the scope of the current paper.

The paper is organized as follows. The “ Methods ” section provides some general background on the methodology of randomization in clinical trials, describes existing restricted randomization procedures, and discusses some important criteria for comparison of these procedures in practice. In the “ Results ” section, we present our findings from four simulation studies that illustrate the thinking process when evaluating different randomization design options at the study planning stage. The “ Conclusions ” section summarizes the key findings and important considerations on restricted randomization procedures, and it also highlights some extensions and further topics on randomization in clinical trials.

What is randomization and what are its virtues in clinical trials?

Randomization is an essential component of an experimental design in general and clinical trials in particular. Its history goes back to R. A. Fisher and his classic book “The Design of Experiments” [ 11 ]. Implementation of randomization in clinical trials is due to A. Bradford Hill who designed the first randomized clinical trial evaluating the use of streptomycin in treating tuberculosis in 1946 [ 9 , 12 , 13 ].

Reference [ 14 ] provides a good summary of the rationale and justification for the use of randomization in clinical trials. The randomized controlled trial (RCT) has been referred to as “the worst possible design (except for all the rest)” [ 15 ], indicating that the benefits of randomization should be evaluated in comparison to what we are left with if we do not randomize. Observational studies suffer from a wide variety of biases that may not be adequately addressed even using state-of-the-art statistical modeling techniques.

The RCT in the medical field has several features that distinguish it from experimental designs in other fields, such as agricultural experiments. In the RCT, the experimental units are humans, and in the medical field often diagnosed with a potentially fatal disease. These subjects are sequentially enrolled for participation in the study at selected study centers, which have relevant expertise for conducting clinical research. Many contemporary clinical trials are run globally, at multiple research institutions. The recruitment period may span several months or even years, depending on a therapeutic indication and the target patient population. Patients who meet study eligibility criteria must sign the informed consent, after which they are enrolled into the study and, for example, randomized to either experimental treatment E or the control treatment C according to the randomization sequence. In this setup, the choice of the randomization design must be made judiciously, to protect the study from experimental biases and ensure validity of clinical trial results.

The first virtue of randomization is that, in combination with allocation concealment and masking, it helps mitigate selection bias due to an investigator’s potential to selectively enroll patients into the study [ 16 ]. A non-randomized, systematic design such as a sequence of alternating treatment assignments has a major fallacy: an investigator, knowing an upcoming treatment assignment in a sequence, may enroll a patient who, in their opinion, would be best suited for this treatment. Consequently, one of the groups may contain a greater number of “sicker” patients and the estimated treatment effect may be biased. Systematic covariate imbalances may increase the probability of false positive findings and undermine the integrity of the trial. While randomization alleviates the fallacy of a systematic design, it does not fully eliminate the possibility of selection bias (unless we consider complete randomization for which each treatment assignment is determined by a flip of a coin, which is rarely, if ever used in practice [ 17 ]). Commonly, RCTs employ restricted randomization procedures which sequentially balance treatment assignments while maintaining allocation randomness. A popular choice is the permuted block design that controls imbalance by making treatment assignments at random in blocks. To minimize potential for selection bias, one should avoid overly restrictive randomization schemes such as permuted block design with small block sizes, as this is very similar to alternating treatment sequence.

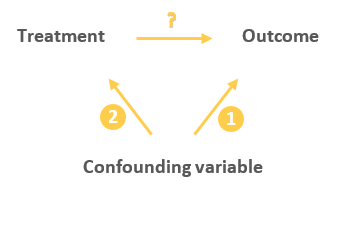

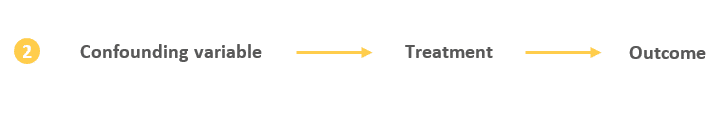

The second virtue of randomization is its tendency to promote similarity of treatment groups with respect to important known, but even more importantly, unknown confounders. If treatment assignments are made at random, then by the law of large numbers, the average values of patient characteristics should be approximately equal in the experimental and the control groups, and any observed treatment difference should be attributed to the treatment effects, not the effects of the study participants [ 18 ]. However, one can never rule out the possibility that the observed treatment difference is due to chance, e.g. as a result of random imbalance in some patient characteristics [ 19 ]. Despite that random covariate imbalances can occur in clinical trials of any size, such imbalances do not compromise the validity of statistical inference, provided that proper statistical techniques are applied in the data analysis.

Several misconceptions on the role of randomization and balance in clinical trials were documented and discussed by Senn [ 20 ]. One common misunderstanding is that balance of prognostic covariates is necessary for valid inference. In fact, different randomization designs induce different extent of balance in the distributions of covariates, and for a given trial there is always a possibility of observing baseline group differences. A legitimate approach is to pre-specify in the protocol the clinically important covariates to be adjusted for in the primary analysis, apply a randomization design (possibly accounting for selected covariates using pre-stratification or some other approach), and perform a pre-planned covariate-adjusted analysis (such as analysis of covariance for a continuous primary outcome), verifying the model assumptions and conducting additional supportive/sensitivity analyses, as appropriate. Importantly, the pre-specified prognostic covariates should always be accounted for in the analysis, regardless whether their baseline differences are present or not [ 20 ].

It should be noted that some randomization designs (such as covariate-adaptive randomization procedures) can achieve very tight balance of covariate distributions between treatment groups [ 21 ]. While we address randomization within pre-specified stratifications, we do not address more complex covariate- and response-adaptive randomization in this paper.

Finally, randomization plays an important role in statistical analysis of the clinical trial. The most common approach to inference following the RCT is the invoked population model [ 10 ]. With this approach, one posits that there is an infinite target population of patients with the disease, from which \(n\) eligible subjects are sampled in an unbiased manner for the study and are randomized to the treatment groups. Within each group, the responses are assumed to be independent and identically distributed (i.i.d.), and inference on the treatment effect is performed using some standard statistical methodology, e.g. a two sample t-test for normal outcome data. The added value of randomization is that it makes the assumption of i.i.d. errors more feasible compared to a non-randomized study because it introduces a real element of chance in the allocation of patients.

An alternative approach is the randomization model , in which the implemented randomization itself forms the basis for statistical inference [ 10 ]. Under the null hypothesis of the equality of treatment effects, individual outcomes (which are regarded as not influenced by random variation, i.e. are considered as fixed) are not affected by treatment. Treatment assignments are permuted in all possible ways consistent with the randomization procedure actually used in the trial. The randomization-based p- value is the sum of null probabilities of the treatment assignment permutations in the reference set that yield the test statistic values greater than or equal to the experimental value. A randomization-based test can be a useful supportive analysis, free of assumptions of parametric tests and protective against spurious significant results that may be caused by temporal trends [ 14 , 22 ].

It is important to note that Bayesian inference has also become a common statistical analysis in RCTs [ 23 ]. Although the inferential framework relies upon subjective probabilities, a study analyzed through a Bayesian framework still relies upon randomization for the other aforementioned virtues [ 24 ]. Hence, the randomization considerations discussed herein have broad application.

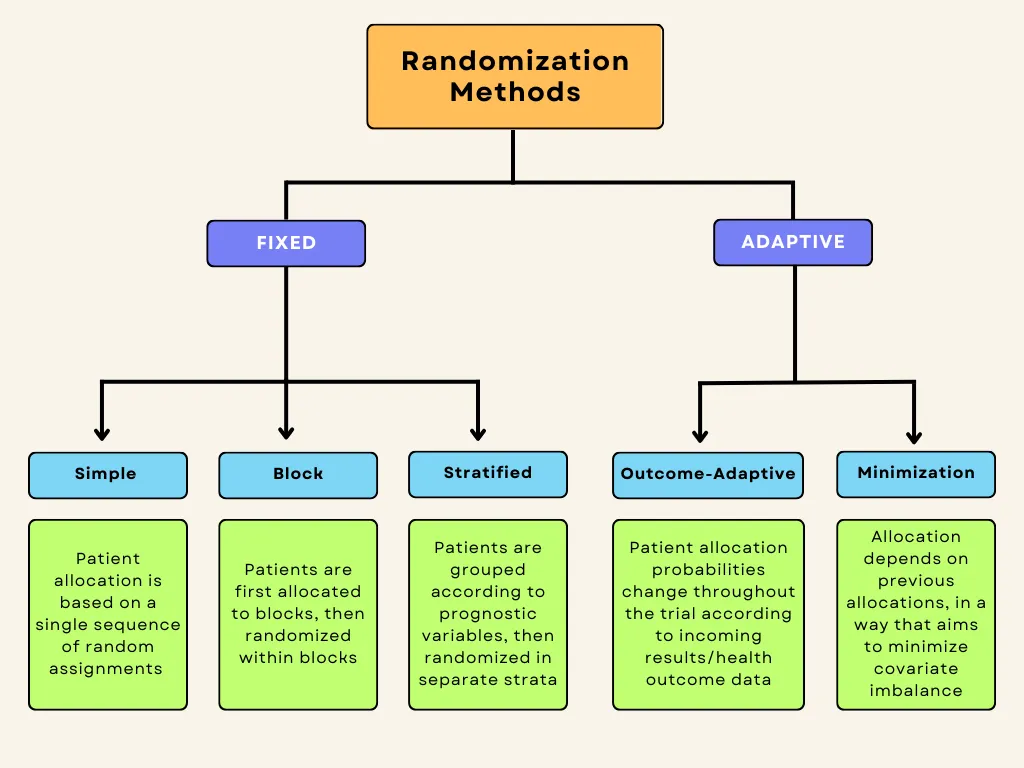

What types of randomization methodologies are available?

Randomization is not a single methodology, but a very broad class of design techniques for the RCT [ 10 ]. In this paper, we consider only randomization designs for sequential enrollment clinical trials with equal (1:1) allocation in which randomization is not adapted for covariates and/or responses. The simplest procedure for an RCT is complete randomization design (CRD) for which each subject’s treatment is determined by a flip of a fair coin [ 25 ]. CRD provides no potential for selection bias (e.g. based on prediction of future assignments) but it can result, with non-negligible probability, in deviations from the 1:1 allocation ratio and covariate imbalances, especially in small samples. This may lead to loss of statistical efficiency (decrease in power) compared to the balanced design. In practice, some restrictions on randomization are made to achieve balanced allocation. Such randomization designs are referred to as restricted randomization procedures [ 26 , 27 ].

Suppose we plan to randomize an even number of subjects \(n\) sequentially between treatments E and C. Two basic designs that equalize the final treatment numbers are the random allocation rule (Rand) and the truncated binomial design (TBD), which were discussed in the 1957 paper by Blackwell and Hodges [ 28 ]. For Rand, any sequence of exactly \(n/2\) E’s and \(n/2\) C’s is equally likely. For TBD, treatment assignments are made with probability 0.5 until one of the treatments receives its quota of \(n/2\) subjects; thereafter all remaining assignments are made deterministically to the opposite treatment.

A common feature of both Rand and TBD is that they aim at the final balance, whereas at intermediate steps it is still possible to have substantial imbalances, especially if \(n\) is large. A long run of a single treatment in a sequence may be problematic if there is a time drift in some important covariate, which can lead to chronological bias [ 29 ]. To mitigate this risk, one can further restrict randomization so that treatment assignments are balanced over time. One common approach is the permuted block design (PBD) [ 30 ], for which random treatment assignments are made in blocks of size \(2b\) ( \(b\) is some small positive integer), with exactly \(b\) allocations to each of the treatments E and C. The PBD is perhaps the oldest (it can be traced back to A. Bradford Hill’s 1951 paper [ 12 ]) and the most widely used randomization method in clinical trials. Often its choice in practice is justified by simplicity of implementation and the fact that it is referenced in the authoritative ICH E9 guideline on statistical principles for clinical trials [ 31 ]. One major challenge with PBD is the choice of the block size. If \(b=1\) , then every pair of allocations is balanced, but every even allocation is deterministic. Larger block sizes increase allocation randomness. The use of variable block sizes has been suggested [ 31 ]; however, PBDs with variable block sizes are also quite predictable [ 32 ]. Another problematic feature of the PBD is that it forces periodic return to perfect balance, which may be unnecessary from the statistical efficiency perspective and may increase the risk of prediction of upcoming allocations.

More recent and better alternatives to the PBD are the maximum tolerated imbalance (MTI) procedures [ 33 , 34 , 35 , 36 , 37 , 38 , 39 , 40 , 41 ]. These procedures provide stronger encryption of the randomization sequence (i.e. make it more difficult to predict future treatment allocations in the sequence even knowing the current sizes of the treatment groups) while controlling treatment imbalance at a pre-defined threshold throughout the experiment. A general MTI procedure specifies a certain boundary for treatment imbalance, say \(b>0\) , that cannot be exceeded. If, at a given allocation step the absolute value of imbalance is equal to \(b\) , then one next allocation is deterministically forced toward balance. This is in contrast to PBD which, after reaching the target quota of allocations for either treatment within a block, forces all subsequent allocations to achieve perfect balance at the end of the block. Some notable MTI procedures are the big stick design (BSD) proposed by Soares and Wu in 1983 [ 37 ], the maximal procedure proposed by Berger, Ivanova and Knoll in 2003 [ 35 ], the block urn design (BUD) proposed by Zhao and Weng in 2011 [ 40 ], just to name a few. These designs control treatment imbalance within pre-specified limits and are more immune to selection bias than the PBD [ 42 , 43 ].

Another important class of restricted randomization procedures is biased coin designs (BCDs). Starting with the seminal 1971 paper of Efron [ 44 ], BCDs have been a hot research topic in biostatistics for 50 years. Efron’s BCD is very simple: at any allocation step, if treatment numbers are balanced, the next assignment is made with probability 0.5; otherwise, the underrepresented treatment is assigned with probability \(p\) , where \(0.5<p\le 1\) is a fixed and pre-specified parameter that determines the tradeoff between balance and randomness. Note that \(p=1\) corresponds to PBD with block size 2. If we set \(p<1\) (e.g. \(p=2/3\) ), then the procedure has no deterministic assignments and treatment allocation will be concentrated around 1:1 with high probability [ 44 ]. Several extensions of Efron’s BCD providing better tradeoff between treatment balance and allocation randomness have been proposed [ 45 , 46 , 47 , 48 , 49 ]; for example, a class of adjustable biased coin designs introduced by Baldi Antognini and Giovagnoli in 2004 [ 49 ] unifies many BCDs in a single framework. A comprehensive simulation study comparing different BCDs has been published by Atkinson in 2014 [ 50 ].

Finally, urn models provide a useful mechanism for RCT designs [ 51 ]. Urn models apply some probabilistic rules to sequentially add/remove balls (representing different treatments) in the urn, to balance treatment assignments while maintaining the randomized nature of the experiment [ 39 , 40 , 52 , 53 , 54 , 55 ]. A randomized urn design for balancing treatment assignments was proposed by Wei in 1977 [ 52 ]. More novel urn designs, such as the drop-the-loser urn design developed by Ivanova in 2003 [ 55 ] have reduced variability and can attain the target treatment allocation more efficiently. Many urn designs involve parameters that can be fine-tuned to obtain randomization procedures with desirable balance/randomness tradeoff [ 56 ].

What are the attributes of a good randomization procedure?

A “good” randomization procedure is one that helps successfully achieve the study objective(s). Kalish and Begg [ 57 ] state that the major objective of a comparative clinical trial is to provide a precise and valid comparison. To achieve this, the trial design should be such that it: 1) prevents bias; 2) ensures an efficient treatment comparison; and 3) is simple to implement to minimize operational errors. Table 1 elaborates on these considerations, focusing on restricted randomization procedures for 1:1 randomized trials.

Before delving into a detailed discussion, let us introduce some important definitions. Following [ 10 ], a randomization sequence is a random vector \({{\varvec{\updelta}}}_{n}=({\delta }_{1},\dots ,{\delta }_{n})\) , where \({\delta }_{i}=1\) , if the i th subject is assigned to treatment E or \({\delta }_{i}=0\) , if the \(i\) th subject is assigned to treatment C. A restricted randomization procedure can be defined by specifying a probabilistic rule for the treatment assignment of the ( i +1)st subject, \({\delta }_{i+1}\) , given the past allocations \({{\varvec{\updelta}}}_{i}\) for \(i\ge 1\) . Let \({N}_{E}\left(i\right)={\sum }_{j=1}^{i}{\delta }_{j}\) and \({N}_{C}\left(i\right)=i-{N}_{E}\left(i\right)\) denote the numbers of subjects assigned to treatments E and C, respectively, after \(i\) allocation steps. Then \(D\left(i\right)={N}_{E}\left(i\right)-{N}_{C}(i)\) is treatment imbalance after \(i\) allocations. For any \(i\ge 1\) , \(D\left(i\right)\) is a random variable whose probability distribution is determined by the chosen randomization procedure.

Balance and randomness

Treatment balance and allocation randomness are two competing requirements in the design of an RCT. Restricted randomization procedures that provide a good tradeoff between these two criteria are desirable in practice.

Consider a trial with sample size \(n\) . The absolute value of imbalance, \(\left|D(i)\right|\) \((i=1,\dots,n)\) , provides a measure of deviation from equal allocation after \(i\) allocation steps. \(\left|D(i)\right|=0\) indicates that the trial is perfectly balanced. One can also consider \(\Pr(\vert D\left(i\right)\vert=0)\) , the probability of achieving exact balance after \(i\) allocation steps. In particular \(\Pr(\vert D\left(n\right)\vert=0)\) is the probability that the final treatment numbers are balanced. Two other useful summary measures are the expected imbalance at the \(i\mathrm{th}\) step, \(E\left|D(i)\right|\) and the expected value of the maximum imbalance of the entire randomization sequence, \(E\left(\underset{1\le i\le n}{\mathrm{max}}\left|D\left(i\right)\right|\right)\) .

Greater forcing of balance implies lack of randomness. A procedure that lacks randomness may be susceptible to selection bias [ 16 ], which is a prominent issue in open-label trials with a single center or with randomization stratified by center, where the investigator knows the sequence of all previous treatment assignments. A classic approach to quantify the degree of susceptibility of a procedure to selection bias is the Blackwell-Hodges model [ 28 ]. Let \({G}_{i}=1\) (or 0), if at the \(i\mathrm{th}\) allocation step an investigator makes a correct (or incorrect) guess on treatment assignment \({\delta }_{i}\) , given past allocations \({{\varvec{\updelta}}}_{i-1}\) . Then the predictability of the design at the \(i\mathrm{th}\) step is the expected value of \({G}_{i}\) , i.e. \(E\left(G_i\right)=\Pr(G_i=1)\) . Blackwell and Hodges [ 28 ] considered the expected bias factor , the difference between expected total number of correct guesses of a given sequence of random assignments and the similar quantity obtained from CRD for which treatment assignments are made independently with equal probability: \(E(F)=E\left({\sum }_{i=1}^{n}{G}_{i}\right)-n/2\) . This quantity is zero for CRD, and it is positive for restricted randomization procedures (greater values indicate higher expected bias). Matts and Lachin [ 30 ] suggested taking expected proportion of deterministic assignments in a sequence as another measure of lack of randomness.

In the literature, various restricted randomization procedures have been compared in terms of balance and randomness [ 50 , 58 , 59 ]. For instance, Zhao et al. [ 58 ] performed a comprehensive simulation study of 14 restricted randomization procedures with different choices of design parameters, for sample sizes in the range of 10 to 300. The key criteria were the maximum absolute imbalance and the correct guess probability. The authors found that the performance of the designs was within a closed region with the boundaries shaped by Efron’s BCD [ 44 ] and the big stick design [ 37 ], signifying that the latter procedure with a suitably chosen MTI boundary can be superior to other restricted randomization procedures in terms of balance/randomness tradeoff. Similar findings confirming the utility of the big stick design were recently reported by Hilgers et al. [ 60 ].

Validity and efficiency

Validity of a statistical procedure essentially means that the procedure provides correct statistical inference following an RCT. In particular, a chosen statistical test is valid, if it controls the chance of a false positive finding, that is, the pre-specified probability of a type I error of the test is achieved but not exceeded. The strong control of type I error rate is a major prerequisite for any confirmatory RCT. Efficiency means high statistical power for detecting meaningful treatment differences (when they exist), and high accuracy of estimation of treatment effects.

Both validity and efficiency are major requirements of any RCT, and both of these aspects are intertwined with treatment balance and allocation randomness. Restricted randomization designs, when properly implemented, provide solid ground for valid and efficient statistical inference. However, a careful consideration of different options can help an investigator to optimize the choice of a randomization procedure for their clinical trial.

Let us start with statistical efficiency. Equal (1:1) allocation frequently maximizes power and estimation precision. To illustrate this, suppose the primary outcomes in the two groups are normally distributed with respective means \({\mu }_{E}\) and \({\mu }_{C}\) and common standard deviation \(\sigma >0\) . Then the variance of an efficient estimator of the treatment difference \({\mu }_{E}-{\mu }_{C}\) is equal to \(V=\frac{4{\sigma }^{2}}{n-{L}_{n}}\) , where \({L}_{n}=\frac{{\left|D(n)\right|}^{2}}{n}\) is referred to as loss [ 61 ]. Clearly, \(V\) is minimized when \({L}_{n}=0\) , or equivalently, \(D\left(n\right)=0\) , i.e. the balanced trial.

When the primary outcome follows a more complex statistical model, optimal allocation may be unequal across the treatment groups; however, 1:1 allocation is still nearly optimal for binary outcomes [ 62 , 63 ], survival outcomes [ 64 ], and possibly more complex data types [ 65 , 66 ]. Therefore, a randomization design that balances treatment numbers frequently promotes efficiency of the treatment comparison.

As regards inferential validity, it is important to distinguish two approaches to statistical inference after the RCT – an invoked population model and a randomization model [ 10 ]. For a given randomization procedure, these two approaches generally produce similar results when the assumption of normal random sampling (and some other assumptions) are satisfied, but the randomization model may be more robust when model assumptions are violated; e.g. when outcomes are affected by a linear time trend [ 67 , 68 ]. Another important issue that may interfere with validity is selection bias. Some authors showed theoretically that PBDs with small block sizes may result in serious inflation of the type I error rate under a selection bias model [ 69 , 70 , 71 ]. To mitigate risk of selection bias, one should ideally take preventative measures, such as blinding/masking, allocation concealment, and avoidance of highly restrictive randomization designs. However, for already completed studies with evidence of selection bias [ 72 ], special statistical adjustments are warranted to ensure validity of the results [ 73 , 74 , 75 ].

Implementation aspects

With the current state of information technology, implementation of randomization in RCTs should be straightforward. Validated randomization systems are emerging, and they can handle randomization designs of increasing complexity for clinical trials that are run globally. However, some important points merit consideration.

The first point has to do with how a randomization sequence is generated and implemented. One should distinguish between advance and adaptive randomization [ 16 ]. Here, by “adaptive” randomization we mean “in-real-time” randomization, i.e. when a randomization sequence is generated not upfront, but rather sequentially, as eligible subjects enroll into the study. Restricted randomization procedures are “allocation-adaptive”, in the sense that the treatment assignment of an individual subject is adapted to the history of previous treatment assignments. While in practice the majority of trials with restricted and stratified randomization use randomization schedules pre-generated in advance, there are some circumstances under which “in-real-time” randomization schemes may be preferred; for instance, clinical trials with high cost of goods and/or shortage of drug supply [ 76 ].

The advance randomization approach includes the following steps: 1) for the chosen randomization design and sample size \(n\) , specify the probability distribution on the reference set by enumerating all feasible randomization sequences of length \(n\) and their corresponding probabilities; 2) select a sequence at random from the reference set according to the probability distribution; and 3) implement this sequence in the trial. While enumeration of all possible sequences and their probabilities is feasible and may be useful for trials with small sample sizes, the task becomes computationally prohibitive (and unnecessary) for moderate or large samples. In practice, Monte Carlo simulation can be used to approximate the probability distribution of the reference set of all randomization sequences for a chosen randomization procedure.

A limitation of advance randomization is that a sequence of treatment assignments must be generated upfront, and proper security measures (e.g. blinding/masking) must be in place to protect confidentiality of the sequence. With the adaptive or “in-real-time” randomization, a sequence of treatment assignments is generated dynamically as the trial progresses. For many restricted randomization procedures, the randomization rule can be expressed as \(\Pr(\delta_{i+1}=1)=\left|F\left\{D\left(i\right)\right\}\right|\) , where \(F\left\{\cdot \right\}\) is some non-increasing function of \(D\left(i\right)\) for any \(i\ge 1\) . This is referred to as the Markov property [ 77 ], which makes a procedure easy to implement sequentially. Some restricted randomization procedures, e.g. the maximal procedure [ 35 ], do not have the Markov property.

The second point has to do with how the final data analysis is performed. With an invoked population model, the analysis is conditional on the design and the randomization is ignored in the analysis. With a randomization model, the randomization itself forms the basis for statistical inference. Reference [ 14 ] provides a contemporaneous overview of randomization-based inference in clinical trials. Several other papers provide important technical details on randomization-based tests, including justification for control of type I error rate with these tests [ 22 , 78 , 79 ]. In practice, Monte Carlo simulation can be used to estimate randomization-based p- values [ 10 ].

A roadmap for comparison of restricted randomization procedures

The design of any RCT starts with formulation of the trial objectives and research questions of interest [ 3 , 31 ]. The choice of a randomization procedure is an integral part of the study design. A structured approach for selecting an appropriate randomization procedure for an RCT was proposed by Hilgers et al. [ 60 ]. Here we outline the thinking process one may follow when evaluating different candidate randomization procedures. Our presented roadmap is by no means exhaustive; its main purpose is to illustrate the logic behind some important considerations for finding an “optimal” randomization design for the given trial parameters.

Throughout, we shall assume that the study is designed as a randomized, two-arm comparative trial with 1:1 allocation, with a fixed sample size \(n\) that is pre-determined based on budgetary and statistical considerations to obtain a definitive assessment of the treatment effect via the pre-defined hypothesis testing. We start with some general considerations which determine the study design:

Sample size ( \(n\) ). For small or moderate studies, exact attainment of the target numbers per group may be essential, because even slight imbalance may decrease study power. Therefore, a randomization design in such studies should equalize well the final treatment numbers. For large trials, the risk of major imbalances is less of a concern, and more random procedures may be acceptable.

The length of the recruitment period and the trial duration. Many studies are short-term and enroll participants fast, whereas some other studies are long-term and may have slow patient accrual. In the latter case, there may be time drifts in patient characteristics, and it is important that the randomization design balances treatment assignments over time.

Level of blinding (masking): double-blind, single-blind, or open-label. In double-blind studies with properly implemented allocation concealment the risk of selection bias is low. By contrast, in open-label studies the risk of selection bias may be high, and the randomization design should provide strong encryption of the randomization sequence to minimize prediction of future allocations.

Number of study centers. Many modern RCTs are implemented globally at multiple research institutions, whereas some studies are conducted at a single institution. In the former case, the randomization is often stratified by center and/or clinically important covariates. In the latter case, especially in single-institution open-label studies, the randomization design should be chosen very carefully, to mitigate the risk of selection bias.

An important point to consider is calibration of the design parameters. Many restricted randomization procedures involve parameters, such as the block size in the PBD, the coin bias probability in Efron’s BCD, the MTI threshold, etc. By fine-tuning these parameters, one can obtain designs with desirable statistical properties. For instance, references [ 80 , 81 ] provide guidance on how to justify the block size in the PBD to mitigate the risk of selection bias or chronological bias. Reference [ 82 ] provides a formal approach to determine the “optimal” value of the parameter \(p\) in Efron’s BCD in both finite and large samples. The calibration of design parameters can be done using Monte Carlo simulations for the given trial setting.

Another important consideration is the scope of randomization procedures to be evaluated. As we mentioned already, even one method may represent a broad class of randomization procedures that can provide different levels of balance/randomness tradeoff; e.g. Efron’s BCD covers a wide spectrum of designs, from PBD(2) (if \(p=1\) ) to CRD (if \(p=0.5\) ). One may either prefer to focus on finding the “optimal” parameter value for the chosen design, or be more general and include various designs (e.g. MTI procedures, BCDs, urn designs, etc.) in the comparison. This should be done judiciously, on a case-by-case basis, focusing only on the most reasonable procedures. References [ 50 , 58 , 60 ] provide good examples of simulation studies to facilitate comparisons among various restricted randomization procedures for a 1:1 RCT.

In parallel with the decision on the scope of randomization procedures to be assessed, one should decide upon the performance criteria against which these designs will be compared. Among others, one might think about the two competing considerations: treatment balance and allocation randomness. For a trial of size \(n\) , at each allocation step \(i=1,\dots ,n\) one can calculate expected absolute imbalance \(E\left|D(i)\right|\) and the probability of correct guess \(\Pr(G_i=1)\) as measures of lack of balance and lack of randomness, respectively. These measures can be either calculated analytically (when formulae are available) or through Monte Carlo simulations. Sometimes it may be useful to look at cumulative measures up to the \(i\mathrm{th}\) allocation step ( \(i=1,\dots ,n\) ); e.g. \(\frac{1}{i}{\sum }_{j=1}^{i}E\left|D(j)\right|\) and \(\frac1i\sum\nolimits_{j=1}^i\Pr(G_j=1)\) . For instance, \(\frac{1}{n}{\sum }_{j=1}^{n}{\mathrm{Pr}}({G}_{j}=1)\) is the average correct guess probability for a design with sample size \(n\) . It is also helpful to visualize the selected criteria. Visualizations can be done in a number of ways; e.g. plots of a criterion vs. allocation step, admissibility plots of two chosen criteria [ 50 , 59 ], etc. Such visualizations can help evaluate design characteristics, both overall and at intermediate allocation steps. They may also provide insights into the behavior of a particular design for different values of the tuning parameter, and/or facilitate a comparison among different types of designs.

Another way to compare the merits of different randomization procedures is to study their inferential characteristics such as type I error rate and power under different experimental conditions. Sometimes this can be done analytically, but a more practical approach is to use Monte Carlo simulation. The choice of the modeling and analysis strategy will be context-specific. Here we outline some considerations that may be useful for this purpose:

Data generating mechanism . To simulate individual outcome data, some plausible statistical model must be posited. The form of the model will depend on the type of outcomes (e.g. continuous, binary, time-to-event, etc.), covariates (if applicable), the distribution of the measurement error terms, and possibly some additional terms representing selection and/or chronological biases [ 60 ].

True treatment effects . At least two scenarios should be considered: under the null hypothesis ( \({H}_{0}\) : treatment effects are the same) to evaluate the type I error rate, and under an alternative hypothesis ( \({H}_{1}\) : there is some true clinically meaningful difference between the treatments) to evaluate statistical power.

Randomization designs to be compared . The choice of candidate randomization designs and their parameters must be made judiciously.

Data analytic strategy . For any study design, one should pre-specify the data analysis strategy to address the primary research question. Statistical tests of significance to compare treatment effects may be parametric or nonparametric, with or without adjustment for covariates.

The approach to statistical inference: population model-based or randomization-based . These two approaches are expected to yield similar results when the population model assumptions are met, but they may be different if some assumptions are violated. Randomization-based tests following restricted randomization procedures will control the type I error at the chosen level if the distribution of the test statistic under the null hypothesis is fully specified by the randomization procedure that was used for patient allocation. This is always the case unless there is a major flaw in the design (such as selection bias whereby the outcome of any individual participant is dependent on treatment assignments of the previous participants).

Overall, there should be a well-thought plan capturing the key questions to be answered, the strategy to address them, the choice of statistical software for simulation and visualization of the results, and other relevant details.

In this section we present four examples that illustrate how one may approach evaluation of different randomization design options at the study planning stage. Example 1 is based on a hypothetical 1:1 RCT with \(n=50\) and a continuous primary outcome, whereas Examples 2, 3, and 4 are based on some real RCTs.

Example 1: Which restricted randomization procedures are robust and efficient?

Our first example is a hypothetical RCT in which the primary outcome is assumed to be normally distributed with mean \({\mu }_{E}\) for treatment E, mean \({\mu }_{C}\) for treatment C, and common variance \({\sigma }^{2}\) . A total of \(n\) subjects are to be randomized equally between E and C, and a two-sample t-test is planned for data analysis. Let \(\Delta ={\mu }_{E}-{\mu }_{C}\) denote the true mean treatment difference. We are interested in testing a hypothesis \({H}_{0}:\Delta =0\) (treatment effects are the same) vs. \({H}_{1}:\Delta \ne 0\) .

The total sample size \(n\) to achieve given power at some clinically meaningful treatment difference \({\Delta }_{c}\) while maintaining the chance of a false positive result at level \(\alpha\) can be obtained using standard statistical methods [ 83 ]. For instance, if \({\Delta }_{c}/\sigma =0.95\) , then a design with \(n=50\) subjects (25 per arm) provides approximately 91% power of a two-sample t-test to detect a statistically significant treatment difference using 2-sided \(\alpha =\) 5%. We shall consider 12 randomization procedures to sequentially randomize \(n=50\) subjects in a 1:1 ratio.

Random allocation rule – Rand.

Truncated binomial design – TBD.

Permuted block design with block size of 2 – PBD(2).

Permuted block design with block size of 4 – PBD(4).

Big stick design [ 37 ] with MTI = 3 – BSD(3).

Biased coin design with imbalance tolerance [ 38 ] with p = 2/3 and MTI = 3 – BCDWIT(2/3, 3).

Efron’s biased coin design [ 44 ] with p = 2/3 – BCD(2/3).

Adjustable biased coin design [ 49 ] with a = 2 – ABCD(2).

Generalized biased coin design (GBCD) with \(\gamma =1\) [ 45 ] – GBCD(1).

GBCD with \(\gamma =2\) [ 46 ] – GBCD(2).

GBCD with \(\gamma =5\) [ 47 ] – GBCD(5).

Complete randomization design – CRD.

These 12 procedures can be grouped into five major types. I) Procedures 1, 2, 3, and 4 achieve exact final balance for a chosen sample size (provided the total sample size is a multiple of the block size). II) Procedures 5 and 6 ensure that at any allocation step the absolute value of imbalance is capped at MTI = 3. III) Procedures 7 and 8 are biased coin designs that sequentially adjust randomization according to imbalance measured as the difference in treatment numbers. IV) Procedures 9, 10, and 11 (GBCD’s with \(\gamma =\) 1, 2, and 5) are adaptive biased coin designs, for which randomization probability is modified according to imbalance measured as the difference in treatment allocation proportions (larger \(\gamma\) implies greater forcing of balance). V) Procedure 12 (CRD) is the most random procedure that achieves balance for large samples.

Balance/randomness tradeoff

We first compare the procedures with respect to treatment balance and allocation randomness. To quantify imbalance after \(i\) allocations, we consider two measures: expected value of absolute imbalance \(E\left|D(i)\right|\) , and expected value of loss \(E({L}_{i})=E{\left|D(i)\right|}^{2}/i\) [ 50 , 61 ]. Importantly, for procedures 1, 2, and 3 the final imbalance is always zero, thus \(E\left|D(n)\right|\equiv 0\) and \(E({L}_{n})\equiv 0\) , but at intermediate steps one may have \(E\left|D(i)\right|>0\) and \(E\left({L}_{i}\right)>0\) , for \(1\le i<n\) . For procedures 5 and 6 with MTI = 3, \(E\left({L}_{i}\right)\le 9/i\) . For procedures 7 and 8, \(E\left({L}_{n}\right)\) tends to zero as \(n\to \infty\) [ 49 ]. For procedures 9, 10, 11, and 12, as \(n\to \infty\) , \(E\left({L}_{n}\right)\) tends to the positive constants 1/3, 1/5, 1/11, and 1, respectively [ 47 ]. We take the cumulative average loss after \(n\) allocations as an aggregate measure of imbalance: \(Imb\left(n\right)=\frac{1}{n}{\sum }_{i=1}^{n}E\left({L}_{i}\right)\) , which takes values in the 0–1 range.

To measure lack of randomness, we consider two measures: expected proportion of correct guesses up to the \(i\mathrm{th}\) step, \(PCG\left(i\right)=\frac1i\sum\nolimits_{j=1}^i\Pr(G_j=1)\) , \(i=1,\dots ,n\) , and the forcing index [ 47 , 84 ], \(FI(i)=\frac{{\sum }_{j=1}^{i}E\left|{\phi }_{j}-0.5\right|}{i/4}\) , where \(E\left|{\phi }_{j}-0.5\right|\) is the expected deviation of the conditional probability of treatment E assignment at the \(j\mathrm{th}\) allocation step ( \({\phi }_{j}\) ) from the unconditional target value of 0.5. Note that \(PCG\left(i\right)\) takes values in the range from 0.5 for CRD to 0.75 for PBD(2) assuming \(i\) is even, whereas \(FI(i)\) takes values in the 0–1 range. At the one extreme, we have CRD for which \(FI(i)\equiv 0\) because for CRD \({\phi }_{i}=0.5\) for any \(i\ge 1\) . At the other extreme, we have PBD(2) for which every odd allocation is made with probability 0.5, and every even allocation is deterministic, i.e. made with probability 0 or 1. For PBD(2), assuming \(i\) is even, there are exactly \(i/2\) pairs of allocations, and so \({\sum }_{j=1}^{i}E\left|{\phi }_{j}-0.5\right|=0.5\cdot i/2=i/4\) , which implies that \(FI(i)=1\) for PBD(2). For all other restricted randomization procedures one has \(0<FI(i)<1\) .

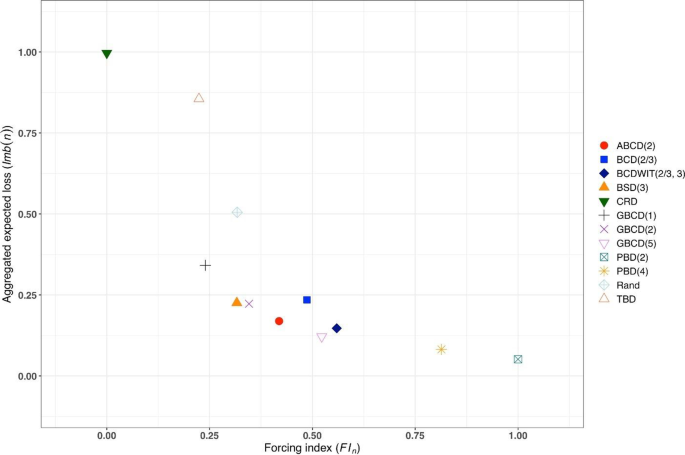

A “good” randomization procedure should have low values of both loss and forcing index. Different randomization procedures can be compared graphically. As a balance/randomness tradeoff metric, one can calculate the quadratic distance to the origin (0,0) for the chosen sample size, e.g. \(d(n)=\sqrt{{\left\{Imb(n)\right\}}^{2}+{\left\{FI(n)\right\}}^{2}}\) (in our example \(n=50\) ), and the randomization designs can then be ranked such that designs with lower values of \(d(n)\) are preferable.

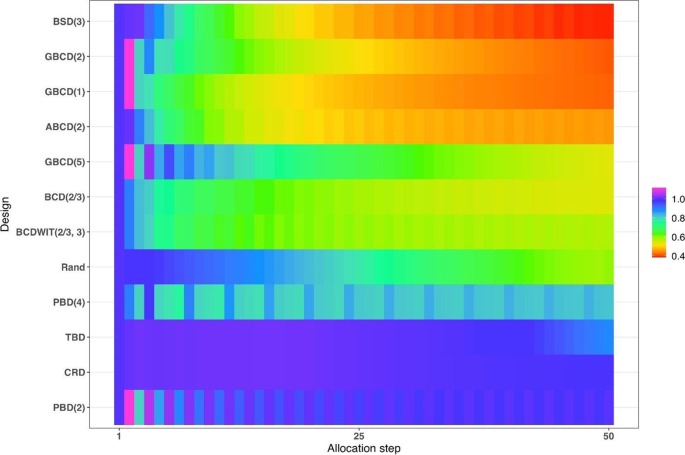

We ran a simulation study of the 12 randomization procedures for an RCT with \(n=50\) . Monte Carlo average values of absolute imbalance, loss, \(Imb\left(i\right)\) , \(FI\left(i\right)\) , and \(d(i)\) were calculated for each intermediate allocation step ( \(i=1,\dots ,50\) ), based on 10,000 simulations.

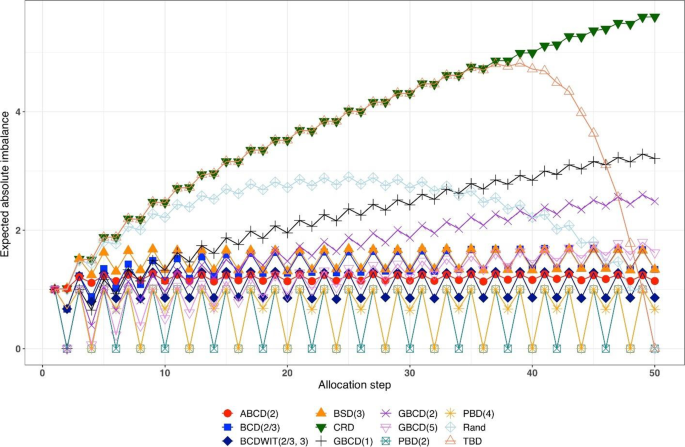

Figure 1 is a plot of expected absolute imbalance vs. allocation step. CRD, GBCD(1), and GBCD(2) show increasing patterns. For TBD and Rand, the final imbalance (when \(n=50\) ) is zero; however, at intermediate steps is can be quite large. For other designs, absolute imbalance is expected to be below 2 at any allocation step up to \(n=50\) . Note the periodic patterns of PBD(2) and PBD(4); for instance, for PBD(2) imbalance is 0 (or 1) for any even (or odd) allocation.

Simulated expected absolute imbalance vs. allocation step for 12 restricted randomization procedures for n = 50. Note: PBD(2) and PBD(4) have forced periodicity absolute imbalance of 0, which distinguishes them from MTI procedures

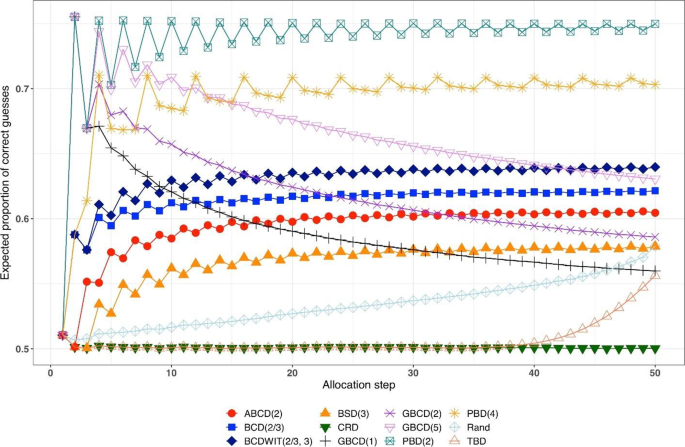

Figure 2 is a plot of expected proportion of correct guesses vs. allocation step. One can observe that for CRD it is a flat pattern at 0.5; for PBD(2) it fluctuates while reaching the upper limit of 0.75 at even allocation steps; and for ten other designs the values of proportion of correct guesses fall between those of CRD and PBD(2). The TBD has the same behavior up to ~ 40 th allocation step, at which the pattern starts increasing. Rand exhibits an increasing pattern with overall fewer correct guesses compared to other randomization procedures. Interestingly, BSD(3) is uniformly better (less predictable) than ABCD(2), BCD(2/3), and BCDWIT(2/3, 3). For the three GBCD procedures, there is a rapid initial increase followed by gradual decrease in the pattern; this makes good sense, because GBCD procedures force greater balance when the trial is small and become more random (and less prone to correct guessing) as the sample size increases.

Simulated expected proportion of correct guesses vs. allocation step for 12 restricted randomization procedures for n = 50

Table 2 shows the ranking of the 12 designs with respect to the overall performance metric \(d(n)=\sqrt{{\left\{Imb(n)\right\}}^{2}+{\left\{FI(n)\right\}}^{2}}\) for \(n=50\) . BSD(3), GBCD(2) and GBCD(1) are the top three procedures, whereas PBD(2) and CRD are at the bottom of the list.

Figure 3 is a plot of \(FI\left(n\right)\) vs. \(Imb\left(n\right)\) for \(n=50\) . One can see the two extremes: CRD that takes the value (0,1), and PBD(2) with the value (1,0). The other ten designs are closer to (0,0).

Simulated forcing index (x-axis) vs. aggregate expected loss (y-axis) for 12 restricted randomization procedures for n = 50

Figure 4 is a heat map plot of the metric \(d(i)\) for \(i=1,\dots ,50\) . BSD(3) seems to provide overall best tradeoff between randomness and balance throughout the study.

Heatmap of the balance/randomness tradeoff \(d\left(i\right)=\sqrt{{\left\{Imb(i)\right\}}^{2}+{\left\{FI(i)\right\}}^{2}}\) vs. allocation step ( \(i=1,\dots ,50\) ) for 12 restricted randomization procedures. The procedures are ordered by value of d(50), with smaller values (more red) indicating more optimal performance

Inferential characteristics: type I error rate and power

Our next goal is to compare the chosen randomization procedures in terms of validity (control of the type I error rate) and efficiency (power). For this purpose, we assumed the following data generating mechanism: for the \(i\mathrm{th}\) subject, conditional on the treatment assignment \({\delta }_{i}\) , the outcome \({Y}_{i}\) is generated according to the model

where \({u}_{i}\) is an unknown term associated with the \(i\mathrm{th}\) subject and \({\varepsilon }_{i}\) ’s are i.i.d. measurement errors. We shall explore the following four models:

M1: Normal random sampling : \({u}_{i}\equiv 0\) and \({\varepsilon }_{i}\sim\) i.i.d. N(0,1), \(i=1,\dots ,n\) . This corresponds to a standard setup for a two-sample t-test under a population model.

M2: Linear trend : \({u}_{i}=\frac{5i}{n+1}\) and \({\varepsilon }_{i}\sim\) i.i.d. N(0,1), \(i=1,\dots ,n\) . In this model, the outcomes are affected by a linear trend over time [ 67 ].

M3: Cauchy errors : \({u}_{i}\equiv 0\) and \({\varepsilon }_{i}\sim\) i.i.d. Cauchy(0,1), \(i=1,\dots ,n\) . In this setup, we have a misspecification of the distribution of measurement errors.

M4: Selection bias : \({u}_{i+1}=-\nu \cdot sign\left\{D\left(i\right)\right\}\) , \(i=0,\dots ,n-1\) , with the convention that \(D\left(0\right)=0\) . Here, \(\nu >0\) is the “bias effect” (in our simulations we set \(\nu =0.5\) ). We also assume that \({\varepsilon }_{i}\sim\) i.i.d. N(0,1), \(i=1,\dots ,n\) . In this setup, at each allocation step the investigator attempts to intelligently guess the upcoming treatment assignment and selectively enroll a patient who, in their view, would be most suitable for the upcoming treatment. The investigator uses the “convergence” guessing strategy [ 28 ], that is, guess the treatment as one that has been less frequently assigned thus far, or make a random guess in case the current treatment numbers are equal. Assuming that the investigator favors the experimental treatment and is interested in demonstrating its superiority over the control, the biasing mechanism is as follows: at the \((i+1)\) st step, a “healthier” patient is enrolled, if \(D\left(i\right)<0\) ( \({u}_{i+1}=0.5\) ); a “sicker” patient is enrolled, if \(D\left(i\right)>0\) ( \({u}_{i+1}=-0.5\) ); or a “regular” patient is enrolled, if \(D\left(i\right)=0\) ( \({u}_{i+1}=0\) ).

We consider three statistical test procedures:

T1: Two-sample t-test : The test statistic is \(t=\frac{{\overline{Y} }_{E}-{\overline{Y} }_{C}}{\sqrt{{S}_{p}^{2}\left(\frac{1}{{N}_{E}\left(n\right)}+\frac{1}{{N}_{C}\left(n\right)}\right)}}\) , where \({\overline{Y} }_{E}=\frac{1}{{N}_{E}\left(n\right)}{\sum }_{i=1}^{n}{{\delta }_{i}Y}_{i}\) and \({\overline{Y} }_{C}=\frac{1}{{N}_{C}\left(n\right)}{\sum }_{i=1}^{n}{(1-\delta }_{i}){Y}_{i}\) are the treatment sample means, \({N}_{E}\left(n\right)={\sum }_{i=1}^{n}{\delta }_{i}\) and \({N}_{C}\left(n\right)=n-{N}_{E}\left(n\right)\) are the observed group sample sizes, and \({S}_{p}^{2}\) is a pooled estimate of variance, where \({S}_{p}^{2}=\frac{1}{n-2}\left({\sum }_{i=1}^{n}{\delta }_{i}{\left({Y}_{i}-{\overline{Y} }_{E}\right)}^{2}+{\sum }_{i=1}^{n}(1-{\delta }_{i}){\left({Y}_{i}-{\overline{Y} }_{C}\right)}^{2}\right)\) . Then \({H}_{0}:\Delta =0\) is rejected at level \(\alpha\) , if \(\left|t\right|>{t}_{1-\frac{\alpha }{2}, n-2}\) , the 100( \(1-\frac{\alpha }{2}\) )th percentile of the t-distribution with \(n-2\) degrees of freedom.

T2: Randomization-based test using mean difference : Let \({{\varvec{\updelta}}}_{obs}\) and \({{\varvec{y}}}_{obs}\) denote, respectively the observed sequence of treatment assignments and responses, obtained from the trial using randomization procedure \(\mathfrak{R}\) . We first compute the observed mean difference \({S}_{obs}=S\left({{\varvec{\updelta}}}_{obs},{{\varvec{y}}}_{obs}\right)={\overline{Y} }_{E}-{\overline{Y} }_{C}\) . Then we use Monte Carlo simulation to generate \(L\) randomization sequences of length \(n\) using procedure \(\mathfrak{R}\) , where \(L\) is some large number. For the \(\ell\mathrm{th}\) generated sequence, \({{\varvec{\updelta}}}_{\ell}\) , compute \({S}_{\ell}=S({{\varvec{\updelta}}}_{\ell},{{\varvec{y}}}_{obs})\) , where \({\ell}=1,\dots ,L\) . The proportion of sequences for which \({S}_{\ell}\) is at least as extreme as \({S}_{obs}\) is computed as \(\widehat{P}=\frac{1}{L}{\sum }_{{\ell}=1}^{L}1\left\{\left|{S}_{\ell}\right|\ge \left|{S}_{obs}\right|\right\}\) . Statistical significance is declared, if \(\widehat{P}<\alpha\) .

T3: Randomization-based test based on ranks : This test procedure follows the same logic as T2, except that the test statistic is calculated based on ranks. Given the vector of observed responses \({{\varvec{y}}}_{obs}=({y}_{1},\dots ,{y}_{n})\) , let \({a}_{jn}\) denote the rank of \({y}_{j}\) among the elements of \({{\varvec{y}}}_{obs}\) . Let \({\overline a}_n\) denote the average of \({a}_{jn}\) ’s, and let \({\boldsymbol a}_n=\left(a_{1n}-{\overline a}_n,...,\alpha_{nn}-{\overline a}_n\right)\boldsymbol'\) . Then a linear rank test statistic has the form \({S}_{obs}={{\varvec{\updelta}}}_{obs}^{\boldsymbol{^{\prime}}}{{\varvec{a}}}_{n}={\sum }_{i=1}^{n}{\delta }_{i}({a}_{in}-{\overline{a} }_{n})\) .

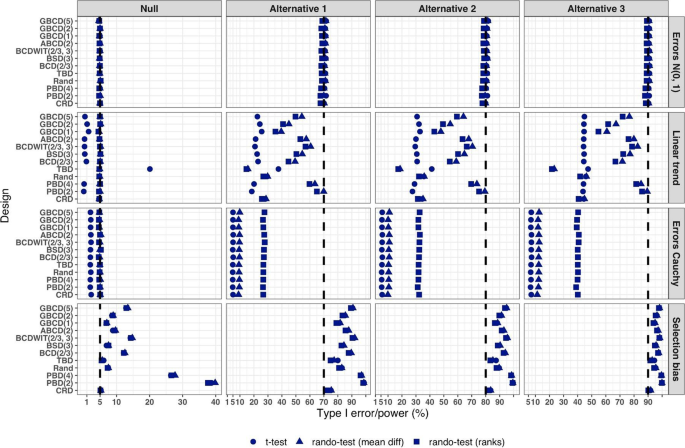

We consider four scenarios of the true mean difference \(\Delta ={\mu }_{E}-{\mu }_{C}\) , which correspond to the Null case ( \(\Delta =0\) ), and three choices of \(\Delta >0\) which correspond to Alternative 1 (power ~ 70%), Alternative 2 (power ~ 80%), and Alternative 3 (power ~ 90%). In all cases, \(n=50\) was used.

Figure 5 summarizes the results of a simulation study comparing 12 randomization designs, under 4 models for the outcome (M1, M2, M3, and M4), 4 scenarios for the mean treatment difference (Null, and Alternatives 1, 2, and 3), using 3 statistical tests (T1, T2, and T3). The operating characteristics of interest are the type I error rate under the Null scenario and the power under the Alternative scenarios. Each scenario was simulated 10,000 times, and each randomization-based test was computed using \(L=\mathrm{10,000}\) sequences.

Simulated type I error rate and power of 12 restricted randomization procedures. Four models for the data generating mechanism of the primary outcome (M1: Normal random sampling; M2: Linear trend; M3: Errors Cauchy; and M4: Selection bias). Four scenarios for the treatment mean difference (Null; Alternatives 1, 2, and 3). Three statistical tests (T1: two-sample t-test; T2: randomization-based test using mean difference; T3: randomization-based test using ranks)

From Fig. 5 , under the normal random sampling model (M1), all considered randomization designs have similar performance: they maintain the type I error rate and have similar power, with all tests. In other words, when population model assumptions are satisfied, any combination of design and analysis should work well and yield reliable and consistent results.

Under the “linear trend” model (M2), the designs have differential performance. First of all, under the Null scenario, only Rand and CRD maintain the type I error rate at 5% with all three tests. For TBD, the t-test is anticonservative, with type I error rate ~ 20%, whereas for nine other procedures the t-test is conservative, with type I error rate in the range 0.1–2%. At the same time, for all 12 designs the two randomization-based tests maintain the nominal type I error rate at 5%. These results are consistent with some previous findings in the literature [ 67 , 68 ]. As regards power, it is reduced significantly compared to the normal random sampling scenario. The t-test seems to be most affected and the randomization-based test using ranks is most robust for a majority of the designs. Remarkably, for CRD the power is similar with all three tests. This signifies the usefulness of randomization-based inference in situations when outcome data are subject to a linear time trend, and the importance of applying randomization-based tests at least as supplemental analyses to likelihood-based test procedures.

Under the “Cauchy errors” model (M3), all designs perform similarly: the randomization-based tests maintain the type I error rate at 5%, whereas the t-test deflates the type I error to 2%. As regards power, all designs also have similar, consistently degraded performance: the t-test is least powerful, and the randomization-based test using ranks has highest power. Overall, under misspecification of the error distribution a randomization-based test using ranks is most appropriate; yet one should acknowledge that its power is still lower than expected.

Under the “selection bias” model (M4), the 12 designs have differential performance. The only procedure that maintained the type I error rate at 5% with all three tests was CRD. For eleven other procedures, inflations of the type I error were observed. In general, the more random the design, the less it was affected by selection bias. For instance, the type I error rate for TBD was ~ 6%; for Rand, BSD(3), and GBCD(1) it was ~ 7.5%; for GBCD(2) and ABCD(2) it was ~ 8–9%; for Efron’s BCD(2/3) it was ~ 12.5%; and the most affected design was PBD(2) for which the type I error rate was ~ 38–40%. These results are consistent with the theory of Blackwell and Hodges [ 28 ] which posits that TBD is least susceptible to selection bias within a class of restricted randomization designs that force exact balance. Finally, under M4, statistical power is inflated by several percentage points compared to the normal random sampling scenario without selection bias.

We performed additional simulations to assess the impact of the bias effect \(\nu\) under selection bias model. The same 12 randomization designs and three statistical tests were evaluated for a trial with \(n=50\) under the Null scenario ( \(\Delta =0\) ), for \(\nu\) in the range of 0 (no bias) to 1 (strong bias). Figure S1 in the Supplementary Materials shows that for all designs but CRD, the type I error rate is increasing in \(\nu\) , with all three tests. The magnitude of the type I error inflation is different across the restricted randomization designs; e.g. for TBD it is minimal, whereas for more restrictive designs it may be large, especially for \(\nu \ge 0.4\) . PBD(2) is particularly vulnerable: for \(\nu\) in the range 0.4–1, its type I error rate is in the range 27–90% (for the nominal \(\alpha =5\) %).

In summary, our Example 1 includes most of the key ingredients of the roadmap for assessment of competing randomization designs which was described in the “ Methods ” section. For the chosen experimental scenarios, we evaluated CRD and several restricted randomization procedures, some of which belonged to the same class but with different values of the parameter (e.g. GBCD with \(\gamma =1, 2, 5\) ). We assessed two measures of imbalance, two measures of lack of randomness (predictability), and a metric that quantifies balance/randomness tradeoff. Based on these criteria, we found that BSD(3) provides overall best performance. We also evaluated type I error and power of selected randomization procedures under several treatment response models. We have observed important links between balance, randomness, type I error rate and power. It is beneficial to consider all these criteria simultaneously as they may complement each other in characterizing statistical properties of randomization designs. In particular, we found that a design that lacks randomness, such as PBD with blocks of 2 or 4, may be vulnerable to selection bias and lead to inflations of the type I error. Therefore, these designs should be avoided, especially in open-label studies. As regards statistical power, since all designs in this example targeted 1:1 allocation ratio (which is optimal if the outcomes are normally distributed and have between-group constant variance), they had very similar power of statistical tests in most scenarios except for the one with chronological bias. In the latter case, randomization-based tests were more robust and more powerful than the standard two-sample t-test under the population model assumption.

Overall, while Example 1 is based on a hypothetical 1:1 RCT, its true purpose is to showcase the thinking process in the application of our general roadmap. The following three examples are considered in the context of real RCTs.

Example 2: How can we reduce predictability of a randomization procedure and lower the risk of selection bias?