Chapter 2. Research Design

Getting started.

When I teach undergraduates qualitative research methods, the final product of the course is a “research proposal” that applies everything they have learned about qualitative research methods to an original design that addresses a particular research question. I highly recommend you think about designing your own research study as you progress through this textbook. Even if you don’t have a study in mind yet, it can be a helpful exercise as you progress through the course. But how to start? How can one design a research study before they even know what research looks like? This chapter will serve as a brief overview of the research design process to orient you to what will be coming in later chapters. Think of it as a “skeleton” of what you will read in more detail in later chapters. Ideally, you will read this chapter both now (in sequence) and later during your reading of the remainder of the text. Do not worry if you have questions the first time you read this chapter. Many things will become clearer as the text advances and as you gain a deeper understanding of all the components of good qualitative research. This is just a preliminary map to get you on the right road.

Research Design Steps

Before you even get started, you will need to have a broad topic of interest in mind. [1] In my experience, students can confuse this broad topic with the actual research question, so it is important to clearly distinguish the two. And the place to start is the broad topic. It might be, as was the case with me, working-class college students. But what about working-class college students? What’s it like to be one? Why are there so few compared to others? How do colleges assist (or fail to assist) them? What interested me was something I could barely articulate at first and went something like this: “Why was it so difficult and lonely to be me?” And by extension, “Did others share this experience?”

Once you have a general topic, reflect on why this is important to you. Sometimes we connect with a topic and we don’t really know why. Even if you are not willing to share the real underlying reason you are interested in a topic, it is important that you know the deeper reasons that motivate you. Otherwise, it is quite possible that at some point during the research, you will find yourself turned around facing the wrong direction. I have seen it happen many times. The reason is that the research question is not the same thing as the general topic of interest, and if you don’t know the reasons for your interest, you are likely to design a study answering a research question that is beside the point—to you, at least. And this means you will be much less motivated to carry your research to completion.

Researcher Note

Why do you employ qualitative research methods in your area of study? What are the advantages of qualitative research methods for studying mentorship?

Qualitative research methods are a huge opportunity to increase access, equity, inclusion, and social justice. Qualitative research allows us to engage and examine the uniquenesses/nuances within minoritized and dominant identities and our experiences with these identities. Qualitative research allows us to explore a specific topic, and through that exploration, we can link history to experiences and look for patterns or offer up a unique phenomenon. There’s such beauty in being able to tell a particular story, and qualitative research is a great mode for that! For our work, we examined the relationships we typically use the term mentorship for but didn’t feel that was quite the right word. Qualitative research allowed us to pick apart what we did and how we engaged in our relationships, which then allowed us to more accurately describe what was unique about our mentorship relationships, which we ultimately named liberationships (McAloney and Long 2021). Qualitative research gave us the means to explore, process, and name our experiences; what a powerful tool!

How do you come up with ideas for what to study (and how to study it)? Where did you get the idea for studying mentorship?

Coming up with ideas for research, for me, is kind of like Googling a question I have, not finding enough information, and then deciding to dig a little deeper to get the answer. The idea to study mentorship actually came up in conversation with my mentorship triad. We were talking in one of our meetings about our relationship (kind of meta, huh?). We discussed how we felt that mentorship was not quite the right term for the relationships we had built. One of us asked what was different about our relationships and mentorship. This all happened when I was taking an ethnography course. During the next session of class, we were discussing auto- and duoethnography, and it hit me: let’s explore our version of mentorship, which we later went on to name liberationships (McAloney and Long 2021). The idea and questions came out of being curious and wanting to find an answer. As I continue to research, I see opportunities in questions I have about my work or during conversations that, in our search for answers, end up exposing gaps in the literature. If I can’t find the answer already out there, I can study it.

—Kim McAloney, PhD, College Student Services Administration Ecampus coordinator and instructor

When you have a better idea of why you are interested in what it is that interests you, you may be surprised to learn that the obvious approaches to the topic are not the only ones. For example, let’s say you think you are interested in preserving coastal wildlife. And as a social scientist, you are interested in policies and practices that affect the long-term viability of coastal wildlife, especially around fishing communities. It would be natural then to consider designing a research study around fishing communities and how they manage their ecosystems. But when you really think about it, you realize that what interests you the most is how people whose livelihoods depend on a particular resource act in ways that deplete that resource. Or, even deeper, you contemplate the puzzle, “How do people justify actions that damage their surroundings?” Now, there are many ways to design a study that gets at that broader question, and not all of them are about fishing communities, although that is certainly one way to go. Maybe you could design an interview-based study that includes and compares loggers, fishers, and desert golfers (those who golf in arid lands that require a great deal of wasteful irrigation). Or design a case study around one particular example where resources were completely used up by a community. Without knowing what it is you are really interested in, what motivates your interest in a surface phenomenon, you are unlikely to come up with the appropriate research design.

These first stages of research design are often the most difficult, but have patience. Taking the time to consider why you are going to go through a lot of trouble to get answers will prevent a lot of wasted energy in the future.

There are distinct reasons for pursuing particular research questions, and it is helpful to distinguish between them. First, you may be personally motivated. This is probably the most important and the most often overlooked. What is it about the social world that sparks your curiosity? What bothers you? What answers do you need in order to keep living? For me, I knew I needed to get a handle on what higher education was for before I kept going at it. I needed to understand why I felt so different from my peers and whether this whole “higher education” thing was “for the likes of me” before I could complete my degree. That is the personal motivation question. Your personal motivation might also be political in nature, in that you want to change the world in a particular way. It’s all right to acknowledge this. In fact, it is better to acknowledge it than to hide it.

There are also academic and professional motivations for a particular study. If you are an absolute beginner, these may be difficult to find. We’ll talk more about this when we discuss reviewing the literature. Simply put, you are probably not the only person in the world to have thought about this question or issue and those related to it. So how does your interest area fit into what others have studied? Perhaps there is a good study out there of fishing communities, but no one has quite asked the “justification” question. You are motivated to address this to “fill the gap” in our collective knowledge. And maybe you are really not at all sure of what interests you, but you do know that [insert your topic] interests a lot of people, so you would like to work in this area too. You want to be involved in the academic conversation. That is a professional motivation and a very important one to articulate.

Practical and strategic motivations are a third kind. Perhaps you want to encourage people to take better care of the natural resources around them. If this is also part of your motivation, you will want to design your research project in a way that might have an impact on how people behave in the future. There are many ways to do this, one of which is using qualitative research methods rather than quantitative research methods, as the findings of qualitative research are often easier to communicate to a broader audience than the results of quantitative research. You might even be able to engage the community you are studying in the collecting and analyzing of data, something taboo in quantitative research but actively embraced and encouraged by qualitative researchers. But there are other practical reasons, such as getting “done” with your research in a certain amount of time or having access (or no access) to certain information. There is nothing wrong with considering constraints and opportunities when designing your study. Or maybe one of your practical or strategic goals is to build competence in this area so that you can demonstrate to future employers that you are able to conduct interviews and focus groups. Keeping that in mind will help shape your study and prevent you from getting sidetracked using a technique that you are less invested in learning about.

STOP HERE for a moment

I recommend you write a paragraph (at least) explaining your aims and goals. Include a sentence about each of the following: personal/political goals, professional/academic goals, and practical/strategic goals. Think through how all of the goals are related and can be achieved by this particular research study. If they can’t, have a rethink. Perhaps this is not the best way to go about it.

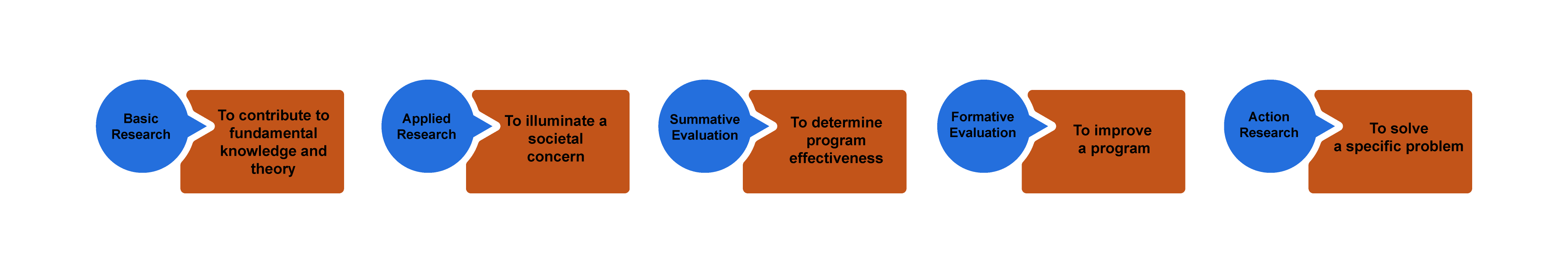

You will also want to be clear about the purpose of your study. “Wait, didn’t we just do this?” you might ask. No! Your goals are not the same as the purpose of the study, although they are related. You can think about purpose lying on a continuum from “theory” to “action” (figure 2.1). Sometimes you are doing research to discover new knowledge about the world, while other times you are doing a study because you want to measure an impact or make a difference in the world.

Basic research involves research that is done for the sake of “pure” knowledge—that is, knowledge that, at least at this moment in time, may not have any apparent use or application. Often, and this is very important, knowledge of this kind is later found to be extremely helpful in solving problems. So one way of thinking about basic research is that it is knowledge for which no use is yet known but will probably one day prove to be extremely useful. If you are doing basic research, you do not need to argue its usefulness, as the whole point is that we just don’t know yet what this might be.

Researchers engaged in basic research want to understand how the world operates. They are interested in investigating a phenomenon to get at the nature of reality with regard to that phenomenon. The basic researcher’s purpose is to understand and explain (Patton 2002:215).

Basic research is interested in generating and testing hypotheses about how the world works. Grounded Theory is one approach to qualitative research methods that exemplifies basic research (see chapter 4). Most academic journal articles publish basic research findings. If you are working in academia (e.g., writing your dissertation), the default expectation is that you are conducting basic research.

Applied research in the social sciences is research that addresses human and social problems. Unlike in basic research, the researcher has expectations that the research will help contribute to resolving a problem, if only by identifying its contours, history, or context. From my experience, most students have this as their baseline assumption about research. Why do a study if not to make things better? But this is a common mistake. Students and their committee members are often working with default assumptions here, the former thinking about applied research as their purpose, the latter thinking about basic research: “The purpose of applied research is to contribute knowledge that will help people to understand the nature of a problem in order to intervene, thereby allowing human beings to more effectively control their environment. While in basic research the source of questions is the tradition within a scholarly discipline, in applied research the source of questions is in the problems and concerns experienced by people and by policymakers” (Patton 2002:217).

Applied research is less geared toward theory in two ways. First, its questions do not derive from previous literature. For this reason, applied research studies have much more limited literature reviews than those found in basic research (although they make up for this by having much more “background” about the problem). Second, it does not generate theory in the same way as basic research does. The findings of an applied research project may not be generalizable beyond the boundaries of this particular problem or context. The findings are more limited. They are useful now but may be less useful later. This is why basic research remains the default “gold standard” of academic research.

Evaluation research is research that is designed to evaluate or test the effectiveness of specific solutions and programs addressing specific social problems. We already know the problems, and someone has already come up with solutions. There might be a program, say, for first-generation college students on your campus. Does this program work? Are first-generation students who participate in the program more likely to graduate than those who do not? These are the types of questions addressed by evaluation research. There are two types of research within this broader frame, however, one more action-oriented than the other. In summative evaluation, an overall judgment about the effectiveness of a program or policy is made. Should we continue our first-gen program? Is it a good model for other campuses? Because the purpose of such summative evaluation is to measure success and to determine whether this success is scalable (capable of being generalized beyond the specific case), quantitative data is more often used than qualitative data. In our example, we might have “outcomes” data for thousands of students, and we might run various tests to determine if the better outcomes of those in the program are statistically significant so that we can generalize the findings and recommend similar programs elsewhere. Qualitative data in the form of focus groups or interviews can then be used for illustrative purposes, providing more depth to the quantitative analyses. In contrast, formative evaluation attempts to improve a program or policy (to help “form” or shape its effectiveness). Formative evaluations rely more heavily on qualitative data: case studies, interviews, focus groups. The findings are meant not to generalize beyond the particular but to improve this program.
If you are a student seeking to improve your qualitative research skills and you do not care about generating basic research, formative evaluation studies might be an attractive option for you to pursue, as there are always local programs that need evaluation and suggestions for improvement. Again, be very clear about your purpose when talking through your research proposal with your committee.

Action research takes a further step beyond evaluation, even formative evaluation, to being part of the solution itself. This is about as far from basic research as one could get and definitely falls beyond the scope of “science,” as conventionally defined. The distinction between action and research is blurry, the research methods are often in constant flux, and the only “findings” are specific to the problem or case at hand and often are findings about the process of intervention itself. Rather than evaluate a program as a whole, action research often seeks to change and improve some particular aspect that may not be working: maybe there is not enough diversity in an organization or maybe women’s voices are muted during meetings and the organization wonders why and would like to change this. In a further step, participatory action research, those women would become part of the research team, attempting to amplify their voices in the organization through participation in the action research. As action research employs methods that involve people in the process, focus groups are quite common.

If you are working on a thesis or dissertation, chances are your committee will expect you to be contributing to fundamental knowledge and theory (basic research). If your interests lie more toward the action end of the continuum, however, it is helpful to talk to your committee about this before you get started. Knowing your purpose in advance will help avoid misunderstandings during the later stages of the research process!

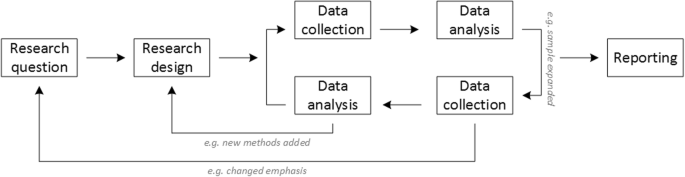

The Research Question

Once you have written your paragraph and clarified your purpose and truly know that this study is the best study for you to be doing right now, you are ready to write and refine your actual research question. Know that research questions are often moving targets in qualitative research, that they can be refined up to the very end of data collection and analysis. But you do have to have a working research question at all stages. This is your “anchor” when you get lost in the data. What are you addressing? What are you looking at and why? Your research question guides you through the thicket. It is common to have a whole host of questions about a phenomenon or case, both at the outset and throughout the study, but you should be able to pare it down to no more than two or three sentences when asked. These sentences should both clarify the intent of the research and explain why this is an important question to answer. More on refining your research question can be found in chapter 4.

Chances are, you will have already done some prior reading before coming up with your interest and your questions, but you may not have conducted a systematic literature review. This is the next crucial stage to be completed before venturing further. You don’t want to start collecting data and then realize that someone has already beaten you to the punch. A review of the literature that is already out there will let you know (1) if others have already done the study you are envisioning; (2) if others have done similar studies, which can help you out; and (3) what ideas or concepts are out there that can help you frame your study and make sense of your findings. More on literature reviews can be found in chapter 9.

In addition to reviewing the literature for similar studies to what you are proposing, it can be extremely helpful to find a study that inspires you. This may have absolutely nothing to do with the topic you are interested in but is written so beautifully or organized so interestingly or otherwise speaks to you in such a way that you want to post it somewhere to remind you of what you want to be doing. You might not understand this in the early stages—why would you find a study that has nothing to do with the one you are doing helpful? But trust me, when you are deep into analysis and writing, having an inspirational model in view can help you push through. If you are motivated to do something that might change the world, you probably have read something somewhere that inspired you. Go back to that original inspiration and read it carefully and see how they managed to convey the passion that you so appreciate.

At this stage, you are still just getting started. There are a lot of things to do before setting forth to collect data! You’ll want to consider and choose a research tradition and a set of data-collection techniques that both help you answer your research question and match all your aims and goals. For example, if you really want to help migrant workers speak for themselves, you might draw on feminist theory and participatory action research models. Chapters 3 and 4 will provide you with more information on epistemologies and approaches.

Next, you have to clarify your “units of analysis.” What is the level at which you are focusing your study? Often, the unit in qualitative research methods is individual people, or “human subjects.” But your units of analysis could just as well be organizations (colleges, hospitals) or programs or even whole nations. Think about what it is you want to be saying at the end of your study—are the insights you are hoping to make about people or about organizations or about something else entirely? A unit of analysis can even be a historical period! Every unit of analysis will call for a different kind of data collection and analysis and will produce different kinds of “findings” at the conclusion of your study. [2]

Regardless of what unit of analysis you select, you will probably have to consider the “human subjects” involved in your research. [3] Who are they? What interactions will you have with them—that is, what kind of data will you be collecting? Before answering these questions, define your population of interest and your research setting. Use your research question to help guide you.

Let’s use an example from a real study. In Geographies of Campus Inequality, Benson and Lee (2020) list three related research questions: “(1) What are the different ways that first-generation students organize their social, extracurricular, and academic activities at selective and highly selective colleges? (2) how do first-generation students sort themselves and get sorted into these different types of campus lives; and (3) how do these different patterns of campus engagement prepare first-generation students for their post-college lives?” (3).

Note that we are jumping into this a bit late, after Benson and Lee have described previous studies (the literature review) and what is known about first-generation college students and what is not known. They want to know about differences within this group, and they are interested in ones attending certain kinds of colleges because those colleges will be sites where academic and extracurricular pressures compete. That is the context for their three related research questions. What is the population of interest here? First-generation college students. What is the research setting? Selective and highly selective colleges. But a host of questions remain. Which students in the real world, which colleges? What about gender, race, and other identity markers? Will the students be asked questions? Are the students still in college, or will they be asked about what college was like for them? Will they be observed? Will they be shadowed? Will they be surveyed? Will they be asked to keep diaries of their time in college? How many students? How many colleges? For how long will they be observed?

Recommendation

Take a moment and write down suggestions for Benson and Lee before continuing on to what they actually did.

Have you written down your own suggestions? Good. Now let’s compare those with what they actually did. Benson and Lee drew on two sources of data: in-depth interviews with sixty-four first-generation students and survey data from a preexisting national survey of students at twenty-eight selective colleges. Let’s ignore the survey for our purposes here and focus on those interviews. The interviews were conducted between 2014 and 2016 at a single selective college, “Hilltop” (a pseudonym). They employed a “purposive” sampling strategy to ensure an equal number of male-identifying and female-identifying students as well as equal numbers of White, Black, and Latinx students. Each student was interviewed once. Hilltop is a selective liberal arts college in the northeast that enrolls about three thousand students.

How did your suggestions match up to those actually used by the researchers in this study? Is it possible your suggestions were too ambitious? Beginning qualitative researchers can often make that mistake. You want a research design that is both effective (it matches your question and goals) and doable. You will never be able to collect data from your entire population of interest (unless your research question is really so narrow as to be relevant to very few people!), so you will need to come up with a good sample. Define the criteria for this sample, as Benson and Lee did when deciding to interview an equal number of students by gender and race categories. Define the criteria for your sample setting too. Hilltop is typical for selective colleges. That was a research choice made by Benson and Lee. For more on sampling and sampling choices, see chapter 5.
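If it helps to see sample criteria written out concretely, here is a minimal sketch in Python of filling equal quotas across gender and race categories. Everything in it is an assumption made for illustration: the volunteer pool is invented, the quota of ten per category combination is hypothetical (it is not Benson and Lee's actual breakdown), and the function name `quota_sample` is my own. Picking at random within each cell is also just a stand-in; in a purposive sample, you would normally choose participants by judgment.

```python
import random
from collections import Counter, defaultdict
from itertools import product


def quota_sample(pool, keys, quotas, seed=0):
    """Select a fixed number of people from each cell (combination of categories).

    pool   -- list of dicts describing volunteers (hypothetical data)
    keys   -- the attributes that define a cell, e.g. ("gender", "race")
    quotas -- dict mapping each cell to how many people to select from it
    """
    rng = random.Random(seed)  # fixed seed so the selection is repeatable

    # Group volunteers by cell.
    by_cell = defaultdict(list)
    for person in pool:
        by_cell[tuple(person[k] for k in keys)].append(person)

    sample = []
    for cell, needed in quotas.items():
        candidates = by_cell[cell]
        if len(candidates) < needed:
            # Signals that more recruiting is needed for this cell.
            raise ValueError(f"cell {cell}: need {needed}, have {len(candidates)}")
        sample.extend(rng.sample(candidates, needed))
    return sample


# Hypothetical pool: twenty volunteers in each gender-by-race cell.
genders = ["female", "male"]
races = ["Black", "Latinx", "White"]
pool = []
for gender, race in product(genders, races):
    for _ in range(20):
        pool.append({"id": len(pool), "gender": gender, "race": race})

# Hypothetical quota: ten interviews per cell.
quotas = {cell: 10 for cell in product(genders, races)}
sample = quota_sample(pool, ("gender", "race"), quotas)
counts = Counter((p["gender"], p["race"]) for p in sample)
print(len(sample), dict(counts))
```

The useful part of the exercise is less the code than what it forces you to decide in advance: which categories matter for your research question, how many participants you want in each, and what you will do when a category comes up short.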

Benson and Lee chose to employ interviews. If you also would like to include interviews, you have to think about what will be asked in them. Most interview-based research involves an interview guide, a set of questions or question areas that will be asked of each participant. The research question helps you create a relevant interview guide. You want to ask questions whose answers will provide insight into your research question. Again, your research question is the anchor you will continually come back to as you plan for and conduct your study. It may be that once you begin interviewing, you find that people are telling you something totally unexpected, and this makes you rethink your research question. That is fine. Then you have a new anchor. But you always have an anchor. More on interviewing can be found in chapter 11.

Let’s imagine Benson and Lee also observed college students as they went about doing the things college students do, both in the classroom and in the clubs and social activities in which they participate. They would have needed a plan for this. Would they sit in on classes? Which ones and how many? Would they attend club meetings and sports events? Which ones and how many? Would they participate themselves? How would they record their observations? More on observation techniques can be found in both chapters 13 and 14.

At this point, the design is almost complete. You know why you are doing this study, you have a clear research question to guide you, you have identified your population of interest and research setting, and you have a reasonable sample of each. You also have put together a plan for data collection, which might include drafting an interview guide or making plans for observations. And so you know exactly what you will be doing for the next several months (or years!). To put the project into action, there are a few more things necessary before actually going into the field.

First, you will need to make sure you have any necessary supplies, including recording technology. These days, many researchers use their phones to record interviews. Second, you will need to draft a few documents for your participants. These include informed consent forms and recruiting materials, such as posters or email texts, that explain what this study is in clear language. Third, you will draft a research protocol to submit to your institutional review board (IRB); this research protocol will include the interview guide (if you are using one), the consent form template, and all examples of recruiting material. Depending on your institution and the details of your study design, it may take weeks or even, in some unfortunate cases, months before you secure IRB approval. Make sure you plan on this time in your project timeline. While you wait, you can continue to review the literature and possibly begin drafting a section on the literature review for your eventual presentation/publication. More on IRB procedures can be found in chapter 8 and more general ethical considerations in chapter 7.

Once you have approval, you can begin!

Research Design Checklist

Before data collection begins, do the following:

- Write a paragraph explaining your aims and goals (personal/political, practical/strategic, professional/academic).

- Define your research question; write two to three sentences that clarify the intent of the research and why this is an important question to answer.

- Review the literature for similar studies that address your research question or similar research questions; think laterally about some literature that might be helpful or illuminating but is not exactly about the same topic.

- Find a written study that inspires you—it may or may not be on the research question you have chosen.

- Consider and choose a research tradition and set of data-collection techniques that (1) help answer your research question and (2) match your aims and goals.

- Define your population of interest and your research setting.

- Define the criteria for your sample (How many? Why these? How will you find them, gain access, and acquire consent?).

- If you are conducting interviews, draft an interview guide.

- If you are making observations, create a plan for observations (sites, times, recording, access).

- Acquire any necessary technology (recording devices/software).

- Draft consent forms that clearly identify the research focus and selection process.

- Create recruiting materials (posters, email, texts).

- Apply for IRB approval (proposal plus consent form plus recruiting materials).

- Block out time for collecting data.

[1] At the end of the chapter, you will find a “Research Design Checklist” that summarizes the main recommendations made here.

[2] For example, if your focus is society and culture, you might collect data through observation or a case study. If your focus is individual lived experience, you are probably going to be interviewing some people. And if your focus is language and communication, you will probably be analyzing text (written or visual) (Marshall and Rossman 2016:16).

[3] You may not have any “live” human subjects. There are qualitative research methods that do not require interactions with live human beings; see chapter 16, “Archival and Historical Sources.” But for the most part, you are probably reading this textbook because you are interested in doing research with people. The rest of the chapter will assume this is the case.

One of the primary methodological traditions of inquiry in qualitative research, ethnography is the study of a group or group culture, largely through observational fieldwork supplemented by interviews. It is a form of fieldwork that may include participant-observation data collection. See chapter 14 for a discussion of deep ethnography.

A methodological tradition of inquiry and research design that focuses on an individual case (e.g., setting, institution, or sometimes an individual) in order to explore its complexity, history, and interactive parts. As an approach, it is particularly useful for obtaining a deep appreciation of an issue, event, or phenomenon of interest in its particular context.

The controlling force in research; can be understood as lying on a continuum from basic research (knowledge production) to action research (effecting change).

In its most basic sense, a theory is a story we tell about how the world works that can be tested with empirical evidence. In qualitative research, we use the term in a variety of ways, many of which differ from how it is used by quantitative researchers. Although some qualitative research can be described as “testing theory,” it is more common to “build theory” from the data using inductive reasoning, as done in Grounded Theory. There are so-called “grand theories” that seek to integrate a whole series of findings and stories into an overarching paradigm about how the world works, and much smaller theories or concepts about particular processes and relationships. Theory can even be used to explain particular methodological perspectives or approaches, as in Institutional Ethnography, which is both a way of doing research and a theory about how the world works.

Research that is interested in generating and testing hypotheses about how the world works.

A methodological tradition of inquiry and approach to analyzing qualitative data in which theories emerge from a rigorous and systematic process of induction. This approach was pioneered by the sociologists Glaser and Strauss (1967). The elements of theory generated from comparative analysis of data are, first, conceptual categories and their properties and, second, hypotheses or generalized relations among the categories and their properties – “The constant comparing of many groups draws the [researcher’s] attention to their many similarities and differences. Considering these leads [the researcher] to generate abstract categories and their properties, which, since they emerge from the data, will clearly be important to a theory explaining the kind of behavior under observation.” (36).

An approach to research that is “multimethod in focus, involving an interpretative, naturalistic approach to its subject matter. This means that qualitative researchers study things in their natural settings, attempting to make sense of, or interpret, phenomena in terms of the meanings people bring to them. Qualitative research involves the studied use and collection of a variety of empirical materials – case study, personal experience, introspective, life story, interview, observational, historical, interactional, and visual texts – that describe routine and problematic moments and meanings in individuals’ lives” (Denzin and Lincoln 2005:2). Contrast with quantitative research.

Research that contributes knowledge that will help people to understand the nature of a problem in order to intervene, thereby allowing human beings to more effectively control their environment.

Research that is designed to evaluate or test the effectiveness of specific solutions and programs addressing specific social problems. There are two kinds: summative and formative.

Research in which an overall judgment about the effectiveness of a program or policy is made, often for the purpose of generalizing to other cases or programs. Generally uses qualitative research as a supplement to primary quantitative data analyses. Contrast formative evaluation research.

Research designed to improve a program or policy (to help “form” or shape its effectiveness); relies heavily on qualitative research methods. Contrast summative evaluation research.

Research carried out at a particular organizational or community site with the intention of effecting change; often involves research subjects as participants of the study. See also participatory action research.

Research in which both researchers and participants work together to understand a problematic situation and change it for the better.

The level of the focus of analysis (e.g., individual people, organizations, programs, neighborhoods).

The large group of interest to the researcher. Although it will likely be impossible to design a study that incorporates or reaches all members of the population of interest, this should be clearly defined at the outset of a study so that a reasonable sample of the population can be taken. For example, if one is studying working-class college students, the sample may include twenty such students attending a particular college, while the population is “working-class college students.” In quantitative research, clearly defining the general population of interest is a necessary step in generalizing results from a sample. In qualitative research, defining the population is conceptually important for clarity.

A fictional name assigned to give anonymity to a person, group, or place. Pseudonyms are important ways of protecting the identity of research participants while still providing a “human element” in the presentation of qualitative data. There are ethical considerations to be made in selecting pseudonyms; some researchers allow research participants to choose their own.

A requirement for research involving human participants; the documentation of informed consent. In some cases, oral consent or assent may be sufficient, but the default standard is a single-page easy-to-understand form that both the researcher and the participant sign and date. Under federal guidelines, all researchers "shall seek such consent only under circumstances that provide the prospective subject or the representative sufficient opportunity to consider whether or not to participate and that minimize the possibility of coercion or undue influence. The information that is given to the subject or the representative shall be in language understandable to the subject or the representative. No informed consent, whether oral or written, may include any exculpatory language through which the subject or the representative is made to waive or appear to waive any of the subject's rights or releases or appears to release the investigator, the sponsor, the institution, or its agents from liability for negligence" (21 CFR 50.20). Your IRB office will be able to provide a template for use in your study.

An administrative body established to protect the rights and welfare of human research subjects recruited to participate in research activities conducted under the auspices of the institution with which it is affiliated. The IRB is charged with the responsibility of reviewing all research involving human participants. The IRB is concerned with protecting the welfare, rights, and privacy of human subjects. The IRB has the authority to approve, disapprove, monitor, and require modifications in all research activities that fall within its jurisdiction as specified by both the federal regulations and institutional policy.

Introduction to Qualitative Research Methods Copyright © 2023 by Allison Hurst is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License , except where otherwise noted.

- Types of qualitative research designs

Last updated

20 February 2023

Reviewed by

Jean Kaluza

Researchers often conduct qualitative studies to gain a detailed understanding of a particular topic through a small, focused sample. Qualitative methods can also help explain why something observed in a larger quantitative study is happening.

To determine whether qualitative research is the best choice for your study, let’s look at the different types of qualitative research design.


- What are qualitative research designs?

Qualitative research designs are research methods that collect and analyze non-numerical data. The research uncovers why or how a particular behavior or occurrence takes place. The information is usually subjective and in a written format instead of numerical.

Researchers may use interviews, focus groups, case studies, journaling, and open-ended questions to gather in-depth information. Qualitative research designs can determine users' concepts, develop a hypothesis, or add context to data from a quantitative study.

- Characteristics of qualitative research design

Most often, qualitative data answers how or why something occurs. Certain characteristics are usually present in all qualitative research designs to ensure accurate data.

The most common characteristics of qualitative research design include the following:

Natural environment

It’s best to collect qualitative research as close to the subject’s original environment as possible to encourage natural behavior and accurate insights.

Empathy is key

Qualitative researchers collect the best data when they’re in sync with their users’ concerns and motivations. They can play into natural human psychology by combining open-ended questioning and subtle cues.

They may mimic body language, adopt the users’ terminology, and use pauses or trailing sentences to encourage their participants to fill in the blanks. The more empathic the interviewer, the purer the data.

Participant selection

Qualitative research depends on the meaning obtained from participants instead of the meaning conveyed in similar research or studies. To increase research accuracy, you choose participants randomly from carefully chosen groups of potential participants.

Different research methods or multiple data sources

To gain in-depth knowledge, qualitative research designs often rely on multiple research methods within the same group.

Emergent design

Qualitative research constantly evolves, meaning the initial study plan might change after you collect data. This evolution might result in changes in research methods or the introduction of a new research problem.

Inductive reasoning

Since qualitative research seeks in-depth meaning, you need complex reasoning to get the right results. Qualitative researchers build categories, patterns, and themes from separate data sets to form a complete conclusion.

Interpretive data

Once you collect the data, you need to read between the lines rather than just noting what your participant said. Qualitative research is unique as we can attach actions to feedback.

If a user says they love the look of your design but haven’t completed any tasks, it’s up to you to interpret this as a failed test, even with their positive sentiments.

Holistic account

To paint a large picture of an issue and potential solutions, a qualitative researcher works to develop a complex description of the research problem. You can avoid a narrow cause-and-effect perspective by describing the problem’s wider perspectives.

- When to use qualitative research design

Qualitative research aims to get a detailed understanding of a particular topic. To accomplish this, you’ll typically use small focus groups to gather in-depth data from varied perspectives.

This approach is only effective for some types of study. For instance, a qualitative approach wouldn’t work for a study that seeks to understand a statistically relevant finding.

When determining if a qualitative research design is appropriate, remember the goal of qualitative research is understanding the “why.”

Qualitative research design gathers in-depth information that stands on its own. It can also answer the “why” of a quantitative study or be a precursor to forming a hypothesis.

You can use qualitative research in these situations:

Developing a hypothesis for testing in a quantitative study

Identifying customer needs

Developing a new feature

Adding context to the results of a quantitative study

Understanding the motivations, values, and pain points that guide behavior

Difference between qualitative and quantitative research design

Qualitative and quantitative research designs gather data, but that's where the similarities end. Consider the difference between quality and quantity. Both are useful in different ways.

Qualitative research gathers in-depth information to answer how or why. It uses subjective data from detailed interviews, observations, and open-ended questions. Most often, qualitative data is thoughts, experiences, and concepts.

In contrast, quantitative research designs gather large amounts of objective data that you can quantify mathematically. You typically express quantitative data in numbers or graphs, and you use it to test or confirm hypotheses.

Qualitative research designs generally have the same goals. However, there are various ways to achieve these goals. Researchers may use one or more of these approaches in qualitative research.

Historical study

This is where you use extensive information about people and events in the past to draw conclusions about the present and future.

Phenomenology

Phenomenology investigates a phenomenon, activity, or event using data from participants' perspectives. Often, researchers use a combination of methods.

Grounded theory

Grounded theory uses interviews and existing data to build a theory inductively.

Ethnography

With ethnography, researchers immerse themselves in the target participants' environments to understand goals, cultures, challenges, and themes.

Case study

A case study is where you use multiple data sources to examine a person, group, community, or institution. Participants must share a connection to the research question you’re studying.

- Advantages and disadvantages of qualitative research

All qualitative research design types share the common goal of obtaining in-depth information. Achieving this goal generally requires extensive data collection methods that can be time-consuming. As such, qualitative research has advantages and disadvantages.

Advantages

Natural settings

Since you can collect data closer to an authentic environment, it offers more accurate results.

The ability to paint a picture with data

Quantitative studies don't always reveal the full picture. With multiple data collection methods, you can expose the motivations and reasons behind data.

Flexibility

Analysis processes aren't set in stone, so you can adapt the process as ideas or patterns emerge.

Generation of new ideas

Using open-ended responses can uncover new opportunities or solutions that weren't part of your original research plan.

Small sample sizes

You can generate meaningful results with small groups.

Disadvantages

Potentially unreliable

A natural setting can be a double-edged sword. The inability to attach findings to anything statistically relevant can make data more difficult to quantify.

Subjectivity

Since the researcher plays a vital role in collecting and interpreting data, qualitative research is subject to the researcher's skills. For example, they may miss a cue that changes some of the context of the quotes they collected.

Labor-intensive

You generally collect qualitative data through manual processes like extensive interviews, open-ended questions, and case studies.

Qualitative research designs allow researchers to provide an in-depth analysis of why specific behavior or events occur. It can offer fresh insights, generate new ideas, or add context to statistics from quantitative studies. Depending on your needs, qualitative data might be a great way to gain the information your organization needs to move forward.


Qualitative Research: Characteristics, Design, Methods & Examples

Lauren McCall

MSc Health Psychology Graduate

MSc, Health Psychology, University of Nottingham

Lauren obtained an MSc in Health Psychology from The University of Nottingham with a distinction classification.


Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


Qualitative research is a type of research methodology that focuses on gathering and analyzing non-numerical data to gain a deeper understanding of human behavior, experiences, and perspectives.

It aims to explore the “why” and “how” of a phenomenon rather than the “what,” “where,” and “when” typically addressed by quantitative research.

Unlike quantitative research, which focuses on gathering and analyzing numerical data for statistical analysis, qualitative research involves researchers interpreting data to identify themes, patterns, and meanings.

Qualitative research can be used to:

- Gain deep contextual understandings of the subjective social reality of individuals

- Answer questions about experience and meaning from the participant’s perspective

- Inform the design of hypotheses and theory by determining what is important before further research begins

Examples of qualitative research questions include:

- How does stress influence young adults’ behavior?

- What factors influence students’ school attendance rates in developed countries?

- How do adults interpret binge drinking in the UK?

- What are the psychological impacts of cervical cancer screening in women?

- How can mental health lessons be integrated into the school curriculum?

Characteristics

Naturalistic setting

Individuals are studied in their natural setting to gain a deeper understanding of how people experience the world. This enables the researcher to understand a phenomenon close to how participants experience it.

Naturalistic settings provide valuable contextual information to help researchers better understand and interpret the data they collect.

The environment, social interactions, and cultural factors can all influence behavior and experiences, and these elements are more easily observed in real-world settings.

Reality is socially constructed

Qualitative research aims to understand how participants make meaning of their experiences – individually or in social contexts. It assumes there is no objective reality and that the social world is interpreted (Yilmaz, 2013).

The primacy of subject matter

The primary aim of qualitative research is to understand the perspectives, experiences, and beliefs of individuals who have experienced the phenomenon selected for research rather than the average experiences of groups of people (Minichiello, 1990).

An in-depth understanding is attained since qualitative techniques allow participants to freely disclose their experiences, thoughts, and feelings without constraint (Tenny et al., 2022).

Variables are complex, interwoven, and difficult to measure

Factors such as experiences, behaviors, and attitudes are complex and interwoven, so they cannot be reduced to isolated variables, making them difficult to measure quantitatively.

However, a qualitative approach enables participants to describe what, why, or how they were thinking/ feeling during a phenomenon being studied (Yilmaz, 2013).

Emic (insider’s point of view)

The phenomenon being studied is centered on the participants’ point of view (Minichiello, 1990).

Emic is used to describe how participants interact, communicate, and behave in the research setting (Scarduzio, 2017).

Interpretive analysis

In qualitative research, interpretive analysis is crucial in making sense of the collected data.

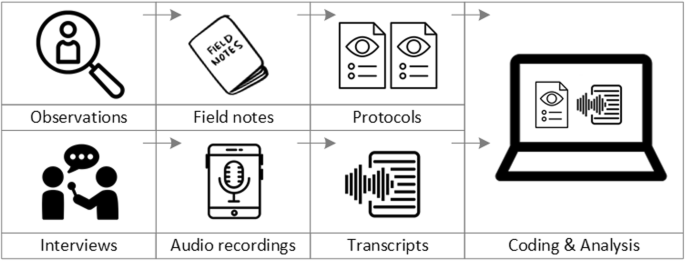

This process involves examining the raw data, such as interview transcripts, field notes, or documents, and identifying the underlying themes, patterns, and meanings that emerge from the participants’ experiences and perspectives.

Collecting Qualitative Data

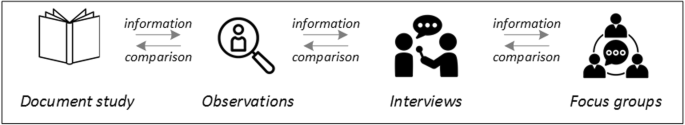

There are four main research design methods used to collect qualitative data: observations, interviews, focus groups, and ethnography.

Observations

This method involves watching and recording phenomena as they occur in nature. Observation can be divided into two types: participant and non-participant observation.

In participant observation, the researcher actively participates in the situation/events being observed.

In non-participant observation, the researcher is not an active part of the observation and tries not to influence the behaviors they are observing (Busetto et al., 2020).

Observations can be covert (participants are unaware that a researcher is observing them) or overt (participants are aware of the researcher’s presence and know they are being observed).

However, awareness of an observer’s presence may influence participants’ behavior.

Interviews

Interviews give researchers a window into the world of a participant by seeking their account of an event, situation, or phenomenon. They are usually conducted on a one-to-one basis and can be distinguished according to the level at which they are structured (Punch, 2013).

Structured interviews involve predetermined questions and sequences to ensure replicability and comparability. However, they are unable to explore emerging issues.

Informal interviews consist of spontaneous, casual conversations that may come closer to the truth of a phenomenon. However, information is gathered using quick notes made by the researcher and is therefore subject to recall bias.

Semi-structured interviews have a flexible structure, phrasing, and placement so emerging issues can be explored (Denny & Weckesser, 2022).

The use of probing questions and clarification can lead to a detailed understanding, but semi-structured interviews can be time-consuming and subject to interviewer bias.

Focus groups

Similar to interviews, focus groups elicit a rich and detailed account of an experience. However, focus groups are more dynamic since participants with shared characteristics construct this account together (Denny & Weckesser, 2022).

A shared narrative is built between participants to capture a group experience shaped by a shared context.

The researcher takes on the role of a moderator, who will establish ground rules and guide the discussion by following a topic guide to focus the group discussions.

Typically, focus groups have 4-10 participants as a discussion can be difficult to facilitate with more than this, and this number allows everyone the time to speak.

Ethnography

Ethnography is a methodology used to study a group of people’s behaviors and social interactions in their environment (Reeves et al., 2008).

Data are collected using methods such as observations, field notes, or structured/ unstructured interviews.

The aim of ethnography is to provide detailed, holistic insights into people’s behavior and perspectives within their natural setting. In order to achieve this, researchers immerse themselves in a community or organization.

Due to the flexibility and real-world focus of ethnography, researchers are able to gather an in-depth, nuanced understanding of people’s experiences, knowledge and perspectives that are influenced by culture and society.

In order to develop a representative picture of a particular culture/ context, researchers must conduct extensive field work.

This can be time-consuming, as researchers may need to immerse themselves in a community or culture for a few days, or possibly a few years.

Qualitative Data Analysis Methods

Different methods can be used for analyzing qualitative data. The researcher chooses based on the objectives of their study.

The researcher plays a key role in the interpretation of data, making decisions about the coding, theming, decontextualizing, and recontextualizing of data (Starks & Trinidad, 2007).

Grounded theory

Grounded theory is a qualitative method specifically designed to inductively generate theory from data. It was developed by Glaser and Strauss in 1967 (Glaser & Strauss, 2017).

This methodology aims to develop theories (rather than test hypotheses) that explain a social process, action, or interaction (Petty et al., 2012). To inform the developing theory, data collection and analysis run simultaneously.

There are three key types of coding used in grounded theory: initial (open), intermediate (axial), and advanced (selective) coding.

Throughout the analysis, memos should be created to document methodological and theoretical ideas about the data. Data should be collected and analyzed until data saturation is reached and a theory is developed.

Content analysis

Content analysis was first used in the early twentieth century to analyze textual materials such as newspapers and political speeches.

Content analysis is a research method used to identify and analyze the presence and patterns of themes, concepts, or words in data (Vaismoradi et al., 2013).

This research method can be used to analyze data in different formats, which can be written, oral, or visual.

The goal of content analysis is to develop themes that capture the underlying meanings of data (Schreier, 2012).

Qualitative content analysis can be used to validate existing theories, support the development of new models and theories, and provide in-depth descriptions of particular settings or experiences.

The following six steps provide a guideline for how to conduct qualitative content analysis.

- Define a Research Question : To start content analysis, a clear research question should be developed.

- Identify and Collect Data : Establish the inclusion criteria for your data. Find the relevant sources to analyze.

- Define the Unit or Theme of Analysis : Categorize the content into themes. Themes can be a word, phrase, or sentence.

- Develop Rules for Coding your Data : Define a set of coding rules to ensure that all data are coded consistently.

- Code the Data : Follow the coding rules to categorize data into themes.

- Analyze the Results and Draw Conclusions : Examine the data to identify patterns and draw conclusions in relation to your research question.
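The coding steps above can be sketched in a few lines of Python. This is only a minimal illustration, assuming a deliberately simple keyword-based rule set: the themes, keywords, and responses are invented for the example, and real qualitative coding relies on researcher judgment rather than string matching.

```python
from collections import Counter

# Hypothetical coding rules: each theme is signalled by a few keywords.
CODING_RULES = {
    "workload": ["deadline", "overtime", "workload"],
    "support": ["mentor", "colleague", "help"],
}


def code_unit(text, rules=CODING_RULES):
    """Return the sorted list of themes whose keywords appear in one unit of text."""
    lowered = text.lower()
    return sorted(
        theme
        for theme, keywords in rules.items()
        if any(keyword in lowered for keyword in keywords)
    )


def tally_themes(units, rules=CODING_RULES):
    """Apply the same rules to every unit and count how often each theme is coded."""
    counts = Counter()
    for unit in units:
        counts.update(code_unit(unit, rules))
    return counts


# Invented example data standing in for collected responses.
responses = [
    "The deadlines pile up and I work overtime most weeks.",
    "My mentor is a great help when the workload gets heavy.",
    "I mostly enjoy the commute.",
]

theme_counts = tally_themes(responses)
```

Keeping the rules in one shared dictionary mirrors the point of step four: every unit is coded against the same definitions, so the resulting counts are comparable across the dataset.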

Discourse analysis

Discourse analysis is a research method used to study written/ spoken language in relation to its social context (Wood & Kroger, 2000).

In discourse analysis, the researcher interprets details of language materials and the context in which they are situated.

Discourse analysis aims to understand the functions of language (how language is used in real life) and how meaning is conveyed by language in different contexts. Researchers use discourse analysis to investigate social groups and how language is used to achieve specific communication goals.

Different methods of discourse analysis can be used depending on the aims and objectives of a study. However, the following steps provide a guideline on how to conduct discourse analysis.

- Define the Research Question : Develop a relevant research question to frame the analysis.

- Gather Data and Establish the Context : Collect research materials (e.g., interview transcripts, documents). Gather factual details and review the literature to construct a theory about the social and historical context of your study.

- Analyze the Content : Closely examine various components of the text, such as the vocabulary, sentences, paragraphs, and structure of the text. Identify patterns relevant to the research question to create codes, then group these into themes.

- Review the Results : Reflect on the findings to examine the function of the language, and the meaning and context of the discourse.

Thematic analysis

Thematic analysis is a method used to identify, interpret, and report patterns in data, such as commonalities or contrasts.

Although the origin of thematic analysis can be traced back to the early twentieth century, much of the current understanding and clarity of the method is attributed to Braun and Clarke (2006).

Thematic analysis aims to develop themes (patterns of meaning) across a dataset to address a research question.

In thematic analysis, qualitative data is gathered using techniques such as interviews, focus groups, and questionnaires. Audio recordings are transcribed. The dataset is then explored and interpreted by a researcher to identify patterns.

This occurs through the rigorous process of data familiarisation, coding, theme development, and revision. These identified patterns provide a summary of the dataset and can be used to address a research question.

Themes are developed by exploring the implicit and explicit meanings within the data. Two different approaches are used to generate themes: inductive and deductive.

An inductive approach allows themes to emerge from the data. In contrast, a deductive approach uses existing theories or knowledge to apply preconceived ideas to the data.

Phases of Thematic Analysis

Braun and Clarke (2006) provide a guide of the six phases of thematic analysis. These phases can be applied flexibly to fit research questions and data.

Template analysis

Template analysis refers to a specific method of thematic analysis which uses hierarchical coding (Brooks et al., 2014).

Template analysis is used to analyze textual data, for example, interview transcripts or open-ended responses on a written questionnaire.

To conduct template analysis, a coding template must be developed (usually from a subset of the data) and subsequently revised and refined. This template represents the themes identified by researchers as important in the dataset.

Codes are ordered hierarchically within the template, with the highest-level codes demonstrating overarching themes in the data and lower-level codes representing constituent themes with a narrower focus.

A guideline for the main procedural steps for conducting template analysis is outlined below.

- Familiarization with the Data : Read (and reread) the dataset in full. Engage, reflect, and take notes on data that may be relevant to the research question.

- Preliminary Coding : Identify initial codes using guidance from the a priori codes, identified before the analysis as likely to be beneficial and relevant to the analysis.

- Organize Themes : Organize themes into meaningful clusters. Consider the relationships between the themes both within and between clusters.

- Produce an Initial Template : Develop an initial template. This may be based on a subset of the data.

- Apply and Develop the Template : Apply the initial template to further data and make any necessary modifications. Refinements of the template may include adding themes, removing themes, or changing the scope/title of themes.

- Finalize Template : Finalize the template, then apply it to the entire dataset.
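The hierarchical template described above can be pictured as a small tree of codes. The following is only a minimal Python sketch under stated assumptions: the `Code` class, its methods, and every theme name are hypothetical and invented for illustration, not part of any published template analysis procedure or software.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Code:
    """One code in a template; children hold narrower, lower-level codes."""
    label: str
    children: list[Code] = field(default_factory=list)

    def add(self, label: str) -> Code:
        """Attach a lower-level code and return it so nesting can continue."""
        child = Code(label)
        self.children.append(child)
        return child

    def remove(self, label: str) -> None:
        """Drop a direct child code, e.g. when revising the template."""
        self.children = [c for c in self.children if c.label != label]

    def outline(self, depth: int = 0) -> list[str]:
        """Return the template as indented lines, highest level first."""
        lines = ["  " * depth + self.label]
        for child in self.children:
            lines.extend(child.outline(depth + 1))
        return lines


# Hypothetical initial template, as if built from a subset of the data.
template = Code("Template")
barriers = template.add("Barriers to quitting")
barriers.add("Stress")
barriers.add("Social pressure")
support = template.add("Sources of support")
support.add("Family")

# Hypothetical revisions after applying the template to further data.
barriers.add("Cost of alternatives")  # add a theme
barriers.remove("Social pressure")    # remove a theme
support.label = "Support networks"    # change a theme's title

print("\n".join(template.outline()))
```

Keeping the template as a tree makes the three kinds of refinement named in the steps (adding, removing, and retitling themes) explicit, one operation each.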

Framework analysis

Framework analysis is a comparative form of thematic analysis which systematically analyzes data using a matrix output.

Ritchie and Spencer (1994) developed this set of techniques to analyze qualitative data in applied policy research. Framework analysis aims to generate theory from data.

Framework analysis encourages researchers to organize and manage their data using summarization.

This results in a flexible and unique matrix output, in which individual participants (or cases) are represented by rows and themes are represented by columns.

Each intersecting cell is used to summarize findings relating to the corresponding participant and theme.

Framework analysis has five distinct phases which are interrelated, forming a methodical and rigorous framework.

- Familiarization with the Data : Familiarize yourself with all the transcripts. Immerse yourself in the details of each transcript and start to note recurring themes.

- Develop a Theoretical Framework : Identify recurrent or important themes and add them to a chart. Provide a framework or structure for the analysis.

- Indexing : Apply the framework systematically to the entire study data.

- Summarize Data in Analytical Framework : Reduce the data into brief summaries of participants’ accounts.

- Mapping and Interpretation : Compare themes and subthemes and check against the original transcripts. Group the data into categories and provide an explanation for them.
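The matrix output described in this section (participants as rows, themes as columns, a summary in each cell) can be sketched with plain Python dictionaries. This is an illustrative sketch only: the participant IDs, theme names, summaries, and the helper functions `read_column` and `read_row` are all hypothetical.

```python
# Hypothetical themes and participants, invented for illustration.
themes = ["Motivation to quit", "Barriers", "Support"]
participants = ["P01", "P02", "P03"]

# Start with an empty matrix: one row per participant, one column per theme.
matrix = {p: {t: "" for t in themes} for p in participants}

# Summarizing: write a brief summary into the cell for a participant and theme.
matrix["P01"]["Barriers"] = "Smokes mainly when stressed at work"
matrix["P02"]["Barriers"] = "Partner smokes at home"
matrix["P02"]["Support"] = "Attends a weekly cessation clinic"


def read_column(matrix, theme):
    """Read down one theme to compare participants; empty cells are skipped."""
    return {p: cells[theme] for p, cells in matrix.items() if cells[theme]}


def read_row(matrix, participant):
    """Read across one participant to see their account theme by theme."""
    return {t: s for t, s in matrix[participant].items() if s}


print(read_column(matrix, "Barriers"))
print(read_row(matrix, "P02"))
```

Reading down a column supports comparison across participants, while reading across a row keeps each participant's account together, which is the point of the matrix layout.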

Preventing Bias in Qualitative Research

To evaluate qualitative studies, the CASP (Critical Appraisal Skills Programme) checklist for qualitative studies can be used to ensure all aspects of a study have been considered (CASP, 2018).

The quality of research can be enhanced and assessed using criteria such as checklists, reflexivity, co-coding, and member-checking.

Co-coding

Relying on only one researcher to interpret rich and complex data may risk key insights and alternative viewpoints being missed. Therefore, coding is often performed by multiple researchers.

A common strategy must be defined at the beginning of the coding process (Busetto et al., 2020). This includes establishing a useful coding list and finding a common definition of individual codes.

Transcripts are initially coded independently by researchers and then compared and consolidated to minimize error or bias and to bring confirmation of findings.
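The compare-and-consolidate step can be sketched as follows. This is a minimal illustration, assuming each researcher's coding is stored as a set of code labels per transcript segment; the segment IDs, code labels, and the `compare` function are hypothetical, and the simple share of identically coded segments is just one possible way to summarize agreement.

```python
# Hypothetical codes applied independently by two researchers to the same segments.
coder_a = {
    "seg1": {"stress"},
    "seg2": {"family support"},
    "seg3": {"cost", "stress"},
    "seg4": {"habit"},
}
coder_b = {
    "seg1": {"stress"},
    "seg2": {"peer pressure"},
    "seg3": {"cost", "stress"},
    "seg4": {"habit", "stress"},
}


def compare(a, b):
    """Split shared segments into those coded identically and those to discuss."""
    agreed, to_discuss = [], {}
    for seg in sorted(a.keys() & b.keys()):
        if a[seg] == b[seg]:
            agreed.append(seg)
        else:
            # Record what each coder applied that the other did not.
            to_discuss[seg] = {
                "only_a": a[seg] - b[seg],
                "only_b": b[seg] - a[seg],
            }
    return agreed, to_discuss


agreed, to_discuss = compare(coder_a, coder_b)
share_agreed = len(agreed) / (len(agreed) + len(to_discuss))

print("Coded identically:", agreed)
print("To discuss:", to_discuss)
print("Share coded identically:", share_agreed)
```

The list of segments to discuss matters more here than the agreement figure: it gives the researchers a concrete agenda for consolidating their codes.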

Member checking

Member checking (or respondent validation) involves checking back with participants to see if the research resonates with their experiences (Russell & Gregory, 2003).

Data can be returned to participants after data collection or when results are first available. For example, participants may be provided with their interview transcript and asked to verify whether this is a complete and accurate representation of their views.

Participants may then clarify or elaborate on their responses to ensure they align with their views (Shenton, 2004).

This feedback becomes part of data collection and ensures accurate descriptions and interpretations of phenomena (Mays & Pope, 2000).

Reflexivity in qualitative research

Reflexivity typically involves examining your own judgments, practices, and belief systems during data collection and analysis. It aims to identify any personal beliefs which may affect the research.

Reflexivity is essential in qualitative research to ensure methodological transparency and complete reporting. This enables readers to understand how the interaction between the researcher and participant shapes the data.

Depending on the research question and population being researched, factors that need to be considered include the experience of the researcher, how the contact was established and maintained, age, gender, and ethnicity.

These details are important because, in qualitative research, the researcher is a dynamic part of the research process and actively influences the outcome of the research (Boeije, 2014).

Reflexivity Example

Who you are and your characteristics influence how you collect and analyze data. Here is an example of a reflexivity statement for research on smoking:

“I am a 30-year-old white female from a middle-class background. I live in the southwest of England and have been educated to master’s level. I have been involved in two research projects on oral health. I have never smoked, but I have witnessed how smoking can cause ill health from my volunteering in a smoking cessation clinic. My research aspirations are to help to develop interventions to help smokers quit.”

Establishing Trustworthiness in Qualitative Research

Trustworthiness is a concept used to assess the quality and rigor of qualitative research. Four criteria are used to assess a study’s trustworthiness: credibility, transferability, dependability, and confirmability.

Credibility in Qualitative Research

Credibility refers to how accurately the results represent the reality and viewpoints of the participants.

To establish credibility in research, participants’ views and the researcher’s representation of their views need to align (Tobin & Begley, 2004).

To increase the credibility of findings, researchers may use data source triangulation, investigator triangulation, peer debriefing, or member checking (Lincoln & Guba, 1985).

Transferability in Qualitative Research

Transferability refers to how generalizable the findings are: whether the findings may be applied to another context, setting, or group (Tobin & Begley, 2004).

Transferability can be enhanced by giving thorough and in-depth descriptions of the research setting, sample, and methods (Nowell et al., 2017).

Dependability in Qualitative Research

Dependability is the extent to which the study could be replicated under similar conditions and the findings would be consistent.

Researchers can establish dependability using methods such as audit trails so readers can see the research process is logical and traceable (Koch, 1994).

Confirmability in Qualitative Research

Confirmability is concerned with establishing that there is a clear link between the researcher’s interpretations and findings and the data.

Researchers can achieve confirmability by demonstrating how conclusions and interpretations were arrived at (Nowell et al., 2017).

This enables readers to understand the reasoning behind the decisions made.

Audit Trails in Qualitative Research

An audit trail provides evidence of the decisions made by the researcher regarding theory, research design, and data collection, as well as the steps they have chosen to manage, analyze, and report data.

The researcher must provide a clear rationale to demonstrate how conclusions were reached in their study.

A clear description of the research path must be provided to enable readers to trace through the researcher’s logic (Halpern, 1983).

Researchers should maintain records of the raw data, field notes, transcripts, and a reflective journal in order to provide a clear audit trail.
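One lightweight way to keep such records traceable is an append-only decision log. The sketch below is an assumption about how this might be organized, not a prescribed format: the `Decision` fields, the `record` and `trace` helpers, and all dates and entries are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    """One entry in the audit trail; frozen so entries are not edited later."""
    date: str       # ISO date string, e.g. "2024-01-10"
    stage: str      # e.g. "design", "data collection", "analysis"
    decision: str
    rationale: str


audit_trail = []


def record(date, stage, decision, rationale):
    """Append a decision with its rationale and return the new entry."""
    entry = Decision(date, stage, decision, rationale)
    audit_trail.append(entry)
    return entry


def trace(stage):
    """Return all recorded decisions for one stage, in the order they were made."""
    return [e for e in audit_trail if e.stage == stage]


# Hypothetical entries, invented for illustration.
record("2024-01-10", "design", "Use semi-structured interviews",
       "Allows probing of unexpected topics")
record("2024-02-02", "analysis", "Merge codes 'stress' and 'anxiety'",
       "Co-coders could not distinguish them consistently")
record("2024-02-09", "analysis", "Add theme 'Support networks'",
       "Recurred across later transcripts")

for entry in trace("analysis"):
    print(entry.date, "|", entry.decision, "|", entry.rationale)
```

Pairing every decision with its rationale at the time it is made is what lets a reader later follow the researcher's logic step by step.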

Strengths

Discovery of unexpected data

Open-ended questions in qualitative research mean the researcher can probe an interview topic and enable the participant to elaborate on responses in an unrestricted manner.

This allows unexpected data to emerge, which can lead to further research into that topic.

The exploratory nature of qualitative research helps generate hypotheses that can be tested quantitatively (Busetto et al., 2020).

Flexibility

Data collection and analysis can be modified and adapted to take the research in a different direction if new ideas or patterns emerge in the data.

This enables researchers to investigate new opportunities while firmly maintaining their research goals.

Naturalistic settings

The behaviors of participants are recorded in real-world settings. Studies that use real-world settings have high ecological validity since participants behave more authentically.

Limitations

Time-consuming

Qualitative research results in large amounts of data which often need to be transcribed and analyzed manually.

Even when software is used, transcription can be inaccurate, and using software for analysis can result in many codes which need to be condensed into themes.

Subjectivity

The researcher has an integral role in collecting and interpreting qualitative data. Therefore, the conclusions reached are from their perspective and experience.

Consequently, interpretations of data from another researcher may vary greatly.

Limited generalizability

The aim of qualitative research is to provide a detailed, contextualized understanding of an aspect of the human experience from a relatively small sample size.

Despite rigorous analysis procedures, conclusions drawn cannot be generalized to the wider population since data may be biased or unrepresentative.

Therefore, results are only applicable to a small group of the population.

Extraneous variables

Qualitative research is often conducted in real-world settings. This may cause results to be unreliable since extraneous variables may affect the data, for example:

- Situational variables : different environmental conditions may influence participants’ behavior in a study. The random variation in factors (such as noise or lighting) may be difficult to control in real-world settings.

- Participant characteristics : this includes any characteristics that may influence how a participant answers or behaves in a study, such as a participant’s mood, gender, age, ethnicity, sexual identity, or IQ.

- Experimenter effect : experimenter effect refers to how a researcher’s unintentional influence can change the outcome of a study. This occurs when (i) their interactions with participants unintentionally change participants’ behaviors or (ii) they make errors in observation, interpretation, or analysis.

What sample size should qualitative research be?

A minimum of 12 participants has been recommended for qualitative studies to reach data saturation (Braun, 2013).

Are surveys qualitative or quantitative?

Surveys can be used to gather information from a sample qualitatively or quantitatively. Qualitative surveys use open-ended questions to gather detailed information from a large sample using free text responses.

The use of open-ended questions allows for unrestricted responses where participants use their own words, enabling the collection of more in-depth information than closed-ended questions.

In contrast, quantitative surveys consist of closed-ended questions with multiple-choice answer options. Quantitative surveys are ideal to gather a statistical representation of a population.

What are the ethical considerations of qualitative research?

Before conducting a study, you must think about any risks that could occur and take steps to prevent them.

- Participant Protection : Researchers must protect participants from physical and mental harm. This means you must not embarrass, frighten, offend, or harm participants.

- Transparency : Researchers are obligated to clearly communicate how they will collect, store, analyze, use, and share the data.

- Confidentiality : You need to consider how to maintain the confidentiality and anonymity of participants’ data.

What is triangulation in qualitative research?

Triangulation refers to the use of several approaches in a study to comprehensively understand phenomena. This method helps to increase the validity and credibility of research findings.

Types of triangulation include method triangulation (using multiple methods to gather data), investigator triangulation (using multiple researchers to collect or analyze data), theory triangulation (comparing several theoretical perspectives to explain a phenomenon), and data source triangulation (using data from various times, locations, and people) (Carter et al., 2014).

Why is qualitative research important?

Qualitative research allows researchers to describe and explain the social world. The exploratory nature of qualitative research helps to generate hypotheses that can then be tested quantitatively.

In qualitative research, participants are able to express their thoughts, experiences, and feelings without constraint.

Additionally, researchers are able to follow up on participants’ answers in real-time, generating valuable discussion around a topic. This enables researchers to gain a nuanced understanding of phenomena which is difficult to attain using quantitative methods.

What is coding data in qualitative research?

Coding data is a qualitative data analysis strategy in which a section of text is assigned a label that describes its content.

These labels may be words or phrases which represent important (and recurring) patterns in the data.

This process enables researchers to identify related content across the dataset. Codes can then be used to group similar types of data to generate themes.
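As a rough illustration of this labeling and grouping, the sketch below assigns code labels to text excerpts, gathers the excerpts under each code, and then groups codes under broader themes. All excerpts, code labels, and theme names are hypothetical, and in practice the mapping from codes to themes is an interpretive judgment rather than a fixed lookup.

```python
from collections import defaultdict

# Hypothetical coded segments: (text excerpt, code label).
coded_segments = [
    ("I light up whenever work gets too much", "stress"),
    ("My sister keeps checking in on me", "family support"),
    ("Deadlines make me reach for a cigarette", "stress"),
    ("The clinic nurse calls every week", "professional support"),
]

# Hypothetical mapping from codes to broader themes.
code_to_theme = {
    "stress": "Triggers",
    "family support": "Support",
    "professional support": "Support",
}

# Gather related content across the dataset under each code.
by_code = defaultdict(list)
for text, code in coded_segments:
    by_code[code].append(text)

# Group similar codes together to form themes.
by_theme = defaultdict(list)
for code in by_code:
    by_theme[code_to_theme[code]].append(code)

for theme, codes in by_theme.items():
    print(theme, "->", codes)
```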

What is the difference between qualitative and quantitative research?

Qualitative research involves the collection and analysis of non-numerical data in order to understand experiences and meanings from the participant’s perspective.

This can provide rich, in-depth insights on complicated phenomena. Qualitative data may be collected using interviews, focus groups, or observations.

In contrast, quantitative research involves the collection and analysis of numerical data to measure the frequency, magnitude, or relationships of variables. This can provide objective and reliable evidence that can be generalized to the wider population.

Quantitative data may be collected using closed-ended questionnaires or experiments.

What is trustworthiness in qualitative research?