What Are The Key Skills Every Data Analyst Needs?

On the job, a data analyst uses a wide range of skills every day, from in-depth analysis to data visualization and storytelling. One minute you’ll be writing an SQL query to explore a data set; the next, you’ll be standing in front of a board of directors explaining how the business needs to adapt based on your findings.

Let’s take a look at the key skills associated with being a data analyst. You probably already have some of them, since they span communication, analytics, and problem solving.

Want to pick up some data analytics skills from scratch, for free? Try out CareerFoundry’s 5-day data short course to see if it’s for you!

Here are the key data analyst skills you need:

- Excellent problem-solving skills

- Solid numerical skills

- Excel proficiency and knowledge of querying languages

- Expertise in data visualization

- Great communication skills

- Key takeaways

1. Excellent problem-solving skills

Problem solving is one of the most important data analyst skills you can have. Around 90% of analytics is about critical thinking and knowing the right questions to ask.

If the questions you ask are grounded in knowledge of the business, the product, and the industry, you’ll get the answers you need. Data analysis is about being presented with a problem (e.g., “why aren’t we selling more red bikes?”) and carrying out the investigative tasks needed to find the answer.

Data analytics is largely about thinking logically through the problems you encounter. You’ll reach the right conclusions more quickly if you’re familiar with the challenges and nuances of the data. If red bikes aren’t selling well, why could this be? Is it because other colors come in larger ranges? Are red bikes typically priced higher than other bikes? Are red bikes only available as mountain bikes, discouraging city dwellers from buying them? Data analysts draw conclusions faster by applying logic to understand the data.

2. Solid numerical skills

Many data analysts don’t come from the world of numbers—often, they come from a business or marketing background. It’s perfectly possible to grow your knowledge of this area as you go. While not necessarily a ‘skill’, an aptitude for numbers is certainly a good thing for any aspiring data analyst to have.

You’re going to need to bring a level of numerical expertise to the role, either from formal education or other experience. You can learn most of the numerical data analyst skills—such as regression analysis, which involves examining two or more variables and their relationships—without having to go back to school.
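As a rough illustration of regression analysis, the sketch below fits a straight line to two variables with ordinary least squares in Python. The function name `fit_line` and the price and sales figures are hypothetical, made up for the example rather than taken from any real data set.

```python
from statistics import mean


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares: return (slope, intercept) of y = intercept + slope * x."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    x_bar, y_bar = mean(xs), mean(ys)
    # Covariance-style and variance-style sums around the means.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical data: price of a bike model vs. units sold per month.
prices = [300.0, 400.0, 500.0, 600.0]
units = [50.0, 42.0, 35.0, 25.0]
slope, intercept = fit_line(prices, units)
print(f"units ~ {intercept:.1f} + ({slope:.3f} * price)")
```

A negative slope here would suggest that, in this made-up data, higher prices go with fewer sales; it says nothing on its own about cause and effect.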

Having a thorough grounding in statistics is also beneficial: you can start by learning about descriptive and inferential statistics and work up from there. You’re going to need an appreciation for queries, which are commands that instruct a computer to perform tasks. In analytics, these commands are used to extract information from data sets. Brushing up on your knowledge of applied science and linear algebra is going to make things easier for you, although don’t be put off if this is all a mystery to you.
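To illustrate the difference between the two branches, this minimal Python sketch first describes a sample (mean, median, standard deviation) and then makes an inferential statement about the wider population: an approximate 95% confidence interval for the mean. It assumes a normal approximation, which is a simplification; for a sample this small, a t-distribution would usually be more appropriate. The order values are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist, mean, median, stdev


def describe(values: list[float]) -> dict[str, float]:
    """Descriptive statistics: summarize the sample itself."""
    return {"mean": mean(values), "median": median(values), "stdev": stdev(values)}


def mean_confidence_interval(values: list[float], confidence: float = 0.95) -> tuple[float, float]:
    """Inferential statistics: approximate confidence interval for the population mean.

    Uses a normal (z) approximation; a t-distribution is usually preferred for small samples.
    """
    m = mean(values)
    standard_error = stdev(values) / sqrt(len(values))
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return m - z * standard_error, m + z * standard_error


order_values = [120.0, 95.0, 130.0, 110.0, 105.0, 140.0, 100.0, 115.0]  # hypothetical
print(describe(order_values))
low, high = mean_confidence_interval(order_values)
print(f"Approximate 95% CI for the mean order value: {low:.1f} to {high:.1f}")
```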

3. Excel proficiency and knowledge of querying languages

As we mentioned earlier, knowledge of Microsoft Excel is an essential data analyst skill for working effectively.

It’s a spreadsheet program used by millions of people around the world to store and share information, perform mathematical and statistical operations and create reports and visualizations that summarize important findings. For data analysts, it’s a powerful tool for quickly accessing, organizing, and manipulating data to derive and share insights.

Data analysts work with Excel every day, so you’re going to have to really know your VLOOKUP from your pivot tables. Want to find out where the red bikes sell the most? Curious as to whether the average price of red bikes is higher than blue bikes? Excel can help provide answers to these kinds of questions.
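A pivot table essentially groups rows and aggregates a value per group. As a sketch of the same idea outside Excel, the Python below answers the two red-bike questions on a small, made-up list of sales records; the helper names `units_by_region` and `average_price` are hypothetical.

```python
from collections import defaultdict

# Hypothetical sales records: (region, color, unit_price, units_sold)
sales = [
    ("North", "red", 450.0, 10),
    ("North", "blue", 400.0, 25),
    ("South", "red", 470.0, 30),
    ("South", "blue", 390.0, 20),
    ("West", "red", 440.0, 5),
]


def units_by_region(records, color):
    """Like a pivot table: rows = region, value = sum of units, filtered by color."""
    totals = defaultdict(int)
    for region, col, _price, units in records:
        if col == color:
            totals[region] += units
    return dict(totals)


def average_price(records, color):
    """Unweighted mean of the listed prices for one color."""
    prices = [price for _region, col, price, _units in records if col == color]
    return sum(prices) / len(prices)


red_units = units_by_region(sales, "red")
print(max(red_units, key=red_units.get))  # region where red bikes sell the most
print(average_price(sales, "red") > average_price(sales, "blue"))
```

Weighting the average by units sold would be a reasonable alternative, depending on the question being asked.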

As well as Excel, analysts need to be familiar with at least one querying language. These languages are used to instruct computers to do specific tasks, including many related to the analysis of data. The most popular languages for data analysis are SQL and SAS. For a good introduction to SQL, try this cheatsheet. Programming languages such as Python and R also have a wide variety of powerful libraries dedicated to analyzing data.
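To give a feel for SQL, here is a small example run through Python’s built-in `sqlite3` module against an in-memory database. The `sales` table and its rows are hypothetical; the query asks where red bikes sell the most, the same question posed for Excel earlier.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.execute("CREATE TABLE sales (region TEXT, color TEXT, units INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("North", "red", 10), ("South", "red", 30), ("South", "red", 5),
     ("West", "red", 12), ("North", "blue", 40)],
)

# Total red-bike units per region, best-selling region first.
query = """
    SELECT region, SUM(units) AS total_units
    FROM sales
    WHERE color = ?
    GROUP BY region
    ORDER BY total_units DESC
"""
rows = conn.execute(query, ("red",)).fetchall()
print(rows)
conn.close()
```

The `?` placeholder passes the color as a parameter instead of pasting it into the SQL string, which is the safer habit when values come from user input.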

Many of the available languages perform different functions or are geared toward particular industries. SAS, for example, is widely used in healthcare and the life sciences, whereas SQL is often used for retrieving data from databases. If you have an idea of the industry you’d like to work in, it’s worth researching which languages it uses; tailoring your learning to the sector(s) you’re most interested in is a clever move.

4. Expertise in data visualization

It’s difficult to take a complicated topic and present findings in a simple way, but that’s precisely the job of the data analyst!

It’s all about turning your findings into easily digestible chunks of information. Telling a compelling story with your data is crucial, and so much of this involves the use of visual aids. Graphs and pie charts are a popular and extremely effective means of illustrating data findings.

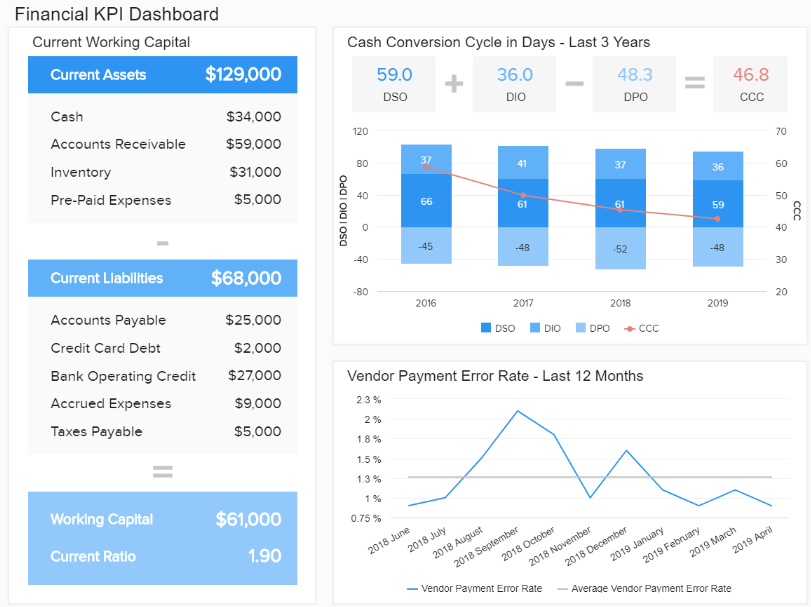

Both Microsoft Excel and Tableau boast plenty of options for visualizing data, enabling you to present findings in an accurate way. This data analyst skill lies in knowing how best to present the data, so that your findings speak for themselves. There’s something of a tendency among tech professionals to speak in complex and esoteric terms, but to be a good data analyst is to communicate findings easily and effectively through simple visualizations.

5. Great communication skills

As well as being able to visualize your findings accurately, data analysts must be able to communicate findings verbally. Data analysts work constantly with stakeholders, colleagues, and data suppliers, so good communication is an essential data analyst skill. How good are you at talking to people? Can you break down technical information into simple words? This is a crucial skill that goes hand in hand with data visualization: it’s all in the delivery!

You’ll often need to present your findings to an audience that might not be familiar with your analytical methods and processes. The job of the data analyst is to clearly translate findings into non-technical terms. Your audience wants to hear your findings in ways that relate to their own roles. The bike designer is interested in hearing which designs of the red bike aren’t selling well, and whether customers are choosing not to buy a certain design in red.

The marketing manager wants to know if red bikes aren’t selling well in a certain country and whether sales have been affected by a lack of marketing spend. The product manager wants to know if there is a general shift in popularity towards fixed-gear bikes, and whether the drop in red bike sales is likely to last. It’s crucial that data analysts take their audience into consideration.

Key takeaways

- Data analysts aren’t one-trick ponies! They have a broad skillset incorporating a wide range of data analytics skills.

- A head for math and statistics is core to the work of a data analyst.

- As well as robust Excel knowledge, a good command of at least one querying or programming language is required to carry out effective data analysis.

- Having the ability to effectively ask “what does this mean?” and “what impact could this have on something else?” is an essential part of analyzing data.

- Similarly, possessing the ability to communicate your findings both visually and verbally is crucial to the role of the data analyst.

- Data analytics is a hands-on field; get a taste of what it’s like in this free introductory short course.

So you’ve now learned about the main data analyst skills. If that’s made you curious to learn more, our data analytics blog contains more related articles about working in the field. And, if you’re keen to find out how to become a data analyst, check out this guide.

8 of 10 chapters available

Solve Any Data Analysis Problem

- MEAP began November 2023

- Publication in Fall 2024 ( estimated )

- ISBN 9781633437531

- 325 pages (estimated)

- printed in black & white

- eBook pdf, ePub, online

- print includes eBook


- High-value skills to tackle specific analytical problems

- Deconstructing problems for faster, practical solutions

- Data modeling, PDF data extraction, and categorical data manipulation

- Handling vague metrics, deciphering inherited projects, and defining customer records


How to analyze a problem

May 7, 2023 Companies that harness the power of data have the upper hand when it comes to problem solving. Rather than defaulting to solving problems by developing lengthy—sometimes multiyear—road maps, they’re empowered to ask how innovative data techniques could resolve challenges in hours, days or weeks, write senior partner Kayvaun Rowshankish and coauthors. But when organizations have more data than ever at their disposal, which data should they leverage to analyze a problem? Before jumping in, it’s crucial to plan the analysis, decide which analytical tools to use, and ensure rigor. Check out these insights to uncover ways data can take your problem-solving techniques to the next level, and stay tuned for an upcoming post on the potential power of generative AI in problem-solving.

The data-driven enterprise of 2025

How data can help tech companies thrive amid economic uncertainty

How to unlock the full value of data? Manage it like a product

Data ethics: What it means and what it takes

Author Talks: Think digital

Five insights about harnessing data and AI from leaders at the frontier

Real-world data quality: What are the opportunities and challenges?

How a tech company went from moving data to using data: An interview with Ericsson’s Sonia Boije

Harnessing the power of external data

5 Reasons Why Data Analytics is Important in Problem Solving

Data analytics is a key sub-branch of data science, and it is important in problem solving. Even though data analytics has countless applications in business, one of the most crucial roles it plays is in problem solving.

Using data analytics not only strengthens your problem-solving skills but also makes problem solving faster and more efficient, by automating many of the long, repetitive processes involved.

Whether you’re a recent university graduate or a professional working for an organization, top-notch problem-solving skills are a necessity and always come in handy.

Everyone faces new kinds of complex problems every day, and overcoming these obstacles takes a lot of time. Valuable time is also lost trying to find solutions to unexpected problems, which often disrupt your plans.

This is where data analytics comes in. It lets you find and analyze the relevant data with far less manual effort. It’s a real time-saver and has become a necessity in problem solving. So if you don’t already use data analytics to solve these problems, you’re probably missing out on a lot!

As the chief analytics officer of TIBCO puts it:

“Think analytically, rigorously, and systematically about a business problem and come up with a solution that leverages the available data.”

– Michael O’Connell.

In this article, I will explain the importance of data analytics in problem-solving and go through the top 5 reasons why it cannot be ignored. So, let’s dive into it right away.


What is Data Analytics?

Data analytics is the practice of using automated processes and algorithms to collect raw data from multiple sources and transform it. The result is data that’s ready to be studied and used for analytical purposes, such as finding trends and patterns.

Why is Data Analytics Important in Problem Solving?

Problem-solving and data analytics often proceed hand in hand. When a particular problem is faced, everybody’s first instinct is to look for supporting data. Data analytics plays a pivotal role in finding this data and analyzing it to be used for tackling that specific problem.

Although the analytical part can add complexity, since it’s a separate process that can be challenging in its own right, it eventually helps you get a better hold of the situation.

You also arrive at a more informed solution, without leaving anything out of the equation.

Strong analytical skills help you dig deeper into the problem and get all the insights you need. Once you have extracted enough relevant knowledge, you can proceed with solving the problem.

However, you need to make sure you’re using the right data, and complete data, or data analytics may even backfire on you. Misleading data can make you believe things that aren’t true, and that’s bound to take you off track, making the problem appear more complex or simpler than it is.
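One practical safeguard is to check completeness before analyzing anything. This minimal Python sketch counts, for each required field, how many records are missing a value (treating `None` and empty strings as missing). The field names and records are hypothetical, and real data-quality checks would usually also cover duplicates, valid ranges, and consistency across sources.

```python
def completeness_report(records: list[dict], required_fields: list[str]) -> dict[str, int]:
    """Count, for each required field, how many records lack a usable value."""
    missing = {field: 0 for field in required_fields}
    for record in records:
        for field in required_fields:
            if record.get(field) in (None, ""):  # absent, None, or empty string
                missing[field] += 1
    return missing


# Hypothetical customer records with a couple of gaps.
records = [
    {"customer_id": "C1", "region": "North", "revenue": 120.0},
    {"customer_id": "C2", "region": "", "revenue": 80.0},
    {"customer_id": "C3", "region": "South", "revenue": None},
]
report = completeness_report(records, ["customer_id", "region", "revenue"])
print(report)
```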

Consider a straightforward everyday example of the importance of data analytics in problem solving: what would you do if a question on your exam didn’t provide enough data for you to solve it?

Obviously, you won’t be able to solve that problem. You need a certain level of facts and figures about the situation first, or you’ll be wandering in the dark.

However, once you get the information you need, you can analyze the situation and quickly develop a solution, and the more you know about the situation, the easier the problem becomes to solve. This is precisely how data analytics helps you: it eases the process of collecting information and processing it to solve real-life problems.

5 Reasons Why Data Analytics Is Important in Problem Solving

Now that we’ve established a general idea of how strongly connected analytical skills and problem-solving are, let’s dig deeper into the top 5 reasons why data analytics is important in problem-solving.

1. Uncover Hidden Details

Data analytics is great at putting minor details in the spotlight. Sometimes even the most qualified data scientists might not spot tiny details in the data used to solve a certain problem. Computers, however, apply the same checks to every record, so they are much less likely to miss such details. This enhances your ability to solve problems, and you may be able to come up with solutions a lot quicker.

Data analytics tools have a wide variety of built-in features that let you study the given data thoroughly and catch hidden or recurring trends with little manual effort. Many of these tools are highly automated and require very little programming to work. They’re great at excavating the depths of data, including historical data going back a long way.
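As a simple example of the kind of automated check such tools run, the sketch below flags values whose z-score (distance from the mean in standard deviations) exceeds a threshold. The daily order counts are made up, and the threshold of 2 is an arbitrary assumption; in a sample of only eight points, a single outlier can never exceed a z-score of about 2.47, so a stricter cutoff such as 3 would flag nothing here.

```python
from statistics import mean, stdev


def flag_outliers(values: list[float], threshold: float = 2.0) -> list[int]:
    """Return indexes of values more than `threshold` standard deviations from the mean."""
    m, s = mean(values), stdev(values)
    if s == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - m) / s > threshold]


daily_orders = [102, 98, 101, 97, 250, 99, 103, 100]  # hypothetical counts
print(flag_outliers(daily_orders))  # [4]
```

Flagging the value is only the first step; an analyst still has to find out whether day 4 was a data-entry error, a promotion, or something genuinely new.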

2. Automated Models

Automation is the future. Businesses have neither the time nor the budget to let manual workforces go through tons of data to solve business problems.

Instead, they hire data analysts who automate problem-solving processes; once that’s done, routine problem solving can run largely without human intervention.

The tools can collect, combine, clean, and transform the relevant data by themselves and finally use it to predict solutions. Pretty impressive, right?
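The collect, combine, clean, transform, and predict stages can be chained into one automated pipeline. This is a minimal Python sketch under stated assumptions: the two data sources are hard-coded stand-ins, cleaning only removes exact duplicate rows and parses numbers, and the “prediction” is a naive moving average rather than a machine-learning model.

```python
from collections import defaultdict
from statistics import mean


def collect():
    """Hypothetical raw rows from two sources: (source, month, units as text)."""
    online = [("online", "2024-01", " 120"), ("online", "2024-02", "135"),
              ("online", "2024-03", "150")]
    stores = [("store", "2024-01", "80"), ("store", "2024-02", "85"),
              ("store", "2024-02", "85"),  # accidental duplicate row
              ("store", "2024-03", "90")]
    return [online, stores]


def combine(sources):
    """Merge all sources into a single list of rows."""
    return [row for source in sources for row in source]


def clean(rows):
    """Drop exact duplicate rows (assumed to be errors) and parse units as integers."""
    seen, cleaned = set(), []
    for source, month, units in rows:
        key = (source, month, units.strip())
        if key not in seen:
            seen.add(key)
            cleaned.append((source, month, int(units.strip())))
    return cleaned


def transform(rows):
    """Total units per month, in chronological order."""
    totals = defaultdict(int)
    for _source, month, units in rows:
        totals[month] += units
    return [totals[m] for m in sorted(totals)]


def predict(series, window=3):
    """Naive forecast: the average of the last `window` observations."""
    return mean(series[-window:])


monthly = transform(clean(combine(collect())))
print(monthly, predict(monthly))
```

Keeping each stage as its own function makes it easy to swap in a better cleaning rule or forecasting model later without touching the rest of the pipeline.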

However, complex problems may appear now and then that algorithms cannot handle, because they’re completely new and nothing similar has come up before. Still, algorithms do much of the work, and it’s only once in a blue moon that they face something that rare.

There’s one more thing to note: automating the process by designing complex analytical and ML algorithms can be challenging at first. Many factors need to be kept in mind, and many different scenarios may occur. But once the system is up and running, you’ll save a significant amount of manpower and resources.

3. Explore Similar Problems

If you’re using a data analytics approach to solve your problems, you will have a lot of data at your disposal. Much of it can help you indirectly in the form of similar problems; you only have to figure out how these problems are related.

Once you’re there, the process gets a lot smoother, because you get references to how such problems were tackled in the past.

Such data is widely available, including on the internet, and data analytics tools can help extract the parts relevant to your current problem. People run into difficulties all over the world, and there’s no harm in following the lead of someone who has been in a similar situation before.

Even though exploring similar problems is also possible without data analytics, we generate a huge amount of data nowadays, and searching through it manually isn’t as easy as you might think. Using analytical tools is the smart choice, since they’re fast and will save you a lot of time.
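One simple way to surface similar past problems is to compare their descriptions by word overlap. The sketch below uses Jaccard similarity (shared words divided by total distinct words) to find the closest match in a hypothetical list of past cases. Production tools typically rely on richer text representations, so treat this only as an illustration of the idea.

```python
def tokens(text: str) -> set[str]:
    """Lower-case a description and split it into a set of words."""
    return set(text.lower().split())


def jaccard(a: set[str], b: set[str]) -> float:
    """Shared words divided by all distinct words; 0.0 if both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def most_similar(query: str, past_cases: list[str]) -> str:
    """Return the past case whose description overlaps most with the query."""
    q = tokens(query)
    return max(past_cases, key=lambda case: jaccard(q, tokens(case)))


# Hypothetical descriptions of previously solved problems.
past_cases = [
    "red bike sales dropped in city stores",
    "delivery delays in the north warehouse",
    "call center resolution times increased",
]
print(most_similar("blue bike sales dropped in suburban stores", past_cases))
```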

4. Predict Future Problems

We’ve already seen that data analytics tools let you analyze data from the past and use it to predict solutions to the problems you’re facing in the present. It also works the other way around.

Whenever you use data analytics to solve a present problem, the data related to that problem, and the solution you reached, can be stored. That way, similar problems faced in the future don’t need to be analyzed from scratch: you can reuse previous solutions, or models can predict solutions for you even if the problems have evolved a bit.

This way, you’re not wasting time on problems that recur. You can jump directly to the solution whenever you face a familiar situation, which makes the job much simpler.
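Reusing earlier results is essentially caching. As a minimal sketch, Python’s `functools.lru_cache` can store the result of an analysis so that an identical problem is not recomputed; `analyze` and its problem key are hypothetical placeholders for a real, expensive analysis. This cache lives only in memory for the life of the program and matches exact repeats only, so persisting results or matching problems that have “evolved a bit” would need more machinery.

```python
from functools import lru_cache

analysis_runs = 0  # counts how often the expensive analysis actually executes


@lru_cache(maxsize=None)
def analyze(problem_key: str) -> str:
    """Hypothetical stand-in for an expensive analysis of a recurring problem."""
    global analysis_runs
    analysis_runs += 1
    return f"recommended fix for {problem_key}"


first = analyze("late deliveries: north region")
second = analyze("late deliveries: north region")  # served from the cache
print(first == second, analysis_runs)  # True 1
```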

5. Faster Data Extraction

Data extraction has traditionally been slow, manual work. With the latest tools, however, the manual effort involved is greatly reduced, and much of the work is done automatically.

Moreover, once the appropriate data is mined and cleaned, few hurdles remain, and the rest of the process runs without much delay.

When businesses come across a problem, an estimated 70% to 80% of their time is consumed gathering the relevant data and transforming it into usable forms. So you can imagine how much quicker the process could get if data analytics tools automate this work.
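To estimate the speed-up, you can treat data preparation as a share of the total effort and ask how much of that share automation removes. The Python sketch below does that arithmetic. The 75% share sits within the 70% to 80% range quoted above, while the 80% reduction is purely an assumed figure for illustration.

```python
def remaining_time_fraction(prep_share: float, prep_reduction: float) -> float:
    """Fraction of the original project time left after automating data prep.

    prep_share: share of total time spent gathering and preparing data.
    prep_reduction: fraction of that preparation time removed by automation.
    """
    if not (0 <= prep_share <= 1 and 0 <= prep_reduction <= 1):
        raise ValueError("both arguments must be between 0 and 1")
    untouched = 1 - prep_share  # analysis work that automation doesn't affect
    remaining_prep = prep_share * (1 - prep_reduction)
    return untouched + remaining_prep


fraction = remaining_time_fraction(prep_share=0.75, prep_reduction=0.8)
print(f"{fraction:.0%} of the original time")  # 40% of the original time
print(remaining_time_fraction(0.75, 1.0))      # 0.25
```

The second call shows a useful limit: even perfect automation of data preparation leaves the remaining 25% of the work untouched.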

Even though many of the tools are open source, if you’re a larger organization that can spend a bit on paid tools, problem solving can get even better. Paid tools are real workhorses: in addition to preparing the data, they can also build models for your problems (unless a problem is very complex) with little support from data analysts.

What problems can data analytics solve? 3 Real-World Examples

Employee Performance Problems

Imagine a call center with over 100 agents.

By analyzing data on employee attendance, productivity, and the kinds of issues that tend to be slow to resolve, you can identify key weak areas and prepare refresher training and mentorship plans to address them.
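A minimal sketch of the first step in this example: compare each agent’s metrics with the team average and list the areas where they fall short. The metric names, the three agents, and the “worse than the team average” rule are all hypothetical simplifications; a real analysis would use many more agents and more careful thresholds.

```python
from statistics import mean

# Hypothetical per-agent metrics.
agents = {
    "A01": {"attendance": 0.98, "calls_per_hour": 9.0, "avg_resolution_min": 6.5},
    "A02": {"attendance": 0.85, "calls_per_hour": 7.5, "avg_resolution_min": 11.0},
    "A03": {"attendance": 0.95, "calls_per_hour": 10.5, "avg_resolution_min": 9.0},
}
LOWER_IS_BETTER = {"avg_resolution_min"}  # for these metrics, a high value is a weakness


def weak_areas(data):
    """For each agent, list the metrics where they do worse than the team average."""
    metrics = list(next(iter(data.values())))
    team_avg = {m: mean(agent[m] for agent in data.values()) for m in metrics}
    result = {}
    for name, agent in data.items():
        result[name] = [
            m for m in metrics
            if (agent[m] > team_avg[m] if m in LOWER_IS_BETTER else agent[m] < team_avg[m])
        ]
    return result


print(weak_areas(agents))
```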

Sales Efficiency Problems

Imagine a business that is spread out across multiple cities or regions.

By analyzing the number of sales per area, the size of the sales team, and the overall and disposable income of potential customers, you can gain insights into why some areas sell more or less than others. From there, preparing a recruitment and training plan, or an area expansion, to boost sales could be a good move.
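As a sketch of this kind of comparison, the Python below normalizes sales by team size so that regions of different sizes can be compared fairly, and prints income alongside for context. The region names and figures are invented for illustration, and sales per rep is only one of several ratios you might compute.

```python
# Hypothetical figures per region: (units sold this year, number of sales reps,
# median disposable income of potential customers).
regions = {
    "City A": (1200, 6, 32_000),
    "City B": (900, 3, 30_000),
    "City C": (400, 4, 21_000),
}


def sales_per_rep(data):
    """Units sold per sales rep, for each region."""
    return {name: units / reps for name, (units, reps, _income) in data.items()}


efficiency = sales_per_rep(regions)
ranking = sorted(efficiency, key=efficiency.get, reverse=True)
for name in ranking:
    income = regions[name][2]
    print(f"{name}: {efficiency[name]:.0f} units per rep, median disposable income {income}")
```

In this made-up data, City B sells the most per rep despite having the smallest team, which might argue for hiring there before expanding elsewhere.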

Business Investment Decision Problems

Imagine an investor with a portfolio of apps/software.

By analyzing the number of subscribers, sales, usage trends, and demographics, you can decide which piece of software offers a better return on investment over the long term.
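Return on investment (ROI) is commonly computed as (return - investment) / investment. The sketch below applies that formula to a hypothetical portfolio; the app names and figures are invented, and a real long-term comparison would also need projected future returns rather than a single period.

```python
def roi(total_return: float, investment: float) -> float:
    """Return on investment as a fraction: (return - investment) / investment."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (total_return - investment) / investment


# Hypothetical portfolio: app -> (total return to date, amount invested).
portfolio = {
    "App X": (150_000, 100_000),
    "App Y": (90_000, 50_000),
    "App Z": (40_000, 60_000),
}
rois = {app: roi(ret, inv) for app, (ret, inv) in portfolio.items()}
best = max(rois, key=rois.get)
print({app: round(r, 2) for app, r in rois.items()}, best)
```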

Throughout the article, we’ve seen various reasons why data analytics is very important for problem-solving.

Many problems that seem very complex at first become manageable with data analytics, and there are hundreds of analytical tools that can help us solve problems in our everyday lives.

Emidio Amadebai

As an IT engineer, I am passionate about learning and sharing. For almost five years, I have worked with and learned a great deal from data engineers, data analysts, business analysts, and key decision makers. I’m interested in learning more about data science and how to leverage it for better decision-making in my business, and I hope to help you do the same in yours.



The “problem-solver” approach to data preparation for analytics

By David Loshin, President, Knowledge Integrity, Inc.

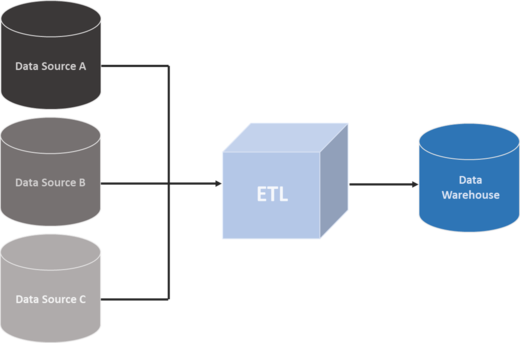

In many environments, the maturity of your reporting and business analytics functions depends on how effective you are at managing data before it’s time to analyze it. Traditional environments relied on a provisioning effort to conduct data preparation for analytics. After extracting data from source systems, the data landed in a staging area for cleansing, standardization and reorganization before being loaded into a data warehouse.

Recently, there has been significant innovation in end-user discovery and analysis tools. Often, these systems allow the analyst to bypass the traditional data warehouse by accessing the source data sets directly.

This is putting more data – and analysis of that data – in the hands of more people. This encourages “undirected analysis,” which doesn’t pose any significant problems; the analysts are free to point their tools at any (or all!) data sets, with the hope of identifying some nugget of actionable knowledge that can be exploited.


However, it would be naïve to presume that many organizations are willing to allow a significant amount of “data-crunching” time to be spent on purely undirected discovery. Rather, data scientists have specific directions to solve particular types of business problems, such as analyzing:

- Global spend to identify opportunities for cost reduction.

- Logistics and facets of the supply chain to optimize the delivery channels.

- Customer interactions to increase customer lifetime value.

Different challenges have different data needs, but if the analysts need to use data from the original sources, it’s worth considering an alternate approach to the conventional means of data preparation. The data warehouse approach balances two key goals: organized data inclusion (a large amount of data is integrated into a single data platform), and objective presentation (data is managed in an abstract data model specifically suited for querying and reporting).

A new approach to data preparation for analytics

Does the data warehouse approach work in more modern, “built-to-suit” analytics? Maybe not, especially if data scientists go directly to the data – bypassing the data warehouse altogether. For data scientists, armed with analytics at their fingertips, let’s consider a rational, five-step approach to problem-solving.

- Clarify the question you want to answer.

- Identify the information necessary to answer the question.

- Determine what information is available and what is not available.

- Acquire the information that is not available.

- Solve the problem.
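Steps 2 to 4 boil down to comparing what the question needs with what is already available. As a minimal sketch, that comparison can be expressed with Python set operations; the data set names below are hypothetical and loosely based on the global-spend example earlier in the article.

```python
def plan_data_acquisition(required: set[str], available: set[str]) -> dict[str, set[str]]:
    """Steps 2-4: split the required information into what is on hand and what to acquire."""
    return {
        "use_now": required & available,  # step 3: information already available
        "acquire": required - available,  # step 4: information that must be acquired
    }


# Step 2 (hypothetical): information needed to analyze global spend.
required = {"invoices", "supplier_master", "contract_terms", "fx_rates"}
# What the analyst can already reach (hypothetical).
available = {"invoices", "supplier_master", "gl_entries"}

plan = plan_data_acquisition(required, available)
for key in ("use_now", "acquire"):
    print(key, sorted(plan[key]))
```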

In this process, steps 2, 3, and 4 all deal with data assessment and acquisition, but in a way that is diametrically opposed to the data warehouse approach. First, the warehouse’s data inclusion is predefined, which means that data that is not available at step 3 may not be immediately accessible from the warehouse in step 4. Second, the objective presentation of the warehouse’s data poses a barrier to creativity on the analyst’s part. In fact, this is why data discovery tools that don’t rely on the data warehouse are becoming more popular. By acquiring or accessing alternate data sources, the analyst can be more innovative in problem-solving!

Preparing data with the problem in mind

A problem-solver approach to data preparation for analytics lets the analyst decide what information needs to be integrated into the analysis platform, what transformations are to be done, and how the data is to be used. This approach differs from the conventional extract/transform/load cycle in three key ways:

- First, the determination of the data sources is done by the analyst based on data accessibility, not what the IT department has interpreted as a set of requirements.

- Second, the analyst is not constrained by the predefined transformations embedded in the data warehouse ETL processes.

- Third, the analyst decides the transformations and standardizations that are relevant for the analysis, not the IT department.

While it’s a departure from “standard operating procedure,” it’s important to ask the IT department to facilitate a problem-solver approach to data preparation by adjusting the methods by which data sets are made available. In particular, instead of loading all data into a data warehouse, IT can create an inventory or catalog of data assets that are available for consumption. And instead of applying a predefined set of data transformations, a data management center of excellence can provide a library of available transformations – and a services and rendering layer that an analyst can use for customized data preparation.
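One way to picture the combination of a data asset catalog and a library of reusable transformations is as two registries that an analyst can draw on and combine freely. The following is a hypothetical Python sketch of that idea. The asset names, the `CATALOG` and `TRANSFORMS` registries, and the `register`, `prepare` and `list_assets` helpers are all illustrative assumptions. They are not a SAS product or any enterprise API.

```python
from typing import Callable

Row = dict[str, object]
Transform = Callable[[list[Row]], list[Row]]

# Hypothetical catalog: asset name -> where the data lives (not loaded up front).
CATALOG: dict[str, str] = {
    "orders": "erp.sales.orders",
    "shipments": "logistics.tracking.shipments",
}

# Hypothetical library of centrally maintained, reusable transformations.
TRANSFORMS: dict[str, Transform] = {}


def list_assets() -> list[str]:
    """What an analyst would browse to see which data sets exist."""
    return sorted(CATALOG)


def register(name: str) -> Callable[[Transform], Transform]:
    """Decorator that adds a transformation to the shared library."""
    def wrap(fn: Transform) -> Transform:
        TRANSFORMS[name] = fn
        return fn
    return wrap


@register("drop_nulls")
def drop_nulls(rows: list[Row]) -> list[Row]:
    return [r for r in rows if all(v is not None for v in r.values())]


@register("upper_country")
def upper_country(rows: list[Row]) -> list[Row]:
    return [{**r, "country": str(r["country"]).upper()} for r in rows]


def prepare(rows: list[Row], steps: list[str]) -> list[Row]:
    """Apply the analyst's chosen transformations, in order, by name."""
    for step in steps:
        rows = TRANSFORMS[step](rows)
    return rows


print(prepare([{"id": 1, "country": "us"}, {"id": 2, "country": None}],
              ["drop_nulls", "upper_country"]))
```

In this sketch, the analyst decides which transformations to apply and in what order. The library only guarantees that each named transformation is implemented consistently.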

Both of these capabilities require some fundamental best practices and enterprise information management tools in addition to the end-user discovery technology, such as:

- Metadata management as a framework for creating the data asset catalog and ensuring consistency in each data artifact’s use.

- Data integration and standardization tools that have an “easy-to-use” interface that can be employed by practitioner and analyst alike.

- Business rules-based data transformations that can be performed as part of a set of enterprise data services.

- Data federation and virtualization to enable access to virtual data sets whose storage footprint may span multiple sources.

- Event stream processing to enable acquisition of data streams as viable and usable data sources.

An evolving environment that encourages greater freedom for the data analyst community should not confine those analysts based on technology decisions for data preparation. Empowering the analysts with flexible tools for data preparation will help speed the time from the initial question to a practical, informed and data-driven decision.

David Loshin, president of Knowledge Integrity, Inc., is a recognized thought leader and expert consultant in the areas of data quality, master data management and business intelligence. David is a prolific author on data management best practices, via the expert channel at b-eye-network.com and numerous books, white papers, and web seminars.


Data Analytics with R

1 Problem Solving with Data

1.1 Introduction

This chapter will introduce you to a general approach to solving problems and answering questions using data. Throughout the rest of the module, we will reference back to this chapter as you work your way through your own data analysis exercises.

The approach is applicable to actuaries, data scientists, general data analysts, or anyone who intends to critically analyze data and develop insights from data.

This framework, which some may refer to as the Data Science Process, includes the following five main components:

- Data Collection

- Data Cleaning

- Exploratory Data Analysis

- Model Building

- Inference and Communication

Note that not all five steps will apply in every situation, but they should guide you as you think about how to approach each analysis you perform.

In the subsections below, we’ll dive into each of these in more detail.

1.2 Data Collection

To solve a problem or answer a question using data, you obviously need some data to start with. That data may already exist, or you may need to generate it (think surveys). As an actuary, your data will often come from pre-existing sources within your company: querying databases or APIs, receiving Excel files, text files, and so on. You may also find supplemental data online to assist you with your project.

For example, let’s say you work for a health insurance company and you are interested in determining the average drive time for your insured population to the nearest in-network primary care providers to see if it would be prudent to contract with additional doctors in the area. You would need to collect at least three pieces of data:

- Addresses of your insured population (internal company source/database)

- Addresses of primary care provider offices (internal company source/database)

- Google Maps travel time API to calculate drive times between addresses (external data source)
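Once the addresses are geocoded, the core calculation is a nearest-neighbour search: for each insured member, find the closest provider, then average those distances. The Python sketch below is a simplification based on an explicit assumption. It uses straight-line (haversine) distance in kilometres as a stand-in for the Google Maps drive times described above, and the coordinates are made up. To use real drive times, you would replace `haversine_km` with calls to a travel-time service.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def mean_distance_to_nearest(members: list[tuple[float, float]],
                             providers: list[tuple[float, float]]) -> float:
    """Average, over members, of the distance to the closest in-network provider."""
    nearest = [min(haversine_km(m, p) for p in providers) for m in members]
    return sum(nearest) / len(nearest)


# Hypothetical geocoded coordinates (lat, lon).
members = [(41.88, -87.63), (41.90, -87.70)]
providers = [(41.89, -87.62), (42.05, -87.68)]
print(round(mean_distance_to_nearest(members, providers), 1))
```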

In summary, data collection provides the fundamental pieces needed to solve your problem or answer your question.

1.3 Data Cleaning

We’ll discuss data cleaning in a little more detail in later chapters, but this phase generally refers to the process of taking the data you collected in step 1, and turning it into a usable format for your analysis. This phase can often be the most time consuming as it may involve handling missing data as well as pre-processing the data to be as error free as possible.

Where you source your data from has major implications for how long this phase takes. For example, many of us actuaries benefit from dedicated data engineers and resources within our companies who put a lot of effort into making our data as clean as possible. However, if you are sourcing your data from raw files on the internet, you may find this phase exceptionally difficult and time-intensive.
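As a small illustration of the missing-data handling and pre-processing described in this section, the following Python sketch standardizes a few raw records and sets aside those that are missing a required field. The field names and cleaning rules are assumptions chosen for the example:

- trim whitespace;
- zero-pad numeric ZIP codes to five digits;
- reject rows without a member ID.

```python
def clean_records(raw: list[dict]) -> tuple[list[dict], list[dict]]:
    """Return (clean, rejected). Rows missing member_id are rejected;
    the rest get trimmed IDs and five-digit ZIP codes (None if not numeric)."""
    clean, rejected = [], []
    for row in raw:
        # Treat None and empty/whitespace-only strings as missing.
        member_id = str(row.get("member_id") or "").strip()
        if not member_id:
            rejected.append(row)
            continue
        zip_code = str(row.get("zip") or "").strip()
        clean.append({
            "member_id": member_id,
            # ZIPs stored as integers lose leading zeros, so pad them back.
            "zip": zip_code.zfill(5) if zip_code.isdigit() else None,
        })
    return clean, rejected


raw = [
    {"member_id": " A1 ", "zip": 2139},
    {"member_id": "", "zip": "60601"},
    {"member_id": "B2", "zip": "n/a"},
]
clean, rejected = clean_records(raw)
print(clean)          # [{'member_id': 'A1', 'zip': '02139'}, {'member_id': 'B2', 'zip': None}]
print(len(rejected))  # 1
```

Keeping the rejected rows, rather than silently dropping them, makes it easier to report how much data the cleaning step removed.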

1.4 Exploratory Data Analysis

Exploratory Data Analysis, or EDA, is an entire subject in itself. In short, EDA is an iterative process whereby you:

- Generate questions about your data

- Search for answers, patterns, and characteristics of your data by transforming, visualizing, and summarizing your data

- Use learnings from step 2 to generate new questions and insights about your data
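The "summarizing" part of this loop often starts with grouped descriptive statistics. The following is a minimal sketch that uses only Python's standard library. The `claims` records and column names are hypothetical.

```python
import statistics
from collections import defaultdict


def summarize_by(rows: list[dict], group_key: str, value_key: str) -> dict:
    """Count, mean, median and sample standard deviation of value_key per group."""
    groups: dict = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[value_key])
    summary = {}
    for key, values in groups.items():
        summary[key] = {
            "n": len(values),
            "mean": statistics.mean(values),
            "median": statistics.median(values),
            # statistics.stdev needs at least two observations
            "stdev": statistics.stdev(values) if len(values) > 1 else 0.0,
        }
    return summary


claims = [
    {"region": "north", "amount": 120.0},
    {"region": "north", "amount": 80.0},
    {"region": "south", "amount": 200.0},
]
for region, stats in summarize_by(claims, "region", "amount").items():
    print(region, stats)
```

A summary like this tends to raise the next question in the loop. For example, why might one region's claims be higher or more variable than another's?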

We’ll cover some basics of EDA in Chapter 4 on Data Manipulation and Chapter 5 on Data Visualization, but we’ll only be able to scratch the surface of this topic.

A successful EDA approach will allow you to better understand your data and the relationships between variables within your data. Sometimes, you may be able to answer your question or solve your problem after the EDA step alone. Other times, you may apply what you learned in the EDA step to help build a model for your data.

1.5 Model Building

In this step, we build a model, often using machine learning algorithms, in an effort to make sense of our data and gain insights that can be used for decision making or communicating to an audience. Examples of models could include regression approaches, classification algorithms, tree-based models, time-series applications, neural networks, and many, many more. Later in this module, we will practice building our own models using introductory machine learning algorithms.
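Of the model families listed above, regression is the simplest to show in full. The following sketch fits an ordinary least-squares line, y = intercept + slope · x, using the closed-form formulas. It is illustrative only; in practice you would use a statistics or machine learning library. The data are made up and chosen to lie exactly on a line.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical data: age-band midpoint vs. average annual claim cost.
ages = [25, 35, 45, 55]
costs = [1000.0, 1400.0, 1800.0, 2200.0]
intercept, slope = fit_line(ages, costs)
print(intercept, slope)  # 0.0 40.0
```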

It’s important to note that while model building gets a lot of attention (because it’s fun to learn and apply new types of models), it typically encompasses a relatively small portion of your overall analysis from a time perspective.

It’s also important to note that building a model doesn’t have to mean applying machine learning algorithms. In fact, in actuarial science, you may find that more often than not the models you create are Microsoft Excel-based models that blend historical data, assumptions about the business, and other factors to let you make projections or understand the business better.
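A spreadsheet-style actuarial model often comes down to two steps: blending several years of historical experience into a base figure, then rolling that base forward under stated assumptions. The Python sketch below mirrors that structure. The recency weights, the base figures, and the 5% annual trend are hypothetical assumptions, not figures from the text.

```python
def weighted_base(history: list[float], weights: list[float]) -> float:
    """Blend several years of experience into one base, e.g. weighting recent years more."""
    if len(history) != len(weights) or not history:
        raise ValueError("history and weights must be non-empty and equal length")
    return sum(h * w for h, w in zip(history, weights)) / sum(weights)


def project_costs(base_cost: float, annual_trend: float, years: int) -> list[float]:
    """Roll the base forward: cost in year t = base * (1 + trend) ** t."""
    return [base_cost * (1 + annual_trend) ** t for t in range(1, years + 1)]


# Hypothetical: three years of average cost per member, oldest first.
base = weighted_base([460.0, 480.0, 500.0], weights=[1, 2, 3])
for year, cost in enumerate(project_costs(base, annual_trend=0.05, years=3), start=1):
    print(f"year {year}: {cost:.2f}")
```

Keeping the assumptions as named parameters works like labelled input cells in a spreadsheet. Each one stays visible and easy to change.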

1.6 Inference and Communication

The final phase of the framework is to use everything you’ve learned about your data up to this point to draw inferences and conclusions about the data, and to communicate those out to an audience. Your audience may be your boss, a client, or perhaps a group of actuaries at an SOA conference.

In any case, it is critical to be able to condense what you’ve learned into clear, concise insights and to convince your audience of why they are important. In some cases, these insights will lend themselves to actionable next steps, or perhaps recommendations for a client. In other cases, the results will simply help you better understand the world, or your business, and make more informed decisions going forward.

1.7 Wrap-Up

As we conclude this chapter, take a few minutes to look at a couple of alternative visualizations that others have used to describe the processes and components of performing analyses. What do they have in common?

- Karl Rohe - Professor of Statistics at the University of Wisconsin-Madison

- Chanin Nantasenamat - Associate Professor of Bioinformatics and YouTuber at the “Data Professor” channel

7 Common Data Analytics Problems – & How to Solve Them

By Rotem Yifat, Product Marketing Manager

May 30, 2023

In a nutshell:

- Data analysts often face issues with limited value of historical insights and unused insights.

- Data goes unused due to limited capacity to process and analyze it.

- Bias is unavoidable in traditional predictive modeling.

- Long time to value and data-security concerns are common problems.

- Predictive analytics platforms can overcome these issues by providing accurate predictions, easy integration, and automated processes.

As a data analyst, your job is to make sense of data by breaking it down into manageable parts, processing it, and performing statistical analyses that reveal trends, patterns, and relationships. And you typically need to present those insights in a way that’s easy for stakeholders to understand.

This process is crucial for organizations that are looking for a data-driven way to make informed decisions, improve business outcomes, and gain a competitive advantage. And thanks to the emergence of new tools, technologies, and techniques, the realm of what’s possible is constantly expanding. Indeed, with the advent of AI, data analytics has become more powerful and efficient than ever before.

However, like many analysts, you may be grappling with some all-too-familiar issues that prevent analysts from doing their best work and making a significant business impact.

In this article, we’ll explore seven common issues faced by data analysts, and how using predictive analytics can be a great way to overcome them.

Problem 1: Limited value of historical insights

The most common application of data analysis is descriptive analytics, where historical data is analyzed in order to understand past trends and events.

The problem? Relying solely on historical insights has limited value, especially in fast-changing businesses where consumer behavior and preferences, as well as market conditions, are constantly evolving. By definition, such insights are based on past trends and events, which may not apply to current or future scenarios and can lead to inaccurate or incomplete analyses.

Relying only on past data can also create bias towards the status quo, which limits your ability to identify new opportunities and potential risks. Take the example of a retailer that relies solely on historical data to determine which products to stock: they’re likely to miss out on new trends or shifts in customer preferences, which leads to missed sales opportunities.

To address this problem, you should strive to complement historical insights with predictive analytics. With this approach, you can identify emerging trends and quickly adapt to changing market conditions.

And by leveraging machine learning within a predictive analytics platform , you can identify meaningful patterns that would otherwise be undetectable. This leads to highly accurate predictions that your business can take advantage of in order to make proactive, well-informed decisions.

Problem 2: Insights aren’t utilized

No one loves the idea of toiling away for months or years, only to discover that their work has been overlooked, undervalued, or not put into practice.

Unfortunately, statistical insights are often viewed as unusable, or even meaningless, if stakeholders can’t easily identify and take relevant action. As mentioned above, this is often the case with descriptive analytics, where there is a focus on the past rather than the future. And as a result, analysts often invest lots of energy into preparing dashboards and reports that are rarely ever used or incorporated into the business workflow.

One way to overcome this challenge is by using a predictive analytics platform that allows you to choose from a variety of pre-built models that can be customized to fit specific use cases. This way, you can easily generate actionable predictions that serve a particular goal, and also enjoy the benefit of automatically generated, easy-to-use dashboards.

In addition, predictions can be integrated directly into your existing work tools. For example: if your goal is to reduce customer churn, you can integrate churn predictions alongside your existing CRM data. This would allow your colleagues to strategize something like an email campaign built on segments reflecting how likely each customer is to churn.
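Turning per-customer churn probabilities into campaign segments, as in the example above, can be as simple as joining scores onto CRM records and bucketing them. In this hypothetical Python sketch, the probabilities are assumed to come from an existing model. The 0.7 and 0.4 cut-offs and the segment names are arbitrary choices for illustration, not the behavior of Pecan or any CRM.

```python
def churn_segment(probability: float, high: float = 0.7, medium: float = 0.4) -> str:
    """Map a churn probability in [0, 1] to a campaign segment label."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability >= high:
        return "high_risk"
    if probability >= medium:
        return "medium_risk"
    return "low_risk"


def segment_customers(crm_rows: list[dict], scores: dict[str, float]) -> dict[str, list[str]]:
    """Join model scores onto CRM rows by customer_id and group the IDs by segment."""
    segments: dict[str, list[str]] = {"high_risk": [], "medium_risk": [], "low_risk": []}
    for row in crm_rows:
        score = scores.get(row["customer_id"])
        if score is None:
            continue  # no prediction for this customer yet
        segments[churn_segment(score)].append(row["customer_id"])
    return segments


crm = [{"customer_id": "c1"}, {"customer_id": "c2"}, {"customer_id": "c3"}, {"customer_id": "c4"}]
scores = {"c1": 0.82, "c2": 0.15, "c3": 0.5}
print(segment_customers(crm, scores))
```

Each segment list could then feed a differently worded email campaign, with the most intensive retention offers reserved for the high-risk group.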

The best way to ensure all your hard work is put to use is by generating insights that can truly guide business decisions and help your company achieve mission-critical KPIs.

Problem 3: Data goes unused

Businesses collect and generate massive amounts of data. But even when they have sufficient resources, they’re not able to use much of this data due to humans’ limited capacity to think about and process data. In many cases, analysts aren’t even sure of whether particular data is worth using due to data quality issues or questions about its meaning.

For these reasons and more, data professionals are generally only able to build a small number of rule-based models, which can only account for two or three variables at a time.

This common challenge can be overcome with a predictive analytics platform like Pecan. By leveraging automated machine learning to analyze vast amounts of data, you can slash the time and effort it takes to decide which data is relevant. Here’s what we mean…

You can instantly feed raw data into a predictive model, and this data can come from any source (such as sales data, user engagement data , customer demographics, and social media). And automating this process means you can use updated data to generate fresh predictions regularly, helping you stay on top of changing customer behavior and market conditions.

The platform will then automatically determine which data is relevant (through behind-the-scenes processes like feature selection and feature engineering), and then will find the best predictive model that can be built using that data. These automated processes enable you to analyze massive datasets and generate accurate predictions within a matter of hours, instead of months. This means you can focus on communicating and creating real value out of your insights, without doing all the heavy lifting.
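Automated feature selection can take many forms, and the text does not say which method Pecan uses. As a deliberately simple stand-in, this Python sketch does three things:

- scores each candidate feature by its absolute Pearson correlation with the target;
- ranks the features by that score;
- keeps the top k.

The feature names and data are invented.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 for a constant column."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant column carries no linear signal
    return sxy / math.sqrt(sxx * syy)


def select_top_k(features: dict[str, list[float]], target: list[float], k: int) -> list[str]:
    """Rank features by |correlation| with the target and return the k strongest names."""
    ranked = sorted(features, key=lambda name: abs(pearson(features[name], target)), reverse=True)
    return ranked[:k]


features = {
    "logins_last_30d": [10, 8, 2, 1, 0],
    "support_tickets": [0, 1, 3, 4, 5],
    "signup_month": [3, 7, 3, 7, 5],
}
churned = [0, 0, 1, 1, 1]
print(select_top_k(features, churned, k=2))  # ['logins_last_30d', 'support_tickets']
```

This filter only captures linear relationships, one feature at a time. Automated platforms typically go further by constructing new features and testing them inside a model.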

Hand-built models might seem like the ideal solution to data problems, but they can introduce their own issues.

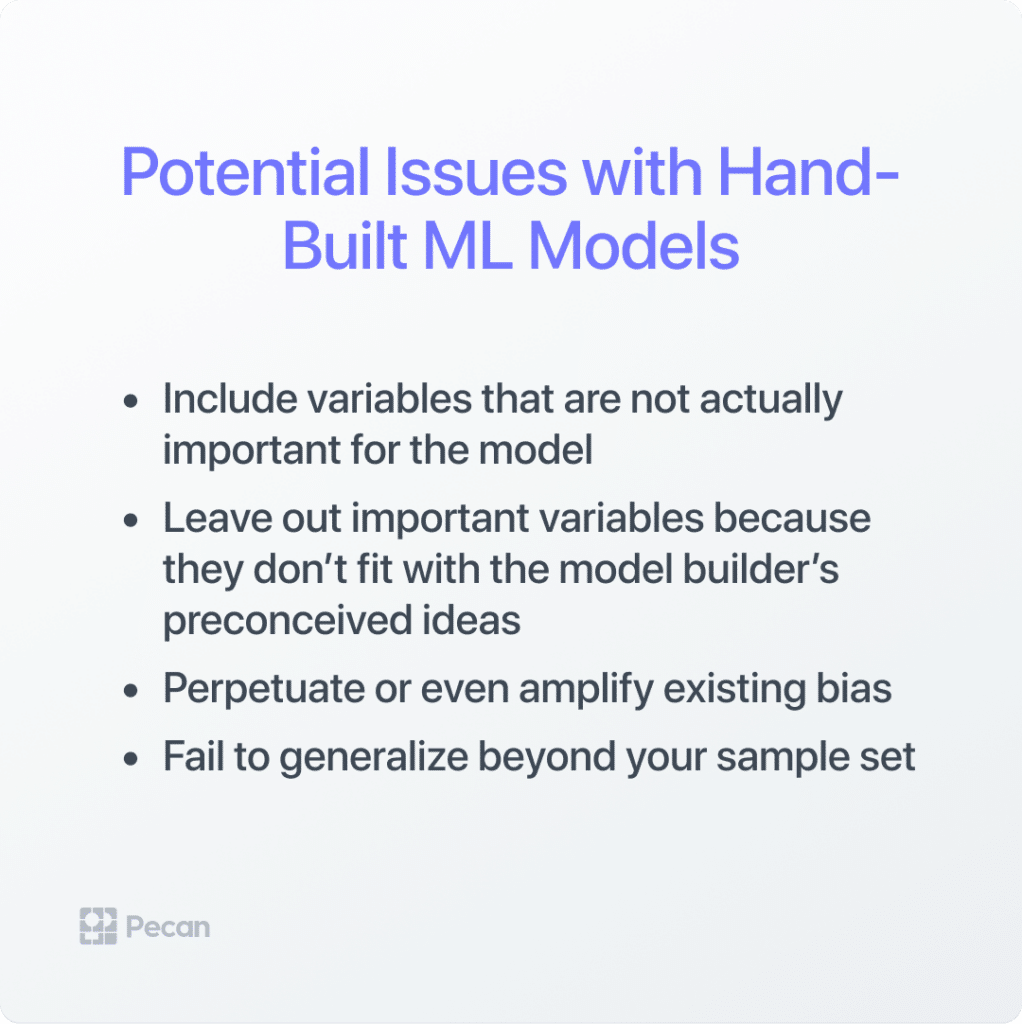

Problem 4: Bias is unavoidable

Traditional predictive modeling involves the use of statistical and mathematical techniques to uncover relationships and identify trends. But as scientific as it may be, there is always human bias in the process of selecting variables.

This means that hand-built models will inevitably, at some point:

- Include variables that are not actually important for the model (but possibly correlate with the outcome)

- Leave out important variables because they don’t fit with the model builder’s preconceived ideas

- Perpetuate or even amplify existing bias (e.g., by restricting your model to a certain gender or zip code)

- Fail to generalize beyond your sample set (e.g., if the model is based on data from a limited time period)

This bias doesn’t happen when you use a predictive analytics platform. One key reason is that automated feature engineering will evaluate and construct thousands of potential variables that could be used in your model, and then determine which are most relevant. (Naturally, this multi-variable approach also leads to more accurate and reliable predictions.)

In the case of Pecan, a simple dashboard will reveal how your model arrived at its predictions, by showing the degree to which each variable (a.k.a. feature) influenced its outcomes. This knowledge also enables you to identify and mitigate any potential biases that may be introduced through your raw data itself.
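The text does not describe how Pecan measures each feature's influence. One widely used, model-agnostic technique is permutation importance, which works as follows:

- shuffle one feature's values across rows;
- measure how much the model's error grows;
- repeat a few times and average.

A feature the model ignores scores zero. The Python sketch below assumes the model is available as a plain prediction function. The toy model and data are hypothetical.

```python
import random
from typing import Callable

Row = dict[str, float]


def mse(model: Callable[[Row], float], rows: list[Row], target: list[float]) -> float:
    """Mean squared error of the model's predictions."""
    return sum((model(r) - y) ** 2 for r, y in zip(rows, target)) / len(rows)


def permutation_importance(model: Callable[[Row], float], rows: list[Row],
                           target: list[float], feature: str,
                           n_repeats: int = 20, seed: int = 0) -> float:
    """Average increase in MSE after shuffling one feature's column."""
    rng = random.Random(seed)
    baseline = mse(model, rows, target)
    increases = []
    for _ in range(n_repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        shuffled = [{**r, feature: v} for r, v in zip(rows, column)]
        increases.append(mse(model, shuffled, target) - baseline)
    return sum(increases) / n_repeats


def toy_model(r: Row) -> float:
    """Hypothetical model that only looks at tenure."""
    return 2.0 * r["tenure"]


rows = [{"tenure": float(t), "zip_noise": float(t % 3)} for t in range(10)]
target = [2.0 * r["tenure"] for r in rows]
for name in ("tenure", "zip_noise"):
    print(name, round(permutation_importance(toy_model, rows, target, name), 2))
```

A large score for a sensitive attribute, or for a close proxy such as a ZIP code, is the kind of signal that would prompt a closer look for bias.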

Problem 5: Long time to value

To be implemented and adopted, many analytics tools require significant change management and engineering assistance. And, of course, analytics projects themselves demand a significant amount of time and resources. Sometimes they will bear fruit, and other times they won’t.

Contrast that with a predictive analytics platform, which can do in hours what it might take a data analyst or scientist months to achieve. Not only can you connect multiple data sources to the platform for automatic importing, but with Pecan, you can use Predictive GenAI to define a predictive question that can be answered with a model based on your raw data. Auto-generated code kickstarts your model, which the Pecan engine then automatically builds. The model uses hidden patterns in your data to generate predictions. With those predictions, your business colleagues will be able to act quickly on the provided insights to achieve their business goals.

Another thing to keep in mind: data changes over time, and models require ongoing maintenance in order to remain accurate and effective. In the case of traditional rule-based models, this often means restarting the modeling process from scratch.

But with an automated approach, a predictive model only needs to be built once. With automated model-retraining and monitoring capabilities, all you need to do is feed it new data and/or adjust the variables you wish to use in your predictions.

The time required to resolve data problems with an automated platform can be far less than preparing data by hand for modeling.

Problem 6: Data-security concerns

Technical difficulties aside, concerns and regulations around data security can make it extremely challenging to integrate different data tools and achieve smooth adoption.

According to a 2020 report by IBM, the average cost of a data breach is $3.86 million. Navigating security best practices and avoiding potential security issues is a lot to ask of a data analyst who is not a security professional yet is tasked with managing and integrating sensitive data across multiple tools, whether locally or in the cloud.

This is where an all-in-one predictive analytics platform again proves advantageous. For example, Pecan prioritizes data security and takes various measures to ensure sensitive information is protected at all times. Let an enterprise-grade solution take care of the security business—so you can focus on yours.

Problem 7: Tedious, time-consuming processes

If we haven’t made it clear by this point, turning raw data into actionable insights is no small feat. And we’d be remiss not to spend some time talking about the person at the center of all this: the data analyst.

In data analytics projects, manual processes add multiple layers of complexity, difficulty, and stress. Analysts often need to carry out tedious and/or time-consuming tasks like data collection, data cleaning, data transformation, data visualization, and, of course, statistical analysis itself. Add a variety of tools and techniques—from programming languages like Python or R to data-visualization tools like Tableau or Power BI to statistical software like SPSS or SAS—and an analyst’s work is cut out for them.

Furthermore, incomplete, inconsistent, inaccurate, or outdated data can significantly impact the effectiveness of a statistical model. So when you add issues of data quality to an already heavy load, fulfilling a data analytics project can seem like an insurmountable feat.

Fortunately, predictive analytics platforms can handle all of the most tedious and complex processes. For example, Pecan automatically performs tasks like data prep, feature engineering, model tuning, and model deployment and monitoring. It can also identify and remove incomplete or inaccurate data and automatically transform your data into the right format for training accurate machine learning models.

What this means is that data analysts, instead of being weighed down by outdated data practices, can focus on building business use cases and imagining how their predictive insights can help solve real business needs.

Wrapping up

An honest assessment of the “old way” of doing things will lead to one obvious conclusion: It’s time to update the way your team performs data analytics. You and your organization should aim for a more holistic approach that maximizes the value of your data. You can gain a complete understanding of your customers and business processes, and make more informed (and profitable) decisions.

Predictive analytics platforms are a great solution for overcoming many of the pain points that plague data analysts. By leveraging machine learning algorithms and automated ML processes, you can quickly analyze huge volumes of data, generate accurate predictions that target a specific business need, and help your business make better decisions that keep you ahead of the competition.

Ready to see how easy it can be to use predictive analytics to supercharge your data analytics role and solve your data problems? Sign up for a free trial now and try it yourself!



Unit 11: Advanced: Problem solving and data analysis

About this unit.

Ready for a challenge? This unit covers the hardest problem solving and data analysis questions on the SAT Math test. Work through each skill, taking quizzes and the unit test to level up your mastery progress.

Ratios, rates, and proportions: advanced

- Ratios, rates, and proportions | SAT lesson

- Ratios, rates, and proportions — Basic example

- Ratios, rates, and proportions — Harder example

- Ratios, rates, and proportions: advanced (practice)

Unit conversion: advanced

- Unit conversion | Lesson

- Units — Basic example

- Units — Harder example

- Unit conversion: advanced (practice)

Percentages: advanced

- Percentages | Lesson

- Percents — Basic example

- Percents — Harder example

- Percentages: advanced (practice)

Center, spread, and shape of distributions: advanced

- Center, spread, and shape of distributions | Lesson

- Center, spread, and shape of distributions — Basic example

- Center, spread, and shape of distributions — Harder example

- Center, spread, and shape of distributions: advanced (practice)

Data representations: advanced

- Data representations | Lesson

- Key features of graphs — Basic example

- Key features of graphs — Harder example

- Data representations: advanced (practice)

Scatterplots: advanced

- Scatterplots | Lesson

- Scatterplots — Basic example

- Scatterplots — Harder example

- Scatterplots: advanced (practice)

Linear and exponential growth: advanced

- Linear and exponential growth | Lesson

- Linear and exponential growth — Basic example

- Linear and exponential growth — Harder example

- Linear and exponential growth: advanced (practice)

Probability and relative frequency: advanced

- Probability and relative frequency | Lesson

- Table data — Basic example

- Table data — Harder example

- Probability and relative frequency: advanced (practice)

Data inferences: advanced

- Data inferences | Lesson

- Data inferences — Basic example

- Data inferences — Harder example

- Data inferences: advanced (practice)

Evaluating statistical claims: advanced

- Evaluating statistical claims | Lesson

- Data collection and conclusions — Basic example

- Data collection and conclusions — Harder example

- Evaluating statistical claims: advanced (practice)

Your Data Won’t Speak Unless You Ask It The Right Data Analysis Questions

In our increasingly competitive digital age, asking the right data analysis and critical thinking questions is essential to the ongoing growth and evolution of your business. It’s important not only to gather your business’s existing information but also to consider how to prepare your data to extract the most valuable insights possible.

That said, with endless rafts of data to sift through, arranging your insights for success isn’t always a simple process. Organizations may spend millions of dollars on collecting and analyzing information with various data analysis tools, but many fall flat when it comes to actually using that data in actionable, profitable ways.

Here we’re going to explore how asking the right data analysis and interpretation questions will give your analytical efforts a clear-cut direction. We’re also going to explore the everyday data questions you should ask yourself to connect with the insights that will drive your business forward with full force.

Let’s get started.

Data Is Only As Good As The Questions You Ask

The truth is that no matter how advanced your IT infrastructure is, your data will not provide you with a ready-made solution unless you ask it specific questions regarding data analysis.

To help transform data into business decisions, you should identify the pain points you want to gain insights into before you even start gathering data. Based on your company’s strategy, goals, budget, and target customers, you should prepare a set of questions that will smoothly walk you through the online data analysis and enable you to arrive at relevant insights.

For example, say you need to develop a sales strategy and increase revenue. If you ask the right questions and use sales analytics software that lets you mine, manipulate, and manage voluminous sets of data, generating insights becomes much easier. The average business user will work more effectively and cross-departmental communication will improve, decreasing the time it takes to make actionable decisions and, consequently, providing a cost-effective solution.

Before starting any business venture, you need to take the most crucial step: prepare your data for any type of serious analysis. By doing so, people in your organization will become empowered with clear systems that can ultimately be converted into actionable insights. This can include a multitude of processes, like data profiling, data quality management, or data cleaning, but we will focus on tips and questions to ask when analyzing data to gain the most cost-effective solution for an effective business strategy.

“Today, big data is about business disruption. Organizations are embarking on a battle not just for success but for survival. If you want to survive, you need to act.” – Capgemini and EMC² in their study Big & Fast Data: The Rise of Insight-Driven Business .

This quote might sound a little dramatic. However, consider the following statistics pulled from research developed by Forrester Consulting and Collibra:

- 84% of respondents report that putting data at the center of developing business strategies is critical

- 81% of respondents realized an advantage in growing revenue

- 8% admit an advantage in improving customers' trust

- 58% of "data intelligent" organizations are more likely to exceed revenue goals

Based on this survey, it seems that business professionals believe that data is the ultimate cure for all their business ills. That's not a surprise, considering the results of the survey and the potential that data brings to companies that use it properly. Here we will look at examples of data analysis questions and explain each in detail.

19 Data Analysis Questions To Improve Your Business Performance In The Long Run

What are data analysis questions, exactly? Let’s find out. Data questions should be clearly defined, keeping in mind the industry you’re in and the competitors your business is trying to outperform. Poorly defined questions can result in faulty interpretation, which can directly affect business efficiency and overall results.

Here at datapine, we have helped solve hundreds of analytical problems for our clients by asking big data questions. All of our experience has taught us that data analysis is only as good as the questions you ask. You also want to clarify these analytics questions as soon as possible, which will make your future business intelligence much clearer. Additionally, incorporating decision support system software can save the company a lot of time: combining information from raw data, documents, personal knowledge, and business models provides a solid foundation for solving business problems.

That’s why we’ve prepared this list of example data analysis questions: to make sure you won’t fall into the trap of futile, “after the fact” data processing, and to help you start with the right mindset for proper data-driven decision-making while gaining actionable business insights.

1) What exactly do you want to find out?

It’s good to evaluate the well-being of your business first. Agree company-wide on which KPIs are most relevant for your business and how they are currently developing. Research different KPI examples and compare them to your own. Think about how you want them to develop further. Can you influence this development? Identify where changes can be made. If nothing can be changed, there is no point in analyzing the data. But if you find a development opportunity and see that your business performance can be significantly improved, then KPI dashboard software could be a smart investment to monitor your key performance indicators and provide a transparent overview of your company’s data.

The next step is to consider what your goal is and what decision-making it will facilitate. What outcome from the analysis would you deem a success? These introductory analytical questions guide you through the process and keep you focused on key insights. You can start broad by brainstorming and drafting a guideline for the specific questions about your data that you want answered. This framework lets you delve deeper into the more specific insights you want to achieve.

Let’s see this through an example and have fun with a little imaginative exercise.

Let’s say that you have access to an all-knowing business genie who can see into the future. This genie (who we’ll call Data Dan) embodies the idea of a perfect data analytics platform through his magic powers.

Now, with Data Dan, you only get to ask three questions. Don’t ask us why – we didn’t invent the rules! Given that you’ll get exactly the right answer to each of them, what are you going to ask him? Let’s see…

Talking With A Data Genie

You: Data Dan! Nice to meet you, my friend. Didn’t know you were real.

Data Dan: Well, I’m not actually. Anyways – what’s your first data analysis question?

You: Well, I was hoping you could tell me how we can raise more revenue in our business.

Data Dan: (Rolls eyes). That’s a pretty lame question, but I guess I’ll answer it. How can you raise revenue? You can form partnerships with some key influencers, you can create some sales incentives, and you can try offering add-on services to your existing clients. You can do a lot of things. OK, that’s it. You have two questions left.

You: (Panicking) Uhhh, I mean – you didn’t answer well! You just gave me a bunch of hypotheticals!

Data Dan: I answered your question exactly. Maybe you should ask better ones.

You: (Sweating) My boss is going to be so mad at me if I waste my questions with a magic business genie. Only two left, only two left… OK, I know! Genie – what should I ask you to make my business the most successful?

Data Dan: OK, you’re still not good at this, but I’ll be nice since you only have one data question left. Listen up buddy – I’m only going to say this once.

The Key To Asking Good Analytical Questions

Data Dan: First of all, you want your questions to be extremely specific. The more specific they are, the more valuable (and actionable) the answers will be. So, instead of asking, “How can I raise revenue?”, you should ask: “Which channels should we focus on to raise revenue without raising costs much, leading to bigger profit margins?”. Or even better: “Which marketing campaign I ran this quarter got the best ROI, and how can I replicate its success?”

These key questions to ask when analyzing data can define your next strategy for developing your organization. We have used a marketing example, but every department and industry can benefit from proper data preparation. With multivariate analysis, you can cover several aspects at once and define more specific inquiries.

2) What standard KPIs will you use that can help?

OK, let’s move on from the whole genie thing. Sorry, Data Dan! It’s crucial to know what data analysis questions you want to ask from the get-go. They form the bedrock for the rest of this process.

Think about it like this: your goal with business intelligence is to see reality clearly so that you can make profitable decisions to help your company thrive. The questions to ask when analyzing data will be the framework, the lens, that allows you to focus on specific aspects of your business reality.

Once you have your data analytics questions, you need some standard KPIs to measure them. For example, let’s say you want to see which of your PPC campaigns did the best last quarter. As Data Dan reminded us, “did the best” is too vague to be useful. Did the best according to what? Driving revenue? Driving profit? Delivering the highest ROI? Acquiring email subscribers at the lowest cost?

All of these KPI examples can be valid choices. You just need to pick the right ones first and have them in agreement company-wide (or at least within your department).
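To make the point concrete, here is a minimal Python sketch, assuming made-up numbers for three hypothetical PPC campaigns (`brand_search`, `retargeting`, `display_promo`) and simplified definitions of profit (revenue minus cost of goods minus ad spend) and ROI (profit divided by ad spend). The names are illustrative only and not part of any tool.

```python
# Hypothetical quarterly results for three PPC campaigns (illustrative numbers only).
CAMPAIGNS = {
    "brand_search":  {"spend": 5_000, "revenue": 20_000, "cost_of_goods": 12_000, "subscribers": 100},
    "retargeting":   {"spend": 2_000, "revenue": 9_000, "cost_of_goods": 4_500, "subscribers": 40},
    "display_promo": {"spend": 3_000, "revenue": 6_000, "cost_of_goods": 2_000, "subscribers": 300},
}


def campaign_kpis(c: dict) -> dict:
    """Derive candidate KPIs; the profit and ROI definitions are simplified assumptions."""
    profit = c["revenue"] - c["cost_of_goods"] - c["spend"]
    return {
        "revenue": c["revenue"],
        "profit": profit,
        "roi": profit / c["spend"],                            # profit per unit of ad spend
        "cost_per_subscriber": c["spend"] / c["subscribers"],  # lower is better
    }


SCORES = {name: campaign_kpis(c) for name, c in CAMPAIGNS.items()}


def best_campaign(metric: str, lower_is_better: bool = False) -> str:
    """Return the campaign that 'did the best' according to one chosen KPI."""
    pick = min if lower_is_better else max
    return pick(SCORES, key=lambda name: SCORES[name][metric])


for metric, lower in [("revenue", False), ("profit", False),
                      ("roi", False), ("cost_per_subscriber", True)]:
    print(f"best by {metric}: {best_campaign(metric, lower)}")
```

With these numbers, `brand_search` wins on revenue and profit, `retargeting` on ROI, and `display_promo` on cost per subscriber, so “which campaign did the best?” has no single answer until the KPI is agreed.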

Let’s see this through a straightforward example.

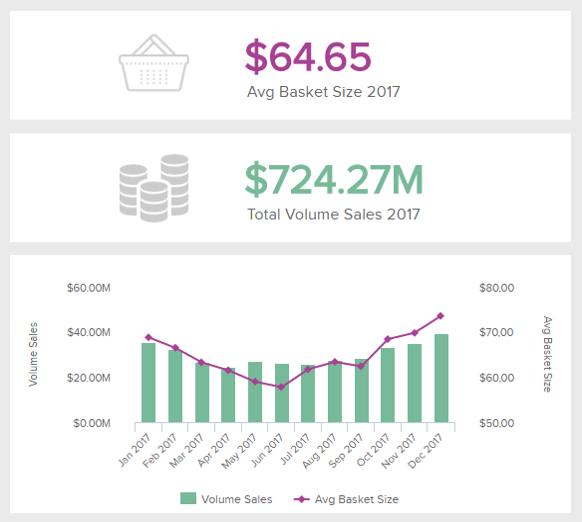

Suppose you are a retail company that wants to know what you sell, where, and when (remember the specific questions for analyzing data?). In the example above, sales volume over a set period tells you when demand is higher or lower: you have your specific KPI answer. You can then dig deeper into the insights to establish additional sales opportunities and identify underperforming areas that drag down overall product sales.
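The “what, where, and when” breakdown behind a chart like this is essentially a group-and-sum. A minimal sketch, assuming a hypothetical list of transactions with a date, region, product, and unit count (no particular data source or BI tool is implied):

```python
from collections import defaultdict

# Hypothetical transactions: (ISO date, region, product, units sold).
SALES = [
    ("2023-01-14", "North", "road bike", 4),
    ("2023-01-20", "South", "road bike", 2),
    ("2023-02-03", "North", "city bike", 1),
    ("2023-02-17", "South", "road bike", 1),
    ("2023-03-05", "North", "road bike", 6),
    ("2023-03-22", "South", "city bike", 5),
]


def units_by(records, key):
    """Sum units sold per group; `key` maps (date, region, product) to a group label."""
    totals = defaultdict(int)
    for date, region, product, units in records:
        totals[key(date, region, product)] += units
    return dict(totals)


by_month = units_by(SALES, lambda date, region, product: date[:7])  # "YYYY-MM" = when
by_region = units_by(SALES, lambda date, region, product: region)   # where
by_region_product = units_by(SALES, lambda date, region, product: (region, product))  # what, where

peak_month = max(by_month, key=by_month.get)
weakest_month = min(by_month, key=by_month.get)
print(f"demand peaks in {peak_month} and is lowest in {weakest_month}")
print("units by region:", by_region)
```

The weakest month and region are the natural starting points for the “underperforming areas” question.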

It is important to limit the number of KPIs you choose, as monitoring too many can make your analysis confusing and less efficient. As the old analytics saying goes, just because you can measure something doesn't mean you should. We recommend sticking to a careful selection of 3-6 KPIs per business goal; this way, you'll avoid getting distracted by meaningless data.

When picking your KPIs, make sure they are attainable, realistic, measurable over time, and directly linked to your business goals. It is also good practice to set KPI targets so you can measure the progress of your efforts.
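Tracking progress against a KPI target is simple arithmetic: how far the current value has moved from a starting baseline, relative to the distance between baseline and target. The sketch below assumes a hypothetical `KpiTarget` structure with invented values; dividing by the signed span means it also handles KPIs you want to decrease, such as churn.

```python
from dataclasses import dataclass


@dataclass
class KpiTarget:
    """A KPI with a starting value, a target, and the latest measurement (hypothetical structure)."""
    name: str
    baseline: float
    target: float
    current: float

    def progress(self) -> float:
        """Fraction of the baseline-to-target distance covered so far.

        Works for KPIs that should go down (e.g. churn) as well as up,
        and exceeds 1.0 once the target is beaten.
        """
        span = self.target - self.baseline
        if span == 0:
            raise ValueError(f"{self.name}: target must differ from baseline")
        return (self.current - self.baseline) / span


kpis = [
    KpiTarget("monthly revenue (EUR)", baseline=100_000, target=120_000, current=110_000),
    KpiTarget("churn rate (%)", baseline=5.0, target=3.0, current=4.5),
    KpiTarget("new leads", baseline=200, target=300, current=330),
]

for kpi in kpis:
    print(f"{kpi.name}: {kpi.progress():.0%} of the way to target")
```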

Now let’s proceed to one of the most important data questions to ask – the data source.

3) Where will your data come from?

The next step is to identify the data sources you need: dig into all your data, pick the fields you’ll need, leave some room for data you might need in the future, and gather all the information in one place. Be open-minded about your data sources at this stage; every department in your company (sales, finance, IT, etc.) has the potential to provide insights.

Don’t worry if the abundance of data sources makes things seem complicated. Our next step is to “edit” these sources and make sure their data quality is up to par, which will rule some of them out as useful choices.

Right now, though, we’re just creating the rough draft. You can use CRM data, data from sources like Facebook and Google Analytics, or financial data from your company. Let your imagination run wild, as long as each data source is relevant to the questions you’ve identified in steps 1 and 2. It could also make sense to use business intelligence software, especially since datasets have grown so much in volume in recent years that spreadsheets can no longer provide the quick, intelligent solutions needed to achieve higher data quality.
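Gathering sources “in one place” usually comes down to joining them on a shared key. The sketch below assumes two hypothetical extracts, CRM records and finance invoices, that share a `customer_id` field; a BI tool or data connector would normally do this for you, so treat it only as an illustration of the underlying left join.

```python
# Hypothetical extracts from two departments, sharing a customer_id field.
CRM = [
    {"customer_id": 1, "name": "Acme GmbH", "segment": "enterprise"},
    {"customer_id": 2, "name": "Bolt Ltd", "segment": "smb"},
    {"customer_id": 3, "name": "Cora Inc", "segment": "smb"},
]
FINANCE = [
    {"customer_id": 1, "invoice_total": 12_500.0},
    {"customer_id": 1, "invoice_total": 7_500.0},
    {"customer_id": 3, "invoice_total": 900.0},
    {"customer_id": 4, "invoice_total": 300.0},  # no matching CRM record
]


def combine(crm_rows, finance_rows):
    """Left-join total invoiced revenue onto CRM records by customer_id."""
    revenue = {}
    for row in finance_rows:
        cid = row["customer_id"]
        revenue[cid] = revenue.get(cid, 0.0) + row["invoice_total"]
    # Keep every CRM customer; those without invoices get 0.0 instead of disappearing.
    return [{**c, "revenue": revenue.get(c["customer_id"], 0.0)} for c in crm_rows]


def unmatched_ids(crm_rows, finance_rows):
    """IDs present in finance but missing from the CRM: a first hint for data cleaning."""
    known = {c["customer_id"] for c in crm_rows}
    return sorted({r["customer_id"] for r in finance_rows} - known)


combined = combine(CRM, FINANCE)
for row in combined:
    print(row["name"], row["segment"], row["revenue"])
print("finance IDs without a CRM match:", unmatched_ids(CRM, FINANCE))
```

Customer 2 keeps a revenue of 0.0 rather than silently dropping out, and the unmatched ID 4 is exactly the kind of inconsistency a later quality check has to resolve.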

Another key aspect of controlling where your data comes from, and interpreting it effectively, boils down to connectivity. To develop a seamless data analytics environment, using data connectors is the way forward.

Digital data connectors will empower you to work with significant amounts of data from several sources with a few simple clicks. By doing so, you will grant everyone in the business access to valuable insights that will improve collaboration and enhance productivity.

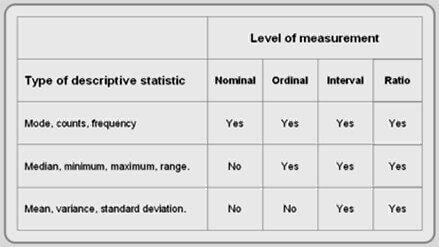

3.5) Which scales apply to your different datasets?

WARNING: This is a bit of a “data nerd out” section. You can skip this part if you like or if it doesn’t make much sense to you.

You’ll want to be mindful of the level of measurement for your different variables, as this will affect the statistical techniques you will be able to apply in your analysis.

There are basically 4 types of scales:

*Statistics Level Measurement Table*

- Nominal – you organize your data in non-numeric categories that cannot be ranked or compared quantitatively.

Examples: different colors of shirts, different types of fruit, different genres of music.

- Ordinal – GraphPad gives this useful explanation of ordinal data:

“You might ask patients to express the amount of pain they are feeling on a scale of 1 to 10. A score of 7 means more pain than a score of 5, and that is more than a score of 3. But the difference between the 7 and the 5 may not be the same as that between 5 and 3. The values simply express an order. Another example would be movie ratings, from 0 to 5 stars.”

- Interval – in this type of scale, data is grouped into categories with order and equal distance between these categories.

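Direct comparison is possible. Adding and subtracting is possible, but you cannot meaningfully multiply or divide the values, because the zero point is arbitrary: 0 °C does not mean “no temperature”, so 20 °C is not twice as hot as 10 °C. Example: temperature ratings. Both Fahrenheit and Celsius are interval scales.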

Again, GraphPad has a ready explanation: “The difference between a temperature of 100 degrees and 90 degrees is the same difference as between 90 degrees and 80 degrees.”

- Ratio – has the features of all three earlier scales, plus a true zero point (zero means “none of it”).

Like a nominal scale, it provides a category for each item, items are ordered like on an ordinal scale and the distances between items (intervals) are equal and carry the same meaning.

With ratio scales, you can add, subtract, divide, multiply… all the fun stuff you need to create averages and get some cool, useful data. Examples: height, weight, revenue numbers, leads, and client meetings.
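One way to keep the four levels straight is to map each one to the summary statistics it supports. This Python sketch is an illustrative convention rather than a formal standard: it picks the mode for nominal data, the median for ordinal data, and the mean for interval and ratio data, then shows why ratios only make sense on a ratio scale by converting the same Celsius temperatures to Fahrenheit.

```python
from enum import Enum
from statistics import mean, median_low, mode


class Scale(Enum):
    NOMINAL = 1
    ORDINAL = 2
    INTERVAL = 3
    RATIO = 4


# Operations that are meaningful at each level; each level inherits those of the level below.
ALLOWED = {
    Scale.NOMINAL: {"count", "mode"},
    Scale.ORDINAL: {"count", "mode", "median"},
    Scale.INTERVAL: {"count", "mode", "median", "difference", "mean"},
    Scale.RATIO: {"count", "mode", "median", "difference", "mean", "ratio"},
}


def is_allowed(operation: str, scale: Scale) -> bool:
    return operation in ALLOWED[scale]


def central_tendency(values, scale: Scale):
    """Pick the 'average' that the measurement scale supports."""
    if scale is Scale.NOMINAL:
        return mode(values)        # most frequent category
    if scale is Scale.ORDINAL:
        return median_low(values)  # middle rank; never invents an in-between score
    return mean(values)            # interval and ratio data support arithmetic means


def celsius_to_fahrenheit(c: float) -> float:
    return c * 9 / 5 + 32


print(central_tendency(["red", "blue", "red"], Scale.NOMINAL))  # shirt colors
print(central_tendency([3, 7, 5, 7], Scale.ORDINAL))            # pain scores
print(central_tendency([120.0, 80.0, 100.0], Scale.RATIO))      # revenue

# Interval scale: changing the unit changes the ratio, so the ratio is meaningless.
print(20 / 10, celsius_to_fahrenheit(20) / celsius_to_fahrenheit(10))
# Ratio scale: the ratio survives a unit change (e.g. euros to cents).
print(200 / 100, (200 * 100) / (100 * 100))
```

20 °C divided by 10 °C gives 2.0, but the same temperatures in Fahrenheit (68 and 50) give 1.36; the revenue ratio stays at 2.0 whether you count in euros or cents.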

4) Will you use market and industry benchmarks?

In the previous point, we discussed the process of defining the data sources you’ll need for your analysis as well as different methods and techniques to collect them. While all of those internal sources of information are invaluable, it can also be a useful practice to gather some industry data to use as benchmarks for your future findings and strategies.

To do so, you need to collect data from external sources such as industry reports, research papers, government studies, or even focus groups and surveys of your target customers. This kind of market research yields valuable information about the state of the industry in general, as well as the position each competitor occupies in the market.

In doing so, you’ll not only be able to set accurate benchmarks for what your company should be achieving but also identify areas where competitors are weak and turn them into a competitive advantage. For example, you can run a market research survey to analyze how customers perceive your brand and your competitors, and generate a report to analyze the findings, as seen in the image below.


This market research dashboard is displaying the results of a survey on brand perception for 8 outdoor brands. Respondents were asked different questions to analyze how each brand is recognized within the industry. With these answers, decision-makers are able to complement their strategies and exploit areas where there is potential.
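Survey answers on a 1-5 agreement scale are ordinal, so rather than averaging them, the sketch below uses a “top-two-box” share (the fraction of respondents answering 4 or 5) per brand and attribute, then flags attributes where every competitor trails. The brands, attributes, and responses are hypothetical, and we are not implying the dashboard above uses this exact metric.

```python
from collections import defaultdict

# Hypothetical responses: (brand, attribute, agreement score on a 1-5 scale).
RESPONSES = [
    ("OurBrand", "durability", 4), ("OurBrand", "durability", 5),
    ("OurBrand", "price", 3), ("OurBrand", "price", 2),
    ("RivalA", "durability", 3), ("RivalA", "durability", 2),
    ("RivalA", "price", 4), ("RivalA", "price", 5),
    ("RivalB", "durability", 4), ("RivalB", "durability", 2),
    ("RivalB", "price", 3), ("RivalB", "price", 4),
]


def top_two_box(responses):
    """Share of respondents scoring 4 or 5, per (brand, attribute)."""
    counts = defaultdict(lambda: [0, 0])  # (brand, attribute) -> [favourable, total]
    for brand, attribute, score in responses:
        cell = counts[(brand, attribute)]
        cell[1] += 1
        if score >= 4:
            cell[0] += 1
    return {key: favourable / total for key, (favourable, total) in counts.items()}


def attributes_where_we_lead(shares, our_brand):
    """Attributes on which every competitor scores below our brand."""
    leads = []
    for attribute in sorted({attr for _, attr in shares}):
        ours = shares.get((our_brand, attribute))
        rivals = [s for (brand, attr), s in shares.items()
                  if attr == attribute and brand != our_brand]
        if ours is not None and rivals and ours > max(rivals):
            leads.append(attribute)
    return leads


shares = top_two_box(RESPONSES)
for (brand, attribute), share in sorted(shares.items()):
    print(f"{brand:9} {attribute:11} {share:.0%}")
print("we lead on:", attributes_where_we_lead(shares, "OurBrand"))
```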

5) Is the data in need of cleaning?

Insights and analytics based on a shaky “data foundation” will give you… well, poor insights and analytics. As mentioned earlier, information comes from various sources, and they can be good or bad. Every source within a business has its own motivation for providing data, so identifying which information to use, and which source it comes from, should be one of the top questions to ask about data analytics.

Remember – your data analysis questions are designed to get a clear view of reality as it relates to your business being more profitable. If your data is incorrect, you’re going to be seeing a distorted view of reality.

That’s why your next step is to “clean” your datasets by discarding wrong, duplicated, or outdated information. This is also an appropriate time to add more fields to your data to make it more complete and useful. This can be done by a data scientist or by you, depending on the size of the company.
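The three kinds of problem named above (wrong, duplicated, and outdated information) can each be expressed as a rule. A minimal sketch, assuming hypothetical customer records keyed by email, a rough email-shape check standing in for “wrong data”, and an arbitrary cutoff date for “outdated”; your own validation rules would replace these.

```python
from datetime import date

# Hypothetical customer records merged from several sources.
RECORDS = [
    {"email": "ana@example.com", "country": "DE", "updated": date(2024, 3, 1)},
    {"email": " ANA@example.com", "country": "DE", "updated": date(2023, 1, 9)},  # duplicate
    {"email": "ben@example", "country": "FR", "updated": date(2024, 2, 2)},       # malformed email
    {"email": "cy@example.com", "country": "UK", "updated": date(2019, 6, 30)},   # outdated
    {"email": "dee@example.com", "country": "ES", "updated": date(2024, 1, 15)},
]


def looks_like_email(value: str) -> bool:
    """Very rough shape check, a stand-in for whatever validation rules you adopt."""
    local, at, domain = value.partition("@")
    return bool(local) and bool(at) and "." in domain


def clean(rows, cutoff: date):
    """Drop wrong and outdated rows, then keep the newest record per normalized email."""
    newest = {}
    for row in rows:
        email = row["email"].strip().lower()
        if not looks_like_email(email):
            continue  # wrong data
        if row["updated"] < cutoff:
            continue  # outdated data
        kept = newest.get(email)
        if kept is None or row["updated"] > kept["updated"]:
            newest[email] = {**row, "email": email}  # duplicates: the newest record wins
    return sorted(newest.values(), key=lambda r: r["email"])


cleaned = clean(RECORDS, cutoff=date(2022, 1, 1))
for row in cleaned:
    print(row["email"], row["country"], row["updated"].isoformat())
```

Normalizing the email before deduplicating is what lets the two “ana” records collapse into one.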

An interesting survey of data scientists comes from CrowdFlower, a provider of a data enrichment platform. It found that most data scientists spend:

- 60% of their time organizing and cleaning data (!).

- 19% collecting datasets.

- 9% mining the data to draw patterns.

- 3% building training sets.

- 4% refining algorithms.

- 5% on other tasks.

57% of them consider the data cleaning process the most boring and least enjoyable task. If you are a small business owner, you probably don’t need a data scientist, but you will need to clean your data and ensure a proper standard of information.

Yes, this is annoying, but so are many things in life that are very important.

When you’ve done the legwork to ensure your data quality, you’ll have built yourself a useful asset: accurate datasets that can be transformed, joined, and measured with statistical methods. But cleaning is not the only thing you need to do to ensure data quality; there are more things to consider, which we’ll discuss in the next question.

6) How can you ensure data quality?