Tips to Create and Test a Value Hypothesis: A Step-by-Step Guide

Developing a robust value hypothesis is crucial as you bring a new product to market, guiding your startup toward answering a genuine market need. Constructing a verifiable value hypothesis anchors your product's development process in customer feedback and data-driven insight rather than assumptions.

This framework enables you to clarify the potential value your product offers and provides a foundation for testing and refining your approach, significantly reducing the risk of misalignment with your target market. To set the stage for success, employ logical structures and objective measures, such as creating a minimum viable product, to effectively validate your product's value proposition.

What Is a Verifiable Value Hypothesis?

A verifiable value hypothesis articulates your belief about how your product will deliver value to customers. It is a testable prediction aimed at demonstrating the expected outcomes for your target market.

To ensure that your value hypothesis is verifiable, it should adhere to the following conditions:

- Specific: Clearly defines the value proposition and the customer segment.

- Measurable: Includes metrics by which you can assess success or failure.

- Achievable: Realistic based on your resources and market conditions.

- Relevant: Directly addresses a significant customer need or desire.

- Time-Bound: Has a defined period for testing and validation.

When you create a value hypothesis, you're essentially forming the backbone of your business model. It goes beyond a mere assumption and relies on customer feedback data to inform its development. You also safeguard it with objective measures, such as a minimum viable product, to test the hypothesis in real life.

By articulating and examining a verifiable value hypothesis, you understand your product's potential impact and reduce the risk associated with new product development. It's about making informed decisions that increase your confidence in the product's potential success before committing significant resources.

Value Hypotheses vs. Growth Hypotheses

Value hypotheses and growth hypotheses are two distinct concepts often used in business, especially in the context of startups and product development.

Value Hypotheses: A value hypothesis is centered around the product itself. It focuses on whether the product truly delivers customer value. Key questions include whether the product meets a real need, how it compares to alternatives, and whether customers are willing to pay for it. Validating a value hypothesis is crucial before a business scales its operations.

Growth Hypotheses: A growth hypothesis, on the other hand, deals with the scalability and marketing aspects of the business. It involves the strategies and channels used to acquire new customers. The focus is on how to grow the customer base, the cost-effectiveness of growth strategies, and the sustainability of growth. Validating a growth hypothesis is typically the next step after confirming that the product has value to the customers.

In practice, both hypotheses are crucial for the success of a business. A value hypothesis ensures the product is desirable and needed, while a growth hypothesis ensures that the product can reach a larger market effectively.

Tips to Create and Test a Verifiable Value Hypothesis

Creating a value hypothesis is crucial for understanding what drives customer interest in your product. It's an educated guess that requires rigor to define and clarity to test. When developing a value hypothesis, you're attempting to validate assumptions about your product's value to customers. Here are concise tips to help you with this process:

1. Understanding Your Market and Customers

Before formulating a hypothesis, you need a deep understanding of your market and potential customers. You're looking to uncover their pain points and needs which your product aims to address.

Begin with thorough market research and collect customer feedback to ensure your idea is built upon a solid foundation of real-world insights. This understanding is pivotal as it sets the tone for a relevant and testable hypothesis.

- Define Your Value Proposition Clearly: Articulate your product's value to the user. What problem does it solve? How does it improve the user's life or work?

- Identify Your Target Audience: Determine who your ideal customers are. Understand their needs, pain points, and how they currently address the problem your product intends to solve.

2. Defining Clear Assumptions

The next step is to outline clear assumptions based on your idea that you believe will bring value to your customers. Each assumption should be an assertion that directly relates to how your customers will find your product valuable.

For example, if your product is a task management app, you might assume that difficulty sharing task lists with team members is a pain point for your potential customers. Remember, assumptions are not facts; they are educated guesses that need verification.

3. Identify Key Metrics for Your Hypothesis Test

Once you've defined your assumptions, delineate the framework for testing your value hypothesis. This involves designing experiments that validate or invalidate your assumptions with measurable outcomes, such as user engagement metrics, conversion rates, or customer satisfaction scores.

Determine what success looks like and define objective metrics that can demonstrate your product's value, such as user engagement, conversion rates, or revenue. Choosing the right metrics is essential for an accurate test. For instance, you might measure the increase in customer retention or the decrease in time spent on task organization with your app. Construct your test so that the results are unequivocal and actionable.
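As a minimal sketch of what "objective metrics" can look like in practice, the snippet below computes a conversion rate and a 30-day retention rate from per-user records. The `UserRecord` fields, the 30-day window, and the sample data are all assumptions made for illustration; your own analytics export will have a different shape.

```python
from dataclasses import dataclass


@dataclass
class UserRecord:
    # Hypothetical per-user summary; field names are illustrative assumptions.
    user_id: str
    signed_up: bool      # completed sign-up during the test window
    converted: bool      # became a paying customer
    active_day_30: bool  # still active 30 days after sign-up


def conversion_rate(users):
    """Share of signed-up users who converted to paying customers."""
    signed_up = [u for u in users if u.signed_up]
    if not signed_up:
        return 0.0
    return sum(u.converted for u in signed_up) / len(signed_up)


def retention_rate(users):
    """Share of signed-up users who were still active on day 30."""
    signed_up = [u for u in users if u.signed_up]
    if not signed_up:
        return 0.0
    return sum(u.active_day_30 for u in signed_up) / len(signed_up)


# Made-up sample data, not real results.
users = [
    UserRecord("u1", True, True, True),
    UserRecord("u2", True, False, True),
    UserRecord("u3", True, False, False),
    UserRecord("u4", False, False, False),
]
print(f"conversion: {conversion_rate(users):.2f}")
print(f"retention:  {retention_rate(users):.2f}")
```

Deciding the success threshold for each metric before the test starts keeps the result unequivocal: the number either clears the bar or it does not.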

4. Construct a Testable Proposition

Formulate your hypothesis in a way that can be tested empirically. Use qualitative research methods such as interviews, surveys, and observation to gather data about your potential users. Formulate your value hypothesis based on insights from this research. Plan experiments that can validate or invalidate your value hypothesis. This might involve A/B testing, user testing sessions, or pilot programs.

A good example is to posit that "Introducing feature X will increase user onboarding by Y%." Avoid complexity by testing one variable at a time. This helps you identify which changes are actually making a difference.
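One way to check a proposition of the form "feature X will increase onboarding by Y%" is a simple A/B comparison of two groups that differ in only that one variable. The sketch below uses a standard two-proportion z-test built on Python's `statistics.NormalDist`; the function name and the sample counts are hypothetical, and it assumes samples large enough for the normal approximation and a non-zero control rate.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """One-sided test that variant B's rate exceeds control A's rate.

    Returns (relative_lift, z, p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups share one rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 1 - NormalDist().cdf(z)  # area to the right of z
    relative_lift = (p_b - p_a) / p_a
    return relative_lift, z, p_value


# Hypothetical counts: 200 of 1000 users onboarded without feature X,
# 250 of 1000 onboarded with it.
lift, z, p_value = two_proportion_z_test(conv_a=200, n_a=1000, conv_b=250, n_b=1000)
print(f"relative lift: {lift:.0%}, z = {z:.2f}, p-value = {p_value:.4f}")
```

Because only feature X differs between the groups, a small p-value can be attributed to that feature rather than to some other change made at the same time.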

5. Applying Evidence to Innovation

When your data indicates a promising avenue for product development, it's imperative that you validate your value hypothesis through experimentation. Align your value proposition with the evidence at hand.

Start by crafting a minimum viable product (MVP): a simplified version of your product that lets you test the core value proposition with real users without investing in full-scale production. This approach helps mitigate risk by not investing heavily in unproven ideas. Use analytics tools to collect data on how users interact with your MVP. Look for patterns that either support or contradict your value hypothesis.

If the data suggests that your value hypothesis is wrong, be prepared to revise your hypothesis or pivot your product strategy accordingly.

6. Gather Customer Feedback

Integrating customer feedback into your product development process can create a more tailored value proposition. This step is crucial in refining your product to meet user needs and validate your hypotheses.

Use customer feedback tools to collect data on how users interact with your MVP, and look for patterns that either support or contradict your value hypothesis. Here are some ways to collect feedback effectively:

- Feedback portals

- User testing sessions

- In-app feedback

- Website widgets

- Direct interviews

- Focus groups

- Feedback forums

Create a centralized place for product feedback so you can keep track of the different types of customer feedback and improve your product while listening to your customers. Rapidr helps companies be more customer-centric by consolidating feedback across different apps, prioritizing requests, having a discourse with customers, and closing the feedback loop.

7. Analyze and Iterate Quickly

Review the data and analyze customer feedback to see if it supports your hypothesis. If your hypothesis is not supported, iterate on your assumptions, and test again. Keep a detailed record of your hypotheses, experiments, and findings. This documentation will help you understand the evolution of your product and guide future decision-making.

Use the feedback and data from your tests to make quick iterations of your product and drive product development. This allows you to refine your value proposition and improve the fit with your target audience. Engage with your users throughout the process. Real-world feedback is invaluable and can provide insights that data alone cannot.

- Identify Patterns: What commonalities are present in the feedback?

- Implement Changes: Prioritize and make adjustments based on customer insights.
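Identifying patterns can start with something as simple as counting how often each theme appears across feedback channels. The sketch below assumes each feedback item has already been tagged with one or more themes; the dictionary layout, tag names, and sample items are hypothetical.

```python
from collections import Counter


def top_themes(feedback_items, limit=3):
    """Count theme tags across feedback items, most common first."""
    counts = Counter(tag for item in feedback_items for tag in item["tags"])
    return counts.most_common(limit)


# Made-up feedback items from different channels.
feedback = [
    {"source": "in-app", "tags": ["sharing", "onboarding"]},
    {"source": "interview", "tags": ["sharing"]},
    {"source": "widget", "tags": ["pricing"]},
    {"source": "forum", "tags": ["sharing", "pricing"]},
]
print(top_themes(feedback))
```

A ranked list like this is only a starting point for prioritization; how much weight a theme deserves still depends on who raised it and how it relates to your hypothesis.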

8. Align with Business Goals and Stay Customer-Focused

Ensure that your value hypothesis aligns with the broader goals of your business. The value provided should ultimately contribute to the success of the company. Remember that the ultimate goal of your value hypothesis is to deliver something that customers find valuable. Maintain a strong focus on customer needs and satisfaction throughout the process.

9. Communicate with Stakeholders and Keep Them Updated

Keep all stakeholders informed about your findings and the implications for the product. Clear communication helps ensure everyone is aligned and understands the rationale behind product decisions. Communicate and close the feedback loop with the help of a product changelog through which you can announce new changes and engage with customers.

Understanding and validating a value hypothesis is essential for any business, particularly startups. It involves deeply exploring whether a product or service meets customer needs and offers real value. This process ensures that resources are invested in desirable and useful products, and it's a critical step before considering scalability and growth.

By focusing on the value hypothesis, businesses can better align their offerings with market demand, leading to more sustainable success. Placing customer feedback at the center of the process of testing a value hypothesis helps you develop a product that meets your customers' needs and stands out in the market.


Value Hypothesis 101: A Product Manager's Guide


Humans make assumptions every day. It’s our brain’s way of making sense of the world around us, but assumptions are only valuable if they're verifiable. That’s where a value hypothesis comes in as your starting point.

A good hypothesis goes a step beyond an assumption. It’s a verifiable and validated guess based on the value your product brings to your real-life customers. When you verify your hypothesis, you confirm that the product has real-world value, so you have a higher chance of product success.

What Is a Verifiable Value Hypothesis?

A value hypothesis is an educated guess about the value proposition of your product. When you verify your hypothesis, you're using evidence to show that your assumption holds. A hypothesis is verifiable if it can be tested, and it counts as verified when experimentation fails to prove it false or when data, experiments, observation, or tests give it rational justification.

The most significant benefit of verifying a hypothesis is that it helps you avoid product failure and helps you build your product to your customers’ (and potential customers’) needs.

Verifying your assumptions is all about collecting data. Without data obtained through experiments, observations, or tests, your hypothesis is unverifiable, and you can’t be sure there will be a market need for your product.

A Verifiable Value Hypothesis Minimizes Risk and Saves Money

When you verify your hypothesis, you’re less likely to release a product that doesn’t meet customer expectations—a waste of your company’s resources. Harvard Business School explains that verifying a business hypothesis “...allows an organization to verify its analysis is correct before committing resources to implement a broader strategy.”

If you verify your hypothesis upfront, you’ll lower risk and have time to work out product issues.

UserVoice Validation makes product validation accessible to everyone. Consider using its research feature to speed up your hypothesis verification process.

Value Hypotheses vs. Growth Hypotheses

Your value hypothesis focuses on the value of your product to customers. This type of hypothesis can apply to a product or company and is a building block of product-market fit.

A growth hypothesis is a guess at how your business idea may develop in the long term based on how potential customers may find your product. It’s meant for estimating business model growth rather than individual products.

Because your value hypothesis is really the foundation for your growth hypothesis, you should focus on value hypothesis tests first and complete growth hypothesis tests to estimate business growth as a whole once you have a viable product.

4 Tips to Create and Test a Verifiable Value Hypothesis

A verifiable hypothesis needs to be based on a logical structure, customer feedback data, and objective safeguards like creating a minimum viable product. Validating your value significantly reduces risk. You can prevent wasting money, time, and resources by verifying your hypothesis in early-stage development.

A good value hypothesis utilizes a framework (like the template below), data, and checks/balances to avoid bias.

1. Use a Template to Structure Your Value Hypothesis

By using a template structure, you can create an educated guess that includes the most important elements of a hypothesis—the who, what, where, when, and why. If you don’t structure your hypothesis correctly, you may only end up with a flimsy or leap-of-faith assumption that you can’t verify.

A true hypothesis uses a few guesses about your product and organizes them so that you can verify or falsify your assumptions. Using a template to structure your hypothesis can ensure that you’re not missing the specifics.

You can’t just throw a hypothesis together and think it will answer the question of whether your product is valuable or not. If you do, you could end up with faulty data informed by bias, a skewed significance level from polling the wrong people, or only a vague idea of what your customer would actually pay for your product.

A template will help keep your hypothesis on track by standardizing the structure of the hypothesis so that each new hypothesis always includes the specifics of your client personas, the cost of your product, and client or customer pain points.

A value hypothesis template might look like:

[Client] will spend [cost] to purchase and use our [title of product/service] to solve their [specific problem] OR help them overcome [specific obstacle].

An example of your hypothesis might look like:

B2B startups will spend $500/mo to purchase our resource planning software to solve resource over-allocation and employee burnout.
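If you keep hypotheses in a shared document or tool, the template can also be encoded so that no field is silently left out. This is only a sketch using Python's `string.Template`; the function name, field names, and the choice of the "solve their [specific problem]" branch of the template are assumptions for illustration.

```python
from string import Template

# Mirrors the "solve their [specific problem]" branch of the template above.
HYPOTHESIS_TEMPLATE = Template(
    "$client will spend $cost to purchase and use our $product "
    "to solve their $problem."
)


def build_hypothesis(client, cost, product, problem):
    """Fill the template, refusing to produce a hypothesis with blank fields."""
    fields = {
        "client": client,
        "cost": cost,
        "product": product,
        "problem": problem,
    }
    missing = [name for name, value in fields.items() if not value.strip()]
    if missing:
        raise ValueError(f"missing hypothesis fields: {', '.join(missing)}")
    return HYPOTHESIS_TEMPLATE.substitute(fields)


print(build_hypothesis(
    client="B2B startups",
    cost="$500/mo",
    product="resource planning software",
    problem="resource over-allocation and employee burnout",
))
```

Rejecting blank fields is the point of the exercise: a hypothesis without a named customer, price, or problem is the kind of leap-of-faith assumption the template is meant to prevent.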

By organizing your ideas and the important elements (who, what, where, when, and why), you can come up with a hypothesis that actually answers the question of whether your product is useful and valuable to your ideal customer.

2. Turn Customer Feedback into Data to Support Your Hypothesis

Once you have your hypothesis, it’s time to figure out whether it’s true—or, more accurately, prove that it’s valid. Since a hypothesis is never considered “100% proven,” it’s referred to as either valid or invalid based on the information you discover in your experiments or tests. Additionally, your results could lead to an alternative hypothesis, which is helpful in refining your core idea.

To support value hypothesis testing, you need data, and a good source of that data is customer feedback. A customer feedback management tool can also make it easier for your team to access the feedback and create strategies to address customer concerns.

If you find that potential clients are not expressing pain points that could be solved with your product or you’re not seeing an interest in the features you hope to add, you can adjust your hypothesis and absorb a lower risk. Because you didn’t invest a lot of time and money into creating the product yet, you should have more resources to put toward the product once you work out the kinks.

On the other hand, if you find that customers are requesting features your product offers or pain points your product could solve, then you can move forward with product development, confident that your future customers will value (and spend money on) the product you’re creating.

A customer feedback management tool like UserVoice can empower you to challenge assumptions from your colleagues (often based on anecdotal information) which find their way into team decision making. Having data to reevaluate an assumption helps with prioritization, and it confirms that you’re focusing on the right things as an organization.

3. Validate Your Product

Since you have a clear idea of who your ideal customer is at this point and have verified their need for your product, it’s time to validate your product and decide if it’s better than your competitors’.

At this point, simply asking your customers if they would buy your product (or spend more on your product) instead of a competitor’s isn’t enough confirmation that you should move forward, and customers may be biased or reluctant to provide critical feedback.

Instead, create a minimum viable product (MVP). An MVP is a working, bare-bones version of the product that you can test out without risking your whole budget. Hypothesis testing with an MVP simulates the product experience for customers and, based on their actions and usage, validates that the full product will generate revenue and be successful.

If you take the steps to first verify and then validate your hypothesis using data, your product is more likely to do well. Your focus will be on the aspect that matters most—whether your customer actually wants and would invest money in purchasing the product.

4. Use Safeguards to Remain Objective

One of the pitfalls of believing in your product and attempting to validate it is that you’re subject to confirmation bias. Because you want your product to succeed, you may pay more attention to the answers in the collected data that affirm the value of your product and gloss over the information that may lead you to conclude that your hypothesis is actually false. Confirmation bias could easily cloud your vision or skew your metrics without you even realizing it.

Since it’s hard to know when you’re engaging in confirmation bias, it’s good to have safeguards in place to keep you in check and aligned with the purpose of objectively evaluating your value hypothesis.

Safeguards include sharing your findings with third-party experts or simply putting yourself in the customer’s shoes.

Third-party experts are the business version of seeking a peer review. External parties don’t stand to benefit from the outcome of your verification and validation process, so your work is verified and validated objectively. You gain the benefit of knowing whether your hypothesis is valid in the eyes of the people who aren’t stakeholders without the risk of confirmation bias.

In addition to seeking out objective minds, look into potential counter-arguments, such as customer objections (explicit or imagined). What might your customer think about investing the time to learn how to use your product? Will they think the value is commensurate with the monetary cost of the product?

When running an experiment to validate your hypothesis, it’s important not to elevate your beliefs over the objective data you collect. While it can be exciting to push for the validity of your idea, doing so can lead to false assumptions and the acceptance of weak evidence.

Validation Is the Key to Product Success

With your new value hypothesis in hand, you can confidently move forward, knowing that there’s a true need, desire, and market for your product.

Because you’ve verified and validated your guesses, there’s less of a chance that you’re wrong about the value of your product, and there are fewer financial and resource risks for your company. With this strong foundation and the new information you’ve uncovered about your customers, you can add even more value to your product or use it to make more products that fit the market and user needs.

If you think customer feedback management software would be useful in your hypothesis validation process, consider opting into our free trial to see how UserVoice can help.

Heather Tipton



3.1: The Fundamentals of Hypothesis Testing


- Diane Kiernan

- SUNY College of Environmental Science and Forestry via OpenSUNY

The previous two chapters introduced methods for organizing and summarizing sample data, and using sample statistics to estimate population parameters. This chapter introduces the next major topic of inferential statistics: hypothesis testing.

A hypothesis is a statement or claim about a property of a population.

The Fundamentals of Hypothesis Testing

When conducting scientific research, typically there is some known information, perhaps from some past work or from a long accepted idea. We want to test whether this claim is believable. This is the basic idea behind a hypothesis test:

- State what we think is true.

- Quantify how confident we are about our claim.

- Use sample statistics to make inferences about population parameters.

For example, past research tells us that the average life span for a hummingbird is about four years. You have been studying the hummingbirds in the southeastern United States and find a sample mean lifespan of 4.8 years. Should you reject the known or accepted information in favor of your results? How confident are you in your estimate? At what point would you say that there is enough evidence to reject the known information and support your alternative claim? How far from the known mean of four years can the sample mean be before we reject the idea that the average lifespan of a hummingbird is four years?

Definition: hypothesis testing

Hypothesis testing is a procedure, based on sample evidence and probability, used to test claims regarding a characteristic of a population.

A hypothesis is a claim or statement about a characteristic of a population of interest to us. A hypothesis test is a way for us to use our sample statistics to test a specific claim.

Example 1:

The population mean weight is known to be 157 lb. We want to test the claim that the mean weight has increased.

Example 2:

Two years ago, the proportion of infected plants was 37%. We believe that a treatment has helped, and we want to test the claim that there has been a reduction in the proportion of infected plants.

Components of a Formal Hypothesis Test

The null hypothesis is a statement about the value of a population parameter, such as the population mean (µ) or the population proportion (p). It contains the condition of equality and is denoted as H0 (H-naught).

H0: µ = 157 or H0: p = 0.37

The alternative hypothesis is the claim to be tested, the opposite of the null hypothesis. It contains the value of the parameter that we consider plausible and is denoted as H1.

H1: µ > 157 or H1: p ≠ 0.37

The test statistic is a value computed from the sample data that is used in making a decision about the rejection of the null hypothesis. The test statistic converts the sample mean (x̄) or sample proportion (p̂) to a Z- or t-score under the assumption that the null hypothesis is true. It is used to decide whether the difference between the sample statistic and the hypothesized claim is significant.

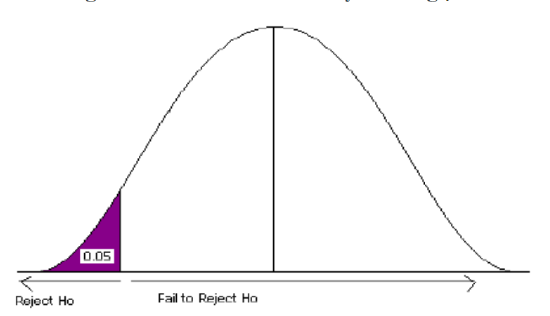

The p-value is the area under the curve to the left or right of the test statistic. It is compared to the level of significance (α).

The critical value is the value that defines the rejection zone (the test statistic values that would lead to rejection of the null hypothesis). It is defined by the level of significance.

The level of significance (α) is the probability that the test statistic will fall into the critical region when the null hypothesis is true. This level is set by the researcher.

The conclusion is the final decision of the hypothesis test. The conclusion must always be clearly stated, communicating the decision based on the components of the test. It is important to realize that we never prove or accept the null hypothesis. We are merely saying that the sample evidence is not strong enough to warrant the rejection of the null hypothesis. The conclusion is made up of two parts:

1) Reject or fail to reject the null hypothesis, and 2) there is or is not enough evidence to support the alternative claim.

Option 1) Reject the null hypothesis (H0). This means that you have enough statistical evidence to support the alternative claim (H1).

Option 2) Fail to reject the null hypothesis (H0). This means that you do NOT have enough evidence to support the alternative claim (H1).
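The components above can be tied together in a short sketch. It applies the usual large-sample Z statistic for a proportion to the infected-plants claim (null value p = 0.37, testing for a reduction) and then states the two-part conclusion. The sample counts are hypothetical, the function name is an assumption, and the formula used here is the standard one-proportion Z statistic, which is assumed to match the formula shown in the figure above.

```python
from math import sqrt
from statistics import NormalDist


def one_proportion_z_test(successes, n, p0, alternative):
    """Return (z, p_value) for H0: p = p0 against the given alternative."""
    p_hat = successes / n
    # Standard error computed under the assumption that H0 is true.
    se = sqrt(p0 * (1 - p0) / n)
    z = (p_hat - p0) / se
    cdf = NormalDist().cdf(z)
    if alternative == "less":          # left-sided: area to the left of z
        p_value = cdf
    elif alternative == "greater":     # right-sided: area to the right of z
        p_value = 1 - cdf
    elif alternative == "two-sided":   # both tails
        p_value = 2 * min(cdf, 1 - cdf)
    else:
        raise ValueError("alternative must be 'less', 'greater', or 'two-sided'")
    return z, p_value


alpha = 0.05  # level of significance, chosen by the researcher
# Hypothetical sample: 120 infected plants out of 400 inspected.
z, p_value = one_proportion_z_test(successes=120, n=400, p0=0.37, alternative="less")

if p_value < alpha:
    conclusion = "Reject H0: there is enough evidence to support the alternative claim."
else:
    conclusion = "Fail to reject H0: there is not enough evidence to support the alternative claim."
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print(conclusion)
```

Note that the second branch never says "accept H0"; it only reports that the sample evidence was not strong enough to reject it.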

Another way to think about hypothesis testing is to compare it to the US justice system. A defendant is innocent until proven guilty (Null hypothesis—innocent). The prosecuting attorney tries to prove that the defendant is guilty (Alternative hypothesis—guilty). There are two possible conclusions that the jury can reach. First, the defendant is guilty (Reject the null hypothesis). Second, the defendant is not guilty (Fail to reject the null hypothesis). This is NOT the same thing as saying the defendant is innocent! In the first case, the prosecutor had enough evidence to reject the null hypothesis (innocent) and support the alternative claim (guilty). In the second case, the prosecutor did NOT have enough evidence to reject the null hypothesis (innocent) and support the alternative claim of guilty.

The Null and Alternative Hypotheses

There are three different pairs of null and alternative hypotheses:

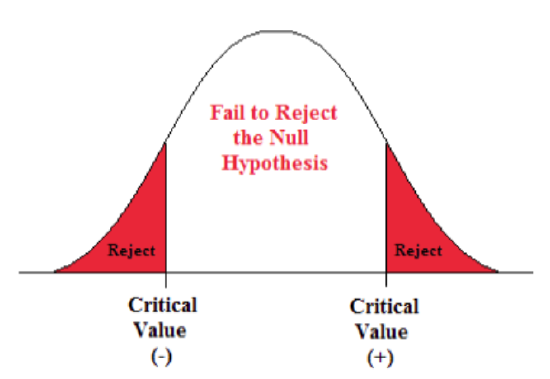

Table 1: The rejection zone for a two-sided hypothesis test.

where c is some known value.

A Two-sided Test

This tests whether the population parameter is equal to, versus not equal to, some specific value.

H0: μ = 12 vs. H1: μ ≠ 12

The critical region is divided equally into the two tails and the critical values are ± values that define the rejection zones.

Example 3:

A forester studying diameter growth of red pine believes that the mean diameter growth will be different if a fertilization treatment is applied to the stand.

- H0: μ = 1.2 in./year

- H1: μ ≠ 1.2 in./year

This is a two-sided question, as the forester doesn’t state whether population mean diameter growth will increase or decrease.

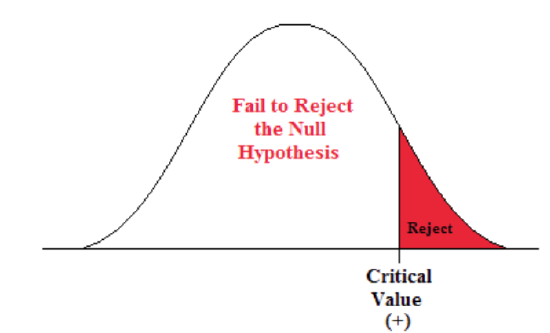

A Right-sided Test

This tests whether the population parameter is equal to, versus greater than, some specific value.

H0: μ = 12 vs. H1: μ > 12

The critical region is in the right tail and the critical value is a positive value that defines the rejection zone.

Example 4:

A biologist believes that there has been an increase in the mean number of lakes infected with milfoil, an invasive species, since the last study five years ago.

- H0: μ = 15 lakes

- H1: μ > 15 lakes

This is a right-sided question, as the biologist believes that there has been an increase in population mean number of infected lakes.

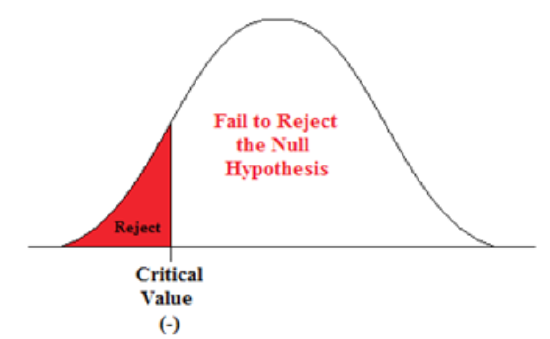

A Left-sided Test

This tests whether the population parameter is equal to, versus less than, some specific value.

H0: μ = 12 vs. H1: μ < 12

The critical region is in the left tail and the critical value is a negative value that defines the rejection zone.

Example 5:

A scientist’s research indicates that there has been a change in the proportion of people who support certain environmental policies. He wants to test the claim that there has been a reduction in the proportion of people who support these policies.

- H0: p = 0.57

- H1: p < 0.57

This is a left-sided question, as the scientist believes that there has been a reduction in the true population proportion.
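The three rejection zones described above can be sketched for a Z statistic as follows. This assumes the test statistic follows the standard normal distribution (a t-based test would need t critical values instead), and the function names are hypothetical.

```python
from statistics import NormalDist


def critical_values(alpha, alternative):
    """Critical value(s) of a Z test at significance level alpha."""
    z = NormalDist()
    if alternative == "two-sided":
        # alpha is split equally between the two tails.
        upper = z.inv_cdf(1 - alpha / 2)
        return (-upper, upper)
    if alternative == "greater":   # right-sided: one positive critical value
        return (z.inv_cdf(1 - alpha),)
    if alternative == "less":      # left-sided: one negative critical value
        return (z.inv_cdf(alpha),)
    raise ValueError("alternative must be 'two-sided', 'greater', or 'less'")


def in_rejection_zone(z_stat, alpha, alternative):
    """True when the test statistic falls in the rejection zone."""
    crit = critical_values(alpha, alternative)
    if alternative == "two-sided":
        return z_stat <= crit[0] or z_stat >= crit[1]
    if alternative == "greater":
        return z_stat >= crit[0]
    return z_stat <= crit[0]


for alt in ("two-sided", "greater", "less"):
    rounded = tuple(round(c, 3) for c in critical_values(0.05, alt))
    print(alt, rounded)
```

At the same α, a one-sided test places its single critical value closer to zero than either of the two-sided critical values, because the whole rejection probability sits in one tail.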

Statistically Significant

When the observed results (the sample statistics) are unlikely (a low probability) under the assumption that the null hypothesis is true, we say that the result is statistically significant, and we reject the null hypothesis. This result depends on the level of significance, the sample statistic, sample size, and whether it is a one- or two-sided alternative hypothesis.

Types of Errors

When testing, we arrive at a conclusion of rejecting the null hypothesis or failing to reject the null hypothesis. Such conclusions are sometimes correct and sometimes incorrect (even when we have followed all the correct procedures). We use incomplete sample data to reach a conclusion and there is always the possibility of reaching the wrong conclusion. There are four possible conclusions to reach from hypothesis testing. Of the four possible outcomes, two are correct and two are NOT correct.

Table 2. Possible outcomes from a hypothesis test.

A Type I error is when we reject the null hypothesis when it is true. The symbol α (alpha) is used to represent the probability of a Type I error. This is the same alpha we use as the level of significance. By setting alpha as low as reasonably possible, we try to control the Type I error through the level of significance.

A Type II error is when we fail to reject the null hypothesis when it is false. The symbol β (beta) is used to represent the probability of a Type II error.

In general, Type I errors are considered more serious. One step in the hypothesis test procedure involves selecting the significance level (α), which is the probability of rejecting the null hypothesis when it is correct. So the researcher can select the level of significance that minimizes Type I errors. However, there is a mathematical relationship between α, β, and n (sample size).

- As α increases, β decreases

- As α decreases, β increases

- As sample size (n) increases, both α and β can be decreased

The natural inclination is to select the smallest possible value for α, thinking to minimize the possibility of causing a Type I error. Unfortunately, this forces an increase in Type II errors. By making the rejection zone too small, you may fail to reject the null hypothesis, when, in fact, it is false. Typically, we select the best sample size and level of significance, automatically setting β.

Power of the Test

The probability of a Type II error (β) is the probability of failing to reject a false null hypothesis. It follows that 1-β is the probability of rejecting a false null hypothesis. This probability is identified as the power of the test, and is often used to gauge the test’s effectiveness in recognizing that a null hypothesis is false.

Definition: power of the test

The power of the test is the probability that a significance test at a fixed level α will reject H0 when a particular alternative value of the parameter is true.

Power is also directly linked to sample size. For example, suppose the null hypothesis is that the mean fish weight is 8.7 lb. Given sample data, a level of significance of 5%, and an alternative weight of 9.2 lb., we can compute the power of the test to reject μ = 8.7 lb. If we have a small sample size, the power will be low. However, increasing the sample size will increase the power of the test. Increasing the level of significance will also increase power. A 5% test of significance will have a greater chance of rejecting the null hypothesis than a 1% test because the strength of evidence required for the rejection is less. Decreasing the standard deviation has the same effect as increasing the sample size: there is more information about μ.
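The fish-weight example can be sketched in Python under some added assumptions: a one-sided z-test and a population standard deviation of 1.5 lb. Neither is given in the text, and the helper names `power` and `smallest_n_for_power` are hypothetical. The sketch computes the power to reject μ = 8.7 lb when the true mean is 9.2 lb, then searches for the smallest sample size that reaches a target power.

```python
from statistics import NormalDist

MU_NULL = 8.7   # mean fish weight under H0 (lb), from the example
MU_ALT = 9.2    # alternative mean weight (lb), from the example
SIGMA = 1.5     # assumed population standard deviation (lb); not given in the text


def power(n: int, alpha: float = 0.05, sigma: float = SIGMA) -> float:
    """Power of an upper-tailed z-test of H0: mu = MU_NULL against mu = MU_ALT."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)
    shift = (MU_ALT - MU_NULL) * n ** 0.5 / sigma
    return 1 - std_normal.cdf(z_crit - shift)


def smallest_n_for_power(target: float = 0.80, alpha: float = 0.05) -> int:
    """Smallest sample size whose power reaches the target."""
    n = 2
    while power(n, alpha) < target:
        n += 1
    return n


for n in (10, 30, 100):
    print(f"n={n:>3}: power at 5% = {power(n):.3f}, at 1% = {power(n, alpha=0.01):.3f}")
print("smallest n for 80% power at the 5% level:", smallest_n_for_power())
```

The loop is a brute-force search chosen for readability; a closed-form sample-size formula would give the same answer for this simple setup.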


Value Hypothesis & Growth Hypothesis: lean startup validation

Posted on September 16, 2021

You’ve come up with a fantastic idea for a startup, but you’re not sure whether it’s viable. What do you do next? It’s essential to get your ideas right before you start developing them. 95% of new products fail in their first year of launch. Or to put it another way, only one in twenty product ideas succeeds. In this article, we’ll look at why it’s so important to validate your startup idea before you spend a lot of time and money developing it. That’s where the Lean Startup Validation process comes in, alongside the growth hypothesis and the value hypothesis. We’ll also look at the questions that you need to ask.

Table of contents

- The lean startup validation methodology

- The benefits of validating your startup idea

- The value hypothesis

- The growth hypothesis

- Recommendations and questions for creating and running a good hypothesis

- In conclusion – take the time to validate your product

What does it mean to validate a lean startup?

Validating your lean startup idea may sound like a complicated process, but it’s a lot simpler than you may think. It may be the case that you were already planning on carrying out some of the work.

Essentially, validating your startup means checking whether your idea solves a problem that your prospective customers have. You can do this by creating hypotheses and then carrying out research to see if these hypotheses are true or false.

The best startups have always been about finding a gap in the market and offering a product or service that solves the problem. For example, take Airbnb. Before Airbnb launched, most travelers only had the option of staying in hotels. Airbnb opened up the hospitality industry, offering cheaper accommodation to people who could not afford to stay in expensive hotels.

“Don’t be in a rush to get big. Be in a rush to have a great product” – Eric Ries

Validation is a crucial part of the lean startup methodology, which was devised by entrepreneur Eric Ries. The lean startup methodology is all about optimizing the amount of time that is needed to ensure a product or service is viable.

Lean Startup Validation is a critical part of the lean startup process as it helps make sure that an idea will be successful before time is spent developing the final product.

As an example of a failed idea where more validation could have helped, take Google Glass. It sounded like a good idea on paper, but the technology failed spectacularly. Customer research would have shown that $1,500 was too much money, that people were worried about health and safety, and most importantly… there was no apparent benefit to the product.


The benefits of validating your startup idea

The key benefit of validating your lean startup idea is to make sure that the idea you have is a viable one before you start using resources to build and promote it.

There are other less obvious benefits too:

- It can help you fine-tune your idea. So, it may be the case that you wanted your idea to go in a particular direction, but user research shows that pivoting may be the best thing to do

- It can help you get funding. Investors may be more likely to invest in your startup idea if you have evidence that your idea is a viable one

The value hypothesis and the growth hypothesis: two ways to validate your idea

“To grow a successful business, validate your idea with customers” – Chad Boyda

In his book ‘The Lean Startup’, Eric Ries identifies two different types of hypotheses that entrepreneurs can use to validate their startup idea: the growth hypothesis and the value hypothesis.

Let’s look at the two different ideas, how they compare, and how you can use them to see if your startup idea could work.

The value hypothesis

The value hypothesis tests whether your product or service provides customers with enough value and, most importantly, whether they are prepared to pay for this value.

For example, let’s say that you want to develop a mobile app to help dog owners find people to help walk their dogs while they are at work. Before you start spending serious time and money developing the app, you’ll want to see if it is something of interest to your target audience.

Your value hypothesis could say, “we believe that 60% of dog owners aged between 30 and 40 would be willing to pay upwards of €10 a month for this service.”

You then find dog owners in this age range and ask them the question. You’re pleased to see that 75% say that they would be willing to pay this amount! Your hypothesis has worked! This means that you should focus your app and your advertising on this target audience.

If the data comes back and says your prospective target audience isn’t willing to pay, then it means you have to rethink and reframe your app before running another hypothesis. For example, you may want to focus on another demographic, or look at reducing the price of the subscription.
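How convincing the 75% figure is depends on how many dog owners you asked, which the example does not say. As a rough sketch, assuming a simple random sample and a normal approximation, a one-sided test of the 60% threshold might look like this in Python. The sample sizes (200 and 20) and the function name `value_hypothesis_p_value` are hypothetical.

```python
from statistics import NormalDist


def value_hypothesis_p_value(yes: int, n: int, threshold: float = 0.60) -> float:
    """One-sided p-value for H0: p <= threshold vs. H1: p > threshold.

    Uses the normal approximation to the binomial, so it is only a rough
    guide when n is small.
    """
    p_hat = yes / n
    std_err = (threshold * (1 - threshold) / n) ** 0.5
    z = (p_hat - threshold) / std_err
    return 1 - NormalDist().cdf(z)


# Both hypothetical surveys show 75% willing to pay 10 euros a month.
large = value_hypothesis_p_value(yes=150, n=200)
small = value_hypothesis_p_value(yes=15, n=20)
print(f"200 respondents: p-value = {large:.6f}")
print(f" 20 respondents: p-value = {small:.3f}")
```

Under these assumptions, the same 75% result is strong evidence with 200 respondents but falls short of the usual 5% significance level with only 20, which is one reason to survey as many people as time and money allow.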

Shoe retailer Zappos used a value hypothesis when starting out. Founder Nick Swinmurn went to local shoe stores, taking photos of the shoes and posting them on the Zappos website. Then, if customers bought the shoes, he’d buy them from the store and send them out to them. This allowed him to see if there was interest in his website, without having to spend lots of money on stock.

The growth hypothesis

The growth hypothesis tests how your customers will find your product or service and shows how your potential product could grow over the years.

Let’s go back to the dog-walking app we talked about earlier. You think that 80% of app downloads will come from word-of-mouth recommendations.

You create a minimum viable product (MVP for short) – this is a basic version of your app that may not contain all of the features just yet. You then upload it to the app stores and wait for people to start downloading it. When you have a baseline of customers, you send them an email asking them how they heard of your app.

When the feedback comes back, it shows that only 30% of downloads have come from word-of-mouth recommendations. This means that your growth hypothesis has not been successful in this scenario.

Does this mean that your idea is a bad one? Not necessarily. It just means that you may have to look at other ways of promoting your app. If you are relying on word-of-mouth recommendations to advertise it, then it could potentially fail.

Dropbox used growth hypotheses to its advantage when creating its software. The file-storage company constantly tweaked its website, running A/B tests to see which features and changes were most popular with customers, using them in the final product.
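An A/B test of this kind is often analyzed with a two-proportion z-test. The sketch below is a generic illustration, not Dropbox's actual method; the visitor and sign-up counts are made up, and the function name `ab_test_p_value` is hypothetical.

```python
from statistics import NormalDist


def ab_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for H0: both variants convert at the same rate."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)          # shared rate under H0
    std_err = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical counts: 2,400 visitors per variant.
p_value = ab_test_p_value(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
print(f"A: {120 / 2400:.1%}, B: {156 / 2400:.1%}, p-value = {p_value:.3f}")
```

A small p-value suggests the difference between variants is unlikely to be chance alone. Testing one change at a time, as recommended below, keeps such a result interpretable.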

Recommendations and questions for creating and running a good hypothesis

Like any good science experiment, there are things that you need to bear in mind when running your hypotheses. Here are our recommendations:

- You may be wondering which type of hypothesis you should carry out first – a growth hypothesis or a value hypothesis. Eric Ries recommends carrying out a value hypothesis first, as it makes sense to see if there is interest before seeing how many people are interested. However, the precise order may depend on the type of product or service you want to sell;

- You will probably need to run multiple hypotheses to validate your product or service. If you do this, be sure to only test one hypothesis at a time. If you end up testing multiple ones in one go, you may not be sure which hypothesis has had which result;

- Test your most critical assumption first – this is one that you are most worried about, and could affect your idea the most. It may be that solving this issue makes your product or service a viable one;

- Make sure each hypothesis is SMART:

- Specific – is your hypothesis simple? If it’s jumbled or confusing, you’re not going to get the best results from it. If you’re struggling to put together a clear hypothesis, it’s probably a sign to go back to the drawing board.

- Measurable – can your hypothesis be measured? You’ll want to get tangible results so you can check if the changes you have made have worked.

- Achievable – is your hypothesis attainable? If not, you may want to break it down into smaller goals.

- Relevant – will your hypothesis prove the validity of your product or service?

- Timely – can your hypothesis be measured in a set amount of time? You don’t want a goal that will take years to monitor and measure!

- Be as critical as possible. If you have created an idea, it is only natural that you want it to succeed. However, being objective rather than subjective will help your startup most in the long term;

- When you are carrying out customer research, use as vast a pool of people as time and money will allow. This will result in more accurate data. The great news is that you can use social media and other networking sites to reach out to potential customers and ask them their opinions;

- When carrying out customer research, be sure to ask the questions that matter. Bear in mind that liking your product or service isn’t the same as buying it. If a customer is enthusiastic about your idea, be sure to ask follow-on questions about why they like it, or if they would be willing to spend money on it. Otherwise, your data may end up being useless;

- While it is essential to have as many relevant hypotheses as possible, be careful not to have too many. While it may sound like a good idea to try out lots of different ideas, it can actually be counter-productive. As Eric Ries said:

“Don’t bog new teams down with too much information about falsifiable hypotheses. Because if we load our teams up with too much theory, they can easily get stuck in analysis paralysis. I’ve worked with teams that have come up with hundreds of leap-of-faith assumptions. They listed so many assumptions that were so detailed and complicated that they couldn’t decide what to do next. They were paralyzed by the just sheer quantity of the list.”

In conclusion – take the time to validate your product

“We must learn what customers really want, not what they say they want or what we think they should want.” – Eric Ries

According to CB Insights, the number one reason why startups fail is that there is no demand for the product. Many entrepreneurs have gone ahead and launched a product that they think people want, only to find that there is no market at all.

Lean Startup Validation is essential in helping your business idea to succeed. While it may seem like extra work, the additional work you do in the beginning will be a critical advantage later down the line.

Still not 100% convinced? Take HubSpot. Before HubSpot launched its sales and marketing services, it started off as a blog. Co-founders Dharmesh Shah and Brian Halligan used this blog to validate their ideas and see what their visitors wanted. This helped them confirm that their concept was on the right lines and meant they could launch a product that people actually wanted to use.

Validating a startup idea before development is crucial because it ensures that the idea is viable and addresses a real problem that customers have. With a high failure rate of new products, validation helps avoid wasting time and resources on ideas that might not succeed.

The value hypothesis tests whether customers find enough value in a product or service to pay for it. The growth hypothesis examines how customers will discover and adopt the product over time. Both hypotheses are essential for validating the viability of a startup idea.

Eric Ries recommends starting with a value hypothesis before a growth hypothesis. Validating whether the idea provides value is crucial before considering how to promote and grow it.

When creating and running a hypothesis, consider the following: 1. Focus on testing one hypothesis at a time. 2. Test your most critical assumptions first. 3. Ensure your hypothesis follows SMART goals (Specific, Measurable, Achievable, Relevant, Timely). 4. Use a wide pool of potential customers for accurate data. 5. Ask relevant and probing questions during customer research. 6. Avoid overwhelming your team with excessive hypotheses.

Validating your product idea before development helps you avoid the top reason for startup failure—lack of demand for the product. By confirming that there is a market need and interest in your idea, you increase the chances of building a successful product.

Lean Startup Validation helps entrepreneurs avoid the mistake of launching a product that doesn’t address a genuine need. By gathering evidence and feedback early, you can make informed decisions about pivoting or refining your idea before investing significant time and resources.

As an example of a value hypothesis, suppose you’re developing a mobile app for dog owners to find dog-walking services. Your value hypothesis could be: “We believe that 60% of dog owners aged between 30 and 40 would be willing to pay upwards of €10 a month for this service.” You then validate this hypothesis by surveying dog owners in that age range and analyzing their responses.

The growth hypothesis examines how customers will discover and adopt your product. If, for example, you expect 80% of app downloads to come from word-of-mouth recommendations, but feedback shows only 30% are from this source, you may need to reevaluate your promotion strategy.

Yes, Lean Startup Validation can be applied to startups across various industries. Whether you’re offering a product or service, the process of testing hypotheses and gathering evidence applies universally to ensure the viability of your idea.

To gather accurate data, focus on reaching a diverse pool of potential customers through various channels, including social media and networking sites. Ask relevant questions about their preferences, willingness to pay, and potential pain points related to your idea.

Being critical and objective during validation helps you avoid confirmation bias and wishful thinking. Objectivity allows you to assess whether your idea truly addresses a problem and resonates with customers, ensuring that your startup’s foundation is built on solid evidence.


How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.


- The Scientific Method

- Hypothesis Format

- Falsifiability of a Hypothesis

- Operationalization

- Hypothesis Types

- Hypotheses Examples

- Collecting Data

A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. At this point, researchers then begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

In many cases, researchers might draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high-stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low-stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of a folk adage that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

How to Formulate a Good Hypothesis

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

Falsifiability of a Hypothesis

In the scientific method, falsifiability is an important part of any valid hypothesis. In order to test a claim scientifically, it must be possible that the claim could be proven false.

Students sometimes confuse falsifiability with the idea that something is false, which is not the case. Falsifiability means that if something were false, it would be possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

The Importance of Operational Definitions

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

Operational definitions are specific definitions for all relevant factors in a study. This process helps make vague or ambiguous concepts detailed and measurable.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in various ways. Clearly defining these variables and how they are measured helps ensure that other researchers can replicate your results.

Replicability

One of the basic principles of any type of scientific research is that the results must be replicable.

Replication means repeating an experiment in the same way to produce the same results. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. For example, how would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

To measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming others. The researcher might utilize a simulated task to measure aggressiveness in this situation.

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

Hypothesis Types

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis : This type of hypothesis suggests there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis : This type suggests a relationship between three or more variables, such as two independent variables and one dependent variable.

- Null hypothesis : This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis : This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis : This hypothesis uses statistical analysis to evaluate a representative population sample and then generalizes the findings to the larger group.

- Logical hypothesis : This hypothesis assumes a relationship between variables without collecting data or evidence.

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

- "Children who receive a new reading intervention will have higher reading scores than children who do not receive the intervention."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "There is no difference in anxiety levels between people who take St. John's wort supplements and those who do not."

- "There is no difference in scores on a memory recall task between children and adults."

- "There is no difference in aggression levels between children who play first-person shooter games and those who do not."

Examples of an alternative hypothesis:

- "People who take St. John's wort supplements will have less anxiety than those who do not."

- "Adults will perform better on a memory task than children."

- "Children who play first-person shooter games will show higher levels of aggression than children who do not."

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on exactly what they are studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research methods such as case studies, naturalistic observations, and surveys are often used when conducting an experiment is difficult or impossible. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can examine how the variables are related. This research method might be used to investigate a hypothesis that is difficult to test experimentally.

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.
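When participants are randomly assigned to conditions, one simple way to weigh a null hypothesis against its alternative is a permutation test. The following Python sketch uses the reading-intervention example from earlier; the scores are invented for illustration, and the function name `permutation_p_value` is hypothetical.

```python
import random


def permutation_p_value(treated, control, n_resamples=10_000, seed=0):
    """One-sided p-value for H1: the treated group has the higher mean.

    Under the null hypothesis the group labels are interchangeable, so we
    reshuffle them and count how often the shuffled difference in means is
    at least as large as the observed one.
    """
    rng = random.Random(seed)
    n_t = len(treated)
    observed = sum(treated) / n_t - sum(control) / len(control)
    pooled = list(treated) + list(control)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = sum(pooled[:n_t]) / n_t - sum(pooled[n_t:]) / len(control)
        if diff >= observed:
            hits += 1
    return (hits + 1) / (n_resamples + 1)   # add-one correction avoids p = 0


# Invented reading scores for illustration only.
intervention = [78, 85, 82, 90, 88, 84, 91, 86]
no_intervention = [75, 80, 72, 83, 79, 77, 81, 76]
print(f"p-value = {permutation_p_value(intervention, no_intervention):.4f}")
```

A small p-value here would count as evidence against the null hypothesis of no difference. It would not prove the alternative, and the conclusion is only as good as the random assignment behind the data.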

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.


A Beginner’s Guide to Hypothesis Testing in Business

30 Mar 2021

Becoming a more data-driven decision-maker can bring several benefits to your organization, enabling you to identify new opportunities to pursue and threats to abate. Rather than allowing subjective thinking to guide your business strategy, backing your decisions with data can empower your company to become more innovative and, ultimately, profitable.

If you’re new to data-driven decision-making, you might be wondering how data translates into business strategy. The answer lies in generating a hypothesis and verifying or rejecting it based on what various forms of data tell you.

Below is a look at hypothesis testing and the role it plays in helping businesses become more data-driven.


What Is Hypothesis Testing?

To understand what hypothesis testing is, it’s important first to understand what a hypothesis is.

A hypothesis or hypothesis statement seeks to explain why something has happened, or what might happen, under certain conditions. It can also be used to understand how different variables relate to each other. Hypotheses are often written as if-then statements; for example, “If this happens, then this will happen.”

Hypothesis testing, then, is a statistical means of testing an assumption stated in a hypothesis. While the specific methodology leveraged depends on the nature of the hypothesis and data available, hypothesis testing typically uses sample data to extrapolate insights about a larger population.

Hypothesis Testing in Business

Data-driven decision-making carries its own risk of being misled. This could be due to flawed thinking or observations, incomplete or inaccurate data, or the presence of unknown variables. The danger is that, if major strategic decisions are made based on flawed insights, they can lead to wasted resources, missed opportunities, and catastrophic outcomes.

The real value of hypothesis testing in business is that it allows professionals to test their theories and assumptions before putting them into action. This essentially allows an organization to verify its analysis is correct before committing resources to implement a broader strategy.

As one example, consider a company that wishes to launch a new marketing campaign to revitalize sales during a slow period. Doing so could be an incredibly expensive endeavor, depending on the campaign’s size and complexity. The company, therefore, may wish to test the campaign on a smaller scale to understand how it will perform.

In this example, the hypothesis that’s being tested would fall along the lines of: “If the company launches a new marketing campaign, then it will translate into an increase in sales.” It may even be possible to quantify how much of a lift in sales the company expects to see from the effort. Pending the results of the pilot campaign, the business would then know whether it makes sense to roll it out more broadly.


Key Considerations for Hypothesis Testing

1. Alternative Hypothesis and Null Hypothesis

In hypothesis testing, the hypothesis that’s being tested is known as the alternative hypothesis. Often, it’s expressed as a correlation or statistical relationship between variables. The null hypothesis, on the other hand, is a statement that’s meant to show there’s no statistical relationship between the variables being tested. It’s typically the exact opposite of whatever is stated in the alternative hypothesis.

For example, consider a company’s leadership team that historically and reliably sees $12 million in monthly revenue. They want to understand if reducing the price of their services will attract more customers and, in turn, increase revenue.

In this case, the alternative hypothesis may take the form of a statement such as: “If we reduce the price of our flagship service by five percent, then we’ll see an increase in sales and realize revenues greater than $12 million in the next month.”

The null hypothesis, on the other hand, would indicate that revenues wouldn’t increase from the base of $12 million, or might even decrease.
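Written formally, the pair of hypotheses in this example might look like the following. The symbol \(\mu\), standing for the expected monthly revenue in millions of dollars after the price reduction, is notation assumed here for illustration and does not come from the example itself.

\[
H_0: \mu \le 12 \qquad \text{versus} \qquad H_A: \mu > 12
\]

Between them, the two statements cover every possible outcome, so the data can support only one of the two.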


2. Significance Level and P-Value

Statistically speaking, if you were to run the same scenario 100 times, you’d likely receive somewhat different results each time. If you were to plot these results in a distribution plot, you’d see the most likely outcome is at the tallest point in the graph, with less likely outcomes falling to the right and left of that point.

With this in mind, imagine you’ve completed your hypothesis test and have your results, which indicate there may be a correlation between the variables you were testing. To understand your results' significance, you’ll need to identify a p-value for the test, which indicates how strong the evidence against the null hypothesis is.

In statistics, the p-value is the probability that, assuming the null hypothesis is correct, you would observe results at least as extreme as the results of your hypothesis test. The smaller the p-value, the stronger the evidence against the null hypothesis, and the greater the statistical significance of your results. The significance level is the threshold, chosen before running the test, below which a p-value is considered small enough to reject the null hypothesis.
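As a rough illustration of that definition, the sketch below computes an exact p-value for a hypothetical pilot in which 16 of 100 customers make a purchase, against a historical purchase rate of 10 percent. The scenario, the numbers, and the function name `binomial_upper_p_value` are all assumptions made up for this example.

```python
from math import comb


def binomial_upper_p_value(successes, trials, null_rate):
    """P(X >= successes) when X ~ Binomial(trials, null_rate).

    This is the probability, assuming the null hypothesis is correct,
    of a result at least as extreme as the one observed.
    """
    return sum(
        comb(trials, k) * null_rate**k * (1 - null_rate) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Hypothetical pilot: 16 purchases out of 100, null purchase rate of 10%.
p = binomial_upper_p_value(successes=16, trials=100, null_rate=0.10)
print(f"p-value = {p:.4f}")
```

A p-value below the chosen significance level would count as evidence against the null hypothesis that the purchase rate is unchanged.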

3. One-Sided vs. Two-Sided Testing

When it’s time to test your hypothesis, it’s important to leverage the correct testing method. The two most common hypothesis testing methods are one-sided and two-sided tests, also called one-tailed and two-tailed tests.

Typically, you’d leverage a one-sided test when you have a strong conviction about the direction of change you expect to see due to your hypothesis test. You’d leverage a two-sided test when you’re less confident in the direction of change.

4. Sampling

To perform hypothesis testing in the first place, you need to collect a sample of data to be analyzed. Depending on the question you’re seeking to answer or investigate, you might collect samples through surveys, observational studies, or experiments.

A survey involves asking a series of questions to a random population sample and recording self-reported responses.

Observational studies involve a researcher observing a sample population and collecting data as it occurs naturally, without intervention.

Finally, an experiment involves dividing a sample into multiple groups, one of which acts as the control group. For each non-control group, the variable being studied is manipulated to determine how the data collected differs from that of the control group.
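The random split at the heart of an experiment can be sketched in a few lines. This is a minimal illustration that assumes a single treatment group; the function name `assign_groups` and the customer identifiers are hypothetical.

```python
import random


def assign_groups(units, seed=None):
    """Randomly split units into a control group and a treatment group."""
    rng = random.Random(seed)      # a seed makes the split reproducible
    shuffled = list(units)         # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical sample of 20 customers.
customers = [f"customer_{i}" for i in range(20)]
control, treatment = assign_groups(customers, seed=42)
print(len(control), len(treatment))
```

Assigning units at random, rather than by choice, helps keep unknown variables from lining up with the treatment.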

Learn How to Perform Hypothesis Testing

Hypothesis testing is a complex process involving different moving pieces that can allow an organization to effectively leverage its data and inform strategic decisions.

If you’re interested in better understanding hypothesis testing and the role it can play within your organization, one option is to complete a course that focuses on the process. Doing so can lay the statistical and analytical foundation you need to succeed.



S.3.2 Hypothesis Testing (P-Value Approach)

The P-value approach involves determining "likely" or "unlikely" by determining the probability, assuming the null hypothesis were true, of observing a more extreme test statistic in the direction of the alternative hypothesis than the one observed. If the P-value is small, say less than (or equal to) \(\alpha\), then it is "unlikely." And, if the P-value is large, say more than \(\alpha\), then it is "likely."

If the P-value is less than (or equal to) \(\alpha\), then the null hypothesis is rejected in favor of the alternative hypothesis. And, if the P-value is greater than \(\alpha\), then the null hypothesis is not rejected.

Specifically, the four steps involved in using the P-value approach to conducting any hypothesis test are:

- Specify the null and alternative hypotheses.

- Using the sample data and assuming the null hypothesis is true, calculate the value of the test statistic. Again, to conduct the hypothesis test for the population mean \(\mu\), we use the t-statistic \(t^*=\frac{\bar{x}-\mu}{s/\sqrt{n}}\), which follows a t-distribution with \(n - 1\) degrees of freedom.

- Using the known distribution of the test statistic, calculate the P-value: "If the null hypothesis is true, what is the probability that we'd observe a more extreme test statistic in the direction of the alternative hypothesis than we did?" (Note how this question is equivalent to the question answered in criminal trials: "If the defendant is innocent, what is the chance that we'd observe such extreme criminal evidence?")

- Set the significance level, \(\alpha\), the probability of making a Type I error, to be small (0.01, 0.05, or 0.10). Compare the P-value to \(\alpha\). If the P-value is less than (or equal to) \(\alpha\), reject the null hypothesis in favor of the alternative hypothesis. If the P-value is greater than \(\alpha\), do not reject the null hypothesis.
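The four steps above can be sketched in plain Python. This is a minimal illustration, not the course's own procedure: the tail area is approximated by numerical integration (Simpson's rule) so the block has no dependencies beyond the standard library, and the names `t_pdf`, `t_upper_tail`, `p_value`, and `t_test` are hypothetical helpers introduced here.

```python
import math
from statistics import mean, stdev


def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    log_coef = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    coef = math.exp(log_coef) / math.sqrt(df * math.pi)
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def t_upper_tail(t, df, steps=2000):
    """P(T > t), using Simpson's rule on [0, |t|] and the density's symmetry."""
    a = abs(t)
    h = a / steps                      # steps must be even for Simpson's rule
    total = t_pdf(0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = total * h / 3            # area between 0 and |t|
    tail = 0.5 - central               # area beyond |t| on one side
    return tail if t >= 0 else 1 - tail


def p_value(t_star, df, alternative):
    """P-value for a 'greater', 'less', or 'two-sided' alternative."""
    if alternative == "greater":
        return t_upper_tail(t_star, df)
    if alternative == "less":
        return 1 - t_upper_tail(t_star, df)
    if alternative == "two-sided":
        return 2 * t_upper_tail(abs(t_star), df)
    raise ValueError("alternative must be 'greater', 'less', or 'two-sided'")


def t_test(sample, mu0, alternative, alpha=0.05):
    """One-sample t-test of H0: mu = mu0 following the four steps."""
    # Step 1: the hypotheses are H0: mu = mu0 versus the chosen alternative.
    n = len(sample)
    # Step 2: test statistic, computed assuming H0 is true.
    t_star = (mean(sample) - mu0) / (stdev(sample) / math.sqrt(n))
    # Step 3: P-value from the t-distribution with n - 1 degrees of freedom.
    p = p_value(t_star, n - 1, alternative)
    # Step 4: reject H0 when the P-value is at most the significance level.
    return t_star, p, p <= alpha
```

Calling `t_test(sample, mu0=3.0, alternative="greater")` on a list of observed values returns the test statistic, the P-value, and whether the null hypothesis is rejected at the chosen \(\alpha\). In practice, a statistical package would normally replace the hand-rolled integration.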

Example S.3.2.1

Mean GPA

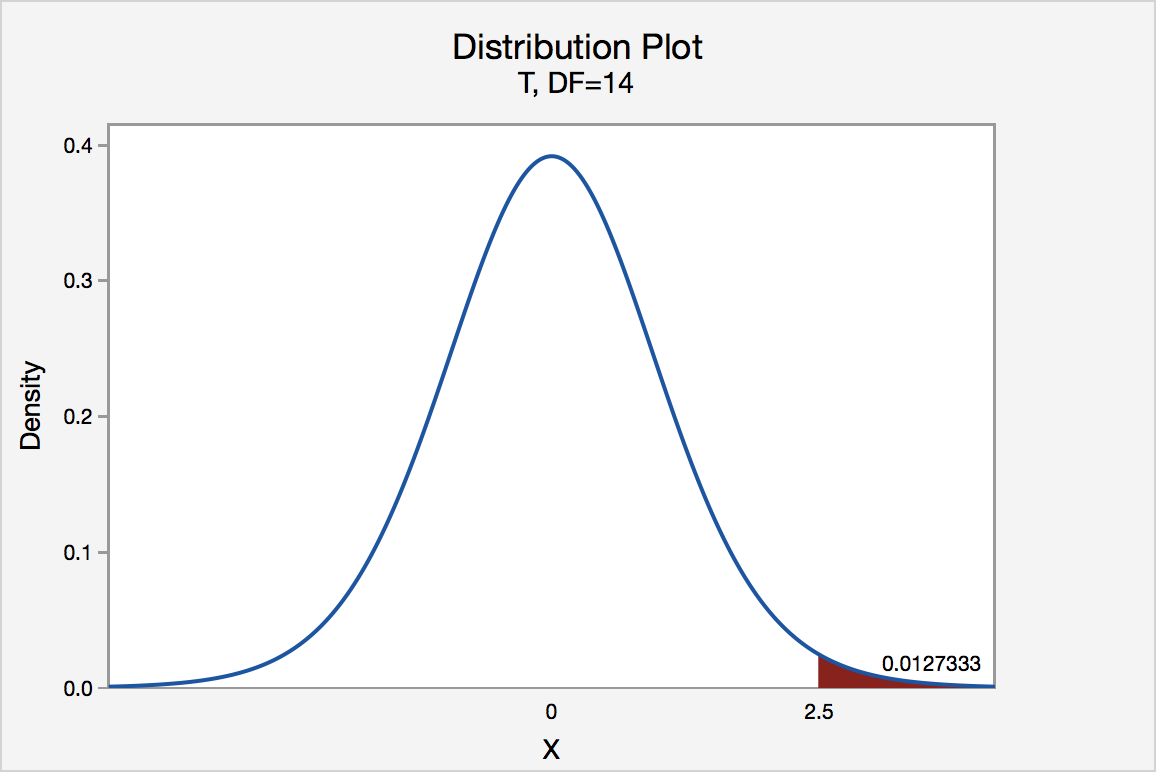

In our example concerning the mean grade point average, suppose that our random sample of \(n = 15\) students majoring in mathematics yields a test statistic \(t^*\) equaling 2.5. Since \(n = 15\), our test statistic \(t^*\) has \(n - 1 = 14\) degrees of freedom. Also, suppose we set our significance level \(\alpha\) at 0.05 so that we have only a 5% chance of making a Type I error.

Right Tailed

The P-value for conducting the right-tailed test \(H_0: \mu = 3\) versus \(H_A: \mu > 3\) is the probability that we would observe a test statistic greater than \(t^* = 2.5\) if the population mean \(\mu\) really were 3. Recall that probability equals the area under the probability curve. The P-value is therefore the area under a \(t_{n-1} = t_{14}\) curve and to the right of the test statistic \(t^* = 2.5\). It can be shown using statistical software that the P-value is 0.0127. The graph depicts this visually.

The P-value, 0.0127, tells us it is "unlikely" that we would observe such an extreme test statistic \(t^*\) in the direction of \(H_A\) if the null hypothesis were true. Therefore, our initial assumption that the null hypothesis is true must be incorrect. That is, since the P-value, 0.0127, is less than \(\alpha\) = 0.05, we reject the null hypothesis \(H_0: \mu = 3\) in favor of the alternative hypothesis \(H_A: \mu > 3\).

Note that we would not reject \(H_0: \mu = 3\) in favor of \(H_A: \mu > 3\) if we lowered our willingness to make a Type I error to \(\alpha\) = 0.01 instead, as the P-value, 0.0127, is then greater than \(\alpha\) = 0.01.

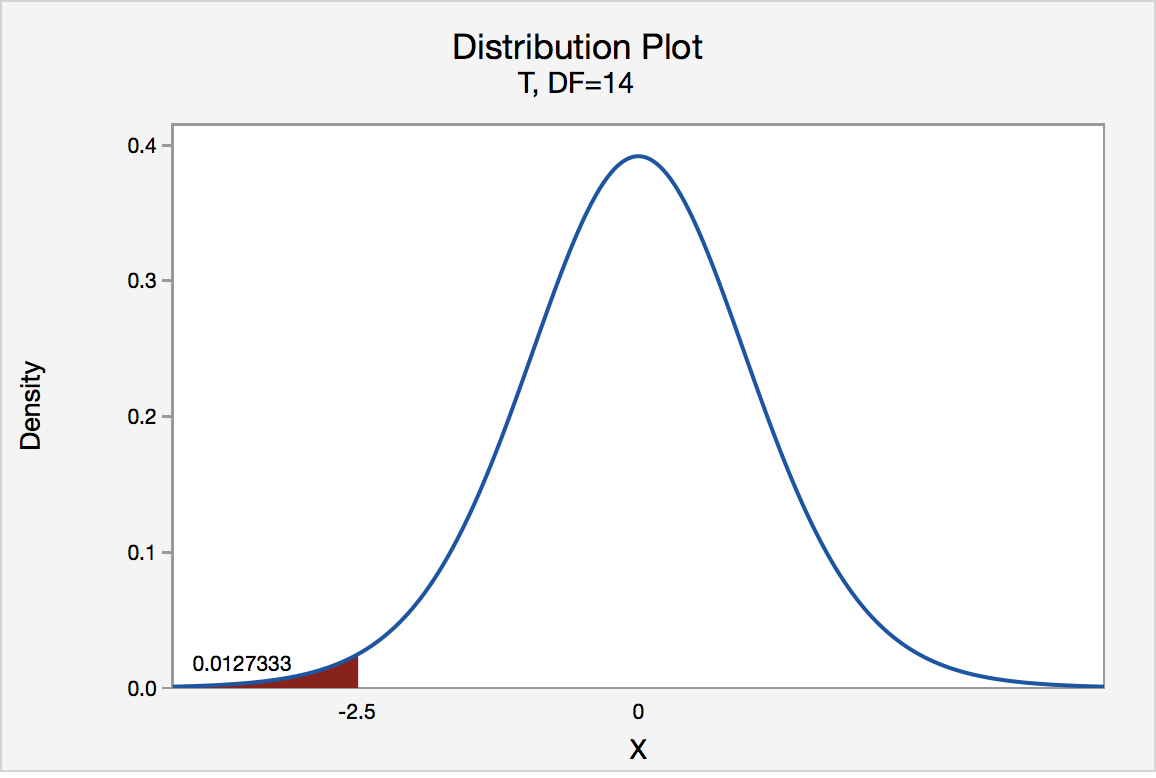

Left Tailed

In our example concerning the mean grade point average, suppose that our random sample of \(n = 15\) students majoring in mathematics instead yields a test statistic \(t^*\) equaling -2.5. The P-value for conducting the left-tailed test \(H_0: \mu = 3\) versus \(H_A: \mu < 3\) is the probability that we would observe a test statistic less than \(t^* = -2.5\) if the population mean \(\mu\) really were 3. The P-value is therefore the area under a \(t_{n-1} = t_{14}\) curve and to the left of the test statistic \(t^* = -2.5\). It can be shown using statistical software that the P-value is 0.0127. The graph depicts this visually.

The P-value, 0.0127, tells us it is "unlikely" that we would observe such an extreme test statistic \(t^*\) in the direction of \(H_A\) if the null hypothesis were true. Therefore, our initial assumption that the null hypothesis is true must be incorrect. That is, since the P-value, 0.0127, is less than \(\alpha\) = 0.05, we reject the null hypothesis \(H_0: \mu = 3\) in favor of the alternative hypothesis \(H_A: \mu < 3\).

Note that we would not reject \(H_0: \mu = 3\) in favor of \(H_A: \mu < 3\) if we lowered our willingness to make a Type I error to \(\alpha\) = 0.01 instead, as the P-value, 0.0127, is then greater than \(\alpha\) = 0.01.

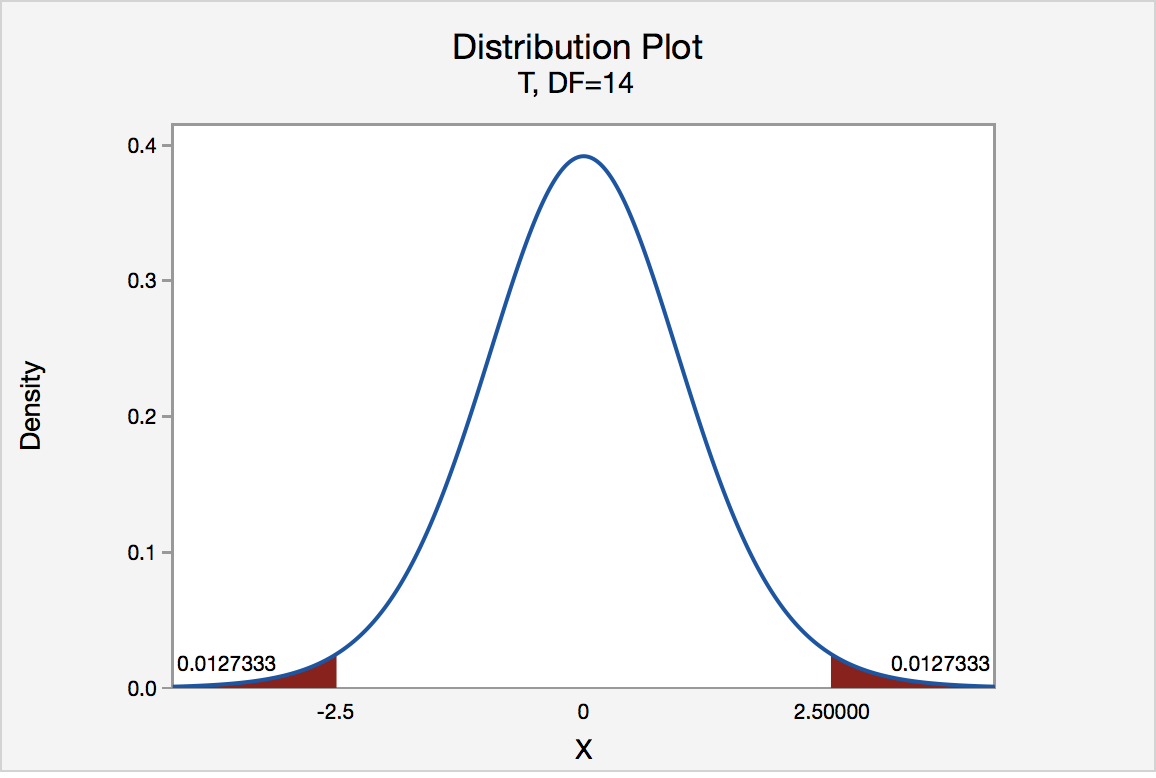

Two Tailed

In our example concerning the mean grade point average, suppose again that our random sample of \(n = 15\) students majoring in mathematics yields a test statistic \(t^*\) equaling -2.5. The P-value for conducting the two-tailed test \(H_0: \mu = 3\) versus \(H_A: \mu \ne 3\) is the probability that we would observe a test statistic less than -2.5 or greater than 2.5 if the population mean \(\mu\) really were 3. That is, the two-tailed test requires taking into account the possibility that the test statistic could fall into either tail (hence the name "two-tailed" test). The P-value is, therefore, the area under a \(t_{n-1} = t_{14}\) curve to the left of -2.5 and to the right of 2.5. It can be shown using statistical software that the P-value is 0.0127 + 0.0127, or 0.0254. The graph depicts this visually.

Note that, because the t-distribution is symmetric, the P-value for a two-tailed test is two times the P-value of the one-tailed test in the direction of the observed test statistic. The P-value, 0.0254, tells us it is "unlikely" that we would observe such an extreme test statistic \(t^*\) in either direction if the null hypothesis were true. Therefore, our initial assumption that the null hypothesis is true must be incorrect. That is, since the P-value, 0.0254, is less than \(\alpha\) = 0.05, we reject the null hypothesis \(H_0: \mu = 3\) in favor of the alternative hypothesis \(H_A: \mu \ne 3\).

Note that we would not reject \(H_0: \mu = 3\) in favor of \(H_A: \mu \ne 3\) if we lowered our willingness to make a Type I error to \(\alpha\) = 0.01 instead, as the P-value, 0.0254, is then greater than \(\alpha\) = 0.01.
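The three P-values from this example can be placed side by side. The notation \(T_{14}\), for a random variable following the t-distribution with 14 degrees of freedom, is an assumption introduced here for compactness.

\[
\begin{aligned}
\text{Right-tailed:}\quad & P(T_{14} > 2.5) = 0.0127 \\
\text{Left-tailed:}\quad & P(T_{14} < -2.5) = 0.0127 \\
\text{Two-tailed:}\quad & P(|T_{14}| > 2.5) = P(T_{14} < -2.5) + P(T_{14} > 2.5) = 0.0254
\end{aligned}
\]

The first two areas are equal because the t-distribution is symmetric about zero, which is why the two-tailed value is exactly double.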

Now that we have reviewed the critical value and P-value approach procedures for each of the three possible hypotheses, let's look at three new examples: one of a right-tailed test, one of a left-tailed test, and one of a two-tailed test.

The good news is that, whenever possible, we will take advantage of the test statistics and P-values reported in statistical software, such as Minitab, to conduct our hypothesis tests in this course.