Title: Information Redundancy and Biases in Public Document Information Extraction Benchmarks

Abstract: Advances in the Visually-rich Document Understanding (VrDU) field, and particularly in the Key-Information Extraction (KIE) task, are marked by the emergence of efficient Transformer-based approaches such as the LayoutLM models. Despite the good performance of KIE models when fine-tuned on public benchmarks, they still struggle to generalize to complex real-life use cases that lack sufficient document annotations. Our research highlighted that standard KIE benchmarks such as SROIE and FUNSD contain significant similarity between training and testing documents and can be adjusted to better evaluate the generalization of models. In this work, we designed experiments to quantify the information redundancy in public benchmarks, revealing 75% template replication in the official SROIE test set and 16% in FUNSD. We also proposed resampling strategies to provide benchmarks more representative of the generalization ability of models. We showed that models not suited for document analysis struggle on the adjusted splits, dropping on average 10.5% F1 score on SROIE and 3.5% on FUNSD, compared to multi-modal models, which drop only 7.5% F1 on SROIE and 0.5% F1 on FUNSD.

Comments: 15 pages, ICDAR 2023 (17th International Conference on Document Analysis and Recognition)

Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

| Cite as: | [cs.CL] |

| (or [cs.CL] for this version) | |

| Focus to learn more arXiv-issued DOI via DataCite |

Submission history

Access paper:.

- Other Formats

References & Citations

- Google Scholar

- Semantic Scholar

BibTeX formatted citation

Bibliographic and Citation Tools

Code, data and media associated with this article, recommenders and search tools.

- Institution

arXivLabs: experimental projects with community collaborators

arXivLabs is a framework that allows collaborators to develop and share new arXiv features directly on our website.

Both individuals and organizations that work with arXivLabs have embraced and accepted our values of openness, community, excellence, and user data privacy. arXiv is committed to these values and only works with partners that adhere to them.

Have an idea for a project that will add value for arXiv's community? Learn more about arXivLabs .

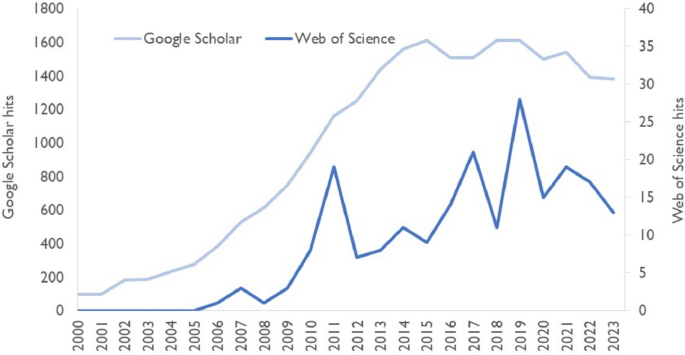

Information redundancy: Recently Published Documents


Information redundancy across spatial scales modulates early visual cortical processing

Graph transformer for drug response prediction.

Previous models have shown that learning drug features from their graph representation is more efficient than learning from their string or numeric representations. Furthermore, integrating multi-omics data of cell lines improves the performance of drug response prediction. However, these models have drawbacks in extracting drug features from the graph representation and in incorporating redundant information from multi-omics data. This paper proposes a deep learning model, GraTransDRP, to learn better drug representations and reduce information redundancy. First, a Graph Transformer is used to extract the drug representation more efficiently. Next, convolutional neural networks (CNNs) are used to learn the mutation, methylation, and transcriptomics features. Because the transcriptomics features have up to 17,737 dimensions, KernelPCA is applied to reduce their dimension and transform them into a dense representation before they are passed through the CNN model. Finally, drug and omics features are combined to predict a response value with a fully connected network. Experimental results show that the model outperforms some state-of-the-art methods, including GraphDRP and GraOmicDRP.

TF-IDF Method and Vector Space Model Regarding the Covid-19 Vaccine on Online News

Advances in information technology have made internet use a matter of general public interest. Online news sites have developed into a means of disseminating the latest information worldwide. In terms of volume, online news is more than sufficient for readers to find the information they want. However, the growing amount of information collected leads to an information explosion and possible information redundancy. A search system is one solution expected to help find the desired or relevant information for an input query. The methods commonly used for this are TF-IDF and VSM (Vector Space Model), which weight terms using statistics from a collection of documents. Here they are applied to a search for information about the Covid-19 vaccine in kompas.com news. The text is first tokenized, then stopword removal (filtering) discards unnecessary words, which usually consist of conjunctions and similar words. The next step is stemming, which reduces inflected words to their base form. The TF-IDF and VSM calculations were then carried out, and the final results are: news document 3 (DOC 3) with a weight of 5.914226424; news document 2 (DOC 2) with a weight of 1.767692186; news document 5 (DOC 5) with a weight of 1.550165096; news document 4 (DOC 4) with a weight of 1.17141223; and news document 1 (DOC 1) with a weight of 0.5244103739.
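The retrieval pipeline described in this abstract can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes whitespace tokenization, a tiny hypothetical stopword list, no stemming, the log(N/df) form of inverse document frequency, and cosine similarity as the vector-space score. The reported weights exceed 1, so the study evidently used a different scoring variant. All function names and sample documents are invented for illustration.

```python
import math
from collections import Counter

# Tiny illustrative stopword list (an assumption, not the study's list).
STOPWORDS = {"the", "a", "of", "and", "in", "is", "to", "for"}


def tokenize(text):
    """Lowercase, split on whitespace, and drop stopwords (no stemming)."""
    return [w for w in text.lower().split() if w not in STOPWORDS]


def tfidf_vectors(docs_tokens):
    """Return one sparse tf-idf vector per document plus the idf table."""
    n_docs = len(docs_tokens)
    df = Counter()
    for tokens in docs_tokens:
        df.update(set(tokens))  # count each term once per document
    idf = {term: math.log(n_docs / count) for term, count in df.items()}
    vectors = []
    for tokens in docs_tokens:
        tf = Counter(tokens)
        vectors.append({term: tf[term] * idf[term] for term in tf})
    return vectors, idf


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank(query, docs):
    """Rank document indices by similarity to the query, best first."""
    vectors, idf = tfidf_vectors([tokenize(d) for d in docs])
    q_tf = Counter(tokenize(query))
    q_vec = {term: q_tf[term] * idf.get(term, 0.0) for term in q_tf}
    scores = [(i, cosine(q_vec, vec)) for i, vec in enumerate(vectors)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


docs = [
    "vaccine rollout begins in jakarta",
    "football match results",
    "covid vaccine trial results",
]
for index, score in rank("covid vaccine", docs):
    print(f"DOC {index + 1}: {score:.3f}")
```

The third sample document ranks first because it shares both query terms, and the rarer term ("covid") carries more weight than the one that appears in two documents ("vaccine").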

Multi-Scale Guided Attention Network for Crowd Counting

The CNN-based crowd counting method uses image pyramid and dense connection to fuse features to solve the problems of multiscale and information loss. However, these operations lead to information redundancy and confusion between crowd and background information. In this paper, we propose a multi-scale guided attention network (MGANet) to solve the above problems. Specifically, the multilayer features of the network are fused by a top-down approach to obtain multiscale information and context information. The attention mechanism is used to guide the acquired features of each layer in space and channel so that the network pays more attention to the crowd in the image, ignores irrelevant information, and further integrates to obtain the final high-quality density map. Besides, we propose a counting loss function combining SSIM Loss, MAE Loss, and MSE Loss to achieve effective network convergence. We experiment on four major datasets and obtain good results. The effectiveness of the network modules is proved by the corresponding ablation experiments. The source code is available at https://github.com/lpfworld/MGANet.

Predicting reposting latency of news content in social media: A focus on issue attention, temporal usage pattern, and information redundancy

Information redundancy across spatial scales modulates early visual cortex responses

Optimising aircraft taxi speed: Design and evaluation of new means to present information on a head-up display

The objective of this study was to design and evaluate new means of complying with time constraints by presenting aircraft target taxi speeds on a head-up display (HUD). Four different HUD presentations were iteratively developed from paper sketches into digital prototypes. Each HUD presentation reflected a different level of information presentation. A subsequent evaluation included 32 pilots, with varying flight experience, in usability tests. The participants subjectively assessed which information was most useful for complying with time constraints. The assessment was based on six themes: information, workload, situational awareness, stress, support and usability. The evaluation consisted of computer-simulated taxi-runs, self-assessments and statistical analysis. Information provided by a graphical vertical tape descriptive/predictive HUD presentation, including alpha-numerical information redundancy, was rated most useful. Differences between novice and expert pilots can be resolved by incorporating combinations of graphics and alpha-numeric presentations. The findings can be applied in further studies of combining navigational and time-keeping HUD support during taxi.

Information Redundancy Neglect versus Overconfidence: A Social Learning Experiment

We study social learning in a continuous action space experiment. Subjects, acting in sequence, state their beliefs about the value of a good after observing their predecessors’ statements and a private signal. We compare the behavior in the laboratory with the Perfect Bayesian Equilibrium prediction and the predictions of bounded rationality models of decision-making: the redundancy of information neglect model and the overconfidence model. The results of our experiment are in line with the predictions of the overconfidence model and at odds with the others’. (JEL C91, D12, D82, D83)

Visual images contain redundant information across spatial scales where low spatial frequency contrast is informative towards the location and likely content of high spatial frequency detail. Previous research suggests that the visual system makes use of those redundancies to facilitate efficient processing. In this framework, a fast, initial analysis of low-spatial frequency (LSF) information guides the slower and later processing of high spatial frequency (HSF) detail. Here, we used multivariate classification as well as time-frequency analysis of MEG responses to the viewing of intact and phase scrambled images of human faces to demonstrate that the availability of redundant LSF information, as found in broadband intact images, correlates with a reduction in HSF representational dominance in both early and higher-level visual areas as well as a reduction of gamma-band power in early visual cortex. Our results indicate that the cross spatial frequency information redundancy that can be found in all natural images might be a driving factor in the efficient integration of fine image details.

The Data Diagnostic Method in the System of Residue Classes

The subject of the article is the development of a method for diagnosing data that are represented in the system of residual classes (SRC). The purpose of the article is to develop a method for fast diagnostics of data in the SRC while introducing minimal information redundancy. Tasks: to analyze and identify possible shortcomings of existing methods for diagnosing data in the SRC, to explore possible ways to eliminate the identified shortcomings, and to develop a method for prompt diagnosis of data in the SRC. Research methods: methods of analysis and synthesis of computer systems, number theory, and coding theory in the SRC. The following results were obtained. It is shown that the main disadvantage of the existing methods is the significant time of data diagnostics when it is necessary to introduce significant information redundancy into the non-positional code structure (NCS). The method considered in the article makes it possible to increase the efficiency of the diagnostic procedure when introducing minimal information redundancy into the NCS. The data diagnostics time, in comparison with the known methods, is reduced first due to the elimination of the procedure for converting numbers from the NCS to the positional code, as well as the elimination of the positional operation of comparing numbers. Second, the data diagnostics time is reduced by reducing the number of SRC bases in which errors can occur. Third, the data diagnostics time is reduced due to the presentation of the set of values of the alternative set of numbers in tabular form and the possibility of sampling them in one machine cycle. The amount of additionally introduced information redundancy is reduced due to the effective use of the internal information redundancy that exists in the SRC. An example of using the proposed method for diagnosing data in the SRC is given. Conclusions: the proposed method makes it possible to reduce the time for diagnosing data errors that are represented in the SRC, which increases the efficiency of diagnostics with the introduction of minimal information redundancy.


Information Overload, Similarity, and Redundancy: Unsubscribing Information Sources on Twitter


Hai Liang, King-wa Fu, Information Overload, Similarity, and Redundancy: Unsubscribing Information Sources on Twitter, Journal of Computer-Mediated Communication , Volume 22, Issue 1, 1 January 2017, Pages 1–17, https://doi.org/10.1111/jcc4.12178


The emergence of social media has changed individuals' information consumption patterns. The purpose of this study is to explore the role of information overload, similarity, and redundancy in unsubscribing information sources from users' information repertoires. In doing so, we randomly selected nearly 7,500 ego networks on Twitter and tracked their activities in 2 waves. A multilevel logistic regression model was deployed to test our hypotheses. Results revealed that individuals (egos) obtain information by following a group of stable users (alters). An ego's likelihood of unfollowing alters is negatively associated with their information similarity, but is positively associated with both information overload and redundancy. Furthermore, relational factors can modify the impact of information redundancy on unfollowing.

Social media have changed the current media environment and can consequently influence individuals' selection of information sources. First, social media offer users a large number of information choices. All social media users can provide some kinds of content, and thus could be considered as information sources. However, the available human attention to consume information is always limited ( Webster, 2010 ), leading to varying degrees of information overload on social media ( Holton & Chyi, 2012 ). To cope with information overload, users will rely upon relatively small subsets or “repertoires” of their preferred channels (e.g., Kim, 2014 ; Taneja, Webster, Malthouse, & Ksiazek, 2012 ; Yuan, 2011 ).

Second, social media users are usually embedded in online social networks. They select information sources by “following” other users. Those users being followed are called followees. In social networks, users are inclined to follow other users who appear to be similar to themselves with respect to many attributes (McPherson, Smith-Lovin, & Cook, 2001). Given the increasing number of available choices on social media, this homophilous selectivity is reinforced in computer-mediated communication and potentially results in audience fragmentation (Sunstein, 2009). If users follow many content-similar followees, they are likely to receive many duplicated messages in their personal information streams, which leads to information redundancy (Harrigan, Achananuparp, & Lim, 2012).

Although the repertoire approach to media use provides an important framework to capture information consumption patterns under information overload (Kim, 2014; Taneja et al., 2012; Yuan, 2011), few studies have examined the dynamic process of repertoire formation and the role of content similarity and redundancy. In a networked environment on social media, information overload could increase the tendency toward similarity-based selection and further lead to information redundancy, which in turn might increase information overload. In addition, little attention has been paid to the removal of information sources from personal repertoires. The deselection process helps reduce information overload (Webster, 2010) and stabilize personal information repertoires (Kwak, Chun, & Moon, 2011). In order to investigate the role of information overload, similarity, and redundancy in structuring information consumption patterns, this study extends the repertoire approach by incorporating media choice theories and social network analysis.

Followees as Information Repertoire on Social Media

Contemporary social media are usually conceived as a combination of information platforms and social network services, wherein “ordinary” users (as well as media organizations, journalists, and other “elite” users) create, share, and consume user-generated content (as well as professional news content) in social networks (e.g., Kwak, Lee, Park, & Moon, 2010 ; Murthy, 2012 ). This definition captures two essential aspects of the character of current social media platforms. First, ordinary people have turned themselves into media content providers on social media by producing and sharing news messages ( Murthy, 2012 ). Second, people's reception of information is basically constrained by their personal social networks online. Social media users decide whose messages they wish to receive by following other users. By creating such following connections, users receive the content that their followees post. Thus, as users choose whom to follow, they also choose the information to which they will have access.

Twitter is one of the most popular social media platforms. Figure 1 illustrates the information consumption structure on Twitter. An individual (e.g., Ego 1) can subscribe to receive the tweets of another user (e.g., Alter 2). We say Alter 2 is a followee of Ego 1 while Ego 1 is a follower of Alter 2. On Twitter, information seeking takes the form of following relationships ( Himelboim, Hansen, & Bowser, 2013 ). Egos receive messages from followees but do not receive them from followers. In Figure 1 , Ego 1 is following Alters 1–3. Therefore, Ego 1 receives all tweets posted by these alters. The following relationships on Twitter may not be reciprocal. Users may rebroadcast a tweet by retweeting the message to their followers. They can also converse with any other users by replying to their tweets or mentioning other users using the “@” sign. All original tweets, retweets, and replies are displayed in users' timelines.

Figure 1. An ego network approach to conceiving followees as information repertoires. An ego network consists of a focal node (the “ego”), the nodes to which the ego is directly connected (the “alters”), and the ties (arrows in the figure) among the alters. The arrow pointing from Ego 1 to Alter 2 indicates that Ego 1 follows Alter 2; it also indicates that information flows from Alter 2 to Ego 1.
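The ego network structure described here maps naturally onto a small data structure. The sketch below is illustrative only: the class name, method names, and the sample users (including the tie between two alters and the extra follower) are assumptions made for the example, not details taken from the figure.

```python
from dataclasses import dataclass, field


@dataclass
class EgoNetwork:
    """A focal user (ego), the users it follows (alters), and ties among them."""

    ego: str
    # follows[u] is the set of users that u follows (u's followees).
    follows: dict = field(default_factory=dict)

    def add_follow(self, follower, followee):
        """Record a directed tie: follower -> followee."""
        self.follows.setdefault(follower, set()).add(followee)

    def alters(self):
        """Alters are the users the ego follows directly."""
        return set(self.follows.get(self.ego, set()))

    def alter_ties(self):
        """Directed ties whose follower and followee are both alters."""
        alters = self.alters()
        return {
            (u, v)
            for u in alters
            for v in self.follows.get(u, set())
            if v in alters
        }

    def timeline_sources(self):
        """Information flows from followee to follower, so the ego
        receives tweets from its alters but not from its own followers."""
        return self.alters()


net = EgoNetwork(ego="ego1")
for alter in ("alter1", "alter2", "alter3"):
    net.add_follow("ego1", alter)
net.add_follow("alter1", "alter2")   # hypothetical tie between two alters
net.add_follow("follower1", "ego1")  # a follower of the ego, not an alter

print(sorted(net.timeline_sources()))
print(net.alter_ties())
```

Storing ties as directed pairs reflects the point that following relationships on Twitter need not be reciprocal.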

The emerging characteristics of social media have several implications for existing theories about the choice of information sources. First, all social media users, including ordinary people, journalists, and media organizations, can be their followers' information sources. Hermida, Fletcher, Korell, & Logan ( 2012 ) found that social media users are more likely to receive information from the individuals than from news organizations and journalists followed on social media. Even for media content, Twitter users get roughly half of their media referrals via intermediaries ( Wu, Hofman, Mason, & Watts, 2011 ). In this sense, the selection of information sources partly becomes the choice of followees on social media. Therefore, alters, followees, and information sources are interchangeable terms in this study.

Second, users' followees serve as their information repertoires to help cope with information overload on social media. The conventional notion about media choice is that people assess the available resources and choose among them in an effort to achieve their purposes rationally. However, that rationality is bounded by the overabundance of choice and the limited human attention available on social media. It is impossible to follow all available information sources or select relevant alters by examining every single message posted by these alters. One technique many people use to manage their choices is to limit the number of choices by paring down their options to a more manageable repertoire of preferred sources ( Webster, 2010 , 2014 ). On Twitter, that usually means following a small number of followees/alters.

Third, a repertoire is a subset of available media that an individual uses regularly or frequently (e.g., Webster, 2014; Webster & Ksiazek, 2012), which reflects the habitual nature of media consumption. However, most studies analyze media repertoires in a static way. Building information repertoires on social media by following other users is a dynamic process. On Twitter, users can take two steps to establish and maintain their followees. When joining Twitter, users may subscribe to other users. Later on, users are free to unsubscribe and remove users from their following lists (i.e., unfollow). Kwak et al. (2011) documented a relationship stabilization process on Twitter: users are less likely to unfollow those whom they have been following for a long time. Eventually, this process results in stable repertoires of information sources, on which users rely heavily for daily information consumption. This suggests that researchers should pay more attention to the unfollowing process in the dynamic analysis of repertoire formation.

Finally, previous studies focus on explaining the absolute size of repertoires, but often say little about their composition (see Webster & Ksiazek, 2012 ). An information repertoire is introduced to cope with information overload by selecting preferred sources. By taking the composition into consideration, similarity-based selection may create redundant messages in personal repertoires ( Himelboim et al., 2013 ) and exacerbate information overload ( Franz, 1999 ). It remains largely unknown to what extent users unfollow their information sources based on individual preference of certain content category, information overload, and information redundancy.

Building Repertoires: Informational Factors

The above discussion suggests three intertwined variables that are relevant to the formation of user repertoires on social media platforms. Unlike previous repertoire studies that focus on the structure of media use, the variables are related to the communication content. First, the primary purpose of building information repertoires is to cope with information overload. Information overload has traditionally been conceived as a subjective experience in which users are overwhelmed by a large supply of information in a given period of time (e.g., Savolainen, 2007 ). Although many reasons can cause information overload, the core component is the volume of incoming information ( Franz, 1999 ). The growing number of information sources could have negative consequences for information seeking. Holton and Chyi ( 2012 ) found that 72.8% of respondents felt at least somewhat overloaded with the amount of news available today. In addition, the study reports that perceived overload depends on platforms: Use of Facebook is positively associated with information overload, whereas the use of Twitter is not significantly correlated with information overload. To cope with information overload, media users usually maintain a repertoire by limiting the number of sources. For instance, TV studies have demonstrated that people watch a small fraction of available channels in the US and across the world (see Webster, 2014 ).

H1: Users are more likely to unfollow the alters who post more messages during a given period of time.

Second, building information repertoires is closely related to the choice of specific information sources. Previous studies have used content preferences to explain cross-platform media repertoires (see Taneja et al., 2012). They found that information sources providing similar content are likely to be used together. For example, researchers have found evidence of a news repertoire that combines Internet and television news (e.g., Dutta-Bergman, 2004; Yuan, 2011). These findings are consistent with the theory of media complementarity: Individuals who are interested in a particular content type expose themselves to various information channels that correspond with their area of interest (Dutta-Bergman, 2004).

Although the original purpose was to explain cross-platform media consumption, Himelboim et al. ( 2013 ) have extended the theory of channel complementarity by considering the complementary selection of information sources that occur within a single social media platform. They found that Twitter users in different clusters follow different sets of sources that cover different areas of content (i.e., local versus national news). In a similar way, if users show consistent interest in posting a type of tweet, they are more likely to follow those users posting messages on a similar topic ( Weng, Lim, Jiang, & He, 2010 ).

H2: Users are less likely to unfollow the alters who post similar hashtags.

H3: The positive association between unfollowing and tweeting frequency is weaker for the alters who post similar hashtags to their egos.

Third, selecting information sources based on information similarity in a networked communication environment can lead to information redundancy in one's personal repertoire. If users select the sources posting similar topics (using similar hashtags) consistently, they will be expected to receive lots of redundant messages. Information redundancy refers to message repetition in a series of received messages ( Stephens, Barrett, & Mahometa, 2013 ). It does not mean posting repeated messages by a single alter user. As illustrated in Figure 1 , information redundancy quantifies the extent to which an alter posted a type of content (i.e., hashtag) similar to other alters in an ego network, while information similarity is related to the similarity of hashtags between egos and alters. Even though there are no duplicated tweets within an alter's timeline, the tweets by alters could be totally redundant to their egos, because other alters may post similar tweets in the ego network.

The role of information redundancy in media choice has been only implicitly mentioned. For example, previous studies suggest that use of one medium will displace the use of functionally alternative media, because the time available in any day is fixed (e.g., Ferguson & Perse, 2000 ). It implies that people are less likely to choose media with redundant information relative to their current media repertoires under information overload conditions. Another relevant argument is based on the theory of media complementarity. It predicts that people who select one type of information from one channel will also select the same type of information from other channels ( Dutta-Bergman, 2004 ; Himelboim et al., 2013 ). Eventually, that will cause duplicated messages received from different media platforms ( Jenkins, 2006 ). It implies that redundancy might be acceptable if people are interested in only a few types of content. For example, users who are interested in pop music may follow many pop stars on social media. However, empirical studies found that people are actually interested in different types of content and are likely to possess a complementary architecture in their information repertoires (e.g., Chaffee, 1982 ; Webster, 2014 ; Yuan, 2011 ). That means if the users are interested in both pop music and sports, they may unfollow a few pop stars and then follow some sports stars to avoid information overload, even though the unfollowed pop stars were posting unique tweets concerning their own stories.

H4: Users are more likely to unfollow those alters who post more redundant hashtags relative to what the users receive from all other alters.

H5: The positive association between information redundancy and unfollowing is stronger for the alters who post more messages during a given period of time.

H6: The negative association between hashtag similarity and unfollowing is stronger for the alters who post less redundant hashtags.

The Role of Relational Factors

In addition to these informational factors, relational factors could be another set of factors structuring the dynamic process of repertoire building on social media. Relationship building (e.g., maintaining friendship online) and information seeking are the two major motivations of Twitter use ( Kwak et al., 2010 ; Myers, Sharma, Gupta, & Lin, 2014 ; Wu et al., 2011 ). Information consumption on social media could be a byproduct of social networking behaviors. Many ordinary users socialize with their friends, family, and coworkers on Twitter. They establish ties for relational purposes, while the ties also serve as the conduits of information flow ( Golder & Yardi, 2010 ; Myers & Leskovec, 2014 ). The network structure can influence what information users will receive. For example, users in densely connected communities are expected to receive more redundant information ( Harrigan et al., 2012 ). That suggests that the relational factors might modify the relationships between the informational factors and unfollowing.

RQ: How will the relational factors influence the impacts of informational factors on unfollowing?

Data Collection

By using Twitter's REST APIs, we collected a two-wave panel dataset. To overcome the representativeness problem, this study sampled the panel users randomly from the population. First, we employed a method reported in Liang and Fu ( 2015 ) to generate random Twitter user IDs. We generated 90,000 random numbers. And then, we searched these numbers via the official API to check the existence of these Twitter IDs. Using this method, we obtained 34,006 valid Twitter user accounts (egos).
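The sampling step can be pictured as drawing random numeric IDs and keeping those that resolve to an account. The sketch below is an assumption-laden illustration rather than the procedure from Liang and Fu (2015): `account_exists` is a hypothetical callable standing in for an API lookup, `max_id` is an assumed upper bound on the ID space, and sampling without replacement is a design choice made here.

```python
import random


def sample_valid_ids(n_candidates, max_id, account_exists, seed=None):
    """Draw random numeric IDs and keep those that resolve to an account.

    account_exists is a hypothetical callable standing in for an API lookup;
    max_id is an assumed upper bound of the ID space.
    """
    rng = random.Random(seed)
    # Sample without replacement so no candidate ID is checked twice.
    candidates = rng.sample(range(1, max_id + 1), n_candidates)
    return [uid for uid in candidates if account_exists(uid)]


# Toy stand-in for the lookup: pretend only IDs divisible by 3 exist.
valid = sample_valid_ids(900, 10_000, lambda uid: uid % 3 == 0, seed=42)
hit_rate = len(valid) / 900
print(f"{len(valid)} valid IDs, hit rate {hit_rate:.1%}")
```

For comparison, the figures reported above (34,006 valid accounts out of 90,000 generated numbers) correspond to a hit rate of roughly 37.8%.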

Second, we obtained the egos' user profiles, their followees' IDs, and up to 3,200 tweets and retweets (timeline) for each ego user. We collected the first wave of data in December 2014 and the second wave in March 2015. In the second wave, 33,774 egos still existed. Due to the privacy settings on Twitter, we could only get tweets from public accounts. In addition, since we were only interested in unfollowing behavior, we excluded users who were totally inactive during the period of data collection. This left 7,609 ego users who were both active and publicly available.

Third, we constructed ego networks in which nodes are users and ties are the following relationships between egos and followees. In the first wave, there were 1,314,156 nodes (including 7,360 ego users) and 1,766,269 ties in the ego networks. In the second wave, there were 1,403,291 nodes (including 7,464 ego users) and 1,888,039 ties in the ego networks. We further collected the followees' profiles and tweets, and their followees. We excluded the followees whose tweets and following relationships are kept private. The final dataset for our analyses, obtained by combining the two waves, includes 7,449 ego networks with 1,180,903 nodes and 1,658,069 ties.

Unfollowing was measured by comparing the ego networks between Wave 1 and Wave 2. If a followee in Wave 1 has not been observed in the followee list of Wave 2, we consider it was unfollowed during the two waves. Among the 1,658,069 ties, 2.89% (47,962) were removed during the two waves. Among the 7,449 egos, 38.44% (2,864) have deleted at least one followee. We note that most independent variables were extracted from the first wave only. Therefore, we only included the users (egos and followees) who appeared in Wave 1 (7,326 egos and 1,613,735 ties) for formal analyses.
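The wave comparison amounts to a set difference per ego. The following sketch assumes each wave is available as a mapping from ego ID to a set of followee IDs; the function names and toy data are illustrative and not part of the study's code.

```python
def unfollowed(wave1, wave2):
    """Return {ego: followees present in Wave 1 but absent in Wave 2}.

    Egos missing from Wave 2 are skipped, since their followee lists
    cannot be compared.
    """
    removed = {}
    for ego, followees in wave1.items():
        if ego not in wave2:
            continue
        removed[ego] = set(followees) - set(wave2[ego])
    return removed


def summarize(wave1, removed):
    """Share of Wave 1 ties removed, and share of egos with any removal."""
    total_ties = sum(len(wave1[ego]) for ego in removed)
    removed_ties = sum(len(r) for r in removed.values())
    egos_with_removal = sum(1 for r in removed.values() if r)
    return removed_ties / total_ties, egos_with_removal / len(removed)


wave1 = {"e1": {"a", "b", "c"}, "e2": {"a"}}
wave2 = {"e1": {"a", "c", "d"}, "e2": {"a"}}

removed = unfollowed(wave1, wave2)
tie_share, ego_share = summarize(wave1, removed)
print(removed)
print(f"{tie_share:.2%} of ties removed; {ego_share:.2%} of egos unfollowed someone")
```

New followees that appear only in Wave 2 (such as "d" above) do not count as unfollows. A followee can also vanish from a list because the account was deleted or suspended, so a plain set difference treats those cases as unfollows unless such accounts are filtered out beforehand.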

In order to measure information similarity and information redundancy, we employed text mining techniques. First, we created a term-document matrix for each ego and its followees (i.e., 7,326 term-document matrices in total). In each term-document matrix, rows are the users (including an ego and their alters) and columns are the unique hashtags in the users' tweets (all available tweets). Given that our sample consists of active users, about 90% of the alters have posted at least one hashtag. Second, the hashtags used by user $u$ were encoded into a term frequency–inverse document frequency (tf-idf) feature vector $\phi(u)$. The $i$th element $\phi_i(u)$ represents the frequency of the hashtag indexed by $i$ among all the hashtags used by $u$, scaled by the inverse document frequency (see Salton, Wong, & Yang, 1975). The tf-idf value increases proportionally to the number of times a word appears in the document, but is offset by the frequency of the word in the corpus.
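A per-network tf-idf encoding of hashtags might look like the sketch below. It treats each user in one ego network as a "document" and assumes relative frequency for the tf part and log(N/df) for the idf part; the article does not state which weighting variant was used, so both choices, like the function name and sample data, are assumptions.

```python
import math
from collections import Counter


def hashtag_tfidf(user_hashtags):
    """Build one sparse tf-idf vector per user in a single ego network.

    user_hashtags maps each user (the ego and its alters) to the list of
    hashtags found in that user's tweets, repeats included.
    """
    n_users = len(user_hashtags)
    df = Counter()
    for tags in user_hashtags.values():
        df.update(set(tags))  # number of users who used each hashtag
    vectors = {}
    for user, tags in user_hashtags.items():
        counts = Counter(tags)
        total = sum(counts.values())
        vectors[user] = {
            tag: (count / total) * math.log(n_users / df[tag])
            for tag, count in counts.items()
        }
    return vectors


network = {
    "ego": ["research", "nlp"],
    "alter1": ["research", "politics", "politics"],
    "alter2": ["research"],
}
vectors = hashtag_tfidf(network)
for user, vec in vectors.items():
    print(user, {tag: round(weight, 3) for tag, weight in vec.items()})
```

A hashtag used by every user in the network receives a weight of zero under this variant, while a hashtag used by a single user keeps a positive weight.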

Hashtag similarity was measured at the dyadic level by the semantic similarity between the hashtags used by the egos and those used by their alters. The score quantifying the similarity of information posted by two users u 1 and u 2 is given by the cosine similarity measure ⟨ϕ( u 1 ), ϕ( u 2 )⟩/(||ϕ( u 1 )|| · ||ϕ( u 2 )||). Theoretically, hashtag similarity ranges from 0 (completely dissimilar) to 1 (effectively the same). The mean of the hashtag similarity score in our data is 0.009 ( SD = 0.047). Because hashtag similarity was calculated from the tf-idf vectors, if two hashtags refer to the same topic (e.g., #politics and #Obama), users are inclined to use them together, and thus the semantic similarity score between them will be high.
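The cosine measure can be sketched for sparse hashtag vectors as follows. The function name and the dictionary representation are illustrative assumptions; returning zero for a user without hashtags follows the treatment of such users described in the limitations of this study.

```python
import math


def cosine_similarity(vec_a, vec_b):
    """Cosine similarity between two sparse {hashtag: weight} vectors."""
    dot = sum(weight * vec_b.get(tag, 0.0) for tag, weight in vec_a.items())
    norm_a = math.sqrt(sum(w * w for w in vec_a.values()))
    norm_b = math.sqrt(sum(w * w for w in vec_b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        # A user with no weighted hashtags is treated as having similarity 0.
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical weights for an ego and an alter.
ego = {"politics": 1.0, "obama": 1.0}
alter = {"politics": 1.0}
print(round(cosine_similarity(ego, alter), 4))
```

Because tf-idf weights are nonnegative, the score stays between 0 and 1, which matches the theoretical range given above.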

Hashtag redundancy was also measured based on the tf-idf values. Words are not equally important in terms of their uniqueness; some words have little or no discriminating power. For example, if one's followees are all university researchers, it is likely that all of them include “research” as a hashtag, so this word is purely redundant. Tf-idf is a numerical statistic that is intended to reflect how important a word is to a document in a collection or corpus ( Robertson, 2004 ). It quantifies the word importance relative to all words used by alters in an ego network. If a followee has many hashtags with high tf-idf values, it means that the user included many unique hashtags and showed less redundancy relative to other alters in the same ego network. Therefore, we calculated the sum of all tf-idf values for each followee as an indicator of information uniqueness ( IU ). We then subtracted this value from the maximum value to measure information redundancy ( IR = max( IU ) − IU ). We further normalized the raw score to range from 0 to 1 by using the formula ( IR − min( IR ))/(max( IR ) − min( IR )). As a result, the mean of information redundancy in our data is 0.368 ( SD = 0.283).
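A short sketch of the redundancy score may help. It reads the description as IR = max(IU) − IU followed by min-max scaling, and it applies both steps within a single set of alters; both the reading and the scope of the maximum are assumptions, and the function name and toy weights are hypothetical.

```python
def information_redundancy(tfidf_by_alter):
    """Return a 0-1 redundancy score per alter from {alter: {hashtag: tf-idf}}."""
    if not tfidf_by_alter:
        return {}
    # Information uniqueness (IU): sum of an alter's tf-idf values.
    iu = {alter: sum(vec.values()) for alter, vec in tfidf_by_alter.items()}
    max_iu = max(iu.values())
    # Raw redundancy: distance from the most unique alter (assumed reading).
    raw = {alter: max_iu - value for alter, value in iu.items()}
    low, high = min(raw.values()), max(raw.values())
    if high == low:
        # All alters are equally unique, so no alter is relatively redundant.
        return {alter: 0.0 for alter in raw}
    return {alter: (value - low) / (high - low) for alter, value in raw.items()}


# Hypothetical tf-idf weights for three alters in one ego network.
alters = {
    "alter_a": {"x": 1.5, "y": 0.5},  # most unique hashtags
    "alter_b": {"x": 1.0},
    "alter_c": {},                    # no hashtags with positive weight
}
print(information_redundancy(alters))
```

Under this reading the most unique alter always scores 0 and the least unique alter scores 1.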

Information overload was measured at the alter level by calculating the tweeting frequency—the number of messages posted by an alter during the two waves. On average, a followee posted 984 ( Mdn = 82, SD = 5,609) messages. Although the information overload is a subjective feeling, we measured it in a more objective way. In order to control this individual (subjective) heterogeneity, we modeled its effect in a multilevel framework with other control variables.

Popularity of a followee was measured by the ratio of the number of followers to the number of followees at Wave 1. The numbers are directly provided by Twitter's profile API. The average of popularity is 19,880 ( Mdn = 2, SD = 332,295). Similarly, we calculated the popularity score for each ego user ( M = 0.90, Mdn = 0.45, SD = 12.06).

Reciprocity was measured by a binary variable. If a followee of an ego was also a follower of the ego at Wave 1, we said the tie was reciprocal at Wave 1. We calculated the reciprocity only at Wave 1 and use it as a time-lag predictor of the unfollowing behavior in Wave 2. In our data, 36.8% of the ties are reciprocal.

To calculate the number of common followees between the ego and their alters at Wave 1, we collected the followees of the egos and the followees of the alters. We then compared the followee lists of the egos and the followee lists of the alters to determine the number of shared followees. In Figure 1 , Ego 2 and Alter 5 shared a common followee—Alter 4. On average, the egos and alters shared 62 ( Mdn = 12, SD = 208) followees in our dataset.
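Reciprocity and shared followees can both be read off the followee lists with basic set operations. The sketch below uses a hypothetical toy graph that only loosely echoes the Figure 1 example; the function names and the data layout (user id mapped to the set of accounts that user follows) are assumptions.

```python
def is_reciprocal(ego, alter, followees):
    """True if the alter, whom the ego follows, also follows the ego."""
    return ego in followees.get(alter, set())


def shared_followees(ego, alter, followees):
    """Number of accounts followed by both the ego and the alter."""
    return len(followees.get(ego, set()) & followees.get(alter, set()))


# Hypothetical Wave 1 data: user id -> set of accounts that user follows.
followees = {
    "ego_2": {"alter_4", "alter_5"},
    "alter_5": {"alter_4"},
    "alter_4": {"ego_2"},
}

print(shared_followees("ego_2", "alter_5", followees))
print(is_reciprocal("ego_2", "alter_4", followees))
```

In this toy graph `ego_2` and `alter_5` share one followee (`alter_4`), and only the tie between `ego_2` and `alter_4` is reciprocal.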

We included two types of control variables that have been investigated in previous studies ( Kivran-Swaine et al., 2011 ; Kwak et al., 2012 ; Xu et al., 2013 ). The ego-specific predictors are the characteristics of ego users, whereas alter-specific predictors are the characteristics of the followees. Ego-specific variables include ego popularity, the number of tweets, and years since registration, all of which are directly provided by Twitter's profile API. Alter-specific variables include the number of tweets, years since registration, hashtag rate (the proportion of the tweets containing at least one hashtag), and interaction frequency. Interaction frequency was measured by the sum of the frequency of the ego retweeting its followees' tweets, the frequency of the ego replying to its followees, and the frequency of the ego mentioning them.

Finally, we include the order of follow as an alter-specific control variable. Twitter does not offer information about the establishment time of each relationship. However, it does provide the temporal order of the establishment of relationships in the personal network ( Kwak et al., 2011 ). Similarly, we constructed the relative order in relationship establishment of followees for each ego user by breaking the followees into 10 groups. The final score ranges from 10% (the most recent 10% of followees) to 100% (the oldest 10% of followees).
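The decile scoring can be sketched as below. It assumes the followee list is ordered from the most recently followed account to the oldest, which is an assumption about the input rather than something stated here; the function name is hypothetical.

```python
def order_of_follow(followees_recent_first):
    """Score each followee from 10 (most recent tenth) to 100 (oldest tenth)."""
    n = len(followees_recent_first)
    scores = {}
    for rank, user in enumerate(followees_recent_first, start=1):
        # Integer ceiling of 10 * rank / n gives the decile group (1 to 10).
        decile = (10 * rank + n - 1) // n
        scores[user] = decile * 10
    return scores


# Hypothetical list of 20 followees, most recently followed first.
followee_ids = [f"u{i}" for i in range(1, 21)]
scores = order_of_follow(followee_ids)
print(scores["u1"], scores["u3"], scores["u20"])
```

With 20 followees each decile holds two accounts. For egos with fewer than 10 followees some decile groups stay empty, a case the text does not discuss.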

Data Analysis

We used multilevel logistic regression ( Snijders & Bosker, 2012 ) to test our hypotheses. The multilevel framework has been successfully employed to model (ego-centric) network formation problems (e.g., Golder & Yardi, 2010 ; Kivran-Swaine et al., 2011 ). In our study, the unit of analysis is the tie between egos and alters. Each following relationship nested under the same ego user could be influenced by the unique characteristics of that particular ego. We chose the logit link function because our dependent variable is a binary response (i.e., unfollowing or not). All alter-specific measures are Level-1 variables. All ego-level predictors are Level-2 variables.
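As a reading aid, the model described above can be written in a generic two-level logistic form. The notation is introduced here for illustration and is not the authors' own, and the random-intercept-only structure is an assumption.

```latex
\operatorname{logit}\,\Pr(Y_{ij} = 1)
  = \gamma_{00}
  + \sum_{p} \beta_{p}\, x_{pij}
  + \sum_{q} \gamma_{q}\, z_{qj}
  + u_{0j},
\qquad u_{0j} \sim \mathcal{N}(0, \tau_{0}^{2})
```

Here $Y_{ij} = 1$ if ego $j$ unfollowed alter $i$ between the two waves, $x_{pij}$ are the Level-1 (tie and alter) predictors, $z_{qj}$ are the Level-2 (ego) predictors, and $u_{0j}$ is the ego-specific random intercept.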

Repertoire Stabilization

Table 1 presents the formal models to predict the unfollowing behavior on Twitter. We calculated two types of R 2 for multilevel models ( Nakagawa & Schielzeth, 2013 ): Marginal R 2 is concerned with variance explained by fixed factors, and conditional R 2 is concerned with variance explained by both fixed and random factors. The full model (the second column in Table 1 ) can explain 69.7% of the variance. We should note that much of the variance comes from the ego level. The Intraclass Correlation Coefficient for a null model without any predictors is 95.5%. In our data, nearly two-thirds of users did not unfollow any users during our observations. The marginal R 2 of the full model is 6.1%.

Table 1. Multilevel Logistic Regression Models Predicting Unfollowing

| Predictor | Model 1: Estimate (SE) | Model 1: Z | Full Model: Estimate (SE) | Full Model: Z | Raw Text Measures: Estimate (SE) | Raw Text Measures: Z |
|---|---|---|---|---|---|---|
| Similarity | −2.312 (.313) | −7.39 | −1.700 (.339) | −5.02 | −2.349 (.604) | −3.89 |
| Overload | 0.102 (.023) | 11.55 | 0.083 (.009) | 9.18 | 0.038 (.013) | 2.87 |
| Redundancy | −0.019 (.002) | −0.80 | 0.120 (.024) | 5.00 | 0.071 (.031) | 2.26 |
| Overload × Similarity | −0.353 (.141) | −2.50 | −0.458 (.139) | −3.28 | 0.201 (.077) | 2.63 |
| Overload × Redundancy | −0.068 (.018) | −3.82 | −0.032 (.018) | −1.79 | 0.049 (.020) | 2.47 |
| Similarity × Redundancy | 1.489 (.502) | 2.97 | 0.953 (.562) | 1.70 | 1.868 (1.056) | 1.77 |
| Alter popularity | | | −0.036 (.009) | −4.14 | −0.036 (.009) | −4.19 |
| Reciprocity | | | −1.518 (.017) | −89.58 | −1.513 (.017) | −89.43 |
| Shared followees | | | −0.151 (.007) | −21.23 | −0.151 (.007) | −21.16 |
| Order of follow (old) | −1.555 (.021) | −72.95 | −1.452 (.022) | −66.80 | −1.456 (.022) | −66.95 |
| Interaction frequency | −0.259 (.013) | −19.95 | −0.219 (.013) | −17.13 | −0.219 (.013) | −17.13 |
| Number of tweets (alter) | 0.004 (.007) | 0.57 | 0.013 (.007) | 1.72 | 0.019 (.007) | 2.59 |
| Years since registration (alter) | 0.005 (.004) | 1.26 | −0.060 (.004) | −13.48 | −0.062 (.004) | −14.13 |
| Hashtag rate | 0.006 (.052) | 0.11 | 0.106 (.052) | 2.01 | 0.021 (.052) | 0.41 |
| Ego popularity | 0.034 (.012) | 2.79 | −0.036 (.013) | −2.85 | 0.034 (.011) | 2.96 |
| Number of tweets (ego) | 0.143 (.034) | 4.19 | 0.192 (.036) | 5.35 | 0.173 (.032) | 5.41 |
| Years since registration (ego) | −0.031 (.026) | −1.20 | 0.003 (.027) | 0.10 | 0.004 (.026) | 0.15 |
| Intercept | −5.357 (.096) | −55.83 | −5.119 (.101) | −50.69 | −4.746 (.093) | −50.82 |
| Ego-level variance (SD) | 8.138 (2.853) | | 9.021 (3.003) | | 7.177 (2.679) | |
| Log likelihood | −119,115.4 | | −113,763.1 | | −113,979.6 | |
| Conditional R 2 | 67.8% | | 69.7% | | 65.2% | |
| Marginal R 2 | 2.6% | | 6.1% | | 7.0% | |
| N (ties) | 1,613,735 | | | | | |
| N (egos) | 7,326 | | | | | |


p < .01,

Variables were rescaled using Z-scores ( M = 0, SD = 1) for multilevel analyses.

Variables that were measured at the ego level (i.e., level-2 variables).

All tie measures and alter-specific measures are Level-1 variables. All ego-level predictors are Level-2 variables.

The first two models were based on the hashtag measures, while the last model was based on the raw text measures of similarity and redundancy.

If we only focused on the users who had unfollowed at least once, the same model could explain 10.4% of the variance by the fixed factors.

The order of follow shows a significant effect on unfollowing, suggesting that people are less likely to unfollow the users with whom they have been connected for a relatively long time. In this way, users re-examine their recent followees and decide whether to keep them in their information repertoires, so the repertoire becomes more and more stable. An alternative explanation is that older followees might represent strong ties and are thus less likely to be unfollowed. Figure 2 shows that even after we controlled for the interaction frequency and other variables, the negative relationship between the order of follow and unfollowing probability still holds. The result implies that users are intentionally stabilizing their information repertoires over time.

Figure 2. The observed and estimated probabilities of unfollowing as a function of the order of follow. The estimated probability was calculated based on the full model in Table 1 .

Informational Predictors

The major concern of the current study is to examine the role of informational factors in building personal repertoires of information sources. H1 stated that people are more likely to unfollow when they receive overloaded information. The full model in Table 1 shows that information overload is significantly associated with unfollowing, which means that users are more likely to unfollow the followees who posted too many tweets during the two waves ( B = 0.071, SE = .006, p < .01). Therefore, H1 is supported.

H2 stated that users are less likely to unfollow the users sharing similar hashtags. The full model shows that hashtag similarity is negatively associated with unfollowing. That means users are more likely to keep the followees who tweeted similar hashtags ( B = −1.165, SE = .137, p < .01). Therefore, H2 is supported.

H3 stated that hashtag similarity moderates the impact of information overload on unfollowing other users. Table 1 suggests that the interaction effect of hashtag similarity and information overload on unfollowing is statistically significant ( B = −0.458, SE = .139, p < .01). Figure 3 illustrates that the positive association between information overload and unfollowing is stronger when hashtag similarity between the ego and alter is low. From Figure 3 , we also note that the difference in unfollowing probability between high and low similarity increases exponentially as information overload increases, indicating that information overload reinforces similarity-based selection. Therefore, H3 is supported.

Figure 3. The interaction effect of information overload and similarity on unfollowing. The estimated probability was calculated based on the full model in Table 1 .

H4 stated that users are more likely to unfollow the users whose tweets included many redundant hashtags. In the full model, hashtag redundancy is positively associated with unfollowing ( B = 0.120, SE = .036, p < .01), suggesting that a one-unit increase in hashtag redundancy increases the probability of being unfollowed by 3%. Therefore, H4 is supported.

Concerning H5 and H6, the full model suggests that neither interaction effect is significant. This indicates that the redundancy effect is not conditional on information overload or similarity when relational factors are controlled for. Therefore, H5 and H6 are not supported.

Relational Predictors

According to the full model in Table 1 , all relational factors show significant impacts on unfollowing behavior. Consistent with previous studies, popularity is negatively associated with unfollowing ( B = −0.036, SE = .009, p < .01). Ego users are less likely to unfollow users with more followers. Reciprocity is also negatively correlated with unfollowing ( B = −1.518, SE = .017, p < .01). For followees with reciprocal ties with their egos at Wave 1, the probability of being unfollowed is 64% lower than the probability for the followees without reciprocal ties (82% versus 18%). Finally, the number of shared followees is negatively associated with unfollowing ( B = −0.151, SE = .017, p < .01), indicating that the followees who share more followees with the egos are less likely to be unfollowed by the egos.
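One way to read the "82% versus 18%" comparison is through the odds ratio implied by the reciprocity coefficient. The sketch below shows that arithmetic only; the interpretation (an odds ratio expressed as a two-way split) is an assumption about how the figures were obtained, and the function names are hypothetical.

```python
import math


def odds_ratio(coefficient):
    """Odds ratio implied by a logistic-regression coefficient."""
    return math.exp(coefficient)


def two_way_split(ratio):
    """Split 100% between two groups whose odds stand in the given ratio."""
    share = ratio / (1.0 + ratio)
    return share, 1.0 - share


b_reciprocity = -1.518  # full-model estimate reported in the text
ratio = odds_ratio(b_reciprocity)
reciprocal_share, nonreciprocal_share = two_way_split(ratio)

print(f"odds ratio = {ratio:.3f}")
print(f"split = {reciprocal_share:.0%} vs {nonreciprocal_share:.0%}")
```

The odds ratio comes out at roughly 0.22, which corresponds to a split of about 18% versus 82%, and the gap between those two shares is the 64 percentage points mentioned above.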

To answer the RQ, Model 1 in Table 1 excluded all relational factors. The inconsistency between Model 1 and the full model is caused by the exclusion of relational variables. First, the redundancy effect is no longer significant in Model 1. It indicates that relational factors are suppressors. In our data, information redundancy is positively correlated with reciprocity and the number of shared followees. For reciprocal ties, the followees' information redundancy is 0.43 on average, whereas the average information redundancy is 0.33 for nonreciprocal ties, χ 2 (1, N = 1,613,733) = 50,676, p < .001. The Spearman's rank correlation between the number of shared followees and information redundancy is 0.17 ( p < .001). This means that high information redundancy implies dense connections (i.e., reciprocal and sharing more followees), which in turn decreases the unfollowing probability in Model 1. In addition, without considering the relational factors, the interaction effects with hashtag redundancy are significant in Model 1. The model suggests that users are less likely to unfollow the alters with redundant hashtags when information overload is high. It implies that users appear to prefer information redundancy, a pattern that was produced by the relational factors.

This study conceptualized social media followees as information source repertoires and examined the dynamics of repertoire formation using panel data from Twitter. First, this study suggests that users maintain relatively stable information repertoires to cope with information overload. During the 3 months of observation, only 5.56% of the following ties changed. Despite that, our findings suggest that some users actively and continuously adjust their information source repertoires over time. Consistent with previous research ( Kwak et al., 2011 ), the newest followees are the most likely to be unfollowed, even when competing factors are controlled for. This implies that users are intentionally stabilizing their personal repertoires for daily information rather than receiving it passively.

In our dataset, nearly two-thirds of users did not unfollow any users during our observations. This indicates that unfollowing actually is not a popular behavior on Twitter. However, it does not mean that unfollowing is a rare phenomenon or it lacks theoretical significance. We tracked the unfollowing behavior in a relatively short period of time. The number of users who have unfollowed other users should be much larger than 1/3. If we consider the frequency of unfollowing as an indicator for rational selection of information sources, the current study suggests that most users are not rational but habitual information consumers ( Wood, Quinn, & Kashy, 2002 ). Instead of browsing all information channels, users would like to check information from a few sources repeatedly.

Second, this study extended the repertoire approach by examining the role of information overload, similarity, and redundancy in structuring information consumption patterns on a single social media platform. We found that seeking information similarity and reducing information redundancy could coexist in the process of optimizing information repertoires. One popular argument states that users are increasingly seeking content similar sources on social media. This is one of the important coping strategies people have for finding preferred content in an increasingly complex media environment ( Webster & Ksiazek, 2012 ). Following this tendency, individuals would like to consume a steady diet of their preferred type of information sources. Finally, users with similar interests will cluster together ( Himelboim et al., 2013 ) and cause information redundancy.

The current study indeed found that Twitter users are more inclined to keep those followees sharing similar hashtags. Under the information overload situations, the tendency of selecting content similar alters is reinforced (see Figure 3 ). However, the average hashtag similarity between egos and followees is only weakly associated with the average redundancy among the followees ( r = 0.038, t = 3.27, df = 7,267, p < .01). The reason is that, as Table 1 suggests, people intentionally unfollowed the users with redundant information, even though they kept the similar alters at the same time. As a balance, their information repertoires contain the messages they are interested in and with very little redundancy. This also implies that people do have diverse interests and try to sample a diverse range of sources to build their information repertoires.

Third, we note that the formation process is significantly constrained by relational factors (i.e., popularity, reciprocity, and the number of common followees). In addition to their direct effects on unfollowing, the relational factors can alter the impacts of informational factors. We found that relational variables are suppressors of the redundancy effect. This implies that some users received unexpected and redundant information from their networked users. This relational constraint can also explain why previous research found that information overload is higher on Facebook than that on Twitter ( Holton & Chyi, 2012 ), because relational constraint on Twitter is expected to be lower (e.g., Marwick & boyd, 2011 ). In addition, we hypothesized that the informational effects are conditional on each other. However, our results suggest that the redundancy effect is not dependent on information overload and similarity when the relational factors are controlled for.

Furthermore, although we focused on the information variables in building information repertoires, it does not mean that alternative explanations are impossible. On the contrary, our study is consistent with previous repertoire studies in finding that structural factors are more important than other factors (see Webster, 2014 ). The structural factors in the present study include the relational variables that characterize the online social networks and the control variables. For example, the low ratio of the number of followers to the number of followees (popularity) indicates that the users are inclined to keep more information sources. This finding is consistent with the idea of audience availability in television program choice ( Webster & Wakshlag, 1983 ). Following many sources may suggest the users' availability in viewing new messages. However, these variables are at the microlevel or mesolevel in general. Future studies can explore the impacts of more macrolevel variables on the unfollowing behavior. As suggested by Webster ( 2014 ), the aggregate network level analysis would be beneficial to understand the bounded rationality of online user behaviors.

Limitations and Future Research

Several limitations can be associated with this study. First, when considering followees as an information repertoire, we assume that users actually read only the messages posted by their followees. This assumption might not be accurate. Users can simply ignore the messages that they are not interested in to reduce information overload ( Savolainen, 2007 ). In addition, users can receive messages beyond their immediate following networks. Social networks are not the only mechanisms through which users are directed to media. Recommender systems and search engines are commonly used to direct audience attention on social media platforms ( Webster, 2010 ). However, the following relationships do indicate awareness of the presence of the followees ( Himelboim et al., 2013 ). Future studies can track users' browsing history on social websites to examine patterns of consuming specific messages rather than sources.

Second, Twitter provides researchers with the unique opportunity to track patterns of individual selection of information sources. Although the unobtrusive approach provides more objective measures, it lacks information on both demographic and psychological variables. Previous studies have found that demographic variables, such as gender and age, show significant impact on the composition of media repertoires (e.g., Yuan, 2011 ). In addition, users with different psychological characteristics may prefer different information-seeking approaches ( Stefanone, Hurley, & Yang, 2013 ). Future studies need to further control these variables and examine the interaction effects between the self-reported and objective measures employed to build information repertoires.

In addition, the unobtrusive approach can cause potential measurement errors. For example, using tweeting frequency to measure information overload might be problematic. Even when receiving the same number of messages, some users may perceive more overload than others. We could not measure this subjective feeling directly. Instead, we employed the multilevel framework to control this individual heterogeneity carefully. First, the impact of tweeting frequency on the probability of being unfollowed by egos was considered separately for each ego. Furthermore, we included the potential confounding variables to control the individual differences. For example, Table 1 suggests that egos with more followees actually are less likely to unfollow other users, indicating that those users may have a higher threshold of information overload.

We measured information similarity and redundancy based on hashtags. This kind of operationalization was based on the repertoire approach to studying the user-defined channel types. Although the hashtag provides a convenient way to measure the content topics, 10% of followees did not post any hashtags in our sample. In the current study we considered them as “no preference” cases, i.e., the similarity and redundancy scores are zero. Another way to measure information similarity and redundancy is to calculate the variables based on the raw text. However, we think they are conceptually different things. The purpose of the current study is to demonstrate that the user's choice of information sources is based on content topics rather than on the use of similar or unique words. As a robustness check, we conducted a post hoc analysis based on the raw text measures (see the last column in Table 1 : Raw Text Measures). We found that the main effects are similar, whereas the interaction effects are slightly different. Future studies should use more advanced techniques to detect user-defined categories, such as the topic modeling approach, which is similar to factor analysis in media repertoire studies (see Weng et al., 2010 ).

Finally, social media platforms emphasize different technological characteristics. Our results rely on Twitter, which puts a greater emphasis on news sharing. For other social media platforms, like Facebook, studies may emphasize social networking. In this sense, users might be less susceptible to the information variables than was the case in our study. Furthermore, previous repertoire studies have demonstrated that people could build their personal repertoires across media platforms or rely on one of them. The choice of different repertoires is associated with user background characteristics (e.g., Kim, 2014 ). For studies based on a single platform, it is difficult to capture more general media use patterns. For example, watching TV news intensively may cause information overload or redundancy on Twitter. Therefore, future studies are encouraged to test our hypotheses across different social media platforms.

This study was supported by the Public Policy Research Funding Scheme of the Central Policy Unit of the Government of the Hong Kong Special Administrative Region (2013.A8.009.14A) and the Small Project Funding from The University of Hong Kong (201409176011).

Barabasi, A. L., & Albert, R. (1999). Emergence of scaling in random networks. Science, 286(5439), 509–512. doi: 10.1126/science.286.5439.509

Chaffee, S. H. (1982). Mass media and interpersonal channels: Competitive, convergent, or complementary. In G. Gumpert & R. Cathcart (Eds.), Inter/media: Interpersonal communication in a media world (3rd ed., pp. 62–80). New York, NY: Oxford University Press.

Dutta-Bergman, M. J. (2004). Complementarity in consumption of news types across traditional and new media. Journal of Broadcasting & Electronic Media, 48(1), 41–60. doi: 10.1207/s15506878jobem4801_3

Farhoomand, A. F., & Drury, D. H. (2002). Managerial information overload. Communications of the ACM, 45(10), 127–131. doi: 10.1145/570907.570909

Ferguson, D. A., & Perse, E. M. (2000). The World Wide Web as a functional alternative to television. Journal of Broadcasting & Electronic Media, 44(2), 155–174. doi: 10.1207/s15506878jobem4402_1

Franz, H. (1999). The impact of computer mediated communication on information overload in distributed teams. Proceedings of the 32nd Annual Hawaii International Conference on System Sciences, 1999, HICSS-32, 6182030. doi: 10.1109/HICSS.1999.772712

Golder, S. A., & Yardi, S. (2010). Structural predictors of tie formation in twitter: Transitivity and mutuality. Paper presented at the 2010 IEEE Second International Conference on Social Computing (SocialCom).

Harrigan, N., Achananuparp, P., & Lim, E. P. (2012). Influentials, novelty, and social contagion: The viral power of average friends, close communities, and old news. Social Networks, 34(4), 470–480. doi: 10.1016/j.socnet.2012.02.005

Hermida, A., Fletcher, F., Korell, D., & Logan, D. (2012). Share, like, recommend: Decoding the social media news consumer. Journalism Studies, 13(5–6), 815–824. doi: 10.1080/1461670x.2012.664430

Himelboim, I., Hansen, D., & Bowser, A. (2013). Playing the same Twitter network: Political information seeking in the 2010 US gubernatorial elections. Information Communication & Society, 16(9), 1373–1396. doi: 10.1080/1369118x.2012.706316

Holton, A. E., & Chyi, H. I. (2012). News and the overloaded consumer: Factors influencing information overload among news consumers. Cyberpsychology Behavior and Social Networking, 15(11), 619–624. doi: 10.1089/cyber.2011.0610

Jenkins, H. (2006). Convergence culture: Where old and new media collide. New York, NY: New York University Press.

Kim, S. J. (2014). A repertoire approach to cross-platform media use behavior. New Media & Society, 1–20. doi: 10.1177/1461444814543162

Kivran-Swaine, F., Govindan, P., & Naaman, M. (2011). The impact of network structure on breaking ties in online social networks: Unfollowing on Twitter. Paper presented at the Proceedings of the SIGCHI Conference on Human Factors in Computing Systems.

Kwak, H., Chun, H., & Moon, S. (2011). Fragile online relationship: A first look at unfollow dynamics in twitter. Paper presented at the Proceedings of the SIGCHI Conference on Human Factors in Computing Systems.

Kwak, H., Lee, C., Park, H., & Moon, S. (2010). What is Twitter, a social network or a news media? Paper presented at the Proceedings of the 19th International Conference on World Wide Web.

Kwak, H., Moon, S. B., & Lee, W. (2012). More of a receiver than a giver: Why do people unfollow in Twitter? Paper presented at the ICWSM.

Liang, H., & Fu, K. W. (2015). Testing propositions derived from Twitter studies: Generalization and replication in computational social science. Plos One. doi: 10.1371/journal.pone.0134270

Marwick, A. E., & boyd, d. (2011). I tweet honestly, I tweet passionately: Twitter users, context collapse, and the imagined audience. New Media & Society, 13(1), 114–133. doi: 10.1177/1461444810365313

McPherson, M., Smith-Lovin, L., & Cook, J. M. (2001). Birds of a feather: Homophily in social networks. Annual Review of Sociology, 27, 415–444. doi: 10.1146/annurev.soc.27.1.415

Murthy, D. (2012). Towards a sociological understanding of social media: Theorizing Twitter. Sociology-the Journal of the British Sociological Association, 46(6), 1059–1073. doi: 10.1177/0038038511422553

Myers, S. A., & Leskovec, J. (2014). The bursty dynamics of the twitter information network. Paper presented at the Proceedings of the 23rd International Conference on World Wide Web.

Myers, S. A., Sharma, A., Gupta, P., & Lin, J. (2014). Information network or social network?: The structure of the twitter follow graph. Paper presented at the Proceedings of the Companion Publication of the 23rd International Conference on World Wide Web.

Nakagawa, S., & Schielzeth, H. (2013). A general and simple method for obtaining R2 from generalized linear mixed-effects models. Methods in Ecology and Evolution, 4(2), 133–142. doi: 10.1111/j.2041-210x.2012.00261.x

Quercia, D., Bodaghi, M., & Crowcroft, J. (2012). Loosing friends on Facebook. Paper presented at the Proceedings of the 4th Annual ACM Web Science Conference.

Robertson, S. (2004). Understanding inverse document frequency: On theoretical arguments for IDF. Journal of Documentation, 60(5), 503–520. doi: 10.1108/00220410560582

Salton, G., Wong, A., & Yang, C.-S. (1975). A vector space model for automatic indexing. Communications of the ACM, 18(11), 613–620. doi: 10.1145/361219.361220

Savolainen, R. (2007). Filtering and withdrawing: Strategies for coping with information overload in everyday contexts. Journal of Information Science, 33(5), 611–621. doi: 10.1177/0165551506077418

Snijders, T. A. B., & Bosker, R. J. (2012). Multilevel analysis: An introduction to basic and advanced multilevel modeling (2nd ed.). London: Sage.

Stefanone, M. A., Hurley, C. M., & Yang, Z. J. (2013). Antecedents of online information seeking. Information Communication & Society, 16(1), 61–81. doi: 10.1080/1369118x.2012.656137

Stephens, K. K., Barrett, A. K., & Mahometa, M. J. (2013). Organizational communication in emergencies: Using multiple channels and sources to combat noise and capture attention. Human Communication Research, 39(2), 230–251. doi: 10.1111/hcre.12002

Sunstein, C. R. (2009). Republic.com 2.0. Princeton, NJ: Princeton University Press.

Taneja, H., Webster, J. G., Malthouse, E. C., & Ksiazek, T. B. (2012). Media consumption across platforms: Identifying user-defined repertoires. New Media & Society, 14(6), 951–968. doi: 10.1177/1461444811436146

Watson-Manheim, M. B., & Belanger, F. (2007). Communication media repertoires: Dealing with the multiplicity of media choices. Mis Quarterly, 31(2), 267–293.

Webster, J. G. (2010). User information regimes: How social media shape patterns of consumption. Northwestern University Law Review, 104(2), 593–612.

Webster, J. G. (2014). The marketplace of attention: How audiences take shape in a digital age. MIT Press.

Webster, J. G., & Ksiazek, T. B. (2012). The dynamics of audience fragmentation: Public attention in an age of digital media. Journal of Communication, 62(1), 39–56. doi: 10.1111/j.1460-2466.2011.01616.x

Webster , J. G. , & Wakshlag , J. J. ( 1983 ). A theory of television program choice . Communication Research , 10 ( 4 ), 430 – 446 . doi: 10.1177/009365083010004002

Weng , J. , Lim , E.-P. , Jiang , J. , & He , Q. ( 2010 ). Twitterrank: finding topic-sensitive influential twitterers . Paper presented at the Proceedings of the third ACM international conference on Web search and data mining.

Wood , W. , Quinn , J. M. , & Kashy , D. A. ( 2002 ). Habits in everyday life: Thought, emotion, and action . Journal of Personality and Social Psychology , 83 ( 6 ), 1281 – 1297 . doi: 10.1037//0022-3514.83.6.1281

Wu , S. , Hofman , J. M. , Mason , W. A. , & Watts , D. J. ( 2011 ). Who says what to whom on Twitter . Paper presented at the Proceedings of the 20th international conference on World Wide Web.

Xu , B. , Huang , Y. , Kwak , H. , & Contractor , N. ( 2013 ). Structures of broken ties: Exploring unfollow behavior on twitter . Paper presented at the Proceedings of the 2013 conference on Computer supported cooperative work.

Yuan , E. ( 2011 ). News consumption across multiple media platforms: A repertoire approach . Information Communication & Society , 14 ( 7 ), 998 – 1016 . doi: 10.1080/1369118x.2010.549235

Hai Liang is Assistant Professor in the School of Journalism and Communication at the Chinese University of Hong Kong. His research interests include political communication, dynamic communication process, social media analytics, and computational social science. Address: School of Journalism and Communication, Room 424, Humanities Building, New Asia College, The Chinese University of Hong Kong, Shatin, N.T., Hong Kong.

King-wa Fu is Associate Professor at the Journalism and Media Studies Centre, The University of Hong Kong. His research interests cover political participation and media use, computational media studies, health and the media, and younger generation's Internet use. Address: Journalism and Media Studies Centre, Room 206, Eliot Hall, Pokfulam Road, The University of Hong Kong, Hong Kong.



How to Avoid Repetition and Redundancy in Academic Writing

Published on March 15, 2019 by Kristin Wieben. Revised on July 23, 2023.

Repetition and redundancy can cause problems at the level of either the entire paper or individual sentences. However, repetition is not always a problem: used properly, it can help your reader follow along. This article shows how to streamline your writing.


Avoiding repetition at the paper level

On the most basic level, avoid copying and pasting entire sentences or paragraphs into multiple sections of the paper. Readers generally don’t enjoy repetition of this type.

Don’t restate points you’ve already made

It’s important to strike an appropriate balance between restating main ideas to help readers follow along and avoiding unnecessary repetition that might distract or bore readers.

For example, if you’ve already covered your methods in a dedicated methodology chapter, you likely won’t need to summarize them a second time in the results chapter.

If you’re concerned about readers needing additional reminders, you can add short asides pointing readers to the relevant section of the paper (e.g. “For more details, see Chapter 4”).

Don’t use the same heading more than once

It’s important for each section to have its own heading so that readers skimming the text can easily identify what information it contains. If you have two conclusion sections, try making the heading more descriptive – for instance, “Conclusion of X.”

Are all sections relevant to the main goal of the paper?

Try to avoid providing redundant information. Every section, example and argument should serve the main goal of your paper and should relate to your thesis statement or research question.

If the link between a particular piece of information and your broader purpose is unclear, either draw the connection more explicitly or remove that information from your paper.


Avoiding repetition at the sentence level

Keep an eye out for lengthy introductory clauses that restate the main point of the previous sentence. This sort of sentence structure can bury the new point you’re trying to make. Try to keep introductory clauses relatively short so that readers are still focused by the time they encounter the main point of the sentence.

In addition to paying attention to these introductory clauses, you might want to read your paper aloud to catch excessive repetition. Below are some tips for avoiding the most common forms of repetition.

- Use a variety of different transition words

- Vary the structure and length of your sentences

- Don’t use the same pronoun to reference more than one antecedent (e.g. “They asked whether they were ready for them”)

- Avoid repetition of particular sounds or words (e.g. “Several shelves sheltered similar sets of shells”)

- Avoid redundancies (e.g. write “in 2019” rather than “in the year 2019”)

- Don’t state the obvious (e.g. “The conclusion chapter contains the paper’s conclusions”)

When is repetition not a problem?

It’s important to stress that repetition isn’t always problematic. Repetition can help your readers follow along. However, before adding repetitive elements to your paper, be sure to ask yourself if they are truly necessary.

Restating key points

Repeating key points from time to time can help readers follow along, especially in papers that address highly complex subjects. Here are some good examples of when repetition is not a problem:

Restating the research question in the conclusion: This will remind readers of exactly what your paper set out to accomplish and help to demonstrate that you’ve indeed achieved your goal.

Referring to your key variables or themes: Rather than use varied language to refer to these key elements of the paper, it’s best to use a standard set of terminology throughout the paper, as this can help your readers follow along.

Underlining main points

When used sparingly, repetitive sentence and paragraph structures can add rhetorical flourish and help to underline your main points. Here are a few famous examples:

“Ask not what your country can do for you – ask what you can do for your country” – John F. Kennedy, inaugural address

“…and that government of the people, by the people, for the people, shall not perish from the earth.” – Abraham Lincoln, Gettysburg Address
