Normal Hypothesis Testing (AQA A Level Maths: Statistics)


Normal Hypothesis Testing

How is a hypothesis test carried out with the normal distribution?

- The population mean is tested by looking at the mean of a sample taken from the population

- A hypothesis test is used when the value of the assumed population mean is questioned

- Make sure you clearly define µ before writing the hypotheses, if it has not been defined in the question

- The null hypothesis will always be H₀: µ = ...

- The alternative hypothesis depends on whether it is a one-tailed or two-tailed test

- For a one-tailed test, the alternative hypothesis will be H₁: µ > ... or H₁: µ < ...

- For a two-tailed test, the alternative hypothesis will be H₁: µ ≠ ...

- Remember that the variance of the sample mean distribution is the variance of the population distribution divided by n: if X ~ N(µ, σ²), then X̄ ~ N(µ, σ²/n)

- The mean of the sample mean distribution is the same as the mean of the population distribution

- The normal distribution is used to calculate the probability of the test statistic taking the observed value or a more extreme value

- You can either calculate this probability (the p-value) and compare it with the significance level, or find the critical region and check whether the observed value lies in it

- Finding the critical region can be more useful when considering more than one observed value or for further testing

How is the critical value found in a hypothesis test for the mean of a normal distribution?

- Given that the null hypothesis is true, the probability of the test statistic lying in the critical region is equal to the significance level

- To find the critical value(s), find the distribution of the sample means assuming H₀ is true, and use the inverse normal function on your calculator

- For a two-tailed test you will need to find both critical values, one at each end of the distribution
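The snippet below is a rough sketch of this step. It finds critical values for the sample mean, with Python's standard-library `statistics.NormalDist` standing in for the calculator's inverse normal function. It assumes σ is known. The helper `critical_values`, its `tail` argument and the example figures are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def critical_values(mu0, sigma, n, alpha, tail):
    """Hypothetical helper: critical value(s) for the sample mean under H0: mu = mu0.

    tail is "upper", "lower" or "two".
    """
    # Distribution of the sample mean assuming H0 is true: N(mu0, sigma^2 / n)
    xbar_dist = NormalDist(mu=mu0, sigma=sigma / sqrt(n))
    if tail == "upper":
        return (xbar_dist.inv_cdf(1 - alpha),)
    if tail == "lower":
        return (xbar_dist.inv_cdf(alpha),)
    if tail == "two":
        # Split the significance level equally between the two tails
        return (xbar_dist.inv_cdf(alpha / 2), xbar_dist.inv_cdf(1 - alpha / 2))
    raise ValueError("tail must be 'upper', 'lower' or 'two'")


# Hypothetical example: H0: mu = 50, sigma = 4, n = 16, two-tailed test at 5%
lower, upper = critical_values(50, 4, 16, 0.05, "two")
print(round(lower, 2), round(upper, 2))
```

Here σ/√n = 1, so the critical values are 50 ± 1.96. The sketch prints `48.04 51.96`, and a sample mean below 48.04 or above 51.96 would lie in the critical region.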

What steps should I follow when carrying out a hypothesis test for the mean of a normal distribution?

- Following these steps will help when carrying out a hypothesis test for the mean of a normal distribution:

Step 1. Define µ, the population mean, in the context of the question

Step 2. Write the null and alternative hypotheses clearly using the form

H₀: μ = ...

H₁: μ > ..., μ < ... or μ ≠ ...

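Step 3. Assuming H₀ is true, write down the distribution of the sample mean, X̄ ~ N(µ, σ²/n)

Step 4. Calculate either the critical value(s) or the p-value (the probability of the observed value or a more extreme value, assuming H₀ is true) for the test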

Step 5. Compare the observed value of the test statistic with the critical value(s), or the p-value with the significance level

Step 6. Decide whether there is enough evidence to reject H₀; if there is not, H₀ is not rejected (this does not prove it is true)

Step 7. Write a conclusion in context
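Steps 3 to 6 can be sketched in Python for the case where σ is known, using the p-value route in Step 4. This is a minimal illustration that assumes Python 3.8+ for `statistics.NormalDist`. The function `z_test_mean`, its parameters and the example figures are hypothetical, and Step 7 (the conclusion in context) is still written by hand.

```python
from math import sqrt
from statistics import NormalDist


def z_test_mean(xbar, mu0, sigma, n, alpha, alternative):
    """Hypothetical helper: test H0: mu = mu0 using the sample mean.

    alternative is ">", "<" or "!=" (the sign used in H1).
    Returns (z, p_value, reject_h0).
    """
    # Step 3: assuming H0 is true, Xbar ~ N(mu0, sigma^2 / n); standardise it
    z = (xbar - mu0) / (sigma / sqrt(n))
    std_normal = NormalDist()
    # Step 4: p-value = probability of a value at least this extreme under H0
    if alternative == ">":
        p = 1 - std_normal.cdf(z)
    elif alternative == "<":
        p = std_normal.cdf(z)
    elif alternative == "!=":
        p = 2 * (1 - std_normal.cdf(abs(z)))
    else:
        raise ValueError("alternative must be '>', '<' or '!='")
    # Steps 5 and 6: compare the p-value with the significance level
    return z, p, p < alpha


# Hypothetical example: H0: mu = 50, H1: mu != 50, sigma = 4, n = 16, xbar = 52
z, p, reject = z_test_mean(52, 50, 4, 16, 0.05, "!=")
```

With these figures z = 2 and the p-value is about 0.046. That is below 0.05, so H₀ would be rejected at the 5% level.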


Author: Amber

Amber gained a first class degree in Mathematics & Meteorology from the University of Reading before training to become a teacher. She is passionate about teaching, having spent 8 years teaching GCSE and A Level Mathematics both in the UK and internationally. Amber loves creating bright and informative resources to help students reach their potential.

Hypothesis Testing with the Normal Distribution


Introduction

When carrying out a hypothesis test (or constructing a confidence interval) with the standard normal distribution, these are the most important critical values that will be needed.

| Significance level | $10$% | $5$% | $1$% |
|---|---|---|---|
| $z_{1-\alpha}$ (one-tailed) | $1.28$ | $1.645$ | $2.33$ |
| $z_{1-\frac{\alpha}{2}}$ (two-tailed) | $1.645$ | $1.96$ | $2.58$ |
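These critical values come from the inverse of the standard normal cumulative distribution function. The short sketch below recomputes them with Python's `statistics.NormalDist.inv_cdf` instead of tables.

```python
from statistics import NormalDist

std_normal = NormalDist()  # standard normal distribution: mean 0, standard deviation 1

# Map each significance level to (one-tailed z_{1-alpha}, two-tailed z_{1-alpha/2})
critical = {
    alpha: (std_normal.inv_cdf(1 - alpha), std_normal.inv_cdf(1 - alpha / 2))
    for alpha in (0.10, 0.05, 0.01)
}

for alpha, (one_tailed, two_tailed) in critical.items():
    print(f"{alpha:.0%}: one-tailed {one_tailed:.3f}, two-tailed {two_tailed:.3f}")
```

It prints 1.282, 1.645 and 2.326 for the one-tailed values, and 1.645, 1.960 and 2.576 for the two-tailed values. These round to the entries in the table.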

Distribution of Sample Means

Suppose we test the null hypothesis $H_0: \mu = \mu_0$, where $\mu$ is the true mean and $\mu_0$ is the currently accepted population mean. Draw samples of size $n$ from a population with standard deviation $\sigma$. When $n$ is large enough and the null hypothesis is true, the sample means approximately follow a normal distribution with mean $\mu_0$ and standard deviation $\frac{\sigma}{\sqrt{n}}$ (exactly, if the population itself is normal). This is called the distribution of sample means and can be denoted by $\bar{X} \sim \mathrm{N}\left(\mu_0, \frac{\sigma^2}{n}\right)$. This follows from the central limit theorem.

The $z$-score is then obtained using the formula \[Z = \dfrac{\bar{X} - \mu_0}{\frac{\sigma}{\sqrt{n}}}.\]

So if $\mu = \mu_0$, then $\bar{X} \sim \mathrm{N}\left(\mu_0, \frac{\sigma^2}{n}\right)$ and $Z \sim \mathrm{N}(0,1)$.

The alternative hypothesis will then take one of the following forms, depending on what we are testing: \[H_1: \mu > \mu_0, \qquad H_1: \mu < \mu_0, \qquad H_1: \mu \neq \mu_0.\]

Worked Example

An automobile company is looking for fuel additives that might increase gas mileage. Without additives, their cars are known to average $25$ mpg (miles per gallon) with a standard deviation of $2.4$ mpg on a road trip from London to Edinburgh. The company now asks whether a particular new additive increases this value. In a study, thirty cars are sent on a road trip from London to Edinburgh. Suppose it turns out that the thirty cars averaged $\overline{x}=25.5$ mpg with the additive. Can we conclude from this result that the additive is effective?

We are asked to test whether the new additive increases the mean miles per gallon. The current mean is $\mu = 25$, so the null hypothesis will be that nothing changes. The alternative hypothesis will be $\mu > 25$ because this is what we have been asked to test.

\begin{align} &H_0:\mu=25. \\ &H_1:\mu>25. \end{align}

Now we need to calculate the test statistic. We assume the normal model is still valid, because the null hypothesis states there is no change in $\mu$. As the value $\sigma=2.4$ mpg is known, we perform a hypothesis test with the standard normal distribution, so the test statistic will be a $z$-score. We compute it using the formula \[z=\frac{\bar{x}-\mu_0}{\frac{\sigma}{\sqrt{n} } }.\] So \begin{align} z&=\frac{25.5-25}{\frac{2.4}{\sqrt{30} } }\\ &=1.14 \end{align}

We are using a $5$% significance level and a (right-sided) one-tailed test, so $\alpha=0.05$, and from the table we obtain the critical value $z_{1-\alpha} = 1.645$.

As $1.14<1.645$, the test statistic is not in the critical region, so we cannot reject $H_0$. Thus, the observed sample mean $\overline{x}=25.5$ is consistent with the hypothesis $H_0:\mu=25$ at the $5$% significance level, and there is insufficient evidence that the additive is effective.
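The calculation can be checked with a few lines of Python. This sketch uses the figures from this example, with `statistics.NormalDist().inv_cdf` in place of the table.

```python
from math import sqrt
from statistics import NormalDist

# Figures from the fuel-additive example
mu0, sigma, n, xbar = 25, 2.4, 30, 25.5
alpha = 0.05  # right-sided one-tailed test

z = (xbar - mu0) / (sigma / sqrt(n))        # test statistic
z_crit = NormalDist().inv_cdf(1 - alpha)    # critical value z_{1-alpha}
print(f"z = {z:.2f}, critical value = {z_crit:.3f}, reject H0: {z > z_crit}")
```

It prints `z = 1.14, critical value = 1.645, reject H0: False`, which matches the conclusion above.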

Video Example

In this video, Dr Lee Fawcett explains how to conduct a hypothesis test for the mean of a single distribution whose variance is known, using a one-sample z-test.

Approximation to the Binomial Distribution

A supermarket has come under scrutiny after a number of complaints that its carrier bags fall apart when the load they carry is $5$kg. Out of a random sample of $200$ bags, $185$ do not tear when carrying a load of $5$kg. Can the supermarket claim at a $5$% significance level that more than $90$% of the bags will not fall apart?

Let $X$ represent the number of carrier bags which can hold a load of $5$kg. Then $X \sim \mathrm{Bin}(200,p)$ and \begin{align}H_0&: p = 0.9 \\ H_1&: p > 0.9 \end{align}

Assuming $H_0$ is true, we need to calculate the mean $\mu$ and variance $\sigma ^2$.

\[\mu = np = 200 \times 0.9 = 180\text{.}\] \[\sigma ^2= np(1-p) = 200 \times 0.9 \times 0.1 = 18\text{.}\]

Using the normal approximation to the binomial distribution we obtain $Y \sim \mathrm{N}(180, 18)$.

Applying a continuity correction, \[\mathrm{P}[X \geq 185] \approx \mathrm{P}[Y \geq 184.5] = \mathrm{P}\left[Z \geq \dfrac{184.5 - 180}{\sqrt{18}} \right] = \mathrm{P}\left[Z \geq 1.0607\right] \text{.}\]

Because we are using a one-tailed test at a $5$% significance level, the critical value is $z=1.645$. Now $1.0607 < 1.645$, so the test statistic is not in the critical region and we cannot reject $H_0$. At the $5$% significance level, there is insufficient evidence for the supermarket to claim that over $90$% of its carrier bags are capable of withstanding a load of $5$kg.
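Below is a short Python sketch of this normal approximation. It uses `statistics.NormalDist` and the same continuity correction, and the variable names are illustrative only.

```python
from math import sqrt
from statistics import NormalDist

n, p0, observed = 200, 0.9, 185   # sample size, p under H0, bags that did not tear

mu = n * p0                       # mean under H0: 180
var = n * p0 * (1 - p0)           # variance under H0: 18
approx = NormalDist(mu=mu, sigma=sqrt(var))   # Y ~ N(180, 18)

# Continuity correction: P(X >= 185) is approximated by P(Y >= 184.5)
z = (observed - 0.5 - mu) / sqrt(var)
p_value = 1 - approx.cdf(observed - 0.5)
z_crit = NormalDist().inv_cdf(0.95)   # one-tailed test at the 5% level

print(f"z = {z:.4f}, p-value = {p_value:.3f}, reject H0: {z > z_crit}")
```

The z-value agrees with the 1.0607 above. The p-value is well above 0.05, so this route reaches the same decision as comparing z with 1.645.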

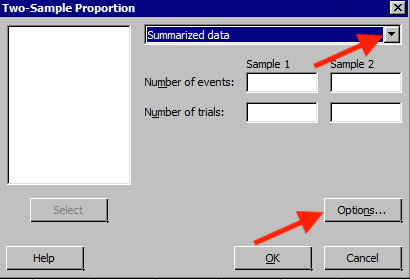

Comparing Two Means

When we test hypotheses with two means, we look at the difference $\mu_1 - \mu_2$. The null hypothesis will be of the form \[H_0: \mu_1 - \mu_2 = a,\]

where $a$ is a constant. Often $a=0$ is used to test whether the two means are the same. Take two independent continuous random variables $X_1$ and $X_2$ with means $\mu_1$ and $\mu_2$ and variances $\sigma_1^2$ and $\sigma_2^2$. Let $\bar{X_1}$ and $\bar{X_2}$ be the means of samples of sizes $n_1$ and $n_2$, with variances $\frac{\sigma_1^2}{n_1}$ and $\frac{\sigma_2^2}{n_2}$ respectively. Then \[\mathrm{E} [\bar{X_1} - \bar{X_2} ] = \mathrm{E} [\bar{X_1}] - \mathrm{E} [\bar{X_2}] = \mu_1 - \mu_2\] and \[\mathrm{Var}[\bar{X_1} - \bar{X_2}] = \mathrm{Var}[\bar{X_1}] + \mathrm{Var}[\bar{X_2}]=\frac{\sigma_1^2}{n_1}+\frac{\sigma_2^2}{n_2}\text{.}\] Note this last result: the variance of a difference of independent variables is calculated by summing the variances.

We then obtain the $z$-score using the formula \[Z = \frac{(\bar{X_1}-\bar{X_2})-(\mu_1 - \mu_2)}{\sqrt{\frac{\sigma_1^2}{n_1}+\frac{\sigma_2^2}{n_2}}}\text{.}\]
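The following is a sketch of this formula. It assumes independent samples with known population standard deviations. The function name `two_sample_z` and the figures in the usage line are hypothetical, not taken from the text.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_z(xbar1, xbar2, sigma1, sigma2, n1, n2, a=0.0):
    """Hypothetical helper: z-score for H0: mu1 - mu2 = a with known sigmas."""
    # Var(Xbar1 - Xbar2) = sigma1^2/n1 + sigma2^2/n2 for independent samples
    standard_error = sqrt(sigma1**2 / n1 + sigma2**2 / n2)
    return ((xbar1 - xbar2) - a) / standard_error


# Hypothetical figures: two-tailed test of H0: mu1 - mu2 = 0
z = two_sample_z(xbar1=52.0, xbar2=50.0, sigma1=6.0, sigma2=8.0, n1=36, n2=64)
p_value = 2 * (1 - NormalDist().cdf(abs(z)))
```

With these figures the standard error is √2, so z ≈ 1.414. The two-tailed p-value is about 0.157, so $H_0$ would not be rejected at the 5% level.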

These workbooks produced by HELM are good revision aids, containing key points for revision and many worked examples.

- Tests concerning a single sample

- Tests concerning two samples

Selecting a Hypothesis Test

- The Open University


4.1 The normal distribution

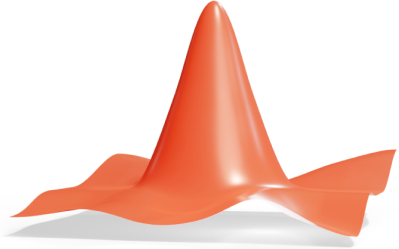

Here, you will look at the concept of the normal distribution and the bell-shaped curve. The peak point (the top of the bell) represents the most probable occurrences. Other possible occurrences are distributed symmetrically around the peak point, creating a downward-sloping curve on either side of it.

The cartoon shows a bell-shaped curve. The x-axis is titled ‘How high the hill is’ and the y-axis is titled ‘Number of hills’. The top of the bell-shaped curve is labelled ‘Average hill’, and the lower right tail of the curve is labelled ‘Big hill’.

In order to test hypotheses, you need to calculate the test statistic and compare it with a critical value from the bell curve. This is done using the concept of the ‘normal distribution’.

A normal distribution is a probability distribution that is symmetric about the mean, indicating that data near the mean are more likely to occur than data far from it. In graph form, a normal distribution appears as a bell curve. For the standard normal distribution, the values on the x-axis are z-scores. The test statistic that you will use to test the set of hypotheses is the z-score. A z-score measures how far the observation (the sample mean) is from the population mean, which sits at the 0 value of the standardised bell curve. This distance is measured in standard deviations. Therefore, when the z-score is equal to 2, the observation is 2 standard deviations above the value 0 in the normal distribution curve.

A symmetrical graph reminiscent of a bell. The top of the bell-shaped curve appears where the x-axis is at 0. This is labelled as Normal distribution.


Statistics By Jim

Making statistics intuitive

Normal Distribution in Statistics

By Jim Frost

The normal distribution, also known as the Gaussian distribution, is the most important probability distribution in statistics for independent, random variables. Most people recognize its familiar bell-shaped curve in statistical reports.

The normal distribution is a continuous probability distribution that is symmetrical around its mean: most of the observations cluster around the central peak, and the probabilities for values further away from the mean taper off equally in both directions. Extreme values in both tails of the distribution are similarly unlikely. While the normal distribution is symmetrical, not all symmetrical distributions are normal. For example, the Student’s t, Cauchy, and logistic distributions are symmetric but not normal.

As with any probability distribution, the normal distribution describes how the values of a variable are distributed. It is the most important probability distribution in statistics because it accurately describes the distribution of values for many natural phenomena. Characteristics that are the sum of many independent processes frequently follow normal distributions. For example, heights, blood pressure, measurement error, and IQ scores approximately follow the normal distribution.

In this blog post, learn how to use the normal distribution, what its parameters mean, how the Empirical Rule works, and how to calculate Z-scores to standardize your data and find probabilities.

Example of Normally Distributed Data: Heights

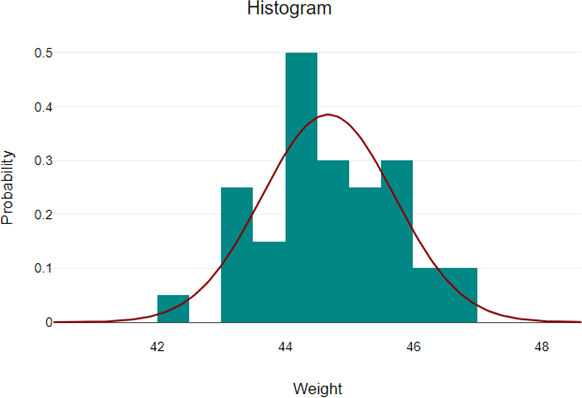

Height data are normally distributed. The distribution in this example fits real data that I collected from 14-year-old girls during a study. The graph below displays the probability density function for this normal distribution. Learn more about Probability Density Functions.

As you can see, the distribution of heights follows the typical bell curve pattern for all normal distributions. Most girls are close to the average (1.512 meters). Small differences between an individual’s height and the mean occur more frequently than substantial deviations from the mean. The standard deviation is 0.0741m, which indicates the typical distance that individual girls tend to fall from mean height.

The distribution is symmetric. The number of girls shorter than average equals the number of girls taller than average. In both tails of the distribution, extremely short girls occur as infrequently as extremely tall girls.

Parameters of the Normal Distribution

As with any probability distribution, the parameters for the normal distribution define its shape and probabilities entirely. The normal distribution has two parameters, the mean and standard deviation. The Gaussian distribution does not have just one form. Instead, the shape changes based on the parameter values, as shown in the graphs below.

The mean is the central tendency of the normal distribution. It defines the location of the peak for the bell curve. Most values cluster around the mean. On a graph, changing the mean shifts the entire curve left or right on the X-axis. Statisticians denote the population mean using μ (mu).

μ is the expected value of the normal distribution. Learn more about Expected Values: Definition, Using & Example.

Related posts: Measures of Central Tendency and What is the Mean?

Standard deviation σ

The standard deviation is a measure of variability. It defines the width of the normal distribution. The standard deviation determines how far away from the mean the values tend to fall. It represents the typical distance between the observations and the average. Statisticians denote the population standard deviation using σ (sigma).

On a graph, changing the standard deviation either tightens or spreads out the width of the distribution along the X-axis. Larger standard deviations produce wider distributions.

When you have narrow distributions, values are more likely to fall close to the mean. As you increase the spread of the bell curve, the likelihood that observations will be further away from the mean also increases.

Related post: Measures of Variability and Standard Deviation

Population parameters versus sample estimates

The mean and standard deviation are parameter values that apply to entire populations. For the Gaussian distribution, statisticians signify the parameters by using the Greek symbol μ (mu) for the population mean and σ (sigma) for the population standard deviation.

Unfortunately, population parameters are usually unknown because it’s generally impossible to measure an entire population. However, you can use random samples to calculate estimates of these parameters. Statisticians represent sample estimates of these parameters using x̅ for the sample mean and s for the sample standard deviation.

Learn more about Parameters vs Statistics: Examples & Differences.

Common Properties for All Forms of the Normal Distribution

Despite the different shapes, all forms of the normal distribution have the following characteristic properties.

- They’re all unimodal, symmetric bell curves. The Gaussian distribution cannot model skewed distributions.

- The mean, median, and mode are all equal.

- Half of the population is less than the mean and half is greater than the mean.

- The Empirical Rule allows you to determine the proportion of values that fall within certain distances from the mean. More on this below!

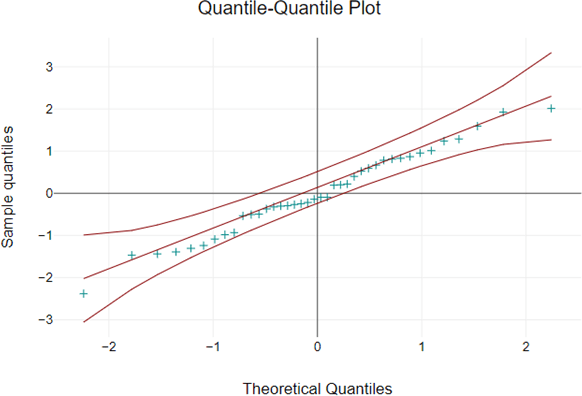

While the normal distribution is essential in statistics, it is just one of many probability distributions, and it does not fit all populations. To learn how to determine whether the normal distribution provides the best fit to your sample data, read my posts about How to Identify the Distribution of Your Data and Assessing Normality: Histograms vs. Normal Probability Plots.

The uniform distribution also models symmetric, continuous data, but all equal-sized ranges in this distribution have the same probability, which differs from the normal distribution.

If you have continuous data that are skewed, you’ll need to use a different distribution, such as the Weibull, lognormal, exponential, or gamma distribution.

Related post: Skewed Distributions

The Empirical Rule for the Normal Distribution

When you have normally distributed data, the standard deviation becomes particularly valuable. You can use it to determine the proportion of the values that fall within a specified number of standard deviations from the mean. For example, in a normal distribution, 68% of the observations fall within +/- 1 standard deviation from the mean. This property is part of the Empirical Rule, which describes the percentage of the data that fall within specific numbers of standard deviations from the mean for bell-shaped curves.

| Standard deviations from the mean | Percentage of data |
|---|---|
| ±1 | 68% |
| ±2 | 95% |
| ±3 | 99.7% |

Let’s look at a pizza delivery example. Assume that a pizza restaurant has a mean delivery time of 30 minutes and a standard deviation of 5 minutes. Using the Empirical Rule, we can determine that 68% of the delivery times are between 25-35 minutes (30 +/- 5), 95% are between 20-40 minutes (30 +/- 2*5), and 99.7% are between 15-45 minutes (30 +/-3*5). The chart below illustrates this property graphically.
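The Empirical Rule percentages can be recovered from the normal cumulative distribution function. This sketch uses Python's `statistics.NormalDist` and the pizza-delivery figures above.

```python
from statistics import NormalDist

std_normal = NormalDist()

# Proportion of a normal distribution within k standard deviations of the mean
within = {k: std_normal.cdf(k) - std_normal.cdf(-k) for k in (1, 2, 3)}
for k, share in within.items():
    print(f"within {k} SD: {share:.2%}")

# Pizza example: mean 30 minutes, standard deviation 5 minutes
delivery = NormalDist(mu=30, sigma=5)
share_25_to_35 = delivery.cdf(35) - delivery.cdf(25)  # equals within[1]
```

The exact values are about 68.27%, 95.45% and 99.73%, which the rule rounds to 68%, 95% and 99.7%.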

If your data do not follow the Gaussian distribution and you want an easy method to determine proportions for various standard deviations, use Chebyshev’s Theorem! That method provides a similar type of result as the Empirical Rule but for non-normal data.

To learn more about this rule, read my post, Empirical Rule: Definition, Formula, and Uses.

Standard Normal Distribution and Standard Scores

As we’ve seen above, the normal distribution has many different shapes depending on the parameter values. However, the standard normal distribution is a special case of the normal distribution where the mean is zero and the standard deviation is 1. This distribution is also known as the Z-distribution.

A value on the standard normal distribution is known as a standard score or a Z-score. A standard score represents the number of standard deviations above or below the mean that a specific observation falls. For example, a standard score of 1.5 indicates that the observation is 1.5 standard deviations above the mean. On the other hand, a negative score represents a value below the average. The mean has a Z-score of 0.

Suppose you weigh an apple and it weighs 110 grams. There’s no way to tell from the weight alone how this apple compares to other apples. However, as you’ll see, after you calculate its Z-score, you know where it falls relative to other apples.

Learn how the Z Test uses Z-scores and the standard normal distribution to determine statistical significance.

Standardization: How to Calculate Z-scores

Standard scores are a great way to understand where a specific observation falls relative to the entire normal distribution. They also allow you to take observations drawn from normally distributed populations that have different means and standard deviations and place them on a standard scale. This standard scale enables you to compare observations that would otherwise be difficult to compare.

This process is called standardization, and it allows you to compare observations and calculate probabilities across different populations. In other words, it permits you to compare apples to oranges. Isn’t statistics great!

To standardize your data, you need to convert the raw measurements into Z-scores.

To calculate the standard score for an observation, take the raw measurement, subtract the mean, and divide by the standard deviation. Mathematically, the formula for that process is the following:

\[Z = \frac{X - \mu}{\sigma}\]

X represents the raw value of the measurement of interest. Mu and sigma represent the parameters for the population from which the observation was drawn.

After you standardize your data, you can place them within the standard normal distribution. In this manner, standardization allows you to compare different types of observations based on where each observation falls within its own distribution.

Example of Using Standard Scores to Make an Apples to Oranges Comparison

Suppose we literally want to compare apples to oranges. Specifically, let’s compare their weights. Imagine that we have an apple that weighs 110 grams and an orange that weighs 100 grams.

If we compare the raw values, it’s easy to see that the apple weighs more than the orange. However, let’s compare their standard scores. To do this, we’ll need to know the properties of the weight distributions for apples and oranges. Assume that the weights of apples and oranges follow a normal distribution with the following parameter values:

| | Apples | Oranges |
|---|---|---|
| Mean weight (grams) | 100 | 140 |
| Standard deviation (grams) | 15 | 25 |

Now we’ll calculate the Z-scores:

- Apple = (110-100) / 15 = 0.667

- Orange = (100-140) / 25 = -1.6
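The two Z-scores can be reproduced with `statistics.NormalDist.zscore`, which is available from Python 3.9. This sketch uses the assumed apple and orange weight distributions from the table.

```python
from statistics import NormalDist

apples = NormalDist(mu=100, sigma=15)   # apple weights in grams
oranges = NormalDist(mu=140, sigma=25)  # orange weights in grams

apple_z = apples.zscore(110)    # (110 - 100) / 15
orange_z = oranges.zscore(100)  # (100 - 140) / 25
print(round(apple_z, 3), round(orange_z, 3))
```

It prints `0.667 -1.6`, matching the hand calculations.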

The Z-score for the apple (0.667) is positive, which means that our apple weighs more than the average apple. It’s not an extreme value by any means, but it is above average for apples. On the other hand, the orange has a fairly negative Z-score (-1.6). It’s pretty far below the mean weight for oranges. I’ve placed these Z-values in the standard normal distribution below.

While our apple weighs more than our orange, we are comparing a somewhat heavier than average apple to a downright puny orange! Using Z-scores, we’ve learned how each fruit fits within its own bell curve and how they compare to each other.

For more detail about z-scores, read my post, Z-score: Definition, Formula, and Uses

Finding Areas Under the Curve of a Normal Distribution

The normal distribution is a probability distribution. As with any probability distribution, the proportion of the area that falls under the curve between two points on a probability distribution plot indicates the probability that a value will fall within that interval. To learn more about this property, read my post about Understanding Probability Distributions.

Typically, I use statistical software to find areas under the curve. However, when you’re working with the normal distribution and convert values to standard scores, you can calculate areas by looking up Z-scores in a Standard Normal Distribution Table.

Because there are an infinite number of different Gaussian distributions, publishers can’t print a table for each distribution. However, you can transform the values from any normal distribution into Z-scores, and then use a table of standard scores to calculate probabilities.

Using a Table of Z-scores

Let’s take the Z-score for our apple (0.667) and use it to determine its weight percentile. A percentile is the proportion of a population that falls below a specific value. Consequently, to determine the percentile, we need to find the area that corresponds to the range of Z-scores that are less than 0.667. In the portion of the table below, the closest Z-score to ours is 0.65, which we’ll use.


The trick with these tables is to use the values in conjunction with the properties of the bell curve to calculate the probability that you need. The table value indicates that the area of the curve between -0.65 and +0.65 is 48.43%. However, that’s not what we want to know. We want the area that is less than a Z-score of 0.65.

We know that the two halves of the normal distribution are mirror images of each other. So, if the area for the interval from -0.65 to +0.65 is 48.43%, then the range from 0 to +0.65 must be half of that: 48.43/2 = 24.215%. Additionally, we know that the area for all scores less than zero is half (50%) of the distribution.

Therefore, the area for all scores up to 0.65 = 50% + 24.215% = 74.215%

Our apple is at approximately the 74th percentile.

Below is a probability distribution plot produced by statistical software that shows the same percentile along with a graphical representation of the corresponding area under the bell curve. The value is slightly different because we used a Z-score of 0.65 from the table while the software uses the more precise value of 0.667.
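The percentile can also be read straight from the normal cumulative distribution function instead of a printed table. This sketch uses Python's `statistics.NormalDist` to compare the rounded table lookup with the unrounded Z-score.

```python
from statistics import NormalDist

std_normal = NormalDist()

# Percentile = area under the curve to the left of the Z-score
table_percentile = std_normal.cdf(0.65)       # z rounded to 0.65, as in the table lookup
precise_percentile = std_normal.cdf(10 / 15)  # apple's unrounded Z-score (0.667)
```

The first value is about 0.742, matching the table calculation, and the second is about 0.748.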

Related post: Percentiles: Interpretations and Calculations

Other Reasons Why the Normal Distribution is Important

In addition to all of the above, there are several other reasons why the normal distribution is crucial in statistics.

- Some statistical hypothesis tests assume that the data follow a bell curve. However, as I explain in my post about parametric and nonparametric tests, there’s more to it than only whether the data are normally distributed.

- Linear and nonlinear regression both assume that the residuals follow a Gaussian distribution. Learn more in my post about assessing residual plots.

- The central limit theorem states that as the sample size increases, the sampling distribution of the mean approaches a normal distribution, even when the underlying distribution of the original variable is non-normal (provided its variance is finite).
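The central limit theorem can be illustrated by simulation. This sketch draws repeated samples from a skewed exponential distribution and checks that the sample means behave like a normal distribution with the predicted spread. The population, sample size and number of repetitions are arbitrary choices, not from the post.

```python
import random
from statistics import fmean, pstdev

random.seed(1)  # fixed seed so the sketch is reproducible

n = 50           # size of each sample
repeats = 5000   # number of samples drawn

# Population: exponential with mean 1 and standard deviation 1 (strongly right-skewed)
sample_means = [
    fmean(random.expovariate(1.0) for _ in range(n)) for _ in range(repeats)
]

# CLT prediction: sample means are approximately N(1, 1/n), i.e. sd = 1/sqrt(n)
predicted_sd = 1 / n ** 0.5
observed_mean = fmean(sample_means)
observed_sd = pstdev(sample_means)

# For a normal distribution, about 68% of values lie within 1 sd of the mean
within_one_sd = sum(abs(m - 1) < predicted_sd for m in sample_means) / repeats
print(observed_mean, observed_sd, predicted_sd, within_one_sd)
```

The simulated mean and standard deviation should land close to 1 and 1/√50 ≈ 0.141. Roughly 68% of the sample means should fall within one predicted standard deviation, as they would for a normal distribution.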

That was quite a bit about the bell curve! Hopefully, you can understand that it is crucial because of the many ways that analysts use it.


Reader Interactions

January 17, 2023 at 8:55 am

Thanks, Jim, for the detailed response. Much appreciated; it makes sense.

January 16, 2023 at 7:20 pm

Hi Jim, well, one caveat to your caveat. 🙂 I am assuming that even though we know the general mean is 100, we do NOT know whether there is something inherent about the two schools whereby their means might not represent the general population. In fact, I made it extreme to show that their respective means are probably NOT 100. So, for the school with a mean IQ of 60, maybe it is 100, maybe it is 80, maybe it is 60, maybe it is 50, etc. But it seems to me that we could build a probability distribution around each of those in some way (i.e., if their real mean was 100, what is the sampling distribution; if the real mean is 80, what is the sampling distribution, etc.). So, I guess ultimately, I am asking two things. 1) What is the real mean for the lower-scoring school with a mean of 60? Intuition tells me it must be higher. 2) Perhaps the real crux of my question: how would we go about estimating those respective means? To me, this has Bayesian written all over it (the prior is 100, the updated info is 60, etc.). But I only know Bayes with probabilities. Anyway, I think this is an important question that needs Bayesian thinking, and I don't think this subject gets the attention it deserves. I much appreciate your time, Jim. Hopefully a hat trick (3rd response) will finish this up. 🙂 And hopefully your readers get something from this. Thanks, John

January 16, 2023 at 11:08 pm

I explicitly mentioned that as an assumption in my previous comment. The schools need to represent the general population in terms of its IQ score distribution. Yes, it’s possible that the schools represent a different population. In that case, the probabilities don’t apply AND you wouldn’t even know whether the subsequent sample mean was likely to be higher or lower. You’d need to do a bit of investigation to determine whether the school represented the general population or some other population. That’s exactly why I mentioned that. And my answer was based on you wanting to use the outside knowledge of the population.

Now, if you don’t want to assume that the general population’s IQ distribution is a normal distribution with a mean of 100 and standard deviation of 15, then you’re back to what I was writing about in my previous comment where you don’t use that information. In short, if you want to know the school’s true mean IQ, you’ll need to treat it as your population. Then draw a good sized random sample from it. Or, if the school is small enough, assess the entire school. As it is, you only have a sample size of 5. That’s not going to give you a precise estimate. You’d check the confidence interval for that estimate to see a range of likely values for the school’s mean.

You could use a Bayesian approach. That’s not my forte. But if you did draw a random sample of 5 and got a mean IQ of 60, that’s so unlikely to occur if the school’s mean is 100 that using a prior of 100 in a Bayesian analysis is questionable. That’s the problem with Bayesian approaches. You need priors, for which you don’t always have solid information. In your example, you’d need to know a lot more about the schools to have reasonable priors.

In this case, it seems likely that the school's mean IQ is not 100. It’s probably lower, but what is it? Hard to say. It seems like you’d need to really investigate the school to see what’s going on. Did you just get a really flukey sample from a school that does represent the general population? Or does the school represent a different population?

Until you really looked in-depth at the school to get at that information, your best estimate is your sample mean along with the CI to understand its low precision.

January 16, 2023 at 9:54 am

Hi Jim, Thanks for the response. I was assuming that we DO KNOW it has a general population mean of 100. I was also thinking in a Bayesian way that, knowing the general population mean is 100, the REAL mean of the one school is BETWEEN 60 and 100 and the REAL mean of the other school is BETWEEN 100 and 140. It is much like being a baseball scout: you know that the average player is a .260 hitter, and you watch a player bat 10 times and get 8 hits. You would not assume his REAL ability is .800; you would assume it is BETWEEN .260 and .800, and perhaps use a Beta distribution to conclude his distribution of averages is centered at... I don’t know, something like .265… something LARGER than .260. But this seems paradoxical to the idea that if we did get a sample of 5 (or however many) and got a mean of 60, then, thinking of a confidence interval for that mean of 60, it is equally likely that the REAL mean is, say, 55 as 65.

January 16, 2023 at 6:50 pm

Gotcha! So, yes, using the knowledge outside our dataset, we can draw some additional conclusions.

For one thing, there’s regression to the mean. Usually that applies to one unusual observation being followed by an observation that is likely to be closer to the mean. In this case, we can use the same principle but apply it to samples of N = 5. You’ve got an unusual sample from each school. If you were to draw another random sample of the same size from each school, those samples are likely to be closer to the mean.

There are a few caveats. We’re assuming that we’re drawing random samples and that the schools reflect the general population rather than special populations.

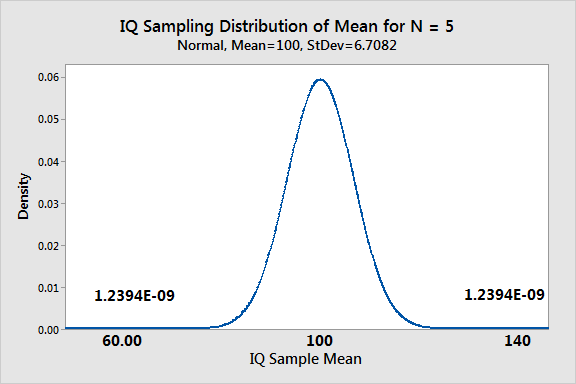

As for getting the probability of an IQ sample mean of 60 or 140 for N = 5, we can use the sampling distribution as I mentioned. We need to find the probability of obtaining a random sample mean of 60 or lower. The graph shows this sampling distribution.

The probability of a sample mean of 140 or higher is the same. The total probability of getting either result in one random sample is 0.0000000024788.

As you can see, either probability is quite low! Basically, don’t count on getting either sample mean under these conditions! Those sample means are just far out in the tails of the sampling distribution.

But, if you did get either of those means, what’s the probability that the next random sample of N = 5 will be closer to the true mean?

That probability equals: 1 – 0.0000000024788 = 0.9999999975212
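The tail probability quoted here can be reproduced from the sampling distribution of the mean, which is normal with mean 100 and standard error 15/√5 when IQs are normal with mean 100 and SD 15. A minimal sketch using Python’s standard-library `statistics.NormalDist` (Python 3.8+); the function name is hypothetical:

```python
from math import sqrt
from statistics import NormalDist

POP_MEAN, POP_SD = 100, 15  # IQ scale described in the thread


def two_tailed_extreme_mean(n, low, high, mu=POP_MEAN, sigma=POP_SD):
    """P(sample mean <= low) + P(sample mean >= high) for random samples of size n."""
    sampling_dist = NormalDist(mu, sigma / sqrt(n))  # standard error = sigma / sqrt(n)
    return sampling_dist.cdf(low) + (1 - sampling_dist.cdf(high))


p_extreme = two_tailed_extreme_mean(5, 60, 140)
print(f"P(mean <= 60 or mean >= 140) = {p_extreme:.4e}")
print(f"P(next mean closer to 100)   = {1 - p_extreme:.13f}")
```

Raising `n` shrinks the standard error, so extreme sample means become even less likely.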

It’s virtually guaranteed in this case that the next random sample of 5 will be closer to the correct mean!

January 13, 2023 at 8:11 am

Hi Jim, thanks for these posts. I have a question related to the error term in a normal distribution. Let’s assume that we are measuring IQs at various high schools. We go to one high school, take 5 IQs, and the mean is 140. We go to another, take 5 IQs, and the mean is 60. We are trying to determine the population mean at each school. Of course, we know that 140 and 60 are just estimates for the respective high schools. Is there some “boundedness” concept (it seems intuitive) that would suggest that the real mean at the one school is more likely higher than 60 than lower, and the mean at the other school is more likely lower than 140 than higher? I am thinking a probability density function of the error terms about each of 60 and 140 would illustrate that. Can we confirm this mathematically? I hope my question makes sense. Thanks, John

January 13, 2023 at 11:54 pm

That’s kind of a complex question! In the real world, we know that IQs are defined as having a general population mean of 100. But let’s pretend we don’t know that.

I’ll assume that you randomly sampled the 5 students at both of the schools.

Going strictly by the data we gathered, it would be hard for us to know whether the overall HS population mean is between the two schools. It’s also possible that the two high schools have different means for reasons unknown to us. So, it’s really hard to draw conclusions. Particularly with only a measly 5 observations at two schools. There’s going to be a large margin of uncertainty with both estimates.

So, we’re left in a situation where we don’t know what the overall population mean is, we don’t know whether the two high schools should have different means, and the two estimates we have come with wide margins of error.

In short, we don’t know much! What we should do over time is build our knowledge in this area. Get large samples from those two schools and other schools. Try to identify reasons why the IQs might be different at various schools, or find that they should be nearly identical. After we build up our knowledge, we can use it to aid our understanding.

But with just 5 observations at two schools and ignoring our real-world knowledge, we couldn’t put much faith in the estimates of 60 and 140, and we’d have little reason to assume the 60 should be higher and the 140 lower.

Now, if you want to apply real-world knowledge that we do have, yes, we can be reasonably sure that the 60 is too low and the 140 is too high. It is inconceivable that any reasonably sized school would have either mean for the entire school population unless they were schools intended for special students. It is much more likely that those are fluky samples based on the tiny sample size. We can know all that because we know that the population average truly is 100 with a standard deviation of 15. Given that fact, you could look at the sampling distribution of the mean for each school’s size to determine the probability of having such an extreme mean IQ for the entire school.

But it wasn’t clear from your question whether you wanted to incorporate that information. If you do, then you need the sampling distribution of the mean, which you can use to calculate the probability for each school. It won’t tell you for sure whether the means are too high or too low, but you’ll see how unlikely they are to occur given the known properties of the population, and you could conclude it’s more likely they’re just wrong: too low and too high!

December 2, 2022 at 2:44 pm

Hello. I’m new to this field and have a very basic question. If the average number of engine hours on a small aircraft between oil services is 29.9 and my SD is 14.1, does that mean 68.27% of all values lie between 15.8 (29.9 - 14.1) and 44.0 (29.9 + 14.1)?

December 2, 2022 at 5:12 pm

You do need to assume or know that the distribution in question follows a normal distribution. If it does, then, yes, your conclusions are absolutely correct!

In statistics classes, you’ll frequently have questions that state you can assume the data follow a normal distribution or that it’s been determined that they do. In the real world, you’ll need previous research to establish that. Or you might use it as a rough estimate if you’re not positive about normality but pretty sure the distribution is at least roughly normal.

So, there are a few caveats, but yes, your understanding is correct.
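A quick check of the 68.27% figure with Python’s standard-library `statistics.NormalDist`, assuming the engine hours are normally distributed with the mean and SD from the question:

```python
from statistics import NormalDist

# Engine hours between oil services, assumed to be normally distributed
hours = NormalDist(mu=29.9, sigma=14.1)

lower, upper = hours.mean - hours.stdev, hours.mean + hours.stdev
share_within_1sd = hours.cdf(upper) - hours.cdf(lower)  # area between -1 SD and +1 SD
print(f"{lower:.1f} to {upper:.1f} hours: {share_within_1sd:.2%}")
```

This prints `15.8 to 44.0 hours: 68.27%`, which matches the empirical rule for any normal distribution.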

June 27, 2022 at 1:38 pm

Hello, I have a question related to judgments regarding a population and the potential to identify a mixture distribution. I have a dataset which is not continuous – there is a significant gap between two groups of data. Approximately 98% of my data is described by one group and 2% of my data by another group. The CDF of all data looks like a mixture distribution; there is a sharp change in local slopes on either side of the non-continuous data range. I am using NDE methods to detect residual stress levels in pipes. My hypothesis is that discrete stress levels exist as a result of manufacturing methods. That is, you either have typical stress levels or you have atypical stress levels. 1. Can the non-continuous nature of the data suggest a mixture distribution? 2. What test(s) can be performed to establish that the two sub-groups are not statistically compatible?

December 20, 2021 at 10:10 am

Thanks for explaining how to identify which distribution to use. I was confused at first. I have understood that the normal distribution is continuous, and we can see on the x-axis that the SD lines are not closed on it. The Poisson distribution is discrete, with a time frame/limit. In the binomial distribution, the expected outcome is pos/neg, yes/no, false/true, etc., thus two outcomes.

I can also say that with the normal distribution, there is complexity in the random variables to be used.

December 21, 2021 at 1:03 am

Hi Evalyne,

I’m not sure that I understand what your question is. Yes, normal distributions require continuous data. However, not all continuous data follow a normal distribution.

Poisson distributions use discrete data–specifically count data. Other types of discrete data do not follow the Poisson distribution. For more information, read about the Poisson distribution.

Binomial distributions model the expected outcomes for binary data. Read more about the binomial distribution.

December 20, 2021 at 10:00 am

Thanks, Jim Frost, for your resource. I am learning this, and it has added a lot to my knowledge.

November 15, 2021 at 2:44 pm

Thanks for your explanations; they are very helpful.

October 5, 2021 at 4:21 am

Interesting. I need help. Let’s say I have 5 columns: A, B, C, D, and E.

- A follows a Poisson distribution
- B follows a binomial distribution
- C follows a Weibull distribution
- D follows a negative binomial distribution
- E follows an exponential distribution

Now that I know what type of distribution my data follow, what should I do next? How can this help me in exploratory data analysis, in decision making, or in machine learning?

What if I don’t know what type of distribution my data follow because they all look confusing or similar when I plot them? Is there any equation that can help? Are there libraries that can help me identify the probability distribution of the data?

Kindly help

October 7, 2021 at 11:50 pm

Hi Savitur,

There are distribution tests that will help you identify the distribution that best fits your data. To learn how to do that, read my post about How to Identify the Distribution of Your Data.

After you know the distribution, you can use it to make better predictions, estimate probabilities and percentiles, etc.
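The post linked above uses distribution tests. As a different and simpler technique, candidate distributions can also be fitted by maximum likelihood and compared with AIC (lower is better). The sketch below swaps in that AIC comparison, covers only two hypothetical candidates (normal and exponential), and uses hypothetical function names and simulated data. For more candidates, SciPy’s `scipy.stats` distributions provide a `fit` method for maximum-likelihood estimation.

```python
import math
import random
from statistics import fmean, pstdev


def normal_loglik(data):
    """Log-likelihood under a normal fit (MLE: sample mean and population SD)."""
    mu, sigma = fmean(data), pstdev(data)
    return sum(-0.5 * math.log(2 * math.pi * sigma**2) - (x - mu) ** 2 / (2 * sigma**2)
               for x in data)


def exponential_loglik(data):
    """Log-likelihood under an exponential fit (MLE: rate = 1 / mean); data must be positive."""
    rate = 1 / fmean(data)
    return sum(math.log(rate) - rate * x for x in data)


def rank_by_aic(data):
    """Return candidate names sorted best to worst by AIC = 2k - 2 ln L, plus the AIC scores."""
    fits = {
        "normal": (2, normal_loglik(data)),            # k = 2 parameters
        "exponential": (1, exponential_loglik(data)),  # k = 1 parameter
    }
    aic = {name: 2 * k - 2 * loglik for name, (k, loglik) in fits.items()}
    return sorted(aic, key=aic.get), aic


random.seed(1)
waiting_times = [random.expovariate(0.5) for _ in range(500)]  # hypothetical skewed data
ranking, scores = rank_by_aic(waiting_times)
print(ranking[0], scores)
```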

June 17, 2021 at 2:25 pm

Jim, Simple question. I am working on a regression analysis to determine which of many variables predict success in nursing courses. I had hoped to use one cohort, but realize now that I need to use several to perform an adequate analysis. I worry that historic effects will bias the scores of different cohorts. I believe that using z-scores (using the mean and SD to normalize each course grade for each cohort) will attenuate these effects. Am I on the right track here?

June 19, 2021 at 4:11 pm

Keep in mind that for regression, it’s not the distribution of the IVs that matters so much. Technically, it’s the distribution of the residuals. However, if the DV is highly skewed, it can be more difficult to obtain normal residuals. For more information, read my post about OLS Assumptions.

If I understand correctly, you want to use Z-scores to transform your data so it is normal. Z-scores won’t work for that. There are types of transformations for doing what you need. I write about those in my regression analysis book.

Typically, you’d fit the model and see if you have problematic residuals before attempting a transformation or other type of solution.
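Standardizing is a linear rescaling: it sets the mean to 0 and the SD to 1 but leaves the shape, including skewness, unchanged, which is why Z-scores can’t make data normal. A small sketch with simulated, hypothetical right-skewed grades (standard library only):

```python
import random
from statistics import fmean, pstdev


def skewness(values):
    """Moment skewness: the mean of cubed standardized deviations (0 for symmetric data)."""
    mu, sd = fmean(values), pstdev(values)
    return fmean(((v - mu) / sd) ** 3 for v in values)


def z_scores(values):
    """Standardize: subtract the mean, then divide by the (population) SD."""
    mu, sd = fmean(values), pstdev(values)
    return [(v - mu) / sd for v in values]


random.seed(42)
grades = [random.expovariate(1.0) for _ in range(1000)]  # hypothetical right-skewed scores
z = z_scores(grades)

print(f"skewness before: {skewness(grades):.3f}, after: {skewness(z):.3f}")
print(f"z mean ~ {fmean(z):.3f}, z SD ~ {pstdev(z):.3f}")
```

The skewness is identical before and after standardizing, even though the Z-scores have mean 0 and SD 1.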

March 1, 2021 at 11:28 pm

I have a question: why do linear models follow the normal distribution, while generalized linear models (GLM) follow the exponential distribution? I want a detailed answer to this question.

March 2, 2021 at 2:43 am

Hi S, the assumptions for any analysis depend on the calculations involved. When an analysis makes an assumption about the distribution of values, it’s usually because of the probability distribution that the analysis uses to determine statistical significance. Linear models determine significance using the t-distribution for individual continuous predictors and the F-distribution for groups of indicator variables related to a categorical variable. Both distributions assume that the sampling distribution of means follows the normal distribution. However, generalized linear models can use other distributions for determining significance. By the way, regression models typically make assumptions about the distribution of the residuals rather than the variables themselves.

I hope that helps.

February 13, 2021 at 3:06 am

Thanks a lot, Jim. Regards

February 12, 2021 at 10:41 pm

Thanks a lot for your valuable comments. My dependent variable is binary; I have proportions on which I am applying a binomial GLM. I would like to ask: if we get non-normal residuals with such data and cannot meet the assumptions of the binomial GLM, what is the alternative test? If not a binomial GLM, then what?

February 12, 2021 at 6:38 am

I applied a GLM to my binomial data. A Shapiro test on the residuals revealed a normal distribution; however, the same test on the response variable shows a non-normal distribution. What should I assume in this case? Can you please clarify?

I shall be highly thankful for your comments.

February 12, 2021 at 3:02 pm

Binary data (for the binomial distribution) cannot follow the normal distribution. So, I’m unsure what you’re asking about. Is your binary data perhaps an independent variable?

At any rate, the normality assumption, along with the other applicable assumptions, applies to the residuals and not the variables in your model. Assess the normality of the residuals. I write about this in my posts about residual plots and OLS assumptions, which apply to GLM.

And, answering the question in your other comment, yes, it is possible to obtain normal residuals even when your dependent variable is nonnormal. If your DV is very skewed, that can make it more difficult to obtain normal residuals, but I’ve obtained normal residuals when the DV was not normal. I discuss that in the post about OLS assumptions that I link to above.

If you have more specific questions after reading those posts, please don’t hesitate to comment in one of those posts.

I hope that helps!

February 12, 2021 at 3:26 am

Thanks for the answer. So I can conclude that only some (but not all) numerical data (interval or ratio) follow the normal distribution, and categorical data almost always have a non-normal distribution. But regarding ordinal data, aren’t they a categorical type? Regards

February 12, 2021 at 3:13 pm

Yes, not all numeric data follow the normal distribution. If you want to see an example of nonnormal data, and how to determine which distribution data follow, read my post about identifying the distribution of your data, which is about continuous data.

There are also distribution tests for discrete data.

Categorical data CANNOT follow the normal distribution.

Ordinal data is its own type of data. Ordinal data have some properties of numeric data and some of categorical data, but it is neither.

I cover data types and related topics in my Introduction to Statistics book. You probably should consider it!

February 9, 2021 at 2:52 am

Hi, I have a question about categorical data. Should we consider that categorical data (i.e., nominal and ordinal) almost always have a non-normal distribution and therefore need nonparametric tests? Regards, Jagar

February 11, 2021 at 4:52 pm

Categorical data are synonymous with nominal data. However, ordinal data is not equivalent to categorical data.

Categorical data cannot follow a normal distribution because you’re talking about categories with no distance or order between them. Ordinal data have an order but no distance. Consequently, ordinal data cannot be normally distributed. Only numeric data can follow a normal distribution.

February 6, 2021 at 9:24 am

What I was also trying to figure out is whether there would be no deviation between the results from the transformed data and those from the same sample data where we waived the normality assumption. I ask because I now know that with nonparametric tests, I first need to ascertain whether the other measures of central tendency (mode and median) fit the subject matter of my research questions. Thank you, Jim

February 6, 2021 at 11:39 pm

If you meet the sample size minimums, you can safely waive the normality assumption. The results should be consistent with a transformation assuming you use an appropriate transformation. If you don’t meet the minimum requirements for waiving the assumption, there might be differences.

Those sample size requirements are based on simulation studies that intentionally violated the normality assumption and then compared the actual to expected results for different sample sizes. The researchers ran the simulations thousands and thousands of times using different distributions and sample sizes. When you exceed the minimums, the results are reliable even when the data were not normally distributed.

February 6, 2021 at 2:53 am

That is a good idea! Yes, you definitely understand what I am trying to do and why it makes sense too – but of course only if the dimensions are truly independent and, as you say, it depends on them all following a normal distribution.

What worries me about your solution is this: what if the other dimensions are not outliers? Imagine 10 dimensions where eight of them have a very low z-score and only two have an outlier z-score of 2.4.

Suppose the remaining 8 dimensions are not outliers at all, say with a z-score of 0.7. That z-score has a two-tailed probability of 0.4839; raising that to the eighth power gives a probability of 0.003, and I worry that is not correct. It seems very low for such ordinary figures.

But maybe it is accurate; I cannot decide. In this case, though, the more features we add, the more our probability drops.

Imagine you take a totally ordinary random sample in 50 dimensions (fifty z-values). When you multiply them this way, it will seem like your sample is an extraordinarily rare outlier, like p < 0.000005. Don’t you agree?

So isn’t it a problem if every sample looks like an extraordinarily rare outlier with p < 0.000005? I would actually expect 50% of samples to have p < 0.5 and only about 2% of samples to have p < 0.02…

So I am thinking there should be some better way to combine the z-values than multiplying the resulting two-tailed p-values. I thought about averaging the z-values, but, for example, imagine eight values of 0.5 and two values of 6. The sixes are major outliers (imagine six sigma), and in two dimensions they take the cake, so such a sample should be super rare. However, if I average 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 6, 6, I get 1.6 sigma, which seems very close to ordinary. It is not ordinary! I should never get a sample that independently falls outside six sigma in two dimensions. So averaging is not a good method here either.

So I hope you see my dilemma through these examples. I think your method of multiplying probabilities is powerful, but is there any way to improve it so that only 50% of samples have p < 0.5?

Also, I would rather not manually pick which dimensions to consider as outliers and discard the others; it would be better to combine all the scores automatically into an overall measure of how much of an outlier the item is.

Overall, I think your technique is pretty powerful; I just worry a little bit about using it in many dimensions. Thank you for the powerful tip!

February 7, 2021 at 12:01 am

I’m also split on this! Part of me says that is the correct answer. It does seem low. But imagine flipping a coin 8 times. The probability of getting heads all 8 times is extraordinarily low. The process is legitimate. And, if you have a number of medium scores and a few outliers, that should translate to a low probability. So, that doesn’t surprise me.

I can see the problem if you have many properties. You might look into the Mahalanobis distance. It measures the distances of data points from the centroid of a multivariate data set. Here’s the Wikipedia article about it: Mahalanobis distance. I’m familiar enough with it to know it might be what you’re looking for, but I haven’t worked with it myself, so I don’t have the hands-on info!
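For dimensions that are independent and already expressed as z-scores, the covariance matrix is the identity, so the squared Mahalanobis distance reduces to the sum of the squared z-scores. If each dimension is standard normal, that sum follows a chi-square distribution with k degrees of freedom (k = number of dimensions), and its upper-tail probability behaves like an ordinary p-value. A minimal standard-library sketch under those assumptions; the function names are hypothetical, and `scipy.stats.chi2.sf` would replace the hand-written tail function:

```python
import math
import random


def chi2_sf(x, k):
    """P(X > x) for a chi-square variable with k degrees of freedom.

    Uses the power series for the regularized lower incomplete gamma function.
    """
    if x <= 0:
        return 1.0
    a, half_x = k / 2.0, x / 2.0
    term = total = 1.0 / a
    n = 1
    while term > total * 1e-16 and n < 10_000:
        term *= half_x / (a + n)
        total += term
        n += 1
    lower = total * math.exp(a * math.log(half_x) - half_x - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def combined_outlier_p(z_values):
    """Chi-square tail probability of sum(z^2), i.e. the squared Mahalanobis distance
    for independent, standardized dimensions."""
    d_squared = sum(z * z for z in z_values)
    return chi2_sf(d_squared, len(z_values))


print(combined_outlier_p([0.7] * 8 + [2.4] * 2))  # two mild outliers among 10 dimensions
print(combined_outlier_p([0.5] * 8 + [6.0] * 2))  # two six-sigma values among 10 dimensions

# Calibration check: for ordinary 50-dimensional samples, about half should have p < 0.5
random.seed(0)
ps = [combined_outlier_p([random.gauss(0, 1) for _ in range(50)]) for _ in range(2000)]
print(sum(p < 0.5 for p in ps) / len(ps))
```

Unlike the product of per-dimension probabilities, this value does not shrink automatically as dimensions are added, yet two six-sigma values still produce a tiny probability.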

February 5, 2021 at 10:12 pm

I want to know how unlikely the observation is overall, expressed as a single p-value or overall z-score. Yes, the dimensions are truly independent. Is this possible?

February 6, 2021 at 12:19 am

Thanks for the extra details. I have a better sense of what you want to accomplish!

Typically, in the analyses I’m familiar with, analysts would assess each dimension separately, particularly if they’re independent. Basically checking for outliers based on different characteristics. However, it seems like you want a cumulative probability for unlikeliness that factors in both dimensions together. For example, if both dimensions were somewhat unusual, then the observation as a whole might be extremely unusual. I personally have never done anything like that.

However, I think I have an idea. I think you were thinking in the right direction when you mentioned p-values. If you use the Z-score to find the probability of obtaining each value, or one more extreme, that is similar to a p-value. So, if your Z-score was, say, 2.4, you’d find the probability for less than -2.4 and greater than +2.4, then sum the probabilities of those two tails. Of course, obtaining accurate results depends on your data following a normal distribution.

You do that for both dimensions. For each observation, you end up with two probabilities.

Because these are independent dimensions, you can simply multiply the two probabilities for each observation to obtain the overall probability. I think that’ll work. Like I said, I haven’t done this myself, but it seems like a valid approach.
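The two-tailed probability and the multiplication described above can be sketched with Python’s standard-library `statistics.NormalDist`. This assumes each dimension is normally distributed and the dimensions are independent; the function names are hypothetical:

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, SD 1


def two_tailed_p(z):
    """Probability of a value at least |z| standard deviations from the mean (both tails)."""
    return 2 * STD_NORMAL.cdf(-abs(z))


def product_of_p_values(z_values):
    """Multiply the per-dimension two-tailed probabilities (independent dimensions only)."""
    return math.prod(two_tailed_p(z) for z in z_values)


print(round(two_tailed_p(2.4), 4))        # one dimension at z = 2.4
print(product_of_p_values([2.4, 2.4]))    # two independent dimensions at z = 2.4
```

Note that the product is not itself a p-value: it keeps shrinking as dimensions are added, even for ordinary observations.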

February 5, 2021 at 6:37 pm

How can I combine several independent z-scores (different dimensions about the same item) into one overall z-score about how unlikely or percentile such an item is? Assuming the dimensions are truly independent of course.

February 5, 2021 at 9:55 pm

If the dimensions are truly independent, why do you want to combine them? Wouldn’t you want to capture the full information they provide?

February 5, 2021 at 12:51 am

Deciding between waiving the normality assumption and performing a data transformation for non-normal data always gives me a headache when I have a sample size of n > 20.

I wonder how critical the normality assumption is vis-à-vis data transformation. Please help me decide on the appropriate course of action. Thank you

February 5, 2021 at 10:14 pm

Hi Collinz,

It can be fairly easy to waive the normality assumption. You really don’t need a very large sample size. In my post about parametric vs. nonparametric tests, I provide a table that shows the sample sizes per group for various analyses that allow you to waive that assumption. If you can waive the assumption, I would not transform the data as that makes the results less intuitive. So, check out that table and it should be an easy decision to make! Also, nonparametric tests are an alternative to transforming your data when you can’t waive the normality assumption. I also have that table in my Hypothesis Testing book, which you have if I’m remembering correctly.

By the way, if you’re curious about why you can waive the normality assumption, you can thank the central limit theorem. Click the link if you want to see how it works. There’s a very good reason why you can just waive the normality assumption without worrying about it in some cases!

January 18, 2021 at 2:22 pm

Very easy to follow and a nicely structured article! Thanks for making my life easy!!!

January 19, 2021 at 3:25 pm

You’re very welcome! I’m glad my site has been helpful!

January 17, 2021 at 11:56 pm

When checking a given data set for the normal distribution property, we divide the data into 5 ranges of Z-scores, then calculate the x values, and so on. Is it compulsory to have a minimum of 5 ranges?

January 19, 2021 at 3:26 pm

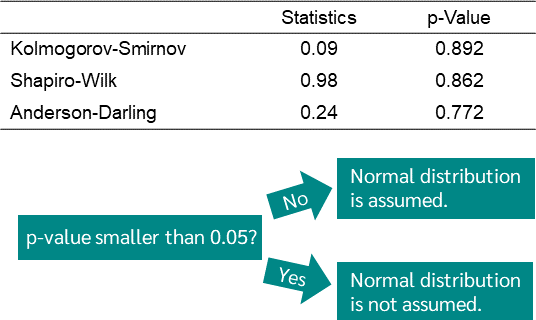

Hi Nikee, I’d just use a normality test, such as the Anderson-Darling test, or use a normal probability plot.
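For readers curious about what an Anderson-Darling normality test computes, here is a standard-library sketch of the statistic, with the mean and SD estimated from the data and a common small-sample adjustment. It is illustrative only. For real work, use a statistics package (for example, SciPy provides `scipy.stats.anderson`) and its critical values. As a rough guide, an adjusted value above about 0.75 is commonly treated as significant at the 5% level, but check a reference table before relying on that. The data here are simulated and hypothetical.

```python
import math
import random
from statistics import fmean, stdev


def normal_cdf(z):
    """Standard normal CDF via erfc (accurate in both tails)."""
    return 0.5 * math.erfc(-z / math.sqrt(2))


def anderson_darling_normal(data):
    """Anderson-Darling A^2 for normality with estimated mean and SD,
    returned with the adjustment A^2 * (1 + 0.75/n + 2.25/n^2)."""
    x = sorted(data)
    n = len(x)
    mu, sd = fmean(x), stdev(x)
    z = [(v - mu) / sd for v in x]
    s = 0.0
    for i in range(n):
        # (2i - 1) * [ln F(z_i) + ln(1 - F(z_{n+1-i}))], using 1 - F(t) = F(-t)
        s += (2 * (i + 1) - 1) * (math.log(normal_cdf(z[i])) + math.log(normal_cdf(-z[n - 1 - i])))
    a2 = -n - s / n
    return a2 * (1 + 0.75 / n + 2.25 / n**2)


random.seed(3)
normal_data = [random.gauss(10, 2) for _ in range(200)]
skewed_data = [random.expovariate(1.0) for _ in range(200)]
print(f"normal data: {anderson_darling_normal(normal_data):.3f}")
print(f"skewed data: {anderson_darling_normal(skewed_data):.3f}")
```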

January 4, 2021 at 12:40 am

Hi! Can you please tell me what are the applications of normal distribution?

January 4, 2021 at 4:04 pm

Well, this article covers exactly that! Read it, and if you have questions about specific applications, don’t hesitate to ask!

December 30, 2020 at 10:27 am

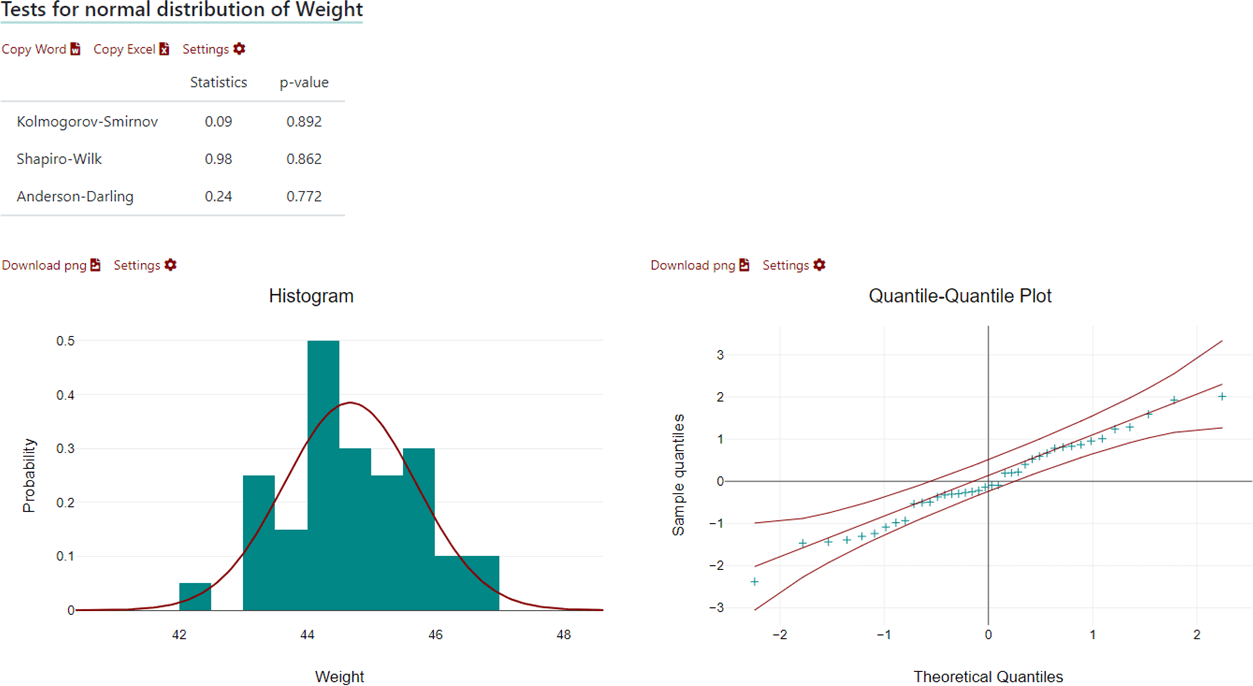

What is a normality test, and why is it conducted? Discuss the results, using software, for data from water resources in terms of normality or non-normality.

December 31, 2020 at 12:45 am

Hi, you use a normality test to determine whether your data diverge from the normal distribution. I write about distribution tests in general, of which a normality test is just one specific type, in my post about identifying the distribution of your data. The discussion and the output I use for the example include a normality test and its interpretation. Click that link to read more about it. Additionally, my Hypothesis Testing book covers normality tests in more detail.

December 7, 2020 at 6:43 pm

Hi Jim, could you explain how the normal distribution is related to the linear regression?

December 7, 2020 at 11:06 pm

The main way that the normal distribution relates to regression is through the residuals. If you want to be able to trust the hypothesis testing results (p-values, CIs), the residuals should be normally distributed. If they’re not normally distributed, you can’t trust those results. For more information about that, read my post about OLS regression assumptions.

December 7, 2020 at 6:59 am

I have one question that I am finding difficult to answer. Why is it useful to see whether other types of distributions can be approximated by a normal distribution?

I’d appreciate it if you could briefly explain.

December 7, 2020 at 11:17 pm

The main reason that comes to my mind is ease of calculation in hypothesis testing. For example, some tests, such as proportions tests (which use the binomial distribution) and Poisson rate tests (which use count data and the Poisson distribution), have a form that uses a normal approximation. These normal approximation tests use Z-scores for the normal distribution rather than values for the “native” distribution.

The main reason these normal approximation tests exist is that they’re easier for students to calculate by hand in statistics classes. I assume they were also a plus in the pre-computer days. However, the normal distribution only approximates these other distributions in certain circumstances, and it’s important to know whether your data meet those requirements; otherwise, the normal approximation tests will be inaccurate. Additionally, you should use t-tests to compare means when you have an estimate of the population standard deviation, which is almost always the case. Technically, Z-tests are for cases when you know the population standard deviation (almost never). However, when your sample size is large enough, the t-distribution approximates the normal distribution and you can use a Z-test. Again, that’s easier to calculate by hand.
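A short illustration of the point that the normal approximation holds only in certain circumstances: the sketch below compares exact binomial probabilities with the normal approximation (using a continuity correction), standard library only. The two parameter sets are hypothetical. In the first, the two values nearly agree; in the second, np = 1, and the approximation is noticeably worse.

```python
import math
from statistics import NormalDist


def binomial_cdf(k, n, p):
    """Exact P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def normal_approx_cdf(k, n, p):
    """Normal approximation to P(X <= k) with a continuity correction of +0.5."""
    approx = NormalDist(mu=n * p, sigma=math.sqrt(n * p * (1 - p)))
    return approx.cdf(k + 0.5)


for n, p, k in [(100, 0.5, 45), (20, 0.05, 0)]:
    exact, approx = binomial_cdf(k, n, p), normal_approx_cdf(k, n, p)
    print(f"n={n}, p={p}, P(X<={k}): exact={exact:.4f}, normal approx={approx:.4f}")
```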

With computers, I’d recommend using the appropriate distribution for each test rather than the normal approximation.

I show how this works in my Hypothesis Testing book.

There’s another context where normal approximation becomes important. That’s the central limit theorem, which states that as the sample size increases, the sampling distribution of the mean approximates the normal distribution even when the distribution of values is non-normal. For more information about that, read my post about the central limit theorem.
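A quick simulation of the central limit theorem using only Python’s standard library: individual values come from a strongly right-skewed exponential distribution, yet the skewness of the sample means shrinks toward 0 (the normal distribution’s value) as the sample size grows. The sample sizes, repetition count, and seed are arbitrary choices for illustration.

```python
import random
from statistics import fmean, pstdev


def skewness(values):
    """Moment skewness: about 0 for a symmetric distribution such as the normal."""
    mu, sd = fmean(values), pstdev(values)
    return fmean(((v - mu) / sd) ** 3 for v in values)


def sample_means(n, reps=5_000):
    """Means of `reps` random samples of size n from an exponential distribution."""
    return [fmean(random.expovariate(1.0) for _ in range(n)) for _ in range(reps)]


random.seed(7)
individual_values = [random.expovariate(1.0) for _ in range(20_000)]  # strongly right-skewed

print(f"individual values: skewness ~ {skewness(individual_values):.2f}")
for n in (2, 10, 50):
    print(f"means of n={n:>2}: skewness ~ {skewness(sample_means(n)):.2f}")
```

For exponential data, the theoretical skewness of the mean of n values is 2/√n, so the printed values should drift toward roughly 1.4, 0.6, and 0.3.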

Those are the main reasons I can think of! I hope that helps!

November 22, 2020 at 9:33 pm

What does random variable X̄ (capital x-bar) mean? How would you describe it?

November 22, 2020 at 10:14 pm

X-bar refers to the variable’s sample mean.

November 18, 2020 at 6:38 am

Very helpful

November 14, 2020 at 4:33 pm

Hi, how can I compare the binomial, normal, and Poisson distributions?

October 14, 2020 at 3:15 am

Dear Jim, thank you very much for your post. It clarifies many notions. I have an issue that I hope you can answer. To combine forecasting models, I have chosen to calculate the weights based on the normal distribution, which is fitted to the past observations of the data I am forecasting. In this case, are the weights equal to the PDF, or should I treat it as an error measure, so the weight would equal 1/PDF?

September 25, 2020 at 3:44 am

My problem in interpreting the normal and Poisson distributions remains. When you want to calculate the probability of selling a random number of apples in a week, for instance, and you want to work this out in an Excel spreadsheet, how do you know whether to subtract your answer from one? Is the mean the sole reference?

September 23, 2020 at 11:23 am

Thank you for your post. I have one small question concerning the Empirical Rule (68%, 95%, 99.7%):

In a normal distribution, 68% of the observations will fall between +/- 1 standard deviation from the mean.

For example, the lateral deviation of a dart from the middle of the bullseye is defined by a normal distribution with a mean of 0 cm and a standard deviation of 5 cm. Would it be possible to affirm that there is a probability of 68% that the dart will hit the board inside a ring of radius of 5 cm?

I’m confused because, for me, the probability of having a lateral deviation smaller than the standard deviation is 84%.

September 24, 2020 at 11:08 pm

Hi Thibert,

If it was me playing darts, the standard deviation would be much higher than 5 cm!

So, your question is really about two aspects: accuracy and precision.

Accuracy has to do with where the darts fall on average. The mean of zero indicates that on average the darts center on the bullseye. If it had been a non-zero value, the darts would have been centered elsewhere.

The standard deviation has to do with precision, which is how close to the target the darts tend to hit. Because the darts clustered around the bullseye and have a standard deviation of 5cm, you’d be able to say that 68% of darts will fall within 5cm of the bullseye assuming the distances follow a normal distribution (or at least fairly close).

I’m not sure what you’re getting at with the lateral deviation being less than the standard deviation? I thought you were defining it as the standard deviation? I’m also not sure where you’re getting 84% from? It’s possible I’m missing something that you’re asking about.
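The exchange mixes several probabilities, and a small sketch can separate them. Assuming the lateral deviation X is normal with mean 0 and SD 5 cm, P(-5 < X < 5) is the 68% from the empirical rule, while P(X < 5) is the one-sided 84% mentioned in the question. Under the additional assumption that horizontal and vertical errors are independent normals with the same SD, the distance from the bullseye is not normal (it follows a Rayleigh distribution), so the probability of landing inside a 5 cm ring differs from both. Standard library only; the seed and trial count are arbitrary.

```python
import math
import random
from statistics import NormalDist

SIGMA = 5.0  # cm, lateral SD from the question
lateral = NormalDist(0, SIGMA)

within_1sd = lateral.cdf(SIGMA) - lateral.cdf(-SIGMA)  # P(-5 < X < 5), two-sided
below_1sd = lateral.cdf(SIGMA)                         # P(X < 5), one-sided

# Assumption: horizontal and vertical errors are independent N(0, 5 cm),
# so the radial distance r follows a Rayleigh distribution: P(r < s) = 1 - exp(-s^2 / (2 * s^2)).
inside_ring_exact = 1 - math.exp(-0.5)

random.seed(11)
trials = 100_000
hits = sum(math.hypot(random.gauss(0, SIGMA), random.gauss(0, SIGMA)) < SIGMA for _ in range(trials))

print(f"P(|X| < 5 cm)    = {within_1sd:.4f}")
print(f"P(X < 5 cm)      = {below_1sd:.4f}")
print(f"P(radius < 5 cm) = {inside_ring_exact:.4f} (simulated {hits / trials:.4f})")
```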

August 23, 2020 at 6:14 am

I hope this question is relevant. I’ve been trying to find an answer to this question for quite some time. Is it possible to correlate two samples if one is normally distributed and the other is not normally distributed? Many thanks for your time.

August 24, 2020 at 12:22 am

When you’re talking about Pearson’s correlation between two continuous variables, it assumes that the two variables follow a bivariate normal distribution. Defining that is a bit complicated! Read here for a technical definition. However, when you have more than 25 observations, you can often disregard this assumption.

Additionally, as I write in my post about correlation, you should graph the data. Sometimes a graph is an obvious way to know when you won’t get good results!

August 20, 2020 at 11:24 pm

Awesome explanation, Jim; all my doubts about Z-scores got cleared up. By any chance, do you have a soft copy of your book, or is it available in India? Thanks.

August 21, 2020 at 12:58 am

Hi Archana, I’m glad this post was helpful!

You can get my ebooks from anywhere in the world. Just go to My Store.

My Introduction to Statistics book, which is the one that covers the normal distribution among others, is also available in print. You should be able to order that from your preferred online retailer or ask a local bookstore to order it (ISBN: 9781735431109).

August 14, 2020 at 5:11 am

super explanation

August 12, 2020 at 4:18 am

You can use random.normal from Python’s NumPy library.
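For readers without NumPy, the standard library’s `random.gauss(mu, sigma)` also draws normally distributed values; NumPy’s `numpy.random.default_rng().normal(loc, scale, size)` is the vectorized counterpart. A minimal sketch with hypothetical parameters (mean 100, SD 15) that also checks roughly 68% of the draws land within one SD:

```python
import random


def simulate_normal(mu, sigma, size, seed=None):
    """Draw `size` normally distributed values using only the standard library."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(size)]


draws = simulate_normal(mu=100, sigma=15, size=10_000, seed=123)
share = sum(85 <= x <= 115 for x in draws) / len(draws)
print(f"share within one SD: {share:.3f}")  # should be close to 0.683
```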

July 30, 2020 at 12:35 am

Experimentalists always aspire to have normally distributed data, but in reality the data shift away from normal distribution behaviour. How is this issue addressed to approximate the values?

July 31, 2020 at 5:13 pm

I’m always surprised at how often the normal distribution actually fits real data. And, in regression analysis, it is often not hard to get the residuals to follow a normal distribution. However, when the data/residuals absolutely don’t follow the normal distribution, all is not lost! For one thing, the central limit theorem allows you to use many parametric tests even with nonnormal data. You can also use nonparametric tests with nonnormal data. And, while I always consider it a last resort, you can transform the data so it follows the normal distribution.

July 21, 2020 at 3:15 am

The blood pressure of 150 doctors was recorded. The mean BP was found to be 12.7 mmHg, and the standard deviation was calculated to be 6 mmHg. If blood pressure is normally distributed, how many doctors will have a systolic blood pressure above 133 mmHg?

July 21, 2020 at 3:41 am

Calculate the Z-score for the value in question. I’m guessing that is 13.3 mmHg rather than 133! I show how to do that in this article. Then use a Z-table to look up that Z-score, which I also show in this article. You can find online Z-tables to help you out.
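The steps described here (Z-score, then the area above it, then multiply by the number of doctors) can be sketched with Python’s standard-library `statistics.NormalDist`. The numbers in the question look inconsistent, so this sketch uses the reading suggested in the reply (a cutoff of 13.3 with a mean of 12.7 and SD of 6); substitute the corrected values if they differ. The function name is hypothetical.

```python
from statistics import NormalDist


def expected_count_above(n_people, mean, sd, cutoff):
    """Z-score, proportion above `cutoff`, and expected count, assuming a normal distribution."""
    z = (cutoff - mean) / sd
    proportion_above = 1 - NormalDist().cdf(z)  # area to the right of z
    return z, proportion_above, n_people * proportion_above


# Reading suggested in the reply: cutoff of 13.3 rather than 133
z, prop, count = expected_count_above(150, mean=12.7, sd=6, cutoff=13.3)
print(f"z = {z:.2f}, proportion above = {prop:.4f}, expected doctors = {count:.1f}")
```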

July 13, 2020 at 6:03 am

Good day professor

I would like to know the difference between “sampling on the mean of value” and “normal distribution”. I would really appreciate any help from you. Thanks!

July 14, 2020 at 2:06 pm

I’m not really clear about what you’re asking. The normal distribution is a probability function that describes how the values of a population/sample are distributed. I’m not sure what you mean by “sampling on the mean of value”? However, if you take a sample, you can calculate the mean for that sample. If you collected a random sample, then the sample mean is an unbiased estimator of the population mean. Further, if the population follows a normal distribution, then the mean also serves as one of the two parameters for the normal distribution, the other being the standard deviation.
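A quick simulation can illustrate what "unbiased estimator" means: any single sample mean misses the population mean, but across many repeated random samples the sample means average out to it. This Python sketch assumes a normal population with mean 50 and standard deviation 10 and samples of size 20; those values are arbitrary choices for illustration.

```python
import math
import random
import statistics

random.seed(42)
pop_mean, pop_sd, n = 50, 10, 20

# Draw 5,000 independent random samples and record each sample's mean.
sample_means = [
    statistics.fmean(random.gauss(pop_mean, pop_sd) for _ in range(n))
    for _ in range(5000)
]

print(f"first sample mean:         {sample_means[0]:.2f}")
print(f"average of sample means:   {statistics.fmean(sample_means):.2f}")
# The spread of the sample means should be close to sigma / sqrt(n).
print(f"SD of sample means:        {statistics.stdev(sample_means):.2f}")
print(f"sigma / sqrt(n):           {pop_sd / math.sqrt(n):.2f}")
```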

July 8, 2020 at 7:25 am

Hello sir! I am a student and have little knowledge of statistics and probability. How can I answer this normal curve analysis question from my teacher? A production machine has a normally distributed daily output in units. The average daily output is 4000 and the daily output standard deviation is 500. What is the probability that the production on one randomly chosen day will be below 3580?

Thank you so much and God bless you! 🙂

July 8, 2020 at 3:34 pm

You’re looking at the right article to calculate your answer! The first step is to calculate your Z-score. Look for the section titled “Standardization: How to Calculate Z-scores.” You need to calculate the Z-score for the value of 3580.

After calculating your Z-score, look at the section titled “Using a Table of Z-scores.” You won’t be able to use the little snippet of a table that I include there, but there are online Z-score tables. You need to find the proportion of the area under the curve to the left of your Z-score. That proportion is your probability! Hint: Because the value you’re considering (3580) is below the mean (4000), you will have a negative Z-score.

If you’d like me to verify your answer, I’d be happy to do that. Just post it here.
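For readers who want to check their work, here is a minimal Python sketch of those two steps (Z-score, then the area to its left), using the standard library's statistics.NormalDist in place of a printed Z-table.

```python
from statistics import NormalDist

mean, sd = 4000, 500
value = 3580

z = (value - mean) / sd        # (3580 - 4000) / 500
p_below = NormalDist().cdf(z)  # area under the standard normal curve to the left of z

# Equivalent shortcut without standardizing first: NormalDist(mean, sd).cdf(value)
print(f"z = {z:.2f}, P(output < {value}) = {p_below:.4f}")
```

The shortcut in the comment gives the same probability; standardizing first simply mirrors the Z-table method described above.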

June 11, 2020 at 12:50 pm

I would like to cite your book in my journal paper but I can’t find its ISBN. Could you please provide me the ISBN?

April 24, 2020 at 7:56 am

Your work really helped me understand normal distributions. Thank you so much!

April 21, 2020 at 12:13 pm

Wow, I loved this post! For someone who knows nothing about statistics, it really helped me understand why you would use this in a practical sense. I’m trying to follow a course on Udemy on BI that simply describes the normal distribution and how it works, but without giving any understanding of why it’s used or how it could be used, with examples. So, the apples and oranges description really helped me!

April 23, 2020 at 12:58 am

Hi Michael,

Your kind comment totally made my day! Thanks so much!

April 19, 2020 at 12:40 am

I am still a newbie in statistics. I have a curious question.

I have always heard people saying they need to make their data normally distributed before running prediction models.

And I have heard of many methods: one is standardisation, and others are log transformation, cube root, etc.

If I have a dataset that has both age and weight variables and their distributions are not normal, should I transform them using Z-score standardisation, or can I use other methods such as log transformation to normalise them? Or should I log transform them first and then standardise them using Z-scores?

I can’t really wrap my head around these.

Thank you so much!

April 20, 2020 at 3:34 am

Usually for predictive models, such as regression analysis, it’s the residuals that have to be normally distributed rather than the dependent variable itself. If the residuals are not normal, transforming the dependent variable is one possible solution. However, that should be a last resort. There are other possible solutions you should try first, which I describe in my post about least squares assumptions.
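On the standardisation part of the question: a Z-score is a linear rescaling, so it changes the centre and spread of a variable but not its shape, and a skewed variable stays exactly as skewed. A log transformation is nonlinear and can reduce right skew. This Python sketch illustrates that with simulated lognormal data standing in for a hypothetical weight variable (chosen because its log is exactly normal); the skewness helper is also hypothetical.

```python
import math
import random
import statistics


def skewness(values):
    """Moment-based skewness: mean cubed deviation divided by the (population) SD cubed."""
    m = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return statistics.fmean([(v - m) ** 3 for v in values]) / sd ** 3


random.seed(7)
weights = [random.lognormvariate(4.0, 0.5) for _ in range(1000)]  # simulated right-skewed data

m, s = statistics.fmean(weights), statistics.stdev(weights)
z_scores = [(w - m) / s for w in weights]  # standardisation: linear, shape unchanged
logged = [math.log(w) for w in weights]    # log transform: nonlinear, reduces right skew

print(f"original skewness:        {skewness(weights):.3f}")
print(f"standardised skewness:    {skewness(z_scores):.3f}")
print(f"log-transformed skewness: {skewness(logged):.3f}")
```

Standardising after a log transform is still fine if you need variables on a common scale; it just does not do any of the normalising work itself.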

March 24, 2020 at 7:30 am

Sir, could I have your reference details so I can cite you in my paper?

March 11, 2020 at 5:19 am

For skewed data, a transformation such as the log transformation can be attempted. In most cases, the log transformation reduces the skewness. Using the transformed mean and SD, find the interval from Mean – 2SD to Mean + 2SD, which covers roughly 95% of values if the transformed data are normal. Having obtained this interval on the transformed scale, take the antilog of its lower and upper limits. Any value that falls outside the back-transformed interval can then be treated as an outlier.
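A minimal Python sketch of this procedure, using a small made-up dataset. Note that mean ± 2 SD of individual (log) values is a range expected to hold about 95% of the data under normality, rather than a confidence interval for the mean; that coverage property is what the outlier rule relies on.

```python
import math
import statistics

# Hypothetical right-skewed measurements (illustrative only).
values = [2.1, 2.5, 3.0, 3.2, 2.8, 2.9, 3.5, 2.7, 3.1, 40.0]

logs = [math.log(v) for v in values]                # 1. log-transform
m, s = statistics.fmean(logs), statistics.stdev(logs)
lo_log, hi_log = m - 2 * s, m + 2 * s               # 2. mean ± 2 SD on the log scale
lower, upper = math.exp(lo_log), math.exp(hi_log)   # 3. antilog back to original units

outliers = [v for v in values if v < lower or v > upper]  # 4. flag values outside the limits
print(f"limits: {lower:.2f} to {upper:.2f}; flagged: {outliers}")
```

The back-transformed limits are not symmetric around the original mean, which is the point of doing the calculation on the log scale. Keep in mind that an extreme value also inflates the SD it is being judged against.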

March 2, 2020 at 8:33 am

Hi Jim, thanks for the wonderful explanation. I have been doing a target-setting exercise and my data are skewed. In this scenario, how should I approach target setting? Also, how should I approach outlier detection for skewed data? Thanks in advance.

February 14, 2020 at 12:03 am

Why do we need to use z-scores when the apple and orange are measured in the same unit (grams)?

February 20, 2020 at 4:07 pm

Even when you use the same measurement, z-scores can still provide helpful information. In the example, I show how the z-scores for each show where they fall within their own distribution and they also highlight the fact that we’re comparing a very underweight orange to a somewhat overweight apple.

February 13, 2020 at 3:00 am

In the “Example of Using Standard Scores to Make an Apples to Oranges Comparison” section, could you explain in detail what the z-scores of the apple and orange mean?

February 13, 2020 at 11:40 am

I compare those two scores and explain what they mean. I’m not sure what more you need?

January 27, 2020 at 5:59 am

Hi! I have a data report which gives Mean = 1.91, S.D. = 1.06, N = 22. The data range is between 1 and 5. Is it possible to generate the 22 data points from this information? Thanks.

January 28, 2020 at 11:46 pm

Unfortunately, you can’t reconstruct a dataset using those values.
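One way to see why: different datasets can share exactly the same N, mean, and standard deviation. For example, reflecting every value around the mean (x becomes 2·mean − x) keeps the mean and SD unchanged while changing the values themselves. A tiny Python illustration with made-up numbers:

```python
import statistics

a = [1, 1, 4]
mean_a = statistics.fmean(a)
b = [2 * mean_a - x for x in a]  # reflect each value around the mean: [3.0, 3.0, 0.0]

print(statistics.fmean(a), statistics.stdev(a))  # same mean and SD...
print(statistics.fmean(b), statistics.stdev(b))
print(sorted(a) == sorted(b))                    # ...but different data
```

Summary statistics compress a dataset, so many different datasets map to the same summary, and the original values cannot be recovered from it.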

January 26, 2020 at 9:06 pm

Okay, now I’ve got it. Thank you so much, your post is really helpful to me. Thanks to it, I can complete my notes. Thank you! ✨

January 26, 2020 at 1:37 am

In different posts about the normal distribution, they have written variance as a parameter, and my teacher also includes variance as the parameter. So it’s really confusing: on what basis is the standard deviation a parameter, and on what basis do others say the variance is the parameter?

And I’m really sorry for bothering you again and again…🙂

January 26, 2020 at 6:32 pm

Standard deviation and variance are definitely different but related: the standard deviation is the square root of the variance. Because one determines the other, the normal distribution can be described using either one. Many textbooks write it as N(μ, σ²), using the variance, while I use the standard deviation as the second parameter in this post.

January 25, 2020 at 3:53 am

Hi! It’s really helpful, thank you so much. But I’m confused: one of the parameters of the normal distribution is the standard deviation. Can we also say that the parameter is the “variance” instead of the standard deviation?

January 26, 2020 at 12:10 am

Standard deviations and variances are two different measures of variation. They are related but different. The standard deviation is the square root of the variance. Read my post about measures of variability and focus on the sections about those measures for more information.
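A short Python check of that relationship using the standard library. The dataset is made up, and the population forms (pvariance, pstdev) are used so the numbers come out round.

```python
import math
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]

var = statistics.pvariance(data)  # population variance: average squared deviation from the mean
sd = statistics.pstdev(data)      # population standard deviation
print(var, sd, math.isclose(sd, math.sqrt(var)))
```

The variance is in squared units (grams squared, say), while the standard deviation is back in the original units, which is one reason the standard deviation is easier to interpret. The sample versions, statistics.variance and statistics.stdev, divide by n − 1 instead of n, but the square-root relationship between them is the same.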

January 17, 2020 at 6:34 am

This is a great explanation for why we standardize values and the significance of a z-score. You managed to explain a concept that multiple professors and online trainings were unable to explain.

Though I was able to understand the formulae and how to calculate all these values, I was unable to understand WHY we needed to do it. Your post made that very clear to me!

Thank you for taking the time to put this together and for picking examples that make so much sense!

January 8, 2020 at 11:27 am

Hi Jim, thanks for an awesome blog. I am currently working on a university assignment with a broad task: I have to find out whether a specific independent variable and a specific dependent variable are linearly related in a hedonic pricing model.

In plain English, would checking for the linear relationship mean that I check the significance level of the specific independent variable within the broader hedonic pricing model? If so, should I check for anything else? If I am completely wrong, what would you advise me to do instead?

Sorry for such a long question, but my classmates and I are a bit lost over the ambiguity of the assignment, as none of us is very familiar with statistics.

I thank you for your time!

January 10, 2020 at 10:05 am

Measures of statistical significance won’t indicate the nature of the relationship between two variables. For example, if you have a curved, positive relationship between X and Y, you might still obtain a significant result if you fit a straight line relationship between the two. To really see the nature of the relationship between variables, you should graph them in a scatterplot.
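The following Python sketch makes the point concrete with hypothetical data that follow a curved relationship (y = x²). It fits an ordinary least-squares straight line by hand, computes the usual t statistic for the slope, and prints the residuals, which are what a scatterplot or residual plot would show.

```python
import math

# Hypothetical data with a curved (quadratic), positive relationship.
x = list(range(1, 11))
y = [xi ** 2 for xi in x]
n = len(x)

# Ordinary least-squares straight line: y = a + b * x.
mx, my = sum(x) / n, sum(y) / n
sxx = sum((xi - mx) ** 2 for xi in x)
sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
syy = sum((yi - my) ** 2 for yi in y)
b = sxy / sxx
a = my - b * mx

r = sxy / math.sqrt(sxx * syy)             # Pearson correlation
t = r * math.sqrt((n - 2) / (1 - r ** 2))  # t statistic for the slope, n - 2 degrees of freedom
residuals = [yi - (a + b * xi) for xi, yi in zip(x, y)]

print(f"slope = {b:.1f}, r = {r:.3f}, t = {t:.1f}")
print("residuals:", [round(e, 1) for e in residuals])
```

The t statistic is far above the two-sided 5% critical value of about 2.31 for 8 degrees of freedom, so the straight-line slope is "significant". Yet the residuals run positive, then negative, then positive again: the systematic U-shaped pattern that reveals the relationship is curved, not linear.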

I hope this helps!

January 7, 2020 at 5:58 am

Your blog is awesome! I have one confusion: when do we add or subtract 0.5 area?

January 7, 2020 at 10:48 am

Hi Ibrahim,

Sorry, but I don’t understand what you’re asking. Can you provide more details?

December 23, 2019 at 1:36 am

Hello Jim! How did (30+-2)*5 = 140-160 become 20 to 40 minutes?

Looking forward to your reply. Thanks!

December 23, 2019 at 3:19 am

Hi Anupama,

You have to remember your order of operations in math! You put your parentheses in the wrong place. What I wrote is equivalent to 30 +/- (2*5). Remember, multiplication before addition and subtraction. 🙂
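The arithmetic from this exchange in Python, assuming (as the reply implies) a mean of 30 minutes and a standard deviation of 5 minutes. It also checks, with statistics.NormalDist, that the 20 to 40 minute range (mean ± 2 SD) covers about 95% of a normal distribution.

```python
from statistics import NormalDist

mean, sd = 30, 5  # assumed from the reply: mean of 30 minutes, SD of 5 minutes

# Multiplication happens before addition and subtraction.
low, high = mean - 2 * sd, mean + 2 * sd                  # 30 - (2*5) and 30 + (2*5)
wrong_low, wrong_high = (mean - 2) * sd, (mean + 2) * sd  # misplaced parentheses

dist = NormalDist(mean, sd)
coverage = dist.cdf(high) - dist.cdf(low)  # proportion of values within ±2 SD
print(low, high, wrong_low, wrong_high, round(coverage, 4))
```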

December 1, 2019 at 11:23 pm

What are the three different ways to find probabilities for a normal distribution?

December 2, 2019 at 9:30 am

Hi Mark, if I understand your question correctly, you’ll find your answers in this blog post.

November 15, 2019 at 7:40 am

You have really opened my eyes.

November 15, 2019 at 1:35 am

Hi Jim, why is the normal distribution important? How can you assess normality using graphical techniques like histograms and box plots?

November 15, 2019 at 11:23 pm

I’d recommend using a normal probability plot to graphically assess normality. I write about it in this post that compares histograms and normal probability plots.
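A normal probability plot graphs the sorted data against the values you would expect from a normal distribution; if the points fall close to a straight line, the data are consistent with normality. Without a plotting library, you can summarize that straightness with a correlation. This Python sketch uses Blom's plotting positions, (i − 0.375)/(n + 0.25), which is one common choice (software packages differ). The helper names are hypothetical. The Ryan-Joiner test mentioned later in this thread works on a similar idea of correlating the data with normal scores.

```python
import math
from statistics import NormalDist


def normal_scores(n):
    """Expected standard-normal positions for n sorted points (Blom plotting positions)."""
    return [NormalDist().inv_cdf((i - 0.375) / (n + 0.25)) for i in range(1, n + 1)]


def probability_plot_r(data):
    """Correlation between the sorted data and their normal scores.
    Values near 1 mean the points lie close to a straight line on a normal probability plot."""
    xs = sorted(data)
    zs = normal_scores(len(xs))
    mx, mz = sum(xs) / len(xs), sum(zs) / len(zs)
    sxz = sum((x - mx) * (z - mz) for x, z in zip(xs, zs))
    sxx = sum((x - mx) ** 2 for x in xs)
    szz = sum((z - mz) ** 2 for z in zs)
    return sxz / math.sqrt(sxx * szz)


symmetric = normal_scores(10)              # idealized normal-looking data
skewed = [1, 1, 1, 2, 2, 3, 5, 9, 20, 50]  # hypothetical right-skewed data
print(f"{probability_plot_r(symmetric):.3f}  {probability_plot_r(skewed):.3f}")
```

To draw the plot itself, scatter sorted(data) against normal_scores(len(data)) in whatever plotting tool you use; right-skewed data bend away from the line at the upper end.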

October 23, 2019 at 10:57 am

Check your Pearson’s coefficient of skewness. Having 26 “high outliers” suggests to me that you might have a right-tailed, or positive, skew. Potentially, it is only moderately skewed, so you can still assume normality. If it is highly skewed, you need to transform it and then do your calculations. Transforming is much easier than it sounds; Google can show you how to do that.

October 23, 2019 at 11:39 am

Hi Cynthia,

This is a case where diagnosing the situation can be difficult without the actual dataset. For others, here’s the original comment in question.

On the one hand, having 26 high outliers and only 3 low outliers does give the impression of a skew. However, we can’t tell the extremeness of the high versus low outliers. Perhaps the high outliers are less extreme?

On the other hand, the commenter wrote that a normality test indicated the distribution is normally distributed and that a histogram also looks normally distributed. Furthermore, the fact that the mean and median are close together suggests it is a symmetric distribution rather than skewed.

There are a number of uncertainties as well. I don’t know the criteria the original commenter is using to identify outliers. And, I was hoping to determine the sample size. If it’s very large, then even 26 outliers is just a small fraction and might be within the bounds of randomness.

On the whole, the bulk of the evidence suggests that the data follow a normal distribution. It’s hard to say for sure. But, it sounds like we can rule out a severe skew at the very least.

You mention using a data transformation. And, you’re correct, they’re fairly easy to use. However, I’m not a big fan of transforming data. I consider it a last resort and not my “go to” option. The problem is that you’re analyzing the transformed data rather than the original data. Consequently, the results are not intuitive. Fortunately, thanks to the central limit theorem, you often don’t need to transform the data even when they are skewed. That’s not to say that I’d never transform data; I’d just look for other options first.

You also mention checking the Pearson’s coefficient of skewness, which is a great idea. However, for this specific case, it’s probably pretty low. You calculate this coefficient by finding the difference between the mean and median, multiplying that by three, and then dividing by the standard deviation. For this case, the commenter indicated that the mean and median were very close together, which means the numerator in this calculation is small and, hence, the coefficient of skewness is small. But, you’re right, it’s a good statistic to look at in general.
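The calculation described above, as a minimal Python sketch with made-up data. It uses the sample standard deviation (statistics.stdev); some texts use the population SD instead, which changes the value slightly.

```python
import statistics


def pearson_skew(data):
    """Pearson's second skewness coefficient: 3 * (mean - median) / standard deviation."""
    return 3 * (statistics.fmean(data) - statistics.median(data)) / statistics.stdev(data)


right_skewed = [2, 3, 3, 4, 5, 6, 20]  # hypothetical data with one high value
symmetric = [1, 2, 3, 4, 5]

print(round(pearson_skew(right_skewed), 3))  # positive: the high value pulls the mean above the median
print(pearson_skew(symmetric))               # zero: mean and median coincide
```

As the reply notes, when the mean and median are close together, the numerator is small and so is the coefficient, whatever the number of flagged outliers.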

Thanks for writing and adding your thoughts to the discussion!

October 20, 2019 at 9:03 am

Thank you very much, Jim. I understand this better now.

September 16, 2019 at 3:22 pm

What if my distribution has about 26 outliers on the high end and 3 on the low end, yet my mean and median happen to be pretty close? The distribution looks normal on a histogram too, and the Ryan-Joiner test produces a p-value of >1.00. Will this distribution be normal?

September 16, 2019 at 3:31 pm

Based on what you write, it sure sounds like it’s normally distributed. What’s your sample size and how are you defining outliers?