Understanding Hypothesis Tests: Significance Levels (Alpha) and P values in Statistics

Topics: Hypothesis Testing, Statistics

What do significance levels and P values mean in hypothesis tests? What is statistical significance anyway? In this post, I’ll continue to focus on concepts and graphs to help you gain a more intuitive understanding of how hypothesis tests work in statistics.

To bring it to life, I’ll add the significance level and P value to the graph in my previous post in order to perform a graphical version of the 1 sample t-test. It’s easier to understand when you can see what statistical significance truly means!

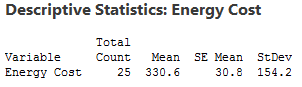

Here’s where we left off in my last post. We want to determine whether our sample mean (330.6) indicates that this year's average energy cost is significantly different from last year’s average energy cost of $260.

The probability distribution plot above shows the distribution of sample means we’d obtain under the assumption that the null hypothesis is true (population mean = 260) and we repeatedly drew a large number of random samples.

I left you with a question: where do we draw the line for statistical significance on the graph? Now we'll add in the significance level and the P value, which are the decision-making tools we'll need.

We'll use these tools to test the following hypotheses:

- Null hypothesis: The population mean equals the hypothesized mean (260).

- Alternative hypothesis: The population mean differs from the hypothesized mean (260).

What Is the Significance Level (Alpha)?

The significance level, also denoted as alpha or α, is the probability of rejecting the null hypothesis when it is true. For example, a significance level of 0.05 indicates a 5% risk of concluding that a difference exists when there is no actual difference.

These types of definitions can be hard to understand because of their technical nature. A picture makes the concepts much easier to comprehend!

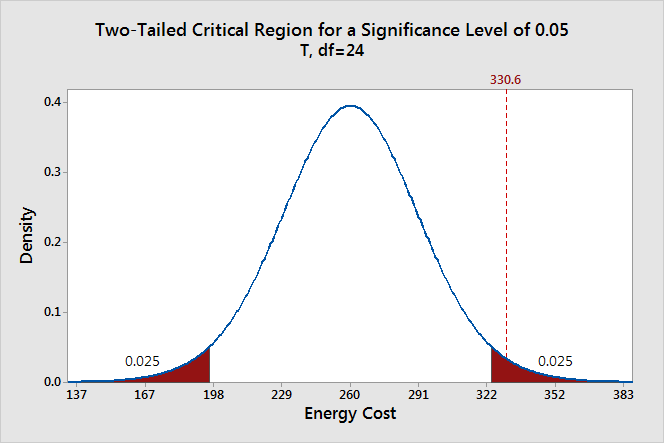

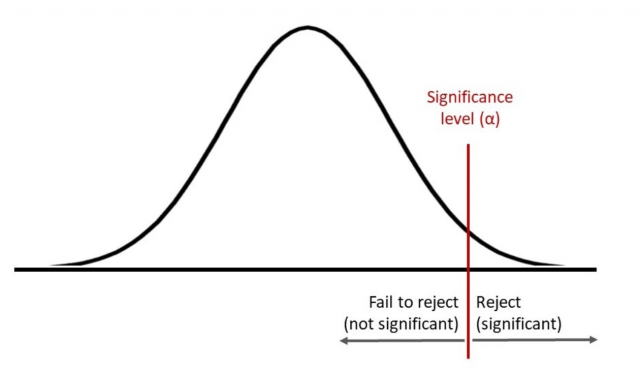

The significance level determines how far out from the null hypothesis value we'll draw that line on the graph. To graph a significance level of 0.05, we need to shade the 5% of the distribution that is furthest away from the null hypothesis.

In the graph above, the two shaded areas are equidistant from the null hypothesis value and each area has a probability of 0.025, for a total of 0.05. In statistics, we call these shaded areas the critical region for a two-tailed test. If the population mean is 260, we’d expect to obtain a sample mean that falls in the critical region 5% of the time. The critical region defines how far away our sample statistic must be from the null hypothesis value before we can say it is unusual enough to reject the null hypothesis.

Our sample mean (330.6) falls within the critical region, which indicates it is statistically significant at the 0.05 level.

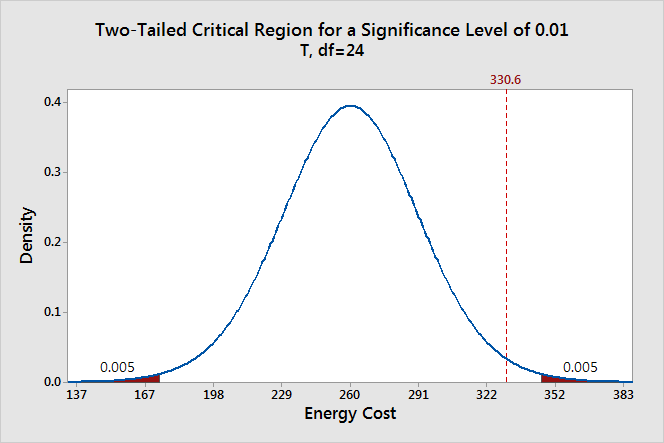

We can also see if it is statistically significant using the other common significance level of 0.01.

The two shaded areas each have a probability of 0.005, which adds up to a total probability of 0.01. This time our sample mean does not fall within the critical region and we fail to reject the null hypothesis. This comparison shows why you need to choose your significance level before you begin your study. It protects you from choosing a significance level because it conveniently gives you significant results!

Thanks to the graph, we were able to determine that our results are statistically significant at the 0.05 level without using a P value. However, when you use the numeric output produced by statistical software, you’ll need to compare the P value to your significance level to make this determination.

What Are P values?

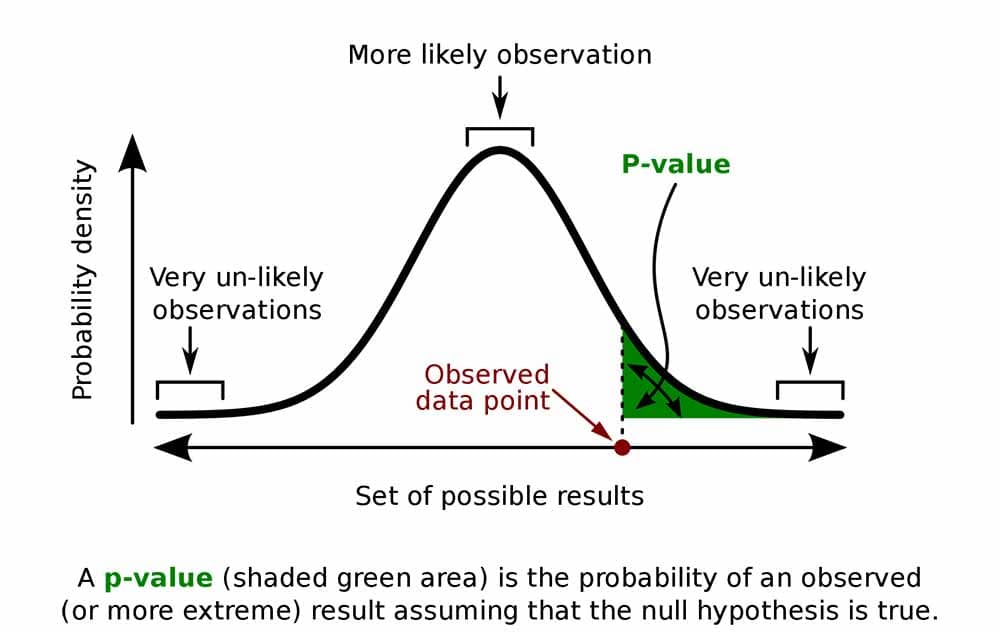

A P value is the probability of obtaining an effect at least as extreme as the one in your sample data, assuming that the null hypothesis is true.

This definition of P values, while technically correct, is a bit convoluted. It’s easier to understand with a graph!

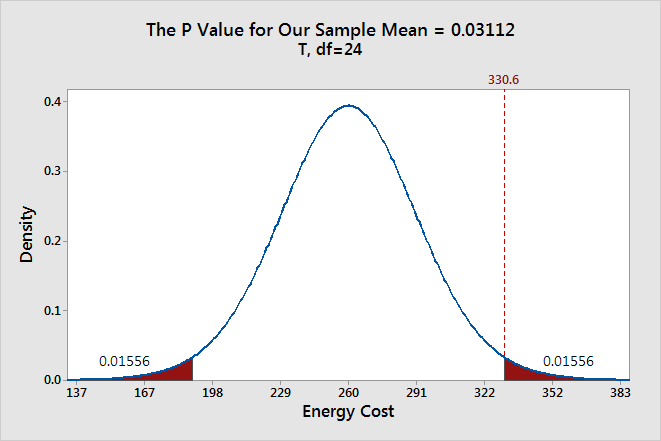

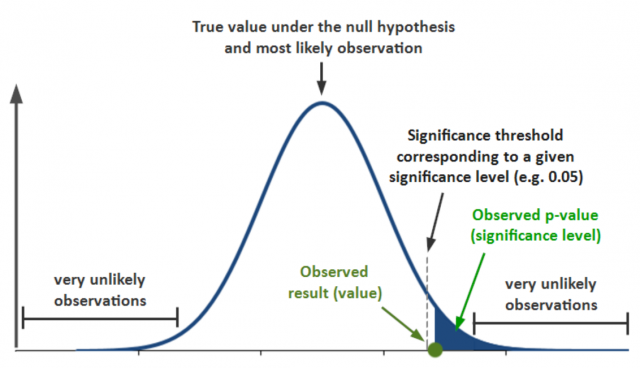

To graph the P value for our example data set, we need to determine the distance between the sample mean and the null hypothesis value (330.6 - 260 = 70.6). Next, we can graph the probability of obtaining a sample mean that is at least as extreme in both tails of the distribution (260 +/- 70.6).

In the graph above, the two shaded areas each have a probability of 0.01556, for a total probability of 0.03112. This probability represents the likelihood of obtaining a sample mean that is at least as extreme as our sample mean in both tails of the distribution if the population mean is 260. That’s our P value!

When a P value is less than or equal to the significance level, you reject the null hypothesis. If we take the P value for our example and compare it to the common significance levels, it matches the previous graphical results. The P value of 0.03112 is statistically significant at an alpha level of 0.05, but not at the 0.01 level.
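The comparison above can be sketched in a few lines of Python. The per-tail probability (0.01556) and the two significance levels come from this post; the helper name `is_significant` is made up for illustration.

```python
# Two-tailed P value: add the probability in both tails.
# 0.01556 is the per-tail probability read from the graph in this post.
tail_probability = 0.01556
p_value = 2 * tail_probability


def is_significant(p_value, alpha):
    """Hypothetical helper: reject the null when p <= alpha."""
    return p_value <= alpha


for alpha in (0.05, 0.01):
    verdict = "reject" if is_significant(p_value, alpha) else "fail to reject"
    print(f"alpha={alpha}: p={p_value:.5f} -> {verdict} the null hypothesis")
```

The same P value leads to opposite decisions at the two levels, which is why the significance level should be fixed before looking at the data.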

If we stick to a significance level of 0.05, we can conclude that the average energy cost for the population differs from 260. Because our sample mean (330.6) is above 260, the data suggest that the average cost is higher.

A common mistake is to interpret the P value as the probability that the null hypothesis is true. To understand why this interpretation is incorrect, please read my blog post How to Correctly Interpret P Values.

Discussion about Statistically Significant Results

A hypothesis test evaluates two mutually exclusive statements about a population to determine which statement is best supported by the sample data. A test result is statistically significant when the sample statistic is unusual enough relative to the null hypothesis that we can reject the null hypothesis for the entire population. “Unusual enough” in a hypothesis test is defined by:

- The assumption that the null hypothesis is true—the graphs are centered on the null hypothesis value.

- The significance level—how far out do we draw the line for the critical region?

- Our sample statistic—does it fall in the critical region?

Keep in mind that there is no magic significance level that distinguishes between the studies that have a true effect and those that don’t with 100% accuracy. The common alpha values of 0.05 and 0.01 are simply based on tradition. For a significance level of 0.05, expect to obtain sample means in the critical region 5% of the time when the null hypothesis is true. In these cases, you won’t know that the null hypothesis is true but you’ll reject it because the sample mean falls in the critical region. That’s why the significance level is also referred to as an error rate!

This type of error doesn’t imply that the experimenter did anything wrong or require any other unusual explanation. The graphs show that when the null hypothesis is true, it is possible to obtain these unusual sample means for no reason other than random sampling error. It’s just luck of the draw.
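To see this "luck of the draw" in action, here is a small simulation sketch. It repeatedly samples from a population where the null hypothesis really is true and counts how often the sample mean lands in the critical region. The null mean of 260 is from this post; the population standard deviation, sample size, and number of trials are assumptions picked for illustration, and the sketch uses a z-test with a known standard deviation rather than the 1-sample t-test shown in the graphs.

```python
import random
from statistics import NormalDist, mean

random.seed(0)  # fixed seed so the run is repeatable

NULL_MEAN = 260   # null hypothesis value from the post
SIGMA = 150       # assumed population standard deviation (hypothetical)
N = 25            # assumed sample size (hypothetical)
ALPHA = 0.05
TRIALS = 5000

std_error = SIGMA / N ** 0.5
z_crit = NormalDist().inv_cdf(1 - ALPHA / 2)  # two-tailed cutoff for ALPHA

false_rejections = 0
for _ in range(TRIALS):
    # Draw a sample from a population where the null hypothesis is TRUE.
    sample = [random.gauss(NULL_MEAN, SIGMA) for _ in range(N)]
    z = (mean(sample) - NULL_MEAN) / std_error
    if abs(z) >= z_crit:  # sample mean landed in the critical region
        false_rejections += 1

type_i_rate = false_rejections / TRIALS
print(f"Rejected a true null in {type_i_rate:.1%} of {TRIALS} samples")
```

The rejection rate should hover near the chosen alpha, even though nothing other than random sampling is at work.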

Significance levels and P values are important tools that help you quantify and control this type of error in a hypothesis test. Using these tools to decide when to reject the null hypothesis increases your chance of making the correct decision.

If you like this post, you might want to read the other posts in this series that use the same graphical framework:

- Previous: Why We Need to Use Hypothesis Tests

- Next: Confidence Intervals and Confidence Levels

If you'd like to see how I made these graphs, please read: How to Create a Graphical Version of the 1-sample t-Test.

© 2023 Minitab, LLC. All Rights Reserved.


Level of Significance & Hypothesis Testing

In hypothesis testing, the level of significance is the threshold that determines how strong the evidence must be before you reject the null hypothesis. This blog post will explore what hypothesis testing is and why understanding significance levels is important for your data science projects. Towards the end of the post, you can also test your knowledge of the level of significance with a short quiz. These questions can help you check your understanding and prepare for data science / statistics interviews. Before we look at what the level of significance is, let’s quickly review what hypothesis testing is.

What is Hypothesis testing and how is it related to significance level?

Hypothesis testing can be defined as tests performed to evaluate whether a claim or theory about something is true or otherwise. In order to perform hypothesis tests, the following steps need to be taken:

- Hypothesis formulation: Formulate the null and alternate hypothesis

- Data collection: Gather the sample of data

- Statistical tests: Determine the statistical test and test statistic. The test can be a z-test or a t-test, depending on the sample size and on whether the population variance is known.

- Set the level of significance

- Calculate the p-value

- Draw conclusions: Based on the value of p-value and significance level, reject the null hypothesis or otherwise.
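The six steps above can be sketched end to end in Python. Everything in this example is hypothetical: the exam scores are made up, the null mean of 70 and the known population standard deviation of 5 are assumptions, and a one-sample z-test is used only because it needs nothing beyond the standard library.

```python
from statistics import NormalDist, mean

# 1. Hypotheses: H0: mu = 70, H1: mu != 70 (hypothetical exam-score example)
NULL_MEAN = 70
# 2. Data: made-up scores for illustration only
scores = [72, 68, 75, 71, 69, 74, 73, 70, 76, 72]
# 3. Test: one-sample z-test, assuming a known population sd of 5
SIGMA = 5
z = (mean(scores) - NULL_MEAN) / (SIGMA / len(scores) ** 0.5)
# 4. Significance level, chosen before looking at the result
ALPHA = 0.05
# 5. Two-tailed p-value from the standard normal distribution
p_value = 2 * (1 - NormalDist().cdf(abs(z)))
# 6. Conclusion
decision = "reject H0" if p_value <= ALPHA else "fail to reject H0"
print(f"z={z:.2f}, p={p_value:.3f}: {decision}")
```

With a small sample or an unknown population variance, a t-test would replace the z-test in step 3, but the other steps stay the same.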

A detailed explanation is provided in one of my related posts titled hypothesis testing explained with examples.

What is the level of significance?

The level of significance is the threshold used to decide whether to reject or fail to reject the null hypothesis. It determines whether the outcome of a hypothesis test is statistically significant. The significance level is also called the alpha level.

Another way of looking at the level of significance is as the probability of making a Type I error. A Type I error occurs when you reject a null hypothesis that is actually true. This scenario is also termed a “false positive”. Take the example of a person accused of committing a crime. The null hypothesis is that the person is not guilty. A Type I error happens when this null hypothesis is rejected by mistake: the innocent person is convicted.

The level of significance commonly takes values such as 0.1, 0.05, and 0.01; the most common choice is 0.05. The lower the significance level, the lower the chance of a Type I error, because the evidence against the null hypothesis must be stronger before you can reject it. However, a lower significance level increases the chance of a Type II error, in which you fail to reject a null hypothesis that is false. You can read more about these errors in this post – Type I errors and Type II errors in hypothesis testing
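The trade-off between the two error types can be made concrete with a short sketch. It computes the Type II error rate (beta) of a right-tailed z-test for the three significance levels mentioned above. The function name and the assumed true effect (2 standard errors) are hypothetical choices for illustration.

```python
from statistics import NormalDist

std_normal = NormalDist()


def type_ii_error(alpha, effect_in_se):
    """Beta for a right-tailed z-test.

    effect_in_se is the true effect divided by the standard error
    (a hypothetical value chosen for illustration).
    """
    z_crit = std_normal.inv_cdf(1 - alpha)
    # The effect is missed when the test statistic falls below the cutoff.
    return std_normal.cdf(z_crit - effect_in_se)


betas = {alpha: type_ii_error(alpha, effect_in_se=2.0) for alpha in (0.1, 0.05, 0.01)}
for alpha, beta in betas.items():
    print(f"alpha={alpha}: beta={beta:.3f}")
```

For a fixed effect and sample size, beta rises as alpha falls; the only way to lower both at once is to collect more data or measure more precisely.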

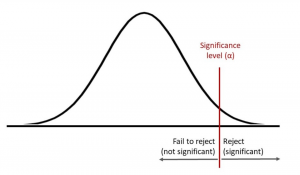

The outcome of a hypothesis test is evaluated with the help of a p-value. If the p-value is less than or equal to the level of significance, the outcome is statistically significant and we reject the null hypothesis. If the p-value is greater than the level of significance, the outcome is not statistically significant and we fail to reject the null hypothesis. The same is represented in the picture below for a right-tailed test. I will be posting details on different types of tail test in future posts.

The picture below represents the concept for two-tailed hypothesis test:

For example: Let’s say that a school principal wants to find out whether 2 hours of extra coaching after school helps students do better in their exams. The hypotheses would be as follows:

- Null hypothesis: There is no difference in the performance of students even after providing 2 hours of extra coaching after school.

- Alternate hypothesis: Students perform better when they get 2 hours of extra coaching after school.

Suppose this test uses a level of significance of 0.05. Simply put, the evidence of a difference in performance must be strong enough that a result this extreme would occur no more than 5% of the time if extra coaching made no difference.

Now, let’s say that we conduct this experiment with 100 students and measure their scores in exams. The test statistic is computed to be z = -0.50 (two-tailed p-value = 0.62). Since the p-value is more than 0.05, we fail to reject the null hypothesis. There is not enough evidence to show that there’s a difference in the performance of students based on whether they get extra coaching.
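As a quick check, the p-value for z = -0.50 can be computed from the standard normal distribution. The sketch below shows both the two-tailed value, which matches the 0.62 reported here, and the right-tailed value that corresponds to the one-sided alternate hypothesis. It assumes that a positive z means coached students scored higher, which the example does not state.

```python
from statistics import NormalDist

z = -0.50  # test statistic reported in the example
std_normal = NormalDist()

p_two_tailed = 2 * std_normal.cdf(-abs(z))  # "is there any difference?"
p_right_tailed = 1 - std_normal.cdf(z)      # "do coached students do better?"

print(f"two-tailed p   = {p_two_tailed:.2f}")
print(f"right-tailed p = {p_right_tailed:.2f}")
```

Either way the p-value is far above 0.05, so the conclusion to fail to reject the null hypothesis does not depend on which version is used.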

While performing hypothesis tests or experiments, it is important to keep the level of significance in mind.

Why does one need a level of significance?

In hypothesis tests, we need a threshold for deciding whether the results are statistically significant enough to reject the null hypothesis. Without one, it would be hard to say consistently whether findings are significant or not. This is why we set a level of significance when performing hypothesis tests and experiments.

Since hypothesis testing helps us make decisions about our data, setting a level of significance tells us how much risk of a false positive we are accepting. If you set your level of significance at 0.05, for example, it means that when the null hypothesis is true there is only a five percent chance of observing a difference between groups (assuming two groups are tested) large enough to reject it through random sampling error alone. It does not mean that there is a five percent chance that the null hypothesis is true. Even if we found a significant difference in the performance of students based on whether they take extra coaching, we would still need to consider other factors that could have contributed to the difference.

This is why hypothesis testing and the level of significance go hand in hand: the hypothesis test tells us whether the data fall in the range that counts as statistically significant, whereas the level of significance sets that range by fixing how often random sampling error alone would produce a significant result.

How is the level of significance used in hypothesis testing?

The level of significance, along with the test statistic and p-value, forms a key part of hypothesis testing. The conclusion you draw depends on whether you reject or fail to reject the null hypothesis, given your findings at each step. Before going into rejection vs non-rejection, let’s understand the terms better.

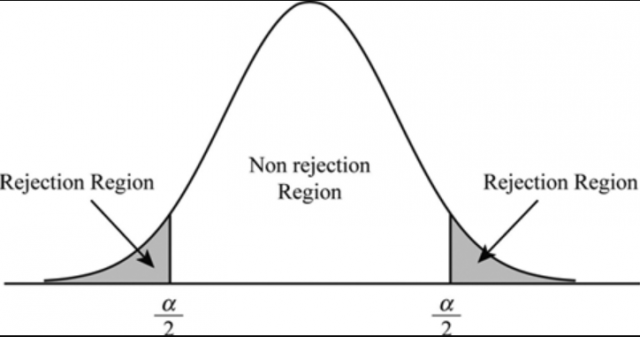

If the test statistic falls within the critical region, you reject the null hypothesis. This means that your findings are statistically significant and support the alternate hypothesis. The p-value tells you how likely it is to obtain an outcome at least this extreme if, in fact, the null hypothesis were true. If the p-value is less than or equal to the level of significance, you reject the null hypothesis. The two rules are equivalent: the test statistic falls in the critical region exactly when the p-value is at or below the level of significance.

If, on the other hand, the p-value is greater than the alpha level, you fail to reject the null hypothesis. These findings are not statistically significant enough for one to reject the null hypothesis.
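The critical-region rule can be sketched for a z-test as follows. The helper `critical_value` is a hypothetical name, and the test statistic of -0.50 is taken from the coaching example earlier in this post.

```python
from statistics import NormalDist


def critical_value(alpha, two_tailed=True):
    """Cutoff for a z-test (hypothetical helper)."""
    tail = alpha / 2 if two_tailed else alpha
    return NormalDist().inv_cdf(1 - tail)


z = -0.50  # test statistic from the coaching example
for alpha in (0.10, 0.05, 0.01):
    cutoff = critical_value(alpha)
    in_region = abs(z) >= cutoff
    print(f"alpha={alpha}: cutoff=±{cutoff:.3f}, in critical region: {in_region}")
```

A smaller alpha pushes the cutoff further from zero, so the test statistic has to be more extreme before the null hypothesis is rejected.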

Level of Significance – Quiz / Interview Questions

Here are some practice questions which can help you test your knowledge and also prepare for interviews.

#1. The statistically significant outcome of hypothesis testing would mean which of the following?

#2. The p-value of 0.03 is statistically significant for a significance level of 0.01.

#3. Which of the following looks to be an inappropriate level of significance?

#4. Which one of the following is considered the most popular choice of significance level?

#5. Which of the following will result in a greater Type II error?

#6. A p-value less than the level of significance would mean which of the following?

#7. Which of the following will result in a greater Type I error?

#8. Level of significance is also called ________.


Hypothesis testing is an important statistical concept that helps us determine whether a claim is supported by the data. The test statistic, level of significance, and p-value all work together to help you make decisions about your data. If a hypothesis test shows enough evidence to reject the null hypothesis, the findings are statistically significant. This post gave you ideas for how you can use hypothesis testing in your experiments by understanding what it means to reject or fail to reject the null hypothesis.

Ajitesh Kumar


NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.


Hypothesis testing, p values, confidence intervals, and significance.

Jacob Shreffler; Martin R. Huecker.

Last Update: March 13, 2023.

Definition/Introduction

Medical providers often rely on evidence-based medicine to guide decision-making in practice. Often a research hypothesis is tested with results provided, typically with p values, confidence intervals, or both. Additionally, statistical or research significance is estimated or determined by the investigators. Unfortunately, healthcare providers may have different comfort levels in interpreting these findings, which may affect the adequate application of the data.

Issues of Concern

Without a foundational understanding of hypothesis testing, p values, confidence intervals, and the difference between statistical and clinical significance, healthcare providers may be unable to make clinical decisions without relying purely on the level of significance deemed by the research investigators. Therefore, an overview of these concepts is provided to allow medical professionals to use their expertise to determine if results are reported sufficiently and if the study outcomes are clinically appropriate to be applied in healthcare practice.

Hypothesis Testing

Investigators conducting studies need research questions and hypotheses to guide analyses. Starting with broad research questions (RQs), investigators then identify a gap in current clinical practice or research. Any research problem or statement is grounded in a better understanding of relationships between two or more variables. For this article, we will use the following research question example:

Research Question: Is Drug 23 an effective treatment for Disease A?

Research questions do not directly imply specific guesses or predictions; we must formulate research hypotheses. A hypothesis is a predetermined declaration regarding the research question in which the investigator(s) makes a precise, educated guess about a study outcome. This is sometimes called the alternative hypothesis and ultimately allows the researcher to take a stance based on experience or insight from medical literature. An example of a hypothesis is below.

Research Hypothesis: Drug 23 will significantly reduce symptoms associated with Disease A compared to Drug 22.

The null hypothesis states that there is no statistical difference between groups based on the stated research hypothesis. An example of a null hypothesis is below.

Null Hypothesis: There are no significant differences in the reduction of symptoms for Disease A between Drug 23 and Drug 22.

The null hypothesis is deemed true until a study presents significant data to support rejecting the null hypothesis. Based on the results, the investigators will either reject the null hypothesis (if they found significant differences or associations) or fail to reject the null hypothesis (they could not provide evidence that there were significant differences or associations).

Regarding p values, as the number of individuals enrolled in a study (the sample size) increases, the likelihood of finding a statistically significant effect increases. With very large sample sizes, the p-value can be very low. Researchers should be aware of journal recommendations when considering how to report p values, and manuscripts should remain internally consistent.
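The effect of sample size on the p-value can be illustrated with a short sketch. It holds a small difference between two groups fixed and only increases the number of patients per group. All numbers are hypothetical, and the function assumes a z-test with the same known standard deviation and group size in both arms, which is a simplification.

```python
from statistics import NormalDist


def two_sample_p_value(mean_diff, sd, n_per_group):
    """Two-tailed z-test p-value for a difference in means.

    Assumes both groups share the same known sd and size
    (a simplification for illustration).
    """
    std_error = sd * (2 / n_per_group) ** 0.5
    z = mean_diff / std_error
    return 2 * NormalDist().cdf(-abs(z))


# Same small difference (0.2 symptom points, sd 2.0), growing sample sizes.
p_values = {n: two_sample_p_value(0.2, 2.0, n) for n in (50, 500, 5000)}
for n, p in p_values.items():
    print(f"n per group = {n:>5}: p = {p:.4f}")
```

The difference itself never changes, yet the p-value drops below 0.05 once the groups are large enough, which is one reason statistical significance should not be read as clinical importance.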

To test a hypothesis, researchers obtain data on a representative sample to determine whether to reject or fail to reject a null hypothesis. In most research studies, it is not feasible to obtain data for an entire population. Using a sampling procedure allows for statistical inference, though this involves a certain possibility of error. [1] When determining whether to reject or fail to reject the null hypothesis, mistakes can be made: Type I and Type II errors. Though it is impossible to ensure that these errors have not occurred, researchers should limit the possibilities of these faults. [2]

Significance

Significance is a term to describe the substantive importance of medical research. Statistical significance concerns the likelihood that results are due to chance. [3] Healthcare providers should always delineate statistical significance from clinical significance; conflating the two is a common error when reviewing biomedical research. [4] When conceptualizing findings reported as either significant or not significant, healthcare providers should not simply accept researchers' results or conclusions without considering the clinical significance. Healthcare professionals should consider the clinical importance of findings and understand both p values and confidence intervals so they do not have to rely on the researchers to determine the level of significance. [5] One criterion often used to determine statistical significance is the utilization of p values.

P values are used in research to determine whether the sample estimate is significantly different from a hypothesized value. The p-value is the probability that an effect at least as large as the one observed within the study would have occurred by chance if, in reality, there was no true effect. Conventionally, data yielding a p<0.05 or p<0.01 is considered statistically significant. While some have debated that the 0.05 level should be lowered, it is still widely practiced. [6] Hypothesis testing allows us to determine whether an effect is statistically significant; on its own, it does not tell us the size of the effect.

Examples of findings reported with p values are below:

Statement: Drug 23 reduced patients' symptoms compared to Drug 22. Patients who received Drug 23 (n=100) were 2.1 times less likely than patients who received Drug 22 (n = 100) to experience symptoms of Disease A, p<0.05.

Statement: Individuals who were prescribed Drug 23 experienced fewer symptoms (M = 1.3, SD = 0.7) compared to individuals who were prescribed Drug 22 (M = 5.3, SD = 1.9). This finding was statistically significant, p = 0.02.

For either statement, if the threshold had been set at 0.05, the null hypothesis (that there was no relationship) should be rejected, and we should conclude significant differences. Noticeably, as can be seen in the two statements above, some researchers will report findings with < or > and others will provide an exact p-value (0.000001) but never zero. [6] When examining research, readers should understand how p values are reported. The best practice is to report all p values for all variables within a study design, rather than only providing p values for variables with significant findings. [7] The inclusion of all p values provides evidence for study validity and limits suspicion for selective reporting/data mining.

While researchers have historically used p values, experts who find p values problematic encourage the use of confidence intervals. [8] P-values alone do not allow us to understand the size or the extent of the differences or associations. [3] In March 2016, the American Statistical Association (ASA) released a statement on p values, noting that scientific decision-making and conclusions should not be based on a fixed p-value threshold (e.g., 0.05). They recommend focusing on the significance of results in the context of study design, quality of measurements, and validity of data. Ultimately, the ASA statement noted that in isolation, a p-value does not provide strong evidence. [9]

When conceptualizing clinical work, healthcare professionals should consider p values with a concurrent appraisal of study design validity. For example, a p-value from a double-blinded randomized clinical trial (designed to minimize bias) should be weighted higher than one from a retrospective observational study. [7] The p-value debate has smoldered since the 1950s, [10] and replacement with confidence intervals has been suggested since the 1980s. [11]

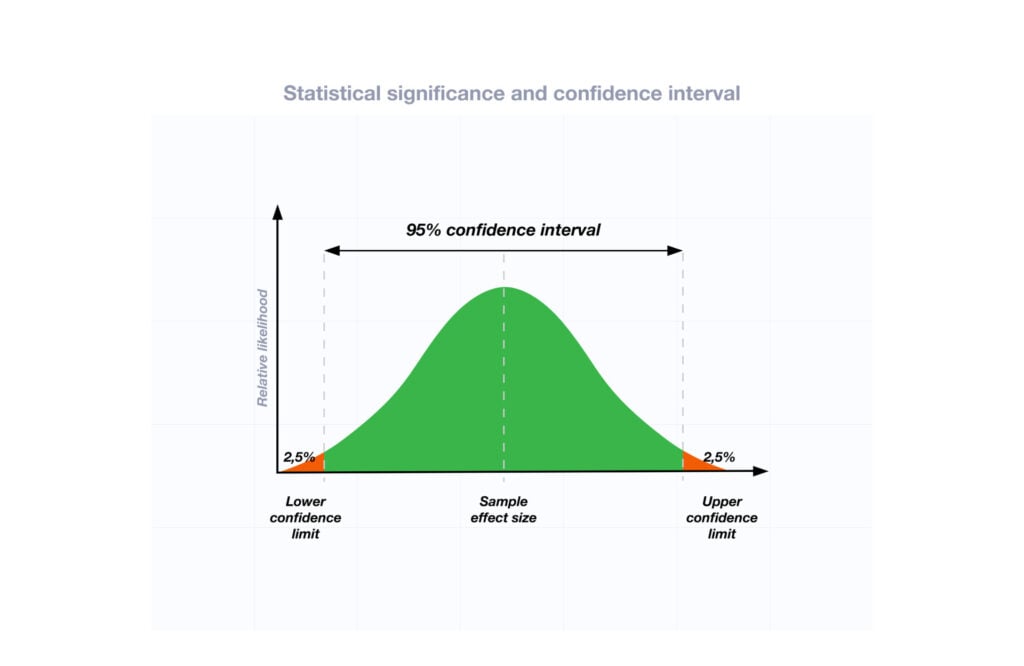

Confidence Intervals

A confidence interval provides a range of values that, with a given level of confidence (e.g., 95%), includes the true value of the statistical parameter within a targeted population. [12] Most research uses a 95% CI, but investigators can set any level (e.g., 90% CI, 99% CI). [13] A CI provides a range with the lower bound and upper bound limits of a difference or association that would be plausible for a population. [14] Therefore, a CI of 95% indicates that if a study were to be carried out 100 times, the range would contain the true value in about 95 of them. [15] Confidence intervals provide more evidence regarding the precision of an estimate compared to p-values. [6]
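The "repeat the study many times" reading of a 95% CI can be checked with a small simulation sketch. The population values, sample size, and number of simulated studies below are hypothetical, and the interval is the simple z-based one that assumes a known population standard deviation.

```python
import random
from statistics import NormalDist, mean

random.seed(1)  # fixed seed so the run is repeatable

TRUE_MEAN, SIGMA, N = 4.2, 3.0, 40  # hypothetical population and sample size
STUDIES = 2000
z = NormalDist().inv_cdf(0.975)      # multiplier for a 95% interval
margin = z * SIGMA / N ** 0.5

covered = 0
for _ in range(STUDIES):
    # One simulated study: draw a sample and build its 95% CI.
    sample_mean = mean(random.gauss(TRUE_MEAN, SIGMA) for _ in range(N))
    if sample_mean - margin <= TRUE_MEAN <= sample_mean + margin:
        covered += 1

coverage = covered / STUDIES
print(f"{coverage:.1%} of the intervals contain the true mean")
```

Any single interval either contains the true value or it does not; the 95% describes how often the procedure succeeds across repeated studies.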

In consideration of the similar research example provided above, one could make the following statement with 95% CI:

Statement: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22; there was a mean difference between the two groups of days to the recovery of 4.2 days (95% CI: 1.9 – 7.8).

It is important to note that the width of the CI is affected by the standard error and the sample size; reducing the study sample size will result in less precision of the CI (increased width). [14] A larger width indicates a smaller sample size or a larger variability. [16] A researcher would want to increase the precision of the CI. For example, a 95% CI of 1.43 – 1.47 is much more precise than the one provided in the example above. In research and clinical practice, CIs provide valuable information on whether the interval includes or excludes any clinically significant values. [14]
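A brief sketch of how sample size drives CI width, assuming a z-based interval for a mean with a known standard deviation. The function name and the numbers are hypothetical.

```python
from statistics import NormalDist


def ci_width(sd, n, confidence=0.95):
    """Width of a z-based confidence interval for a mean."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return 2 * z * sd / n ** 0.5


for n in (25, 100, 400):
    print(f"n={n:>3}: 95% CI width = {ci_width(sd=5.0, n=n):.2f}")
```

Because the width scales with one over the square root of the sample size, quadrupling the sample only halves the interval; raising the confidence level (e.g., to 99%) widens it.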

Null values are sometimes used for differences with CI (zero for differential comparisons and 1 for ratios). However, CIs provide more information than that. [15] Consider this example: A hospital implements a new protocol that reduced wait time for patients in the emergency department by an average of 25 minutes (95% CI: -2.5 – 41 minutes). Because the range crosses zero, implementing this protocol in different populations could result in longer wait times; however, the range is much higher on the positive side. Thus, while the p-value used to detect statistical significance for this may result in "not significant" findings, individuals should examine this range, consider the study design, and weigh whether or not it is still worth piloting in their workplace.

Similarly to p-values, 95% CIs cannot control for researchers' errors (e.g., study bias or improper data analysis). [14] In consideration of whether to report p-values or CIs, researchers should examine journal preferences. When in doubt, reporting both may be beneficial. [13] An example is below:

Reporting both: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22, p = 0.009. There was a mean difference between the two groups of days to the recovery of 4.2 days (95% CI: 1.9 – 7.8).

Clinical Significance

Recall that clinical significance and statistical significance are two different concepts. Healthcare providers should remember that a study with statistically significant differences and large sample size may be of no interest to clinicians, whereas a study with smaller sample size and statistically non-significant results could impact clinical practice. [14] Additionally, as previously mentioned, a non-significant finding may reflect the study design itself rather than relationships between variables.

Healthcare providers using evidence-based medicine to inform practice should use clinical judgment to determine the practical importance of studies through careful evaluation of the design, sample size, power, likelihood of type I and type II errors, data analysis, and reporting of statistical findings (p values, 95% CI or both). [4] Interestingly, some experts have called for "statistically significant" or "not significant" to be excluded from work as statistical significance never has and will never be equivalent to clinical significance. [17]

The decision on what is clinically significant can be challenging, depending on the providers' experience and especially the severity of the disease. Providers should use their knowledge and experiences to determine the meaningfulness of study results and make inferences based not only on significant or insignificant results by researchers but through their understanding of study limitations and practical implications.

- Nursing, Allied Health, and Interprofessional Team Interventions

All physicians, nurses, pharmacists, and other healthcare professionals should strive to understand the concepts in this chapter. These individuals should maintain the ability to review and incorporate new literature for evidence-based and safe care.

Disclosure: Jacob Shreffler declares no relevant financial relationships with ineligible companies.

Disclosure: Martin Huecker declares no relevant financial relationships with ineligible companies.

This book is distributed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) ( http://creativecommons.org/licenses/by-nc-nd/4.0/ ), which permits others to distribute the work, provided that the article is not altered or used commercially. You are not required to obtain permission to distribute this article, provided that you credit the author and journal.

- Cite this Page Shreffler J, Huecker MR. Hypothesis Testing, P Values, Confidence Intervals, and Significance. [Updated 2023 Mar 13]. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

Hypothesis Testing (cont...)

Hypothesis testing, the null and alternative hypothesis.

In order to undertake hypothesis testing you need to express your research hypothesis as a null and alternative hypothesis. The null hypothesis and alternative hypothesis are statements regarding the differences or effects that occur in the population. You will use your sample to test which statement (i.e., the null hypothesis or alternative hypothesis) is most likely (although technically, you test the evidence against the null hypothesis). So, with respect to our teaching example, the null and alternative hypothesis will reflect statements about all statistics students on graduate management courses.

The null hypothesis is essentially the "devil's advocate" position. That is, it assumes that whatever you are trying to prove did not happen ( hint: it usually states that something equals zero). For example, the two different teaching methods did not result in different exam performances (i.e., zero difference). Another example might be that there is no relationship between anxiety and athletic performance (i.e., the slope is zero). The alternative hypothesis states the opposite and is usually the hypothesis you are trying to prove (e.g., the two different teaching methods did result in different exam performances). Initially, you can state these hypotheses in more general terms (e.g., using terms like "effect", "relationship", etc.), as shown below for the teaching methods example:

| Null Hypothesis (H0): | Undertaking seminar classes has no effect on students' performance. |
| Alternative Hypothesis (HA): | Undertaking seminar classes has a positive effect on students' performance. |

How you want to "summarize" the exam performances will determine how you write a more specific null and alternative hypothesis. For example, you could compare the mean exam performance of each group (i.e., the "seminar" group and the "lectures-only" group). This is what we will demonstrate here, but other options include comparing the distributions or medians, amongst other things. As such, we can state:

| Null Hypothesis (H0): | The mean exam mark for the "seminar" and "lecture-only" teaching methods is the same in the population. |
| Alternative Hypothesis (HA): | The mean exam mark for the "seminar" and "lecture-only" teaching methods is not the same in the population. |

Now that you have identified the null and alternative hypotheses, you need to find evidence and develop a strategy for declaring your "support" for either the null or alternative hypothesis. We can do this using some statistical theory and some arbitrary cut-off points. Both these issues are dealt with next.

Significance levels

The level of statistical significance is often expressed as the so-called p-value. Depending on the statistical test you have chosen, you will calculate a probability (i.e., the p-value) of observing your sample results (or more extreme) given that the null hypothesis is true. Another way of phrasing this is to consider the probability that a difference in a mean score (or other statistic) could have arisen based on the assumption that there really is no difference. Let us consider this statement with respect to our example where we are interested in the difference in mean exam performance between two different teaching methods. If there really is no difference between the two teaching methods in the population (i.e., given that the null hypothesis is true), how likely would it be to see a difference in the mean exam performance between the two teaching methods as large as (or larger than) that which has been observed in your sample?

So, you might get a p-value such as 0.03 (i.e., p = .03). This means that there is a 3% chance of finding a difference as large as (or larger than) the one in your study given that the null hypothesis is true. However, you want to know whether this is "statistically significant". Typically, if there was a 5% or less chance (5 times in 100 or less) that the difference in the mean exam performance between the two teaching methods (or whatever statistic you are using) is as different as observed given the null hypothesis is true, you would reject the null hypothesis and accept the alternative hypothesis. Alternatively, if the chance was greater than 5% (more than 5 times in 100), you would fail to reject the null hypothesis and would not accept the alternative hypothesis. As such, in this example where p = .03, we would reject the null hypothesis and accept the alternative hypothesis. We reject it because, with p = .03 (i.e., less than a 5% chance), a difference this large would occur too rarely under the null hypothesis for us to attribute it to chance alone, which supports the conclusion that the two teaching methods had different effects on exam performance.

Whilst there is relatively little justification why a significance level of 0.05 is used rather than 0.01 or 0.10, for example, it is widely used in academic research. However, if you want to be particularly confident in your results, you can set a more stringent level of 0.01 (a 1% chance or less; 1 in 100 chance or less).
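The question posed in this section (how likely is a difference at least this large if the teaching methods really do not differ?) can be answered directly by simulation. The sketch below uses a permutation test, which is one possible approach and not necessarily the test this guide has in mind: it repeatedly shuffles the group labels and counts how often the shuffled difference in means is at least as large as the observed one. The exam marks and the function name `permutation_p_value` are hypothetical.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation p-value for a difference in means."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    n_a = len(group_a)
    observed = abs(sum(group_a) / n_a - sum(group_b) / len(group_b))
    pooled = list(group_a) + list(group_b)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the labels are interchangeable, so shuffle them
        rng.shuffle(pooled)
        shuffled_a, shuffled_b = pooled[:n_a], pooled[n_a:]
        diff = abs(sum(shuffled_a) / n_a - sum(shuffled_b) / len(shuffled_b))
        if diff >= observed:
            at_least_as_extreme += 1
    return at_least_as_extreme / n_permutations


# Hypothetical exam marks (assumption, for illustration only)
seminar = [72, 68, 75, 80, 77, 69, 74, 81]
lecture_only = [65, 70, 62, 68, 71, 66, 64, 69]

p = permutation_p_value(seminar, lecture_only)
print(f"p = {p:.4f}; significant at 0.05: {p <= 0.05}; significant at 0.01: {p <= 0.01}")
```

The returned proportion is an estimate of the p-value, so it varies slightly with the seed and the number of permutations.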

One- and two-tailed predictions

When considering whether we reject the null hypothesis and accept the alternative hypothesis, we need to consider the direction of the alternative hypothesis statement. For example, the alternative hypothesis that was stated earlier is:

| Alternative Hypothesis (HA): | Undertaking seminar classes has a positive effect on students' performance. |

The alternative hypothesis tells us two things. First, what predictions did we make about the effect of the independent variable(s) on the dependent variable(s)? Second, what was the predicted direction of this effect? Let's use our example to highlight these two points.

Sarah predicted that her teaching method (independent variable: teaching method), whereby she required her students to attend not only lectures but also seminars, would have a positive effect on (that is, would increase) students' performance (dependent variable: exam marks). If an alternative hypothesis has a direction (and this is how you want to test it), the hypothesis is one-tailed. That is, it predicts the direction of the effect. If the alternative hypothesis had stated that the effect was expected to be negative, this would also be a one-tailed hypothesis.

Alternatively, a two-tailed prediction means that we do not make a choice over the direction that the effect of the experiment takes. Rather, it simply implies that the effect could be negative or positive. If Sarah had made a two-tailed prediction, the alternative hypothesis might have been:

| Alternative Hypothesis (HA): | Undertaking seminar classes has an effect on students' performance. |

In other words, we simply take out the word "positive", which implies the direction of our effect. In our example, making a two-tailed prediction may seem strange. After all, it would be logical to expect that "extra" tuition (going to seminar classes as well as lectures) would either have a positive effect on students' performance or no effect at all, but certainly not a negative effect. However, this is just our opinion (and hope) and certainly does not mean that we will get the effect we expect. Generally speaking, making a one-tailed prediction (and testing for it this way) is frowned upon as it usually reflects the hope of a researcher rather than any certainty that it will happen. Notable exceptions to this rule are when there is only one possible way in which a change could occur. This can happen, for example, when biological activity/presence is measured. That is, a protein might be "dormant" and the stimulus you are using can only possibly "wake it up" (i.e., it cannot possibly reduce the activity of a "dormant" protein). In addition, for some statistical tests, one-tailed tests are not possible.
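The practical consequence of choosing one or two tails shows up in the p-value. As a rough sketch, assume the test statistic is a z score that follows a standard normal distribution under the null hypothesis (a simplifying assumption; the helper name `tail_p_values` and the value 1.8 are made up for illustration):

```python
from statistics import NormalDist


def tail_p_values(z):
    """Return one-tailed and two-tailed p-values for a z statistic."""
    std_normal = NormalDist()
    upper = 1 - std_normal.cdf(z)      # alternative: the effect is positive
    lower = std_normal.cdf(z)          # alternative: the effect is negative
    two_sided = 2 * min(upper, lower)  # alternative: an effect in either direction
    return {
        "one-tailed (positive)": upper,
        "one-tailed (negative)": lower,
        "two-tailed": two_sided,
    }


results = tail_p_values(1.8)  # hypothetical test statistic
for label, p in results.items():
    print(f"{label}: p = {p:.4f}")
```

For a positive z, the two-tailed p-value is exactly twice the one-tailed (positive) value, so the same data can fall below 0.05 under a one-tailed prediction and above it under a two-tailed one. This is part of why the direction should be fixed before looking at the data.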

Rejecting or failing to reject the null hypothesis

Let's return finally to the question of whether we reject or fail to reject the null hypothesis.

If our statistical analysis shows that the p-value is below the cut-off value (the significance level) we have set (e.g., either 0.05 or 0.01), we reject the null hypothesis and accept the alternative hypothesis. Alternatively, if the p-value is above the cut-off value, we fail to reject the null hypothesis and cannot accept the alternative hypothesis. You should note that you cannot accept the null hypothesis, but only find evidence against it.
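The decision rule can be written as a few lines of code. This is only a sketch: the function name `decide` is hypothetical, and treating a p-value exactly equal to the cut-off as a rejection is a convention chosen here, since texts differ on that edge case.

```python
def decide(p_value, alpha=0.05):
    """Apply the reject / fail-to-reject rule for a given cut-off (alpha)."""
    if not 0 <= p_value <= 1:
        raise ValueError("a p-value must lie between 0 and 1")
    if p_value <= alpha:
        return "reject the null hypothesis"
    # Note the wording: we never "accept" the null, we only fail to reject it
    return "fail to reject the null hypothesis"


print(decide(0.03))              # cut-off of 0.05
print(decide(0.03, alpha=0.01))  # stricter cut-off of 0.01
```

The same p-value of 0.03 leads to different decisions under the two cut-offs, which is why the cut-off should be set before the analysis is run.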

5.1 - Introduction to Hypothesis Testing

Previously we used confidence intervals to estimate unknown population parameters. We compared confidence intervals to specified parameter values and when the specific value was contained in the interval, we concluded that there was not sufficient evidence of a difference between the population parameter and the specified value. In other words, any values within the confidence intervals were reasonable estimates of the population parameter and any values outside of the confidence intervals were not reasonable estimates. Here, we are going to look at a more formal method for testing whether a given value is a reasonable value of a population parameter. To do this we need to have a hypothesized value of the population parameter.

In this lesson we will compare data from a sample to a hypothesized parameter. In each case, we will compute the probability that a population with the specified parameter would produce a sample statistic as extreme as, or more extreme than, the one we observed in our sample. This probability is known as the p-value and it is used to evaluate statistical significance.

A test is considered to be statistically significant when the p-value is less than or equal to the level of significance, also known as the alpha (\(\alpha\)) level. For this class, unless otherwise specified, \(\alpha=0.05\); this is the most frequently used alpha level in many fields.

Sample statistics vary from the population parameter randomly. When results are statistically significant, we are concluding that the difference observed between our sample statistic and the hypothesized parameter is unlikely to be due to random sampling variation alone.
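The definition of the p-value given in this lesson can be illustrated by brute force: simulate many samples from a population that really has the hypothesized parameter, and count how often the sample mean lands at least as far from that parameter as the observed one did. The sketch below assumes a normally distributed population with a known standard deviation; the function name `simulated_p_value` and all numbers are hypothetical.

```python
import random


def simulated_p_value(sample_mean, hypothesized_mean, population_sd, n,
                      n_sims=20_000, seed=1):
    """Estimate a two-sided p-value by simulating the sampling distribution."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    observed_gap = abs(sample_mean - hypothesized_mean)
    extreme = 0
    for _ in range(n_sims):
        # Draw one sample of size n from the population described by the null hypothesis
        sim = [rng.gauss(hypothesized_mean, population_sd) for _ in range(n)]
        sim_mean = sum(sim) / n
        if abs(sim_mean - hypothesized_mean) >= observed_gap:
            extreme += 1
    return extreme / n_sims


# Hypothetical study: 25 observations with mean 106, tested against a hypothesized mean of 100
p = simulated_p_value(sample_mean=106, hypothesized_mean=100, population_sd=15, n=25)
alpha = 0.05
print(f"simulated p = {p:.4f}; statistically significant at alpha = {alpha}: {p <= alpha}")
```

In practice the same probability is obtained from a formula or a table; the simulation is only meant to show what that number represents.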

Hypothesis Testing | A Step-by-Step Guide with Easy Examples

Published on November 8, 2019 by Rebecca Bevans. Revised on June 22, 2023.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is most often used by scientists to test specific predictions, called hypotheses, that arise from theories.

There are 5 main steps in hypothesis testing:

- State your research hypothesis as a null hypothesis (H0) and an alternate hypothesis (Ha or H1).

- Collect data in a way designed to test the hypothesis.

- Perform an appropriate statistical test.

- Decide whether to reject or fail to reject your null hypothesis.

- Present the findings in your results and discussion section.

Though the specific details might vary, the procedure you will use when testing a hypothesis will always follow some version of these steps.

After developing your initial research hypothesis (the prediction that you want to investigate), it is important to restate it as a null (H0) and alternate (Ha) hypothesis so that you can test it mathematically.

The alternate hypothesis is usually your initial hypothesis that predicts a relationship between variables. The null hypothesis is a prediction of no relationship between the variables you are interested in.

- H0: Men are, on average, not taller than women.

- Ha: Men are, on average, taller than women.

For a statistical test to be valid, it is important to perform sampling and collect data in a way that is designed to test your hypothesis. If your data are not representative, then you cannot make statistical inferences about the population you are interested in.

There are a variety of statistical tests available, but many common ones are based on the comparison of within-group variance (how spread out the data is within a category) versus between-group variance (how different the categories are from one another).

If the between-group variance is large enough that there is little or no overlap between groups, then your statistical test will reflect that by showing a low p-value. This means that differences this large would be unlikely to come about by chance alone if the null hypothesis were true.

Alternatively, if there is high within-group variance and low between-group variance, then your statistical test will reflect that with a high p-value. This means that a difference of the size you measured could plausibly be due to chance.
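One common way to express the comparison of between-group and within-group variance is the F ratio used in one-way ANOVA. The sketch below computes that ratio by hand for two made-up data sets; it is an illustration of the idea, not a claim about which test a given study should use. The function name `f_ratio` and the data are hypothetical, and turning the ratio into a p-value requires the F distribution, which is left out here.

```python
from statistics import mean


def f_ratio(groups):
    """Return the ratio of between-group to within-group variance (one-way ANOVA F)."""
    all_values = [x for group in groups for x in group]
    grand_mean = mean(all_values)
    # Between-group sum of squares: how far each group mean is from the grand mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: how spread out values are around their own group mean
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical data: the same spread within groups, but different separation between them
well_separated = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
overlapping = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]

print(f"F (well separated) = {f_ratio(well_separated):.2f}")
print(f"F (overlapping)    = {f_ratio(overlapping):.2f}")
```

A larger ratio means the groups differ more relative to the noise within them, which corresponds to a lower p-value for the same group sizes.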

Your choice of statistical test will be based on the type of variables and the level of measurement of your collected data.

In the height example, the statistical test gives you:

- an estimate of the difference in average height between the two groups.

- a p-value showing how likely you are to see this difference if the null hypothesis of no difference is true.

Based on the outcome of your statistical test, you will have to decide whether to reject or fail to reject your null hypothesis.

In most cases you will use the p-value generated by your statistical test to guide your decision. And in most cases, your predetermined level of significance for rejecting the null hypothesis will be 0.05 – that is, you reject when there is a less than 5% chance that you would see results at least this extreme if the null hypothesis were true.

In some cases, researchers choose a more conservative level of significance, such as 0.01 (1%). This reduces the risk of incorrectly rejecting the null hypothesis (Type I error).
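The link between the significance level and the Type I error rate can be checked by simulation. In the sketch below, every simulated data set is drawn from a population where the null hypothesis is true, so every rejection is a false positive. It assumes a two-sided z-test with a known standard deviation of 1; the function name `type_i_error_rate` and the settings are hypothetical.

```python
import random
from statistics import NormalDist


def type_i_error_rate(alpha, n=30, n_trials=5_000, seed=42):
    """Estimate how often a true null hypothesis is rejected at a given alpha."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    rejections = 0
    for _ in range(n_trials):
        # The null hypothesis (mean = 0) is true for every simulated sample
        sample = [rng.gauss(0, 1) for _ in range(n)]
        z = (sum(sample) / n) * n ** 0.5  # sample mean divided by its standard error 1/sqrt(n)
        if abs(z) >= z_crit:
            rejections += 1  # a false positive
    return rejections / n_trials


rate_05 = type_i_error_rate(0.05)
rate_01 = type_i_error_rate(0.01)
print(f"alpha = 0.05 -> estimated Type I error rate {rate_05:.3f}")
print(f"alpha = 0.01 -> estimated Type I error rate {rate_01:.3f}")
```

The estimated rates should land close to the chosen alpha values, with some simulation noise.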

The results of hypothesis testing will be presented in the results and discussion sections of your research paper, dissertation or thesis.

In the results section you should give a brief summary of the data and a summary of the results of your statistical test (for example, the estimated difference between group means and associated p-value). In the discussion, you can discuss whether your initial hypothesis was supported by your results or not.

In the formal language of hypothesis testing, we talk about rejecting or failing to reject the null hypothesis. You will probably be asked to do this in your statistics assignments.

However, when presenting research results in academic papers we rarely talk this way. Instead, we go back to our alternate hypothesis (in this case, the hypothesis that men are on average taller than women) and state whether the result of our test did or did not support the alternate hypothesis.

If your null hypothesis was rejected, this result is interpreted as “supported the alternate hypothesis.”

These are superficial differences; you can see that they mean the same thing.

You might notice that we don’t say that we reject or fail to reject the alternate hypothesis. This is because hypothesis testing is not designed to prove or disprove anything. It is only designed to test whether a pattern we measure could have arisen spuriously, or by chance.

If we reject the null hypothesis based on our research (i.e., we find that it is unlikely that the pattern arose by chance), then we can say our test lends support to our hypothesis. But if the pattern does not pass our decision rule, meaning that it could have arisen by chance, then we say the test does not support our hypothesis.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess — it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Bevans, R. (2023, June 22). Hypothesis Testing | A Step-by-Step Guide with Easy Examples. Scribbr. Retrieved July 27, 2024, from https://www.scribbr.com/statistics/hypothesis-testing/

Significance levels: what, why, and how

The Statsig Team

In a world where data-driven decisions reign supreme, understanding statistical significance is like having a trusty compass to navigate the vast ocean of information. Just as a compass guides sailors to their destination, statistical significance helps researchers and analysts separate meaningful insights from random noise, ensuring they're on the right course.

Statistical significance is a crucial concept in data analysis, acting as a gatekeeper between coincidence and genuine patterns. It's the key to unlocking the true potential of your data, enabling you to make informed decisions with confidence.

Understanding significance levels

Statistical significance is a measure of the reliability and trustworthiness of your data analysis results. It helps you determine whether the patterns or differences you observe in your data are likely to be real or just a result of random chance.

Significance levels play a central role in hypothesis testing, a process used to make data-driven decisions. When you conduct a hypothesis test, you start with a null hypothesis (usually assuming no effect or difference) and an alternative hypothesis (proposing an effect or difference exists). The significance level you choose (commonly denoted as α) sets the threshold for rejecting the null hypothesis.

For example, if you set a significance level of 0.05 (5%), you're essentially saying, "I'm willing to accept a 5% chance of rejecting the null hypothesis when it's actually true." This means that if your p-value (the probability of observing results at least as extreme as yours, assuming the null hypothesis is true) is less than 0.05, you can reject the null hypothesis and conclude that your results are statistically significant.

However, it's crucial to understand that p-values are often misinterpreted. A common misconception is that a p-value tells you the probability that your null hypothesis is true. In reality, it only tells you the probability of observing results at least as extreme as yours if the null hypothesis were true.

Another misinterpretation is that a smaller p-value always implies a larger effect size or practical importance. While a small p-value suggests that your results are unlikely to be due to chance, it doesn't necessarily mean that the effect is large or practically meaningful.

To choose an appropriate significance level for your analysis, consider factors such as:

- The consequences of making a Type I error (false positive) or Type II error (false negative)

- The sample size and expected effect size

- The conventions in your field of study

By carefully selecting your significance level and interpreting your p-values correctly, you can make sound decisions based on your data analysis results. Remember, statistical significance is just one piece of the puzzle – always consider the practical implications and context of your findings to make truly meaningful conclusions.

Why significance levels matter

Significance levels are crucial for distinguishing meaningful patterns from random noise in data. They help businesses avoid making decisions based on chance fluctuations. Setting the right significance level ensures that resources are allocated to genuine insights.

Significance levels impact business decisions and resource allocation. A stringent significance level (e.g., 0.01) reduces false positives but may miss valuable insights. A relaxed level (e.g., 0.10) captures more potential effects but risks acting on false positives. Choosing the appropriate level depends on the cost of false positives versus false negatives for your business.

Balancing statistical significance with practical relevance is key in real-world applications. A statistically significant result may not have a meaningful impact on user experience or revenue. When choosing a significance level, consider the practical implications alongside the statistical evidence. Focus on changes that drive tangible improvements for your users and business.

Calculating statistical significance

Formulating hypotheses is the first step in calculating statistical significance. Start by defining a null hypothesis (no difference) and an alternative hypothesis (a difference exists). Choose a significance level, typically 0.01 or 0.05, which represents the probability of rejecting the null hypothesis when it's true.

Statistical tests help determine if observed differences are statistically significant. T-tests compare means between two groups, while chi-square tests analyze categorical data. ANOVA (Analysis of Variance) compares means among three or more groups. The choice of test depends on your data type and experimental design.

P-values indicate the probability of obtaining results at least as extreme as those observed if the null hypothesis is true. Compare the p-value to your chosen significance level to determine statistical significance. If the p-value is less than or equal to the significance level, reject the null hypothesis and conclude that the results are statistically significant.

To choose a significance level, consider the consequences of a Type I error (false positive) and a Type II error (false negative). For a fixed sample size and effect, a lower significance level reduces the risk of a Type I error but increases the risk of a Type II error. Balance these risks based on the context and implications of your study.
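The trade-off between the two error types can be made visible with a short calculation. The sketch below assumes a two-sided one-sample z-test with a known standard deviation and a specific true effect; the function name `type_ii_error` and the numbers are hypothetical and chosen only to show the pattern.

```python
from statistics import NormalDist


def type_ii_error(alpha, effect, sd, n):
    """Probability of missing a true mean shift of `effect` (beta) at a given alpha."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    shift = effect * n ** 0.5 / sd              # where the z statistic is centred when the effect is real
    # Power: probability that the z statistic falls beyond either critical value
    power = (1 - std_normal.cdf(z_crit - shift)) + std_normal.cdf(-z_crit - shift)
    return 1 - power


# Hypothetical scenario: true shift of 0.3 standard deviations, 50 observations
for alpha in (0.10, 0.05, 0.01):
    beta = type_ii_error(alpha, effect=0.3, sd=1.0, n=50)
    print(f"alpha = {alpha:.2f} -> Type I risk {alpha:.2f}, Type II risk {beta:.2f}")
```

With the sample size and effect held fixed, the only way to lower both risks at once is to collect more data or to study a larger effect.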

Sample size plays a crucial role in determining statistical significance. Larger sample sizes increase the power of a statistical test, making it easier to detect significant differences. However, an excessively large sample size can make even minor differences statistically significant, so consider practical relevance alongside statistical significance.
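To see how sample size alone can turn a minor difference into a statistically significant one, the sketch below holds a tiny observed difference fixed and varies only the number of observations. It assumes a one-sample z-test with a known standard deviation; the function name `p_value_for_fixed_difference` and the numbers are hypothetical.

```python
from statistics import NormalDist


def p_value_for_fixed_difference(difference, sd, n):
    """Two-sided p-value for the same observed difference at sample size n."""
    z = difference / (sd / n ** 0.5)  # the standard error shrinks as n grows
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical: an observed difference of only 0.02 standard deviations
sample_sizes = (100, 1_000, 10_000, 100_000)
p_values = [p_value_for_fixed_difference(0.02, 1.0, n) for n in sample_sizes]
for n, p in zip(sample_sizes, p_values):
    print(f"n = {n:>7,}: p = {p:.3g}")
```

The difference itself never changes, only the certainty that it is not zero, which is why the p-value says nothing about whether the difference matters in practice.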

Effect size measures the magnitude of a difference or relationship. It provides context for interpreting statistically significant results. A small p-value doesn't always imply a large effect size, so consider both when drawing conclusions and making decisions based on your analysis.
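One widely used effect size for a difference between two group means is Cohen's d, the difference in means divided by the pooled standard deviation. The article does not name a specific measure, so this choice, the function name `cohens_d`, and the data are assumptions made for illustration.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    # Pooled variance weights each group's sample variance by its degrees of freedom
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical measurements for two variants
variant = [2, 4, 6]
control = [1, 3, 5]
d = cohens_d(variant, control)
print(f"Cohen's d = {d:.2f}")
```

Because d is expressed in standard deviation units, it does not grow just because the sample gets larger, which makes it a useful companion to the p-value.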

Common pitfalls in significance testing

Overlooking sample size can lead to false conclusions. Smaller samples have less power to detect real differences, while larger samples may flag trivial differences as significant.

Misinterpreting p-values is another common mistake. A low p-value indicates strong evidence against the null hypothesis but doesn't measure the size or importance of an effect.

External factors like seasonality, marketing campaigns, or technical issues can influence results. Failing to account for these variables can skew your analysis and lead to incorrect conclusions.

To apply significance testing reliably:

- Clearly define your null and alternative hypotheses upfront. This helps frame your analysis and interpretation of results.

- Choose an appropriate significance level (usually 0.05 or 0.01) before collecting data. Stick to this predetermined level to avoid "p-hacking" or manipulating data to achieve significance.

- Use the right statistical test for your data and research question. Different tests have different assumptions and are suited for various types of data.

- Interpret results in context, considering both statistical significance and practical importance. A statistically significant result may not be meaningful if the effect size is small.

- Replicate findings with new data when possible. Consistent results across multiple studies strengthen evidence for a genuine effect.

By understanding these pitfalls and best practices for significance testing, you can make more reliable inferences from your data.

Practical applications of significance testing

Significance testing is a powerful tool for making data-driven decisions across various industries. By leveraging significance levels, product teams can optimize user experiences and drive meaningful improvements. Here's how you can apply significance testing in practice:

Using significance levels in product development

- Identify high-impact features: Conduct A/B tests to determine which product features significantly improve user engagement or satisfaction. Focus development efforts on features that demonstrate statistically significant improvements.

- Optimize user flows: Test different user flow variations to find the most intuitive and efficient paths. Use significance levels to validate that the chosen flow outperforms alternatives.

- Refine UI/UX elements: Experiment with various UI/UX elements, such as button placement, color schemes, or typography. Analyze results using significance testing to select the most effective designs.

Applying statistical significance to marketing campaigns

- Evaluate ad effectiveness: Compare the performance of different ad creatives, targeting strategies, or platforms. Use significance testing to identify the most impactful approaches and allocate marketing budgets accordingly.

- Optimize landing pages: Test different landing page variations to maximize conversion rates. Check whether each variation's difference in performance is statistically significant before implementing the most effective design.

- Refine email campaigns: Experiment with subject lines, email content, and calls to action. Use significance testing to identify the elements that drive the highest open and click-through rates.

Leveraging significance testing for data-driven decision making

- Validate business strategies: Test different pricing models, product bundles, or promotional offers. Use significance levels to determine which strategies yield the best results and align with business objectives.

- Improve customer support: Experiment with various support channels, response times, or communication styles. Analyze the significance of each approach's impact on customer satisfaction and loyalty.

- Optimize resource allocation: Test different resource allocation strategies across departments or projects. Use significance testing to identify the most efficient and effective approaches for maximizing ROI.

By embracing significance testing as a core part of their decision-making process, organizations can confidently optimize their products, marketing efforts, and overall strategies. Significance levels provide a clear framework for determining which ideas and approaches are worth pursuing, enabling teams to focus on the most impactful initiatives.

To apply significance testing, start by defining clear hypotheses, choosing a significance level in advance (e.g., 0.05), and selecting appropriate statistical tests. Collect data through well-designed experiments and analyze the results using the chosen tests. Compare the p-values obtained against the predetermined significance level to determine if the observed differences are statistically significant.
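As a rough illustration of that last step, here is a minimal Python sketch of a two-sided, two-proportion z-test for an A/B test. The conversion counts are hypothetical, the function name is made up for this example, and the z-test is only one of several tests that could fit this kind of data.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


ALPHA = 0.05  # significance level, fixed before looking at the data

# Hypothetical counts: 200 of 2000 users convert on control, 250 of 2000 on the variant.
z, p_value = two_proportion_z_test(200, 2000, 250, 2000)
significant = p_value < ALPHA
print(f"z = {z:.3f}, p = {p_value:.4f}, significant at {ALPHA}: {significant}")
```

The decision rule is the single comparison `p_value < ALPHA`; everything before it only produces the p-value.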

Remember, while significance testing is a valuable tool, it should be used in conjunction with other factors, such as practical significance, user feedback, and business goals. By combining statistical insights with a holistic understanding of your users and industry, you can make informed decisions that drive meaningful growth and success.



Statistics By Jim

Making statistics intuitive

Hypothesis Testing and Confidence Intervals

By Jim Frost

Confidence intervals and hypothesis testing are closely related because both methods use the same underlying methodology. Additionally, there is a close connection between significance levels and confidence levels. Indeed, there is such a strong link between them that hypothesis tests and the corresponding confidence intervals always agree about statistical significance.

A confidence interval is calculated from a sample and provides a range of values that likely contains the unknown value of a population parameter. To learn more about confidence intervals in general, how to interpret them, and how to calculate them, read my post about Understanding Confidence Intervals.

In this post, I demonstrate how confidence intervals work using graphs and concepts instead of formulas. In the process, I compare and contrast significance and confidence levels. You’ll learn how confidence intervals are similar to significance levels in hypothesis testing. You can even use confidence intervals to determine statistical significance.

Read the companion post for this one: How Hypothesis Tests Work: Significance Levels (Alpha) and P-values. In that post, I use the same graphical approach to illustrate why we need hypothesis tests, how significance levels and P-values can determine whether a result is statistically significant, and what that actually means.

Significance Level vs. Confidence Level

Let’s delve into how confidence intervals incorporate the margin of error. Like the previous post, I’ll use the same type of sampling distribution that showed us how hypothesis tests work. This sampling distribution is based on the t-distribution, our sample size, and the variability in our sample. Download the CSV data file: FuelsCosts.

There are two critical differences between the sampling distribution graphs for significance levels and confidence intervals: the value that the distribution centers on and the portion we shade.

The significance level chart centers on the null value, and we shade the outside 5% of the distribution.

Conversely, the confidence interval graph centers on the sample mean, and we shade the center 95% of the distribution.

The shaded range of sample means [267, 394] covers 95% of this sampling distribution. This range is the 95% confidence interval for our sample data. We can be 95% confident that the population mean for fuel costs falls between 267 and 394.

Confidence Intervals and the Inherent Uncertainty of Using Sample Data

The graph emphasizes the role of uncertainty around the point estimate. This graph centers on our sample mean. If the population mean equals our sample mean, the means of random samples from this population (N=25) will fall within this range 95% of the time.

We don’t know whether our sample mean is near the population mean. However, we know that the sample mean is an unbiased estimate of the population mean. An unbiased estimate does not tend to be too high or too low. It’s correct on average. Confidence intervals are correct on average because they use sample estimates that are correct on average. Given what we know, the sample mean is the most likely value for the population mean.

Given the sampling distribution, it would not be unusual for other random samples drawn from the same population to have means that fall within the shaded area. In other words, given that we did, in fact, obtain the sample mean of 330.6, it would not be surprising to get other sample means within the shaded range.

If these other sample means would not be unusual, we must conclude that these other values are also plausible candidates for the population mean. There is inherent uncertainty when using sample data to make inferences about the entire population. Confidence intervals help gauge the degree of uncertainty, also known as the margin of error.

Related post: Sampling Distributions

Confidence Intervals and Statistical Significance

If you want to determine whether your hypothesis test results are statistically significant, you can use either P-values with significance levels or confidence intervals. These two approaches always agree.

The relationship between the confidence level and the significance level for a hypothesis test is as follows:

Confidence level = 1 – Significance level (alpha)

For example, if your significance level is 0.05, the equivalent confidence level is 95%.

Both of the following conditions represent statistically significant results:

- The P-value in a hypothesis test is smaller than the significance level.

- The confidence interval excludes the null hypothesis value.

Further, it is always true that when the P-value is less than your significance level, the interval excludes the value of the null hypothesis.

In the fuel cost example, our hypothesis test results are statistically significant because the P-value (0.03112) is less than the significance level (0.05). Likewise, the 95% confidence interval [267, 394] excludes the null hypothesis value (260). Using either method, we draw the same conclusion.

Hypothesis Testing and Confidence Intervals Always Agree

The hypothesis testing and confidence interval results always agree. To understand the basis of this agreement, remember how confidence levels and significance levels function:

- A confidence level determines the distance between the sample mean and the confidence limits.

- A significance level determines the distance between the null hypothesis value and the critical regions.

Both of these concepts specify a distance from the mean to a limit. Surprise! These distances are precisely the same length.

A 1-sample t-test calculates this distance as follows:

The critical t-value * standard error of the mean

Interpreting these statistics goes beyond the scope of this article. But, using this equation, the distance for our fuel cost example is $63.57.
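For readers who want to see where numbers like these come from, here is a rough Python sketch that uses only the standard library. It assumes 24 degrees of freedom (N = 25, minus one) and a two-sided test at 0.05. The standard error is not stated in this article, so the code back-calculates it from the $63.57 distance. The helper names and the numerical methods (Simpson's rule and bisection) are choices made for this sketch, not how statistical software computes these values.

```python
import math


def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value, integrating the density over [0, |t|] with Simpson's rule."""
    t = abs(t)
    h = t / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3  # P(0 < T < |t|)
    return 2 * (0.5 - central_half)


def critical_t(alpha, df):
    """Two-sided critical t-value, found by bisection on two_sided_p."""
    lo, hi = 0.0, 50.0
    for _ in range(50):
        mid = (lo + hi) / 2
        if two_sided_p(mid, df) > alpha:
            lo = mid  # tails still too heavy, so move outward
        else:
            hi = mid
    return (lo + hi) / 2


# Values taken from the article.
n = 25
sample_mean = 330.6
null_mean = 260
margin = 63.57  # critical t-value * standard error of the mean
alpha = 0.05
df = n - 1

t_crit = critical_t(alpha, df)
std_err = margin / t_crit  # back-calculated assumption, not stated in the article
t_stat = (sample_mean - null_mean) / std_err
p_value = two_sided_p(t_stat, df)

print(f"critical t = {t_crit:.4f}")
print(f"standard error = {std_err:.2f}")
print(f"t statistic = {t_stat:.3f}, p-value = {p_value:.4f}")
```

Up to rounding, the p-value this produces should land close to the 0.03112 reported for the fuel cost example.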

P-value and significance level approach: If the sample mean is more than $63.57 from the null hypothesis mean, the sample mean falls within the critical region, and the difference is statistically significant.

Confidence interval approach: If the null hypothesis mean is more than $63.57 from the sample mean, the interval does not contain this value, and the difference is statistically significant.

Of course, they always agree!

The two approaches always agree as long as the same hypothesis test generates the P-values and confidence intervals and uses equivalent confidence levels and significance levels.
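The agreement can be checked with plain arithmetic. The short Python sketch below uses the sample mean (330.6) and the $63.57 distance from the fuel cost example. The function names are invented for illustration, and the null values other than 260 are hypothetical, added only to show that the two rules agree on either side of the interval.

```python
def significant_by_critical_region(sample_mean, null_mean, margin):
    """Significance-level view: the sample mean is more than `margin` from the null value."""
    return abs(sample_mean - null_mean) > margin


def confidence_interval(sample_mean, margin):
    """Confidence-interval view: the interval is centered on the sample mean."""
    return sample_mean - margin, sample_mean + margin


def significant_by_confidence_interval(sample_mean, null_mean, margin):
    """Significant when the interval excludes the null value."""
    lower, upper = confidence_interval(sample_mean, margin)
    return null_mean < lower or null_mean > upper


SAMPLE_MEAN = 330.6
MARGIN = 63.57  # critical t-value * standard error of the mean

lower, upper = confidence_interval(SAMPLE_MEAN, MARGIN)
print(f"95% CI: [{lower:.2f}, {upper:.2f}]")

# 260 is the article's null value; the others are hypothetical comparisons.
for null_mean in (200, 260, 300, 400):
    by_region = significant_by_critical_region(SAMPLE_MEAN, null_mean, MARGIN)
    by_interval = significant_by_confidence_interval(SAMPLE_MEAN, null_mean, MARGIN)
    print(null_mean, by_region, by_interval)
```

Both functions compare the same gap between the sample mean and the null value against the same distance, which is why they cannot disagree.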

Related posts: Standard Error of the Mean and Critical Values

I Really Like Confidence Intervals!

In statistics, analysts often emphasize using hypothesis tests to determine statistical significance. Unfortunately, a statistically significant effect might not always be practically meaningful. For example, a significant effect can be too small to be important in the real world. Confidence intervals help you navigate this issue!

Similarly, the margin of error in a survey tells you how near you can expect the survey results to be to the correct population value.

Learn more about this distinction in my post about Practical vs. Statistical Significance.

Learn how to use confidence intervals to compare group means!

Finally, learn about bootstrapping in statistics to see an alternative to traditional confidence intervals that does not use probability distributions and test statistics. In that post, I create bootstrapped confidence intervals.



Reader Interactions

December 7, 2021 at 3:14 pm

I am helping my Physics students use their data to determine whether they can say momentum is conserved. One of the columns in their data chart was change in momentum and ultimately we want this to be 0. They are obviously not getting zero from their data because of outside factors. How can I explain to them that their data supports or does not support conservation of momentum using statistics? They are using a 95% confidence level. Again, we want the change in momentum to be 0. Thank you.

December 9, 2021 at 6:54 pm

I can see several complications with that approach and also my lack of familiarity with the subject area limits what I can say. But here are some considerations.

For starters, I’m unsure whether the outside factors you mention bias the results systematically from zero or just add noise (variability) to the data (but not systematically bias).

If the outside factors bias the results to a non-zero value, then you’d expect larger samples to be more likely to produce confidence intervals that exclude zero. Indeed, only smaller sample sizes might produce CIs that include zero, but that would only be due to the relative lack of precision associated with small sample sizes. Limited data won’t be able to distinguish the sample value from zero even though, given the bias of the outside factors, you’d expect a non-zero value. In other words, if the bias exists, the larger samples will detect the non-zero values correctly while smaller samples might miss it.

If the outside factors don’t bias the results but just add noise, then you’d expect the confidence intervals from both small and large samples to include zero. However, you still have the issue of precision. Smaller samples will include zero because their intervals are relatively wide. Larger samples should include zero but have narrower intervals. Obviously, you can trust the larger samples more.

In hypothesis testing, when you fail to reject the null, as occurs in the unbiased discussion above, you’re not accepting the null. Failing to reject the null does not mean that the population value equals the hypothesized value (zero in your case). That’s because you can fail to reject the null due to poor quality data (high noise and/or small sample sizes). And you don’t want to draw conclusions based on poor data.

There’s a class of hypothesis testing called equivalence testing that you should use in this case. It flips the null and alternative hypotheses so that the test requires you to collect strong evidence to show that the sample value equals the null value (again, zero in your case). I don’t have a post on that topic (yet), but you can read the Wikipedia article about Equivalence Testing.

I hope that helps!

September 19, 2021 at 5:16 am

Thank you very much. When training a machine learning model using bootstrap, in the end we will have the confidence interval of accuracy. How can I say that this result is statistically significant? Do I have to convert the confidence interval to p-values first and if p-value is less than 0.05, then it is statistically significant?

September 19, 2021 at 3:16 pm

As I mention in this article, you determine significance using a confidence interval by assessing whether it excludes the null hypothesis value. When it excludes the null value, your results are statistically significant.

September 18, 2021 at 12:47 pm

Dear Jim, Thanks for this post. I am new to hypothesis testing and would like to ask you how we know that the null hypothesis value is equal to 260.

Thank you. Kind regards, Loukas