
Hypothesis in Machine Learning

The hypothesis is a common term in machine learning and data science projects. Machine learning is one of the most powerful technologies in use today, helping us predict results based on past experience. Data scientists and ML professionals conduct experiments that aim to solve a problem, and they begin by making an initial assumption about the solution. In machine learning, this assumption is known as a hypothesis.

The terms hypothesis and model are sometimes used interchangeably in machine learning. Strictly, however, a hypothesis is an assumption made by scientists, whereas a model is a mathematical representation used to test that hypothesis. In this topic, "Hypothesis in Machine Learning," we discuss a few important concepts related to hypotheses in machine learning and why they matter.

What is a Hypothesis?

A hypothesis is a guess based on some known facts that has not yet been proven. A good hypothesis is testable: testing it shows it to be either true or false.

Example: suppose a scientist claims that ultraviolet (UV) light can damage the eyes, and we assume that it may therefore also cause blindness. The scientist has only claimed that UV rays are harmful to the eyes; the blindness is our own assumption, which may or may not turn out to be true. Assumptions of this kind are called hypotheses.

The hypothesis is one of the most commonly used statistical concepts in machine learning. It is used specifically in supervised machine learning, where an ML model learns, from an available dataset, a function that best maps inputs to their corresponding outputs.
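A minimal sketch of "learning a function that best maps inputs to outputs", using only Python's standard library. Everything here is an illustrative assumption: the five data points are made up, and the candidate functions are the lines y = m·x + c whose slope m and intercept c lie on a coarse grid. The set of candidate lines plays the role of a hypothesis space H, each line is one hypothesis h, and learning is reduced to picking the line with the smallest squared error.

```python
from itertools import product

# Hypothetical toy data that roughly follow y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.0, 8.9]

def make_hypothesis(m: float, c: float):
    """Return the function h(x) = m * x + c for a fixed slope m and intercept c."""
    return lambda x: m * x + c

def squared_error(h, xs, ys) -> float:
    """Sum of squared differences between predictions h(x) and targets y."""
    return sum((h(x) - y) ** 2 for x, y in zip(xs, ys))

# A small, finite set of candidate lines: every slope/intercept pair on a coarse
# grid (the grid is an illustrative assumption, not part of the text).
slopes = [0.5 * i for i in range(-2, 7)]       # -1.0, -0.5, ..., 3.0
intercepts = [0.5 * i for i in range(-2, 5)]   # -1.0, -0.5, ..., 2.0
H = list(product(slopes, intercepts))          # hypothesis space: 63 (m, c) pairs

# "Learning" here means choosing the candidate h that best fits the data.
best_m, best_c = min(H, key=lambda mc: squared_error(make_hypothesis(*mc), xs, ys))
print(f"|H| = {len(H)}, best h: y = {best_m}x + {best_c}")  # |H| = 63, best h: y = 2.0x + 1.0
```

Real learning algorithms search far larger, usually continuous, spaces of hypotheses with optimization methods instead of exhaustive enumeration, but the idea of selecting one h from a space H is the same.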
Hypothesis Space (H) and Hypothesis (h)

There are some common methods for finding a possible hypothesis within the hypothesis space, where the hypothesis space is denoted by H and a hypothesis by h. These are defined as follows:

Hypothesis space (H): the set of all possible legal hypotheses, also known as the hypothesis set. Supervised machine learning algorithms search it for the hypothesis that best describes the target function, that is, the one that best maps inputs to outputs. It is often constrained by how the problem is framed, the choice of model, and the choice of model configuration.

Hypothesis (h): a single function from H that approximates the target function. It is primarily based on the data as well as on the bias and restrictions applied to the data. A hypothesis h maps inputs to outputs and can be evaluated as well as used to make predictions.

For a simple linear problem, a hypothesis h can be written as

\[ y = mx + c \]

where:
- y is the output (range),
- m is the slope of the line, i.e., the change in y divided by the change in x,
- x is the input (domain),
- c is the intercept (a constant).

Example: consider data points distributed on a two-dimensional coordinate plane. The hypothesis space H is the set of all legal ways of dividing the plane (for example, all possible lines) so as to map inputs to the proper outputs, and each individual way of dividing it is a hypothesis h.

Hypothesis in Statistics

Similar to a hypothesis in machine learning, a statistical hypothesis is an assumption about the outcome. However, it is falsifiable, which means it can be rejected in the presence of sufficient evidence. Unlike in machine learning, a statistical hypothesis is never simply accepted: because a test is based on probability, we either reject the hypothesis or fail to reject it. Before starting work on an experiment, we must be aware of two important types of hypotheses:

Null hypothesis (H0): a statistical hypothesis stating that no statistically significant effect exists in the given set of observations.
It is also known as a conjecture and is used in quantitative analysis, for example to test theories about markets, investment, and finance and decide whether an idea is true or false.

Alternative hypothesis (H1): the direct contradiction of the null hypothesis, meaning that if one of the two hypotheses is true, the other must be false. In other words, an alternative hypothesis is a statistical hypothesis stating that some significant effect exists in the given set of observations.

Significance Level

The significance level, usually written α, must be set before starting an experiment. It fixes the tolerated probability of error and therefore the threshold at which an effect is considered significant. A common choice is α = 0.05, which corresponds to a 95% confidence level: we accept a 5% chance of rejecting the null hypothesis when it is actually true. Similarly, a 98% confidence level corresponds to a significance level (threshold) of α = 0.02.

P-value

The p-value measures the evidence against the null hypothesis. Formally, it is the probability, assuming the null hypothesis is true, of obtaining data at least as extreme as the data actually observed. The smaller the p-value, the stronger the evidence against the null hypothesis. It is usually reported as a decimal, such as 0.035.

When a statistical test is carried out on a sample, the resulting p-value is compared with the significance level α. If the p-value is less than (or equal to) α, the effect is considered significant and the null hypothesis is rejected. If it is greater than α, there is no significant effect, and we fail to reject the null hypothesis.
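A minimal sketch of this decision rule using only Python's standard library. The experiment is a made-up example, not from the text: a coin lands heads 60 times in 100 tosses, the null hypothesis H0 is that the coin is fair (P(heads) = 0.5), and an exact two-sided binomial test supplies the p-value. The function names are hypothetical.

```python
from math import comb

def two_sided_binomial_p_value(successes: int, trials: int) -> float:
    """Exact two-sided p-value for H0: P(success) = 0.5.

    Under H0 the number of successes follows Binomial(trials, 0.5), which is
    symmetric, so the two-sided p-value is twice the smaller tail, capped at 1.
    """
    total = 2 ** trials
    upper_tail = sum(comb(trials, k) for k in range(successes, trials + 1)) / total
    lower_tail = sum(comb(trials, k) for k in range(0, successes + 1)) / total
    return min(1.0, 2 * min(upper_tail, lower_tail))

def decide(p_value: float, alpha: float = 0.05) -> str:
    """Compare the p-value with the significance level alpha chosen in advance."""
    return "reject H0" if p_value <= alpha else "fail to reject H0"

# Hypothetical experiment: 60 heads in 100 tosses.
p = two_sided_binomial_p_value(60, 100)
print(f"p = {p:.4f}: {decide(p)} at alpha = 0.05")
print(f"p = {p:.4f}: {decide(p, alpha=0.10)} at alpha = 0.10")
```

Here the p-value comes out at roughly 0.057, just above α = 0.05, so we fail to reject H0 at that level but would reject it at α = 0.10. This is why α has to be fixed before the data are examined.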
Conclusion

In supervised machine learning, where instances of inputs are mapped to outputs, the hypothesis is a very useful concept that helps approximate the target function. Hypotheses are used across analytics domains and are also an important tool for deciding whether a change should be introduced or not. The chosen hypothesis, evaluated over the entire training dataset, affects both the efficiency and the performance of the resulting models. In this topic, we have covered various important concepts related to the hypothesis in machine learning and statistics, as well as parameters such as the p-value and the significance level that help in understanding hypothesis concepts.


Intro to Machine Learning

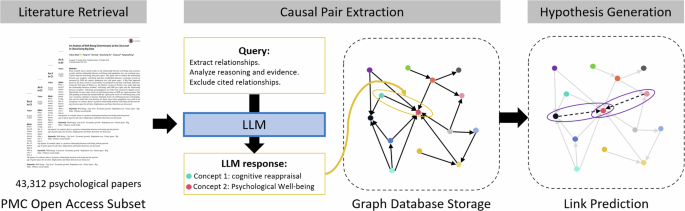

This lecture is a broad introduction to machine learning, framed around three separate aspects of machine learning algorithms. We first introduce the idea of supervised machine learning, define some notation, and finally define the three elements of a machine learning algorithm: the hypothesis class, the loss function, and the optimization method.

The Three Ingredients of Machine Learning Algorithms

Despite the seemingly vast number of machine learning algorithms, they all share a common framework that consists of three key ingredients: the hypothesis class, the loss function, and the optimization method. Understanding these three components is essential for situating the wide range of machine learning algorithms into a common framework.

Hypothesis Class: This is the set of all functions that the algorithm can choose from when learning from the data. For example, in the case of linear classification, the hypothesis class would consist of all linear functions.

Loss Function: The loss function measures the discrepancy between the predicted outputs of the model and the true outputs. It guides the learning algorithm by providing a metric for the quality of a particular hypothesis.

Optimization Method: This refers to the algorithm used to search through the hypothesis class and find the function that minimizes the loss function. Common optimization methods include gradient descent and its variants.

By specifying these three ingredients for a given task, one can define a machine learning algorithm. These ingredients are foundational to all machine learning algorithms, whether they are decision trees, boosting, neural networks, or any other method.

Running Example: Multi-class Linear Classification

The running example used throughout the lecture series is multi-class linear classification. This example serves as a practical application of the concepts of supervised machine learning. It involves classifying images into categories based on their pixel values. For instance, an image of a handwritten digit is represented as a vector of pixel values, and the goal is to classify it as one of the possible digit categories (0 through 9).

In multi-class linear classification, the hypothesis class consists of linear functions, the loss function could be something like the softmax loss, and the optimization method could be stochastic gradient descent (SGD). This example will be used to illustrate the general principles of machine learning and will be implemented in code to provide a hands-on understanding of the concepts.
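To see the three ingredients working together, here is a minimal sketch in Python with NumPy, used here in place of PyTorch. The data are an assumption for illustration: three synthetic, well-separated clusters of 2-dimensional points stand in for digit images, and labels are 0-based in code rather than 1 to k. The learning rate, batch size, and number of epochs are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical synthetic data: three well-separated clusters in n = 2 dimensions.
n, k, m = 2, 3, 300
centers = np.array([[0.0, 4.0], [4.0, -2.0], [-4.0, -2.0]])
y = rng.integers(0, k, size=m)              # labels 0..k-1 (0-based in code)
X = centers[y] + rng.normal(size=(m, n))    # m x n input matrix

def softmax(Z):
    """Row-wise softmax; subtracting each row's max avoids overflow in exp."""
    E = np.exp(Z - Z.max(axis=1, keepdims=True))
    return E / E.sum(axis=1, keepdims=True)

# Ingredient 1, hypothesis class: linear functions h_theta(x) = theta^T x,
# applied to a whole batch as X @ theta (theta is n x k).
def hypothesis(theta, X):
    return X @ theta

# Ingredient 2, loss function: average softmax (cross-entropy) loss.
def softmax_loss(theta, X, y):
    P = softmax(hypothesis(theta, X))
    return -np.mean(np.log(P[np.arange(len(y)), y]))

# Ingredient 3, optimization method: minibatch stochastic gradient descent.
theta = np.zeros((n, k))
initial_loss = softmax_loss(theta, X, y)
lr, batch_size = 0.1, 32
for epoch in range(20):
    order = rng.permutation(m)
    for start in range(0, m, batch_size):
        idx = order[start:start + batch_size]
        Xb, yb = X[idx], y[idx]
        G = softmax(hypothesis(theta, Xb))
        G[np.arange(len(yb)), yb] -= 1.0        # each row becomes softmax(h) - e_y
        theta -= lr * Xb.T @ G / len(yb)        # gradient step on the batch loss

final_loss = softmax_loss(theta, X, y)
accuracy = np.mean(hypothesis(theta, X).argmax(axis=1) == y)
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}, training accuracy {accuracy:.1%}")
```

With θ initialized to zero every class is equally likely, so the starting loss is log 3; on this easy data the loss should drop well below that. The update line relies on the standard result that the gradient of the average softmax loss with respect to θ is \(X^T(\text{softmax}(X\theta) - I_y)/m\), where \(I_y\) stacks the one-hot rows \(e_{y^{(i)}}^T\).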

Supervised Machine Learning

Machine learning offers an alternative approach to classical programming. Instead of manually encoding the logic for digit recognition, machine learning relies on providing a large set of examples of the desired input-output mappings. The machine learning algorithm then “figures out” the underlying logic from these examples.

Supervised machine learning, in particular, involves collecting numerous examples of digits, each paired with its correct label (e.g., a collection of images of the digit ‘5’ labeled as ‘5’). This collection of labeled examples is known as the training set. The training set is fed into a machine learning algorithm, which processes the data and learns to map new inputs to their appropriate outputs.

The process of machine learning is sometimes described as data-driven programming. Rather than specifying the logic explicitly, the programmer provides examples of the input-output pairs and lets the algorithm deduce the patterns and rules that govern the mapping. This approach can handle the variability and complexity of real-world data more effectively than classical programming.

Training Set and Machine Learning Algorithm

The training set is a crucial component of supervised machine learning. It consists of numerous examples of inputs (e.g., images of digits) along with their corresponding outputs (the actual digits they represent). The machine learning algorithm uses this training set to learn the patterns and features that are predictive of the output.

The machine learning algorithm itself can be thought of as a “black box” that takes the training set and produces a model capable of making predictions on new, unseen data. The specifics of how the algorithm learns from the training set and what kind of model it produces will be discussed in further detail throughout the course.

Digit Classification and Language Modeling

Two primary examples of machine learning tasks are digit classification and language modeling. In digit classification, the goal is to classify images of handwritten digits into their corresponding numerical categories. Language modeling, on the other hand, deals with predicting the next word in a sequence given the beginning of a sentence. For example, given the input “the quick brown,” the model should predict the next word, such as “fox.”

Despite the apparent differences between images and text, machine learning algorithms can treat them similarly by operating on vector representations of the data. This approach allows for a unified treatment of various types of data.

Notation and Vector Representation

Inputs in machine learning algorithms are represented as vectors, denoted by \(x \in \mathbb{R}^n\), which reside in an \(n\)-dimensional space. This means that each input vector is a collection of \(n\) real-valued numbers. For instance, an example of such a vector could be \[ x = \begin{bmatrix} 0.1 \\ 0 \\ -5 \\ \end{bmatrix}. \]

To refer to individual elements within this vector, subscripts are used. For example, \(x_2\) denotes the second element of the vector \(x\). In general, \(x_j\) represents the \(j\)-th element of the vector.
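A small sketch of this notation in NumPy (an assumption; the text itself is library-agnostic). The mathematical notation counts from 1, while NumPy arrays count from 0, so the element written \(x_j\) is stored at `x[j - 1]`; the helper `element` is hypothetical.

```python
import numpy as np

x = np.array([0.1, 0.0, -5.0])   # the example vector x in R^3
n = x.shape[0]                    # its dimensionality, n = 3

def element(x, j):
    """Return x_j using the text's 1-based convention (NumPy itself is 0-based)."""
    return x[j - 1]

print(n, element(x, 1), element(x, 3))   # 3 0.1 -5.0
```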

Training and Evaluation Sets

In practice, machine learning algorithms work not with a single input but with a set of inputs known as a training set. To denote different vectors within a training set, superscripts enclosed in parentheses are used. For example, \(x^{(i)}\) represents the \(i\)-th vector in the training set, where \(i\) ranges from \(1\) to \(m\). Here, \(m\) is the number of training examples, and \(n\) is the dimensionality of the input space.

Target Vectors

The targets or outputs, denoted by \(y\), correspond to the desired outcome for each input. In a multi-class classification setting, which is the focus of this discussion, each target takes one of \(k\) possible values: the output \(y^{(i)}\) associated with the input vector \(x^{(i)}\) is a discrete number in the set \(\{1, 2, \ldots, k\}\), where \(k\) is the number of possible classes. The evaluation set, often referred to as the test set, is another collection of ordered pairs \((\bar{x}^{(i)}, \bar{y}^{(i)})\), where \(i\) ranges from 1 to \(\bar{m}\). The vectors \(\bar{x}^{(i)}\) lie in the same space \(\mathbb{R}^n\), and the targets \(\bar{y}^{(i)}\) come from the same discrete set \(\{1, \ldots, k\}\). The evaluation set is used to assess the performance of the machine learning model on examples it was not trained on.

Multiple different settings are possible depending on the type of target:

- Multi-class Classification: This is the scenario where there are multiple classes, and each input is classified into one of these classes. For example, in digit recognition, there are 10 classes (digits 0 through 9).

- Binary Classification: A special case of classification where there are only two classes. It is distinct enough to have its own name.

- Regression: If the target is a real value, the problem is referred to as regression. There are also more complex forms, such as multi-valued or vector-valued regression, and structured prediction, which deals with complex structured output spaces.

It is important to note that while modern AI may seem to produce complex outputs like paragraphs, the underlying algorithms often operate by outputting simpler elements, like one word at a time, which collectively form a structured output. Understanding multi-class classification provides a foundation to grasp these more complex outputs.

Digit Classification Example

To illustrate the concept of the training set, consider the digit classification problem. Images of handwritten digits are represented as matrices of pixel values, where each pixel value ranges from 0 to 1, with 0 representing a black pixel and 1 representing a white pixel. For a 28 by 28 pixel image, the matrix is flattened into a vector \(x\) in \(\mathbb{R}^{784}\), where \(784 = 28 \times 28\) is the product of the image dimensions. Each image therefore corresponds to a high-dimensional vector, and the target value \(y\) is the actual digit the image represents, ranging from 0 to 9 (so here the classes are labeled 0 to 9 rather than 1 to \(k\), with \(k = 10\)).
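A minimal sketch of the flattening step, assuming NumPy. The "image" is random data standing in for a real handwritten digit; `reshape(-1)` concatenates the rows of the 28 by 28 matrix into one 784-dimensional vector.

```python
import numpy as np

# Hypothetical stand-in for one handwritten-digit image: a 28 x 28 array of
# pixel intensities in [0, 1), generated randomly instead of loaded from MNIST.
rng = np.random.default_rng(0)
image = rng.random((28, 28))

# Flatten the matrix row by row into a vector x in R^784.
x = image.reshape(-1)
print(image.shape, "->", x.shape)       # (28, 28) -> (784,)

# The pixel in row r, column c (both 0-based) lands at position 28 * r + c.
r, c = 5, 17
print(x[28 * r + c] == image[r, c])     # True
```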

Language Modeling Example

In language modeling, the input can be a sentence, such as “The quick brown fox.” The target for this input could be the next word in the sequence, for example, “jumps.” Each word in the vocabulary is assigned a unique number: the word “the” might be assigned the number 283, “quick” the number 78, “brown” the number 151, and so on. This numbering is arbitrary but must remain consistent across all inputs and examples within the model. The vocabulary size is fixed in advance, for example at 1,000 possible words, and this set of words encompasses all the terms the language model needs to recognize.

One-hot encoding is used to represent words numerically. In this encoding, a word is represented by a vector that is all zeros except for a single one at the position corresponding to the word’s assigned number. For instance, if the vocabulary size is 1,000 words and the word “the” is number 283, then the one-hot encoding for “the” is a 1,000-dimensional vector with a one at position 283 and zeros everywhere else.
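A minimal one-hot encoder, sketched with NumPy. The helper name `one_hot` is hypothetical, and word numbers are treated as 1-based (1 to 1,000) to match the text, so the one is stored at 0-based index `word_number - 1`.

```python
import numpy as np

def one_hot(word_number: int, vocab_size: int) -> np.ndarray:
    """One-hot vector for a word numbered 1..vocab_size (1-based, as in the text).

    NumPy arrays are 0-based, so the single one is stored at index word_number - 1.
    """
    if not 1 <= word_number <= vocab_size:
        raise ValueError("word number must lie in 1..vocab_size")
    v = np.zeros(vocab_size)
    v[word_number - 1] = 1.0
    return v

e_the = one_hot(283, 1000)          # "the" is word number 283
print(e_the.shape, e_the.sum(), np.flatnonzero(e_the) + 1)   # (1000,) 1.0 [283]
```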

The input to the model, \(x\), could be a vector containing the concatenation of the one-hot encodings of some past history of words. If the model is designed to take three words as input for each prediction, and the vocabulary size is 1,000 words, then \(x\) would be a 3,000-dimensional vector: positions 1 to 1,000 encode the first word, 1,001 to 2,000 the second, and 2,001 to 3,000 the third. This vector would contain mostly zeros, with ones at the positions corresponding to the input words’ numbers. For example, if the input words are numbered 283, 78, and 151, then \(x_{283}\), \(x_{1078}\), and \(x_{2151}\) would be set to one, with all other positions in the vector set to zero.
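A sketch of building this concatenated input with NumPy; `encode_context` is a hypothetical helper. Word numbers are 1-based as in the text, so the text's position p·1000 + w (with p = 0, 1, 2 for the first, second, and third word) becomes the 0-based index p·1000 + w − 1. The last two lines show that reordering the same words yields a different input vector.

```python
import numpy as np

def encode_context(word_numbers, vocab_size):
    """Concatenate the one-hot encodings of the context words into one vector.

    Word numbers are 1-based (1..vocab_size). The word at position p (0 for the
    first word) sets the 0-based entry p * vocab_size + w - 1.
    """
    x = np.zeros(len(word_numbers) * vocab_size)
    for p, w in enumerate(word_numbers):
        x[p * vocab_size + w - 1] = 1.0
    return x

x = encode_context([283, 78, 151], vocab_size=1000)   # "the quick brown"
print(x.shape, np.flatnonzero(x) + 1)   # 1-based positions 283, 1078, 2151

# Same words in a different order give a different input: order is encoded.
x_swapped = encode_context([78, 283, 151], vocab_size=1000)
print(np.array_equal(x, x_swapped))     # False
```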

The output of the model, denoted \(y\), is not one-hot encoded; it is simply the index number of the target word. If the target word is “fox” and it corresponds to index 723, then \(y = 723\).

Importance of Order

The order of words is crucial in language modeling. The input vector’s structure encodes the order of the words, with different segments of the vector representing the positions of the words in the input sequence. The concatenation of these segments ensures that the order is preserved, which is essential for the model to understand the meaning of sentences correctly.

In summary, language modeling involves assigning numbers to words, representing these words with one-hot encodings, and processing the input through a model that predicts the next word based on the numerical representation of the input sequence. The process of tokenization and the importance of maintaining the order of words are also highlighted as key components of language modeling.

Hypothesis Functions

In the context of machine learning, a hypothesis function, also referred to as a model, is a function that maps inputs to predictions. These predictions are made based on the input data, and if the hypothesis function is well-chosen, these predictions should be close to the actual targets. Formally, a hypothesis function is a function \(h : \mathbb{R}^n \rightarrow \mathbb{R}^k\) that maps inputs from \(\mathbb{R}^n\) to \(\mathbb{R}^k\), where \(n\) is the dimensionality of the input space and \(k\) is the number of classes in a classification problem.

For multiclass classification, the output of the hypothesis function is a vector in \(\mathbb{R}^k\), where each element represents a measure of belief for the corresponding class. This measure of belief is not necessarily a probability but indicates the relative confidence that the input belongs to each class. The \(j\)-th element of the vector \(h(x)\), denoted \(h(x)_j\), corresponds to the belief that the input \(x\) is of class \(j\). For example, if \[h(x) = \begin{bmatrix} -5.2 \\ 1.3 \\ 0.2 \end{bmatrix},\] this would suggest a low belief in class 1 and higher beliefs in classes 2 and 3. The class with the highest value in the vector, here class 2, is typically taken as the predicted class.
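Reading off the predicted class is a single argmax. A tiny sketch assuming NumPy: `np.argmax` returns a 0-based index, so one is added to match the text's classes 1 to k.

```python
import numpy as np

h = np.array([-5.2, 1.3, 0.2])           # h(x) from the example
predicted_class = int(np.argmax(h)) + 1  # np.argmax is 0-based; classes are 1..k
print(predicted_class)                   # 2
```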

Parameterization of Hypothesis Functions

In practice, hypothesis functions are often parameterized by a set of parameters \(\theta\), and the resulting function is written \(h_\theta\). These parameters define which specific hypothesis function from the hypothesis class is being used. The hypothesis class itself is a set of potential models from which the learning algorithm selects the most appropriate one based on the data. The choice of parameters \(\theta\) determines the behavior of the hypothesis function and its predictions.

Linear Hypothesis Class

A common example of a hypothesis class is the linear hypothesis class. In this class, the hypothesis function \(h_\theta(x)\) is defined as the matrix-vector product of \(\theta^T\) and \(x\), \[ h_\theta(x) = \theta^T x \] where \(\theta\) is a matrix of parameters and \(x\) is an input vector. The dimensions of \(\theta\) are determined by the dimensions of the input and output spaces: for an input space of \(n\) dimensions and an output space of \(k\) dimensions, \(\theta\) must be an \(n \times k\) matrix, so that \(\theta^T\) is \(k \times n\) and the output is \(k\)-dimensional. (The transpose operation \(\theta^T\) swaps the rows and columns of \(\theta\).) This function takes \(n\)-dimensional input vectors and produces \(k\)-dimensional output vectors, satisfying the requirements of the hypothesis class.
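A shape check for the linear hypothesis, sketched with NumPy and digit-sized dimensions; the parameter values and the input are random placeholders rather than trained weights or real data.

```python
import numpy as np

n, k = 784, 10                        # e.g. 28 x 28 pixel inputs, 10 digit classes
rng = np.random.default_rng(0)
theta = rng.normal(size=(n, k))       # hypothetical parameter values, n x k
x = rng.random(n)                     # one input vector in R^n

h = theta.T @ x                       # h_theta(x) = theta^T x, a vector in R^k
print(theta.shape, x.shape, h.shape)  # (784, 10) (784,) (10,)
print(theta.size)                     # number of parameters: n * k = 7840
```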

The parameters of a hypothesis function, often referred to as weights in machine learning terminology, define the specific instance of the function within the hypothesis class. These parameters are the elements of the matrix \(\theta\) in the case of a linear hypothesis class. The number of parameters, or weights, in a model can vary greatly depending on the complexity of the model. For instance, a neural network may have a large number of parameters, sometimes in the billions, which define the specific function it represents within its hypothesis class.

Understanding Matrix-Vector Products

To fully grasp the operation of a linear hypothesis function, one must be familiar with matrix-vector products. The product of the matrix \(\theta^T\) and a vector \(x\) is a new vector, each of whose elements is a linear combination of the elements of \(x\), weighted by the corresponding elements of \(\theta\).

Efficacy of Linear Models

Linear models are surprisingly effective in many machine learning tasks. Despite their simplicity, they can achieve high accuracy in problems such as digit classification. The success of linear models raises questions about why they work well and under what circumstances more complex models might be necessary. These considerations will be explored further in subsequent discussions, along with practical examples and coding demonstrations.

Matrix Representation of Linear Hypothesis Functions

One can gain some insight into the performance of such a linear hypothesis function by looking more closely at the computations being performed. Writing \(\theta_i \in \mathbb{R}^n\) for the \(i\)-th column of \(\theta\), the rows of \(\theta^T\) are \(\theta_1^T, \ldots, \theta_k^T\). When \(\theta^T\) is multiplied by the input vector \(x\), the result is a \(k \times 1\) vector. This operation can be expressed as follows:

\[ \theta^T x = \begin{bmatrix} \theta_1^T \\ \theta_2^T \\ \vdots \\ \theta_k^T \end{bmatrix} x = \begin{bmatrix} \theta_1^T x \\ \theta_2^T x \\ \vdots \\ \theta_k^T x \end{bmatrix} \]

The \(i\)-th element of the resulting vector is the inner product of \(\theta_i\) and \(x\), which can be written as:

\[ (\theta^T x)_i = \theta_i^T x = \sum_{j=1}^{n} \theta_{ji} x_j \]

where \(\theta_{ji}\) denotes the entry in row \(j\), column \(i\) of \(\theta\).

This is the sum of the products of corresponding elements of the \(i\)-th row of \(\theta^T\) and the input vector \(x\). Each element of the resulting vector is a scalar, as it is the product of a \(1 \times n\) matrix and an \(n \times 1\) matrix.
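A quick numerical check of the element-wise formula, sketched with NumPy and random, hypothetical values for θ and x: the explicit sum over \(\theta_{ji} x_j\) and the inner product of column \(\theta_i\) with \(x\) both reproduce \(\theta^T x\).

```python
import numpy as np

rng = np.random.default_rng(1)
n, k = 4, 3
theta = rng.normal(size=(n, k))   # hypothetical parameters; column i is theta_i
x = rng.normal(size=n)

# Element i of theta^T x via the explicit sum over j of theta_{ji} * x_j.
explicit = np.array([sum(theta[j, i] * x[j] for j in range(n)) for i in range(k)])

# Element i as the inner product of column theta_i with x.
inner = np.array([theta[:, i] @ x for i in range(k)])

print(np.allclose(explicit, theta.T @ x), np.allclose(inner, theta.T @ x))  # True True
```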

Template Matching in Linear Classification

The idea of template matching can help explain the effectiveness of such a model. Each \(\theta_i\) can be visualized as an image (for digits, by reshaping its 784 entries back into a 28 by 28 grid), where positive values indicate pixels that increase the score of class \(i\), and negative values indicate pixels that decrease it. When visualized, \(\theta_i\) resembles a template that matches the features of the corresponding digit. For example, the template for the digit ‘1’ might have positive values where a ‘1’ typically has pixels and negative values around them, forming a template that matches the general shape of a ‘1’.

This template matching is a fundamental aspect of linear models in vision systems, where the weights in the model serve as generic templates for the classifier. The effectiveness of this approach is demonstrated by the fact that a linear classifier can achieve approximately 93% accuracy on a digit classification task, significantly better than random guessing, which would yield only 10% accuracy.

Batch/Minibatch Notation

In the context of machine learning, particularly for vision systems, inputs and targets are often represented in a batch or minibatch format. Inputs, denoted \(x^{(i)}\), are vectors in \(\mathbb{R}^n\), where \(n\) is the dimension of the input. Targets, denoted \(y^{(i)}\), are elements of the set \(\{1, 2, \ldots, k\}\), where \(k\) is the number of classes. These inputs and targets are typically organized into matrices for batch processing.

Inputs and Targets as Matrices

Inputs are collected into an \(m \times n\) matrix \(X\), where \(m\) is the number of examples in the training set. Each row of \(X\) is a transposed input vector: the first row is \({x^{(1)}}^T\), the second row is \({x^{(2)}}^T\), and so on, up to \({x^{(m)}}^T\). The matrix \(X\) is expressed as:

\[ X = \begin{bmatrix} {x^{(1)}}^T \\ {x^{(2)}}^T \\ \vdots \\ {x^{(m)}}^T \end{bmatrix} \]

Targets are similarly organized into a vector \(Y\), an \(m\)-dimensional vector containing the target value for each example in the training set. The vector \(Y\) is expressed as:

\[ Y = \begin{bmatrix} y^{(1)} \\ y^{(2)} \\ \vdots \\ y^{(m)} \end{bmatrix} \]

The hypothesis function \(h_\theta\) can also be applied to an entire set of examples. When applied to a batch, the notation \(h_\theta(X)\) represents the application of \(h_\theta\) to every example in the batch. The result is a matrix whose \(i\)-th row is the (transposed) output of the hypothesis function for the \(i\)-th input vector:

\[ h_{\theta}(X) = \begin{bmatrix} h_{\theta}(x^{(1)})^T \\ h_{\theta}(x^{(2)})^T \\ \vdots \\ h_{\theta}(x^{(m)})^T \end{bmatrix} \]

For a linear hypothesis function \(h_\theta(x) = \theta^T x\), this takes a very simple form:

\[ h_{\theta}(X) = \begin{bmatrix} (\theta^T x^{(1)})^T \\ (\theta^T x^{(2)})^T \\ \vdots \\ (\theta^T x^{(m)})^T \end{bmatrix} = \begin{bmatrix} {x^{(1)}}^T \theta \\ {x^{(2)}}^T \theta \\ \vdots \\ {x^{(m)}}^T \theta \end{bmatrix} = X \theta \]

In other words, the linear hypothesis function applied to every example in the dataset takes the extremely simple form of a single matrix product, \(X \theta\).
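A numerical check of this identity, sketched with NumPy and small random, hypothetical matrices: applying \(h_\theta\) one example at a time and stacking the results as rows gives the same matrix as the single product \(X\theta\).

```python
import numpy as np

rng = np.random.default_rng(2)
m, n, k = 5, 4, 3
X = rng.normal(size=(m, n))        # row i is x^(i) transposed
theta = rng.normal(size=(n, k))

# Apply h_theta to each example separately and stack the outputs as rows...
rowwise = np.stack([theta.T @ X[i] for i in range(m)])
# ...which matches the single matrix product X theta.
batched = X @ theta
print(batched.shape, np.allclose(rowwise, batched))   # (5, 3) True
```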

Implementation

We can implement all these operations very easily using libraries like PyTorch: after loading a dataset such as MNIST into a matrix \(X\), applying the linear hypothesis function to all of the data is the single product \(X\theta\).
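A minimal sketch of that batch computation, written with NumPy rather than PyTorch so that it runs without extra dependencies (the batch product is the same `X @ theta` expression on PyTorch tensors). As an assumption, random arrays with MNIST's shapes stand in for the real dataset, which in PyTorch is commonly loaded via `torchvision.datasets.MNIST`, and `theta` holds small random, untrained parameters.

```python
import numpy as np

# Hypothetical stand-in for MNIST: m random 28 x 28 images with intensities in
# [0, 1) and random labels 0..9. Real data would be loaded instead.
rng = np.random.default_rng(0)
m = 1000
images = rng.random((m, 28, 28))
labels = rng.integers(0, 10, size=m)

X = images.reshape(m, -1)                        # m x 784, one flattened image per row
theta = rng.normal(scale=0.01, size=(784, 10))   # untrained, hypothetical parameters

H = X @ theta                      # h_theta applied to all m examples at once: m x 10
predictions = H.argmax(axis=1)     # predicted class index (0..9) for every example
print(X.shape, theta.shape, H.shape)   # (1000, 784) (784, 10) (1000, 10)
print(np.mean(predictions == labels))  # near chance level, since theta is untrained
```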

Loss Functions

The second ingredient of a machine learning algorithm is the loss function. This function quantifies the difference between the predictions made by the classifier and the actual target labels. It formalizes the concept of a ‘good’ prediction by assigning a numerical value to the accuracy of the predictions. The loss function is a critical component of the training process, as it guides the optimization of the model parameters to improve the classifier’s performance.

Formal Definition of Loss Function

A loss function, denoted as \[ \ell : \mathbb{R}^k \times \{1,\ldots,k\} \rightarrow \mathbb{R}_+, \] is a mapping from the output of hypothesis functions (vectors in \(\mathbb{R}^k\) for multiclass classification) and the true class in \(\{1, \ldots, k\}\) to non-negative real numbers. Analogous loss functions exist for binary classification, regression, and other machine learning tasks.

Zero-One Loss Function

One of the most straightforward loss functions is the zero-one loss, also known as the error. This loss function is defined such that it equals zero if the prediction made by the classifier is correct and one otherwise. Formally, the zero-one loss function can be expressed as follows:

\[ \ell(h_\theta(x), y) = \begin{cases} 0 & \text{if } h_\theta(x)_y > h_\theta(x)_{y'} \text{ for all } y' \neq y \\ 1 & \text{otherwise} \end{cases} \]

This means that the loss is zero if the element with the highest value in the hypothesis output corresponds to the true class, indicating a correct prediction. Conversely, if any other element is at least as large, the prediction counts as incorrect and the loss is one.
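A direct transcription of this definition for a single example, sketched with NumPy; `zero_one_loss` is a hypothetical name. The label `y` is 1-based as in the text, and because the definition uses a strict inequality, a tie for the largest score counts as an error.

```python
import numpy as np

def zero_one_loss(h, y):
    """Zero-one loss for one example; y is a 1-based class label as in the text.

    Returns 0 only if h[y] is strictly larger than every other entry.
    """
    i = y - 1                     # convert the label to a 0-based index
    others = np.delete(h, i)      # scores of all classes y' != y
    return 0 if np.all(h[i] > others) else 1

h = np.array([-5.2, 1.3, 0.2])
print(zero_one_loss(h, 2), zero_one_loss(h, 3))   # 0 1
```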

Limitations of Zero-One Loss Function

Despite its intuitive appeal, the zero-one loss function is not ideal for two main reasons. Firstly, it is not differentiable in any useful way: as a function of the parameters it is piecewise constant, so its gradient is zero almost everywhere and undefined where the predicted class changes. It therefore provides no smooth gradient to guide the improvement of the classifier, and no nuanced way to adjust the classifier’s parameters based on the degree of error in the predictions.

Secondly, the zero-one loss function does not handle the notion of stochastic or uncertain outputs well. In tasks like language modeling, where there is no single correct answer, the zero-one loss function fails to capture the probabilistic nature of the predictions. It assigns a hard loss value without considering the closeness of the prediction to the true class or the possibility of multiple plausible predictions.

Cross-Entropy Loss

The most commonly used loss function in modern machine learning is the cross-entropy loss. This loss function addresses the issue of transforming hypothesis outputs, which are often fuzzy and amorphous in terms of “belief,” into concrete probabilities. To achieve this, we introduce the softmax operator.

Softmax Operator

To define cross-entropy loss, we need a mechanism to convert real-valued predictions to probabilities. This is achieved using the softmax function. Given a hypothesis function \(h\) that maps \(n\)-dimensional real-valued inputs to \(k\)-dimensional real-valued outputs (where \(k\) is the number of classes), the softmax function is defined as follows:

\[ \text{softmax}(h(x))_i = \frac{\exp(h(x)_i)}{\sum_{j=1}^{k} \exp(h(x)_j)} \]

This function ensures that the output is a probability distribution: each element is non-negative and the sum of all elements is 1.
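A minimal softmax sketch with NumPy. One addition not in the text: the largest score is subtracted before exponentiating. This leaves the result unchanged, because the common factor \(\exp(-\max_j h(x)_j)\) cancels between numerator and denominator, but it keeps `exp` from overflowing for large scores.

```python
import numpy as np

def softmax(z):
    """Softmax of a 1-D array of scores.

    Subtracting max(z) does not change the result but prevents overflow in exp.
    """
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max())
    return e / e.sum()

p = softmax([-5.2, 1.3, 0.2])
print(p.round(3), p.sum())        # non-negative entries that sum to 1

print(softmax([1000.0, 1000.0]))  # [0.5 0.5]; a naive exp(1000) would overflow
```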

Defining Cross-Entropy Loss

The goal of cross-entropy loss is to maximize the probability of the correct class. However, for practical and technical reasons, loss functions are typically minimized rather than maximized. Therefore, the negative log probability is used. The cross-entropy loss for a single prediction and the true class is defined as:

\[ \ell_{ce}(h(x), y) = -\log(\text{softmax}(h(x))_y) \]

This loss function takes the negative natural logarithm of the softmax probability corresponding to the true class \(y\). Working with logarithms also avoids numerical problems with probabilities, which can become extremely small because of the exponentials in the softmax function. Although the underlying goal is to maximize the probability of the correct class, the cross-entropy loss is minimized instead: since the logarithm is monotonically increasing, minimizing \(-\log p\) is equivalent to maximizing \(p\). This is a common practice in optimization, where minimizing a loss function is equivalent to maximizing some form of likelihood or probability.

In the context of machine learning, the term “log” typically refers to the natural logarithm, sometimes denoted \(\ln\). For simplicity, the notation \(\log\) is used here with the understanding that it means the natural logarithm unless otherwise specified.

Simplification of Cross-Entropy Loss

The cross-entropy loss function can be simplified by examining the softmax function, which is defined as the exponential of one element divided by the sum of the exponentials of all elements. When the logarithm of the softmax function is taken, the properties of logarithms turn this ratio into the difference between the log of the numerator and the log of the denominator. Since the numerator is an exponential and the logarithm is applied to it, the two operations cancel, leaving the \(y\)-th element of the hypothesis function \(h_{\theta}(x)\).

The simplified cross-entropy loss, including the negation, is therefore \[ \ell_{ce}(h(x), y) = - h_{\theta}(x)_y + \log \sum_{j=1}^{k} \exp\left( h_{\theta}(x)_j \right) \] where \(k\) is the number of classes. The second term, the logarithm of a sum of exponentials, cannot be simplified further and is known as the log-sum-exp function.
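As a quick numerical check of the simplification, the sketch below computes the loss as \(-h_y + \log\sum_j \exp(h_j)\) and compares it with \(-\log \text{softmax}(h)_y\). The helper names are hypothetical, and the max-shift inside `logsumexp` is a standard stabilization trick added here, not something the derivation requires.

```python
import numpy as np

def logsumexp(z):
    """Numerically stable log(sum(exp(z))) over the last axis."""
    z = np.asarray(z, dtype=float)
    m = z.max(axis=-1, keepdims=True)
    return (m + np.log(np.exp(z - m).sum(axis=-1, keepdims=True))).squeeze(-1)

def ce_loss_single(h, y):
    """Cross-entropy for one example: -h[y] + log sum_j exp(h[j])."""
    return -h[y] + logsumexp(h)

h = np.array([2.0, 1.0, -1.0])   # hypothesis outputs h_theta(x) for k = 3 classes
y = 0                             # true class index
direct = -np.log(np.exp(h[y]) / np.exp(h).sum())   # -log softmax(h)_y, computed directly
print(np.isclose(ce_loss_single(h, y), direct))     # True
```

The log-sum-exp form also behaves well when the scores are large: for `h = [1000.0, 0.0]` the direct formula overflows, while `ce_loss_single` returns a loss close to 0 for class 0 and close to 1000 for class 1.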

Vector Notation and Hadamard Product

To facilitate the computation, especially when taking derivatives, the cross-entropy loss can be expressed using vector notation. We introduce the unit basis vector \(e_y\), a one-hot vector whose elements are all zero except for a one in the \(y\)th position. Since \(e_y^T h_{\theta}(x) = h_{\theta}(x)_y\), the first term of the loss can be written as the negated inner product of \(e_y\) and the hypothesis function \(h_{\theta}(x)\): \[ \ell_{ce}(h(x), y) = -e_y^T h_{\theta}(x) + \log \sum_{j=1}^{k} \exp\left( h_{\theta}(x)_j \right) \]

Batch Form of Loss Function

The loss function can also be expressed in batch form, which is useful for processing multiple examples simultaneously. The batch loss is defined as the average of the individual losses over all examples in the batch. Let \(X \in \mathbb{R}^{m \times n}\) be the matrix of input features for all examples in the batch (one example per row), and let \(Y\) be the corresponding vector of labels. The batch loss is given by: \[ \ell_{ce}(h_{\theta}(X), Y) = \frac{1}{m} \sum_{i=1}^{m} \ell_{ce}(h_{\theta}(x^{(i)}), y^{(i)}) \] where \(\ell_{ce}\) on the right-hand side is the cross-entropy loss for a single example, \(m\) is the number of examples in the batch, and \(x^{(i)}\) and \(y^{(i)}\) are the \(i\)th example and target, respectively.

Implementing both the zero-one loss and the cross entropy loss in Python is straightforward.

Zero-one loss

The zero-one loss is a simple loss function that counts misclassifications: for each example it is zero if the predicted class (the class with the largest value of the hypothesis function) matches the actual class, and one otherwise. Let the matrix \(H\) hold the hypothesis function applied to the input matrix \(X\), one row per example, and let the vector \(Y\) contain the actual class labels. To compute the 0-1 loss in Python, the argmax function identifies the column that achieves the maximum value for each example. The argmax function takes an argument specifying the dimension over which to operate; passing -1 selects the last dimension, yielding the index of the maximum value in each row of the tensor.
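A minimal NumPy sketch of this computation follows; `loss_err` is a hypothetical name, and the loss is averaged over the batch so that it returns an error rate. In PyTorch the analogous call is `H.argmax(dim=-1)`.

```python
import numpy as np

def loss_err(H, Y):
    """Average zero-one loss: fraction of rows whose argmax differs from the label.

    H: (m, k) array of hypothesis outputs, one row per example.
    Y: (m,) array of integer class labels.
    """
    predictions = H.argmax(axis=-1)   # index of the largest entry in each row
    return np.mean(predictions != Y)

H = np.array([[2.0, 0.5, 0.1],
              [0.2, 0.3, 1.5],
              [1.0, 3.0, 0.0]])
Y = np.array([0, 1, 1])
print(loss_err(H, Y))  # rows 0 and 2 are correct, row 1 is wrong: 0.333...
```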

The cross-entropy loss function is more complex than the 0-1 loss but can also be implemented concisely in Python. To compute the first term of the cross-entropy loss, we simply index into the \(y\)th element of each row of \(H\). The second term, the log-sum-exp, is computed by exponentiating the hypothesis matrix \(H\), summing over the last dimension (each row), and then taking the logarithm of the result. This term accounts for the normalization of the predicted probabilities; subtracting the first term from it gives each example's loss, and averaging over all samples completes the batch cross-entropy loss.

In an example, the cross-entropy loss between a prediction \(H\) and the true labels \(Y\) comes out to a high value of 15. The value is high because the cross-entropy heavily penalizes predictions that place a large score on a wrong class: for large score gaps, an example's loss grows roughly linearly in how far the largest score exceeds the true class's score. By comparison, predicting all zeros gives a lower loss: each row's softmax is then uniform, so every example incurs a loss of exactly \(\log k\). This highlights how sensitive the loss is to the probability assigned to the true class labels.
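The description above translates almost line by line into NumPy. In the sketch below, `loss_ce` is a hypothetical name and the inputs are made up for illustration. The sketch follows the description literally (exponentiate, sum each row, take the log) without a max-shift, so very large scores could overflow. It reproduces the \(\log k\) value for all-zero predictions and shows the large loss for a confidently wrong prediction; it does not reproduce the value of 15, whose inputs are not given here.

```python
import numpy as np

def loss_ce(H, Y):
    """Average cross-entropy over a batch.

    H: (m, k) hypothesis outputs, Y: (m,) integer labels.
    """
    m = H.shape[0]
    true_class_term = H[np.arange(m), Y]           # h_theta(x^(i))_{y^(i)} for each row
    log_sum_exp = np.log(np.exp(H).sum(axis=-1))   # log sum_j exp(h_j), one value per row
    return np.mean(-true_class_term + log_sum_exp)

Y = np.array([0, 2, 1, 1])
k = 3
# All-zero predictions: each row's softmax is uniform, so every loss equals log k.
print(np.isclose(loss_ce(np.zeros((4, k)), Y), np.log(k)))   # True

H_wrong = np.tile([0.0, 10.0, 0.0], (4, 1))   # confidently predicts class 1 every time
Y_wrong = np.array([0, 0, 2, 2])              # ...but class 1 never occurs
print(loss_ce(H_wrong, Y_wrong))              # about 10, versus log 3 ≈ 1.10 for all zeros
```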

Optimization

The final ingredient of machine learning is the optimization method. The machine learning optimization problem is to find the set of parameters \(\theta\) that minimizes the average loss between the hypothesis function's outputs and the target outputs over the entire training set:

\[ \DeclareMathOperator{\minimize}{minimize} \minimize_{\theta} \frac{1}{m} \sum_{i=1}^{m} \ell(h_{\theta}(x^{(i)}), y^{(i)}) \]

This optimization problem is at the core of all machine learning algorithms, regardless of the specific type, such as neural networks, linear regression, or boosted decision trees. The goal is to search among the class of allowable functions determined by the parameters \(\theta\) to find the one that best fits the training data according to the chosen loss function.

While the lecture will initially focus on the manual computation of derivatives, modern libraries like PyTorch offer automatic differentiation, which eliminates the need for manual derivative calculations. However, understanding the underlying process is valuable. The course will later cover automatic differentiation at a high level, and after some initial manual calculations, we will rely on PyTorch to handle derivatives.

For linear classification problems utilizing cross-entropy loss, the optimization problem is formalized as minimizing the average cross-entropy loss over all training examples. This is given by

\[ \minimize_{\theta} \frac{1}{m} \sum_{i=1}^{m} \ell_{ce}(h_{\theta}(x^{(i)}), y^{(i)}) \] where \(\theta\) represents the parameters of the linear classifier.

Gradient Descent

The gradient descent algorithm is an iterative method for finding the parameters that minimize the loss function. It repeatedly takes small steps in the direction opposite to the gradient (a multivariate analog of the derivative) of the loss function at the current point.

An analogous update rule for the parameters in the one-dimensional case is given by: \[ x_{n+1} = x_n - \alpha \cdot f'(x_n) \] where \(x_n\) is some current parameter value, \(\alpha\) is the step size (also known as the learning rate), and \(f'(x_n)\) is the derivative of the loss function with respect to the parameter at \(x_n\) .
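A minimal sketch of this one-dimensional update, applied to the illustrative function \(f(x) = (x-3)^2\) (chosen here because its derivative \(2(x-3)\) and its minimizer \(x = 3\) are known in closed form). The function name and step sizes are assumptions for the example.

```python
def gradient_descent_1d(f_prime, x0, alpha, num_steps):
    """Iterate x_{n+1} = x_n - alpha * f'(x_n) and return the final x."""
    x = x0
    for _ in range(num_steps):
        x = x - alpha * f_prime(x)
    return x

# f(x) = (x - 3)^2 has derivative f'(x) = 2(x - 3) and its minimum at x = 3.
x_final = gradient_descent_1d(lambda x: 2 * (x - 3), x0=0.0, alpha=0.1, num_steps=100)
print(round(x_final, 6))  # 3.0

# With too large a step size the iterates overshoot further each time and diverge.
x_diverged = gradient_descent_1d(lambda x: 2 * (x - 3), x0=0.0, alpha=1.1, num_steps=50)
print(abs(x_diverged - 3) > 100)  # True
```

For this function each step multiplies the distance to the minimum by \(1 - 2\alpha\), which is why \(\alpha = 0.1\) converges (factor 0.8) and \(\alpha = 1.1\) diverges (factor -1.2).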

The derivative provides the direction of the steepest ascent, and by moving in the opposite direction (negative derivative), the algorithm seeks to reduce the loss function value, moving towards a local minimum.

The Gradient

The gradient is a generalization of the derivative to functions with multivariate inputs, such as vectors or matrices. It collects the partial derivatives into an array with the same shape as the input (a vector for vector inputs, a matrix for matrix inputs) and is denoted \(\nabla f(\theta)\), where \(\theta\) is the point at which the gradient is evaluated. The gradient is only defined for functions with scalar-valued outputs.

For a function \(f: \mathbb{R}^{n \times k} \rightarrow \mathbb{R}\) , the gradient \(\nabla_\theta f(\theta)\) is an \(n \times k\) matrix given by:

\[ \nabla_\theta f(\theta) = \begin{bmatrix} \frac{\partial f(\theta)}{\partial \theta_{11}} & \cdots & \frac{\partial f(\theta)}{\partial \theta_{1k}} \\ \vdots & \ddots & \vdots \\ \frac{\partial f(\theta)}{\partial \theta_{n1}} & \cdots & \frac{\partial f(\theta)}{\partial \theta_{nk}} \end{bmatrix} \]

Each element of this matrix is the partial derivative of \(f\) with respect to the corresponding element of \(\theta\) . A partial derivative is computed by treating all other elements of \(\theta\) as constants.

The gradient has a crucial property: it points in the direction of steepest ascent of the function's value around the point where it is evaluated, and the negative gradient points in the direction of steepest descent, which is what optimization needs. This holds for functions with vector or matrix inputs, just as the derivative points in the direction of steepest ascent for functions with a single input. The property is what makes the gradient so useful in machine learning: a single gradient evaluation identifies, among all possible directions around the current point, the one that is most uphill (and, by negation, the one that is most downhill), without having to examine each direction separately.

Gradient Descent: The Core Algorithm

Gradient descent is arguably one of the most important algorithms in computer science, particularly in artificial intelligence. It is the underlying algorithm for training a wide range of AI models and has been used in almost every recent advance in AI. Gradient descent is a procedure for iteratively minimizing a function, and it consists of the following steps:

- Initialization : Begin with an initial point \(\theta\) .

- Iteration : For a predetermined number of iterations \(T\) , repeat the following update: \[ \theta := \theta - \alpha \nabla_\theta f(\theta) \] where \(\alpha\) is a positive real value known as the step size, and \(\nabla_\theta f(\theta)\) is the gradient of the function \(f\) with respect to \(\theta\) evaluated at the current point \(\theta\) .

The function \(f\) is the one to be optimized, and the choice of step size \(\alpha\) and the number of iterations \(T\) can significantly affect the optimization process. Libraries such as PyTorch can compute gradients automatically using a technique called automatic differentiation, simplifying the optimization process even further.
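The two steps above can be sketched generically, with \(\theta\) allowed to be a matrix. The objective \(f(\theta) = \tfrac{1}{2}\|\theta - A\|_F^2\) below is a hypothetical choice: its gradient \(\theta - A\) has the same shape as \(\theta\), and its minimizer is \(\theta = A\), so the result is easy to verify.

```python
import numpy as np

def gradient_descent(grad_f, theta0, alpha, T):
    """Run T steps of theta := theta - alpha * grad_f(theta); theta may be any array shape."""
    theta = np.array(theta0, dtype=float)
    for _ in range(T):
        theta = theta - alpha * grad_f(theta)
    return theta

# Illustrative objective f(theta) = 0.5 * ||theta - A||_F^2 for a 2 x 3 matrix A.
# Its gradient is theta - A (same shape as theta), and its minimizer is theta = A.
A = np.array([[1.0, -2.0, 0.5],
              [3.0,  0.0, -1.0]])
theta_star = gradient_descent(lambda th: th - A, theta0=np.zeros((2, 3)), alpha=0.5, T=50)
print(np.allclose(theta_star, A))  # True
```

In a library with automatic differentiation, `grad_f` would be computed for you instead of being written by hand.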

Practical Considerations and Variants

While the basic premise of gradient descent is straightforward, practical implementation involves several considerations. The choice of step size is critical, and the number of iterations must be chosen carefully to ensure convergence. In the context of neural networks, initialization plays a significant role, although it is less critical for convex problems.

Stochastic Gradient Descent (SGD)

Stochastic Gradient Descent (SGD) is a variant of the gradient descent algorithm, particularly useful when the objective function is an average of many individual functions. This is often the case in machine learning, where the objective is to minimize the average loss across a dataset.

For example, consider the application of SGD to multi-class linear classification. The objective function in such cases is typically the sum or average of the loss over the training examples:

\[ \min_{\theta} \frac{1}{m} \sum_{i=1}^{m} \ell(h_{\theta}(x^{(i)}), y^{(i)}) \]

where \(\ell\) is the loss function, \(h_{\theta}\) is the hypothesis function parameterized by \(\theta\) , \(x^{(i)}\) is the \(i\) -th training example, and \(y^{(i)}\) is the corresponding target value.

Batch Processing in SGD

Instead of computing the gradient over the entire dataset, which can be computationally expensive, SGD approximates the gradient using a random subset of the data, known as a batch or minibatch. The size of the batch, denoted by \(|B|\) , is typically much smaller than the size of the full dataset, allowing for more frequent updates of the parameters within the same computational budget. The approximation of the objective function using a batch is given by: \[ \frac{1}{|B|} \sum_{i \in B} \ell(h_{\theta}(x^{(i)}), y^{(i)}) \] where \(B\) is a randomly selected subset of the indices from \(1\) to \(m\) . This subset is used to compute the gradient and update the parameters.

Algorithmic Description of SGD

The SGD algorithm proceeds by initializing the parameters and then iteratively updating them by subtracting a scaled gradient computed on a random batch. The scaling factor is often referred to as the learning rate. The algorithm can be summarized as follows:

- Initialize \(\theta\) .

- Select a random batch \(B \subseteq \{1, \ldots, m\}\).

- Update \(\theta\) by subtracting a fraction of the gradient. \[ \theta := \theta - \frac{\alpha}{|B|} \sum_{i\in B} \nabla_\theta \ell(h_{\theta}(x^{(i)}), y^{(i)})\]
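The steps above can be sketched in NumPy as follows. To keep the sketch short it uses a hypothetical least-squares problem (squared loss on noiseless synthetic data) rather than classification; the function names, step size, and batch size are assumptions. The caller supplies the sum of per-example gradients over the batch, and the update divides it by \(|B|\) exactly as in the formula.

```python
import numpy as np

def sgd(grad_loss, X, y, theta0, alpha, batch_size, num_iters, seed=0):
    """Minibatch SGD: theta := theta - (alpha/|B|) * sum_{i in B} grad of the loss on example i.

    grad_loss(theta, X_B, y_B) must return the SUM of per-example gradients over the batch.
    """
    rng = np.random.default_rng(seed)
    theta = np.array(theta0, dtype=float)
    m = X.shape[0]
    for _ in range(num_iters):
        B = rng.choice(m, size=batch_size, replace=False)  # random batch of distinct indices
        theta = theta - (alpha / batch_size) * grad_loss(theta, X[B], y[B])
    return theta

# Illustrative problem: squared loss 0.5 * (theta^T x - y)^2 on noiseless synthetic data.
rng = np.random.default_rng(1)
X = rng.normal(size=(500, 3))
true_theta = np.array([2.0, -1.0, 0.5])
y = X @ true_theta

def grad_squared_loss(theta, X_B, y_B):
    # Sum over the batch of (theta^T x_i - y_i) * x_i.
    return X_B.T @ (X_B @ theta - y_B)

theta_hat = sgd(grad_squared_loss, X, y, np.zeros(3), alpha=0.1, batch_size=32, num_iters=500)
print(np.round(theta_hat, 3))
```

Because the synthetic targets contain no noise, every batch gradient vanishes at `true_theta`, so the iterates settle there rather than fluctuating around it.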

We emphasize that while the intermediate steps of deriving gradients may appear complex, the process is manageable and will eventually be simplified by using automatic differentiation tools. However, understanding the mechanics of SGD is important for grasping the underlying principles of optimization in machine learning.

In practice, rather than selecting a completely random subset each time, the dataset is often divided into batches of a fixed size, and the algorithm iterates over these batches. This approach is known as mini-batch gradient descent. The size of the mini-batch is a trade-off between computational efficiency and the frequency of updates. While a batch size of one (pure SGD) maximizes the number of updates, it is not computationally efficient due to the reliance on matrix-vector products rather than matrix-matrix products. Common batch sizes are 32 or 64, which balance the speed of updates with computational efficiency.

Cyclic SGD is a variant where the entire dataset is iterated over in chunks or batches, possibly in a randomized order. This method is harder to analyze mathematically compared to the true form of SGD, where a new random subset is selected at each iteration. However, cyclic SGD is a reasonable approximation used in practice.
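One common way to realize the cyclic variant is to shuffle the indices once per pass (epoch) and then cut them into consecutive chunks; reshuffling every epoch is an assumption of this sketch, and `epoch_batches` is a hypothetical helper name.

```python
import numpy as np

def epoch_batches(m, batch_size, rng):
    """Yield index arrays that cover 0..m-1 exactly once, in a freshly shuffled order.

    The last batch is smaller when batch_size does not divide m.
    """
    order = rng.permutation(m)
    for start in range(0, m, batch_size):
        yield order[start:start + batch_size]

rng = np.random.default_rng(0)
batches = list(epoch_batches(10, 4, rng))
print([len(b) for b in batches])   # [4, 4, 2]
```

Each epoch visits every example exactly once, unlike true SGD, where the same example may be drawn in consecutive iterations.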

Computational Trade-offs

The choice of batch size in SGD is influenced by the trade-off between the accuracy of the gradient direction and the speed of computation. Smaller batch sizes allow for more frequent updates but may provide a rougher approximation of the true gradient. Conversely, larger batch sizes use computational resources more efficiently but update the parameters less frequently. The optimal batch size depends on the specific problem and computational constraints.

Computing the Stochastic Gradient Descent Updates

To perform SGD updates, we need to compute the gradient of the loss function with respect to the parameters. In multi-class linear classification, the loss is the cross-entropy loss applied to the linear hypothesis \(h_\theta(x) = \theta^T x\), which depends on the parameters \(\theta\) and the input features \(x\). The cross-entropy loss can be expressed as:

\[ \ell_{ce}(\theta^T x, y) = -e_y^T (\theta^T x) + \log \left( \sum_{j=1}^{k} \exp\left(\theta_j^T x\right) \right) \]

where \(e_y\) is the basis vector corresponding to the true class, \(\theta\) is the parameter matrix, and \(k\) is the number of classes. The \(j\) -th column of \(\theta\) , denoted as \(\theta_j\) , represents the parameters corresponding to the \(j\) -th class.

Elementwise computation of the Gradient

The gradient of the loss function with respect to \(\theta\) is a matrix of partial derivatives, so we can compute it one element \(\theta_{rs}\) at a time. For the first (linear) term of the loss, since \((\theta^T x)_y = \sum_{i=1}^{n} \theta_{iy} x_i\), the partial derivative is: \[ \frac{\partial}{\partial \theta_{rs}} \left( -e_y^T \theta^T x \right) = -x_r \, \mathbb{1}\{s = y\} \] where \(\mathbb{1}\{s = y\}\) is the indicator function that equals 1 if the true class label \(y\) equals \(s\) and 0 otherwise, and \(x_r\) is the \(r\)th feature of \(x\).

Next we consider the partial derivative of the log-sum-exp term. The derivative of the log of a function is the derivative of the function divided by the function itself. Applying this to the log-sum-exp term, and noting that only the \(j = s\) summand depends on \(\theta_{rs}\), the derivative with respect to \(\theta_{rs}\) is:

\[ \begin{align} \frac{\partial}{\partial \theta_{rs}} \log \left( \sum_{j=1}^{k} \exp \left( \sum_{i=1}^{n} \theta_{ij} x_i \right) \right) & = \frac{\frac{\partial}{\partial \theta_{rs}} \sum_{j=1}^{k} \exp \left( \sum_{i=1}^{n} \theta_{ij} x_i \right)}{ \sum_{j=1}^{k} \exp \left( \sum_{i=1}^{n} \theta_{ij} x_i \right) } \\ & = \frac{\exp \left( \sum_{i=1}^{n} \theta_{is} x_i \right) x_r}{ \sum_{j=1}^{k} \exp \left( \sum_{i=1}^{n} \theta_{ij} x_i \right) } \\ & = \text{softmax}(\theta^T x)_s \, x_r \end{align} \]

Gradient in Native Matrix Form

The gradient of a function with respect to its parameters can be expressed in a native matrix form, which simplifies both its representation and its calculation. Combining the two partial derivatives above, the \(rs\) element of the gradient is \(x_r \left(\text{softmax}(\theta^T x)_s - \mathbb{1}\{s = y\}\right)\), so we can represent all elements of the gradient simultaneously as \[ \nabla_\theta \ell_{ce}(\theta^T x, y) = -x (\mathbf{e}_y - \text{softmax}(\theta^T x))^T. \]

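Thus, the gradient of the loss for a single example is the outer product of the example vector \(x\) and the difference between the probabilistic prediction \(\text{softmax}(\theta^T x)\) and the one-hot target vector \(e_y\). This form is intuitive: a gradient descent step moves the column \(\theta_y\) of the true class toward \(x\) in proportion to \(1 - \text{softmax}(\theta^T x)_y\), and moves every other column \(\theta_j\) away from \(x\) in proportion to the probability \(\text{softmax}(\theta^T x)_j\) currently assigned to class \(j\), reducing the mismatch between the predicted probabilities and the target distribution.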

Secret of Vector Calculus

Although we can always derive gradients element by element in this manner, there is an approach that is easier in practice and works for most practical cases. The core idea is to treat all the elements in an expression as scalars, compute the derivatives using ordinary scalar calculus, and then determine the form of the gradient by matching sizes. This shortcut is not guaranteed to work, but it very often gives the correct gradient. To see how this works, we first write the cross-entropy loss of a linear classifier in “vector” form \[ \ell_{ce}(\theta^T x,y) = -e_y^T \theta^T x + \log(\mathbf{1}^T \exp(\theta^T x)) \] where \(\mathbf{1}\) represents the vector of all ones. Now taking the derivative with respect to \(\theta\), treating all values as scalars, and applying the chain rule, we have \[ \frac{\partial}{\partial \theta} \ell_{ce}(\theta^T x,y) = -e_y x + \frac{\exp(\theta^T x) x}{\mathbf{1}^T \exp(\theta^T x)} = x \left(-e_y + \text{softmax}(\theta^T x)\right). \] The gradient must be \(n \times k\), while \(x\) is \(n \times 1\) and the term in parentheses is \(k \times 1\); re-arranging terms so that the sizes match gives, as above, \[ \nabla_\theta \ell_{ce}(\theta^T x, y) = -x (\mathbf{e}_y - \text{softmax}(\theta^T x))^T. \]

Batch Gradient Computation

This gradient can easily be extended to a batch of examples. Overloading the loss function to handle batches, the batch version of the hypothesis function is the matrix product \(X\theta\), where \(X \in \mathbb{R}^{m \times n}\) is the matrix of input features for the entire batch. The derivative of the loss summed over the batch (divide by \(m\) for the gradient of the average loss) is: \[ \frac{\partial}{\partial \theta} \ell_{ce}(X\theta, Y) = X^T \left(\text{softmax}(X\theta) - I_Y\right) \] where \(I_Y \in \mathbb{R}^{m \times k}\) is the matrix whose rows are the one-hot encoded targets of the batch, and \(\text{softmax}(X\theta)\) applies the softmax function to each row of \(X\theta\). The resulting gradient is an \(n \times k\) matrix, where \(n\) is the number of features and \(k\) is the number of classes.
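A NumPy sketch of the batch formula follows. Its last lines confirm on random data that \(X^T(\text{softmax}(X\theta) - I_Y)\) equals the sum of the single-example gradients \(-x(e_y - \text{softmax}(\theta^T x))^T\). The helper names are hypothetical, and the max-shift in `softmax_rows` is an added stabilization that does not change the result.

```python
import numpy as np

def softmax_rows(Z):
    Z = Z - Z.max(axis=1, keepdims=True)   # stabilize; does not change the result
    E = np.exp(Z)
    return E / E.sum(axis=1, keepdims=True)

def batch_ce_gradient(X, Y, theta):
    """Gradient of the SUMMED cross-entropy over the batch: X^T (softmax(X theta) - I_Y).

    X: (m, n) inputs, Y: (m,) integer labels, theta: (n, k). Returns an (n, k) array.
    """
    k = theta.shape[1]
    I_Y = np.eye(k)[Y]                     # (m, k) one-hot targets, one row per example
    return X.T @ (softmax_rows(X @ theta) - I_Y)

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 4))
Y = rng.integers(0, 3, size=5)
theta = rng.normal(size=(4, 3))
G = batch_ce_gradient(X, Y, theta)

# Row i of X @ theta is (theta^T x_i)^T, so P[i] = softmax(theta^T x_i).
P = softmax_rows(X @ theta)
per_example = sum(-np.outer(X[i], np.eye(3)[Y[i]] - P[i]) for i in range(5))
print(G.shape, np.allclose(G, per_example))   # (4, 3) True
```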

Numerical Gradient Approximation

To verify the correctness of the derived gradient expression, we can use a numerical gradient approximation. Each element of the parameter matrix \(\theta\) is perturbed by a small value \(\epsilon\), and the resulting change in the cross-entropy loss, divided by the size of the perturbation, approximates the corresponding partial derivative.
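Below is a minimal NumPy sketch of such a check for a single example. It assumes central differences, \((\ell(\theta + \epsilon E_{rs}) - \ell(\theta - \epsilon E_{rs}))/(2\epsilon)\) with \(E_{rs}\) the matrix with a single one at position \((r, s)\), rather than a one-sided difference. Central differences cost two loss evaluations per entry but are more accurate. All function names are hypothetical.

```python
import numpy as np

def ce_loss(theta, x, y):
    """Cross-entropy of the linear classifier theta^T x for one example (x: (n,), y: int)."""
    h = theta.T @ x
    return -h[y] + np.log(np.exp(h).sum())

def numerical_gradient(loss, theta, eps=1e-6):
    """Central-difference estimate of d loss / d theta_rs for every entry of theta."""
    grad = np.zeros_like(theta)
    for r in range(theta.shape[0]):
        for s in range(theta.shape[1]):
            E = np.zeros_like(theta)
            E[r, s] = eps
            grad[r, s] = (loss(theta + E) - loss(theta - E)) / (2 * eps)
    return grad

def analytic_gradient(theta, x, y):
    """-x (e_y - softmax(theta^T x))^T, an (n, k) matrix."""
    h = theta.T @ x
    p = np.exp(h - h.max())
    p /= p.sum()
    e_y = np.eye(theta.shape[1])[y]
    return -np.outer(x, e_y - p)

rng = np.random.default_rng(0)
theta = rng.normal(size=(5, 4))   # randomly initialized: n = 5 features, k = 4 classes
x, y = rng.normal(size=5), 2
num = numerical_gradient(lambda th: ce_loss(th, x, y), theta)
max_abs_diff = np.max(np.abs(num - analytic_gradient(theta, x, y)))
print(max_abs_diff)   # should be tiny (round-off level) if the derivation is right
```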

The numerical gradient approximation serves as a check on the analytically derived gradient: if the numerical and analytical gradients match for a randomly initialized \(\theta\), the analytical gradient is very likely correct. The method is computationally expensive, however, since it iterates over every element of \(\theta\) and evaluates the loss function at least once more for each one. It is therefore used only to check the analytical gradient, which is then used for the actual computation.

Implementation in PyTorch

Let’s put all these elements together into a complete implementation of linear classification in Python. While the resulting code is quite small, it’s worth emphasizing how much is happening. Specifically, the implementation defines all three ingredients of a machine learning algorithm: 1. a linear hypothesis function \(h_\theta(x) = \theta^T x\); 2. the cross-entropy loss \(\ell_{ce}(h_\theta(x), y)\); 3. stochastic gradient descent, which solves the optimization problem of finding the parameters that minimize the loss over the training set via the updates \[ \theta := \theta - \frac{\alpha}{|B|} X^T(\text{softmax}(X\theta) - I_Y) \] where \(X\) and \(I_Y\) contain only the examples in the batch \(B\).
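As a hedged illustration, the following is a minimal NumPy sketch of the three ingredients; a PyTorch version would be structured similarly. The function name, hyperparameters, and the synthetic three-class data (standing in for MNIST) are assumptions, so the sketch does not reproduce the MNIST error rate quoted below. Batches are formed by reshuffling the data every epoch.

```python
import numpy as np

def softmax_regression(X, y, k, alpha=0.1, batch_size=100, epochs=10, seed=0):
    """Linear classifier h_theta(x) = theta^T x trained with minibatch SGD on cross-entropy.

    X: (m, n) float inputs, y: (m,) integer labels in [0, k). Returns theta of shape (n, k).
    """
    rng = np.random.default_rng(seed)
    m, n = X.shape
    theta = np.zeros((n, k))
    for _ in range(epochs):
        order = rng.permutation(m)                   # reshuffle, then sweep in batches
        for start in range(0, m, batch_size):
            B = order[start:start + batch_size]
            Z = X[B] @ theta
            Z -= Z.max(axis=1, keepdims=True)        # stabilize the softmax
            P = np.exp(Z)
            P /= P.sum(axis=1, keepdims=True)        # row-wise softmax(X_B theta)
            I_Y = np.eye(k)[y[B]]                    # one-hot targets for the batch
            theta -= (alpha / len(B)) * X[B].T @ (P - I_Y)
    return theta

# Synthetic stand-in for MNIST: three well-separated Gaussian blobs in 2-D plus a bias feature.
rng = np.random.default_rng(1)
centers = np.array([[0.0, 4.0], [4.0, -2.0], [-4.0, -2.0]])
y = rng.integers(0, 3, size=600)
X = np.hstack([centers[y] + rng.normal(size=(600, 2)), np.ones((600, 1))])
theta = softmax_regression(X, y, k=3, epochs=20)
error = np.mean((X @ theta).argmax(axis=1) != y)
print(f"training error: {error:.3f}")
```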

Running this example on the MNIST dataset results in a linear classifier that achieves about 7.5% error on a held-out test set. We can further visualize the columns of \(\theta\), and they look approximately like “templates” for the digits we are trying to classify. Such visualization generally won’t be possible for more complex classifiers, but this kind of “template matching” nonetheless provides reasonable intuition about what machine learning methods are doing, even for more complex classifiers.


Hypothesis Testing – A Deep Dive into Hypothesis Testing, The Backbone of Statistical Inference

- September 21, 2023

Explore the intricacies of hypothesis testing, a cornerstone of statistical analysis. Dive into methods, interpretations, and applications for making data-driven decisions.

In this Blog post we will learn:

- What is Hypothesis Testing?

- Steps in Hypothesis Testing
  - 2.1. Set up Hypotheses: Null and Alternative
  - 2.2. Choose a Significance Level (α)
  - 2.3. Calculate a test statistic and P-Value
  - 2.4. Make a Decision

- Example: Testing a new drug.

- Example in Python

1. What is Hypothesis Testing?

In simple terms, hypothesis testing is a method used to make decisions or inferences about population parameters based on sample data. Imagine being handed a die and asked whether it’s biased. By rolling it several times and analyzing the outcomes, you’d be engaging in the essence of hypothesis testing.

Think of hypothesis testing as the scientific method of the statistics world. Suppose you hear claims like “This new drug works wonders!” or “Our new website design boosts sales.” How do you know if these statements hold water? Enter hypothesis testing.

2. Steps in Hypothesis Testing

- Set up Hypotheses: Begin with a null hypothesis (H0) and an alternative hypothesis (H1).

- Choose a Significance Level (α): Typically 0.05, this is the probability of rejecting the null hypothesis when it’s actually true. Think of it as the chance of convicting an innocent person.

- Calculate a Test Statistic and P-Value: Gather evidence (data) and calculate a test statistic.

- P-value: This is the probability of observing data at least as extreme as the data actually observed, assuming the null hypothesis is true. A small p-value (typically ≤ 0.05) suggests the data are inconsistent with the null hypothesis.

- Decision Rule: If the p-value is less than or equal to α, reject the null hypothesis in favor of the alternative; otherwise, fail to reject it.

2.1. Set up Hypotheses: Null and Alternative

Before diving into testing, we must formulate hypotheses. The null hypothesis (H0) represents the default assumption, while the alternative hypothesis (H1) challenges it.

For instance, in drug testing, H0: “The new drug is no better than the existing one,” and H1: “The new drug is superior.”

2.2. Choose a Significance Level (α)

You then collect and analyze data to test H0 against H1. Based on your analysis, you decide either to reject the null hypothesis in favor of the alternative or to fail to reject it (informally, to “accept” it).

The significance level, often denoted by α, represents the probability of rejecting the null hypothesis when it is actually true.

In other words, it’s the risk you’re willing to take of making a Type I error (false positive).

Type I Error (False Positive):

- Symbolized by the Greek letter alpha (α).

- Occurs when you incorrectly reject a true null hypothesis. In other words, you conclude that there is an effect or difference when, in reality, there isn’t.

- The probability of making a Type I error equals the significance level of the test. Commonly, tests are conducted at the 0.05 significance level, which means that when the null hypothesis is true, there is a 5% chance of wrongly rejecting it.

- Commonly used significance levels are 0.01, 0.05, and 0.10, but the choice depends on the context of the study and the level of risk one is willing to accept.

Example: If a drug is not effective (truth), but a clinical trial incorrectly concludes that it is effective (based on the sample data), then a Type I error has occurred.
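The claim that α is the Type I error rate can be checked by simulation: generate many samples for which the null hypothesis is true and count how often the test rejects it. The sketch below uses a two-sided z-test with known standard deviation, chosen only because it needs nothing beyond Python's standard library; the sample size, number of trials, and seed are arbitrary assumptions.

```python
import random
from statistics import NormalDist, fmean

def two_sided_z_pvalue(sample, mu0, sigma):
    """Two-sided z-test p-value for H0: population mean == mu0, with sigma known."""
    z = (fmean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))

random.seed(0)
alpha, trials, n = 0.05, 4000, 30
rejections = 0
for _ in range(trials):
    # H0 is true by construction (mean 0), so every rejection is a Type I error.
    sample = [random.gauss(0, 1) for _ in range(n)]
    if two_sided_z_pvalue(sample, mu0=0.0, sigma=1.0) <= alpha:
        rejections += 1
rate = rejections / trials
print(rate)   # should land close to alpha = 0.05
```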

Type II Error (False Negative):

- Symbolized by the Greek letter beta (β).

- Occurs when you fail to reject a false null hypothesis. This means you conclude there is no effect or difference when, in reality, there is.

- The probability of making a Type II error is denoted by β. The power of a test (1 − β) is the probability of correctly rejecting a false null hypothesis.

Example: If a drug is effective (truth), but a clinical trial incorrectly concludes that it is not effective (based on the sample data), then a Type II error has occurred.

Balancing the Errors:

In practice, there’s a trade-off between Type I and Type II errors. Reducing the risk of one typically increases the risk of the other. For example, if you want to decrease the probability of a Type I error (by setting a lower significance level), you might increase the probability of a Type II error unless you compensate by collecting more data or making other adjustments.

It’s essential to understand the consequences of both types of errors in any given context. In some situations, a Type I error might be more severe, while in others, a Type II error might be of greater concern. This understanding guides researchers in designing their experiments and choosing appropriate significance levels.
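The trade-off can be made concrete with an approximate power calculation for a two-sided one-sample z-test. The effect size (0.3 standard deviations) and sample sizes below are hypothetical, and the approximation ignores the negligible chance of rejecting in the opposite tail. Lowering α raises β, while a larger sample lowers β at a fixed α.

```python
from statistics import NormalDist

def z_test_power(effect_size, n, alpha):
    """Approximate power of a two-sided one-sample z-test.

    effect_size: true shift of the mean, in units of the known standard deviation.
    The (negligible) probability of rejecting in the opposite tail is ignored.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return 1 - NormalDist().cdf(z_crit - effect_size * n ** 0.5)

for alpha in (0.10, 0.05, 0.01):
    power = z_test_power(effect_size=0.3, n=50, alpha=alpha)
    print(f"alpha={alpha:.2f}  beta={1 - power:.3f}  power={power:.3f}")

# Collecting more data is the usual way to lower beta without raising alpha.
print(z_test_power(0.3, 200, 0.01) > z_test_power(0.3, 50, 0.01))   # True
```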

2.3. Calculate a test statistic and P-Value

Test statistic: A test statistic is a single number that measures how far our sample data are from what we’d expect under the null hypothesis (the baseline assumption we’re testing against). Generally, the larger the test statistic in magnitude, the more evidence we have against the null hypothesis. It helps us decide whether the differences we observe in our data are plausibly due to random chance or reflect an actual effect.

P-value: The P-value tells us how likely we would be to get our observed results (or something more extreme) if the null hypothesis were true. It’s a value between 0 and 1.

- A smaller P-value (typically below 0.05) means that the observation is rare under the null hypothesis, so we might reject the null hypothesis.

- A larger P-value suggests that what we observed could easily happen by random chance, so we might not reject the null hypothesis.

2.4. Make a Decision

Relationship between α and P-Value

When conducting a hypothesis test:

We first choose the significance level α, before looking at the data.

We then calculate the test statistic and the p-value from our sample data.

Finally, we compare the p-value to our chosen α:

- If p-value ≤ α: We reject the null hypothesis in favor of the alternative hypothesis. The result is said to be statistically significant.

- If p-value > α: We fail to reject the null hypothesis. There isn’t enough statistical evidence to support the alternative hypothesis.
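The comparison can be written directly as a rule. In the sketch below the test statistic is a hypothetical z value of 2.3, converted to a two-sided p-value with the standard normal distribution from Python's standard library. The same p-value leads to rejection at α = 0.05 but not at α = 0.01.

```python
from statistics import NormalDist

def decide(p_value, alpha=0.05):
    """Decision rule: reject H0 when p-value <= alpha, otherwise fail to reject."""
    return "reject H0" if p_value <= alpha else "fail to reject H0"

# Hypothetical two-sided z-test statistic computed from some sample.
z = 2.3
p_value = 2 * (1 - NormalDist().cdf(abs(z)))   # P(|Z| >= 2.3) under H0
print(round(p_value, 3), decide(p_value))       # 0.021 reject H0
print(decide(p_value, alpha=0.01))              # fail to reject H0
```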

3. Example : Testing a new drug.

Imagine we are investigating whether a new drug treats headaches faster than a placebo.

Setting Up the Experiment: You gather 100 people who suffer from headaches. Half of them (50 people) are given the new drug (let’s call this the ‘Drug Group’), and the other half (the ‘Placebo Group’) are given a sugar pill, which doesn’t contain any medication.

- Set up Hypotheses: Before starting, you state two competing hypotheses:

  - Null Hypothesis (H0): The new drug has no effect. Any difference in healing time between the two groups is just due to random chance.

  - Alternative Hypothesis (H1): The new drug does have an effect. The difference in healing time between the two groups is significant and not just due to chance.

Calculate a Test Statistic and P-Value: After the experiment, you analyze the data. The “test statistic” is a number that expresses the difference between the two groups in standard units, that is, relative to how much healing times vary within the groups.

For instance, let’s say:

- The average healing time in the Drug Group is 2 hours.

- The average healing time in the Placebo Group is 3 hours.

The test statistic helps you understand how significant this 1-hour difference is. If the groups are large and the spread of healing times in each group is small, then this difference might be significant. But if there’s a huge variation in healing times, the 1-hour difference might not be so special.

Imagine the P-value as answering this question: “If the new drug had NO real effect, what’s the probability that I’d see a difference as extreme (or more extreme) as the one I found, just by random chance?”

For instance:

- A P-value of 0.01 means there’s a 1% chance that the observed difference (or a more extreme difference) would occur if the drug had no effect. That’s pretty rare, so we might consider the drug effective.

- A P-value of 0.5 means there’s a 50% chance of seeing a difference at least this large just by chance, even if the drug had no effect. That’s pretty high, so we might not be convinced the drug is doing much.

- If the P-value is less than or equal to α = 0.05, the results are “statistically significant,” and we reject the null hypothesis, concluding that the new drug likely has an effect.

- If the P-value is greater than α = 0.05, the results are not statistically significant, and we fail to reject the null hypothesis, remaining unsure whether the drug has a genuine effect.

4. Example in python

For simplicity, let’s say we’re using a two-sample t-test (common for comparing means), which is straightforward to run in Python.
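A minimal sketch using SciPy’s `scipy.stats.ttest_ind`. The healing times are simulated, not real trial data: 50 patients per group, drawn around the 2-hour and 3-hour averages of the example with an assumed spread of 0.8 hours. Passing `equal_var=False` selects Welch’s version of the test, which does not assume equal variances in the two groups; that choice is an assumption here.

```python
import numpy as np
from scipy import stats

# Simulated (hypothetical) healing times in hours, NOT real trial data.
rng = np.random.default_rng(42)
drug_group = rng.normal(loc=2.0, scale=0.8, size=50)
placebo_group = rng.normal(loc=3.0, scale=0.8, size=50)

# Two-sample t-test; equal_var=False gives Welch's version (no equal-variance assumption).
result = stats.ttest_ind(drug_group, placebo_group, equal_var=False)
alpha = 0.05
print(f"t = {result.statistic:.2f}, p-value = {result.pvalue:.4g}")
if result.pvalue <= alpha:
    print("The results are statistically significant! The drug seems to have an effect!")
else:
    print("Looks like the drug isn't as miraculous as we thought.")
```

With a 1-hour difference and this little spread, the p-value comes out far below 0.05. Increasing `scale` to make the healing times more variable shows how the same 1-hour difference can stop being significant.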

Making a Decision: If the p-value is at most 0.05, we’d say, “The results are statistically significant! The drug seems to have an effect!” If not, we’d say, “Looks like the drug isn’t as miraculous as we thought.”

5. Conclusion

Hypothesis testing is an indispensable tool in data science, allowing us to make data-driven decisions with confidence. By understanding its principles, conducting tests properly, and considering real-world applications, you can harness the power of hypothesis testing to unlock valuable insights from your data.


© Machinelearningplus. All rights reserved.


Best Guesses: Understanding The Hypothesis in Machine Learning

- February 22, 2024


Machine learning is a vast and complex field that has inherited many terms from other places all over the mathematical domain.

It can sometimes be challenging to get your head around all the different terminologies, never mind trying to understand how everything comes together.

In this blog post, we will focus on one particular concept: the hypothesis.

While you may think this is simple, there is a little caveat in machine learning: the term has both a statistics side and a learning side.

Don’t worry; we’ll do a full breakdown below.

You’ll learn the following:

- What is a hypothesis in machine learning?

- Is this any different from the hypothesis in statistics?

- What is the difference between the alternative hypothesis and the null?

- Why do we restrict hypothesis space in artificial intelligence?

- Example code performing hypothesis testing in machine learning

In machine learning, the term ‘hypothesis’ can refer to two things.

First, it can refer to a hypothesis in the learning sense: a candidate function the algorithm could return to map inputs to outputs. The hypothesis space is the set of all such candidate functions the algorithm can choose from.

Second, it can refer to the traditional null and alternative hypotheses from statistics.

Since machine learning works so closely with statistics, when someone references "the hypothesis" they are often referring to hypothesis tests from statistics.

Is This Any Different Than The Hypothesis In Statistics?

In statistics, the hypothesis is an assumption made about a population parameter.

The statistician’s goal is to decide whether the data provide enough evidence to reject it.

This will take the form of two different hypotheses, one called the null, and one called the alternative.

Usually, you’ll set up your null hypothesis as an assumption that the parameter equals some value.

For example, in Welch’s t-test for unequal variances, the null hypothesis is that the two population means (the population parameters) are equal.

We run our statistical test, and if the p-value falls below our chosen significance level, we reject the null hypothesis.

This would suggest that the population means behind the two samples you are testing are not equal.

Usually, statisticians use a significance level of 0.05 as the p-value cut-off. This means accepting a 5% risk of rejecting the null hypothesis when it is actually true (a Type I error).

What Is The Difference Between The Alternative Hypothesis And The Null?

The null hypothesis is our default assumption, the one we keep unless the data provide strong evidence against it.

The alternative hypothesis is usually the opposite of our null and is much broader in scope.

For most statistical tests, the null and alternative hypotheses are already defined.

You are then just looking for "significant" evidence that lets you reject the null hypothesis.

These two hypotheses are easy to spot by their specific notation. The null hypothesis is usually denoted by H₀, while H₁ denotes the alternative hypothesis.

Example Code Performing Hypothesis Testing In Machine Learning

Since there are many different hypothesis tests in machine learning and data science, we will focus on one of my favorites.

This test is Welch’s T-Test Of Unequal Variance, where we are trying to determine whether the population means behind two samples are different.

There are a couple of assumptions for this test, but we will ignore those for now and show the code.

You can read more about this in our other post, Welch’s T-Test of Unequal Variance.
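A minimal sketch of Welch’s t-test in plain Python (standard library only), under stated assumptions. It computes the t statistic and the Welch-Satterthwaite degrees of freedom. It then gets a two-sided p-value by integrating Student’s t density numerically with Simpson’s rule. The helper names (`t_pdf`, `two_sided_p`, `welch_t_test`) and both groups of measurements are hypothetical, made up for illustration. In practice you would more likely call `scipy.stats.ttest_ind(a, b, equal_var=False)`, which runs the same test.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def t_pdf(x: float, df: float) -> float:
    """Density of Student's t-distribution with df degrees of freedom."""
    log_coef = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_coef) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t: float, df: float, steps: int = 10_000) -> float:
    """P(|T| >= |t|), integrating the density from 0 to |t| with Simpson's rule."""
    upper = abs(t)
    width = upper / steps  # steps must be even for Simpson's rule
    area = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * width, df)
    area *= width / 3
    return max(0.0, 1.0 - 2.0 * area)  # clamp tiny negative rounding error


def welch_t_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float, float]:
    """Return (t statistic, Welch-Satterthwaite df, two-sided p-value)."""
    se2_a = variance(a) / len(a)  # squared standard error of each sample mean
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df, two_sided_p(t, df)


# Hypothetical measurements from two groups (made-up data)
group_a = [5.1, 4.9, 5.6, 5.8, 6.0, 5.4, 5.2, 5.7]
group_b = [6.8, 7.4, 6.1, 7.9, 8.3, 6.6, 7.2, 7.7]

t, df, p = welch_t_test(group_a, group_b)
alpha = 0.05  # significance level
print(f"t = {t:.2f}, df = {df:.1f}, p = {p:.5f}")
print("Reject H0" if p < alpha else "Fail to reject H0")
```

The sketch does not assume equal variances. The degrees of freedom therefore come from the Welch-Satterthwaite formula, not from n₁ + n₂ − 2.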

We see that our p-value is very low, and we reject the null hypothesis.

What Is The Difference Between The Biased And Unbiased Hypothesis Spaces?

The difference between the biased and unbiased hypothesis spaces is how many possible examples your algorithm can make predictions for.

The unbiased space covers all of them, and the biased space only covers the training examples you’ve supplied.

Since neither of these is optimal (one is too small, one is much too big), your algorithm creates generalized rules (inductive learning) to be able to handle examples it hasn’t seen before.

Here’s an example of each:

Example of The Biased Hypothesis Space In Machine Learning

The biased hypothesis space in machine learning is a restricted subspace where your algorithm does not consider every possible example, only the ones it was trained on, when making predictions.

This is easiest to see with an example.

Let’s say you have the following data:

Happy and Sunny and Stomach Full = True

Whenever your algorithm sees those three together in the biased hypothesis space, it will return True.

This means when your algorithm sees:

Sad and Sunny and Stomach Full = False

It’ll automatically default to False since it didn’t appear in our subspace.

This is a very restrictive approach, but it has some practical applications.
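Here is a minimal sketch of that behavior. It assumes the biased learner is a pure rote memorizer: it stores each combination labeled True and answers False for anything it has not seen. `RoteLearner` and its methods are hypothetical names used for illustration.

```python
from typing import FrozenSet, Iterable, Set


class RoteLearner:
    """Hypothetical 'biased space' learner: it memorizes the exact attribute
    combinations labeled True and knows nothing else."""

    def __init__(self) -> None:
        self.memorized: Set[FrozenSet[str]] = set()

    def fit(self, positive_examples: Iterable[Iterable[str]]) -> "RoteLearner":
        for example in positive_examples:
            self.memorized.add(frozenset(example))  # order of attributes is irrelevant
        return self

    def predict(self, example: Iterable[str]) -> bool:
        # True only for a combination seen in training; everything else defaults to False
        return frozenset(example) in self.memorized


learner = RoteLearner().fit([{"Happy", "Sunny", "Stomach Full"}])
print(learner.predict({"Happy", "Sunny", "Stomach Full"}))  # True
print(learner.predict({"Sad", "Sunny", "Stomach Full"}))    # False
```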

Example of the Unbiased Hypothesis Space In Machine Learning

The unbiased hypothesis space is a space that contains every possible combination, that is, every possible way of labeling the examples.

We can reuse our example above.

This would start to break down as:

Happy = True

Happy and Sunny = True

Happy and Stomach Full = True

Let’s say each of the three attributes can take four values.

That gives 4 × 4 × 4 = 64 distinct instances. A space holding every possible way of labeling them would need 2^64 (about 1.8 × 10^19) hypotheses, just for our little three-attribute problem.

This is practically impossible; the space would become huge.

So while it would be highly accurate, this has no scalability.
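You can check this growth quickly with a short script. It assumes each attribute value is encoded as an integer and that a hypothesis is any True/False labeling of every possible instance. The function names are hypothetical, chosen for illustration.

```python
from itertools import product
from typing import List, Sequence, Tuple


def instance_space(values_per_attribute: Sequence[int]) -> List[Tuple[int, ...]]:
    """Every distinct instance: one value picked for each attribute."""
    return list(product(*(range(v) for v in values_per_attribute)))


def unbiased_space_size(values_per_attribute: Sequence[int]) -> int:
    """An unbiased hypothesis space contains every possible True/False labeling
    of the instance space, i.e. 2 ** |X| hypotheses."""
    return 2 ** len(instance_space(values_per_attribute))


# Three attributes (mood, weather, stomach) with four values each
print(len(instance_space([4, 4, 4])))   # 64
print(unbiased_space_size([4, 4, 4]))   # 18446744073709551616

# With three yes/no attributes the space is small enough to enumerate outright
X = instance_space([2, 2, 2])
all_hypotheses = list(product([False, True], repeat=len(X)))
print(len(X), len(all_hypotheses))      # 8 256
```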

More reading on this idea can be found in our post, Inductive Bias In Machine Learning.

Why Do We Restrict Hypothesis Space In Artificial Intelligence?

We have to restrict the hypothesis space in machine learning. Without any restrictions, our domain becomes much too large, and we lose any form of scalability.

This is why our algorithm creates general rules to handle new, unseen examples that show up in production.

This gives our algorithms a generalized approach that will be able to handle all new examples that are in the same format.
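Find-S, a classic concept-learning algorithm, is one way to see a restricted space generalize. It is swapped in here for illustration, since the post does not name a specific algorithm. Find-S limits the hypothesis space to conjunctions of attribute values, where `?` means "any value". Starting from positive examples, it generalizes only as far as it must. The attribute values and training data below are hypothetical.

```python
from typing import List, Sequence

ANY = "?"  # wildcard: this attribute may take any value


def find_s(positives: Sequence[Sequence[str]]) -> List[str]:
    """Find-S: start from the first positive example and generalize an
    attribute to ANY whenever a later positive example disagrees."""
    hypothesis = list(positives[0])
    for example in positives[1:]:
        for i, value in enumerate(example):
            if hypothesis[i] != value:
                hypothesis[i] = ANY
    return hypothesis


def predict(hypothesis: Sequence[str], example: Sequence[str]) -> bool:
    """An example is positive if it matches every non-wildcard attribute."""
    return all(h == ANY or h == v for h, v in zip(hypothesis, example))


# Attributes: (mood, weather, stomach), hypothetical training data
positives = [("Happy", "Sunny", "Full"), ("Happy", "Cloudy", "Full")]
h = find_s(positives)
print(h)                                        # ['Happy', '?', 'Full']
print(predict(h, ("Happy", "Rainy", "Full")))   # True, although never seen in training
print(predict(h, ("Sad", "Sunny", "Full")))     # False
```

This restricted space never holds more than a handful of conjunctive rules. Unlike the rote learner, it still assigns a label to examples it has not seen before.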


Hypothesis in Machine Learning: Comprehensive Overview (2021)

Introduction

Supervised machine learning (ML) is often described as the problem of approximating a target function that maps inputs to outputs. This is framed as searching through and evaluating candidate hypotheses from hypothesis spaces.

The discussion of hypotheses in machine learning can be confusing for a beginner. This is especially true because "hypothesis" has a distinct but related meaning in statistics and, more broadly, in science.

Hypothesis Space (H)

The hypothesis space used by an ML system is the set of all hypotheses it might return. It is usually defined by a hypothesis language, possibly combined with a language bias.

Many ML algorithms rely on some kind of search strategy. Given a set of observations and a space of all candidate hypotheses, they search that space for the hypotheses that fit the data adequately or are best according to some other quality criterion.

ML can be described as using the available data to find a function that best maps inputs to outputs, a task called function approximation. Here we approximate an unknown target function that maps inputs to outputs as reliably as possible on all plausible observations from the problem domain. A model that approximates the target function and performs the mapping from inputs to outputs is called a hypothesis in machine learning.
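Here is a minimal sketch of that search. It assumes a tiny, finite hypothesis space of lines y = m*x + b on a coarse grid and uses mean squared error as the quality criterion. The data and the grid are made up for illustration. Real learners usually search continuous spaces with optimization methods instead of enumerating every candidate.

```python
from itertools import product

# Hypothetical training data, roughly following y = 2x + 1
xs = [0, 1, 2, 3, 4]
ys = [1.1, 2.9, 5.2, 7.1, 8.8]

# A small, finite hypothesis space H: every line y = m*x + b on a coarse grid
slopes = [i * 0.5 for i in range(7)]       # 0.0, 0.5, ..., 3.0
intercepts = [j * 0.5 for j in range(5)]   # 0.0, 0.5, ..., 2.0
H = list(product(slopes, intercepts))


def mse(hypothesis):
    """Quality criterion: mean squared error of one candidate line on the data."""
    m, b = hypothesis
    return sum((m * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


best_h = min(H, key=mse)  # search H for the best-fitting hypothesis
print(len(H), best_h)     # 35 (2.0, 1.0)
```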

The hypothesis class in machine learning is the set of all candidate hypotheses you are searching over, regardless of their form. For convenience, the hypothesis class is usually restricted to one type of function or model at a time, since learning methods typically work on one type at a time. This doesn’t have to be the case, however:

- Hypothesis classes don’t have to consist of just one kind of function. If you’re searching over exponential, quadratic, and general linear functions, those are what your combined hypothesis class contains.

- Hypothesis classes also don’t have to consist of just simple functions. If you manage to search over all piecewise-tanh2 functions, those functions are what your hypothesis class includes.

The big trade-off is that the bigger your hypothesis class, the better its best hypothesis can model the underlying true function, but the harder it is to find that best hypothesis. This is related to the bias-variance trade-off.

Hypothesis (h)

A hypothesis function in machine learning is the function that best describes the target. The hypothesis an algorithm comes up with depends on the data and on the bias and restrictions we have imposed on the data.

The hypothesis formula in machine learning:

$$y = mx + b$$

- y is the range (the output)

- m is the slope: the change in y divided by the change in x

- x is the domain (the input)

- b is the intercept
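One common way to choose m and b for the linear hypothesis y = mx + b is ordinary least squares. It is swapped in here for illustration, because the text does not say how the line is fitted. The data points and the helper names `fit_line` and `h` are hypothetical.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for the hypothesis h(x) = m*x + b."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    # m = covariance(x, y) / variance(x): the fitted change in y per unit change in x
    m = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
         / sum((x - x_bar) ** 2 for x in xs))
    b = y_bar - m * x_bar  # the fitted line passes through (x_bar, y_bar)
    return m, b


def h(x: float, m: float, b: float) -> float:
    """The hypothesis: maps an input x (domain) to a prediction y (range)."""
    return m * x + b


xs = [1, 2, 3, 4, 5]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]   # hypothetical data
m, b = fit_line(xs, ys)
print(f"h(x) = {m:.2f}x + {b:.2f}")   # h(x) = 1.97x + 1.09
print(round(h(6, m, b), 2))           # 12.91
```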

The purpose of restricting the hypothesis space in machine learning is to make the hypotheses fit the general data the user needs. The learner checks whether observations or inputs hold true and examines them accordingly. This makes it very useful, since it performs the valuable function of mapping every input to an output. Consequently, in ML the target functions are deliberately examined and restricted based on the results, whether or not they are free of bias.

The hypothesis space and inductive bias in machine learning differ as follows. The hypothesis space is a collection of valid hypotheses, that is, all the desirable functions. The inductive bias (also called learning bias) of a learning algorithm is the set of assumptions the learner uses to predict outputs for inputs it has not encountered. Regression and classification are kinds of learning that deal with continuous-valued and discrete-valued outputs, respectively. These problems are called inductive learning problems because we identify a function by inducing it from data.

Maximum a posteriori (MAP) estimation provides a Bayesian probability framework for fitting model parameters to training data. It is an alternative and sibling to the more common maximum likelihood estimation (MLE) framework. MAP learning selects the single most probable hypothesis given the data. A prior over hypotheses is still used, and the method is often more tractable than full Bayesian learning.

Bayesian methods can be used to determine the most probable hypothesis given the data: the MAP hypothesis. This is the optimal hypothesis in the sense that no other hypothesis is more probable.
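A toy sketch of the difference between MAP and maximum likelihood. It assumes a finite hypothesis space of three possible coin biases (the probability of heads), made-up prior probabilities, and hypothetical data of 7 heads in 10 flips. Because P(data) is the same for every hypothesis, the MAP hypothesis maximizes P(data | h) · P(h).

```python
import math


def likelihood(p_heads: float, heads: int, flips: int) -> float:
    """P(data | h): binomial probability of the observed number of heads."""
    return math.comb(flips, heads) * p_heads ** heads * (1 - p_heads) ** (flips - heads)


def ml_hypothesis(priors, heads, flips):
    """Maximum likelihood: ignores the prior entirely."""
    return max(priors, key=lambda h: likelihood(h, heads, flips))


def map_hypothesis(priors, heads, flips):
    """MAP: posterior is proportional to likelihood * prior."""
    return max(priors, key=lambda h: likelihood(h, heads, flips) * priors[h])


priors = {0.3: 0.25, 0.5: 0.5, 0.8: 0.25}   # hypothetical prior beliefs
print(ml_hypothesis(priors, 7, 10))   # 0.8
print(map_hypothesis(priors, 7, 10))  # 0.5
```

Here the strong prior on a fair coin outweighs the data, so MAP picks 0.5 while maximum likelihood picks 0.8. With more flips the likelihood would dominate and the two would tend to agree.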

Hypothesis in machine learning: a candidate model that approximates a target function for mapping inputs to outputs.

Hypothesis in statistics: a probabilistic explanation about the presence of a relationship between observations.

Hypothesis in science: a provisional explanation that fits the evidence and can be disproved or confirmed. We can see that a hypothesis in machine learning draws on the broader meaning of the term in science.
