A Survey on Modern Recommendation System based on Big Data

This survey provides an exhaustive exploration of the evolution and current state of recommendation systems, which have seen widespread integration in various web applications. It focuses on the advancement of personalized recommendation strategies for online products or services. We categorize recommendation techniques into four primary types: content-based, collaborative filtering-based, knowledge-based, and hybrid-based, each addressing unique scenarios. The survey offers a detailed examination of the historical context and the latest innovative approaches in recommendation systems, particularly those employing big data. Additionally, it identifies and discusses key challenges faced by modern recommendation systems, such as data sparsity, scalability issues, and the need for diversity in recommendations. The survey concludes by highlighting these challenges as potential areas for fruitful future research in the field.

1 Introduction

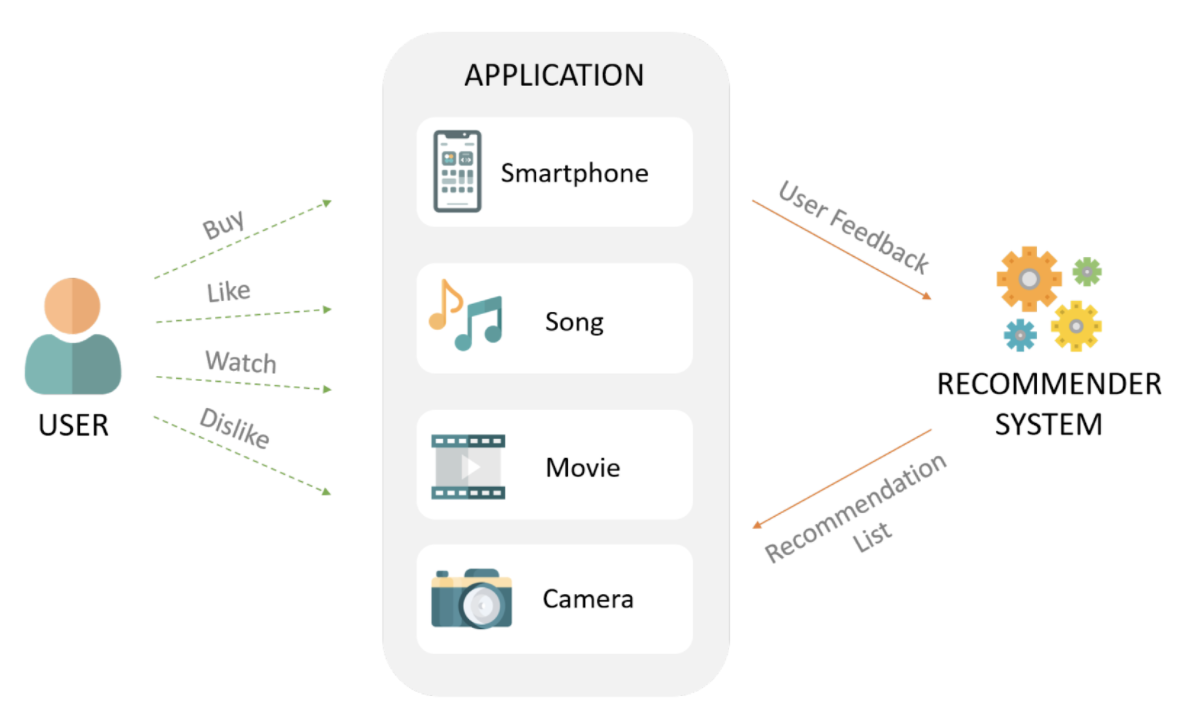

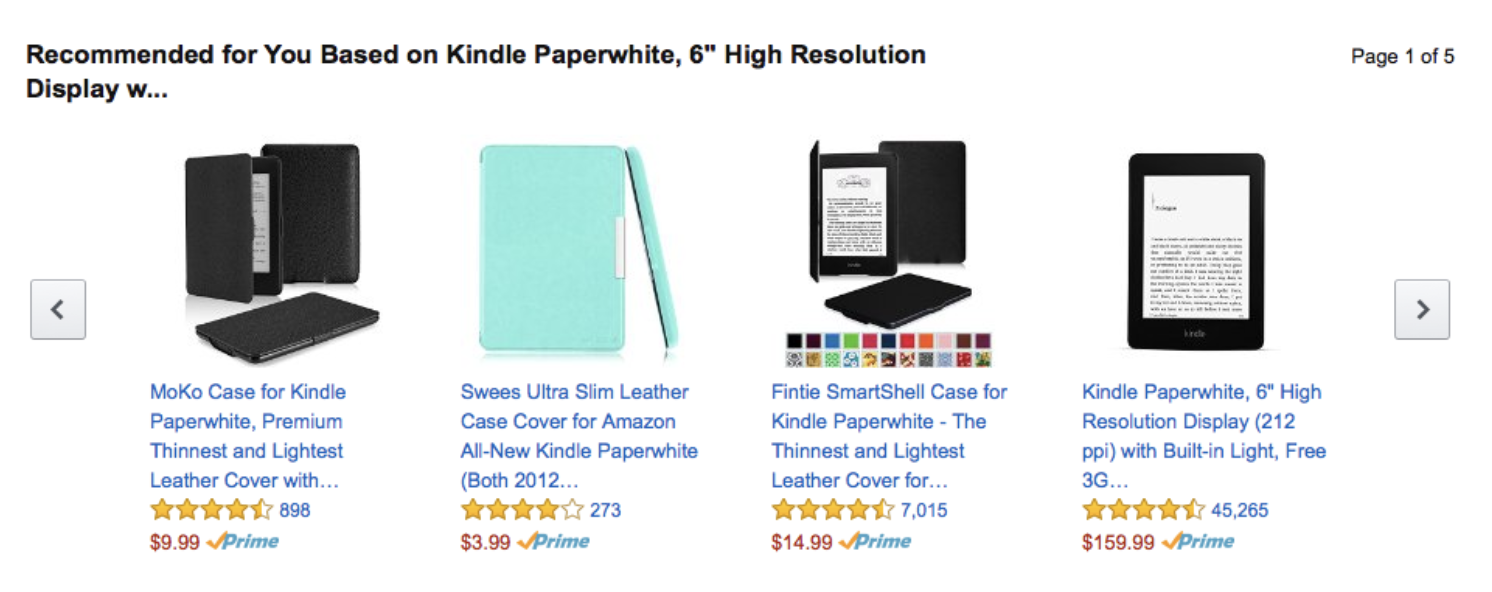

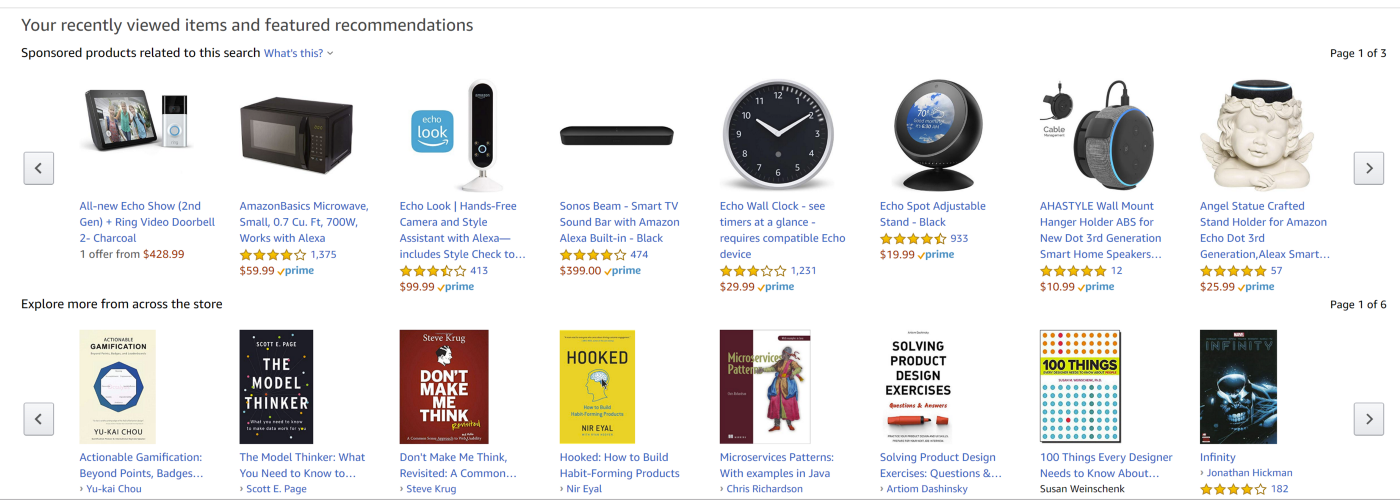

In this survey, we examine the escalating popularity and diverse application of recommendation systems in web applications, a topic extensively covered by Zhou et al. [1]. These systems, a specialized category of information filtering systems, are designed to predict user preferences for various items. They play a crucial role in guiding decision-making processes, such as purchasing decisions and music selections, as Wang et al. discuss [2]. A prime example of this application is Amazon’s personalized recommendation engine, which tailors each user’s homepage. Major companies like Amazon, YouTube, and Netflix employ these systems to enhance user experience and generate significant revenue, as noted by Adomavicius et al. and Omura et al. [3, 4]. Figure 1 from Entezari et al. [5] illustrates a modern recommendation system. Additionally, these systems are increasingly relevant in the field of human-computer interaction (HCI), where they enhance interaction efficiency through feedback mechanisms, a topic explored in several studies [6, 7, 8, 9].

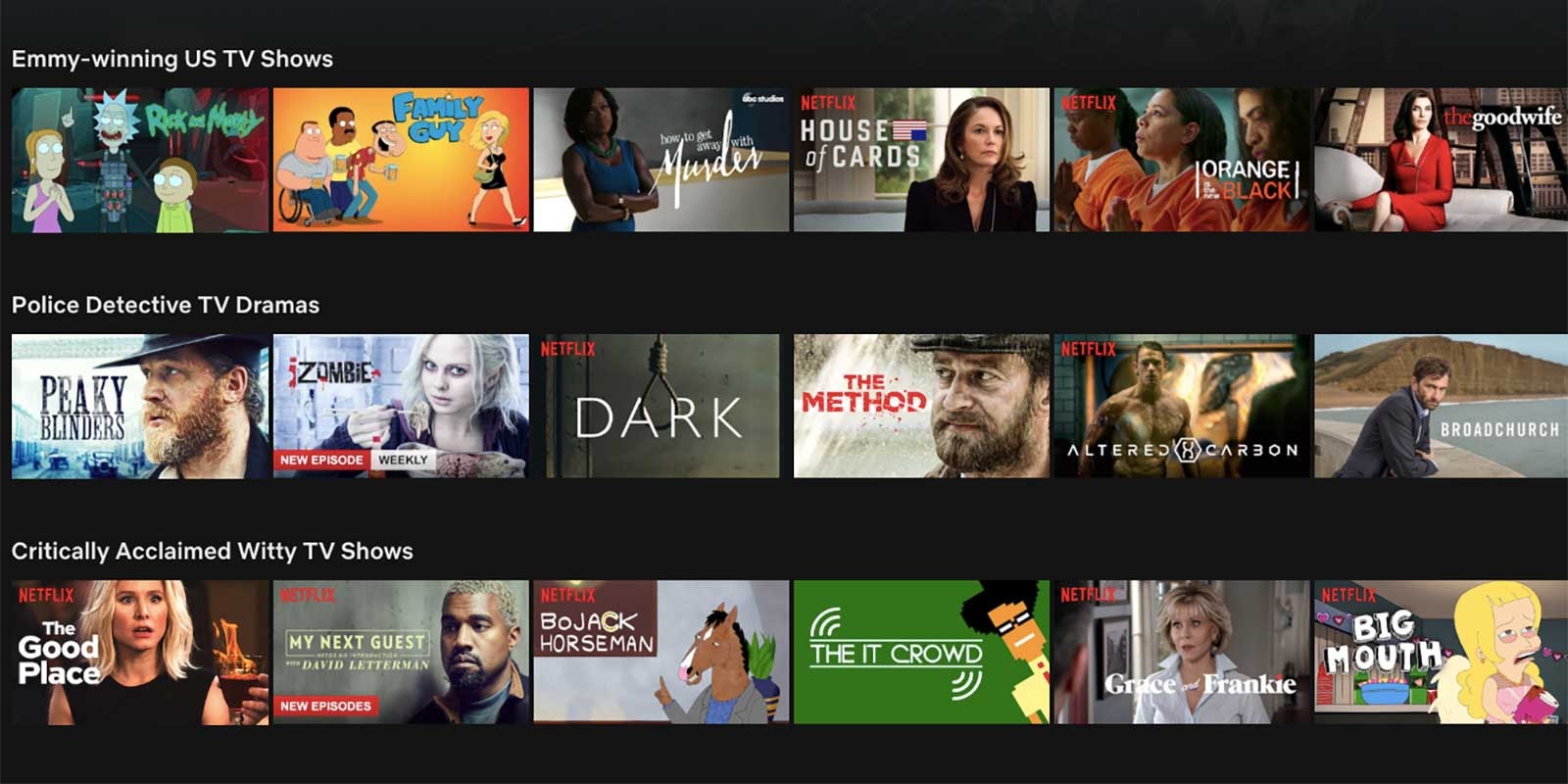

Recommendation systems are particularly crucial for certain companies, as their efficiency can lead to substantial revenue generation and competitive advantage, as evidenced in the research by Rismanto et al. and Cui et al. [10, 11]. For instance, Netflix’s “Netflix Prize” challenge aimed to develop a recommender system surpassing their existing algorithm, with a substantial prize to incentivize innovation.

Furthermore, in the domain of big data, recommendation systems are highly prevalent, as detailed by Li et al. [12, 13]. These systems predict user interests in purchasing based on extensive data analysis, including purchase history, ratings, and reviews. There are four widely recognized types of recommendation systems, as identified by Numnonda [14]: content-based, collaborative filtering-based, knowledge-based, and hybrid-based, each with distinct advantages and drawbacks, as Xiao et al. elucidate [15]. For example, collaborative filtering-based systems may face issues such as data sparsity and scalability, as Huang et al. mention [16], and cold-start problems, while content-based systems might struggle to diversify user interests, as noted by Zhang et al. and Benouaret et al. [17, 18].

This paper is organized as follows: Section II provides a comprehensive review of both historical and modern state-of-the-art approaches in recommendation systems, coupled with an in-depth analysis of the latest advancements in the field. Section III discusses the challenges in big data-based recommendation systems, including sparsity, scalability, and diversity, and explores solutions for these challenges. The paper concludes with a summary in Section IV.

2 Recommendation Systems

Recommendation systems aim to predict users’ preferences for a certain item and provide personalized services [19]. This section discusses several commonly used recommender methods: content-based, collaborative filtering-based, knowledge-based, and hybrid-based.

2.1 Content-based Recommendation Systems

The main idea of content-based recommenders is to recommend items based on the similarity between different users or items [20]. The algorithm analyzes the descriptions of a particular user’s favorite items to identify the main attributes they have in common, and stores these preferences in the user’s profile. It then recommends items with a higher degree of similarity to that profile. Content-based recommendation systems can capture the specific interests of the user and can recommend rare items that are of little interest to other users. However, since the feature representations of items are designed manually to a certain extent, this method requires a lot of domain knowledge. In addition, content-based recommendation systems can only recommend based on users’ existing interests, so their ability to expand those interests is limited.
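The profile-matching idea described above can be sketched in a few lines of Python. This is a minimal illustration, not the method of any cited system: the item names, the three hand-made feature dimensions, and the choice of averaging liked items into a profile are all assumptions made for the example.

```python
import math

def cosine(a, b):
    """Cosine similarity of two equal-length feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def build_profile(liked, features):
    """Average the feature vectors of the items the user liked (assumed profile model)."""
    vectors = [features[item] for item in liked]
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def recommend(liked, features, k=2):
    """Rank items the user has not liked yet by similarity to the user's profile."""
    profile = build_profile(liked, features)
    candidates = [item for item in features if item not in liked]
    ranked = sorted(candidates, key=lambda item: cosine(profile, features[item]), reverse=True)
    return ranked[:k]

# Hypothetical hand-designed features: [action, romance, sci-fi]
ITEM_FEATURES = {
    "Alien": [1.0, 0.0, 1.0],
    "Terminator": [1.0, 0.0, 1.0],
    "Titanic": [0.0, 1.0, 0.0],
    "Notebook": [0.0, 1.0, 0.0],
    "Star Wars": [1.0, 0.2, 1.0],
}

top = recommend(["Alien", "Terminator"], ITEM_FEATURES, k=1)
```

Because the feature vectors are written by hand, the sketch also shows the limitation noted above: the recommender can only surface items that resemble what the user already likes.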

2.2 Collaborative Filtering-based Recommendation Systems

Collaborative Filtering-based (CF) methods are primarily used in big data processing platforms due to their parallelization characteristics [21]. The basic principle of the recommendation system based on collaborative filtering is shown in Fig. 2 [22]. CF recommendation systems use the behavior of a group of users to make recommendations to other users [23]. There are two main types of collaborative filtering techniques: user-based and item-based.

User-based CF: In a user-based CF recommendation system, users receive recommendations of products that similar users like [24]. Many metrics can measure the similarity between users or items, such as the Constrained Pearson Correlation coefficient (CPC), cosine similarity, and adjusted cosine similarity. For example, cosine similarity measures the similarity between two vectors. Let $x$ and $y$ denote two vectors; the cosine similarity between $x$ and $y$ can be represented by

$$\cos(x, y) = \frac{x \cdot y}{\lVert x \rVert \, \lVert y \rVert} \tag{1}$$

| (2) |
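As a hedged illustration of how cosine similarity can drive user-based CF, the sketch below finds the user most similar to a target user and suggests items that neighbor has rated but the target has not. The rating table is hypothetical, and treating an unrated item as 0 inside the similarity is a simplification assumed here for brevity.

```python
import math

def cosine_similarity(x, y):
    """Cosine of the angle between vectors x and y; 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y) if norm_x and norm_y else 0.0

# Hypothetical ratings: one row per user, one column per item, 0 = not rated.
RATINGS = {
    "alice": [5, 4, 0, 1],
    "bob":   [4, 5, 5, 1],
    "carol": [1, 0, 2, 5],
}

def most_similar_user(target, ratings):
    """Return the other user whose rating vector is closest to the target's."""
    others = [user for user in ratings if user != target]
    return max(others, key=lambda user: cosine_similarity(ratings[target], ratings[user]))

def recommend_from_neighbor(target, ratings):
    """Item indices the nearest neighbor rated but the target has not."""
    neighbor = most_similar_user(target, ratings)
    pairs = zip(ratings[target], ratings[neighbor])
    return [index for index, (mine, theirs) in enumerate(pairs) if mine == 0 and theirs > 0]

neighbor = most_similar_user("alice", RATINGS)
suggestions = recommend_from_neighbor("alice", RATINGS)
```

A production system would typically aggregate over several neighbors and mean-center the ratings; a single neighbor is used here only to keep the mechanics visible.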

Item-based CF: The item-based CF algorithm predicts user ratings for items based on item similarity. Generally, item-based CF yields better results than user-based CF because user-based CF suffers from sparsity and scalability issues. However, both user-based CF and item-based CF may suffer from cold-start problems [25].
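One common way to turn item similarity into a rating prediction is a similarity-weighted average of the ratings the user has already given. The sketch below assumes that formulation, uses cosine similarity between item rating columns, and runs on made-up data; none of these choices is prescribed by the text above.

```python
import math

def cosine(x, y):
    """Cosine similarity between two rating vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm if norm else 0.0

def predict_rating(user_ratings, item_vectors, target_item):
    """Similarity-weighted mean of the user's known ratings, or None if no signal."""
    weighted_sum = 0.0
    weight_total = 0.0
    for item, rating in user_ratings.items():
        similarity = cosine(item_vectors[target_item], item_vectors[item])
        weighted_sum += similarity * rating
        weight_total += abs(similarity)
    return weighted_sum / weight_total if weight_total else None

# Hypothetical item columns: each vector holds the ratings three existing users gave the item.
ITEM_VECTORS = {
    "A": [5, 4, 1],
    "B": [4, 5, 1],
    "C": [1, 1, 5],
    "D": [5, 5, 1],
}

# A new user who rated A highly and C poorly; D resembles A, so the estimate leans high.
prediction = predict_rating({"A": 5, "C": 1}, ITEM_VECTORS, "D")
```

Item vectors can be precomputed offline, which is one reason the item-based variant is often considered easier to scale; the cold-start limitation remains, since `predict_rating` returns `None` for a user with no ratings.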

2.3 Knowledge-based Recommendation Systems

The main idea of knowledge-based recommendation systems is to recommend items to users based on basic knowledge of users, items, and relationships between items [41, 42]. Since knowledge-based recommendation systems do not require user ratings or purchase history, there is no cold start problem for this type of recommendation [43]. Knowledge-based recommendation systems are well suited for complex domains where items are not frequently purchased, such as cars and apartments [44]. But the acquisition of required domain knowledge can become a bottleneck for this recommendation technique [33].

2.4 Hybrid-based Recommendation Systems

Hybrid-based recommendation systems combine the advantages of multiple recommendation techniques and aim to overcome the potential weaknesses in traditional recommendation systems [45]. There are seven basic hybrid recommendation techniques [40]: weighted, mixed, switching, feature combination, feature augmentation, cascade, and meta-level methods [46, 47]. Among all of these methods, the most commonly used is the combination of the CF recommendation methods with other recommendation methods (such as content-based or knowledge-based) to avoid sparsity, scalability, and cold-start problems [37, 39, 48].

2.5 Challenges in Modern Recommendation Systems

Sparsity. As we know, the usage of recommendation systems is growing rapidly. Many commercial recommendation systems use large datasets, and the user-item matrix used for filtering may be very large and sparse. Therefore, the performance of the recommendation process may be degraded due to the cold start problems caused by data sparsity [49].

Scalability. Traditional algorithms will face scalability issues as the number of users and items increases. Assuming there are millions of customers and millions of items, the algorithm’s complexity will be too large.
However, recommendation systems must respond to the user’s needs immediately, regardless of the user’s rating history and purchase situation, which requires high scalability. For example, Twitter is a large web company that uses clusters of machines to scale recommendations for its millions of users [38].

Diversity. Recommendation systems also need to increase diversity to help users discover new items. Unfortunately, some traditional algorithms may accidentally do the opposite because they always recommend popular and highly-rated items that some specific users love. Therefore, new hybrid methods need to be developed to improve the performance of the recommendation systems [50].

3 Recommendation System based on Big Data

Big data refers to massive, fast-growing, and diversified information [51, 52]. It requires new processing models to have stronger decision-making and process optimization capabilities [53]. Big data has its unique “4V” characteristics, as shown in Fig. 3 [54]: Volume, Variety, Velocity, and Veracity.

3.1 Big Data Processing Flow

Big data comes from many sources, and there are many methods to process it [55]. However, the primary processing of big data can be divided into four steps [56]. Fig. 4 presents the basic flow of big data processing.

Data Collection.

Data Processing and Integration. The collection terminal itself already has a data repository, but it cannot accurately analyze the data. The received information needs to be pre-processed [57].

Data Analysis. In this process, these initial data are always deeply analyzed using cloud computing technology [58].

Data Interpretation.

3.2 Modern Recommendation Systems based on the Big Data

The shortcomings of traditional recommendation systems mainly focus on insufficient scalability and parallelism [59].
For small-scale recommendation tasks, a single desktop computer is sufficient for data mining goals, and many techniques are designed for this type of problem [60]. However, the rating data is usually so large for medium-scale recommendation systems that it is impossible to load all the data into memory at once [61]. Common solutions are based on parallel computing or collective mining, sampling and aggregating data from different sources, and using parallel computing programming to perform the mining process [62]. The big data processing framework will rely on cluster computers with high-performance computing platforms [63]. At the same time, data mining tasks will be deployed on a large number of computing nodes (i.e., clusters) by running some parallel programming tools [64], such as MapReduce [52, 65]. For example, Fig. 5 shows MapReduce in recommendation systems.

In recent years, various big data platforms have emerged [66]. For example, Hadoop and Spark [52], both developed by the Apache Software Foundation, are widely used open-source frameworks for big data architectures [52, 67]. Each framework contains an extensive ecosystem of open-source technologies that prepare, process, manage and analyze big data sets [68]. For example, Fig. 6 shows the ecosystem of Apache Hadoop [69].

Hadoop allows users to manage big data sets by enabling a network of computers (or “nodes”) to solve vast and intricate data problems. It is a highly scalable, cost-effective solution that stores and processes structured, semi-structured and unstructured data. Spark is a data processing engine for big data sets. Like Hadoop, Spark splits up large tasks across different nodes. However, it tends to perform faster than Hadoop, and it uses random access memory (RAM) to cache and process data instead of a file system. This enables Spark to handle use cases that Hadoop cannot.
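To make the MapReduce pattern mentioned in this subsection more concrete, the following single-process Python sketch imitates its three stages (map, shuffle, reduce) to count how often two items appear together in users' histories, a statistic that item-based recommenders often build on. It is an assumption-laden toy: the function names and basket data are hypothetical, and a real Hadoop or Spark job would distribute these stages across nodes rather than run them in one interpreter.

```python
from collections import defaultdict
from itertools import combinations

def map_phase(items):
    """Map: emit ((item_a, item_b), 1) for every item pair in one user's history."""
    for pair in combinations(sorted(set(items)), 2):
        yield pair, 1

def shuffle(emitted):
    """Shuffle: group emitted values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in emitted:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    """Reduce: sum the counts collected for one item pair."""
    return key, sum(values)

def cooccurrence_counts(baskets):
    """Run the three stages sequentially over all users' item lists."""
    emitted = (pair_count for items in baskets.values() for pair_count in map_phase(items))
    return dict(reduce_phase(key, values) for key, values in shuffle(emitted).items())

# Hypothetical interaction histories keyed by user id.
BASKETS = {
    "u1": ["book", "lamp", "pen"],
    "u2": ["book", "pen"],
    "u3": ["lamp", "pen"],
}

counts = cooccurrence_counts(BASKETS)
```

Each call to `map_phase` depends only on one user's data and each call to `reduce_phase` only on one key, which is what allows a cluster framework to run them in parallel.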
The following are some benefits of the Spark framework:

- It is a unified engine that supports SQL queries, streaming data, machine learning (ML), and graph processing.
- It can be 100x faster than Hadoop for smaller workloads via in-memory processing, disk data storage, etc.
- It has APIs designed for ease of use when manipulating semi-structured data and transforming data.

Furthermore, Spark is fully compatible with the Hadoop eco-system and works smoothly with Hadoop Distributed File System (HDFS), Apache Hive, and others. Thus, when the data size is too big for Spark to handle in-memory, Hadoop can help overcome that hurdle via its HDFS functionality. Fig. 7 is a visual example of how Spark and Hadoop can work together. Fig. 8 shows the architecture of the modern recommendation system based on Spark.

4 Conclusion

Recommendation systems have become very popular in recent years and are used in various web applications. Modern recommendation systems aim at providing users with personalized recommendations of online products or services. Various recommendation techniques, such as content-based, collaborative filtering-based, knowledge-based, and hybrid-based recommendation systems, have been developed to fulfill the needs in different scenarios. This paper presents a comprehensive review of historical and recent state-of-the-art recommendation approaches, followed by an in-depth analysis of groundbreaking advances in modern recommendation systems based on big data. Furthermore, this paper reviews the issues faced in modern recommendation systems such as sparsity, scalability, and diversity and illustrates how these challenges can be transformed into prolific future research avenues.


A Machine Learning Case Study for Recommendation System of movies based on collaborative filtering and content based filtering.

veeralakrishna/Case-Study-ML-Netflix-Movie-Recommendation-System

Case-Study-ML-Netflix-Movie-Recommendation-System

Business Problem

Netflix is all about connecting people to the movies they love. To help customers find those movies, they developed a world-class movie recommendation system: CinematchSM. Its job is to predict whether someone will enjoy a movie based on how much they liked or disliked other movies. Netflix uses those predictions to make personal movie recommendations based on each customer’s unique tastes. And while Cinematch is doing pretty well, it can always be made better. Now there are a lot of interesting alternative approaches to how Cinematch works that Netflix hasn’t tried. Some are described in the literature, some aren’t. We’re curious whether any of these can beat Cinematch by making better predictions. Because, frankly, if there is a much better approach it could make a big difference to our customers and our business.

Credits: https://www.netflixprize.com/rules.html

Problem Statement

Netflix provided a lot of anonymous rating data, and a prediction accuracy bar that is 10% better than what Cinematch can do on the same training data set. (Accuracy is a measurement of how closely predicted ratings of movies match subsequent actual ratings.)

Real world/Business Objectives and constraints

Objectives:

Constraints:

Type of Data:

Getting Started

Start by downloading the project and running the "NetflixMoviesRecommendation.ipynb" file in ipython-notebook.

Prerequisites

You need to have the following software and libraries installed on your machine before running this project.

Veerala Hari Krishna - Complete work

Acknowledgments

Applied AI Course

A Case Study on Various Recommendation Systems



Systematic Review of Recommendation Systems for Course Selection

1. Introduction
2. Motivation and Rationale of the Research
3. Research Questions
3.1. Questions about the Used Algorithms
3.2. Questions about the Used Dataset
3.3. Questions about the Research
4. Research Methodology
4.1. Title-Level Screening Stage
4.2. Abstract-Level Screening Stage
4.3. Full-Text Article Scanning Stage
4.4. Full-Text Article Screening Stage
4.5. Data Extraction Stage
5. Research Results
5.1. The Studies Included in the SLR
5.1.1. Collaborative Filtering Studies
5.1.2. Content-Based Filtering Studies
5.1.3. Hybrid Recommender System Studies
5.1.4. Studies Based on Machine Learning
5.1.5. Similarity-Based Study
6. Key Studies Analysis
6.1. Discussion of Aims and Contributions of the Existing Research Works
6.1.1. Aim of Studies That Used Collaborative Filtering
6.1.2. Aim of Studies That Used Content-Based Filtering
6.1.3. Aim of Studies That Used Hybrid Recommender Systems
6.1.4. Aim of Studies That Used Novel Approaches
6.1.5. Aim of Studies That Used Similarity-Based Filtering
6.2. Description of Datasets Used in the Studies
6.2.1. Dataset Description of Studies That Used Collaborative Filtering
6.2.2. Dataset Description of Studies That Used Content-Based Filtering
6.2.3. Dataset Description of Studies That Used Hybrid Recommender Systems
6.2.4. Dataset Description of Studies That Used Novel Approaches
6.2.5. Dataset Description of the Study That Used Similarity-Based Filtering
6.3. Research Evaluation
6.3.1. Research Evaluation for Studies That Used Collaborative Filtering
6.3.2. Research Evaluation for Studies That Used Content-Based Filtering
6.3.3. Research Evaluation for Studies That Used Hybrid Recommender Systems
6.3.4. Research Evaluation for Studies That Used Novel Approaches
6.3.5. Research Evaluation for the Study That Used Similarity-Based Filtering
7. Discussion of Findings
8. Gaps, Challenges, Future Directions and Conclusions for (CRS) Selection
8.2. Challenges
8.3. Future Directions
9. Conclusions
Author Contributions
Data Availability Statement
Conflicts of Interest


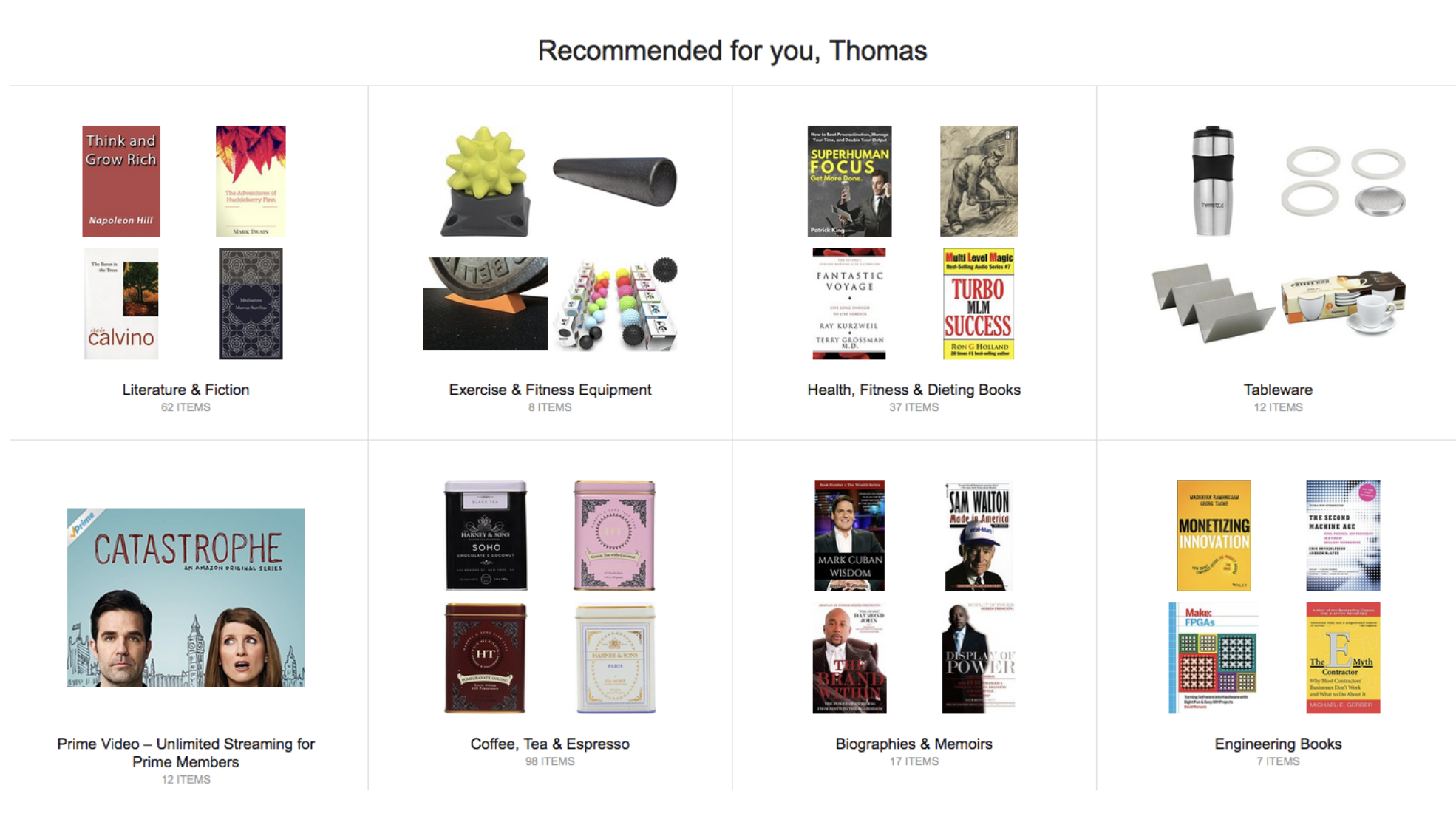

Algarni, S.; Sheldon, F. Systematic Review of Recommendation Systems for Course Selection. Mach. Learn. Knowl. Extr. 2023, 5, 560-596. https://doi.org/10.3390/make5020033

Amazon Recommender System Case Study

Presented on 31st October 2021 by Jihyun

Table of contents

Relationships among users and products
Relationships provide insight
Insight is monetizable
Content based filtering
Collaborative filtering
Association rules learning
Two types of data processing
Gathering basic data - batch processing
Gathering behavioral data - streaming
Step 2: Data transformation
Step 3: Machine learning model renewal
The big picture

Recommender System

What is Recommender System

A recommendation system predicts a user’s preference for a product and serves personalized recommendations based on the prediction.

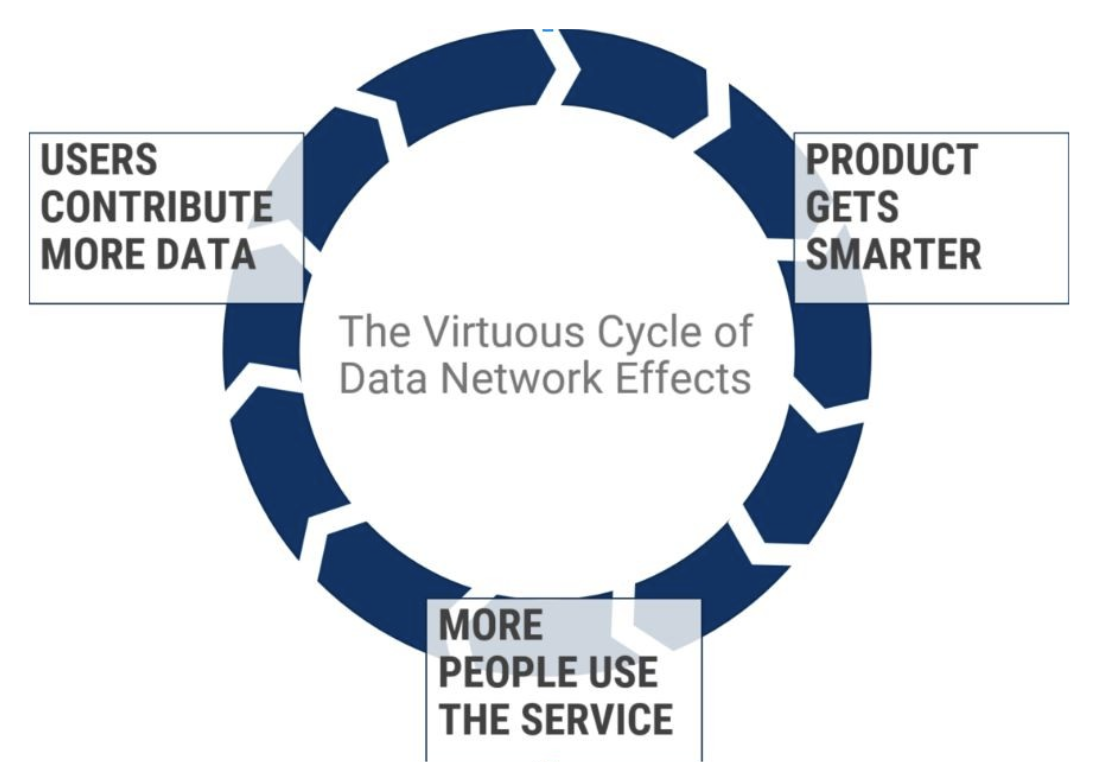

When a service recommends more personalized offers, users are more likely to make a purchase. This is how many businesses use the insight for monetization. In fact, 35% of Amazon’s transactions come from its recommendation algorithm.

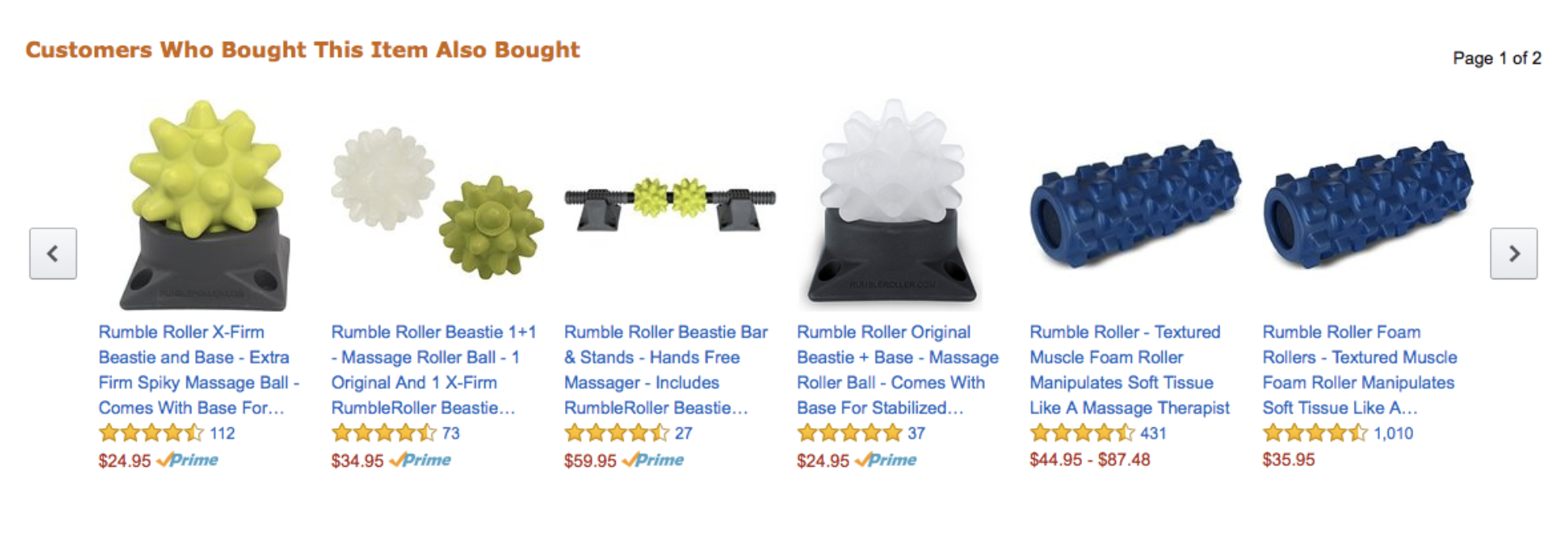

Type of Recommendations

Content-based filtering: find products with similar attributes

Collaborative filtering: find products liked by users with similar interests

Association rules learning: find complementary products

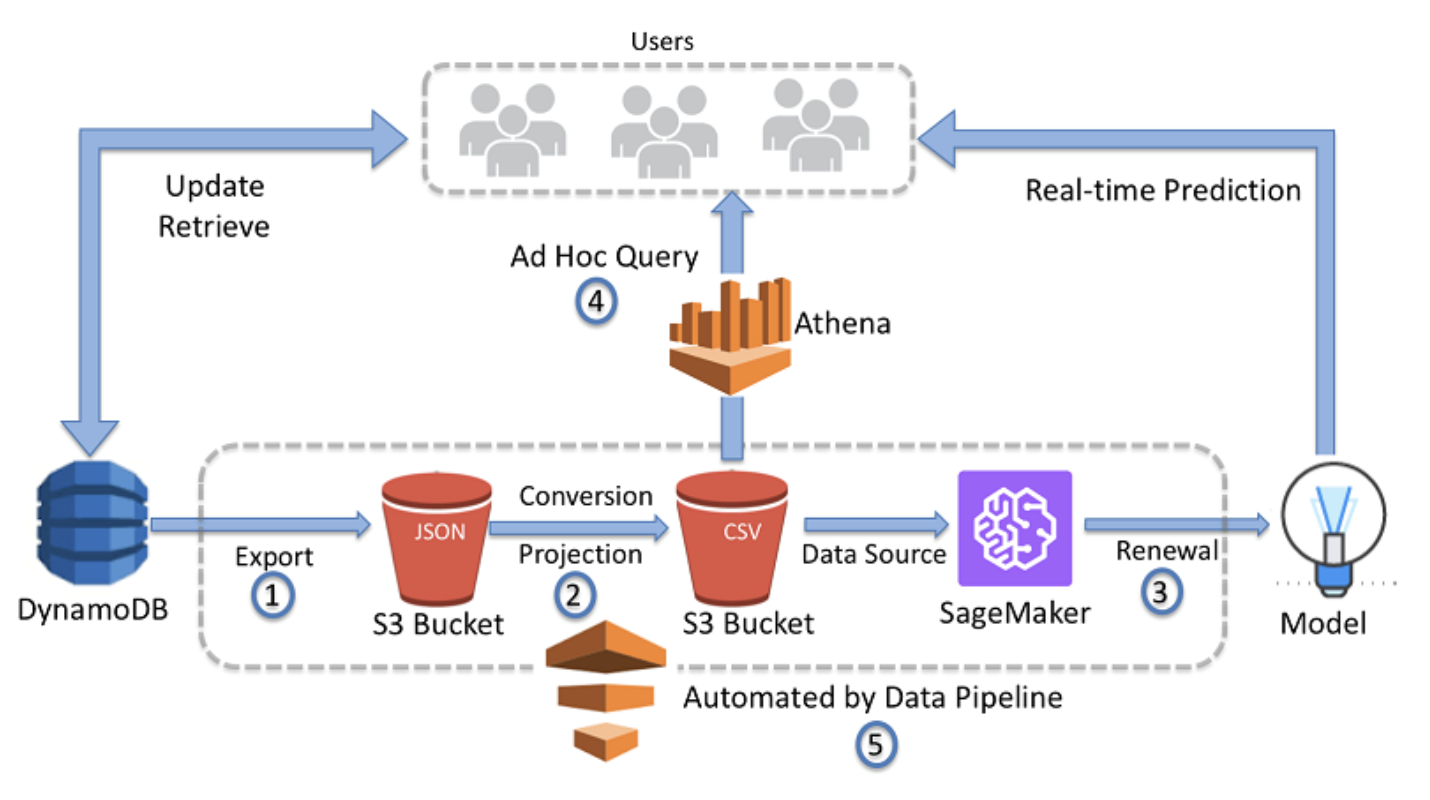

How Amazon Uses Recommender System

Step 1: Data Processing

Batch processing is mainly used to gather basic data that don’t change often or don’t need to be updated in real time.

Streaming is mainly used to gather behavioral data that need to be updated in real time.

Gathered data are transformed into appropriate format (e.g. JSON, CSV, etc.) and provided to the machine learning model.
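As a rough illustration of this transformation step, the sketch below serializes a few behavioral events to JSON Lines and to CSV using only the Python standard library. The event fields (`user`, `item`, `action`) and the helper names are hypothetical and are not taken from the presentation.

```python
import csv
import io
import json

def to_json_lines(events):
    """Serialize each event dict as one JSON object per line."""
    return "\n".join(json.dumps(event, sort_keys=True) for event in events)

def to_csv(events, fields):
    """Serialize events as CSV text with a header row, in the given column order."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, lineterminator="\n")
    writer.writeheader()
    writer.writerows(events)
    return buffer.getvalue()

# Hypothetical click-stream events gathered by the streaming pipeline.
EVENTS = [
    {"user": "u1", "item": "i9", "action": "view"},
    {"user": "u1", "item": "i9", "action": "purchase"},
]

json_payload = to_json_lines(EVENTS)
csv_payload = to_csv(EVENTS, ["user", "item", "action"])
```

Either payload could then be handed to a training job; which format is preferable depends on the downstream model tooling, which the source does not specify.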

Deep Learning for Recommender Systems: A Netflix Case Study

Deep learning has profoundly impacted many areas of machine learning. However, it took a while for its impact to be felt in the field of recommender systems. In this article, we outline some of the challenges encountered and lessons learned in using deep learning for recommender systems at Netflix. We first provide an overview of the various recommendation tasks on the Netflix service. We found that different model architectures excel at different tasks. Even though many deep-learning models can be understood as extensions of existing (simple) recommendation algorithms, we initially did not observe significant improvements in performance over well-tuned non-deep-learning approaches. Only when we added numerous features of heterogeneous types to the input data, deep-learning models did start to shine in our setting. We also observed that deep-learning methods can exacerbate the problem of offline–online metric (mis-)alignment. After addressing these challenges, deep learning has ultimately resulted in large improvements to our recommendations as measured by both offline and online metrics. On the practical side, integrating deep-learning toolboxes in our system has made it faster and easier to implement and experiment with both deep-learning and non-deep-learning approaches for various recommendation tasks. We conclude this article by summarizing our take-aways that may generalize to other applications beyond Netflix.

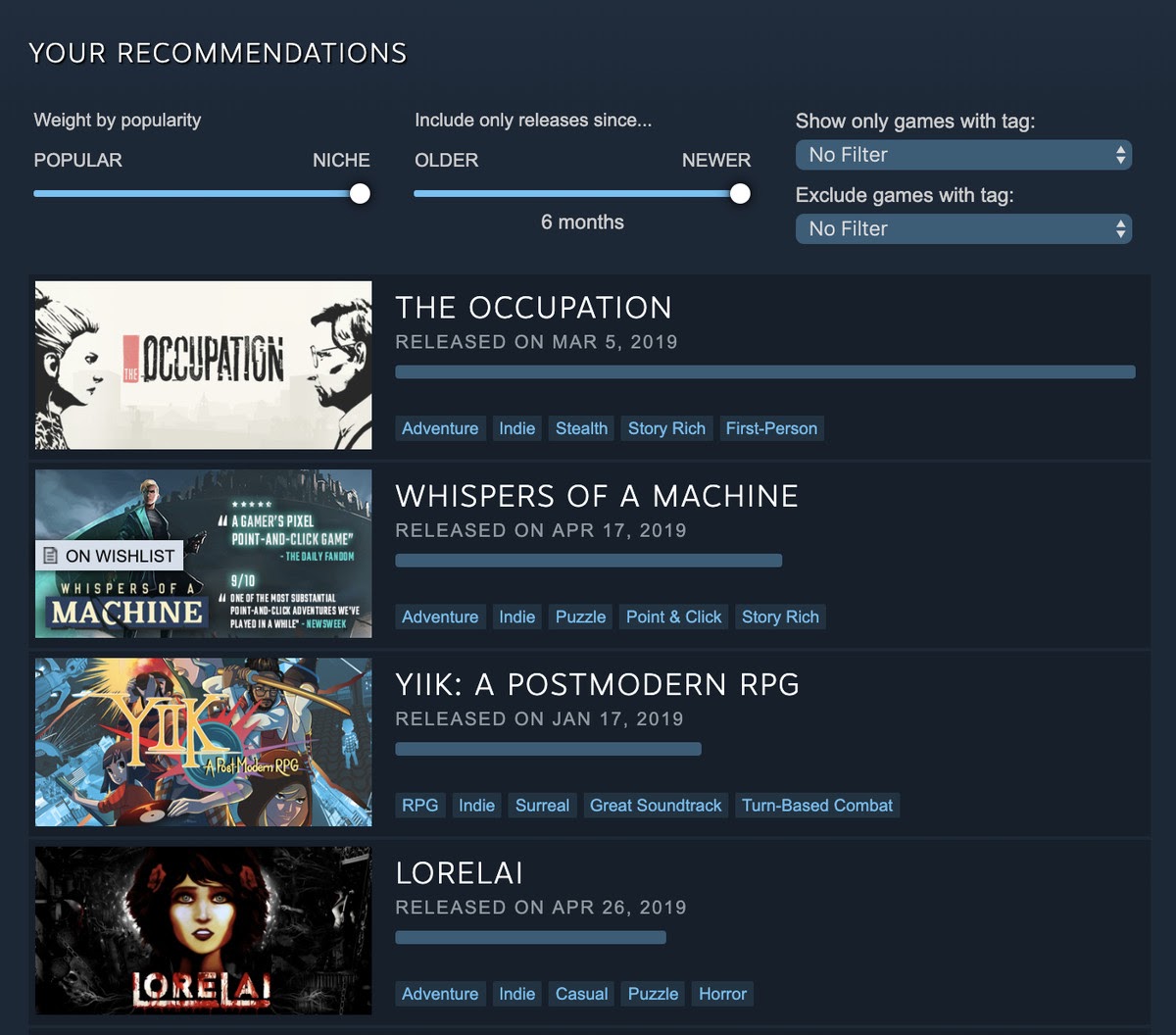

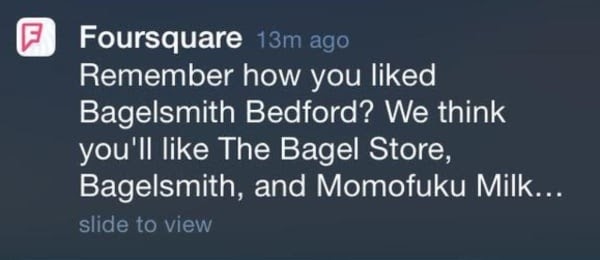

Copyright © 2021, Association for the Advancement of Artificial Intelligence. All rights reserved.

5 Use Case Scenarios for Recommendation Systems and How They Help

The market for recommendation engines is projected to grow from USD 1.14B in 2018 to USD 12.03B by 2025, a CAGR of 32.39% over the forecast period. These figures are an indication of the growing emphasis on customer experience while also being a byproduct of the widespread proliferation of data. On that note, here are five practical use cases of recommendation systems across different industry verticals. Use these to understand the multiple ways in which recommendation systems can add value to your business.

Five Practical Use Cases of Recommendation Systems

1. eCommerce Recommendations

eCommerce is by far the most common and most frequently encountered use case of recommendation systems in action. Amazon was a pioneer in introducing this change back in 2012 by making use of item-item collaborative filtering to recommend products to the buyers. The result? A resounding 29% uplift in sales in comparison to the performance in the previous quarter! Soon enough, the recommendation engine contributed to 35% of purchases made on the platform, which was bound to impact the bottom line of the eCommerce giant. To this date, Amazon continues to remain a market leader by virtue of its helpful and user-friendly recommender engine that now also extends to the streaming platform - Amazon Prime (more on this later).
The recommendation system is designed to intuitively understand and predict account interest and behaviors to drive purchases, boost engagement, increase cart volume, up-sell and cross-sell, and prevent cart abandonment. Other retailers like ASOS, Pandora, and H&M utilize recommender systems to achieve a gamut of favorable results.

2. Media Recommendations

If Amazon is a frontrunner in the recommendation engine race, platforms like Netflix, Spotify, Prime Video, YouTube, and Disney+ are consolidating the role of recommendations in the field of media, entertainment, publishing, etc. Such channels have successfully normalized recommendation systems in the real world. Typically, most media streaming service providers employ a relational understanding of the type of content consumed by the user to suggest fresh content accordingly. Additionally, the self-learning and self-training aspect of AI in recommendation engines improves relevancy to maintain high levels of engagement while preventing customer churn. Consider Netflix, for example. About 75% of what users watch on Netflix is a result of its product recommendation algorithm. As a result, it is unsurprising that the platform has pegged its personalized recommendation engine at a whopping USD 1B per year as it maintains sustained subscription rates and delivers an impressive ROI that the company can redirect in fresh content creation.

3. Video Games and Stores

Video games are a treasure trove of user-generated data, as they contain everything from the games users play to the choices they make. This stored repository of action, reaction, and behavior translates into usable data that allows developers to curate the experience to maximize revenue without coming off as pushy or annoying. Gaming platforms like Steam, Xbox Games Store, and PlayStation Store are already well-known for their excellent recommender engines that suggest games based on the player’s gaming history, browsing history, and purchase history.
As such, someone who has an interest in battle royale games like Fortnite will be recommended games like PUBG, Apex Legends, and CoD rather than MMORPGs like WoW. Similarly, video games can also deploy recommender systems to nudge players towards the top of the micro-transactions funnel to make the gaming experience easier or more rewarding. Apart from boosting engagements and in-game purchases, AI-based recommender algorithms can unlock cross-selling and up-selling opportunities.

4. Location-Based Recommendations

Geographic location can be a demographic factor that acts as a glue between the online and offline customer experience. It can augment marketing, advertising, and sales efforts to improve overall profitability. As a result, businesses have been working on reliable location-based recommender systems (LBRS), also called location-aware recommender systems (LARS), for quite a while now, and have registered successful results. Sephora, for instance, issues geo-triggered app notifications alerting customers to existing promos and offers when they are in the vicinity of a physical store. The Starbucks app also follows a similar system for recommending happy hours and store locations. An extension of this feature is seen in Foursquare, a local search-and-discovery mobile app, which matches users with establishments like local eateries, breweries, or activity centers, based on customer location and preferences. In the process, it maintains high engagement levels and promotes businesses in the same breath.

5. Health and Fitness

Health and fitness is one of the newest entrants in the recommender system space but enjoys immense potential because of this lag. Applications can capture user inputs, such as their dietary preferences, activity levels, fitness goals, height and weight, BMI, etc., to suggest customized diet plans, recipes, or workout routines to match their fitness goals.
In addition to logging data on such platforms, integration with wearable devices can streamline their ability to make more accurate and valuable suggestions, such as suggesting meditation or mindfulness exercises to high-risk groups upon registering elevated vitals. Most importantly, such applications can capture user feedback on their fitness journey and experiences in the form of ratings to fine-tune the plan and make the recommendations smarter and more personalized. Say someone is dissatisfied with the level of difficulty of the exercise regimen; the app can then recalibrate it to suit their abilities.

Final Words

Recommendations have become an implicit customer expectation as customers no longer wish to sift through stores and websites or find things that they do not like. As a result, AI-based content recommendation engines, such as the one developed by Argoid, are no longer a “nice-to-have” feature but the life blood for your business. Talk to us to know more!

FAQs on Recommendation System Use Cases

What is a recommendation system?

A recommendation system uses the process of information filtering to predict the products a user will like and accordingly rate the products based on users' preferences. A recommender system easily highlights the most relevant products to the users and ensures faster conversion.

Why are recommendation systems essential for eCommerce stores?

A recommendation system makes the shopping journey simpler and more enjoyable for users. With a recommendation system, users can get product recommendations with minimum search effort, which creates long-term engagement.

Which recommendation system should you use?

A recommendation system that you must use is Argoid. It helps with 1:1 personalized product recommendations for your eCommerce store and ensures faster conversion and retention.
A Case Study on Recommendation Systems Based on Big Data

Part of the book series: Smart Innovation, Systems and Technologies (SIST, volume 105)

Recommender systems are mainly used to find and retrieve content from large datasets, and they have been studied and analyzed in the Big Data setting. In this paper, we describe the recommendation process using big data, with a clear explanation of the operation of MapReduce. We present the stages of recommendation, namely data collection, rating, and the types of filtering. We analyze a scenario-based drug recommender system, which consists of three components: drug storage, a cloud server, and a recommender server. The system is evaluated with parameters such as F-score, precision, and recall. Finally, we describe challenges of recommendation systems such as data sparsity, cold start, sentiment analysis, and “no surprise”.
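The evaluation parameters named in the abstract (precision, recall, and F-score) can be illustrated with a minimal sketch. The function name and the example drug identifiers below are hypothetical and are not taken from the paper; the sketch assumes the common set-based definitions and the balanced F-score (F1), which may differ from the exact variant the authors used.

```python
def precision_recall_f1(recommended, relevant):
    """Set-based precision, recall and F1 for one recommendation list.

    recommended: items the system suggested (hypothetical example data)
    relevant:    items that were actually appropriate for the user
    """
    recommended, relevant = set(recommended), set(relevant)
    hits = len(recommended & relevant)  # correctly recommended items

    # Guard against empty inputs to avoid division by zero.
    precision = hits / len(recommended) if recommended else 0.0
    recall = hits / len(relevant) if relevant else 0.0

    # F1 is the harmonic mean of precision and recall.
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical example: four drugs recommended, three actually relevant.
p, r, f = precision_recall_f1(
    recommended=["drug_a", "drug_b", "drug_c", "drug_d"],
    relevant=["drug_b", "drug_d", "drug_e"],
)
print(f"precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```

Precision penalizes irrelevant suggestions, recall penalizes missed relevant items, and the F-score balances the two, which is why the three are usually reported together.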

Author information

M. Sandeep Kumar and J. Prabhu, School of Information Technology and Engineering, VIT University, Vellore, 632014, Tamil Nadu, India. Corresponding author: M. Sandeep Kumar.

Cite this paper

Sandeep Kumar, M., Prabhu, J. (2019). A Case Study on Recommendation Systems Based on Big Data. In: Satapathy, S., Bhateja, V., Das, S. (eds) Smart Intelligent Computing and Applications. Smart Innovation, Systems and Technologies, vol 105. Springer, Singapore. https://doi.org/10.1007/978-981-13-1927-3_44

Published: 05 November 2018. Publisher: Springer, Singapore. Print ISBN: 978-981-13-1926-6. Online ISBN: 978-981-13-1927-3.


Recommendation Systems: Applications and Examples in 2024

The recent global pandemic not only raised the demand for online shopping but also changed consumers’ behavior, as they came to require more personalized services from brands (Figure 1). As the e-commerce industry grows, the demand for recommendation systems will grow with it. If you are planning to leverage recommendation systems to enhance your online store, keep reading.

Figure 1. The importance of personalization in the post-pandemic market

What is a recommendation system?

It is easy to get confused about recommendation systems, as they are also sometimes called recommender systems or recommendation engines. All of these terms describe the same thing: systems that predict what your customers want by analyzing their behavior, which contains information on past preferences.

How does it work?

Recommendation systems collect customer data and automatically analyze it to generate customized recommendations for your customers. These systems rely on both:

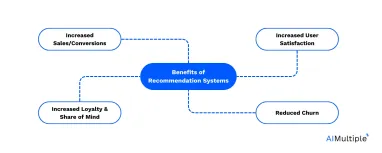

Content-based filtering and collaborative filtering are two approaches commonly used to generate recommendations. For more, please read the approaches section of our list of recommendation system vendors.

Benefits of recommendation systems

1. Increased sales/conversion

There are very few ways to achieve increased sales without increased marketing effort, and a recommendation system is one of them. Once you set up an automated recommendation system, you get recurring additional sales without any effort since it connects the shoppers with their desired products much faster.

2. Increased user satisfaction

The shortest path to a sale is great since it reduces the effort for both you and your customer. Recommendation systems allow you to reduce your customers’ path to a sale by recommending them a suitable option, sometimes even before they search for it.

3. Increased loyalty and share of mind

By getting customers to spend more on your website, you can increase their familiarity with your brand and user interface, increasing their probability of making future purchases from you.

4. Reduced churn

Recommendation system-powered emails are one of the best ways to re-engage customers. Discounts or coupons are other effective yet costly ways of re-engaging customers, and they can be coupled with recommendations to increase customers’ probability of conversion.

Applicable areas

Almost any business can benefit from a recommendation system. There are two important aspects that determine the level of benefit a business can gain from the technology.

With this framework, we can identify industries that stand to gain from recommendation systems:

1. E-Commerce

E-commerce is the industry where recommendation systems were first widely used. With millions of customers and data on their online behavior, e-commerce companies are best suited to generate accurate recommendations.

2. Retail

Target reportedly unsettled shoppers in the 2000s when its systems were able to predict pregnancies from shopping data before customers had disclosed them. Shopping data is the most valuable data, as it is the most direct data point on a customer’s intent. Retailers with troves of shopping data are at the forefront of companies making accurate recommendations.

3. Media

Similar to e-commerce, media businesses were among the first to adopt recommendations. It is difficult to find a news site without a recommendation system.

4. Banking

Banking is a mass-market product that is consumed digitally by millions. Banking for the masses and SMEs is a prime candidate for recommendations. Knowing a customer’s detailed financial situation, along with their past preferences, coupled with data on thousands of similar users, is quite powerful.

5. Telecom

Telecom shares similar dynamics with banking. Telcos have access to millions of customers whose every interaction is recorded. Their product range is also rather limited compared to other industries, making recommendations in telecom an easier problem.

6. Utilities

Utilities share similar dynamics with telecom, but they have an even narrower range of products, making recommendations rather simple.

Examples from companies that use a recommendation engine

1. Amazon.com

Amazon.com uses item-to-item collaborative filtering recommendations on most pages of its website and in its e-mail campaigns. According to McKinsey, 35% of Amazon purchases are thanks to recommendation systems. Some examples of where Amazon uses recommendation systems are:

2. Netflix

Netflix is another data-driven company that leverages recommendation systems to boost customer satisfaction.
The same McKinsey study mentioned above highlights that 75% of Netflix viewing is driven by recommendations. In fact, Netflix is so focused on providing the best results for users that it held a data science competition called the Netflix Prize, in which the team with the most accurate movie recommendation algorithm won a prize of $1,000,000.

3. Spotify

Every week, Spotify generates a new customized playlist for each subscriber called “Discover Weekly”, a personalized list of 30 songs based on the user’s unique music tastes. Its acquisition of Echo Nest, a music intelligence and data-analytics startup, enabled it to create a music recommendation engine that uses three different types of recommendation models:

4. LinkedIn

Just like any other social media channel, LinkedIn also uses “You may also know” or “You may also like” types of recommendations.

Setting up a recommendation system

While most companies would benefit from adopting an existing solution, companies in niche categories or operating at very high scale could experiment with building their own recommendation engine.

1. Using an out-of-the-box solution

Recommendation systems are one of the earliest and most mature AI use cases. As of January 2022, we have identified 10+ products in this domain. Visit our guide on recommendation systems to see all the vendors and learn more about specific recommendation engines. The advantages of this approach include fast implementation and highly accurate results for most cases:

To pick the right system, you can use historical or, even better, live data to test the effectiveness of different systems quickly.

2. Building your own solution

This can make sense if

Recommendation systems in the market today use logic like: customers with similar purchase and browsing histories will purchase similar products in the future. To make such a system work, you need either a large number of historical transactions or detailed data on your users’ behavior on other websites. If you need such data, you could search for it in data marketplaces. More data and better algorithms improve recommendations. You need to make use of all relevant data in your company, and you could expand your customer data with third-party data. If a regular customer of yours has been looking for red sneakers on other websites, why shouldn’t you show them a great pair when they visit your website?

3. Working with a consultant to build your own solution

A slightly better recommendation engine could boost a company’s sales by a few percentage points, which could dramatically change the profitability of a company with low profit margins. Therefore, it can make sense to invest in building better recommendation engines if the company is not getting satisfactory results from existing solution providers in the market. AI consultants can help build specific models.

4. Running a data science competition to build your own solution

One possible approach is to use the wisdom of the crowd to build such systems. Companies can use encrypted historical data, launch data science competitions or work with consultants and get models providing highly effective recommendations.

Further reading

AI is not only applied to recommendation personalization. You can check out AI applications in marketing, sales, customer service, IT, data or analytics.
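As a rough illustration of the logic described under “Building your own solution” (customers with similar purchase histories tend to buy similar products), the sketch below implements a tiny item-to-item collaborative filter over purchase sets. The function names, the cosine-over-buyer-sets similarity, and the example customers are assumptions made for illustration; they are not any vendor's actual implementation.

```python
from collections import defaultdict
from math import sqrt


def item_similarities(purchases):
    """Cosine similarity between items, based on which users bought them.

    purchases: dict mapping user -> set of purchased items.
    Returns a dict mapping (item_a, item_b) -> similarity in (0, 1].
    """
    buyers = defaultdict(set)  # item -> users who bought it
    for user, items in purchases.items():
        for item in items:
            buyers[item].add(user)

    sims = {}
    for a in buyers:
        for b in buyers:
            if a == b:
                continue
            shared = len(buyers[a] & buyers[b])
            if shared:
                sims[(a, b)] = shared / sqrt(len(buyers[a]) * len(buyers[b]))
    return sims


def recommend(user, purchases, top_n=3):
    """Score items the user does not own by similarity to items they own."""
    sims = item_similarities(purchases)
    owned = purchases[user]
    scores = defaultdict(float)
    for (a, b), sim in sims.items():
        if a in owned and b not in owned:
            scores[b] += sim
    # Highest score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda item: (-scores[item], item))[:top_n]


# Hypothetical purchase histories.
purchases = {
    "ann": {"sneakers", "socks"},
    "bob": {"sneakers", "socks", "laces"},
    "cat": {"sneakers", "laces"},
    "dan": {"boots"},
}
print(recommend("ann", purchases))
```

The sketch also shows why such systems need many historical transactions: a customer like "dan", whose only purchase is shared with nobody, receives no recommendations at all.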

Recommendation engine

Recostream is an AI/ML personalized recommendation engine offered as a SaaS service for online stores of any size. The software is built on the foundation of Openkoda, utilizing a significant number of pre-existing features within the platform.

Key features of the application

The ambitious scope of the product and the fast-paced nature of the ecommerce industry required to:

Ideally, the implementation should focus on research and development of the recommendation engine’s machine learning algorithms, with the rest of the system delivered with minimal development effort, if possible.  Recostream’s engineering team decided to use Openkoda as the foundation for the recommendation service, as a significant number of ready-to-use Openkoda features were already available in the platform. The team started with a smaller PoC focused on building the prototype of the recommendation engine only, exploring and testing different machine learning algorithms and scaling the research prototypes to handle real, massive amounts of collected data describing shopping behavior and user interactions. Once the system was validated and promoted from the experimental phase to production, the same team of developers was able to take over Openkoda and build a full enterprise SaaS service around the engine, reusing a large number of features, components, and integrations that already existed in Openkoda. The engineering team of only 4 developers was able to evolve the bare prototype of the recommendation engine into a working product prototype and start the first implementations within two months after the PoC phase was completed. Such a short delivery time was made possible by adding their highly specialized IP behind the developed AI/ML recommendation engine to an enterprise-ready SaaS platform provided by Openkoda. Since the Openkoda platform is freely available as an open-source system under the MIT license , there were no licensing costs associated with using Openkoda in the project.  Recostream quickly became an industry-recognized product recommendation service for ecommerce stores of all sizes and was acquired two years later by GetResponse , a global leader in marketing automation. Read more about the product history and the acquisition . The Recostream product is now a part of the larger offering from GetResponse. 
What is Openkoda?

Openkoda enables faster application development. This AI-powered open source platform provides pre-built application templates and customizable solutions to help you quickly build any functionality you need to run your business. With Openkoda, you can achieve up to 60% faster development and significant cost savings.


A content-based dataset recommendation system for researchers—a case study on Gene Expression Omnibus (GEO) repository

Braja Gopal Patra, Kirk Roberts, Hulin Wu, A content-based dataset recommendation system for researchers—a case study on Gene Expression Omnibus (GEO) repository, Database , Volume 2020, 2020, baaa064, https://doi.org/10.1093/database/baaa064

It is a growing trend among researchers to make their data publicly available for experimental reproducibility and data reusability. Sharing data with fellow researchers helps in increasing the visibility of the work. On the other hand, there are researchers who are inhibited by the lack of data resources. To overcome this challenge, many repositories and knowledge bases have been established to date to ease data sharing. Further, in the past two decades, there has been an exponential increase in the number of datasets added to these dataset repositories. However, most of these repositories are domain-specific, and none of them can recommend datasets to researchers/users. Naturally, it is challenging for a researcher to keep track of all the relevant repositories for potential use. Thus, a dataset recommender system that recommends datasets to a researcher based on previous publications can enhance their productivity and expedite further research. This work adopts an information retrieval (IR) paradigm for dataset recommendation. We hypothesize that two fundamental differences exist between dataset recommendation and PubMed-style biomedical IR beyond the corpus. First, instead of keywords, the query is the researcher, embodied by his or her publications. Second, to filter the relevant datasets from non-relevant ones, researchers are better represented by a set of interests, as opposed to the entire body of their research. This second approach is implemented using a non-parametric clustering technique. These clusters are used to recommend datasets for each researcher using the cosine similarity between the vector representations of publication clusters and datasets. The maximum normalized discounted cumulative gain at 10 (NDCG@10), precision at 10 (p@10) partial and p@10 strict of 0.89, 0.78 and 0.61, respectively, were obtained using the proposed method after manual evaluation by five researchers. 
To the best of our knowledge, this is the first study of its kind on content-based dataset recommendation. We hope that this system will further promote data sharing, offset the researchers’ workload in identifying the right dataset and increase the reusability of biomedical datasets.

Database URL: http://genestudy.org/recommends/#/

Introduction

In the Big Data era, extensive amounts of data have been generated for scientific discoveries. However, storing, accessing, analyzing and sharing a vast amount of data are becoming major bottlenecks for scientific research. Furthermore, making a large amount of public scientific data findable, accessible, interoperable and reusable is a challenging task. The research community has devoted substantial effort to enable data sharing. Promoting existing datasets for reuse is a major initiative that gained momentum in the past decade ( 1 ). Many repositories and knowledge bases have been established for specific types of data and domains. Gene Expression Omnibus (GEO) (https://www.ncbi.nlm.nih.gov/geo/), UKBioBank (https://www.ukbiobank.ac.uk/), ImmPort (https://www.immport.org/shared/home) and TCGA (https://portal.gdc.cancer.gov/) are some examples of repositories for biomedical datasets. DATA.GOV archives the U.S. Government’s open data related to agriculture, climate, education, etc. for research use. However, a researcher looking for previous datasets on a topic still has to painstakingly visit all the individual repositories to find relevant datasets. This is a tedious and time-consuming process.

An initiative was taken by the developers of DataMed (https://datamed.org) to solve the aforementioned issues for the biomedical community by combining biomedical repositories together and enhancing query searching based on advanced natural language processing (NLP) techniques ( 1 , 2 ). DataMed indexes diverse categories of biomedical datasets and provides the functionality to search them ( 1 ).
The research focus of this last work was retrieving datasets using a focused query. In addition, the biomedical and healthCAre Data Discovery Index Ecosystem (bioCADDIE) dataset retrieval challenge was organized in 2016 to evaluate the effectiveness of information retrieval (IR) techniques in identifying relevant biomedical datasets in DataMed ( 3 ). Among the teams that participated in this shared task, the prevalent approaches for searching datasets using a query were probabilistic or machine learning based IR ( 4 ), medical subject headings (MeSH) term based query expansion ( 5 ), word embeddings and named entity identification ( 6 ), and re-ranking ( 7 ). Similarly, a specialized search engine named Omicseq was developed for retrieving omics data ( 8 ). Google Dataset Search (https://toolbox.google.com/datasetsearch) provides the facility to search datasets on the web, similar to DataMed. While DataMed indexes only biomedical domain data, indexing in Google Dataset Search covers data across several domains.

Datasets are created and added to repositories frequently, which makes it difficult for a researcher to know and keep track of all datasets. Further, search engines such as DataMed or Google Dataset Search are helpful when the user knows what type of dataset to search for, but determining the user intent in web searches is a difficult problem due to the sparse data available concerning the searcher ( 9 ). To overcome the aforementioned problems and make dataset search more user-friendly, a dataset recommendation system based on a researcher’s profile is proposed here. The publications of researchers indicate their academic interest, and this information can be used to recommend datasets. Recommending a dataset to an appropriate researcher is a new field of research. There are many datasets available that may be useful to certain researchers for further exploration, and this important aspect of dataset recommendation has not been explored earlier.
Recommendation systems, or recommenders, are an information filtering system that deploys data mining and analytics of users’ behaviors, including preferences and activities, for predictions of users’ interests on information, products or services. Research publications in recommendation systems can be broadly grouped as content-based or collaborative filtering recommendation systems ( 10 ). This article describes the development of a recommendation system for scholarly use. In general, developing a scholarly recommendation system is both challenging and unique because semantic information plays an important role in this context, as inputs such as title, abstract and keywords need to be considered ( 11 ). The usefulness of similar research article recommendation systems has been established by the acceptance of applications such as Google Scholar (https://scholar.google.com/), Academia.edu (https://www.academia.edu/), ResearchGate (https://www.researchgate.net/), Semantic Scholar (https://www.semanticscholar.org/) and PubMed (https://www.ncbi.nlm.nih.gov/pubmed/) by the research community. Dataset recommendation is a challenging task due to the following reasons. First, while standardized formats for dataset metadata exist ( 12 ), no such standard has achieved universal adoption, and researchers use their own convention to describe their datasets. Further, many datasets do not have proper metadata, which makes the prepared dataset difficult to reuse/recommend. Second, there are many dataset repositories with the same dataset in different formats, making recommendation a challenging task. Additionally, the dataset recommendation system should be scalable to the increasing number of online datasets. We cast the problem of recommending datasets to researchers as a ranking problem of datasets matched against the researcher’s individual publication(s). 
The recommendation system can be viewed as an IR system where the most similar datasets can be retrieved for a researcher using his/her publications.

Data linking, or identifying and clustering similar datasets, has received relatively little attention in research on recommendation systems. Previous work on this topic includes ( 13–15 ). Reference ( 13 ) defined dataset recommendation as the problem of computing a rank score for each of a set of target datasets ( D T ) so that the rank score indicates the relatedness of D T to a given source dataset ( D S ). The rank scores provide information on the likelihood of a D T to contain linking candidates for D S . Reference ( 15 ) proposed a dataset recommendation system by first creating similarity-based dataset networks, and then recommending connected datasets to users for each dataset searched. Despite the promising result, this approach suffers from the cold start problem. Here, the cold start problem refers to the user’s initial dataset selection, where the user has no idea what dataset to select or search for. If a user chooses a wrong dataset initially, then the system will always recommend wrong datasets to the user.

Some experiments were performed to identify datasets shared in the biomedical literature ( 16–18 ). Reference ( 17 ) identified data shared in biomedical literature articles using regular expression patterns and machine learning algorithms. Reference ( 16 ) identified datasets in social sciences papers using a semi-automatic method. The last system reportedly performed well (F-measure of 0.83) in finding datasets in the da|ra dataset registry. Different deep learning methods were used to extract dataset mentions in publications and to link each mention text fragment to a particular dataset in the knowledge base ( 18 ).
Further, a content-based recommendation system was developed for recommending literature for datasets in ( 11 ), which was the first step toward developing a literature recommendation tool by recommending relevant literature for datasets.

This article proposes a dataset recommender that recommends datasets to researchers based on their publications. We collected dataset metadata (title and summary) from GEO and researchers’ publications (title, abstract and year of publication) from PubMed using name and curriculum vitae (CV) for developing a dataset recommendation system. A vector space model (VSM) is used to compare publications and datasets. We propose two novel ideas: (i) a method for representing researchers with multiple vectors reflecting each researcher’s diverse interests, and (ii) a system for recommending datasets to researchers based on their research vectors.

For the datasets, we focus on GEO (https://www.ncbi.nlm.nih.gov/geo/). GEO is a public repository for high-throughput microarray and next-generation sequence functional genomics data. It was found that an average of 21 datasets were added daily in the last 6 years (i.e. 2014–19). This gives a glimpse of the increasing number of datasets being made available online, considering that there are many other online data repositories as well. Many of these datasets were collected at significant expense, and most of these datasets were used only once. We believe that reusability of these datasets can be improved by recommending them to appropriate researchers.

Efforts on restructuring GEO have been made by curating the available metadata. In reference ( 19 ), the authors identified the important keywords present in the dataset descriptions and searched for other similar datasets. As another effort on restructuring the GEO database, ReGEO (http://regeo.org/) was developed by ( 20 ), who identified important metadata such as time points and cell lines for datasets using automated NLP techniques.
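The two ideas above (several interest vectors per researcher, and ranking datasets by similarity to those vectors) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' pipeline: it uses raw term counts as the vector space, takes the interest clusters as already given rather than deriving them with non-parametric clustering, and scores each dataset by its maximum cosine similarity over the researcher's clusters. The function names, dataset identifiers and texts are hypothetical.

```python
from collections import Counter
from math import sqrt


def vectorize(text):
    """Bag-of-words term-count vector (an assumed, simplified VSM)."""
    return Counter(text.lower().split())


def cosine(u, v):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(u[t] * v[t] for t in u.keys() & v.keys())
    norm = sqrt(sum(c * c for c in u.values())) * sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


def rank_datasets(interest_clusters, datasets, top_k=10):
    """Rank datasets for one researcher.

    interest_clusters: list of clusters, each a list of publication texts.
    datasets:          dict mapping dataset id -> metadata text.
    Each dataset is scored by its best match over all interest clusters
    (the max aggregation is an assumption of this sketch).
    """
    cluster_vecs = [vectorize(" ".join(docs)) for docs in interest_clusters]
    scored = []
    for ds_id, text in datasets.items():
        ds_vec = vectorize(text)
        score = max((cosine(c, ds_vec) for c in cluster_vecs), default=0.0)
        scored.append((score, ds_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [ds_id for _, ds_id in scored[:top_k]]


# Hypothetical researcher with two distinct interests.
clusters = [
    ["influenza vaccine immune response", "immune response to influenza infection"],
    ["breast cancer gene expression"],
]
# Hypothetical dataset metadata (ids are placeholders, not real GEO series).
datasets = {
    "GSE_A": "gene expression in breast cancer tumors",
    "GSE_B": "immune response after influenza vaccine",
    "GSE_C": "soil bacteria diversity",
}
print(rank_datasets(clusters, datasets))
```

Keeping one vector per interest means a dataset only has to match one line of a researcher's work to rank highly, whereas a single averaged vector would dilute each interest with the others.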
We developed this dataset recommendation system for researchers as a part of the dataset reusability platform for GEO (GETc Research Platform, http://genestudy.org/) developed at the University of Texas Health Science Center at Houston. This website recommends datasets to users using their publications.

The rest of the article is organized in the following manner. Section 2 provides an overview of GEO datasets and researcher publications. Methods used for developing the recommendation system and evaluation techniques used in this experiment are described in Section 3. Section 4 describes results. Section 5 provides a discussion. Finally, conclusion and future directions are discussed in Section 6.

The proposed dataset recommendation system requires both dataset metadata and the user profile for which datasets will be recommended. We collected metadata of datasets from the GEO repository, and researcher publications from PubMed using their names and CVs. The data collection methods and summaries of data are discussed next.

GEO Datasets

GEO is one of the most popular public repositories for functional genomics data. As of December 18, 2019, there were 122 222 series of datasets available in GEO. Histograms of datasets submitted to GEO per day and per year, as presented in Figure 1, showed an increasing trend of submitting datasets to GEO, which justified our selection of this repository for developing the recommendation system.

[Figure 1: Histogram of datasets submitted to GEO based on datasets collected on December 18, 2019]

[Figure: Overview of dataset indexing pipeline]

[Table: Statistics of datasets collected from GEO]