Scientific Misconduct and Medical Journals

- 1 JAMA and the JAMA Network, Chicago, Illinois

According to the US Department of Health and Human Services, “Research misconduct means fabrication, falsification, or plagiarism in proposing, performing, or reviewing research, or in reporting research results.” 1 Other important irregularities involving the biomedical research process include, but are not limited to, ethical issues (eg, failure to obtain informed consent, failure to obtain appropriate approval from an institutional review board, and mistreatment of research participants), issues involving authorship responsibilities and disputes, duplicate publication, and failure to report conflicts of interest. When authors are found to have been involved with research misconduct or other serious irregularities involving articles that have been published in scientific journals, editors have a responsibility to ensure the accuracy and integrity of the scientific record. 2 , 3

Although little is known about the prevalence of scientific misconduct, several studies with limited methods have estimated that 1% to 2% of scientists have been involved in it. 4 - 6 During the last 5 years, JAMA and the JAMA Network journals have published 12 notices of Retraction about 15 articles (including recent Retractions of 6 articles by the same author) 7 and 6 notices of Expression of Concern about 9 articles. These notices were published primarily because the original studies were found to involve fabrication or falsification of data that invalidated the research and the published articles; in some cases, postpublication investigations could not provide evidence that the original research was valid. Since 2015, JAMA and the JAMA Network journals have also retracted and replaced 12 articles because of inadvertent pervasive errors resulting from incorrect data coding or incorrect analyses, without evidence of research misconduct. 8 During the same period, 1021 correction notices have been published in these journals. The JAMA Network policies regarding corrections and retraction with replacement have been published previously. 8 , 9 This Editorial focuses on a more complex and challenging issue: scientific misconduct involving fabrication, falsification, and plagiarism in the reporting of research. 1

The Role and Responsibilities of Editors

JAMA and the JAMA Network journals receive numerous communications from readers, such as letters to the editor and emails, that are critical of published content. Most of these critiques involve matters of interpretation, requests for clarification, or differences of opinion; some raise ethical concerns, some are frivolous, and some call for retraction. However, these journals typically receive 10 to 12 allegations of scientific misconduct each year. All such allegations involving articles published in JAMA and the JAMA Network journals are evaluated and managed by the senior staff of JAMA, including the editor in chief of JAMA, the executive editor, the executive managing editor, and the editorial counsel, which provides a consistent process for dealing with potential scientific misconduct. If the allegation involves an article published in a network journal, the editor in chief of that journal is involved and kept informed of the progress of the investigation. When necessary, additional expertise is obtained.

Allegations of scientific misconduct brought to journals are challenging and time-consuming for authors, for editors, and potentially for institutions. The first step is to determine whether the allegation is valid and whether it is consistent with the definition of research misconduct. In some cases, when authors are accused of misconduct, the criticism reflects a different interpretation of the data or disagreement with the statistical approach used, rather than scientific misconduct. This initial step also involves determining whether the individuals alleging misconduct have relevant conflicts of interest. In some cases, it appears that financial interests and strongly held views (intellectual conflict of interest) may have prompted the allegation. Potential conflicts of interest on the part of the persons bringing the allegations do not preclude the possibility of scientific misconduct on the part of the authors; rather, evaluation of conflict of interest is part of the assessment process.

If scientific misconduct or another substantial research irregularity is a possibility, the allegations are shared with the corresponding author, who is asked to provide a detailed response on behalf of all the coauthors. Depending on the nature of the allegation, some authors take months to respond. After the response is received and evaluated, additional review and involvement of experts (such as statistical reviewers) may be obtained. In most cases, the authors’ responses and the additional information they provide are sufficient to determine whether the allegations are likely to represent misconduct. When misconduct is unlikely to have occurred, it is sufficient to publish clarifications, additional analyses, or both as letters to the editor, often accompanied by a correction notice and a correction to the published article. To date, JAMA has had very few disagreements with individuals making allegations of scientific misconduct, although some have criticized the time it has taken JAMA and other journals to resolve an issue of alleged scientific misconduct. 10 - 12

However, if the authors’ responses to the allegations raised are unsatisfactory or unconvincing, or if there is any doubt as to whether scientific misconduct has occurred, additional information and investigation are usually necessary, and the appropriate institution is contacted with a request to conduct a formal evaluation. At that time, and depending on the nature of the allegations, the journal may publish a notice of Expression of Concern about the published reports in question, indicating that issues of validity or other concerns have arisen and are under investigation. 2

Involving institutions is done with great care for several reasons. First, even an allegation of misconduct can harm an individual’s reputation. Individuals facing such allegations have expressed this concern, and notifying an institution increases the scrutiny directed toward the person involved. In these cases, institutions are responsible for ensuring appropriate due process and confidentiality, based on their policies and procedures. Second, just as JAMA receives allegations of scientific misconduct and research irregularities, so do institutions. It is simply not possible for every institution to conduct a detailed investigation of every allegation it receives. Thus, JAMA and the JAMA Network journals ask institutions to become involved only after determining that scientific misconduct is a possibility and that the authors have not adequately responded to the concerns raised.

The Role and Responsibilities of Institutions

Institutions are expected to conduct an appropriate and thorough investigation of allegations of scientific misconduct. Some institutions respond immediately, acknowledging receipt of the journal’s letter describing the concerns and quickly beginning an investigation. In other cases, it may take time to identify the appropriate institutional individuals to contact, and even then, many months to receive a response. Some institutions appear well equipped to conduct investigations, whereas others appear to have little experience in such matters or fail to conduct adequate investigations 13; these institutions can take months to years to provide JAMA with an adequate response. In some cases involving questions of misconduct outside the United States, institutions have indicated that further investigation must wait until numerous legal issues are resolved, further delaying a response.

The type of investigation an institution conducts depends on the specific allegations and the institutional policies and procedures. In some cases, the investigation has involved reviewing the data, the article and related articles, and the analysis. In other cases, the investigation has involved reanalysis by the authors, or independent statistical analysis by a third party not involved in the initial study. Other cases have involved investigation of ethical issues related to the research, such as appropriate ethical review and approval of the study, informed consent for study participants, and notification of study participants about information related to risks of an intervention. No single approach is appropriate in all cases, but rather it depends on the specific allegation. In 2017, a group of representatives who deal with scientific misconduct, including university and institutional leaders and research integrity officers, federal officials, researchers, journal editors, journalists, and attorneys representing respondents, whistle-blowers, and institutions, examined best and failed practices related to institutional investigation of scientific misconduct. 14 The group developed a checklist that can be used by institutions to follow reasonable standards to investigate an allegation of scientific misconduct and to provide an appropriate and complete report following the investigation. 14

JAMA editors ask institutions to provide periodic updates on the status of an investigation, and once the investigation is completed, institutions are expected to provide the editors with a detailed report of their findings. For cases in which misconduct has been identified, the institution and the authors may recommend and request retraction of the published article. In other cases, the journal editors use the institution’s report to determine what actions are needed. These actions include deciding whether an article should be retracted or, when a notice of Expression of Concern has already been posted, whether it should be followed by a notice of Retraction. In each case, the notices are linked to and from the original article, and retracted articles are clearly watermarked as retracted so that readers and researchers are alerted to the invalid nature of the original articles. 2

Conclusions

Allegations of scientific misconduct are challenging. Not all such allegations warrant investigation, but some require extensive evaluation. JAMA reviews its approach to allegations of scientific misconduct on a regular basis to ensure that the process is timely, objective, and fair to authors and their institutions, and results in evidence that will directly address the allegations of misconduct. Ultimately, authors, journals, and institutions have an important obligation to ensure the accuracy of the scientific record. By responding appropriately to concerns about scientific misconduct, and taking necessary actions based on evaluation of these concerns, such as corrections, retractions with replacement, notices of Expression of Concern, and Retractions, JAMA and the JAMA Network journals will continue to fulfill the responsibilities of ensuring the validity and integrity of the scientific record.

Corresponding Author: Howard Bauchner, MD, Editor in Chief, JAMA, 330 N Wabash Ave, Chicago, IL 60611.

Published Online: October 19, 2018. doi:10.1001/jama.2018.14350

Conflict of Interest Disclosures: All authors have completed and submitted the ICMJE Form for Disclosure of Potential Conflicts of Interest. Ms Flanagin reports serving as an unpaid member of the board of STM: International Association of Scientific, Technical, and Medical Publishers. No other disclosures were reported.

Bauchner H, Fontanarosa PB, Flanagin A, Thornton J. Scientific Misconduct and Medical Journals. JAMA. 2018;320(19):1985–1987. doi:10.1001/jama.2018.14350

- Research article

- Open access

- Published: 30 April 2021

A scoping review of the literature featuring research ethics and research integrity cases

Anna Catharina Vieira Armond¹ (ORCID: orcid.org/0000-0002-7121-5354), Bert Gordijn², Jonathan Lewis², Mohammad Hosseini², János Kristóf Bodnár¹, Soren Holm³,⁴ and Péter Kakuk⁵

BMC Medical Ethics, volume 22, Article number: 50 (2021)

The areas of Research Ethics (RE) and Research Integrity (RI) are rapidly evolving. Cases of research misconduct, other transgressions related to RE and RI, and forms of ethically questionable behaviors have been frequently published. The objective of this scoping review was to collect RE and RI cases, analyze their main characteristics, and discuss how these cases are represented in the scientific literature.

The search included cases involving a violation of, or misbehavior, poor judgment, or detrimental research practice in relation to, a normative framework. A search was conducted in PubMed, Web of Science, SCOPUS, JSTOR, Ovid, and Science Direct in March 2018, without language or date restrictions. Data relating to the articles and the cases were extracted from the case descriptions.

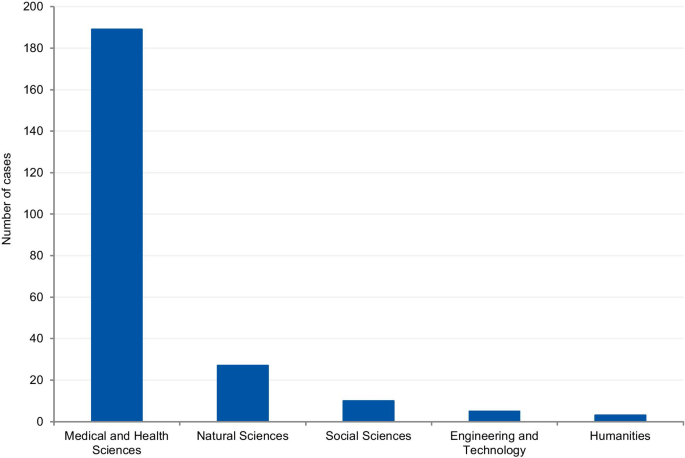

A total of 14,719 records were identified, and 388 items were included in the qualitative synthesis. These papers contained 500 case descriptions; after the eligibility criteria were applied, 238 cases were included in the analysis. In the case analysis, fabrication and falsification were the most frequently tagged violations (44.9%). Non-adherence to pertinent laws and regulations, such as lack of informed consent and REC approval, was the second most frequently tagged violation (15.7%), followed by patient safety issues (11.1%) and plagiarism (6.9%). Of the cases, 80.8% were from the Medical and Health Sciences, 11.5% from the Natural Sciences, 4.3% from the Social Sciences, 2.1% from Engineering and Technology, and 1.3% from the Humanities. Paper retraction was the most prevalent sanction (45.4%), followed by exclusion from funding applications (35.5%).

Conclusions

Case descriptions found in academic journals are dominated by discussions of prominent cases and are mainly published in the news sections of journals. Our results show that biomedical research cases are overrepresented relative to other scientific fields when compared with biomedicine’s share of scientific publications. The cases mostly involve fabrication, falsification, and patient safety issues. This finding could have a significant impact on the academic representation of misbehaviors. The predominance of fabrication and falsification cases might divert the attention of the academic community from relevant but less visible violations, and from recently emerging forms of misbehavior.

There has been an increase in academic interest in research ethics (RE) and research integrity (RI) over the past decade. This is due, among other reasons, to the changing research environment with new and complex technologies, increased pressure to publish, greater competition in grant applications, increased university-industry collaborative programs, and growth in international collaborations [ 1 ]. In addition, part of the academic interest in RE and RI is due to highly publicized cases of misconduct [ 2 ].

There is a growing body of published RE and RI cases, which may influence public attitudes toward both science and scientists [ 3 ]. Different approaches have been used to analyze RE and RI cases. Studies focusing on files of the Office of Research Integrity (ORI) [ 2 ], retracted papers [ 4 ], quantitative surveys [ 5 ], data audits [ 6 ], and media coverage [ 3 ] have been conducted to understand the context, causes, and consequences of these cases.

Analyses of RE and RI cases often influence policies on responsible conduct of research [ 1 ]. Moreover, details about cases facilitate a broader understanding of issues related to RE and RI and can drive interventions to address them. Currently, there are no comprehensive studies that have collected and evaluated the RE and RI cases available in the academic literature. This review was developed by members of the EnTIRE consortium to generate information on the cases, which will be made available on the Embassy of Good Science platform ( www.embassy.science ). Two separate analyses were conducted. The first uses the identified research articles to explore how the literature presents cases of RE and RI in relation to year of publication, country, article genre, and violation involved. The second uses the cases extracted from the literature to characterize them with respect to the violations involved, sanctions, and field of science.

This scoping review was performed according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement and the PRISMA Extension for Scoping Reviews (PRISMA-ScR). The full protocol was preregistered and is available at https://ec.europa.eu/research/participants/documents/downloadPublic?documentIds=080166e5bde92120&appId=PPGMS .

Eligibility

Articles with non-fictional case(s) involving a violation of, or misbehavior, poor judgment, or detrimental research practice in relation to, a normative framework were included. Cases unrelated to scientific activities, research institutions, academic or industrial research, and publication were excluded. Articles that did not contain a substantial description of the case were also excluded.

A normative framework consists of explicit rules, formulated in laws, regulations, codes, and guidelines, as well as implicit rules, which structure local research practices and influence the application of explicitly formulated rules. Therefore, if a case involves a violation of, or misbehavior, poor judgment, or detrimental research practice in relation to, a normative framework, then it does so on the basis of explicit and/or implicit rules governing RE and RI practice.

Search strategy

A search was conducted in PubMed, Web of Science, SCOPUS, JSTOR, Ovid, and Science Direct in March 2018, without any language or date restrictions. Two parallel searches were performed with two sets of medical subject heading (MeSH) terms, one for RE and another for RI. The parallel searches generated two sets of data thereby enabling us to analyze and further investigate the overlaps in, differences in, and evolution of, the representation of RE and RI cases in the academic literature. The terms used in the first search were: (("research ethics") AND (violation OR unethical OR misconduct)). The terms used in the parallel search were: (("research integrity") AND (violation OR unethical OR misconduct)). The search strategy’s validity was tested in a pilot search, in which different keyword combinations and search strings were used, and the abstracts of the first hundred hits in each database were read (Additional file 1 ).

After searching the databases with these two search strings, the titles and abstracts of extracted items were read by three contributors independently (ACVA, PK, and KB). Articles that could potentially meet the inclusion criteria were identified. After independent reading, the three contributors compared their results to determine which studies were to be included in the next stage. In case of a disagreement, items were reassessed in order to reach a consensus. Subsequently, qualified items were read in full.

Data extraction

Data extraction was divided among three assessors (ACVA, PK and KB). Each list of extracted data generated by one assessor was cross-checked by the other two. In case of any inconsistencies, the case was reassessed to reach a consensus. The following categories were employed to analyze the data of each extracted item (where available): (I) author(s); (II) title; (III) year of publication; (IV) country (according to the first author's affiliation); (V) article genre; (VI) year of the case; (VII) country in which the case took place; (VIII) institution(s) and person(s) involved; (IX) field of science (FOS-OECD classification) [ 7 ]; (X) types of violation (see below); (XI) case description; and (XII) consequences for persons or institutions involved in the case.

Two sets of data were created after the data extraction process. One set was used for the analysis of articles and their representation in the literature, and the other set was created for the analysis of cases. In the set for the analysis of articles, all eligible items, including duplicate cases (cases found in more than one paper, e.g. Hwang case, Baltimore case) were included. The aim was to understand the historical aspects of violations reported in the literature as well as the paper genre in which cases are described and discussed. For this set, the variables of the year of publication (III); country (IV); article genre (V); and types of violation (X) were analyzed.

For the analysis of cases, all duplicated cases and cases that did not contain enough information about particularities to differentiate them from others (e.g. names of the people or institutions involved, country, date) were excluded. In this set, prominent cases (i.e. those found in more than one paper) were listed only once, generating a set containing solely unique cases. These additional exclusion criteria were applied to avoid multiple representations of cases. For the analysis of cases, the variables: (VI) year of the case; (VII) country in which the case took place; (VIII) institution(s) and person(s) involved; (IX) field of science (FOS-OECD classification); (X) types of violation; (XI) case details; and (XII) consequences for persons or institutions involved in the case were considered.

Article genre classification

We used ten categories to capture differences in genre. We assigned a case description to the “news” genre if the case was published in the news section of a scientific journal or in a newspaper. Although we did not develop a search strategy for newspaper articles, some newspapers (e.g. the New York Times) are indexed in scientific databases such as PubMed. The same method was used to allocate case descriptions to “editorial”, “commentary”, “misconduct notice”, “retraction notice”, “review”, “letter” or “book review”. We applied the “case analysis” genre if a case description included a normative analysis of the case. The “educational” genre was used when a case description was incorporated to illustrate RE and RI guidelines or institutional policies.

Categorization of violations

For the extraction process, we used the articles’ own terminology for the violations or ethical issues involved in each event (e.g. plagiarism, falsification, ghost authorship, conflict of interest) to tag each article. Where this terminology was incompatible with the case description, other categories were added to the original terminology for the same case. The resulting list of terms was then standardized using the list of major and minor misbehaviors developed by Bouter and colleagues [ 8 ]. This list consists of 60 items classified into four categories: study design, data collection, reporting, and collaboration issues (Additional file 2 ).

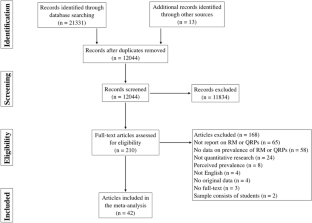

Systematic search

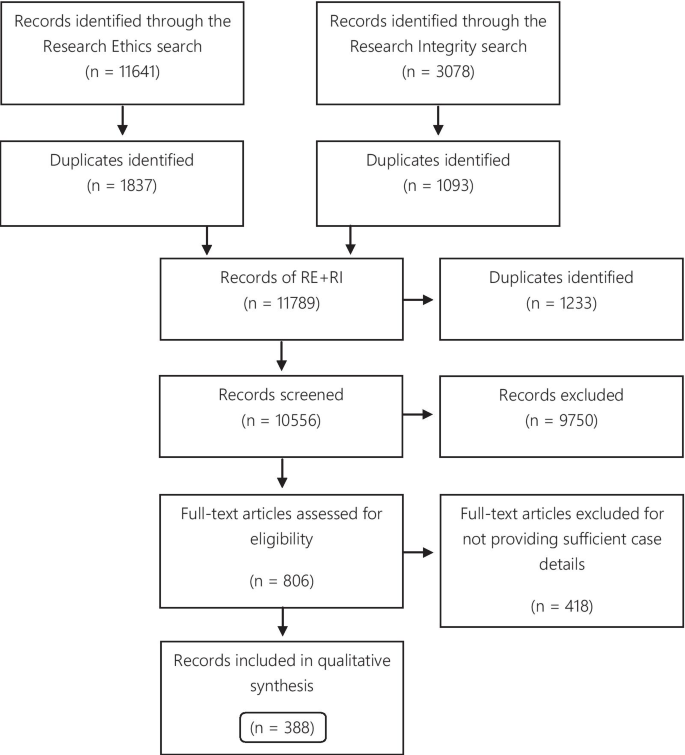

A total of 11,641 records were identified through the RE search and 3078 through the RI search. The results of the parallel searches were combined and duplicates removed. The remaining 10,556 records were screened, and 9750 items were excluded at this stage because they did not fulfill the inclusion criteria. The remaining 806 items were selected for full-text reading, after which 388 articles were included in the qualitative synthesis (Fig. 1 ).
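The screening flow in this paragraph can be checked step by step. The worked arithmetic below uses only the counts reported here. The number of duplicates removed (4,163) and the number of items excluded after full-text reading (418) are implied differences, not figures the authors state.

$$
\begin{aligned}
11{,}641 + 3{,}078 &= 14{,}719 &&\text{records identified (RE + RI searches)}\\
14{,}719 - 10{,}556 &= 4{,}163 &&\text{duplicates removed}\\
10{,}556 - 9{,}750 &= 806 &&\text{items read in full}\\
806 - 388 &= 418 &&\text{items excluded after full-text reading}
\end{aligned}
$$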

Fig. 1 Flow diagram

Of the 388 articles, 157 were identified only via the RE search, 87 only via the RI search, and 144 via both search strategies. The eligible articles contained 500 case descriptions, which were used for the analysis of the articles. Of these descriptions, 256 discussed the same 50 cases. The Hwang case was the most frequently described case, discussed in 27 articles, and the 10 most frequently described cases were found in 132 articles (Table 1 ).

For the analysis of cases, 206 duplicate case descriptions (41.2% of all case descriptions) were excluded. A further 56 (11.2%) were excluded for not providing enough information to distinguish them from other cases, resulting in 238 eligible cases.
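The step from 500 case descriptions to 238 unique cases can be reconstructed from this paragraph and the one before it. We read the 206 excluded duplicates as the 256 descriptions of the 50 recurring cases, minus one retained description per case. This is an interpretation rather than the authors' stated method, but it reproduces the reported figure exactly:

$$
\begin{aligned}
157 + 87 + 144 &= 388 &&\text{articles (RE only + RI only + both)}\\
256 - 50 &= 206 &&\text{duplicate case descriptions}\\
500 - 206 - 56 &= 238 &&\text{eligible unique cases}\\
206 / 500 &= 41.2\% &&\text{share excluded as duplicates}\\
56 / 500 &= 11.2\% &&\text{share excluded for insufficient information}
\end{aligned}
$$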

Analysis of the articles

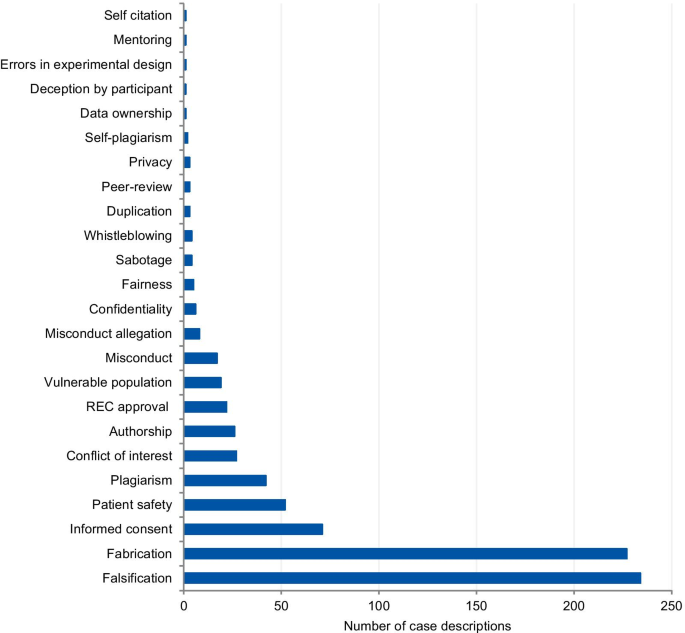

The categories used to classify the violations include those that pertain to the different kinds of scientific misconduct (falsification, fabrication, plagiarism), detrimental research practices (authorship issues, duplication, peer review, errors in experimental design, and mentoring), and “other misconduct”, according to the definitions of the National Academies of Sciences, Engineering, and Medicine [ 1 ]. Each case could involve more than one type of violation, and most cases presented more than one violation or ethical issue, with a mean of 1.56 violations per case. Figure 2 presents the frequency of each violation tagged to the articles. Falsification and fabrication were the most frequently tagged violations. They accounted for 29.1% and 30.0% of the taggings (n = 780), respectively, and were involved in 46.8% and 45.4% of the articles (n = 500 case descriptions). Problems with informed consent represented 9.1% of the taggings and were involved in 14% of the articles, followed by patient safety (6.7% and 10.4%) and plagiarism (5.4% and 8.4%). Detrimental research practices, such as authorship issues, duplication, peer review, errors in experimental design, mentoring, and self-citation, were mentioned cumulatively in 7.0% of the articles.

Fig. 2 Tagged violations from the article analysis

Analysis of the cases

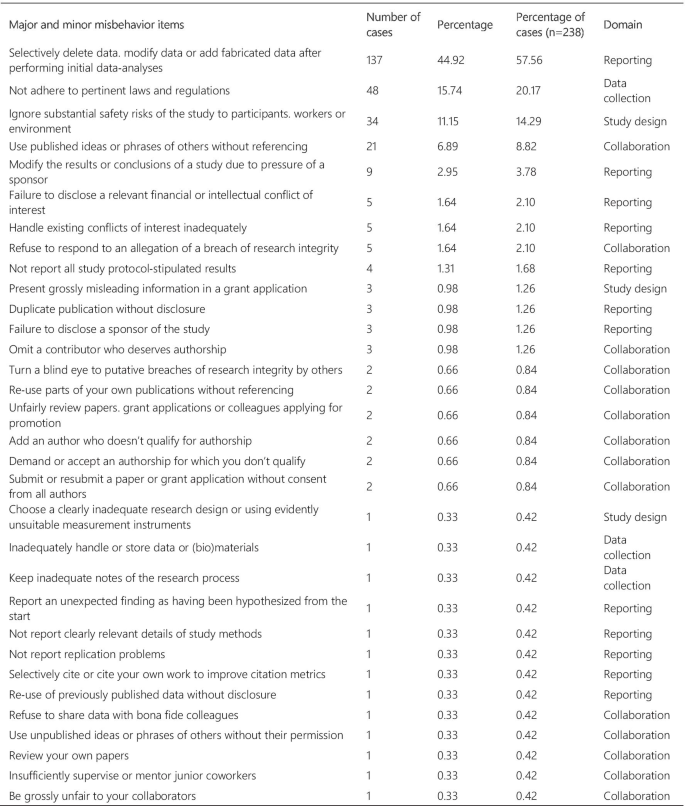

Figure 3 presents the frequency and percentage of each violation found in the cases. Each case could include more than one item from the list. The 238 cases were tagged 305 times, with a mean of 1.28 items per case. Fabrication and falsification were the most frequently tagged violations (44.9%) and were involved in 57.7% of the cases (n = 238). Non-adherence to pertinent laws and regulations, such as lack of informed consent and REC approval, was the second most frequently tagged violation (15.7%) and was involved in 20.2% of the cases. Patient safety issues were the third most frequently tagged violations (11.1%), involved in 14.3% of the cases, followed by plagiarism (6.9% and 8.8%). The list of major and minor misbehaviors [ 8 ] classifies the items into study design, data collection, reporting, and collaboration issues. Our results show that 56.0% of the tagged violations involved issues in reporting, 16.4% in data collection, 15.1% in collaboration, and 12.5% in study design. Items from the original list that do not appear in the results were not involved in any of the collected cases.
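The percentages in this paragraph use two different denominators: the 305 tags and the 238 cases. Back-calculating approximate counts from the rounded figures for fabrication and falsification shows how the two relate:

$$
\begin{aligned}
\frac{305}{238} &\approx 1.28 &&\text{tags per case}\\
0.449 \times 305 &\approx 137 &&\text{fabrication/falsification tags}\\
0.577 \times 238 &\approx 137 &&\text{cases involving fabrication/falsification}
\end{aligned}
$$

Both products come to roughly 137. This suggests that the item was tagged at most once per case, so the gap between 44.9% and 57.7% would reflect only the change of denominator.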

Fig. 3 Major and minor misbehavior items from the analysis of cases

Article genre

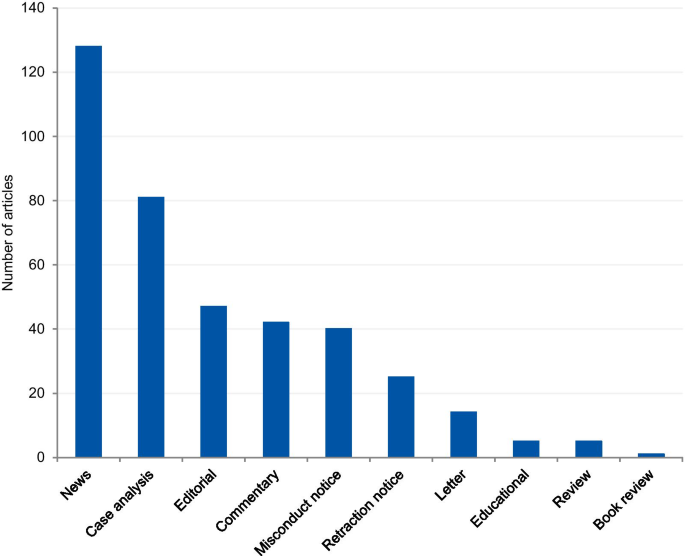

The articles were mostly classified as “news” (33.0%), followed by “case analysis” (20.9%), “editorial” (12.1%), “commentary” (10.8%), “misconduct notice” (10.3%), “retraction notice” (6.4%), “letter” (3.6%), “educational paper” (1.3%), “review” (1.0%), and “book review” (0.3%) (Fig. 4 ). The articles classified as “news” and “case analysis” predominantly covered prominent cases. Items classified as “news” often reported the investigation findings step by step as the associated cases progressed through investigations, which might explain the high prevalence of this genre. The case analyses mainly contained normative assessments of prominent cases. The misconduct and retraction notices included the largest number of unique cases, although a relatively large portion of these records could not be included because of insufficient case details. The articles classified as “editorial”, “commentary” and “letter” also included unique cases.

Article genre of included articles

Article analysis

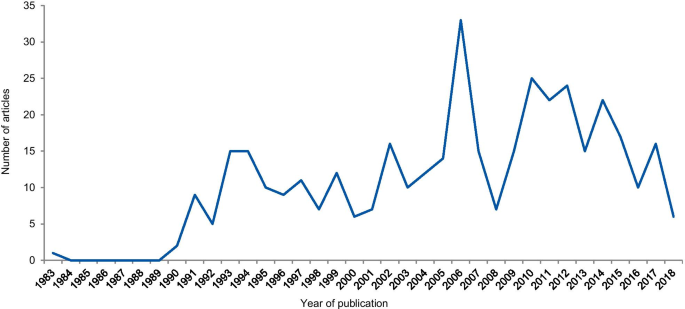

The dates of the eligible articles range from 1983 to 2018, with notable peaks between 1990 and 1996, most probably associated with the Gallo [ 9 ] and Imanishi-Kari [ 10 ] cases, and around 2005, associated with the Hwang [ 11 ], Wakefield [ 12 ], and CNEP trial [ 13 ] cases (Fig. 5 ). The trend line shows an increase in the number of articles over the years.

Frequency of articles according to the year of publication

Case analysis

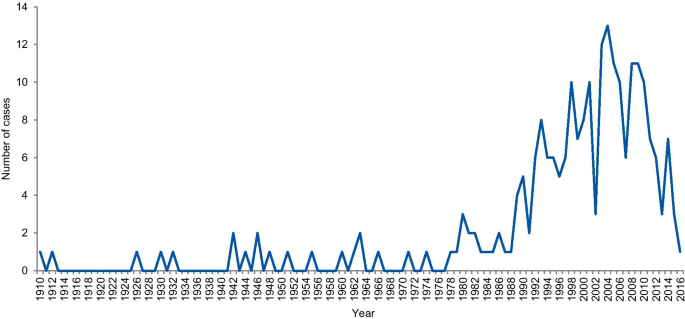

The dates of included cases range from 1798 to 2016. Two cases occurred before 1910: one in 1798 and the other in 1845. Figure 6 shows the number of cases per year from 1910 onwards. The curve began to rise in the early 1980s and peaked in 2004 with 13 cases.

Frequency of cases per year

Geographical distribution

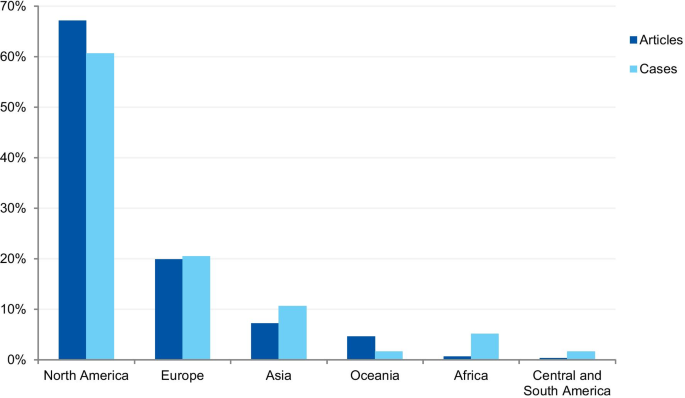

The first analysis concerned the authors’ affiliations and the corresponding author’s address. Where the affiliation list contained more than one country, only the first author’s location was considered. Eighty-one articles were excluded because the authors’ affiliations were not available, and 307 articles were included in the analysis. The articles originated from 26 different countries (Additional file 3 ). Most of the articles came from the USA and the UK (61.9% and 14.3% of articles, respectively), followed by Canada (4.9%), Australia (3.3%), China (1.6%), Japan (1.6%), Korea (1.3%), and New Zealand (1.3%). Some of the most discussed cases occurred in the USA: the Imanishi-Kari, Gallo, and Schön cases [ 9 , 10 ]. Intensely discussed cases are also associated with Canada (Fisher/Poisson and Olivieri cases), the UK (Wakefield and CNEP trial cases), South Korea (Hwang case), and Japan (RIKEN case) [ 12 , 14 ]. In terms of percentages, North America and Europe stand out in the number of articles (Fig. 7 ).

Percentage of articles and cases by continent

For the case analysis, we considered the location where each case took place, based on the institutions involved. For cases involving more than one country, all the countries were considered. Three cases were excluded from the analysis due to insufficient information. In the case analysis, 40 countries were involved in 235 different cases (Additional file 4 ). Most of the reported cases occurred in the USA and the United Kingdom (59.6% and 9.8% of cases, respectively), followed by Canada (6.0%), Japan (5.5%), China (2.1%), and Germany (2.1%). In terms of percentages, North America and Europe stand out in the number of cases (Fig. 7 ). To enable comparison, we additionally collected the number of published documents by country from SCImago Journal & Country Rank [ 16 ]. The numbers correspond to documents published from 1996 to 2019. The USA ranks first in the number of documents, with 21.9%, followed by China (11.1%), the UK (6.3%), Germany (5.5%), and Japan (4.9%).

Field of science

The cases were classified according to the field of science. Four cases (1.7%) could not be classified due to insufficient information. Where information was available, 80.8% of cases were from the Medical and Health Sciences, 11.5% from the Natural Sciences, 4.3% from the Social Sciences, 2.1% from Engineering and Technology, and 1.3% from the Humanities (Fig. 8 ). Additionally, we retrieved the number of published documents by scientific field from SCImago [ 16 ]. Of all scientific publications, 41.5% are related to the natural sciences, 25.1% to the medical and health sciences, 22% to engineering, 7.8% to the social sciences, 1.9% to the agricultural sciences, and 1.7% to the humanities.

Field of science from the analysis of cases
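One simple way to express the mismatch between case shares and publication volume is the ratio of the two percentages; this ratio is our illustration, not a metric used in the study. The Python sketch below applies it to the field shares above and to the country shares given earlier in this section. It assumes the two sets of percentages are comparable even though their denominators differ (classifiable cases versus all SCImago documents from 1996 to 2019), and it omits the agricultural sciences and Canada, for which only one of the two shares is reported. The function names `representation_ratio` and `ranked` are hypothetical.

```python
"""Illustrative representation ratios: share of cases / share of publications."""

from __future__ import annotations

# field: (% of classified cases, % of SCImago publications)
FIELDS = {
    "Medical and Health Sciences": (80.8, 25.1),
    "Natural Sciences": (11.5, 41.5),
    "Social Sciences": (4.3, 7.8),
    "Engineering and Technology": (2.1, 22.0),
    "Humanities": (1.3, 1.7),
}

# country: (% of cases, % of SCImago documents, 1996-2019)
COUNTRIES = {
    "USA": (59.6, 21.9),
    "UK": (9.8, 6.3),
    "Japan": (5.5, 4.9),
    "Germany": (2.1, 5.5),
    "China": (2.1, 11.1),
}


def representation_ratio(case_pct: float, publication_pct: float) -> float:
    """Above 1: more published cases than publication volume alone suggests.
    Below 1: fewer."""
    return case_pct / publication_pct


def ranked(table: dict[str, tuple[float, float]]) -> list[tuple[str, float]]:
    """Return (name, ratio) pairs sorted from most to least overrepresented."""
    ratios = {name: representation_ratio(*shares) for name, shares in table.items()}
    return sorted(ratios.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for title, table in (("Fields", FIELDS), ("Countries", COUNTRIES)):
        print(title)
        for name, ratio in ranked(table):
            print(f"  {name}: {ratio:.2f}")
```

On these figures, medical and health sciences cases appear roughly three times as often as publication volume alone would suggest, while the natural sciences and engineering appear far less often. Among countries, the USA is overrepresented and China strongly underrepresented.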

The analysis of outcomes aimed to collect information on possible consequences and sanctions imposed by funding agencies, scientific journals, and/or institutions. Ninety-seven cases could not be classified due to insufficient information, leaving 141 cases for analysis. Each case could include more than one outcome. Most cases (45.4%) involved paper retraction, followed by exclusion from funding applications (35.5%) (Table 2 ).
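Because a case could have several outcomes and the percentages use the 141 classifiable cases as their denominator, they need not sum to 100%. The Python sketch below back-calculates the approximate number of cases behind each reported percentage. The counts are our reconstruction, assuming the percentages were rounded to one decimal place; they are not figures stated in the study, and the helpers `approx_count` and `reproduces` are hypothetical.

```python
"""Illustrative back-calculation of case counts from reported percentages."""

import math

N_CASES_WITH_OUTCOME = 141  # cases with enough information on outcomes

# outcome: % of the 141 cases (a case may have more than one outcome)
REPORTED_PCT = {
    "paper retraction": 45.4,
    "exclusion from funding applications": 35.5,
}


def approx_count(pct: float, n: int = N_CASES_WITH_OUTCOME) -> int:
    """Nearest whole number of cases corresponding to a percentage of n."""
    return round(pct / 100 * n)


def reproduces(pct: float, count: int, n: int = N_CASES_WITH_OUTCOME) -> bool:
    """Check that count / n rounds back to the published one-decimal percentage."""
    return math.isclose(round(100 * count / n, 1), pct)


if __name__ == "__main__":
    for outcome, pct in REPORTED_PCT.items():
        count = approx_count(pct)
        print(f"{outcome}: ~{count} of {N_CASES_WITH_OUTCOME} cases "
              f"(consistent with {pct}%: {reproduces(pct, count)})")
```

Both percentages are reproduced by whole-number counts (about 64 and 50 cases), a quick sanity check that 141 is the denominator. The check says nothing about how many cases received both outcomes.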

RE and RI cases have been increasingly discussed in public, affecting public attitudes towards scientists and raising awareness of ethical issues, violations, and their wider consequences [ 5 ]. Different approaches have been applied to quantify and address research misbehaviors [ 5 , 17 , 18 , 19 ]. However, most cases are investigated confidentially, and the findings remain undisclosed even after the investigation [ 19 , 20 ]. This study therefore aimed to collect the RE and RI cases available in the scientific literature, understand how these cases are discussed, and identify the potential of case descriptions to raise awareness of RE and RI.

We collected and analyzed 500 detailed case descriptions from 388 articles, and our results show that they mostly relate to extensively discussed and notorious cases. Approximately half of all included cases were mentioned in at least two different articles, and the ten most commonly mentioned cases were discussed in 132 articles.

The prominence of certain cases in the literature, reflected in the number of duplicated cases we found (e.g. the Hwang case), can be explained by the type of article in which cases are discussed and the type of violation involved. In the article genre analysis, 33% of the articles were news items published in scientific journals. Our findings show that almost all article genres discuss cases that are new and in vogue. Once a case enters the public domain, it is intensely discussed in the media and by scientists, and some prominent cases have been discussed for more than 20 years (Table 1 ). Misconduct and retraction notices were the exceptions in the article genre analysis, as they presented mostly unique cases. The misconduct notices were mainly found in the NIH repository, which is indexed in the searched databases. Some federal funding agencies, such as the NIH, usually publicize investigation findings associated with the research they fund. The results derived from the NIH repository also explain the large proportion of articles from the USA (61.9%). However, in some instances only a few details about the case are provided. For cases that have not received federal funding and have not been reported to federal authorities, the investigation is conducted by local institutions, and the reporting of findings depends on each institution’s policy and willingness to disclose information [ 21 ]. The other exception involves retraction notices. Despite the existence of ethical guidelines [ 22 ], there is no uniform approach to how a journal should report a retraction. The Retraction Watch website suggests two lists of information that a retraction notice should include to satisfy minimum and optimum requirements [ 22 , 23 ].
In addition to disclosing the reason for the retraction and information about the retraction process, optimal notices should include: (I) the date when the journal was first alerted to potential problems; (II) details regarding institutional investigations and associated outcomes; (III) the effects on other papers published by the same authors; (IV) statements about more recent replications only if and when these have been validated by a third party; (V) details regarding the journal’s sanctions; and (VI) details regarding any lawsuits filed in connection with the case. The lack of transparency and information in retraction notices has also been noted in studies that collected and evaluated retractions [ 24 ]. According to Resnik and Dinse [ 25 ], retraction notices related to cases of misconduct tend to avoid naming the specific violation involved. Their study found that only 32.8% of the notices identified the actual problem, such as fabrication, falsification, or plagiarism, while 58.8% reported the case as replication failure, loss of data, or error. Potential explanations for euphemisms and vague claims in retraction notices authored by editors include the possibility of legal action by the authors, honest or self-reported errors, and a lack of resources to conduct thorough investigations. In addition, the lack of transparency can be explained by the conflicts of interest of the article’s author(s), since the notices are often written by the authors of the retracted article.

The analysis of violations and ethical issues shows the dominance of fabrication and falsification cases, which explains the high prevalence of prominent cases. Non-adherence to laws and regulations (REC approval, informed consent, and data protection) was the second most prevalent issue, followed by patient safety, plagiarism, and conflicts of interest. The prevalence of the five most tagged violations was higher in the analysis of cases than in the analysis of articles involving the same violations. The only exception was fabrication and falsification, which represented 45% of the tagged violations in the analysis of cases and 59.1% in the article analysis. This disproportion shows a predilection for publishing discussions of fabrication and falsification over other serious violations. Complex cases involving these types of violations make good headlines, following the customary pattern of writing about cases that catch the attention of the public and the media [ 26 ]. The way RE and RI violations are explored in the literature gives the impression that only a few scientists are “the bad apples” and that they are usually discovered, investigated, and sanctioned accordingly. This implies that the integrity of science in general remains relatively untouched by these violations. However, studies on the determinants of misconduct show that scientific misconduct is a systemic problem involving not only individual factors but also structural and institutional ones, and that a combined effort is necessary to change this scenario [ 27 , 28 ].

Analysis of cases

A notable increase in RE and RI cases occurred in the 1990s, followed by a gradual increase until approximately 2006. This result agrees with studies that evaluated paper retractions [ 24 , 29 ]. Although our study did not focus only on retractions, the trend is similar. This increase should not be attributed solely to the growth in the number of publications, since studies that evaluated retractions show that the percentage of articles retracted because of fraud has increased almost tenfold since 1975 [ 24 ]. Our results also show a gradual reduction in the number of cases from 2011 and a sharper drop in 2015. However, this reduction should be interpreted cautiously, because many investigations take years to complete and to disclose their findings. ORI data show that, from 2001 to 2010, the investigation of its cases took an average of 20.48 months, with a maximum investigation time of more than 9 years [ 24 ].

The countries from which most cases were reported were the USA (59.6%), the UK (9.8%), Canada (6.0%), Japan (5.5%), and China (2.1%). When analyzed by continent, the highest percentage of cases took place in North America, followed by Europe, Asia, Oceania, Latin America, and Africa. The predominance of cases from the USA is predictable, since the country publishes more scientific articles than any other, with 21.8% of the total documents according to SCImago [ 16 ]. However, the same interpretation does not apply to China, which ranks second with 11.2%. Similar differences in geographical distribution were found in a study that collected published research on research integrity [ 30 ]. Aubert Bonn and Pinxten [ 30 ] found that studies conducted in the United States accounted for more than half of their sample and that, although China is one of the leaders in scientific publications, it represented only 0.7% of the sample. Our findings can also be explained by the search strategy, which included only English keywords. Since the majority of RE and RI cases are investigated and their findings disclosed locally, the use of English keywords and terms in the search strategy is a limitation. Moreover, our findings do not allow us to draw inferences about the incidence or prevalence of misconduct around the world. Instead, they show where there is a culture of publicly disclosing information and openly discussing RE and RI cases in English-language documents.

Scientific field analysis

The results show that 80.8% of reported cases occurred in the medical and health sciences, whilst only 1.3% occurred in the humanities. This disciplinary difference has also been observed in studies on research integrity climates. Haven and colleagues [ 28 ] associated seven subscales of research climate with disciplinary field. The subscales were: (1) Responsible Conduct of Research (RCR) resources, (2) regulatory quality, (3) integrity norms, (4) integrity socialization, (5) supervisor/supervisee relations, (6) (lack of) integrity inhibitors, and (7) expectations. Based on the seven subscale scores, researchers from the humanities and social sciences had the lowest perception of the RI climate. By contrast, researchers from the natural sciences expressed the highest perception of the RI climate, followed by those from the biomedical sciences. There are also significant differences in the depth and extent of the regulatory environments of different disciplines (e.g. the existence of laws, codes of conduct, policies, relevant ethics committees, or authorities). These findings corroborate our results, as the areas of science most familiar with RI tend to explore the subject further and, consequently, are more likely to publish case details. Although the volume of published research in each area also influences the number of cases, the predominance of medical and health sciences cases is not aligned with publication volume. According to SCImago Journal & Country Rank [ 16 ], the natural sciences rank first in the number of publications (41.5%), followed by the medical and health sciences (25.1%), engineering (22%), the social sciences (7.8%), and the humanities (1.7%). Moreover, biomedical journals are overrepresented among the top scientific journals by impact factor (IF), and these journals usually have clear policies on research misconduct.
High-impact journals attract greater visibility and scrutiny and are consequently more likely to have been the subject of misconduct investigations. Additionally, the best-known general medical journals, including NEJM, The Lancet, and the BMJ, employ journalists to write their news sections. Since these journals have the resources to produce extensive news sections, medical cases are more likely to be discussed.

Violations analysis

In the analysis of violations, the cases were categorized into major and minor misbehaviors. Most cases involved data fabrication and falsification, followed by non-adherence to laws and regulations, patient safety, plagiarism, and conflicts of interest. By category, 12.5% of the tagged violations involved issues in study design, 16.4% in data collection, 56.0% in reporting, and 15.1% in collaboration. Approximately 80% of the tagged violations involved serious research misbehaviors, based on the ranking of research misbehaviors proposed by Bouter and colleagues [ 8 ]. However, as demonstrated in a meta-analysis by Fanelli [ 17 ], most self-reported misbehavior involves questionable research practices. In that meta-analysis, up to 33.7% of scientists admitted to questionable research practices, and up to 72% reported such practices when asked about the behavior of colleagues. These figures contrast with admission rates of 1.97% (own behavior) and 14.12% (colleagues’ behavior) for fabrication and falsification. However, Fanelli’s meta-analysis does not cover research misbehavior in its wider sense but focuses on behaviors that bias research results (i.e. fabrication and falsification, intentional non-publication of results, biased methodology, and misleading reporting). In our study, the majority of cases involved FFP (66.4%). The overrepresentation of some types of violations, and the underrepresentation of others, might lead to misguided efforts, as cases that receive intense publicity eventually influence policies relating to scientific misconduct and RI [ 20 ].

Sanctions analysis

The five most prevalent outcomes were paper retraction, exclusion from funding applications, exclusion from service or position, dismissal and suspension, and paper correction. This result is similar to that of Redman and Merz [ 31 ], who collected data from misconduct cases provided by the ORI. Moreover, their results show that fabrication and falsification cases were 8.8 times more likely than others to receive funding exclusions, and that such cases also received, on average, 0.6 more sanctions per case. Punishments for misconduct remain under discussion, with proposals ranging from the criminalization of more serious forms of misconduct [ 32 ] to social punishments, such as those recently introduced by China [ 33 ]. The most common sanction identified by our analysis, paper retraction, is consistent with the most prevalent types of violation, that is, fabrication and falsification.

Publicizing scientific misconduct

The lack of publicly available summaries of misconduct investigations makes it difficult to share experiences and to evaluate the effectiveness of policies and training programs. Publicizing scientific misconduct can have serious consequences and can stigmatize those involved in the case. For instance, publicized allegations can damage the reputation of the accused even when they are later exonerated [ 21 ]. Thus, for published cases, it is the responsibility of authors and editors to determine whether the name(s) of those involved should be disclosed. On the one hand, disclosing the name(s) of those involved is expected to encourage others in the community to uphold good standards. On the other hand, it is argued that someone who has made a mistake should have the opportunity to defend his or her reputation. Regardless of whether a person’s name is withheld or disclosed, case reports have an important educational function and can help guide RE- and RI-related policies [ 34 ]. A recent paper by Gunsalus [ 35 ] proposes a three-part approach to strengthening transparency in misconduct investigations. The first part consists of a checklist [ 36 ]. The second suggests that an external peer reviewer should be involved in investigative reporting. The third calls for the publication of the peer reviewer’s findings.

Limitations

One possible limitation of our study is the search strategy. Although we conducted pilot searches and sensitivity tests to reach the most feasible and precise search strategy, we cannot exclude the possibility of having missed important cases. Furthermore, the use of English keywords was another limitation. Since most investigations are performed locally and published in local repositories, our search only allowed us to access cases from English-speaking countries or cases discussed in academic publications written in English. Additionally, published cases are not representative of all instances of misconduct, since most instances are never discovered and, when discovered, not all are fully investigated or have their findings published. The lack of information in the extracted case descriptions is also a limitation that affects the interpretation of our results. In our review, only 25 retraction notices contained sufficient information to be included in our analysis under the inclusion criteria. Although our search strategy did not focus specifically on retraction and misconduct notices, we believe that if sufficiently detailed information had been available in such notices, the search strategy would have identified them.

Case descriptions found in academic journals are dominated by discussions of prominent cases and are mainly published in the news sections of journals. Our results show an overrepresentation of biomedical research cases relative to other scientific fields when compared with the volume of publications produced by each field. Moreover, published cases mostly involve fabrication, falsification, and patient safety issues. This finding could significantly affect how ethical issues in RE and RI are represented in academia. The predominance of fabrication and falsification cases might divert the academic community’s attention from relevant but less visible violations and ethical issues, and from newly emerging forms of misbehavior.

Availability of data and materials

This review was developed by members of the EnTIRE project to generate information on cases that will be made available on the Embassy of Good Science platform ( www.embassy.science ). The dataset supporting the conclusions of this article is available in the Open Science Framework (OSF) repository at https://osf.io/3xatj/?view_only=313a0477ab554b7489ee52d3046398b9 .

National Academies of Sciences, Engineering, and Medicine. Fostering integrity in research. National Academies Press; 2017.

Davis MS, Riske-Morris M, Diaz SR. Causal factors implicated in research misconduct: evidence from ORI case files. Sci Eng Ethics. 2007;13(4):395–414. https://doi.org/10.1007/s11948-007-9045-2 .


Ampollini I, Bucchi M. When public discourse mirrors academic debate: research integrity in the media. Sci Eng Ethics. 2020;26(1):451–74. https://doi.org/10.1007/s11948-019-00103-5 .

Hesselmann F, Graf V, Schmidt M, Reinhart M. The visibility of scientific misconduct: a review of the literature on retracted journal articles. Curr Sociol La Sociologie contemporaine. 2017;65(6):814–45. https://doi.org/10.1177/0011392116663807 .

Martinson BC, Anderson MS, de Vries R. Scientists behaving badly. Nature. 2005;435(7043):737–8. https://doi.org/10.1038/435737a .

Loikith L, Bauchwitz R. The essential need for research misconduct allegation audits. Sci Eng Ethics. 2016;22(4):1027–49. https://doi.org/10.1007/s11948-016-9798-6 .

OECD. Revised field of science and technology (FoS) classification in the Frascati manual. Working Party of National Experts on Science and Technology Indicators 2007. p. 1–12.

Bouter LM, Tijdink J, Axelsen N, Martinson BC, ter Riet G. Ranking major and minor research misbehaviors: results from a survey among participants of four World Conferences on Research Integrity. Res Integrity Peer Rev. 2016;1(1):17. https://doi.org/10.1186/s41073-016-0024-5 .

Greenberg DS. Resounding echoes of Gallo case. Lancet. 1995;345(8950):639.

Dresser R. Giving scientists their due. The Imanishi-Kari decision. Hastings Center Rep. 1997;27(3):26–8.

Hong ST. We should not forget lessons learned from the Woo Suk Hwang’s case of research misconduct and bioethics law violation. J Korean Med Sci. 2016;31(11):1671–2. https://doi.org/10.3346/jkms.2016.31.11.1671 .

Opel DJ, Diekema DS, Marcuse EK. Assuring research integrity in the wake of Wakefield. BMJ (Clinical research ed). 2011;342(7790):179. https://doi.org/10.1136/bmj.d2 .

Wells F. The Stoke CNEP Saga: did it need to take so long? J R Soc Med. 2010;103(9):352–6. https://doi.org/10.1258/jrsm.2010.10k010 .

Normile D. RIKEN panel finds misconduct in controversial paper. Science. 2014;344(6179):23. https://doi.org/10.1126/science.344.6179.23 .

Wager E. The Committee on Publication Ethics (COPE): Objectives and achievements 1997–2012. La Presse Médicale. 2012;41(9):861–6. https://doi.org/10.1016/j.lpm.2012.02.049 .

SCImago nd. SJR — SCImago Journal & Country Rank [Portal]. http://www.scimagojr.com . Accessed 03 Feb 2021.

Fanelli D. How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS ONE. 2009;4(5):e5738. https://doi.org/10.1371/journal.pone.0005738 .

Steneck NH. Fostering integrity in research: definitions, current knowledge, and future directions. Sci Eng Ethics. 2006;12(1):53–74. https://doi.org/10.1007/PL00022268 .

DuBois JM, Anderson EE, Chibnall J, Carroll K, Gibb T, Ogbuka C, et al. Understanding research misconduct: a comparative analysis of 120 cases of professional wrongdoing. Account Res. 2013;20(5–6):320–38. https://doi.org/10.1080/08989621.2013.822248 .

National Academy of Sciences, National Academy of Engineering, Institute of Medicine Panel on Scientific Responsibility and the Conduct of Research. Responsible Science: Ensuring the Integrity of the Research Process: Volume I. Washington (DC): National Academies Press (US); 1992.

Bauchner H, Fontanarosa PB, Flanagin A, Thornton J. Scientific misconduct and medical journals. JAMA. 2018;320(19):1985–7. https://doi.org/10.1001/jama.2018.14350 .

COPE Council. COPE Guidelines: Retraction Guidelines. 2019. https://doi.org/10.24318/cope.2019.1.4 .

Retraction Watch. What should an ideal retraction notice look like? 2015, May 21. https://retractionwatch.com/2015/05/21/what-should-an-ideal-retraction-notice-look-like/ .

Fang FC, Steen RG, Casadevall A. Misconduct accounts for the majority of retracted scientific publications. Proc Natl Acad Sci USA. 2012;109(42):17028–33. https://doi.org/10.1073/pnas.1212247109 .

Resnik DB, Dinse GE. Scientific retractions and corrections related to misconduct findings. J Med Ethics. 2013;39(1):46–50. https://doi.org/10.1136/medethics-2012-100766 .

de Vries R, Anderson MS, Martinson BC. Normal misbehavior: scientists talk about the ethics of research. J Empir Res Hum Res Ethics JERHRE. 2006;1(1):43–50. https://doi.org/10.1525/jer.2006.1.1.43 .

Sovacool BK. Exploring scientific misconduct: isolated individuals, impure institutions, or an inevitable idiom of modern science? J Bioethical Inquiry. 2008;5(4):271. https://doi.org/10.1007/s11673-008-9113-6 .

Haven TL, Tijdink JK, Martinson BC, Bouter LM. Perceptions of research integrity climate differ between academic ranks and disciplinary fields: results from a survey among academic researchers in Amsterdam. PLoS ONE. 2019;14(1):e0210599. https://doi.org/10.1371/journal.pone.0210599 .

Trikalinos NA, Evangelou E, Ioannidis JPA. Falsified papers in high-impact journals were slow to retract and indistinguishable from nonfraudulent papers. J Clin Epidemiol. 2008;61(5):464–70. https://doi.org/10.1016/j.jclinepi.2007.11.019 .

Aubert Bonn N, Pinxten W. A decade of empirical research on research integrity: What have we (not) looked at? J Empir Res Hum Res Ethics. 2019;14(4):338–52. https://doi.org/10.1177/1556264619858534 .

Redman BK, Merz JF. Scientific misconduct: do the punishments fit the crime? Science. 2008;321(5890):775. https://doi.org/10.1126/science.1158052 .

Bülow W, Helgesson G. Criminalization of scientific misconduct. Med Health Care Philos. 2019;22(2):245–52. https://doi.org/10.1007/s11019-018-9865-7 .

Cyranoski D. China introduces “social” punishments for scientific misconduct. Nature. 2018;564(7736):312. https://doi.org/10.1038/d41586-018-07740-z .

Bird SJ. Publicizing scientific misconduct and its consequences. Sci Eng Ethics. 2004;10(3):435–6. https://doi.org/10.1007/s11948-004-0001-0 .

Gunsalus CK. Make reports of research misconduct public. Nature. 2019;570(7759):7. https://doi.org/10.1038/d41586-019-01728-z .

Gunsalus CK, Marcus AR, Oransky I. Institutional research misconduct reports need more credibility. JAMA. 2018;319(13):1315–6. https://doi.org/10.1001/jama.2018.0358 .


Acknowledgements

The authors wish to thank the EnTIRE research group. The EnTIRE project (Mapping Normative Frameworks for Ethics and Integrity of Research) aims to create an online platform that makes RE+RI information easily accessible to the research community. The EnTIRE Consortium is composed of VU Medical Center, Amsterdam; gesinn.it GmbH & Co KG; KU Leuven; University of Split School of Medicine; Dublin City University; Central European University; University of Oslo; University of Manchester; and the European Network of Research Ethics Committees.

The EnTIRE project (Mapping Normative Frameworks for Ethics and Integrity of Research) has received funding from the European Union’s Horizon 2020 research and innovation program under grant agreement No 741782. The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Authors and affiliations

Department of Behavioural Sciences, Faculty of Medicine, University of Debrecen, Móricz Zsigmond krt. 22. III. Apartman Diákszálló, Debrecen, 4032, Hungary

Anna Catharina Vieira Armond & János Kristóf Bodnár

Institute of Ethics, School of Theology, Philosophy and Music, Dublin City University, Dublin, Ireland

Bert Gordijn, Jonathan Lewis & Mohammad Hosseini

Centre for Social Ethics and Policy, School of Law, University of Manchester, Manchester, UK

Center for Medical Ethics, HELSAM, Faculty of Medicine, University of Oslo, Oslo, Norway

Center for Ethics and Law in Biomedicine, Central European University, Budapest, Hungary

Péter Kakuk


Contributions

All authors (ACVA, BG, JL, MH, JKB, SH and PK) developed the idea for the article. ACVA, PK, JKB performed the literature search and data analysis, ACVA and PK produced the draft, and all authors critically revised it. All authors have read and approved the manuscript.

Corresponding author

Correspondence to Anna Catharina Vieira Armond .

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Competing interests

The authors declare that they have no conflict of interest.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1

Pilot search and search strategy.

Additional file 2

List of major and minor misbehavior items (developed by Bouter LM, Tijdink J, Axelsen N, Martinson BC, ter Riet G. Ranking major and minor research misbehaviors: results from a survey among participants of four World Conferences on Research Integrity. Research Integrity and Peer Review. 2016;1(1):17. https://doi.org/10.1186/s41073-016-0024-5).

Additional file 3

Table containing the number and percentage of countries included in the analysis of articles.

Additional file 4

Table containing the number and percentage of countries included in the analysis of the cases.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.


About this article

Cite this article

Armond, A.C.V., Gordijn, B., Lewis, J. et al. A scoping review of the literature featuring research ethics and research integrity cases. BMC Med Ethics 22, 50 (2021). https://doi.org/10.1186/s12910-021-00620-8


Received: 06 October 2020

Accepted: 21 April 2021

Published: 30 April 2021

DOI: https://doi.org/10.1186/s12910-021-00620-8


- Research ethics

- Research integrity

- Scientific misconduct

BMC Medical Ethics

ISSN: 1472-6939


Prevalence of Research Misconduct and Questionable Research Practices: A Systematic Review and Meta-Analysis

- Original Research/Scholarship

- Published: 29 June 2021

- Volume 27, article number 41 (2021)


- Yu Xie

- Kai Wang

- Yan Kong


Irresponsible research practices that damage the value of science have become an increasing concern among researchers, but previous work has not estimated the prevalence of all forms of irresponsible research behavior. Moreover, earlier analyses did not include articles published in the last decade (2011 to 2020). This study provides an updated meta-analysis that calculates pooled estimates of research misconduct (RM) and questionable research practices (QRPs) and explores the factors associated with their prevalence. The pooled estimates for committing RM (at least 1 of FFP: falsification, fabrication, or plagiarism) and for committing (unspecified) QRPs (1 or more QRPs) were 2.9% (95% CI 2.1–3.8%) and 12.5% (95% CI 10.5–14.7%), respectively. In addition, 15.5% (95% CI 12.4–19.2%) of researchers had witnessed others commit at least 1 form of RM, while 39.7% (95% CI 35.6–44.0%) were aware of others who had used at least 1 QRP. The results show that response proportion, limited recall period, career level, disciplinary background, and location all significantly affect the prevalence of these issues. This meta-analysis addresses a gap in existing meta-analyses by estimating the prevalence of all forms of RM and QRPs, thus providing a better understanding of irresponsible research behaviors.
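The abstract reports pooled prevalences with asymmetric 95% confidence intervals but does not state the pooling model. The sketch below shows one common way such figures can be produced. It assumes a DerSimonian-Laird random-effects model on the logit scale, with a 0.5 continuity correction for studies reporting 0% or 100%. This is an assumption for illustration, not the authors' confirmed method. The function name `pooled_proportion_dl` and the example counts are hypothetical.

```python
import math


def pooled_proportion_dl(events, totals, z=1.96):
    """Random-effects pooled proportion on the logit scale (DerSimonian-Laird).

    Illustrative sketch only: the model, the 0.5 continuity correction and
    this function name are assumptions, not the authors' reported method.
    Returns (pooled, lower, upper, i2).
    """
    ys, vs = [], []
    for e, n in zip(events, totals):
        # Add 0.5 to events and to non-events when a study reports 0% or 100%.
        if e == 0 or e == n:
            e, n = e + 0.5, n + 1.0
        ys.append(math.log(e / (n - e)))   # logit of the study proportion
        vs.append(1 / e + 1 / (n - e))     # approximate variance of the logit

    # Fixed-effect (inverse-variance) estimate and Cochran's Q
    w = [1 / v for v in vs]
    sw = sum(w)
    y_fixed = sum(wi * yi for wi, yi in zip(w, ys)) / sw
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, ys))
    df = len(ys) - 1

    # DerSimonian-Laird between-study variance tau^2, truncated at zero
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random-effects weights, pooled logit and its standard error
    w_re = [1 / (v + tau2) for v in vs]
    y_re = sum(wi * yi for wi, yi in zip(w_re, ys)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))

    def expit(x):
        # Back-transform from the logit scale to a proportion
        return 1 / (1 + math.exp(-x))

    # I^2: share of total variability attributed to between-study heterogeneity
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return expit(y_re), expit(y_re - z * se), expit(y_re + z * se), i2


# Hypothetical counts from three surveys (not data from the meta-analysis)
est, lo, hi, i2 = pooled_proportion_dl([12, 30, 7], [400, 650, 300])
print(f"{est:.3f} (95% CI {lo:.3f}-{hi:.3f}), I^2 = {i2:.2f}")
```

Working on the logit scale keeps the back-transformed interval inside 0 to 1. This is also why intervals such as 2.1–3.8% around 2.9% come out asymmetric.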


Availability of data and material

Full data from the current meta-analysis can be retrieved from Harvard Dataverse (available at https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/F9C8OK).

Code availability

The code for the analysis can be retrieved from Harvard Dataverse (available at https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/F9C8OK).
