Logistic regression

Logistic regression

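We model the conditional probability that \(Y = 1\) given the predictors as:

\[P(Y=1 \mid X) = \frac{e^{\beta_0 + \beta_1 X_1 + \dots + \beta_p X_p}}{1 + e^{\beta_0 + \beta_1 X_1 + \dots + \beta_p X_p}}\]

This is the same as using a linear model for the log odds:

\[\log\left[\frac{P(Y=1 \mid X)}{1 - P(Y=1 \mid X)}\right] = \beta_0 + \beta_1 X_1 + \dots + \beta_p X_p\]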

Fitting logistic regression

The training data is a list of pairs \((y_1,x_1), (y_2,x_2), \dots, (y_n,x_n)\).

We don't observe the left-hand side of the model: each \(y_i\) is a 0/1 label, not a probability or a log odds.

\(\implies\) We cannot use a least squares fit.

Likelihood

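Solution: The likelihood is the probability of the training data, for a fixed set of coefficients \(\beta_0,\dots,\beta_p\):

\[L(\beta_0,\dots,\beta_p) = \prod_{i=1}^n P(Y=y_i \mid X=x_i)\]

We can rewrite as

\[L(\beta_0,\dots,\beta_p) = \prod_{i=1}^n \left(\frac{e^{\beta_0+\beta_1 x_{i1}+\dots+\beta_p x_{ip}}}{1+e^{\beta_0+\beta_1 x_{i1}+\dots+\beta_p x_{ip}}}\right)^{y_i}\left(\frac{1}{1+e^{\beta_0+\beta_1 x_{i1}+\dots+\beta_p x_{ip}}}\right)^{1-y_i}\]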

Choose estimates \(\hat \beta_0, \dots,\hat \beta_p\) which maximize the likelihood.

Solved with numerical methods (e.g. Newton’s algorithm).
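Newton's algorithm for this likelihood fits in a few lines of R. This is a minimal sketch on simulated data, assuming a design matrix `X` whose first column is all ones; the function name `newton_logistic`, the seed and the true coefficients are hypothetical. `glm()` is used only as a cross-check: it uses Fisher scoring (iteratively reweighted least squares), which for the logit link coincides with Newton's method.

```r
# Newton-Raphson for logistic regression: maximize
# l(beta) = sum(y * eta - log(1 + exp(eta))), with eta = X %*% beta
newton_logistic <- function(X, y, tol = 1e-10, max_iter = 50) {
  beta <- rep(0, ncol(X))
  for (iter in seq_len(max_iter)) {
    eta <- drop(X %*% beta)
    p <- plogis(eta)                 # fitted probabilities
    grad <- crossprod(X, y - p)      # score vector X'(y - p)
    W <- p * (1 - p)                 # binomial variance weights
    hess <- crossprod(X, X * W)      # information matrix X'WX
    step <- drop(solve(hess, grad))  # Newton step
    beta <- beta + step
    if (max(abs(step)) < tol) break
  }
  beta
}

# Hypothetical simulated data
set.seed(1)
n <- 500
x <- rnorm(n)
y <- rbinom(n, 1, plogis(-0.5 + 1.2 * x))
X <- cbind(1, x)

beta_newton <- newton_logistic(X, y)
beta_glm <- coef(glm(y ~ x, family = binomial))
rbind(newton = beta_newton, glm = beta_glm)
```

Each iteration solves \((X^\top W X)\,\delta = X^\top (y - p)\); convergence is usually reached in a handful of steps. When the classes are perfectly separated, \(X^\top W X\) becomes nearly singular and the steps never settle.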

Logistic regression in R

Inference for logistic regression

We can estimate the standard error of each coefficient.

The \(z\)-statistic is the equivalent of the \(t\)-statistic in linear regression:

\[z = \frac{\hat\beta_j}{\widehat{\text{SE}}(\hat\beta_j)}\]

The \(p\)-values are tests of the null hypothesis \(\beta_j=0\) (Wald's test).

Other possible hypothesis tests: the likelihood ratio test (chi-square distribution).

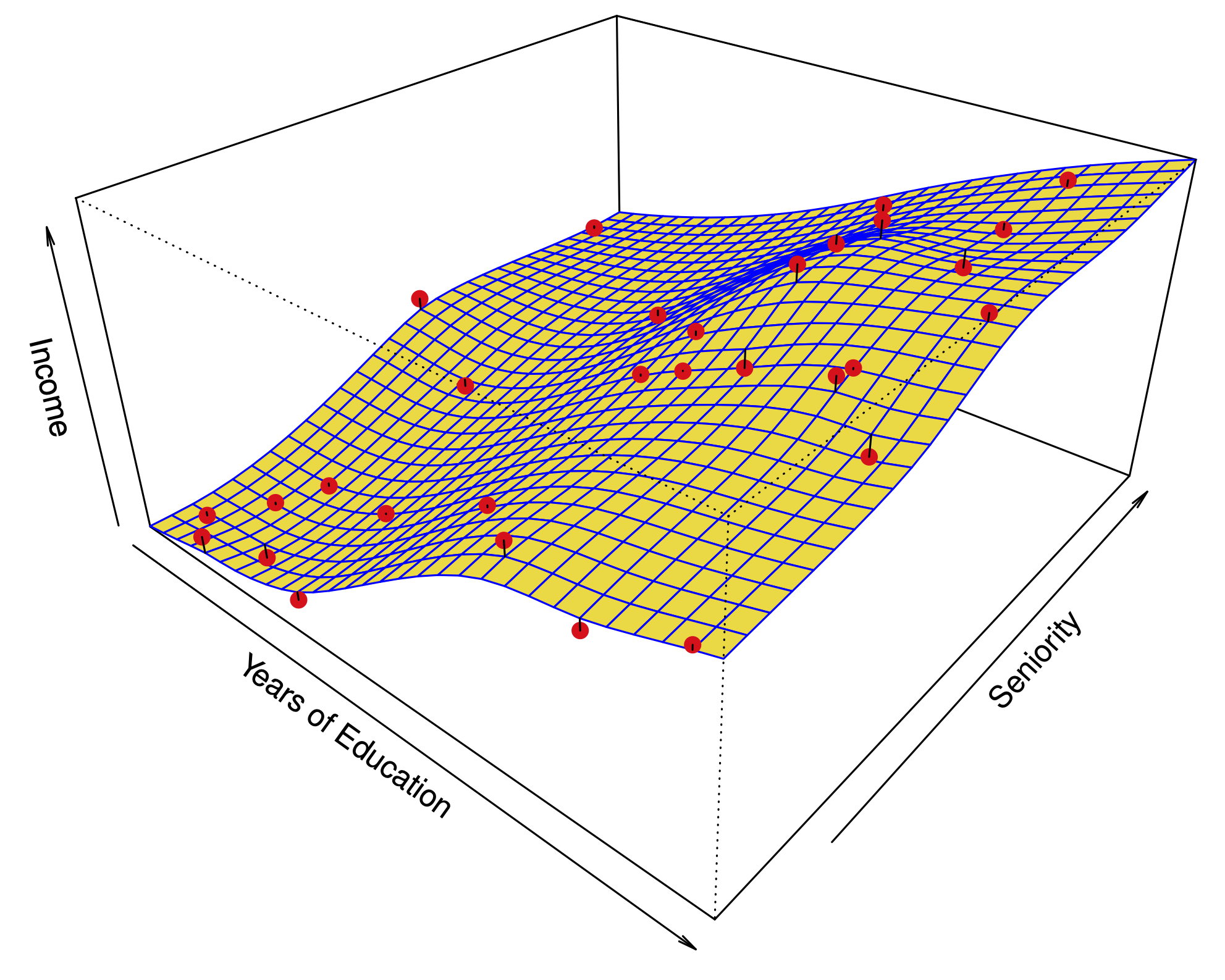
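Both tests can be computed directly from a fitted `glm` object. In this minimal sketch on simulated data (the variables `x1` and `x2`, the seed and the true coefficients are hypothetical), the Wald \(z\)-statistic is the estimate divided by the square root of the matching diagonal entry of `vcov()`. The likelihood ratio statistic for dropping `x2` is the increase in deviance, referred to a chi-square distribution with 1 degree of freedom.

```r
# Hypothetical simulated data: x2 has no true effect
set.seed(2)
n <- 400
x1 <- rnorm(n)
x2 <- rnorm(n)
y <- rbinom(n, 1, plogis(0.3 + 0.8 * x1))
fit <- glm(y ~ x1 + x2, family = binomial)

# Wald z-statistics: estimate / standard error
est <- coef(fit)
se <- sqrt(diag(vcov(fit)))
z <- est / se
p_wald <- 2 * pnorm(-abs(z))

# Likelihood ratio test for x2: compare nested models by their deviances
fit_reduced <- glm(y ~ x1, family = binomial)
lr_stat <- deviance(fit_reduced) - deviance(fit)
p_lr <- pchisq(lr_stat, df = 1, lower.tail = FALSE)

cbind(z = z, p_wald = p_wald)
c(lr_stat = lr_stat, p_lr = p_lr)
```

The Wald test needs only the full model, while the likelihood ratio test compares two fitted models. The two usually agree closely in large samples.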

Example: Predicting credit card default

Predictors:

student: 1 if student, 0 otherwise

balance: credit card balance

income: person’s income

Confounding

In this dataset, there is confounding, but little collinearity.

Students tend to have higher balances, so balance is partly explained by student, but not very well.

People with a high balance are more likely to default.

Among people with a given balance , students are less likely to default.

Results: predicting credit card default

Fig. 16: Confounding in Default data

Using only balance

Using only student

Using both balance and student

Using all 3 predictors

Multinomial logistic regression

Extension of logistic regression to more than 2 categories

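Suppose \(Y\) takes values in \(\{1,2,\dots,K\}\). Then we can use a linear model for the log odds against a baseline category (e.g. 1): for \(j \neq 1\),

\[\log\left[\frac{P(Y=j \mid X)}{P(Y=1 \mid X)}\right] = \beta_{0j} + \beta_{1j} X_1 + \dots + \beta_{pj} X_p\]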

In this case \(\beta \in \mathbb{R}^{p \times (K-1)}\) is a matrix of coefficients.
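Inverting the baseline-category logits gives the class probabilities \(P(Y=j \mid X) = e^{\eta_j} / \sum_{k=1}^K e^{\eta_k}\), where \(\eta_1 = 0\) for the baseline. A minimal sketch, assuming the intercepts are stored separately from the \(p \times (K-1)\) slope matrix; the function name `multinom_probs` and all coefficient values are hypothetical.

```r
# Baseline-category logit model with category 1 as the baseline.
# beta0: K - 1 intercepts; beta: p x (K - 1) slope matrix (column j - 1 is category j)
multinom_probs <- function(x, beta0, beta) {
  eta <- c(0, beta0 + drop(crossprod(beta, x)))  # log odds vs. category 1 (baseline eta = 0)
  exp(eta) / sum(exp(eta))                       # normalize to probabilities
}

# Hypothetical example: p = 2 predictors, K = 3 categories
beta0 <- c(0.5, -1.0)
beta <- cbind(c(1.0, -0.5),   # slopes for category 2
              c(0.2,  0.8))   # slopes for category 3
probs <- multinom_probs(c(1, 2), beta0, beta)
probs
```

By construction, \(\log(P(Y=j)/P(Y=1))\) recovers the linear predictor for each \(j\), and the \(K\) probabilities sum to 1.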

Some potential problems

The coefficients become unstable when there is collinearity. Furthermore, this affects the convergence of the fitting algorithm.

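When the classes are well separated, the coefficients become unstable. If the classes are perfectly separated, the maximum likelihood estimates do not exist and the fitted coefficients grow without bound. This is always the case when \(p\geq n-1\). In this case, prediction error is low, but \(\hat{\beta}\) is very variable.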
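Perfect separation is easy to reproduce. In this minimal sketch on hypothetical toy data, every observation with \(x \le 3\) has \(y = 0\) and every observation with \(x \ge 4\) has \(y = 1\). `glm()` warns that fitted probabilities of 0 or 1 occurred, and it returns a very large slope with an even larger standard error, because the likelihood keeps increasing as the slope grows.

```r
# Perfectly separated toy data: y jumps from 0 to 1 between x = 3 and x = 4
x <- 1:6
y <- c(0, 0, 0, 1, 1, 1)

# Expect warnings such as "fitted probabilities numerically 0 or 1 occurred"
fit <- glm(y ~ x, family = binomial)

# Huge estimate, even larger standard error
summary(fit)$coefficients[, c("Estimate", "Std. Error")]
```

The exact values depend on when the fitting algorithm stops, which is itself a symptom that no finite maximum likelihood estimate exists.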

Logistic Regression and Survival Analysis


Logistic Regression in R


To perform logistic regression in R, you need to use the glm() function. Here, glm stands for "generalized linear model." Suppose we want to fit a logistic regression model of vomiting on age in R; we use the following command:

```r
> summary( glm( vomiting ~ age, family = binomial(link = logit) ) )

Call:
glm(formula = vomiting ~ age, family = binomial(link = logit))

Deviance Residuals:
    Min       1Q   Median       3Q      Max
-1.0671  -1.0174  -0.9365   1.3395   1.9196

Coefficients:
             Estimate Std. Error z value Pr(>|z|)
(Intercept) -0.141729   0.106206  -1.334    0.182
age         -0.015437   0.003965  -3.893 9.89e-05 ***

Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance: 1452.3  on 1093  degrees of freedom
Residual deviance: 1433.9  on 1092  degrees of freedom
AIC: 1437.9

Number of Fisher Scoring iterations: 4
```

To get the significance for the overall model we use the following command:

```r
> 1-pchisq(1452.3-1433.9, 1093-1092)
[1] 1.79058e-05
```

The input to this test is:

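- deviance of the "null" model minus deviance of the current model (this difference is the likelihood ratio statistic: -2 times the log of the ratio of the null model's likelihood to the current model's likelihood)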

- degrees of freedom of the null model minus df of current model

This is analogous to the global F test for the overall significance of the model that summary() reports automatically for a model fitted with lm(). It tests the null hypothesis that the model is no better (in terms of likelihood) than a model fit with only the intercept term, i.e., that all slope coefficients are 0.
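The same overall test can be computed from any fitted `glm` object without retyping numbers. The vomiting data are not available here, so this minimal sketch uses simulated data; `age`, `vomit`, the seed and the coefficients are hypothetical. `null.deviance`, `deviance`, `df.null` and `df.residual` are components of the fitted object, and `anova()` on the nested intercept-only model gives the same p-value.

```r
# Hypothetical simulated stand-in for the vomiting/age data
set.seed(3)
n <- 300
age <- runif(n, 10, 80)
vomit <- rbinom(n, 1, plogis(0.2 - 0.02 * age))
fit <- glm(vomit ~ age, family = binomial(link = "logit"))

# Overall likelihood ratio test: null deviance minus residual deviance
lr_stat <- fit$null.deviance - fit$deviance
lr_df <- fit$df.null - fit$df.residual
p_overall <- pchisq(lr_stat, df = lr_df, lower.tail = FALSE)
c(LR = lr_stat, df = lr_df, p = p_overall)

# The same test via anova() on nested models
fit0 <- glm(vomit ~ 1, family = binomial(link = "logit"))
anova(fit0, fit, test = "Chisq")
```

Using `pchisq(..., lower.tail = FALSE)` rather than `1 - pchisq(...)` avoids losing precision when the p-value is extremely small.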

Thus the fitted logistic model for these data is:

log odds(vomiting) = -0.14 - 0.02*age

This means that for a one-unit increase in age there is a 0.02 decrease in the log odds of vomiting. Exponentiating gives the odds ratio, e^(-0.02) = 0.98. Groups of people in an age group one unit higher than a reference group have, on average, 0.98 times the odds of vomiting.

How do we test the association between vomiting and age?

- H0: There is no association between vomiting and age (the odds ratio is equal to 1).

- Ha: There is an association between vomiting and age (the odds ratio is not equal to 1).

When testing the null hypothesis that there is no association between vomiting and age, we reject the null hypothesis at the 0.05 alpha level (z = -3.89, p-value = 9.89e-05).

On average, the odds of vomiting are 0.98 times those of otherwise identical subjects in an age group one unit younger.
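The z value, p-value and odds ratio above can be reproduced from the two numbers printed for `age`. This minimal sketch also adds a 95% Wald confidence interval for the odds ratio, which is not part of the original output. With a fitted model object, `exp(confint.default(fit))` gives the same kind of interval.

```r
# Estimate and standard error for age, copied from the summary() output
b_age <- -0.015437
se_age <- 0.003965

z <- b_age / se_age                  # Wald z-statistic
p_value <- 2 * pnorm(-abs(z))        # two-sided p-value
or_age <- exp(b_age)                 # odds ratio for a one-unit increase in age
ci_or <- exp(b_age + c(-1, 1) * qnorm(0.975) * se_age)  # 95% Wald CI for the odds ratio

round(c(z = z, OR = or_age, lower = ci_or[1], upper = ci_or[2]), 3)
signif(p_value, 3)
```

The unrounded odds ratio is about 0.985; the 0.98 in the text comes from rounding the coefficient to -0.02 first. The whole interval lies below 1, which agrees with the Wald test rejecting H0 at the 0.05 level.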

Finally, when deciding whether to include a particular variable in our model (for example, a potential confounder), we can use the "10% rule": if our estimate of interest changes by more than 10% when the new covariate is added to the model, then we include that covariate in our model. When we do this in logistic regression, we compare the exponentials of the betas (the odds ratios), not the untransformed betas themselves!
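The 10% rule can be written as a small helper that compares odds ratios rather than raw coefficients. This is a minimal sketch: the function name `pct_change_or`, the simulated data and the confounding structure (sex affects both age and vomiting) are hypothetical.

```r
# Percent change in the odds ratio when a potential confounder is added
pct_change_or <- function(b_crude, b_adjusted) {
  100 * abs(exp(b_adjusted) - exp(b_crude)) / exp(b_crude)
}

# Hypothetical simulated data in which sex is related to both age and vomiting
set.seed(4)
n <- 1000
sex <- rbinom(n, 1, 0.5)
age <- rnorm(n, mean = 40 + 10 * sex, sd = 10)
vomit <- rbinom(n, 1, plogis(-1 + 0.8 * sex - 0.02 * age))

crude <- glm(vomit ~ age, family = binomial)
adjusted <- glm(vomit ~ age + sex, family = binomial)
change <- pct_change_or(coef(crude)["age"], coef(adjusted)["age"])
change
change > 10   # TRUE would mean: keep sex in the model under the 10% rule
```

Comparing odds ratios matters because a 10% change in a coefficient near zero and a 10% change in its exponential are not the same thing.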

Test the hypothesis that being nauseated was not associated with sex and age (hint: use a multiple logistic regression model). Test the overall hypothesis that there is no association between nausea and sex and age. Then test the individual main effects hypotheses (i.e., no association between sex and nausea after adjusting for age, and vice versa).


17.2 Inference for Logistic Regression

Statistical inference for logistic regression with one explanatory variable is similar to statistical inference for simple linear regression. We calculate estimates of the model parameters and standard errors for these estimates. Confidence intervals are formed in the usual way, but we use standard Normal \(z^*\) values rather than critical values from the \(t\) distributions. The ratio of the estimate to the standard error is the basis for hypothesis tests.

Wald statistic

The \(z\) statistic, \(z = b_1/SE_{b_1}\), is sometimes called the Wald statistic. Output from some statistical software reports the significance test result in terms of the square of the \(z\) statistic:

\[X^2 = z^2\]

This statistic is called a chi-square statistic. When the null hypothesis is true, it has a distribution that is approximately a \(\chi^2\) distribution with one degree of freedom, and the \(P\)-value is calculated as \(P(\chi^2 \geq X^2)\). Because the square of a standard Normal random variable has a \(\chi^2\) distribution with one degree of freedom, the \(z\) statistic and the chi-square statistic give the same results for statistical inference.


Confidence Intervals and Significance Tests for Logistic Regression

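An approximate level \(C\) confidence interval for the slope \(\beta_1\) in the logistic regression model is

\[b_1 \pm z^* SE_{b_1}\]

The ratio of the odds for a value of the explanatory variable equal to \(x+1\) to the odds for a value of the explanatory variable equal to \(x\) is the odds ratio. A level \(C\) confidence interval for the odds ratio \(e^{\beta_1}\) is obtained by transforming the confidence interval for the slope,

\[\left(e^{b_1 - z^* SE_{b_1}},\; e^{b_1 + z^* SE_{b_1}}\right)\]

In these expressions \(z^*\) is the standard Normal critical value with area \(C\) between \(-z^*\) and \(z^*\).

To test the hypothesis \(H_0: \beta_1 = 0\), compute the test statistic

\[X^2 = \left(\frac{b_1}{SE_{b_1}}\right)^2\]

In terms of a random variable \(\chi^2\) having the \(\chi^2\) distribution with one degree of freedom, the \(P\)-value for a test of \(H_0\) against \(H_a: \beta_1 \neq 0\) is approximately \(P(\chi^2 \geq X^2)\).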
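The formulas in this box translate directly into a small R function. This is a minimal sketch; the function name `logistic_slope_inference` is hypothetical, and `b1` and `se` are the estimate and standard error read from software output.

```r
# Level-C inference for the slope b1 of a logistic regression, given its SE
logistic_slope_inference <- function(b1, se, level = 0.95) {
  z_star <- qnorm(1 - (1 - level) / 2)   # standard Normal critical value z*
  ci_slope <- b1 + c(-1, 1) * z_star * se
  X2 <- (b1 / se)^2                      # Wald chi-square statistic
  list(
    ci_slope = ci_slope,
    odds_ratio = exp(b1),
    ci_odds_ratio = exp(ci_slope),       # transform the slope interval
    X2 = X2,
    p_value = pchisq(X2, df = 1, lower.tail = FALSE)
  )
}

# Hypothetical usage
res <- logistic_slope_inference(b1 = 0.8, se = 0.3)
res$ci_odds_ratio
res$p_value
```

Because the interval for the odds ratio is the exponential of the interval for the slope, it is not symmetric about \(e^{b_1}\).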

We have expressed the null hypothesis in terms of the slope because this form closely resembles what we studied in simple linear regression. In many applications, however, the results are expressed in terms of the odds ratio. A slope of 0 is the same as an odds ratio of 1, so we often express the null hypothesis of interest as “the odds ratio is 1.” This means that the two odds are equal and the explanatory variable is not useful for predicting the odds.

EXAMPLE 17.7 Computer Output for Tipping Study

CASE 17.1 Figure 17.3 gives the output from Minitab and SAS for the tipping study. The parameter estimates match those we calculated in Example 17.4. The standard errors are 0.1107 and 0.2678. A 95% confidence interval for the slope is

\[b_1 \pm z^* SE_{b_1} = b_1 \pm (1.96)(0.2678) = b_1 \pm 0.5249\]

We are 95% confident that the slope is between 0.3182 and 1.368. Both Minitab and SAS output provide the odds ratio estimate and 95% confidence interval. If this interval is not provided, it is easy to compute from the interval for the slope \(\beta_1\):

\[\left(e^{0.3182},\; e^{1.368}\right)\]

We conclude, “Servers wearing red are more likely to be tipped than servers wearing a different color (95% CI for the odds ratio 1.375 to 3.928).”

It is standard to use 95% confidence intervals, and software often reports these intervals. A 95% confidence interval for the odds ratio also provides a test of the null hypothesis that the odds ratio is 1 at the 5% significance level. If the confidence interval does not include 1, we reject \(H_0\) and conclude that the odds for the two groups are different; if the interval does include 1, the data do not provide enough evidence to distinguish the groups in this way.

Apply Your Knowledge

Question 17.8

17.8 Read the output.

CASE 17.1 Examine the Minitab and SAS output in Figure 17.3. Create a table that reports the estimates of \(\beta_0\) and \(\beta_1\) with their standard errors. Also report the odds ratio with its 95% confidence interval as given in this output.

Question 17.9

17.9 Inference for energy drink commercials.

Use software to run a logistic regression analysis for the energy drink commercial data of Exercise 17.1 . Summarize the results of the inference.

The odds ratio estimate is 1.227; the 95% confidence interval is (0.761, 1.979).

Question 17.10

17.10 Inference for audio/visual sharing.

Use software to run the logistic regression analysis for the audio/visual sharing data of Exercise 17.2 . Summarize the results of the inference.

Examples of logistic regression analyses

The following example is typical of many applications of logistic regression. It concerns a designed experiment with five different values for the explanatory variable.

EXAMPLE 17.8 Effectiveness of an Insecticide

As part of a cost-effectiveness study, a wholesale florist company ran an experiment to examine how well the insecticide rotenone kills an aphid called Macrosiphoniella sanborni that feeds on the chrysanthemum plant. The explanatory variable is the concentration (in log of milligrams per liter) of the insecticide. About 50 aphids were exposed to each of the five concentrations. Each insect was either killed or not killed. Here are the data, along with the results of some calculations:

| Concentration (log scale) | Number of insects | Number killed | Proportion killed | Log odds |
|---|---|---|---|---|
| 0.96 | 50 | 6 | 0.1200 | −1.9924 |
| 1.33 | 48 | 16 | 0.3333 | −0.6931 |
| 1.63 | 46 | 24 | 0.5217 | 0.0870 |
| 2.04 | 49 | 42 | 0.8571 | 1.7918 |
| 2.32 | 50 | 44 | 0.8800 | 1.9924 |

Because there are replications at each concentration, we can calculate the proportion killed and estimate the log odds of death at each concentration. The logistic model in this case assumes that the log odds are linearly related to log concentration. Least-squares regression of log odds on log concentration gives the fit illustrated in Figure 17.4 . There is a clear linear relationship, which justifies our use of the logistic model. The logistic regression fit for the proportion killed appears in Figure 17.5 . It is a transformed version of Figure 17.4 with the fit calculated using the logistic model rather than least squares.
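The proportions, log odds and least-squares line described above can be reproduced in R from the table. This is a minimal sketch; the data frame name `aphids` is hypothetical, and `qlogis()` is R's built-in logit.

```r
# Insecticide data from the table above
aphids <- data.frame(
  conc = c(0.96, 1.33, 1.63, 2.04, 2.32),   # log concentration
  n = c(50, 48, 46, 49, 50),                # number of insects
  killed = c(6, 16, 24, 42, 44)             # number killed
)
aphids$prop <- aphids$killed / aphids$n
aphids$log_odds <- log(aphids$prop / (1 - aphids$prop))   # same as qlogis(prop)

# Least-squares regression of log odds on log concentration
ls_fit <- lm(log_odds ~ conc, data = aphids)
round(aphids, 4)
coef(ls_fit)
```

This least-squares line is only a visual check of linearity on the log odds scale; the logistic regression fit itself is obtained by maximum likelihood.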

When the explanatory variable has several values, we can often use graphs like those in Figures 17.4 and 17.5 to visually assess whether the logistic regression model seems appropriate. Just as a scatterplot of y versus x in simple linear regression should show a linear pattern, a plot of log odds versus x in logistic regression should be close to linear. Just as in simple linear regression, outliers in the x direction should be avoided because they may overly influence the fitted model.

The graphs strongly suggest that insecticide concentration affects the kill rate in a way that fits the logistic regression model. Is the effect statistically significant? Suppose that rotenone has no ability to kill Macrosiphoniella sanborni. What is the chance that we would observe experimental results at least as convincing as what we observed if this supposition were true? The answer is the \(P\)-value for the test of the null hypothesis that the logistic regression slope is zero. If this \(P\)-value is not small, our graph may be misleading. As usual, we must add inference to our data analysis.

EXAMPLE 17.9 Does Concentration Affect the Kill Rate?

Figure 17.6 gives the output from JMP and Minitab for logistic regression analysis of the insecticide data. The model is

\[\log\left(\frac{p_i}{1-p_i}\right) = \beta_0 + \beta_1 x_i\]

where the values of the explanatory variable are 0.96, 1.33, 1.63, 2.04, 2.32. From the JMP output, we see that the fitted model is

Figure 17.5 is a graph of the fitted \(\hat{p}\) given by this equation against \(x\), along with the data used to fit the model. JMP gives the \(X^2\) statistic under the heading “ChiSquare.” The null hypothesis that \(\beta_1 = 0\) is clearly rejected (\(P < 0.0001\)).

The estimated odds ratio is 22.394. An increase of one unit in the log concentration of insecticide (\(x\)) is associated with a 22-fold increase in the odds that an insect will be killed. The confidence interval for the odds ratio is given in the Minitab output: (10.470, 47.896).

Remember that the test of the null hypothesis that the slope is 0 is the same as the test of the null hypothesis that the odds ratio is 1. If we were reporting the results in terms of the odds, we could say, “The odds of killing an insect increase by a factor of 22.4 for each unit increase in the log concentration of insecticide (\(P < 0.0001\); 95% CI 10.5 to 47.9).”
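The logistic regression itself can be fitted with `glm()` from the grouped counts, using `cbind(killed, n - killed)` as the response. This is a minimal sketch; the data frame name `aphids` is hypothetical. `confint.default()` gives Wald intervals like the one Minitab reports, so the estimated odds ratio, its interval and the \(z\) statistic (about 8.01) should agree with the values quoted above up to rounding.

```r
aphids <- data.frame(
  conc = c(0.96, 1.33, 1.63, 2.04, 2.32),   # log concentration
  n = c(50, 48, 46, 49, 50),
  killed = c(6, 16, 24, 42, 44)
)

# Grouped binomial response: cbind(successes, failures)
fit <- glm(cbind(killed, n - killed) ~ conc, family = binomial, data = aphids)

b1 <- coef(fit)["conc"]
or_conc <- exp(b1)                              # estimated odds ratio
ci_or <- exp(confint.default(fit)["conc", ])    # Wald 95% CI for the odds ratio
z <- coef(summary(fit))["conc", "z value"]      # z^2 is the Wald chi-square X^2

c(OR = unname(or_conc), lower = unname(ci_or[1]), upper = unname(ci_or[2]), z = z)
```

Grouped counts and one row per insect give the same maximum likelihood estimates; the grouped form is simply more compact.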

Question 17.11

17.11 Find the 95% confidence interval for the slope.

Using the information in the output of Figure 17.6, find a 95% confidence interval for \(\beta_1\).

(2.349, 3.869).

Question 17.12

17.12 Find the 95% confidence interval for the odds ratio.

Using the estimate and its standard error in the output of Figure 17.6 , find the 95% confidence interval for the odds ratio and verify that this agrees with the interval given by Minitab.

Question 17.13

The Minitab output in Figure 17.6 does not give the value of \(X^2\). The column labeled “Z-Value” provides similar information.

- Find the value under the heading “Z-Value” for the predictor LCONC. Verify that this value is simply the estimated coefficient divided by its standard error. This is a \(z\) statistic that has approximately the standard Normal distribution if the null hypothesis (slope 0) is true.

- Show that the square of \(z\) is \(X^2\). The two-sided \(P\)-value for \(z\) is the same as for \(X^2\).

(a) \(z \approx 8.01\). (b) 64.16, which agrees with the output up to rounding error.

In Example 17.6 , we studied the problem of predicting whether a movie will be profitable using the log opening-weekend revenue as the explanatory variable. We now revisit this example to include the results of inference.

EXAMPLE 17.10 Predicting a Movie's Profitability

Figure 17.7 gives the output from Minitab for a logistic regression analysis using log opening-weekend revenue as the explanatory variable. The fitted model is

This agrees up to rounding with the result reported in Example 17.6 .

From the output, we see that the \(P\)-value is not small, so we cannot reject the null hypothesis that the slope \(\beta_1 = 0\). The test statistic is calculated from the estimate \(b_1\) and its standard error \(SE_{b_1}\). Minitab reports the odds ratio as 2.184, with a 95% confidence interval of (0.7584, 6.2898). Notice that this confidence interval contains the value 1, which is another way to assess \(H_0: \beta_1 = 0\). In this case, we don't have enough evidence to conclude that this explanatory variable, by itself, is helpful in predicting the probability that a movie will be profitable.

We estimate that a one-unit increase in the log opening-weekend revenue will increase the odds that the movie is profitable about 2.2 times. The data, however, do not give us a very accurate estimate. We do not have strong enough evidence to conclude that movies with higher opening-weekend revenues are more likely to be profitable. Establishing the true relationship accurately would require more data.
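When software reports only the odds ratio and its confidence interval, the slope, its standard error and the Wald test can be recovered. This minimal sketch assumes the interval is the usual Wald interval \(b_1 \pm 1.96\,SE_{b_1}\) exponentiated from the log scale, and uses the Minitab values quoted above.

```r
# Reported by Minitab: odds ratio and its 95% confidence interval
or_hat <- 2.184
ci <- c(0.7584, 6.2898)

# On the log scale the Wald interval is b1 +/- z* SE, so back out b1 and SE
b1 <- mean(log(ci))                          # midpoint of the log-scale interval
se <- diff(log(ci)) / (2 * qnorm(0.975))     # half-width divided by z*
z <- b1 / se
p_value <- 2 * pnorm(-abs(z))
contains_one <- ci[1] < 1 && 1 < ci[2]       # does the CI for the OR include 1?

round(c(b1 = b1, se = se, z = z, p = p_value), 4)
contains_one
```

The recovered p-value is about 0.15, consistent with not rejecting \(H_0\) and with the interval containing 1.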

Companion to BER 642: Advanced Regression Methods

Chapter 11 Multinomial Logistic Regression

11.1 Introduction to Multinomial Logistic Regression

Logistic regression is a technique used when the dependent variable is categorical (or nominal). In binary logistic regression the dependent variable has two categories, whereas in multinomial logistic regression it has more than two categories.

Examples: consumers decide to buy or not to buy, a product may pass or fail quality control, there are good or poor credit risks, and an employee may be promoted or not.

11.2 Equation

In logistic regression, a logistic transformation of the odds (referred to as the logit) serves as the dependent variable:

\[\log (o d d s)=\operatorname{logit}(P)=\ln \left(\frac{P}{1-P}\right)=a+b_{1} x_{1}+b_{2} x_{2}+b_{3} x_{3}+\ldots\]

\[p=\frac{\exp \left(a+b_{1} x_{1}+b_{2} x_{2}+b_{3} x_{3}+\ldots\right)}{1+\exp \left(a+b_{1} x_{1}+b_{2} x_{2}+b_{3} x_{3}+\ldots\right)}\]

Where:

p = the probability that a case is in a particular category,

exp = the exponential function (\(e \approx 2.72\) raised to the given power),

a = the constant of the equation and,

b = the coefficient of the predictor or independent variables.
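The two equations are inverses of each other: the logit maps a probability to the log odds, and the exp formula maps the log odds back to a probability. This is a minimal sketch with hypothetical coefficients; the function names `logit_to_p` and `p_to_logit` are illustrative. R's built-in `plogis()` and `qlogis()` compute the same transformations and are safer for very large linear predictors, where `exp()` can overflow.

```r
# p = exp(a + b1*x1 + ...) / (1 + exp(a + b1*x1 + ...))
logit_to_p <- function(a, b, x) {
  eta <- a + sum(b * x)            # linear predictor, i.e. the log odds
  exp(eta) / (1 + exp(eta))
}

# logit(p) = ln(p / (1 - p))
p_to_logit <- function(p) log(p / (1 - p))

# Hypothetical coefficients and predictor values: eta = -1 + 0.5*2 + 2*0.25 = 0.5
p <- logit_to_p(a = -1, b = c(0.5, 2), x = c(2, 0.25))
p
p_to_logit(p)   # back to the log odds
```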

Logits or Log Odds

Odds value can range from 0 to infinity and tell you how much more likely it is that an observation is a member of the target group rather than a member of the other group.

- Odds = p/(1-p)

If the probability is 0.80, the odds are 4 to 1 or .80/.20; if the probability is 0.25, the odds are .33 (.25/.75).

The odds ratio (OR) estimates the change in the odds of membership in the target group for a one-unit increase in the predictor. It is calculated by exponentiating the regression coefficient of the predictor: OR = exp(b).

Assume that in the earlier example, where we were predicting accountancy success from a maths competency predictor, b = 2.69. Then the odds ratio is exp(2.69), or 14.73. Therefore the odds of passing are 14.73 times greater for a student who had a pre-test score of 5 than for a student whose pre-test score was 4.
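The odds and odds-ratio arithmetic above, in R. This is a minimal sketch; the intercept `a` is hypothetical and cancels in the ratio, which is why the odds ratio depends only on b.

```r
odds <- function(p) p / (1 - p)

odds(0.80)   # 4
odds(0.25)   # 1/3

# Odds ratio from a logistic coefficient (b = 2.69 in the example)
b <- 2.69
or <- exp(b)

# Odds at pre-test score 5 are 'or' times the odds at score 4, whatever the intercept
a <- -12     # hypothetical intercept
odds_5 <- exp(a + b * 5)
odds_4 <- exp(a + b * 4)
c(OR = or, ratio = odds_5 / odds_4)
```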

11.3 Hypothesis Test of Coefficients

In logistic regression, two hypotheses are of interest:

The null hypothesis, under which all the coefficients in the regression equation take the value zero, and

The alternative hypothesis, that the model currently under consideration is accurate and differs significantly from the null of zero, i.e., gives significantly better than chance or random prediction.

Evaluation of Hypothesis

We then work out the likelihood of observing the data we actually observed under each of these hypotheses. The result is usually a very small number, so to make it easier to handle, the natural logarithm is used, producing a log likelihood (LL). Probabilities are at most one, so LLs are never positive (and in practice are negative). The log likelihood is the basis for tests of a logistic model.

11.4 Likelihood Ratio Test

The likelihood ratio test is based on -2LL. The model chi-square is the difference between -2LL for the baseline model with only a constant and -2LL for the researcher’s model with predictors; the test asks whether this difference is significant.

Significance at the .05 level or lower means the researcher’s model with the predictors is significantly different from the one with the constant only (all ‘b’ coefficients being zero). It measures the improvement in fit that the explanatory variables make compared to the null model.

Chi square is used to assess significance of this ratio (see Model Fitting Information in SPSS output).

\(H_0\) : There is no difference between null model and final model.

\(H_1\) : There is difference between null model and final model.

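11.5 Checking Assumption: Multicollinearity

Run an ordinary linear regression, treating the categorical dependent variable as if it were continuous, and request the collinearity diagnostics. These diagnostics depend only on the predictors, so treating the dependent variable this way does not affect them.

If the largest VIF (Variance Inflation Factor) is greater than 10, there is cause for concern (Bowerman & O’Connell, 1990).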

Tolerance below 0.1 indicates a serious problem.

Tolerance below 0.2 indicates a potential problem (Menard,1995).

If the condition index is greater than 15, multicollinearity is assumed to be present.
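The VIF for predictor \(j\) is \(1/(1 - R_j^2)\), where \(R_j^2\) comes from regressing that predictor on all the others, and tolerance is \(1/\text{VIF}\). This is a minimal base-R sketch; the function name `vif_tolerance` and the toy predictors are hypothetical (the car package's `vif()` gives the same values for numeric predictors fitted with `lm()`).

```r
# VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing predictor j on the others;
# tolerance = 1 / VIF
vif_tolerance <- function(X) {
  X <- as.data.frame(X)
  vif <- sapply(names(X), function(v) {
    f <- reformulate(setdiff(names(X), v), response = v)
    1 / (1 - summary(lm(f, data = X))$r.squared)
  })
  data.frame(VIF = vif, Tolerance = 1 / vif)
}

# Hypothetical predictors: x2 is almost a copy of x1
X <- data.frame(
  x1 = 1:10,
  x2 = 1:10 + rep(c(0.1, -0.1), 5),
  x3 = c(3, 1, 4, 1, 5, 9, 2, 6, 5, 3)
)
vt <- vif_tolerance(X)
vt   # x1 and x2: VIF far above 10, tolerance far below 0.1
```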

11.6 Features of Multinomial logistic regression

Multinomial logistic regression is used to predict membership of more than two categories. It (basically) works in the same way as binary logistic regression. The analysis breaks the outcome variable down into a series of comparisons between two categories.

E.g., if you have three outcome categories (A, B and C), then the analysis will consist of two comparisons that you choose:

Compare everything against your first category (e.g. A vs. B and A vs. C),

Or your last category (e.g. A vs. C and B vs. C),

Or a custom category (e.g. B vs. A and B vs. C).

The important parts of the analysis and output are much the same as we have just seen for binary logistic regression.

11.7 R Labs: Running Multinomial Logistic Regression in R

11.7.1 Understanding the Data: Choice of Programs

The data set (hsbdemo.sav) contains variables on 200 students. The outcome variable is prog, program type (1 = general, 2 = academic, and 3 = vocational). The predictor variables are ses, socioeconomic status (1 = low, 2 = middle, and 3 = high), and math and science, the mathematics and science scores, both of which are continuous variables.

(Research Question): When high school students choose a program (general, vocational, or academic), how do their math and science scores and their socioeconomic status (SES) affect their decision?

11.7.2 Prepare and review the data

Now let’s do the descriptive analysis

11.7.3 Run the Multinomial Model using “nnet” package

Below we use the multinom function from the nnet package to estimate a multinomial logistic regression model. There are other functions in other R packages capable of multinomial regression. We chose the multinom function because it does not require the data to be reshaped (as the mlogit package does) and to mirror the example code found in Hilbe’s Logistic Regression Models.

First, we need to choose the level of our outcome that we wish to use as our baseline and specify this in the relevel function. Then, we run our model using multinom. The multinom function does not report p-values for the regression coefficients, so we calculate p-values using Wald tests (here z-tests).

These are the logit coefficients relative to the reference category. For example, under ‘math’, the -0.185 suggests that for a one-unit increase in math score, the log odds of being in that row's program relative to the reference program (academic) go down by 0.185.
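The hsbdemo data are not bundled with R, so this minimal sketch uses the built-in iris data as a stand-in to show the same steps: `relevel()` to set the baseline, `multinom()` from the nnet package to fit the model, and Wald z-tests computed from the `coefficients` and `standard.errors` components of `summary()`.

```r
library(nnet)

# Stand-in data: iris ships with R; 'versicolor' plays the role of the reference category
dat <- iris
dat$Species <- relevel(dat$Species, ref = "versicolor")

fit <- multinom(Species ~ Sepal.Length, data = dat, trace = FALSE)

s <- summary(fit)
z <- s$coefficients / s$standard.errors   # Wald z-statistics
p <- 2 * pnorm(-abs(z))                   # two-sided p-values
s$coefficients                            # one row per non-reference category
p
```

Each row of the coefficient matrix is a separate logit comparing that category with the reference, so the interpretation row by row is the same as in binary logistic regression.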

11.7.4 Check the model fit information

Interpretation of the Model Fit information

The log-likelihood is a measure of how much unexplained variability there is in the data. Therefore, the difference or change in log-likelihood indicates how much new variance has been explained by the model.

The chi-square test tests the decrease in unexplained variance from the baseline model (408.1933) to the final model (333.9036), which is a difference of 408.1933 - 333.9036 = 74.29. This change is significant, which means that our final model explains a significant amount of the original variability.

The likelihood ratio chi-square of 74.29 with a p-value < 0.001 tells us that our model as a whole fits significantly better than an empty or null model (i.e., a model with no predictors).
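The model chi-square and its p-value follow from the two -2LL values. This is a minimal sketch; the 8 degrees of freedom are inferred from the model description (two logits, each with two SES dummies plus math and science), consistent with the 4-df SES test reported below. With fitted `multinom` objects, `deviance()` returns -2LL and `anova()` on the nested models performs this test.

```r
# -2 log-likelihood values reported for the program-choice model
m2ll_null <- 408.1933    # intercept-only (baseline) model
m2ll_final <- 333.9036   # model with ses, math and science

lr_chisq <- m2ll_null - m2ll_final
# df = parameters added: 2 logits x (2 ses dummies + math + science)
lr_df <- 2 * (2 + 1 + 1)
p_value <- pchisq(lr_chisq, df = lr_df, lower.tail = FALSE)
c(chisq = lr_chisq, df = lr_df, p = p_value)
```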

11.7.5 Calculate the Goodness of fit

11.7.6 Calculate the Pseudo R-Square

Interpretation of the R-Square:

These are three pseudo R-squared values. Logistic regression does not have an equivalent to the R-squared that is found in OLS regression; however, many people have tried to come up with one. These statistics do not mean exactly what R-squared means in OLS regression (the proportion of variance of the response variable explained by the predictors), so we suggest interpreting them with great caution.

Cox and Snell’s R-square imitates multiple R-square based on ‘likelihood’, but its maximum can be (and usually is) less than 1.0, making it difficult to interpret. Here it is 0.31, which is sometimes loosely read as 31% of the variation in the dependent variable being explained by the logistic model.

The Nagelkerke modification, which does range from 0 to 1, is a more reliable measure of the relationship. Nagelkerke’s R² will normally be higher than the Cox and Snell measure. In our case it is 0.357 (35.7%).

McFadden = {LL(null) – LL(full)} / LL(null). In our case it is 0.182 (18.2%).
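All three pseudo R-squared values can be reproduced from the two -2LL values reported in 11.7.4 and the sample size of 200 students. A minimal sketch using the standard formulas:

```r
n <- 200                       # students in the data set
ll_null <- -408.1933 / 2       # log-likelihood, intercept-only model
ll_full <- -333.9036 / 2       # log-likelihood, final model

mcfadden <- 1 - ll_full / ll_null                      # = (LL(null) - LL(full)) / LL(null)
cox_snell <- 1 - exp(2 * (ll_null - ll_full) / n)
nagelkerke <- cox_snell / (1 - exp(2 * ll_null / n))   # rescales Cox & Snell to a max of 1

round(c(McFadden = mcfadden, CoxSnell = cox_snell, Nagelkerke = nagelkerke), 3)
```

The denominator in the Nagelkerke formula is the largest value Cox and Snell’s measure can reach for this null model, which is why the rescaled value is higher.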

11.7.7 Likelihood Ratio Tests

Interpretation of the Likelihood Ratio Tests

The results of the likelihood ratio tests can be used to ascertain the significance of predictors to the model. This table tells us that SES and math score had significant main effects on program selection, \(X^2\)(4) = 12.917, p = .012 for SES and \(X^2\)(2) = 10.613, p = .005 for math.

These likelihood statistics can be seen as sorts of overall statistics that tell us which predictors significantly enable us to predict the outcome category, but they don’t really tell us specifically what the effect is. To see this we have to look at the individual parameter estimates.
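Each p-value in the likelihood ratio table follows from its chi-square statistic and degrees of freedom. A minimal check in R:

```r
# Likelihood ratio chi-square statistics and their df, from the table
p_ses <- pchisq(12.917, df = 4, lower.tail = FALSE)
p_math <- pchisq(10.613, df = 2, lower.tail = FALSE)
round(c(ses = p_ses, math = p_math), 3)
```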

11.7.8 Parameter Estimates

Note that the table is split into two rows. This is because these parameters compare pairs of outcome categories.

We specified the second category (2 = academic) as our reference category; therefore, the first row of the table, labelled General, compares this category against the ‘Academic’ category. The second row, labelled Vocational, also compares this category against the ‘Academic’ category.

Because we are just comparing two categories the interpretation is the same as for binary logistic regression:

The relative log odds of being in the general program versus the academic program will decrease by 1.125 if moving from the lowest level of SES (SES = 1) to the highest level of SES (SES = 3), b = -1.125, Wald z = -5.27, p < .001.

Exp(-1.1254491) = 0.3245067 means that when students move from the lowest level of SES (SES = 1) to the highest level of SES (SES = 3), the odds of choosing the general program rather than the academic program are 0.325 times as high; therefore students with the lowest level of SES tend to choose the general program over the academic program more than students with the highest level of SES.

The relative log odds of being in the vocational program versus the academic program will decrease by 0.56 if moving from the lowest level of SES (SES = 1) to the highest level of SES (SES = 3), b = -0.56, Wald z = -2.82, p < 0.01.

Exp(-0.56) = 0.57 means that when students move from the lowest level of SES (SES = 1) to the highest level of SES (SES = 3), the odds of choosing the vocational program rather than the academic program are 0.57 times as high; therefore students with the lowest level of SES tend to choose the vocational program over the academic program more than students with the highest level of SES.
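Exponentiating a coefficient gives the odds ratio for the SES contrast, and reversing the direction of the comparison simply inverts it. A minimal sketch with the two coefficients quoted above:

```r
b <- c(general = -1.1254491, vocational = -0.56)   # SES contrast, each vs. academic
or <- exp(b)        # odds ratios for the contrast as coded
or_rev <- 1 / or    # odds ratios with the SES comparison reversed
round(rbind(or, or_rev), 3)
```

An odds ratio of 0.325 in one direction is the same finding as roughly 3.08 in the other, so it pays to state which SES level is the reference before interpreting the sign.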

11.7.9 Interpretation of the Predictive Equation

Please check your slides for detailed information. You can find all the values in the R output above.

11.7.10 Build a classification table
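A classification table cross-tabulates observed and predicted categories, where each prediction is the category with the highest fitted probability. A minimal sketch, again using the built-in iris data as a stand-in for hsbdemo:

```r
library(nnet)

# Stand-in data: iris ships with R
fit <- multinom(Species ~ Sepal.Length, data = iris, trace = FALSE)

predicted <- predict(fit, newdata = iris, type = "class")   # most probable category
class_table <- table(Observed = iris$Species, Predicted = predicted)
accuracy <- sum(diag(class_table)) / sum(class_table)       # proportion correctly classified

class_table
round(accuracy, 3)
```

Accuracy computed on the same data used to fit the model is optimistic; a held-out sample gives a fairer estimate.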

11.8 Supplementary Learning Materials

Field, A. (2013). Discovering statistics using IBM SPSS statistics (4th ed.). Los Angeles, CA: Sage Publications.

Agresti, A. (1996). An introduction to categorical data analysis. New York, NY: Wiley & Sons.

IBM SPSS Regression 22.

Logistic Regression

Logistic regression is an extension of simple linear regression. Simple linear regression is a statistical technique that is used to learn about the relationship between the dependent and independent variables. In linear regression, the dependent variable is continuous. For example, we could apply it to sales and marketing expenditure, where we want to predict sales based on marketing expenditure. When the dependent variable is dichotomous or binary in nature, we cannot use simple linear regression. Logistic regression is the statistical technique used to model the relationship between predictors and a binary dependent variable. Furthermore, where our dependent variable has two categories, we use binary logistic regression. If our dependent variable has more than two categories, it will be necessary to use multinomial logistic regression, whereas if our dependent variable is ordinal in nature, we use ordinal logistic regression.

In logistic regression, we assume one reference category with which we compare the other categories for the probability of the occurrence of specific ‘events’ by fitting a logistic curve.

Like other regression techniques, logistic regression involves the use of two hypotheses:

1. Null hypothesis: the beta coefficient is equal to zero, and

2. Alternative hypothesis: the beta coefficient is not equal to zero.

Logistic regression does not require that the relationship between the dependent variable and the independent variable(s) be linear, although it does assume a linear relationship between the independent variables and the log odds of the dependent variable. Also, logistic regression does not require the error term to be normally distributed, nor does it require equal variances (homoscedasticity). Logistic regression assumes that the independent variables are interval scaled or binary in nature. However, logistic regression does assume the absence of outliers.

There are some key differences in the methodologies and processes involved in simple vs. logistic regression. In simple regression, ANOVA is used to evaluate the overall model fit, and R-square measures the proportion of variance explained by the independent variable. In logistic regression, pseudo-R² measures such as Cox and Snell’s R², Nagelkerke’s R², and McFadden’s R² serve as alternatives to R-square. Furthermore, in simple regression we use the t-test to assess the significance of individual variables, whereas in logistic regression we use the Wald statistic. Instead of the raw beta, logistic regression results are usually reported as the exponentiated beta, exp(β). Exp(β) is an odds ratio: the factor by which the odds of the event (the probability of the event occurring divided by the probability of it not occurring) change for a one-unit increase in the independent variable.

A rule of thumb (Peduzzi et al., 1996) recommends that, to estimate the logistic regression function reliably, there should be a minimum of 10 events (cases in the less frequent outcome category) per independent variable. For instance, with 10 independent variables, at least 100 such cases are needed once missing values and outliers have been taken into account.
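The events-per-variable rule is often turned into a minimum total sample size \(N = 10k/p\), where \(k\) is the number of independent variables and \(p\) is the proportion of cases in the less frequent outcome category. A minimal sketch; the function name `min_sample_size` is hypothetical.

```r
# Minimum total sample size under an events-per-variable (EPV) rule
min_sample_size <- function(k, p_min, epv = 10) {
  # k: number of independent variables
  # p_min: proportion of cases in the less frequent outcome category
  ceiling(epv * k / p_min)
}

min_sample_size(k = 10, p_min = 0.5)    # 200
min_sample_size(k = 10, p_min = 0.25)   # 400
```

The rarer the outcome, the larger the total sample has to be to supply the same number of events.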


Introduction to Statistics and Data Science

Chapter 18 Logistic Regression

18.1 What Is Logistic Regression Used For?

Logistic regression is useful when we have a response variable that is categorical with only two categories. This might not seem especially useful; however, with a little thought we can see that it is actually a very useful thing to know how to do. Here are some examples where we might use logistic regression.

- Predict whether a customer will visit your website again using browsing data

- Predict whether a voter will vote for the Democratic candidate in an upcoming election using demographic and polling data

- Predict whether a patient who undergoes surgery will survive for 5+ years after the surgery using health data

- Given the history of a stock and market trends, predict whether the closing price tomorrow will be higher or lower than today

There are many other possible examples. We can often phrase important questions as yes/no (0-1) answers, where we want to use some data to better predict the outcome. This is a simple case of what is called a classification problem in the machine learning/data science community: given some information, we want to use a computer to make a prediction that falls into one of a finite number of outcomes.

18.2 GLM: Generalized Linear Models

Our linear regression techniques thus far have focused on cases where the response (\(Y\)) variable is continuous in nature. Recall, they take the form: \[ \begin{equation} Y_i=\alpha+ \sum_{j=1}^N \beta_j X_{ij} \end{equation} \] where \(\alpha\) is the intercept and \(\{\beta_1, \beta_2, \dots, \beta_N\}\) are the slope parameters for the explanatory variables (\(\{X_1, X_2, \dots, X_N\}\)). However, for a binary response our model should output \(p_i\), the probability that \(Y_i\) takes the value 1 given the \(X_{ij}\) values. The right hand side of our model above will produce values in \(\mathbb{R}=(-\infty, \infty)\), while \(p_i\) must live in \([0,1]\).

Therefore, to use a model like this we need a link function that maps probabilities in \([0,1]\) onto the whole real line \(\mathbb{R}\). Equivalently, we pass the linear predictor through the inverse of that link, a function \(g\) that maps \(\mathbb{R}\) back into \([0,1]\):

\[p_i=g \left( \alpha+ \sum_{j=1}^N \beta_j X_{ij} \right)\]

For logistic regression the link is the logit, \(logit(p)=\ln\frac{p}{1-p}\), so \(g(x)=\frac{1}{1+e^{-x}}\) is the inverse logit.
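Here is a minimal Python sketch of the logit link and its inverse \(g\). The function names are our own. The sign-based branch is one common way to keep `math.exp` from overflowing for large inputs.

```python
import math

def logit(p: float) -> float:
    """Link function: maps a probability in (0, 1) to the real line."""
    return math.log(p / (1.0 - p))

def inv_logit(x: float) -> float:
    """Inverse link g: maps any real number back into [0, 1]."""
    # Branch on the sign so math.exp only ever sees a non-positive argument.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

for x in (-5.0, 0.0, 5.0):
    print(x, round(inv_logit(x), 4))
```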

18.3 A Starting Example

Let's consider the shot logs data set again. We will use the shot distance column SHOT_DIST and the FGM column for a logistic regression. The FGM column is 1 if the shot was made and 0 otherwise, which makes it a perfect candidate for the response variable in a logistic regression. We expect that the farther the shot is from the basket (SHOT_DIST), the less likely it is that the shot is made (FGM=1).

To build this model in R we will use the glm() command and specify that the link function is the logit function.

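\[logit(p)=0.392-0.04 \times SD \implies p=logit^{-1}(0.392-0.04 \times SD)\]

where \(SD\) is the shot distance in feet. So we can find the probability of a shot going in 12 feet from the basket as:

\[p=logit^{-1}(0.392-0.04 \times 12)=logit^{-1}(-0.088)=\frac{1}{1+e^{0.088}}\approx 0.478\]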
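Here is a minimal Python sketch of this calculation using the rounded coefficients quoted above. The helper name `p_make` is our own.

```python
import math

# Fitted coefficients quoted in the text (logit scale).
intercept = 0.392
slope = -0.04  # change in log odds per foot of shot distance

def p_make(shot_dist_ft: float) -> float:
    """Estimated probability that a shot from `shot_dist_ft` feet is made."""
    eta = intercept + slope * shot_dist_ft   # linear predictor (log odds)
    return 1.0 / (1.0 + math.exp(-eta))      # inverse logit

print(round(p_make(12), 3))   # 0.478
```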

Here is a plot of the probability of a shot going in as a function of the distance from the basket using our best fit coefficients.

18.3.1 Confidence Intervals for the Parameters

A major point of this book is that you should never be satisfied with a single number summary in statistics. Rather than just considering a single best fit for our coefficients we should really form some confidence intervals for their values.

As we saw for simple regression we can look at the confidence intervals for our intercepts and slopes using the confint command.

Note that these values are still on the logit-transformed scale.
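Because exp is strictly increasing, you can turn a logit-scale interval for the slope into an interval for the odds ratio by exponentiating its endpoints. The Python sketch below does this. The endpoints are hypothetical and are not the confint output for this model.

```python
import math

# Hypothetical 95% interval for the SHOT_DIST slope on the logit scale
# (illustrative numbers only, not the confint() output for this model).
slope_lo, slope_hi = -0.045, -0.035

# exp() is strictly increasing, so mapping the endpoints maps the whole interval.
or_lo, or_hi = math.exp(slope_lo), math.exp(slope_hi)
print(f"odds ratio per additional foot: ({or_lo:.3f}, {or_hi:.3f})")
```

In the same way, passing an interval for the intercept through the inverse logit gives an interval for the make probability at a shot distance of 0.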

18.4 Equivalence of Logistic Regression and Proportion Tests

Suppose we want to use the categorical variable of the individual player in our analysis. In the interest of keeping our tables and graphs visible we will limit our players to just those who took more than 820 shots in the data set.

| Name | Number of Shots |
|---|---|
| blake griffin | 878 |
| chris paul | 851 |
| damian lillard | 925 |
| gordon hayward | 833 |
| james harden | 1006 |
| klay thompson | 953 |
| kyle lowry | 832 |
| kyrie irving | 919 |
| lamarcus aldridge | 1010 |
| lebron james | 947 |
| mnta ellis | 1004 |
| nikola vucevic | 889 |
| rudy gay | 861 |
| russell westbrook | 943 |
| stephen curry | 941 |
| tyreke evans | 875 |

Now we can get a reduced data set with just these players.

Let's form a logistic regression using just a categorical variable as the explanatory variable: \[ \begin{equation} logit(p)=\beta \, Player \end{equation} \]

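Because this model has no intercept, each player's coefficient is that player's log odds of making a shot. Taking the inverse logit of the coefficients therefore gives the field goal percentages of the players in our data set.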
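With a single categorical predictor and no intercept, the maximum likelihood estimate of each player's coefficient is the log of that player's observed odds of making a shot. Taking the inverse logit therefore returns the raw make rate. The Python sketch below checks this using hypothetical made/attempted counts, not values from the shot logs.

```python
import math

def inv_logit(x: float) -> float:
    """Inverse logit: converts log odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical (made, attempted) counts; not taken from the shot logs data.
shots = {"player_a": (430, 900), "player_b": (410, 1000)}

for name, (made, attempted) in shots.items():
    coef = math.log(made / (attempted - made))   # MLE of this player's log odds
    print(name, round(coef, 4), round(inv_logit(coef), 4), round(made / attempted, 4))
```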

Now suppose we want to see if the players in our data set truly differ in their field goal percentages, or whether the differences we observe could just be caused by random chance. To do this we want to compare a model without the player information with one that includes it. Let's create a null model to compare against our player model.

This null model contains no explanatory variables and takes the form: \[logit(p_i)=\alpha\]

Thus, the shooting percentage is not allowed to vary between the players. We find based on this data an overall field goal percentage of:

Now we may compare logistic regression models using the anova command in R.

The second line contains a p-value of 2.33e-5, telling us to reject the null hypothesis that the two models fit equally well. So knowledge of the player does matter in calculating the probability of a shot being made.

Notice we could have performed this analysis as a proportion test, using the null hypothesis that all players' shooting percentages are the same, \(p_1=p_2=\dots=p_{16}\).

Notice the p-value obtained matches the logistic regression ANOVA almost exactly. Thus, a proportion test can be viewed as a special case of a logistic regression.
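The two p-values agree so closely because both tests use statistics that are asymptotically chi-squared with k − 1 degrees of freedom under the null hypothesis. One is the deviance difference from the nested logistic regressions. The other is the Pearson statistic that, as we understand it, underlies a k-sample prop.test. The Python sketch below computes both statistics for hypothetical counts that are not from the shot logs.

```python
import math

# Hypothetical (made, attempted) counts for three players; not from the shot logs data.
groups = [(430, 900), (410, 1000), (470, 950)]

def loglik(made: int, attempted: int, p: float) -> float:
    """Binomial log-likelihood, omitting the constant binomial coefficient."""
    return made * math.log(p) + (attempted - made) * math.log(1.0 - p)

total_made = sum(m for m, _ in groups)
total_att = sum(n for _, n in groups)
p_pooled = total_made / total_att   # null model: one shared make probability

# Likelihood ratio statistic (difference in deviances): null vs. per-player model.
ll_null = sum(loglik(m, n, p_pooled) for m, n in groups)
ll_player = sum(loglik(m, n, m / n) for m, n in groups)
g2 = 2.0 * (ll_player - ll_null)

# Pearson chi-squared statistic for equal proportions across the k groups.
x2 = 0.0
for m, n in groups:
    for observed, expected in ((m, n * p_pooled), (n - m, n * (1.0 - p_pooled))):
        x2 += (observed - expected) ** 2 / expected

df = len(groups) - 1
print(f"G^2 = {g2:.3f}, X^2 = {x2:.3f}, df = {df}")
```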

18.5 Example: Building a More Accurate Model

Now we can form a model for the shooting percentages using the individual players' data:

\[ logit(p_i)=\alpha+\beta_1 SF+\beta_2 DD+\beta_3 \text{player\_dummy} \]

18.6 Example: Measuring Team Defense Using Logistic Regression

\[ logit(p_i)=\alpha+\beta_1 SD+\beta_2 \text{Team}+\beta_3 (\text{Team}) (SD) \]

Since the defending team is a categorical variable, R will code it as a set of dummy variables when forming the regression. Thus the first level of this variable will not appear in our regression (or, more precisely, it will be absorbed into the intercept \(\alpha\) and slope \(\beta_1\)). Before we run the model we can see which team will be missing.
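The Python sketch below mimics treatment (dummy) coding to show which level disappears into the intercept. It assumes levels are ordered alphabetically, which is R's default for character data in most locales. The team codes and the function name `treatment_dummies` are hypothetical.

```python
def treatment_dummies(values):
    """Treatment coding: one 0/1 column per level except the first (baseline) level.

    Levels are sorted alphabetically; the baseline level gets no column and is
    absorbed into the intercept of the regression.
    """
    levels = sorted(set(values))
    baseline, others = levels[0], levels[1:]
    rows = [[1 if v == level else 0 for level in others] for v in values]
    return baseline, others, rows

# Hypothetical defending-team codes for four shots.
baseline, columns, X = treatment_dummies(["MIA", "ATL", "BOS", "ATL"])
print("baseline:", baseline)   # baseline: ATL
print("columns:", columns)     # columns: ['BOS', 'MIA']
print(X)                       # [[0, 1], [0, 0], [1, 0], [0, 0]]
```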

The below plot shows the expected shooting percentages at each distance for the teams in the data set.



A comprehensive comparison of goodness-of-fit tests for logistic regression models

- Original Paper

- Published: 30 August 2024

- Volume 34, article number 175 (2024)

Huiling Liu (1), Xinmin Li (2), Feifei Chen (3), Wolfgang Härdle (4, 5, 6) & Hua Liang (7)

We introduce a projection-based test for assessing logistic regression models using the empirical residual marked empirical process and suggest a model-based bootstrap procedure to calculate critical values. We comprehensively compare this test and Stute and Zhu’s test with several commonly used goodness-of-fit (GoF) tests for logistic regression models, namely the Hosmer–Lemeshow test, the modified Hosmer–Lemeshow test, the Osius–Rojek test and the Stukel test. The comparison covers type I error control and power in small (\(n=50\)), moderate (\(n=100\)) and large (\(n=500\)) samples. We assess power for two commonly encountered situations: nonlinear and interaction departures from the null hypothesis. All tests except the modified Hosmer–Lemeshow test and the Osius–Rojek test have the correct size at all sample sizes. The projection-based test consistently outperforms its competitors in power. We apply these tests to analyze an AIDS dataset and a cancer dataset. For the former, every test except the projection-based test fails to reject a simple linear function in the logit, even though this function has been shown in the literature to be deficient. For the latter, the Hosmer–Lemeshow test, the modified Hosmer–Lemeshow test and the Osius–Rojek test fail to detect the quadratic form in the logit, which was detected by the Stukel test, Stute and Zhu’s test and the projection-based test.
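As a reference point for the comparison above, here is a minimal Python sketch of the classic Hosmer–Lemeshow statistic. It groups cases by fitted probability and compares observed with expected event counts within each group. This is not the authors' projection-based test. Forming near-equal groups from sorted fitted values is only one of several grouping conventions, since software differs in cut points and tie handling.

```python
def hosmer_lemeshow(y, p_hat, n_groups=10):
    """Hosmer–Lemeshow statistic for a fitted binary-outcome model.

    Cases are sorted by fitted probability and split into `n_groups`
    near-equal-sized groups. Returns (statistic, degrees_of_freedom);
    the statistic is usually compared with a chi-squared distribution
    on n_groups - 2 degrees of freedom.
    """
    pairs = sorted(zip(p_hat, y))  # ascending fitted probability
    n = len(pairs)
    stat = 0.0
    for g in range(n_groups):
        group = pairs[g * n // n_groups:(g + 1) * n // n_groups]
        if not group:
            continue
        size = len(group)
        observed = sum(yi for _, yi in group)   # observed events in the group
        expected = sum(pi for pi, _ in group)   # expected events: sum of fitted probabilities
        p_bar = expected / size
        # Assumes 0 < p_bar < 1; a group with p_bar exactly 0 or 1 would divide by zero.
        stat += (observed - expected) ** 2 / (size * p_bar * (1.0 - p_bar))
    return stat, n_groups - 2


# Tiny hypothetical example: six cases in three groups of two.
stat, df = hosmer_lemeshow([0, 0, 1, 0, 1, 1], [0.1, 0.3, 0.4, 0.6, 0.7, 0.9], n_groups=3)
print(round(stat, 4), df)   # 1.0 1
```

With real data you would use about 10 groups and obtain a p-value from a chi-squared survival function, for example `scipy.stats.chi2.sf(stat, df)`.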


Data availability

No datasets were generated or analysed during the current study.

Chen, K., Hu, I., Ying, Z.: Strong consistency of maximum quasi-likelihood estimators in generalized linear models with fixed and adaptive designs. Ann. Stat. 27 (4), 1155–1163 (1999)

Dardis, C.: LogisticDx: diagnostic tests and plots for logistic regression models. R package version 0.3 (2022)

Dikta, G., Kvesic, M., Schmidt, C.: Bootstrap approximations in model checks for binary data. J. Am. Stat. Assoc. 101 , 521–530 (2006)

Ekanem, I.A., Parkin, D.M.: Five year cancer incidence in Calabar, Nigeria (2009–2013). Cancer Epidemiol. 42 , 167–172 (2016)

Escanciano, J.C.: A consistent diagnostic test for regression models using projections. Economet. Theor. 22 , 1030–1051 (2006)

Härdle, W., Mammen, E., Müller, M.: Testing parametric versus semiparametric modeling in generalized linear models. J. Am. Stat. Assoc. 93 , 1461–1474 (1998)

Harrell, F.E.: rms: Regression modeling strategies. R package version 6.3-0 (2022)

Hosmer, D.W., Hjort, N.L.: Goodness-of-fit processes for logistic regression: simulation results. Stat. Med. 21 (18), 2723–2738 (2002)

Hosmer, D.W., Lemeshow, S.: Goodness of fit tests for the multiple logistic regression model. Commun. Stat. Theory Methods 9 , 1043–1069 (1980)

Hosmer, D.W., Hosmer, T., Le Cessie, S., Lemeshow, S.: A comparison of goodness-of-fit tests for the logistic regression model. Stat. Med. 16 (9), 965–980 (1997)

Hosmer, D., Lemeshow, S., Sturdivant, R.: Applied Logistic Regression. Wiley Series in Probability and Statistics, Wiley, New York (2013)

Jones, L.K.: On a conjecture of Huber concerning the convergence of projection pursuit regression. Ann. Stat. 15 , 880–882 (1987)

Kohl, M.: MKmisc: miscellaneous functions from M. Kohl. R package version 1.8 (2021)

Kosorok, M.R.: Introduction to Empirical Processes and Semiparametric Inference, vol. 61. Springer, New York (2008)

Lee, S.-M., Tran, P.-L., Li, C.-S.: Goodness-of-fit tests for a logistic regression model with missing covariates. Stat. Methods Med. Res. 31 , 1031–1050 (2022)

Lindsey, J.K.: Applying Generalized Linear Models. Springer, Berlin (2000)

McCullagh, P., Nelder, J.A.: Generalized Linear Models, vol. 37. Chapman and Hall (1989)

Nelder, J.A., Wedderburn, R.W.M.: Generalized linear models. J. R. Stat. Soc. Ser. A 135 , 370–384 (1972)

Oguntunde, P.E., Adejumo, A.O., Okagbue, H.I.: Breast cancer patients in Nigeria: data exploration approach. Data Brief 15 , 47 (2017)

Osius, G., Rojek, D.: Normal goodness-of-fit tests for multinomial models with large degrees of freedom. J. Am. Stat. Assoc. 87 (420), 1145–1152 (1992)

Rady, E.-H.A., Abonazel, M.R., Metawe’e, M.H.: A comparison study of goodness of fit tests of logistic regression in R: simulation and application to breast cancer data. Appl. Math. Sci. 7 , 50–59 (2021)

Stukel, T.A.: Generalized logistic models. J. Am. Stat. Assoc. 83 (402), 426–431 (1988)

Stute, W., Zhu, L.-X.: Model checks for generalized linear models. Scand. J. Stat. Theory Appl. 29 , 535–545 (2002)

van der Vaart, A.W., Wellner, J.A.: Weak Convergence and Empirical Processes. Springer (1996)

van Heel, M., Dikta, G., Braekers, R.: Bootstrap based goodness-of-fit tests for binary multivariate regression models. J. Korean Stat. Soc. 51 (1), 308–335 (2022)

Yin, C., Zhao, L., Wei, C.: Asymptotic normality and strong consistency of maximum quasi-likelihood estimates in generalized linear models. Sci. China Ser. A Math. 49 , 145–157 (2006)


Acknowledgements

Li’s research was partially supported by NNSFC grant 11871294. Härdle gratefully acknowledges support through the European Cooperation in Science & Technology COST Action grant CA19130 - Fintech and Artificial Intelligence in Finance - Towards a transparent financial industry; the project “IDA Institute of Digital Assets”, CF166/15.11.2022, contract number CN760046/23.05.2024 financed under Romania's National Recovery and Resilience Plan, Apel nr. PNRR-III-C9-2022-I8; and the Marie Skłodowska-Curie Actions under the European Union’s Horizon Europe research and innovation program for the Industrial Doctoral Network on Digital Finance, acronym DIGITAL, Project No. 101119635

Author information

Authors and affiliations.

Department of Statistics, South China University of Technology, Guangzhou, China

Huiling Liu

School of Mathematics and Statistics, Qingdao University, Shandong, 266071, China

Center for Statistics and Data Science, Beijing Normal University, Zhuhai, 519087, China

Feifei Chen

BRC Blockchain Research Center, Humboldt-Universität zu Berlin, 10178, Berlin, Germany

Wolfgang Härdle

Dept Information Management and Finance, National Yang Ming Chiao Tung U, Hsinchu, Taiwan

IDA Institute Digital Assets, Bucharest University of Economic Studies, Bucharest, Romania

Department of Statistics, George Washington University, Washington, DC, 20052, USA


Contributions

LHL, LXM and LH wrote the main manuscript text; LHL and CFF wrote the programs; HW commented on the methodological section. All authors reviewed the manuscript.

Corresponding author

Correspondence to Hua Liang .

Ethics declarations

Competing interests.

The authors declare no competing interests.


About this article

Liu, H., Li, X., Chen, F. et al. A comprehensive comparison of goodness-of-fit tests for logistic regression models. Stat Comput 34, 175 (2024). https://doi.org/10.1007/s11222-024-10487-5


Received : 02 December 2023

Accepted : 19 August 2024

Published : 30 August 2024

DOI : https://doi.org/10.1007/s11222-024-10487-5


- Consistent test

- Model based bootstrap (MBB)

- Residual marked empirical process (RMEP)


Logistic regression

This page offers all the basic information you need about logistic regression analysis. It is part of Statkat’s wiki module, containing similarly structured info pages for many different statistical methods. The info pages give information about null and alternative hypotheses, assumptions, test statistics and confidence intervals, how to find p values, SPSS how-to’s and more.


- 1. When to use

- 2. Null hypothesis

- 3. Alternative hypothesis

- 4. Assumptions

- 5. Test statistic

- 6. Sampling distribution

- 7. Significant?

- 8. Wald-type approximate $C\%$ confidence interval for $\beta_k$

- 9. Goodness of fit measure $R^2_L$

- 10. Example context

When to use?

Note that theoretically, it is always possible to 'downgrade' the measurement level of a variable. For instance, a test that can be performed on a variable of ordinal measurement level can also be performed on a variable of interval measurement level, in which case the interval variable is downgraded to an ordinal variable. However, downgrading the measurement level of variables is generally a bad idea since it means you are throwing away important information in your data (an exception is the downgrade from ratio to interval level, which is generally irrelevant in data analysis).


Null hypothesis

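Logistic regression analysis tests the following null hypotheses ($H_0$):

- Model chi-squared test for the complete regression model: $H_0$: $\beta_1 = \beta_2 = \ldots = \beta_K = 0$

- Wald test and likelihood ratio chi-squared test for an individual regression coefficient $\beta_k$: $H_0$: $\beta_k = 0$, or in terms of the odds ratio, $H_0$: $e^{\beta_k} = 1$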

Alternative hypothesis

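Logistic regression analysis tests the above null hypotheses against the following alternative hypotheses ($H_1$ or $H_a$):

- Model chi-squared test for the complete regression model: $H_1$: not all population regression coefficients are 0

- Wald test for an individual regression coefficient $\beta_k$: $H_1$: $\beta_k \neq 0$, or in terms of the odds ratio, $H_1$: $e^{\beta_k} \neq 1$. If Wald is defined as $\dfrac{b_k}{SE_{b_k}}$ (see 'Test statistic'), one-sided alternatives can also be tested: $H_1$ right-sided: $\beta_k > 0$; $H_1$ left-sided: $\beta_k < 0$

- Likelihood ratio chi-squared test for an individual regression coefficient $\beta_k$: $H_1$: $\beta_k \neq 0$, or in terms of the odds ratio, $H_1$: $e^{\beta_k} \neq 1$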

Assumptions

Statistical tests always make assumptions about the sampling procedure that was used to obtain the sample data. So called parametric tests also make assumptions about how data are distributed in the population. Non-parametric tests are more 'robust' and make no or less strict assumptions about population distributions, but are generally less powerful. Violation of assumptions may render the outcome of statistical tests useless, although violation of some assumptions (e.g. independence assumptions) are generally more problematic than violation of other assumptions (e.g. normality assumptions in combination with large samples).

Logistic regression analysis makes the following assumptions:

- In the population, the relationship between the independent variables and the log odds $\ln (\frac{\pi_{y=1}}{1 - \pi_{y=1}})$ is linear

- The residuals are independent of one another

- Variables are measured without error

- No severe multicollinearity: the independent variables should not be too strongly correlated with one another

Test statistic

Logistic regression analysis is based on the following test statistics:

- Model chi-squared test for the complete regression model: $X^2 = D_{null} - D_K = \mbox{null deviance} - \mbox{model deviance}$. $D_{null}$, the null deviance, is conceptually similar to the total variance of the dependent variable in OLS regression analysis. $D_K$, the model deviance, is conceptually similar to the residual variance in OLS regression analysis.

- Wald test for an individual regression coefficient $\beta_k$: Wald $= \dfrac{b_k^2}{SE^2_{b_k}}$ or Wald $= \dfrac{b_k}{SE_{b_k}}$ (both definitions are in use)

- Likelihood ratio chi-squared test for an individual regression coefficient $\beta_k$: $X^2 = D_{K-1} - D_K$. $D_{K-1}$ is the model deviance where independent variable $k$ is excluded from the model; $D_K$ is the model deviance where independent variable $k$ is included.
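For a binary outcome, the deviance of a model is $-2$ times its log-likelihood, so the model chi-squared statistic can be computed directly from fitted probabilities. In the Python sketch below, both the outcomes and the model's fitted probabilities are hypothetical. They do not come from a maximum likelihood fit, so $X^2$ here only illustrates the arithmetic.

```python
import math

def deviance(y, p):
    """Deviance of a binary-outcome model: -2 * log-likelihood of fitted probabilities p."""
    return -2.0 * sum(math.log(pi) if yi == 1 else math.log(1.0 - pi) for yi, pi in zip(y, p))

y = [0, 0, 1, 0, 1, 1, 0, 1]
p_model = [0.2, 0.3, 0.7, 0.4, 0.6, 0.8, 0.1, 0.9]   # hypothetical fitted values

# The null (intercept-only) model predicts the overall event rate for every case.
p_null = [sum(y) / len(y)] * len(y)

d_null = deviance(y, p_null)
d_model = deviance(y, p_model)
x2 = d_null - d_model
print(f"D_null = {d_null:.3f}, D_K = {d_model:.3f}, X^2 = {x2:.3f}")
```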

Sampling distribution

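- Model chi-squared test for the complete regression model: approximately the chi-squared distribution with $K$ (number of independent variables) degrees of freedom

- Wald test for an individual regression coefficient $\beta_k$: if defined as Wald $= \dfrac{b_k^2}{SE^2_{b_k}}$, approximately the chi-squared distribution with 1 degree of freedom; if defined as Wald $= \dfrac{b_k}{SE_{b_k}}$, approximately the standard normal distribution

- Likelihood ratio chi-squared test for an individual regression coefficient $\beta_k$: approximately the chi-squared distribution with 1 degree of freedom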

Significant?

This is how you find out if your test result is significant:

- Model chi-squared test and likelihood ratio chi-squared test: check if $X^2$ observed in the sample is equal to or larger than the critical value $X^{2*}$, or find the $p$ value corresponding to the observed $X^2$ and check if it is equal to or smaller than $\alpha$

- Wald test: if defined as Wald $= \dfrac{b_k^2}{SE^2_{b_k}}$, use the same procedure as for the chi-squared tests, since Wald can be interpreted as $X^2$; if defined as Wald $= \dfrac{b_k}{SE_{b_k}}$, use the same procedure as for any $z$ test, since Wald can be interpreted as $z$
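Here is a minimal Python sketch showing that the two Wald definitions give the same two-sided $p$ value. The reason is that the square of a standard normal $z$ follows a chi-squared distribution with 1 degree of freedom. The estimate and standard error are hypothetical.

```python
import math

def wald_tests(b: float, se: float):
    """Return (z, two-sided p from z, Wald chi-squared, its p on 1 df)."""
    z = b / se
    p_z = math.erfc(abs(z) / math.sqrt(2.0))   # 2 * P(Z > |z|) for standard normal Z
    chi2 = z ** 2
    # For 1 df, P(chi2_1 > w) = P(|Z| > sqrt(w)), so both p-values coincide.
    p_chi2 = math.erfc(math.sqrt(chi2 / 2.0))
    return z, p_z, chi2, p_chi2

# Hypothetical coefficient estimate and standard error.
z, p_z, chi2, p_chi2 = wald_tests(b=0.8, se=0.3)
print(f"z = {z:.3f}, p = {p_z:.4f}; Wald = {chi2:.3f}, p = {p_chi2:.4f}")
```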

Wald-type approximate $C\%$ confidence interval for $\beta_k$
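The Wald-type approximate $C\%$ confidence interval is $b_k \pm z^* \times SE_{b_k}$, where $z^*$ is the value under the standard normal curve with an area of $C\%$ between $-z^*$ and $z^*$ (for example, $z^* \approx 1.96$ for $C = 95$). Exponentiating both endpoints gives an interval for the odds ratio $e^{\beta_k}$. Here is a minimal Python sketch with a hypothetical coefficient and standard error.

```python
import math
from statistics import NormalDist

def wald_ci(b: float, se: float, level: float = 95.0):
    """Wald-type approximate interval b ± z* × SE for a logistic regression coefficient."""
    z_star = NormalDist().inv_cdf(0.5 + level / 200.0)   # area level/100 between -z* and z*
    lo, hi = b - z_star * se, b + z_star * se
    return (lo, hi), (math.exp(lo), math.exp(hi))        # log-odds scale, odds-ratio scale

# Hypothetical coefficient and standard error.
(lo, hi), (or_lo, or_hi) = wald_ci(b=0.8, se=0.3)
print(f"beta_k: ({lo:.3f}, {hi:.3f}); odds ratio: ({or_lo:.3f}, {or_hi:.3f})")
```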

Goodness of fit measure $R^2_L$

Example context

Logistic regression analysis could for instance be used to answer the question:

How to perform a logistic regression analysis in SPSS:

- Put your dependent variable in the box below Dependent and your independent (predictor) variables in the box below Covariate(s)

How to perform a logistic regression analysis in jamovi:

- Put your dependent variable in the box below Dependent Variable and your independent variables of interval/ratio level in the box below Covariates

- If you also have code (dummy) variables as independent variables, you can put these in the box below Covariates as well

- Instead of transforming your categorical independent variable(s) into code variables, you can also put the untransformed categorical independent variables in the box below Factors. Jamovi will then make the code variables for you 'behind the scenes'


Statistical Methods and Data Analytics

Logistic Regression | SPSS Annotated Output

This page shows an example of logistic regression with footnotes explaining the output. These data were collected on 200 high school students and are scores on various tests, including science, math, reading and social studies (socst). The variable female is a dichotomous variable coded 1 if the student was female and 0 if male.

In the syntax below, the get file command is used to load the hsb2 data into SPSS. In quotes, you need to specify where the data file is located on your computer. Remember that you need to use the .sav extension and that you need to end the command with a period. By default, SPSS does a listwise deletion of missing values. This means that only cases with non-missing values for the dependent as well as all independent variables will be used in the analysis.

Because we do not have a suitable dichotomous variable to use as our dependent variable, we will create one (which we will call honcomp , for honors composition) based on the continuous variable write . We do not advocate making dichotomous variables out of continuous variables; rather, we do this here only for purposes of this illustration.

Use the keyword `with` after the dependent variable to indicate all of the variables (both continuous and categorical) that you want included in the model. If you have a categorical variable with more than two levels, for example, a three-level ses variable (low, medium and high), you can use the categorical subcommand to tell SPSS to create the dummy variables necessary to include the variable in the logistic regression, as shown below. You can use the keyword `by` to create interaction terms. For example, the command `logistic regression honcomp with read female read by female.` will create a model with the main effects of read and female, as well as the interaction of read by female.

We will start by showing the SPSS commands to open the data file, creating the dichotomous dependent variable, and then running the logistic regression. We will show the entire output, and then break up the output with explanation.

Logistic Regression

Block 0: Beginning Block

Block 1: Method = Enter

This part of the output tells you about the cases that were included and excluded from the analysis, the coding of the dependent variable, and coding of any categorical variables listed on the categorical subcommand. (Note: You will not get the third table (“Categorical Variable Codings”) if you do not specify the categorical subcommand.)

b. N – This is the number of cases in each category (e.g., included in the analysis, missing, total).

c. Percent – This is the percent of cases in each category (e.g., included in the analysis, missing, total).

d. Included in Analysis – This row gives the number and percent of cases that were included in the analysis. Because we have no missing data in our example data set, this also corresponds to the total number of cases.

e. Missing Cases – This row gives the number and percent of missing cases. By default, SPSS logistic regression does a listwise deletion of missing data. This means that if there is a missing value for any variable in the model, the entire case will be excluded from the analysis.

f. Total – This is the sum of the cases that were included in the analysis and the missing cases. In our example, 200 + 0 = 200.

Unselected Cases – If the select subcommand is used and a logical condition is specified with a categorical variable in the dataset, then the number of unselected cases would be listed here. Using the select subcommand is different from using the filter command. When the select subcommand is used, diagnostic and residual values are computed for all cases in the data. If the filter command is used to select cases to be used in the analysis, residual and diagnostic values are not computed for unselected cases.

This part of the output describes a “null model”, which is a model with no predictors and just the intercept. This is why you will see all of the variables that you put into the model in the table titled “Variables not in the Equation”.

c. Step 0 – SPSS allows you to have different steps in your logistic regression model. The difference between the steps is the predictors that are included. This is similar to blocking variables into groups and then entering them into the equation one group at a time. By default, SPSS logistic regression is run in two steps. The first step, called Step 0, includes no predictors and just the intercept. Often, this model is not interesting to researchers.

d. Observed – This indicates the number of 0’s and 1’s that are observed in the dependent variable.

e. Predicted – In this null model, SPSS has predicted that all cases are 0 on the dependent variable.

f. Overall Percentage – This gives the percent of cases for which the dependent variable was correctly predicted given the model. In this part of the output, this is the null model: 73.5 = 147/200.

g. B – This is the coefficient for the constant (also called the “intercept”) in the null model.

h. S.E. – This is the standard error around the coefficient for the constant.

i. Wald and Sig. – This is the Wald chi-square test that tests the null hypothesis that the constant equals 0. This hypothesis is rejected because the p-value (listed in the column called “Sig.”) is smaller than the significance level of .05 (or .01). Hence, we conclude that the constant is not 0. Usually, this finding is not of interest to researchers.

j. df – This is the degrees of freedom for the Wald chi-square test. There is only one degree of freedom because only one parameter is being tested, namely the constant.

k. Exp(B) – This is the exponentiation of the B coefficient, which is an odds ratio. This value is given by default because odds ratios can be easier to interpret than the coefficient, which is in log-odds units. This is the odds: 53/147 = .361.

l. Score and Sig. – This is a Score test that is used to predict whether or not an independent variable would be significant in the model. Looking at the p-values (located in the column labeled “Sig.”), we can see that each of the predictors would be statistically significant except the first dummy for ses .

m. df – This column lists the degrees of freedom for each variable. Each variable to be entered into the model, e.g., read , science , ses(1) and ses(2) , has one degree of freedom, which leads to the total of four shown at the bottom of the column. The variable ses is listed here only to show that if the dummy variables that represent ses were tested simultaneously, the variable ses would be statistically significant.

n. Overall Statistics – This shows the result of including all of the predictors into the model.
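In the intercept-only model of Step 0, the maximum likelihood estimate of the constant is the log of the observed odds. B, Exp(B) and the 73.5 overall percentage can therefore all be recovered from the 53/147 split quoted above. The Python sketch below does this. The values are computed here rather than copied from the SPSS tables, so the last digit may differ from SPSS's rounding.

```python
import math

n_ones, n_zeros = 53, 147                          # observed honcomp = 1 and honcomp = 0
b0 = math.log(n_ones / n_zeros)                    # Step 0 constant B: log of the observed odds
exp_b0 = math.exp(b0)                              # Exp(B): the odds themselves
pct_correct = 100 * n_zeros / (n_ones + n_zeros)   # null model predicts 0 for every case

print(round(b0, 3), round(exp_b0, 3), pct_correct)   # -1.02 0.361 73.5
```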

The section contains what is frequently the most interesting part of the output: the overall test of the model (in the “Omnibus Tests of Model Coefficients” table) and the coefficients and odds ratios (in the “Variables in the Equation” table).
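Several numbers in this part of the output can be reproduced from others. The Python sketch below rebuilds the intercept-only log-likelihood from the 53/147 split. It then recovers the full model's log-likelihood from the model chi-square of 65.588 on 4 degrees of freedom, using chi-square = 2 × (LL_model − LL_null). From these it computes the p-value and three pseudo R-squares. The closed-form chi-squared tail used here only works for even degrees of freedom. The pseudo R-square formulas are the usual textbook definitions; we have not checked them against SPSS internals.

```python
import math

n, n_ones, n_zeros = 200, 53, 147      # cases and honcomp counts from the output
model_chi2, model_df = 65.588, 4       # model chi-square and its degrees of freedom

def chi2_sf_even_df(x: float, df: int) -> float:
    """P(X > x) for chi-squared X with even df: exp(-x/2) * sum_{i<df/2} (x/2)**i / i!."""
    if df <= 0 or df % 2:
        raise ValueError("closed form only covers positive even degrees of freedom")
    half = x / 2.0
    return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))

# Log-likelihoods: intercept-only model from the 53/147 split; full model via chi2 = 2 * (ll_model - ll_null).
ll_null = n_ones * math.log(n_ones / n) + n_zeros * math.log(n_zeros / n)
ll_model = ll_null + model_chi2 / 2.0

p_value = chi2_sf_even_df(model_chi2, model_df)
cox_snell = 1.0 - math.exp(2.0 * (ll_null - ll_model) / n)
nagelkerke = cox_snell / (1.0 - math.exp(2.0 * ll_null / n))   # rescaled so its maximum is 1
mcfadden = 1.0 - ll_model / ll_null

print(f"-2LL null = {-2 * ll_null:.3f}, -2LL model = {-2 * ll_model:.3f}")
print(f"p = {p_value:.1e}")   # far below .0005, which SPSS displays as .000
print(f"Cox & Snell = {cox_snell:.3f}, Nagelkerke = {nagelkerke:.3f}, McFadden = {mcfadden:.3f}")
```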

b. Step 1 – This is the first step (or model) with predictors in it. In this case, it is the full model that we specified in the logistic regression command. You can have more steps if you do stepwise or use blocking of variables.

c. Chi-square and Sig. – This is the chi-square statistic and its significance level. In this example, the statistics for the Step, Model and Block are the same because we have not used stepwise logistic regression or blocking. The value given in the Sig. column is the probability of obtaining a chi-square statistic at least as large as the one observed if the null hypothesis is true. In other words, this is the probability of obtaining a chi-square statistic of 65.588 or larger if there is in fact no effect of the independent variables, taken together, on the dependent variable. This is, of course, the p-value, which is compared to a significance level, perhaps .05 or .01, to determine if the overall model is statistically significant. In this case, the model is statistically significant because the p-value, displayed as .000, is less than .0005.

d. df – This is the number of degrees of freedom for the model. There is one degree of freedom for each predictor in the model. In this example, we have four predictors: read, science and two dummies for ses (because there are three levels of ses).

e. -2 Log likelihood – This is the -2 log likelihood for the final model. By itself, this number is not very informative. However, it can be used to compare nested (reduced) models.

f. Cox & Snell R Square and Nagelkerke R Square – These are pseudo R-squares. Logistic regression does not have an equivalent to the R-squared that is found in OLS regression; however, many people have tried to come up with one. There are a wide variety of pseudo-R-square statistics (these are only two of them). Because this statistic does not mean what R-squared means in OLS regression (the proportion of variance explained by the predictors), we suggest interpreting this statistic with great caution.

g. Observed – This indicates the number of 0’s and 1’s that are observed in the dependent variable.

h. Predicted – These are the predicted values of the dependent variable based on the full logistic regression model. This table shows how many cases are correctly predicted (132 cases are observed to be 0 and are correctly predicted to be 0; 27 cases are observed to be 1 and are correctly predicted to be 1), and how many cases are not correctly predicted (15 cases are observed to be 0 but are predicted to be 1; 26 cases are observed to be 1 but are predicted to be 0).

i. Overall Percentage – This gives the overall percent of cases that are correctly predicted by the model (in this case, the full model that we specified). As you can see, this percentage has increased from 73.5 for the null model to 79.5 for the full model.

j. B – These are the values for the logistic regression equation for predicting the dependent variable from the independent variables. They are in log-odds units. Similar to OLS regression, the prediction equation is

log(p/(1-p)) = b0 + b1*x1 + b2*x2 + b3*x3 + b4*x4

where p is the probability of being in honors composition. Expressed in terms of the variables used in this example, the logistic regression equation is

log(p/(1-p)) = -9.561 + 0.098*read + 0.066*science + 0.058*ses(1) - 1.013*ses(2)

These estimates tell you about the relationship between the independent variables and the dependent variable, where the dependent variable is on the logit scale. Each estimate gives the amount of increase (or decrease, if the sign of the coefficient is negative) in the predicted log odds of honcomp = 1 for a one-unit increase in the predictor, holding all other predictors constant. Note: For independent variables that are not significant, the coefficients are not significantly different from 0, which should be taken into account when interpreting them (see the columns labeled Wald and Sig. for tests of whether the coefficients are statistically significant). Because these coefficients are in log-odds units, they are often difficult to interpret, so they are often converted into odds ratios. You can do this by hand by exponentiating the coefficient, or by looking at the right-most column in the Variables in the Equation table, labeled “Exp(B)”.

read – For every one-unit increase in reading score (that is, for every additional point on the reading test), we expect a 0.098 increase in the log-odds of honcomp, holding all other independent variables constant.

science – For every one-unit increase in science score, we expect a 0.066 increase in the log-odds of honcomp, holding all other independent variables constant.

ses – This row tells you whether the overall variable ses is statistically significant. No coefficient is listed, because ses itself is not a variable in the model; rather, the dummy variables that code for ses are in the equation, and those have coefficients. As you can see in this example, the coefficient for one of the dummies is statistically significant while the other is not. The statistic on this row tests whether the dummies that represent ses, taken together, are statistically significant. Because there are two dummies, this test has two degrees of freedom. This is equivalent to using the test statement in SAS or the test command in Stata.

ses(1) – The reference group is level 3 (see the Categorical Variables Codings table above), so this coefficient represents the difference between level 1 of ses and level 3. Note: The number in parentheses only indicates the number of the dummy variable; it does not tell you which levels of the categorical variable are being compared. For example, if you changed the reference group from level 3 to level 1, the labeling of the dummy variables in the output would not change.

ses(2) – The reference group is again level 3, so this coefficient represents the difference between level 2 of ses and level 3. The same caution about the number in parentheses applies.

constant – This is the expected value of the log-odds of honcomp when all of the predictor variables equal zero. In most cases, this is not interesting. Also, zero is often not a realistic value for a variable to take.
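To see why each dummy coefficient is a comparison with the reference level, here is a small sketch. `ses_dummies` and `ses_log_odds_shift` are hypothetical helpers that mimic the indicator coding described above (level 3 as the reference); the sketch also shows that comparing levels 1 and 2 directly requires the difference of the two coefficients.

```python
# Rounded ses coefficients from the fitted equation.
B_SES1, B_SES2 = 0.058, -1.013

def ses_dummies(level):
    """Hypothetical indicator coding: (ses(1), ses(2)), level 3 = (0, 0)."""
    return (1 if level == 1 else 0, 1 if level == 2 else 0)

def ses_log_odds_shift(level):
    """Contribution of ses to the log-odds, relative to level 3."""
    d1, d2 = ses_dummies(level)
    return B_SES1 * d1 + B_SES2 * d2

# With read and science held fixed, level 2 vs level 3 differs by the ses(2)
# coefficient; level 1 vs level 2 differs by B_SES1 - B_SES2.
print(ses_log_odds_shift(2) - ses_log_odds_shift(3))
print(ses_log_odds_shift(1) - ses_log_odds_shift(2))
```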

k. S.E. – These are the standard errors associated with the coefficients. The standard error is used for testing whether the parameter is significantly different from 0: dividing the parameter estimate by its standard error gives a z-value, whose square is the Wald chi-square shown in the next column. The standard errors can also be used to form a confidence interval for the parameter.
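A sketch of the arithmetic behind these columns, with a made-up estimate and standard error: z is B divided by S.E., squaring z gives the Wald chi-square, and B ± 1.96 S.E. gives an approximate 95% confidence interval on the log-odds scale. Exponentiating the endpoints gives an interval on the odds-ratio scale.

```python
import math

def wald_summary(b, se, z_crit=1.96):
    """z = B/SE, Wald chi-square = z**2, and an approximate 95% CI for B."""
    z = b / se
    return z, z ** 2, (b - z_crit * se, b + z_crit * se)

# Hypothetical estimate and standard error (not from the output above).
z, wald, (lo, hi) = wald_summary(0.5, 0.2)
or_ci = (math.exp(lo), math.exp(hi))   # same interval on the odds-ratio scale
print(z, wald, (round(lo, 3), round(hi, 3)), tuple(round(v, 3) for v in or_ci))
```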

l. Wald and Sig. – These columns provide the Wald chi-square value and 2-tailed p-value used in testing the null hypothesis that the coefficient (parameter) is 0. If you use a 2-tailed test, compare each p-value to your preselected value of alpha; coefficients with p-values less than alpha are statistically significant. For example, if you chose alpha to be 0.05, coefficients with a p-value less than 0.05 would be statistically significant (i.e., you can reject the null hypothesis and say that the coefficient is significantly different from 0). If you use a 1-tailed test (i.e., you predict that the parameter will go in a particular direction), you can divide the p-value by 2 before comparing it to your preselected alpha level, provided the estimate has the predicted sign. For the variable read, the p-value is displayed as .000 (that is, less than .0005), so the null hypothesis that the coefficient equals 0 would be rejected. For the variable science, the p-value is .015, so the null hypothesis that the coefficient equals 0 would be rejected. For the variable ses, the p-value is .035, so the null hypothesis that both ses dummy coefficients equal 0 would be rejected. Because the test of the overall variable is statistically significant, you can look at the one-degree-of-freedom tests for the dummies ses(1) and ses(2). Level 1 of ses is not statistically significantly different from level 3 (the omitted, or reference, category), but level 2 is statistically significantly different from level 3, with a p-value of .022.
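The Sig. column can be reproduced from the Wald chi-square and its df. The sketch below uses closed-form chi-square tail probabilities for 1 df (a single coefficient) and 2 df (such as the overall ses test); `chi2_sf` and `wald_p` are names chosen here for illustration, and the sketch deliberately handles only those two cases.

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with df = 1 or 2."""
    if df == 1:
        return math.erfc(math.sqrt(x / 2))   # equals the two-sided normal p for z = sqrt(x)
    if df == 2:
        return math.exp(-x / 2)
    raise ValueError("this sketch only handles df = 1 or df = 2")

def wald_p(wald, df=1, one_tailed=False):
    """p-value for a Wald chi-square; halving is only valid for 1 df with the predicted sign."""
    p = chi2_sf(wald, df)
    if one_tailed:
        if df != 1:
            raise ValueError("a one-tailed test only makes sense for a single coefficient")
        return p / 2
    return p

# The .05 critical values for 1 and 2 df should give p of about .05.
print(round(wald_p(3.841459, df=1), 4), round(wald_p(5.991465, df=2), 4))
```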

m. df – This column lists the degrees of freedom for each of the tests of the coefficients.

n. Exp(B) – These are the odds ratios for the predictors: the exponentiated coefficients. There is no odds ratio for the variable ses, because ses itself (a variable with 2 degrees of freedom) was not entered into the logistic regression equation; only its two dummy variables were.
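Exponentiating the rounded coefficients from the equation above gives approximate Exp(B) values. SPSS works from unrounded estimates, so the last decimal place may differ from the printed table.

```python
import math

# Rounded coefficients (B); exponentiating gives the odds ratios, Exp(B).
coefs = {"read": 0.098, "science": 0.066, "ses(1)": 0.058, "ses(2)": -1.013}
odds_ratios = {name: math.exp(b) for name, b in coefs.items()}
for name, value in odds_ratios.items():
    print(name, round(value, 3))
```

For example, each additional point on the reading test multiplies the odds of honcomp = 1 by about 1.10, holding the other predictors constant.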

Odds Ratios

In this next example, we illustrate the interpretation of odds ratios. We simplify the model so that it has only one predictor, the binary variable female. Before running the logistic regression, we use the crosstabs command to obtain a crosstabulation of the two variables.

If we divide the number of males who are in honors composition, 18, by the number of males who are not, 73, we get the odds of being in honors composition for males: 18/73 ≈ .247. Doing the same for females gives 35/74 ≈ .473. The odds ratio is the ratio of these two odds; computed from the unrounded odds, it is 1.918. As we can see in the output below, this is exactly the odds ratio we obtain from the logistic regression. The thing to remember is to put the group coded as 1 over the group coded as 0 at both steps: the odds are honcomp=1 over honcomp=0 for both males and females, and the odds ratio is the odds for females over the odds for males, because females are coded as 1.
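The same arithmetic in Python, using the crosstab counts above. Because a logistic regression with a single binary predictor reproduces the observed proportion in each group, its coefficient for female should be the log of this odds ratio, and its constant the log odds for males (the group coded 0).

```python
import math

# Crosstab counts: honcomp = 1 and honcomp = 0 within each sex.
male_yes, male_no = 18, 73        # female = 0
female_yes, female_no = 35, 74    # female = 1

odds_male = male_yes / male_no        # odds of honcomp = 1 for males
odds_female = female_yes / female_no  # odds of honcomp = 1 for females
odds_ratio = odds_female / odds_male  # group coded 1 over group coded 0

# Implied logistic regression estimates for honcomp on female alone.
b_constant = math.log(odds_male)   # log odds for the reference group (males)
b_female = math.log(odds_ratio)    # so Exp(B) for female equals odds_ratio
print(round(odds_male, 3), round(odds_female, 3), round(odds_ratio, 3))
print(round(b_constant, 3), round(b_female, 3))
```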

You can get the odds ratio from the crosstabs command by using the /statistics risk subcommand, as shown below.

As you can see in the output below, we get the same odds ratio when we run the logistic regression. (NOTE: Although it is equivalent to the odds ratio estimated from the logistic regression, the odds ratio in the “Risk Estimate” table is calculated as the odds of honcomp=0 for males over the odds of honcomp=0 for females, which explains the confusing row heading “Odds Ratio for female (.00/1.00)”.) For the odds ratio to be statistically significant at the .05 level, its 95% confidence interval should not include 1. When we were considering the coefficients, we did not want the confidence interval to include 0. Because exp(0) = 1, these are two ways of saying the same thing. As you can see, the 95% confidence interval includes 1; hence, the odds ratio is not statistically significant. Because the lower bound of the 95% confidence interval is so close to 1, the p-value is very close to .05. We can use the /print = ci(95) subcommand to include the 95% confidence intervals in our output.
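A 95% confidence interval for this odds ratio can be sketched from the crosstab counts with the large-sample (Woolf) standard error of the log odds ratio, which for a single binary predictor equals the standard error of the logistic coefficient. This is a reconstruction from the counts rather than SPSS output, so agreement with the printed interval is expected only up to rounding.

```python
import math

a, b = 35, 74   # females (female = 1): honcomp = 1, honcomp = 0
c, d = 18, 73   # males   (female = 0): honcomp = 1, honcomp = 0

log_or = math.log((a / b) / (c / d))            # B for female
se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)   # Woolf S.E. of the log odds ratio
z = 1.96                                        # normal quantile for a 95% interval
ci_low = math.exp(log_or - z * se)
ci_high = math.exp(log_or + z * se)
print(round(ci_low, 3), round(ci_high, 3))
```

The lower limit lands just below 1, which is the situation described above: the interval includes 1, and the p-value sits close to .05.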

There are a few other things to note about the output below. The first is that although we have only one predictor variable, the test for the odds ratio does not match with the overall test of the model. This is because the test of the coefficient is a Wald chi-square test, while the test of the overall model is a likelihood ratio chi-square test. While these two types of chi-square tests are asymptotically equivalent, in small samples they can differ, as they do here. Also, we have the unfortunate situation in which the results of the two tests give different conclusions. This does not happen very often. In a situation like this, it is difficult to know what to conclude. One might consider the power, or one might decide if an odds ratio of this magnitude is important from a clinical or practical standpoint.
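Both chi-square statistics can be recomputed from the crosstab counts, which makes the disagreement concrete. The sketch computes the Wald chi-square, (B/S.E.)², and the likelihood ratio chi-square, 2 × (log likelihood of the model with female minus log likelihood of the null model); it is a reconstruction from the counts, not a copy of the SPSS output.

```python
import math

a, b = 35, 74   # females: honcomp = 1, honcomp = 0
c, d = 18, 73   # males:   honcomp = 1, honcomp = 0

def loglik(events, nonevents):
    """Binomial log likelihood at the MLE p = events / n for one group."""
    n = events + nonevents
    return events * math.log(events / n) + nonevents * math.log(nonevents / n)

def p_1df(x):
    """Upper-tail probability of a chi-square with 1 df."""
    return math.erfc(math.sqrt(x / 2))

# Wald chi-square for the female coefficient: (B / S.E.)^2.
beta = math.log((a / b) / (c / d))
se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
wald = (beta / se) ** 2

# Likelihood ratio chi-square: the model with female fits each sex separately,
# the null model pools both sexes.
ll_full = loglik(a, b) + loglik(c, d)
ll_null = loglik(a + c, b + d)
lr = 2 * (ll_full - ll_null)

print("Wald", round(wald, 3), round(p_1df(wald), 4))
print("LR  ", round(lr, 3), round(p_1df(lr), 4))
```

With these counts the Wald p-value comes out at about .051 and the likelihood ratio p-value at about .047, so the two tests fall on opposite sides of .05.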

For more information on interpreting odds ratios, please see the FAQ How do I interpret odds ratios in logistic regression? Although this FAQ uses Stata for purposes of illustration, the concepts and explanations are useful.


- © 2024 UC REGENTS


Wald test for logistic regression

As far as I understand, the Wald test in the context of logistic regression is used to determine whether a certain predictor variable $X$ is significant or not. It tests the null hypothesis that the corresponding coefficient is zero.

The test consists of dividing the value of the coefficient by its standard error $\sigma$.

What I am confused about is that $X/\sigma$ is also known as a Z-score and indicates how likely it is that a given observation comes from the normal distribution (with mean zero).


- Possible duplicate of Wald test in regression (OLS and GLMs): t- vs. z-distribution (comment by Firebug, Nov 27, 2017 at 21:50)

- Perhaps it could be the other way around, though, as the answer in this one is more developed. (comment by Firebug, Nov 27, 2017 at 21:51)

The estimates of the coefficients and the intercept in logistic regression (and in any GLM) are found via maximum-likelihood estimation (MLE). These estimates are denoted with a hat over the parameter, something like $\hat{\theta}$. The hypothesized value of the parameter is denoted $\theta_{0}$; this is usually 0, because we want to test whether the coefficient differs from 0. From the asymptotic theory of MLE, we know that, under the null hypothesis, the difference between $\hat{\theta}$ and $\theta_{0}$ is approximately normally distributed with mean 0 (details can be found in any mathematical statistics book, such as Larry Wasserman's All of Statistics). Recall that standard errors are nothing other than standard deviations of statistics (Sokal and Rohlf write in their book Biometry: "a statistic is any one of many computed or estimated statistical quantities", e.g. the mean, median, standard deviation, correlation coefficient, regression coefficient, ...). Dividing a normally distributed variable with mean 0 and standard deviation $\sigma$ by its standard deviation yields a standard normal variable, with mean 0 and standard deviation 1. Writing $\beta$ for a regression coefficient, the Wald statistic is defined as (e.g. Wasserman (2006): All of Statistics, pages 153, 214-215):

$$ W=\frac{(\hat{\beta}-\beta_{0})}{\widehat{\operatorname{se}}(\hat{\beta})}\sim \mathcal{N}(0,1) $$

or

$$ W^{2}=\frac{(\hat{\beta}-\beta_{0})^2}{\widehat{\operatorname{Var}}(\hat{\beta})}\sim \chi^{2}_{1} $$

where both distributions hold approximately, in large samples. The second form arises from the fact that the square of a standard normal variable follows the $\chi^{2}_{1}$-distribution, with 1 degree of freedom (the sum of two squared independent standard normal variables would follow a $\chi^{2}_{2}$-distribution with 2 degrees of freedom, and so on).

Because the hypothesized value is usually 0 (i.e. $\beta_{0}=0$), the Wald statistic simplifies to

$$ W=\frac{\hat{\beta}}{\widehat{\operatorname{se}}(\hat{\beta})}\sim \mathcal{N}(0,1) $$

which is what you described: the estimate of the coefficient divided by its standard error.
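The "approximately $\mathcal{N}(0,1)$" claim can be checked by simulation. The sketch below rests on assumptions chosen purely for illustration: one binary predictor, 100 cases per group, and a true event probability of 0.3 in both groups, so the true slope is 0. With a single binary predictor the MLE slope has a closed form (the log odds ratio), which keeps the code free of an iterative fitter.

```python
import math
import random

def wald_z(events_1, n_1, events_0, n_0):
    """Wald z for the slope of a logistic regression on one 0/1 predictor.

    With a single binary predictor the MLE slope is the log odds ratio of the
    2x2 table, and its standard error is sqrt(1/a + 1/b + 1/c + 1/d).
    """
    a, b = events_1, n_1 - events_1
    c, d = events_0, n_0 - events_0
    beta_hat = math.log((a / b) / (c / d))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return beta_hat / se            # hypothesized value beta_0 = 0

def simulate_null(reps=2000, n=100, p=0.3, seed=1):
    """Wald z values from data generated with equal event rates (true slope 0)."""
    rng = random.Random(seed)
    zs = []
    for _ in range(reps):
        y1 = sum(rng.random() < p for _ in range(n))
        y0 = sum(rng.random() < p for _ in range(n))
        zs.append(wald_z(y1, n, y0, n))
    return zs

zs = simulate_null()
mean_z = sum(zs) / len(zs)                               # should be near 0
mean_w2 = sum(z * z for z in zs) / len(zs)               # chi-square(1) has mean 1
reject_rate = sum(abs(z) > 1.96 for z in zs) / len(zs)   # should be near 0.05
print(round(mean_z, 3), round(mean_w2, 3), round(reject_rate, 3))
```

In small samples or with rare events the normal approximation can be noticeably worse, which is one reason likelihood ratio tests are sometimes preferred.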

When is a $z$ and when a $t$ value used?