| Discuss the contribution of other researchers to improve reliability and validity. |

Frequently Asked Questions

What is reliability and validity in research?

Reliability in research refers to the consistency and stability of measurements or findings. Validity relates to the accuracy and truthfulness of results, that is, whether the study measures what it intends to. Both are crucial for trustworthy and credible research outcomes.

What is validity?

Validity in research refers to the extent to which a study accurately measures what it intends to measure. It ensures that the results are truly representative of the phenomena under investigation. Without validity, research findings may be irrelevant, misleading, or incorrect, limiting their applicability and credibility.

What is reliability?

Reliability in research refers to the consistency and stability of measurements over time. If a study is reliable, repeating the experiment or test under the same conditions should produce similar results. Without reliability, findings become unpredictable and lack dependability, potentially undermining the study’s credibility and generalisability.

What is reliability in psychology?

In psychology, reliability refers to the consistency of a measurement tool or test. A reliable psychological assessment produces stable and consistent results across different times, situations, or raters. It ensures that an instrument’s scores are not due to random error, making the findings dependable and reproducible in similar conditions.

What is test retest reliability?

Test-retest reliability assesses the consistency of measurements taken by a test over time. It involves administering the same test to the same participants at two different points in time and comparing the results. A high correlation between the scores indicates that the test produces stable and consistent results over time.

How to improve reliability of an experiment?

- Standardise procedures and instructions.

- Use consistent and precise measurement tools.

- Train observers or raters to reduce subjective judgments.

- Increase sample size to reduce random errors.

- Conduct pilot studies to refine methods.

- Repeat measurements or use multiple methods.

- Address potential sources of variability.
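The test-retest idea described above comes down to correlating two sets of scores from the same participants. The following is a minimal sketch, assuming Pearson's correlation is the chosen statistic; the function name `pearson_r` and all scores are hypothetical and only serve as an illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares around each mean.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cov / sqrt(ss_x * ss_y)


# Hypothetical scores for the same five participants at two points in time.
time_1 = [12, 15, 9, 20, 17]
time_2 = [13, 14, 10, 19, 18]

test_retest_r = pearson_r(time_1, time_2)
print(f"test-retest r = {test_retest_r:.2f}")
```

A value close to 1 suggests the instrument produced stable scores across the two administrations; how high is "high enough" depends on the field and the instrument.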

What is the difference between reliability and validity?

Reliability refers to the consistency and repeatability of measurements, ensuring results are stable over time. Validity indicates how well an instrument measures what it’s intended to measure, ensuring accuracy and relevance. While a test can be reliable without being valid, a valid test must inherently be reliable. Both are essential for credible research.

Are interviews reliable and valid?

Interviews can be both reliable and valid, but they are susceptible to biases. The reliability and validity depend on the design, structure, and execution of the interview. Structured interviews with standardised questions improve reliability. Validity is enhanced when questions accurately capture the intended construct and when interviewer biases are minimised.

Are IQ tests valid and reliable?

IQ tests are generally considered reliable, producing consistent scores over time. Their validity, however, is a subject of debate. While they effectively measure certain cognitive skills, whether they capture the entirety of “intelligence” or predict success in all life areas is contested. Cultural bias and over-reliance on tests are also concerns.

Are questionnaires reliable and valid?

Questionnaires can be both reliable and valid if well-designed. Reliability is achieved when they produce consistent results over time or across similar populations. Validity is ensured when questions accurately measure the intended construct. However, factors like poorly phrased questions, respondent bias, and lack of standardisation can compromise their reliability and validity.

Validity – Types, Examples and Guide

Validity is a fundamental concept in research, referring to the extent to which a test, measurement, or study accurately reflects or assesses the specific concept that the researcher is attempting to measure. Ensuring validity is crucial as it determines the trustworthiness and credibility of the research findings.

Research Validity

Research validity pertains to the accuracy and truthfulness of the research. It examines whether the research truly measures what it claims to measure. Without validity, research results can be misleading or erroneous, leading to incorrect conclusions and potentially flawed applications.

How to Ensure Validity in Research

Ensuring validity in research involves several strategies:

- Clear Operational Definitions : Define variables clearly and precisely.

- Use of Reliable Instruments : Employ measurement tools that have been tested for reliability.

- Pilot Testing : Conduct preliminary studies to refine the research design and instruments.

- Triangulation : Use multiple methods or sources to cross-verify results.

- Control Variables : Control extraneous variables that might influence the outcomes.

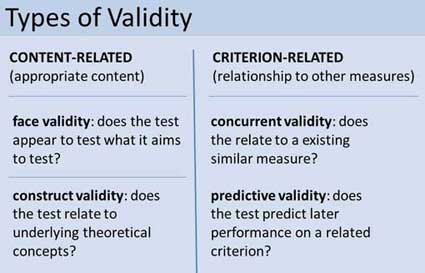

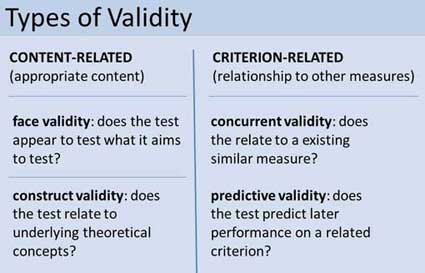

Types of Validity

Validity is categorized into several types, each addressing different aspects of measurement accuracy.

Internal Validity

Internal validity refers to the degree to which the results of a study can be attributed to the treatments or interventions rather than other factors. It is about ensuring that the study is free from confounding variables that could affect the outcome.

External Validity

External validity concerns the extent to which the research findings can be generalized to other settings, populations, or times. High external validity means the results are applicable beyond the specific context of the study.

Construct Validity

Construct validity evaluates whether a test or instrument measures the theoretical construct it is intended to measure. It involves ensuring that the test is truly assessing the concept it claims to represent.

Content Validity

Content validity examines whether a test covers the entire range of the concept being measured. It ensures that the test items represent all facets of the concept.

Criterion Validity

Criterion validity assesses how well one measure predicts an outcome based on another measure. It is divided into two types:

- Predictive Validity : How well a test predicts future performance.

- Concurrent Validity : How well a test correlates with a currently existing measure.

Face Validity

Face validity refers to the extent to which a test appears to measure what it is supposed to measure, based on superficial inspection. While it is the least scientific measure of validity, it is important for ensuring that stakeholders believe in the test’s relevance.

Importance of Validity

Validity is crucial because it directly affects the credibility of research findings. Valid results ensure that conclusions drawn from research are accurate and can be trusted. This, in turn, influences the decisions and policies based on the research.

Examples of Validity

- Internal Validity : A randomized controlled trial (RCT) where the random assignment of participants helps eliminate biases.

- External Validity : A study on educational interventions that can be applied to different schools across various regions.

- Construct Validity : A psychological test that accurately measures depression levels.

- Content Validity : An exam that covers all topics taught in a course.

- Criterion Validity : A job performance test that predicts future job success.

Where to Write About Validity in A Thesis

In a thesis, the methodology section should include discussions about validity. Here, you explain how you ensured the validity of your research instruments and design. Additionally, you may discuss validity in the results section, interpreting how the validity of your measurements affects your findings.

Applications of Validity

Validity has wide applications across various fields:

- Education : Ensuring assessments accurately measure student learning.

- Psychology : Developing tests that correctly diagnose mental health conditions.

- Market Research : Creating surveys that accurately capture consumer preferences.

Limitations of Validity

While ensuring validity is essential, it has its limitations:

- Complexity : Achieving high validity can be complex and resource-intensive.

- Context-Specific : Some validity types may not be universally applicable across all contexts.

- Subjectivity : Certain types of validity, like face validity, involve subjective judgments.

By understanding and addressing these aspects of validity, researchers can enhance the quality and impact of their studies, leading to more reliable and actionable results.

About the author

Muhammad Hassan, Researcher, Academic Writer, Web developer

Validity in research: a guide to measuring the right things

Last updated 27 February 2023. Reviewed by Cathy Heath.

Validity is necessary for all types of studies, ranging from market validation of a business or product idea to the effectiveness of medical trials and procedures. So, how can you determine whether your research is valid? This guide can help you understand what validity is, the types of validity in research, and the factors that affect research validity.

In the most basic sense, validity is the quality of being based on truth or reason. Valid research strives to eliminate the effects of unrelated information and the circumstances under which evidence is collected. Validity in research is the ability to conduct an accurate study with the right tools and conditions to yield acceptable and reliable data that can be reproduced. Researchers rely on carefully calibrated tools for precise measurements. However, collecting accurate information can be more of a challenge. To achieve and maintain validity, studies must be conducted in environments that don't sway the results. Validity can also be compromised by asking the wrong questions or relying on limited data.

Why is validity important in research?

Research is used to improve life for humans.
Every product and discovery, from innovative medical breakthroughs to advanced new products, depends on accurate research to be dependable. Without it, the results couldn't be trusted, and products would likely fail. Businesses would lose money, and patients couldn't rely on medical treatments. While wasting money on a lousy product is a concern, a lack of validity paints a much grimmer picture in the medical field or in the production of automobiles and airplanes, for example. Whether you're launching an exciting new product or conducting scientific research, validity can determine success and failure.

Reliability is the ability of a method to yield consistency. If the same result can be consistently achieved by using the same method to measure something, the measurement method is said to be reliable. For example, a thermometer that shows the same temperatures each time in a controlled environment is reliable. While high reliability is a part of measuring validity, it's only part of the puzzle. If the reliable thermometer hasn't been properly calibrated and reliably measures temperatures two degrees too high, it doesn't provide a valid (accurate) measure of temperature. Similarly, if a researcher uses a thermometer to measure weight, the results won't be accurate because it's the wrong tool for the job.

- How are reliability and validity assessed?

While measuring reliability is a part of measuring validity, there are distinct ways to assess each.

How is reliability measured?

The following measures of consistency and stability help assess reliability:

- Consistency and stability of the same measure when repeated multiple times and under different conditions

- Consistency and stability of the measure across different test subjects

- Consistency and stability of results from different parts of a test designed to measure the same thing

How is validity measured?

Since validity refers to how accurately a method measures what it is intended to measure, it can be difficult to assess. Validity can be estimated by comparing research results to other relevant data or theories, looking at:

- The adherence of a measure to existing knowledge of how the concept is measured

- The ability to cover all aspects of the concept being measured

- The relation of the result in comparison with other valid measures of the same concept

- What are the types of validity in a research design?

Research validity is broadly gathered into two groups: internal and external. Yet, this grouping doesn't clearly define the different types of validity. Research validity can be divided into seven distinct groups.

- Face validity: A test appears valid simply because of the appropriateness or relevance of the testing method, included information, or tools used.

- Content validity: The determination that the measure used in research covers the full domain of the content.

- Construct validity: The assessment of the suitability of the measurement tool to measure the activity being studied.

- Internal validity: The assessment of how your research environment affects measurement results. Internal validity is high when other factors can't explain the observed cause-and-effect response.

- External validity: The extent to which the study will be accurate beyond the sample and the level to which it can be generalized to other settings, populations, and measures.

- Statistical conclusion validity: The determination of whether a relationship exists between procedures and outcomes (appropriate sampling and measuring procedures along with appropriate statistical tests).

- Criterion-related validity: A measurement of the quality of your testing methods against a criterion measure (like a “gold standard” test) that is measured at the same time.

Like the different types of research and the various ways to measure validity, examples of validity can vary widely. These include:

- A questionnaire may be considered valid because each question addresses specific and relevant aspects of the study subject.

- In a brand assessment study, researchers can use comparison testing to verify the results of an initial study. For example, the results from a focus group response about brand perception are considered more valid when they match those of a questionnaire answered by current and potential customers.

- A test to measure a class of students' understanding of the English language contains reading, writing, listening, and speaking components to cover the full scope of how language is used.

- Factors that affect research validity

Certain factors can affect research validity in both positive and negative ways. By understanding the factors that improve validity and those that threaten it, you can enhance the validity of your study. These include:

- Random selection of participants vs. the selection of participants that are representative of your study criteria

- Blinding with interventions the participants are unaware of (like the use of placebos)

- Manipulating the experiment by inserting a variable that will change the results

- Randomly assigning participants to treatment and control groups to avoid bias

- Following specific procedures during the study to avoid unintended effects

- Conducting a study in the field instead of a laboratory for more accurate results

- Replicating the study with different factors or settings to compare results

- Using statistical methods to adjust for inconclusive data

What are the common validity threats in research, and how can their effects be minimized or nullified?

Research validity can be difficult to achieve because of internal and external threats that produce inaccurate results. These factors can jeopardize validity:

- History: Events that occur between an early and a later measurement

- Maturation: Changes that would have occurred naturally with the passage of time, outside the settings of the study, are attributed to the effects of the study

- Repeated testing: Taking a test repeatedly can change the outcome of later tests

- Selection of subjects: Unconscious bias, which can result in the selection of non-equivalent comparison groups

- Statistical regression: Choosing subjects based on extremes doesn't yield an accurate outcome for the majority of individuals

- Attrition: When the sample group is diminished significantly during the course of the study

While some validity threats can be minimized or wholly nullified, removing all threats from a study is impossible. For example, random selection can remove unconscious bias and statistical regression. Researchers can even hope to avoid attrition by using smaller study groups. Yet, smaller study groups could potentially affect the research in other ways. The best practice for researchers to prevent validity threats is careful environmental planning and reliable data-gathering methods.

- How to ensure validity in your research

Researchers should be mindful of the importance of validity in the early planning stages of any study to avoid inaccurate results. Researchers must take the time to consider tools and methods, as well as how closely the testing environment matches the natural environment in which results will be used. The following steps can be used to ensure validity in research:

- Choose appropriate methods of measurement

- Use appropriate sampling to choose test subjects

- Create an accurate testing environment

How do you maintain validity in research?

Accurate research is usually conducted over a period of time with different test subjects. To maintain validity across an entire study, you must take specific steps to ensure that gathered data has the same levels of accuracy. Consistency is crucial for maintaining validity in research. When researchers apply methods consistently and standardize the circumstances under which data is collected, validity can be maintained across the entire study.

Is there a need for validation of the research instrument before its implementation?

An essential part of validity is choosing the right research instrument or method for accurate results. Consider the thermometer that is reliable but still produces inaccurate results. You're unlikely to achieve research validity without activities like calibration and checks of content and construct validity.

- Understanding research validity for more accurate results

Without validity, research can't provide the accuracy necessary to deliver a useful study. By getting a clear understanding of validity in research, you can take steps to improve your research skills and achieve more accurate results.

Validity and reliability in quantitative studies

Volume 18, Issue 3

- Roberta Heale 1 ,

- Alison Twycross 2

- 1 School of Nursing, Laurentian University , Sudbury, Ontario , Canada

- 2 Faculty of Health and Social Care , London South Bank University , London , UK

- Correspondence to : Dr Roberta Heale, School of Nursing, Laurentian University, Ramsey Lake Road, Sudbury, Ontario, Canada P3E2C6; rheale{at}laurentian.ca

https://doi.org/10.1136/eb-2015-102129

Evidence-based practice includes, in part, implementation of the findings of well-conducted quality research studies. So being able to critique quantitative research is an important skill for nurses. Consideration must be given not only to the results of the study but also to the rigour of the research. Rigour refers to the extent to which the researchers worked to enhance the quality of the studies. In quantitative research, this is achieved through measurement of the validity and reliability. 1

Types of validity

The first category is content validity. This category looks at whether the instrument adequately covers all the content that it should with respect to the variable. In other words, does the instrument cover the entire domain related to the variable, or construct, it was designed to measure? In an undergraduate nursing course with instruction about public health, an examination with content validity would cover all the content in the course, with greater emphasis on the topics that had received greater coverage or more depth. A subset of content validity is face validity, where experts are asked their opinion about whether an instrument measures the concept intended.

Construct validity refers to whether you can draw inferences about test scores related to the concept being studied. For example, if a person has a high score on a survey that measures anxiety, does this person truly have a high degree of anxiety? In another example, a test of knowledge of medications that requires dosage calculations may instead be testing maths knowledge.
There are three types of evidence that can be used to demonstrate a research instrument has construct validity:

- Homogeneity: the instrument measures one construct.

- Convergence: the instrument measures concepts similar to those of other instruments. If no similar instruments are available, this cannot be assessed.

- Theory evidence: behaviour is similar to theoretical propositions of the construct measured in the instrument. For example, when an instrument measures anxiety, one would expect to see that participants who score high on the instrument for anxiety also demonstrate symptoms of anxiety in their day-to-day lives. 2

The final measure of validity is criterion validity. A criterion is any other instrument that measures the same variable. Correlations can be conducted to determine the extent to which the different instruments measure the same variable. Criterion validity is measured in three ways:

- Convergent validity: shows that an instrument is highly correlated with instruments measuring similar variables.

- Divergent validity: shows that an instrument is poorly correlated with instruments that measure different variables. In this case, for example, there should be a low correlation between an instrument that measures motivation and one that measures self-efficacy.

- Predictive validity: means that the instrument should have high correlations with future criteria. 2 For example, a score of high self-efficacy related to performing a task should predict the likelihood of a participant completing the task.

Reliability

Reliability relates to the consistency of a measure. A participant completing an instrument meant to measure motivation should have approximately the same responses each time the test is completed. Although it is not possible to give an exact calculation of reliability, an estimate of reliability can be achieved through different measures.
The three attributes of reliability are outlined in table 2. How each attribute is tested for is described below.

Attributes of reliability

Homogeneity (internal consistency) is assessed using item-to-total correlation, split-half reliability, Kuder-Richardson coefficient and Cronbach's α. In split-half reliability, the results of a test, or instrument, are divided in half. Correlations are calculated comparing both halves. Strong correlations indicate high reliability, while weak correlations indicate the instrument may not be reliable. The Kuder-Richardson test is a more complicated version of the split-half test. In this process the average of all possible split-half combinations is determined and a coefficient between 0 and 1 is generated. This test is more accurate than the split-half test, but can only be completed on questions with two answers (eg, yes or no, 0 or 1). 3

Cronbach's α is the most commonly used test to determine the internal consistency of an instrument. In this test, the average of all correlations in every combination of split-halves is determined. Instruments with questions that have more than two responses can be used in this test. The Cronbach's α result is a number between 0 and 1. An acceptable reliability score is one that is 0.7 and higher. 1 , 3

Stability is tested using test–retest and parallel or alternate-form reliability testing. Test–retest reliability is assessed when an instrument is given to the same participants more than once under similar circumstances. A statistical comparison is made between participants' test scores for each of the times they have completed it. This provides an indication of the reliability of the instrument. Parallel-form reliability (or alternate-form reliability) is similar to test–retest reliability except that a different form of the original instrument is given to participants in subsequent tests.
The domain, or concepts being tested, are the same in both versions of the instrument but the wording of items is different. 2 For an instrument to demonstrate stability there should be a high correlation between the scores each time a participant completes the test. Generally speaking, a correlation coefficient of less than 0.3 signifies a weak correlation, 0.3–0.5 is moderate and greater than 0.5 is strong. 4

Equivalence is assessed through inter-rater reliability. This test includes a process for qualitatively determining the level of agreement between two or more observers. A good example of the process used in assessing inter-rater reliability is the scores of judges for a skating competition. The level of consistency across all judges in the scores given to skating participants is the measure of inter-rater reliability. An example in research is when researchers are asked to give a score for the relevancy of each item on an instrument. Consistency in their scores relates to the level of inter-rater reliability of the instrument.

Determining how rigorously the issues of reliability and validity have been addressed in a study is an essential component in the critique of research, and it influences the decision about whether to implement the study findings in nursing practice. In quantitative studies, rigour is determined through an evaluation of the validity and reliability of the tools or instruments utilised in the study. A good quality research study will provide evidence of how all these factors have been addressed. This will help you to assess the validity and reliability of the research and help you decide whether or not you should apply the findings in your area of clinical practice.

- Lobiondo-Wood G ,

- Shuttleworth M

- ↵ Laerd Statistics . Determining the correlation coefficient . 2013 . https://statistics.laerd.com/premium/pc/pearson-correlation-in-spss-8.php
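
The internal-consistency and correlation-strength rules described in the article above can be sketched in a few lines. This is a minimal illustration, not the authors' procedure: it assumes the standard variance-based formula for Cronbach's α, α = k/(k−1) · (1 − Σ item variances / variance of total scores), rather than the split-half description given in the text. The function names and all scores are hypothetical.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha from a list of items, each a list of respondents' scores."""
    k = len(item_scores)
    if k < 2:
        raise ValueError("need at least two items")
    # Total score per respondent (sum across items).
    totals = [sum(responses) for responses in zip(*item_scores)]
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores must vary")
    item_var_sum = sum(variance(item) for item in item_scores)
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


def correlation_strength(r):
    """Label a coefficient using the thresholds quoted in the article."""
    size = abs(r)
    if size < 0.3:
        return "weak"
    if size <= 0.5:
        return "moderate"
    return "strong"


# Hypothetical 3-item instrument answered by five respondents (1-5 scale).
items = [
    [4, 3, 5, 2, 4],
    [5, 3, 4, 2, 4],
    [4, 2, 5, 3, 5],
]

alpha = cronbach_alpha(items)
print(f"Cronbach's alpha = {alpha:.2f}")
print("acceptable" if alpha >= 0.7 else "below the 0.7 guideline")
```

The 0.7 cut-off and the weak/moderate/strong bands are the guideline values quoted in the article; other fields may apply different thresholds.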

Twitter Follow Roberta Heale at @robertaheale and Alison Twycross at @alitwy

Competing interests None declared.

What is the Significance of Validity in Research?

In qualitative research, validity refers to an evaluation metric for the trustworthiness of study findings. Within the expansive landscape of research methodologies, the qualitative approach, with its rich, narrative-driven investigations, demands unique criteria for ensuring validity. Unlike its quantitative counterpart, which often leans on numerical robustness and statistical veracity, the essence of validity in qualitative research delves deep into the realms of credibility, dependability, and the richness of the data.

The importance of validity in qualitative research cannot be overstated. Establishing validity refers to ensuring that the research findings genuinely reflect the phenomena they are intended to represent. It reinforces the researcher's responsibility to present an authentic representation of study participants' experiences and insights. This article will examine validity in qualitative research, exploring its characteristics, techniques to bolster it, and the challenges that researchers might face in establishing validity.

At its core, validity in research speaks to the degree to which a study accurately reflects or assesses the specific concept that the researcher is attempting to measure or understand. It's about ensuring that the study investigates what it purports to investigate. While this seems like a straightforward idea, the way validity is approached can vary greatly between qualitative and quantitative research.

Quantitative research often hinges on numerical, measurable data. In this paradigm, validity might refer to whether a specific tool or method measures the correct variable, without interference from other variables. It's about numbers, scales, and objective measurements.
For instance, if one is studying personalities by administering surveys, a valid instrument could be a survey that has been rigorously developed and tested to verify that the survey questions are referring to personality characteristics and not other similar concepts, such as moods, opinions, or social norms. Conversely, qualitative research is more concerned with understanding human behavior and the reasons that govern such behavior. It's less about measuring in the strictest sense and more about interpreting the phenomenon that is being studied. The questions become: "Are these interpretations true representations of the human experience being studied?" and "Do they authentically convey participants' perspectives and contexts?"  Differentiating between qualitative and quantitative validity is crucial because the research methods to ensure validity differ between these research paradigms. In quantitative realms, validity might involve test-retest reliability or examining the internal consistency of a test. In the qualitative sphere, however, the focus shifts to ensuring that the researcher's interpretations align with the actual experiences and perspectives of their subjects. This distinction is fundamental because it impacts how researchers engage in research design , gather data , and draw conclusions . Ensuring validity in qualitative research is like weaving a tapestry: every strand of data must be carefully interwoven with the interpretive threads of the researcher, creating a cohesive and faithful representation of the studied experience. While often terms associated more closely with quantitative research, internal and external validity can still be relevant concepts to understand within the context of qualitative inquiries. Grasping these notions can help qualitative researchers better navigate the challenges of ensuring their findings are both credible and applicable in wider contexts. 
Internal validity

Internal validity refers to the authenticity and truthfulness of the findings within the study itself. In qualitative research, this might involve asking: Do the conclusions drawn genuinely reflect the perspectives and experiences of the study's participants? Internal validity revolves around the depth of understanding, ensuring that the researcher's interpretations are grounded in participants' realities. Techniques like member checking, where participants review and verify the researcher's interpretations, can bolster internal validity.

External validity

External validity refers to the extent to which the findings of a study can be generalized or applied to other settings or groups. For qualitative researchers, the emphasis isn't on statistical generalizability, as often seen in quantitative studies. Instead, it's about transferability. It becomes a matter of determining how and where the insights gathered might be relevant in other contexts. This doesn't mean that every qualitative study's findings will apply universally, but qualitative researchers should provide enough detail (through rich, thick descriptions) to allow readers or other researchers to determine the potential for transfer to other contexts.

Looking deeper into the realm of validity, it's crucial to recognize and understand its various types. Each type offers distinct criteria and methods of evaluation, ensuring that research remains robust and genuine. Here's an exploration of some of these types.

Construct validity

Construct validity is a cornerstone in research methodology. It pertains to ensuring that the tools or methods used in a research study genuinely capture the intended theoretical constructs. In qualitative research, the challenge lies in the abstract nature of many constructs.
For example, if one were to investigate "emotional intelligence" or "social cohesion," the definitions might vary, making them hard to pin down.

To bolster construct validity, it is important to clearly and transparently define the concepts being studied. In addition, researchers may triangulate data from multiple sources, ensuring that different viewpoints converge towards a shared understanding of the construct. Furthermore, they might delve into iterative rounds of data collection, refining their methods with each cycle to better align with the conceptual essence of their focus.

Content validity

Content validity's emphasis is on the breadth and depth of the content being assessed. In other words, content validity refers to capturing all relevant facets of the phenomenon being studied. Within qualitative paradigms, ensuring comprehensive representation is paramount. If, for instance, a researcher is using interview protocols to understand community perceptions of a local policy, it's crucial that the questions encompass all relevant aspects of that policy. This could range from its implementation and impact to public awareness and opinion variations across demographic groups. Enhancing content validity can involve expert reviews where subject matter experts evaluate tools or methods for comprehensiveness. Another strategy might involve pilot studies, where preliminary data collection reveals gaps or overlooked aspects that can be addressed in the main study.

Ecological validity

Ecological validity refers to the genuine reflection of real-world situations in research findings. For qualitative researchers, this means their observations, interpretations, and conclusions should resonate with the participants and context being studied. If a study explores classroom dynamics, for example, studying students and teachers in a controlled research setting would have lower ecological validity than studying real classroom settings.
Ecological validity is important to consider because it helps ensure the research is relevant to the people being studied. Individuals might behave entirely differently in a controlled environment than in their everyday natural settings. Ecological validity tends to be stronger in qualitative research than in quantitative research, because qualitative researchers are typically immersed in their study context and explore participants' subjective perceptions and experiences. Quantitative research, in contrast, can sometimes be more artificial if behavior is being observed in a lab or participants have to choose from predetermined options to answer survey questions. Qualitative researchers can further bolster ecological validity through immersive fieldwork, where researchers spend extended periods in the studied environment. This immersion helps them capture the nuances and intricacies that might be missed in brief or superficial engagements.

Face validity

Face validity, while seemingly straightforward, holds significant weight in the preliminary stages of research. It serves as a litmus test, gauging the apparent appropriateness and relevance of a tool or method. If a researcher is developing a new interview guide to gauge employee satisfaction, for instance, a quick assessment from colleagues or a focus group can reveal if the questions intuitively seem fit for the purpose. While face validity is more subjective and lacks the depth of other validity types, it's a crucial initial step, ensuring that the research starts on the right foot.

Criterion validity

Criterion validity evaluates how well the results obtained from one method correlate with those from another, more established method. In many research scenarios, establishing high criterion validity involves using statistical methods to measure validity.
For instance, a researcher might use the appropriate statistical tests to determine the strength and direction of the linear relationship between two sets of data. If a new measurement tool or method is being introduced, its validity might be established by statistically correlating its outcomes with those of a gold standard or previously validated tool. Correlational statistics can estimate the strength of the relationship between the new instrument and the previously established instrument, and regression analyses can also be useful to predict outcomes based on established criteria. While these methods are traditionally aligned with quantitative research, qualitative researchers, particularly those using mixed methods, may also find value in these statistical approaches, especially when wanting to quantify certain aspects of their data for comparative purposes. More broadly, qualitative researchers could compare their operationalizations and findings to other similar qualitative studies to check that they are indeed examining what they intend to study.

In the realm of qualitative research, the role of the researcher is not just that of an observer but often that of an active participant in the meaning-making process. This unique positioning means the researcher's perspectives and interactions can significantly influence the data collected and its interpretation. Here's a deep dive into the researcher's pivotal role in upholding validity.

Reflexivity

A key concept in qualitative research, reflexivity requires researchers to continually reflect on their worldviews, beliefs, and potential influence on the data. By maintaining a reflexive journal or engaging in regular introspection, researchers can identify and address their own biases, ensuring a more genuine interpretation of participant narratives.

Building rapport

The depth and authenticity of information shared by participants often hinge on the rapport and trust established with the researcher.
By cultivating genuine, non-judgmental, and empathetic relationships with participants, researchers can enhance the validity of the data collected.

Positionality

Every researcher brings to the study their own background, including their culture, education, socioeconomic status, and more. Recognizing how this positionality might influence interpretations and interactions is crucial. By acknowledging and transparently sharing their positionality, researchers can offer context to their findings and interpretations.

Active listening

The ability to listen without imposing one's own judgments or interpretations is vital. Active listening ensures that researchers capture the participants' experiences and emotions without distortion, enhancing the validity of the findings.

Transparency in methods

To ensure validity, researchers should be transparent about every step of their process. From how participants were selected to how data was analyzed, clear documentation offers others a chance to understand and evaluate the research's authenticity and rigor.

Member checking

Once data is collected and interpreted, revisiting participants to confirm the researcher's interpretations can be invaluable. This process, known as member checking, ensures that the researcher's understanding aligns with the participants' intended meanings, bolstering validity.

Embracing ambiguity

Qualitative data can be complex and sometimes contradictory. Instead of trying to fit data into preconceived notions or frameworks, researchers must embrace ambiguity, acknowledging areas of uncertainty or multiple interpretations.
Validity In Psychology Research: Types & Examples

Saul Mcleod, PhD, Editor-in-Chief for Simply Psychology. BSc (Hons) Psychology, MRes, PhD, University of Manchester. Saul Mcleod, PhD, is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc, Associate Editor for Simply Psychology. BSc (Hons) Psychology, MSc Psychology of Education. Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

In psychology research, validity refers to the extent to which a test or measurement tool accurately measures what it’s intended to measure. It ensures that the research findings are genuine and not due to extraneous factors. Validity can be categorized into different types based on internal and external validity.

The concept of validity was formulated by Kelley (1927, p. 14), who stated that a test is valid if it measures what it claims to measure. For example, a test of intelligence should measure intelligence and not something else (such as memory).

Internal and External Validity In Research

Internal validity refers to whether the effects observed in a study are due to the manipulation of the independent variable and not some other confounding factor. In other words, there is a causal relationship between the independent and dependent variables. Internal validity can be improved by controlling extraneous variables, using standardized instructions, counterbalancing, and eliminating demand characteristics and investigator effects.

External validity refers to the extent to which the results of a study can be generalized to other settings (ecological validity), other people (population validity), and over time (historical validity).
External validity can be improved by setting experiments in more natural environments and using random sampling to select participants.

Types of Validity In Psychology

Two main categories of validity are used to assess the validity of a test (e.g., questionnaire, interview, IQ test): content and criterion.

- Content validity refers to the extent to which a test or measurement represents all aspects of the intended content domain. It assesses whether the test items adequately cover the topic or concept.

- Criterion validity assesses the performance of a test based on its correlation with a known external criterion or outcome. It can be further divided into concurrent (measured at the same time) and predictive (measuring future performance) validity.
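
As a rough illustration of the correlation idea behind criterion validity, the sketch below computes a Pearson correlation between scores on a new test and scores on an established measure taken at the same time (the concurrent case). The scores, variable names, and the `pearson_r` helper are hypothetical and invented for illustration; Pearson's r is one common choice of statistic, not one the text prescribes.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products of deviations from each mean.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for the same six participants (invented for illustration).
new_test = [12, 15, 9, 20, 17, 11]
established_test = [14, 16, 10, 22, 18, 12]

r = pearson_r(new_test, established_test)
print(f"r = {r:.3f}")
```

A value of r close to +1 would count as evidence for concurrent validity; for predictive validity, the second list would instead hold an outcome measured at a later point in time.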

Face Validity

Face validity is simply whether the test appears (at face value) to measure what it claims to. This is the least sophisticated measure of content-related validity, and is a superficial and subjective assessment based on appearance. Tests wherein the purpose is clear, even to naïve respondents, are said to have high face validity. Accordingly, tests wherein the purpose is unclear have low face validity (Nevo, 1985). A direct measurement of face validity is obtained by asking people to rate the validity of a test as it appears to them. Raters could use a Likert scale to assess face validity. For example:

- The test is extremely suitable for a given purpose

- The test is very suitable for that purpose

- The test is adequate

- The test is inadequate

- The test is irrelevant and, therefore, unsuitable

It is important to select suitable people to rate a test (e.g., questionnaire, interview, IQ test, etc.). For example, individuals who actually take the test would be well placed to judge its face validity. Also, people who work with the test could offer their opinion (e.g., employers, university administrators). Finally, the researcher could use members of the general public with an interest in the test (e.g., parents of testees, politicians, teachers, etc.). The face validity of a test can be considered a robust construct only if a reasonable level of agreement exists among raters. It should be noted that the term face validity should be avoided when the rating is done by an “expert,” as content validity is more appropriate.

Having face validity does not mean that a test really measures what the researcher intends to measure, only that, in the judgment of raters, it appears to do so. Consequently, it is a crude and basic measure of validity. A test item such as “I have recently thought of killing myself” has obvious face validity as an item measuring suicidal cognitions and may be useful when measuring symptoms of depression. However, items with clear face validity are more vulnerable to social desirability bias. Individuals may manipulate their responses to deny or hide problems or exaggerate behaviors to present a positive image of themselves.

It is possible for a test item to lack face validity but still have general validity and measure what it claims to measure. This is good because it reduces demand characteristics and makes it harder for respondents to manipulate their answers. For example, the test item “I believe in the second coming of Christ” would lack face validity as a measure of depression (as the purpose of the item is unclear). This item appeared on the first version of The Minnesota Multiphasic Personality Inventory (MMPI) and loaded on the depression scale.
Because most of the original normative sample of the MMPI were good Christians, only a depressed Christian would think Christ is not coming back. Thus, for this particular religious sample, the item does have general validity but not face validity.

Construct Validity

Construct validity assesses how well a test or measure represents and captures an abstract theoretical concept, known as a construct. It indicates the degree to which the test accurately reflects the construct it intends to measure, often evaluated through relationships with other variables and measures theoretically connected to the construct.

Construct validity was introduced by Cronbach and Meehl (1955). This type of content-related validity refers to the extent to which a test captures a specific theoretical construct or trait, and it overlaps with some of the other aspects of validity.

Construct validity does not concern the simple, factual question of whether a test measures an attribute. Instead, it is about the complex question of whether test score interpretations are consistent with a nomological network involving theoretical and observational terms (Cronbach & Meehl, 1955).

To test for construct validity, it must be demonstrated that the phenomenon being measured actually exists. So, the construct validity of a test for intelligence, for example, depends on a model or theory of intelligence. Construct validity entails demonstrating the power of such a construct to explain a network of research findings and to predict further relationships. The more evidence a researcher can demonstrate for a test’s construct validity, the better. However, there is no single method of determining the construct validity of a test. Instead, different methods and approaches are combined to present the overall construct validity of a test. For example, factor analysis and correlational methods can be used.

Convergent validity

Convergent validity is a subtype of construct validity.
It assesses the degree to which two measures that theoretically should be related are related. It demonstrates that measures of similar constructs are highly correlated. It helps confirm that a test accurately measures the intended construct by showing its alignment with other tests designed to measure the same or similar constructs. For example, suppose there are two different scales used to measure self-esteem: Scale A and Scale B. If both scales effectively measure self-esteem, then individuals who score high on Scale A should also score high on Scale B, and those who score low on Scale A should score similarly low on Scale B. If the scores from these two scales show a strong positive correlation, then this provides evidence for convergent validity because it indicates that both scales seem to measure the same underlying construct of self-esteem.

Concurrent Validity (i.e., occurring at the same time)

Concurrent validity evaluates how well a test’s results correlate with the results of a previously established and accepted measure, when both are administered at the same time. It helps in determining whether a new measure is a good reflection of an established one without waiting to observe outcomes in the future. If the new test is validated by comparison with a currently existing criterion, we have concurrent validity. Very often, a new IQ or personality test might be compared with an older but similar test known to have good validity already.

Predictive Validity

Predictive validity assesses how well a test predicts a criterion that will occur in the future. It measures the test’s ability to foresee the performance of an individual on a related criterion measured at a later point in time. It gauges the test’s effectiveness in predicting subsequent real-world outcomes or results. For example, a prediction may be made on the basis of a new intelligence test that high scorers at age 12 will be more likely to obtain university degrees several years later.
If the prediction is borne out, then the test has predictive validity.

Cronbach, L. J., & Meehl, P. E. (1955). Construct validity in psychological tests. Psychological Bulletin, 52, 281-302.

Hathaway, S. R., & McKinley, J. C. (1943). Manual for the Minnesota Multiphasic Personality Inventory. New York: Psychological Corporation.

Kelley, T. L. (1927). Interpretation of educational measurements. New York: Macmillan.

Nevo, B. (1985). Face validity revisited. Journal of Educational Measurement, 22(4), 287-293.

Validity & Reliability In Research

A Plain-Language Explanation (With Examples)

By: Derek Jansen (MBA) | Expert Reviewer: Kerryn Warren (PhD) | September 2023

Validity and reliability are two related but distinctly different concepts within research. Understanding what they are and how to achieve them is critically important to any research project. In this post, we’ll unpack these two concepts as simply as possible.

This post is based on our popular online course, Research Methodology Bootcamp. In the course, we unpack the basics of methodology using straightforward language and loads of examples.

Overview: Validity & Reliability

- The big picture

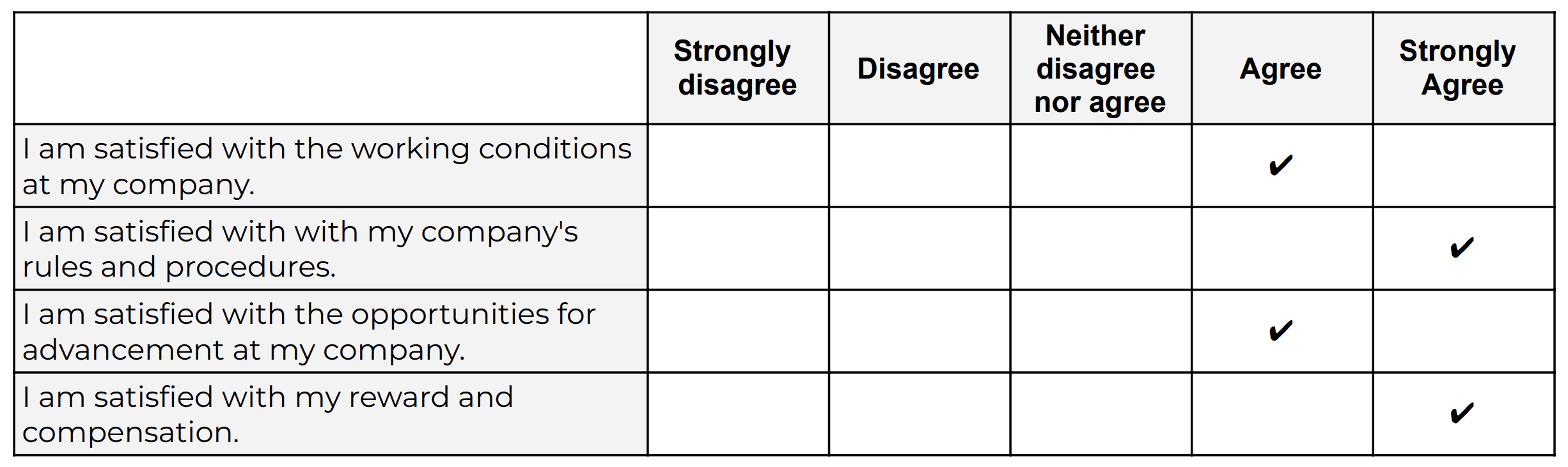

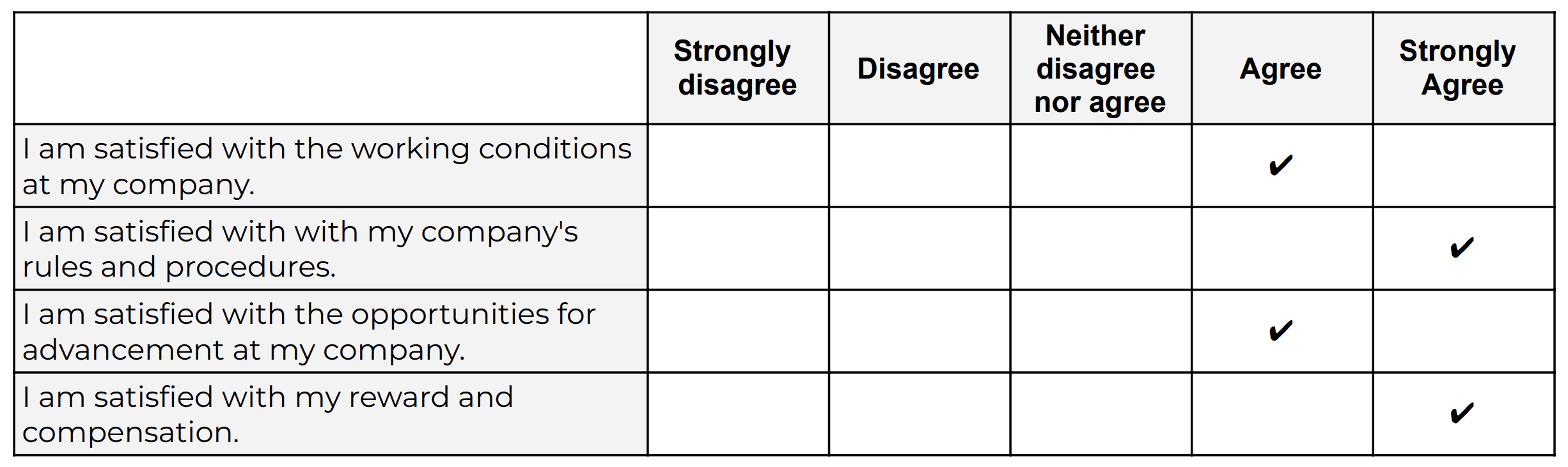

- Validity 101

- Reliability 101

- Key takeaways

First, The Basics…First, let’s start with a big-picture view and then we can zoom in to the finer details. Validity and reliability are two incredibly important concepts in research, especially within the social sciences. Both validity and reliability have to do with the measurement of variables and/or constructs – for example, job satisfaction, intelligence, productivity, etc. When undertaking research, you’ll often want to measure these types of constructs and variables and, at the simplest level, validity and reliability are about ensuring the quality and accuracy of those measurements . As you can probably imagine, if your measurements aren’t accurate or there are quality issues at play when you’re collecting your data, your entire study will be at risk. Therefore, validity and reliability are very important concepts to understand (and to get right). So, let’s unpack each of them.  What Is Validity?In simple terms, validity (also called “construct validity”) is all about whether a research instrument accurately measures what it’s supposed to measure . For example, let’s say you have a set of Likert scales that are supposed to quantify someone’s level of overall job satisfaction. If this set of scales focused purely on only one dimension of job satisfaction, say pay satisfaction, this would not be a valid measurement, as it only captures one aspect of the multidimensional construct. In other words, pay satisfaction alone is only one contributing factor toward overall job satisfaction, and therefore it’s not a valid way to measure someone’s job satisfaction.  Oftentimes in quantitative studies, the way in which the researcher or survey designer interprets a question or statement can differ from how the study participants interpret it . Given that respondents don’t have the opportunity to ask clarifying questions when taking a survey, it’s easy for these sorts of misunderstandings to crop up. 
Naturally, if the respondents are interpreting the question in the wrong way, the data they provide will be pretty useless. Therefore, ensuring that a study’s measurement instruments are valid (in other words, that they are measuring what they intend to measure) is incredibly important.

There are various types of validity and we’re not going to go down that rabbit hole in this post, but it’s worth quickly highlighting the importance of making sure that your research instrument is tightly aligned with the theoretical construct you’re trying to measure. In other words, you need to pay careful attention to how the key theories within your study define the thing you’re trying to measure, and then make sure that your survey presents it in the same way.

For example, sticking with the “job satisfaction” construct we looked at earlier, you’d need to clearly define what you mean by job satisfaction within your study (and this definition would of course need to be underpinned by the relevant theory). You’d then need to make sure that your chosen definition is reflected in the types of questions or scales you’re using in your survey. Simply put, you need to make sure that your survey respondents are perceiving your key constructs in the same way you are. Or, even if they’re not, that your measurement instrument is capturing the necessary information that reflects your definition of the construct at hand.

If all of this talk about constructs sounds a bit fluffy, be sure to check out Research Methodology Bootcamp, which will provide you with a rock-solid foundational understanding of all things methodology-related.

What Is Reliability?

As with validity, reliability is an attribute of a measurement instrument, for example, a survey, a weight scale or even a blood pressure monitor.
But while validity is concerned with whether the instrument is measuring the “thing” it’s supposed to be measuring, reliability is concerned with consistency and stability . In other words, reliability reflects the degree to which a measurement instrument produces consistent results when applied repeatedly to the same phenomenon , under the same conditions . As you can probably imagine, a measurement instrument that achieves a high level of consistency is naturally more dependable (or reliable) than one that doesn’t – in other words, it can be trusted to provide consistent measurements . And that, of course, is what you want when undertaking empirical research. If you think about it within a more domestic context, just imagine if you found that your bathroom scale gave you a different number every time you hopped on and off of it – you wouldn’t feel too confident in its ability to measure the variable that is your body weight 🙂 It’s worth mentioning that reliability also extends to the person using the measurement instrument . For example, if two researchers use the same instrument (let’s say a measuring tape) and they get different measurements, there’s likely an issue in terms of how one (or both) of them are using the measuring tape. So, when you think about reliability, consider both the instrument and the researcher as part of the equation. As with validity, there are various types of reliability and various tests that can be used to assess the reliability of an instrument. A popular one that you’ll likely come across for survey instruments is Cronbach’s alpha , which is a statistical measure that quantifies the degree to which items within an instrument (for example, a set of Likert scales) measure the same underlying construct . In other words, Cronbach’s alpha indicates how closely related the items are and whether they consistently capture the same concept .  
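
To make the idea a little more concrete, here is a minimal sketch of how Cronbach’s alpha can be computed from a table of survey responses, using the standard formula alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The `cronbach_alpha` function and the response data are hypothetical and invented for illustration; in practice you would more likely rely on a statistics package than on hand-rolled code.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row of item scores per respondent)."""
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    # Transpose rows (respondents) into columns (items).
    items = list(zip(*responses))
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("alpha is undefined when all total scores are identical")
    return (n_items / (n_items - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical answers from five respondents to four 5-point Likert items.
responses = [
    [4, 5, 4, 5],
    [2, 2, 3, 2],
    [5, 4, 5, 5],
    [3, 3, 3, 4],
    [1, 2, 1, 2],
]

alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Values closer to 1 suggest the items move together; a commonly cited rule of thumb treats roughly 0.7 or above as acceptable, although the appropriate cut-off depends on the field and the purpose of the instrument.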
Recap: Key Takeaways

Alright, let’s quickly recap to cement your understanding of validity and reliability:

- Validity is concerned with whether an instrument (e.g., a set of Likert scales) is measuring what it’s supposed to measure.

- Reliability is concerned with whether that measurement is consistent and stable when measuring the same phenomenon under the same conditions.

In short, validity and reliability are both essential to ensuring that your data collection efforts deliver high-quality, accurate data that help you answer your research questions. So, be sure to always pay careful attention to the validity and reliability of your measurement instruments when collecting and analysing data. As the adage goes, “rubbish in, rubbish out”: make sure that your data inputs are rock-solid.

What Are Credible Sources & How to Spot Them | Examples

Published on August 26, 2021 by Tegan George. Revised on May 9, 2024.

A credible source is free from bias and backed up with evidence. It is written by a trustworthy author or organization. There are a lot of sources out there, and it can be hard to tell what’s credible and what isn’t at first glance. Evaluating source credibility is an important information literacy skill. It ensures that you collect accurate information to back up the arguments you make and the conclusions you draw.

There are many different types of sources, which can be divided into three categories: primary sources, secondary sources, and tertiary sources. Primary sources are often considered the most credible in terms of providing evidence for your argument, as they give you direct evidence of what you are researching. However, it’s up to you to ensure the information they provide is reliable and accurate. You will likely use a combination of the three types over the course of your research process.

| Type | Definition | Example |
| --- | --- | --- |
| Primary | First-hand evidence giving you direct access to your research topic | |
| Secondary | Second-hand information that analyzes or describes primary sources | |
| Tertiary | Sources that identify, index, or consolidate primary and secondary sources | |

There are a few criteria to look at right away when assessing a source. Together, these criteria form what is known as the CRAAP test.

- The information should be up-to-date and current.

- The source should be relevant to your research.

- The author and publication should be a trusted authority on the subject you are researching.

- The sources the author cited should be easy to find, clear, and unbiased.

- For web sources, the URL and layout should signify that it is trustworthy.

The CRAAP test is a catchy acronym that will help you evaluate the credibility of a source you are thinking about using. California State University developed it in 2004 to help students remember best practices for evaluating content.

- Currency: Is the source up-to-date?

- Relevance: Is the source relevant to your research?

- Authority: Where is the source published? Who is the author? Are they considered reputable and trustworthy in their field?

- Accuracy: Is the source supported by evidence? Are the claims cited correctly?

- Purpose: What was the motive behind publishing this source?
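As a rough illustration, the five questions above can be recorded as a simple checklist so that it is obvious which criteria a source fails. This is only a sketch: the class name `CraapCheck` and its methods are hypothetical, and treating each criterion as a yes/no answer is an assumption made for brevity, since real evaluations are usually a matter of degree.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class CraapCheck:
    """Hypothetical yes/no record of the five CRAAP questions for one source."""
    currency: bool   # Is the source up to date?
    relevance: bool  # Is it relevant to your research?
    authority: bool  # Are the author and publisher reputable?
    accuracy: bool   # Is it supported by evidence and cited correctly?
    purpose: bool    # Is the motive to inform rather than to sell or persuade?

    def failed(self) -> List[str]:
        """Return the names of the criteria answered 'no', in CRAAP order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def passes(self) -> bool:
        """A source passes this simplified check only if no criterion fails."""
        return not self.failed()


# Example: a recent, relevant, well-cited article published by a company
# that sells the product being discussed.
check = CraapCheck(currency=True, relevance=True, authority=False,
                   accuracy=True, purpose=False)
print(check.failed())
print(check.passes())
```

Listing the failed criteria, rather than returning a single score, keeps the judgment with the researcher: as the text notes, how much weight each criterion deserves depends on the research topic.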

The criteria for evaluating each point depend on your research topic. For example, if you are researching cutting-edge scientific technology, a source from 10 years ago will not be sufficiently current. However, if you are researching the Peloponnesian War, a source from 200 years ago would be reasonable to refer to.

Be careful when ascertaining purpose. It can be very unclear (often by design!) what a source’s motive is. For example, a journal article discussing the efficacy of a particular medication may seem credible, but if the publisher is the manufacturer of the medication, you can’t be sure that it is free from bias. As a rule of thumb, if a source is even passively trying to convince you to purchase something, it may not be credible.

Newspapers can be a great way to glean first-hand information about a historical event or situate your research topic within a broader context. However, the veracity and reliability of online news sources can vary enormously, so be sure to pay careful attention to authority here.

When evaluating academic journals or books published by university presses, it’s always a good rule of thumb to ensure they are peer-reviewed and come from a reputable journal or press.

What is peer review?

The peer review process evaluates submissions to academic journals. A panel of reviewers in the same subject area decides whether a submission should be accepted for publication based on a set of criteria. For this reason, academic journals are often considered among the most credible sources you can use in a research project, provided that the journal itself is trustworthy and well-regarded.

The sources you use depend on the kind of research you are conducting. For preliminary research and getting to know a new topic, you could use a combination of primary, secondary, and tertiary sources.

- Encyclopedias

- Websites with .edu or .org domains

- News sources with first-hand reporting

- Research-oriented magazines like ScienceMag or Nature Weekly.

As you dig deeper into your scholarly research, books and academic journals are usually your best bet. Academic journals are often a great place to find trustworthy and credible content, and are considered one of the most reliable sources you can use in academic writing.

- Is the journal indexed in academic databases?

- Has the journal had to retract many articles?

- Are the journal’s policies on copyright and peer review easily available?

- Are there solid “About” and “Scope” pages detailing what sorts of articles they publish?

- Has the author of the article published other articles? A quick Google Scholar search will show you.

- Has the author been cited by other scholars? Google Scholar also has a function called “Cited By” that can show you where the author has been cited. A high number of “Cited By” results can often be an indicator of credibility.

Google Scholar is a search engine for academic sources. This is a great place to kick off your research. You can also consider using an academic database like LexisNexis or government open data to get started.

Open Educational Resources, or OERs, are materials that have been licensed for “free use” in educational settings. Legitimate OERs can be a great resource. Be sure they have a Creative Commons license allowing them to be duplicated and shared, and meet the CRAAP test criteria, especially in the authority section. The OER Commons is a public digital library that is curated by librarians, and a solid place to start.

A few places you can find academic journals online include:

| Interdisciplinary | |
| Science + Mathematics | |
| Social Science + Humanities | |

It can be especially challenging to verify the credibility of online sources. They often do not have single authors or publication dates, and their motivation can be more difficult to ascertain. Websites are not subject to the peer-review and editing process that academic journals or books go through, and can be published by anyone at any time.

When evaluating the credibility of a website, look first at the URL. The domain extension can help you understand what type of website you’re dealing with.

- Educational resources end in .edu, and are generally considered the most credible in academic settings.

- Advocacy or non-profit organizations end in .org.

- Government-affiliated websites end in .gov.

- Websites with some sort of commercial aspect end in .com (or .co.uk, or another country-specific domain).
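The domain-extension rule of thumb above can be sketched as a small lookup. This is a minimal illustration, not a credibility test: the function name `domain_hint` and the mapping are hypothetical, they cover only the extensions named in the list, and a suffix says what kind of site you are probably looking at, not whether its content is trustworthy.

```python
from urllib.parse import urlparse

# Mapping taken from the list above; any other extension is reported as "unknown".
SUFFIX_HINTS = {
    ".edu": "educational",
    ".org": "advocacy or non-profit",
    ".gov": "government-affiliated",
    ".com": "commercial",
    ".co.uk": "commercial",
}


def domain_hint(url: str) -> str:
    """Return a rough site category based on the URL's domain extension.

    Assumes the URL includes a scheme such as https://; without one,
    urlparse cannot identify the host and the result is "unknown".
    """
    host = (urlparse(url).hostname or "").lower()
    # Check longer suffixes first so that ".co.uk" is matched as a whole.
    for suffix in sorted(SUFFIX_HINTS, key=len, reverse=True):
        if host.endswith(suffix):
            return SUFFIX_HINTS[suffix]
    return "unknown"


print(domain_hint("https://www.scribbr.com/working-with-sources/credible-sources/"))
print(domain_hint("https://library.example.edu/guide"))
```

The `example.edu` address is a placeholder. Whatever the category, the questions in the rest of this section still need to be asked of the page itself.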

In general, check for vague terms, buzzwords, or writing that is too emotive or subjective. Beware of grandiose claims, and critically analyze anything not cited or backed up by evidence.

- How does the website look and feel? Does it look professional to you?

- Is there an “About Us” page, or a way to contact the author or organization if you need clarification on a claim they have made?

- Are there links to other sources on the page, and are they trustworthy?

- Can the information you found be verified elsewhere, even via a simple Google search?

- When was the website last updated? If it hasn’t been updated recently, it may not pass the CRAAP test.

- Does the website have a lot of advertisements or sponsored content? This could be a sign of bias.

- Is a source of funding disclosed? This could also give you insight into the author and publisher’s motivations.

Social media posts, blogs, and personal websites can be good resources for a situational analysis or grounding of your preliminary ideas, but exercise caution here. These highly personal and subjective sources are seldom reliable enough to stand on their own in your final research product.

Similarly, Wikipedia is not considered a reliable source due to the fact that it can be edited by anyone at any time. However, it can be a good starting point for general information and finding other sources.

Checklist: Is my source credible?

- My source is relevant to my research topic.

- My source is recent enough to contain up-to-date information on my topic.

- There are no glaring grammatical or orthographic errors.

- The author is an expert in their field.

- The information provided is accurate to the best of my knowledge. I have checked that it is supported by evidence and/or verifiable elsewhere.

- My source cites or links to other sources that appear relevant and trustworthy.

- There is a way to contact the author or publisher of my source.

- The purpose of my source is to educate or inform, not to sell a product or push a particular opinion.

- My source is unbiased, and offers multiple perspectives fairly.

- My source avoids vague or grandiose claims, and writing that is too emotive or subjective.

- [For academic journals]: My source is peer-reviewed and published in a reputable and established journal.

- [For web sources]: The layout of my source is professional and recently updated. Backlinks to other sources are up-to-date and not broken.

- [For web sources]: My source’s URL suggests the domain is trustworthy, e.g. a .edu address.

A credible source should pass the CRAAP test and follow these guidelines:

- The information should be current and up to date.

- For a web source, the URL and layout should signify that it is trustworthy.

Peer review is a process of evaluating submissions to an academic journal. Utilizing rigorous criteria, a panel of reviewers in the same subject area decides whether to accept each submission for publication. For this reason, academic journals are often considered among the most credible sources you can use in a research project, provided that the journal itself is trustworthy and well-regarded.

The CRAAP test is an acronym to help you evaluate the credibility of a source you are considering using. It is an important component of information literacy. The CRAAP test has five main components:

- Currency: Is the source up to date?

- Relevance: Is the source relevant to your research?

- Authority: Where is the source published? Who is the author? Are they considered reputable and trustworthy in their field?

- Accuracy: Is the source supported by evidence? Are the claims cited correctly?

- Purpose: What was the motive behind publishing this source?

Academic dishonesty can be intentional or unintentional, ranging from something as simple as claiming to have read something you didn’t to copying your neighbor’s answers on an exam. You can commit academic dishonesty with the best of intentions, such as helping a friend cheat on a paper. Severe academic dishonesty can include buying a pre-written essay or the answers to a multiple-choice test, or falsifying a medical emergency to avoid taking a final exam.

To determine if a source is primary or secondary, ask yourself:

- Was the source created by someone directly involved in the events you’re studying (primary), or by another researcher (secondary)?

- Does the source provide original information (primary), or does it summarize information from other sources (secondary)?

- Are you directly analyzing the source itself (primary), or only using it for background information (secondary)?

Some types of source are nearly always primary: works of art and literature, raw statistical data, official documents and records, and personal communications (e.g. letters, interviews). If you use one of these in your research, it is probably a primary source.

Primary sources are often considered the most credible in terms of providing evidence for your argument, as they give you direct evidence of what you are researching. However, it’s up to you to ensure the information they provide is reliable and accurate. Always make sure to properly cite your sources to avoid plagiarism.

Cite this Scribbr article: George, T. (2024, May 09). What Are Credible Sources & How to Spot Them | Examples. Scribbr. Retrieved July 10, 2024, from https://www.scribbr.com/working-with-sources/credible-sources/

Reliability and validity: Importance in Medical Research

Affiliations:

- 1 Al-Nafees Medical College, Isra University, Islamabad, Pakistan.

- 2 Fauji Foundation Hospital, Foundation University Medical College, Islamabad, Pakistan.

- PMID: 34974579

- DOI: 10.47391/JPMA.06-861

Reliability and validity are among the most important and fundamental domains in the assessment of any measuring methodology for data collection in good research. Validity is about what an instrument measures and how well it does so, whereas reliability concerns the truthfulness in the data obtained and the degree to which any measuring tool controls random error. The current narrative review was planned to discuss the importance of reliability and validity of data-collection or measurement techniques used in research. It describes and explores comprehensively the reliability and validity of research instruments and also discusses different forms of reliability and validity with concise examples. An attempt has been made to give a brief literature review regarding the significance of reliability and validity in medical sciences.

Keywords: Validity, Reliability, Medical research, Methodology, Assessment, Research tools.