Present Your Data Like a Pro

- Joel Schwartzberg

Demystify the numbers. Your audience will thank you.

While a good presentation has data, data alone doesn’t guarantee a good presentation. It’s all about how that data is presented. The quickest way to confuse your audience is by sharing too many details at once. The only data points you should share are those that significantly support your point — and ideally, one point per chart. To avoid the debacle of sheepishly translating hard-to-see numbers and labels, rehearse your presentation with colleagues sitting as far away as the actual audience would. While you’ve been working with the same chart for weeks or months, your audience will be exposed to it for mere seconds. Give them the best chance of comprehending your data by using simple, clear, and complete language to identify X and Y axes, pie pieces, bars, and other diagrammatic elements. Try to avoid abbreviations that aren’t obvious, and don’t assume labeled components on one slide will be remembered on subsequent slides. Every valuable chart or pie graph has an “Aha!” zone — a number or range of data that reveals something crucial to your point. Make sure you visually highlight the “Aha!” zone, reinforcing the moment by explaining it to your audience.

With so many ways to spin and distort information these days, a presentation needs to do more than simply share great ideas — it needs to support those ideas with credible data. That’s true whether you’re an executive pitching new business clients, a vendor selling her services, or a CEO making a case for change.

- Joel Schwartzberg oversees executive communications for a major national nonprofit, is a professional presentation coach, and is the author of Get to the Point! Sharpen Your Message and Make Your Words Matter and The Language of Leadership: How to Engage and Inspire Your Team. You can find him on LinkedIn and X (TheJoelTruth).

Understanding Data Presentations (Guide + Examples)

In this age of overwhelming information, the ability to convey data effectively has become extremely valuable. Choosing a data presentation type starts with the nature of your data and the message you aim to convey, since different types of visualizations serve distinct purposes. Whether you’re developing a report or simply trying to communicate complex information, how you present data influences how well your audience understands and engages with it. This guide walks you through the different ways of presenting data.

Table of Contents

- What is a Data Presentation?

- What Should a Data Presentation Include

- Line Graphs

- Treemap Chart

- Scatter Plot

- How to Choose a Data Presentation Type

- Recommended Data Presentation Templates

- Common Mistakes Done in Data Presentation

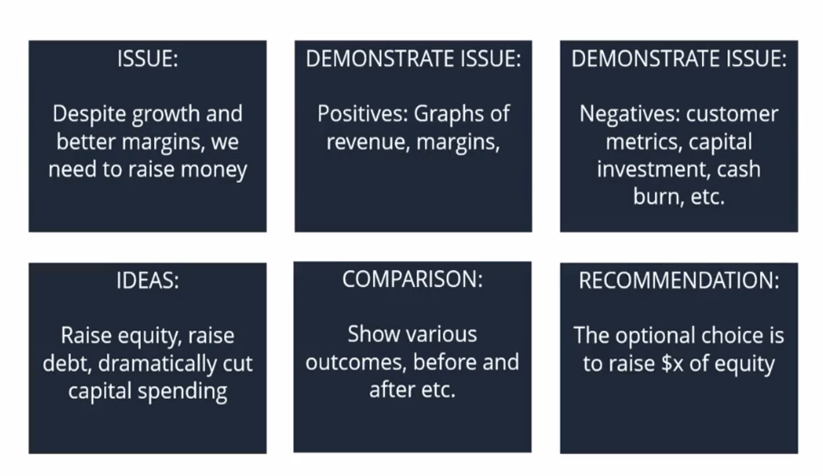

A data presentation is a slide deck that aims to disclose quantitative information to an audience through the use of visual formats and narrative techniques derived from data analysis, making complex data understandable and actionable. This process requires a series of tools, such as charts, graphs, tables, infographics, dashboards, and so on, supported by concise textual explanations to improve understanding and boost retention rate.

Data presentations require us to distill data into a format that allows the presenter to highlight trends, patterns, and insights so that the audience can act upon the shared information. In short, the goal of a data presentation is to enable viewers to grasp complicated concepts or trends quickly, facilitating informed decision-making or deeper analysis.

Data presentations go beyond the mere use of graphical elements. Seasoned presenters combine visuals with the art of data storytelling, so the speech connects the points through a narrative that resonates with the audience. The purpose of the presentation (to inspire, persuade, inform, support decision-making, and so on) determines which data presentation format is best suited to the job.

To nail your upcoming data presentation, make sure it includes the following elements:

- Clear Objectives: Understand the intent of your presentation before selecting the graphical layout and metaphors to make content easier to grasp.

- Engaging introduction: Use a powerful hook from the get-go. For instance, you can ask a big question or present a problem that your data will answer. Take a look at our guide on how to start a presentation for tips & insights.

- Structured Narrative: Your data presentation must tell a coherent story. This means a beginning where you present the context, a middle section in which you present the data, and an ending that uses a call-to-action. Check our guide on presentation structure for further information.

- Visual Elements: These are the charts, graphs, and other elements of visual communication we ought to use to present data. This article will cover one by one the different types of data representation methods we can use, and provide further guidance on choosing between them.

- Insights and Analysis: This is not just showcasing a graph and letting people get an idea about it. A proper data presentation includes the interpretation of that data, the reason why it’s included, and why it matters to your research.

- Conclusion & CTA: Ending your presentation with a call to action is necessary. Whether you intend to win your audience over to your services, inspire them to change the world, or pursue some other purpose, there must be a stage in which you summarize what you shared and show the path to staying in touch. Plan ahead whether you want to use a thank-you slide, a video presentation, or another method tailored to the kind of presentation you deliver.

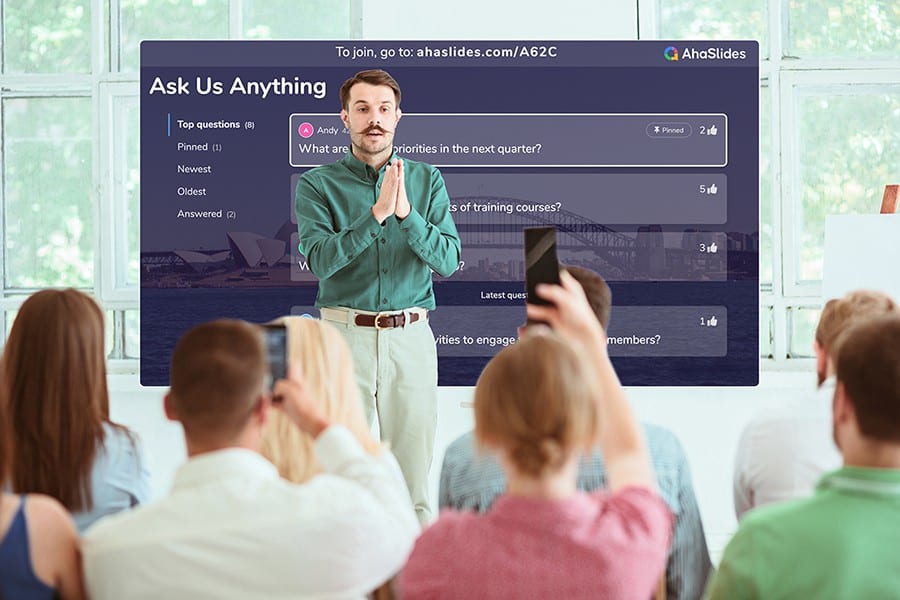

- Q&A Session: After your speech is concluded, allocate 3-5 minutes for the audience to raise any questions about the information you disclosed. This is an extra chance to establish your authority on the topic. Check our guide on questions and answer sessions in presentations here.

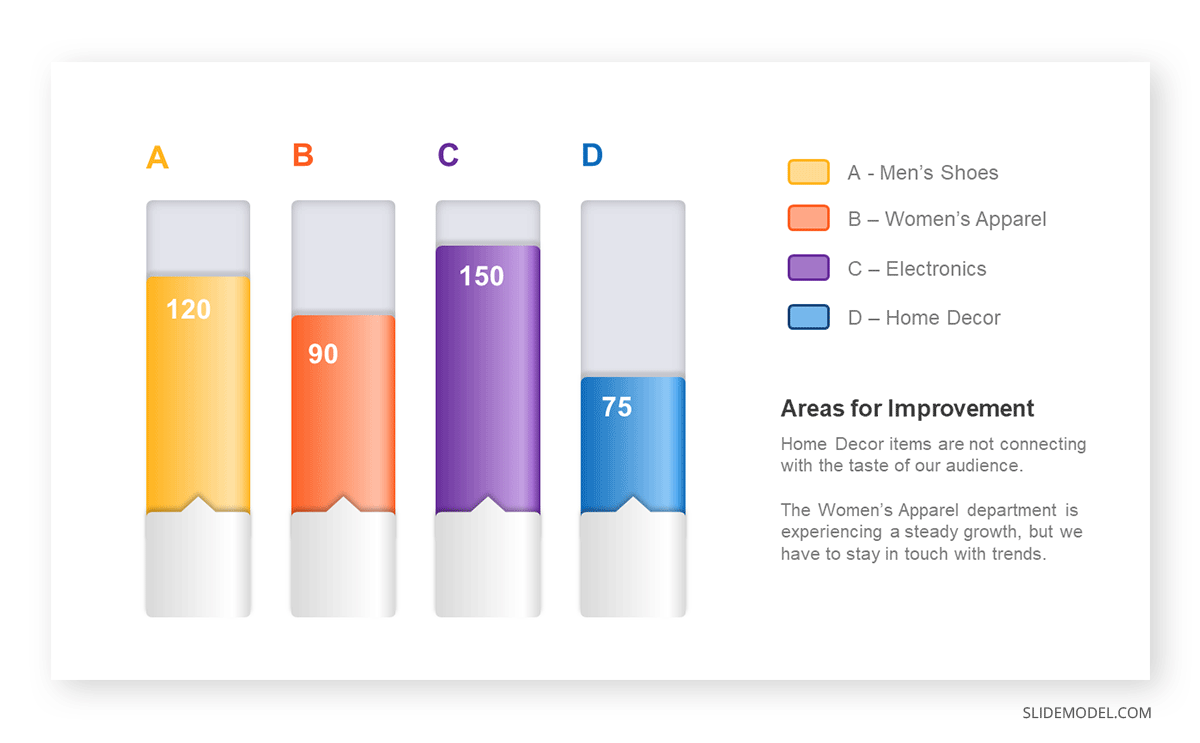

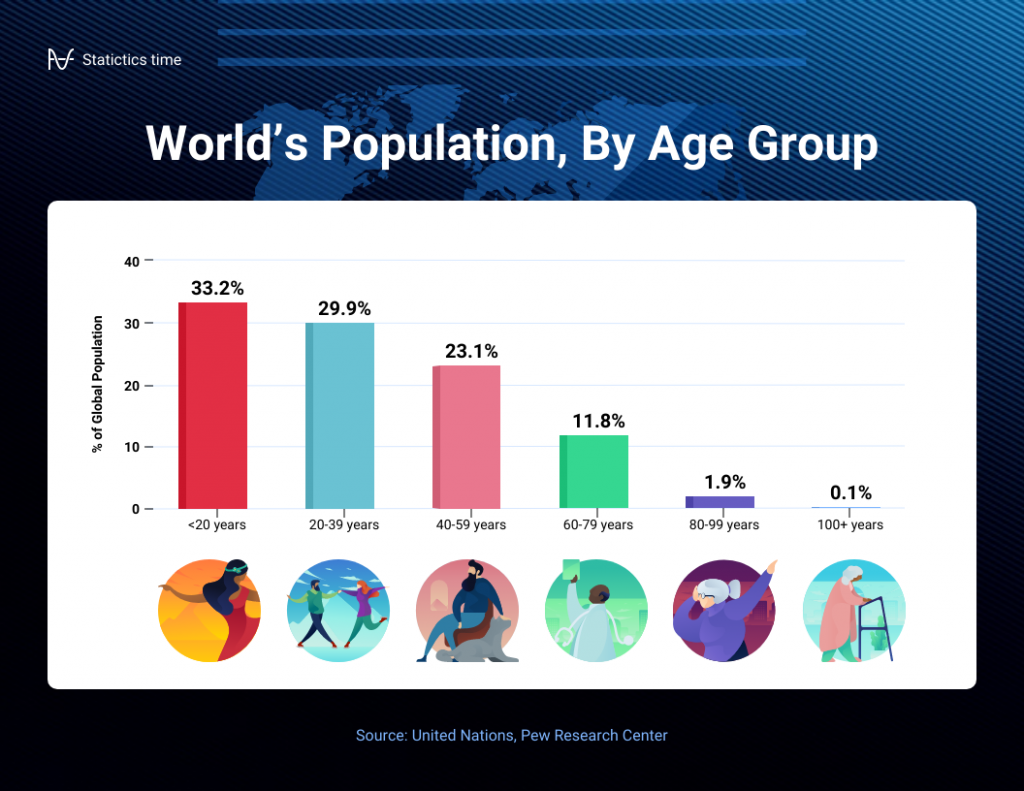

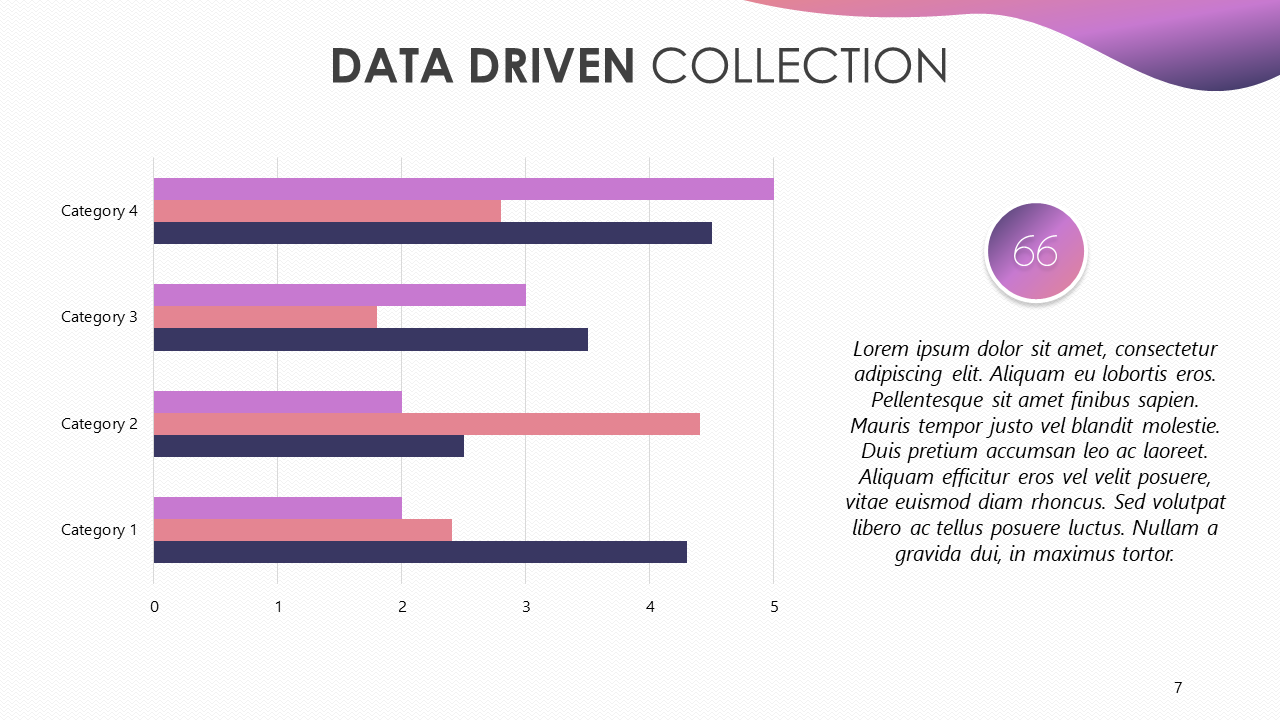

Bar charts are a graphical representation of data using rectangular bars to show quantities or frequencies in an established category. They make it easy for readers to spot patterns or trends. Bar charts can be horizontal or vertical, although the vertical format is commonly known as a column chart. They display categorical, discrete, or continuous variables grouped in class intervals [1] . They include an axis and a set of labeled bars horizontally or vertically. These bars represent the frequencies of variable values or the values themselves. Numbers on the y-axis of a vertical bar chart or the x-axis of a horizontal bar chart are called the scale.

Real-Life Application of Bar Charts

Let’s say a sales manager is presenting sales figures to an audience. To build a bar chart, the manager follows these steps.

Step 1: Selecting Data

The first step is to identify the specific data you will present to your audience.

The sales manager has highlighted these products for the presentation.

- Product A: Men’s Shoes

- Product B: Women’s Apparel

- Product C: Electronics

- Product D: Home Decor

Step 2: Choosing Orientation

Opt for a vertical layout for simplicity. Vertical bar charts help compare different categories when there are not too many of them [1]. They can also help show different trends. Here, a vertical bar chart is used in which each bar represents one of the four chosen products. Once the data is plotted, the height of each bar directly represents the sales performance of the respective product.

The tallest bar (Electronics – Product C) shows the highest sales. The shorter bars (Women’s Apparel – Product B and Home Decor – Product D), however, need attention: they indicate areas that require further analysis or strategies for improvement.

Step 3: Colorful Insights

Different colors are used to differentiate each product. It is essential to show a color-coded chart where the audience can distinguish between products.

- Men’s Shoes (Product A): Yellow

- Women’s Apparel (Product B): Orange

- Electronics (Product C): Violet

- Home Decor (Product D): Blue
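
As a rough illustration of the steps above, the following Python sketch scales each product’s sales to a bar length and pairs it with the color assigned in Step 3. The sales figures are hypothetical placeholders (the example does not give actual numbers), chosen only so that Electronics is the tallest bar, and `bar_lengths` and `render_chart` are illustrative names rather than part of any tool mentioned in this guide.

```python
# Hypothetical sales figures (in thousands of dollars); the article gives no real numbers.
SALES = {
    "Men's Shoes": 120,
    "Women's Apparel": 80,
    "Electronics": 200,
    "Home Decor": 60,
}

# Colors assigned to each product in Step 3.
COLORS = {
    "Men's Shoes": "yellow",
    "Women's Apparel": "orange",
    "Electronics": "violet",
    "Home Decor": "blue",
}


def bar_lengths(values, width=40):
    """Scale each value so the largest one spans `width` characters."""
    peak = max(values.values())
    return {name: round(value * width / peak) for name, value in values.items()}


def render_chart(values, colors, width=40):
    """Return a text bar chart with one line per category."""
    lengths = bar_lengths(values, width)
    lines = []
    for name, value in values.items():
        bar = "#" * lengths[name]
        lines.append(f"{name:<16} {bar} {value} ({colors[name]})")
    return "\n".join(lines)


print(render_chart(SALES, COLORS))
```

Because every bar is scaled against the largest value, the relative heights stay comparable no matter which units the real data uses.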

Bar charts are straightforward and easily understandable for presenting data. They are versatile when comparing products or any categorical data [2], and they adapt well to retail scenarios. Despite that, bar charts have a few shortcomings. They are less effective than line graphs at illustrating continuous trends over time. Besides, overloading the chart with numerous products can lead to visual clutter, diminishing its effectiveness.

For more information, check our collection of bar chart templates for PowerPoint .

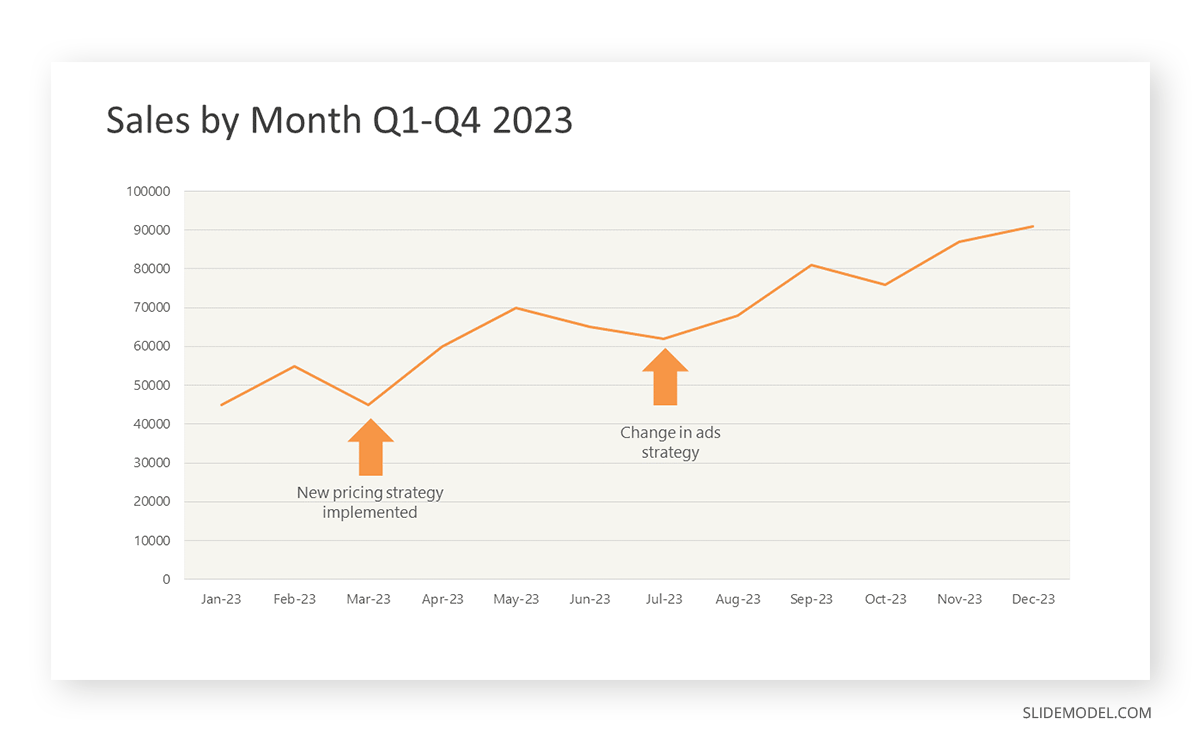

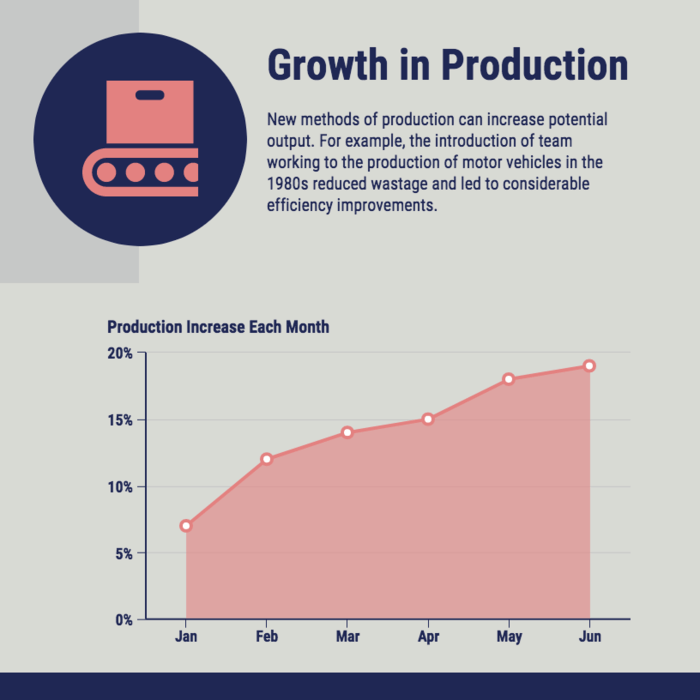

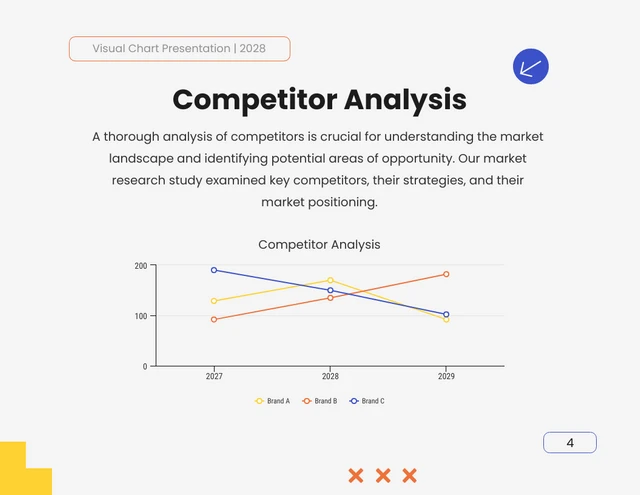

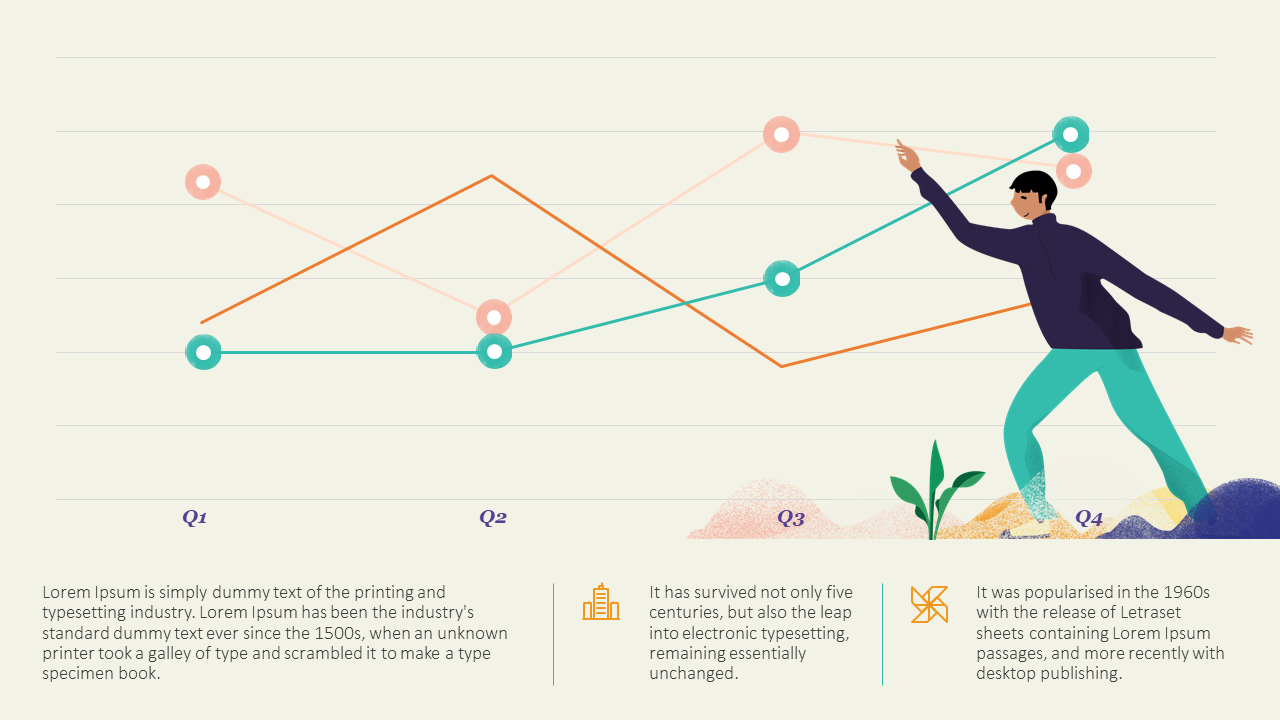

Line graphs help illustrate data trends, progressions, or fluctuations by connecting a series of data points called ‘markers’ with straight line segments. This provides a straightforward representation of how values change [5]. Their versatility makes them invaluable for scenarios requiring a visual understanding of continuous data. Plotting several lines on the same graph also allows us to compare multiple datasets over the same timeline. Line graphs simplify complex information so the audience can quickly grasp the ups and downs of values. From tracking stock prices to analyzing experimental results, you can use them to show how data changes over a continuous timeline with simplicity and clarity.

Real-life Application of Line Graphs

To understand line graphs thoroughly, we will use a real case. Imagine you’re a financial analyst presenting a tech company’s monthly sales for a licensed product over the past year. Investors want insights into sales behavior by month, how market trends may have influenced sales performance and reception to the new pricing strategy. To present data via a line graph, you will complete these steps.

First, you need to gather the data. In this case, your data will be the sales numbers. For example:

- January: $45,000

- February: $55,000

- March: $45,000

- April: $60,000

- May: $70,000

- June: $65,000

- July: $62,000

- August: $68,000

- September: $81,000

- October: $76,000

- November: $87,000

- December: $91,000

After choosing the data, the next step is to select the orientation. Like bar charts, line graphs can be vertical or horizontal. To keep this simple, we will place the timeline on the horizontal x-axis and the sales numbers on the vertical y-axis.

Step 3: Connecting Trends

After adding the data to your preferred software, you will plot a line graph. In the graph, each month’s sales are represented by data points connected by a line.
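
For readers who build the chart in code rather than in presentation software, here is a minimal Python sketch using the monthly figures listed above. It computes the month-over-month changes that the line will make visible. The optional `plot_sales` helper assumes matplotlib is installed, and all function names and the output file name are illustrative assumptions.

```python
# Monthly sales from the example above, in US dollars.
MONTHLY_SALES = [
    ("January", 45_000), ("February", 55_000), ("March", 45_000),
    ("April", 60_000), ("May", 70_000), ("June", 65_000),
    ("July", 62_000), ("August", 68_000), ("September", 81_000),
    ("October", 76_000), ("November", 87_000), ("December", 91_000),
]


def month_over_month(series):
    """Return (month, change) pairs relative to the previous month."""
    return [
        (month, amount - previous)
        for (_, previous), (month, amount) in zip(series, series[1:])
    ]


def plot_sales(series, path="monthly_sales.png"):
    """Draw the line graph and save it; assumes matplotlib is installed."""
    import matplotlib.pyplot as plt  # imported lazily so the rest runs without it

    months = [month[:3] for month, _ in series]
    amounts = [amount for _, amount in series]
    fig, ax = plt.subplots(figsize=(8, 4))
    ax.plot(months, amounts, marker="o")  # markers joined by straight segments
    ax.set_xlabel("Month")
    ax.set_ylabel("Sales (USD)")
    ax.set_title("Monthly sales")
    fig.savefig(path)


changes = month_over_month(MONTHLY_SALES)
biggest_rise = max(changes, key=lambda pair: pair[1])
biggest_drop = min(changes, key=lambda pair: pair[1])
print("Largest rise:", biggest_rise)
print("Largest drop:", biggest_drop)
```

Calling `plot_sales(MONTHLY_SALES)` would write the chart to an image file. The largest rise and drop are natural candidates for annotation when presenting the graph.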

Step 4: Adding Clarity with Color

If there are multiple lines, you can also add colors to highlight each one, making it easier to follow.

Line graphs excel at visually presenting trends over time. They help identify patterns, such as upward or downward trends. However, too many data points can clutter the graph, making it harder to interpret. Line graphs work best with continuous data and are not well suited to categorical data.

For more information, check our collection of line chart templates for PowerPoint and our article about how to make a presentation graph .

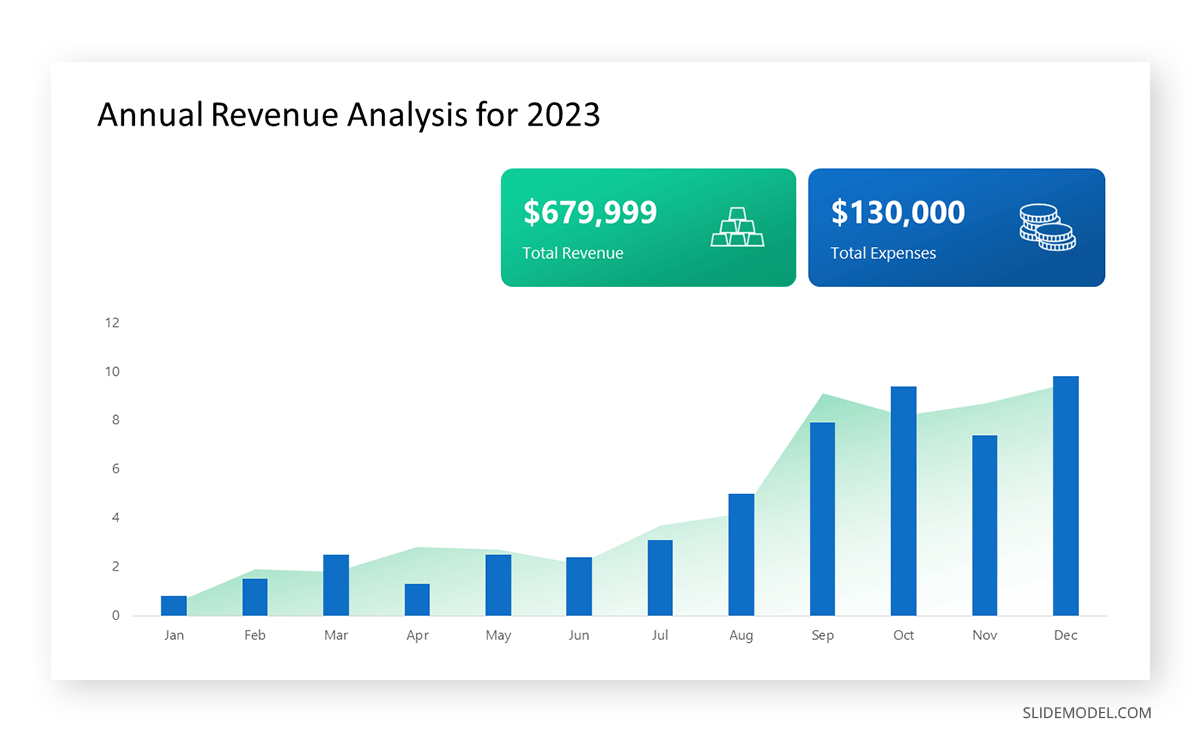

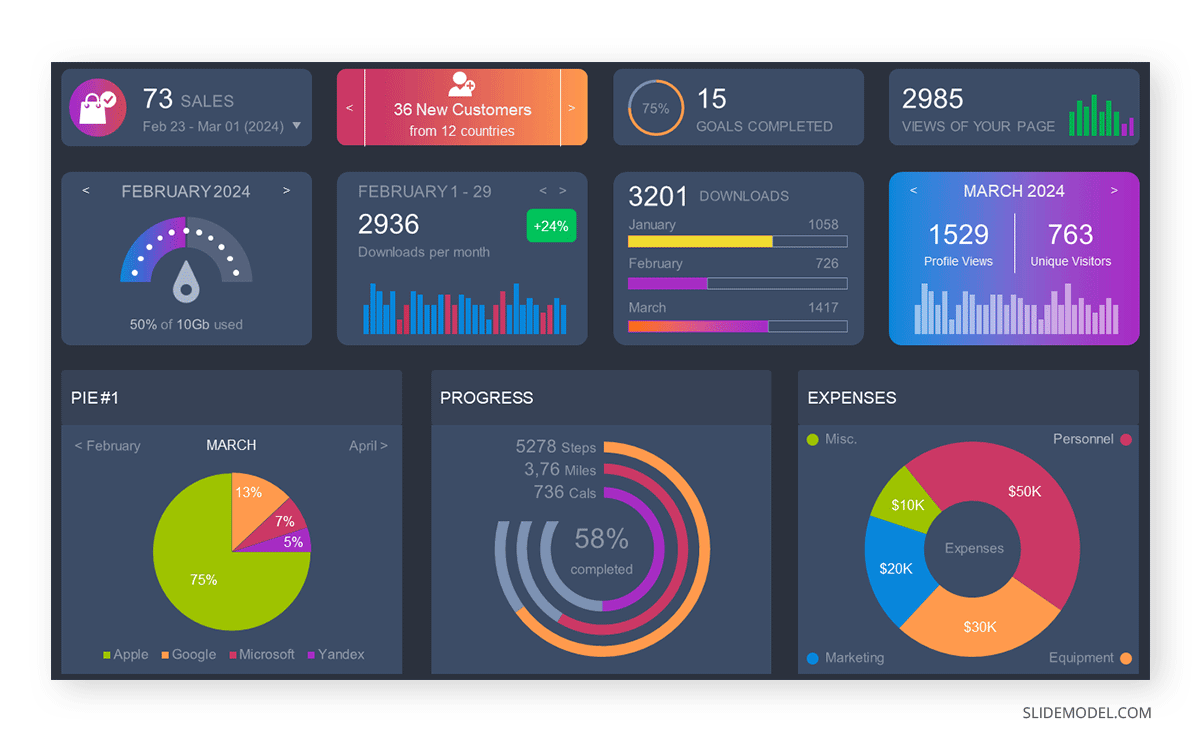

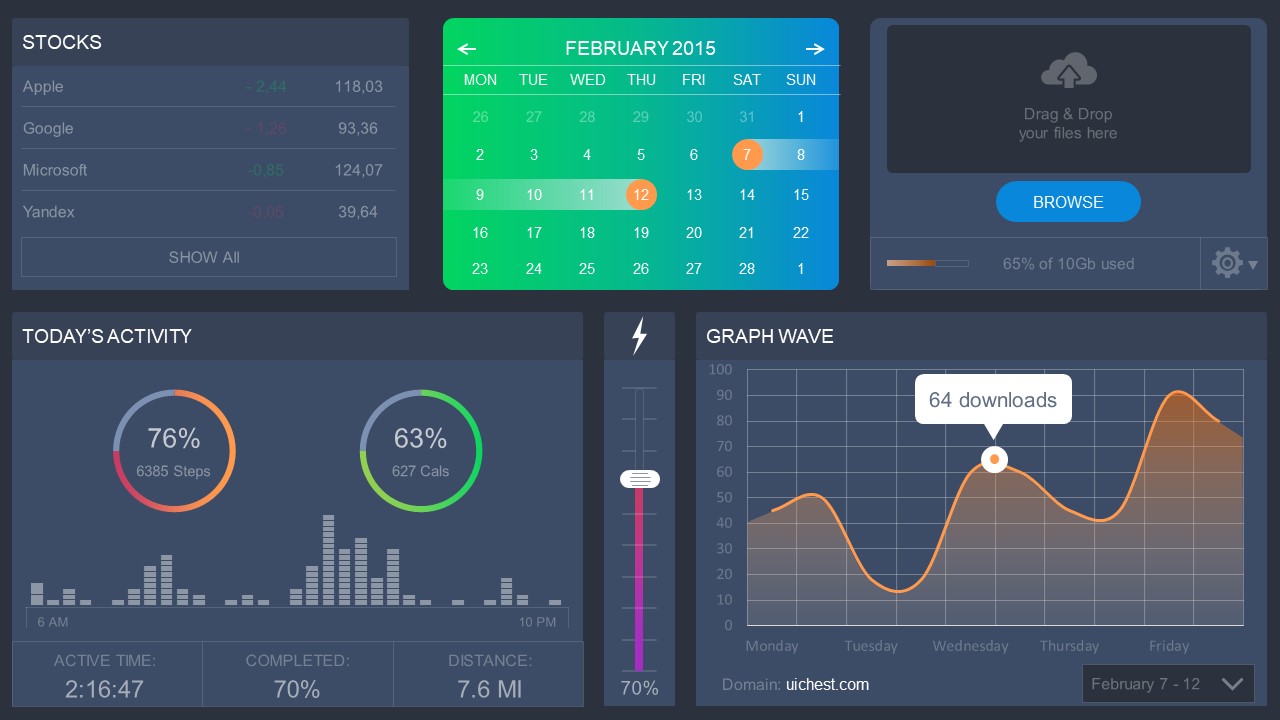

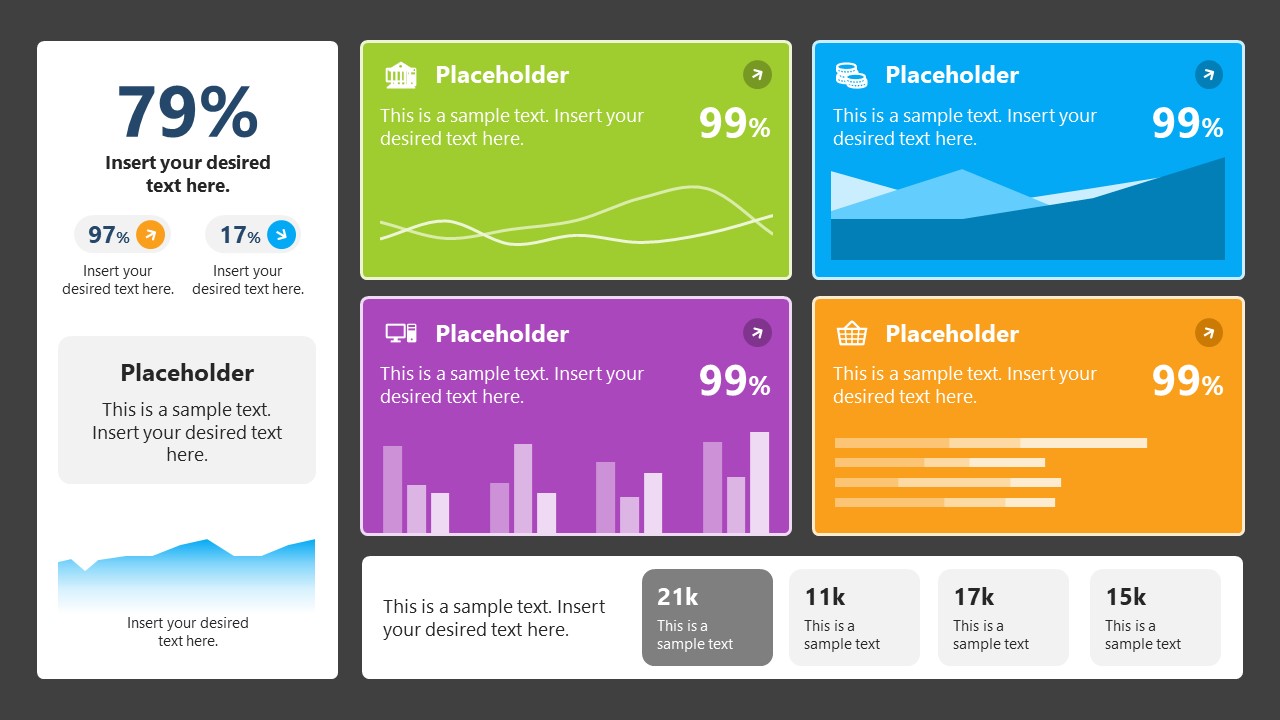

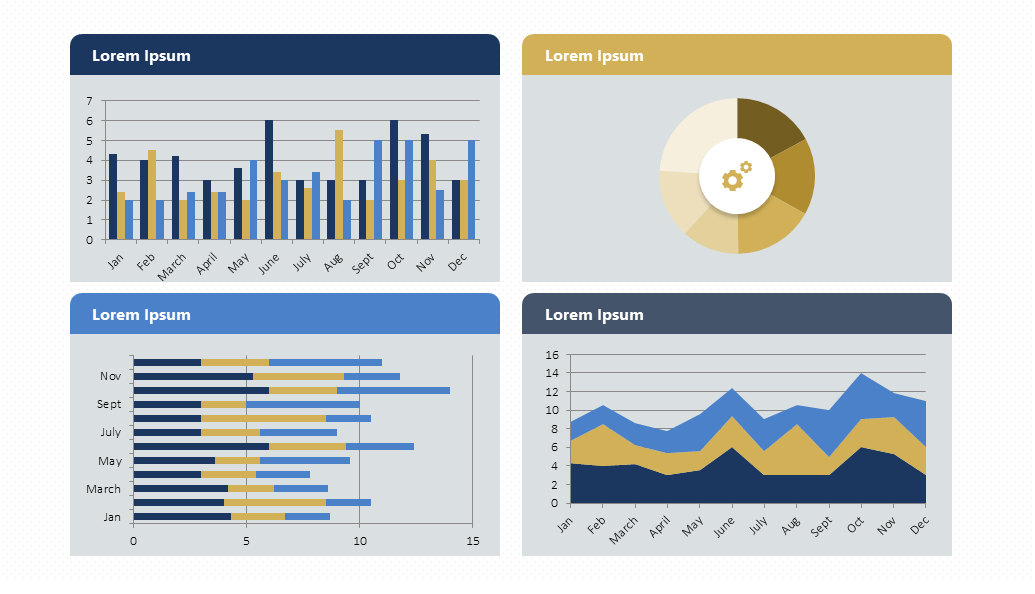

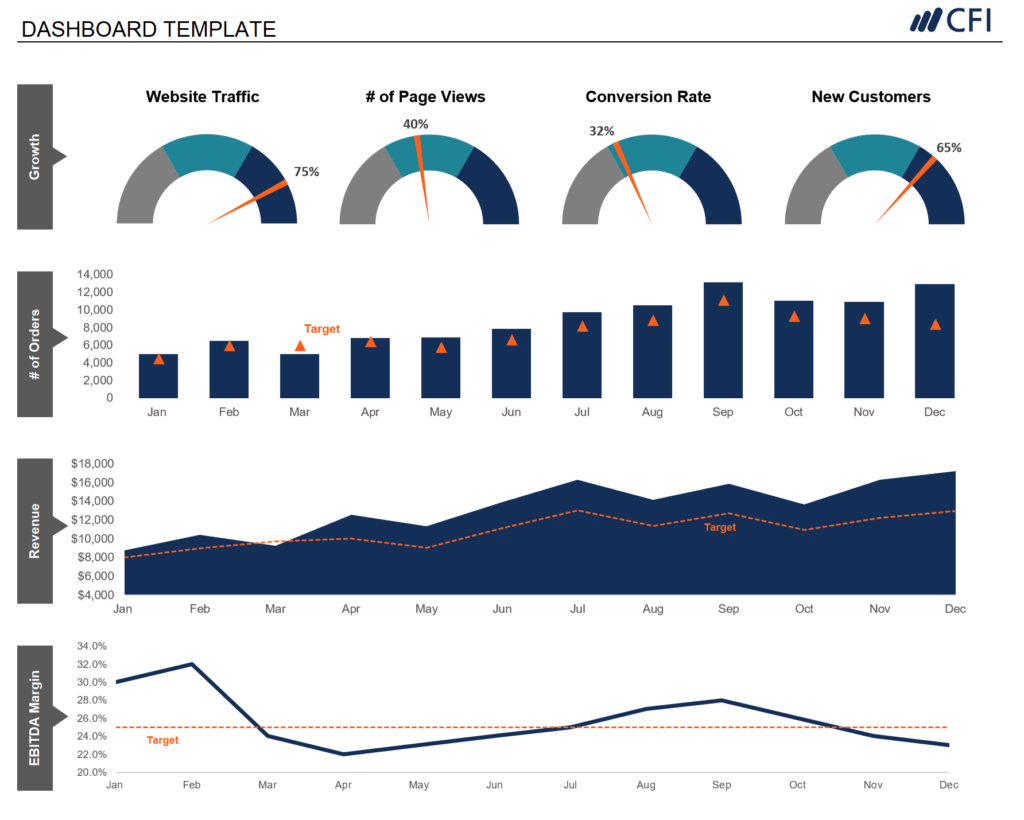

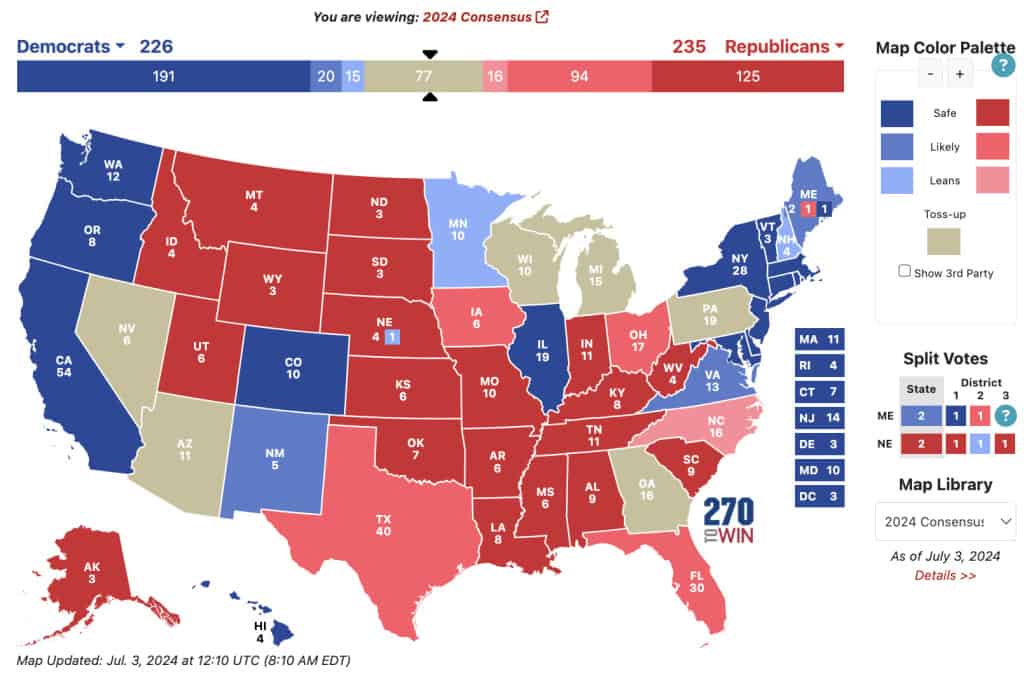

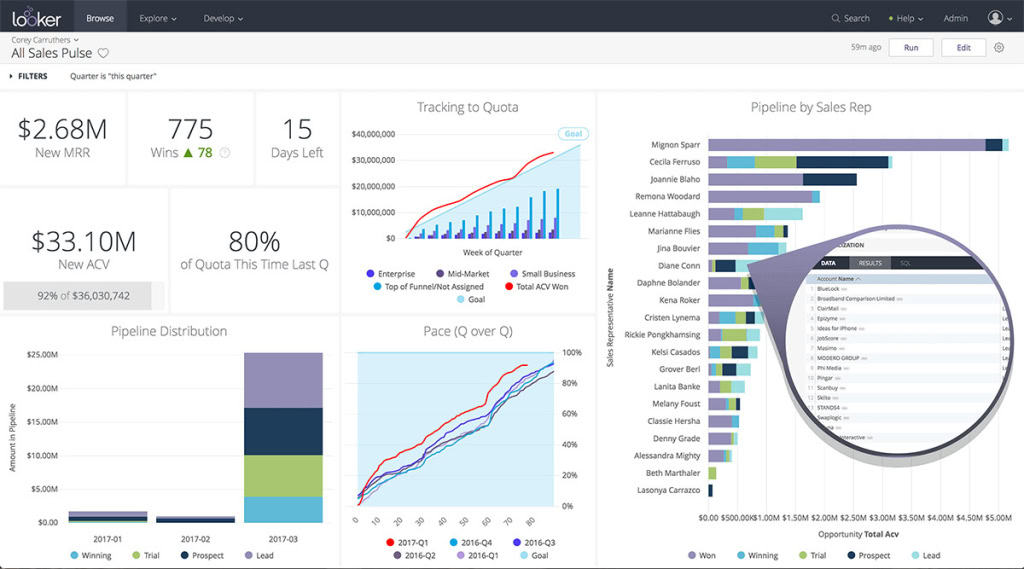

A data dashboard is a visual tool for analyzing information. Different graphs, charts, and tables are consolidated in a layout to showcase the information required to achieve one or more objectives. Dashboards help quickly see Key Performance Indicators (KPIs). You don’t make new visuals in the dashboard; instead, you use it to display visuals you’ve already made in worksheets [3] .

Keeping the number of visuals on a dashboard to three or four is recommended. Adding too many can make it hard to see the main points [4]. Dashboards can be used in business analytics to analyze sales, revenue, and marketing metrics at the same time. They are also used in the manufacturing industry, as they allow users to grasp the entire production scenario at a given moment while tracking the core KPIs for each line.

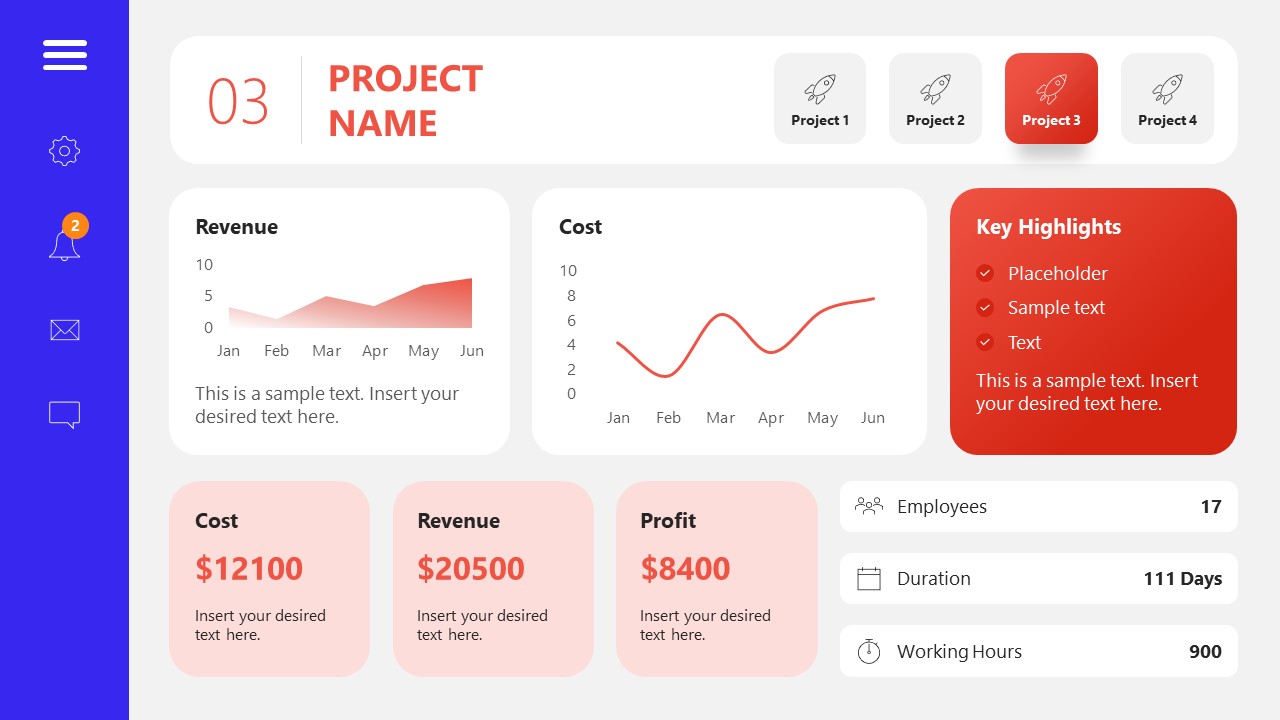

Real-Life Application of a Dashboard

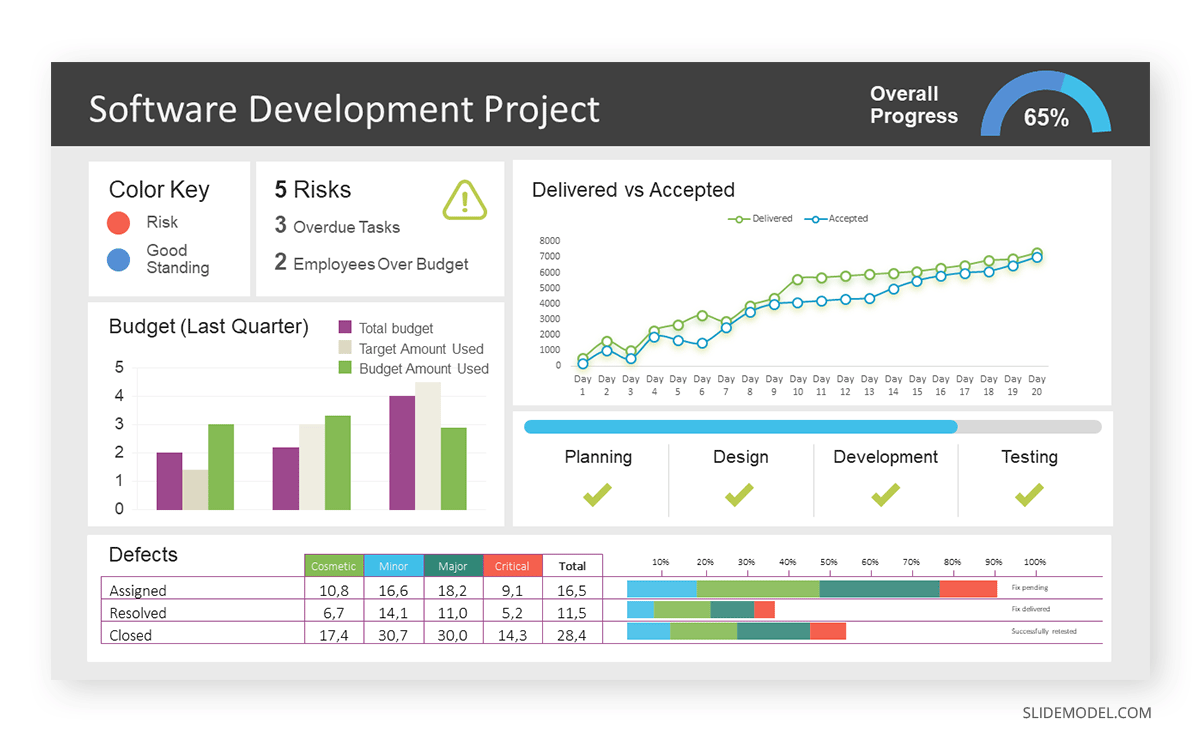

Consider a project manager presenting a software development project’s progress to a tech company’s leadership team. The manager follows these steps.

Step 1: Defining Key Metrics

To effectively communicate the project’s status, identify key metrics such as completion status, budget, and bug resolution rates. Then, choose measurable metrics aligned with project objectives.

Step 2: Choosing Visualization Widgets

After finalizing the data, presentation aids that align with each metric are selected. For this project, the project manager chooses a progress bar for the completion status and uses bar charts for budget allocation. Likewise, he implements line charts for bug resolution rates.

Step 3: Dashboard Layout

Key metrics are prominently placed in the dashboard for easy visibility, and the manager ensures that it appears clean and organized.

Dashboards provide a comprehensive view of key project metrics. Users can interact with data, customize views, and drill down for detailed analysis. However, creating an effective dashboard requires careful planning to avoid clutter. Besides, dashboards rely on the availability and accuracy of underlying data sources.

For more information, check our article on how to design a dashboard presentation , and discover our collection of dashboard PowerPoint templates .

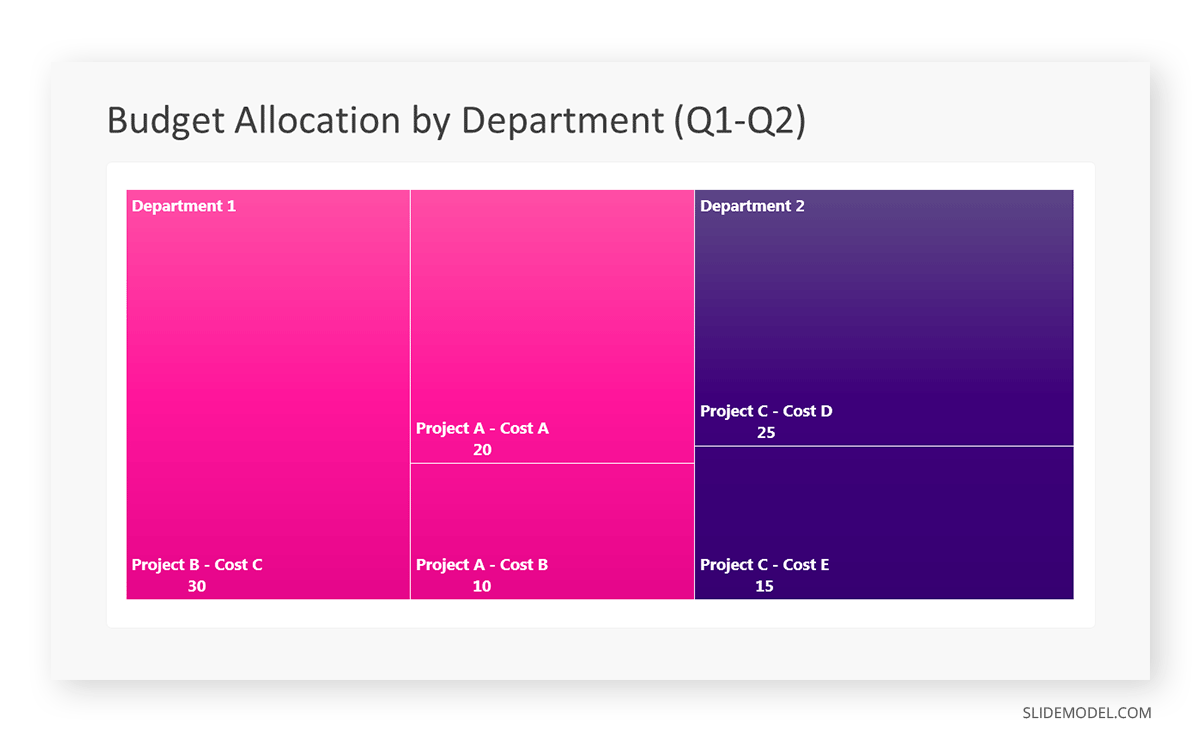

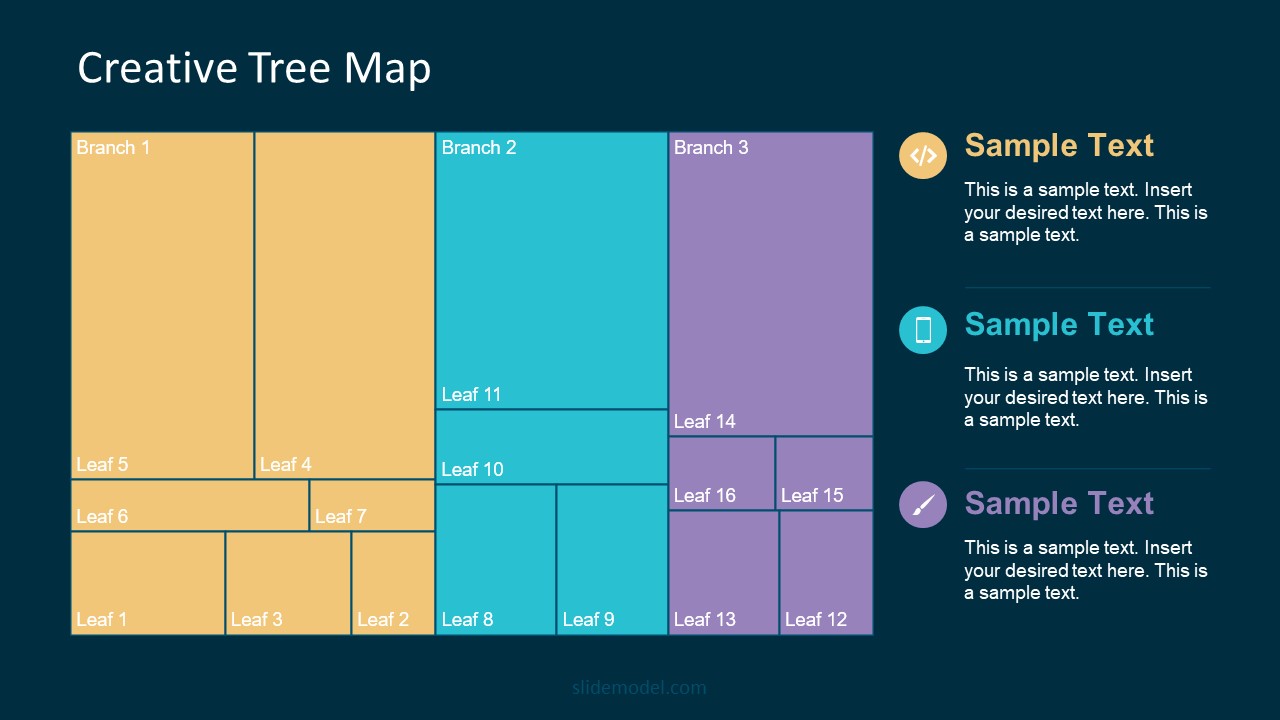

Treemap charts represent hierarchical data structured in a series of nested rectangles [6] . As each branch of the ‘tree’ is given a rectangle, smaller tiles can be seen representing sub-branches, meaning elements on a lower hierarchical level than the parent rectangle. Each one of those rectangular nodes is built by representing an area proportional to the specified data dimension.

Treemaps are useful for visualizing large datasets in compact space. It is easy to identify patterns, such as which categories are dominant. Common applications of the treemap chart are seen in the IT industry, such as resource allocation, disk space management, website analytics, etc. Also, they can be used in multiple industries like healthcare data analysis, market share across different product categories, or even in finance to visualize portfolios.

Real-Life Application of a Treemap Chart

Let’s consider a financial scenario where a financial team wants to represent the budget allocation of a company. There is a hierarchy in the process, so it is helpful to use a treemap chart. In the chart, the top-level rectangle could represent the total budget, and it would be subdivided into smaller rectangles, each denoting a specific department. Further subdivisions within these smaller rectangles might represent individual projects or cost categories.

Step 1: Define Your Data Hierarchy

While presenting data on the budget allocation, start by outlining the hierarchical structure. The sequence will be like the overall budget at the top, followed by departments, projects within each department, and finally, individual cost categories for each project.

- Top-level rectangle: Total Budget

- Second-level rectangles: Departments (Engineering, Marketing, Sales)

- Third-level rectangles: Projects within each department

- Fourth-level rectangles: Cost categories for each project (Personnel, Marketing Expenses, Equipment)
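
The hierarchy above maps naturally onto a nested structure. The Python sketch below shows one way to compute the share of its parent that each rectangle should occupy, since a treemap draws areas proportional to the underlying values. The project names and all amounts are hypothetical (the example names the levels but gives no figures), and `total` and `tile_shares` are illustrative helper names.

```python
# Hypothetical amounts: the article names the levels but gives no figures.
BUDGET = {
    "Engineering": {"Project Alpha": {"Personnel": 60_000, "Equipment": 20_000}},
    "Marketing": {"Project Beta": {"Personnel": 30_000, "Marketing Expenses": 30_000}},
    "Sales": {"Project Gamma": {"Personnel": 40_000, "Equipment": 20_000}},
}


def total(node):
    """Sum every leaf amount under a node (a leaf is a plain number)."""
    if isinstance(node, dict):
        return sum(total(child) for child in node.values())
    return node


def tile_shares(node):
    """Fraction of the parent's area that each child rectangle should occupy."""
    whole = total(node)
    return {name: total(child) / whole for name, child in node.items()}


print("Total budget:", total(BUDGET))
print("Department shares:", tile_shares(BUDGET))
print("Cost shares in Project Alpha:", tile_shares(BUDGET["Engineering"]["Project Alpha"]))
```

Applying `tile_shares` at each level gives the proportions for the nested rectangles. A charting tool then only has to decide how to lay them out.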

Step 2: Choose a Suitable Tool

It’s time to select a data visualization tool supporting Treemaps. Popular choices include Tableau, Microsoft Power BI, PowerPoint, or even coding with libraries like D3.js. It is vital to ensure that the chosen tool provides customization options for colors, labels, and hierarchical structures.

For this guide, the team uses PowerPoint because of its user-friendly interface and Treemap capabilities.

Step 3: Make a Treemap Chart with PowerPoint

After opening the PowerPoint presentation, the team chooses “SmartArt” to form the chart. The SmartArt Graphic window has a “Hierarchy” category on the left, which offers multiple layouts. Any layout that resembles a Treemap will do; the “Table Hierarchy” or “Organization Chart” options can be adapted. The team selects Table Hierarchy because it looks closest to a Treemap.

Step 5: Input Your Data

After that, a new window will open with a basic structure. They add the data one by one by clicking on the text boxes. They start with the top-level rectangle, representing the total budget.

Step 6: Customize the Treemap

By clicking on each shape, they customize its color, size, and label. At the same time, they can adjust the font size, style, and color of labels by using the options in the “Format” tab in PowerPoint. Using different colors for each level enhances the visual difference.

Treemaps excel at illustrating hierarchical structures. These charts make it easy to understand relationships and dependencies. They efficiently use space, compactly displaying a large amount of data, reducing the need for excessive scrolling or navigation. Additionally, using colors enhances the understanding of data by representing different variables or categories.

Treemaps can become complex, especially with deep hierarchies, which makes the chart challenging for some users to interpret. Space constraints also limit how much detailed information can be shown clearly within each rectangle. Without proper labeling and color coding, there’s a risk of misinterpretation.

A heatmap is a data visualization tool that uses color coding to represent values across a two-dimensional surface. In a heatmap, colors replace numbers to indicate the magnitude of each cell. This color-shaded matrix display is valuable for summarizing and understanding data sets at a glance [7]. The intensity of the color corresponds to the value it represents, making it easy to identify patterns, trends, and variations in the data.

As a tool, heatmaps help businesses analyze website interactions, revealing user behavior patterns and preferences to enhance overall user experience. In addition, companies use heatmaps to assess content engagement, identifying popular sections and areas of improvement for more effective communication. They excel at highlighting patterns and trends in large datasets, making it easy to identify areas of interest.

We can implement heatmaps to express multiple data types, such as numerical values, percentages, or even categorical data. Heatmaps help us easily spot areas with lots of activity, making them helpful in figuring out clusters [8] . When making these maps, it is important to pick colors carefully. The colors need to show the differences between groups or levels of something. And it is good to use colors that people with colorblindness can easily see.
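
To make the link between value and color intensity concrete, here is a small Python sketch that maps each cell of a grid to a shade between white and dark blue. The grid values are hypothetical, the two end colors are an arbitrary choice, and `intensity` and `shade` are illustrative names. A single-hue ramp from light to dark is used on the assumption that lightness differences remain readable for most colorblind viewers.

```python
LIGHT = (255, 255, 255)  # RGB used for the lowest value
DARK = (8, 48, 107)      # RGB used for the highest value (a dark blue)


def intensity(value, low, high):
    """Normalize a value to the range 0.0 (lowest) to 1.0 (highest)."""
    if high == low:
        return 0.0
    return (value - low) / (high - low)


def shade(value, low, high):
    """Interpolate linearly between LIGHT and DARK and return a hex color."""
    t = intensity(value, low, high)
    channels = [round(a + t * (b - a)) for a, b in zip(LIGHT, DARK)]
    return "#{:02x}{:02x}{:02x}".format(*channels)


# Hypothetical grid: clicks per page section (rows) by weekday (columns).
CLICKS = [
    [12, 30, 45],
    [5, 18, 60],
    [0, 9, 27],
]

low = min(min(row) for row in CLICKS)
high = max(max(row) for row in CLICKS)
colors = [[shade(value, low, high) for value in row] for row in CLICKS]
for row in colors:
    print(" ".join(row))
```

The darkest cell marks the highest value in the grid and the lightest cell the lowest, which is the visual cue a heatmap relies on.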

Check our detailed guide on how to create a heatmap here. Also discover our collection of heatmap PowerPoint templates .

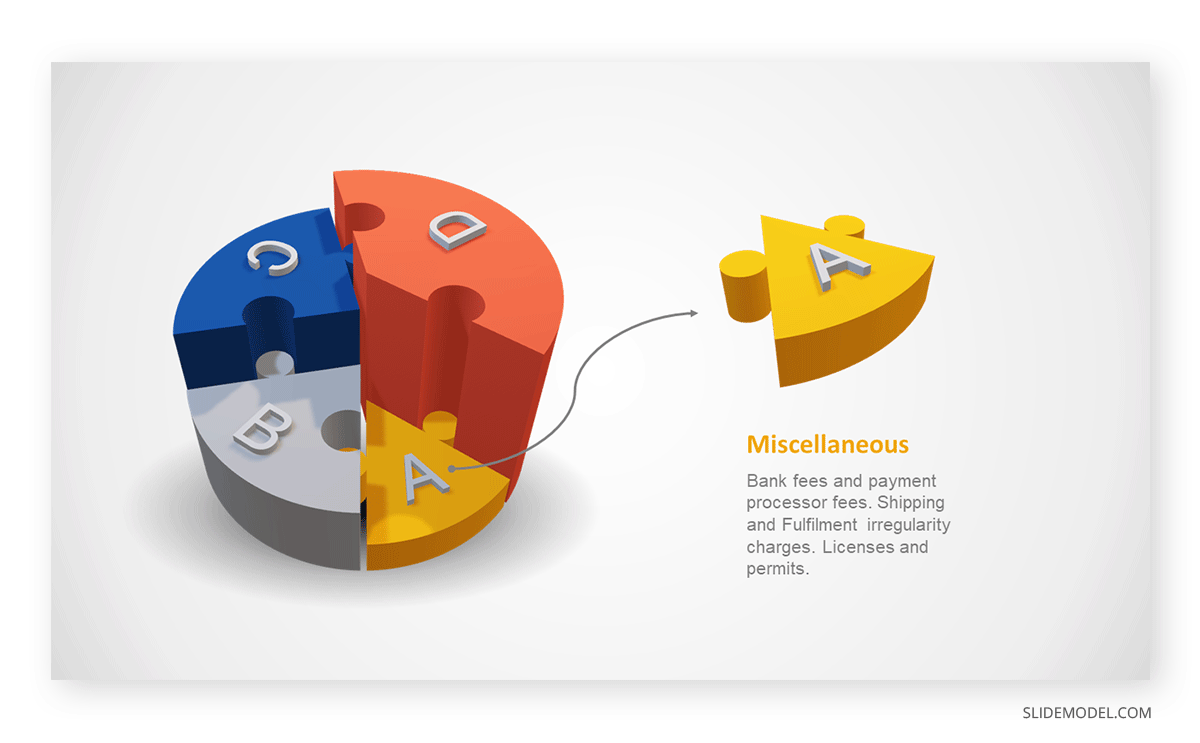

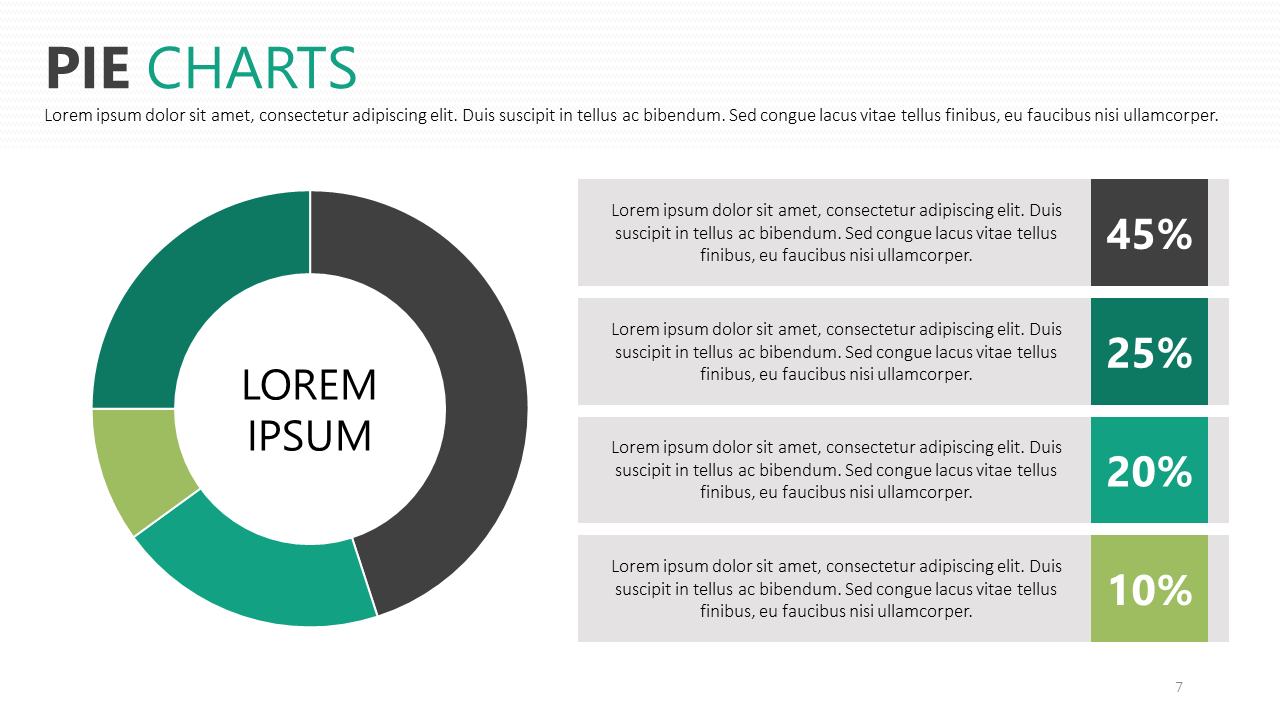

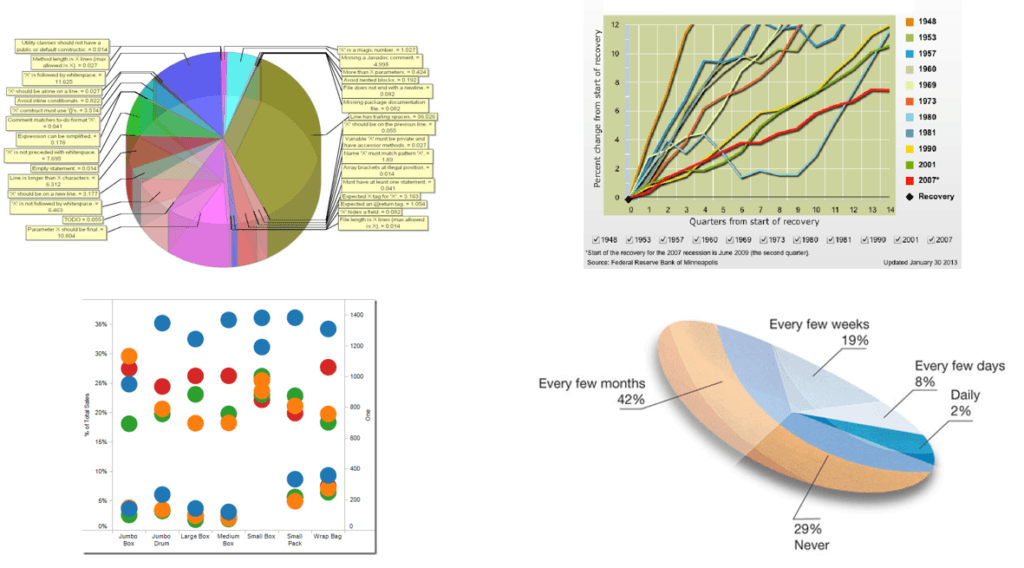

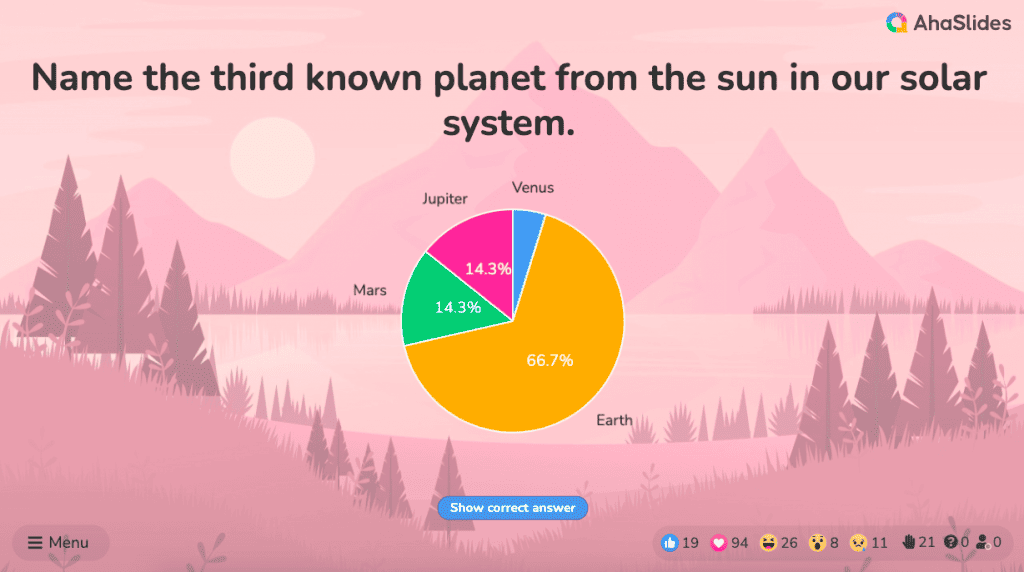

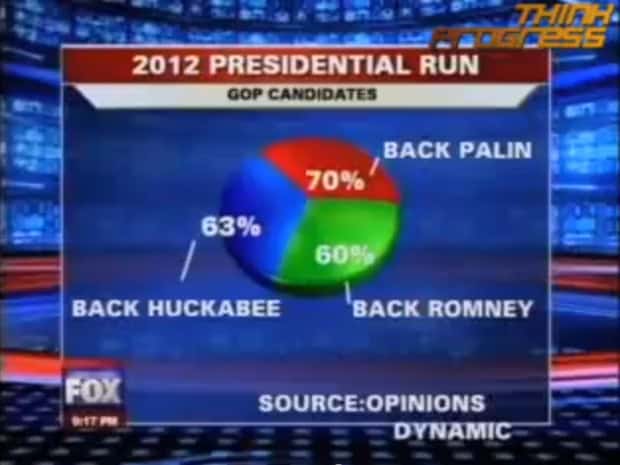

Pie charts are circular statistical graphics divided into slices to illustrate numerical proportions. Each slice represents a proportionate part of the whole, making it easy to visualize the contribution of each component to the total.

When several pie charts are shown side by side, the size of each pie can reflect the total of the data points it contains: the pie with the largest total appears largest, and the others are proportionally smaller. However, you can present all pies at the same size if proportional representation is not required [9]. Sometimes pie charts are difficult to read, or additional information is required. In those cases a variation known as the donut chart can be used instead; it has the same structure but a blank center, creating a ring shape. Presenters can add extra information in the center, and the ring shape helps to declutter the graph.

Pie charts are used in business to show percentage distribution, compare relative sizes of categories, or present straightforward data sets where visualizing ratios is essential.

Real-Life Application of Pie Charts

Consider a scenario where you want to show how a total is distributed across categories. Each slice of the pie chart represents a different category, and the size of each slice indicates the percentage of the total allocated to that category.

Step 1: Define Your Data Structure

Imagine you are presenting the distribution of a project budget among different expense categories.

- Column A: Expense Categories (Personnel, Equipment, Marketing, Miscellaneous)

- Column B: Budget Amounts ($40,000, $30,000, $20,000, $10,000)

Column B holds the values for the categories in Column A.

Step 2: Insert a Pie Chart

Using any of the accessible tools, you can create a pie chart. The most convenient options for building a pie chart in a presentation are presentation tools such as PowerPoint or Google Slides. You will notice that the pie chart assigns each expense category a percentage of the total budget by dividing the category’s amount by the total budget.

For instance:

- Personnel: $40,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 40%

- Equipment: $30,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 30%

- Marketing: $20,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 20%

- Miscellaneous: $10,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 10%
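
The same arithmetic can be reproduced in a few lines of Python. This is only a sketch of the calculation a charting tool performs internally; `slice_percentages` and `slice_angles` are illustrative names, and the angle calculation assumes the usual convention that a full pie spans 360 degrees.

```python
# Budget amounts from the example above, in US dollars.
BUDGET = {
    "Personnel": 40_000,
    "Equipment": 30_000,
    "Marketing": 20_000,
    "Miscellaneous": 10_000,
}


def slice_percentages(amounts):
    """Share of the total for each category, as a percentage."""
    total = sum(amounts.values())
    return {category: amount * 100 / total for category, amount in amounts.items()}


def slice_angles(amounts):
    """Angle in degrees that each slice occupies in a full 360-degree pie."""
    total = sum(amounts.values())
    return {category: amount * 360 / total for category, amount in amounts.items()}


percentages = slice_percentages(BUDGET)
angles = slice_angles(BUDGET)
for category in BUDGET:
    print(f"{category}: {percentages[category]:.0f}% ({angles[category]:.0f} degrees)")
```

Checking that the percentages add up to 100 is a quick sanity test before the chart goes on a slide.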

You can build the chart from these percentages or generate the pie chart directly from the raw amounts.

3D pie charts and 3D donut charts are quite popular with audiences. They stand out as visual elements in any presentation slide, so let’s take a look at how our pie chart example would look in 3D pie chart format.

Step 3: Results Interpretation

The pie chart visually illustrates the distribution of the project budget among different expense categories. Personnel constitutes the largest portion at 40%, followed by equipment at 30%, marketing at 20%, and miscellaneous at 10%. This breakdown provides a clear overview of where the project funds are allocated, which helps in informed decision-making and resource management. It is evident that personnel are a significant investment, emphasizing their importance in the overall project budget.

Pie charts provide a straightforward way to represent proportions and percentages. They are easy to understand, even for individuals with limited data analysis experience. These charts work well for small datasets with a limited number of categories.

However, a pie chart can become cluttered and less effective in situations with many categories. Accurate interpretation may be challenging, especially when dealing with slight differences in slice sizes. In addition, these charts are static and do not effectively convey trends over time.

For more information, check our collection of pie chart templates for PowerPoint .

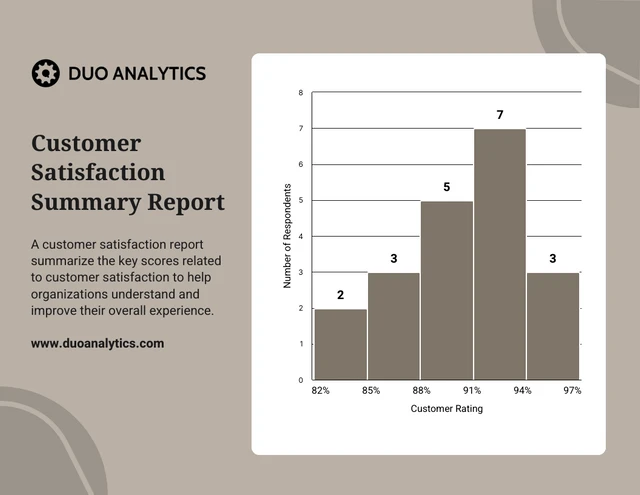

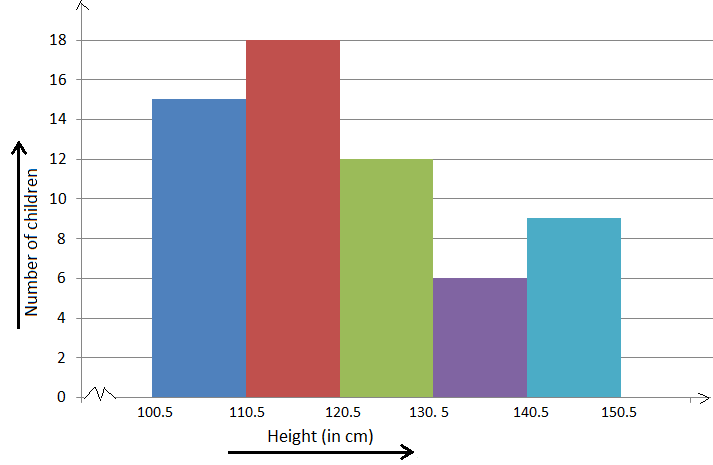

Histograms present the distribution of numerical variables. Unlike a bar chart that records each unique response separately, histograms organize numeric responses into bins and show the frequency of responses within each bin [10]. The x-axis of a histogram shows the range of values for a numeric variable, while the y-axis indicates the relative frequencies (percentage of the total counts) for that range of values.

Whenever you want to understand the distribution of your data, check which values are more common, or identify outliers, histograms are your go-to. Think of them as a spotlight on the story your data is telling. A histogram can provide a quick and insightful overview if you’re curious about exam scores, sales figures, or any numerical data distribution.

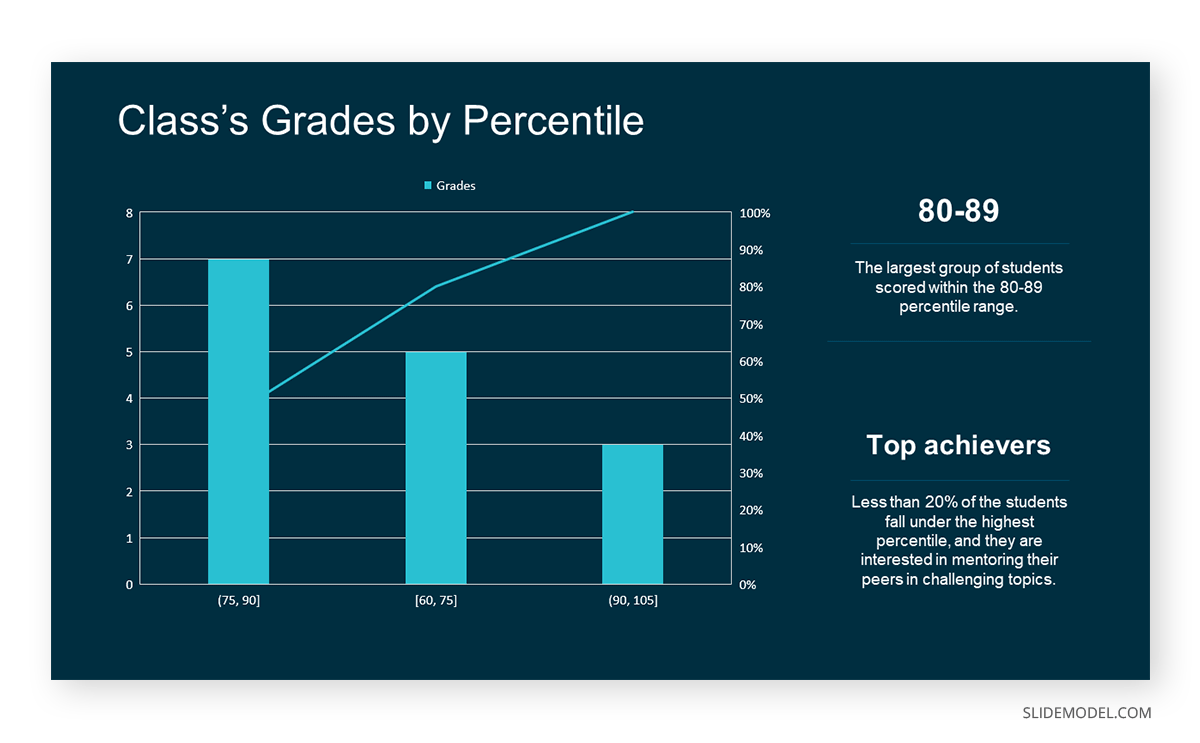

Real-Life Application of a Histogram

In the histogram data analysis presentation example, imagine an instructor analyzing a class’s grades to identify the most common score range. A histogram could effectively display the distribution. It will show whether most students scored in the average range or if there are significant outliers.

Step 1: Gather Data

The instructor begins by gathering the exam score of each student in the class.

| Name | Score |

|---|---|

| Alice | 78 |

| Bob | 85 |

| Clara | 92 |

| David | 65 |

| Emma | 72 |

| Frank | 88 |

| Grace | 76 |

| Henry | 95 |

| Isabel | 81 |

| Jack | 70 |

| Kate | 60 |

| Liam | 89 |

| Mia | 75 |

| Noah | 84 |

| Olivia | 92 |

After arranging the scores in ascending order, bin ranges are set.

Step 2: Define Bins

Bins are like categories that group similar values. Think of them as buckets that organize your data. The presenter decides how wide each bin should be based on the range of the values. For instance, the instructor sets the bin ranges based on score intervals: 60-69, 70-79, 80-89, and 90-100.

Step 3: Count Frequency

Now, he counts how many data points fall into each bin. This step is crucial because it tells you how often specific ranges of values occur. The result is the frequency distribution, showing the occurrences of each group.

Here, the instructor counts the number of students in each category.

- 60-69: 2 students (David, Kate)

- 70-79: 5 students (Alice, Emma, Grace, Jack, Mia)

- 80-89: 5 students (Bob, Frank, Isabel, Liam, Noah)

- 90-100: 3 students (Clara, Henry, Olivia)

Step 4: Create the Histogram

It’s time to turn the data into a visual representation. Draw a bar for each bin on a graph. The width of the bar should correspond to the range of the bin, and the height should correspond to the frequency. To make your histogram understandable, label the X and Y axes.

In this case, the X-axis represents the bins (the test score ranges), and the Y-axis represents the frequency.

The histogram of the class grades reveals the shape of the distribution. Most students fall in the middle of the scale: the 70-79 and 80-89 ranges hold five students each, or ten of the fifteen students combined. Three students scored 90 or above and two scored below 70, so the grades concentrate in the middle ranges with fewer students at either end. This analysis helps in understanding the overall academic standing of the class. It also identifies areas for potential improvement or recognition.

Histograms provide a clear visual representation of data distribution. They are easy to interpret, even for those without a statistical background, and they apply to various types of numerical data, including continuous and discrete variables. One weak point is that histograms group values into bins, so they do not capture detailed patterns within the data, such as individual students’ scores, as well as some other visualization methods do.
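
The binning and counting steps can be sketched in Python as follows, using the scores from the table and the instructor’s four score ranges. The function name `bin_scores` is illustrative, and the text rendering is only a stand-in for a real chart.

```python
# Exam scores from the table above.
SCORES = {
    "Alice": 78, "Bob": 85, "Clara": 92, "David": 65, "Emma": 72,
    "Frank": 88, "Grace": 76, "Henry": 95, "Isabel": 81, "Jack": 70,
    "Kate": 60, "Liam": 89, "Mia": 75, "Noah": 84, "Olivia": 92,
}

# Inclusive (low, high) bounds for each bin, as set by the instructor.
BINS = [(60, 69), (70, 79), (80, 89), (90, 100)]


def bin_scores(scores, bins):
    """Group student names by the bin their score falls into."""
    grouped = {bounds: [] for bounds in bins}
    for name, score in scores.items():
        for low, high in bins:
            if low <= score <= high:
                grouped[(low, high)].append(name)
                break
    return grouped


grouped = bin_scores(SCORES, BINS)
for (low, high), names in grouped.items():
    # One '#' per student gives a rough text version of the histogram bars.
    print(f"{low}-{high}: {'#' * len(names)} {len(names)}")
```

Counting in code rather than by hand helps avoid assigning a score to the wrong bin, which is an easy mistake to make with even a small class list.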

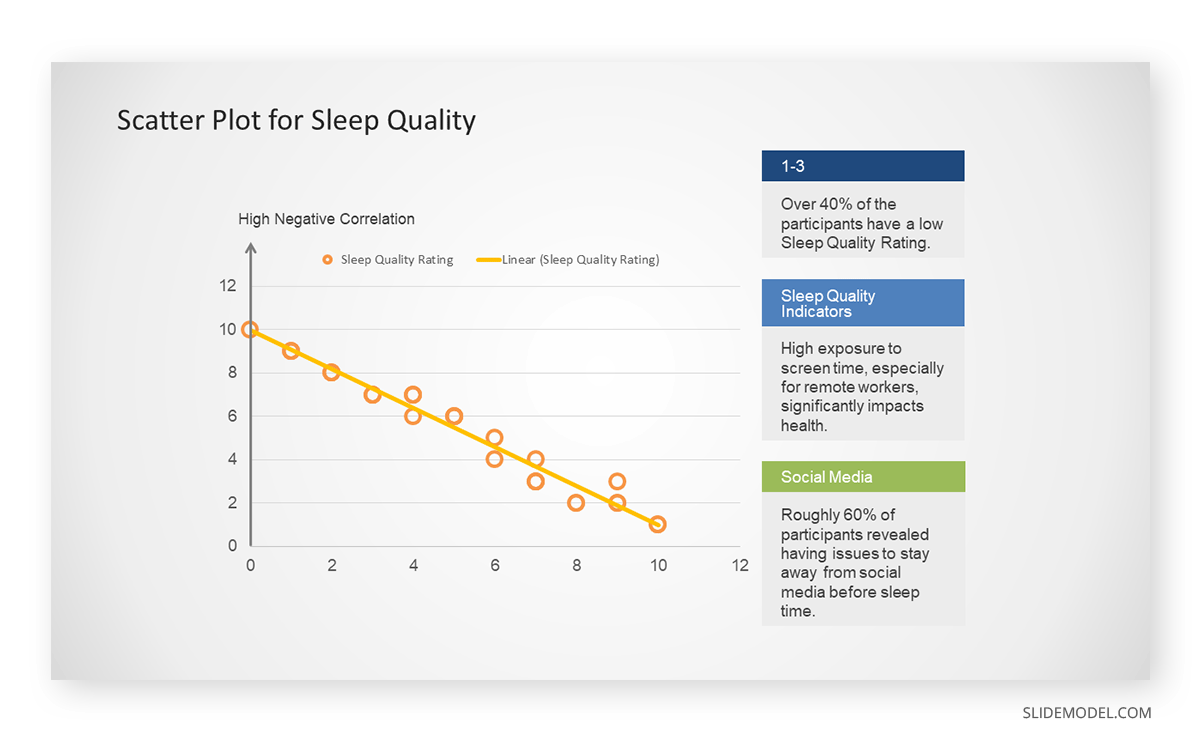

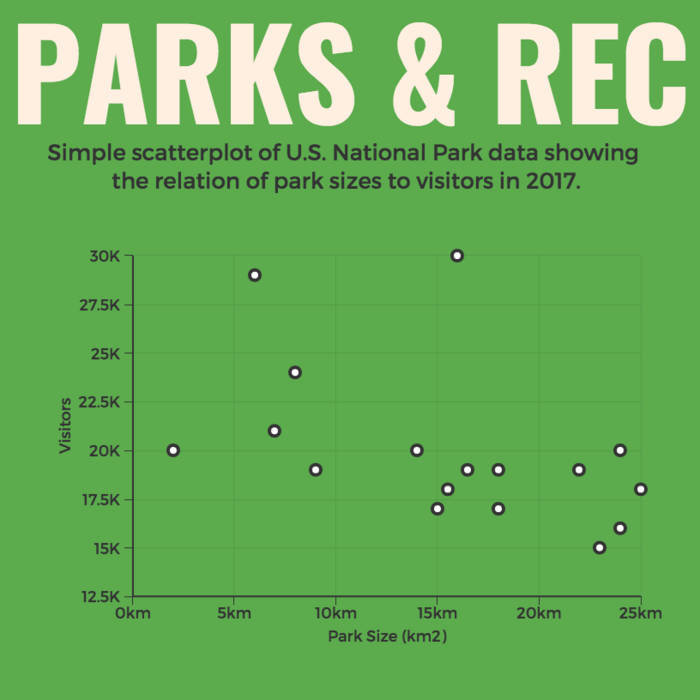

A scatter plot is a graphical representation of the relationship between two variables. It consists of individual data points on a two-dimensional plane. This plane plots one variable on the x-axis and the other on the y-axis. Each point represents a unique observation. It visualizes patterns, trends, or correlations between the two variables.

Scatter plots are also effective in revealing the strength and direction of relationships. They identify outliers and assess the overall distribution of data points. The points’ dispersion and clustering reflect the relationship’s nature, whether it is positive, negative, or lacks a discernible pattern. In business, scatter plots assess relationships between variables such as marketing cost and sales revenue. They help present data correlations and support decision-making.

Real-Life Application of Scatter Plot

A group of scientists is conducting a study on the relationship between daily hours of screen time and sleep quality. After reviewing the data, they compile this table to build a scatter plot:

| Participant ID | Daily Hours of Screen Time | Sleep Quality Rating |

|---|---|---|

| 1 | 9 | 3 |

| 2 | 2 | 8 |

| 3 | 1 | 9 |

| 4 | 0 | 10 |

| 5 | 1 | 9 |

| 6 | 3 | 7 |

| 7 | 4 | 7 |

| 8 | 5 | 6 |

| 9 | 5 | 6 |

| 10 | 7 | 3 |

| 11 | 10 | 1 |

| 12 | 6 | 5 |

| 13 | 7 | 3 |

| 14 | 8 | 2 |

| 15 | 9 | 2 |

| 16 | 4 | 7 |

| 17 | 5 | 6 |

| 18 | 4 | 7 |

| 19 | 9 | 2 |

| 20 | 6 | 4 |

| 21 | 3 | 7 |

| 22 | 10 | 1 |

| 23 | 2 | 8 |

| 24 | 5 | 6 |

| 25 | 3 | 7 |

| 26 | 1 | 9 |

| 27 | 8 | 2 |

| 28 | 4 | 6 |

| 29 | 7 | 3 |

| 30 | 2 | 8 |

| 31 | 7 | 4 |

| 32 | 9 | 2 |

| 33 | 10 | 1 |

| 34 | 10 | 1 |

| 35 | 10 | 1 |

In the provided example, the x-axis represents Daily Hours of Screen Time, and the y-axis represents the Sleep Quality Rating.

The scientists observe a negative correlation between the amount of screen time and the quality of sleep. This is consistent with their hypothesis that blue light, especially before bedtime, has a significant impact on sleep quality and metabolic processes.
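
The strength of the relationship the scientists observe can be summarized with a correlation coefficient. The sketch below computes Pearson’s r for the 35 participants in the table; using Pearson’s r is an assumption here, since the example does not say which measure the scientists applied. A value close to -1 indicates a strong negative linear relationship, though it says nothing about cause.

```python
import math

# Values from the table above, in participant order (IDs 1 to 35).
SCREEN_TIME = [9, 2, 1, 0, 1, 3, 4, 5, 5, 7, 10, 6, 7, 8, 9, 4, 5, 4,
               9, 6, 3, 10, 2, 5, 3, 1, 8, 4, 7, 2, 7, 9, 10, 10, 10]
SLEEP_QUALITY = [3, 8, 9, 10, 9, 7, 7, 6, 6, 3, 1, 5, 3, 2, 2, 7, 6, 7,
                 2, 4, 7, 1, 8, 6, 7, 9, 2, 6, 3, 8, 4, 2, 1, 1, 1]


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys):
        raise ValueError("both sequences must have the same length")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sums of products of deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


r = pearson_r(SCREEN_TIME, SLEEP_QUALITY)
print(f"Pearson r = {r:.2f}")
```

Reporting the coefficient alongside the scatter plot gives the audience a single number to anchor what they see in the cloud of points.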

There are a few things to remember when using a scatter plot. Even when a scatter diagram indicates a relationship, it doesn’t mean one variable affects the other; a third factor can influence both variables. The more the plot resembles a straight line, the stronger the relationship is perceived to be [11]. If the plot suggests no relationship, any apparent pattern might be due to random fluctuations in the data. When the scatter diagram shows no correlation, it is worth considering whether the data might be stratified.

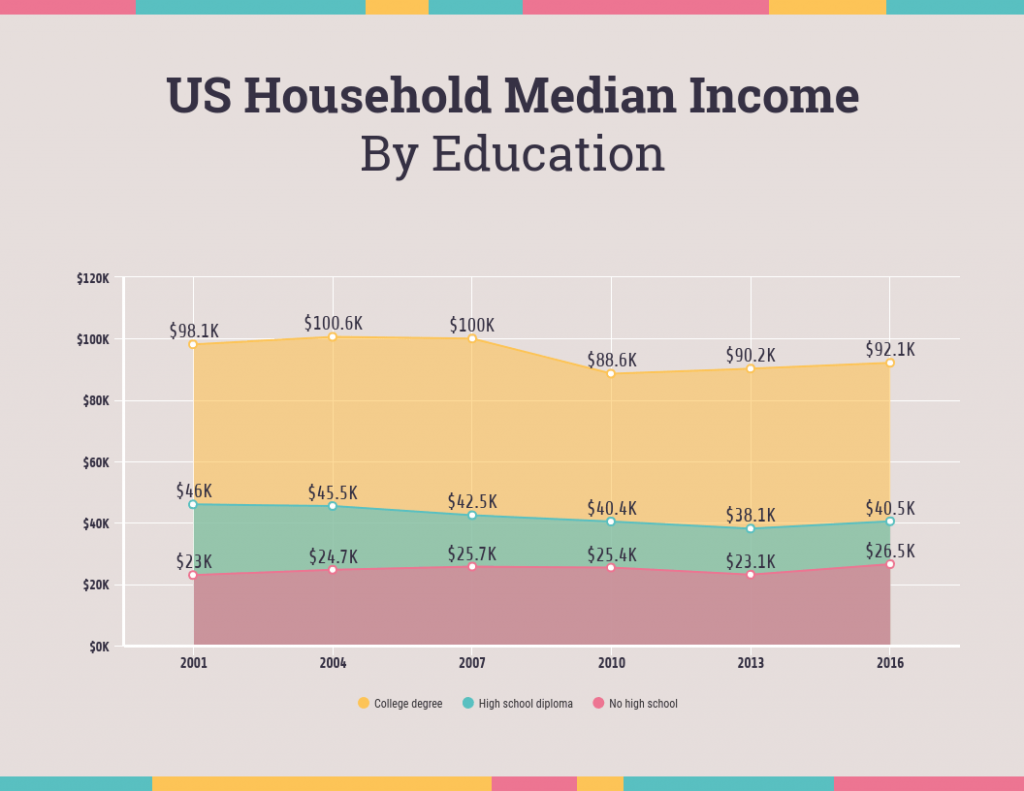

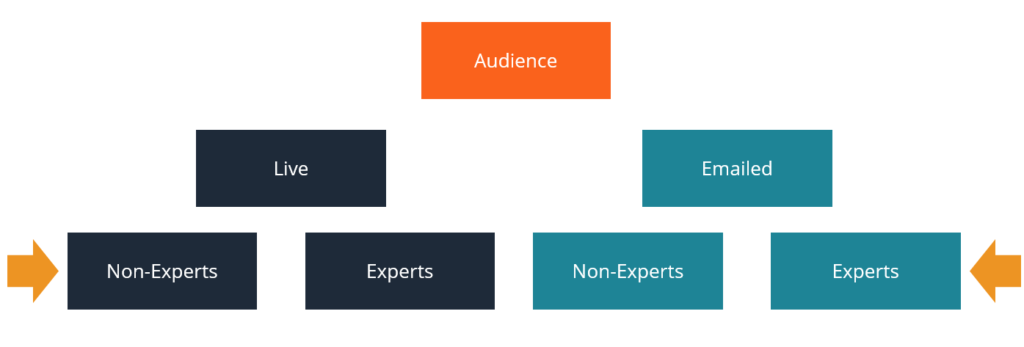

Choosing the appropriate data presentation type is crucial when making a presentation . Understanding the nature of your data and the message you intend to convey will guide this selection process. For instance, when showcasing quantitative relationships, scatter plots become instrumental in revealing correlations between variables. If the focus is on emphasizing parts of a whole, pie charts offer a concise display of proportions. Histograms, on the other hand, prove valuable for illustrating distributions and frequency patterns.

Bar charts provide a clear visual comparison of different categories. Likewise, line charts excel in showcasing trends over time, while tables are ideal for detailed data examination. Choosing among data presentation types involves evaluating the specific information you want to communicate and selecting the format that aligns with your message. This ensures clarity and resonance with your audience from the beginning of your presentation.

1. Fact Sheet Dashboard for Data Presentation

Convey all the data you need to present in this one-pager format, an ideal solution tailored for users looking for presentation aids. It features global maps, donut charts, column graphs, and text neatly arranged in a clean layout, presented in light and dark themes.

2. 3D Column Chart Infographic PPT Template

Represent column charts in a highly visual 3D format with this PPT template. A creative way to present data, this template is entirely editable, and we can craft either a one-page infographic or a series of slides explaining what we intend to disclose point by point.

3. Data Circles Infographic PowerPoint Template

An alternative to the pie chart and donut chart diagrams, this template features a series of curved shapes with bubble callouts as ways of presenting data. Expand the information for each arch in the text placeholder areas.

4. Colorful Metrics Dashboard for Data Presentation

This versatile dashboard template helps present data by offering several graphs and methods to convert numbers into graphics. Implement it for e-commerce projects, financial projections, project development, and more.

5. Animated Data Presentation Tools for PowerPoint & Google Slides

A slide deck filled with most of the tools mentioned in this article, including bar charts, column charts, treemap graphs, pie charts, and histograms. Animated effects make each slide look dynamic when sharing data with stakeholders.

6. Statistics Waffle Charts PPT Template for Data Presentations

This PPT template helps us present data beyond the typical pie chart representation. It is widely used for demographics, so it’s a great fit for marketing teams, data science professionals, HR personnel, and more.

7. Data Presentation Dashboard Template for Google Slides

A compendium of tools in dashboard format featuring line graphs, bar charts, column charts, and neatly arranged placeholder text areas.

8. Weather Dashboard for Data Presentation

Share weather data for agricultural presentation topics, environmental studies, or any kind of presentation that requires a highly visual layout for weather forecasting on a single day. Two color themes are available.

9. Social Media Marketing Dashboard Data Presentation Template

Intended for marketing professionals, this dashboard template for data presentation is a tool for presenting data analytics from social media channels. Two slide layouts featuring line graphs and column charts.

10. Project Management Summary Dashboard Template

A tool crafted for project managers to deliver highly visual reports on a project’s completion, the profits it delivered for the company, and expenses/time required to execute it. 4 different color layouts are available.

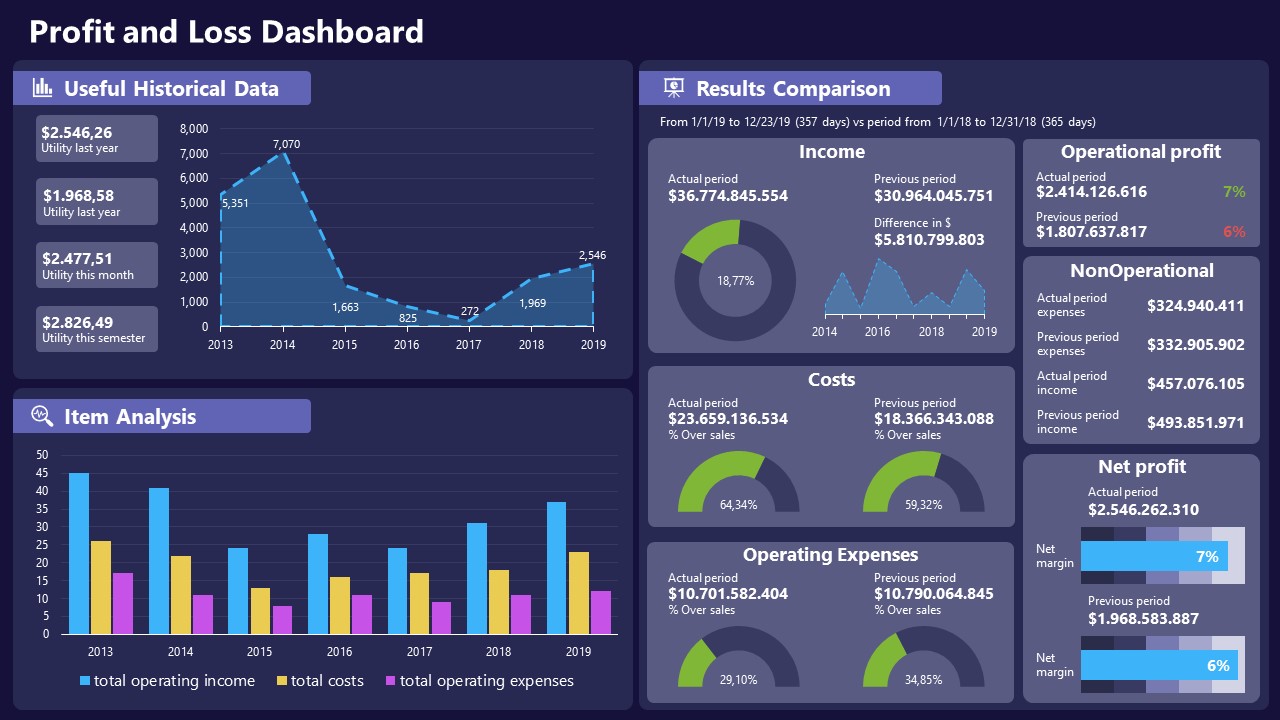

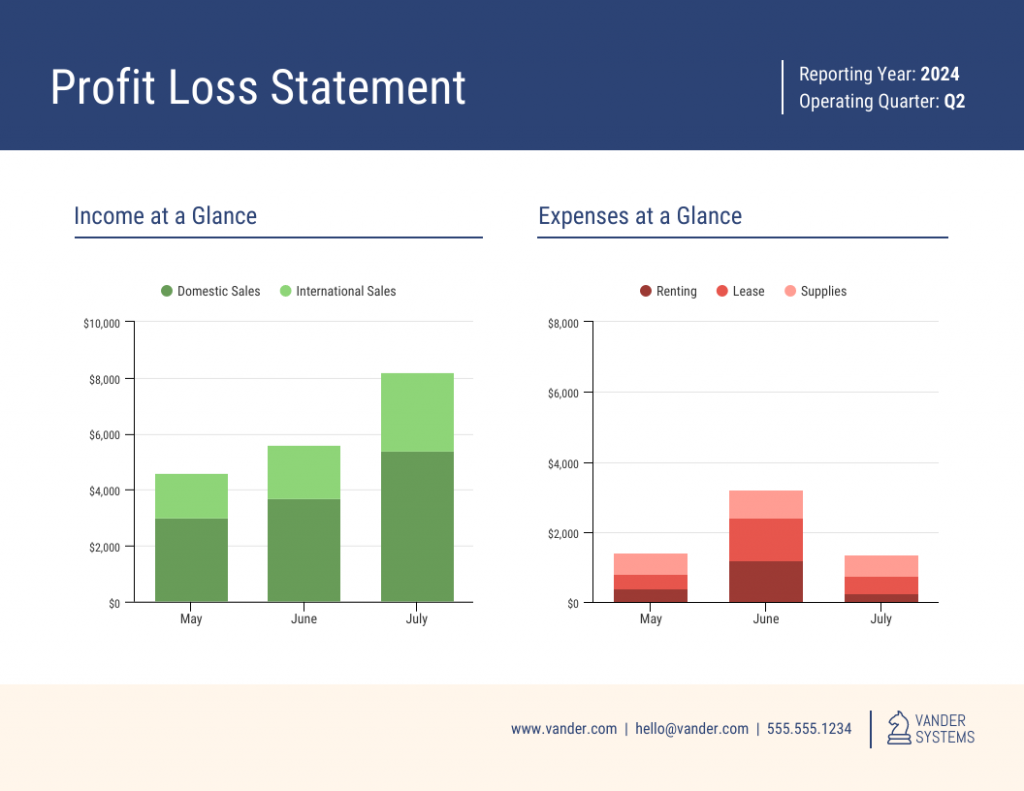

11. Profit & Loss Dashboard for PowerPoint and Google Slides

A must-have for finance professionals. This typical profit & loss dashboard includes progress bars, donut charts, column charts, line graphs, and everything that’s required to deliver a comprehensive report about a company’s financial situation.

Overwhelming visuals

One of the mistakes related to using data-presenting methods is including too much data or using overly complex visualizations. They can confuse the audience and dilute the key message.

Inappropriate chart types

Choosing the wrong type of chart for the data at hand can lead to misinterpretation. For example, a pie chart is a poor fit for data that doesn’t represent parts of a whole.

Lack of context

Failing to provide context or sufficient labeling can make it challenging for the audience to understand the significance of the presented data.

Inconsistency in design

Using inconsistent design elements and color schemes across different visualizations can create confusion and visual disarray.

Failure to provide details

Simply presenting raw data without offering clear insights or takeaways can leave the audience without a meaningful conclusion.

Lack of focus

Not having a clear focus on the key message or main takeaway can result in a presentation that lacks a central theme.

Visual accessibility issues

Overlooking the visual accessibility of charts and graphs can exclude certain audience members who may have difficulty interpreting visual information.

To avoid these mistakes in data presentation, presenters can benefit from using presentation templates. These templates provide a structured framework that supports consistency, clarity, and an aesthetically pleasing design, enhancing the overall impact of data communication.

Understanding and choosing data presentation types are pivotal in effective communication. Each method serves a unique purpose, so selecting the appropriate one depends on the nature of the data and the message to be conveyed. The diverse array of presentation types offers versatility in visually representing information, from bar charts showing values to pie charts illustrating proportions.

Using the proper method enhances clarity, engages the audience, and ensures that data sets are not just presented but comprehensively understood. By appreciating the strengths and limitations of different presentation types, communicators can tailor their approach to convey information accurately, developing a deeper connection between data and audience understanding.

[1] Government of Canada, S.C. (2021). 5 Data Visualization, 5.2 Bar chart. https://www150.statcan.gc.ca/n1/edu/power-pouvoir/ch9/bargraph-diagrammeabarres/5214818-eng.htm

[2] Kosslyn, S.M., 1989. Understanding charts and graphs. Applied Cognitive Psychology, 3(3), pp.185-225. https://apps.dtic.mil/sti/pdfs/ADA183409.pdf

[3] Creating a Dashboard. https://it.tufts.edu/book/export/html/1870

[4] https://www.goldenwestcollege.edu/research/data-and-more/data-dashboards/index.html

[5] https://www.mit.edu/course/21/21.guide/grf-line.htm

[6] Jadeja, M. and Shah, K., 2015, January. Tree-Map: A Visualization Tool for Large Data. In GSB@ SIGIR (pp. 9-13). https://ceur-ws.org/Vol-1393/gsb15proceedings.pdf#page=15

[7] Heat Maps and Quilt Plots. https://www.publichealth.columbia.edu/research/population-health-methods/heat-maps-and-quilt-plots

[8] EIU QGIS WORKSHOP. https://www.eiu.edu/qgisworkshop/heatmaps.php

[9] About Pie Charts. https://www.mit.edu/~mbarker/formula1/f1help/11-ch-c8.htm

[10] Histograms. https://sites.utexas.edu/sos/guided/descriptive/numericaldd/descriptiven2/histogram/

[11] https://asq.org/quality-resources/scatter-diagram

A Step-by-Step Guide to the Data Analysis Process

Like any scientific discipline, data analysis follows a rigorous step-by-step process. Each stage requires different skills and know-how. To get meaningful insights, though, it’s important to understand the process as a whole. An underlying framework is invaluable for producing results that stand up to scrutiny.

In this post, we’ll explore the main steps in the data analysis process. This will cover how to define your goal, collect data, and carry out an analysis. Where applicable, we’ll also use examples and highlight a few tools to make the journey easier. When you’re done, you’ll have a much better understanding of the basics. This will help you tweak the process to fit your own needs.

Here are the steps we’ll take you through:

- Defining the question

- Collecting the data

- Cleaning the data

- Analyzing the data

- Sharing your results

- Embracing failure

Ready? Let’s get started with step one.

1. Step one: Defining the question

The first step in any data analysis process is to define your objective. In data analytics jargon, this is sometimes called the ‘problem statement’.

Defining your objective means coming up with a hypothesis and figuring out how to test it. Start by asking: What business problem am I trying to solve? While this might sound straightforward, it can be trickier than it seems. For instance, your organization’s senior management might pose an issue, such as: “Why are we losing customers?” It’s possible, though, that this doesn’t get to the core of the problem. A data analyst’s job is to understand the business and its goals in enough depth that they can frame the problem the right way.

Let’s say you work for a fictional company called TopNotch Learning. TopNotch creates custom training software for its clients. While it is excellent at securing new clients, it has much lower repeat business. As such, your question might not be, “Why are we losing customers?” but, “Which factors are negatively impacting the customer experience?” or better yet: “How can we boost customer retention while minimizing costs?”

Now you’ve defined a problem, you need to determine which sources of data will best help you solve it. This is where your business acumen comes in again. For instance, perhaps you’ve noticed that the sales process for new clients is very slick, but that the production team is inefficient. Knowing this, you could hypothesize that the sales process wins lots of new clients, but the subsequent customer experience is lacking. Could this be why customers don’t come back? Which sources of data will help you answer this question?

Tools to help define your objective

Defining your objective is mostly about soft skills, business knowledge, and lateral thinking. But you’ll also need to keep track of business metrics and key performance indicators (KPIs). Monthly reports can allow you to track problem points in the business. Some KPI dashboards come with a fee, like Databox and DashThis. However, you’ll also find open-source software like Grafana, Freeboard, and Dashbuilder. These are great for producing simple dashboards, both at the beginning and the end of the data analysis process.

2. Step two: Collecting the data

Once you’ve established your objective, you’ll need to create a strategy for collecting and aggregating the appropriate data. A key part of this is determining which data you need. This might be quantitative (numeric) data, e.g. sales figures, or qualitative (descriptive) data, such as customer reviews. All data fit into one of three categories: first-party, second-party, and third-party data. Let’s explore each one.

What is first-party data?

First-party data is data that you, or your company, have directly collected from customers. It might come in the form of transactional tracking data or information from your company’s customer relationship management (CRM) system. Whatever its source, first-party data is usually structured and organized in a clear, defined way. Other sources of first-party data might include customer satisfaction surveys, focus groups, interviews, or direct observation.

What is second-party data?

To enrich your analysis, you might want to secure a secondary data source. Second-party data is the first-party data of other organizations. This might be available directly from the company or through a private marketplace. The main benefit of second-party data is that it is usually structured, and although it will be less relevant than first-party data, it also tends to be quite reliable. Examples of second-party data include website, app or social media activity, like online purchase histories, or shipping data.

What is third-party data?

Third-party data is data that has been collected and aggregated from numerous sources by a third-party organization. Often (though not always) third-party data contains a vast amount of unstructured data points (big data). Many organizations collect big data to create industry reports or to conduct market research. The research and advisory firm Gartner is a good real-world example of an organization that collects big data and sells it on to other companies. Open data repositories and government portals are also sources of third-party data.

Tools to help you collect data

Once you’ve devised a data strategy (i.e. you’ve identified which data you need, and how best to go about collecting them) there are many tools you can use to help you. One thing you’ll need, regardless of industry or area of expertise, is a data management platform (DMP). A DMP is a piece of software that allows you to identify and aggregate data from numerous sources, before manipulating them, segmenting them, and so on. There are many DMPs available. Some well-known enterprise DMPs include Salesforce DMP, SAS, and the data integration platform, Xplenty. If you want to play around, you can also try some open-source platforms like Pimcore or D:Swarm.

3. Step three: Cleaning the data

Once you’ve collected your data, the next step is to get it ready for analysis. This means cleaning, or ‘scrubbing’ it, and is crucial in making sure that you’re working with high-quality data. Key data cleaning tasks include:

- Removing major errors, duplicates, and outliers, all of which are inevitable problems when aggregating data from numerous sources.

- Removing unwanted data points: extracting irrelevant observations that have no bearing on your intended analysis.

- Bringing structure to your data: general ‘housekeeping’, i.e. fixing typos or layout issues, which will help you map and manipulate your data more easily.

- Filling in major gaps: as you’re tidying up, you might notice that important data are missing. Once you’ve identified gaps, you can go about filling them.
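The cleaning tasks above can be sketched in a few lines of plain Python. This is only an illustrative sketch, not a recommended pipeline: the records, the `clean` helper, and the "more than ten times the median" outlier rule are all assumptions made up for this example, and a real project would choose rules that fit its own data.

```python
from statistics import median

# Hypothetical raw records: (client, sector, monthly_spend); None marks a gap.
raw = [
    ("Acme", "retail", 1200.0),
    ("acme ", "Retail", 1200.0),   # duplicate with inconsistent formatting
    ("Birch", "finance", None),    # missing value
    ("Cedar", "retail", 950.0),
    ("Dune", "health", 1100.0),
    ("Elm", "finance", 98000.0),   # suspiciously large value
]

def clean(records, outlier_factor=10.0):
    # 1. Bring structure: normalize whitespace and letter case.
    tidy = [(name.strip().title(), sector.strip().lower(), spend)
            for name, sector, spend in records]

    # 2. Remove duplicates, keeping the first row seen for each client.
    seen, unique = set(), []
    for row in tidy:
        if row[0] not in seen:
            seen.add(row[0])
            unique.append(row)

    # 3. Remove outliers (assumed rule: spend above outlier_factor x median).
    known = [spend for _, _, spend in unique if spend is not None]
    cutoff = outlier_factor * median(known)
    kept = [row for row in unique if row[2] is None or row[2] <= cutoff]

    # 4. Fill gaps with the median of the remaining known values.
    fill = median([spend for _, _, spend in kept if spend is not None])
    return [(name, sector, fill if spend is None else spend)
            for name, sector, spend in kept]

cleaned = clean(raw)
for row in cleaned:
    print(row)
```

Filling a gap with the median is just one option; depending on the analysis, dropping the row or going back to the data source may be the better choice.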

A good data analyst will spend around 70-90% of their time cleaning their data. This might sound excessive. But focusing on the wrong data points (or analyzing erroneous data) will severely impact your results. It might even send you back to square one, so don’t rush it! You’ll find a step-by-step guide to data cleaning here. You may also be interested in this introductory tutorial to data cleaning, hosted by Dr. Humera Noor Minhas.

Carrying out an exploratory analysis

Another thing many data analysts do (alongside cleaning data) is to carry out an exploratory analysis. This helps identify initial trends and characteristics, and can even refine your hypothesis. Let’s use our fictional learning company as an example again. Carrying out an exploratory analysis, perhaps you notice a correlation between how much TopNotch Learning’s clients pay and how quickly they move on to new suppliers. This might suggest that a low-quality customer experience (the assumption in your initial hypothesis) is actually less of an issue than cost. You might, therefore, take this into account.
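To make the idea of spotting a correlation concrete, here is a minimal sketch that computes a Pearson correlation coefficient by hand. The fee and retention figures are invented for illustration and the `pearson` helper is our own; they are not TopNotch data or a prescribed method.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Made-up figures: annual fee (in thousands) and months before the client left.
annual_fee = [10, 20, 30, 40, 50]
months_retained = [30, 26, 20, 15, 9]

r = pearson(annual_fee, months_retained)
print(f"correlation between fee and retention: {r:.3f}")
```

A value close to -1 would suggest that higher-paying clients tend to leave sooner. As with any correlation, it does not show that price causes the departures.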

Tools to help you clean your data

Cleaning datasets manually, especially large ones, can be daunting. Luckily, there are many tools available to streamline the process. Open-source tools, such as OpenRefine, are excellent for basic data cleaning, as well as high-level exploration. However, free tools offer limited functionality for very large datasets. Python libraries (e.g. Pandas) and some R packages are better suited for heavy data scrubbing. You will, of course, need to be familiar with the languages. Alternatively, enterprise tools are also available, for example Data Ladder, which is one of the highest-rated data-matching tools in the industry. There are many more. Why not see which free data cleaning tools you can find to play around with?

4. Step four: Analyzing the data

Finally, you’ve cleaned your data. Now comes the fun bit—analyzing it! The type of data analysis you carry out largely depends on what your goal is. But there are many techniques available. Univariate or bivariate analysis, time-series analysis, and regression analysis are just a few you might have heard of. More important than the different types, though, is how you apply them. This depends on what insights you’re hoping to gain. Broadly speaking, all types of data analysis fit into one of the following four categories.

Descriptive analysis

Descriptive analysis identifies what has already happened. It is a common first step that companies carry out before proceeding with deeper explorations. As an example, let’s refer back to our fictional learning provider once more. TopNotch Learning might use descriptive analytics to analyze course completion rates for their customers. Or they might identify how many users access their products during a particular period. Perhaps they’ll use it to measure sales figures over the last five years. While the company might not draw firm conclusions from any of these insights, summarizing and describing the data will help them to determine how to proceed.
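As a rough illustration of what summarizing and describing can look like, the sketch below computes a few summary statistics for course completion rates. The client names and rates are hypothetical values chosen for the example.

```python
from statistics import mean, median

# Hypothetical course completion rates per client (share of enrolled users).
completion_rates = {
    "Client A": 0.82,
    "Client B": 0.64,
    "Client C": 0.91,
    "Client D": 0.47,
}

rates = list(completion_rates.values())
summary = {
    "mean": round(mean(rates), 3),
    "median": round(median(rates), 3),
    "min": min(rates),
    "max": max(rates),
}

# The client with the lowest completion rate is a natural place to look next.
lowest = min(completion_rates, key=completion_rates.get)

print(summary)
print("lowest completion rate:", lowest)
```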

Diagnostic analysis

Diagnostic analytics focuses on understanding why something has happened. It is literally the diagnosis of a problem, just as a doctor uses a patient’s symptoms to diagnose a disease. Remember TopNotch Learning’s business problem? ‘Which factors are negatively impacting the customer experience?’ A diagnostic analysis would help answer this. For instance, it could help the company draw correlations between the issue (struggling to gain repeat business) and factors that might be causing it (e.g. project costs, speed of delivery, customer sector, etc.). Let’s imagine that, using diagnostic analytics, TopNotch realizes its clients in the retail sector are departing at a faster rate than other clients. This might suggest that they’re losing customers because they lack expertise in this sector. And that’s a useful insight!
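One simple way to look for a pattern like the retail example is to break the churn rate down by a candidate factor. The sketch below does this by sector; the client list and the `churn_by_sector` helper are assumptions invented for illustration.

```python
from collections import defaultdict

# Hypothetical clients: (sector, came_back_for_repeat_business)
clients = [
    ("retail", False), ("retail", False), ("retail", True),
    ("finance", True), ("finance", True), ("finance", False),
    ("health", True), ("health", True),
]

def churn_by_sector(rows):
    """Share of clients in each sector that did not return."""
    totals = defaultdict(int)
    lost = defaultdict(int)
    for sector, returned in rows:
        totals[sector] += 1
        if not returned:
            lost[sector] += 1
    return {sector: lost[sector] / totals[sector] for sector in totals}

rates = churn_by_sector(clients)
worst = max(rates, key=rates.get)

for sector, rate in rates.items():
    print(f"{sector}: {rate:.0%} churn")
print("highest churn:", worst)
```

With so few rows the differences are not meaningful on their own; the point is only the shape of the comparison.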

Predictive analysis

Predictive analysis allows you to identify future trends based on historical data. In business, predictive analysis is commonly used to forecast future growth, for example. But it doesn’t stop there. Predictive analysis has grown increasingly sophisticated in recent years. The speedy evolution of machine learning allows organizations to make surprisingly accurate forecasts. Take the insurance industry. Insurance providers commonly use past data to predict which customer groups are more likely to get into accidents. As a result, they’ll hike up customer insurance premiums for those groups. Likewise, the retail industry often uses transaction data to predict where future trends lie, or to determine seasonal buying habits to inform their strategies. These are just a few simple examples, but the untapped potential of predictive analysis is pretty compelling.
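At its simplest, forecasting from historical data can mean fitting a straight line to past values and extending it. The sketch below uses ordinary least squares on five made-up yearly sales figures. It is a deliberately naive stand-in for the more sophisticated models mentioned above, and the `fit_line` helper and numbers are assumptions for this example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Made-up history: sales (in thousands) for years 1 to 5.
years = [1, 2, 3, 4, 5]
sales = [100.0, 120.0, 140.0, 160.0, 180.0]

slope, intercept = fit_line(years, sales)
forecast_year_6 = slope * 6 + intercept
print(f"forecast for year 6: {forecast_year_6:.1f}")
```

Real sales data rarely sits on a perfect line, so in practice the forecast should come with an error estimate rather than a single number.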

Prescriptive analysis

Prescriptive analysis allows you to make recommendations for the future. This is the final step in the analytics part of the process. It’s also the most complex. This is because it incorporates aspects of all the other analyses we’ve described. A great example of prescriptive analytics is the algorithms that guide Google’s self-driving cars. Every second, these algorithms make countless decisions based on past and present data, ensuring a smooth, safe ride. Prescriptive analytics also helps companies decide on new products or areas of business to invest in.

5. Step five: Sharing your results

You’ve finished carrying out your analyses. You have your insights. The final step of the data analytics process is to share these insights with the wider world (or at least with your organization’s stakeholders!) This is more complex than simply sharing the raw results of your work—it involves interpreting the outcomes, and presenting them in a manner that’s digestible for all types of audiences. Since you’ll often present information to decision-makers, it’s very important that the insights you present are 100% clear and unambiguous. For this reason, data analysts commonly use reports, dashboards, and interactive visualizations to support their findings.

How you interpret and present results will often influence the direction of a business. Depending on what you share, your organization might decide to restructure, to launch a high-risk product, or even to close an entire division. That’s why it’s very important to provide all the evidence that you’ve gathered, and not to cherry-pick data. Ensuring that you cover everything in a clear, concise way will prove that your conclusions are scientifically sound and based on the facts. On the flip side, it’s important to highlight any gaps in the data or to flag any insights that might be open to interpretation. Honest communication is the most important part of the process. It will help the business, while also helping you to excel at your job!

Tools for interpreting and sharing your findings

There are tons of data visualization tools available, suited to different experience levels. Popular tools requiring little or no coding skills include Google Charts, Tableau, Datawrapper, and Infogram. If you’re familiar with Python and R, there are also many data visualization libraries and packages available. For instance, check out the Python libraries Plotly, Seaborn, and Matplotlib. Whichever data visualization tools you use, make sure you polish up your presentation skills, too. Remember: Visualization is great, but communication is key!

You can learn more about storytelling with data in this free, hands-on tutorial. We show you how to craft a compelling narrative for a real dataset, resulting in a presentation to share with key stakeholders. This is an excellent insight into what it’s really like to work as a data analyst!

6. Step six: Embrace your failures

The last ‘step’ in the data analytics process is to embrace your failures. The path we’ve described above is more of an iterative process than a one-way street. Data analytics is inherently messy, and the process you follow will be different for every project. For instance, while cleaning data, you might spot patterns that spark a whole new set of questions. This could send you back to step one (to redefine your objective). Equally, an exploratory analysis might highlight a set of data points you’d never considered using before. Or maybe you find that the results of your core analyses are misleading or erroneous. This might be caused by mistakes in the data, or human error earlier in the process.

While these pitfalls can feel like failures, don’t be disheartened if they happen. Data analysis is inherently chaotic, and mistakes occur. What’s important is to hone your ability to spot and rectify errors. If data analytics were straightforward, it might be easier, but it certainly wouldn’t be as interesting. Use the steps we’ve outlined as a framework, stay open-minded, and be creative. If you lose your way, you can refer back to the process to keep yourself on track.

In this post, we’ve covered the main steps of the data analytics process. These core steps can be amended, re-ordered and re-used as you deem fit, but they underpin every data analyst’s work:

- Define the question: What business problem are you trying to solve? Frame it as a question to help you focus on finding a clear answer.

- Collect data: Create a strategy for collecting data. Which data sources are most likely to help you solve your business problem?

- Clean the data: Explore, scrub, tidy, de-dupe, and structure your data as needed. Do whatever you have to! But don’t rush…take your time!

- Analyze the data: Carry out various analyses to obtain insights. Focus on the four types of data analysis: descriptive, diagnostic, predictive, and prescriptive.

- Share your results: How best can you share your insights and recommendations? A combination of visualization tools and communication is key.

- Embrace your mistakes: Mistakes happen. Learn from them. This is what transforms a good data analyst into a great one.

What next? From here, we strongly encourage you to explore the topic on your own. Get creative with the steps in the data analysis process, and see what tools you can find. As long as you stick to the core principles we’ve described, you can create a tailored technique that works for you.

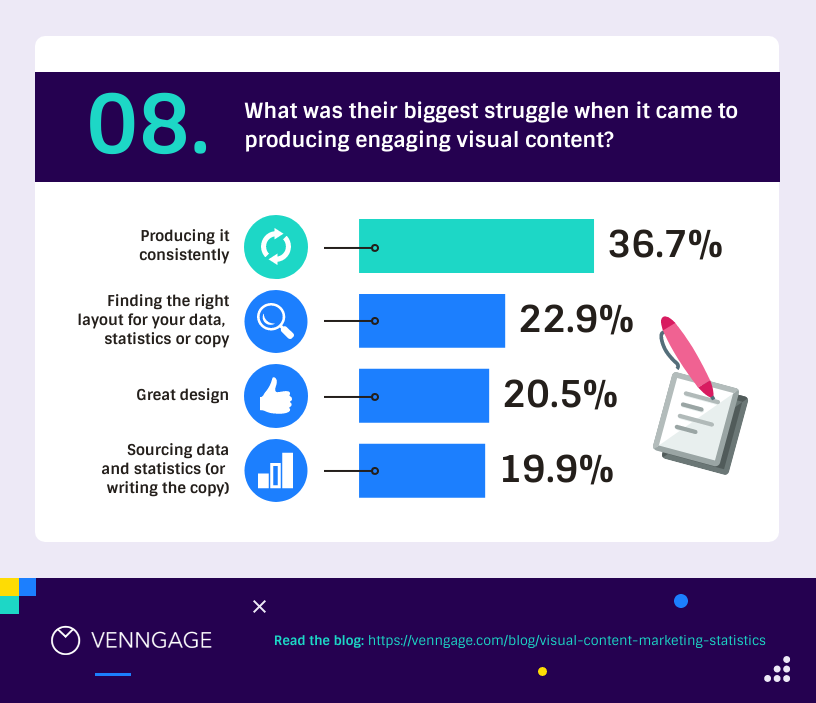

10 Data Presentation Examples For Strategic Communication

Written by: Krystle Wong Sep 28, 2023

Knowing how to present data is like having a superpower.

Data presentation today is no longer just about numbers on a screen; it’s storytelling with a purpose. It’s about captivating your audience, making complex stuff look simple and inspiring action.

To help turn your data into stories that stick, influence decisions and make an impact, check out Venngage’s free chart maker or follow me on a tour into the world of data storytelling along with data presentation templates that work across different fields, from business boardrooms to the classroom and beyond. Keep scrolling to learn more!

Click to jump ahead:

- 10 Essential data presentation examples + methods you should know

- What should be included in a data presentation

- What are some common mistakes to avoid when presenting data

- FAQs on data presentation examples

- Transform your message with impactful data storytelling

Data presentation is a vital skill in today’s information-driven world. Whether you’re in business, academia, or simply want to convey information effectively, knowing the different ways of presenting data is crucial. For impactful data storytelling, consider these essential data presentation methods:

1. Bar graph

Ideal for comparing data across categories or showing trends over time.

Bar graphs, also known as bar charts, are workhorses of data presentation. They’re like the Swiss Army knives of visualization methods because they can be used to compare data in different categories or display data changes over time.

In a bar chart, categories are displayed on the x-axis and the corresponding values are represented by the height of the bars on the y-axis.

It’s a straightforward and effective way to showcase raw data, making it a staple in business reports, academic presentations and beyond.

Make sure your bar charts are concise with easy-to-read labels. Whether your bars go up or sideways, keep it simple by not overloading with too many categories.

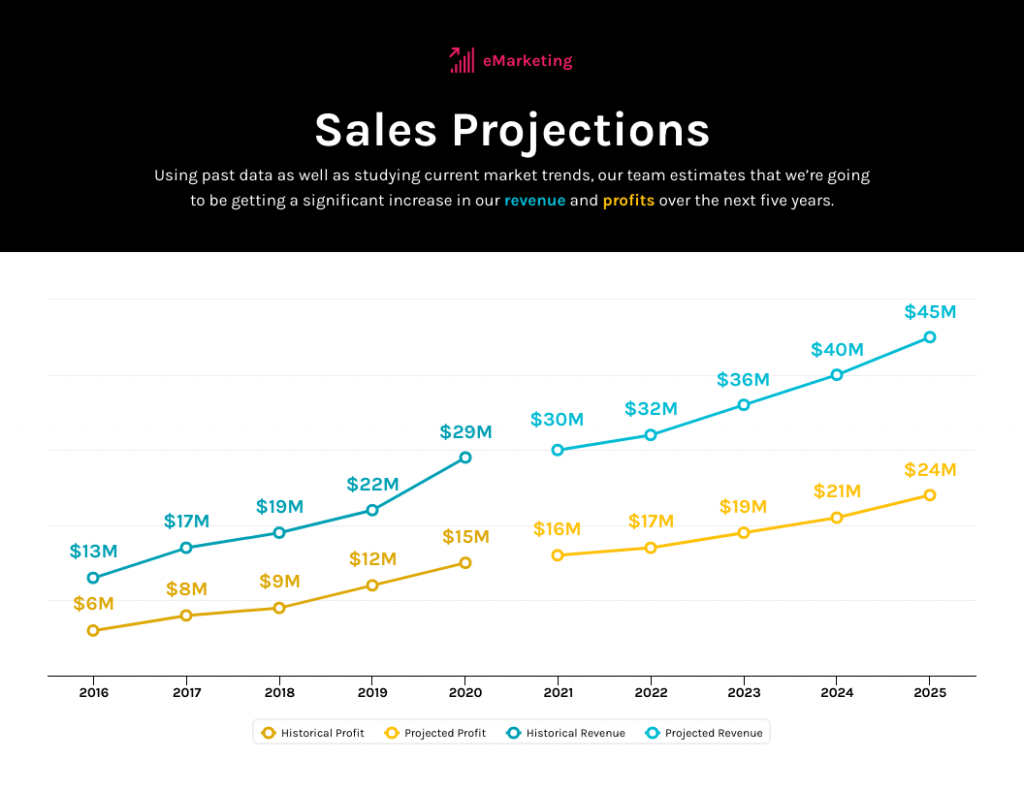

2. Line graph

Great for displaying trends and variations in data points over time or continuous variables.

Line charts or line graphs are your go-to when you want to visualize trends and variations in data sets over time.

One of the best quantitative data presentation examples, they work exceptionally well for showing continuous data, such as sales figures over the last couple of years or supply and demand fluctuations.

The x-axis represents time or a continuous variable and the y-axis represents the data values. By connecting the data points with lines, you can easily spot trends and fluctuations.

A tip when presenting data with line charts is to minimize the lines and not make it too crowded. Highlight the big changes, put on some labels and give it a catchy title.

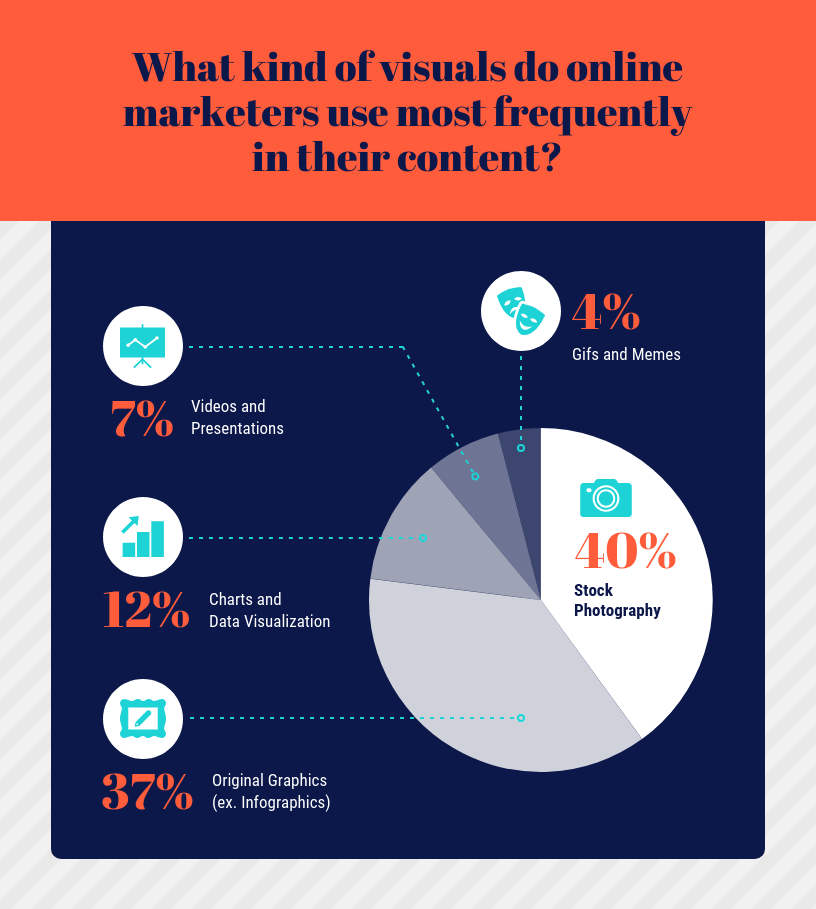

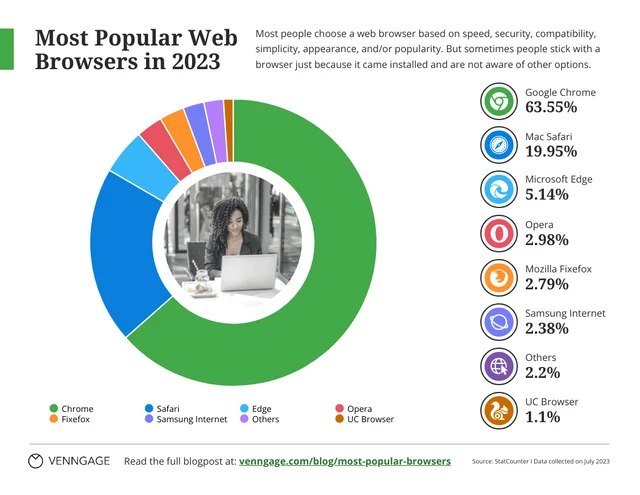

3. Pie chart

Useful for illustrating parts of a whole, such as percentages or proportions.

Pie charts are perfect for showing how a whole is divided into parts. They’re commonly used to represent percentages or proportions and are great for presenting survey results that involve demographic data.

Each “slice” of the pie represents a portion of the whole and the size of each slice corresponds to its share of the total.

While pie charts are handy for illustrating simple distributions, they can become confusing when dealing with too many categories or when the differences in proportions are subtle.

Don’t get too carried away with slices — label those slices with percentages or values so people know what’s what and consider using a legend for more categories.
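Since each slice's size is just its share of the total, the labels and angles for a pie chart can be worked out directly from the raw counts. Here is a minimal sketch, assuming a made-up survey broken down by age group; the `pie_slices` helper is our own illustration, not part of any charting library.

```python
def pie_slices(counts):
    """Percentage label and angle (in degrees) for each category."""
    total = sum(counts.values())
    return {
        label: {
            "percent": round(100 * value / total, 1),
            "degrees": round(360 * value / total, 1),
        }
        for label, value in counts.items()
    }

# Hypothetical survey respondents by age group.
survey = {"18-29": 50, "30-44": 30, "45-59": 15, "60+": 5}

slices = pie_slices(survey)
for label, info in slices.items():
    print(f"{label}: {info['percent']}% ({info['degrees']} degrees)")
```

Printing the percentages alongside the chart is also a quick check that the slices add up to 100%, which is the condition for a pie chart to make sense at all.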

4. Scatter plot

Effective for showing the relationship between two variables and identifying correlations.

Scatter plots are all about exploring relationships between two variables. They’re great for uncovering correlations, trends or patterns in data.

In a scatter plot, every data point appears as a dot on the chart, with one variable marked on the horizontal x-axis and the other on the vertical y-axis.

By examining the scatter of points, you can discern the nature of the relationship between the variables, whether it’s positive, negative or no correlation at all.

If you’re using scatter plots to reveal relationships between two variables, be sure to add trendlines or regression analysis when appropriate to clarify patterns. Label data points selectively or provide tooltips for detailed information.

5. Histogram

Best for visualizing the distribution and frequency of a single variable.

Histograms are your choice when you want to understand the distribution and frequency of a single variable.

They divide the data into “bins” or intervals and the height of each bar represents the frequency or count of data points falling into that interval.

Histograms are excellent for helping to identify trends in data distributions, such as peaks, gaps or skewness.

Here’s something to take note of — ensure that your histogram bins are appropriately sized to capture meaningful data patterns. Using clear axis labels and titles can also help explain the distribution of the data effectively.
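To show how binning works, the sketch below groups a handful of values into fixed-width intervals and prints a text histogram. The scores, the starting point of 50, and the bin width of 10 are all assumptions chosen for this example; trying a few different widths is a quick way to see how bin size changes the picture.

```python
def histogram(values, start, bin_width):
    """Count values per half-open bin [lower, lower + bin_width)."""
    counts = {}
    for v in values:
        lower = start + bin_width * ((v - start) // bin_width)
        counts[lower] = counts.get(lower, 0) + 1
    return dict(sorted(counts.items()))

# Made-up test scores.
scores = [52, 55, 61, 64, 67, 68, 71, 73, 74, 78, 85, 93]
width = 10

bins = histogram(scores, start=50, bin_width=width)
for lower, count in bins.items():
    print(f"[{lower}, {lower + width}): {'#' * count}")
```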

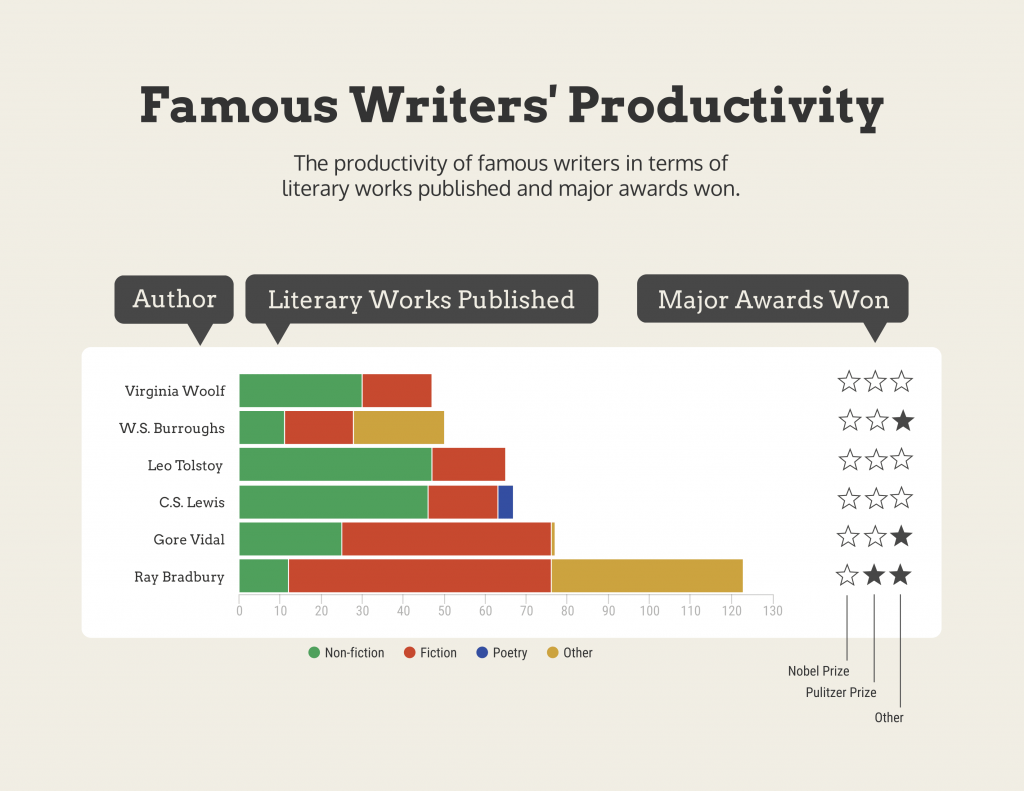

6. Stacked bar chart

Useful for showing how different components contribute to a whole over multiple categories.

Stacked bar charts are a handy choice when you want to illustrate how different components contribute to a whole across multiple categories.

Each bar represents a category and the bars are divided into segments to show the contribution of various components within each category.

This method is ideal for highlighting both the individual and collective significance of each component, making it a valuable tool for comparative analysis.

Stacked bar charts are like data sandwiches: label each layer so people know what’s what. Keep the order of the segments logical and consistent from bar to bar, and give each component its own distinct color.
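As a rough sketch of how the layers stack, the snippet below works out where each segment starts and how tall it is for every bar. The writers, content types and word counts are all hypothetical, and `stack_segments` is our own illustrative helper.

```python
def stack_segments(categories):
    """For each bar, return (component, bottom, height) so segments sit on top of each other."""
    stacked = {}
    for name, components in categories.items():
        bottom = 0
        segments = []
        for component, value in components.items():
            segments.append((component, bottom, value))
            bottom += value  # the next segment starts where this one ends
        stacked[name] = segments
    return stacked

# Hypothetical data: words written per writer, split by content type
words = {
    "Writer A": {"Blog posts": 4000, "Emails": 1500, "Reports": 2500},
    "Writer B": {"Blog posts": 2000, "Emails": 3000, "Reports": 1000},
}

for writer, segments in stack_segments(words).items():
    total = segments[-1][1] + segments[-1][2]
    print(writer, "total:", total)
    for component, bottom, height in segments:
        print(f"  {component}: starts at {bottom}, height {height}")
```

Because every bar lists its components in the same order, the same layer sits in the same position on each bar, which makes comparison across categories easier.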

7. Area chart

Similar to line charts but with the area below the lines filled, making them suitable for showing cumulative data.

Area charts are close cousins of line charts but come with a twist.

Imagine plotting the sales of a product over several months. In an area chart, the space between the line and the x-axis is filled, providing a visual representation of the cumulative total.

This makes it easy to see how values stack up over time, making area charts a valuable tool for tracking trends in data.

Use area charts to visualize cumulative data and trends, but avoid overcrowding the chart. Add labels, especially at significant points, and fill the area under the lines with a clear, visually appealing color or gradient.
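If what you want to show is a running total, the series usually needs to be accumulated before it is plotted. Here is one way to do that, sketched with made-up monthly sales figures:

```python
from itertools import accumulate

# Hypothetical data: units sold in each month
months = ["Jan", "Feb", "Mar", "Apr"]
monthly_sales = [120, 150, 90, 200]

# Running total: each point is the sum of all months so far
cumulative_sales = list(accumulate(monthly_sales))

for month, sold, total in zip(months, monthly_sales, cumulative_sales):
    print(f"{month}: sold {sold}, cumulative {total}")
```

Plotting `cumulative_sales` as the filled area shows how the total builds up, while plotting `monthly_sales` shows the month-to-month volume. Which one you fill depends on the point you want to make.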

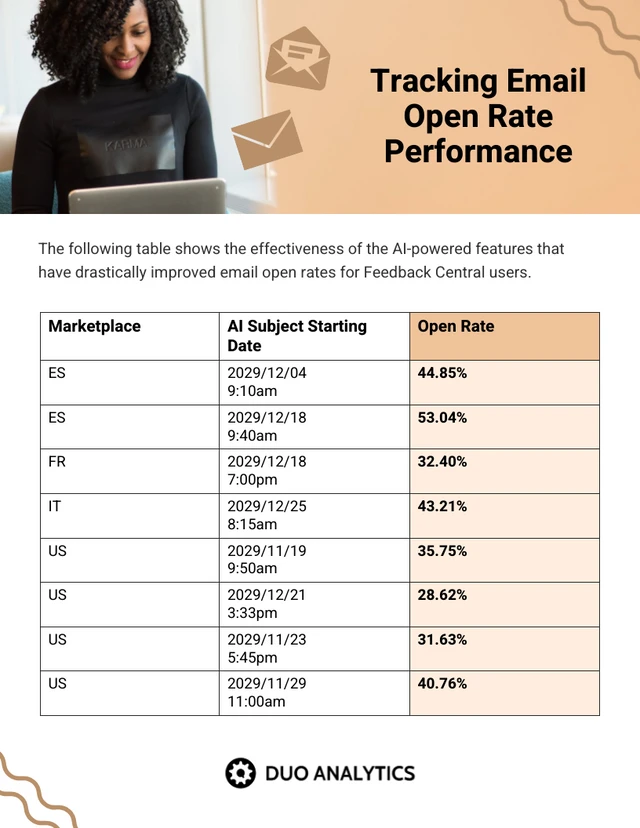

8. Tabular presentation

Presenting data in rows and columns, often used for precise data values and comparisons.

Tabular data presentation is all about clarity and precision. Think of it as presenting numerical data in a structured grid, with rows and columns clearly displaying individual data points.

Tables are invaluable for showcasing detailed data, facilitating comparisons and presenting numerical information that needs to be exact. They’re commonly used in reports, spreadsheets and academic papers.

When presenting tabular data, organize it neatly with clear headers and appropriate column widths. Highlight important data points or patterns using shading or font formatting for better readability.
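Here is a small sketch of those layout rules in plain text: column widths sized to the longest entry, text left-aligned and numbers right-aligned so digits line up. The regions and figures are invented, and `format_table` is a hypothetical helper.

```python
def format_table(headers, rows):
    """Render rows as a plain-text table: text left-aligned, numbers right-aligned."""
    cells = [[str(h) for h in headers]] + [[str(c) for c in row] for row in rows]
    widths = [max(len(row[i]) for row in cells) for i in range(len(headers))]

    def fmt(text_row, raw_row):
        parts = []
        for text, value, width in zip(text_row, raw_row, widths):
            numeric = isinstance(value, (int, float))
            parts.append(text.rjust(width) if numeric else text.ljust(width))
        return " | ".join(parts)

    lines = [fmt(cells[0], headers)]
    lines.append("-+-".join("-" * w for w in widths))
    lines.extend(fmt(t, r) for t, r in zip(cells[1:], rows))
    return "\n".join(lines)

# Hypothetical data: financial metrics by region
headers = ["Region", "Revenue", "Margin %"]
rows = [["North", 1200, 31.5], ["South", 950, 28.0]]

table = format_table(headers, rows)
print(table)
```

Right-aligning the numeric columns keeps the digits lined up, which makes it much quicker to compare magnitudes down a column.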

9. Textual data

Utilizing written or descriptive content to explain or complement data, such as annotations or explanatory text.

Textual data presentation may not involve charts or graphs, but it’s one of the most used qualitative data presentation examples.

It involves using written content to provide context, explanations or annotations alongside data visuals. Think of it as the narrative that guides your audience through the data.

Well-crafted textual data can make complex information more accessible and help your audience understand the significance of the numbers and visuals.

Textual data is your chance to tell a story. Break down complex information into bullet points or short paragraphs and use headings to guide the reader’s attention.

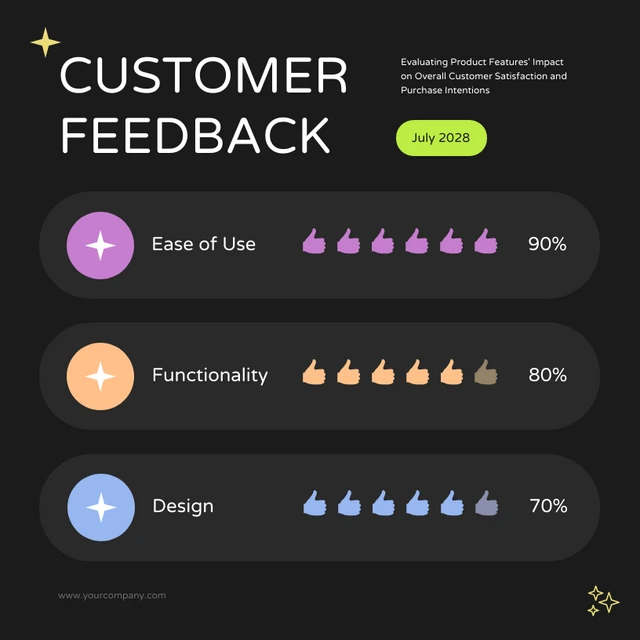

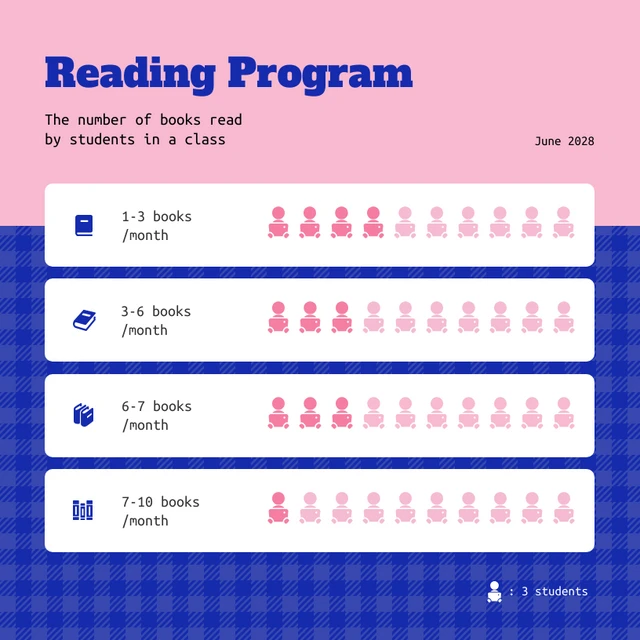

10. Pictogram

Using simple icons or images to represent data is especially useful for conveying information in a visually intuitive manner.

Pictograms are all about harnessing the power of images to convey data in an easy-to-understand way.

Instead of using numbers or complex graphs, you use simple icons or images to represent data points.

For instance, you could use face emojis to illustrate customer satisfaction levels, where each face represents a different level of satisfaction.

Pictograms are great for conveying data visually, so choose symbols that are easy to interpret and relevant to the data. Use consistent scaling and a legend to explain the symbols’ meanings, ensuring clarity in your presentation.
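Consistent scaling simply means that one icon always stands for the same amount. A minimal text-only sketch, assuming made-up survey counts and a hypothetical `pictogram` helper:

```python
def pictogram(counts, icon="*", unit=10):
    """One icon per `unit` of the underlying value, rounded to the nearest whole icon."""
    label_width = max(len(label) for label in counts)
    lines = []
    for label, value in counts.items():
        icons = icon * int(value / unit + 0.5)  # round half up
        lines.append(f"{label.ljust(label_width)}  {icons}")
    # The legend tells the audience what a single icon is worth
    lines.append(f"Legend: each {icon} = {unit} responses")
    return lines

# Hypothetical data: survey responses by satisfaction level
responses = {"Satisfied": 62, "Neutral": 27, "Dissatisfied": 11}

chart = pictogram(responses)
print("\n".join(chart))
```

Note the trade-off: rounding to whole icons makes the chart easy to read but loses precision, so pictograms work best when the audience needs the rough proportions rather than exact values.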


A comprehensive data presentation should include several key elements to effectively convey information and insights to your audience. Here’s a list of what should be included in a data presentation:

1. Title and objective

- Begin with a clear and informative title that sets the context for your presentation.

- State the primary objective or purpose of the presentation to provide a clear focus.

2. Key data points

- Present the most essential data points or findings that align with your objective.

- Use charts, graphical presentations or visuals to illustrate these key points for better comprehension.

3. Context and significance

- Provide a brief overview of the context in which the data was collected and why it’s significant.

- Explain how the data relates to the larger picture or the problem you’re addressing.

4. Key takeaways

- Summarize the main insights or conclusions that can be drawn from the data.

- Highlight the key takeaways that the audience should remember.

5. Visuals and charts

- Use clear and appropriate visual aids to complement the data.

- Ensure that visuals are easy to understand and support your narrative.

6. Implications or actions

- Discuss the practical implications of the data or any recommended actions.

- If applicable, outline next steps or decisions that should be taken based on the data.

7. Q&A and discussion

- Allocate time for questions and open discussion to engage the audience.

- Address queries and provide additional insights or context as needed.

Presenting data is a crucial skill in various professional fields, from business to academia and beyond. To ensure your data presentations hit the mark, here are some common mistakes that you should steer clear of:

Overloading with data

Presenting too much data at once can overwhelm your audience. Focus on the key points and relevant information to keep the presentation concise and focused. Here are some free data visualization tools you can use to convey data in an engaging and impactful way.
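One simple way to cut a chart down to its key points is to keep the largest few categories and fold the rest into a single “Other” group. This is only a sketch: the traffic sources and numbers are hypothetical, and how many categories to keep is a judgment call.

```python
def top_n_with_other(values, n=3, other_label="Other"):
    """Keep the n largest categories and sum everything else into one bucket."""
    ranked = sorted(values.items(), key=lambda item: item[1], reverse=True)
    kept = dict(ranked[:n])
    remainder = sum(value for _, value in ranked[n:])
    if remainder:
        kept[other_label] = remainder
    return kept

# Hypothetical data: website visits by traffic source
visits = {"Search": 420, "Email": 310, "Social": 150,
          "Referral": 60, "Print": 35, "Events": 25}

summary = top_n_with_other(visits, n=3)
print(summary)
```

The total stays the same, so the chart is still honest, but the audience now has four bars to read instead of six.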

Assuming everyone’s on the same page

It’s easy to assume that your audience understands as much about the topic as you do. But this can lead to either dumbing things down too much or diving into a bunch of jargon that leaves folks scratching their heads. Take a beat to figure out where your audience is coming from and tailor your presentation accordingly.

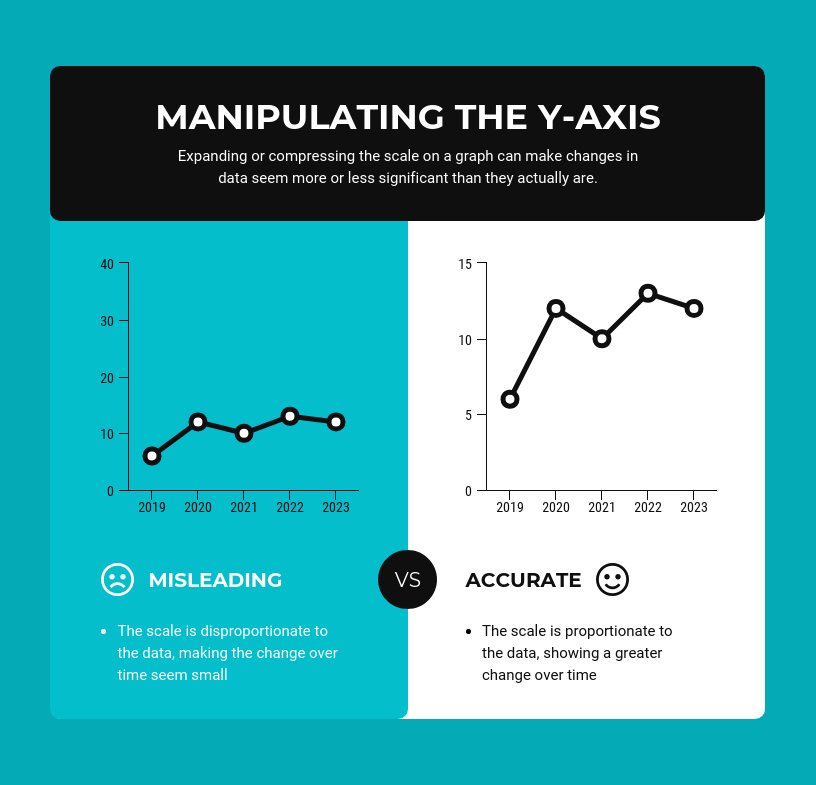

Misleading visuals

Misleading visuals, such as distorted scales or inappropriate chart types, can distort the data’s meaning. Pick the right data infographics and understandable charts to ensure that your visual representations accurately reflect the data.
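To get a feel for how much a distorted scale can exaggerate, one common yardstick is Edward Tufte’s “lie factor”: the size of the effect shown in the graphic divided by the size of the effect in the data. The sketch below applies it to a bar chart whose y-axis does not start at zero. The values are made up, and the function is our own illustration.

```python
def lie_factor(value_a, value_b, axis_start=0):
    """Ratio of the change shown by bar heights to the actual change in the data."""
    shown_a = value_a - axis_start  # drawn height of the first bar
    shown_b = value_b - axis_start  # drawn height of the second bar
    shown_change = (shown_b - shown_a) / shown_a
    actual_change = (value_b - value_a) / value_a
    return shown_change / actual_change

# Hypothetical data: sales grew from 100 to 110 (a 10% increase)
honest = lie_factor(100, 110, axis_start=0)
truncated = lie_factor(100, 110, axis_start=90)
print(f"axis from 0: {honest:.1f}, axis from 90: {truncated:.1f}")
```

A lie factor of about 1 means the picture matches the data. With the axis starting at 90, the second bar is drawn twice as tall as the first, so a 10% increase looks like a 100% increase.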

Not providing context

Data without context is like a puzzle piece with no picture on it. Without proper context, data may be meaningless or misinterpreted. Explain the background, methodology and significance of the data.

Not citing sources properly

Neglecting to cite the sources of your data can erode its credibility. Always attribute data to its source and rely on reputable sources for your presentation.

Not telling a story

Avoid simply presenting numbers. If your presentation lacks a clear, engaging story that takes your audience on a journey from the beginning (setting the scene) through the middle (data analysis) to the end (the big insights and recommendations), you’re likely to lose their interest.

Infographics are great for storytelling because they mix cool visuals with short and sweet text to explain complicated stuff in a fun and easy way. Create one with Venngage’s free infographic maker to tell a story that your audience will remember.

Ignoring data quality

Presenting data without first checking its quality and accuracy can lead to misinformation. Validate and clean your data before presenting it.
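What “validate and clean” looks like depends on the data, but a basic pass often checks for missing values, impossible values and duplicates. Here is a minimal sketch under those assumptions, with hypothetical field names and records:

```python
def clean(records):
    """Split records into usable rows and rejected rows with a reason for each."""
    seen = set()
    cleaned, rejected = [], []
    for rec in records:
        region, revenue = rec.get("region"), rec.get("revenue")
        if region in (None, "") or revenue is None:
            rejected.append((rec, "missing value"))
        elif not isinstance(revenue, (int, float)) or revenue < 0:
            rejected.append((rec, "invalid revenue"))
        elif (region, revenue) in seen:
            rejected.append((rec, "duplicate"))
        else:
            seen.add((region, revenue))
            cleaned.append(rec)
    return cleaned, rejected

# Hypothetical data: revenue by region, with a few typical problems
records = [
    {"region": "North", "revenue": 1200},
    {"region": "North", "revenue": 1200},   # exact duplicate
    {"region": "South", "revenue": None},   # missing value
    {"region": "East", "revenue": -50},     # negative revenue
    {"region": "West", "revenue": 800},
]

cleaned, rejected = clean(records)
print(len(cleaned), "usable rows;", [reason for _, reason in rejected])
```

Keeping the rejected rows and the reasons, rather than silently dropping them, lets you tell your audience how much data was excluded and why.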

Overcomplicating your visuals

Fancy charts might look cool, but if they confuse people, what’s the point? Go for the simplest visual that gets your message across. Torn between presenting data with infographics vs. data design? This article on the difference between data design and infographics might help you out.

Missing the emotional connection

Data isn’t just about numbers; it’s about people and real-life situations. Don’t forget to sprinkle in some human touch, whether it’s through relatable stories, examples or showing how the data impacts real lives.

Skipping the actionable insights

At the end of the day, your audience wants to know what they should do with all the data. If you don’t wrap up with clear, actionable insights or recommendations, you’re leaving them hanging. Always finish up with practical takeaways and the next steps.

Can you provide some data presentation examples for business reports?

Business reports often benefit from data presentation through bar charts showing sales trends over time, pie charts displaying market share, or tables presenting financial performance metrics like revenue and profit margins.

What are some creative data presentation examples for academic presentations?

Creative data presentation ideas for academic presentations include using statistical infographics to illustrate research findings and statistical data, incorporating storytelling techniques to engage the audience or utilizing heat maps to visualize data patterns.

What are the key considerations when choosing the right data presentation format?

When choosing a chart format, consider factors like data complexity, audience expertise and the message you want to convey. Options include charts (e.g., bar, line, pie), tables, heat maps, data visualization infographics and interactive dashboards.

Knowing the type of data visualization that best serves your data is just half the battle. Here are some best practices for data visualization to make sure that the final output is optimized.

How can I choose the right data presentation method for my data?

To select the right data presentation method, start by defining your presentation’s purpose and audience. Then, match your data type (e.g., quantitative, qualitative) with suitable visualization techniques (e.g., histograms, word clouds) and choose an appropriate presentation format (e.g., slide deck, report, live demo).

For more presentation ideas, check out this guide on how to make a good presentation or use presentation software to simplify the process.

How can I make my data presentations more engaging and informative?

To enhance data presentations, use compelling narratives, relatable examples and fun data infographics that simplify complex data. Encourage audience interaction, offer actionable insights and incorporate storytelling elements to engage and inform effectively.

The opening of your presentation holds immense power in setting the stage for your audience. To design a presentation that conveys your data in an engaging and informative way, try out Venngage’s free presentation maker to pick the right presentation design for your audience and topic.

What is the difference between data visualization and data presentation?

Data presentation typically involves conveying data reports and insights to an audience, often using visuals like charts and graphs. Data visualization, on the other hand, focuses on creating those visual representations of data to facilitate understanding and analysis.

Now that you’ve learned a thing or two about how to use these methods of data presentation to tell a compelling data story, it’s time to take these strategies and make them your own.

But here’s the deal: these aren’t just one-size-fits-all solutions. Remember that each example we’ve uncovered here is not a rigid template but a source of inspiration. It’s all about making your audience go, “Wow, I get it now!”

Think of your data presentations as your canvas – it’s where you paint your story, convey meaningful insights and make real change happen.

So, go forth, present your data with confidence and purpose and watch as your strategic influence grows, one compelling presentation at a time.

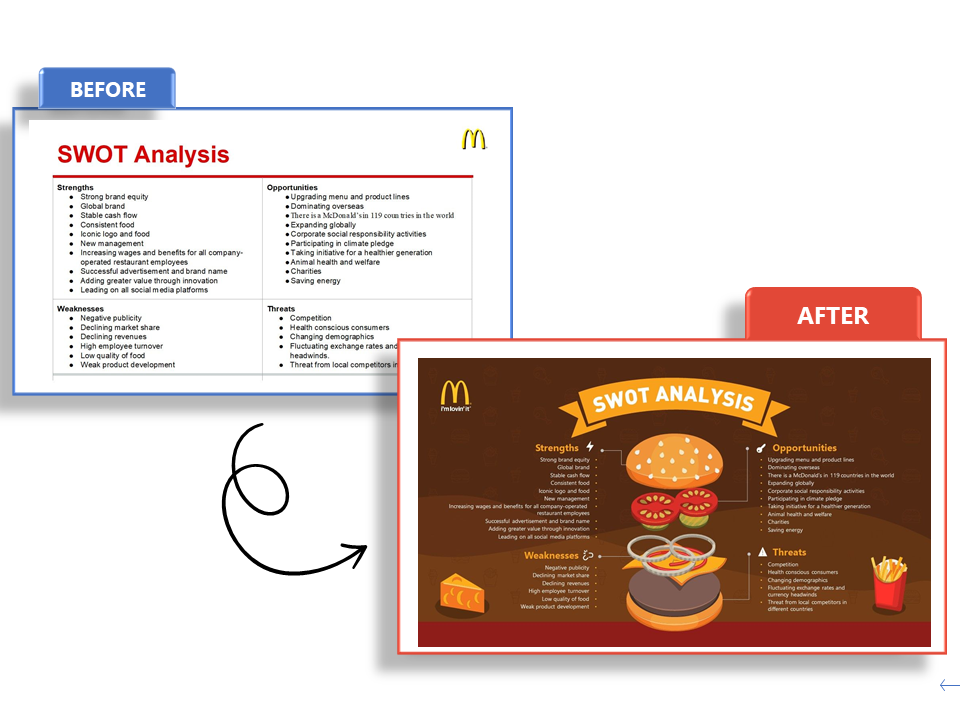

Mastering the Art of Presenting Data in PowerPoint

Presenting data in PowerPoint is easy. However, making it visually appealing and effective takes more time and effort. It’s not hard to bore your audience with the same old data presentation formats. So, there is one simple golden rule: Make it not boring.

When used correctly, data can add weight, authority, and punch to your message. It should support and highlight your ideas, making a concept come to life. But this raises the question: how do you present data in PowerPoint?

After talking to our 200+ expert presentation designers, I compiled information about their best-kept secrets to presenting data in PowerPoint.

Below, I’ll show our designers’ favorite ways to add data visualization for global customers and their expert tips for making your data shine. Read ahead and master the art of data visualization in PowerPoint!

Feel free to explore sections to find what's most useful!

- How to present data in PowerPoint: a step-by-step guide

- Creative ways to present data in PowerPoint

- Tips for data visualization

Seeking to optimize your presentations? – 24Slides designers have got you covered!

How you present your data can make or break your presentation. It can make it stand out and stick with your audience, or make it fall flat from the get-go.

It’s not enough to just copy and paste your data into a presentation slide. Luckily, PowerPoint has many smart data visualization tools! You only need to put in your numbers, and PowerPoint will work it up for you.

Follow these steps, and I guarantee your presentations will level up!

1. Collect your data

First things first: have all your information ready. Especially for long business presentations, there can be a lot of information to consider when working on your slides. Having it all organized and ready to use will make the whole process much easier.

Consider where your data comes from, whether from research, surveys, or databases. Make sure your data is accurate, up-to-date, and relevant to your presentation topic.

Your goal will be to create clear conclusions based on your data and highlight trends.

2. Know your audience

Knowing who your audience is and the one thing you want them to get from your data is vital. If you don’t have any idea where to start, you can begin with these key questions:

- What impact do you want your data to make on them?

- Is the subject of your presentation familiar to them?

- Are they fellow sales professionals?

- Are they interested in the relationships in the data you’re presenting?

By answering these, you'll be able to clearly understand the purpose of your data. As a storyteller, you want to capture your audience’s attention.