Generative AI for Educators

As a teacher, your time is valuable and your students’ needs are broad. With Generative AI for Educators, you’ll learn how to use generative AI tools to help you save time on everyday tasks, personalize instruction, enhance lessons and activities in creative ways, and more.

- Built by AI experts at Google in collaboration with MIT RAISE

- No previous experience necessary

Flexible AI training designed for teachers

This self-paced course fits into a teacher’s busy schedule with flexibility in mind. It offers hands-on, practical experience for teachers across disciplines.

Online, self-paced learning that takes about two hours to complete

Learn more about the course

Developed by AI experts at Google in collaboration with MIT RAISE, this course will help you bring AI into your practice. You’ll also gain a foundational understanding of AI: what it is, the opportunities and limitations of this technology, and how to use it responsibly.

You’ll learn how to use generative AI tools to:

- Save time on everyday tasks like drafting emails and other correspondence

- Personalize instruction for different learning styles and abilities

- Enhance lessons and activities in creative ways

Save time and enhance student learning with AI skills

When you complete the online course, you’ll gain essential AI skills you can apply to your workflow immediately. By using AI as a helpful collaboration tool, you can work smarter, not harder.

Earn a certificate which you can present to your district for professional development (PD) credit, depending on district and state requirements.

Get hands-on experience using generative AI tools and apply your new skills right away.

Bring this course to your school or district

If you’re a school or district leader, we’ve designed this course to fit into a teacher’s standard school day. The five modules are each thirty minutes or less, allowing teachers to fit the training into professional development or planning periods.


Frequently asked questions

Why enroll in Generative AI for Educators?

Generative AI for Educators is a two-hour, self-paced course designed to help teachers save time on everyday tasks, personalize instruction to meet student needs, and enhance lessons and activities in creative ways with generative AI tools, with no previous experience required. Developed by experts at Google in collaboration with MIT RAISE (Responsible AI for Social Empowerment and Education), this no-cost course is built for teachers across disciplines and provides practical, hands-on experience. After completing the course, teachers earn a certificate that they can present to their district for professional development (PD) credit, depending on district and state requirements.

Who is the Generative AI for Educators course for?

Generative AI for Educators is designed for high school and middle school educators of any subject, with no technical experience required. Any teacher who is interested in using AI to save time and enhance student education could benefit from this course.

How are the skills I learn applicable to my work?

This generative AI course for teachers will provide practical applications that help save time by increasing efficiency, productivity, and creativity. The course includes hands-on experience using generative AI tools to do things like write class correspondence (messages, emails, newsletters), create assessments and provide feedback, differentiate instruction to meet various student needs, and create instructional strategies to make lessons more engaging for students.

What will I get when I finish?

After completing the course, teachers earn a certificate that they can present to their district for professional development (PD) credit, depending on district and state requirements.

How much does Generative AI for Educators cost?

The Generative AI for Educators course is provided at no cost.

Who designed Generative AI for Educators?

Generative AI for Educators was designed by AI experts at Google in collaboration with MIT RAISE (Responsible AI for Social Empowerment and Education).

In what languages is Generative AI for Educators available?

Generative AI for Educators is currently available in English, and we are working to offer this course in additional languages. Please check back here for updates.

Looking to help your middle school students learn AI skills?

Check out Experience AI. Created by Google DeepMind and The Raspberry Pi Foundation, this no-cost program provides ready-to-use resources to introduce AI technology in middle school classrooms, including lesson plans, slide decks, worksheets, and videos on AI and machine learning.

What is generative AI?

Generative AI is artificial intelligence that can generate new content, such as text, images, or other media.

Getting Started with AI-Enhanced Teaching: A Practical Guide for Instructors

Introduction

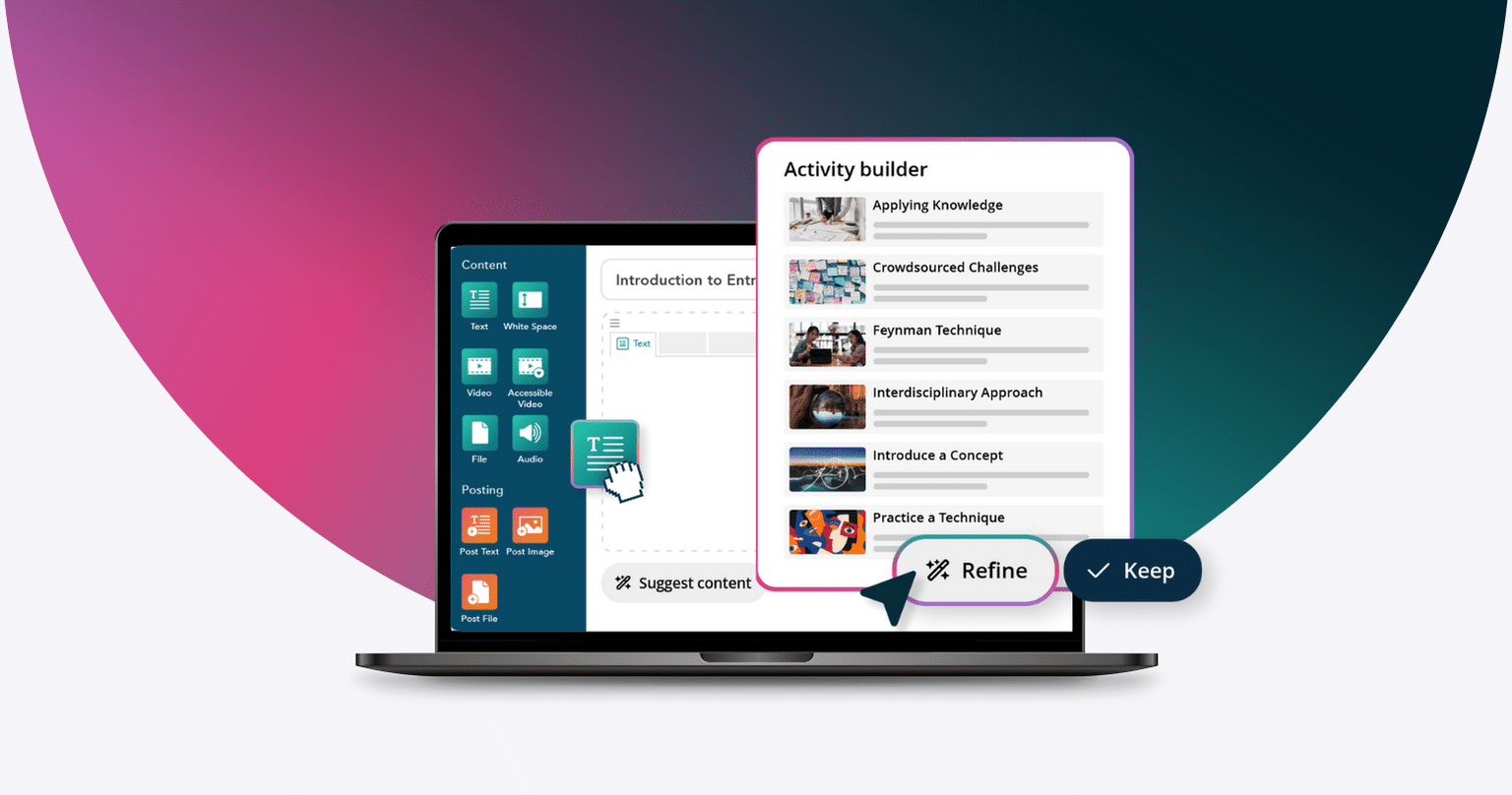

Welcome to our guide to leveraging generative AI for teaching at MIT Sloan. The fundamentals of great teaching haven’t changed with the emergence of new AI tools. However, if you’re struggling to find the time to implement certain research-backed teaching strategies, these new technologies could be just what you need. Here are just a few of the many ways you can use AI in your teaching:

- Do you want to provide students with concrete examples that help illustrate abstract concepts? AI can generate examples on demand.

- Looking to create low-stakes quizzes for comprehension checks? AI can instantly generate practice questions tailored to your needs.

- Want your students to teach new concepts to an inquisitive partner? Consider asking them to converse with an AI model.

This guide will equip you with foundational knowledge, MIT policies, curated tools, ethical considerations, suggested use cases, and avenues to get support when teaching with generative AI tools. Our goal is to provide you with the knowledge and resources to smoothly incorporate these technologies into your teaching.

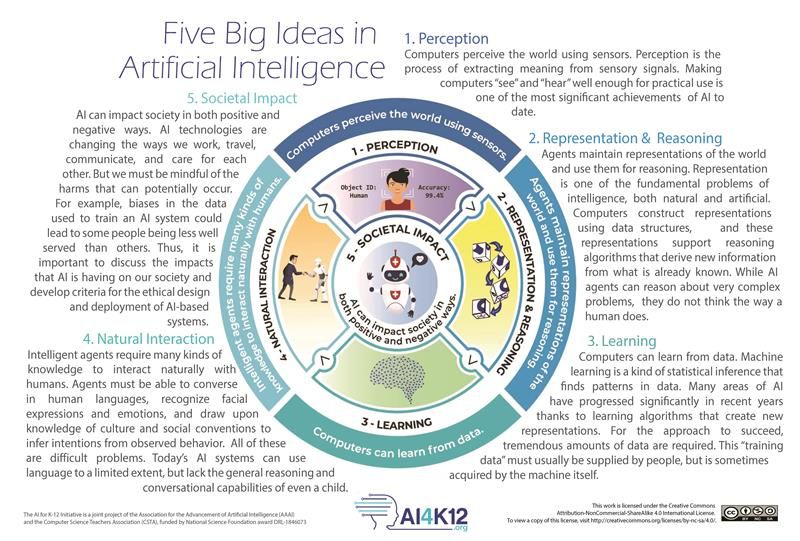

Generative AI is a subset of artificial intelligence that learns from data to produce new, unique outputs on a vast scale, ranging from educational content to software code and more. Central to this are foundational AI models trained on massive datasets. Many generative AI models, such as the large language models behind chatbots, are essentially advanced language prediction tools.

There’s a lot of jargon involved in discussing generative AI systems. Learn more about generative AI terminology in the AI Glossary.

The following video is the first in Wharton Interactive’s five-part course on Practical AI for Instructors and Students. In these videos, MIT Sloan alum and Wharton Associate Professor Ethan Mollick, along with Lilach Mollick, Director of Pedagogy at Wharton Interactive, provide an accessible overview of large language models and their potential for enhancing teaching and learning.

In this first video, you can learn about the following:

- Why AI is now accessible to everyone and how students are using it

- What we mean by AI, specifically large language models and generative AI

- How models like ChatGPT work and their surprising capabilities

- The potentially outsized impact of AI on educators and creative professionals

- Ethical considerations and risks related to generative AI

You can watch the other four videos in the Mollicks’ Practical AI for Instructors and Students Course to learn more about large language models, prompting AI, using AI to enhance your teaching, and how students can use AI to support their learning.

Generative AI Tools

We encourage you to spend some time exploring the generative AI tools in this resource hub. It’s important to get a sense of any technology’s capabilities and limitations before you integrate it into your teaching. Also, trying these technologies yourself may help you get a sense of how your students are using generative AI.

Before you start using AI tools in your teaching, make sure to review MIT Sloan’s Guiding Principles for the Use of Generative AI in Courses.

The tools we’ve curated in this resource hub fall into these categories:

- AI Writing and Content Creation Tools: Large language models accessed through tools like ChatGPT and Claude can help generate written content, provide grammar suggestions, summarize texts, and more. Our overview of AI writing assistants covers the types of support they can provide along with important ethical considerations. While not a substitute for human writing, these tools can help accelerate drafting and revision.

- AI Data Analysis and Quantitative Tools: Complex data sets are now more understandable thanks to AI analytics and visualization platforms. Explore options like IBM Watson and ToolsAI to see how algorithms can help process, interpret, and generate insights based on quantitative data. Consider use cases for statistical modeling, data visualization, and other applications while keeping key limitations in mind.

- AI Image Generation Tools: Models like DALL-E 3 and Stable Diffusion enable the creation of original images, videos, and other multimedia just by describing desired outputs. With experimentation, they may enable you to transform your visual media workflows.

While you explore each platform’s potential, make sure to closely monitor for quality, bias, and responsible usage.

Ethical Considerations

The emergence of powerful generative AI systems presents exciting possibilities for enhancing teaching and learning. However, integrating these technologies into teaching also raises important ethical questions. Three key areas of concern are data privacy, AI-generated falsehoods, and bias in AI systems.

Data Privacy

Make sure to treat unsecured AI systems like public platforms. As a general rule, and in accordance with MIT’s Written Information Security Policy, you should never enter any data or input that is confidential or sensitive into publicly accessible generative AI tools. This includes (but is not limited to) individual names, physical or email addresses, identification numbers, and specific medical, HR, and financial records, as well as proprietary company details and any research or organizational data that are not publicly available. If in doubt, please consult with MIT Sloan Technology Services Office of Information Security.

Note that some of this data is also governed by FERPA (Family Educational Rights and Privacy Act), the federal law in the United States that mandates the protection of students’ educational records (U.S. Department of Education), as well as various international privacy regulations including the European GDPR and Chinese PIPL.

Microsoft Copilot provides the MIT Sloan community with data-protected access to the AI tools GPT-4 and DALL-E 3. Chat data is not shared with Microsoft or used to train their AI models. Access Microsoft Copilot by logging in with your MIT Kerberos account at https://copilot.microsoft.com/. To learn more, see What is Microsoft Copilot (AI Chat)?

Beyond never sharing sensitive data with publicly available AI systems, we recommend that you remove or change any details that can identify you or someone else in any documents or text that you upload or provide as input. If there’s something you wouldn’t want others to know or see, it’s best to keep it out of the AI system altogether (Nield, 2023). This is not just about personal details, but also proprietary information (including ideas, algorithms or code), unpublished research, or sensitive communications.

It’s also essential to recognize that once data is entered into most AI systems, it’s challenging, if not impossible, to remove it (Heikkilä, 2023). Always exercise caution and make sure any information you provide aligns with your comfort level and understanding of its potential long-term presence in the AI system, as well as with MIT’s privacy and security requirements.
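As a rough illustration of removing identifying details before providing text as input, the Python sketch below masks email addresses and long digit runs. The function name `redact`, the two patterns, and the assumption that six or more consecutive digits indicate an identification number are all hypothetical choices made for this example; they are not an MIT tool or policy. Simple patterns like these will miss names, addresses, and most other identifiers, so a manual read-through is still needed.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
# They do NOT detect names, street addresses, or free-form identifiers.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
ID_NUMBER = re.compile(r"\b\d{6,}\b")  # assumption: 6+ digits in a row is an ID


def redact(text: str) -> str:
    """Mask email addresses and long digit runs before sharing text."""
    text = EMAIL.sub("[EMAIL]", text)
    return ID_NUMBER.sub("[ID]", text)


sample = "Contact jdoe@example.edu about student 912345678."
print(redact(sample))
```

A script like this can serve as a first pass, but it does not make a document safe to share on its own.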

Falsehoods and Bias

There are well-documented issues around AI systems generating content that includes falsehoods (“hallucinations”) and harmful bias (Germain, 2023; Nicoletti & Bass, 2023). Educators have a responsibility to monitor AI output, address problems promptly, and encourage critical thinking about AI’s limitations.

We encourage you to review our resources on protecting privacy, integrating AI responsibly into your course, and mitigating AI’s issues with hallucinations and bias:

- Navigating Data Privacy: Using generative AI tools to enhance your teaching requires a strong commitment to data privacy. This article outlines considerations for protecting your and students’ privacy when using publicly available generative AI tools for teaching and learning. These include avoiding sharing sensitive data, treating AI inputs carefully, and customizing privacy settings.

- Practical Strategies for Teaching with AI: This guide offers strategies for harnessing AI tools to augment education while addressing AI biases and hallucinations, guiding student engagement with AI tools, and developing AI literacy.

- When AI Gets It Wrong: Addressing AI Hallucinations and Bias: This article provides an overview of the biases and inaccuracies currently common in generative AI outputs. It outlines strategies for identifying and mitigating the impact of problematic AI content.

By proactively addressing ethical considerations and AI’s limitations, we can realize the promise of generative AI while upholding principles of fairness, accuracy, and transparency.

AI-Powered Teaching Strategies

Thinking about using generative AI in your teaching but not sure where to start? In this section, we’ll walk through several simple strategies for implementing research-based teaching best practices with the help of generative AI tools. These approaches are grounded in the principles of Universal Design for Learning (UDL) and insights from the learning sciences. You can use the strategies as-is or think about creative ways to adapt them to your own courses.

1. Use AI to Generate Concrete Examples

Teaching often involves explaining abstract concepts or theories. While these are essential for academic understanding, they can sometimes be challenging for students to grasp without real-world context. You can use generative AI tools to come up with many concrete examples to make abstract ideas more relatable and understandable for students.

How to Implement This Strategy:

- Identify an abstract concept. Select one abstract concept or theory that you’ll be covering in your lesson.

- Choose a generative AI tool. Select one or several AI Writing and Content Creation Tools that you’ll use for this task.

- Teach the AI. Prompt your chosen AI tool to engage with the concept you’ve selected. If the tool is connected to the internet, you can ask it to look up and summarize the concept. If the tool is not connected to the internet, provide it with open-source content describing the concept and ask it to summarize that information.

- Prompt the AI. Ask your chosen chatbot for examples or applications of the chosen concept. You can use a prompt like this one created by Ethan Mollick and Lilach Mollick: “I would like you to act as an example generator for students. When confronted with new and complex concepts, adding many and varied examples helps students better understand those concepts. I would like you to ask what concept I would like examples of, and what level of students I am teaching. You will look up the concept, and then provide me with four different and varied accurate examples of the concept in action” (Mollick & Mollick, 2023-b).

- Review and select examples. From the generated examples, select the most relevant and clear examples that align with the lesson’s objectives. Always verify the accuracy of the examples provided by the AI using trusted sources. Make sure to address and eliminate any harmful bias in AI-generated examples.

- Integrate the examples into lessons. Incorporate these examples into your lectures, discussions, or assignments.

What’s the research? Concrete examples help bridge the gap between abstract theories and real-world applications. Research shows that exploring tangible instances can help students better relate to and understand complex concepts, activating their background knowledge and making learning experiences more meaningful (Smith & Weinstein, n.d.-a; CAST, n.d.-b).
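If you reuse the same request across several concepts, a small template can keep the wording consistent. The sketch below is one possible way to do that in Python. It is a simplified variation on the Mollick and Mollick prompt quoted above that supplies the concept and student level up front instead of having the AI ask for them. The function name `build_example_prompt` and its parameters are hypothetical, and the code only builds the text to paste into a chatbot; it does not call any AI service.

```python
def build_example_prompt(concept: str, level: str, n_examples: int = 4) -> str:
    """Return prompt text asking an AI tool for varied examples of a concept.

    Hypothetical helper: the wording is adapted from the prompt quoted above,
    with the concept and student level filled in by the instructor.
    """
    return (
        "I would like you to act as an example generator for students. "
        f"The concept is: {concept}. The students are: {level}. "
        f"Provide {n_examples} different and varied accurate examples "
        "of the concept in action."
    )


prompt = build_example_prompt("opportunity cost", "first-year MBA students")
print(prompt)
```

Whatever the prompt produces still needs the review step described above: verify accuracy against trusted sources and check for harmful bias before using any example in class.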

2. Use AI to Create Practice Quizzes

Frequent low-stakes quizzes are a great way to help students test their knowledge and reinforce their understanding. However, creating quizzes can be time-consuming for faculty. With generative AI tools like ChatGPT, it’s now possible to streamline the quiz creation process. You can use AI to generate practice quizzes tailored to specific topics. Moreover, these AI-generated quizzes can be adapted to fit various teaching approaches and course requirements, offering a flexible solution for assessment needs.

- Choose your topics. Identify the topics or concepts for which you want to create practice quizzes.

- Select a generative AI tool. Identify one or several AI Writing and Content Creation Tools that you’ll use for this task.

- Prompt the AI. Ask the AI tool to generate quiz questions related to these topics. Use a quiz-question generating prompt like this one created by Ethan Mollick and Lilach Mollick: “You are a quiz creator of highly diagnostic quizzes. You will look up how to do good low-stakes tests and diagnostics. You will then ask me two questions. (1) First, what, specifically, should the quiz test. (2) Second, for which audience is the quiz. Once you have my answers you will look up the topic and construct several multiple choice questions to quiz the audience on that topic. The questions should be highly relevant and go beyond just facts. Multiple choice questions should include plausible, competitive alternate responses and should not include an ‘all of the above’ option. At the end of the quiz, you will provide an answer key and explain the right answer” (Mollick & Mollick, 2023-b).

- Review and refine the results. Examine the generated questions for relevance and accuracy. Remove any content that perpetuates harmful biases. Modify or refine as necessary.

- Distribute the quizzes to students. Share the practice quizzes with students. You may want to incorporate the questions into a Canvas quiz.

What’s the research? Retrieval practice, or the act of recalling information from memory, strengthens memory retention (Smith & Weinstein, n.d.-d). Practice quizzes offer students an opportunity to test their understanding and reinforce their learning, making the information more retrievable in the future.
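Part of the review step can be mechanical. The Python sketch below assumes you have copied AI-generated multiple-choice questions into a simple structure, then flags a few structural problems: an "all of the above" option (which the prompt above asks the AI to avoid), duplicate options, and an answer key that does not match any option. The `Question` class and `review_flags` function are hypothetical names for this example. The check does not assess accuracy, relevance, or bias, which still require your own judgment.

```python
from dataclasses import dataclass


@dataclass
class Question:
    """Hypothetical container for one AI-generated multiple-choice item."""
    stem: str
    options: list[str]
    answer: str


def review_flags(q: Question) -> list[str]:
    """Return structural problems found in a question (empty list if none)."""
    flags = []
    lowered = [o.strip().lower() for o in q.options]
    if "all of the above" in lowered:
        flags.append("contains 'all of the above'")
    if len(set(lowered)) != len(lowered):
        flags.append("duplicate options")
    if q.answer.strip().lower() not in lowered:
        flags.append("answer key not among options")
    return flags


q = Question(
    stem="What does retrieval practice strengthen?",
    options=["Memory retention", "Typing speed", "All of the above"],
    answer="Memory retention",
)
print(review_flags(q))
```

Questions that come back with an empty list have passed only these structural checks and still need to be read for content.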

3. Assign Students to Generate Visual Summaries

Visual aids have always been a cornerstone in effective teaching, aiding in comprehension and retention. With the rise of image-generating AI models, we now have new tools in hand to help create these visual aids. In this use case, you’ll ask students to craft visual summaries of specific topics, blending both verbal descriptions and AI-generated imagery. This not only deepens their understanding but also fosters creativity and critical thinking as they evaluate and refine the visuals produced by AI tools.

- Assign topics. Provide students with specific topics for which they should create visual summaries.

- Guide students to explore AI Image Generation Tools. Follow the tips in our article Practical Strategies for Teaching with AI to set your students up for success with their chosen AI tool. Make sure they are aware of generative AI’s limitations and privacy implications.

- Have students create visual summaries. Ask students to find or generate images that they can use to create visual aids for the assigned topics. Encourage students to combine text and visual information to summarize the topic’s main points.

- Review and discuss students’ work. Examine the visual summaries in class, discussing the concepts and clarifying any misconceptions. If this assignment is graded, make sure to grade based on conceptual understanding rather than image quality.

What’s the research? Dual coding is when learners interact with content through both verbal and visual information, enhancing memory and understanding (Smith & Weinstein, n.d.-b). This research-backed study strategy aligns with the Universal Design for Learning checkpoint “Illustrate through multiple media” (CAST, n.d.-a). Visual summaries allow students to integrate two forms of information, deepening their comprehension and making the learning experience more engaging.

4. Ask Students to Teach the AI

Deep understanding often comes from the act of explaining. In the realm of education, having students articulate their understanding of a concept can solidify their grasp and highlight areas needing further clarification. With the advent of AI tools like ChatGPT, students now have an interactive platform where they can practice this act of elaboration. By engaging in detailed conversations with the AI, students can receive instant feedback, refine their understanding, and practice the art of explanation.

To see what this strategy can look like in action, check out our blog post: Harnessing AI in Finance: Eric So’s Innovative Take on Teaching Value Investing.

- Choose a generative AI tool. Select a free AI Writing and Content Creation Tool that students can use for this activity.

- Introduce your chosen platform to students. Follow the tips in our article Practical Strategies for Teaching with AI to set your students up for success with their chosen AI tool. Make sure they are aware of generative AI’s limitations and privacy implications.

- Assign topics. Provide students with specific topics or concepts they should explain to the AI.

- Invite students to interact with the AI. Encourage students to have detailed conversations with the AI, explaining concepts and receiving feedback.

- Reflect and discuss. Ask students to reflect on their conversation with the AI and identify areas for improvement.

What’s the research? Elaborative interrogation is a research-backed study strategy in which students deepen their understanding by asking questions and explaining concepts (Smith & Weinstein, n.d.-c). By interacting with AI, students can practice this strategy, enhancing their comprehension and reinforcing their learning.

Get Support

As you consider how to best use generative AI in your course, questions will arise. Contact us for a personalized consultation. We’re here to be your thought partner during your development and implementation process.

Integrating artificial intelligence into your teaching offers both opportunities and challenges. In this guide, we’ve provided an initial roadmap to begin exploring this new space. We’ve covered the basics of what generative AI is, considered its potential benefits, and explored practical use cases to incorporate generative AI tools into teaching. We’ve also emphasized the importance of ethical considerations like prioritizing student privacy and addressing potential biases.

While AI offers powerful tools to augment our teaching methods, the human touch remains irreplaceable. The goal is not to replace educators but to empower them with additional resources. By combining the strengths of AI with the expertise of skilled instructors, we can create richer, more effective learning experiences for our students.

As you move forward, remember that you’re not alone on this journey. Our team is here to support you, answer questions, and provide guidance. We’re excited to see how you’ll harness the potential of AI in your classrooms and look forward to hearing about your experiences. Let’s explore, learn, and innovate together.

MIT Sloan Faculty: We want to know how you’re incorporating generative AI in your courses, in ways big or small. Your experiences are more than just personal milestones; they’re shaping the future of pedagogy. By sharing your insights, you contribute to a community of innovation and inspire colleagues to venture into new territories. Contact us to be featured. We’re here to help you tell your story!

CAST. (n.d.-a). Checkpoint 2.5: Illustrate through multiple media. UDL Guidelines. https://udlguidelines.cast.org/representation/language-symbols/illustrate-multimedia

CAST. (n.d.-b). Checkpoint 3.1: Activate or supply background knowledge. UDL Guidelines. https://udlguidelines.cast.org/representation/comprehension/background-knowledge

Germain, T. (2023, April 13). ‘They’re all so dirty and smelly:’ study unlocks ChatGPT’s inner racist. Gizmodo. https://gizmodo.com/chatgpt-ai-openai-study-frees-chat-gpt-inner-racist-1850333646

Heikkilä, M. (2023, April 19). OpenAI’s hunger for data is coming back to bite it. MIT Technology Review. https://www.technologyreview.com/2023/04/19/1071789/openais-hunger-for-data-is-coming-back-to-bite-it

Mollick, E., & Mollick, L. (2023, July 31-a). Practical AI for instructors and students part 1: Introduction to AI for teachers and students [Video]. YouTube. https://www.youtube.com/watch?v=t9gmyvf7JYo

Mollick, E., & Mollick, L. (2023, March 17-b). Using AI to implement effective teaching strategies in classrooms: Five strategies, including prompts. Available at SSRN: http://dx.doi.org/10.2139/ssrn.4391243

Nicoletti, L., & Bass, D. (2023, June 14). Humans are biased. Generative AI is even worse. Bloomberg Technology + Equality. https://www.bloomberg.com/graphics/2023-generative-ai-bias

Nield, D. (2023, July 16). How to use generative AI tools while still protecting your privacy. Wired. https://www.wired.com/story/how-to-use-ai-tools-protect-privacy

Smith, M., & Weinstein, Y. (n.d.-a). Learn how to study using… concrete examples. The Learning Scientists. https://www.learningscientists.org/blog/2016/8/25-1

Smith, M., & Weinstein, Y. (n.d.-b). Learn how to study using… dual coding. The Learning Scientists. https://www.learningscientists.org/blog/2016/9/1-1

Smith, M., & Weinstein, Y. (n.d.-c). Learn how to study using… elaboration. The Learning Scientists. https://www.learningscientists.org/blog/2016/7/7-1

Smith, M., & Weinstein, Y. (n.d.-d). Learn how to study using… retrieval practice. The Learning Scientists. https://www.learningscientists.org/blog/2016/6/23-1


Bold technology, applied responsibly

AI can never replace the expertise, knowledge or creativity of an educator, but it can be a helpful tool to enhance and enrich teaching and learning experiences. As part of our Responsible AI practices, we use a human-centered design approach. And when it comes to building tools for education, we are especially thoughtful and deliberate.

Elevate educators

AI can help educators boost their creativity and productivity, giving them time back to invest in themselves and their students.

More security with Gmail and ChromeOS

With 99.9% of spam, phishing attempts, and malware blocked by AI-powered detection and zero reported ransomware attacks on any ChromeOS device, we make security our top priority.

More productivity and creativity with Gemini

With Gemini, educators have an AI assistant that can help them save time, get inspired with fresh ideas, and create captivating learning experiences for every student.

More interactivity with YouTube videos in Classroom

Educators can make learning more engaging through video lessons, and save time with AI-suggested questions for YouTube videos in Classroom (coming soon).

More power to get you through your day with Chromebook Plus

Chromebook Plus is affordable, powerful, and built with the best of Google AI to meet the needs of both teachers and staff.

Make learning personal for students

AI can help meet students where they are, so they can learn in ways that work for them.

More supportive with practice sets

Practice sets in Google Classroom enable educators to automatically provide their students with real-time feedback and helpful in-the-moment hints if they get stuck.

More accessible with Chromebooks

AI built into Chromebooks provides advanced text-to-speech, dictation, and live and closed captions.

More adaptive with Read Along in Classroom

The Read Along integration with Classroom provides real-time feedback on pronunciation to help build reading skills at a personal pace.

Gemini for Google Workspace

Gemini is an AI assistant across Google Workspace for Education that helps you save time, create captivating learning experiences, and inspire fresh ideas — all in a private and secure environment. Gemini Education can be purchased as an add-on to any Google Workspace for Education edition.


AI training, toolkits and guides for educators

- Guardian's Guide to AI

- Generative AI for Educators

- Google’s LearnLM Models

- Get Started with Gemini for Workspace

- Applied Digital Skills: Discover AI in Daily Life

- A Guide to AI in Education

- AI Track of the Google for Education Pilot Program (pilot program)

- Teach AI's AI Guidance for Schools Toolkit

- How Google for Education Champions Use AI

- Future of Education Global Research Report

- Experience AI, Raspberry Pi, and Google DeepMind

- Grow with Google: AI and Machine Learning Courses

- Google Cloud Skills Boost: Intro to Gen AI Learning

- Introduction to Machine Learning

- Google Arts & Culture: Overview of AI

Teaching for tomorrow.

A Google for Education YouTube series, featuring conversations with thought leaders who are shaping the future of education.


Season 2 Trailer

In season 2 of Teaching for Tomorrow, educational thought leaders talk about the potential of AI to transform teaching and learning, from elevating educators to making learning more inclusive and personal for students.


Interview with David Hardy

David Hardy, CEO of All-365 and Made by Change, explains how digital technology can help make education and learning more inclusive.

Interview with Lisa Nielsen

Lisa Nielsen, Founder of The Innovative Educator, explains how generative AI can help teachers spend more time with students, personalize learning, and connect their classrooms to the world.

How Google is making AI helpful for everyone

Learn more about our company approach to developing and harnessing AI.

- Visit Google AI

Have questions? We’ve got answers

Safety and privacy

How does Google keep a student’s data safe and secure?

Google Workspace for Education is built on our secure, reliable, industry-leading technology infrastructure. Users get the same level of security that Google uses to protect our own services, which are trusted by over a billion users around the world every day. While AI capabilities introduce new ways of interacting with our tools, our overarching privacy policies and practices help keep users and organizations in control of their data. In addition, all core Workspace tools – like Gmail, Google Calendar, and Classroom – meet rigorous local, national, and international compliance standards, including GDPR, FERPA, and COPPA. Chromebooks are designed with multiple layers of security to keep them safe from viruses and malware without any additional software required. Each time a Chromebook powers on, security is checked. And because they can be managed centrally, Chromebooks make it easy for school IT administrators to configure policies and settings, like enabling safe browsing or blocking malicious sites.

Is Google Workspace for Education data used to train Google’s generative AI tools like Gemini and Search?

No. When using Google Workspace for Education Core Services, your customer data is not used to train or improve the underlying generative AI and LLMs that power Gemini, Search, and other systems outside of Google Workspace without permission. And prompts entered when interacting with tools like Gemini are not used without permission beyond the context of that specific user session.

How does Google ensure its AI-enabled technology is safe for kids?

Google takes the safety and security of its users very seriously, especially children. With technology as bold as AI, we believe it is imperative to be responsible from the start. That means designing our AI features and products with age-appropriate experiences and protections that are backed by research. And prior to launching any product, we conduct rigorous testing to ensure that our tools minimize potential harms, and work to ensure that a variety of perspectives are included to identify and mitigate unfair bias.

What is Google’s approach to privacy with AI in education?

Across Google Cloud & Google Workspace, we’ve long shared robust privacy commitments that outline how we protect user data and prioritize privacy. AI doesn’t change these commitments - it actually reaffirms their importance. We are committed to preserving our customers’ privacy with our AI offerings and to supporting their compliance journey. Google Cloud has a long-standing commitment to GDPR compliance, and AI is no different in how we incorporate privacy-by-design and default from the beginning. We engage regularly with customers, regulators, policymakers, and other stakeholders as we evolve our offering to get their feedback for Edu AI offerings which process personal data.

Partnership and resources

Does Google consult with educators and experts when developing AI tools for use in the classroom?

Yes. A big component of being thoughtful with new technology is our commitment to partnering with schools and educators, as well as other education experts (like learning scientists) and organizations, along the way. We don’t just build for educators, we build with them. Through our Customer Advisory Boards and Google for Education Pilot Program, we also work directly with school communities around the world to gather feedback on our products and features before making them widely available. By listening to their perspectives, understanding how they’re using our tools, and addressing their challenges, we can be thoughtful in our product development and implementation. We also roll out new features gradually, ensuring that schools can stay in control of what works best for them.

What resources are being provided by Google to educate teachers on AI?

Teams across Google are actively creating and curating content and tutorials. Here are a few of our favorites, with more on the way:

- Generative AI for Educators

- A Guide to AI in Education

- Grow with Google: AI and machine learning courses

- Applied Digital Skills: Discover AI in Daily Life

- Google Cloud Skills Boost: Intro to Gen AI Learning Path

- Introduction to Machine Learning

- Google Arts & Culture: overview of AI

- Experience AI



Course Info

Instructors

- Prof. Harold Abelson

- Prof. Randall Davis

- Prof. Cynthia Breazeal

- Safinah Ali

- Prerna Ravi

Departments

- Electrical Engineering and Computer Science

- Media Arts and Sciences

As Taught In

- Artificial Intelligence

- Curriculum and Teaching

- Educational Technology

Learning Resource Types

Generative Artificial Intelligence in K–12 Education

Course Description


Microsoft Learn Educator Center

AI for education: Resources and learning opportunities

Explore resources and courses on how to use artificial intelligence (AI) for educational purposes with Microsoft.

Educator Center trainings

Navigate AI in education by looking at essential AI concepts, techniques, and tools.

Explore using Microsoft Copilot in education by learning basic concepts, modes, and features.

Build fluency through independent practice and save time with Insights.

Interact with Microsoft Copilot in Bing to learn about the capabilities of generative AI.

Help learners discover, interact, and create with AI and generative AI including responsible use of AI and prompt engineering.

Explore the potential of artificial intelligence in education.

AI classroom toolkit

Classroom toolkit: Unlocking generative AI safely and responsibly is a creative resource that blends engaging narrative stories with instructional information to create an immersive and effective learning experience for educators and students aged 13-15 years.

Reading Coach

Engage students and improve reading fluency with AI-powered stories and personalized practice. Learners create unique AI-generated stories that are moderated for content quality, safety and age appropriateness.

AI in education 101

This deep dive provides the latest updates from Microsoft EDU across the AI spectrum.

Educators can use this curated collection of Bing Chat prompts to help craft engaging lessons, respond to student inquiries, or streamline administrative tasks and improve productivity.

Enhance your higher education curriculum with continually updated technical AI content for your classroom. Your students will benefit from the technical skilling that is relevant to industry needs and real-world job responsibilities.

Microsoft works with communities, nonprofits, education institutions, businesses, and governments to help people excluded from the digital economy gain the skills, knowledge, and opportunity to gain jobs and livelihoods. Learn about AI and access resources and training on in-demand AI and machine learning skills for jobs and organizations.

Microsoft Copilot resources for education

Find resources to get started with Copilot, the AI assistant for educators, staff, and higher education students.

Microsoft Education AI blog posts

Learn about the latest updates and availability for Microsoft Copilot in education.

Enhance AI literacy with 11 resources from Microsoft Education useful for educators, parents/guardians, and curious learners.

The next wave of AI innovations includes expanded Copilot for Microsoft 365 availability, Loop coming to education, Reading Coach, and features designed to free up time for educators and personalize learning.

Build AI literacy with Minecraft

Teach AI across the curriculum with Minecraft Education! Explore immersive lessons and videos to help your learners understand AI, download our prompt-writing resource for educators to create engaging lesson plans, and spark classroom discussion on this critical topic.

Resources from our partners

Discover the world of AI and its potential in education with Code.org’s learning series for teachers.

Toolkit to create guidance to help communities realize the potential benefits of incorporating AI in primary and secondary education.

Introduce students to how AI works and why it matters.

Technical AI paths

Microsoft Azure AI fundamentals

Introduction to fundamental concepts related to artificial intelligence (AI) and the services in Microsoft Azure that can be used to create AI solutions.

Prepare to teach AI-900 Microsoft Azure AI fundamentals in academic programs

Learn about the Microsoft Learn for Educators program and how to best deliver its content to your students. Prepare to deliver Microsoft’s Azure AI fundamentals.

Develop Generative AI Solutions with Azure OpenAI Service

Learn how to provision Azure OpenAI service, deploy models, and use them in generative AI applications.

Resources for students

Learn what AI is, why it's needed, and how cloud computing and platforms relate to it.

Introduction to how AI is applied in daily life and across industries, including NLG and speech recognition.

Explore the roles and types of APIs and databases and how they're a part of programming.

Explore two computing languages used for AI programming, R and Python, and Minecraft Hour of Code.


Introduction to Teaching with Artificial Intelligence (AI)

Penn GSE Professional Learning Program

Advances in Artificial Intelligence (AI) are set to revolutionize teaching and learning. Explore transformative ideas, design innovative learning activities, and collaborate with peers to integrate AI into your classroom.

What Sets Us Apart

About the Program

The Introduction to Teaching with Artificial Intelligence (AI) program convenes K-12 and higher-education educators who are eager to explore how artificial intelligence can enhance their learning environments.

Application Deadline

- Final Deadline: July 31, 2024

Program Start: September 10, 2024

Certificate Offered: Penn GSE Certificate of Participation

Credits: 4 Continuing Education (CE) Credits; 40 Pennsylvania ACT 48 Credits

- Standard rate: $1,500

- Leadership track rate: $2,250

- 30% discount for School District of Philadelphia employees

- 15% discount available for:

- Penn GSE and Center for Professional Learning alumni

- Penn employees

- Groups of 5+ from the same organization, contact us prior to applying.

Ideal Candidates

- K-12 teachers and administrators

- Instructional leaders (e.g., coaches, mentors, professional development leaders)

- Higher education teachers and administrators

- Teachers in informal and non-traditional settings

This program aims to engage professionals in exploring the benefits and challenges of using AI in education. Through the course, gain exposure to AI tools to support teaching and learning, as well as develop a greater capacity to perceive the nuances and dilemmas of this technology, pedagogy, and its effect on education.

The Introduction to Teaching with AI program includes three parts.

Part I: Explore You will deepen your own understanding of the recent advances in AI, as well as the questions, tensions, and possibilities it brings to the classroom, by exploring resources, materials, and videos on our online learning platform.

Part II: Envision You will engage in a series of live virtual workshops where you will design learning activities and experiences that you intend to implement in your own classroom. Workshops in previous program cohorts have included:

- An Introduction to AI: Understanding the scope of what foundation models can do - Dr. Ryan Baker

- Considering the dangers of using AI in the classroom and supporting students to be thoughtful users of AI tools - Dr. Sarah Schneider Kavanagh

- Integrating AI to augment student thinking and collaboration - Dr. Bodong Chen

- Designing writing assignments with AI - Dr. Amy Stornaiuolo

Part III: Enact You will implement learning activities and experiences with your students in your local context. You will also participate in live virtual sessions with small teams of colleagues to connect, collaborate, reflect, and learn from each other's experiences.

By the end of the program, you will gain the ability to:

- Utilize tools such as OpenAI, ChatGPT4, and Generative AI.

- Apply scaffolding techniques taught by Penn GSE faculty experts in instructional design and learning technologies to enhance your curriculum.

- Assess how AI affects students' abilities to learn.

- Make informed decisions regarding AI implementation, usage, and policies.

Fall 2024 Schedule

| Date | Time | Session |
| --- | --- | --- |
| September 10, 2024 | Asynchronous session; ~10 hours | Learners will begin to explore resources, materials, and videos to help deepen their understanding of the recent advancements in artificial intelligence. |
| October 10, 2024 | 5:00-7:00 p.m. (ET) | Workshop #1: Designing writing assignments with AI - Dr. Amy Stornaiuolo |
| October 15, 2024 | 5:00-7:00 p.m. (ET) | Workshop #2: An Introduction to AI: Understanding the scope of what foundation models can do - Dr. Ryan Baker |
| October 17, 2024 | 5:00-7:00 p.m. (ET) | Workshop #3: Integrating AI to augment student thinking and collaboration - Dr. Bodong Chen |
| October 22, 2024 | 5:00-7:00 p.m. (ET) | Workshop #4: Considering the dangers of using AI in the classroom and supporting students to be thoughtful users of AI tools - Dr. Sarah Schneider Kavanagh |
| October 29, 2024 | 5:00-7:00 p.m. (ET) | Workshop #5: Project launch design |
| Part III - General Track: October - December | 2-4 hours per session; dates and times to be scheduled. | Learners will implement their AI activities and experiences in their classrooms and share their findings in small discussion groups. The cohort will be divided into sub-groups based on learners’ time zones to connect, collaborate, reflect, and learn from each other’s experiences in live virtual sessions. |
| Part III - Leadership Track: November 13, 2024 | 5 - 8 p.m. | |

New Offering: Leadership Track

As AI continues to impact education, school and district leaders must carefully consider, develop, and implement guidance for teachers, students, parents, and tech staff. If you are in a leadership position, join our specialized AI Leadership Track to enhance your leadership skills and capabilities around AI. Develop a school resource such as incisive policies and professional development aligned with your community's mission alongside other educational leaders.

Delve deeper into thought-provoking questions such as:

- How does and how should AI impact student learning, teacher planning, and overall school culture?

- In what ways does the system need to be redesigned, and which parts of it (teachers, students, curriculum, AI, pedagogies/teaching/assessment practices, resources, beliefs) need to be adjusted as a result of AI?

- How do you design policy and professional development that cultivates informed and ethical AI use?

The Leadership Track follows the same course of study through Parts I and II. During Part III, join other educational leaders for a specialized home team led by Dr. Tyler Thigpen, an expert in leading education innovation and transformation.

- Meet with experts in the field in an exclusive small group session

- Engage in a 3-hour workshop designed for leaders to create a resource for your school, such as policies, professional development, and assessment practices that address AI and align with your community’s mission.

Continuing Education Credits - FAQs & Policy

Frequently Asked Questions

What are the benefits of obtaining Continuing Education (CE) credits?

CE credits are issued on an official University of Pennsylvania transcript. Having an official record of your participation in this program can add credibility to the work that you invest in completing it. In some school districts, CE credits can be used to help educators advance along their pay scale.

What style of grading does this program follow?

Programs that offer CE credits award "Pass/Fail" grading.

Can I add CE credits after I get started in the program?

You must decide before you start the program if you will be participating as a learner who is completing the program for CE credentialing. You will not be able to opt in after the program starts.

Can I receive partial credit or opt out of receiving CE credits after I start the program?

No, this program cannot offer partial credit if a learner cannot complete the program. However, a learner can unenroll from the program by the drop deadline and will be responsible for 100% of the program fee.

Why might you not want CE credits?

If you choose to receive CE credits but do not successfully complete this program, you risk receiving an F on your permanent Penn transcript.

Can participating educators receive Pennsylvania Act 48 credits if they don't register for CE credits?

Yes! All Pennsylvania educators are eligible to receive the total amount of ACT 48 credits regardless of their preference for CE credits.

Continuing Education Credits Policy

Please read the policy below carefully to understand the important consequences that choosing to receive CE credits may have for your University of Pennsylvania transcript.

As a participant in this Penn GSE Certificate program, you are eligible to receive Continuing Education (CE) credits for successful completion of the program requirements. Whether or not you choose to receive CE credits for your participation in this program, all program expectations and requirements are the same.

If you choose to receive CE credits, this course will appear on your permanent Penn transcript.

If you decide to unenroll from this program, you will have until the Add/Drop Deadline to do so without consequence for your transcript. This course will no longer appear on your Penn transcript.

If you decide to unenroll from this program after the Add/Drop Deadline, then this course will appear on your Penn transcript. Courses dropped after the Add/Drop Deadline require instructor approval, and a 'W' will appear on your transcript in place of a grade.

If you decide to unenroll from this program after the Withdrawal Deadline, then this course will appear on your Penn transcript, and you may earn an F.

If you choose to receive CE credits for your participation in this program but do not successfully complete it, then you may earn an F on your Penn transcript.

Please indicate whether or not you would like to receive CE credits for your participation in this program on your application.

Program Contact

Gillian Daar

Academic Director & Program Director



Artificial Intelligence in Education

To prepare students to thrive as learners and leaders of the future, educators must become comfortable teaching with and about Artificial Intelligence. Generative AI tools such as ChatGPT, Claude and Midjourney, for example, further the opportunity to rethink and redesign learning. Educators can use these tools to strengthen learning experiences while addressing the ethical considerations of using AI. ISTE is the global leader in supporting schools in thoughtfully, safely and responsibly introducing AI in ways that enhance learning and empower students and teachers.

Interested in learning how to teach AI?

Sign up to learn about ISTE’s AI resources and PD opportunities.

StretchAI: An AI Coach Just for Educators

ISTE and ASCD are developing the first AI coach specifically for educators. With StretchAI, educators can get tailored guidance to improve their teaching, from tips on ways to use technology to support learning, to strategies to create more inclusive learning experiences. Answers are based on a carefully validated set of resources and include the citations from source documents used to generate answers. If you are interested in becoming a beta tester for StretchAI, please sign up below.

Evolving Teacher Education in an AI World

Download this free report—with a framework and recommendations—on how educator prep programs can better ready their program and teacher candidates for incorporating AI.

Leaders' Guide to Artificial Intelligence

School leaders must ensure the use of AI is thoughtful and appropriate, and supports the district’s vision. Download this free guide (or the UK version) to get the background you need to guide your district in an AI-infused world.

UPDATED! Free Guides for Engaging Students in AI Creation

ISTE and GM have partnered to create Hands-On AI Projects for the Classroom guides to provide educators with a variety of activities to teach students about AI across various grade levels and subject areas. Each guide includes background information for teachers and student-driven project ideas that relate to subject-area standards.

The hands-on activities in the guides range from “unplugged” projects to explore the basic concepts of how AI works to creating chatbots and simple video games with AI, allowing students to work directly with innovative AI technologies and demonstrate their learning.

These updated hands-on guides are available in downloadable PDF format in English, Spanish and Arabic from the list below.

Artificial Intelligence Explorations for Educators unpacks everything educators need to know about bringing AI to the classroom. Sign up for the next course and find out how to earn graduate-level credit for completing the course.

As a co-founder of TeachAI, ISTE provides guidance to support school leaders and policy makers around leveraging AI for learning.

Dive deeper into AI and learn how to navigate ChatGPT in schools with curated resources and tools from ASCD and ISTE.

Join our Educator AI Community on Connect

ISTE+ASCD’s free online community brings together educators from around the world to share ideas and best practices for using artificial intelligence to support learning.

Learn More From These Podcasts, Blog Posts, Case Studies and Websites

Partners Code.org, ETS, ISTE and Khan Academy offer engaging sessions with renowned experts to demystify AI, explore responsible implementation, address bias, and showcase how AI-powered learning can revolutionize student outcomes.

One of the challenges with bias in AI comes down to who has access to these careers in the first place, and that's the area that Tess Posner, CEO of the nonprofit AI4All, is trying to address.

Featuring in-depth interviews with practitioners, guidelines for classroom teachers and a webinar about the importance of AI in education, this site provides K-12 educators with practical tools for integrating AI and computational thinking across their curricula.

This 15-hour, self-paced introduction to artificial intelligence is designed for students in grades 9-12. Educators and students should create a free account at P-TECH before viewing the course.

Explore More in the Learning Library

Explore more books, articles, and tools about artificial intelligence in the Learning Library.


AI in Education Courses and Certifications

Learn AI in Education, earn certificates with paid and free online courses from Georgia Tech, UNAM, University System of Georgia and other top universities around the world. Read reviews to decide if a class is right for you.


AI for Teacher Assistance

Learn how artificial intelligence can be an assistant not only to students, but also to teachers.

- 4 weeks, 3-4 hours a week

- Free Online Course (Audit)

AI for Education (Intermediate)

This course expands on the AI for Education (Basic) course. Participants will learn quick ways to refine prompt engineering methods for assignments and course design that can be scaled to multiple levels of educational contexts. This course further provi…

- 5 hours 25 minutes

AI for Education (Advanced)

This course expands on the AI for Education (Intermediate) course. Participants will learn quick ways to refine prompt engineering methods for assignments and course design that can be scaled to multiple levels of educational contexts. This course furt…

- 5 hours 57 minutes

Generative AI Concepts

Discover how to begin responsibly leveraging generative AI. Learn how generative AI models are developed and how they will impact society moving forward.

- Free Trial Available

How ChatGPT Made My Job as a Teacher Harder

Explore Dr. Ibrahim Albluwi's TEDx talk on the impact of ChatGPT on teaching programming, and his research in computer science education. Less than 1 hour.

- Conference Talk

Embracing Humanoid Robot Shalu in Classrooms

Explore the potential of humanoid robot Shalu in classrooms with Dinesh Kunwar Patel's TEDx talk. Learn about AI, robotics, and innovation in under an hour.

Turing Lecture - Is Education AI-Ready?

Explore the role of AI in education post-COVID with Professor Luckin from the Alan Turing Institute. Understand current applications, future possibilities, and how to make education 'AI ready'. (1-2 hours)

- 1 hour 21 minutes

- Free Online Course

AI for educators

Module 1: Explore essential AI concepts, techniques, and tools that can support personalized learning, automate daily tasks, and provide insights for education. Upon completion of this module, you'll be able to: Describe generative AI in the broader cont…

- Microsoft Learn

- 2 hours 53 minutes

Introduction to artificial intelligence for trainers

Module 1: This module explores fundamental concepts in artificial intelligence (AI) and machine learning. By the end of this module, you'll be able to: Distinguish between supervised, unsupervised, and reinforcement learning, and identify the type of mac…

- 2 hours 36 minutes

AI business school for education

Learn to develop an AI strategy to create value in government, including artificial intelligence and machine learning technologies, culture, and responsible AI.

IA generativa en el aula (Generative AI in the Classroom)

You will learn the concept, usefulness, challenges, and educational possibilities of generative AI through direct experience, readings, and discussions, with the aim of proposing useful applications for learning and teaching. You will discuss the im…

- 11 hours 5 minutes

L'Intelligence Artificielle... avec intelligence ! (Artificial Intelligence... with Intelligence!)

Class'Code IAI is a citizen MOOC, accessible to everyone from 7 to 107 years old, for questioning, experimenting with, and understanding what artificial intelligence is... with intelligence!

- France Université Numerique


AI Will Transform Teaching and Learning. Let’s Get it Right.

At the recent AI+Education Summit, Stanford researchers, students, and industry leaders discussed both the potential of AI to transform education for the better and the risks at play.

When the Stanford Accelerator for Learning and the Stanford Institute for Human-Centered AI began planning the inaugural AI+Education Summit last year, the public furor around AI had not reached its current level. This was the time before ChatGPT. Even so, intensive research was already underway across Stanford University to understand the vast potential of AI, including generative AI, to transform education as we know it.

By the time the summit was held on Feb. 15, ChatGPT had reached more than 100 million unique users, and 30% of all college students had used it for assignments, making it one of the fastest applications ever adopted overall – and certainly in education settings. Within the education world, teachers and school districts have been wrestling with how to respond to this emerging technology.

The AI+Education Summit explored a central question: How can AI like this and other applications be best used to advance human learning?

“Technology offers the prospect of universal access to increase fundamentally new ways of teaching,” said Graduate School of Education Dean Daniel Schwartz in his opening remarks. “I want to emphasize that a lot of AI is also going to automate really bad ways of teaching. So [we need to] think about it as a way of creating new types of teaching.”

Researchers across Stanford – from education, technology, psychology, business, law, and political science – joined industry leaders like Sal Khan, founder and CEO of Khan Academy, in sharing cutting-edge research and brainstorming ways to unlock the potential of AI in education in an ethical, equitable, and safe manner.

Participants also spent a major portion of the day engaged in small discussion groups in which faculty, students, researchers, staff, and other guests shared their ideas about AI in education. Discussion topics included natural language processing applied to education; developing students’ AI literacy; assisting students with learning differences; informal learning outside of school; fostering creativity; equity and closing achievement gaps; workforce development; and avoiding potential misuses of AI with students and teachers.

Several themes emerged over the course of the day on AI’s potential, as well as its significant risks.

First, a look at AI’s potential:

1. Enhancing personalized support for teachers at scale

Great teachers remain the cornerstone of effective learning. Yet teachers receive limited actionable feedback to improve their practice. AI presents an opportunity to support teachers as they refine their craft at scale through applications such as:

- Simulating students: AI language models can serve as practice students for new teachers. Percy Liang, director of the Stanford HAI Center for Research on Foundation Models, said that these models are increasingly effective and are now capable of demonstrating confusion and asking adaptive follow-up questions.

- Real-time feedback and suggestions: Dora Demszky, assistant professor of education data science, highlighted the ability of AI to provide real-time feedback and suggestions to teachers (e.g., questions to ask the class), creating a bank of live advice based on expert pedagogy.

- Post-teaching feedback: Demszky added that AI can produce post-lesson reports that summarize the classroom dynamics. Potential metrics include student speaking time or identification of the questions that triggered the most engagement. Research finds that when students talk more, learning is improved.

- Refreshing expertise: Sal Khan, founder of online learning environment Khan Academy, suggested that AI could help teachers stay up-to-date with the latest advancements in their field. For example, a biology teacher would have AI update them on the latest breakthroughs in cancer research, or leverage AI to update their curriculum.

2. Changing what is important for learners

Stanford political science Professor Rob Reich posed a compelling question: Is generative AI comparable to the calculator in the classroom, or will it be a more detrimental tool? Today, the calculator is ubiquitous in middle and high schools, enabling students to quickly perform complex computations, graph equations, and solve problems. However, it has not resulted in the removal of basic mathematical computation from the curriculum: Students still know how to do long division and calculate exponents without technological assistance. On the other hand, Reich noted, writing is a way of learning how to think. Could outsourcing much of that work to AI harm students’ critical thinking development?

Liang suggested that students must learn about how the world works from first principles – this could be basic addition or sentence structure. However, they no longer need to be fully proficient – in other words, doing all computation by hand or writing all essays without AI support.

In fact, Demszky argued that by no longer requiring full proficiency, AI may actually raise the bar. The models won’t be doing the thinking for the students; rather, students will now have to edit and curate, forcing them to engage more deeply than they have previously. In Khan’s view, this allows learners to become architects who are able to pursue something more creative and ambitious.

And Noah Goodman, associate professor of psychology and of computer science, questioned the analogy, saying this tool may be more like the printing press, which led to democratization of knowledge and did not eliminate the need for human writing skills.

3. Enabling learning without fear of judgment

Ran Liu, chief AI scientist at Amira Learning, said that AI has the potential to support learners’ self-confidence. Teachers commonly encourage class participation by insisting that there is no such thing as a stupid question. However, for most students, fear of judgment from their peers holds them back from fully engaging in many contexts. As Liu explained, children who believe themselves to be behind are the least likely to engage in these settings.

Interfaces that leverage AI can offer constructive feedback that does not carry the same stakes or cause the same self-consciousness as a human’s response. Learners are therefore more willing to engage, take risks, and be vulnerable.

One area in which this can be extremely valuable is soft skills. Emma Brunskill, associate professor of computer science, noted that there are an enormous number of soft skills that are really hard to teach effectively, like communication, critical thinking, and problem-solving. With AI, a real-time agent can provide support and feedback, and learners are able to try different tactics as they seek to improve.

4. Improving learning and assessment quality

Bryan Brown, professor of education, said that “what we know about learning is not reflected in how we teach.” For example, teachers know that learning happens through powerful classroom discussions. However, only one student can speak up at a time. AI has the potential to support a single teacher who is trying to generate 35 unique conversations, one with each student.

This also applies to the workforce. During a roundtable discussion facilitated by Stanford Digital Economy Lab Director Erik Brynjolfsson and Candace Thille, associate professor of education and faculty lead on adult learning at the Stanford Accelerator for Learning, attendees noted that the inability to judge a learner’s skill profile is a leading industry challenge. AI has the potential to quickly determine a learner’s skills, recommend solutions to fill the gaps, and match them with roles that actually require those skills.

Of course, AI is never a panacea. Now a look at AI’s significant risks:

1. Model output does not reflect true cultural diversity

At present, ChatGPT and AI more broadly generate text in language that fails to reflect the diversity of students served by the education system or capture the authentic voice of diverse populations. When the bot was asked to speak in the cadence of the author of The Hate U Give, which features an African American protagonist, ChatGPT simply added “yo” in front of random sentences. As Sarah Levine, assistant professor of education, explained, this overwhelming gap fails to foster an equitable environment of connection and safety for some of America’s most underserved learners.

2. Models do not optimize for student learning

While ChatGPT spits out answers to queries, these responses are not designed to optimize for student learning. As Liang noted, the models are trained to deliver answers as fast as possible, but that is often in conflict with what would be pedagogically sound, whether that’s a more in-depth explanation of key concepts or a framing that is more likely to spark curiosity to learn more.

3. Incorrect responses come in pretty packages

Goodman demonstrated that AI can produce coherent text that is completely erroneous. His lab trained a virtual tutor that was tasked with solving and explaining algebra equations in a chatbot format. The chatbot would produce perfect sentences that exhibited top-quality teaching techniques, such as positive reinforcement, but fail to get to the right mathematical answer.

4. Advances exacerbate a motivation crisis

Chris Piech, assistant professor of computer science, told a story about a student who recently came into his office crying. The student was concerned about the rapid progress of ChatGPT and how this would hurt future job prospects after many years of learning how to code. Piech connected the incident to a broader existential motivation crisis, where many students may no longer know what they should be focusing on or don’t see the value of their hard-earned skills.

The full impact of AI in education remains unclear at this juncture, but as all speakers agreed, things are changing, and now is the time to get it right.


Stanford HAI’s mission is to advance AI research, education, policy and practice to improve the human condition.


Artificial intelligence in education

Artificial Intelligence (AI) has the potential to address some of the biggest challenges in education today, innovate teaching and learning practices, and accelerate progress towards SDG 4. However, rapid technological developments inevitably bring multiple risks and challenges, which have so far outpaced policy debates and regulatory frameworks. UNESCO is committed to supporting Member States to harness the potential of AI technologies for achieving the Education 2030 Agenda, while ensuring that its application in educational contexts is guided by the core principles of inclusion and equity. UNESCO’s mandate calls inherently for a human-centred approach to AI. It aims to shift the conversation to include AI’s role in addressing current inequalities regarding access to knowledge, research and the diversity of cultural expressions and to ensure AI does not widen the technological divides within and between countries. The promise of “AI for all” must be that everyone can take advantage of the technological revolution under way and access its fruits, notably in terms of innovation and knowledge.

Furthermore, UNESCO has developed within the framework of the Beijing Consensus a publication aimed at fostering the readiness of education policy-makers in artificial intelligence. This publication, Artificial Intelligence and Education: Guidance for Policy-makers, will be of interest to practitioners and professionals in the policy-making and education communities. It aims to generate a shared understanding of the opportunities and challenges that AI offers for education, as well as its implications for the core competencies needed in the AI era.

The UNESCO Courier, October-December 2023

- Plurilingual

by Stefania Giannini, UNESCO Assistant Director-General for Education

International Forum on artificial intelligence and education
