Subscribe to the PwC Newsletter

Join the community, natural language processing, language modelling.

Long-range modeling

Protein language model, sentence pair modeling, representation learning.

Disentanglement

Graph representation learning, sentence embeddings.

Network Embedding

Classification.

Text Classification

Graph Classification

Audio Classification

Medical Image Classification

Text retrieval, deep hashing, table retrieval, nlp based person retrival, question answering.

Open-Ended Question Answering

Open-Domain Question Answering

Conversational question answering.

Knowledge Base Question Answering

Image generation.

Image-to-Image Translation

Text-to-Image Generation

Image Inpainting

Conditional Image Generation

Translation, data augmentation.

Image Augmentation

Text Augmentation

Large language model.

Knowledge Graphs

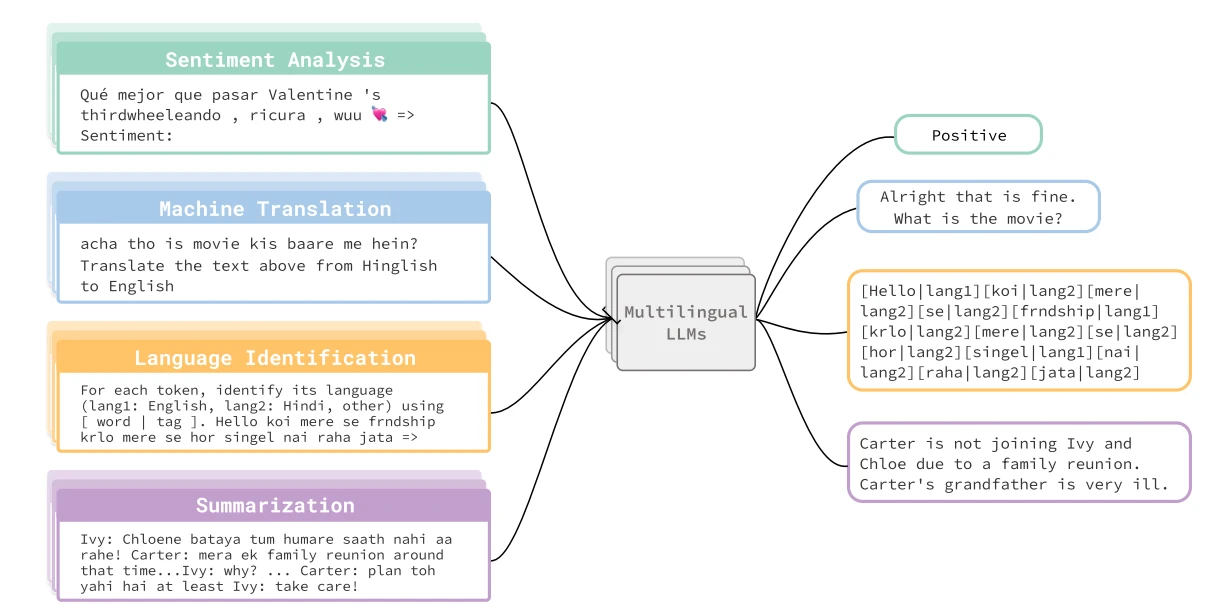

Machine translation.

Transliteration

Multimodal Machine Translation

Bilingual lexicon induction.

Unsupervised Machine Translation

Knowledge graph completion, triple classification, inductive knowledge graph completion, inductive relation prediction, text generation.

Dialogue Generation

Data-to-Text Generation

Multi-Document Summarization

Story Generation

2d semantic segmentation, image segmentation, text style transfer.

Scene Parsing

Reflection Removal

Visual question answering (vqa).

Visual Question Answering

Machine Reading Comprehension

Chart Question Answering

Chart understanding.

Topic Models

Document Classification

Sentence Classification

Emotion Classification

Data-free knowledge distillation.

Benchmarking

Sentiment analysis.

Aspect-Based Sentiment Analysis (ABSA)

Multimodal Sentiment Analysis

Aspect Sentiment Triplet Extraction

Twitter Sentiment Analysis

Named entity recognition (ner).

Nested Named Entity Recognition

Chinese named entity recognition, few-shot ner, few-shot learning.

One-Shot Learning

Few-Shot Semantic Segmentation

Cross-domain few-shot.

Unsupervised Few-Shot Learning

Optical character recognition (ocr).

Active Learning

Handwriting Recognition

Handwritten digit recognition, irregular text recognition, word embeddings.

Learning Word Embeddings

Multilingual Word Embeddings

Embeddings evaluation, contextualised word representations, continual learning.

Class Incremental Learning

Continual named entity recognition, unsupervised class-incremental learning, information retrieval.

Passage Retrieval

Cross-lingual information retrieval, table search, text summarization.

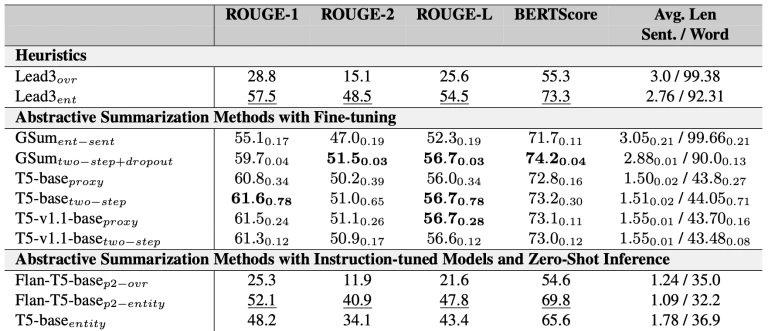

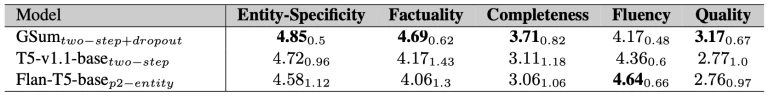

Abstractive Text Summarization

Document summarization, opinion summarization, relation extraction.

Relation Classification

Document-level relation extraction, joint entity and relation extraction, temporal relation extraction, link prediction.

Inductive Link Prediction

Dynamic link prediction, hyperedge prediction, anchor link prediction, natural language inference.

Answer Generation

Visual Entailment

Cross-lingual natural language inference, reading comprehension.

Intent Recognition

Implicit relations, active object detection, emotion recognition.

Speech Emotion Recognition

Emotion Recognition in Conversation

Multimodal Emotion Recognition

Emotion-cause pair extraction, natural language understanding, vietnamese social media text processing.

Emotional Dialogue Acts

Image captioning.

3D dense captioning

Controllable image captioning, aesthetic image captioning.

Relational Captioning

Semantic textual similarity.

Paraphrase Identification

Cross-Lingual Semantic Textual Similarity

In-context learning, event extraction, event causality identification, zero-shot event extraction, dialogue state tracking, task-oriented dialogue systems.

Visual Dialog

Dialogue understanding, code generation.

Code Translation

Code documentation generation, class-level code generation, library-oriented code generation, coreference resolution, coreference-resolution, cross document coreference resolution, semantic parsing.

AMR Parsing

Semantic dependency parsing, drs parsing, ucca parsing, conformal prediction.

Text Simplification

Music Source Separation

Decision Making Under Uncertainty

Audio source separation, semantic similarity.

Sentence Embedding

Sentence compression, joint multilingual sentence representations, sentence embeddings for biomedical texts, specificity, instruction following, visual instruction following, dependency parsing.

Transition-Based Dependency Parsing

Prepositional phrase attachment, unsupervised dependency parsing, cross-lingual zero-shot dependency parsing, information extraction, extractive summarization, temporal information extraction, document-level event extraction, cross-lingual, cross-lingual transfer, cross-lingual document classification.

Cross-Lingual Entity Linking

Cross-language text summarization, common sense reasoning.

Physical Commonsense Reasoning

Riddle sense, memorization, prompt engineering.

Visual Prompting

Response generation, data integration.

Entity Alignment

Entity Resolution

Table annotation, mathematical reasoning.

Math Word Problem Solving

Formal logic, geometry problem solving, abstract algebra, entity linking.

Question Generation

Poll generation.

Topic coverage

Dynamic topic modeling, part-of-speech tagging.

Unsupervised Part-Of-Speech Tagging

Abuse detection, hate speech detection, open information extraction.

Hope Speech Detection

Hate speech normalization, hate speech detection crisishatemm benchmark, data mining.

Argument Mining

Opinion Mining

Subgroup discovery, cognitive diagnosis, sequential pattern mining, bias detection, selection bias, language identification, dialect identification, native language identification, word sense disambiguation.

Word Sense Induction

Fake news detection, few-shot relation classification, implicit discourse relation classification, cause-effect relation classification, intrusion detection.

Network Intrusion Detection

Relational Reasoning

Semantic Role Labeling

Predicate Detection

Semantic role labeling (predicted predicates).

Textual Analogy Parsing

Slot filling.

Zero-shot Slot Filling

Extracting covid-19 events from twitter, grammatical error correction.

Grammatical Error Detection

Text matching, symbolic regression, equation discovery, document text classification.

Learning with noisy labels

Multi-label classification of biomedical texts, political salient issue orientation detection, pos tagging, spoken language understanding, dialogue safety prediction, deep clustering, trajectory clustering, deep nonparametric clustering, nonparametric deep clustering, stance detection, zero-shot stance detection, few-shot stance detection, stance detection (us election 2020 - biden), stance detection (us election 2020 - trump), multi-modal entity alignment, intent detection.

Open Intent Detection

Word similarity, model editing, knowledge editing, cross-modal retrieval, image-text matching, cross-modal retrieval with noisy correspondence, multilingual cross-modal retrieval.

Zero-shot Composed Person Retrieval

Cross-modal retrieval on rsitmd, document ai, document understanding, fact verification, text-to-speech synthesis.

Prosody Prediction

Zero-shot multi-speaker tts, intent classification.

Zero-Shot Cross-Lingual Transfer

Cross-lingual ner, self-learning, language acquisition, grounded language learning, constituency parsing.

Constituency Grammar Induction

Entity typing.

Entity Typing on DH-KGs

Line items extraction, word alignment, ad-hoc information retrieval, document ranking.

Multimodal Deep Learning

Multimodal text and image classification, abstract meaning representation, open-domain dialog, dialogue evaluation, novelty detection.

text-guided-image-editing

Text-based image editing, concept alignment.

Zero-Shot Text-to-Image Generation

Conditional text-to-image synthesis.

Shallow Syntax

Molecular representation, multi-label text classification, explanation generation, discourse parsing, discourse segmentation, connective detection, de-identification, privacy preserving deep learning, morphological analysis.

Text-to-Video Generation

Text-to-video editing, subject-driven video generation, conversational search, sarcasm detection.

Lemmatization

Speech-to-text translation, simultaneous speech-to-text translation.

Aspect Extraction

Aspect category sentiment analysis, extract aspect.

Aspect-Category-Opinion-Sentiment Quadruple Extraction

Aspect-oriented Opinion Extraction

Session search, knowledge distillation.

Self-Knowledge Distillation

Chinese Word Segmentation

Handwritten chinese text recognition, chinese spelling error correction, chinese zero pronoun resolution, offline handwritten chinese character recognition, entity disambiguation, authorship attribution, source code summarization, method name prediction, text clustering.

Short Text Clustering

Open Intent Discovery

Hierarchical text clustering, linguistic acceptability.

Column Type Annotation

Cell entity annotation, columns property annotation, row annotation.

Visual Storytelling

KG-to-Text Generation

Unsupervised KG-to-Text Generation

Abusive language, keyphrase extraction, few-shot text classification, zero-shot out-of-domain detection, safety alignment, key information extraction, key-value pair extraction, multilingual nlp, protein folding, term extraction, text2text generation, keyphrase generation, figurative language visualization, sketch-to-text generation, morphological inflection, phrase grounding, grounded open vocabulary acquisition, deep attention, spam detection, context-specific spam detection, traditional spam detection, word translation, natural language transduction, image-to-text retrieval, summarization, unsupervised extractive summarization, query-focused summarization.

Conversational Response Selection

Cross-lingual word embeddings, knowledge base population, passage ranking, text annotation, authorship verification.

Multimodal Association

Multimodal generation, video generation, image to video generation.

Unconditional Video Generation

Keyword extraction, biomedical information retrieval.

SpO2 estimation

Meme classification, hateful meme classification, news classification, graph-to-sequence, nlg evaluation, automated essay scoring, morphological tagging, key point matching, component classification, argument pair extraction (ape), claim extraction with stance classification (cesc), claim-evidence pair extraction (cepe), temporal processing, timex normalization, document dating, sentence summarization, unsupervised sentence summarization, long-context understanding, weakly supervised classification, weakly supervised data denoising, entity extraction using gan.

Rumour Detection

Semantic retrieval, emotional intelligence, dark humor detection, review generation, semantic composition.

Sentence Ordering

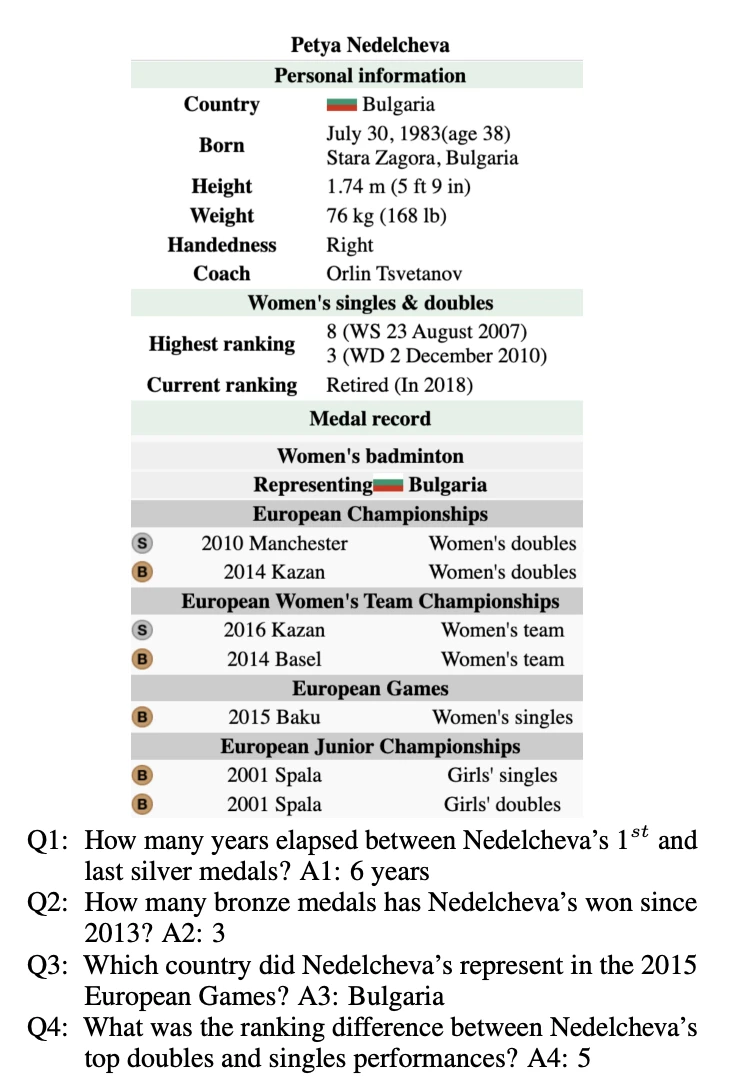

1 image, 2*2 stitchi, comment generation.

Goal-Oriented Dialog

User simulation, lexical simplification, sentence-pair classification, conversational response generation.

Personalized and Emotional Conversation

Token classification, toxic spans detection.

Blackout Poetry Generation

Humor detection.

Passage Re-Ranking

Subjectivity analysis.

Taxonomy Learning

Taxonomy expansion, hypernym discovery, propaganda detection, propaganda span identification, propaganda technique identification, lexical normalization, pronunciation dictionary creation, negation detection, negation scope resolution, question similarity, medical question pair similarity computation, intent discovery, reverse dictionary, lexical analysis, lexical complexity prediction, question rewriting, legal reasoning, punctuation restoration, attribute value extraction.

Hallucination Evaluation

Meeting summarization, table-based fact verification, diachronic word embeddings, pretrained multilingual language models, formality style transfer, semi-supervised formality style transfer, word attribute transfer, aspect category detection, extreme summarization.

Persian Sentiment Analysis

Binary classification, llm-generated text detection, cancer-no cancer per breast classification, cancer-no cancer per image classification, stable mci vs progressive mci, suspicous (birads 4,5)-no suspicous (birads 1,2,3) per image classification, clinical concept extraction.

Clinical Information Retreival

Constrained clustering.

Only Connect Walls Dataset Task 1 (Grouping)

Incremental constrained clustering, recognizing emotion cause in conversations.

Causal Emotion Entailment

trustable and focussed LLM generated content

Game design, dialog act classification, text compression, decipherment, nested mention recognition, probing language models, relationship extraction (distant supervised), semantic entity labeling, handwriting verification, bangla spelling error correction, clickbait detection, code repair, gender bias detection, ccg supertagging, linguistic steganography, toponym resolution.

Timeline Summarization

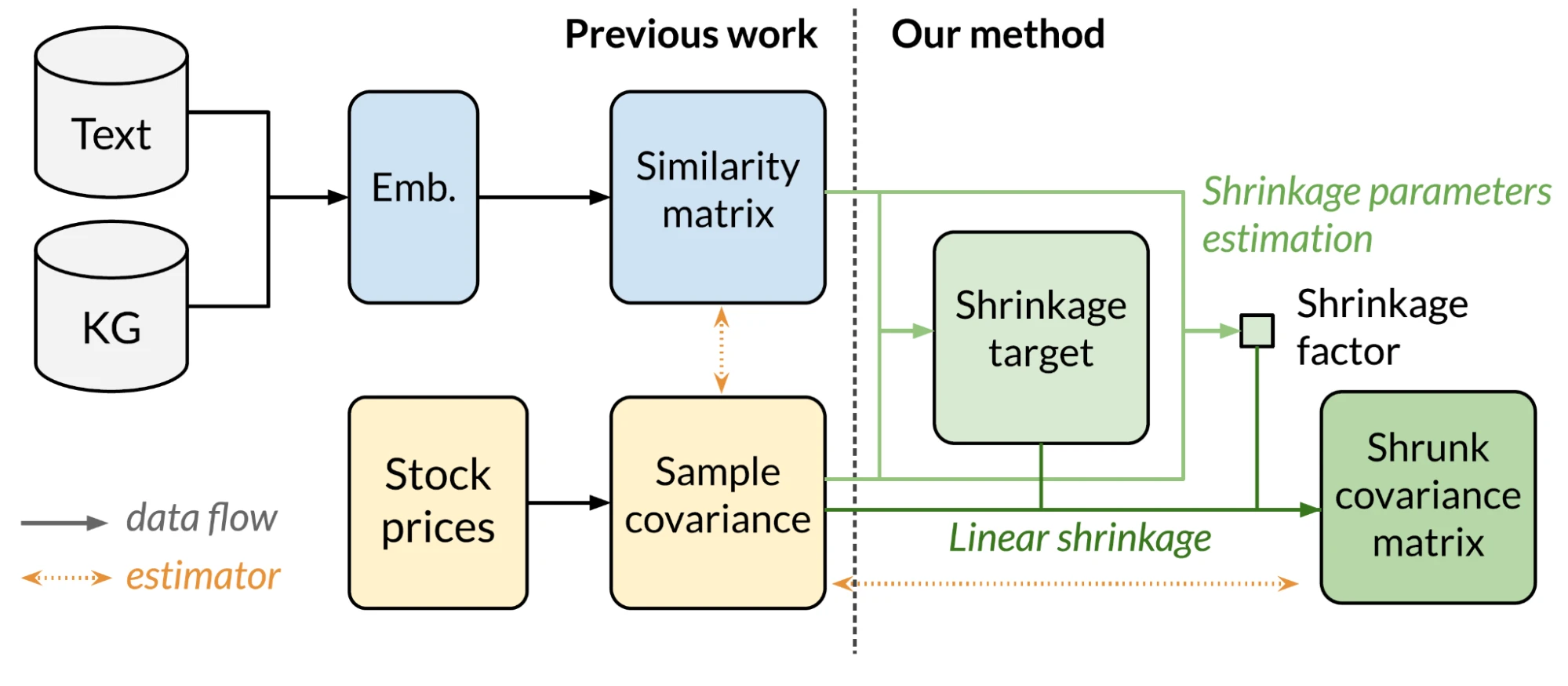

Multimodal abstractive text summarization, reader-aware summarization, stock prediction, text-based stock prediction, pair trading, event-driven trading, vietnamese visual question answering, explanatory visual question answering, arabic text diacritization, fact selection, thai word segmentation, vietnamese datasets.

Face to Face Translation

Multimodal lexical translation, semantic shift detection, similarity explanation, aggression identification, arabic sentiment analysis, commonsense causal reasoning, complex word identification, sign language production, suggestion mining, temporal relation classification, vietnamese word segmentation, speculation detection, speculation scope resolution, aspect category polarity, cross-lingual bitext mining, morphological disambiguation, multi-agent integration, scientific document summarization, lay summarization, text anonymization, text attribute transfer.

Image-guided Story Ending Generation

Personality generation, personality alignment, abstract argumentation, chinese spell checking, dialogue rewriting, logical reasoning reading comprehension.

Unsupervised Sentence Compression

Stereotypical bias analysis, temporal tagging, anaphora resolution, bridging anaphora resolution.

Abstract Anaphora Resolution

Hope speech detection for english, hope speech detection for malayalam, hope speech detection for tamil, hidden aspect detection, latent aspect detection, attribute mining, cognate prediction, japanese word segmentation, memex question answering, polyphone disambiguation, spelling correction, table-to-text generation.

KB-to-Language Generation

Zero-shot machine translation, zero-shot sentiment classification, conditional text generation, contextualized literature-based discovery, multimedia generative script learning, image-sentence alignment, open-world social event classification, ai and safety, action parsing, author attribution, binary condescension detection, context query reformulation, conversational web navigation, croatian text diacritization, czech text diacritization, definition modelling, document-level re with incomplete labeling, domain labelling, french text diacritization, hungarian text diacritization, irish text diacritization, latvian text diacritization, literature mining, misogynistic aggression identification, morpheme segmentaiton, multi-label condescension detection, news annotation, open relation modeling, personality recognition in conversation.

Reading Order Detection

Record linking, role-filler entity extraction, romanian text diacritization, simultaneous speech-to-speech translation, slovak text diacritization, social media mental health detection, spanish text diacritization, syntax representation, text-to-video search, turkish text diacritization, turning point identification, twitter event detection.

Vietnamese Language Models

Vietnamese scene text, vietnamese text diacritization.

Conversational Sentiment Quadruple Extraction

Attribute extraction, legal outcome extraction, automated writing evaluation, binary text classification, detection of potentially void clauses, chemical indexing, clinical assertion status detection.

Coding Problem Tagging

Collaborative plan acquisition, commonsense reasoning for rl.

Variable Disambiguation

Cross-lingual text-to-image generation, crowdsourced text aggregation.

Description-guided molecule generation

Multi-modal Dialogue Generation

Page stream segmentation.

Email Thread Summarization

Emergent communications on relations, emotion detection and trigger summarization, extractive tags summarization.

Hate Intensity Prediction

Hate span identification, job prediction, joint entity and relation extraction on scientific data, joint ner and classification, math information retrieval, meme captioning, multi-grained named entity recognition, multilingual machine comprehension in english hindi, multimodal text prediction, negation and speculation cue detection, negation and speculation scope resolution, only connect walls dataset task 2 (connections), overlapping mention recognition, paraphrase generation, multilingual paraphrase generation, phrase ranking, phrase tagging, phrase vector embedding, poem meters classification, query wellformedness.

Question-Answer categorization

Readability optimization, reliable intelligence identification, sentence completion, hurtful sentence completion, speaker attribution in german parliamentary debates (germeval 2023, subtask 1), text effects transfer, text-variation, vietnamese aspect-based sentiment analysis, sentiment dependency learning, vietnamese natural language understanding, vietnamese sentiment analysis, vietnamese multimodal sentiment analysis, web page tagging, workflow discovery, answerability prediction, incongruity detection, multi-word expression embedding, multi-word expression sememe prediction, pcl detection, semeval-2022 task 4-1 (binary pcl detection), semeval-2022 task 4-2 (multi-label pcl detection), automatic writing, complaint comment classification, counterspeech detection, extractive text summarization, face selection, job classification, multi-lingual text-to-image generation, multlingual neural machine translation, optical charater recogntion, bangla text detection, question to declarative sentence, relation mention extraction.

Tweet-Reply Sentiment Analysis

Vietnamese fact checking, vietnamese parsing.

Natural language processing: state of the art, current trends and challenges

- Published: 14 July 2022

- Volume 82, pages 3713–3744 (2023)

- Diksha Khurana 1 ,

- Aditya Koli 1 ,

- Kiran Khatter ORCID: orcid.org/0000-0002-1000-6102 2 &

- Sukhdev Singh 3

165k Accesses

402 Citations

34 Altmetric

Explore all metrics

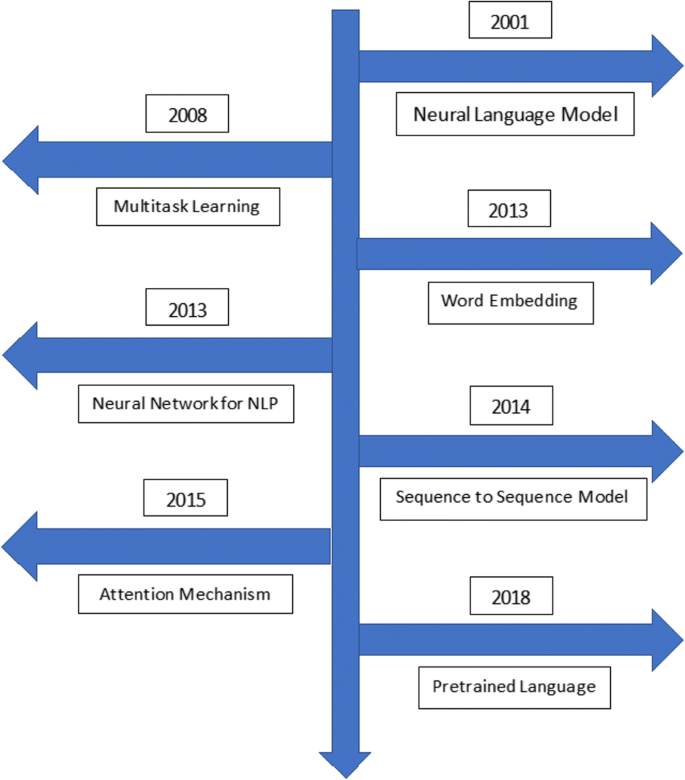

This article has been updated

Natural language processing (NLP) has recently gained much attention for representing and analyzing human language computationally. Its applications span fields such as machine translation, email spam detection, information extraction, summarization, medicine, and question answering. In this paper, we first distinguish four phases by discussing different levels of NLP and components of Natural Language Generation, followed by the history and evolution of NLP. We then discuss in detail the state of the art, presenting the various applications of NLP, current trends, and challenges. Finally, we present a discussion of some available datasets, models, and evaluation metrics in NLP.

1 Introduction

A language can be defined as a set of rules or a set of symbols, where the symbols are combined and used for conveying or broadcasting information. Since not all users are well-versed in machine-specific languages, Natural Language Processing (NLP) caters to those users who do not have enough time to learn new languages or to master them. NLP is a branch of Artificial Intelligence and Linguistics devoted to making computers understand statements or words written in human languages. It came into existence to ease the user’s work and to satisfy the wish to communicate with the computer in natural language. It can be classified into two parts, Natural Language Understanding (or Linguistics) and Natural Language Generation, which together involve the tasks of understanding and generating text. Linguistics is the science of language, which includes Phonology, which refers to sound; Morphology, which refers to word formation; Syntax, which refers to sentence structure; Semantics, which refers to meaning; and Pragmatics, which refers to understanding. Noam Chomsky, one of the first linguists of the twentieth century to develop syntactic theories, holds a unique position in the field of theoretical linguistics because he revolutionized the area of syntax (Chomsky, 1965) [ 23 ]. Further, Natural Language Generation (NLG) is the process of producing meaningful phrases, sentences and paragraphs from an internal representation. The first objective of this paper is to give insights into the various important terminologies of NLP and NLG.

In the existing literature, most of the work in NLP is conducted by computer scientists, although various other professionals, such as linguists, psychologists, and philosophers, have also shown interest. One of the most interesting aspects of NLP is that it adds to our knowledge of human language. The field of NLP draws on different theories and techniques that deal with the problem of communicating with computers in natural language. Some of the researched tasks of NLP are Automatic Summarization (producing an understandable summary of a set of text, or providing summaries or detailed information for text of a known type), Co-Reference Resolution (determining, within a sentence or a larger set of text, all words that refer to the same object), Discourse Analysis (identifying the discourse structure of connected text, i.e. the study of text in relation to social context), Machine Translation (automatic translation of text from one language to another), Morphological Segmentation (breaking words into individual meaning-bearing morphemes), Named Entity Recognition (NER, used in information extraction to recognize named entities and then classify them into different classes), Optical Character Recognition (OCR, automatic text recognition that translates printed and handwritten text into a machine-readable format), and Part Of Speech Tagging (determining the part of speech of each word in a sentence). Some of these tasks, such as machine translation, named entity recognition, and optical character recognition, have direct real-world applications. Although NLP tasks are closely interwoven, they are frequently treated separately for convenience. Some tasks, such as automatic summarization and co-reference analysis, act as subtasks that are used in solving larger tasks.

NLP is now widely discussed because of its various applications and recent developments, although in the late 1940s the term did not even exist. It is therefore interesting to review the history of NLP, the progress made so far, and some of the ongoing projects that make use of NLP. The second objective of this paper focuses on these aspects. The third objective of this paper concerns datasets, approaches, evaluation metrics and the challenges involved in NLP. The rest of this paper is organized as follows. Section 2 deals with the first objective, covering the various important terminologies of NLP and NLG. Section 3 deals with the history of NLP, applications of NLP and a walkthrough of recent developments. Datasets used in NLP and various approaches are presented in Section 4, and Section 5 covers evaluation metrics and challenges involved in NLP. Finally, a conclusion is presented in Section 6.

2 Components of NLP

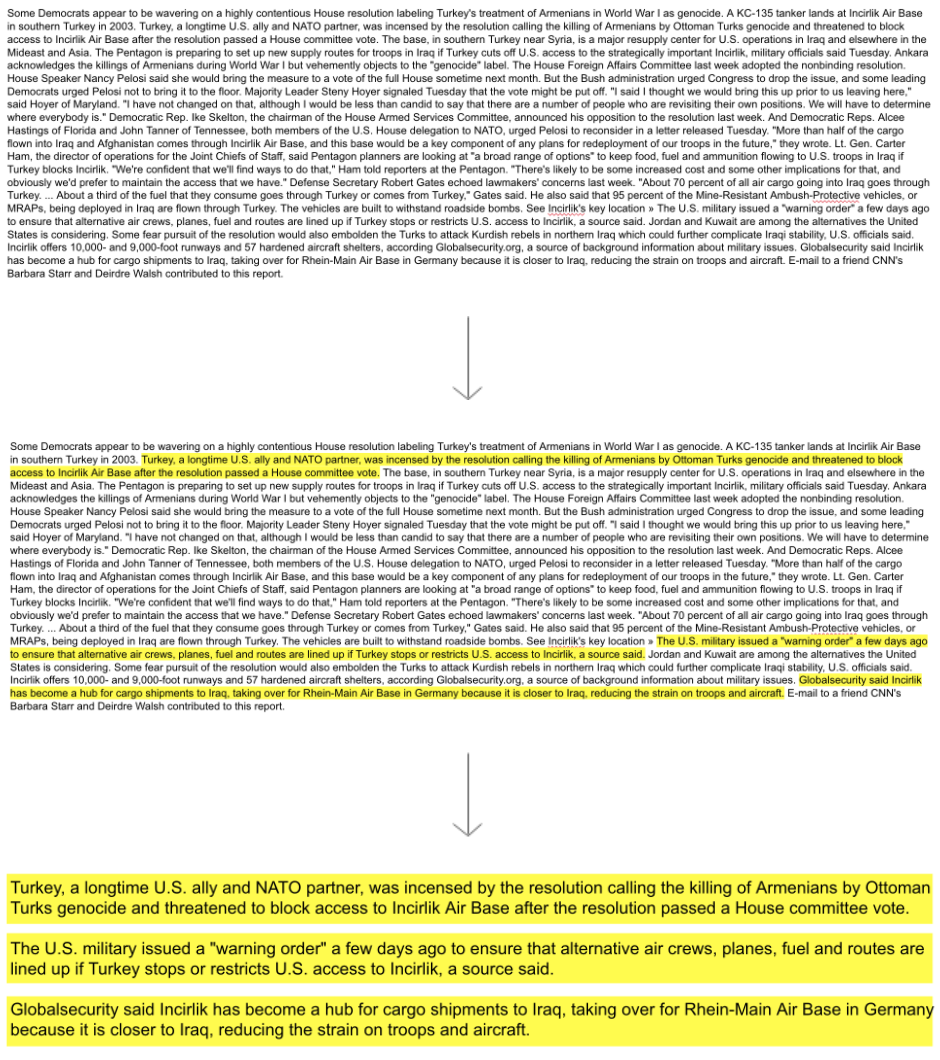

NLP can be classified into two parts, Natural Language Understanding and Natural Language Generation, which involve the tasks of understanding and generating text. Figure 1 presents the broad classification of NLP. The objective of this section is to discuss Natural Language Understanding (Linguistics) (NLU) and Natural Language Generation (NLG).

Fig. 1 Broad classification of NLP

NLU enables machines to understand natural language and analyze it by extracting concepts, entities, emotions, keywords, etc. It is used in customer care applications to understand the problems reported by customers, either verbally or in writing. Linguistics is the science that involves the meaning of language, language context and the various forms of language. It is therefore important to understand the main terminologies of NLP and its different levels. We next discuss some of the commonly used terminologies at the different levels of NLP.

Phonology is the part of Linguistics that refers to the systematic arrangement of sound. The term phonology comes from Ancient Greek, in which phono means voice or sound and the suffix -logy refers to word or speech. In 1939 Nikolai Trubetzkoy stated that phonology is “the study of sound pertaining to the system of language”, whereas Lass (1998) [ 66 ] wrote that phonology deals broadly with the sounds of language, as the sub-discipline of linguistics concerned with the behavior and organization of sounds. Phonology includes the systematic use of sound to encode meaning in any human language.

The different parts of a word represent the smallest units of meaning, known as morphemes. Morphology, which deals with the nature of words, is built on morphemes. For example, the word precancellation can be morphologically analyzed into three separate morphemes: the prefix pre, the root cancella, and the suffix -tion. The interpretation of a morpheme stays the same across all words, so humans can break an unknown word into morphemes to understand its meaning. For example, adding the suffix -ed to a verb conveys that the action of the verb took place in the past. Words that cannot be divided and have meaning by themselves are called lexical morphemes (e.g. table, chair). The units (e.g. -ed, -ing, -est, -ly, -ful) that are combined with a lexical morpheme are known as grammatical morphemes (e.g. Worked, Consulting, Smallest, Likely, Useful). Grammatical morphemes that occur only in combination are called bound morphemes (e.g. -ed, -ing). Bound morphemes can be divided into inflectional morphemes and derivational morphemes. Adding an inflectional morpheme to a word changes grammatical categories such as tense, gender, person, mood, aspect, definiteness and animacy. For example, adding the inflectional morpheme -ed changes the root park to parked. A derivational morpheme changes the semantic meaning of the word it is combined with. For example, in the word normalize, adding the bound morpheme -ize to the root normal changes the word from an adjective (normal) to a verb (normalize).
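The morpheme analysis described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the affix inventories `PREFIXES`, `INFLECTIONAL` and `DERIVATIONAL` and the function `split_morphemes` are hypothetical names invented here, and real morphological analyzers use much richer lexicons and rules.

```python
# Toy affix inventories (hypothetical and far from complete).
PREFIXES = ("pre", "un", "re")
INFLECTIONAL = ("ed", "ing", "est", "s")
DERIVATIONAL = ("tion", "ize", "ful", "ly")


def split_morphemes(word):
    """Return (prefix, stem, suffix, suffix_kind) using naive affix stripping."""
    word = word.lower()
    # Strip at most one known prefix, keeping at least three letters after it.
    prefix = next(
        (p for p in PREFIXES if word.startswith(p) and len(word) > len(p) + 2), ""
    )
    rest = word[len(prefix):]
    suffix, kind = "", None
    # Try derivational suffixes first, then inflectional ones, longest first.
    for kind_name, inventory in (
        ("derivational", DERIVATIONAL),
        ("inflectional", INFLECTIONAL),
    ):
        for candidate in sorted(inventory, key=len, reverse=True):
            if rest.endswith(candidate) and len(rest) > len(candidate) + 2:
                suffix, kind = candidate, kind_name
                break
        if suffix:
            break
    stem = rest[: len(rest) - len(suffix)] if suffix else rest
    return prefix, stem, suffix, kind


print(split_morphemes("precancellation"))  # ('pre', 'cancella', 'tion', 'derivational')
print(split_morphemes("parked"))           # ('', 'park', 'ed', 'inflectional')
print(split_morphemes("normalize"))        # ('', 'normal', 'ize', 'derivational')
```

A lexical morpheme such as table comes back unchanged with no suffix, which mirrors the distinction drawn in the text between free and bound morphemes.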

At the lexical level, humans, as well as NLP systems, interpret the meaning of individual words. Several types of processing contribute to word-level understanding, the first of these being the assignment of a part-of-speech (PoS) tag to each word. In this processing, words that can act as more than one part of speech are assigned the most probable PoS tag based on the context in which they occur. At the lexical level, words that have only one meaning can be replaced by a semantic representation of that meaning. In an NLP system, the nature of the representation varies according to the semantic theory deployed. At the lexical level, therefore, the structure of words is analyzed with respect to their lexical meaning and PoS. In this analysis, text is divided into paragraphs, sentences, and words. Assigning the correct PoS tag also improves the understanding of the intended meaning of a sentence. Lexical analysis is used for cleaning and feature extraction, using techniques such as removal of stop words, stemming, and lemmatization. Stop words such as ‘in’, ‘the’, and ‘and’ are removed because they do not contribute to any meaningful interpretation and their high frequency may increase computation time. Stemming reduces the words of a text to a root form by removing their suffixes. For example, the words consulting and consultant are converted to consult after stemming, using is converted to us, and driver is reduced to driv. Lemmatization does not simply remove the suffix of a word; it uses a vocabulary to return the base form of the word. For example, for the token drived, stemming results in “driv”, whereas lemmatization attempts to return the correct base form, either drive or drived, depending on the context in which it is used.
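The three cleaning steps mentioned above (stop-word removal, stemming, lemmatization) can be illustrated with a minimal, dependency-free sketch. The stop-word list, suffix list and lemma vocabulary below are hypothetical toy data chosen to reproduce the examples in the text; production systems typically rely on established stemmers and lemmatizers rather than rules like these.

```python
STOP_WORDS = {"in", "the", "and", "a", "is", "of"}  # tiny illustrative list
SUFFIXES = ("ing", "ant", "er", "ed", "s")           # hypothetical suffix inventory
LEMMAS = {"using": "use", "drove": "drive", "consulting": "consult"}  # toy vocabulary


def remove_stop_words(tokens):
    """Drop high-frequency function words."""
    return [t for t in tokens if t.lower() not in STOP_WORDS]


def stem(word):
    """Naive stemmer: strip the first matching suffix, with no vocabulary check."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) > len(suffix) + 1:
            return word[: -len(suffix)]
    return word


def lemmatize(word):
    """Vocabulary lookup: return the listed base form, else the word itself."""
    return LEMMAS.get(word.lower(), word.lower())


tokens = "the driver is consulting in the office".split()
print(remove_stop_words(tokens))                      # ['driver', 'consulting', 'office']
print([stem(w) for w in ("consulting", "consultant", "using", "driver")])
# ['consult', 'consult', 'us', 'driv']
print(lemmatize("using"), lemmatize("driver"))        # use driver
```

The contrast between `stem("using")` giving us and `lemmatize("using")` giving use shows why a vocabulary-backed lemmatizer returns valid words while a suffix stripper may not.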

After PoS tagging is done at the lexical level, words are grouped into phrases, phrases are grouped into clauses, and clauses are combined into sentences at the syntactic level. This level emphasizes the correct formation of a sentence by analyzing its grammatical structure. The output of this level is a representation of the sentence that reveals the structural dependencies between words. This process is also known as parsing, and it uncovers phrases that convey more meaning than the individual words do. The syntactic level examines word order, stop words, morphology and the PoS of words, which the lexical level does not consider. Changing the word order changes the dependencies among words and may also affect the comprehension of sentences. For example, in the sentences “ram beats shyam in a competition” and “shyam beats ram in a competition”, only the word order differs, yet the sentences convey different meanings [ 139 ]. The syntactic level retains stop words, because removing them changes the meaning of the sentence. It does not use lemmatization or stemming, because converting words to their base form changes the grammar of the sentence. It focuses on identifying the correct PoS of the words in a sentence. For example, in the phrase “frowns on his face”, “frowns” is a noun, whereas it is a verb in the sentence “he frowns”.
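The two syntactic effects described above, word order determining who does what and context determining the part of speech, can be sketched with toy rules. The verb lexicon `VERBS`, the pronoun list and both function names are hypothetical; a real parser builds a full dependency or constituency structure rather than looking at immediate neighbours.

```python
VERBS = {"beats"}                       # hypothetical toy verb lexicon
PRONOUNS = {"he", "she", "it", "they"}  # hypothetical toy pronoun list


def svo_roles(sentence):
    """Assign subject/object from the positions around the first known verb."""
    tokens = sentence.lower().split()
    for i, token in enumerate(tokens):
        if token in VERBS and 0 < i < len(tokens) - 1:
            return {"subject": tokens[i - 1], "verb": token, "object": tokens[i + 1]}
    return None


def tag_ambiguous(tokens, index):
    """Tag a noun/verb-ambiguous token using only its left neighbour."""
    previous = tokens[index - 1].lower() if index > 0 else None
    return "VERB" if previous in PRONOUNS else "NOUN"


print(svo_roles("ram beats shyam in a competition"))
# {'subject': 'ram', 'verb': 'beats', 'object': 'shyam'}
print(svo_roles("shyam beats ram in a competition"))
# {'subject': 'shyam', 'verb': 'beats', 'object': 'ram'}
print(tag_ambiguous("frowns on his face".split(), 0))  # NOUN
print(tag_ambiguous("he frowns".split(), 1))           # VERB
```

Both sentences contain the same words, so a bag-of-words view cannot tell them apart; only the positional information kept at the syntactic level separates the two readings.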

At the semantic level, the most important task is to determine the proper meaning of a sentence. To understand the meaning of a sentence, human beings rely on their knowledge of language and of the concepts present in that sentence, but machines cannot count on such knowledge. Semantic processing determines the possible meanings of a sentence by processing its logical structure, recognizing the most relevant words, and understanding the interactions among words or concepts in the sentence. For example, it can recognize that a sentence is about “movies” even if that word does not appear, as long as the sentence contains related concepts such as “actor”, “actress”, “dialogue” or “script”. This level of processing also incorporates the semantic disambiguation of words with multiple senses (Elizabeth D. Liddy, 2001) [ 68 ]. For example, the word “bark” as a noun can mean either the sound that a dog makes or the outer covering of a tree. The semantic level examines words for their dictionary interpretation, or for an interpretation derived from the context of the sentence. For example, the sentence “Krishna is good and noble.” may be talking either about Lord Krishna or about a person named “Krishna”. That is why, to get the proper meaning of the sentence, the appropriate interpretation is chosen by looking at the rest of the sentence [ 44 ].
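Disambiguating a word such as “bark” from its context can be sketched with a simplified overlap heuristic in the spirit of the Lesk algorithm: pick the sense whose signature words overlap most with the sentence. The sense inventory `SENSES` and its signature words are hypothetical and hand-written for this illustration; this is not the method of the cited works.

```python
import re

# Hypothetical hand-written sense signatures for one ambiguous noun.
SENSES = {
    "bark": {
        "dog_sound": {"dog", "sound", "loud", "noise", "heard"},
        "tree_covering": {"tree", "outer", "covering", "trunk", "rough"},
    }
}


def disambiguate(word, sentence):
    """Return the sense whose signature shares the most words with the sentence."""
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    senses = SENSES[word]
    # On a tie, max() keeps the first sense in insertion order.
    return max(senses, key=lambda sense: len(senses[sense] & context))


print(disambiguate("bark", "The bark of the dog was loud"))        # dog_sound
print(disambiguate("bark", "The bark of the old tree was rough"))  # tree_covering
```

The same overlap idea also covers the “movies” example in the text: a sentence mentioning actor and script can be matched to a topic signature even when the topic word itself is absent.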

While the syntactic and semantic levels deal with sentence-length units, the discourse level of NLP deals with more than one sentence. It analyzes logical structure by making connections among words and sentences that ensure coherence. It focuses on the properties of the text that convey meaning by interpreting the relations between sentences and uncovering linguistic structures from texts at several levels (Liddy, 2001) [ 68 ]. Two of the most common tasks at this level are anaphora resolution and coreference resolution. Anaphora resolution is achieved by recognizing the entity referenced by an anaphor, so that references used within the text are resolved to the same sense. For example: (i) Ram topped in the class. (ii) He was intelligent. Here (i) and (ii) together form a discourse. Human beings can quickly understand that the pronoun “he” in (ii) refers to “Ram” in (i). The interpretation of “He” depends on another word, “Ram”, presented earlier in the text. Without determining the relationship between these two structures, it would not be possible to decide why Ram topped the class and who was intelligent. Coreference resolution is achieved by finding all expressions that refer to the same entity in a text. It is an important step in various NLP applications that involve high-level tasks such as document summarization and information extraction. In fact, anaphora is encoded through one of the processes called co-reference.

The pragmatic level focuses on the knowledge or content that comes from outside the content of the document. It deals with what the speaker implies and what the listener infers. In fact, it analyzes the meanings that are not directly stated. Real-world knowledge is used to understand what is being talked about in the text. By analyzing the context, a meaningful representation of the text is derived. When a sentence is not specific and the context does not provide any specific information about that sentence, pragmatic ambiguity arises (Walton, 1996) [ 143 ]. Pragmatic ambiguity occurs when different persons derive different interpretations of the text, depending on the context of the text. The context of a text may include the references of other sentences of the same document, which influence the understanding of the text, and the background knowledge of the reader or speaker, which gives a meaning to the concepts expressed in that text. Semantic analysis focuses on the literal meaning of the words, but pragmatic analysis focuses on the inferred meaning that readers perceive based on their background knowledge. For example, the sentence “Do you know what time it is?” is interpreted as asking for the current time in semantic analysis, whereas in pragmatic analysis the same sentence may express resentment towards someone who missed the due time. Thus, semantic analysis is the study of the relationship between various linguistic utterances and their meanings, but pragmatic analysis is the study of the context that influences our understanding of linguistic expressions. Pragmatic analysis helps users to uncover the intended meaning of the text by applying contextual background knowledge.

The goal of NLP is to accommodate one or more specialties of an algorithm or system. Assessing an algorithmic system against NLP metrics allows for the integration of language understanding and language generation. NLP is even used in multilingual event detection. Rospocher et al. [ 112 ] proposed a novel modular system for cross-lingual event extraction for English, Dutch, and Italian texts by using different pipelines for different languages. The system incorporates a modular set of foremost multilingual NLP tools. The pipeline integrates modules for basic NLP processing as well as more advanced tasks such as cross-lingual named entity linking, semantic role labeling and time normalization. Thus, the cross-lingual framework allows for the interpretation of events, participants, locations, and time, as well as the relations between them. The output of these individual pipelines is intended to be used as input for a system that obtains event-centric knowledge graphs. All modules take standard input, perform some annotation, and produce standard output, which in turn becomes the input for the next module in the pipeline. Their pipelines are built as a data-centric architecture so that modules can be adapted and replaced. Furthermore, the modular architecture allows for different configurations and for dynamic distribution.

Ambiguity is one of the major problems of natural language; it occurs when one sentence can lead to different interpretations. It is usually faced at the syntactic, semantic, and lexical levels. In the case of syntactic-level ambiguity, one sentence can be parsed into multiple syntactic forms. Semantic ambiguity occurs when the meaning of words can be misinterpreted. Lexical-level ambiguity refers to the ambiguity of a single word that can have multiple meanings. Each of these levels can produce ambiguities that can be resolved with knowledge of the complete sentence. Ambiguity can be handled by various methods such as Minimizing Ambiguity, Preserving Ambiguity, Interactive Disambiguation and Weighting Ambiguity [ 125 ]. One of the methods proposed by researchers is preserving ambiguity, e.g. (Shemtov 1997; Emele & Dorna 1998; Knight & Langkilde 2000; Tong Gao et al. 2015; Umber & Bajwa 2011) [ 39 , 46 , 65 , 125 , 139 ]. Their objectives are closely in line with removing or minimizing ambiguity. They cover a wide range of ambiguities, and there is a statistical element implicit in their approach.

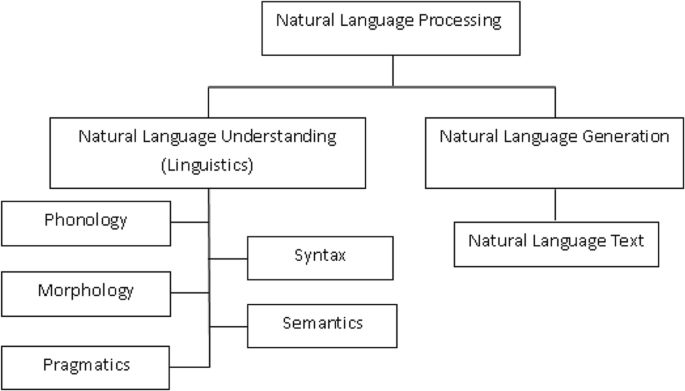

Natural Language Generation (NLG) is the process of producing meaningful phrases, sentences and paragraphs from an internal representation. It is a part of Natural Language Processing and happens in four phases: identifying the goals, planning how the goals may be achieved, evaluating the situation and the available communicative sources, and realizing the plans as a text (Fig. 2). It is the opposite of Natural Language Understanding.

Speaker and Generator

Components of NLG

To generate a text, we need to have a speaker or an application and a generator or a program that renders the application’s intentions into a fluent phrase relevant to the situation.

Components and Levels of Representation

The process of language generation involves the following interweaved tasks. Content selection: Information should be selected and included in the set. Depending on how this information is parsed into representational units, parts of the units may have to be removed while some others may be added by default. Textual organization: The information must be textually organized according to the grammar; it must be ordered both sequentially and in terms of linguistic relations like modifications. Linguistic resources: To support the information’s realization, linguistic resources must be chosen. In the end these resources will come down to choices of particular words, idioms, syntactic constructs etc. Realization: The selected and organized resources must be realized as an actual text or voice output.

Application or Speaker

The application or speaker only maintains the model of the situation. The speaker just initiates the process and does not take part in the language generation. It stores the history, structures the content that is potentially relevant and deploys a representation of what it knows. All of these form the situation, from which a subset of the propositions that the speaker has is selected. The only requirement is that the speaker must make sense of the situation [ 91 ].

3 NLP: Then and now

In the late 1940s the term NLP was not yet in existence, but work on machine translation (MT) had started. Research in this period was not completely localized. Russian and English were the dominant languages for MT (Andreev, 1967) [ 4 ]. MT/NLP research almost died in 1966 following the ALPAC report, which concluded that MT was going nowhere. Later, however, some MT production systems were providing output to their customers (Hutchins, 1986) [ 60 ]. By this time, work on the use of computers for literary and linguistic studies had also started. As early as 1960, signature work influenced by AI began with the BASEBALL Q-A system (Green et al., 1961) [ 51 ]. LUNAR (Woods, 1978) [ 152 ] and Winograd’s SHRDLU were natural successors of these systems, and they were seen as a step up in sophistication in terms of their linguistic and task-processing capabilities. There was a widespread belief that progress could only be made on two fronts: one was the ARPA Speech Understanding Research (SUR) project (Lea, 1980), and the other was a set of major system development projects building database front ends. The front-end projects (Hendrix et al., 1978) [ 55 ] were intended to go beyond LUNAR in interfacing with large databases. In the early 1980s computational grammar theory became a very active area of research, linked with logics for meaning and knowledge that could deal with the user’s beliefs and intentions and with functions like emphasis and themes.

By the end of the decade, powerful general-purpose sentence processors like SRI’s Core Language Engine (Alshawi, 1992) [ 2 ] and Discourse Representation Theory (Kamp and Reyle, 1993) [ 62 ] offered a means of tackling more extended discourse within the grammatico-logical framework. This period was one of a growing community. Practical resources, grammars, tools and parsers became available, for example the Alvey Natural Language Tools (Briscoe et al., 1987) [ 18 ]. The (D)ARPA speech recognition and message understanding (information extraction) conferences were notable not only for the tasks they addressed but also for their emphasis on heavy evaluation, starting a trend that became a major feature in the 1990s (Young and Chase, 1998; Sundheim and Chinchor, 1993) [ 131 , 157 ]. Work on user modeling (Wahlster and Kobsa, 1989) [ 142 ] was one strand of research. Cohen et al. (2002) [ 28 ] put forward a first approximation of a compositional theory of tune interpretation, together with the phonological assumptions on which it is based and the evidence from which they drew their proposals. At the same time, McKeown (1985) [ 85 ] demonstrated that rhetorical schemas could be used for producing both linguistically coherent and communicatively effective text. Some research in NLP marked important topics for the future, like word sense disambiguation (Small et al., 1988) [ 126 ] and probabilistic networks; statistically colored NLP and the work on the lexicon also pointed in this direction. Statistical language processing was a major theme in the 1990s (Manning and Schuetze, 1999) [ 75 ], and it involved more than data analysis alone. Information extraction and automatic summarizing (Mani and Maybury, 1999) [ 74 ] were also a point of focus. Next, we present a walkthrough of the developments from the early 2000s.

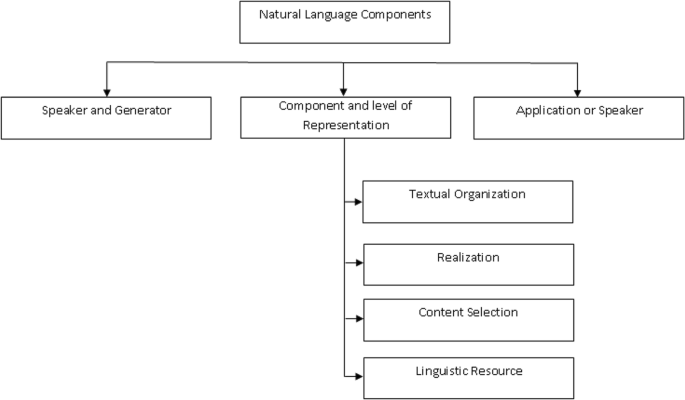

3.1 A walkthrough of recent developments in NLP

The main objectives of NLP include the interpretation, analysis, and manipulation of natural language data for the intended purpose with the use of various algorithms, tools, and methods. However, there are many challenges involved, which may depend upon the natural language data under consideration and which make it difficult to achieve all the objectives with a single approach. Therefore, the development of different tools and methods in the field of NLP and relevant areas of study has received much attention from several researchers in the recent past. The developments can be seen in Fig. 3:

A walkthrough of recent developments in NLP

In the early 2000s, neural language modeling was introduced, in which the probability of the next word (token) is determined given the n previous words. Bengio et al. [ 12 ] proposed the concept of a feed-forward neural network with a lookup table that represents the n previous words in the sequence. Collobert et al. [ 29 ] proposed the application of multitask learning in the field of NLP, where two convolutional models with max pooling were used to perform part-of-speech and named entity recognition tagging. Mikolov et al. [ 87 ] proposed a word embedding process that addresses the dense vector representation of text. They also report the challenges faced by the traditional sparse bag-of-words representation. After the advancement of word embeddings, neural networks that take variable-length input for further processing were introduced in the field of NLP. Sutskever et al. [ 132 ] proposed a general framework for sequence-to-sequence mapping where encoder and decoder networks are used to map from sequence to vector and from vector to sequence, respectively. In fact, the use of neural networks has played a very important role in NLP. One can observe from the existing literature that neural networks were not widely used in the early 2000s, but by the year 2013 enough discussion had happened about their use in the field of NLP to transform many things and pave the way for implementing various neural networks in NLP. Earlier, convolutional neural networks (CNNs) contributed to the field of image classification and the analysis of visual imagery. Later, the use of CNNs can be observed in tackling NLP tasks like Sentence Classification [ 127 ], Sentiment Analysis [ 135 ], Text Classification [ 118 ], Text Summarization [ 158 ], Machine Translation [ 70 ] and Answer Relations [ 150 ].
An article by Newatia (2019) [ 93 ] illustrates the general architecture behind any CNN model, and how it can be used in the context of NLP. One can also refer to the work of Wang and Gang [ 145 ] for the applications of CNNs in NLP. Furthermore, neural networks that are recurrent in nature, in that they perform the same function for every element of the data, are known as Recurrent Neural Networks (RNNs). They have also been used in NLP and found ideal for sequential data such as text, time series, financial data, speech, audio and video, among others; see the article by Thomas (2019) [ 137 ]. One of the modified versions of RNNs is Long Short-Term Memory (LSTM), which is very useful in cases where only the important information needs to be retained for a much longer time while the irrelevant information is discarded, see [ 52 , 58 ]. Further development of the LSTM has also led to a slightly simpler variant, called the gated recurrent unit (GRU), which has shown better results than standard LSTMs in many tasks [ 22 , 26 ]. Attention mechanisms [ 7 ], which let a network learn what to pay attention to in accordance with the current hidden state and annotation, together with the use of Transformers, have also brought a significant development in NLP, see [ 141 ]. It should be noted that Transformers have the potential to learn longer-term dependencies but are limited by a fixed-length context in the setting of language modeling. In this direction, Dai et al. [ 30 ] recently proposed a novel neural architecture, Transformer-XL (XL as in extra-long), which enables learning dependencies beyond a fixed length of words. Further, the work of Rae et al. [ 104 ] on the Compressive Transformer, an attentive sequence model which compresses memories for long-range sequence learning, may be helpful for readers. One may also refer to the recent work by Otter et al. [ 98 ] on uses of Deep Learning for NLP, and the relevant references cited therein.
The BERT (Bidirectional Encoder Representations from Transformers) [ 33 ] model and its successors have also played an important role in NLP.
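
To make the idea of attention more concrete, the following is a minimal sketch of one common formulation, scaled dot-product attention for a single query, written in plain Python without any deep learning library. It is an illustration under stated assumptions rather than the exact mechanism of any cited work: the function names `softmax` and `attend` and the toy vectors are hypothetical, and real models learn the query, key and value vectors from data.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Each key is scored against the query, the scores are normalised with a
    softmax, and the output is the weighted average of the value vectors.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Toy example (invented numbers): the query is most similar to the first key,
# so the first value vector receives the larger weight.
weights, output = attend(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights, output)
```

The weights always sum to one, so the output stays within the range spanned by the value vectors; this is what allows a network to shift its focus smoothly between positions.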

Many researchers have worked on NLP, building the tools and systems that make NLP what it is today. Tools like Sentiment Analysers, Parts of Speech (POS) Taggers, Chunking, Named Entity Recognition (NER), Emotion Detection and Semantic Role Labeling have made a huge contribution to NLP and are good topics for research. Sentiment analysis (Nasukawa et al., 2003) [ 156 ] works by extracting sentiments about a given topic, and it consists of topic-specific feature term extraction, sentiment extraction, and association by relationship analysis. It utilizes two linguistic resources for the analysis: the sentiment lexicon and the sentiment pattern database. It analyzes the documents for positive and negative words and tries to give ratings on a scale of −5 to +5. Turning to POS tagging, most of the currently used tagsets are derived from English. The tagsets most widely used as standard guidelines are designed for Indo-European languages, and tagging is less researched for Asian or Middle Eastern languages. Various authors have done research on making parts of speech taggers for various languages such as Arabic (Zeroual et al., 2017) [ 160 ], Sanskrit (Tapswi & Jain, 2012) [ 136 ] and Hindi (Ranjan & Basu, 2003) [ 105 ] to efficiently tag and classify words as nouns, adjectives, verbs etc. The authors in [ 136 ] used a treebank technique for creating a rule-based POS tagger for the Sanskrit language. Sanskrit sentences are parsed to assign the appropriate tag to each word using a suffix stripping algorithm, wherein the longest suffix is searched for in the suffix table and tags are assigned. Diab et al. (2004) [ 34 ] used a supervised machine learning approach and adopted Support Vector Machines (SVMs), which were trained on the Arabic Treebank, to automatically tokenize, part-of-speech tag and annotate base phrases in Arabic text.
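
The lexicon-based rating described above can be sketched as follows. This is a deliberately minimal illustration and not the system of the cited work: the lexicon entries, their weights and the function name `sentiment_rating` are invented for the example, and the sentiment pattern database and topic association steps are omitted.

```python
import re

# Hypothetical sentiment lexicon: word -> polarity strength. The words and
# weights are invented for this sketch and are not taken from the cited work.
LEXICON = {"good": 2, "great": 3, "excellent": 4, "bad": -2, "poor": -2, "terrible": -4}


def sentiment_rating(text, lexicon=LEXICON, low=-5, high=5):
    """Sum the polarity of known words and clamp the total to [low, high]."""
    words = re.findall(r"[a-z]+", text.lower())
    total = sum(lexicon.get(word, 0) for word in words)
    return max(low, min(high, total))


print(sentiment_rating("The camera is great but the battery is poor"))  # 1
print(sentiment_rating("Excellent screen, excellent sound"))            # 5 (clamped)
```

Summing word polarities ignores negation and word order, which is one reason why practical systems combine a lexicon with pattern or relationship analysis.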

Chunking is the process of separating phrases from unstructured text. Since simple tokens may not represent the actual meaning of the text, it is advisable to treat phrases such as “North Africa” as a single unit instead of as the separate words ‘North’ and ‘Africa’. Chunking, also known as “Shallow Parsing”, labels parts of sentences with syntactically correlated keywords like Noun Phrase (NP) and Verb Phrase (VP). Chunking is often evaluated using the CoNLL 2000 shared task. Various researchers (Sha and Pereira, 2003; McDonald et al., 2005; Sun et al., 2008) [ 83 , 122 , 130 ] used CoNLL test data for chunking and used features composed of words, POS tags, and tags.
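
A rule-based noun-phrase chunker over PoS-tagged tokens can be sketched in a few lines. The rule below (an optional determiner, any number of adjectives, then one or more nouns) and the function name `chunk_noun_phrases` are assumptions made for illustration; the tags follow the Penn Treebank style, and the statistical chunkers cited above learn such patterns from data instead of hard-coding them.

```python
def chunk_noun_phrases(tagged):
    """Group determiner/adjective/noun runs into noun-phrase (NP) chunks.

    `tagged` is a list of (word, PoS tag) pairs. The rule used here is
    NP = optional DT, any number of JJ, then one or more tags starting with NN.
    """
    chunks, i, n = [], 0, len(tagged)
    while i < n:
        j = i
        if j < n and tagged[j][1] == "DT":
            j += 1
        while j < n and tagged[j][1] == "JJ":
            j += 1
        noun_start = j
        while j < n and tagged[j][1].startswith("NN"):
            j += 1
        if j > noun_start:  # at least one noun was found, so emit a chunk
            chunks.append(" ".join(word for word, _ in tagged[i:j]))
            i = j
        else:  # no noun phrase starts here; move on by one token
            i += 1
    return chunks


sentence = [("The", "DT"), ("tourists", "NNS"), ("visited", "VBD"),
            ("North", "NNP"), ("Africa", "NNP")]
print(chunk_noun_phrases(sentence))  # ['The tourists', 'North Africa']
```

Here “North Africa” is returned as one unit, which matches the motivation given above for preferring phrases over isolated tokens.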

There are particular words in a document that refer to specific entities or real-world objects like locations, people, organizations etc. To find the words which have a unique context and are more informative, noun phrases are considered in the text documents. Named entity recognition (NER) is a technique to recognize and separate the named entities and group them under predefined classes. In the era of the Internet, however, people often use slang rather than traditional or standard English, and such text cannot be processed well by standard natural language processing tools. Ritter (2011) [ 111 ] proposed the classification of named entities in tweets because standard NLP tools did not perform well on tweets. They rebuilt the NLP pipeline, starting from PoS tagging and then chunking, for NER. This improved the performance in comparison to standard NLP tools.

Emotion detection investigates and identifies the types of emotion from speech, facial expressions, gestures, and text. Sharma (2016) [ 124 ] analyzed conversations in Hinglish, a mix of the English and Hindi languages, and identified the usage patterns of PoS. Their work was based on language identification and POS tagging of mixed script. They tried to detect emotions in mixed script by relating machine learning and human knowledge. They categorized sentences into 6 groups based on emotions and used the TLBO technique to help users prioritize their messages based on the emotions attached to each message. Seal et al. (2020) [ 120 ] proposed an efficient emotion detection method that searches for emotional words in a pre-defined emotional keyword database and analyzes the emotion words, phrasal verbs, and negation words. Their proposed approach exhibited better performance than recent approaches.

Semantic Role Labeling (SRL) works by assigning semantic roles to the parts of a sentence. For example, in the PropBank (Palmer et al., 2005) [ 100 ] formalism, one assigns roles to words that are arguments of a verb in the sentence. The precise arguments depend on the verb frame, and if multiple verbs exist in a sentence, a word might have multiple tags. State-of-the-art SRL systems comprise several stages: creating a parse tree, identifying which parse tree nodes represent the arguments of a given verb, and finally classifying these nodes to compute the corresponding SRL tags.

Event discovery in social media feeds (Benson et al., 2011) [ 13 ] uses a graphical model to analyze social media feeds and determine whether they contain the name of a person, a venue, a place, a time etc. The model operates on noisy feeds of data to extract records of events by aggregating information across multiple messages. Despite the noise of irrelevant messages and very irregular message language, this model was able to extract records with a broader array of features on factors.

Having given insights into some of the mentioned tools and the relevant work done, we now move to the broad applications of NLP.

3.2 Applications of NLP

Natural Language Processing can be applied in various areas like Machine Translation, Email Spam Detection, Information Extraction, Summarization, Question Answering etc. Next, we discuss some of these areas along with the relevant work done in those directions.

Machine Translation

As most of the world is online, the task of making data accessible and available to all is a challenge. A major challenge in making data accessible is the language barrier. There is a multitude of languages with different sentence structures and grammar. Machine translation generally means translating phrases from one language to another with the help of a statistical engine like Google Translate. The challenge with machine translation technologies is not directly translating words but keeping the meaning of sentences intact along with grammar and tenses. Statistical machine translation systems gather as much data as they can find that appears to be parallel between two languages, and they crunch this data to find the likelihood that something in Language A corresponds to something in Language B. In September 2016, Google announced a new machine translation system based on artificial neural networks and deep learning. In recent years, various methods have been proposed to automatically evaluate machine translation quality by comparing hypothesis translations with reference translations. Examples of such methods are word error rate, position-independent word error rate (Tillmann et al., 1997) [ 138 ], generation string accuracy (Bangalore et al., 2000) [ 8 ], multi-reference word error rate (Nießen et al., 2000) [ 95 ], BLEU score (Papineni et al., 2002) [ 101 ] and NIST score (Doddington, 2002) [ 35 ]. All these criteria try to approximate human assessment and often achieve an astonishing degree of correlation to human subjective evaluation of fluency and adequacy (Papineni et al., 2001; Doddington, 2002) [ 35 , 101 ].
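
Two of the simpler metrics listed above can be sketched directly. Word error rate is computed here as the word-level edit distance between hypothesis and reference divided by the reference length. For position-independent word error rate, the sketch assumes one common bag-of-words formulation (unmatched words divided by the reference length); the cited paper may define the details differently. Both functions assume a non-empty reference, and their names are chosen for this example.

```python
from collections import Counter


def word_error_rate(hypothesis, reference):
    """Word-level edit distance divided by the reference length.

    Assumes the reference contains at least one word.
    """
    hyp, ref = hypothesis.split(), reference.split()
    # previous[j] is the edit distance between the hypothesis words read so far and ref[:j]
    previous = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        current = [i]
        for j, r in enumerate(ref, start=1):
            substitution = previous[j - 1] + (h != r)
            current.append(min(substitution, previous[j] + 1, current[j - 1] + 1))
        previous = current
    return previous[-1] / len(ref)


def position_independent_error_rate(hypothesis, reference):
    """Bag-of-words error rate: word order is ignored (assumed formulation)."""
    hyp, ref = hypothesis.split(), reference.split()
    matches = sum((Counter(hyp) & Counter(ref)).values())  # multiset intersection
    return (max(len(hyp), len(ref)) - matches) / len(ref)


hyp, ref = "shyam beats ram", "ram beats shyam"
print(word_error_rate(hyp, ref))                  # 2/3: two substitutions out of three words
print(position_independent_error_rate(hyp, ref))  # 0.0: same words, different order
```

The example reuses the “ram beats shyam” sentence pair from the syntactic-level discussion: the position-independent score is zero although the two sentences mean different things, which shows why order-insensitive metrics are usually reported alongside order-sensitive ones.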

Text Categorization

Categorization systems take as input a large flow of data like official documents, military casualty reports, market data, newswires etc. and assign them to predefined categories or indices. For example, the Carnegie Group’s Construe system (Hayes, 1991) [ 54 ] takes Reuters articles as input and saves much time by doing work that would otherwise be done by staff or human indexers. Some companies have been using categorization systems to categorize trouble tickets or complaint requests and route them to the appropriate desks. Another application of text categorization is email spam filtering. Spam filters are becoming important as the first line of defence against unwanted emails. The issue of false negatives and false positives in spam filters is at the heart of NLP technology: it comes down to the challenge of extracting meaning from strings of text. A filtering solution that is applied to an email system uses a set of protocols to determine which of the incoming messages are spam and which are not. There are several types of spam filters available. Content filters: Review the content within the message to determine whether it is spam or not. Header filters: Review the email header looking for fake information. General blacklist filters: Stop all emails from blacklisted senders. Rules-based filters: Use user-defined criteria, such as stopping mail from a specific person or stopping mail that includes a specific word. Permission filters: Require anyone sending a message to be pre-approved by the recipient. Challenge-response filters: Require anyone sending a message to enter a code to gain permission to send email.

Spam Filtering

Spam filtering works using text categorization, and in recent times various machine learning techniques have been applied to text categorization or anti-spam filtering, such as Rule Learning (Cohen 1996) [ 27 ], Naïve Bayes (Sahami et al., 1998; Androutsopoulos et al., 2000; Rennie, 2000) [ 5 , 109 , 115 ], Memory-based Learning (Sakkis et al., 2000b) [ 117 ], Support Vector Machines (Drucker et al., 1999) [ 36 ], Decision Trees (Carreras and Marquez, 2001) [ 19 ], Maximum Entropy Model (Berger et al. 1996) [ 14 ], and Hash Forest and a rule encoding method (T. Xia, 2020) [ 153 ], sometimes combining different learners (Sakkis et al., 2001) [ 116 ]. These approaches are preferable because the classifier is learned from training data rather than built by hand. Naïve Bayes is preferred because of its performance despite its simplicity (Lewis, 1998) [ 67 ]. In text categorization two types of models have been used (McCallum and Nigam, 1998) [ 77 ]. Both models assume that a fixed vocabulary is present. In the first model, a document is generated by first choosing a subset of the vocabulary and then using the selected words any number of times, at least once, irrespective of order. This is called the multi-variate Bernoulli model. It captures which words are used in a document, irrespective of the number of occurrences and of order. In the second model, a document is generated by choosing a set of word occurrences and arranging them in any order. This is called the multinomial model; in addition to what the multi-variate Bernoulli model captures, it also records how many times a word is used in a document. Most text categorization approaches to anti-spam email filtering have used the multi-variate Bernoulli model (Androutsopoulos et al., 2000) [ 5 ] [ 15 ].
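
The difference between the two event models can be illustrated with a short sketch. The four-word vocabulary, the class-conditional probabilities and all function names below are invented for illustration; in practice the probabilities are estimated (with smoothing) from labelled training mail, and a class prior is added before the classes are compared.

```python
import math
from collections import Counter

VOCABULARY = ["free", "money", "meeting", "report"]  # hypothetical fixed vocabulary


def bernoulli_features(tokens, vocabulary=VOCABULARY):
    """Multi-variate Bernoulli view: 1 if the word occurs at all, else 0."""
    present = set(tokens)
    return [1 if word in present else 0 for word in vocabulary]


def multinomial_features(tokens, vocabulary=VOCABULARY):
    """Multinomial view: how many times each vocabulary word occurs."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


def bernoulli_log_likelihood(features, presence_probs):
    """log P(doc | class) when each word is an independent present/absent event."""
    return sum(math.log(p if x else 1.0 - p) for x, p in zip(features, presence_probs))


def multinomial_log_likelihood(counts, word_probs):
    """log P(doc | class) up to the multinomial coefficient, which is the same for every class."""
    return sum(c * math.log(p) for c, p in zip(counts, word_probs))


tokens = "free money free money free".split()
print(bernoulli_features(tokens))    # [1, 1, 0, 0]
print(multinomial_features(tokens))  # [3, 2, 0, 0]

# Invented class-conditional parameters for a "spam" and a "ham" class.
spam_presence, ham_presence = [0.8, 0.7, 0.1, 0.1], [0.1, 0.1, 0.6, 0.5]
spam_words, ham_words = [0.4, 0.4, 0.1, 0.1], [0.1, 0.1, 0.4, 0.4]
print(bernoulli_log_likelihood(bernoulli_features(tokens), spam_presence)
      > bernoulli_log_likelihood(bernoulli_features(tokens), ham_presence))
print(multinomial_log_likelihood(multinomial_features(tokens), spam_words)
      > multinomial_log_likelihood(multinomial_features(tokens), ham_words))
```

Note that the Bernoulli likelihood also rewards the absence of words such as “meeting”, whereas the multinomial likelihood only scores the words that actually occur and weights them by their counts.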

Information Extraction

Information extraction is concerned with identifying phrases of interest in textual data. For many applications, extracting entities such as names, places, events, dates, times, and prices is a powerful way of summarizing the information relevant to a user’s needs. In the case of a domain-specific search engine, the automatic identification of important information can increase the accuracy and efficiency of a directed search. Hidden Markov models (HMMs) are used to extract the relevant fields of research papers. These extracted text segments are used to allow searches over specific fields, to provide effective presentation of search results and to match references to papers. A familiar example is the pop-up ads on websites that show, with discounts, the items you recently looked at in an online store. In Information Retrieval two types of models have been used (McCallum and Nigam, 1998) [ 77 ]. Both models assume that a fixed vocabulary is present. In the first model, a document is generated by first choosing a subset of the vocabulary and then using the selected words any number of times, at least once, without any order. This is called the multi-variate Bernoulli model. It captures which words are used in a document, irrespective of the number of occurrences and of order. In the second model, a document is generated by choosing a set of word occurrences and arranging them in any order. This is called the multinomial model; in addition to what the multi-variate Bernoulli model captures, it also records how many times a word is used in a document.

Discovery of knowledge has become an important area of research in recent years. Knowledge discovery research uses a variety of techniques to extract useful information from source documents, such as Parts of Speech (POS) tagging; Chunking or Shallow Parsing; Stop-words (keywords that are used and must be removed before processing documents); Stemming (mapping words to some base form; it has two methods, dictionary-based stemming and Porter-style stemming (Porter, 1980) [ 103 ]; the former has higher accuracy but a higher cost of implementation, while the latter has a lower implementation cost and is usually insufficient for IR); Compound or Statistical Phrases (compounds and statistical phrases index multi-token units instead of single tokens); and Word Sense Disambiguation (the task of understanding the correct sense of a word in context; when used for information retrieval, terms are replaced by their senses in the document vector).
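
Stop-word removal followed by stemming can be sketched as a small preprocessing pipeline. The stemmer below is a crude suffix stripper written for illustration only; it is not the Porter algorithm, and the stop-word list, the suffix table and the function names are hypothetical.

```python
import re

STOP_WORDS = {"the", "a", "an", "is", "are", "of", "and", "to", "in"}  # illustrative subset
SUFFIXES = ["ing", "ed", "es", "s"]  # hypothetical suffix table, longest first


def crude_stem(word):
    """Strip the first matching suffix, keeping at least three characters of stem."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    """Tokenize, drop stop-words, then stem what remains."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [crude_stem(t) for t in tokens if t not in STOP_WORDS]


print(preprocess("The parsers are parsing the parsed documents"))
# ['parser', 'pars', 'pars', 'document']
```

The output shows both the benefit and the cost of stemming: “parsing” and “parsed” collapse to the same index term, but “parser” does not, and the stems are no longer dictionary words.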

The extracted information can be applied for a variety of purposes, for example to prepare a summary, to build databases, to identify keywords, or to classify text items according to some pre-defined categories. For example, CONSTRUE, which was developed for Reuters, is used in classifying news stories (Hayes, 1992) [ 54 ]. It has been suggested that while many IE systems can successfully extract terms from documents, acquiring relations between the terms is still a difficulty. PROMETHEE is a system that extracts lexico-syntactic patterns relative to a specific conceptual relation (Morin, 1999) [ 89 ]. IE systems should work at many levels, from word recognition to discourse analysis at the level of the complete document. The Blank Slate Language Processor (BSLP) approach (Bondale et al., 1999) [ 16 ] has been applied to the analysis of a real-life natural language corpus that consists of responses to open-ended questionnaires in the field of advertising.

There is a system called MITA (Metlife’s Intelligent Text Analyzer) (Glasgow et al., 1998) [ 48 ] that extracts information from life insurance applications. Ahonen et al. (1998) [ 1 ] suggested a mainstream framework for text mining that uses pragmatic and discourse level analyses of text.

Summarization

Information overload is a real problem in this digital age, and already our reach and access to knowledge and information exceeds our capacity to understand it. This trend is not slowing down, so the ability to summarize data while keeping the meaning intact is highly required. This is important not only for recognizing and understanding the important information in a large set of data; it is also used to understand deeper emotional meanings. For example, a company can determine the general sentiment on social media and use it for its latest product offering. This application is useful as a valuable marketing asset.

The types of text summarization depend on the number of documents, and the two important categories are single-document summarization and multi-document summarization (Zajic et al. 2008 [ 159 ]; Fattah and Ren 2009 [ 43 ]). Summaries can also be of two types: generic or query-focused (Gong and Liu 2001 [ 50 ]; Dunlavy et al. 2007 [ 37 ]; Wan 2008 [ 144 ]; Ouyang et al. 2011 [ 99 ]). The summarization task can be either supervised or unsupervised (Mani and Maybury 1999 [ 74 ]; Fattah and Ren 2009 [ 43 ]; Riedhammer et al. 2010 [ 110 ]). Training data is required in a supervised system for selecting relevant material from the documents. A large amount of annotated data is needed for learning techniques. A few techniques are as follows:

Bayesian Sentence based Topic Model (BSTM) uses both term-sentences and term document associations for summarizing multiple documents. (Wang et al. 2009 [ 146 ])

Factorization with Given Bases (FGB) is a language model where sentence bases are the given bases and it utilizes document-term and sentence term matrices. This approach groups and summarizes the documents simultaneously. (Wang et al. 2011) [ 147 ])

Topic Aspect-Oriented Summarization (TAOS) is based on topic factors. These topic factors are features that describe topics; for example, capitalized words are used to represent entities. Different topics can have different aspects, and different preferences of features are used to represent different aspects (Fang et al. 2015 [ 42 ]).
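To make the unsupervised, generic, single-document case described above more concrete, the following is a minimal sketch of frequency-based extractive summarization. It is not an implementation of BSTM, FGB or TAOS; it is a much simpler technique swapped in for illustration. The stop-word list, the function names and the scoring rule (average corpus frequency of a sentence's words) are all assumptions introduced here.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list (an assumption for this sketch).
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "and", "to", "in", "it", "on"}


def tokenize(text):
    """Lowercase the text and return its words, skipping stop words."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]


def summarize(document, max_sentences=1):
    """Return the highest-scoring sentences, kept in their original order."""
    # Naive sentence splitting on ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]
    freqs = Counter(tokenize(document))

    def score(sentence):
        # Average document-level frequency of the words in the sentence.
        words = tokenize(sentence)
        return sum(freqs[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])  # restore document order
    return " ".join(sentences[i] for i in keep)


if __name__ == "__main__":
    text = "Cats chase mice. Cats like cats. Dogs bark."
    print(summarize(text, max_sentences=1))
```

Because no annotated training data is involved, this sketch belongs to the unsupervised family; a supervised system would instead learn the sentence-scoring function from labelled examples.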

Dialogue System

Dialogue systems are very prominent in real-world applications, ranging from providing support to performing a particular action. Support dialogue systems require context awareness, whereas systems that perform an action do not require much of it. Earlier dialogue systems focused on small applications such as home theater systems. These dialogue systems utilize the phonemic and lexical levels of language. Habitable dialogue systems offer the potential for fully automated dialogue systems by utilizing all levels of a language (Liddy, 2001) [ 68 ]. This leads to systems that enable robots to interact with humans in natural language, such as Google Assistant, Microsoft’s Cortana, Apple’s Siri and Amazon’s Alexa.

NLP is applied in the medical field as well. The Linguistic String Project-Medical Language Processor (LSP-MLP) is one of the large-scale NLP projects in the field of medicine [ 21 , 53 , 57 , 71 , 114 ]. The LSP-MLP helps enable physicians to extract and summarize information on signs or symptoms, drug dosage and response data, with the aim of identifying possible side effects of any medicine while highlighting or flagging data items [ 114 ]. The National Library of Medicine is developing The Specialist System [ 78 , 79 , 80 , 82 , 84 ]. It is expected to function as an information extraction tool for biomedical knowledge bases, particularly Medline abstracts. Its lexicon was created using MeSH (Medical Subject Headings), Dorland’s Illustrated Medical Dictionary and general English dictionaries. The Centre d’Informatique Hospitaliere of the Hopital Cantonal de Geneve is working on an electronic archiving environment with NLP features [ 81 , 119 ]. In the first phase, patient records were archived. At a later stage, the LSP-MLP was adapted for French [ 10 , 72 , 94 , 113 ], and finally, a proper NLP system called RECIT [ 9 , 11 , 17 , 106 ] was developed using a method called Proximity Processing [ 88 ]. Its task was to implement a robust, multilingual system able to analyze and comprehend medical sentences, and to preserve the knowledge in free text in a language-independent knowledge representation [ 107 , 108 ]. Columbia University in New York has developed an NLP system called MEDLEE (MEDical Language Extraction and Encoding System) that identifies clinical information in narrative reports and transforms the textual information into a structured representation [ 45 ].

3.3 NLP in talk

We next discuss some of the recent NLP projects implemented by various companies:

ACE Powered GDPR Robot Launched by RAVN Systems [ 134 ]

RAVN Systems, a leading expert in Artificial Intelligence (AI), Search and Knowledge Management Solutions, announced the launch of a RAVN ACE (“Applied Cognitive Engine”) powered software Robot to help facilitate GDPR (“General Data Protection Regulation”) compliance. The Robot uses AI techniques to automatically analyze documents and other types of data in any business system that is subject to GDPR rules. It allows users to quickly and easily search, retrieve, flag, classify, and report on data deemed to be sensitive under GDPR. Users can also identify personal data in documents, view feeds on the latest personal data that requires attention, and produce reports on the data suggested for deletion or securing. RAVN’s GDPR Robot can also expedite requests for information (Data Subject Access Requests, “DSAR”) in a simple and efficient way, removing the need for a manual approach to these requests, which tends to be very labor-intensive. Peter Wallqvist, CSO at RAVN Systems, commented that GDPR compliance is of universal importance because it will affect any organization that controls and processes data concerning EU citizens.

Link: http://markets.financialcontent.com/stocks/news/read/33888795/RAVN_Systems_Launch_the_ACE_Powered_GDPR_Robot

Eno A Natural Language Chatbot Launched by Capital One [ 56 ]

Capital One announced a chatbot for customers called Eno. Eno is a natural language chatbot that people interact with through texting. Capital One claims that Eno is the first natural language SMS chatbot from a U.S. bank that allows customers to ask questions using natural language. Customers can ask Eno questions about their savings and other matters using a text interface. Eno creates an environment in which it feels as if a human is interacting. This is a different platform from those chosen by other brands, which launch chatbots on services such as Facebook Messenger and Skype. Capital One believed that Facebook has too much access to a person’s private information, which could get the bank into trouble with the privacy laws that U.S. financial institutions work under. For example, a Facebook Page admin can access full transcripts of the bot’s conversations. If that were the case, the admins could easily view customers’ personal banking information, which is not acceptable.

Link: https://www.macobserver.com/analysis/capital-one-natural-language-chatbot-eno/

Future of BI in Natural Language Processing [ 140 ]

Several companies in the BI space are trying to keep up with the trend and working hard to ensure that data becomes friendlier and more easily accessible, but there is still a long way to go. BI will also become easier to access because a GUI is no longer needed: queries are now made by text or voice command on smartphones. One of the most common examples is that Google can tell you today what tomorrow’s weather will be. Soon enough, we will be able to ask our personal data chatbot about customer sentiment today, and how customers will feel about our brand next week, all while walking down the street. Today, NLP tends to be based on turning natural language into machine language. But as the technology matures, especially the AI component, the computer will get better at “understanding” the query and start to deliver answers rather than search results. Initially, the data chatbot will probably be asked the question ‘how have revenues changed over the last three quarters?’ and then return pages of data for you to analyze. But once it learns the semantic relations and inferences of the question, it will be able to automatically perform the filtering and formulation necessary to provide an intelligible answer, rather than simply showing you data.

Link: http://www.smartdatacollective.com/eran-levy/489410/here-s-why-natural-language-processing-future-bi

Using Natural Language Processing and Network Analysis to Develop a Conceptual Framework for Medication Therapy Management Research [ 97 ]

This study describes a theory derivation process that is used to develop a conceptual framework for medication therapy management (MTM) research. The MTM service model and the chronic care model are selected as parent theories. Review article abstracts targeting medication therapy management in chronic disease care were retrieved from Ovid Medline (2000–2016). Unique concepts in each abstract are extracted using MetaMap, and their pair-wise co-occurrences are determined. This information is then used to construct a network graph of concept co-occurrence, which is further analyzed to identify content for the new conceptual model. In total, 142 abstracts are analyzed. Medication adherence is the most studied drug therapy problem and co-occurred with concepts related to patient-centered interventions targeting self-management. The enhanced model consists of 65 concepts clustered into 14 constructs. The framework requires additional refinement and evaluation to determine its relevance and applicability across a broad audience, including underserved settings.

Link: https://www.ncbi.nlm.nih.gov/pubmed/28269895?dopt=Abstract
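The pair-wise co-occurrence step in the study above can be illustrated with a minimal sketch. It assumes the concept extraction has already been done (the MetaMap step is not reproduced here), so each abstract is given as a list of concept strings. The function names and the toy concept lists are hypothetical and are not taken from the study.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_edges(concepts_per_abstract):
    """Count, for each unordered concept pair, the abstracts containing both."""
    edges = Counter()
    for concepts in concepts_per_abstract:
        # Use unique concepts per abstract and a fixed order so (a, b) == (b, a).
        unique = sorted(set(concepts))
        for a, b in combinations(unique, 2):
            edges[(a, b)] += 1
    return edges


def weighted_degree(edges):
    """Sum the edge weights touching each concept (a simple centrality proxy)."""
    degree = Counter()
    for (a, b), weight in edges.items():
        degree[a] += weight
        degree[b] += weight
    return degree


if __name__ == "__main__":
    # Toy input: hypothetical concepts, one list per abstract.
    abstracts = [
        ["medication adherence", "self-management"],
        ["medication adherence", "self-management", "pharmacist"],
        ["pharmacist", "medication adherence"],
    ]
    edges = cooccurrence_edges(abstracts)
    print(edges.most_common())
    print(weighted_degree(edges).most_common(1))
```

The resulting edge weights define the network graph; concepts with a high weighted degree are the ones that co-occur most often and are natural candidates for inclusion in a conceptual model.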

Meet the Pilot, world’s first language translating earbuds [ 96 ]

The world’s first smart earpiece, Pilot, will soon be able to translate over 15 languages. According to Springwise, Waverly Labs’ Pilot can already translate five spoken languages (English, French, Italian, Portuguese, and Spanish) and seven written languages (German, Hindi, Russian, Japanese, Arabic, Korean and Mandarin Chinese). The Pilot earpiece is connected via Bluetooth to the Pilot speech translation app, which uses speech recognition, machine translation, machine learning and speech synthesis technology. The user simultaneously hears the translated version of the speech on the second earpiece. Moreover, the conversation does not have to take place between only two people; other users can join in and discuss as a group. As of now, the user may experience a lag of a few seconds between the speech and its translation, which Waverly Labs aims to reduce. The Pilot earpiece will be available from September but can be pre-ordered now for $249. The earpieces can also be used for streaming music, answering voice calls, and getting audio notifications.

Link: https://www.indiegogo.com/projects/meet-the-pilot-smart-earpiece-language-translator-headphones-travel#/

4 Datasets in NLP and state-of-the-art models

The objective of this section is to present the various datasets used in NLP and some state-of-the-art models in NLP.

4.1 Datasets in NLP

A corpus is a collection of linguistic data, either compiled from written texts or transcribed from recorded speech. Corpora are intended primarily for testing linguistic hypotheses, e.g., to determine how a certain sound, word, or syntactic construction is used across a culture or language. There are various types of corpus. In an annotated corpus, the implicit information in the plain text has been made explicit by specific annotations. An un-annotated corpus contains plain text in its raw state. Different languages can be compared using a reference corpus. Monitor corpora are non-finite collections of texts which are mostly used in lexicography. A multilingual corpus contains small collections of monolingual corpora based on the same sampling procedure and categories for different languages. A parallel corpus contains texts in one language and their translations into other languages, aligned sentence by sentence or phrase by phrase. A reference corpus contains spoken (formal and informal) and written (formal and informal) language that represents various social and situational contexts. A speech corpus contains recorded speech, transcriptions of the recordings, and the time at which each word occurred in the recorded speech. There are various datasets available for natural language processing; some of these are listed below for different use cases:

Sentiment Analysis: Sentiment analysis is a rapidly expanding area of natural language processing (NLP) used in a variety of fields such as politics and business. Commonly used datasets for sentiment analysis are:

Stanford Sentiment Treebank (SST): Socher et al. introduced SST, which contains sentiment labels for 215,154 phrases in the parse trees of 11,855 sentences from movie reviews and poses novel challenges in sentiment compositionality [ 127 ].

Sentiment140: It contains 1.6 million tweets annotated with negative, neutral and positive labels.

Paper Reviews: It provides reviews of computing and informatics conferences written in English and Spanish languages. It has 405 reviews which are evaluated on a 5-point scale ranging from very negative to very positive.

IMDB: For natural language processing, text analytics, and sentiment analysis, this dataset offers thousands of movie reviews split into training and test datasets. This dataset was introduced by Maas et al. in 2011 [ 73 ].

Sentiraama: G. Rama Rohit Reddy of the Language Technologies Research Centre, KCIS, IIIT Hyderabad, generated the corpus “Sentiraama.” The corpus is divided into four datasets, each of which is annotated with a two-value scale that distinguishes between positive and negative sentiment at the document level. The corpus contains data from a variety of fields, including book reviews, product reviews, movie reviews, and song lyrics. The annotators meticulously followed the annotation technique for each of them. The folder “Song Lyrics” in the corpus contains 339 Telugu song lyrics written in Telugu script [ 121 ].

Language Modelling: Language models analyse text data to calculate word probabilities. They use an algorithm to interpret the data, which establishes rules for context in natural language. The model then uses these rules to predict or construct new sentences. In essence, the model learns the basic characteristics and features of language and then applies them to new phrases. Commonly used datasets for language modelling are as follows:

Salesforce’s WikiText-103 dataset has 103 million tokens collected from 28,475 featured articles from Wikipedia.

WikiText-2 is a scaled-down version of WikiText-103. It contains 2 million tokens with a vocabulary size of 33,278.

The Penn Treebank portion of the Wall Street Journal corpus includes 929,000 tokens for training, 73,000 tokens for validation, and 82,000 tokens for testing purposes. Its context is limited since it comprises sentences rather than paragraphs [ 76 ].

The Ministry of Electronics and Information Technology’s Technology Development Programme for Indian Languages (TDIL) launched its own data distribution portal ( www.tdil-dc.in ) which has cataloged datasets [ 24 ].
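As a hedged illustration of how a language model "calculates word probability" from a corpus such as those listed above, the following minimal sketch estimates bigram probabilities by maximum likelihood (counting) and uses them to predict the next word. State-of-the-art models are neural and far more capable; the function names, the `<s>` and `</s>` boundary markers and the three-sentence toy corpus are assumptions made for this example.

```python
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> mark sentence boundaries (a convention assumed here).
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, cur in zip(tokens, tokens[1:]):
            counts[prev][cur] += 1
    return counts


def prob(counts, prev, cur):
    """Maximum-likelihood estimate of P(cur | prev); 0.0 for unseen contexts."""
    following = counts.get(prev, Counter())
    total = sum(following.values())
    return following[cur] / total if total else 0.0


def predict_next(counts, prev):
    """Return the most frequent word seen after `prev`, or None if unseen."""
    following = counts.get(prev)
    return following.most_common(1)[0][0] if following else None


if __name__ == "__main__":
    corpus = ["the cat sat", "the cat ran", "the dog sat"]
    model = train_bigram(corpus)
    print(prob(model, "the", "cat"))
    print(predict_next(model, "the"))
```

With unsmoothed counts, any word pair absent from the training data receives probability zero, which is one reason larger corpora with longer contexts matter for this task.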

Machine Translation: The task of converting text in one natural language into another language while preserving the sense of the input text is known as machine translation. Commonly used datasets are as follows: