Khan Academy Blog

Introducing Khanmigo’s New Academic Essay Feedback Tool

posted on November 29, 2023

By Sarah Robertson, senior product manager at Khan Academy

Khan Academy has always been about leveraging technology to deliver world-class educational experiences to students everywhere. We think the newest AI-powered feature in our Khanmigo pilot—our Academic Essay Feedback tool—is a groundbreaking step toward revolutionizing how students improve their writing skills.

The reality of writing instruction

Here’s a word problem for you: A ninth-grade English teacher assigns a two-page essay to 100 students. If she limits herself to spending 10 minutes per essay providing personalized, detailed feedback on each draft, how many hours will it take her to finish reviewing all 100 essays?

The answer is that it would take her nearly 17 hours, and that’s just for the first draft!
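The arithmetic behind that answer is easy to check. The snippet below is only an illustrative sketch; the function name `review_hours` is hypothetical and not part of any Khan Academy tool.

```python
def review_hours(num_essays: int, minutes_per_essay: float) -> float:
    """Total review time, in hours, for one round of drafts."""
    return num_essays * minutes_per_essay / 60


# 100 essays at 10 minutes each is 1,000 minutes of reviewing.
hours = review_hours(100, 10)
print(f"{hours:.1f} hours")  # prints "16.7 hours"
```

Each additional round of drafts adds the same amount of time again.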

Research tells us that the most effective methods of improving student writing skills require feedback to be focused, actionable, aligned to clear objectives, and delivered often and in a timely manner.

The unfortunate reality is that teachers are unable to provide this level of feedback to students as often as students need it, and they need it now more than ever. Only 25% of eighth and twelfth graders are proficient in writing, according to the most recent NAEP scores.

An AI writing tutor for every student

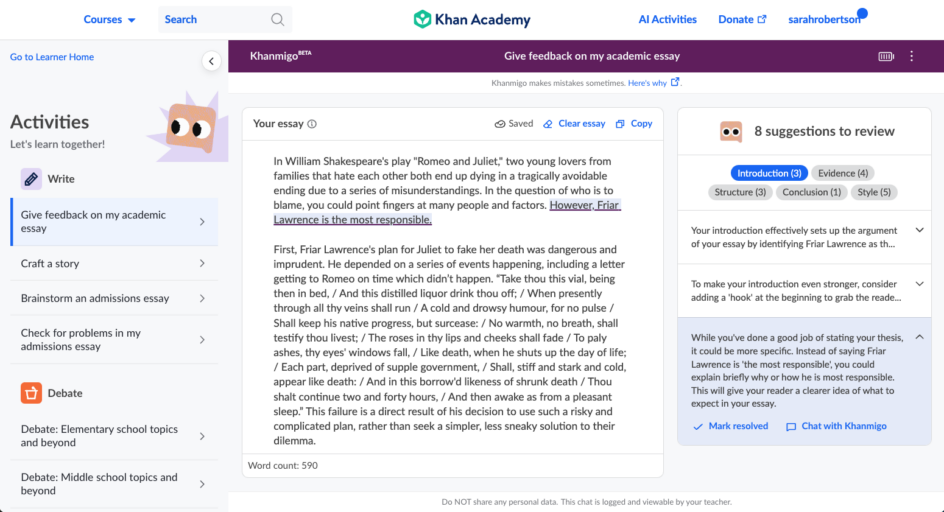

Developed by experts in English Language Arts (ELA) and writing instruction, the pilot Khanmigo Academic Essay Feedback tool uses AI to offer students specific, immediate, and actionable feedback on their argumentative, expository, or literary analysis essays.

Unlike other AI-powered writing tools, the Academic Essay Feedback tool isn’t limited to giving feedback on sentence- or language-level issues alone, like grammar or spelling. Instead, it provides feedback on areas like essay structure and organization, how well students support their arguments, introduction and conclusion, and style and tone.

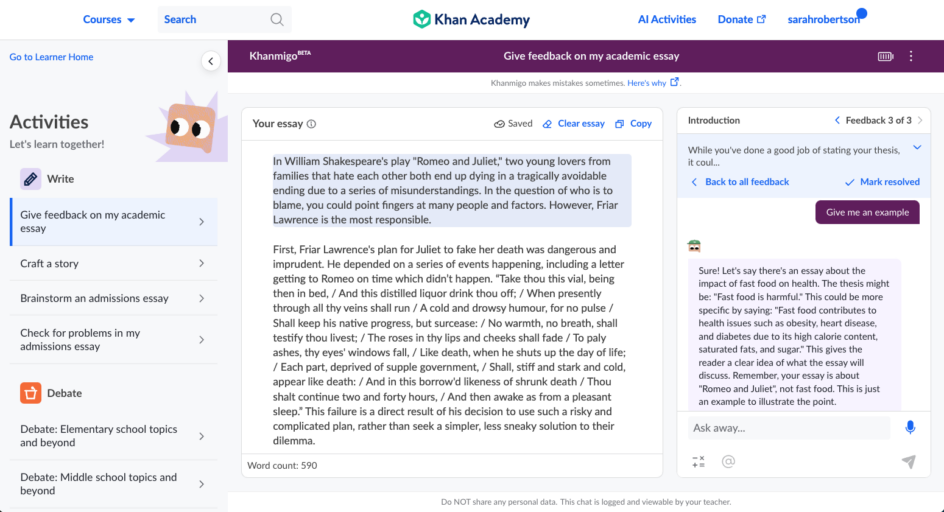

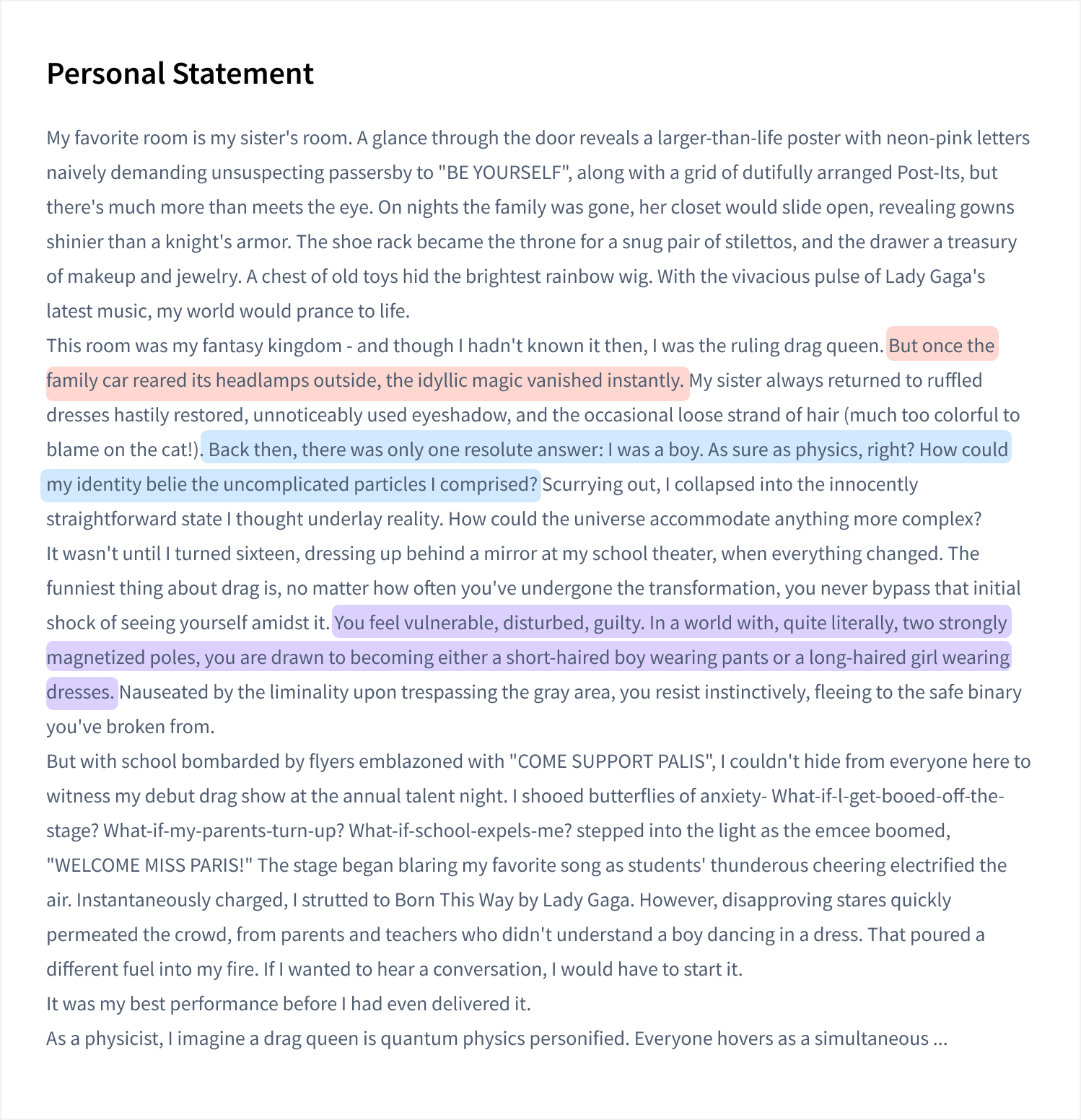

The tool doesn’t stop at providing feedback; it also guides students through the revision process. Students can view highlighted feedback, ask clarifying questions, see exemplar writing, make revisions, and ask for further review, all without the AI doing any actual writing for them.

Unique features of the Khanmigo pilot Academic Essay Feedback tool

- Immediate, personalized feedback: within seconds, students get detailed, actionable, grade-level-appropriate feedback (both praise and constructive) that is personalized to their specific writing assignment and tied directly to interactive highlights in their essay.

- Comprehensive approach: feedback covers a wide range of writing skills, from crafting an engaging yet focused introduction and thesis, to overall essay structure and organization, to style and tone, to alignment and use of evidence.

- Interactive revision process: students can interact with Khanmigo to ask questions about specific pieces of feedback, get examples of model writing, make immediate revisions based on the feedback, and see if their revisions addressed the suggestion.

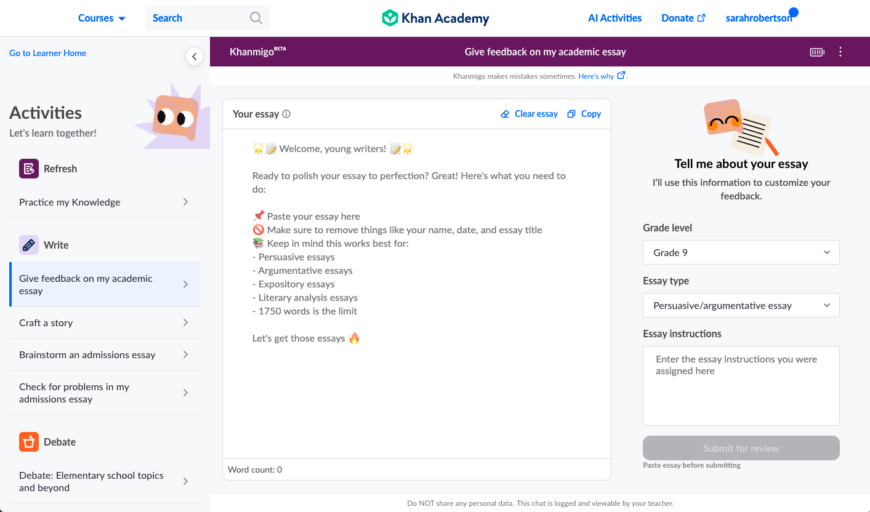

- Support for various essay types: the tool is versatile and assists with multi-paragraph persuasive, argumentative, explanatory, and literary analysis essay assignments for grades 8-12 (and more, coming soon).

- Focus on instruction and growth: like all Khanmigo features, the Academic Essay Feedback tool will not do the work for the student. Teachers and parents can rest assured that Khanmigo is there to improve the students’ independent writing skills, not provide one-click suggested revisions.

How parents can use Khanmigo’s Academic Essay Feedback tool

Any student with Khanmigo access can find the feedback tool under the “Write” category on their AI Activities menu.

For academic essays, students should simply paste their first draft into the essay field, select their grade level and essay type, and provide the essay instructions from the teacher.

Students then click “Submit” and feedback begins generating. Once Khanmigo is done generating feedback, students can work their way through the suggestions for each category, chat with Khanmigo for help, make revisions, and resolve feedback. They can then submit their second draft for another round of feedback, or copy the final draft to submit to their teacher.

Bringing Khanmigo to your classroom, school, or district

Teachers in Khan Academy Districts partnerships can begin using the Khanmigo Academic Essay Feedback tool with their students right away. Simply direct students to the feedback tool under the “Write” category on their AI Activities menu.

Like all other Khanmigo activities, students’ interactions are monitored and moderated for safety. Teachers or parents can view the student’s initial draft, AI-generated feedback, chat history, and final draft in the student’s chat history. If anything is flagged for moderation, teachers or parents will receive an email notification.

Looking ahead

With the Academic Essay Feedback tool in our Khanmigo pilot, teachers and parents can empower students to take charge of their writing. The tool helps facilitate a deeper understanding of effective writing techniques and encourages self-improvement. For teachers, we think this tool is a valuable ally, enabling them to provide more frequent, timely, detailed, and actionable feedback for students on multiple drafts.

In the coming months, we’ll be launching exciting improvements to the tool and even more writing resources for learners, parents, teachers, and administrators:

- The ability for teachers to create an essay-revision assignment for their students on Khan Academy

- More varied feedback areas and flexibility in what feedback is given

- Support for students in essay outlining and drafting

- Insights for teachers and parents into their students’ full writing process

Stay tuned!

Sarah Robertson is a senior product manager at Khan Academy. She has an M.Ed. in Curriculum and Instruction and over a decade of experience teaching English, developing curriculum, and creating software products that have helped tens of millions of students improve their reading and writing skills.


The world’s leading AI platform for teachers to grade essays

EssayGrader is an AI-powered grading assistant that gives high-quality, specific and accurate writing feedback for essays. On average it takes a teacher 10 minutes to grade a single essay; with EssayGrader that time is cut down to 30 seconds. That's a 95% reduction in the time it takes to grade an essay, with the same results.
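The 95% figure follows directly from the two times quoted above. The sketch below only checks that arithmetic; the function name `percent_reduction` is hypothetical, and the code says nothing about whether the grading results really are the same.

```python
def percent_reduction(before_seconds: float, after_seconds: float) -> float:
    """Percentage of time saved when a task drops from `before` to `after`."""
    return (before_seconds - after_seconds) * 100 / before_seconds


# 10 minutes (600 seconds) per essay versus 30 seconds per essay.
print(percent_reduction(10 * 60, 30))  # prints 95.0
```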


EssayGrader analyzes essays with the power of AI. Our software is trained on massive amounts of diverse text data, including books, articles and websites. This gives us the ability to provide accurate and detailed writing feedback to students and save teachers loads of time. We are the perfect AI-powered grading assistant.

EssayGrader analyzes essays for grammar, punctuation, spelling, coherence, clarity and writing style errors. We provide detailed reports of the errors found and suggestions on how to fix those errors. Our error reports help speed up grading times by quickly highlighting mistakes made in the essay.

Bulk uploading

Uploading a single essay at a time, then waiting for it to complete, is a pain. Bulk uploading allows you to upload an entire class's worth of essays at once. You can work on other important tasks, then come back in a few minutes to see all the essays graded.

Custom rubrics

We don't assume how you want to grade your essays. Instead, we provide you with the ability to create the same rubrics you already use. Those rubrics are then used to grade essays with the same grading criteria you are already accustomed to.

Sometimes you don't want to read a 5,000-word essay and you'd just like a quick summary. Or maybe you're a student who needs to provide a summary of your essay to your teacher. Our summarizer feature can help by producing a concise summary that includes the most important information and unique phrases.

AI detector

Our AI detector feature allows teachers to identify if an essay was written by AI or if only parts of it were written by AI. AI is becoming very popular and teachers need to be able to detect if essays are being written by students or AI.

Create classes to neatly organize your students' essays. This is an essential feature when you have multiple classes and need to be able to track down students' essays quickly.

Our mission

At EssayGrader, our mission is crystal clear: we're transforming the grading experience for teachers and students alike. Picture a space where teachers can efficiently and accurately grade essays, lightening their workload, while empowering students to enhance their writing skills. Our software is a dynamic work in progress, a testament to our commitment to constant improvement. We're dedicated to refining and enhancing our platform continually. With each update, we strive to simplify the lives of both educators and learners, making the process of grading and writing essays smoother and more efficient.

We recognize the immense challenges teachers face – the heavy burdens, the long hours, and the often underappreciated efforts. EssayGrader is our way of shouldering some of that load. We are here to support you, to make your tasks more manageable, and to give you the tools you need to excel in your teaching journey.


Supercharge your college essay

Elevate Your Essay to Perfection

Admissions expertise

Impression analysis

Actionable insights

Instant feedback


AI Essay Reviewer

AI-powered essay analysis and feedback.

- Improve academic essays: Get detailed feedback on your essays to enhance your academic writing skills.

- Enhance professional writing: Use the tool to refine your professional writing and communicate more effectively in the workplace.

- Support teaching and learning: Teachers can use the tool to provide comprehensive feedback to students, while students can use it to understand their strengths and areas for improvement.

- Prepare for exams: Use the tool to prepare for exams that require essay writing, such as SAT, ACT, GRE, and more.


The Hechinger Report

Covering Innovation & Inequality in Education

PROOF POINTS: AI writing feedback ‘better than I thought,’ top researcher says



This week I challenged my editor to face off against a machine. Barbara Kantrowitz gamely accepted, under one condition: “You have to file early.” Ever since ChatGPT arrived in 2022, many journalists have made a public stunt out of asking the new generation of artificial intelligence to write their stories. Those AI stories were often bland and sprinkled with errors. I wanted to understand how well ChatGPT handled a different aspect of writing: giving feedback.

My curiosity was piqued by a new study, published in the June 2024 issue of the peer-reviewed journal Learning and Instruction, that evaluated the quality of ChatGPT’s feedback on students’ writing. A team of researchers compared AI with human feedback on 200 history essays written by students in grades 6 through 12 and they determined that human feedback was generally a bit better. Humans had a particular advantage in advising students on something to work on that would be appropriate for where they are in their development as a writer.

But ChatGPT came close. On a five-point scale that the researchers used to rate feedback quality, with a 5 being the highest quality feedback, ChatGPT averaged a 3.6 compared with a 4.0 average from a team of 16 expert human evaluators. It was a tough challenge. Most of these humans had taught writing for more than 15 years or they had considerable experience in writing instruction. All received three hours of training for this exercise plus extra pay for providing the feedback.

ChatGPT even beat these experts in one aspect; it was slightly better at giving feedback on students’ reasoning, argumentation and use of evidence from source materials – the features that the researchers had wanted the writing evaluators to focus on.

“It was better than I thought it was going to be because I didn’t have a lot of hope that it was going to be that good,” said Steve Graham, a well-regarded expert on writing instruction at Arizona State University, and a member of the study’s research team. “It wasn’t always accurate. But sometimes it was right on the money. And I think we’ll learn how to make it better.”

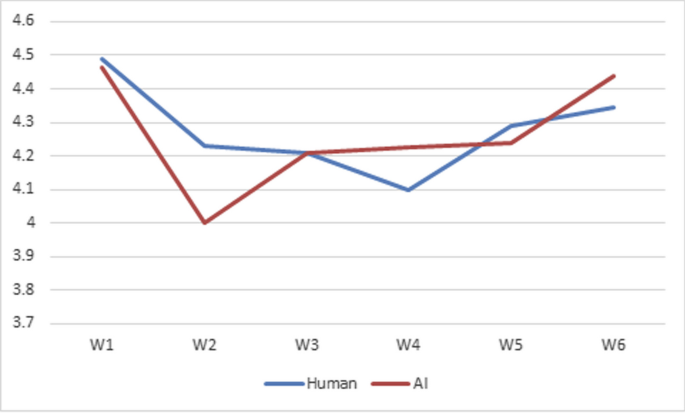

Average ratings for the quality of ChatGPT and human feedback on 200 student essays

Exactly how ChatGPT is able to give good feedback is something of a black box even to the writing researchers who conducted this study. Artificial intelligence doesn’t comprehend things in the same way that humans do. But somehow, through the neural networks that ChatGPT’s programmers built, it is picking up on patterns from all the writing it has previously digested, and it is able to apply those patterns to a new text.

The surprising “relatively high quality” of ChatGPT’s feedback is important because it means that the new artificial intelligence of large language models, also known as generative AI, could potentially help students improve their writing. One of the biggest problems in writing instruction in U.S. schools is that teachers assign too little writing, Graham said, often because teachers feel that they don’t have the time to give personalized feedback to each student. That leaves students without sufficient practice to become good writers. In theory, teachers might be willing to assign more writing or insist on revisions for each paper if students (or teachers) could use ChatGPT to provide feedback between drafts.

Despite the potential, Graham isn’t an enthusiastic cheerleader for AI. “My biggest fear is that it becomes the writer,” he said. He worries that students will not limit their use of ChatGPT to helpful feedback, but ask it to do their thinking, analyzing and writing for them. That’s not good for learning. The research team also worries that writing instruction will suffer if teachers delegate too much feedback to ChatGPT. Seeing students’ incremental progress and common mistakes remains important for deciding what to teach next, the researchers said. For example, seeing loads of run-on sentences in your students’ papers might prompt a lesson on how to break them up. But if you don’t see them, you might not think to teach it. Another common concern among writing instructors is that AI feedback will steer everyone to write in the same homogenized way. A young writer’s unique voice could be flattened out before it even has the chance to develop.

There’s also the risk that students may not be interested in heeding AI feedback. Students often ignore the painstaking feedback that their teachers already give on their essays. Why should we think students will pay attention to feedback if they start getting more of it from a machine?

Still, Graham and his research colleagues at the University of California, Irvine, are continuing to study how AI could be used effectively and whether it ultimately improves students’ writing. “You can’t ignore it,” said Graham. “We either learn to live with it in useful ways, or we’re going to be very unhappy with it.”

Right now, the researchers are studying how students might converse back-and-forth with ChatGPT like a writing coach in order to understand the feedback and decide which suggestions to use.

Example of feedback from a human and ChatGPT on the same essay

In the current study, the researchers didn’t track whether students understood or employed the feedback, but only sought to measure its quality. Judging the quality of feedback is a rather subjective exercise, just as feedback itself is a bundle of subjective judgment calls. Smart people can disagree on what good writing looks like and how to revise bad writing.

In this case, the research team came up with its own criteria for what constitutes good feedback on a history essay. They instructed the humans to focus on the student’s reasoning and argumentation, rather than, say, grammar and punctuation. They also told the human raters to adopt a “glow and grow strategy” for delivering the feedback by first finding something to praise, then identifying a particular area for improvement.

The human raters provided this kind of feedback on hundreds of history essays from 2021 to 2023, as part of an unrelated study of an initiative to boost writing at school. The researchers randomly grabbed 200 of these essays and fed the raw student writing – without the human feedback – to version 3.5 of ChatGPT and asked it to give feedback, too.

At first, the AI feedback was terrible, but as the researchers tinkered with the instructions, or the “prompt,” they typed into ChatGPT, the feedback improved. The researchers eventually settled upon this wording: “Pretend you are a secondary school teacher. Provide 2-3 pieces of specific, actionable feedback on each of the following essays…. Use a friendly and encouraging tone.” The researchers also fed the assignment that the students were given, for example, “Why did the Montgomery Bus Boycott succeed?” along with the reading source material that the students were provided. (More details about how the researchers prompted ChatGPT are explained in Appendix C of the study.)
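To make the ingredients of that prompt concrete, here is a minimal sketch of how the three pieces (instructions, assignment, source material) and the essay might be combined into one block of text. It is not the researchers’ actual code: the function name `build_feedback_prompt`, the section labels, and the ordering are all assumptions, and the instruction text keeps the article’s ellipsis rather than guessing at the omitted words.

```python
# Wording as quoted in the article. The "…." marks text the article leaves
# out, so it is kept as-is rather than filled in.
INSTRUCTIONS = (
    "Pretend you are a secondary school teacher. "
    "Provide 2-3 pieces of specific, actionable feedback on each of the "
    "following essays…. Use a friendly and encouraging tone."
)


def build_feedback_prompt(assignment: str, source_material: str, essay: str) -> str:
    """Assemble one prompt string. Labels and ordering are assumptions."""
    sections = [
        INSTRUCTIONS,
        f"Assignment: {assignment}",
        f"Source material:\n{source_material}",
        f"Student essay:\n{essay}",
    ]
    return "\n\n".join(sections)


prompt = build_feedback_prompt(
    assignment="Why did the Montgomery Bus Boycott succeed?",
    source_material="(reading passages given to the students)",
    essay="(raw student writing, without the human feedback)",
)
print(prompt)
```

The resulting text would then be pasted or sent to the chatbot; how the study did that step is described in its Appendix C, not here.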

The humans took about 20 to 25 minutes per essay. ChatGPT’s feedback came back instantly. The humans sometimes marked up sentences by, for example, showing a place where the student could have cited a source to buttress an argument. ChatGPT didn’t write any in-line comments and only wrote a note to the student.

Researchers then read through both sets of feedback – human and machine – for each essay, comparing and rating them. (It was supposed to be a blind comparison test and the feedback raters were not told who authored each one. However, the language and tone of ChatGPT were distinct giveaways, and the in-line comments were a tell of human feedback.)

Humans appeared to have a clear edge with the very strongest and the very weakest writers, the researchers found. They were better at pushing a strong writer a little bit further, for example, by suggesting that the student consider and address a counterargument. ChatGPT struggled to come up with ideas for a student who was already meeting the objectives of a well-argued essay with evidence from the reading source materials. ChatGPT also struggled with the weakest writers. The researchers had to drop two of the essays from the study because they were so short that ChatGPT didn’t have any feedback for the student. The human rater was able to parse out some meaning from a brief, incomplete sentence and offer a suggestion.

In one student essay about the Montgomery Bus Boycott, reprinted above, the human feedback seemed too generic to me: “Next time, I would love to see some evidence from the sources to help back up your claim.” ChatGPT, by contrast, specifically suggested that the student could have mentioned how much revenue the bus company lost during the boycott – an idea that was mentioned in the student’s essay. ChatGPT also suggested that the student could have mentioned specific actions that the NAACP and other organizations took. But the student had actually mentioned a few of these specific actions in his essay. That part of ChatGPT’s feedback was plainly inaccurate.

In another student writing example, also reprinted below, the human straightforwardly pointed out that the student had gotten an historical fact wrong. ChatGPT appeared to affirm that the student’s mistaken version of events was correct.

Another example of feedback from a human and ChatGPT on the same essay

So how did ChatGPT’s review of my first draft stack up against my editor’s? One of the researchers on the study team suggested a prompt that I could paste into ChatGPT. After a few back-and-forth questions with the chatbot about my grade level and intended audience, it initially spit out some generic advice that had little connection to the ideas and words of my story. It seemed more interested in format and presentation, suggesting a summary at the top and subheads to organize the body. One suggestion would have made my piece too long-winded. Its advice to add examples of how AI feedback might be beneficial was something that I had already done. I then asked for specific things to change in my draft, and ChatGPT came back with some great subhead ideas. I plan to use them in my newsletter, which you can see if you sign up for it here. (And if you want to see my prompt and dialogue with ChatGPT, here is the link.)

My human editor, Barbara, was the clear winner in this round. She tightened up my writing, fixed style errors and helped me brainstorm this ending. Barbara’s job is safe – for now.

This story about AI feedback was written by Jill Barshay and produced by The Hechinger Report, a nonprofit, independent news organization focused on inequality and innovation in education. Sign up for Proof Points and other Hechinger newsletters.


One key point is that humans took 20-25 minutes per essay while AI was instant. I estimate I can read AI’s feedback, comment on it, and improve it in about five minutes per essay, meaning I could give four to five times as much feedback in the same amount of time. Likely, AI + me will create better feedback than either of us alone. Given the number of students I teach, I could only give the same amount of human attention that the humans in this experiment gave were my district to give me an entire free week to read and score (120 students × 20 minutes = 2,400 minutes = 40 hours). That’s not likely, plus it would take time away from vital in-class connections.
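The numbers in this letter can be checked with a few lines of arithmetic. This is only a sketch of the letter writer’s estimate; the constant names are hypothetical, and the five-minute figure is the writer’s own guess rather than a measured result.

```python
STUDENTS = 120
HUMAN_MINUTES = 20       # low end of the 20-25 minutes reported in the study
AI_ASSISTED_MINUTES = 5  # the letter writer's own estimate

human_hours = STUDENTS * HUMAN_MINUTES / 60
assisted_hours = STUDENTS * AI_ASSISTED_MINUTES / 60
speedup = HUMAN_MINUTES / AI_ASSISTED_MINUTES

print(human_hours, assisted_hours, speedup)  # prints 40.0 10.0 4.0
```

Using 25 minutes instead of 20 for the human figure gives a speedup of 5, which is where the “four to five times” range comes from.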


The Potential of AI Feedback to Improve Student Writing

This week I challenged my editor to face off against a machine. Barbara Kantrowitz gamely accepted, under one condition: “You have to file early.” Ever since ChatGPT arrived in 2022, many journalists have made a public stunt out of asking the new generation of artificial intelligence to write their stories. Those AI stories were often bland and sprinkled with errors. I wanted to understand how well ChatGPT handled a different aspect of writing: giving feedback

My curiosity was piqued by a new study , published in the June 2024 issue of the peer-reviewed journal Learning and Instruction, that evaluated the quality of ChatGPT’s feedback on students’ writing. A team of researchers compared AI with human feedback on 200 history essays written by students in grades 6 through 12 and they determined that human feedback was generally a bit better. Humans had a particular advantage in advising students on something to work on that would be appropriate for where they are in their development as a writer.

But ChatGPT came close. On a five-point scale that the researchers used to rate feedback quality, with a 5 being the highest quality feedback, ChatGPT averaged a 3.6 compared with a 4.0 average from a team of 16 expert human evaluators. It was a tough challenge. Most of these humans had taught writing for more than 15 years or they had considerable experience in writing instruction. All received three hours of training for this exercise plus extra pay for providing the feedback.

ChatGPT even beat these experts in one aspect; it was slightly better at giving feedback on students’ reasoning, argumentation and use of evidence from source materials – the features that the researchers had wanted the writing evaluators to focus on.

“It was better than I thought it was going to be because I didn’t have a lot of hope that it was going to be that good,” said Steve Graham, a well-regarded expert on writing instruction at Arizona State University, and a member of the study’s research team. “It wasn’t always accurate. But sometimes it was right on the money. And I think we’ll learn how to make it better.”

Exactly how ChatGPT is able to give good feedback is something of a black box even to the writing researchers who conducted this study. Artificial intelligence doesn’t comprehend things in the same way that humans do. But somehow, through the neural networks that ChatGPT’s programmers built, it is picking up on patterns from all the writing it has previously digested, and it is able to apply those patterns to a new text.

The surprising “relatively high quality” of ChatGPT’s feedback is important because it means that the new artificial intelligence of large language models, also known as generative AI, could potentially help students improve their writing. One of the biggest problems in writing instruction in U.S. schools is that teachers assign too little writing, Graham said, often because teachers feel that they don’t have the time to give personalized feedback to each student. That leaves students without sufficient practice to become good writers. In theory, teachers might be willing to assign more writing or insist on revisions for each paper if students (or teachers) could use ChatGPT to provide feedback between drafts.

Despite the potential, Graham isn’t an enthusiastic cheerleader for AI. “My biggest fear is that it becomes the writer,” he said. He worries that students will not limit their use of ChatGPT to helpful feedback, but ask it to do their thinking, analyzing and writing for them. That’s not good for learning. The research team also worries that writing instruction will suffer if teachers delegate too much feedback to ChatGPT. Seeing students’ incremental progress and common mistakes remain important for deciding what to teach next, the researchers said. For example, seeing loads of run-on sentences in your students’ papers might prompt a lesson on how to break them up. But if you don’t see them, you might not think to teach it. Another common concern among writing instructors is that AI feedback will steer everyone to write in the same homogenized way. A young writer’s unique voice could be flattened out before it even has the chance to develop.

There’s also the risk that students may not be interested in heeding AI feedback. Students often ignore the painstaking feedback that their teachers already give on their essays. Why should we think students will pay attention to feedback if they start getting more of it from a machine?

Still, Graham and his research colleagues at the University of California, Irvine, are continuing to study how AI could be used effectively and whether it ultimately improves students’ writing. “You can’t ignore it,” said Graham. “We either learn to live with it in useful ways, or we’re going to be very unhappy with it.”

Right now, the researchers are studying how students might converse back-and-forth with ChatGPT like a writing coach in order to understand the feedback and decide which suggestions to use.

In the current study, the researchers didn’t track whether students understood or employed the feedback, but only sought to measure its quality. Judging the quality of feedback is a rather subjective exercise, just as feedback itself is a bundle of subjective judgment calls. Smart people can disagree on what good writing looks like and how to revise bad writing.

In this case, the research team came up with its own criteria for what constitutes good feedback on a history essay. They instructed the humans to focus on the student’s reasoning and argumentation, rather than, say, grammar and punctuation. They also told the human raters to adopt a “glow and grow strategy” for delivering the feedback by first finding something to praise, then identifying a particular area for improvement.

The human raters provided this kind of feedback on hundreds of history essays from 2021 to 2023, as part of an unrelated study of an initiative to boost writing at school . The researchers randomly grabbed 200 of these essays and fed the raw student writing – without the human feedback – to version 3.5 of ChatGPT and asked it to give feedback, too.

At first, the AI feedback was terrible, but as the researchers tinkered with the instructions, or the “prompt,” they typed into ChatGPT, the feedback improved. The researchers eventually settled upon this wording: “Pretend you are a secondary school teacher. Provide 2-3 pieces of specific, actionable feedback on each of the following essays…. Use a friendly and encouraging tone.” The researchers also fed the assignment that the students were given, for example, “Why did the Montgomery Bus Boycott succeed?” along with the reading source material that the students were provided. (More details about how the researchers prompted ChatGPT are explained in Appendix C of the study .)

The humans took about 20 to 25 minutes per essay. ChatGPT’s feedback came back instantly. The humans sometimes marked up sentences by, for example, showing a place where the student could have cited a source to buttress an argument. ChatGPT didn’t write any in-line comments and only wrote a note to the student.

Researchers then read through both sets of feedback – human and machine – for each essay, comparing and rating them. (It was supposed to be a blind comparison test and the feedback raters were not told who authored each one. However, the language and tone of ChatGPT were distinct giveaways, and the in-line comments were a tell of human feedback.)

Humans appeared to have a clear edge with the very strongest and the very weakest writers, the researchers found. They were better at pushing a strong writer a little bit further, for example, by suggesting that the student consider and address a counterargument. ChatGPT struggled to come up with ideas for a student who was already meeting the objectives of a well-argued essay with evidence from the reading source materials. ChatGPT also struggled with the weakest writers. The researchers had to drop two of the essays from the study because they were so short that ChatGPT didn’t have any feedback for the student. The human rater was able to parse out some meaning from a brief, incomplete sentence and offer a suggestion.

In one student essay about the Montgomery Bus Boycott, reprinted above, the human feedback seemed too generic to me: “Next time, I would love to see some evidence from the sources to help back up your claim.” ChatGPT, by contrast, specifically suggested that the student could have mentioned how much revenue the bus company lost during the boycott – an idea that was mentioned in the student’s essay. ChatGPT also suggested that the student could have mentioned specific actions that the NAACP and other organizations took. But the student had actually mentioned a few of these specific actions in his essay. That part of ChatGPT’s feedback was plainly inaccurate.

In another student writing example, also reprinted below, the human straightforwardly pointed out that the student had gotten an historical fact wrong. ChatGPT appeared to affirm that the student’s mistaken version of events was correct.

So how did ChatGPT’s review of my first draft stack up against my editor’s? One of the researchers on the study team suggested a prompt that I could paste into ChatGPT. After a few back-and-forth questions with the chatbot about my grade level and intended audience, it initially spit out some generic advice that had little connection to the ideas and words of my story. It seemed more interested in format and presentation, suggesting a summary at the top and subheads to organize the body. One suggestion would have made my piece too long-winded. Its advice to add examples of how AI feedback might be beneficial was something that I had already done. I then asked for specific things to change in my draft, and ChatGPT came back with some great subhead ideas. I plan to use them in my newsletter, which you can see if you sign up for it here. (And if you want to see my prompt and dialogue with ChatGPT, here is the link.)

My human editor, Barbara, was the clear winner in this round. She tightened up my writing, fixed style errors and helped me brainstorm this ending. Barbara’s job is safe – for now.

Jill Barshay is a senior reporter at The Hechinger Report, where she writes the weekly “Proof Points” column about education research and data. This column was initially published by The Hechinger Report .

Published: June 3, 2024


- Research article

- Open access

- Published: 27 October 2023

AI-generated feedback on writing: insights into efficacy and ENL student preference

- Juan Escalante ORCID: orcid.org/0009-0009-8534-2504 1 ,

- Austin Pack 1 &

- Alex Barrett 2

International Journal of Educational Technology in Higher Education, volume 20, Article number: 57 (2023)


The question of how generative AI tools, such as large language models and chatbots, can be leveraged ethically and effectively in education is ongoing. Given the critical role that writing plays in learning and assessment within educational institutions, it is of growing importance for educators to make thoughtful and informed decisions as to how and in what capacity generative AI tools should be leveraged to assist in the development of students’ writing skills. This paper reports on two longitudinal studies. Study 1 examined learning outcomes of 48 university English as a new language (ENL) learners in a six-week long repeated measures quasi experimental design where the experimental group received writing feedback generated from ChatGPT (GPT-4) and the control group received feedback from their human tutor. Study 2 analyzed the perceptions of a different group of 43 ENLs who received feedback from both ChatGPT and their tutor. Results of study 1 showed no difference in learning outcomes between the two groups. Study 2 results revealed a near even split in preference for AI-generated or human-generated feedback, with clear advantages to both forms of feedback apparent from the data. The main implication of these studies is that the use of AI-generated feedback can likely be incorporated into ENL essay evaluation without affecting learning outcomes, although we recommend a blended approach that utilizes the strengths of both forms of feedback. The main contribution of this paper is in addressing generative AI as an automatic essay evaluator while incorporating learner perspectives.

Introduction

Automated writing evaluation (AWE) systems such as Grammarly and Pigai assist learners and educators in the writing process by providing corrective feedback on learner writing. These systems, and older tools such as spelling and grammar checkers, rely on natural language processing to identify errors and infelicities in writing and suggest improvements. However, with the recent unleashing of highly sophisticated generative pretrained transformer (GPT) large language models (LLMs), such as GPT-4 by OpenAI and PaLM 2 by Google , AWE may be entering a new era.

As Godwin-Jones (2022) pointed out in his treatise on AWE tools in second language writing, GPT-powered programs are capable not only of correcting errors in essays but also of composing essays. Given a simple prompt, generative artificial intelligence (GenAI) LLMs and chatbots that allow users to interface with LLMs, such as ChatGPT and Bard, can produce complete essays that are passable at the university level (Abd-Elaal et al., 2022; Herbold et al., 2023). It is also possible for English as a new language (ENL) writers to use GPT-powered machine translation to turn essays written in their first language (L1) into English essays (Godwin-Jones, 2022), or to take problematic writing and correct any mistakes wholesale, change its tone from informal to academic, or add cohesive elements like discourse markers (Tate et al., 2023). Educators have begun to use AI-powered plagiarism detectors to identify student submissions that were generated by AI, yet AI paraphrasing programs like Quillbot have been found to render AI-generated text undetectable by such tools (Krishna et al., 2023). With millions of users engaging with ChatGPT and other GenAI tools since ChatGPT’s debut in November of 2022, public discourse has speculated on the disruptive and problematic nature of these tools for the field of education (Lampropoulos et al., 2023).

The public reaction to GenAI in education has been diverse. In Fütterer et al.’s (2023) systematic review of popular publications across Australia, New Zealand, the U.K., and the U.S., general sentiment appeared evenly split between positive and negative, but concerns about academic integrity have been raised (Sullivan, 2023), with some educational institutions deciding to ban ChatGPT rather than allow its use (Yang, 2023). The disruption GenAI represents for language education has been likened to the pocket calculator’s impact on math education (Urlaub & Dessein, 2022), when institutions debated between prohibiting the technology and incorporating it by rethinking the educational objectives of math education. The prevailing sentiment on GenAI seems to be that reforms are needed to adapt educational practices to accommodate the technology (Fütterer et al., 2023; Tseng & Warschauer, 2023). However, research is urgently needed so that teachers, students, and instructional designers can appropriately apply GenAI in education (Chiu et al., 2023).

This article represents a step in the direction of better understanding how GenAI might be used in language learning classrooms by examining how language teachers and learners employ it in the writing process. Specifically, we will attempt to investigate the efficacy of using GPT-4 as an AWE tool for generating corrective feedback on student writing and whether students will prefer this feedback over that of a human tutor.

Overview of relevant literature

ChatGPT is a public-facing GenAI chatbot that allows users to interface with LLMs. GenAI chatbots have been trained on a large corpus of language from the Internet to statistically predict the next most probable word in response to a user prompt; these responses are then put through an algorithm of reinforcement learning (OpenAI, 2023a ). From this relatively simple premise these tools can generate, synthesize, or modify natural language to a high degree of sophistication (Elkins & Chun, 2020 ), and are rapidly becoming more sophisticated (Baktash & Dawodi, 2023 ). GenAI has proven capable at a variety of tasks including writing essays or creative texts such as poems or stories, writing or correcting computer programming code, answering questions, summarizing and paraphrasing provided text, and synthesizing disparate tones and styles to generate new and creative text. The vast capabilities and ease of use of GenAI chatbots have led to widespread concerns of the misuse of these tools by students (Yeo, 2023 ).

Educational systems currently rely on student formative and summative writing in assessment and instruction to develop and assess critical thinking, argumentation, synthesis of information, knowledge and competence, and language proficiency (Behizadeh & Engelhard, 2011 ); but the benefits of writing extend in other ways, such as learning about oneself, participating in a community, or simply to occupy free time (Florio & Clark, 1982 ). With writing being a beneficial and critical component of many educational systems, the task of reforming these systems to accommodate GenAI authoring apps seems both daunting and unappealing. Yet the historical lesson of pocket calculators shows that it is equally unappealing to prohibit the technology, or even ignore it (Urlaub & Dessein, 2022 ).

Godwin-Jones ( 2022 ) called for the “thoughtful, informed differentiation in the use and the advocacy of AI-enabled tools, based on situated practice, established goals, and desired outcomes” (p. 13). To address this involved agenda researchers and practitioners need to re-examine educational objectives which, for ENL writing instruction, includes identifying the purpose of writing in the curricula. Writing for placement or other summative writing will have a different objective than process-oriented writing, for instance. How AI-enabled tools can be integrated with these objectives remains unclear.

From a foundation of second language acquisition principles, [Ingley, 2023 ] proposed several practical ways in which GenAI might be used to improve academic writing in ENL contexts. For example, they propose questioning AI-enabled chatbots, and reflecting on output as a way of generating ideas or better understanding a topic versus simply asking the AI to brainstorm a topic for you. They also suggest that AI can help by serving in specific roles (e.g., a conference proposal reviewer, a writing teacher in a writing conference) and organize writing by drafting outlines or by providing feedback on a draft’s organization. Similarly, they propose that feedback on coherence, grammar, vocabulary, and tone can be asked of these AI-tools to help support formative essay writing. Through purposeful prompting, AI-enabled chatbots can act as a more knowledgeable other (John-Steiner & Mahn, 1996 ) that can provide comprehensible input (Krashen, 1982 ) along different stages of the writing process.

Suggestions such as these illustrate how instructors might frame acceptable and unacceptable use of AI-enabled writing tools by learners. Working in concert with AI in a creative and iterative process positions the learner as the driver in the writing process, as opposed to the learner prompting the AI to do all the thinking for them. Identifying and communicating the ethical and appropriate use of AI is an urgent task for practitioners. Since learners have increasingly relied on forms of AI in the writing process for decades, from the red or blue squiggly lines under text in word processors to recommendations on usage and style from Grammarly , they may not question using more enveloping forms of writing assistance.

From the perspective of learners, the use of AI by teachers and institutions may also need to be negotiated in terms of what is appropriate and ethical. Major exams such as the GRE and TOEFL often rely on AI-enabled AWE programs to score large numbers of essays (Elliot & Klobucar, 2013 ), as algorithmic assessment of writing reduces bias and noise and is likely more consistently accurate than the judgments of human experts (Grove et al., 2000 ). But with easily accessed AWE tools like Grammarly , and GenAI tools like ChatGPT , it is simple for any teacher to offload the responsibility of essay evaluation to automated processes (Kumar, 2023 ). Personalized learning through evaluating and giving feedback on essay writing has been identified as a potential strength of GenAI (Chiu et al., 2023 ; Farrokhnia et al., 2023 ; Zhu et al., 2023 ), which can, in turn, help decrease teacher workload (Farrokhnia et al., 2023 ) and prevent teacher burnout. However, teachers will need to make informed decisions regarding if and when to incorporate AWE by consulting learner perceptions and considering the benefit to learning.

Although Grammarly has been shown to be useful as an AWE tool (Fitria, 2021), it is not yet known whether ChatGPT and similar GenAI tools can effectively or reliably be used for this purpose, nor whether learners will accept feedback from these tools. Programs like Grammarly and Pigai are specifically designed for essay evaluation and scoring using latent semantic analysis, a modeling approach that relies on large corpora of essays to determine whether a student’s writing is statistically similar to writing in those corpora in terms of both mechanics and semantics (Shermis et al., 2013). The LLMs that ChatGPT interfaces with, on the other hand, are not trained on a corpus from a specific domain, such as essays, but on text scraped from the Internet. The domain-general nature of the LLMs behind ChatGPT means its efficacy as an AWE tool needs to be researched before it is used as such.

In a recent feasibility study, Dai et al. (2023) used ChatGPT to provide corrective feedback on undergraduate writing. They found the GenAI feedback to be more readable and detailed than instructor feedback while still maintaining high levels of agreement with instructor feedback on certain (but not all) aspects of student writing. Another study that examined ChatGPT for essay evaluation and feedback, by Mizumoto and Eguchi (2023), fed a corpus of 12,100 essays by non-native English writers to ChatGPT and compared rubric-grounded feedback and scores to benchmark levels. Their results showed that ChatGPT was reasonably reliable and accurate. These studies suggest the feasibility and reliability of using GenAI tools like ChatGPT for the purpose of AWE; however, the efficacy of ChatGPT as an AWE tool and student perceptions of it need to be better understood.

GenAI has many known and unknown limitations which need to be considered before using it as an AWE tool. One of the limitations identified by OpenAI itself is the tendency for ChatGPT to produce text that is untruthful and even malicious (OpenAI, 2023a ). ChatGPT does not function as an information retrieving program in the way that internet search engines do, for example, and only produces text that is tailored to the user prompt using the statistically best-fitting combination of words. Considering students’ tendency to accept information from AWE tools without verifying it (Koltovskaia, 2020 ), this suggests a need to teach learners to approach GenAI-produced output critically. Other relevant concerns about the use of GenAI are bias in output and privacy of user data (Derner & Batistič, 2023 ). Although OpenAI is working on solutions to these issues (OpenAI, 2023a ), safeguards are still vulnerable to certain prompting practices (Derner & Batistič, 2023 ).

The accuracy and efficacy of GenAI chatbots rely to some extent on prompt engineering (Strobelt et al., 2023), as well as on which LLM is used (e.g., BERT, GPT-4). According to Zhou et al. (2023), prompt engineering is the practice of optimizing the language of a prompt with the intention of eliciting the best possible performance from LLMs. With prompt engineering, users can guide ChatGPT to desired behaviors by specifying things like task, context, outcome, length, format, and style.

Prompting for optimal AWE application is not yet fully explored. Mizumoto and Eguchi ( 2023 ) used a zero-shot prompting method where scoring samples were not included. Their scoring rubric was inputted in plain text format and they inserted all of their essays (n = 12,100) using a for loop in Python. Dai et al. ( 2023 ) used multi-turn prompting and pasted each essay (n = 103) at the end of each prompt. It may be within the capabilities of ChatGPT to therefore act as an AWE tool and, provided a scoring rubric or other criteria, furnish corrective feedback on student writing without fine-tuning. However, prompt engineering is a nascent science and it is not yet known whether such a practice would produce corrective feedback reliably from LLMs.
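The zero-shot batch approach described above can be sketched roughly as follows. This is a minimal illustration and not the code used by Mizumoto and Eguchi: the rubric text, the `build_prompt` helper, and the `fake_llm` stub are all hypothetical, and the stub stands in for whatever model client a researcher would actually call.

```python
from typing import Callable

# Hypothetical plain-text rubric; the rubric used in the cited study is not reproduced here.
RUBRIC = """Score the essay from 1 (lowest) to 4 (highest) on each criterion:
- Content
- Coherence
- Language use"""


def build_prompt(rubric: str, essay: str) -> str:
    """Zero-shot prompt: rubric and essay only, with no scored sample essays."""
    return (
        "You are an essay rater.\n\n"
        f"Rubric:\n{rubric}\n\n"
        f"Essay:\n{essay}\n\n"
        "Give a score for each criterion and a brief justification."
    )


def rate_essays(essays: list[str], send_to_llm: Callable[[str], str]) -> list[str]:
    """Send one prompt per essay in a plain for loop and collect the replies."""
    replies = []
    for essay in essays:
        replies.append(send_to_llm(build_prompt(RUBRIC, essay)))
    return replies


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline (an assumption)."""
    return f"(model reply to a {len(prompt)}-character prompt)"


if __name__ == "__main__":
    for reply in rate_essays(["First sample essay.", "Second sample essay."], fake_llm):
        print(reply)
```

Passing the model call in as a function keeps the loop independent of any particular provider, so the same sketch could be pointed at a real client without changing the prompt logic.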

Ultimately, the success of any learning technology depends on whether users adopt it. According to Davis’s (1989) seminal paper, the primary influences on user adoption of technology are its perceived ease of use and perceived usefulness. Huawei and Aryadoust’s (2023) review of AWE literature revealed that several studies reported that students and teachers viewed AWE scores negatively compared to scores provided by human raters. However, studies with ENL populations have noted that students often find human feedback to be confusing (Weigle, 2013). One advantage of LLMs is the ability to tailor the output by, for example, asking the chatbot to reiterate feedback in easier-to-understand terms or to explain things further. Roscoe et al.’s (2017) study of student perceptions of AWE described students’ attitudes toward the system as “cautiously positive” (p. 212), which was influenced by presenting the AWE system as helpful, students’ initial expectations of the system, and students’ direct experience with the system feedback. Given the prominence that ChatGPT has garnered, its reputation will likely precede it in the classroom, and students and teachers may not initially trust it as an AWE tool, but whether students and teachers perceive the feedback from ChatGPT as being useful has not yet been studied.

The present study

ChatGPT represents a new technology with a vast array of capabilities in natural language processing. Educators are rushing to understand how this technology can appropriately be incorporated into classrooms, which has inspired new research agendas investigating its limitations and affordances. One avenue of research that is needed is understanding how GenAI can be included in the writing process in a way that is acceptable to both students and teachers. This study intends to examine the efficacy of ChatGPT and GPT-4 as an AWE tool in terms of language improvement and student perceptions in the ENL population. Specifically, this study will be guided by the following research questions.

Does the application of AI-generated feedback result in superior linguistic progress among ENL students compared to those who receive feedback from a human tutor?

Does the preference for AI-generated feedback surpass that for human tutor-generated feedback among ENL students?

Study 1 explored the first research question by means of a six-week longitudinal mixed repeated measures quasi experimental design. Study 2 investigated the second research question through a weekly survey administered over six weeks.

Participants

Both studies were conducted at a small liberal arts university in the Asia–Pacific region during the shortened Spring 2023 semester. A non-probability self-selection method was used to recruit 91 participants who were ENL students enrolled in an academic reading and writing language course. Based on the institution’s English Language Admission Test, students were assessed as having at least a Common European Framework of Reference (CEFR) B1 English proficiency level. Standard ethical procedures were followed, with participants voluntarily consenting to participate. As part of the communication with the participants, it was explicitly stated that their involvement in the study would not be rewarded with additional academic credit.

The first research question was explored in study 1 with a total of 48 ENL students, 21 males and 27 females, ranging in age from 20 to 30 years old. They were divided into two groups: a control group (CG) that received feedback on their assignments from a human tutor, and an experimental group (EG) that was given feedback generated by AI (GPT-4).

To address the second research question, in study 2 a separate group of 43 ENL students, composed of 13 males and 30 females, whose ages ranged from 19 to 36 years, received written feedback on their weekly assignments from both AI and human tutors. The number of complete questionnaire responses varied across the six weeks, from 32 to 41, with an average of 37.7.

Instruments

In study 1, to gauge the linguistic progress among students, the study implemented a pre-and post-test design. A diagnostic writing test administered on the first day of class served as the pretest and a final writing exam served as the posttest. For these assessments, and for a recurring weekly writing task, participants were required to write a 300-word paragraph centered around diverse academic topics discussed in class and integrate sources from readings.

In study 2, to assess student preferences between human and AI-generated feedback, a questionnaire was developed to gather quantitative and qualitative data (see appendix A). Eight five-point Likert scale items, arranged into four pairs, captured various dimensions of feedback preference, including satisfaction, comprehensibility and clarity, helpfulness, and overall preference (e.g., “I am satisfied with the feedback I received from the ENL tutor this week” compared with “I am satisfied with the feedback I received from the AI program this week”). Participants were asked to respond using a scale from “1—Strongly Disagree” to “5—Strongly Agree.” In addition, participants were asked, “If you were to only get one kind of feedback next week, which kind of feedback would you prefer?” A follow-up open-ended question in which participants were asked to provide an explanation for their choice was also included.

OpenAI’s GPT-4 was utilized to generate feedback on student writing for both studies. GPT-4 is a multimodal LLM that can process image and text inputs and produce text outputs. GPT-4 was selected as we found it to provide the most suitable and accurate feedback out of the LLMs we tested. Furthermore, GPT-4 outperformed other LLMs on academic benchmarks, at least at the time of its release (OpenAI, 2023b ) and when this study was conducted.

The prompt sent to ChatGPT to generate feedback on students’ weekly writing consisted of several parts. Two experienced language educators familiar with the course and its assignments and assessments developed the prompt iteratively in line with the prompt-engineering framework presented by [Ingley, 2023 ]. First, GPT-4 was given the role of a professional language teacher who is an expert on providing feedback on the writing of English language learners. Second, the weekly writing prompt that students were given was included. Third, the LLM was instructed to, using simple language, comment on six areas of the students’ writing: the topic sentence, the development of ideas, language that lowers the academic quality of the writing, the use of transitional phrases, the use of sources and evidence, and the grammatical accuracy of the language of the writing. The AI was instructed to put the feedback on grammatical accuracy into a table that organized the following elements: the sentence where the error in the writing is found, the error type, a description of what this kind of error is, and suggestions as to how to address the error. Lastly, the student’s paragraph was copied into the prompt. An example prompt and resultant feedback are given in appendices B and C. A teaching assistant utilized GPT-4 and the prompt described above to generate feedback for each student. Feedback was emailed to students within two working days to ensure students had ample time to read the feedback and incorporate it into their revisions, should they desire to.
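The multi-part prompt described above could be assembled along the following lines. This is only a sketch: the six feedback areas and the four table columns are taken from the description in the text, but the exact wording, the `build_feedback_prompt` helper, and the sample task are hypothetical, and the study's actual prompt (given in its appendices) is not reproduced here.

```python
# Feedback areas and table columns follow the prose description above;
# the phrasing around them is illustrative, not the study's own wording.
FEEDBACK_AREAS = [
    "the topic sentence",
    "the development of ideas",
    "language that lowers the academic quality of the writing",
    "the use of transitional phrases",
    "the use of sources and evidence",
    "the grammatical accuracy of the language",
]

GRAMMAR_TABLE_COLUMNS = [
    "Sentence containing the error",
    "Error type",
    "Description of this kind of error",
    "Suggestion for addressing the error",
]


def build_feedback_prompt(writing_task: str, student_paragraph: str) -> str:
    """Assemble the prompt in the order described: role, task, instructions, paragraph."""
    role = (
        "You are a professional language teacher and an expert at giving "
        "feedback on the writing of English language learners."
    )
    areas = "\n".join(f"{n}. {area}" for n, area in enumerate(FEEDBACK_AREAS, start=1))
    instructions = (
        "Using simple language, comment on these areas of the student's writing:\n"
        f"{areas}\n"
        "Present the feedback on grammatical accuracy as a table with the columns: "
        + " | ".join(GRAMMAR_TABLE_COLUMNS)
        + "."
    )
    parts = [
        role,
        f"Writing task given to the student:\n{writing_task}",
        instructions,
        f"Student's paragraph:\n{student_paragraph}",
    ]
    return "\n\n".join(parts)


if __name__ == "__main__":
    # Both arguments are made-up placeholders for illustration.
    print(build_feedback_prompt(
        "Hypothetical task: explain one cause of urban air pollution, citing a class reading.",
        "Hypothetical student paragraph goes here.",
    ))
```

Keeping the areas and table columns in lists makes it easy to see at a glance what the model is being asked to cover, and to adjust one part of the prompt without rewriting the rest.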

Data collection procedures

In study 1, students completed the pretest in the first week of the semester. For the next six weeks these students completed a weekly writing assignment. Each week students wrote a 300-word paragraph on a provided topic related to the material in class, received feedback either from a human tutor (CG) or AI (EG), revised, and submitted a final draft. Human tutors were paid, trained English language tutors from the university’s ENL Tutor Program and certified by the College Reading & Learning Association. Participants in the CG held one 30-min one-on-one tutoring session per week with the same tutor for the duration of the study. The EG was required to submit an initial draft of their writing assignment each week. These submissions were reviewed to remove any identifying information. We then utilized GPT-4 to generate individualized feedback for each student, which was subsequently sent to them via email. Upon receiving and reviewing the AI-generated feedback, students made revisions to their writing and submitted their final drafts. In week 8, the final week of the semester, these students completed the posttest. Both pre- and posttests were independently rated by two experienced academic English language instructors. An analytic rubric assessing four key writing areas, namely content, coherence, language use, and sources and evidence, was used to assess students’ writing in the pre- and posttest. Each category was scored individually on a scale of four, with descriptors outlining the performance at each level. Scores for each criterion varied from 1 to 4, with 1 representing an "Initial" level of performance and 4 representing a "Highly Developed" level of performance. The maximum achievable score on the rubric was 40. Inter-rater reliability was assessed by calculating intraclass correlation coefficients (ICC). These values indicated excellent inter-rater reliability for the CG and EG pretests (0.932, p < 0.001; 0.919, p < 0.001), as well as good reliability for the CG and EG posttests (0.877, p < 0.001; 0.816, p < 0.001).

In study 2, participants received feedback from a human tutor and from AI (GPT-4) on their weekly writing assignments. These human tutors were unaware that the participants were also receiving AI-generated feedback. The preference survey was distributed to participants via Qualtrics, once a week after students had completed their weekly writing assignment.

Data analysis procedures

To explore the first research question in study 1, a repeated-measures analysis of variance (RM-ANOVA) was conducted using SPSS 28. Using a general linear model, the time of the tests (pretest [T1] and posttest [T2]) served as a within-subjects variable, with the group serving as a between-subjects variable (experimental group or EG, and control group or CG). Missing data, outliers, and possible statistical assumption violations were examined. The scores of one student who only completed the posttest were not included in the analysis. Shapiro–Wilk test results suggested the data were normally distributed, except for the posttest control group (0.833, p < 0.001). Levene’s test of equality of error variances indicated homogeneity of variance for both pre- (p = 0.888) and posttest (p = 0.938). RM-ANOVA results reported below follow a Greenhouse–Geisser correction. Lastly, two independent samples t-tests were conducted to explore potential differences in means at T1 and T2 to see if there were any significant differences in proficiency levels between the two groups before and after the treatment.

To explore the second research question in study 2, descriptive statistics were calculated for questions 1–9 for each week. Three researchers independently performed a thematic analysis of the qualitative data and then consulted on salient themes found in the data.

Relating to RQ1 in Study 1

Descriptive statistics for the EG and CG scores for the pre- (T1) and posttest (T2), along with results of the Shapiro–Wilk tests, are reported in Table 1. Both groups made similar progress in their academic writing over the six-week-long treatment, as indicated by the increase in mean scores for the EG (5.848) and CG (6.86).

The results of the mixed 2 × 2 RM-ANOVA analysis (Table 2) revealed there was no significant interaction effect between group and time (F = 3.094, p = 0.085, ηp² = 0.063). The effect size of the difference (ηp²) signifies that this two-way interaction accounts for 6.3% of the variance in scores. The test of between-subjects effects revealed no significant difference between the EG and CG (F = 0.241, p = 0.626, ηp² = 0.005), indicating that the method of providing feedback (human tutor or AI) did not have a significant effect on students’ posttest scores; the between-subjects variable (group) only accounted for 0.5% of the variance.
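As a rough check on how the reported effect sizes relate to the F statistics, partial eta squared can be recovered with the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below assumes 1 effect degree of freedom and 46 error degrees of freedom. The error degrees of freedom are not stated in the text; 46 is an assumption, inferred from a two-group design with 48 participants, and it happens to reproduce the reported values.

```python
def partial_eta_squared(f_stat: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)


DF_EFFECT = 1   # two groups, or two time points
DF_ERROR = 46   # ASSUMPTION: not reported in the text (48 participants - 2 groups)

interaction = partial_eta_squared(3.094, DF_EFFECT, DF_ERROR)
group = partial_eta_squared(0.241, DF_EFFECT, DF_ERROR)

print(f"group x time interaction: {interaction:.3f} ({interaction:.1%} of variance)")
# group x time interaction: 0.063 (6.3% of variance)
print(f"between-subjects group:   {group:.3f} ({group:.1%} of variance)")
# between-subjects group:   0.005 (0.5% of variance)
```

Multiplying ηp² by 100 gives the "percentage of variance accounted for" figures quoted in the paragraph.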

Independent samples t-tests of the between-subjects variable at T1 and T2 are reported in Table 3. No significant difference was found between EG and CG means in the pre- and posttest, suggesting no significant difference in writing proficiency between the groups at either time of measurement.

Relating to RQ2 in Study 2

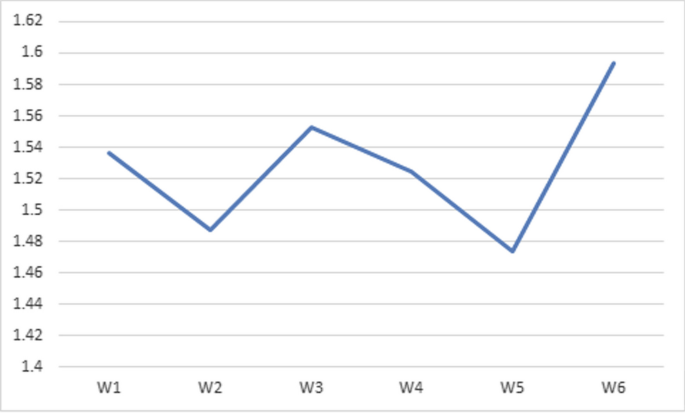

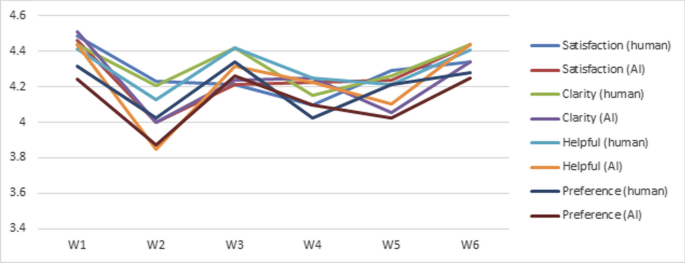

The descriptive statistics for question items 1–9 of the weekly preference survey are shown in Appendix A. The data suggest a near even split in preferences. Question 9, for example, asked, “If you were to only get one kind of feedback next week, which kind of feedback would you prefer?” Figure 1 shows the slight fluctuations in mean responses to this question, with a value of 1.5 indicating an even split in preference. The six-week average of the number of students that preferred feedback from human tutors was 18, which was slightly lower than the average of those that preferred AI-generated feedback (19.667), although this may be due to fewer students completing the survey in the final week. The means for human tutor feedback (H) over the entire six weeks were slightly higher in each pair of items: satisfaction (Q1&2), H = 4.277, AI = 4.262; clarity (Q3&4), H = 4.319, AI = 4.236; helpfulness (Q5&6), H = 4.305, AI = 4.228; and preference (Q7&8), H = 4.2, AI = 4.126. Given how close each pair of means was, and to further explore the data, students were grouped according to how they answered Q9 in week six, and a t-test comparing the mean responses of these two groups for each item was conducted. All differences in means between these groups were nonsignificant, further suggesting that student preference was equally split.

Fig. 1 Mean responses to item 9, where 1 equals preference for human feedback and 2 equals preference for AI feedback

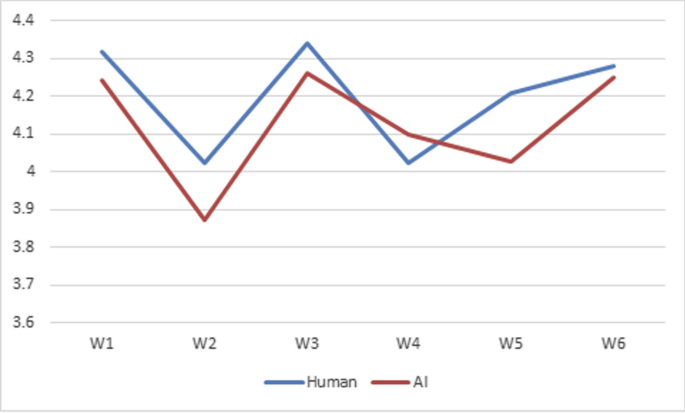
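Because item 9 is coded 1 (human tutor) or 2 (AI), its mean equals 1 plus the proportion of respondents choosing AI, which is why a value of 1.5 marks an even split. The following sketch applies this to the six-week average counts reported above (18 and 19.667). Treating those averages as if they were a single week's counts is a simplifying assumption, so the result only approximates the weekly means plotted in Fig. 1; the function names are illustrative.

```python
def item9_mean(n_human: float, n_ai: float) -> float:
    """Mean of an item coded 1 = human tutor, 2 = AI, given the count for each choice."""
    total = n_human + n_ai
    return (1 * n_human + 2 * n_ai) / total


def ai_share(mean_response: float) -> float:
    """Proportion preferring AI implied by a mean on the 1-2 coding."""
    return mean_response - 1


# Six-week average counts from the text, used here as if they were one week's counts.
mean = item9_mean(18, 19.667)
print(f"mean = {mean:.3f}, AI share = {ai_share(mean):.1%}")
```

With these inputs the mean lands slightly above 1.5, consistent with the marginally larger average number of students choosing AI-generated feedback.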

Line graphs of items 1–8 (Fig. 2) and of each pair of items (Figs. 3, 4, 5, 6) show two trends. First, preference for human tutor feedback is generally slightly higher. Second, means were highest in weeks one, three, and six, with slight dips in weeks two, four, and five. It is important to note, however, that the means for items Q1–8 in all weeks, except for Q6&8 in week two, were above 4, suggesting that students generally perceived both forms of feedback as being of value.

Fig. 2 Means of items 1–8

Fig. 3 Means of items 1 and 2 relating to satisfaction with feedback

Fig. 4 Means of items 3 and 4 relating to clarity

Fig. 5 Means of items 5 and 6 relating to helpfulness

Fig. 6 Means of items 7 and 8 relating to preference

An analysis of the qualitative data did not yield any insights into why the means of items 1–8 dipped in weeks two, four, and five. It may be that the writing topics proved more difficult in these weeks, or that students did not get as much out of the human or AI-generated feedback because of other pressures in their academic and/or personal lives, such as having unit tests during these weeks.

There were several recurring themes in the qualitative data as to why some students preferred receiving feedback from a human tutor. Perhaps the most prominent were the affordances of sitting down face to face and interacting with a human. For some, this was viewed as beneficial because they found personal interaction to be “more engaging” and “a fun way to learn” when compared to just reading through AI-generated feedback. Some cited the ability to ask follow-up questions and get immediate feedback as being instrumental. Others noted that interacting with a human tutor allowed them to develop their writing and speaking skills at the same time. One student observed that “AI comment is super helpful, but personal characteristic [sic] could be missing after AI revision”, suggesting that reliance on AI might unintentionally result in the erasure of the personal voice of the writer.

As for those students who preferred receiving AI-generated feedback, the clarity, understandability, consistency, and specificity of the feedback, especially with regard to academic writing style and vocabulary, were common themes found in the data. Several students commented on how the AI generated detailed feedback on errors in academic writing. For example, one student wrote “the AI program provides me with concrete feedback and easy-to-understand documentation of where the errors were.” Another noted “The AI feedback was so accurate and it also suggested academic words that I could use in place of the words that I used which was not very academic.” Several students commented that AI does not have constraints of time or availability, and that students can review the feedback whenever they want: “AI gives me correct and accurate feedback on every sentence, no matter the time. However, [ENL] tutors have limited time and can only get limited feedback.”

Several students highlighted the advantages of both forms of feedback. For example, one student wrote “I prefer to have both. The reason why I like my [ENL] tutor, is because I think interaction helps my brain to learn and focus, so I could improve and progress. On the other hand, the AI also was very helpful, it pointed out the problems precisely and clear to understand. I would say they are a good combination for students.” Another student wrote “If there was a question whether to receive feedback from both [ENL] tutors and AI program I would say yes because I would be able to learn more from both”.

Discussion and conclusion

Study 1 compared human tutor and AI-generated feedback to see if one would influence linguistic gains more than the other. The results indicate that AI-generated feedback did not result in superior linguistic progress among ENL students compared to those who received feedback from a human tutor. The between-subjects variable of group did not have a significant effect on writing scores, suggesting that neither method of feedback was better than the other in terms of scores.

While the study found that AI-generated feedback did not lead to superior linguistic progress among ENL students compared to human tutor feedback, it is important to consider the potential time-saving benefits that AI-generated feedback offers educators. Using AI to provide feedback could substantially reduce the time teachers spend reviewing and responding to each student's assignment, thereby freeing up valuable time for other tasks. Furthermore, the time efficiency of AI-generated feedback can be particularly advantageous in large classes, where providing individualized feedback is logistically challenging and time-consuming for the instructor.

Study 2 investigated which form of feedback ENL students preferred and why. We found that about half the students preferred receiving feedback from a human tutor, and half preferred AI-generated feedback. Those who preferred sitting down and discussing their feedback with a tutor cited the face-to-face interaction as having affective benefits, such as increasing engagement, as well as benefits for developing their speaking abilities. Those who preferred AI-generated feedback primarily cited the clarity and specificity of the feedback as being useful for improving their writing. This echoes the findings of Dai et al. (2023), namely that AI-generated feedback was found to be more readable and detailed than feedback from an instructor.

We offer several suggestions as to how AI-generated feedback might be better incorporated into practice. In this study, because students were emailed the feedback generated by the AI, they had no opportunity to ask the AI follow-up questions. This was because we wanted to limit students' access to the AI and prevent potential misuse, such as students asking the AI to write their assignments for them. However, providing opportunities for students to ask the AI follow-up questions may lead to a greater preference for AI-generated feedback.

In light of the major findings highlighted above, we believe a mixed approach to providing feedback may be most beneficial for both language educators and students. By utilizing GenAI, language educators may be able to produce more detailed feedback in a shorter amount of time for each individual learner. Providing opportunities for students to discuss AI-generated feedback with a human tutor and ask follow-up questions affords students the benefits of each modality, namely the clarity and specificity of the AI-generated feedback, and the benefits of interacting with another human, such as engagement and the ability to practice speaking.

With AI industry leaders predicting that artificial capable intelligence, able to perform day-to-day tasks, will be available in two years (Suleyman, 2023), and that super artificial intelligence may arrive this decade (Leike & Sutskever, 2023), it is of growing importance that practitioners in the field of language education become more familiar with this rapidly changing technology, its potential uses, and how it may drastically influence and personalize language education in the future.

Currently, GPT-4 is not optimized for AWE purposes, and therefore features like text annotation, common in existing AWE programs, are cumbersome to reproduce. However, some established AWE programs have begun to integrate GPT technology (e.g., GrammarlyGO). It is likely only a matter of time before large language models exist that have been fine-tuned and optimized for language learning and teaching purposes, including assessing writing. Furthermore, as the public gets increased access to these models (e.g., through application programming interfaces, or APIs), GenAI will likely be woven into the learning management systems commonly used in educational institutions, thereby becoming a more integral part of the practices of educators and students.

A potentially fruitful avenue of research would be to examine how the proficiency levels of students affect their ability to understand and learn from AI-generated feedback. Furthermore, investigating GenAI’s ability to reliably assess and score writing would be insightful. Lastly, in a similar vein to this study, further research could focus on investigating the efficacy of AI-generated feedback on native English-speaking students.

In addition, we encourage language educators to consider the following questions:

What aspects of the language learning process are best performed by GenAI, now and in the future?

What aspects of the language learning process are best performed by humans, now and in the future?

As GenAI becomes more capable and prevalent, what skills will become more important for language educators to cultivate?

To conclude, while admittedly there are a number of vehicles for personalized learning, the potential of GenAI in this area merits further attention. As GenAI continues to be developed and permeate the sphere of language education, it becomes imperative to ensure a balanced approach, one that capitalizes on its strengths while duly recognizing the indispensable contributions of human pedagogy. The endeavor of comprehending and assessing the capabilities of GenAI, along with its potential influence on language learning and teaching, is arguably now of paramount importance.

Availability of data and materials

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- AI: Artificial intelligence
- ENL: English as a new language
- GPT-4: Generative Pretrained Transformer 4 (from OpenAI)
- ChatGPT: A specific chatbot interface for the GPT models
- AWE: Automated writing evaluation
- LLMs: Large language models
- GenAI: Generative Artificial Intelligence
- A language model from Google
- GRE: Graduate record examination
- TOEFL: Test of English as a Foreign Language
- BERT: Bidirectional Encoder Representations from Transformers (another type of language model)

Abd-Elaal, E.-S., Gamage, S., & Mills, J. (2022). Assisting academics to identify computer generated writing. European Journal of Engineering Education. https://doi.org/10.1080/03043797.2022.2046709


Baktash, J. A., & Dawodi, M. (2023). GPT-4: A review on advancements and opportunities in natural language processing. [Preprint in arXiv]. https://doi.org/10.48550/arXiv.2305.03195

Behizadeh, N., & Engelhard, G., Jr. (2011). Historical view of the influences of measurement and writing theories on the practice of writing assessment in the United States. Assessing Writing, 16 (3), 189–211. https://doi.org/10.1016/j.asw.2011.03.001

Chiu, T. K. F., Xia, Q., Zhou, X., Chai, C. S., & Cheng, M. (2023). Systematic literature review on opportunities, challenges, and future research recommendations of artificial intelligence in education. Computers and Education: Artificial Intelligence. https://doi.org/10.1016/j.caeai.2022.100118

Dai, W., Lin, J., Jin, F., Li, T., Tsai, Y.-S., Gašević, D., & Chen, G. (2023). Can large language models provide feedback to students? A case study on ChatGPT. [Preprint from EdArXiv]. https://doi.org/10.35542/osf.io/hcgzj

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 319–340.

Derner, E., & Batistič, K. (2023). Beyond the safeguards: Exploring the security risks of ChatGPT. [Preprint in arXiv], abs/2305.08005. https://doi.org/10.48550/arXiv.2305.08005

Elkins, K., & Chun, J. (2020). Can GPT-3 pass a writer’s Turing Test? Journal of Cultural Analytics. https://doi.org/10.22148/001c.17212

Elliot, N., & Klobucar, A. (2013). Automated essay evaluation and the teaching of writing. In M. D. Shermis & J. Burstein (Eds.), The Handbook of automated essay evaluation: Current applications and new directions. Routledge.

Farrokhnia, M., Banihashem, S. K., Noroozi, O., & Wals, A. (2023). A SWOT analysis of ChatGPT: Implications for educational practice and research. Innovations in Education and Teaching International. https://doi.org/10.1080/14703297.2023.2195846

Fitria, T. N. (2021). Grammarly as AI-powered English writing assistant: Students' alternative for writing English. Metathesis, 5(1), 65–78. https://doi.org/10.31002/metathesis.v5i1.3519

Florio, S., & Clark, C. M. (1982). The functions of writing in an elementary classroom. Research in the Teaching of English, 16(2), 115–130.


Fütterer, T., Fischer, C., Alekseeva, A., Chen, X., Tate, T., Warschauer, M., & Gerjets, P. (2023). ChatGPT in education: Global reactions to AI innovations. Research Square. https://doi.org/10.21203/rs.3.rs-2840105/v1

Godwin-Jones, R. (2022). Partnering with AI: Intelligent writing assistance and instructed language learning. Language Learning & Technology, 26(2), 5–24.

Grove, W. M., Zald, D. H., Lebow, B. S., Snitz, B. E., & Nelson, C. (2000). Clinical versus mechanical prediction: A meta-analysis. Psychological Assessment. https://doi.org/10.1037//1040-3590.12.1.19

Herbold, S., Hautli-Janisz, A., Heuer, U., Kikteva, Z., & Trautsch, A. (2023). AI, write an essay for me: A large-scale comparison of human-written versus ChatGPT-generated essays. [Preprint in arXiv], abs/2304.14276. https://doi.org/10.48550/arXiv.2304.14276

Huawei, S., & Aryadoust, V. (2023). A systematic review of automated writing evaluation systems. Education and Information Technologies, 28, 771–795. https://doi.org/10.1007/s10639-022-11200-7

Ingley, S. J., & Pack, A. (2023). Leveraging AI tools to develop the writer rather than the writing. Trends in Ecology & Evolution, 38(9), 785–787. https://doi.org/10.1016/j.tree.2023.05.007

John-Steiner, V., & Mahn, H. (1996). Sociocultural approaches to learning and development: A Vygotskian framework. Educational Psychologist, 31(3–4), 191–206. https://doi.org/10.1080/00461520.1996.9653266

Koltovskaia, S. (2020). Student engagement with automated written corrective feedback (AWCF) provided by Grammarly: A multiple case study. Assessing Writing. https://doi.org/10.1016/j.asw.2020.100450

Krashen, S. D. (1982). Principles and practice in second language acquisition. Pergamon Press Inc.