Problem Solving in STEM

Solving problems is a key component of many science, math, and engineering classes. If a goal of a class is for students to emerge with the ability to solve new kinds of problems or to use new problem-solving techniques, then students need numerous opportunities to develop the skills necessary to approach and answer different types of problems. Problem solving during section or class allows students to develop their confidence in these skills under your guidance, better preparing them to succeed on their homework and exams. This page offers advice about strategies for facilitating problem solving during class.

How do I decide which problems to cover in section or class?

In-class problem solving should reinforce the major concepts from the class and provide the opportunity for theoretical concepts to become more concrete. If students have a problem set for homework, then in-class problem solving should prepare students for the types of problems that they will see on their homework. You may wish to include some simpler problems both in the interest of time and to help students gain confidence, but it is ideal if the complexity of at least some of the in-class problems mirrors the level of difficulty of the homework. You may also want to ask your students ahead of time which skills or concepts they find confusing, and include some problems that are directly targeted to their concerns.

You have given your students a problem to solve in class. What are some strategies to work through it?

- Try to give your students a chance to grapple with the problems as much as possible. Offering them the chance to do the problem themselves allows them to learn from their mistakes in the presence of your expertise as their teacher. (If time is limited, they may not be able to get all the way through multi-step problems, in which case it can help to prioritize giving them a chance to tackle the most challenging steps.)

- When you do want to teach by solving the problem yourself at the board, talk through the logic of how you choose to apply certain approaches to solve certain problems. This way you can externalize the type of thinking you hope your students internalize when they solve similar problems themselves.

- Start by setting up the problem on the board (e.g., you might write down key variables and equations, or draw a figure illustrating the question). Ask students to start solving the problem, either independently or in small groups. As they work on the problem, walk around to hear what they are saying and see what they are writing down. If several students seem stuck, it might be a good idea to bring the whole class together again to clarify any confusion. After students have made progress, bring everyone back together and have students guide you as to what to write on the board.

- It can help to first ask students to work on the problem by themselves for a minute, and then get into small groups to work on the problem collaboratively.

- If you have ample board space, have students work in small groups at the board while solving the problem. That way you can monitor their progress by standing back and watching what they put up on the board.

- If you have several problems you would like students to practice, but not enough time for everyone to do all of them, you can assign different groups of students to work on different (but related) problems.

When do you want students to work in groups to solve problems?

- Don’t ask students to work in groups for straightforward problems that most students could solve independently in a short amount of time.

- Do have students work in groups for thought-provoking problems, where students will benefit from meaningful collaboration.

- Even in cases where you plan to have students work in groups, it can be useful to give students some time to work on their own before collaborating with others. This ensures that every student engages with the problem and is ready to contribute to a discussion.

What are some benefits of having students work in groups?

- Students bring different strengths, different knowledge, and different ideas for how to solve a problem; collaboration can help students work through problems that are more challenging than they might be able to tackle on their own.

- In working in a group, students might consider multiple ways to approach a problem, thus enriching their repertoire of strategies.

- Students who think they understand the material will gain a deeper understanding by explaining concepts to their peers.

What are some strategies for helping students to form groups?

- Instruct students to work with the person (or people) sitting next to them.

- Count off (e.g., 1, 2, 3, 4; all the 1s find each other and form a group, and so on).

- Hand out playing cards; students need to find the person with the same number card. (There are many variants of this. For example, you can print pairs of images that go together, such as rain and an umbrella; each person gets a card and needs to find their partner or partners.)

- Based on what you know about the students, assign groups in advance. List the groups on the board.

- Note: Always have students take the time to introduce themselves to each other in a new group.
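Counting off is a simple rule: the student in position p (starting from 0) calls out (p mod k) + 1, where k is the number of groups. A minimal Python sketch of that rule, assuming the roster is listed in seating order; the function name `count_off` and the student names are hypothetical, for illustration only.

```python
def count_off(roster, num_groups):
    """Split a roster into groups the way counting off does: 1, 2, ..., k, 1, 2, ..."""
    if num_groups < 1:
        raise ValueError("num_groups must be at least 1")
    groups = {number: [] for number in range(1, num_groups + 1)}
    for position, student in enumerate(roster):
        # The student at this position calls out this number; counting wraps after num_groups.
        number = position % num_groups + 1
        groups[number].append(student)
    return groups


# Hypothetical roster in seating order.
roster = ["Ana", "Ben", "Chen", "Dee", "Eli", "Fay", "Gus", "Hana"]
for number, members in count_off(roster, 4).items():
    print(number, members)
```

With eight students and four numbers this prints four groups of two, starting with `1 ['Ana', 'Eli']`. Because neighbors call out consecutive numbers, students sitting next to each other end up in different groups whenever there are at least two groups.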

What should you do while your students are working on problems?

- Walk around and talk to students. Observing their work gives you a sense of what people understand and what they are struggling with. Answer students’ questions, and ask them questions that lead in a productive direction if they are stuck.

- If you discover that many people have the same question—or that someone has a misunderstanding that others might have—you might stop everyone and discuss a key idea with the entire class.

After students work on a problem during class, what are strategies to have them share their answers and their thinking?

- Ask for volunteers to share answers. Depending on the nature of the problem, students might provide answers verbally or by writing on the board. As a variant, for questions where a variety of answers are relevant, ask for at least three volunteers before anyone shares their ideas.

- Use online polling software for students to respond to a multiple-choice question anonymously.

- If students are working in groups, assign reporters ahead of time. For example, the person with the next birthday could be responsible for sharing their group’s work with the class.

- Cold call. To reduce student anxiety about cold calling, it can help to identify students who seem to have the correct answer as you walk around the class checking in on their progress on the assigned problem. You may even want to warn the student ahead of time: "This is a great answer! Do you mind if I call on you when we come back together as a class?"

- Have students write an answer on a notecard that they turn in to you. If your goal is to understand whether students in general solved a problem correctly, the notecards could be submitted anonymously; if you wish to assess individual students’ work, you would want to ask students to put their names on their notecard.

- Use a jigsaw strategy: rearrange groups so that each new group is composed of people who came from different initial groups and had solved different problems. Students are now responsible for teaching the other members of their new group how to solve their problem.

- Have a representative from each group explain their problem to the class.

- Have a representative from each group draw or write the answer on the board.
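The jigsaw regrouping described above can be sketched as a round-robin deal. This is a minimal Python sketch under assumptions of our own: each initial group worked on exactly one problem, and the number of new groups equals the size of the smallest initial group, so that every new group receives at least one student who solved each problem. The function `jigsaw` and all names are hypothetical.

```python
def jigsaw(original_groups):
    """Regroup students so each new group holds at least one member from every original group.

    original_groups maps a problem label to the students who solved that problem.
    Returns a list of new groups, each a list of (student, problem) pairs.
    """
    if not original_groups or any(not members for members in original_groups.values()):
        raise ValueError("need at least one original group, and none may be empty")
    # The smallest original group limits how many new groups can each receive every problem.
    num_new = min(len(members) for members in original_groups.values())
    new_groups = [[] for _ in range(num_new)]
    for problem, members in original_groups.items():
        for i, student in enumerate(members):
            # Deal members out round-robin; extras from larger groups double up on a problem.
            new_groups[i % num_new].append((student, problem))
    return new_groups


# Hypothetical example: three groups, each of which solved a different problem.
original = {
    "Problem A": ["Ana", "Ben", "Chen"],
    "Problem B": ["Dee", "Eli", "Fay"],
    "Problem C": ["Gus", "Hana", "Ivy"],
}
for group in jigsaw(original):
    print(group)
```

Sizing the new groups by the smallest original group is one design choice among several; it guarantees full coverage of every problem at the cost of some new groups having two students who worked on the same problem when the original groups are uneven.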

What happens if a student gives a wrong answer?

- Ask for their reasoning so that you can understand where they went wrong.

- Ask if anyone else has other ideas. You can also ask this sometimes when an answer is right.

- Cultivate an environment where it’s okay to be wrong. Emphasize that you are all learning together, and that you learn through making mistakes.

- Do make sure that you clarify what the correct answer is before moving on.

- Once the correct answer is given, go through some answer-checking techniques that can distinguish between correct and incorrect answers. This can help prepare students to verify their future work.

How can you make your classroom inclusive?

- The goal is that everyone is thinking, talking, and sharing their ideas, and that everyone feels valued and respected. Use a variety of teaching strategies (independent work and group work; allow students to talk to each other before they talk to the class). Create an environment where it is normal to struggle and make mistakes.

- See Kimberly Tanner’s article on strategies to promote student engagement and cultivate classroom equity.

A few final notes…

- Make sure that you have worked all of the problems and also thought about alternative approaches to solving them.

- Board work matters. You should have a plan beforehand of what you will write on the board, where, when, what needs to be added, and what can be erased when. If students are going to write their answers on the board, you need to also have a plan for making sure that everyone gets to the correct answer. Students will copy what is on the board and use it as their notes for later study, so correct and logical information must be written there.

For more information...

Tipsheet: Problem Solving in STEM Sections

Tanner, K. D. (2013). Structure matters: twenty-one teaching strategies to promote student engagement and cultivate classroom equity. CBE-Life Sciences Education, 12(3), 322-331.

The Problem Solving Approach in Science Education

Have you ever wondered how science, with its vast array of facts and figures, becomes so deeply integrated into our understanding of the world? It isn’t just about memorizing data; it’s about engaging with problems and seeking solutions through a systematic approach. This is where the problem-solving approach in science education takes the spotlight. It transforms passive listeners into active participants, nurturing the next generation of critical thinkers and innovators.

What is the Problem-Solving Approach?

At its core, the problem-solving approach is a student-centered method that encourages learners to tackle scientific problems with curiosity and rigor. It isn’t just a teaching strategy; it’s a journey that begins with recognizing a problem and ends with reaching a conclusion through investigation and reasoning.

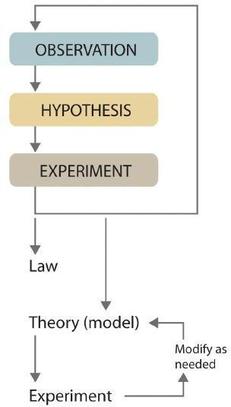

Step 1: Identifying the Problem

Every scientific journey begins with a question. In the classroom, this means fostering an environment where students are prompted to observe phenomena and articulate their curiosities in the form of clear, concise problems. This might look like a teacher demonstrating an unexpected result in an experiment and asking students to ponder why it occurred.

Step 2: Gathering Information

Once the problem is set, the next step is to gather relevant information. Here, students exercise their research skills, looking through textbooks, scientific journals, and credible internet sources to understand the context of their problem. They learn to differentiate between reliable and unreliable information—a skill with far-reaching implications.

Step 3: Formulating Hypotheses

Armed with information, students then formulate hypotheses. A hypothesis is an educated guess that can be tested through experiments. Encouraging learners to come up with their hypotheses promotes creativity and ownership of the learning process.

Step 4: Conducting Experiments

What sets science apart is its reliance on empirical evidence. In this step, students design and conduct experiments to test their hypotheses. They learn about controls, variables, and the importance of replicability. This hands-on experience is invaluable and often the most engaging part of the approach.

Step 5: Analyzing Data

After the experiment comes the analysis. Students examine their results, often using statistical methods, to see whether the data support or refute their hypotheses. This is where critical thinking is paramount, as they must interpret the data without bias.
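As one illustration of the kind of statistical method this step can involve, here is a minimal Python sketch, assuming a simple two-group experiment (a treatment group and a control group) and Welch's t statistic as the chosen test. The plant-height numbers and the function name `welch_t` are hypothetical, made up for illustration; the sketch uses only the standard `statistics` and `math` modules.

```python
import math
import statistics


def welch_t(treatment, control):
    """Welch's t statistic comparing the means of two independent samples.

    A large positive value suggests the treatment mean is higher than the
    control mean by more than sampling noise alone would usually produce.
    Converting t into a p-value needs the t distribution, which is not done here.
    """
    if len(treatment) < 2 or len(control) < 2:
        raise ValueError("each group needs at least two measurements")
    mean_diff = statistics.mean(treatment) - statistics.mean(control)
    # Standard error of the difference, using each group's own sample variance.
    se = math.sqrt(statistics.variance(treatment) / len(treatment)
                   + statistics.variance(control) / len(control))
    if se == 0:
        raise ValueError("both groups have zero variance; t is undefined")
    return mean_diff / se


# Hypothetical plant heights (cm) with and without fertilizer.
with_fertilizer = [12.1, 13.4, 12.8, 14.0, 13.2]
without_fertilizer = [11.0, 11.8, 12.2, 11.5, 11.9]
print(round(welch_t(with_fertilizer, without_fertilizer), 2))
```

The statistic compares the difference in group means to the variability within each group. Whether a given value counts as support for the hypothesis still depends on a chosen significance level and the t distribution, which a spreadsheet or statistics package can supply.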

Step 6: Drawing Conclusions

The final step in the process is drawing conclusions. Here, students evaluate the entirety of their work and determine the implications of their findings. Whether their hypotheses were supported or not, they gain insights into the scientific process and develop the ability to argue their conclusions based on evidence.

The Benefits of Problem Solving in Science Education

This methodology goes beyond knowledge acquisition; it’s about instilling a scientific mindset. Let’s explore how this approach benefits learners:

Develops Higher-Order Thinking Skills

By grappling with complex problems, students develop higher-order thinking skills such as analysis, synthesis, and evaluation. These skills are vital not only in science but also in everyday decision-making.

Encourages Active Learning

Active engagement in learning through problem-solving keeps students invested in their education. They’re not passive receivers of information but active participants in their learning journey.

Promotes Autonomy and Confidence

As students navigate through problems on their own, they build autonomy and confidence in their ability to tackle challenges. This self-assurance can translate to various aspects of their lives.

Fosters a Deeper Understanding of Scientific Principles

By connecting theoretical knowledge to practical problems, students develop a more nuanced understanding of scientific principles. It’s one thing to read about a concept; it’s another to see it in action.

Improves Collaboration Skills

Problem-solving often involves teamwork, allowing students to improve their collaborative skills. They learn to communicate ideas, share tasks, and respect different viewpoints.

Enhances Persistence and Resilience

Not every experiment will go as planned, and not every hypothesis will be correct. Navigating these challenges teaches learners persistence and resilience, qualities that are essential in science and in life.

Bringing Problem Solving Into the Classroom

Integrating the problem-solving approach into science education requires careful planning and a shift in mindset. Teachers become facilitators rather than lecturers, guiding students through the process and providing support when needed. Classrooms become active learning environments where mistakes are seen as learning opportunities.

The problem-solving approach in science education is more than a teaching strategy; it’s a blueprint for developing curious, independent, and analytical thinkers. By engaging learners in this manner, we’re not just teaching them science; we’re equipping them with the tools to solve the complex problems of tomorrow.

What do you think? How can we further encourage problem-solving skills in students from an early age? Do you believe that the problem-solving approach should be applied to other subjects beyond science? Share your thoughts and experiences with this dynamic educational strategy.

Pedagogy of Science

1 Science – Perspectives and Nature

- Understanding Science

- Myths about Nature of Science

- Understanding Nature of Science

- Domains of Science

2 Aims and Objectives of Science Teaching-Learning

- Aims of Science Education

- Objectives of Science Teaching-Learning

- Developing Learning Objectives

- Shift in Pedagogic Approach

3 Process Skills in Science

- Process Skills in Science

- Basic Process Skills in Science

- Developing Scientific Attitude and Scientific Temper

- Nurturing Aesthetic Sense and Curiosity

- Interdependence of Different Aspects of Nature of Science

4 Science in School Curriculum

- Historical Development of Science Education in India

- Teaching of Science as Recommended in National Curriculum Framework-2005

- Correlation of Science with Other Subjects/Disciplines

5 Organizing Teaching – Learning Experiences

- Linking Process Skills with Content

- Formulating Learning Objectives

- Unit Planning in Science

- Lesson Planning in Science

- Using Laboratory for Teaching-Learning

6 Approaches in Science Teaching – Learning

- Science as a Process of Construction of Knowledge

- Inquiry Approach

- Problem Solving Approach

- Cooperative Learning Approach

- Experiential Learning Approach

- Concept Mapping as an Approach for Planning and Transaction

- Adopting Critical Pedagogy in Science Teaching-Learning

7 Methods in Science Teaching – Learning

- Teacher Centric Methods

- Learner Centric Methods

- Cooperative Learning Methods

- Inclusion in Science Classroom

- Adopting Critical Pedagogy

8 Learning Resources in Science

- Identifying Appropriate Learning Resource

- Various Learning Resources

- Classroom Learning Resources

- ICT as Learning Resource

- Developing Learning Resource Centres

- Importance of Various Activities in Science Teaching-Learning

- Innovations in Science Laboratories

- Role of Innovation and Research in Science

- Professional Development of Science Teachers

9 Assessment in Science

- Nature of Assessment in Science

- Assessment Indicators in Science

- Tools and Techniques for Assessment

- Diagnostics Assessment in Science

- Schemes for Promoting Scientific Attitude

- Components of Food

- How to Get Higher Yields

- Animal Husbandry

11 Material

- Classification of Substances

- States of Material

- Mole Valency and Equivalence

- Types of Chemical Reactions

- Basic Metallurgical Processes

12 The Living World

- Diversity in Plants and Animals

- Nomenclature Scientific Names and Hierarchy

- Cell and Cell Organelles

- Life Processes

13 How Things Work

- Electric Current and Electric Circuit

- Electric Potential and Potential Difference

- Combination of Resistors — Series and Parallel

- Electric Power

- Heating Effects of Electric Current

- Magnetic Effects of Electric Current

- Electric Motor

- Electromagnetic Induction

- Electric Generator

- Domestic Electric Circuits

14 Moving Things, People and Ideas

- Newton’s Law of Motion

- Conservation of Momentum

- Kinetic and Potential Energy

15 Natural Phenomenon

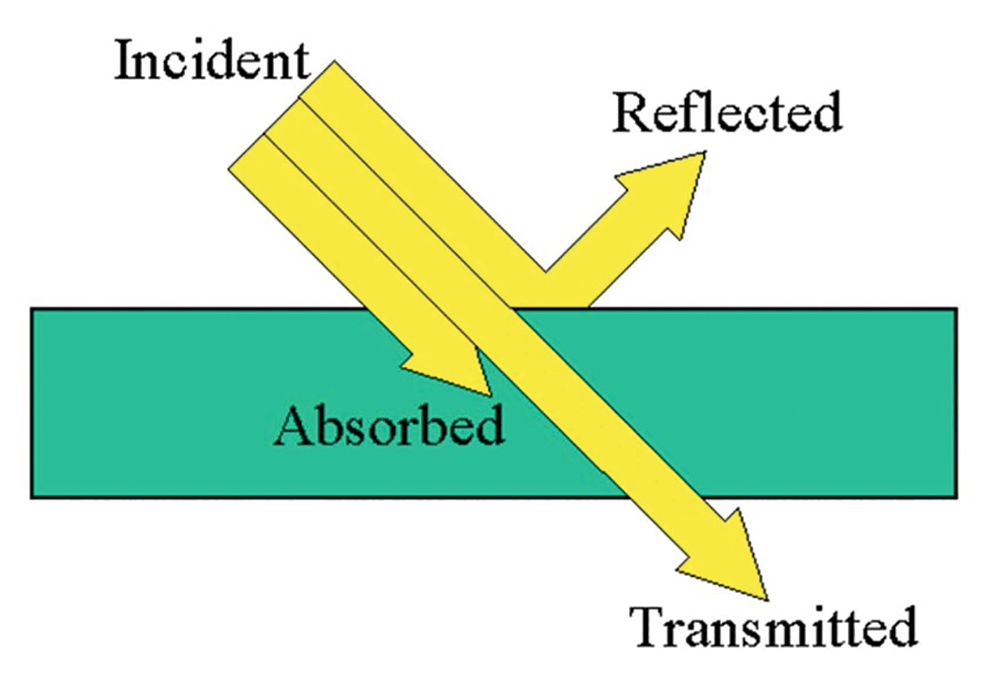

- Light as a Natural Phenomenon

- Water Cycle

- Conservation of Water Bodies

- Natural Disasters

- Waste Management

16 Natural Resources

- Physical Resources and their Utilization

- Pollution and Role of Human Being

- Bio-Geo-Chemical Cycles in Nature

- Natural Resource Management

Share on Mastodon

STEM Problem Solving: Inquiry, Concepts, and Reasoning

- Published: 29 January 2022

- Volume 32, pages 381–397 (2023)

- Aik-Ling Tan (ORCID: orcid.org/0000-0002-4627-4977)

- Yann Shiou Ong (ORCID: orcid.org/0000-0002-6092-2803)

- Yong Sim Ng (ORCID: orcid.org/0000-0002-8400-2040)

- Jared Hong Jie Tan

Balancing disciplinary knowledge and practical reasoning in problem solving is needed for meaningful learning. In STEM problem solving, science subject matter with associated practices often appears distant to learners due to its abstract nature. Consequently, learners experience difficulties making meaningful connections between science and their daily experiences. Applying Dewey’s idea of practical and science inquiry and Bereiter’s idea of referent-centred and problem-centred knowledge, we examine how integrated STEM problem solving offers opportunities for learners to shuttle between practical and science inquiry, and the kinds of knowledge that result from each form of inquiry. We hypothesize that connecting science inquiry with practical inquiry narrows the gap between science and everyday experiences to overcome isolation and fragmentation of science learning. In this study, we examine classroom talk as students engage in problem solving to increase crop yield. We conducted a qualitative content analysis of the utterances of 113 eighth graders in six classes, and of their teachers, from 3 hours of video recordings. Analysis showed an almost equal amount of science and practical inquiry talk. Teachers and students applied their everyday experiences to generate solutions. Science talk was at the basic level of facts and was used to explain reasons for specific design considerations. There was little evidence of higher-level scientific conceptual knowledge being applied. Our observations suggest opportunities for more intentional connections of science to practical problem solving, if we intend to apply higher-order scientific knowledge in problem solving. Deliberate application of and reference to scientific knowledge could improve the quality of solutions generated.

1 Introduction

As we enter the second quarter of the twenty-first century, it is timely to take stock of both the changes and the demands that continue to weigh on our education system. A recent report by the World Economic Forum highlighted the need to continuously re-position and re-invent education to meet the challenges presented by the disruptions brought about by the fourth industrial revolution (World Economic Forum, 2020). There is increasing pressure for education to equip children with the necessary, relevant, and meaningful knowledge, skills, and attitudes to create a “more inclusive, cohesive and productive world” (World Economic Forum, 2020, p. 4). Further, the shift in emphasis towards twenty-first century competencies over mere acquisition of disciplinary content knowledge is all the more urgent since we are preparing students for “jobs that do not yet exist, technology that has not yet been invented, and problems that has yet exist” (OECD, 2018, p. 2). Tan (2020) concurred with the urgent need to extend the focus of education, particularly in science education, so that learners can learn to think differently about possibilities in this world. Amidst this rhetoric for change, several questions remain to be answered: How can science education transform itself to be more relevant? What role does science education play in integrated STEM learning? How can scientific knowledge, skills, and the epistemic practices of science be infused in integrated STEM learning? What kinds of STEM problems should we expose students to for them to learn disciplinary knowledge and skills? And what is the relationship between learning disciplinary content knowledge and problem-solving skills?

In seeking to understand the extent of science learning that took place within integrated STEM learning, we dissected the STEM problems that were presented to students and examined in detail the sense-making processes that students used when they worked on the problems. We adopted Dewey’s (1938) theoretical idea of scientific and practical/common-sense inquiry and Bereiter’s ideas of referent-centred and problem-centred knowledge building to interpret teacher-student interactions during problem solving. There are two primary reasons for choosing these two theoretical frameworks. First, Dewey’s ideas about the relationship between science inquiry and everyday practical problem solving help us understand the role of science subject-matter knowledge and science inquiry in solving the practical, real-world problems that are commonly used in STEM learning. Second, Bereiter’s ideas of referent-centred and problem-centred knowledge augment our understanding of the types of knowledge that students can learn when they engage in solving practical, real-world problems.

Taken together, Dewey’s and Bereiter’s ideas enable us to better understand the types of problems used in STEM learning and the corresponding knowledge that is privileged during the problem-solving process. As such, the two theoretical lenses offer an alternative and convincing way to understand the actual types of knowledge that are used within the context of integrated STEM, and help to move our understanding of STEM learning beyond the current focus on examining how engineering can be used as an integrative mechanism (Bryan et al., 2016), applying the argument of the strengths of trans-, multi-, or inter-disciplinary activities (Bybee, 2013; Park et al., 2020), or mapping problems by content and context as pure STEM problems, STEM-related problems, or non-STEM problems (Pleasants, 2020). Further, existing research on STEM education (for example, Gale et al., 2000) has focussed largely on describing students’ learning experiences, with insufficient attention given to the connections between disciplinary conceptual knowledge and the inquiry processes that students use to arrive at solutions to problems. Clarity about the role of disciplinary knowledge and the related inquiry will allow for more intentional design of STEM problems through which students can learn higher-order knowledge. Applying Dewey’s idea of practical and scientific inquiry and Bereiter’s ideas of referent-centred and problem-centred knowledge, we analysed six lessons in which students engaged in integrated STEM problem solving, to answer the following research questions: What is the extent of practical and scientific inquiry in integrated STEM problem solving? What conceptual knowledge and problem-solving skills are learnt through practical and science inquiry during integrated STEM problem solving?

2 Inquiry in Problem Solving

Inquiry, according to Dewey (1938), involves the direct control of unknown situations to change them into a coherent and unified one. Inquiry usually encompasses two interrelated activities: (1) thinking about ideas related to conceptual subject matter and (2) engaging in activities involving our senses or using specific observational techniques. The National Science Education Standards released by the National Research Council in the US in 1996 defined inquiry as “…a multifaceted activity that involves making observations; posing questions; examining books and other sources of information to see what is already known; planning investigations; reviewing what is already known in light of experimental evidence; using tools to gather, analyze, and interpret data; proposing answers, explanations, and predictions; and communicating the results. Inquiry requires identification of assumptions, use of critical and logical thinking, and consideration of alternative explanations” (p. 23). Planning investigations; collecting empirical evidence; using tools to gather, analyse, and interpret data; and reasoning are common processes shared by science and engineering, and hence are highly relevant to integrated STEM education.

In STEM education, establishing the connection between general inquiry and its application helps to link disciplinary understanding to epistemic knowledge. For instance, methods of science inquiry are popular in STEM education because teachers are familiar with scientific methods. Science inquiry, a specific form of inquiry, has appeared in many science curricula (e.g. NRC, 2000) since Dewey proposed in 1910 that the learning of science should be perceived as both subject matter and a method of learning science (Dewey, 1910a, 1910b). Science inquiry, which involves ways of doing science, should also encompass the ways in which students learn scientific knowledge and the investigative methods that enable scientific knowledge to be constructed. Asking scientifically oriented questions, collecting empirical evidence, crafting explanations, proposing models, and reasoning based on available evidence are affordances of scientific inquiry. As such, science should be pursued as a way of knowing rather than merely as the acquisition of scientific knowledge.

Building on these affordances of science inquiry, Duschl and Bybee (2014) advocated the 5D model, which focuses on the practice of planning and carrying out investigations in science and engineering, two of the four disciplines in STEM. The 5D model includes science inquiry aspects such as (1) deciding what and how to measure, observe, and sample; (2) developing and selecting appropriate tools to measure and collect data; (3) recording the results and observations in a systematic manner; (4) creating ways to represent the data and the patterns that are observed; and (5) determining the validity and representativeness of the data collected. The model’s focus on planning and carrying out investigations is used to help teachers bridge the gap between the practices of building and refining models and explanations in science and engineering. Indeed, a common approach to incorporating science inquiry in an integrated STEM curriculum involves students planning and carrying out scientific investigations and making sense of the data collected to inform an engineering design solution (Cunningham & Lachapelle, 2016; Roehrig et al., 2021). Duschl and Bybee (2014) argued that it is necessary to design experiences through which learners appreciate that struggles are part of problem solving in science and engineering. They argued that “when the struggles of doing science is eliminated or simplified, learners get the wrong perceptions of what is involved when obtaining scientific knowledge and evidence” (Duschl & Bybee, 2014, p. 2). While we concur with Duschl and Bybee about the need for struggles, in STEM learning these struggles must be purposeful and grade-appropriate so that students can also experience success amidst failure.

The peculiar nature of science inquiry was scrutinized by Dewey (1938) when he cross-examined the relationship between science inquiry and other forms of inquiry, particularly common-sense inquiry. He positioned science inquiry along a continuum with general or common-sense inquiry, which he termed “logic”. Dewey argued that common-sense inquiry serves a practical purpose and exhibits features of science inquiry, such as asking questions and relying on evidence, although its focus tends to be different. Common-sense inquiry deals with issues or problems in the immediate environment where people live, whereas the objects of science inquiry (e.g. spintronics) are more likely to be distant from the familiar experiences of people’s daily lives. While we acknowledge the fundamental differences between school science and the science practised by scientists (such as novel discovery compared with re-discovering science, or ‘messy’ science compared with ‘sanitised’ science), the subject of interest in science (understanding the world around us) remains the same.

Learners' unfamiliarity with how science inquiry functions to improve their daily lives does little to motivate them to learn science (Aikenhead, 2006; Lee & Luykx, 2006), since they may not see how science inquiry connects to their day-to-day needs and wants. Bereiter (1992) distinguished between two forms of knowledge: referent-centred and problem-centred. Referent-centred knowledge is subject matter organised around topics, as in textbooks. Problem-centred knowledge is organised around problems, whether transient problems, practical problems or problems of explanation. Bereiter argued that the referent-centred knowledge commonly taught in schools is limited in its applications and in its meaningfulness to students' lives. This lack of familiarity with, and affinity for, referent-centred knowledge parallels Dewey's observations about science subject-matter knowledge. It is problem-centred knowledge, rather, that proves useful when students encounter problems: learning problem-centred knowledge allows learners to readily draw on the knowledge base relevant to understanding and solving specific problems. This suggests a need to help learners make meaningful connections between science and their daily lives.

Further, Dewey opined that although the contexts in which scientific knowledge arises may differ from our everyday common-sense world, it is possible to consider scientific activities carefully and apply the resulting knowledge to daily situations for use and enjoyment. Similarly, in arguing for problem-centred knowledge, Bereiter (1992) questioned the value of inert knowledge that plays no role in helping us understand or deal with the world around us. Referent-centred knowledge is more likely to be inert because of the way it is organised and the way learners encounter it. For instance, learning the equation and conditions for photosynthesis does not by itself help learners appreciate how plants are adapted for photosynthesis, how these adaptations allow plants to survive changes in climate, or how farmers can grow plants better by creating the best growing conditions. Instead, students could be given problems of explanation in which they are asked to unravel possible reasons for a low crop yield and suggest ways to overcome the problem. Hence, we argue that the value of referent-centred knowledge is that it forms the foundation students need to discuss or suggest ways to overcome real-life problems. Referent-centred knowledge serves either as part of the knowledge base that can be drawn on to solve specific problems or as the foundational knowledge students need in order to progress to higher-order conceptual knowledge, which typically forms the pillars of a discipline. Support for the notion that referent-centred knowledge is foundational knowledge that can and should be activated in problem-solving situations comes from Delahunty et al. (2020), who found that students rely heavily on memory when conceptualising convergent problem-solving tasks.

While Bereiter argues for problem-centred knowledge, he cautioned that engagement should be with problems of explanation rather than transient or practical problems. He opined that if learners engage only with transient or practical problems, they will learn only basic-level knowledge and fail to understand higher-order conceptual knowledge. For photosynthesis, for example, basic-level knowledge includes facts about the conditions required for photosynthesis, the products formed in the process, and the fact that green leaves reflect green light. Such basic-level knowledge should be used intentionally to help learners acquire higher-level conceptual knowledge, for instance being able to draw on the conditions for photosynthesis when they notice that a plant is not growing well or that its leaves are discoloured.

Transient problems disappear once a solution becomes available, and we are likely to forget them afterwards. Practical problems, according to Bereiter, are “stuck-door” problems that can be solved with or without basic-level knowledge and whose solutions often lack a precise definition. A handful of practical strategies, such as pulling or pushing the door harder or kicking it, will usually work, but none of them is a well-defined, reproducible approach grounded in general scientific principles. Problems of explanation are the most desirable type of problem for learners because they persist and recur, and so can become organising points for knowledge. Problems of explanation involve conceptual representations of (1) a text base that represents the text content and (2) a situation model that represents the portion of the world to which the text is relevant. The idea of a text base representing text content is similar to Jonassen's (2000) notions of domain knowledge and structural knowledge (knowledge of how concepts within a domain are connected). He argued that both types of knowledge are required to solve problems ranging from well-structured problems, through ill-structured problems with a simulated context and simple ill-structured problems, to complex ill-structured problems.

Jonassen indicated that complex ill-structured problems are typically design problems and are likely to be the most useful problems for engaging learners in inquiry. Complex ill-structured design problems are the “wicked” problems discussed by Buchanan (1992), whose idea is that design aims to integrate knowledge from different fields of specialised inquiry into a whole. Working on complex or wicked problems is akin to the work of scientists, who navigate multiple factors and lines of evidence to build models that are typically oversimplified, apply these models to propose first-approximation explanations or solutions, and then iteratively relax constraints or assumptions to refine them. The connections between the subject matter of science and the design process used to engineer a solution are delicate. While it is important that practical concerns and questions are taken into account when designing solutions (particularly material artefacts) to a practical problem, the challenge lies in encouraging creativity in design even when students initially lack or neglect the scientific conceptual understanding needed to explain or justify their design. In his articulation of wicked problems and the role of design thinking, Buchanan (1992) highlighted the need to attend to categories and placements. Categories “have fixed meanings that are accepted within the framework of a theory or a philosophy and serve as the basis for analyzing what already exist” (Buchanan, 1992, p. 12). Placements, on the other hand, “have boundaries to shape and constrain meaning, but are not rigidly fixed and determinate” (p. 12).

The key difference between the ideas of Dewey and Bereiter lies in problem design. For Dewey, scientific knowledge could be learnt by inquiring into practical problems that learners are familiar with. After all, Dewey viewed “modern science as continuous with, and to some degree an outgrowth and refinement of, practical or ‘common-sense’ inquiry” (Brown, 2012). Bereiter acknowledged the importance of familiar experiences, but rather than using them as starting points for learning science, he argued that practical problems are limited in helping learners acquire higher-order knowledge. Instead, he advocated that learners organise their knowledge around problems that are complex, persistent and extended, and that require explanation to be properly understood. Learners need a sense of the kinds of problems to which a specific concept is relevant before they can be said to have grasped the concept in a functionally useful way.

To connect problem solving, scientific knowledge and everyday experience, we need to examine ways to renegotiate the disciplinary boundaries of science (such as epistemic understanding, object of inquiry and degree of precision) and to make relevant connections to common-sense inquiry and to the problem at hand. Integrated STEM appears to be one way in which the disciplinary boundaries of science can be renegotiated to include practices from technology, engineering and mathematics. In integrated STEM learning, inquiry is seen more holistically, as a fluid process whose outcomes are tentative rather than absolute. This fluidity is reflected in a non-deterministic approach to inquiry: students can use science inquiry, engineering design, the design process or any other suitable approach to arrive at a solution. This hybridity of inquiry across science, common sense and the problem allows some familiar aspects of the science inquiry process to be applied to understand and generate solutions to familiar everyday problems. When elements of common-sense inquiry are combined with science inquiry in problem solving, logic plays an important role in helping learners make connections. We hypothesise that as students are increasingly exposed to less familiar ways of thinking, such as those associated with science inquiry, their familiarity with scientific reasoning increases, so that such thinking gradually becomes part of the common sense they can draw on to solve future problems. These theoretical ideas about the complexity of problems, the different forms of inquiry that different problems afford, and the case for engaging in problem solving motivated us to examine empirically how learners engage with ill-structured problems to generate problem-centred knowledge.
Of particular interest to us is how learners and teachers weave between practical and scientific reasoning as they inquire to integrate the components in the original problem into a unified whole.

3.1 Context

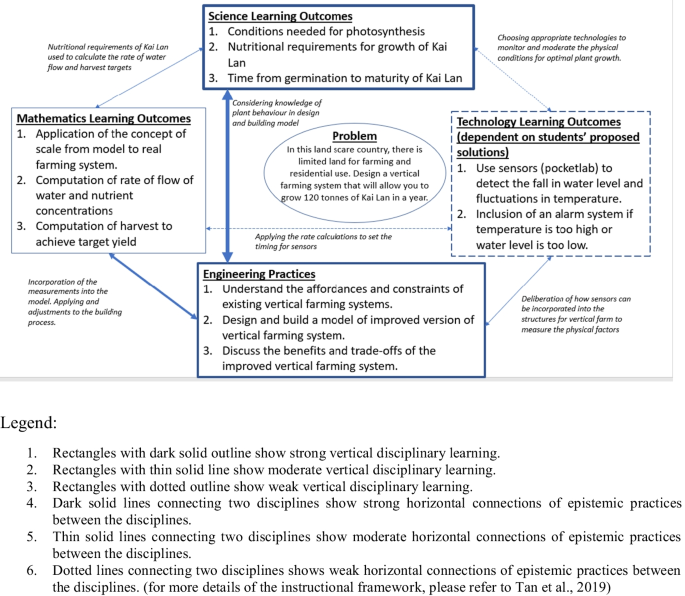

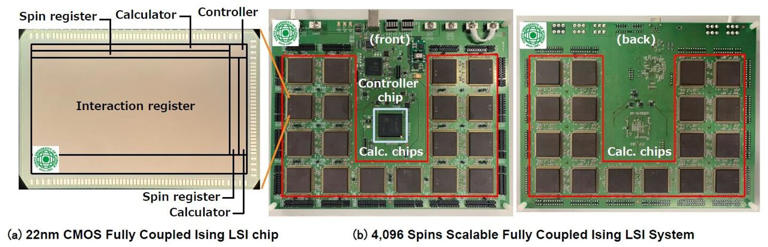

The integrated STEM activity in our study was planned using the S-T-E-M quartet instructional framework (Tan et al., 2019). This framework places complex, persistent and extended problems at its core and focuses on the vertical disciplinary knowledge, and the understanding of horizontal connections between disciplines, that learners could gain by solving the problem (Tan et al., 2019). Figure 1 depicts the disciplinary aspects of the problem presented to the students. Science and engineering were the two lead disciplines in the activity. It spanned three 1-h lessons and required students both to learn and to apply relevant scientific conceptual knowledge to solve a complex, real-world problem through processes resembling the engineering design process (Wheeler et al., 2019).

Fig. 1 Connections across disciplines in the integrated STEM activity

Fig. 2 Frequency of different types of reasoning

In the first session (1 h), students were introduced to the problem and its context. The problem concerns limited farmland in a land-scarce country that imports 90% of its food (Singapore Food Agency [SFA], 2020). Students were required to apply their knowledge of the conditions required for photosynthesis and plant growth to design and build a vertical farming system that would help farmers increase crop yield on limited farmland. This context was motivated by the government's effort to generate interest and knowledge in farming in order to achieve the 30 by 30 goal: supplying 30% of the country's nutritional needs by 2030. In the fictitious scenario, students were asked to produce 120 tonnes of Kailan (a type of leafy vegetable) on two hectares of land instead of the usual six hectares over a specific period. In addition to these constraints, the teacher discussed with the students relevant success criteria for evaluating the solution. Students then researched existing urban farming approaches, using reading materials on urban farming that were provided to help them understand the affordances and constraints of existing solutions. In the second session (6 h), students engaged in ideation to generate potential solutions. They then designed, built and tested their solution, with opportunities to refine it iteratively. Students were given a list of materials (e.g. mounting board, straws, ice-cream sticks, glue) that they could use to build their solutions. In the final session (1 h), students presented their solution and reflected on how well it met the success criteria. The prior scientific conceptual knowledge students need to make sense of the problem relates to plant nutrition, namely the conditions for photosynthesis, the nutritional requirements of Kailan and the growth cycle of Kailan.
The problem resembles a real-world problem that requires students to engage in some level of explanation of their design solution.
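One way to see why the scenario points towards vertical farming is to express its land constraint as a yield per hectare. The worked arithmetic below uses only the figures given in the scenario (120 tonnes, six hectares usually, two hectares available) and, as a simplifying assumption, treats the unspecified growing period as the same in both cases:

$$
\frac{120\ \text{t}}{6\ \text{ha}} = 20\ \text{t/ha}\ \ \text{(usual)}, \qquad
\frac{120\ \text{t}}{2\ \text{ha}} = 60\ \text{t/ha}\ \ \text{(required)}, \qquad
\frac{60\ \text{t/ha}}{20\ \text{t/ha}} = 3.
$$

Under that assumption, a solution has to triple the yield obtained from each hectare of land, which makes stacking several growing layers on the same footprint one natural direction for students' designs.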

A total of 113 eighth graders (62 boys and 51 girls, aged 14) from six classes, together with their teachers, participated in the study. They were recruited as part of a larger study that examined students' learning experiences when working on integrated STEM activities that begin with a problem, begin with a solution, or focus on the content. Invitations were sent to schools across the country, and interested schools opted in. For the study reported here, all students and teachers were from six classes within one school. All the teachers had undergone 3 h of professional development with one of the authors on ways of implementing the integrated STEM activity used in this study. During the professional development session, the teachers learnt about the rationale of the activity, familiarised themselves with the materials, and clarified the intentions and goals of the activity. Most students worked in groups of three, although a handful chose to work independently. Group size was not critical for the analysis of talk in this study, as the analytic focus was on the kinds of knowledge applied rather than on collaboration or group thinking. We assumed that the types of inquiry adopted by teachers and students depended largely on the nature of the problem. Eighth graders were chosen because lower secondary science at this grade level is thematic and integrated across biology, chemistry and physics. Furthermore, photosynthesis is taught under the theme of Interactions in eighth grade (CPDD, 2021). This thematic and integrated nature of eighth-grade science offered an ideal context for trialling integrated STEM activities.

The final lesson of the three in each of the six classes was analysed and is reported in this study. Lessons in which students worked on their solutions were not analysed because the recordings had poor audibility owing to masking and physical distancing requirements under COVID-19 regulations. At the start of the first analysed lesson, the teacher's instructions were:

You are going to present your models. Remember the scenario that you were given at the beginning that you were tasked to solve using your model. …. In your presentation, you have to present your prototype and its features, what is so good about your prototype, how it addresses the problem and how it saves costs and space. So, this is what you can talk about during your presentation. ….. pay attention to the presentation and write down questions you like to ask the groups after the presentation… you can also critique their model, you can evaluate, critique and ask questions…. Some examples of questions you can ask the groups are? Do you think your prototype can achieve optimal plant growth? You can also ask questions specific to their models.

3.2 Data collection

Parental consent was sought a month before the start of data collection. The informed consent process adhered to the confidentiality and ethics guidelines of the Institutional Review Board. Data collection took place over one month, with weekly video recording. Two video cameras were set up, one at the front and one at the back of the science laboratory. The front camera captured the students seated at the front, while the back camera recorded the teacher and the groups of students at the back of the laboratory. The recordings were synchronised so that events captured by each camera could be interpreted from different angles. After transcription of the raw video files, students' identities were replaced with pseudonyms.

3.3 Data analysis

The video recordings were analysed using qualitative content analysis, which allows patterns, themes and meanings to emerge through systematic classification (Hsieh & Shannon, 2005). Qualitative content analysis suits this study because it allows us to systematically identify episodes of practical inquiry and science inquiry and to map them to the purposes and outcomes of those episodes as each lesson unfolds.

In total, six hours of video recordings in which students presented their ideas, with teachers serving as facilitators and mentors, were analysed. The recordings were transcribed, and the transcripts were analysed using NVivo. Our unit of analysis is a single turn of talk (one utterance). We chose utterances as proxy indicators of reasoning practices on the assumption that an utterance relates to both grammar and context: an utterance is a speech act that reveals both the meaning and the intentions of the speaker within a specific context (Li, 2008).

Our analytical lens is interpretative, and the validity of our interpretation rests on inter-rater discussion and agreement. Each utterance in the transcripts was examined at the speaker level and coded, based on its content, as either practical reasoning or scientific reasoning. An utterance could be a comment by the teacher, a question from a student or a response by another student. We used deductive coding with two codes, practical reasoning and scientific reasoning, derived from the theoretical ideas of Dewey and Bereiter described earlier. Practical reasoning refers to utterances that reflect common-sense knowledge or the application of everyday understanding. Scientific reasoning refers to utterances that contain scientifically oriented questions or scientific terms, or that use empirical evidence to explain. Examples of each type of reasoning are highlighted in the following section. Each coded utterance was then reviewed to describe in detail the events leading up to it; this description of the context leading to an utterance is considered an episode. The episodes and codes were discussed and agreed upon by two of the authors. Two coders simultaneously watched the videos to identify and code the episodes. The coders interpreted the content of each utterance, examined the context in which it was made and deduced its purpose. Once each coder had established the sense-making aspect of the utterance in relation to its context, they assigned a code of either practical reasoning or scientific reasoning. The two coders then compared their coding for similarities and differences and discussed the differences until agreement was reached. Through this process, the coders reached 85% agreement. Where disagreement persisted, the codes of the more experienced coder were adopted.
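The 85% figure appears to be simple percent agreement: the share of utterances to which both coders assigned the same code. A minimal Python sketch of that calculation is given below, assuming each coder's output is an equal-length list of codes in utterance order; the function names and the twenty example codes are hypothetical and are not the study's data. Chance-corrected statistics such as Cohen's kappa are a common alternative, but they are not what the study reports.

```python
from typing import List, Sequence

PRACTICAL = "practical reasoning"
SCIENTIFIC = "scientific reasoning"


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Percentage of utterances given the same code by both coders."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must code the same utterances")
    if not coder_a:
        raise ValueError("no utterances to compare")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


def disagreements(coder_a: Sequence[str], coder_b: Sequence[str]) -> List[int]:
    """Indices of utterances coded differently, i.e. the ones to discuss."""
    return [i for i, (a, b) in enumerate(zip(coder_a, coder_b)) if a != b]


if __name__ == "__main__":
    # Hypothetical codes for 20 utterances (illustrative only, not study data).
    coder_a = [PRACTICAL] * 12 + [SCIENTIFIC] * 8
    coder_b = [PRACTICAL] * 10 + [SCIENTIFIC] * 9 + [PRACTICAL]
    print(percent_agreement(coder_a, coder_b))  # 85.0
    print(disagreements(coder_a, coder_b))      # [10, 11, 19]
```

In the procedure described above, the indices returned by `disagreements` would be the utterances the two coders go on to discuss.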

4 Results and Discussion

The STEM lessons analysed were those in which students presented models of their solutions to the class for peer evaluation. Each group stood in front of the class and placed its model on the bench while presenting. A board was also available on which they could sketch or write explanations if they wished. The teacher instructed the students to explain their models and give reasons for their design.

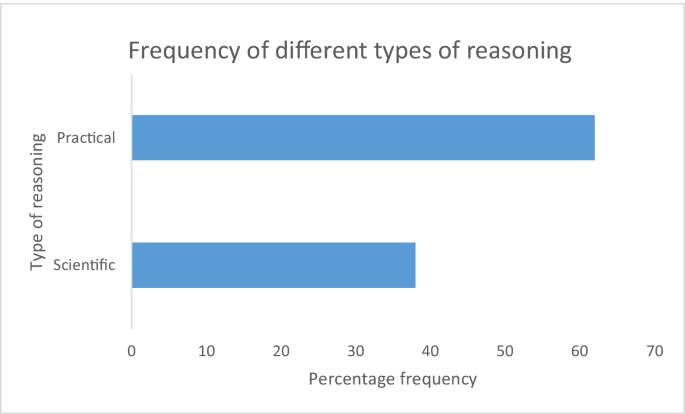

4.1 Prevalence of Reasoning

The 6 h of video comprised 1422 turns of talk. Of these, 304 turns (21%) were identified as reasoning talk, either practical or scientific. Practical reasoning made up 62% of the reasoning turns and scientific reasoning 38% (Fig. 2).
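The reported proportions can be checked from the two counts given (1422 turns in total, 304 reasoning turns). Because the 62%/38% split is reported only as rounded percentages, the exact numbers of practical and scientific turns cannot be recovered from them. The Python sketch below, which assumes conventional round-half-up reporting, lists every split consistent with the reported figures; the helper names are hypothetical.

```python
import math
from typing import List

TOTAL_TURNS = 1422      # all turns of talk in the 6 h of video
REASONING_TURNS = 304   # turns coded as practical or scientific reasoning


def share(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`."""
    return 100.0 * part / whole


def round_half_up(x: float) -> int:
    """Conventional rounding (x.5 goes up), unlike Python's round-half-to-even."""
    return math.floor(x + 0.5)


def splits_consistent_with(practical_pct: int, scientific_pct: int, n: int) -> List[int]:
    """All practical-turn counts whose rounded shares match the reported percentages."""
    return [
        p for p in range(n + 1)
        if round_half_up(share(p, n)) == practical_pct
        and round_half_up(share(n - p, n)) == scientific_pct
    ]


if __name__ == "__main__":
    print(f"{share(REASONING_TURNS, TOTAL_TURNS):.1f}% of turns involved reasoning")  # 21.4%
    print(splits_consistent_with(62, 38, REASONING_TURNS))  # [187, 188, 189]
```

Under that rounding assumption, the reasoning talk contained between 187 and 189 practical turns (and correspondingly between 117 and 115 scientific turns), so the reported percentages fix the counts only to within a couple of turns.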

The two types of reasoning differ in the justifications that are used to substantiate the claims or decisions made. Table 1 describes the differences between the two categories of reasoning.

4.2 Applications of Scientific Reasoning

Instances of engagement with scientific reasoning (for instance, using scientific concepts to justify, raising scientifically oriented questions or providing scientific explanations) revolved around the conditions for photosynthesis and the concept of energy conversion, both when students presented their ideas and when their peers questioned them. For example, in explaining why they included fish in their plant system, one group of students made a connection to nutrient cycling: “…so as the roots of the plants submerged in the water, faeces from the fish will be used as fertilizers so that the plant can grow”. The students considered how nutrients still locked in waste materials can be released and taken up by plants to enhance growth. According to the teacher's evaluation, this application of scientific reasoning made their design innovative and sustainable. Some students attempted more eco-friendly designs that improved energy efficiency by incorporating water turbines into their farming systems. They applied the concepts of different forms of energy and energy conversion when their peers asked about their design. Different students explained the same scientific concepts at different levels of detail. At one level, students gave a purely descriptive account of what happens to the different entities in their prototypes, with the changes in forms of energy only implied: “…spins then generates electricity. So right, when the water falls down, then it will spin. The water will fall on the fan blade thing, then it will spin and then it generates electricity. So, it saves electricity, and also saves water”. At another level, students defended their design by explaining the energy conversion: “…because when the water flows right, it will convert gravitational potential energy so, when it reaches the bottom, there is not really much gravitational potential energy”.
While these instances indicate that students had knowledge of the relevant scientific phenomena and could apply it to assist the problem-solving process, we cannot establish whether they understood the science of how a dynamo generates electricity. Eighth-grade students need to know only how a generator works at a descriptive level; a more specialised understanding of how a dynamo works is beyond the intended learning outcomes at this grade level.

The scientific concepts applied for justification were not always accurate. For instance, the naïve conception that plants respire only at night and not during the day surfaced when one group of students tried to justify the growth rates of Kailan: “…I mean, they cannot be making food 24/7 and growing 24/7. They have nighttime for a reason. They need to respire”. These students did not appreciate that plants also respire during the day, so respiration occurs 24/7. The naïve conception that plants respire only at night is common among biology learners (e.g. Svandova, 2014), since students learn that plants give off oxygen during the day and take in oxygen at night. The hasty conclusion drawn from this observation is that plants photosynthesise during the day and respire at night, whereas during the day the rate of photosynthesis usually exceeds that of respiration, so there is a net release of oxygen even though respiration continues. Many students did not consider these relative rates of photosynthesis and respiration.

Besides naïve conceptions, engaging with scientific ideas to solve a practical problem gives unusual and alternative ideas about science an opportunity to surface. For instance, another group of students explained that they lined up their plants so that “they can take turns to absorb sunlight for photosynthesis”. These students appeared to be explaining that as the sun moves, some plants may be shaded depending on its position, so rates of photosynthesis depend on the position of the sun. However, the idea could also be interpreted as showing that the students failed to appreciate (1) that sunlight is everywhere and (2) that plants, unlike animals and particularly humans, have no concept of turn-taking. These diverse ideas surfaced when students were given opportunities to apply their knowledge of photosynthesis to solve a problem.

4.3 Applications of Practical Reasoning

Teachers and students used more practical reasoning than scientific reasoning in this integrated STEM activity requiring both science and engineering practices (62% versus 38% of reasoning turns). The prevalence of practical reasoning may stem from the activity's intention to integrate students' scientific knowledge of plant nutrition with the engineering practice of building a model vertical farming system. The practical reasoning observed related to structural design considerations of the farming system, such as how watering, lighting and harvesting could be carried out most efficiently. Students defended the strengths of their designs using logic based on everyday experience. In the excerpt below (transcribed verbatim), we see a student applying the everyday experience that something “thinner” (likely meaning narrower) would logically save space. Further, to reach the higher levels, the student proposed stopping the rotating machine and climbing up the steps installed for that purpose.

Excerpt 1. “Thinner, more space”

Because it is more thinner, so like in terms of space, it’s very convenient. So right, because there is – because it rotates right, so there is this button where you can stop it. Then I also installed steps, so that – because there are certain places you can’t reach even if you stop the – if you stop the machine, so when you stop it and you climb up, and then you see the condition of the plants, even though it costs a lot of labour, there is a need to have an experienced person who can grow plants. Then also, when like – when water reach the plants, cos the plants I want to use is soil-based, so as the water reach the soil, the soil will xxx, so like the water will be used, and then we got like – and then there’s like this filter that will filter like the dirt.

In the examples of practical reasoning, we did not identify any instances in which students and teachers discussed trade-offs and optimisation. Constraints, trade-offs and optimisation are important ideas in the Informed Design Teaching and Learning Matrix for engineering proposed by Crismond and Adams (2012). Instead, utterances such as “everything will be reused”, “we will be saving space”, “it looks very flimsy” or “so that it can contains [sic] the plants” were used, both by students justifying their own prototypes and by peers challenging the designs of others. Longer responses involving practical reasoning were based on common-sense, everyday logic: “…the product does not require much manpower, so other than one or two supervisors like I said just now, to harvest the Kailan, hence, not too many people need to be used, need to be hired to help supervise the equipment and to supervise the growth”. We infer that the higher frequency of practical reasoning could be due to the presence of concrete artefacts, which focused students and teachers on questioning the structure at hand. We draw this inference because the teacher's instructions at the start of the presentations focused largely on the model rather than on the scientific concepts or reasoning behind it.

4.4 Intersection Between Scientific and Practical Reasoning

When science subject-matter knowledge and problem solving are compared with Buchanan's (1992) ideas of categories and placements, subject matter is analogous to categories, whose meanings are fixed by well-established epistemic practices and norms. The problem-solving process and the design of solutions are akin to placements, whose boundaries are less rigid, opening opportunities for students to present their personal experiences and ideas. Placements allow students to apply knowledge from daily experience and common-sense logic to justify decisions. Common-sense knowledge and logic are more accessible, which may explain why we observed them more frequently. Science subject matter (categories) was also used, but less often, possibly because of students' lower familiarity with the subject matter or a lack of appropriate opportunities to apply it in practical problem solving. The challenge for teachers implementing a STEM problem-solving activity therefore lies in balancing scientific and practical reasoning so as to deepen understanding of disciplinary knowledge while solving a problem in a meaningful manner.

Our observations suggest that engaging students in practical inquiry tasks with some engineering demands, such as designing modern farming systems, offers opportunities for them to convert their personal lived experiences into feasible, concrete ideas that they can share in a public space for critique. The peer critique that follows the sharing of these practical ideas allows both practical and scientific questions to be asked and gives students the chance to defend their ideas. For instance, after one group presented a prototype with silvered surfaces, a student asked: “what is the function of the silver panels?”, to which the group replied: “Makes the light bounce. Bounce the sunlight away and then to other parts of the tray.” This exchange indicated that the students applied their knowledge that shiny silvered surfaces reflect light, using it to direct light to other trays where crops were growing. An example of a practical question was “what is the purpose of the ladder?”, to which the students replied: “To take the plants – to refill the plants, the workers must climb up”. While the process of presentation and peer critique mimics peer review in science inquiry, the conceptual knowledge of science was not always evident, as students paid more attention to the design constraints set in the activity, such as lighting, watering and space. Given the context of growing plants, the science behind the nutritional requirements of plants, the process of photosynthesis and plant adaptations could be explored more deliberately.

5 Conclusion

The goal of our work is to apply the theoretical ideas of Dewey and Bereiter to better understand reasoning practices in integrated STEM problem solving. We argue that this is a worthy pursuit for understanding the roles of scientific reasoning in practical problem solving. One goal of integrated STEM education in schools is to enculturate students into the practices of science, engineering and mathematics, including disciplinary conceptual knowledge, epistemic practices and social norms (Kelly & Licona, 2018). In integrated form, the boundaries of and approaches to STEM learning are more diverse than in monodisciplinary problem solving. In integrated STEM problem solving, for instance, students are required not only to carry out scientific investigations and construct explanations but also to understand constraints, design optimal solutions within specific parameters and even construct prototypes. Such experiences could help students learn, in a meaningful manner, the ways of speaking, doing and being that come with participating in integrated STEM problem solving in schools.

With reference to the first research question, “What is the extent of practical and scientific reasoning in integrated STEM problem solving?”, our analysis suggests that there are fewer instances of scientific reasoning than of practical reasoning. Considering the intention of integrated STEM learning, and adopting Bereiter’s idea that students should learn higher-order conceptual knowledge through engagement with problem solving, we argue that scientific reasoning needs to feature more strongly in integrated STEM lessons so that students can gain higher-order scientific conceptual knowledge. While the lessons observed were strong in design and building, what was missing in generating solutions was engagement in investigations, in which learners collect, or are presented with, data and make decisions about the data that allow them to assess how viable their solutions are. Integrated STEM problems can be designed so that science inquiry is infused, for example by having students carry out investigations to figure out relationships between variables. Duschl and Bybee (2014) have argued for the need to engage students in problematising science inquiry and making choices about what works and what does not.

With reference to the second research question, “What is achieved through practical and scientific reasoning during integrated STEM problem solving?”, our analyses suggest that utterances involving practical reasoning are typically used to justify the physical design of the prototype. These utterances rely largely on what is observable and are associated with basic-level knowledge and experiences. The higher frequency of practical-reasoning utterances, together with their nature, suggests that practical reasoning is more accessible to students, since it relates more closely to their lived experiences and common sense. Bereiter (1992) has urged educators to engage learners in learning that goes beyond basic-level knowledge, since the accumulation of basic-level knowledge does not lead to higher-level conceptual learning. Students should also be encouraged to use scientific knowledge to justify their prototype designs and to apply scientific evidence and logic to support their ideas. Engagement with scientific reasoning is preferred because the conceptual knowledge, epistemic practices and social norms of science are more widely recognised than those of practical reasoning, which are likely to be more varied since they rely on personal experiences and common sense. This leads us to assert that both context and content are important in integrated STEM learning. Understanding the context or the solution without understanding the scientific principles that make it work makes the learning less meaningful, since we “…cannot strip learning of its context, nor study it in a ‘neutral’ context. It is always situated, always related to some ongoing enterprise” (Bruner, 2004, p. 20).

To further this discussion on how integrated STEM learning experiences can harness practical and scientific reasoning to move learners from basic-level knowledge to higher-order conceptual knowledge, we propose further studies that involve working with teachers to identify and create relevant problems-of-explanations. These problems should focus on feasible, worthy inquiry ideas that have an impact on the local community, such as those related to specific aspects of transportation, alternative energy sources and clean water. These problems can be designed to incorporate opportunities for systematic scientific investigation, and they can be scaffolded so that students can engage in the epistemic practices of the constituent disciplines of STEM. Researchers could then examine the impact of problems-of-explanations on students’ learning of higher-order scientific concepts. During the problem-solving process, more attention can be given to eliciting students’ initial and unfolding (practical) ideas and using them as a basis to start the science inquiry process. Researchers can also examine how to encourage discussions that focus on making meaning of the scientific phenomena embedded within specific problems. This will help students appreciate how data can be used as evidence to support scientific explanations and to justify solutions to problems. With evidence, learners can be guided to reason about the phenomena using explanatory models. Together, these aspects should move engagement in integrated STEM problem solving from being purely practical to being explanatory.

6 Limitations

There are four key limitations of our study. Firstly, the extent to which our observations can be generalised is limited. This study set out to illustrate how Dewey’s and Bereiter’s ideas can be used as a lens to examine the knowledge used in problem solving. As such, the findings that we report here are limited in their ability to generalise across different contexts and problems. Secondly, the lessons analysed came from teacher-fronted teaching and group presentations of solutions, and excluded students’ group discussions. We acknowledge that group work could potentially involve talk featuring practical and scientific reasoning. There were two practical considerations behind our choice to analyse the first lesson and the presentation segment of the suite of lessons. One was that these two lessons involved participation from everyone in the class, and we wanted to survey the use of practical and scientific reasoning by the students as a class. The other was methodological: clarity of utterances is important for accurate analysis, and because students were wearing face masks during data collection, their utterances during group discussions lacked the clarity needed for accurate transcription and analysis. Thirdly, insights from this study were gleaned from a small sample of six classes of students. Further work could involve more classes, although that would require more resources to be devoted to analysing the videos. Finally, the number of students varied across groups, and this could potentially have affected reasoning practices during discussions.

Data Availability

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

Aikenhead, G. S. (2006). Science education for everyday life: Evidence-based practice. Teachers College Press.


Bereiter, C. (1992). Referent-centred and problem-centred knowledge: Elements of an educational epistemology. Interchange, 23(4), 337–361.


Breiner, J. M., Johnson, C. C., Harkness, S. S., & Koehler, C. M. (2012). What is STEM? A discussion about conceptions of STEM in education and partnership. School Science and Mathematics, 112(1), 3–11. https://doi.org/10.1111/j.1949-8594.2011.00109.x

Brown, M. J. (2012). John Dewey’s logic of science. HOPOS: The Journal of the International Society for the History of Philosophy of Science, 2(2), 258–306.

Bruner, J. (2004). The psychology of learning: A short history. Daedalus, Winter 2004, 13–20.

Bryan, L. A., Moore, T. J., Johnson, C. C., & Roehrig, G. H. (2016). Integrated STEM education. In C. C. Johnson, E. E. Peters-Burton, & T. J. Moore (Eds.), STEM road map: A framework for integrated STEM education (pp. 23–37). Routledge.

Buchanan, R. (1992). Wicked problems in design thinking. Design Issues, 8(2), 5–21.

Bybee, R. W. (2013). The case for STEM education: Challenges and opportunities. NSTA Press.

Crismond, D. P., & Adams, R. S. (2012). The informed design teaching and learning matrix. Journal of Engineering Education, 101(4), 738–797.

Cunningham, C. M., & Lachapelle, C. P. (2016). Experiences to engage all students. Educational Designer, 3(9), 1–26. https://www.educationaldesigner.org/ed/volume3/issue9/article31/

Curriculum Planning and Development Division [CPDD]. (2021). 2021 Lower secondary science express/normal (academic) teaching and learning syllabus. Singapore: Ministry of Education.

Delahunty, T., Seery, N., & Lynch, R. (2020). Exploring problem conceptualization and performance in STEM problem solving contexts. Instructional Science, 48, 395–425. https://doi.org/10.1007/s11251-020-09515-4

Dewey, J. (1910a). Science as subject-matter and as method. Science, 31(787), 121–127.

Dewey, J. (1910b). How we think. D.C. Heath & Co Publishers.

Dewey, J. (1938). Logic: The theory of inquiry. Henry Holt and Company Inc.


Duschl, R. A., & Bybee, R. W. (2014). Planning and carrying out investigations: An entry to learning and to teacher professional development around NGSS science and engineering practices. International Journal of STEM Education, 1(12). https://doi.org/10.1186/s40594-014-0012-6

Gale, J., Alemdar, M., Lingle, J., & Newton, S. (2020). Exploring critical components of an integrated STEM curriculum: An application of the innovation implementation framework. International Journal of STEM Education, 7(5). https://doi.org/10.1186/s40594-020-0204-1

Hsieh, H.-F., & Shannon, S. E. (2005). Three approaches to qualitative content analysis. Qualitative Health Research, 15(9), 1277–1288.

Jonassen, D. H. (2000). Toward a design theory of problem solving. Educational Technology Research and Development, 48(4), 63–85.

Kelly, G., & Licona, P. (2018). Epistemic practices and science education. In M. R. Matthews (Ed.), History, philosophy and science teaching: New perspectives (pp. 139–165). Cham, Switzerland: Springer. https://doi.org/10.1007/978-3-319-62616-1

Lee, O., & Luykx, A. (2006). Science education and student diversity: Synthesis and research agenda. Cambridge University Press.

Li, D. (2008). The pragmatic construction of word meaning in utterances. Journal of Chinese Language and Computing, 18(3), 121–137.

National Research Council. (1996). The National Science Education Standards. National Academy Press.

National Research Council. (2000). Inquiry and the national science education standards: A guide for teaching and learning. Washington, DC: The National Academies Press. https://doi.org/10.17226/9596

OECD. (2018). The future of education and skills: Education 2030. Retrieved October 3, 2020, from https://www.oecd.org/education/2030/E2030%20Position%20Paper%20(05.04.2018).pdf

Park, W., Wu, J.-Y., & Erduran, S. (2020). The nature of STEM disciplines in science education standards documents from the USA, Korea and Taiwan: Focusing on disciplinary aims, values and practices. Science & Education, 29, 899–927.

Pleasants, J. (2020). Inquiring into the nature of STEM problems: Implications for pre-college education. Science & Education, 29, 831–855.

Roehrig, G. H., Dare, E. A., Ring-Whalen, E., & Wieselmann, J. R. (2021). Understanding coherence and integration in integrated STEM curriculum. International Journal of STEM Education, 8(2). https://doi.org/10.1186/s40594-020-00259-8

Singapore Food Agency [SFA]. (2020). The food we eat. Retrieved May 5, 2021, from https://www.sfa.gov.sg/food-farming/singapore-food-supply/the-food-we-eat

Svandova, K. (2014). Secondary school students’ misconceptions about photosynthesis and plant respiration: Preliminary results. Eurasia Journal of Mathematics, Science, & Technology Education, 10(1), 59–67.

Tan, A.-L., Teo, T. W., Choy, B. H., & Ong, Y. S. (2019). The S-T-E-M Quartet. Innovation and Education, 1(1), 3. https://doi.org/10.1186/s42862-019-0005-x

Tan, M. (2020). Context matters in science education. Cultural Studies of Science Education. https://doi.org/10.1007/s11422-020-09971-x

Wheeler, L. B., Navy, S. L., Maeng, J. L., & Whitworth, B. A. (2019). Development and validation of the Classroom Observation Protocol for Engineering Design (COPED). Journal of Research in Science Teaching, 56(9), 1285–1305.

World Economic Forum. (2020). Schools of the future: Defining new models of education for the fourth industrial revolution. Retrieved January 18, 2020, from https://www.weforum.org/reports/schools-of-the-future-defining-new-models-of-education-for-the-fourth-industrial-revolution/


Acknowledgements

The authors would like to acknowledge the contributions of the other members of the research team, who gave comments and feedback at the conceptualization stage.

This study is funded by the Office of Education Research grant OER 24/19 TAL.

Author information

Authors and affiliations.

Natural Sciences and Science Education, meriSTEM@NIE, National Institute of Education, Nanyang Technological University, Singapore, Singapore

Aik-Ling Tan, Yann Shiou Ong, Yong Sim Ng & Jared Hong Jie Tan


Contributions

The first author conceptualized, researched, read, analysed and wrote the article.

The second author worked on compiling the essential features and the variations tables.

The third and fourth authors worked with the first author on developing and refining the ideas.

Corresponding author

Correspondence to Yann Shiou Ong.

Ethics declarations

Competing interests.

The authors declare that they have no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Tan, AL., Ong, Y.S., Ng, Y.S. et al. STEM Problem Solving: Inquiry, Concepts, and Reasoning. Sci & Educ 32, 381–397 (2023). https://doi.org/10.1007/s11191-021-00310-2