Perceived diversity in software engineering: a systematic literature review

Affiliations.

- 1 University of British Columbia, Kelowna, Canada.

- 2 University of Waterloo, Waterloo, Canada.

- PMID: 34305441

- PMCID: PMC8284041

- DOI: 10.1007/s10664-021-09992-2

We define perceived diversity as the diversity factors that individuals are born with. Perceived diversity in Software Engineering (SE) has been recognized as a high-value team property, and companies are willing to increase their efforts to create more diverse work teams. The current state of the art in diversity research shows that gender diversity studies have grown over the past decade and have shown the benefits of including women in software teams. However, less is known about how other perceived diversity factors, such as developers' race, nationality, disability, and age, relate to SE. Through a systematic literature review, we aim to clarify the research area concerned with perceived diversity in SE. Our goal is to identify (1) what issues have been studied and what results have been reported; (2) what methods, tools, models, and processes have been proposed to address perceived diversity issues; and (3) what limitations have been reported when studying perceived diversity in SE. Our ultimate goal is to identify gaps in the current literature and issue a call for future action on perceived diversity in SE. Our results indicate that individual studies have typically taken a gender diversity perspective, focusing on showing gender bias or gender differences rather than on developing methods and tools to mitigate the gender diversity issues faced in SE. Moreover, perceived diversity aspects related to SE participants' race, age, and disability need further analysis in SE research. From our systematic literature review, we conclude that researchers need to consider a wider set of perceived diversity aspects in future research.

Keywords: Perceived diversity; Software engineering; Systematic literature review.

© The Author(s), under exclusive licence to Springer Science+Business Media, LLC, part of Springer Nature 2021.


Title: Large Language Models for Software Engineering: A Systematic Literature Review

Abstract: Large Language Models (LLMs) have significantly impacted numerous domains, including Software Engineering (SE). Many recent publications have explored LLMs applied to various SE tasks. Nevertheless, a comprehensive understanding of the application, effects, and possible limitations of LLMs in SE is still in its early stages. To bridge this gap, we conducted a systematic literature review (SLR) on LLM4SE, with a particular focus on understanding how LLMs can be exploited to optimize processes and outcomes. We selected and analyzed 395 research papers published between January 2017 and January 2024 to answer four key research questions (RQs). In RQ1, we categorize the different LLMs that have been employed in SE tasks, characterizing their distinctive features and uses. In RQ2, we analyze the methods used in data collection, preprocessing, and application, highlighting the role of well-curated datasets in successfully implementing LLMs for SE. RQ3 investigates the strategies employed to optimize and evaluate the performance of LLMs in SE. Finally, RQ4 examines the specific SE tasks where LLMs have shown success to date, illustrating their practical contributions to the field. From the answers to these RQs, we discuss the current state of the art and trends, identify gaps in existing research, and flag promising areas for future study. Our artifacts are publicly available at this https URL.


Systematic Reviews in the Engineering Literature: A Scoping Review


Ethics in AI through the practitioner’s view: a grounded theory literature review

- Open access

- Published: 06 May 2024

- Volume 29 , article number 67 , ( 2024 )


- Aastha Pant ORCID: orcid.org/0000-0002-6183-0492 1 ,

- Rashina Hoda 1 ,

- Chakkrit Tantithamthavorn 1 &

- Burak Turhan 2


The term ethics is widely used, explored, and debated in the context of developing Artificial Intelligence (AI) based software systems. In recent years, numerous incidents have raised the profile of ethical issues in AI development and led to public concerns about the proliferation of AI technology in our everyday lives. But what do we know about the views and experiences of those who develop these systems, the AI practitioners? We conducted a grounded theory literature review (GTLR) of 38 primary empirical studies that included AI practitioners’ views on ethics in AI and analysed them to derive five categories: practitioner awareness, perception, need, challenge, and approach. These are underpinned by multiple codes and concepts that we explain with evidence from the included studies. We present a taxonomy of ethics in AI from practitioners’ viewpoints to assist AI practitioners in identifying and understanding the different aspects of AI ethics. The taxonomy provides a landscape view of the key aspects that concern AI practitioners when it comes to ethics in AI. We also share an agenda for future research studies and recommendations for practitioners, managers, and organisations to help in their efforts to better consider and implement ethics in AI.


1 Introduction

Over the last few years, the adoption of AI technology has risen swiftly across diverse sectors such as health, transportation, education, IT, and banking. The widespread use of AI has underscored the significance of ethical considerations in AI (Hagendorff 2020). Ethics refers to “the moral principles that govern the behaviors or activities of a person or a group of people” (Nalini 2020). Attributing moral values and ethical principles to machines so that they can resolve the ethical issues they encounter and operate ethically is a form of applied ethics (Anderson and Anderson 2011). There is no universal definition of AI ethics or of ethical principles (Kazim and Koshiyama 2021). In our study, we adopted the definition proposed by Siau and Wang (2020), which states that “AI ethics refers to the principles of developing AI to interact with other AIs and humans ethically and function ethically in society”. Likewise, because there is no universal set of AI ethics principles that the whole world follows, we adopted the definitions of AI ethical principles outlined in Australia’s AI Ethics Principles list (Footnote 1). Different countries and organisations have their own distinct AI ethical principles. For example, the European Commission has defined its own guidelines for trustworthy AI (Commission 2019), the United States Department of Defense has adopted five principles of AI ethics (Defense 2020), and the Organisation for Economic Co-operation and Development (OECD) has defined its AI principles to promote the use of ethical AI (OECD 2019). Australia’s AI Ethics Principles address a broad spectrum of ethical concerns, from human to environmental well-being. They encompass widely recognised ethical principles such as fairness, privacy, and transparency, along with less common but crucial concepts such as contestability and accountability.
The definitions of the terms used in this study are provided in Appendix C.

The consideration of ethics in AI covers the development process as well as the resulting product (Footnote 2). Incorporating ethical considerations into the development of AI products is important to ensure that the end product is ethically, socially, and legally responsible (Obermeyer and Emanuel 2016). The importance of ethical considerations in AI is highlighted by recent incidents that demonstrate their impact (Bostrom and Yudkowsky 2018). For example, GitHub was criticised for using unlicensed source code as training data for its AI product, which disappointed many software developers (Al-Kaswan and Izadi 2023). There have also been cases of racial and gender bias in AI systems, such as facial recognition algorithms that performed better on white men and worse on black women, raising issues of accountability and bias (Buolamwini and Gebru 2018). Additionally, in 2018, Amazon had to halt the use of its AI-powered recruitment tool due to gender bias (Dastin 2018), and in 2020, a Dutch court halted the use of System Risk Indication (SyRI), a secret algorithm for detecting possible social welfare fraud, because it lacked transparency towards citizens about what it did with their personal information (SyR 2020). In each of these examples, ethical problems may have arisen during the development process, giving rise to ethical concerns about the resulting product. These incidents emphasise the importance of ethical considerations in AI development.

We were motivated to study ethics in AI by various case studies and by the importance of the topic. Despite the existence of ethical principles, guidelines, and company policies, implementing these principles is ultimately up to AI practitioners. We therefore decided to conduct a review study of existing research on ethics in AI. Specifically, we wanted to explore the perspectives of those closest to it, the AI practitioners (Footnote 3), because they are in a unique position to bring about changes and improvements. The literature has also highlighted the need for review studies in AI ethics that capture practitioners’ perspectives (Khan et al. 2022; Leikas et al. 2019).

To understand practitioners’ views on AI ethics as presented in the literature, we conducted a grounded theory literature review (GTLR) following the five-step framework of define, search, select, analyse, and present proposed by Wolfswinkel et al. (2013). We first defined the overarching research question (RQ): What do we know from the literature about the AI practitioners’ views and experiences of ethics in AI? (Footnote 4) Our study aimed to find empirical studies that focused on capturing AI practitioners’ views and experiences of AI ethics and ethical principles, and of their implementation in developing AI-based systems. We then used the GTLR protocol to search for and select primary research articles (Footnote 5) that include practitioners’ views on AI ethics. To analyse the selected studies, we applied socio-technical grounded theory (STGT) data analysis procedures (Hoda 2021), such as open coding, targeted coding, constant comparison, and memoing, iteratively on the 38 primary empirical studies. Wolfswinkel et al. (2013) welcome adaptations to their framework, acknowledging that “... one size does not fit all, and there should be no hesitation whatsoever to deviate from our proposed steps, as long as such variation is well motivated.” Since little concrete guidance was available on how to perform in-depth analysis and develop theory from literature as a data source, we made some adaptations, as explained in the methodology section (Section 3).

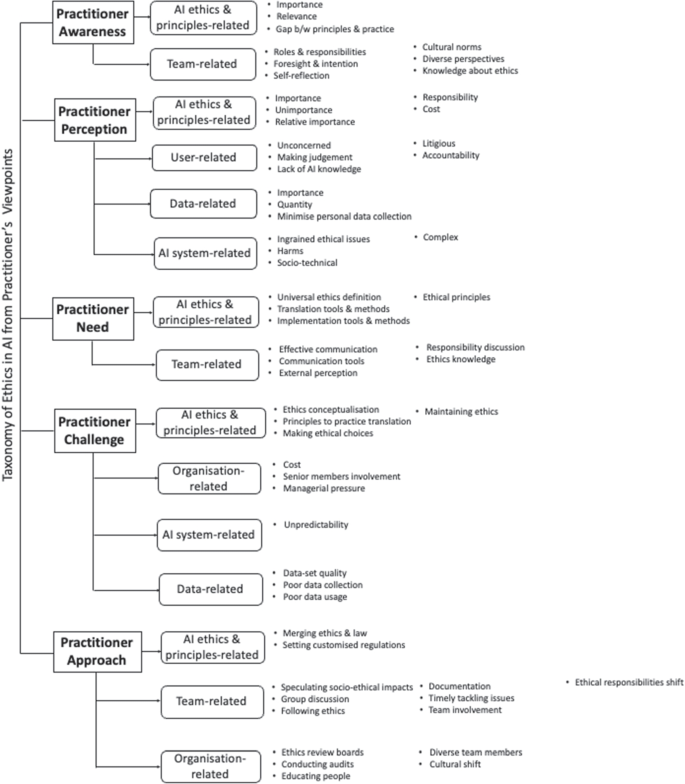

Based on our analysis, we present a taxonomy of ethics in AI from practitioners’ viewpoints spanning five categories: (i) practitioner awareness, (ii) practitioner perception, (iii) practitioner need, (iv) practitioner challenge, and (v) practitioner approach. The taxonomy is captured in Figs. 4 and 5 and described in depth in Sections 5 and 6.1. The main contributions of this paper are:

- A collection of information gathered from the literature on AI practitioners’ views and experiences of ethics in AI;

- A taxonomy of ethics in AI from practitioners’ viewpoints comprising five categories: their awareness, perception, need, challenge, and approach related to ethics in AI;

- An example of the application of grounded theory literature review (GTLR) in software engineering;

- Guidance for practitioners who need a better understanding of the requirements and factors affecting ethics implementation in AI; and

- A set of recommendations for future research on ethics implementation in AI from practitioners’ perspective.

The rest of the paper is structured as follows: Section 2 presents background on ethics in Information and Communications Technology (ICT), software engineering, and AI, and Section 3 details the grounded theory literature review (GTLR) methodology. Section 4 discusses the challenges, threats, and limitations of the methodology, and Section 5 presents the findings, followed by the description of the taxonomy, insights, and recommendations in Section 6. Section 7 presents the methodological lessons learned, and Section 8 concludes the paper.

2 Background

2.1 Ethics in ICT and Software Engineering

Ethics has long been a well-researched and widely discussed topic in the field of ICT. Over the years, various IT professional organisations worldwide, such as the Association for Computing Machinery (ACM) (Footnote 6), the Institute for Certification of Computing Professionals (ICCP) (Footnote 7), and the Association of Information Technology Professionals (AITP) (Footnote 8), have developed their own codes of ethics (Payne and Landry 2006). These codes of ethics in the ICT domain are intended to motivate and guide the ethical behavior of all computer professionals. As defined by the ACM, this includes those currently working in the field, those who aspire to do so, teachers, students, influencers, and anyone who makes significant use of computer technology.

In 1991, Gotterbarn (1991) expressed concern about the insufficient emphasis on professional ethics in guiding the daily activities of computing professionals in their respective roles. He subsequently engaged in various initiatives advocating ethical codes and fostering a sense of professional responsibility in the field. Studies have also explored how these codes of ethics affect the decision-making of ICT professionals. Professional ethics can significantly aid ICT professionals in their decision-making, as evidenced by Allen et al. (2011), and these codes have been observed to influence the conduct of ICT professionals (Harrington 1996). In 2010, Van den Bergh and Deschoolmeester (2010) surveyed 276 ICT professionals to explore the potential value of ethical codes of conduct for the ICT industry in dealing with contentious issues. They concluded that, in some cases, having an ICT ethics policy does significantly influence how professionals assess ethical or unethical situations. Fleischmann et al. (2017) conducted a mixed-method study with ICT professionals on the role of codes of ethics and the relationship between professionals’ experiences of and attitudes towards these codes.

Likewise, studies have investigated the impact of ethics in Software Engineering. Rashid et al. (2009) concluded that ethics has been a very important part of software engineering and discussed the ethical challenges faced by software engineers who design systems for the digital world. Aydemir and Dalpiaz (2018) introduced an analytical framework to help stakeholders, including users and developers, capture and analyse ethical requirements to foster ethical alignment within software artifacts and development processes. In a similar vein, according to Pierce and Henry (1996), personal ethical principles, workplace ethics, and adherence to formal codes of conduct all play a significant role in influencing the ethical conduct of software professionals; they also examine the extent of influence exerted by each of these three factors. On a related note, Hall (2009) examines ethical conduct in the context of software engineers, emphasising the importance of good professional ethics. Furthermore, Fraga (2022) surveyed software engineering professionals to explore the role of ethics in their field. The findings suggest that ethical leadership among systems engineers can be promoted when they adhere to established standards, codes, and ethical principles. These studies of ethics in ICT and Software Engineering indicate that the subject has long been of significant importance and that there has been a prolonged effort to improve ethical considerations in these fields.

In summary, there is a recognised need for a stronger focus on professional ethics in guiding the daily activities of computing professionals. Multiple studies consistently demonstrate the substantial influence of ethical codes on decision-making in the ICT sector and Software Engineering, shaping behavior and ethical assessments. The collective findings underscore the importance of ethical considerations in the fields of ICT and Software Engineering.

2.2 Secondary Studies on AI Ethics

Several secondary studies have investigated the ethical principles and guidelines related to AI. For example, Khan et al. (2022) conducted a Systematic Literature Review (SLR) to investigate the agreement on the significance of AI ethical principles and to identify potential challenges to their adoption. They found that the most common AI ethics principles are transparency, privacy, accountability, and fairness, and that significant challenges in incorporating ethics into AI include a lack of ethical knowledge and vague principles. Likewise, Ryan and Stahl (2020) conducted a review study to provide a comprehensive analysis of the normative consequences of current AI ethics guidelines, specifically targeting AI developers and organisational users. Lu et al. (2022) conducted an SLR to identify the responsible AI principles discussed in the existing literature and to uncover potential solutions for responsible AI. They also outlined a research roadmap for software engineering with a focus on responsible AI.

Review studies have also investigated the ethical concerns of using AI in different domains. Möllmann et al. (2021) conducted an SLR to explore which ethical considerations of AI are being investigated in digital health and classified the relevant literature based on five ethical principles of AI: beneficence, non-maleficence, autonomy, justice, and explicability. Likewise, Royakkers et al. (2018) conducted an SLR to explore the social and ethical issues that arise from digitization across six technologies: the Internet of Things, robotics, biometrics, persuasive technology, virtual and augmented reality, and digital platforms. The review uncovered recurring themes such as privacy, security, autonomy, justice, human dignity, control of technology, and the balance of powers.

Studies have also explored methods and approaches to enhance the ethical development of AI. For example, Wiese et al. (2023) conducted an SLR to explore methods for promoting and engaging in practice at the front end of ethical and responsible AI. The study was guided by an adaptation of the PRISMA framework and Hess and Fore’s (2017) methodological approach. Morley et al. (2020) conducted a review study to explore publicly accessible AI ethics tools, methods, and research for translating ethical principles into practice.

Most secondary studies have focused on investigating specific AI ethical principles, the ethical consequences of AI systems, or approaches to enhance the ethical development of AI. Conducting a review study to identify and analyse primary empirical research on AI practitioners’ perspectives on AI ethics is important for understanding the ethical landscape in the field of AI. Such a review can also inform practical interventions, contribute to policy development, and guide educational initiatives aimed at promoting responsible and ethical practices in the development and deployment of AI technologies.

2.3 Ethics in AI

There are numerous and divergent views on ethics in AI (Vakkuri et al. 2020b; Mittelstadt 2019; Hagendorff 2020), as AI has been increasingly applied in various contexts and industries (Kessing 2021). AI practitioners and researchers seem to have mixed perspectives on AI ethics. Some believe there is no rush to consider AI-related ethical issues, as AI is still a long way from being comparable to human capabilities and behaviors (Siau and Wang 2020), while others conclude that AI systems must be developed with ethics in mind because they can have an enormous societal impact (Bostrom and Yudkowsky 2018; Bryson and Winfield 2017). Although viewpoints vary from practitioner to practitioner, most agree that AI ethics is an emerging, widely discussed, and currently relevant real-world issue (Vainio-Pekka 2020). This indicates that while opinions on the importance of AI ethics may differ, there is a consensus that the subject is highly relevant in the present context.

Many studies in the area of ethics in AI have been conceptual and theoretical in nature (Seah and Findlay 2021). Critically, there is a large number of AI ethics guidelines, making it challenging for AI practitioners to decide which ones to follow. Unsurprisingly, studies have been conducted to analyse the ever-growing list of specific AI principles (Kelley 2021; Mark and Anya 2019; Siau and Wang 2020). For example, Jobin et al. (2019) reviewed 84 documents containing ethical AI principles and guidelines and concluded that only five AI ethical principles are mainly discussed and followed: transparency, fairness, non-maleficence, responsibility, and privacy. Fjeld et al. (2020) reviewed 36 AI principles documents and reported eight key themes of AI ethics: privacy, accountability, safety and security, transparency and explainability, fairness and non-discrimination, human control of technology, professional responsibility, and promotion of human values. Likewise, Hagendorff (2020) analysed and compared 22 AI ethics guidelines to examine their implementation in the research, development, and application of AI systems. Some review studies have explored the challenges and potential solutions in the area of ethics in AI (e.g., Jameel et al. 2020; Khan et al. 2022). The desire to set ethical guidelines in AI has intensified due to increased competition between organisations to develop robust AI tools (Vainio-Pekka 2020). Among these guidelines, only a few specify an oversight or enforcement mechanism (Inv 2019). Overall, recent research has dedicated significant attention to analysing and comparing various sets of ethical principles and guidelines for AI.

AI practitioners have also expressed various concerns about public policies and ethical guidelines related to AI. For example, while the ACM Code of Ethics places responsibilities on AI practitioners who create AI-based systems, one study revealed that these practitioners generally believe that only physical harm caused by AI systems is crucial and should be taken into account (Veale et al. 2018). In November 2021, the United Nations Educational, Scientific and Cultural Organization (UNESCO) signed a historic agreement outlining the shared values needed to ensure the development of Responsible AI (UN 2021). Varanasi and Goyal (2023) interviewed 23 AI practitioners from 10 organisations to investigate the challenges they encounter when collaborating on the Responsible AI (RAI) principles defined by UNESCO. The findings revealed that practitioners felt overwhelmed by the responsibility of adhering to specific RAI principles (non-maleficence, trustworthiness, privacy, equity, transparency, and explainability), leading to an uneven distribution of their workload. Moreover, implementing certain RAI principles (accuracy, diversity, fairness, privacy, and interoperability) in real-world scenarios proved difficult due to conflicts with personal and team values. Similarly, Rothenberger et al. (2019) conducted an empirical study with AI experts to evaluate several AI ethics guidelines, including Microsoft’s AI Ethical Principles. The participants considered ‘Responsibility’ to be the foremost ethical principle in AI and ranked ‘Privacy protection’ a close second.
This emphasises the perspective of these AI experts, who consider prioritising responsible AI practices and safeguarding user privacy to be fundamental to the ethical advancement and implementation of AI, while not regarding other principles as equally crucial. Likewise, Sanderson et al. (2023) carried out an empirical investigation with AI practitioners and designers to assess the Australian Government’s high-level AI principles and to investigate how these ethical guidelines were understood and applied in their professional contexts. The results indicated that implementing certain AI ethical principles, such as those related to ‘Privacy and security’, ‘Transparency’ and ‘Explainability’, and ‘Accuracy’, posed significant challenges. Overall, these studies explore the relationship between AI practitioners and the guidelines established by public organisations, as well as practitioners’ sentiments towards each guideline.

Another prominent area of focus has been the gap between research and practice in the field of ethics in AI. Smith et al. (2020) conducted a review study to identify gaps in ethics research and practice in ethical data-driven software development and highlighted how ethics can be integrated into the development of modern software. Similarly, Shneiderman (2020) provided 15 recommendations to bridge the gap between the ethical principles of AI and practical steps for ethical governance. There are also solution-based papers discussing models, frameworks, and methods that help AI developers implement AI ethics. For example, Vakkuri et al. (2021) present an AI maturity model for AI software, and Vakkuri et al. (2020a) discuss the ECCOLA method for implementing ethically aligned AI systems. Other papers present a toolkit to address fairness in ML algorithms (Castelnovo et al. 2020) and a transparency model for designing transparent AI systems (Felzmann et al. 2020). Overall, recent studies have centred on addressing the gap between research and practical application in AI ethics, including the development of various tools and methods aimed at improving the ethical implementation of AI.

Overall, existing studies seem to focus primarily on analysing the plethora of ethical AI principles, filling the gap between research and practice, or discussing toolkits and methods. However, compared to the number of papers describing ethical guidelines, principles, tools, and methods, there is a relative lack of studies on AI practitioners’ views and experiences of AI ethics (Vakkuri et al. 2020b). Furthermore, the literature underscores the need for review studies that evaluate and synthesise existing primary research on AI practitioners’ views and experiences of AI ethics (Khan et al. 2022; Leikas et al. 2019). To assimilate, analyse, and present the empirical evidence spread across the literature, we conducted a Grounded Theory Literature Review (GTLR) of AI practitioners’ viewpoints on ethics in AI, with some adaptations to the original framework, drawing data from papers whose prime focus may not have been practitioners’ viewpoints but that nonetheless contained information about them.

3 Review Methodology

Although the importance of understanding AI practitioners’ viewpoints on ethics in AI has been highlighted (Vakkuri et al. 2020b), there are not enough dedicated research articles on the topic to effectively conduct a systematic literature review or mapping study. Few papers focus on AI practitioners’ views on ethics in AI in a way that is apparent from their title and abstract. Papers that include such views as part of their findings are difficult to identify and select without a full read-through, which is ineffective and impractical when dealing with thousands of papers. At the same time, we were aware of a more responsive yet systematic method for reviewing the literature, called grounded theory literature review (GTLR), introduced by Wolfswinkel et al. (2013). Grounded theory (GT) is a popular research method that offers a pragmatic and adaptable approach to interpreting complex social phenomena (Charmaz 2000). It provides a robust intellectual rationale for employing qualitative research to develop theoretical analyses (Goulding 1998). In GT, researchers do not start with preconceived hypotheses or theories to validate or invalidate. Instead, they begin by gathering data in context, analysing it simultaneously, and subsequently formulating hypotheses (Strauss and Corbin 1990). This method suits our study because our research topic incorporates socio-technical aspects and we chose not to start with a predetermined hypothesis. Instead, our approach centred on examining AI practitioners’ viewpoints on AI ethics as reported in the existing literature.

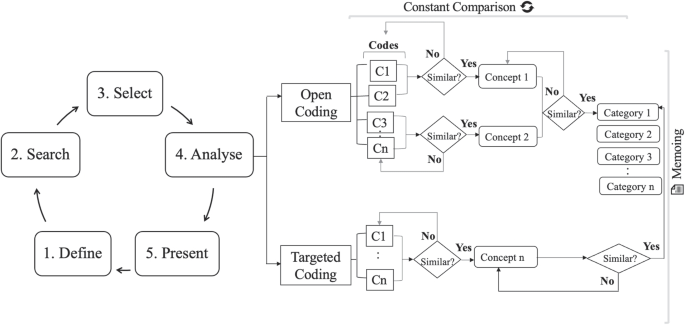

While the overarching GTLR framework helped frame the review process, we found ourselves having to work through the concrete application details using the practices of socio-technical grounded theory (STGT). In doing so, we made some adaptations to the five-step framework of define, search, select, analyse, and present described in the original GTLR guidelines by Wolfswinkel et al. (2013), and applied STGT’s concrete data analysis steps (Hoda 2021). Figure 1 presents an overview of the GTLR steps, using the STGT method for data analysis, as applied in this study. Table 1 compares the GTLR as we applied it with a traditional Systematic Literature Review (SLR) (Kitchenham et al. 2009).

Steps of the Grounded Theory Literature Review (GTLR) method with Socio-Technical Grounded Theory (STGT) for data analysis

3.1 Define

The first step of the grounded theory literature review (GTLR) is to formulate the initial review protocol: determining the scope of the study by defining inclusion and exclusion criteria and search items, and then finalising the databases and search strings, with the aim of obtaining as many relevant primary empirical studies as possible. Being empirical was one of the inclusion criteria of our study (Table 3). By ‘empirical papers’, we refer to papers that draw information directly from primary sources, such as interview studies and survey studies (that is, studies that use surveys to gather participants’ perspectives on a specific subject, not literature surveys). The research question (RQ) formulated was: What do we know from the literature about AI practitioners’ views and experiences of ethics in AI?

3.1.1 Sources

Four popular digital databases, namely ACM Digital Library (ACM DL), IEEE Xplore (IEEEX), SpringerLink, and Wiley Online Library (Wiley OL), were used as sources to identify the relevant literature. This choice was driven by the interdisciplinary nature of the topic, ‘ethics in AI’. Given the rapid expansion of literature on AI ethics in recent years, researchers have been publishing their work in many different venues. We were interested in how AI practitioners perceive AI ethics, and this perspective was particularly prominent in Software Engineering and Information Systems venues. These databases have also been regularly used for reviews on human aspects of software engineering, for example, Hidellaarachchi et al. (2021) and Perera et al. (2020). Initially, we searched only for studies published in journals and conference proceedings for which full texts were available.

3.1.2 Search Strings

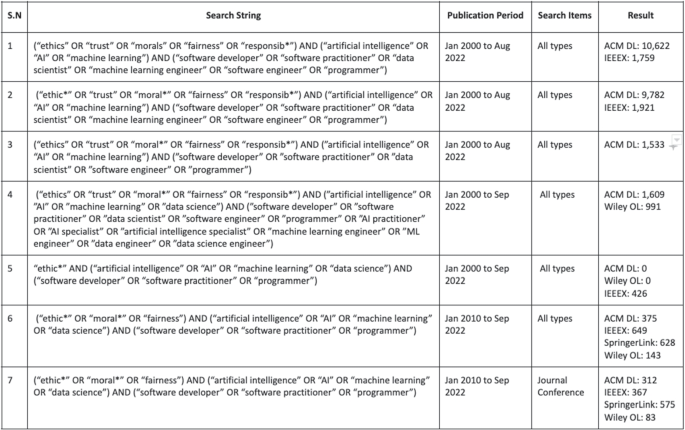

We began developing search queries by selecting key terms related to our research topic. Our initial set of key terms included “ethics”, “AI”, and “developer”, in line with the primary objective of our study: to investigate the perspectives of AI practitioners on ethics in AI. We then expanded the search by adding synonyms for these key terms so as to retrieve relevant primary studies more comprehensively. To construct the search string, we linked these terms with the Boolean operators ‘AND’ and ‘OR’. However, using the terms “ethics”, “AI”, and “developer”, along with their synonyms, returned a large number of papers that proved impractical to review, as illustrated in Appendix B. To reduce the number of papers to a manageable level, we used the term “ethic*” along with synonyms for “AI” and “developer”. Unfortunately, this approach yielded no results in some databases, as detailed in Appendix B. We therefore had to develop a search query that would return a reasonable number of relevant primary studies.
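The AND/OR construction described above can be sketched in a few lines. This is a minimal illustration, assuming each concept is represented as a list of synonyms; the term lists are those of the final search string reported in Section 4.2, while the helper name `build_query` is hypothetical. Real databases differ in quoting, wildcard, and field syntax, so the generated string would still need adapting to ACM DL, IEEE Xplore, SpringerLink, and Wiley OL individually.

```python
def build_query(concept_groups: list[list[str]]) -> str:
    """Join synonyms within a concept with OR, then join concepts with AND."""
    clauses = []
    for synonyms in concept_groups:
        # Quote every term so multi-word phrases are searched as phrases.
        quoted = " OR ".join(f'"{term}"' for term in synonyms)
        clauses.append(f"({quoted})")
    return " AND ".join(clauses)


# Term groups of the final search string (as reported in Section 4.2).
ETHICS_TERMS = ["ethic*", "moral*", "fairness"]
AI_TERMS = ["artificial intelligence", "AI", "machine learning", "data science"]
PRACTITIONER_TERMS = ["software developer", "software practitioner", "programmer"]

if __name__ == "__main__":
    print(build_query([ETHICS_TERMS, AI_TERMS, PRACTITIONER_TERMS]))
```

Keeping each concept as a separate synonym list makes the iterative refinement described above cheap: adding or dropping a synonym (for example, “data scientist”) changes one list rather than a hand-edited string.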

Six candidate search strings were developed and executed on the databases before one was finalised. Table 2 shows the initial and final search strings. As the finalised search string returned an extremely large number of primary studies (N=9,899), we restricted the publication period to January 2010 to September 2022 in all four databases, as the topic of ethics in AI has gained rapid prominence over the last decade. Table 3 shows the seed and final review protocols, including the inclusion and exclusion criteria (Wolfswinkel et al. 2013).

We performed the search using our seed review protocol, presented in Table 3. The search process was iterative and time-consuming because some combinations of search strings returned too many papers to go through, whereas others returned very few studies. Appendix B documents the search process, from the first search string through its revisions to the final search string.

We obtained a total of 1,337 primary articles (ACM DL: 312, IEEEX: 367, SpringerLink: 575, and Wiley OL: 83) using the final search string (Table 2) and the seed review protocol (Table 3). After removing duplicates, 1,073 articles remained. Following the GTLR guidelines of Wolfswinkel et al. (2013), the next step was to refine the whole sample based on title and abstract. To gauge how many articles were relevant to our research question, we first tried this approach on the first 200 articles returned by each of ACM DL, IEEEX, and SpringerLink and on all 83 articles from Wiley OL, 683 articles in total. We read the abstracts of the articles whose titles seemed relevant to our research topic and applied the inclusion and exclusion criteria to select the relevant articles. We quickly realised that selection based on title and abstract was not working well, because the key search terms (for example, “ethics” AND “AI” AND “developer”) appeared commonly and did not imply that a paper would include practitioners’ perspectives on ethics in AI. We found ourselves having to scan full texts to judge relevance to our research question (RQ). Despite the effort involved, the return on investment was very low: for every hundred papers read, we found only one or two relevant papers, that is, papers that included AI practitioners’ views on ethics in AI.
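The counts reported above can be cross-checked with simple arithmetic. The sketch below uses only figures stated in the text (the per-database hits, the 1,073 articles left after de-duplication, and the 13 relevant articles reported for the 683-paper trial sample); the variable names are illustrative. It shows that the 683 papers read in full are exactly the first 200 results from each of three databases plus all Wiley OL results, and that the yield of roughly 1.9 relevant papers per hundred matches the “one or two per hundred” observation.

```python
# Figures as reported in the text; variable names are illustrative.
hits = {"ACM DL": 312, "IEEEX": 367, "SpringerLink": 575, "Wiley OL": 83}
after_dedup = 1073   # articles left after removing duplicates
relevant = 13        # relevant articles found in the trial sample

total_hits = sum(hits.values())                # raw hits across databases
duplicates_removed = total_hits - after_dedup  # duplicates filtered out

# Trial sample: first 200 results from each of three databases,
# plus every Wiley OL result.
trial_sample = 3 * 200 + hits["Wiley OL"]

# Relevant papers per hundred papers read in full.
yield_per_hundred = 100 * relevant / trial_sample

print(total_hits, duplicates_removed, trial_sample, round(yield_per_hundred, 1))
# 1337 264 683 1.9
```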

Out of the 683 papers, only 13 primary articles were relevant to our research topic. Many articles, albeit interesting, did not present AI practitioners’ views on ethics in AI. We therefore decided to find more relevant articles through snowballing. “Snowballing refers to using the reference list of a paper or the citations to the paper to identify additional papers” (Wohlin 2014). We snowballed the 13 articles via forward and backward citations to find more relevant articles and improve the quality of the review. Snowballing worked better for us than the traditional search approach. We modified the seed review protocol accordingly to include papers from other databases and papers published outside journals and conferences, including student theses, reports, and research papers uploaded to arXiv. The final review protocol used in this study is presented in Table 3. In this way, we obtained 25 more relevant articles through snowballing, bringing the total number of primary articles to 38.
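Forward and backward snowballing can be viewed as an iterative traversal of a citation graph: start from the seed set, examine each paper’s reference list (backward) and citing papers (forward), and keep going from every newly included paper until an iteration adds nothing new. The sketch below is a minimal illustration of that idea under stated assumptions: citation data are held in two hypothetical in-memory dictionaries (`references` and `cited_by`), and the `is_relevant` callback stands in for the manual full-text judgment against the review protocol, which in this study was made by the researchers, not by code.

```python
from collections import deque
from typing import Callable


def snowball(
    seeds: set[str],
    references: dict[str, list[str]],    # paper -> papers it cites (backward)
    cited_by: dict[str, list[str]],      # paper -> papers citing it (forward)
    is_relevant: Callable[[str], bool],  # stand-in for manual screening
) -> set[str]:
    """Return the seeds plus every relevant paper reachable by snowballing."""
    included = set(seeds)
    examined = set(seeds)
    queue = deque(seeds)
    while queue:
        paper = queue.popleft()
        # Backward (reference list) and forward (citing papers) candidates.
        candidates = references.get(paper, []) + cited_by.get(paper, [])
        for candidate in candidates:
            if candidate in examined:
                continue  # each paper is screened only once
            examined.add(candidate)
            if is_relevant(candidate):
                included.add(candidate)
                queue.append(candidate)  # included papers are snowballed too
    return included


if __name__ == "__main__":
    # Hypothetical toy citation graph and relevance judgments.
    refs = {"S1": ["P1", "P2"], "P1": ["P3"]}
    cites = {"S1": ["P4"], "P4": ["P5"]}
    relevant = {"P1", "P3", "P4"}
    print(sorted(snowball({"S1"}, refs, cites, lambda p: p in relevant)))
    # ['P1', 'P3', 'P4', 'S1']
```

Only relevant papers are expanded further, which mirrors the idea of theoretical sampling: irrelevant candidates such as `P2` and `P5` are screened once and then dropped.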

We note here that the select step of scanning the full contents of 683 articles was very tedious and had a very low return on investment, yielding only 13 relevant studies. In hindsight, we would have done better to start with a set of seed papers known collectively to the research team or obtained from quick searches on Google Scholar. What we did next, proceeding from the seed papers through cycles of snowballing, was more practical, more productive, and in line with the iterative Grounded Theory (GT) approach as a form of applied theoretical sampling.

3.4 Analyse

Our review topic and domain lent themselves well to the socio-technical research context supported by socio-technical grounded theory (STGT): our domain was AI, the actors were AI practitioners, the research team was collectively well versed in qualitative research and the AI domain, and the data were collected from relevant sources (Hoda 2021). We applied open coding, constant comparison, and memoing in the basic stage, and targeted data collection and analysis and theoretical structuring in the advanced stage of theory development, using the emergent mode.

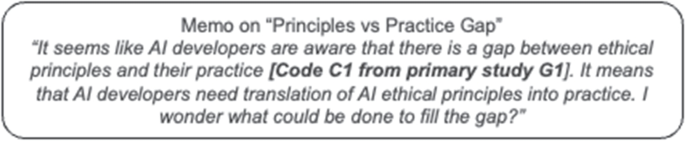

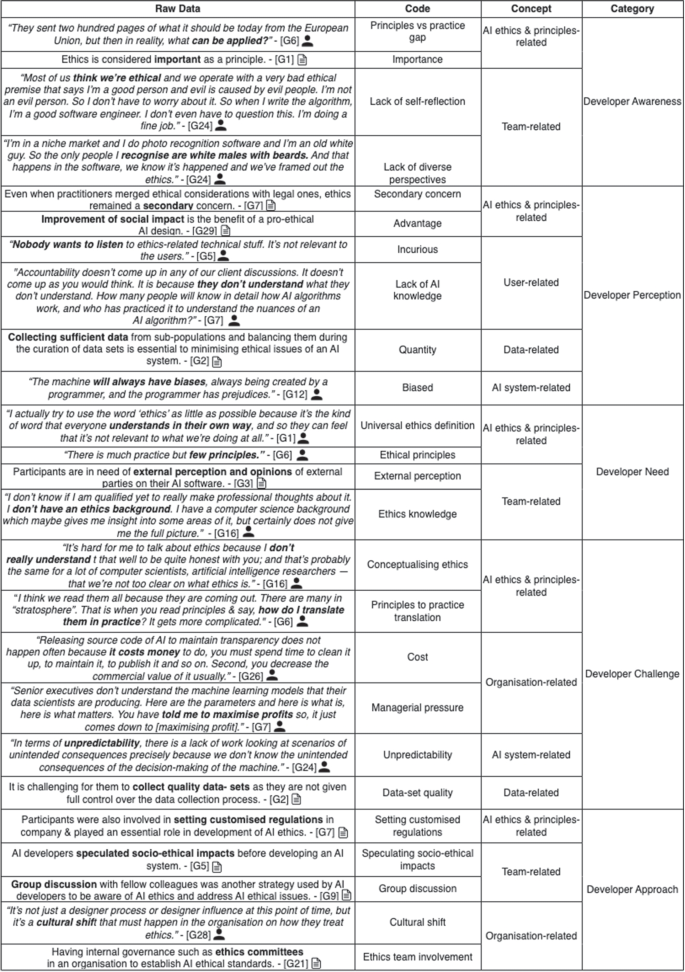

The qualitative data included the findings reported in the primary studies, including excerpts of the raw empirical data contained in the papers. Data were analysed iteratively in small batches. We first analysed the qualitative data of the 13 articles obtained in the initial phase, using the standard socio-technical grounded theory (STGT) data analysis techniques of open coding, constant comparison, and memoing. We then applied advanced techniques such as targeted coding to the remaining 25 articles, followed by theoretical structuring. This rigorous approach to data analysis helped us obtain multidimensional results that were original, relevant, and dense, as evidenced by the depth of the categories and underlying concepts presented in Section 5. The STGT data analysis techniques are explained in the following subsections. We also gained a layered understanding and reflections through reflective practices such as memo writing (Fig. 2), which are presented in Section 6.

3.4.1 The Basic Stage

We performed open coding to generate codes from the qualitative data of the initial set of 13 articles. Open coding was done line by line on the ‘Findings’ sections of the included articles to ensure we did not miss any information or insights related to our research question (RQ). The amount of qualitative data varied from article to article: some articles had long, in-depth ‘Findings’ sections, whereas others had short ones. Open coding of some articles was time-consuming and produced hundreds of codes, whereas only a limited number of codes were generated for others (Fig. 3).

Similar codes were grouped into concepts, and similar concepts into categories, using constant comparison. Examples of applying Socio-Technical Grounded Theory (STGT)’s data analysis techniques to generate codes, concepts, and categories are shown in Fig. 3, and a number of quotations from the original papers are included in Section 5 to provide “strength of evidence” (Hoda 2021). The process of developing concepts and categories was iterative: as we read more papers, we refined the emerging concepts and categories based on the new insights obtained. The first author began coding in Google Docs and later moved to a Google spreadsheet as the number of codes and concepts grew. The second author then independently reviewed the codes and concepts generated by the first author, and the resulting feedback and revisions were discussed in detail in meetings involving all the authors. In short, the first author performed the coding, the second author gave feedback on the codes, concepts, and categories, and the remaining two authors helped refine the findings through critical questioning and feedback.

Each code was numbered (C1, C2, C3, ...) and labeled with the ID of the paper it came from (G1, G2, G3, ...), so that every code could be traced back to, and later understood in, its original context.
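The numbering scheme above (codes C1, C2, ... tagged with paper IDs G1, G2, ...) and the grouping of codes into concepts and of concepts into categories can be represented with a small data model. The sketch below is illustrative only: the study itself used Google Docs and a Google spreadsheet, not code, and the class names, the `trace` helper, the second code label, the paper IDs assigned to codes, and the placement of the codes under this particular concept are all hypothetical. The code label “principles vs practice gap” is the one shown in the Fig. 2 memo caption.

```python
from dataclasses import dataclass, field


@dataclass
class Code:
    code_id: str   # running code number, e.g. "C1"
    paper_id: str  # ID of the source paper, e.g. "G1"
    label: str     # short description of the coded excerpt


@dataclass
class Concept:
    name: str
    codes: list[Code] = field(default_factory=list)


@dataclass
class Category:
    name: str
    concepts: list[Concept] = field(default_factory=list)


def trace(category: Category) -> dict[str, list[str]]:
    """Map each concept in a category to the sorted IDs of its source papers."""
    return {
        concept.name: sorted({code.paper_id for code in concept.codes})
        for concept in category.concepts
    }


if __name__ == "__main__":
    # Hypothetical example: paper IDs and the second code are illustrative.
    c1 = Code("C1", "G1", "principles vs practice gap")
    c2 = Code("C2", "G4", "guidelines hard to apply in practice")
    awareness = Category(
        "Practitioner Awareness",
        [Concept("AI ethics & principles-related awareness", [c1, c2])],
    )
    print(trace(awareness))
```

Storing the paper ID on every code, rather than only on concepts, is what keeps the trace intact when codes from different papers are merged into one concept during constant comparison.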

While the open coding led to valuable results in the form of codes, concepts, and categories, memoing helped us reflect on the insights related to the most prominent codes, concepts, and emerging categories. We also wrote reflective memos to document our reflections on the process of performing a grounded theory literature review (GTLR). These insights and reflections are presented in Section 6 . An example of a memo created for this study is presented in Fig. 2 .

Example of a memo arising from the code (“principles vs practice gap”) labeled [C1]

3.4.2 The Advanced Stage

The codes and concepts generated from open coding in the basic stage led to the emergence of five categories: practitioner awareness, practitioner perception, practitioner need, practitioner challenge, and practitioner approach to AI ethics, each with a different level of detail and depth. Once these categories had emerged, we identified new papers using forward and backward snowballing in the advanced stage of theory development. Since our topic was rather broad to begin with and some key categories of varying strength had been identified, an emergent mode of theory development seemed appropriate for the advanced stage (Hoda 2021).

We proceeded to iteratively perform targeted data collection and analysis on more papers. Targeted coding involves generating codes that are relevant to the concepts and categories emerging from the basic stage (Hoda 2021 ). Reflections captured through memoing and snowballing served as an application of theoretical sampling when dealing with published literature, similar to how it is applied in primary socio-technical grounded theory (STGT) studies.

Instead of coding line by line, we performed targeted coding on chunks of two to three sentences or short paragraphs that seemed relevant to our emerging findings, and continued with constant comparison. This process was much faster than open coding. Codes developed through targeted coding were placed under relevant concepts, and new concepts were aligned with existing categories in the same Google spreadsheet. In this stage, our memos became more advanced in that they helped identify relationships between the concepts and develop a taxonomy. We continued targeted data collection and analysis until all 38 selected articles had been analysed. Finally, we applied theoretical structuring, which involved comparing our findings against common theory templates to see whether any fit naturally. In doing so, we realised that the five categories together describe the main facets of how AI practitioners view ethics in AI, forming a multi-faceted taxonomy similar to that of Madampe et al. (2021).

3.5 Present

As the final step of the grounded theory literature review (GTLR) method, we present the findings of our review: the five key categories that together form the multi-faceted taxonomy, with their underlying concepts and codes. We developed a taxonomy rather than a theory in line with the principles of Wolfswinkel et al. (2013) for conducting a GTLR, whose key idea is that researchers should use the knowledge gained through analysis to decide how best to structure and present their findings so that they communicate their insights effectively. Similarly, the Socio-Technical Grounded Theory (STGT) method (Hoda 2021), which we used to analyse our data, recommends: “STGT suggests that researchers should engage in theoretical structuring by identifying the type of theories that align best with their data, such as process, taxonomy, degree, or strategies (Glaser 1978).” We therefore chose to create a taxonomy, as it was the structure best suited to the data we collected.

This is followed by a discussion of the findings and recommendations. In presenting the findings, we also make use of visualisations (see Figs. 4 and 5 ) (Wolfswinkel et al. 2013 ).

4 Challenges, Threats and Limitations

We now discuss some of the challenges, threats, and limitations of the Grounded Theory Literature Review (GTLR) method in our study.

4.1 Grounded Theory Literature Review (GTLR) Nature

Unlike a Systematic Literature Review (SLR), a Grounded Theory Literature Review (GTLR) does not aim for completeness. Rather, it focuses on capturing the ‘lay of the land’ by identifying the key aspects of the topic and presenting rich explanations and nuanced insights. As such, while the process of a GTLR can be replicated, its results, namely the descriptive findings, are not easily reproducible. Accordingly, our study does not aim to be exhaustive. Our literature sample was selected with care, and although it is not all-encompassing, we have taken steps to assess its representativeness. However, because we used theoretical sampling instead of a representative sampling approach, our sample might not be as representative as that of an SLR, which is one of the limitations of our study.

4.2 Search Items and Strategies

Our search and selection steps for identifying the seed papers and subsequent snowballing may have missed some relevant papers. This threat depends on the keywords selected for the study and on the limitations of the search engines. To minimise it, we developed the search strings iteratively. Initially, we chose the key terms from our research title and added their synonyms to develop the final search string, which returned the most relevant studies. For example, we included “fairness” in our final search string because using only the term “ethics” returned zero articles in two databases (ACM DL and Wiley OL). The documentation of the search process is presented in Appendix B. However, we included only “fairness”, and not related terms such as “explainability” and “interpretability”, in our final search string. We may therefore have missed papers that explore AI practitioners’ views on explainability and interpretability, which is a limitation of our study.

The final search terms (“ethic*” OR “moral*” OR “fairness”) AND (“artificial intelligence” OR “AI” OR “machine learning” OR “data science”) AND (“software developer” OR “software practitioner” OR “programmer”) that we used in our study seem to be biased towards engineering/computer science publication outputs. This represents one of the limitations of our research since publications related to understanding AI practitioners’ perspectives on ‘ethics in AI’ may not exclusively reside within technical publications but may also extend to disciplines within the social sciences and humanities. Our use of these search terms, which are inclined towards outputs in engineering and computer science, might have led to the omission of relevant publications from social science and humanities domains.

In our final search query, we opted for the term “software developer”. Given the iterative nature of our keyword design process, we had previously experimented with keywords such as “data scientist”, in combination with terms like “AI practitioner” and “machine learning engineer”, to ensure that we did not inadvertently miss relevant papers. Unfortunately, this returned an overwhelming number of papers. We therefore reduced the number of keywords and used only the terms “software developer”, “software practitioner”, and “programmer” to obtain a more manageable set of papers. However, we acknowledge that omitting the term “data scientist” from the search query may have caused us to miss some relevant papers, which is a limitation of our study.

The main objective of our study was to explore empirical studies that focused on understanding AI practitioners’ views and experiences of ethics in AI. We focused on people involved in the technical development of AI systems, not managers, which is a limitation of our study; future studies could include managers, or separate reviews could examine their perspectives on AI ethics. Likewise, we focused on studies published in the Software Engineering and Information Systems domains. We acknowledge that AI practitioners’ perspectives on AI ethics might have been studied extensively in the social sciences and humanities, areas we did not explore, which is another limitation of our study. Future research can encompass studies from these domains.

4.3 Review Protocol Modification

We decided to include only research-based articles in our grounded theory literature review (GTLR) study; future GTLR studies could include literature from non-academic sources, as multi-vocal literature reviews (MLRs) do. Since there is a lack of theories, frameworks, and theoretical models on this topic, we wanted to conduct a rigorous review study that presents multidimensional findings and develops theoretical foundations for this critical and emerging topic. Finding enough empirical articles related to the research topic was another challenge. To overcome it, we made some adaptations to the original GTLR framework proposed by Wolfswinkel et al. (2013): we relaxed the review protocol during snowballing and included studies published in venues other than journals and conferences. We also used studies uploaded to arXiv as seed papers because there were not enough peer-reviewed publications relevant to our research topic. arXiv is a useful resource for finding the latest research on emerging topics, and the quality of the work can be reasonably assessed from the draft. The growing impact of open sources such as arXiv is evidenced by the increase in direct citations to arXiv in Scopus-indexed scholarly publications from 2000 to 2013 (Li et al. 2015).

Taxonomy of ethics in AI from practitioners’ viewpoints

4.4 Time Constraints

We applied the socio-technical grounded theory approach (Hoda 2021) to analyse the qualitative data of the primary studies and focused on the ‘Findings’ sections of studies that presented empirical evidence. We did not find information on the tools, software, frameworks, or models used by AI practitioners to implement ethics in AI, although one study mentioned the existence of various tools without providing details [G10]. Since we followed a broad and inductive approach, we were not specifically looking for information on tools. This lack of information was surprising, and future reviews and studies could investigate the use of tools in implementing AI ethics.

As explained above, five key categories emerged from the analysis: (i) practitioner awareness, (ii) practitioner perception, (iii) practitioner need, (iv) practitioner challenge, and (v) practitioner approach. Together, they form a taxonomy of ethics in AI from practitioners’ viewpoints, shown with the underlying codes and concepts in Fig. 4, and represent the key aspects AI practitioners have been concerned with when considering ethics in AI. We describe each of the five key categories and their underlying codes and concepts, and share quotes from the included primary studies, attributing them to paper IDs G1 to G38. The list of included studies is presented in Appendix A.

5.1 Practitioner Awareness

The first key category, or facet of the taxonomy, that emerged is Practitioner Awareness. This category emerged from two underlying concepts: AI ethics & principles-related awareness and team-related awareness.

5.1.1 AI Ethics & Principles-Related Awareness

5.1.2 Team-Related Awareness

Participants in some studies acknowledged their awareness of their roles and responsibilities in integrating ethics into AI during its development. For instance, a participant from the study [G7] highlighted being aware of their roles and responsibilities in implementing ethics during the development of AI systems. Similarly, a participant in another study [G23] expressed awareness of playing a pivotal role in shaping the ethics embedded in an AI system.

In one study [G25], a participant acknowledged their lack of knowledge about AI ethics. Similarly, participants in other studies, such as [G6] and [G8], also expressed awareness of their insufficient understanding of AI ethics and ethical principles.

5.1.3 Overall Summary

Few AI practitioners reported being aware of the concept of AI ethics, of ethical principles, and of their importance and relevance in AI development. Likewise, very few AI practitioners were aware of the gap between the ethical principles of AI and practice. Nevertheless, the awareness that was reported is a positive sign, as awareness of ethics is the first step toward implementing ethical practices in AI development.

Similarly, some AI practitioners reported their understanding of the roles and responsibilities involved in the development of ethical AI systems. However, the primary focus of the majority of AI practitioners who participated in some studies was on recognising their own limitations that could result in the development of unethical AI systems. These limitations encompassed a lack of foresight and intention, insufficient self-reflection, limited knowledge of ethics, and a lack of awareness regarding cultural norms. In summary, this suggests that AI practitioners who participated in those studies engaged in significant introspection to comprehend the reasons behind the development of unethical AI systems. This introspective approach is positive because self-reflection can play a crucial role in identifying personal shortcomings and finding ways to address them.

5.2 Practitioner Perception

The second category is Practitioner Perception, which emerged from four underlying concepts: AI ethics & principles-related perception, User-related perception, Data-related perception, and AI system-related perception.

The perception category goes beyond acknowledging the existence of something and captures practitioners’ views and opinions about it, including held notions and beliefs. For example, it includes shared perceptions about the relative importance of ethical principles in developing AI systems, who is considered accountable for applying and upholding them, and the perceived cost of implementing ethics in AI.

5.2.1 AI Ethics & Principles-Related Perception

Perceptions about the importance of ethics varied. Some AI practitioners who participated in studies such as [G1], [G29], and [G20] perceived ‘ethics’ as very important in developing AI systems. For example, in [G1], when participants were asked whether ethics is useful in AI, all of them answered “Yes”; however, this study had only six participants (N=6).

Developing responsible AI was seen as building positive relations between organisations and human beings by minimising inequality, improving well-being, and ensuring data protection and privacy. However, views on the relative importance of ethical principles were divided. An AI practitioner who participated in one study [G11] thought that AI systems must be fair in every way. Likewise, some participants in another study [G8] thought that fairness issues in AI systems must not only be minimised but avoided entirely, highlighting the importance of developing fair AI systems. On the other hand, in the same study [G8], which surveyed 51 participants, the principle of protection of data privacy received the highest importance rating, with an arithmetic mean of 4.71. Two other studies, [G6] and [G10], also concluded that ‘Privacy protection and security’ was the most important ethical principle in AI system development.

5.2.2 User-Related Perception

Some AI practitioners who participated in studies like [G2], [G3], [G5], [G6], [G7], and [G34] had perceptions about users’ nature, technical abilities, drivers, and their role in the context of ethics in AI. In this context, “users” encompassed either the party commissioning a system, the end users, or both. We have provided additional clarity regarding the specific user categories that participants referred to when engaging in discussions about ethics in AI.

5.2.3 Data-Related Perception

The developer’s naïve perception of the potential for harm (or lack thereof) is worth noting in the above example. Along with that, some participants in a study [G2] highlighted the importance of data collection and curation in AI system development. They mentioned that collecting sufficient data from sub-populations and balancing them during the curation of data sets is essential to minimising the ethical issues of an AI system. A participant in [G15] also shared a similar idea on collecting sufficient ethical data for developing AI systems.

On the other hand, some participants in a study [G18] reported that they minimised getting the personal data of users or avoided its collection as much as possible so that no ethical issues related to data privacy arise during AI system development, whereas a participant in [G21] mentioned that they used privacy-preserving data collection techniques to reduce unethical work with data.

5.2.4 AI System-Related Perception

5.2.5 Overall Summary

Overall, our synthesis shows that AI practitioners who participated in the studies held both positive and negative perceptions of AI ethics. While some practitioners thought ethics was important to consider when developing AI systems, others perceived it as a secondary concern and a non-functional requirement of AI. This diversity of views can have implications for the development and deployment of AI technologies and for how ethical considerations are integrated into AI practice. Likewise, views differed on the importance of individual principles of AI ethics: some practitioners perceived developing fair AI as important, whereas others perceived maintaining privacy during AI development as more important. This divergence might also affect the development of ethical AI-based systems.

Perceptions regarding ethical considerations in AI development also extended to the question of responsibility. While some AI professionals felt it was their duty to create ethical AI systems and to bear accountability for any resulting harm, others believed that users and practitioners shared this responsibility. We think it is essential to define clearly who is accountable for ethical considerations during AI development and for the consequences that arise from it, so that responsibility for this important issue cannot be evaded. Practitioners also discussed the cost of implementing ethical standards in AI development, which raises the question of whether, without cost barriers, AI practitioners could have created more ethically sound AI systems.

Some practitioners who participated in the studies also held unfavorable views regarding AI system users. Some believed that users generally did not pay much attention to AI ethics until actual ethical problems arose. Users were viewed as making judgments about AI systems based on personal biases rather than a deep understanding of how AI worked. Additionally, some participants perceived that users might resort to legal action against companies only when ethical issues with AI systems become apparent. Overall, this suggests a gap in user awareness and engagement with AI ethics, which could have implications for how AI is developed, used, and regulated.

Likewise, AI practitioners considered several data-related steps important for developing ethical AI systems. Proper data handling, sufficient data collection and data balancing, and avoiding the collection of personal data were perceived as important measures to mitigate the ethical issues of AI systems. This suggests that practitioners see data-related practices as contributing to ethical behaviour and responsible AI development.

A few AI practitioners also had mixed perceptions about the nature of AI systems. Some expressed pessimism, suggesting that AI systems are excessively complex and inherently possess ethical issues that are difficult to mitigate. On the other hand, others viewed AI as socio-technical systems that, at the very least, take ethical considerations into account. Overall, this diversity in views highlights the ongoing debate and complexity surrounding AI ethics and underscores the importance of continued discussion and efforts to improve the ethical aspects of AI technology.

5.3 Practitioner Need

The review highlighted the different needs of AI practitioners that could help them improve the implementation of ethics in AI systems. This category is underpinned by two concepts: AI ethics & principles-related need and team-related need.

5.3.1 AI Ethics & Principles—Related Need

A few AI practitioners who participated in studies [G1] and [G5] reported that implementing ethics in AI is challenging because of a lack of tools or methods for doing so. For example, in [G1], when AI practitioners were asked, “Do your AI development practices take into account ethics, and if yes, how?”, all respondents (N=6) answered “No”. This indicates that the AI companies studied lack clear tools and methods to help AI practitioners implement ethics in AI. Another study [G19] concluded that there is a lack of tools that support continuous assurance of AI ethics. A participant in [G19] stated that this was challenging because they had to rely on manual practices to manage ethics principles during AI system development.

5.3.2 Team—Related Need

Several team-related needs influence the implementation of ethics in AI systems. Effective communication between AI practitioners is needed because it supports ethics implementation [G2], [G3], [G15]. A few participants in studies [G2] and [G3] expressed the need for tools to facilitate communication between AI model developers and data collectors. In [G2], 52% of survey respondents (79% of those who were asked this question) said that tools aiding communication between model developers and data collectors would be highly valuable.

Similarly, some participants in [G18] reported that they were technology experts but had no knowledge of or background in ethics. However, they were highly aware of privacy concerns in AI use, highlighting an interesting relationship between practitioner awareness, perception, and challenges. A few participants in other studies, such as [G6], [G8], and [G25], expressed similar views.

5.3.3 Overall Summary

The AI practitioners who participated in the included primary studies discussed several requirements concerning the conceptualisation of AI ethics and ethical guidelines. Some of them also expressed the necessity for tools and methodologies that could aid them in improving the development of ethical AI systems. This suggests that there is an ongoing need for support and resources to assist AI practitioners in adhering to ethical principles during the AI development process.

Similarly, a few participants in some of the included primary studies addressed requirements regarding AI development teams. Some of these needs concerned self-improvement, such as better communication within the team and a strong foundation in ethics, both seen as prerequisites for developing ethical AI systems. Participants also mentioned the importance of discussing ethical responsibilities among team members. Overall, the data suggests a commitment to improving the ethical aspects of AI development, both in principle and in practical implementation, and a recognition that addressing these ethical challenges requires a multifaceted approach involving both teams and individual professionals.

5.4 Practitioner Challenge

The fourth key category is Practitioner Challenge. AI practitioners face several challenges in implementing AI ethics, including AI ethics and principles-related challenge, organisation-related challenge, AI system-related challenge, and data-related challenge.

5.4.1 AI Ethics & Principles—Related Challenge

A number of challenges related to implementing AI ethics were reported, including knowledge gaps, gaps between principles & practice, ethical trade-offs including business value considerations, and challenges to do with implementing specific ethical principles such as transparency, privacy, and accountability.

Various challenges have been identified and solutions discussed in theory, but these solutions have not been demonstrated in practice [G1], [G3]. Translating AI principles into practice is a challenge for AI practitioners, as discussed by participants in studies including [G1] and [G6].

5.4.2 Organisation—Related Challenge

5.4.3 AI System—Related Challenge

5.4.4 Data—Related Challenge

5.4.5 Overall Summary

Participants in the included primary studies discussed various challenges related to the concept of AI ethics and ethical principles. Some participants discussed challenges such as variations in how people understand ethics, the practical application of ethical principles, and consistent adherence to various ethical standards throughout the AI development process. In general, this data suggests that the primary challenge for practitioners is grasping the essence of ethics, which we consider the fundamental issue and one that should be prioritised for resolution.

Similarly, organisational factors have hindered AI practitioners in their efforts to develop ethical AI systems. Challenges raised by participants, such as limited budgets for integrating ethics, tight project deadlines, and restricted decision-making authority during AI development, indicate that organisations could assist AI practitioners by addressing these issues where feasible.

Some participants also discussed challenges arising from the unpredictability of AI systems. They identified factors contributing to this unpredictability, such as profit maximisation, attention optimisation, and cyber-security threats. The absence of contingency plans to address issues stemming from AI system unpredictability was also discussed. Overall, this indicates that although AI practitioners employ certain strategies to mitigate unpredictability in AI systems, there is a demand for methods and tools to effectively prevent or manage it. Developing such methods or tools would help reduce the ethical risks associated with AI.

Participants discussed challenges associated with the data used to train AI models. They explained how the quality of data and the processes involved in handling data can influence AI development. Some AI practitioners faced challenges related to ensuring the ethical development of AI, primarily due to issues like inadequate data quality, poor data collection practices, and improper data usage. Overall, the data suggests that to ensure ethical AI development, it is essential to address issues related to data quality and data handling processes.

5.5 Practitioner Approach

The review of empirical studies provided insights into the approaches AI practitioners use to implement ethics during AI system development. This category is underpinned by three key concepts: AI ethics & principles-related approach, team-related approach, and organisation-related approach. AI practitioners discussed both applied and potential strategies related to these three concepts. Applied strategies are the techniques that AI practitioners reported using to enhance the implementation of ethics in AI, whereas potential strategies are the recommendations or possible solutions that AI practitioners proposed for the same purpose.

5.5.1 AI Ethics & Principles—Related Approach

AI practitioners were also involved in setting customised regulations in the company and played an essential role in the development of AI ethics. This strategy was used to enhance ethics implementation by developing comprehensive and well-defined AI ethics guidelines for the company [G7]. Some participants in [G11] also reported that they needed to customise the organisation’s general policies to better support privacy and accessibility in their specific circumstances and thereby ensure AI fairness.

5.5.2 Team—Related Approach

Some participants in [G1] reported that organisations used proactive strategies, such as speculating about socio-ethical impacts and analysing hypothetical situations, to enhance ethics implementation in AI development. Likewise, a few participants in another study [G5] supported this notion and mentioned that such strategies aimed to address ethical issues that may arise and to plan for their potential consequences. Analysing hypothetical situations of unpredictability was a strategy used to address an AI system’s unpredictable behaviour [G1]. Similarly, a participant in [G2] reported that speculating about possible fairness issues before deploying an AI system was a strategy used to minimise fairness issues in AI.

However, some companies did not use proactive strategies to maintain the transparency of AI systems and addressed transparency issues only when they affected their business [G6]. Some AI practitioners simply followed what was legal and shifted ethical responsibilities to policymakers and legislative authorities [G7]. In contrast, some participants in [G24] placed the ethical responsibility on the company manager.

In addition to sharing experiences of tried and tested strategies, practitioners also discussed potential strategies that they thought could improve ethics in AI. A study [G10] concluded that appointing one individual to implement ethics during AI development is not a good option. The whole AI development team must be involved in the process of ethics implementation. In another study [G15], a participant proposed a similar notion, emphasising the involvement of not just senior members but also junior AI practitioners in integrating ethics during AI development.

Likewise, a participant in [G10] mentioned that addressing ethical issues in a timely manner, i.e., during the design and development of an AI system, helps to enhance system transparency. In another study [G4], a participant recommended addressing ethical concerns during the development of AI systems and highlighted the need to provide AI developers with supporting methods.

5.5.3 Organisation—Related Approach

Some participants in [G18] reported several strategies provided by organisations to enhance ethics implementation in AI, such as ethics review boards. Likewise, a participant in [G21] mentioned that internal governance structures, such as ethics committees that establish AI ethical standards, can give AI practitioners the opportunity to work closely with ethicists and verify whether ethics is being implemented appropriately during AI system development.

Some participants in studies such as [G1] and [G5] stated that audits were another important strategy their organisations provided to address transparency issues. A participant in [G21] reported that employing AI auditors could help AI practitioners develop ethical AI systems.

5.5.4 Overall Summary

Participants discussed several strategies that they used to ensure the ethical development of AI systems. Applied strategies related to AI ethics and principles included merging ethics and law and setting customised AI ethics regulations in the company. Overall, this indicates that practitioners emphasise the comprehensive integration of all AI ethical principles so that no aspect is overlooked during the development process.

Some approaches were adopted at the team level to ensure the ethical development of AI systems, such as group discussions with colleagues on AI ethics, analysing hypothetical situations involving AI ethical issues, considering the socio-ethical impacts of AI, and discussions with policymakers and legal teams to ensure that algorithms comply with the law. Overall, this suggests a comprehensive, multidisciplinary approach to AI ethics in which the team actively engages in discussion, analysis, and collaboration with various stakeholders to promote the ethical development of AI systems.

Some participants mentioned that their organisations currently use methods such as audits and ethics review boards to promote ethical AI development. However, the discussion placed greater emphasis on potential approaches that organisations could offer their AI development teams to ensure ethical AI. For instance, some participants proposed that organisations could prioritise diversity within AI teams, provide education and training on AI ethics for practitioners, establish internal governance mechanisms such as ethics committees, cultivate a cultural shift towards ethical considerations within the organisation, and use tools such as quizzes during the hiring process for AI teams. This indicates that organisations could do more to support AI practitioners in their pursuit of ethical AI systems.

6 Discussion and Recommendations

6.1 Taxonomy of Ethics in AI from Practitioners’ Viewpoints

The taxonomy of ethics in AI from practitioners’ viewpoints aims to help AI practitioners identify different aspects of ethics in AI, such as their awareness of it, their perceptions of it, the challenges they face in implementing it, their needs, and the approaches they use to improve its implementation. We believe that, with these findings, AI development teams will gain a better understanding of AI ethics, and AI managers will be able to manage their teams better by understanding their team members’ needs and challenges.