
Data-driven hypothesis generation in clinical research: what we learned from a human subject study


Main Article Content

Hypothesis generation is an early and critical step in any hypothesis-driven clinical research project. Because it is not yet a well-understood cognitive process, the need to improve the process goes unrecognized. Without an impactful hypothesis, the significance of any research project can be questionable, regardless of the rigor or diligence applied in other steps of the study, e.g., study design, data collection, and result analysis. In this perspective article, the authors first review the literature on the following topics: scientific thinking, reasoning, medical reasoning, literature-based discovery, and a field study exploring scientific thinking and discovery. Over the years, research on scientific thinking has made excellent progress in cognitive science and its applied areas: education, medicine, and biomedical research. However, a review of the literature reveals a lack of original studies on hypothesis generation in clinical research. The authors then summarize their first human participant study exploring data-driven hypothesis generation by clinical researchers in a simulated setting. The results indicate that a secondary data analytical tool, VIADS (a visual interactive analytic tool for filtering, summarizing, and visualizing large health data sets coded with hierarchical terminologies), can shorten the average time participants need to generate a hypothesis and reduce the number of cognitive events needed to generate each hypothesis. As a counterpoint, the exploration also indicates that hypotheses generated with VIADS received significantly lower ratings for feasibility. Despite its small scale, the study confirmed the feasibility of conducting a human participant study directly to explore the hypothesis generation process in clinical research.
This study provides supporting evidence for conducting a larger-scale study with a specifically designed tool to facilitate the hypothesis-generation process among inexperienced clinical researchers. A larger study could provide generalizable evidence, which in turn can potentially improve clinical research productivity and the overall clinical research enterprise.

Article Details

The Medical Research Archives grants authors the right to publish and reproduce the unrevised contribution in whole or in part at any time and in any form for any scholarly non-commercial purpose with the condition that all publications of the contribution include a full citation to the journal as published by the Medical Research Archives.


Engineering Graduate Studies

Hypothesis and Experimental Design


Two important elements of The Scientific Method that will help you design your research approach more efficiently are “Generating Hypotheses” and “Designing Controlled Experiments” to test these hypotheses. A well-designed experiment that you deeply understand will save time and resources and facilitate easier data analysis/interpretation. Many people reading this may be working on a project that focuses on designing a product, or on discovery research where the hypothesis is not immediately obvious. We encourage you to read on, however, as the exercise of generating a hypothesis will likely help you think about the assumptions you are making in your research and the physical principles your work builds upon.

These activities will help you …

- Begin formulating an appropriate hypothesis related to your research.

- Apply a systematic process for designing experiments.

What is a Hypothesis?

A hypothesis is an “educated guess/prediction” or “proposed explanation” of how a system will behave based on the available evidence. A hypothesis is a starting point for further investigation and testing because a hypothesis makes a prediction about the behavior of a measurable outcome of an experiment. A hypothesis should be:

- Testable – you can design an experiment to test it

- Falsifiable – it can be proven wrong (note that a hypothesis can never be “proved” right, only supported by evidence)

- Useful – the outcome must give valuable information

A useful hypothesis may relate to the underlying question of your research. For example:

“We hypothesize that therapy-resistant cell populations will be enriched in hypoxic microenvironments.”

“We hypothesize that increasing the number of boreholes simulated in 3D geological models minimizes the variation of the geological model results.”

Some research projects do not have an obvious hypothesis to test, but the design strategy/concept chosen is based on an underlying assumption about how the system being designed works (i.e. the hypothesis). For example:

“We hypothesize that decreasing the baking temperature of the photoresist layer will reduce thermal expansion and device cracking”

In this case the researcher is troubleshooting poor device quality and is proposing to vary different fabrication parameters (one being baking temperature). Understanding the assumptions (working hypotheses) of why different variables might improve device quality is useful as it provides a basis to prioritize what variables to focus on first. The core goal of this research is not to test a specific hypothesis, but using the scientific method to troubleshoot a design challenge will enable the researcher to understand the parameters that control the behavior of different designs and to identify a design that is successful more efficiently.

In all the examples above, the hypothesis helps to guide the design of a useful and interpretable experiment with appropriate controls that rule out alternative explanations of the experimental observation. Hypotheses are therefore likely essential and useful parts of all research projects.

Suggested Activity – Create a Hypothesis for Your Research

Estimated time: 30 mins

- Write down the parameters you are varying or testing in your experimental system or model and how you think the behaviour of the system is going to vary with these parameters.

- (Alternative) If your project goal is to design a device, write down the parameters you believe control whether the device will work.

- (Alternative) If your project goal involves optimizing a process, write down the underlying physics or chemistry controlling the process you are studying.

- With these parameters in mind, write down the key assumption(s) you are making about how your system works.

- Try to formulate each one of these assumptions into a hypothesis that might be useful for your research project. If you have multiple aims each one may have a separate hypothesis. Make sure the hypothesis meets each of the three key elements above.

- Share your hypothesis with a peer or your supervisor to discuss if this is a good hypothesis – is it testable? Does it make a useful prediction? Does it capture the key underlying assumptions your research is based upon?

Remember that writing a good research hypothesis is challenging and will take a lot of careful thought about the underlying science that governs your system.

Designing Experiments

Designing experiments appropriately is very important to avoid wasting resources (time!) and to ensure results can be interpreted correctly. It is often very useful to discuss the design of your planned experiments in your meetings with your supervisor to get feedback before you start doing experiments. This will also ensure you and your supervisor have a consistent understanding of experimental design and that all the appropriate controls required to interpret your data have been considered.

The factors that must be considered when you design experiments are going to depend on your specific area of research. Some important things to think about when designing experiments include:

Rationale: What is the purpose of this experiment? Is this the best experiment I can do? Does my experiment answer any question? Does this experiment help answer the question I am trying to ask? What hypothesis am I trying to test?

Will my experiment be interpretable? What controls can I use to distinguish my results from other potential explanations? Can I add a control to distinguish between explanations? Can I add a control to further test my hypothesis?

Is my experiment/model rigorous? What is the sensitivity of the method I am using and can it measure accurately what I want to measure? What outcomes (metrics) will I measure and is this measurement appropriate? How many replicates (technical replicates versus independent replicates) will I do? Am I only changing the variable that I am testing? What am I keeping constant? What statistical tests do I plan to carry out and what considerations are needed? Is my statistical design appropriate (power analysis, sufficient replicates)?

What logistics do I need to consider? Are the equipment/resources I need available? Do I need additional training or equipment access? Are there important safety or ethical issues/permits to consider? Are pilot experiments needed to assess feasibility and what would these be? What is my planned experimental protocol and are there important timing issues to consider? What experimental outputs and parameters need to be documented throughout the experiment?

This list is not exhaustive and you should consider what is missing for your particular situation.
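The power-analysis question above can be made concrete with a rough calculation. The following is a minimal sketch, not a prescribed method: it assumes a two-sided comparison of two group means, a standardized effect size (Cohen's d) that you supply yourself, and the normal approximation n = 2((z_{1-alpha/2} + z_{power}) / d)^2. The function name `replicates_per_group` and the default thresholds are illustrative choices, not part of the original guide.

```python
import math
from statistics import NormalDist


def replicates_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of independent replicates needed per group.

    Assumes a two-sided, two-sample comparison of means and uses the
    normal approximation, so it slightly underestimates the value a
    t-test based calculation would give for small samples.
    effect_size is the expected difference in means divided by the
    standard deviation (Cohen's d), which is an assumption you must supply.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    if not (0 < alpha < 1 and 0 < power < 1):
        raise ValueError("alpha and power must lie strictly between 0 and 1")
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = standard_normal.inv_cdf(power)          # quantile for the desired power
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


if __name__ == "__main__":
    for d in (0.5, 0.8, 1.0):
        print(f"d = {d}: about {replicates_per_group(d)} replicates per group")
```

Smaller expected effects need many more replicates, which is one reason a pilot experiment to estimate the effect size and variability can be worth the time. For a real study, a dedicated statistics package or a statistician should confirm the numbers.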

Suggested Activity – Design an Experiment Using a Template

Estimated time: 45 min

- Explore the Excel template for experimental design (Resource 1) or modelling (Resource 2). A template like this is very useful for keeping track of protocols as well as improving the reproducibility of your experiments. Note this template is simply a starting point to get you thinking systematically and should be adapted to best suit your needs.

- Fill out the template for an experiment or modelling project you are planning to complete soon.

- Consider how you can modify this template to be more applicable to your specific project.

- Using the template document, explain your experimental design/model design to a peer or your supervisor. Let them ask questions to understand your design and provide feedback. Alternatively, if there is a part of your design that you are unclear about this is a great starting point for a targeted and efficient discussion with your supervisor.

- Revise your design based on feedback.


© 2024 Faculty of Applied Science and Engineering


- First Online: 01 January 2024


- Hiroshi Ishikawa

Part of the book series: Studies in Big Data (SBD, volume 139)


This chapter will explain the definition and properties of a hypothesis, the related concepts, and basic methods of hypothesis generation as follows.

- Describe the definition, properties, and life cycle of a hypothesis.

- Describe relationships between a hypothesis and a theory, a model, and data.

- Categorize and explain research questions that provide hints for hypothesis generation.

- Explain how to visualize data and analysis results.

- Explain the philosophy of science and scientific methods in relation to hypothesis generation in science.

- Explain deduction, induction, plausible reasoning, and analogy concretely as reasoning methods useful for hypothesis generation.

- Explain problem solving as a hypothesis generation method by using familiar examples.



Author information

Authors and affiliations.

Department of Systems Design, Tokyo Metropolitan University, Hino, Tokyo, Japan

Hiroshi Ishikawa


Corresponding author

Correspondence to Hiroshi Ishikawa.


Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Ishikawa, H. (2024). Hypothesis. In: Hypothesis Generation and Interpretation. Studies in Big Data, vol 139. Springer, Cham. https://doi.org/10.1007/978-3-031-43540-9_2


DOI: https://doi.org/10.1007/978-3-031-43540-9_2

Published: 01 January 2024

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-43539-3

Online ISBN: 978-3-031-43540-9

eBook Packages: Computer Science, Computer Science (R0)


Developing a Hypothesis

Rajiv S. Jhangiani; I-Chant A. Chiang; Carrie Cuttler; and Dana C. Leighton

Learning Objectives

- Distinguish between a theory and a hypothesis.

- Discover how theories are used to generate hypotheses and how the results of studies can be used to further inform theories.

- Understand the characteristics of a good hypothesis.

Theories and Hypotheses

Before describing how to develop a hypothesis, it is important to distinguish between a theory and a hypothesis. A theory is a coherent explanation or interpretation of one or more phenomena. Although theories can take a variety of forms, one thing they have in common is that they go beyond the phenomena they explain by including variables, structures, processes, functions, or organizing principles that have not been observed directly. Consider, for example, Zajonc’s theory of social facilitation and social inhibition (1965) [1] . He proposed that being watched by others while performing a task creates a general state of physiological arousal, which increases the likelihood of the dominant (most likely) response. So for highly practiced tasks, being watched increases the tendency to make correct responses, but for relatively unpracticed tasks, being watched increases the tendency to make incorrect responses. Notice that this theory—which has come to be called drive theory—provides an explanation of both social facilitation and social inhibition that goes beyond the phenomena themselves by including concepts such as “arousal” and “dominant response,” along with processes such as the effect of arousal on the dominant response.

Outside of science, referring to an idea as a theory often implies that it is untested—perhaps no more than a wild guess. In science, however, the term theory has no such implication. A theory is simply an explanation or interpretation of a set of phenomena. It can be untested, but it can also be extensively tested, well supported, and accepted as an accurate description of the world by the scientific community. The theory of evolution by natural selection, for example, is a theory because it is an explanation of the diversity of life on earth—not because it is untested or unsupported by scientific research. On the contrary, the evidence for this theory is overwhelmingly positive and nearly all scientists accept its basic assumptions as accurate. Similarly, the “germ theory” of disease is a theory because it is an explanation of the origin of various diseases, not because there is any doubt that many diseases are caused by microorganisms that infect the body.

A hypothesis, on the other hand, is a specific prediction about a new phenomenon that should be observed if a particular theory is accurate. It is an explanation that relies on just a few key concepts. Hypotheses are often specific predictions about what will happen in a particular study. They are developed by considering existing evidence and using reasoning to infer what will happen in the specific context of interest. Hypotheses are often, but not always, derived from theories. Some hypotheses are atheoretical, and a theory is developed only after a set of observations has been made. This is because theories are broad in nature and explain larger bodies of data. So if our research question is really original, we may need to collect some data and make some observations before we can develop a broader theory.

Theories and hypotheses always have this if-then relationship. “If drive theory is correct, then cockroaches should run through a straight runway faster, and a branching runway more slowly, when other cockroaches are present.” Although hypotheses are usually expressed as statements, they can always be rephrased as questions. “Do cockroaches run through a straight runway faster when other cockroaches are present?” Thus deriving hypotheses from theories is an excellent way of generating interesting research questions.

But how do researchers derive hypotheses from theories? One way is to generate a research question using the techniques discussed in this chapter and then ask whether any theory implies an answer to that question. For example, you might wonder whether expressive writing about positive experiences improves health as much as expressive writing about traumatic experiences. Although this question is an interesting one on its own, you might then ask whether the habituation theory—the idea that expressive writing causes people to habituate to negative thoughts and feelings—implies an answer. In this case, it seems clear that if the habituation theory is correct, then expressive writing about positive experiences should not be effective because it would not cause people to habituate to negative thoughts and feelings. A second way to derive hypotheses from theories is to focus on some component of the theory that has not yet been directly observed. For example, a researcher could focus on the process of habituation—perhaps hypothesizing that people should show fewer signs of emotional distress with each new writing session.

Among the very best hypotheses are those that distinguish between competing theories. For example, Norbert Schwarz and his colleagues considered two theories of how people make judgments about themselves, such as how assertive they are (Schwarz et al., 1991) [2]. Both theories held that such judgments are based on relevant examples that people bring to mind. However, one theory was that people base their judgments on the number of examples they bring to mind and the other was that people base their judgments on how easily they bring those examples to mind. To test these theories, the researchers asked people to recall either six times when they were assertive (which is easy for most people) or 12 times (which is difficult for most people). Then they asked them to judge their own assertiveness. Note that the number-of-examples theory implies that people who recalled 12 examples should judge themselves to be more assertive because they recalled more examples, but the ease-of-retrieval theory implies that participants who recalled six examples should judge themselves as more assertive because recalling the examples was easier. Thus the two theories made opposite predictions, so that only one of the predictions could be confirmed. The surprising result was that participants who recalled fewer examples judged themselves to be more assertive, providing particularly convincing evidence in favor of the ease-of-retrieval theory over the number-of-examples theory.

Theory Testing

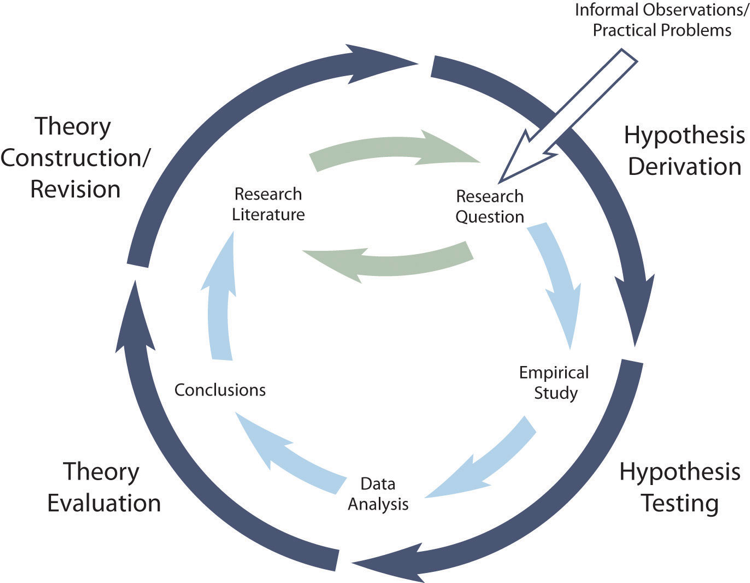

The primary way that scientific researchers use theories is sometimes called the hypothetico-deductive method (although this term is much more likely to be used by philosophers of science than by scientists themselves). Researchers begin with a set of phenomena and either construct a theory to explain or interpret them or choose an existing theory to work with. They then make a prediction about some new phenomenon that should be observed if the theory is correct. Again, this prediction is called a hypothesis. The researchers then conduct an empirical study to test the hypothesis. Finally, they reevaluate the theory in light of the new results and revise it if necessary. This process is usually conceptualized as a cycle because the researchers can then derive a new hypothesis from the revised theory, conduct a new empirical study to test the hypothesis, and so on. As Figure 2.3 shows, this approach meshes nicely with the model of scientific research in psychology presented earlier in the textbook—creating a more detailed model of “theoretically motivated” or “theory-driven” research.

As an example, let us consider Zajonc’s research on social facilitation and inhibition. He started with a somewhat contradictory pattern of results from the research literature. He then constructed his drive theory, according to which being watched by others while performing a task causes physiological arousal, which increases an organism’s tendency to make the dominant response. This theory predicts social facilitation for well-learned tasks and social inhibition for poorly learned tasks. He now had a theory that organized previous results in a meaningful way, but he still needed to test it. He hypothesized that if his theory was correct, he should observe that the presence of others improves performance in a simple laboratory task but inhibits performance in a difficult version of the very same laboratory task. To test this hypothesis, one of the studies he conducted used cockroaches as subjects (Zajonc, Heingartner, & Herman, 1969) [3]. The cockroaches ran either down a straight runway (an easy task for a cockroach) or through a cross-shaped maze (a difficult task for a cockroach) to escape into a dark chamber when a light was shined on them. They did this either while alone or in the presence of other cockroaches in clear plastic “audience boxes.” Zajonc found that cockroaches in the straight runway reached their goal more quickly in the presence of other cockroaches, but cockroaches in the cross-shaped maze reached their goal more slowly when they were in the presence of other cockroaches. Thus he confirmed his hypothesis and provided support for his drive theory. (Zajonc also found support for drive theory in humans [Zajonc & Sales, 1966] [4] and in many later studies.)

Incorporating Theory into Your Research

When you write your research report or plan your presentation, be aware that there are two basic ways that researchers usually include theory. The first is to raise a research question, answer that question by conducting a new study, and then offer one or more theories (usually more) to explain or interpret the results. This format works well for applied research questions and for research questions that existing theories do not address. The second way is to describe one or more existing theories, derive a hypothesis from one of those theories, test the hypothesis in a new study, and finally reevaluate the theory. This format works well when there is an existing theory that addresses the research question—especially if the resulting hypothesis is surprising or conflicts with a hypothesis derived from a different theory.

Using theories in your research will not only give you guidance in coming up with experiment ideas and possible projects, but also lend legitimacy to your work. Psychologists have been interested in a variety of human behaviors and have developed many theories along the way. Using established theories will help you break new ground as a researcher, not limit you from developing your own ideas.

Characteristics of a Good Hypothesis

There are three general characteristics of a good hypothesis. First, a good hypothesis must be testable and falsifiable. We must be able to test the hypothesis using the methods of science and, recalling Popper’s falsifiability criterion, it must be possible to gather evidence that will disconfirm the hypothesis if it is indeed false. Second, a good hypothesis must be logical. As described above, hypotheses are more than just a random guess. Hypotheses should be informed by previous theories or observations and logical reasoning. Typically, we begin with a broad and general theory and use deductive reasoning to generate a more specific hypothesis to test based on that theory. Occasionally, however, when there is no theory to inform our hypothesis, we use inductive reasoning, which involves using specific observations or research findings to form a more general hypothesis. Finally, the hypothesis should be positive. That is, the hypothesis should make a positive statement about the existence of a relationship or effect, rather than a statement that a relationship or effect does not exist. As scientists, we don’t set out to show that relationships do not exist or that effects do not occur, so our hypotheses should not be worded in a way to suggest that an effect or relationship does not exist. In statistical testing, the convention is to assume that an effect does not exist (the null hypothesis) and then seek evidence against that assumption, to show that the effect really does exist. That may seem backward to you but that is the nature of the scientific method. The underlying reason for this is beyond the scope of this chapter but it has to do with statistical theory.
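The logic of assuming that no effect exists and then looking for evidence against that assumption can be illustrated with a permutation test. The sketch below is only one way to do this, chosen because it needs no statistical tables: if group labels truly make no difference, shuffling them should produce differences as large as the observed one fairly often. The function name `permutation_p_value` and the example numbers are invented for illustration and are not data from any study mentioned in this chapter.

```python
import random
from statistics import mean


def permutation_p_value(control, treated, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Null assumption: the group labels are irrelevant, so any relabelling
    of the pooled values is equally likely. The p-value estimates how
    often a random relabelling gives a difference at least as large as
    the one actually observed.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = abs(mean(treated) - mean(control))
    pooled = list(control) + list(treated)
    n_control = len(control)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        shuffled_diff = abs(mean(pooled[n_control:]) - mean(pooled[:n_control]))
        if shuffled_diff >= observed - 1e-12:  # small tolerance for float rounding
            at_least_as_extreme += 1
    # Add one to numerator and denominator so the estimate is never exactly zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


if __name__ == "__main__":
    # Invented completion times in seconds, for illustration only.
    alone = [41.2, 38.5, 44.0, 39.9, 42.3, 40.7]
    with_audience = [33.1, 35.4, 31.8, 36.0, 34.2, 32.7]
    print("estimated p-value:", permutation_p_value(alone, with_audience))
```

A small p-value means the observed difference would be rare if the null assumption were true, which counts as evidence for the positive hypothesis. A large p-value does not show that the effect is absent, only that this study did not find convincing evidence for it.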

- Zajonc, R. B. (1965). Social facilitation. Science, 149, 269–274.

- Schwarz, N., Bless, H., Strack, F., Klumpp, G., Rittenauer-Schatka, H., & Simons, A. (1991). Ease of retrieval as information: Another look at the availability heuristic. Journal of Personality and Social Psychology, 61, 195–202.

- Zajonc, R. B., Heingartner, A., & Herman, E. M. (1969). Social enhancement and impairment of performance in the cockroach. Journal of Personality and Social Psychology, 13, 83–92.

- Zajonc, R. B., & Sales, S. M. (1966). Social facilitation of dominant and subordinate responses. Journal of Experimental Social Psychology, 2, 160–168.

Theory: A coherent explanation or interpretation of one or more phenomena.

Hypothesis: A specific prediction about a new phenomenon that should be observed if a particular theory is accurate.

Hypothetico-deductive method: A cyclical process of theory development, starting with an observed phenomenon, then developing or using a theory to make a specific prediction of what should happen if that theory is correct, testing that prediction, refining the theory in light of the findings, and using that refined theory to develop new hypotheses, and so on.

Testable and falsifiable: The ability to test the hypothesis using the methods of science and the possibility to gather evidence that will disconfirm the hypothesis if it is indeed false.

Developing a Hypothesis Copyright © 2022 by Rajiv S. Jhangiani; I-Chant A. Chiang; Carrie Cuttler; and Dana C. Leighton is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


- NATURE INDEX

- 17 November 2023

Hypotheses devised by AI could find ‘blind spots’ in research

- Matthew Hutson

Matthew Hutson is a science writer based in New York City.


In early October, as the Nobel Foundation announced the recipients of this year’s Nobel prizes, a group of researchers, including a previous laureate, met in Stockholm to discuss how artificial intelligence (AI) might have an increasingly creative role in the scientific process. The workshop, led in part by Hiroaki Kitano, a biologist and chief executive of Sony AI in Tokyo, considered creating prizes for AIs and AI–human collaborations that produce world-class science. Two years earlier, Kitano proposed the Nobel Turing Challenge [1]: the creation of highly autonomous systems (‘AI scientists’) with the potential to make Nobel-worthy discoveries by 2050.

It’s easy to imagine that AI could perform some of the necessary steps in scientific discovery. Researchers already use it to search the literature, automate data collection, run statistical analyses and even draft parts of papers. Generating hypotheses — a task that typically requires a creative spark to ask interesting and important questions — poses a more complex challenge. For Sendhil Mullainathan, an economist at the University of Chicago Booth School of Business in Illinois, “it’s probably been the single most exhilarating kind of research I’ve ever done in my life”.

Network effects

AI systems capable of generating hypotheses go back more than four decades. In the 1980s, Don Swanson, an information scientist at the University of Chicago, pioneered literature-based discovery, a text-mining exercise that aimed to sift ‘undiscovered public knowledge’ from the scientific literature. If some research papers say that A causes B, and others that B causes C, for example, one might hypothesize that A causes C. Swanson created software called Arrowsmith that searched collections of published papers for such indirect connections and proposed, for instance, that fish oil, which reduces blood viscosity, might treat Raynaud’s syndrome, in which blood vessels narrow in response to cold [2]. Subsequent experiments proved the hypothesis correct.
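The "A causes B, B causes C" pattern can be sketched in a few lines of Python. This is only an illustration of the idea, not a reconstruction of Arrowsmith: the function name `abc_hypotheses` and the two hand-written relations are assumptions made for the example, and a real system would have to extract such relations from papers first.

```python
from collections import defaultdict


def abc_hypotheses(relations):
    """Propose indirect A -> C links from (cause, effect) pairs.

    `relations` is an iterable of (cause, effect) tuples. The result maps each
    candidate (A, C) pair to the set of intermediate B terms that connect them.
    """
    effects = defaultdict(set)
    for cause, effect in relations:
        effects[cause].add(effect)

    candidates = defaultdict(set)
    for a, bs in effects.items():
        for b in bs:
            for c in effects.get(b, ()):
                # Skip trivial loops and links that are already stated directly.
                if c != a and c not in effects[a]:
                    candidates[(a, c)].add(b)
    return dict(candidates)


# Toy, hand-written relations (illustrative only, not mined from real papers).
relations = [
    ("fish oil", "lower blood viscosity"),
    ("lower blood viscosity", "relief of Raynaud's symptoms"),
]

hypotheses = abc_hypotheses(relations)
for (a, c), via in hypotheses.items():
    print(f"Candidate hypothesis: {a} -> {c} (via {sorted(via)})")
```

Each candidate is only a suggestion that no single source states directly; as in the fish oil case, it still has to be tested experimentally.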

Literature-based discovery and other computational techniques can organize existing findings into ‘knowledge graphs’, networks of nodes representing, say, molecules and properties. AI can analyse these networks and propose undiscovered links between molecule nodes and property nodes. This process powers much of modern drug discovery, as well as the task of assigning functions to genes. A review article published in Nature [3] earlier this year explores other ways in which AI has generated hypotheses, such as proposing simple formulae that can organize noisy data points and predicting how proteins will fold up. Researchers have automated hypothesis generation in particle physics, materials science, biology, chemistry and other fields.


One approach is to use AI to help scientists brainstorm. This is a task that large language models — AI systems trained on large amounts of text to produce new text — are well suited for, says Yolanda Gil, a computer scientist at the University of Southern California in Los Angeles who has worked on AI scientists. Language models can produce inaccurate information and present it as real, but this ‘hallucination’ isn’t necessarily bad, Mullainathan says. It signifies, he says, “‘here’s a kind of thing that looks true’. That’s exactly what a hypothesis is.”

Blind spots are where AI might prove most useful. James Evans, a sociologist at the University of Chicago, has pushed AI to make ‘alien’ hypotheses, those that a human would be unlikely to make. In a paper published earlier this year in Nature Human Behaviour [4], he and his colleague Jamshid Sourati built knowledge graphs containing not just materials and properties, but also researchers. Evans and Sourati’s algorithm traversed these networks, looking for hidden shortcuts between materials and properties. The aim was to maximize the plausibility of AI-devised hypotheses being true while minimizing the chances that researchers would hit on them naturally. For instance, if scientists who are studying a particular drug are only distantly connected to those studying a disease that it might cure, then the drug’s potential would ordinarily take much longer to discover.

When Evans and Sourati fed data published up to 2001 to their AI, they found that about 30% of its predictions about drug repurposing and the electrical properties of materials had been uncovered by researchers, roughly six to ten years later. The system can be tuned to make predictions that are more likely to be correct but also less of a leap, on the basis of concurrent findings and collaborations, Evans says. But “if we’re predicting what people are going to do next year, that just feels like a scoop machine”, he adds. He’s more interested in how the technology can take science in entirely new directions.

Keep it simple

Scientific hypotheses lie on a spectrum, from the concrete and specific (‘this protein will fold up in this way’) to the abstract and general (‘gravity accelerates all objects that have mass’). Until now, AI has produced more of the former. There’s another spectrum of hypotheses, partially aligned with the first, which ranges from the uninterpretable (these thousand factors lead to this result) to the clear (a simple formula or sentence). Evans argues that if a machine makes useful predictions about individual cases — “if you get all of these particular chemicals together, boom, you get this very strange effect” — but can’t explain why those cases work, that’s a technological feat rather than science. Mullainathan makes a similar point. In some fields, the underlying principles, such as the mechanics of protein folding, are understood and scientists just want AI to solve the practical problem of running complex computations that determine how bits of proteins will move around. But in fields in which the fundamentals remain hidden, such as medicine and social science, scientists want AI to identify rules that can be applied to fresh situations, Mullainathan says.

In a paper presented in September [5] at the Economics of Artificial Intelligence Conference in Toronto, Canada, Mullainathan and Jens Ludwig, an economist at the University of Chicago, described a method for AI and humans to collaboratively generate broad, clear hypotheses. In a proof of concept, they sought hypotheses related to characteristics of defendants’ faces that might influence a judge’s decision to free or detain them before trial. Given mugshots of past defendants, as well as the judges’ decisions, an algorithm found that numerous subtle facial features correlated with judges’ decisions. The AI generated new mugshots with those features cranked either up or down, and human participants were asked to describe the general differences between them. Defendants likely to be freed were found to be more “well-groomed” and “heavy-faced”. Mullainathan says the method could be applied to other complex data sets, such as electrocardiograms, to find markers of an impending heart attack that doctors might not otherwise know to look for. “I love that paper,” Evans says. “That’s an interesting class of hypothesis generation.”

In science, experimentation and hypothesis generation often form an iterative cycle: a researcher asks a question, collects data and adjusts the question or asks a fresh one. Ross King, a computer scientist at Chalmers University of Technology in Gothenburg, Sweden, aims to complete this loop by building robotic systems that can perform experiments using mechanized arms [6]. One system, called Adam, automated experiments on microbe growth. Another, called Eve, tackled drug discovery. In one experiment, Eve helped to reveal the mechanism by which a toothpaste ingredient called triclosan can be used to fight malaria.

Robot scientists

King is now developing Genesis, a robotic system that experiments with yeast. Genesis will formulate and test hypotheses related to the biology of yeast by growing actual yeast cells in 10,000 bioreactors at a time, adjusting factors such as environmental conditions or making genome edits, and measuring characteristics such as gene expression. Conceivably, the hypotheses could involve many subtle factors, but King says they tend to involve a single gene or protein whose effects mirror those in human cells, which would make the discoveries potentially applicable in drug development. King, who is on the organizing committee of the Nobel Turing Challenge, says that these “robot scientists” have the potential to be more consistent, unbiased, cheap, efficient and transparent than humans.

Researchers see several hurdles to and opportunities for progress. AI systems that generate hypotheses often rely on machine learning, which usually requires a lot of data. Making more papers and data sets openly available would help, but scientists also need to build AI that doesn’t just operate by matching patterns but can also reason about the physical world, says Rose Yu, a computer scientist at the University of California, San Diego. Gil agrees that AI systems should not be driven only by data — they should also be guided by known laws. “That’s a very powerful way to include scientific knowledge into AI systems,” she says.

As data gathering becomes more automated, Evans predicts that automating hypothesis generation will become increasingly important. Giant telescopes and robotic labs collect more measurements than humans can handle. “We naturally have to scale up intelligent, adaptive questions”, he says, “if we don’t want to waste that capacity.”

doi: https://doi.org/10.1038/d41586-023-03596-0

1. Kitano, H. npj Syst. Biol. Appl. 7, 29 (2021).

2. Swanson, D. R. Perspect. Biol. Med. 30, 7–18 (1986).

3. Wang, H. et al. Nature 620, 47–60 (2023).

4. Sourati, J. & Evans, J. A. Nature Hum. Behav. 7, 1682–1696 (2023).

5. Ludwig, J. & Mullainathan, S. Working Paper 31017 (National Bureau of Economic Research, 2023).

6. King, R., Peter, O. & Courtney, P. in Artificial Intelligence in Science 129–139 (OECD Publishing, 2023).


How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.


A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. At this point, researchers then begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

In many cases, researchers might draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high-stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low-stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of a folk adage that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

How to Formulate a Good Hypothesis

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

In the scientific method, falsifiability is an important part of any valid hypothesis. In order to test a claim scientifically, it must be possible that the claim could be proven false.

Students sometimes mistake falsifiability for the claim that something is false, which is not the case. Falsifiability means that if a claim were false, it would be possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

The Importance of Operational Definitions

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

Operational definitions are specific definitions for all relevant factors in a study. This process helps make vague or ambiguous concepts detailed and measurable.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in various ways. Clearly defining these variables and how they are measured helps ensure that other researchers can replicate your results.

Replicability

One of the basic principles of any type of scientific research is that the results must be replicable.

Replication means repeating an experiment in the same way to produce the same results. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. For example, how would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

To measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming others. The researcher might utilize a simulated task to measure aggressiveness in this situation.

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis: This type of hypothesis suggests there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis: This type suggests a relationship between three or more variables, such as two independent variables and one dependent variable.

- Null hypothesis: This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis: This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis: This hypothesis uses statistical analysis to evaluate a representative population sample and then generalizes the findings to the larger group.

- Logical hypothesis: This hypothesis assumes a relationship between variables without collecting data or evidence.

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

- "Children who receive a new reading intervention will have higher reading scores than students who do not receive the intervention."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "There is no difference in anxiety levels between people who take St. John's wort supplements and those who do not."

- "There is no difference in scores on a memory recall task between children and adults."

- "There is no difference in aggression levels between children who play first-person shooter games and those who do not."

Examples of an alternative hypothesis:

- "People who take St. John's wort supplements will have less anxiety than those who do not."

- "Adults will perform better on a memory task than children."

- "Children who play first-person shooter games will show higher levels of aggression than children who do not."
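To see how a null hypothesis and its alternative are weighed against data, consider a minimal sketch of a two-sided permutation test on the memory-task example. The scores below are made up for illustration, and `permutation_test` is a hypothetical helper written for this sketch, not a function from any particular library. The test asks how often randomly shuffling the group labels yields a difference in means at least as large as the one observed.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Null hypothesis: group labels are exchangeable (no difference in means).
    Returns the observed difference and an estimated p-value.
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)

    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1

    # Add-one correction keeps the estimated p-value strictly above zero.
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value


# Made-up memory-task scores, for illustration only.
adults = [14, 15, 13, 16, 15, 14, 17, 15]
children = [11, 12, 10, 13, 12, 11, 12, 10]

observed, p_value = permutation_test(adults, children)
print(f"Observed difference in means: {observed:.2f}")
print(f"Estimated p-value under the null hypothesis: {p_value:.4f}")
```

A small p-value means the observed difference would be unusual if the null hypothesis were true, which counts as evidence for the alternative. It does not prove the alternative correct, and because this sketch is two-sided it tests for any difference rather than the directional claim that adults score higher.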

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on exactly what they are studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research methods such as case studies, naturalistic observations, and surveys are often used when conducting an experiment is difficult or impossible. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can examine how the variables are related. This research method might be used to investigate a hypothesis that is difficult to test experimentally.

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.



Why Hypotheses Beat Goals


Not long ago, it became fashionable to embrace failure as a sign of a company’s willingness to take risks. This trend lost favor as executives recognized that what they wanted was learning, not necessarily failure. Every failure can be attributed to a raft of missteps, and many failures do not automatically contribute to future success.

Certainly, if companies want to aggressively pursue learning, they must accept that failures will happen. But the practice of simply setting goals and then being nonchalant if they fail is inadequate.

Instead, companies should focus organizational energy on hypothesis generation and testing. Hypotheses force individuals to articulate in advance why they believe a given course of action will succeed. A failure then exposes an incorrect hypothesis — which can more reliably convert into organizational learning.

What Exactly Is a Hypothesis?

When my son was in second grade, his teacher regularly introduced topics by asking students to state some initial assumptions. For example, she introduced a unit on whales by asking: How big is a blue whale? The students all knew blue whales were big, but how big? Guesses ranged from the size of the classroom to the size of two elephants to the length of all the students in class lined up in a row. Students then set out to measure the classroom and the length of the row they formed, and they looked up the size of an elephant. They compared their results with the measurements of the whale and learned how close their estimates were.

Note that in this example, there is much more going on than just learning the size of a whale. Students were learning to recognize assumptions, make intelligent guesses based on those assumptions, determine how to test the accuracy of their guesses, and then assess the results.

This is the essence of hypothesis generation. A hypothesis emerges from a set of underlying assumptions. It is an articulation of how those assumptions are expected to play out in a given context. In short, a hypothesis is an intelligent, articulated guess that is the basis for taking action and assessing outcomes.


Hypothesis generation in companies becomes powerful if people are forced to articulate and justify their assumptions. It makes the path from hypothesis to expected outcomes clear enough that, should the anticipated outcomes fail to materialize, people will agree that the hypothesis was faulty.

Building a culture of effective hypothesizing can lead to more thoughtful actions and a better understanding of outcomes. Not only will failures be more likely to lead to future successes, but successes will foster future successes.

Why Is Hypothesis Generation Important?

Digital technologies are creating new business opportunities, but as I’ve noted in earlier columns, companies must experiment to learn both what is possible and what customers want. Most companies are relying on empowered, agile teams to conduct these experiments. That’s because teams can rapidly hypothesize, test, and learn.

Hypothesis generation contrasts starkly with more traditional management approaches designed for process optimization. Process optimization involves telling employees both what to do and how to do it. Process optimization is fine for stable business processes that have been standardized for consistency. (Standardized processes can usually be automated, specifically because they are stable.) Increasingly, however, companies need their people to steer efforts that involve uncertainty and change. That’s when organizational learning and hypothesis generation are particularly important.

Shifting to a culture that encourages empowered teams to hypothesize isn’t easy. Established hierarchies have developed managers accustomed to directing employees on how to accomplish their objectives. Those managers invariably rose to power by being the smartest person in the room. Such managers can struggle with the requirements for leading empowered teams. They may recognize the need to hold teams accountable for outcomes rather than specific tasks, but they may not be clear about how to guide team efforts.

Some newer companies have baked this concept into their organizational structure. Leaders at the Swedish digital music service Spotify note that it is essential to provide clear missions to teams. A clear mission sets up a team to articulate measurable goals. Teams can then hypothesize how they can best accomplish those goals. The role of leaders is to quiz teams about their hypotheses and challenge their logic if those hypotheses appear to lack support.

A leader at another company told me that accountability for outcomes starts with hypotheses. If a team cannot articulate what it intends to do and what outcomes it anticipates, it is unlikely that team will deliver on its mission. In short, the success of empowered teams depends upon management shifting from directing employees to guiding their development of hypotheses. This is how leaders hold their teams accountable for outcomes.

Members of empowered teams are not the only people who need to hone their ability to hypothesize. Leaders in companies that want to seize digital opportunities are learning through their experiments which strategies hold real promise for future success. They must, in effect, hypothesize about what will make the company successful in a digital economy. If they take the next step and articulate those hypotheses and establish metrics for assessing the outcomes of their actions, they will facilitate learning about the company’s long-term success. Hypothesis generation can become a critical competency throughout a company.

How Does a Company Become Proficient at Hypothesizing?

Most business leaders have embraced the importance of evidence-based decision-making. But developing a culture of evidence-based decision-making by promoting hypothesis generation is a new challenge.

For one thing, many hypotheses are sloppy. While many people naturally hypothesize and take actions based on their hypotheses, their underlying assumptions may go unexamined. Often, they don’t clearly articulate the premise itself. The better hypotheses are straightforward and succinctly written. They’re pointed about the suppositions they’re based on. And they’re shared, allowing an audience to examine the assumptions (are they accurate?) and the postulate itself (is it an intelligent, articulated guess that is the basis for taking action and assessing outcomes?).

Seven-Eleven Japan offers a case in how to do hypotheses right.

For over 30 years, Seven-Eleven Japan was the most profitable retailer in Japan. It achieved that stature by relying on each store’s salesclerks to decide what items to stock on that store’s shelves. Many of the salesclerks were part-time, but they were each responsible for maximizing turnover for one part of the store’s inventory, and they received detailed reports so they could monitor their own performance.

The language of hypothesis formulation was part of their process. Each week, Seven-Eleven Japan counselors visited the stores and asked salesclerks three questions:

- What did you hypothesize this week? (That is, what did you order?)

- How did you do? (That is, did you sell what you ordered?)

- How will you do better next week? (That is, how will you incorporate the learning?)

By repeatedly asking these questions and checking the data for results, counselors helped people throughout the company hypothesize, test, and learn. The result was consistently strong inventory turnover and profitability.
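The weekly hypothesize, test, and learn loop can be sketched in a few lines of Python. This is a minimal illustration, not Seven-Eleven Japan's actual system: the `WeeklyHypothesis` record, the `next_order` rule, the 10% increase after a sell-out, and the 90% sell-through target are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class WeeklyHypothesis:
    """One clerk's bet for one item in one week (hypothetical record)."""
    item: str
    ordered: int  # the hypothesis: units the clerk expected to sell
    sold: int     # the outcome: units actually sold

    def sell_through(self) -> float:
        """Fraction of the ordered units that sold."""
        return self.sold / self.ordered if self.ordered else 0.0


def next_order(h: WeeklyHypothesis, target: float = 0.9) -> int:
    """Naive learning rule (an assumption, not the company's method).

    After a sell-out, order 10% more; otherwise order just enough that
    last week's sales would have hit the target sell-through.
    """
    if h.sold >= h.ordered:
        return round(h.ordered * 1.1)
    return max(1, round(h.sold / target))


week = WeeklyHypothesis(item="rice balls", ordered=100, sold=72)
print(f"{week.item}: sell-through {week.sell_through():.0%}, "
      f"next order {next_order(week)}")
```

The point of the sketch is that each order is recorded next to its outcome, so the three counselor questions map directly onto `ordered`, `sold`, and `next_order`.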

How can other companies get started on this path? Evidence-based decision-making requires data — good data, as the Seven-Eleven Japan example shows. But rather than get bogged down with the limits of a company’s data, I would argue that companies can start to change their culture by constantly exposing individual hypotheses. Those hypotheses will highlight what data matters most — and the need of teams to test hypotheses will help generate enthusiasm for cleaning up bad data. A sense of accountability for generating and testing hypotheses then fosters a culture of evidence-based decision-making.

The uncertainties and speed of change in the current business environment render traditional management approaches ineffective. To create the agile, evidence-based, learning culture your business needs to succeed in a digital economy, I suggest that instead of asking "What is your goal?" you make it a habit to ask "What is your hypothesis?"

About the Author

Jeanne Ross is principal research scientist for MIT’s Center for Information Systems Research. Follow CISR on Twitter @mit_cisr.


Hypothesis Maker Online

Looking for an effective hypothesis maker online? This free tool for students will help you formulate a hypothesis quickly and efficiently.


📄 Hypothesis Maker: How to Use It

Our hypothesis maker is a simple and efficient tool you can access online for free.

If you want to create a research hypothesis quickly, you should fill out the research details in the given fields on the hypothesis generator.

Below are the fields you should complete to generate your hypothesis:

- Who or what is your research based on? For instance, the subject can be research group 1.

- What does the subject (research group 1) do?

- What does the subject affect? This is the predicted outcome, which is the object.

- Who or what will be compared with research group 1? This is research group 2.

Once you fill in the fields, you can click the ‘Make a hypothesis’ tab and get your results.
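To make the four fields concrete, here is a minimal sketch of how such a form could be turned into a sentence. The function name `make_hypothesis`, its parameter names, and the sentence template are hypothetical; the online tool's actual wording and logic are not documented here.

```python
def make_hypothesis(group_1: str, action: str, outcome: str, group_2: str) -> str:
    """Combine the four form fields into a one-sentence comparative hypothesis."""
    fields = {
        "group_1": group_1,  # who or what the research is based on
        "action": action,    # what the subject does
        "outcome": outcome,  # the predicted outcome (the object)
        "group_2": group_2,  # who or what group 1 is compared with
    }
    cleaned = {}
    for name, value in fields.items():
        value = value.strip()
        if not value:
            raise ValueError(f"Field '{name}' must not be empty")
        cleaned[name] = value
    # Hypothetical template; the real tool may phrase its output differently.
    return ("{group_1} who {action} will show {outcome} "
            "compared with {group_2}.").format(**cleaned)


print(make_hypothesis(
    "Students",
    "study every day",
    "higher grades",
    "students who study once a week",
))
```

Rejecting empty fields keeps the generated sentence from silently dropping the subject, the outcome, or the comparison group.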

⚗️ What Is a Hypothesis in the Scientific Method?

A hypothesis is a statement describing what you expect or predict your research will show, which you then check through observation.

It is similar to academic speculation and reasoning that anticipates the outcome of your scientific test. An effective hypothesis, therefore, should be crafted carefully and with precision.

A good hypothesis should have dependent and independent variables. These variables are the elements you will test in your research method. Each can be a concept, an event, or an object, as long as it is observable.

You change the independent variables during the experiment and observe how the dependent variables respond.

In a nutshell, a hypothesis directs and organizes the research methods you will use, forming a large section of research paper writing.

Hypothesis vs. Theory

A hypothesis is a realistic expectation that researchers make before any investigation. It is formulated and tested to prove whether the statement is true. A theory, on the other hand, is a factual principle supported by evidence. Thus, a theory is more fact-backed compared to a hypothesis.

Another difference is that a hypothesis is presented as a single statement, while a theory can be an assortment of things. Hypotheses are based on future possibilities toward a specific projection, but the results are uncertain. Theories are verified with undisputable results because of proper substantiation.

When it comes to data, a hypothesis relies on limited information, while a theory is established on an extensive data set tested on various conditions.

You should observe the stated assumption to prove its accuracy.

Since hypotheses have observable variables, their outcome is usually based on a specific occurrence. Conversely, theories are grounded on a general principle involving multiple experiments and research tests.

This general principle can apply to many specific cases.

The primary purpose of formulating a hypothesis is to present a tentative prediction for researchers to explore further through tests and observations. Theories, in their turn, aim to explain plausible occurrences in the form of a scientific study.

👍 What Does a Good Hypothesis Mean?

It would help to rely on several criteria to establish a good hypothesis. Below are the parameters you should use to analyze the quality of your hypothesis.

🧭 6 Steps to Making a Good Hypothesis

Writing a hypothesis becomes way simpler if you follow a tried-and-tested algorithm. Let’s explore how you can formulate a good hypothesis in a few steps:

Step #1: Ask Questions

The first step in hypothesis creation is asking real questions about the surrounding reality.

Why do things happen as they do? What are the causes of some occurrences?

Your curiosity will trigger great questions that you can use to formulate a stellar hypothesis. So, ensure you pick a research topic of interest to scrutinize the world’s phenomena, processes, and events.

Step #2: Do Initial Research

Carry out preliminary research and gather essential background information about your topic of choice.

The extent of the information you collect will depend on what you want to prove.

Your initial research can be complete with a few academic books or a simple Internet search for quick answers with relevant statistics.

Still, keep in mind that in this phase, it is too early to prove or disprove your hypothesis.

Step #3: Identify Your Variables

Now that you have a basic understanding of the topic, choose the dependent and independent variables.

Take note that the independent variable is the one you change or control, while the dependent variable is the outcome you measure and cannot set directly, so understand the limitations of your test before settling on a final hypothesis.

Step #4: Formulate Your Hypothesis

You can write your hypothesis as an ‘if – then’ expression. Presenting any hypothesis in this format is reliable since it describes the cause-and-effect you want to test.

For instance: If I study every day, then I will get good grades.

Step #5: Gather Relevant Data

Once you have identified your variables and formulated the hypothesis, you can start the experiment. Remember, the conclusion you make will be a proof or rebuttal of your initial assumption.

So, gather relevant information, whether for a simple or statistical hypothesis, because you need to back your statement.
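As a rough illustration of backing a statement with data, the sketch below runs a simple permutation test on the "study every day" example. The scores are made-up numbers, and the function name `permutation_p_value` and its parameters are assumptions for this example. A small p-value counts as evidence for the hypothesis rather than strict proof.

```python
import random


def permutation_p_value(group_a, group_b, n_resamples=2000, seed=0):
    """One-sided permutation test for mean(group_a) > mean(group_b).

    Repeatedly shuffles the pooled scores and counts how often a random
    split gives a mean difference at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    n_a = len(group_a)
    n_b = len(group_b)
    observed = sum(group_a) / n_a - sum(group_b) / n_b
    pooled = list(group_a) + list(group_b)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b
        if diff >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (hits + 1) / (n_resamples + 1)


# Hypothetical exam scores, invented purely for illustration.
daily_study = [85, 88, 90, 92, 87, 91]
weekly_study = [70, 75, 72, 68, 74, 71]

p = permutation_p_value(daily_study, weekly_study)
print(f"p-value: {p:.4f}")
```

A permutation test is used here because it needs only the standard library and makes few assumptions about how the scores are distributed. Other tests may suit a real study better.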

Step #6: Record Your Findings

Finally, write down your conclusions in a research paper.

Outline in detail whether the test has proved or disproved your hypothesis.

Edit and proofread your work, using a plagiarism checker to ensure the authenticity of your text.

We hope that the above tips will be useful for you. Note that if you need to conduct business analysis, you can use the free templates we’ve prepared: SWOT, PESTLE, VRIO, SOAR, and Porter’s 5 Forces.


Updated: Oct 25th, 2023


Use our hypothesis maker whenever you need to formulate a hypothesis for your study. We offer a very simple tool where you just need to provide basic info about your variables, subjects, and predicted outcomes. The rest is on us. Get a perfect hypothesis in no time!

Type of Research projects Part 2: Hypothesis-driven versus hypothesis-generating research (1 August 2018)

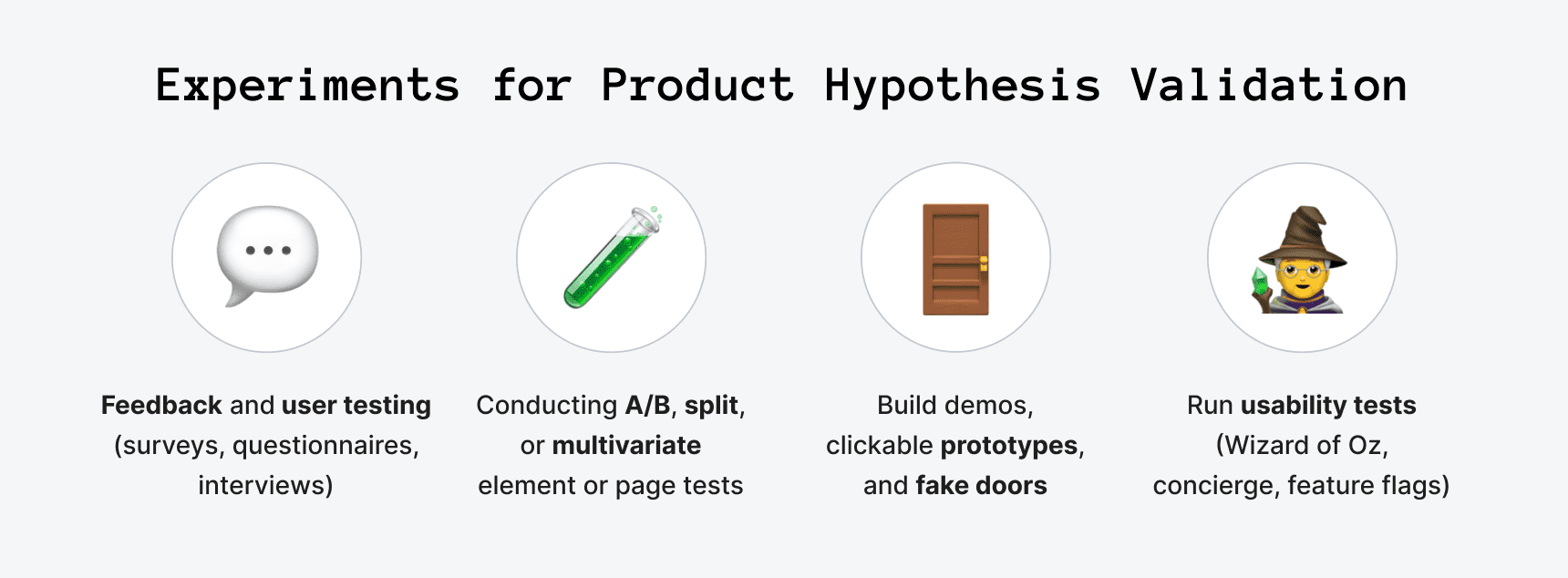

How to Generate and Validate Product Hypotheses

Every product owner knows that it takes effort to build something that'll cater to user needs. You'll have to make many tough calls if you wish to grow the company and evolve the product so it delivers more value. But how do you decide what to change in the product, your marketing strategy, or the overall direction to succeed? And how do you make a product that truly resonates with your target audience?

There are many unknowns in business, so many fundamental decisions start from a simple "what if?". But they can't be based on guesses, as you need some proof to fill in the blanks reasonably.

Because there's no universal recipe for successfully building a product, teams collect data, do research, study the dynamics, and generate hypotheses according to the given facts. They then take corresponding actions to find out whether they were right or wrong, draw conclusions, and most likely restart the process.

On this page, we thoroughly inspect product hypotheses. We'll go over what they are, how to create hypothesis statements and validate them, and what goes after this step.

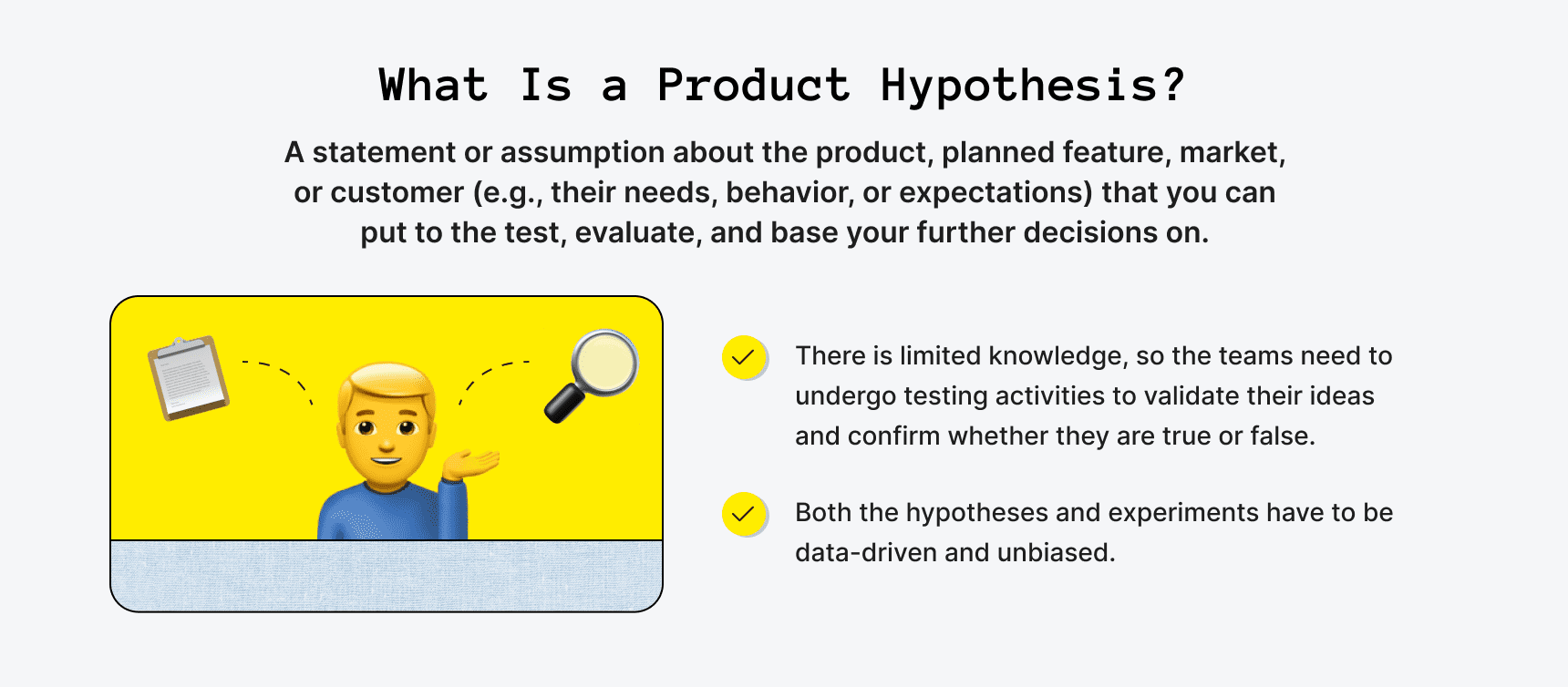

What Is a Hypothesis in Product Management?

A hypothesis in product development and product management is a statement or assumption about the product, planned feature, market, or customer (e.g., their needs, behavior, or expectations) that you can put to the test, evaluate, and base your further decisions on. It may, for instance, concern upcoming product changes and the impact they may have.

A hypothesis implies that there is limited knowledge. Hence, the teams need to undergo testing activities to validate their ideas and confirm whether they are true or false.

Hypotheses guide the product development process and may point at important findings to help build a better product that'll serve user needs. In essence, teams create hypothesis statements in an attempt to improve the offering, boost engagement, increase revenue, find product-market fit quicker, or for other business-related reasons.
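One way to keep a product hypothesis testable is to record the assumption together with the metric and thresholds that will judge it. The sketch below is an illustration under assumed names: the `ProductHypothesis` class, its fields, and the three verdict labels are hypothetical, and the numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class ProductHypothesis:
    """A product assumption plus the numbers needed to evaluate it."""
    assumption: str  # what the team believes
    metric: str      # what will be measured
    baseline: float  # metric value before the change
    target: float    # value at which the team treats the idea as supported

    def verdict(self, observed: float) -> str:
        """Classify a test result against the baseline and target."""
        if observed >= self.target:
            return "supported"
        if observed <= self.baseline:
            return "not supported"
        return "inconclusive"


h = ProductHypothesis(
    assumption="A shorter sign-up form increases completed registrations",
    metric="sign-up completion rate",
    baseline=0.40,
    target=0.45,
)
print(h.verdict(0.47))
```

Writing the target down before the test starts makes it harder to reinterpret a weak result as a success afterwards.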