TEACHING MATERIALS

Blue ocean pedagogical materials, used in nearly 3,000 universities and in almost every country in the world, go beyond the standard case-based method. Our multimedia cases and interactive exercises are designed to help you build a deeper understanding of key blue ocean concepts, from blue ocean strategy to nondisruptive creation, developed by world-renowned professors Chan Kim and Renée Mauborgne. The collection currently includes over 20 Harvard bestselling cases.

DRIVING THE FUTURE

Driving the future: how autonomous vehicles will change industries and strategy.

Author(s): KIM, W. Chan, MAUBORGNE, Renée, CHEN, Guoli, OLENICK, Michael

Case study trailer

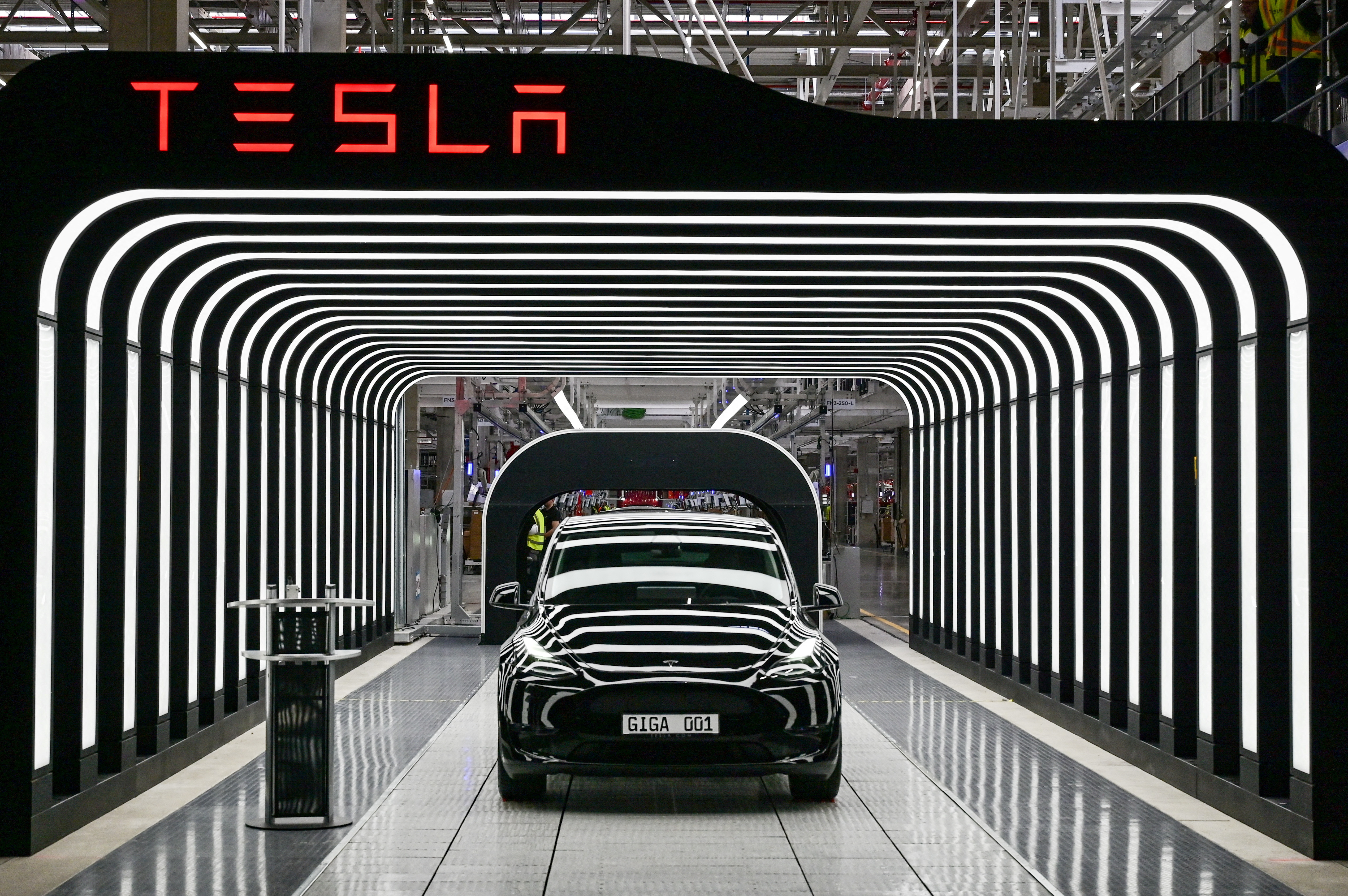

Self-driving cars are moving from science fiction to showroom fact, or at least to a car summoning platform. Waymo, the self-driving car division of Google, has ordered 82,000 self-driving cars for delivery through 2020. Cruise Automation, from General Motors, is perfecting its own fleet. Countless companies are driving full-throttle into the future. This case explores whether self-driving cars (autonomous vehicles or AVs) are a red ocean or blue ocean opportunity, and explains the difference between technological innovation and value innovation. It will prompt students to think about disruptive innovation and nondisruptive market creation, and why inventors of major technological innovations throughout history have often failed to meaningfully monetize their inventions.

HBSP | Case Centre | INSEAD

Teaching Note

Case Centre | INSEAD

Autonomous driving’s future: Convenient and connected

The dream of seeing fleets of driverless cars efficiently delivering people to their destinations has captured consumers’ imaginations and fueled billions of dollars in investment in recent years. But even after some setbacks that have pushed out timelines for autonomous-vehicle (AV) launches and delayed customer adoption, the mobility community still broadly agrees that autonomous driving (AD) has the potential to transform transportation, consumer behavior, and society at large.

Because of this, AD could create massive value for the auto industry, generating hundreds of billions of dollars before the end of this decade, McKinsey research shows (McKinsey Center for Future Mobility analysis). To realize the consumer and commercial benefits of autonomous driving, however, auto OEMs and suppliers may need to develop new sales and business strategies, acquire new technological capabilities, and address concerns about safety.

This report, which focuses on the private-passenger-car segment of the AD market, examines the potential for autonomous technologies to disrupt the passenger car market. It also outlines critical success factors that every auto OEM, supplier, and tech provider should know in order to win in the AD passenger car market. (Other McKinsey publications explore the potential of shared AVs such as robo-taxis and robo-shuttles, as well as autonomous trucks and autonomous last-mile delivery.)

Autonomous driving could produce substantial value for drivers, the auto industry, and society

AD could revolutionize the way consumers experience mobility. AD systems may make driving safer, more convenient, and more enjoyable. Hours on the road previously spent driving could be used to video call a friend, watch a funny movie, or even work. For employees with long commutes, driving an AV might increase worker productivity and even shorten the workday. Since workers can perform their jobs from an autonomous car, they could more easily move farther away from the office, which, in turn, could attract more people to rural areas and suburbs. AD might also improve mobility for elderly drivers, providing them with mobility options that go beyond public transportation or car-sharing services. Safety might also increase, with one study showing that the growing adoption of advanced driver-assistance systems (ADAS) in Europe could reduce the number of accidents by about 15 percent by 2030 (Tom Seymour, “Crash repair market to reduce by 17% by 2030 due to advanced driver systems, says ICDP,” Automotive Management Online, April 7, 2018).

Along with these consumer benefits, AD may also generate additional value for the auto industry. Today, most cars only include basic ADAS features, but major advancements in AD capabilities are on the horizon. Vehicles will ultimately achieve Society of Automotive Engineers (SAE) Level 4 (L4), or driverless control under certain conditions. Consumers want access to AD features and are willing to pay for them, according to a 2021 McKinsey consumer survey. Growing demand for AD systems could create billions of dollars in revenue. Vehicles with lidar-based Level 2+ (L2+) capabilities contain approximately $1,500 to $2,000 in component costs, and even more for cars with Level 3 (L3) and L4 options. Based on consumer interest in AD features and commercial solutions available on the market today, ADAS and AD could generate between $300 billion and $400 billion in the passenger car market by 2035, according to McKinsey analysis (Exhibit 1). (Source: McKinsey Center for Future Mobility analysis. Total revenue potential is derived from McKinsey’s base case on ADAS and AD installation rates, with the share of OEM subscription offerings at a 100 percent installation rate, and actual customer take rate and pricing assumptions by segment and ADAS/AD features. Prices are assumed to decline over time as features become industry standards and because of overall cost degression.)

The knock-on effects of autonomous cars on other industries could be significant. For example, by reducing the number of car accidents and collisions, AD technology could limit the number of consumers requiring roadside assistance and repairs. That may put pressure on those types of businesses as consumer adoption of AD rises. In addition, consumers with self-driving cars might not be required to pay steep insurance premiums, since handing over control of vehicles to AD systems might mean that individual drivers could no longer be held liable for car accidents. As a consequence, new business-to-business insurance models may arise for autonomous travel.

Several automakers are already piloting new insurance products. These companies are gleaning insights on driving behavior from autonomous technology and making personalized offers to their consumers. Since OEMs control the AD system, its performance, and the data that it generates (such as the real-time performance of drivers), auto companies can precisely tailor insurance policies to their consumers, giving them a significant advantage over external insurance providers.

How AD could transform the passenger car market

Given today’s high levels of uncertainty in the auto industry, McKinsey has developed three scenarios for autonomous-passenger car sales based upon varying levels of technology availability, customer adoption, and regulatory support (Exhibit 2). In our delayed scenario, automakers further push out AV launch timelines, and consumer adoption remains low. This scenario projects that in 2030, only 4 percent of new passenger cars sold are installed with L3+ AD functions, with that figure increasing to 17 percent in 2035.

Our base scenario assumes that OEMs can meet their announced timelines for AV launches, with a medium level of customer adoption despite the high costs of AD systems. By 2030, 12 percent of new passenger cars are sold with L3+ autonomous technologies, and 37 percent have advanced AD technologies in 2035.

Finally, in our accelerated scenario, OEMs debut new AVs quickly, with sizable revenues coming in through new business models (for example, pay as you go, which offers AD on demand, or new subscription services). In this scenario, most premium automakers preinstall hardware that makes fully autonomous driving possible when the software is ready to upgrade. In this scenario, 20 percent of passenger cars sold in 2030 include advanced AD technologies, and 57 percent have them by 2035.
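The three scenarios can be compared side by side with a few lines of arithmetic. The Python sketch below uses only the L3+ shares quoted above; the variable and function names are illustrative, and the implied annual growth rate is a simple compound-growth calculation added here for comparison, not a McKinsey figure.

```python
# Share of new passenger cars sold with L3+ AD functions (percent),
# taken from the delayed, base, and accelerated scenarios in the text.
SCENARIOS = {
    "delayed": {2030: 4, 2035: 17},
    "base": {2030: 12, 2035: 37},
    "accelerated": {2030: 20, 2035: 57},
}


def point_change(shares, start=2030, end=2035):
    """Change in share between two years, in percentage points."""
    return shares[end] - shares[start]


def implied_annual_growth(shares, start=2030, end=2035):
    """Compound annual growth rate of the share (illustrative derivation)."""
    years = end - start
    return (shares[end] / shares[start]) ** (1 / years) - 1


for name, shares in SCENARIOS.items():
    print(
        f"{name:12s} +{point_change(shares)} points, "
        f"~{implied_annual_growth(shares):.0%} implied growth per year"
    )
```

Percentage points and growth rates answer different questions: the accelerated scenario adds the most points between 2030 and 2035, while the delayed scenario grows fastest in relative terms because it starts from the smallest base.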

Delivering higher levels of automation

For automakers focused on delivering vehicles with higher levels of automation, there is enormous potential for growth. Consumers interested in the convenience of hands-free driving might want cars with more advanced autonomous functions (including L2+, L3, and L4), which give the autonomous system greater control over driving tasks. Costs for sensors and high-performance computers are decreasing, while safety standards for AD technologies are continuing to advance. (For instance, standards currently available for traffic jam pilots, which allow autonomous vehicles to navigate through stop-and-go traffic while maintaining a safe distance from other cars, could soon extend to other advanced AD functions.) Taken together, these factors could help the auto industry introduce more advanced autonomous features to a broad range of vehicles over time.

Based on McKinsey’s sales scenarios, L3 and L4 systems for driving on highways will likely be more commonly available in the private-passenger-car segment by around 2025 in Europe and North America, even though the first applications are just now coming into market. (One luxury European brand offers an L3 conditional self-driving system but restricts usage to certain well-marked highways and at reduced speeds.)

About the McKinsey Center for Future Mobility

These insights were developed by the McKinsey Center for Future Mobility (MCFM). Since 2011, the MCFM has worked with stakeholders across the mobility ecosystem by providing independent and integrated evidence about possible future-mobility scenarios. With our unique, bottom-up modeling approach, our insights enable an end-to-end analytics journey through the future of mobility, from consumer needs to modal mix across urban and rural areas, sales, value pools, and life cycle sustainability. Contact us if you are interested in getting full access to our market insights via the McKinsey Mobility Insights Portal.

Steep up-front costs for developing L3 and L4 driving systems suggest that auto companies’ efforts to commercialize more advanced AD systems may first be limited to premium-vehicle segments. Additional hardware- and software-licensing costs per vehicle for L3 and L4 systems could reach $5,000 or more during the early rollout phase, with development and validation costs likely exceeding $1 billion. Because the sticker price on these vehicles is likely to be high, there might be greater commercial potential in offering L2+ systems. These autonomous systems somewhat blur the lines between standard ADAS and automated driving, allowing drivers to take their hands off the wheel for certain periods in areas permitted by law.

L2+ systems are already available from several OEMs, with many other vehicle launches planned over the next few years. If equipped with sufficient sensor and computing power, the technology developed for L2+ systems could also contribute to the development of L3 systems. This is the approach taken by several Chinese disruptor OEMs. These companies are launching vehicles that offer L2+ systems pre-equipped with lidar sensors. The vehicles are likely to reach L3 functionality relatively soon, since the companies are likely using their on-road fleet of enhanced L2+ vehicles to collect data to learn how to handle rare edge cases, or to run the L3 system in shadow mode.

Where true L3 systems are not available, developers might also offer a combination of L2+ and L3 features. This may include an L2+ feature for automated driving on highways and in cities, together with an L3 feature for use in traffic jams.

Car buyers are highly interested in AD features

Consumers benefit from using AD systems in many ways, including greater levels of safety; ease of operation for parking, merging, and other maneuvers; additional fuel savings because of the autonomous system’s ability to maintain optimal speeds; and more quality time. Consumers understand these benefits and continue to be highly willing to consider using AD features, according to our research.

In McKinsey’s 2021 autonomous driving, connectivity, electrification, and shared mobility (ACES) survey, which polled more than 25,000 consumers about their mobility preferences, about a quarter of respondents said they are very likely to choose an advanced AD feature when purchasing their next vehicle (see Timo Möller, Asutosh Padhi, Dickon Pinner, and Andreas Tschiesner, “The future of mobility is at our doorstep,” December 19, 2019). Two-thirds of these highly interested customers would pay a one-time fee of $10,000 or an equivalent subscription rate for an L4 highway pilot, which provides hands-free driving on highways under certain conditions (Exhibit 3). This represents a price point and willingness to pay that is consistent with a few top-of-the-line AD vehicles launched in the past few years, as well as with our value-based pricing model.
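The survey figures combine into a rough share of all respondents who would pay for an L4 highway pilot. The Python sketch below treats "about a quarter" as exactly 1/4, "two-thirds" as exactly 2/3, and "more than 25,000" as exactly 25,000. Those roundings are assumptions made for illustration, so the results are order-of-magnitude estimates rather than survey findings.

```python
from fractions import Fraction

# Assumed roundings of the figures quoted in the text.
RESPONDENTS = 25_000                    # lower bound: "more than 25,000"
VERY_LIKELY_SHARE = Fraction(1, 4)      # "about a quarter" of respondents
WILLING_TO_PAY_SHARE = Fraction(2, 3)   # "two-thirds" of the highly interested


def paying_share(very_likely, willing):
    """Share of all respondents who are both highly interested and willing to pay."""
    return very_likely * willing


share = paying_share(VERY_LIKELY_SHARE, WILLING_TO_PAY_SHARE)
paying_respondents = int(RESPONDENTS * share)  # truncated to whole respondents

print(f"{float(share):.1%} of respondents, roughly {paying_respondents:,} people")
```

Under these assumptions, about one respondent in six falls into the group that is both highly interested and willing to pay the quoted one-time fee or its subscription equivalent.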

Since consumers have such different lifestyles and needs, AD systems may benefit some consumers far more than others, making them much more likely to pay for AD features. For instance, a sales manager who drives 30,000 miles a year and upgrades to an autonomous car could use all of that time previously spent driving to contact new leads or to create in-depth sales strategies with his or her team. On the other hand, a parent who uses a car primarily for shopping or for driving the kids to school might be more reluctant to pay for AD features.

Exploring the values of different consumer personas could enable OEMs and dealerships to tailor their value propositions and pricing schemes. For instance, they might implement a flexible pricing model that includes a fixed one-time fee, subscription offerings, and, potentially, an on-demand option such as paying an hourly rate for each use of a traffic jam pilot. Our research indicates that consumers prefer having different pricing options. Of the highly interested consumers, 20 percent of ACES survey respondents said they would prefer to purchase ADAS features through a subscription, while nearly 30 percent said they would prefer to pay each time they use a feature. In addition, one in four respondents said they would like to be able to unlock additional ADAS features even after purchasing a new car.

Although consumers continue to be very interested in autonomous driving, they are also adopting more cautious and realistic attitudes toward self-driving cars. For the first time in five years, consumers are less willing to consider driving a fully autonomous vehicle, our ACES consumer surveys show. Readiness to switch to a private AV is down by almost ten percentage points, with 26 percent of respondents saying they would prefer to switch to a fully autonomous car in 2021, compared with 35 percent in 2020 (Exhibit 4).

Our ACES consumer research also reveals that trust in the safety of AVs is down by five percentage points, and that the share of consumers who support government regulation of fully self-driving cars has declined by about 15 percentage points. While safety concerns are top of mind, consumers also want opportunities to test-drive AD systems and more information about the technology. To help customers become more comfortable with AVs, OEMs may need to offer hands-on experiences with AVs, address safety concerns, and educate consumers about how autonomous driving works.

Regulatory support is critical

Support from regulators is essential to overcoming AD safety concerns, creating a trusted and safe ecosystem, and implementing global standards. So far, most public officials have strongly advocated for the inclusion of ADAS capabilities in existing regulations, laying the groundwork for autonomous driving. This has resulted in a much higher penetration of ADAS functions, both in passenger cars and commercial vehicles.

The auto industry and public authorities agree on autonomous driving’s potential to save lives. Today, basic SAE L1 and L2 ADAS features are increasingly coming under regulation. This includes Europe’s Vehicle General Safety Regulation, along with Europe and North America’s New Car Assessment Program (NCAP), a voluntary program that establishes standards for car safety. NCAP is a key advocate for the integration of active safety systems in passenger cars.

In 2020 and 2022, OEMs seeking the NCAP’s highest, five-star safety rating were faced with the challenges of implementing features such as automatic emergency braking (AEB) and automatic emergency steering (AES). As a result, US and European OEMs in all segments have developed these features, with more than 90 percent of all European- and American-made cars offering L1 capabilities as a baseline.

There is already sufficient regulation to enable companies to pilot robo-shuttle services in cities, primarily in the United States, China, Israel, and now in Europe. Companies will continue their test-and-learn cycles with the robo-shuttle pilots and move into a phase of stable operations over the next few years. Still missing, however, are global standards regarding AD functions for use in private vehicles, although many regulators are working on them.

The United Nations Economic Commission for Europe has a rule on automated lane-keeping systems that regulates the introduction of L3 AD for speeds up to 60 kilometers per hour. In addition, the UN’s World Forum for Harmonization of Vehicle Regulations (WP.29) is working on additional regulation for using AD functions at higher speeds. This group plans to extend the use of advanced autonomous systems to speeds of up to 130 kilometers per hour, with the rule coming into force in 2023. Germany has also offered comprehensive legislation on AD that has allowed one European OEM to launch the first true L3 feature in a current model. Similar legislation exists in Japan and has recently been authorized in France. The development of global AD standards for private-passenger vehicles is clearly in motion.

Succeeding in the passenger car market

To succeed in the autonomous passenger car market, OEMs and suppliers will likely need to change how they operate. This may require a new approach to R&D that focuses on software-driven development processes, a plan to make use of fleet data, and flexible, feature-rich offerings across vehicle segments that consider consumers’ varying price points. Decoupling the development of hardware components and software for AD platforms could allow automakers and suppliers to keep design costs more feasible, since the AV architecture could then be reused.

To win over consumers, auto companies could also develop a customer-centered, go-to-market strategy. Moreover, leaders might explore different ownership models and sales methods with the end-to-end (E2E) business case in mind, taking into account the entire life cycle of the autonomous vehicle. Finally, leaders may also need to create an organization that will support all of the above changes.

Creating a new R&D strategy

Succeeding in AD may require OEMs to make a mindset change. Simply put, the old ways of doing things are no longer valid. Successful OEMs should focus on building up in-house competencies such as excellence in software development. Although the automotive industry has honed its ability to split development work among multiple partners and suppliers, the sheer complexity of an L3- or L4-capable AD stack limits the potential for partnering with many different specialists.

Indeed, developing AD capabilities requires much stronger ownership of the entire ecosystem, as well as the ability to codevelop hardware and software—in particular, chips and neural networks. This suggests leading OEMs should either develop strong in-house capabilities or form partnerships with leading tech players tasked with delivering the entire driving platform.

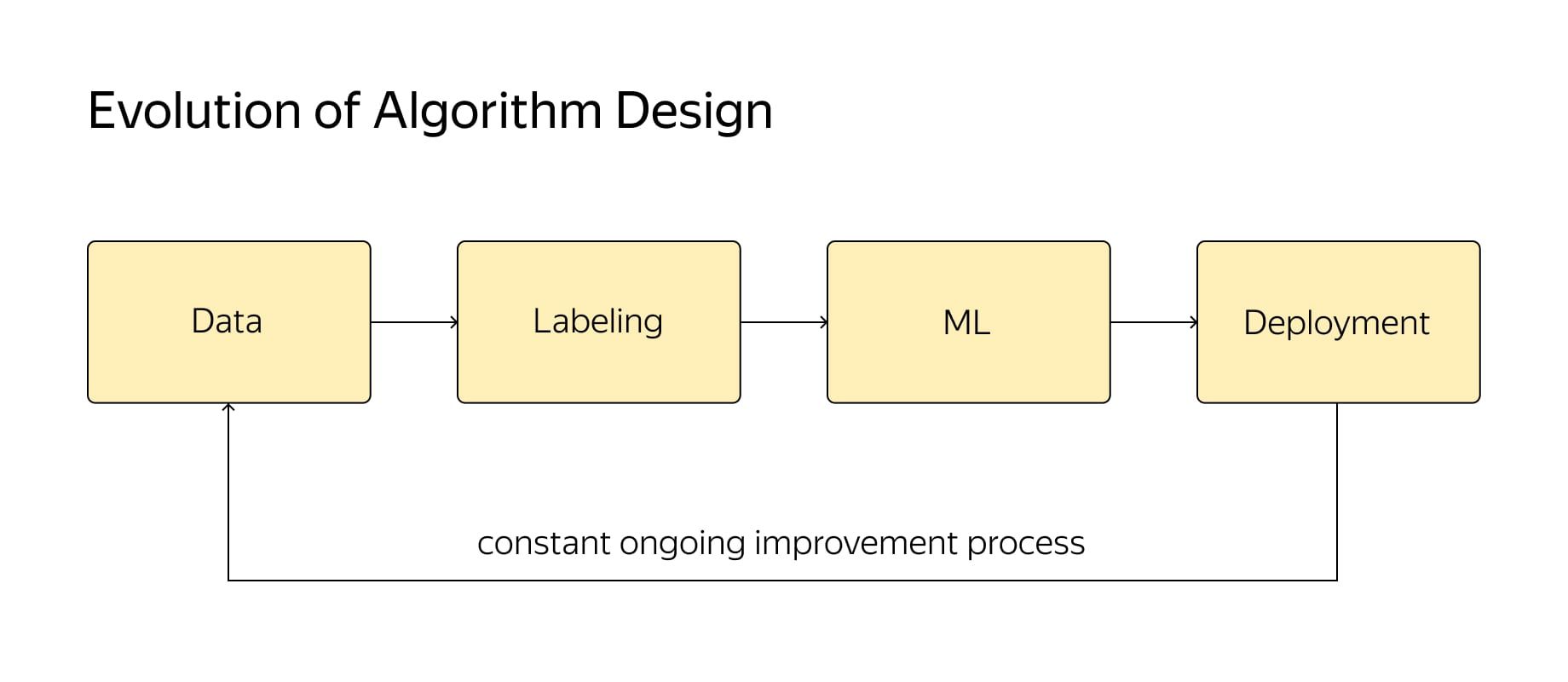

OEMs would also benefit from holistically managing their road maps for developing AD features and portfolios of offerings. They should ensure that the AD architecture is flexible and reusable where possible. Moreover, to stay competitive over a vehicle platform’s life cycle, systems must be easy to upgrade. Developing new strategies to collect fleet data, selecting relevant testing scenarios, and using the data to train and validate the AD system are also likely to be essential.

Developing customer-centered, go-to-market strategies

OEMs and their dealer networks should work to dispel the many uncertainties faced by consumers when deciding to buy a car with AD capabilities. Although consumers remain highly interested in AD, most buyers have not yet driven an autonomous car. Consumers receive many different (and sometimes contradictory) messages throughout the car-buying journey, from sources that hype up the technology to those that tout significant safety concerns. To win the trust of consumers, OEMs and dealerships may need to deliver additional sales training so that employees can pitch AD systems to customers and explain the technologies in enough detail to alleviate customer concerns.

Enabling customers to experience AD firsthand is critical, so auto companies may want to offer a test-drive that introduces the AD platform. Changing the business model from offering one-time licensing to an ongoing subscription plan could make it easier for customers to afford an AV and provide additional upside for OEMs. Our research indicates that in the future, all three business models (one-time sales, subscription pricing, and pay per use) may generate significant revenue. This implies that OEMs and other companies might need to adapt their go-to-market approach in order to sell subscriptions. OEMs might consider offering subscriptions that go beyond AD features and potential vehicle ownership, such as in-car connectivity.

Making an end-to-end business case

With new forms of revenue coming in through subscriptions and pay-per-use offerings, OEMs may need to rethink how they calculate the business case for their vehicles and shift toward E2E marketing strategies. That means considering subscription pricing and length, sales of upgrades, software maintenance, and potential upselling to more advanced systems. For subscription pricing, OEMs are likely to face higher up-front costs, since they have to equip all vehicles with the technologies that will make AD features run. In return, they might expect higher customer use and revenues over the vehicle’s life cycle.
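The trade-off described above, higher up-front cost on every vehicle against subscription revenue from only some owners, can be sketched as a per-vehicle calculation. The Python example below is a minimal illustration: the class, its field names, and every input value are hypothetical and are not taken from McKinsey's business case model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubscriptionCase:
    """Per-vehicle inputs; all values used below are made-up examples."""

    hardware_cost: float      # up-front cost to equip each vehicle (USD)
    monthly_price: float      # subscription price (USD per month)
    months_subscribed: float  # average months a subscriber keeps paying
    take_rate: float          # fraction of vehicles whose owners subscribe

    def revenue_per_vehicle(self) -> float:
        """Expected subscription revenue averaged over all equipped vehicles."""
        return self.take_rate * self.monthly_price * self.months_subscribed

    def margin_per_vehicle(self) -> float:
        """Expected revenue minus the hardware fitted to every vehicle."""
        return self.revenue_per_vehicle() - self.hardware_cost

    def break_even_take_rate(self) -> float:
        """Take rate at which subscription revenue just covers hardware cost."""
        return self.hardware_cost / (self.monthly_price * self.months_subscribed)


# Hypothetical inputs chosen only to show the mechanics.
case = SubscriptionCase(
    hardware_cost=2_000, monthly_price=100, months_subscribed=48, take_rate=0.5
)
print(f"margin per vehicle: ${case.margin_per_vehicle():,.0f}")
print(f"break-even take rate: {case.break_even_take_rate():.0%}")
```

Because the hardware cost is paid on every vehicle while revenue depends on the take rate, small changes in customer uptake or subscription length can move the case from loss to profit, which is why the calculation has to span the vehicle's life cycle.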

Based on McKinsey’s customer research and business case studies, AD subscription models may initially be economically viable only for premium D-segment vehicles (large cars such as sedans or wagons) and above, particularly those that already exhibit higher revenues from ADAS/AD functions. OEMs may need to adjust their internal key performance indicators (KPI), financing structures, and strategies for communicating with investors, since the E2E business model reduces short-term profitability in exchange for long-term revenue.

Reorganizing the company

Software is the key differentiator for AD, so organizations must excel in several areas: attracting coding talent, the development process, and capabilities in simulation and validation. It’s worth noting that leading AD players do not necessarily have the biggest development teams. In fact, the development teams deployed by leading disruptive players are often significantly smaller than those of some other large OEM groups and tier-one suppliers. This highlights the importance of having the right talent, combined with effective development processes and best-in-class tool chains.

Experience suggests that deploying more resources can backfire, creating additional fragmentation and making communication needlessly complex for companies managing development projects. This is why it is often not a winning formula to install managers experienced in hardware development or embedded-software development to lead AD systems’ software development teams.

Implications for suppliers

Suppliers may also need to adapt to new industry success factors. They face fierce competition for full-stack solutions that will likely lead to a consolidation of players. To compete, suppliers must be focused and nimble. They might benefit from offering different delivery models to OEM customers, from stand-alone hardware solutions to fully integrated hardware–software solutions. In return, new opportunities may open up for developing joint business models closer to end customers, potentially including the possibility of revenue sharing.

For state-of-the-art AD solutions, companies will need access to large amounts of fleet data to train algorithms to achieve low-enough failure rates. While OEMs have fleet access and only need to find suitable technology to extract data from their customer fleets, suppliers must depend on partners or key customers to gain access. Consequently, it is mission critical for suppliers seeking to develop state-of-the-art AD systems to recruit a close lead customer early on for codevelopment and fleet access.

A lack of access to substantial amounts of fleet data, funding, and sufficient talent will probably limit the number of companies that can successfully offer full-stack AD systems. The result may be a “winner takes most” market dynamic. Companies with the best access to data and funding will likely enjoy a strong competitive advantage over those that do not have this information, since they will have a better chance to advance their technology and get ahead of their competitors.

As a result, the number of successful suppliers or tech companies delivering a full AD system will likely remain limited to a handful of companies, in both the West and China. For the first generation of AD systems, joint development of software and the required chips may help the full system achieve better performance and efficiency, with a lower risk of late integration issues. This could further limit the number of potential industry winners.

Achieving long-term success may also require suppliers to articulate their competitive advantage, value proposition, and strategies. They should decide whether or not to become a full-stack player for the most advanced systems or concentrate on dedicated areas of the stack, which could be either hardware components or software elements. Our research shows that a targeted approach may yield higher returns for many suppliers and potentially offer substantial and attractive value pools. The total value of the passenger car AD components market could reach $55 billion to $80 billion by 2030, based on a detailed analysis that assumes a medium AD adoption scenario (Exhibit 5). In this scenario, most of the revenue would be generated by control units.

OEMs often follow different strategies for their lower-end ADAS and higher-end AD solutions, so suppliers that want to play across the entire technology stack may need to work within flexible delivery models. This could include supplying components, delivering stand-alone software functions such as parking, or delivering fully integrated ADAS or AD stacks. While delivering components is a business model that allows partnering with many different OEMs, supplying targeted software solutions or fully integrated software stacks is only possible when OEMs have decided to outsource.

Because most leading OEMs in AD development use in-house development for their most advanced systems, the number of potential customers for full-stack solutions is quite limited. For singular functions or add-ons (for example, parking or less sophisticated ADAS systems), there is a larger range of customers looking for suppliers. ADAS and AD systems are highly dependent on software, so supply chain monetization strategies could change. For instance, instead of charging a one-time fee for each component, suppliers might charge for performing regular system updates. They might even transition to a revenue-sharing model, which would increase the financial incentive to keep features up to date.

New technology companies are also entering a market previously reserved for tier-one automotive suppliers. Tech companies currently active in the passenger car market are mainly starting from a system-on-chip competency and building the software suite on top. There is also the chance that, in the future, L4 robo-taxi and robo-shuttle technology providers may enter the auto-supply market, but these companies will need to evaluate the applicability of AD technologies and cost positions against what customers require from passenger cars.

At first glance, these new tech companies may appear to threaten incumbent tier-one auto suppliers, since they compete for business from the same OEMs. But tech companies and incumbent tier-one suppliers could potentially benefit from new partnership opportunities in which they provide complementary capabilities in software and hardware development that would also help to industrialize AD solutions.

Securing a leading position as an AD supplier will likely be challenging. It may require companies to develop strong capabilities in technology and economies of scale to attain a position as cost leader. But as suppliers begin to talk to OEMs about equipping fleets with new technologies, additional new business opportunities—including profit sharing—could arise. Critically, suppliers could benefit from a new operating model for working with OEMs that ensures sufficient upside beyond just sharing risks, since suppliers do not have the direct access to car buyers or drivers that would allow them to communicate certain value propositions.

High potential, high uncertainty

New AD technologies have tremendous potential to provide new levels of safety and convenience for consumers, generate significant value within the auto industry, and transform how people travel. At the same time, the dynamic and rapidly evolving AD passenger car market is producing high levels of uncertainty. All companies in the AD value chain—from automakers and suppliers to technology providers—must have clear, well-aligned strategies. Companies seeking to win in the autonomous passenger car market could benefit from a targeted value proposition, a clear vision of where the market is heading (including well-developed scenarios that cover the next ten years at minimum), and an understanding of what consumers want most.

To start, companies can evaluate their starting positions against their longer-term business goals and priorities. The result should be an AD portfolio strategy, feature road map, and detailed implementation plan that addresses each critical success factor. Companies will likely benefit from securing key capabilities, revamping the organization, updating internal processes, and developing external relationships with partners and regulators. With OEMs regularly revisiting timelines for rolling out new AD vehicles, companies may also need to frequently review and update their business strategies. Success in AD is not a given. But to realize the full promise of autonomous driving, forward-looking companies and regulatory bodies can pave the way.

Johannes Deichmann is a partner in McKinsey’s Stuttgart office; Eike Ebel is a consultant in the Frankfurt office, where Kersten Heineke is a partner; Ruth Heuss is a senior partner in the Berlin office; and Martin Kellner is an associate partner in the Munich office, where Fabian Steiner is a consultant.

This article was edited by Belinda Yu, an editor in McKinsey’s Atlanta office.


Self-driving Vehicles


Since the 1930s, science fiction writers dreamed of a future with self-driving cars, and building them has been a challenge for the AI community since the 1960s. By the 2000s, the dream of autonomous vehicles became a reality in the sea and sky, and even on Mars, but self-driving cars existed only as research prototypes in labs. Driving in a city was considered to be a problem too complex for automation due to factors like pedestrians, heavy traffic, and the many unexpected events that can happen outside of the car’s control. Although the technological components required to make such autonomous driving possible were available in 2000—and indeed some autonomous car prototypes existed [30] [31] [32] —few predicted that mainstream companies would be developing and deploying autonomous cars by 2015. During the first Defense Advanced Research Projects Agency (DARPA) “grand challenge” on autonomous driving in 2004, research teams failed to complete the challenge in a limited desert setting.

But in eight short years, from 2004 to 2012, speedy and surprising progress occurred in both academia and industry. Advances in sensing technology and machine learning for perception tasks have sped progress and, as a result, Google’s autonomous vehicles and Tesla’s semi-autonomous cars are driving on city streets today. Google’s self-driving cars, which have logged more than 1,500,000 miles (300,000 miles without an accident), [33] are completely autonomous, with no human input needed. Tesla has widely released self-driving capability to existing cars with a software update. [34] Its cars are semi-autonomous, with human drivers expected to stay engaged and take over if they detect a potential problem. It is not yet clear whether this semi-autonomous approach is sustainable, since as people become more confident in the cars' capabilities, they are likely to pay less attention to the road, and become less reliable when they are most needed. The first traffic fatality involving an autonomous car, which occurred in June of 2016, brought this question into sharper focus. [35]

In the near future, sensing algorithms will achieve super-human performance for capabilities required for driving. Automated perception, including vision, is already near or at human-level performance for well-defined tasks such as recognition and tracking. Advances in perception will be followed by algorithmic improvements in higher level reasoning capabilities such as planning. A recent report predicts self-driving cars to be widely adopted by 2020. [36] And the adoption of self-driving capabilities won’t be limited to personal transportation. We will see self-driving and remotely controlled delivery vehicles, flying vehicles, and trucks. Peer-to-peer transportation services (e.g. ridesharing) are also likely to utilize self-driving vehicles. Beyond self-driving cars, advances in robotics will facilitate the creation and adoption of other types of autonomous vehicles, including robots and drones.

It is not yet clear how much better self-driving cars need to become to encourage broad acceptance. The collaboration required in semi-self-driving cars and its implications for the cognitive load of human drivers are not well understood. But if future self-driving cars are adopted with the predicted speed, and they exceed human-level performance in driving, other significant societal changes will follow. Self-driving cars will eliminate one of the biggest causes of accidental death and injury in the United States, and lengthen people’s life expectancy. On average, a commuter in the US spends twenty-five minutes driving each way. [37] With self-driving car technology, people will have more time to work or entertain themselves during their commutes. And the increased comfort and decreased cognitive load with self-driving cars and shared transportation may affect where people choose to live. The reduced need for parking may affect the way cities and public spaces are designed. Self-driving cars may also serve to increase the freedom and mobility of different subgroups of the population, including the young, the elderly, and the disabled.

Self-driving cars and peer-to-peer transportation services may eliminate the need to own a vehicle. The effect on total car use is hard to predict. Trips of empty vehicles and people’s increased willingness to travel may lead to more total miles driven. Alternatively, shared autonomous vehicles—people using cars as a service rather than owning their own—may reduce total miles, especially if combined with well-constructed incentives, such as tolls or discounts, to spread out travel demand, share trips, and reduce congestion. The availability of shared transportation may displace the need for public transportation—or public transportation may change form towards personal rapid transit, already available in four cities, [38] which uses small capacity vehicles to transport people on demand and point-to-point between many stations. [39]

As autonomous vehicles become more widespread, questions will arise over their security, including how to ensure that technologies are safe and properly tested under different road conditions prior to their release. Autonomous vehicles and the connected transportation infrastructure will create a new venue for hackers to exploit vulnerabilities to attack. There are also ethical questions involved in programming cars to act in situations in which human injury or death is inevitable, especially when there are split-second choices to be made regarding whom to put at risk. The legal systems in most states in the US do not have rules covering self-driving cars. As of 2016, four states in the US (Nevada, Florida, California, and Michigan), Ontario in Canada, the United Kingdom, France, and Switzerland have passed rules for the testing of self-driving cars on public roads. Even these laws do not address issues about responsibility and assignment of blame for an accident for self-driving and semi-self-driving cars. [40]

[30] "Navlab," Wikipedia , last updated June 4, 2016, accessed August 1, 2016, https://en.wikipedia.org/wiki/Navlab .

[31] "Navlab: The Carnegie Mellon University Navigation Laboratory," Carnegie Mellon University, accessed August 1, 2016, http://www.cs.cmu.edu/afs/cs/project/alv/www/ .

[32] "Eureka Prometheus Project," Wikipedia , last modified February 12, 2016, accessed August 1, 2016, https://en.wikipedia.org/wiki/Eureka_Prometheus_Project .

[33] “Google Self-Driving Car Project,” Google, accessed August 1, 2016, https://www.google.com/selfdrivingcar/ .

[34] Molly McHugh, "Tesla’s Cars Now Drive Themselves, Kinda," Wired , October 14, 2015, accessed August 1, 2016, http://www.wired.com/2015/10/tesla-self-driving-over-air-update-live/ .

[35] Anjali Singhvi and Karl Russell, "Inside the Self-Driving Tesla Fatal Accident," The New York Times , last updated July 12, 2016, accessed August 1, 2016, http://www.nytimes.com/interactive/2016/07/01/business/inside-tesla-accident.html .

[36] John Greenough, "10 million self-driving cars will be on the road by 2020," Business Insider , June 15, 2016, accessed August 1, 2016, http://www.businessinsider.com/report-10-million-self-driving-cars-will-be-on-the-road-by-2020-2015-5-6 .

[37] Brian McKenzie and Melanie Rapino, "Commuting in the United States: 2009," American Community Survey Reports , United States Census Bureau, September 2011, accessed August 1, 2016, https://www.census.gov/prod/2011pubs/acs-15.pdf .

[38] Morgantown, West Virginia; Masdar City, UAE; London, England; and Suncheon, South Korea.

[39] "Personal rapid transit," Wikipedia , last modified July 18, 2016, accessed August 1, 2016, https://en.wikipedia.org/wiki/Personal_rapid_transit .

[40] Patrick Lin, "The Ethics of Autonomous Cars," The Atlantic , October 8, 2013, accessed August 1, 2016, http://www.theatlantic.com/technology/archive/2013/10/the-ethics-of-autonomous-cars/280360/ .

Cite This Report

Peter Stone, Rodney Brooks, Erik Brynjolfsson, Ryan Calo, Oren Etzioni, Greg Hager, Julia Hirschberg, Shivaram Kalyanakrishnan, Ece Kamar, Sarit Kraus, Kevin Leyton-Brown, David Parkes, William Press, AnnaLee Saxenian, Julie Shah, Milind Tambe, and Astro Teller. "Artificial Intelligence and Life in 2030." One Hundred Year Study on Artificial Intelligence: Report of the 2015-2016 Study Panel, Stanford University, Stanford, CA, September 2016. Doc: http://ai100.stanford.edu/2016-report . Accessed: September 6, 2016.

Report Authors

AI100 Standing Committee and Study Panel

© 2016 by Stanford University. Artificial Intelligence and Life in 2030 is made available under a Creative Commons Attribution-NoDerivatives 4.0 License (International): https://creativecommons.org/licenses/by-nd/4.0/ .


- 24 October 2018

Self-driving car dilemmas reveal that moral choices are not universal


When a driver slams on the brakes to avoid hitting a pedestrian crossing the road illegally, she is making a moral decision that shifts risk from the pedestrian to the people in the car. Self-driving cars might soon have to make such ethical judgments on their own — but settling on a universal moral code for the vehicles could be a thorny task, suggests a survey of 2.3 million people from around the world.


Nature 562 , 469-470 (2018)

doi: https://doi.org/10.1038/d41586-018-07135-0

Awad, E. et al . Nature https://doi.org/10.1038/s41586-018-0637-6 (2018).


Bonnefon, J. et al. Science 352 , 1573-1576 (2016).



MIT News | Massachusetts Institute of Technology


Exploring new methods for increasing safety and reliability of autonomous vehicles


When we think of getting on the road in our cars, our first thoughts may not be that fellow drivers are particularly safe or careful — but human drivers are more reliable than one may expect. For each fatal car crash in the United States, motor vehicles log a whopping hundred million miles on the road.

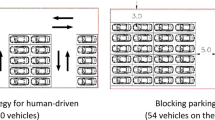

Human reliability also plays a role in how autonomous vehicles are integrated in the traffic system, especially around safety considerations. Human drivers continue to surpass autonomous vehicles in their ability to make quick decisions and perceive complex environments: Autonomous vehicles are known to struggle with seemingly common tasks, such as taking on- or off-ramps, or turning left in the face of oncoming traffic. Despite these enormous challenges, embracing autonomous vehicles in the future could yield great benefits, like clearing congested highways; enhancing freedom and mobility for non-drivers; and boosting driving efficiency, an important piece in fighting climate change.

MIT engineer Cathy Wu envisions ways that autonomous vehicles could be deployed with their current shortcomings, without experiencing a dip in safety. “I started thinking more about the bottlenecks. It’s very clear that the main barrier to deployment of autonomous vehicles is safety and reliability,” Wu says.

One path forward may be to introduce a hybrid system, in which autonomous vehicles handle easier scenarios on their own, like cruising on the highway, while transferring more complicated maneuvers to remote human operators. Wu, who is a member of the Laboratory for Information and Decision Systems (LIDS), a Gilbert W. Winslow Assistant Professor of Civil and Environmental Engineering (CEE) and a member of the MIT Institute for Data, Systems, and Society (IDSS), likens this approach to air traffic controllers on the ground directing commercial aircraft.

In a paper published April 12 in IEEE Transactions on Robotics , Wu and co-authors Cameron Hickert and Sirui Li (both graduate students at LIDS) introduced a framework for how remote human supervision could be scaled to make a hybrid system efficient without compromising passenger safety. They noted that if autonomous vehicles were able to coordinate with each other on the road, they could reduce the number of moments in which humans needed to intervene.

Humans and cars: finding a balance that’s just right

For the project, Wu, Hickert, and Li sought to tackle a maneuver that autonomous vehicles often struggle to complete. They decided to focus on merging, specifically when vehicles use an on-ramp to enter a highway. In real life, merging cars must accelerate or slow down in order to avoid crashing into cars already on the road. In this scenario, if an autonomous vehicle was about to merge into traffic, remote human supervisors could momentarily take control of the vehicle to ensure a safe merge. In order to evaluate the efficiency of such a system, particularly while guaranteeing safety, the team specified the maximum amount of time each human supervisor would be expected to spend on a single merge. They were interested in understanding whether a small number of remote human supervisors could successfully manage a larger group of autonomous vehicles, and the extent to which this human-to-car ratio could be improved while still safely covering every merge.

With more autonomous vehicles in use, one might assume a need for more remote supervisors. But in scenarios where autonomous vehicles coordinated with each other, the team found that cars could significantly reduce the number of times humans needed to step in. For example, a coordinating autonomous vehicle already on a highway could adjust its speed to make room for a merging car, eliminating a risky merging situation altogether.

The team substantiated the potential to safely scale remote supervision in two theorems. First, using a mathematical framework known as queuing theory, the researchers formulated an expression to capture the probability of a given number of supervisors failing to handle all merges pooled together from multiple cars. This way, the researchers were able to assess how many remote supervisors would be needed in order to cover every potential merge conflict, depending on the number of autonomous vehicles in use. The researchers derived a second theorem to quantify the influence of cooperative autonomous vehicles on surrounding traffic for boosting reliability, to assist cars attempting to merge.

When the team modeled a scenario in which 30 percent of cars on the road were cooperative autonomous vehicles, they estimated that a ratio of one human supervisor to every 47 autonomous vehicles could cover 99.9999 percent of merging cases. But this level of coverage drops below 99 percent, an unacceptable range, in scenarios where autonomous vehicles did not cooperate with each other.
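To get a feel for how queuing theory relates supervisor count to coverage, here is a minimal sketch. It is not the expression derived in the paper: it uses the textbook Erlang B formula for a loss system, and it assumes (purely for illustration) that merge requests arrive at random and that a request is "missed" whenever every supervisor is busy. The function names and the example load are hypothetical.

```python
def erlang_b(offered_load: float, servers: int) -> float:
    """Probability that all `servers` are busy in an M/M/c/c loss system.

    offered_load = arrival rate of merge requests * mean handling time,
    expressed in erlangs. Uses the standard numerically stable recursion.
    """
    blocking = 1.0  # with zero supervisors every request is missed
    for k in range(1, servers + 1):
        blocking = offered_load * blocking / (k + offered_load * blocking)
    return blocking


def min_supervisors(offered_load: float, max_miss_probability: float) -> int:
    """Smallest supervisor pool whose miss probability meets the target."""
    servers = 0
    while erlang_b(offered_load, servers) > max_miss_probability:
        servers += 1
    return servers


if __name__ == "__main__":
    # Hypothetical load: 47 vehicles, 2 merges per hour each, 10 s per merge.
    load = 47 * 2 * 10 / 3600
    print(min_supervisors(load, 1e-6))
```

The recursion shows the general shape of the trade-off: each added supervisor cuts the miss probability sharply, and anything that lowers the offered load (such as vehicles cooperating so that fewer merges need help) lets the same pool cover more cars.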

“If vehicles were to coordinate and basically prevent the need for supervision, that’s actually the best way to improve reliability,” Wu says.

Cruising toward the future

The team decided to focus on merging not only because it’s a challenge for autonomous vehicles, but also because it’s a well-defined task associated with a less-daunting scenario: driving on the highway. About half of the total miles traveled in the United States occur on interstates and other freeways. Since highways allow higher speeds than city roads, Wu says, “If you can fully automate highway driving … you give people back about a third of their driving time.”

If it became feasible for autonomous vehicles to cruise unsupervised for most highway driving, the challenge of safely navigating complex or unexpected moments would remain. For instance, “you [would] need to be able to handle the start and end of the highway driving,” Wu says. You would also need to be able to manage times when passengers zone out or fall asleep, making them unable to quickly take over controls should it be needed. But if remote human supervisors could guide autonomous vehicles at key moments, passengers may never have to touch the wheel. Besides merging, other challenging situations on the highway include changing lanes and overtaking slower cars on the road.

Although remote supervision and coordinated autonomous vehicles are hypotheticals for high-speed operations, and not currently in use, Wu hopes that thinking about these topics can encourage growth in the field.

“This gives us some more confidence that the autonomous driving experience can happen,” Wu says. “I think we need to be more creative about what we mean by ‘autonomous vehicles.’ We want to give people back their time — safely. We want the benefits, we don’t strictly want something that drives autonomously.”


Self-Driving Cars With Convolutional Neural Networks (CNN)

Humanity has been waiting for self-driving cars for several decades. Thanks to the extremely fast evolution of technology, this idea recently went from “possible” to “commercially available in a Tesla”.

Deep learning is one of the main technologies that enabled self-driving. It’s a versatile tool that can be applied to a wide range of science and engineering problems: it’s used in physics, for example to analyze proton-proton collisions in the Large Hadron Collider, just as well as in Google Lens to classify pictures.

In this article, we’ll focus on deep learning algorithms in self-driving cars – convolutional neural networks (CNN). CNN is the primary algorithm that these systems use to recognize and classify different parts of the road, and to make appropriate decisions.

Along the way, we’ll see how Tesla, Waymo, and Nvidia use CNN algorithms to make their cars driverless or autonomous.


How do self-driving cars work?

One of the first self-driving cars, the Autonomous Land Vehicle In a Neural Network (ALVINN), was built in 1989. It used neural networks to detect lines, segment the environment, navigate itself, and drive. It worked well, but it was limited by slow processors and insufficient data.

With today’s high-performance graphics cards, processors, and huge amounts of data, self-driving is more powerful than ever. If it becomes mainstream, it will reduce traffic congestion and increase road safety.

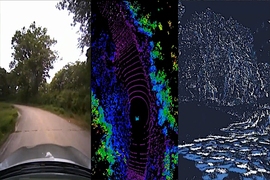

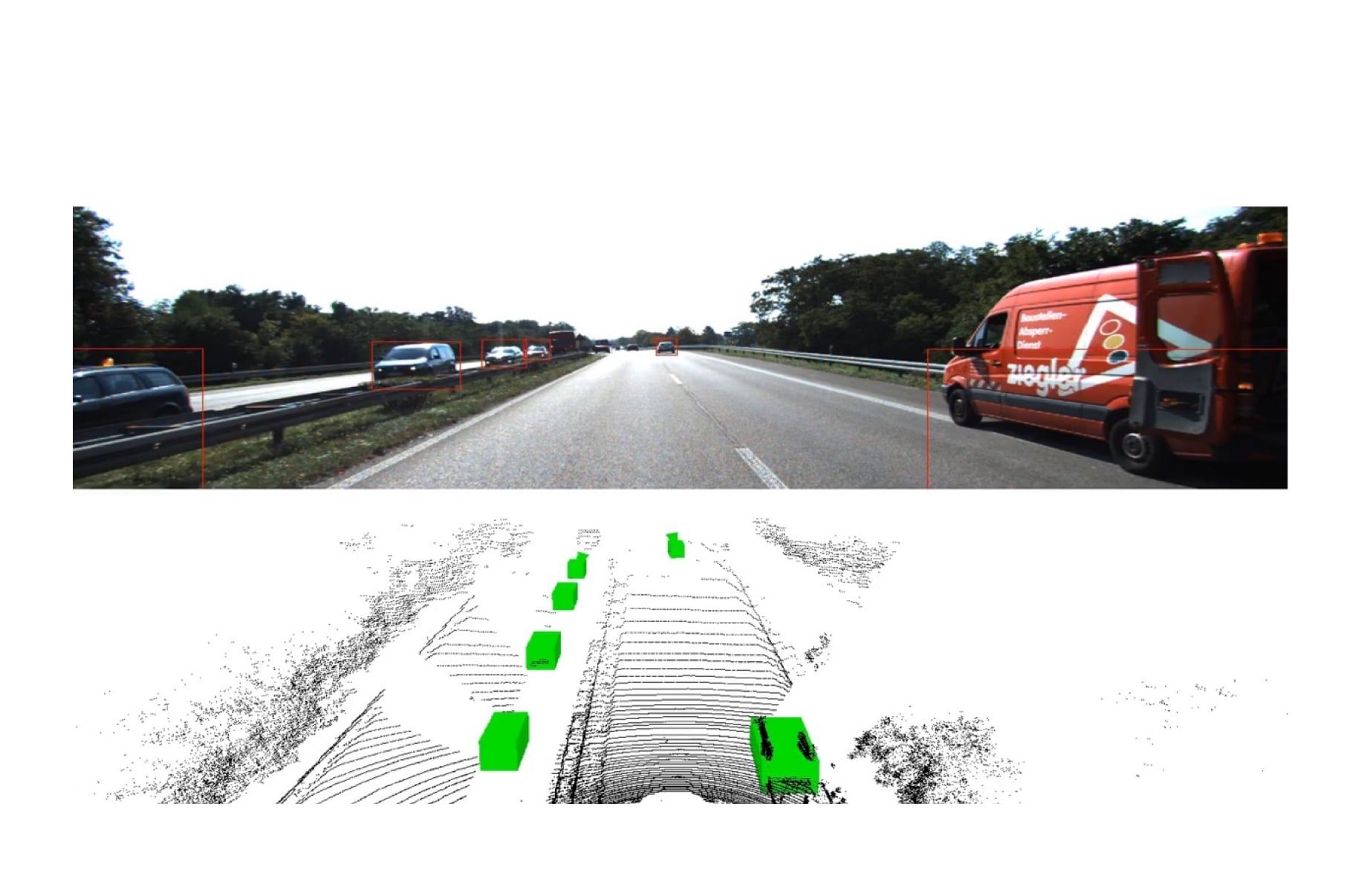

Self-driving cars are autonomous decision-making systems. They can process streams of data from different sensors such as cameras, LiDAR, RADAR, GPS, or inertial sensors. This data is then modeled using deep learning algorithms, which then make decisions relevant to the environment the car is in.

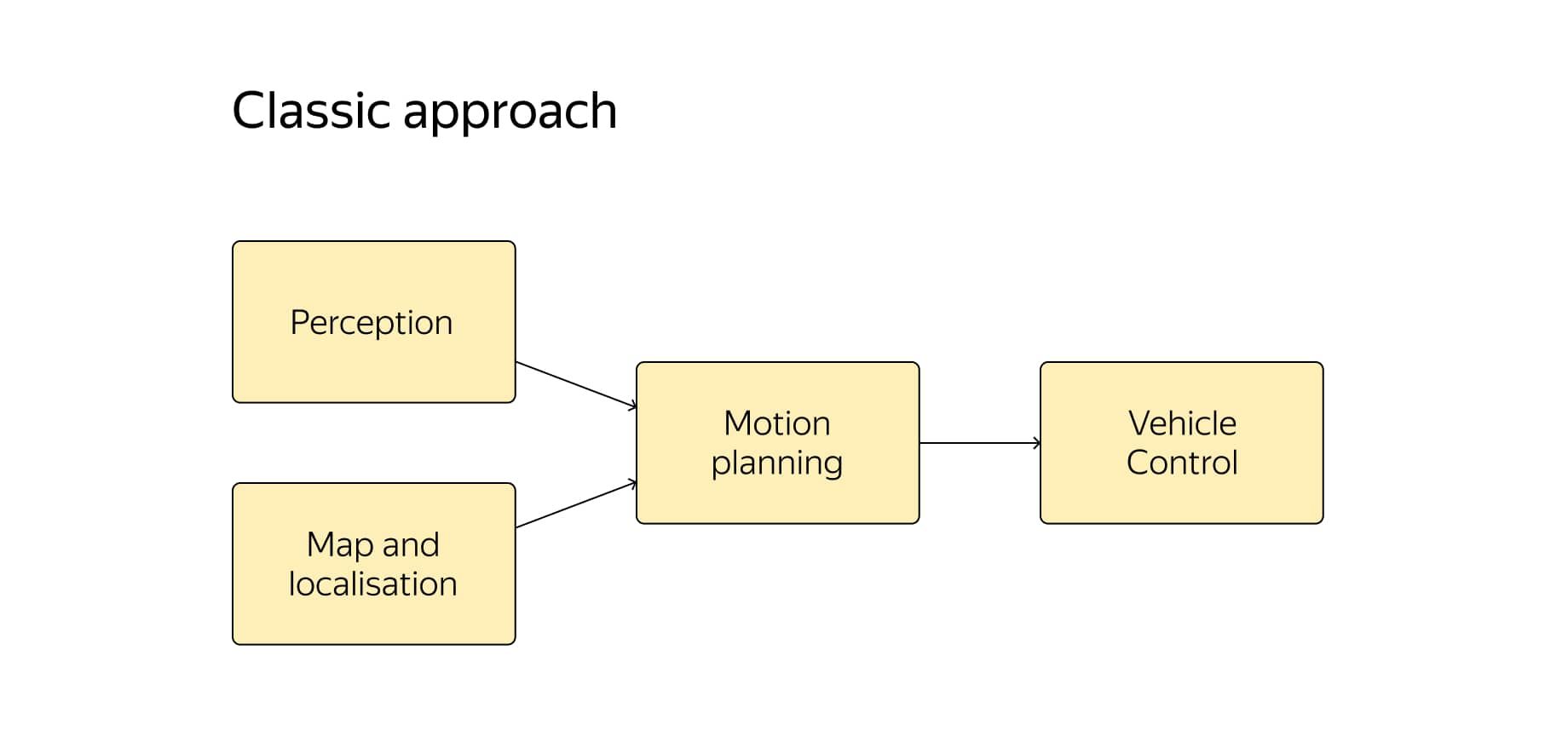

The image above shows a modular perception-planning-action pipeline used to make driving decisions. The key components of this method are the different sensors that fetch data from the environment.

To understand the workings of self-driving cars, we need to examine the four main parts:

- Perception

- Localization

- Prediction

- Decision-making, which covers high-level path planning, behaviour arbitration, and motion controllers

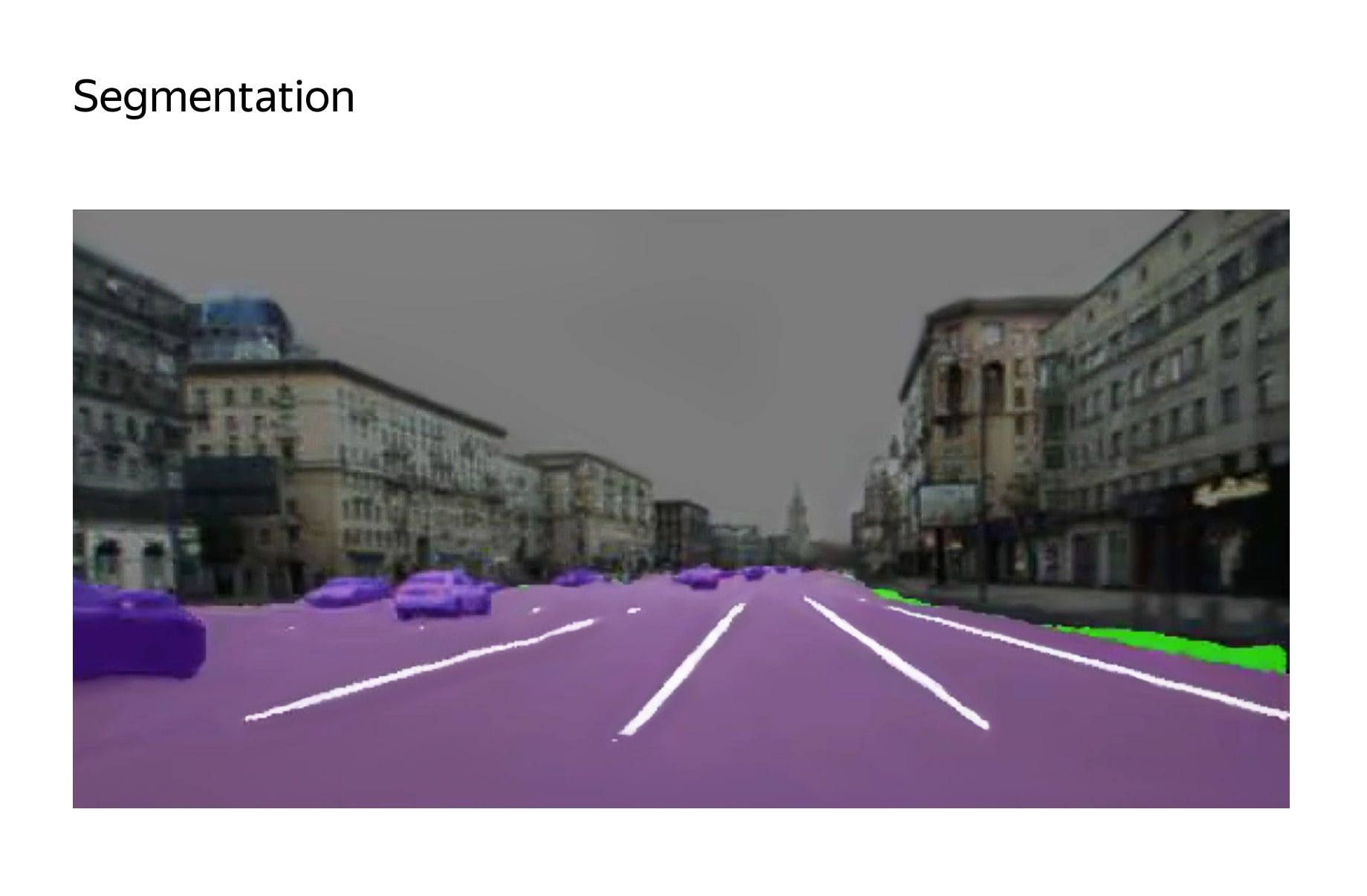

Perception

One of the most important properties that self-driving cars must have is perception, which helps the car see the world around itself, as well as recognize and classify the things that it sees. In order to make good decisions, the car needs to recognize objects instantly.

So, the car needs to see and classify traffic lights, pedestrians, road signs, walkways, parking spots, lanes, and much more. Not only that, it also needs to know the exact distance between itself and the objects around it. Perception is more than just seeing and classifying, it enables the system to evaluate the distance and decide to either slow down or brake.

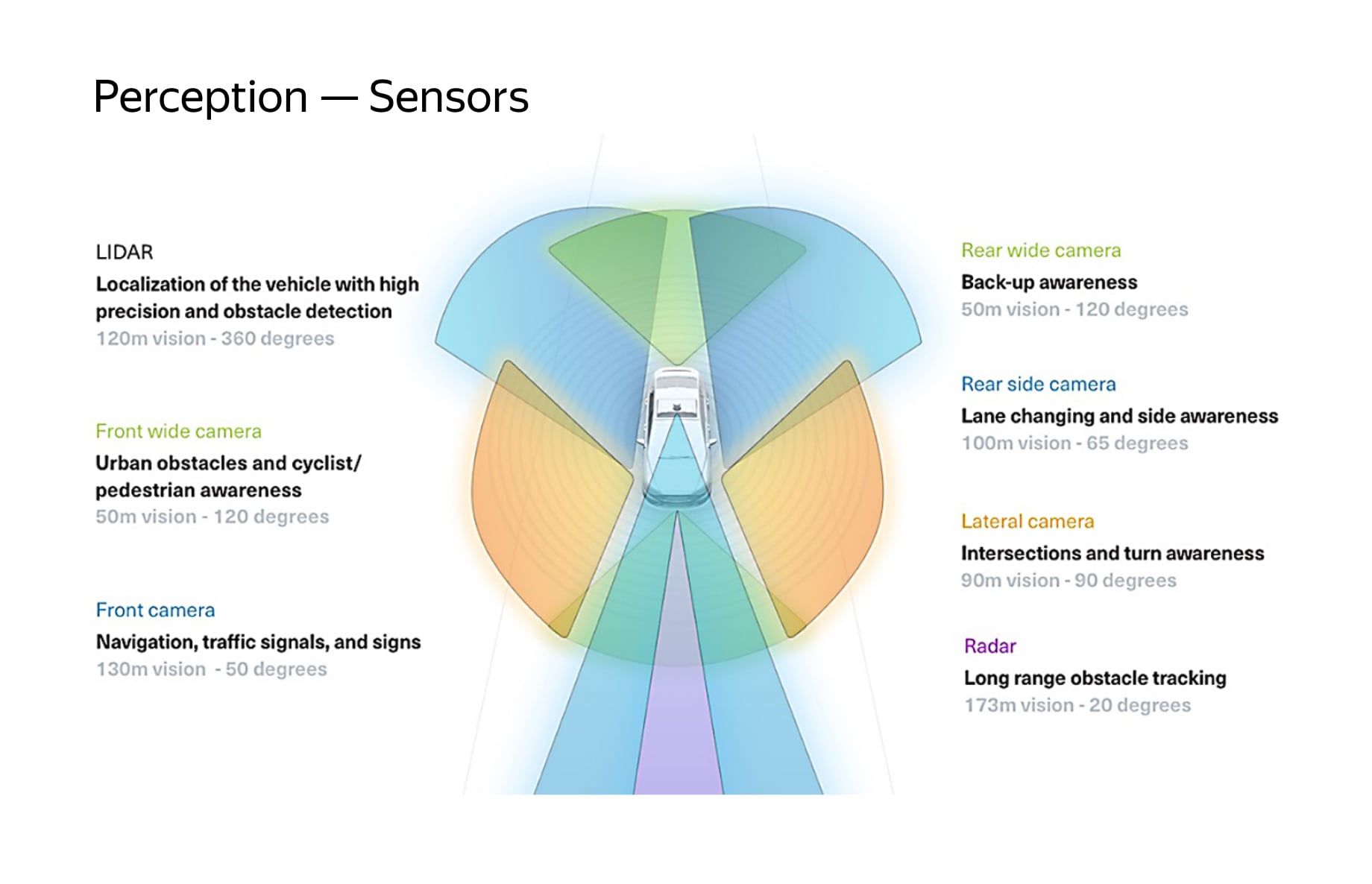

To achieve such a high level of perception, a self-driving car must have three sensors: camera, LiDAR, and RADAR.

Camera

The camera provides vision to the car, enabling multiple tasks like classification, segmentation, and localization. The cameras need to be high-resolution and represent the environment accurately.

In order to make sure that the car receives visual information from every side: front, back, left, and right, the cameras are stitched together to get a 360-degree view of the entire environment. These cameras provide a wide-range view as far as 200 meters as well as a short-range view for more focused perception.

In some tasks like parking, the camera also provides a panoramic view for better decision-making.

Even though cameras handle most perception-related tasks, they are of little use in extreme conditions like fog, heavy rain, and especially at night. In such conditions, cameras capture mostly noise and artifacts, and decisions based on that data can be life-threatening.

To overcome these limitations, we need sensors that can work without light and also measure distance.

LiDAR

LiDAR stands for Light Detection And Ranging. It measures the distance to an object by firing a laser beam and timing how long the reflection takes to return.
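The time-of-flight idea boils down to one line of arithmetic: the pulse travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. Here is a minimal sketch; the function name is ours, not part of any LiDAR SDK.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def lidar_range_m(round_trip_s: float) -> float:
    """Distance to the reflecting object from a pulse's round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # Halve the path because the pulse covers the distance twice.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # A reflection that returns after one microsecond is roughly 150 m away.
    print(lidar_range_m(1e-6))
```

A real sensor repeats this measurement for many beam directions per second, which is how it builds up a set of 3D points.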

A camera can only provide the car with images of what’s going around itself. When it’s combined with the LiDAR sensor, it gains depth in the images – it suddenly has a 3D perception of what’s going on around the car.

So, LiDAR perceives spatial information. And when this data is fed into deep neural networks, the car can predict the actions of the objects or vehicles close to it. This sort of technology is very useful in a complex driving scenario, like a multi-exit intersection, where the car can analyze all other cars and make the appropriate, safest decision.

In 2019, Elon Musk openly stated that “anyone relying on LiDARs are doomed…”. Why? Well, LiDARs have limitations that can be catastrophic. A LiDAR sensor uses laser light to measure the distance to nearby objects. It works at night and in dark environments, but it can still fail when rain or fog scatters the beam. That’s why we also need a RADAR sensor.

RADAR

Radio detection and ranging (RADAR) is a key component in many military and consumer applications. It was first used by the military to detect objects. It calculates distance using radio wave signals. Today, it’s used in many vehicles and has become a primary component of the self-driving car.

RADARs are highly effective because they use radio waves instead of lasers, so they keep working in rain, fog, and darkness.

It’s important to understand that radars are noisy sensors. This means that even when the camera sees no obstacle, the radar may still report detections that aren’t real.

The image above shows the self-driving car (in green) using LiDAR to detect objects around and to calculate the distance and shape of the object. Compare the same scene, but captured with the RADAR sensor below, and you can see a lot of unnecessary noise.

The RADAR data should be cleaned in order to make good decisions and predictions. We need to separate weak signals from strong ones; this is called thresholding. We also use Fast Fourier Transforms (FFT) to filter and interpret the signal.
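To make thresholding and the Fourier transform concrete, here's a toy sketch in plain Python. It is only an illustration under our own assumptions: the "radar return" is a synthetic signal with one strong tone and one weak clutter tone, and we use a naive discrete Fourier transform instead of a real FFT library so the example has no dependencies. All names are hypothetical.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Magnitude of each non-negative frequency bin (naive O(n^2) DFT)."""
    n = len(samples)
    magnitudes = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                  for t, x in enumerate(samples))
        magnitudes.append(abs(acc))
    return magnitudes


def strong_frequencies(samples, sample_rate_hz, rel_threshold=0.5):
    """Frequencies whose magnitude reaches rel_threshold * the peak magnitude."""
    magnitudes = dft_magnitudes(samples)
    cutoff = rel_threshold * max(magnitudes)
    n = len(samples)
    return [k * sample_rate_hz / n
            for k, m in enumerate(magnitudes) if m >= cutoff]


def synthetic_return(n=256, sample_rate_hz=256.0):
    """Strong 32 Hz component plus a weak 90 Hz component standing in for clutter."""
    return [math.sin(2 * math.pi * 32 * t / sample_rate_hz)
            + 0.1 * math.sin(2 * math.pi * 90 * t / sample_rate_hz)
            for t in range(n)]


if __name__ == "__main__":
    print(strong_frequencies(synthetic_return(), 256.0))
```

Lowering `rel_threshold` lets the weak component through as well, which is the trade-off thresholding always involves: a high cutoff rejects clutter but can also drop faint real targets.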

If you look at the image above, you’ll notice that the RADAR and LiDAR signals are point-based data. This data should be clustered so that it can be interpreted. Clustering algorithms such as Euclidean clustering or k-means clustering are used for this task.
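Here's a rough sketch of what Euclidean clustering does with such points: any two points closer than a chosen radius end up in the same cluster, and clusters grow outward from a seed point. This is a simplified, brute-force version written for readability (production pipelines typically use a spatial index such as a k-d tree), and the function name and sample points are made up.

```python
import math
from collections import deque


def euclidean_cluster(points, radius):
    """Group point indices so that neighbours within `radius` share a cluster."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = min(unvisited)          # deterministic choice of the next seed
        unvisited.remove(seed)
        queue = deque([seed])
        cluster = [seed]
        while queue:
            i = queue.popleft()
            # Brute-force neighbour search: O(n) per point, O(n^2) overall.
            neighbours = [j for j in unvisited
                          if math.dist(points[i], points[j]) <= radius]
            for j in neighbours:
                unvisited.remove(j)
                queue.append(j)
                cluster.append(j)
        clusters.append(sorted(cluster))
    return clusters


if __name__ == "__main__":
    detections = [(0.0, 0.0), (0.5, 0.0), (10.0, 10.0), (10.4, 10.2), (0.9, 0.3)]
    print(euclidean_cluster(detections, radius=1.0))
```

Each resulting cluster can then be treated as one candidate object, for example by fitting a bounding box around its points.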

Localization

Localization algorithms in self-driving cars calculate the position and orientation of the vehicle as it navigates, a technique known as visual odometry (VO).

VO works by matching key points in consecutive video frames. With each frame, the key points are used as the input to a mapping algorithm. The mapping algorithm, such as Simultaneous localization and mapping (SLAM), computes the position and orientation of each object nearby with respect to the previous frame and helps to classify roads, pedestrians, and other objects around.
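The key-point matching itself is beyond a short example, but the last step of odometry is easy to show: once each pair of frames gives an estimate of how far the car moved and how much it turned, those relative motions are chained into a global pose. The sketch below assumes a flat 2D world and takes the per-frame motion estimates as given; the function name and inputs are hypothetical.

```python
import math


def integrate_odometry(steps, start=(0.0, 0.0, 0.0)):
    """Chain relative motions into a trajectory of (x, y, heading) poses.

    Each step is (forward_m, turn_rad): drive forward along the current
    heading, then rotate by turn_rad (counter-clockwise positive).
    """
    x, y, heading = start
    trajectory = [(x, y, heading)]
    for forward_m, turn_rad in steps:
        x += forward_m * math.cos(heading)
        y += forward_m * math.sin(heading)
        heading = (heading + turn_rad) % (2 * math.pi)
        trajectory.append((x, y, heading))
    return trajectory


if __name__ == "__main__":
    # Drive 1 m, turn left 90 degrees, drive 1 m more.
    for pose in integrate_odometry([(1.0, math.pi / 2), (1.0, 0.0)]):
        print(pose)
```

Because every step builds on the previous pose, small per-frame errors accumulate as drift, which is one reason mapping approaches like SLAM are used alongside odometry.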

Deep learning is generally used to improve the performance of VO, and to classify different objects. Neural networks, such as PoseNet and VLocNet++, are some of the frameworks that use point data to estimate the 3D position and orientation. These estimated 3D positions and orientations can be used to derive scene semantics, as seen in the image below.

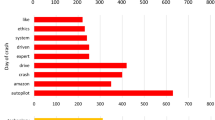

Prediction

Understanding human drivers is a very complex task. Their behavior is driven by emotion and reflex as much as by logic, so it is hard to know what nearby drivers or pedestrians will do next. A system that can predict the actions of other road users is therefore very important for road safety.

The car has a 360-degree view of its environment that enables it to perceive and capture all the information and process it. Once fed into the deep learning algorithm, it can come up with all the possible moves that other road users might make. It’s like a game where the player has a finite number of moves and tries to find the best move to defeat the opponent.

The sensors in self-driving cars enable them to perform tasks like image classification, object detection, segmentation, and localization. With various forms of data representation, the car can make predictions about the objects around it.

A deep learning algorithm can model such information (images and point clouds from LiDARs and RADARs) during training. The same model, during inference, can help the car prepare for all the possible moves, which include braking, halting, slowing down, changing lanes, and so on.

The role of deep learning is to interpret complex vision tasks, localize itself in the environment, enhance perception, and actuate kinematic maneuvers in self-driving cars. This ensures road safety and easy commute as well.

But the tricky part is to choose the correct action out of a finite number of actions.

Decision-making

Decision-making is vital in self-driving cars. They need a system that’s dynamic and precise in an uncertain environment. It needs to take into account that not all sensor readings will be true, and that humans can make unpredictable choices while driving. These things can’t be measured directly. Even if we could measure them, we can’t predict them with good accuracy.

The image above shows a self-driving car moving towards an intersection. Another car, in blue, is also moving towards the intersection. In this scenario, the self-driving car has to predict whether the other car will go straight, left, or right. In each case, the car has to decide what maneuver it should perform to prevent a collision.

In order to make a decision, the car should have enough information so that it can select the necessary set of actions. We learned that the sensors help the car to collect information and deep learning algorithms can be used for localization and prediction.

To recap, localization enables the car to know its position, and prediction generates n possible actions or moves based on the environment. The question remains: which option is best out of the many predicted actions?

When it comes to making decisions, we use deep reinforcement learning (DRL). More specifically, a mathematical framework called the Markov decision process (MDP) lies at the heart of DRL (we’ll learn more about MDP in a later section where we talk about reinforcement learning).

Usually, an MDP is used to predict the future behavior of the road-users. We should keep in mind that the scenario can get very complex if the number of objects, especially moving ones, increases. This eventually increases the number of possible moves for the self-driving car itself.

To tackle the problem of finding the best move for the car itself, the deep learning model is optimized with Bayesian optimization. There are also situations where a framework consisting of both a hidden Markov model and Bayesian optimization is used for decision-making.

In general, decision-making in self-driving cars is a hierarchical process. This process has four components:

- Path or route planning: Route planning is the first of the four decisions that the car must make. On entering the environment, the car should plan the best possible route from its current position to the requested destination. The idea is to find an optimal route among all candidate routes.

- Behavior arbitration: Once the route is planned, the car needs to navigate along it. The car knows about the static elements, like roads, intersections, average road congestion and more, but it can’t know exactly what the other road users are going to do throughout the journey. This uncertainty in the behavior of other road users is handled with probabilistic planning algorithms like MDPs.

- Motion planning: Once the behavior layer decides how to navigate through a certain route, the motion planning system orchestrates the motion of the car. The motion of the car must be feasible and comfortable for the passenger. Motion planning includes the speed of the vehicle, lane-changing, and more, all of which should be relevant to the environment the car is in.

- Vehicle control: Vehicle control is used to execute the reference path from the motion planning system.
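As a rough illustration of the first layer only, route planning can be treated as a shortest-path search over a road graph. The sketch below uses Dijkstra’s algorithm, which is our choice for illustration and not something the hierarchy prescribes; the road network, node names, and travel times are hypothetical.

```python
import heapq

def plan_route(graph, start, goal):
    """Return (cost, path) of the cheapest route using Dijkstra's algorithm."""
    # Priority queue of (cost so far, node, path taken to reach the node).
    frontier = [(0.0, start, [start])]
    visited = set()
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, edge_cost in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(
                    frontier, (cost + edge_cost, neighbor, path + [neighbor])
                )
    # No route exists between start and goal.
    return float("inf"), []

# Hypothetical road network: edge weights are travel times in minutes.
road_network = {
    "A": {"B": 4.0, "C": 2.0},
    "B": {"D": 5.0},
    "C": {"B": 1.0, "D": 8.0},
    "D": {},
}

cost, path = plan_route(road_network, "A", "D")
print(cost, path)
```

The behavior, motion planning, and control layers would then consume this route; they are not modeled here.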

CNNs used for self-driving cars

Convolutional neural networks (CNNs) are used to model spatial information, such as images. CNNs are very good at extracting features from images, and they’re often seen as universal non-linear function approximators.

CNNs can capture different patterns as the depth of the network increases. For example, the layers at the beginning of the network will capture edges, while the deep layers will capture more complex features like the shape of the objects (leaves in trees, or tires on a vehicle). This is the reason why CNNs are the main algorithm in self-driving cars.

The key component of the CNN is the convolutional layer itself. It has a convolutional kernel, which is often called the filter matrix. The filter matrix is convolved with a local region of the input image, which can be defined as:

$$y = w * x + b$$

Where:

- the operator * represents the convolution operation,

- w is the filter matrix and b is the bias,

- x is the input,

- y is the output.
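To make the roles of w, x, b, and y concrete, here is a minimal NumPy sketch of a single-channel convolution with no padding and a stride of 1. These simplifications, the function name `conv2d`, and the toy input are assumptions for illustration. Note that deep learning libraries usually skip the kernel flip (cross-correlation), which only changes how the learned filter is stored.

```python
import numpy as np

def conv2d(x, w, b=0.0):
    """Valid (no padding), stride-1 convolution of a 2D input x with filter w, plus bias b."""
    kh, kw = w.shape
    out_h = x.shape[0] - kh + 1
    out_w = x.shape[1] - kw + 1
    # Flip the kernel so that this is a true convolution.
    w_flipped = w[::-1, ::-1]
    y = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            region = x[i:i + kh, j:j + kw]  # local region of the input
            y[i, j] = np.sum(region * w_flipped) + b
    return y

x = np.arange(25, dtype=float).reshape(5, 5)  # toy 5x5 "image"
w = np.zeros((3, 3))
w[1, 1] = 1.0                                 # identity filter
y = conv2d(x, w, b=0.5)
print(y.shape)
```

With the identity filter, each output value is simply the centre pixel of its local region plus the bias, which makes the sliding-window behavior easy to check by hand.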

The dimension of the filter matrix in practice is usually 3×3 or 5×5. During training, the filter weights are updated repeatedly until they settle on useful values. One of the properties of a CNN is that the weights are shared: the same filter weights are applied at every spatial position of the input. Sharing parameters saves a lot of memory and lets the network learn more diverse feature representations.

The output of the convolutional layer is usually fed to a nonlinear activation function. The activation function enables the network to solve linearly inseparable problems, and these functions can represent high-dimensional manifolds in lower-dimensional manifolds. Commonly used activation functions are Sigmoid, Tanh, and ReLU, which are defined as follows:

$$\text{Sigmoid}(x) = \frac{1}{1 + e^{-x}}$$

$$\text{Tanh}(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

$$\text{ReLU}(x) = \max(0, x)$$
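For reference, the three activation functions named here can be written in a few lines of NumPy. This is a minimal sketch using their standard textbook definitions; the function names are our own.

```python
import numpy as np

def sigmoid(x):
    """Squash inputs into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

def tanh(x):
    """Squash inputs into the range (-1, 1)."""
    return np.tanh(x)

def relu(x):
    """Pass positive inputs through unchanged and clamp negative inputs to zero."""
    return np.maximum(0.0, x)

x = np.array([-2.0, 0.0, 2.0])
for fn in (sigmoid, tanh, relu):
    print(fn.__name__, fn(x))
```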

It’s worth mentioning that ReLU is the preferred activation function, because networks trained with it tend to converge faster than with the other activation functions. In addition, the output of the convolutional layer is passed through a max-pooling layer, which keeps the most salient information about the input image, like the background and texture.

The three important CNN properties that make them versatile and a primary component of self-driving cars are:

- local receptive fields,

- shared weights,

- spatial sampling.

These properties reduce overfitting and store representations and features that are vital for image classification, segmentation, localization, and more.

Next, we’ll discuss three CNN networks that are used by three companies pioneering self-driving cars:

- HydraNet by Tesla

- ChauffeurNet by Google Waymo

- Nvidia’s self-driving car

HydraNet – semantic segmentation for self-driving cars

HydraNet was introduced by Mullapudi et al. in 2018. It was developed for semantic segmentation, to improve computational efficiency at inference time.

HydraNet is a dynamic architecture, so it can contain different CNNs, each assigned to a different task. These blocks or networks are called branches. The idea of HydraNet is to take various inputs and feed each one into a task-specific CNN.

Take the context of self-driving cars. One input dataset can be of static environments like trees and road railings, another can be of the road and the lanes, another of traffic lights and the road, and so on. Different branches are trained on these inputs. At inference time, the gate chooses which branches to run, and the combiner aggregates the branch outputs and makes a final decision.

In the case of Tesla, they have modified this network slightly because it’s difficult to segregate data for the individual tasks during inference. To overcome that problem, engineers at Tesla developed a shared backbone. The shared backbones are usually modified ResNet-50 blocks.

This HydraNet is trained on all the objects’ data. There are task-specific heads that allow the model to predict task-specific outputs. The heads are based on semantic segmentation architectures like the U-Net.
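The shared-backbone idea can be illustrated with a toy multi-task model in which the features are computed once and reused by every head. This is only a structural sketch, not Tesla’s architecture: a single random linear layer stands in for the backbone, and the task names, layer sizes, and class name `SharedBackboneModel` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x):
    return np.maximum(0.0, x)

class SharedBackboneModel:
    """Toy multi-task model: one shared feature extractor, one small head per task."""

    def __init__(self, in_dim, feat_dim, head_dims):
        # Shared backbone weights (a stand-in for a deep CNN backbone).
        self.w_backbone = rng.normal(size=(in_dim, feat_dim)) * 0.1
        # One linear head per task; task names and sizes are hypothetical.
        self.heads = {
            task: rng.normal(size=(feat_dim, out_dim)) * 0.1
            for task, out_dim in head_dims.items()
        }

    def forward(self, x):
        features = relu(x @ self.w_backbone)  # computed once per input
        # Every head reuses the same shared features.
        return {task: features @ w for task, w in self.heads.items()}

model = SharedBackboneModel(
    in_dim=16,
    feat_dim=8,
    head_dims={"lanes": 4, "traffic_lights": 3, "objects": 5},
)
outputs = model.forward(rng.normal(size=(2, 16)))  # batch of 2 flattened inputs
for task, out in outputs.items():
    print(task, out.shape)
```

The design point is that the expensive feature extraction runs once, while each lightweight head adds only a small amount of task-specific computation.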

The Tesla HydraNet can also project a bird’s-eye view, meaning it can create a 3D view of the environment from any angle, giving the car much more dimensionality to navigate properly. It’s important to know that Tesla doesn’t use LiDAR sensors. It relies on only two types of sensors: cameras and radar. Although LiDAR explicitly provides depth perception for the car, Tesla’s HydraNet is efficient enough to stitch together the visual information from its 8 cameras and create depth perception.

ChauffeurNet: training self-driving car using imitation learning

ChauffeurNet is an RNN-based neural network used by Google Waymo. However, a CNN is actually one of its core components, and it’s used to extract features from the perception system.

The CNN in ChauffeurNet is described as a convolutional feature network, or FeatureNet, that extracts contextual feature representation shared by the other networks. These representations are then fed to a recurrent agent network (AgentRNN) that iteratively yields the prediction of successive points in the driving trajectory.

The idea behind this network is to train a self-driving car using imitation learning. In the paper by Bansal et al., “ChauffeurNet: Learning to Drive by Imitating the Best and Synthesizing the Worst”, the authors argue that training a self-driving car even with 30 million examples is not enough. To tackle that limitation, they trained the car on synthetic data. This synthetic data introduced deviations such as perturbations to the trajectory path, added obstacles, unnatural scenes, etc. They found that such synthetic data was able to train the car much more efficiently than the normal data.
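To give a feel for what perturbing a trajectory can mean, the sketch below shifts the middle of a recorded path sideways while keeping its start and end fixed. It does not reproduce the paper’s actual perturbation procedure; the sine-shaped bump, the function name `perturb_trajectory`, and the straight-line expert path are assumptions made for illustration.

```python
import numpy as np

def perturb_trajectory(trajectory, max_offset, rng):
    """Shift the middle of a trajectory sideways while keeping both endpoints fixed."""
    n = len(trajectory)
    offset = rng.uniform(-max_offset, max_offset)
    # Smooth bump: zero at both ends, peaking near the midpoint.
    bump = np.sin(np.linspace(0.0, np.pi, n))
    perturbed = trajectory.copy()
    perturbed[:, 1] += offset * bump  # lateral (y) displacement only
    return perturbed

rng = np.random.default_rng(42)
# Hypothetical expert trajectory: driving straight along x for 10 waypoints.
expert = np.stack([np.linspace(0.0, 9.0, 10), np.zeros(10)], axis=1)
synthetic = perturb_trajectory(expert, max_offset=1.0, rng=rng)
print(synthetic.round(2))
```

A perturbed path like this gives the learner examples of drifting away from the expert’s line and recovering, which ordinary expert demonstrations rarely contain.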

Usually, self-driving has an end-to-end process as we saw earlier, where the perception system is part of a deep learning algorithm along with planning and controlling. In the case of ChauffeurNet, the perception system is not a part of the end-to-end process; instead, it’s a mid-level system where the network can have different variations of input from the perception system.

ChauffeurNet yields a driving trajectory by observing a mid-level representation of the scene from the sensors, using the input along with synthetic data to imitate an expert driver.

In the image above, the cyan path depicts the input route, the green box is the self-driving car, the blue dots are the agent’s past positions, and the green dots are the predicted future positions.

Essentially, a mid-level representation doesn’t directly use raw sensor data as input, factoring out the perception task, so we can combine real and simulated data for easier transfer learning. This way, the network can create a high-level bird’s eye view of the environment which ultimately yields better decisions.

Nvidia self-driving car: a minimalist approach towards self-driving cars

Nvidia also uses a convolutional neural network as the primary algorithm for its self-driving car. But unlike Tesla, it uses three cameras, one on each side and one at the front. See the image below.

The network is capable of operating on roads that don’t have lane markings, and in parking lots. It can also learn the features and representations that are necessary for detecting useful road features.

Compared to the explicit decomposition of the problem such as lane marking detection, path planning, and control, this end-to-end system optimizes all processing steps at the same time.

Better performance is the result of internal components self-optimizing to maximize overall system performance, instead of optimizing human-selected intermediate criteria like lane detection. Such criteria understandably are selected for ease of human interpretation, which doesn’t automatically guarantee maximum system performance. Smaller networks are possible because the system learns to solve the problem with a minimal number of processing steps.

Reinforcement learning used for self-driving cars

Reinforcement learning (RL) is a type of machine learning where an agent learns by exploring and interacting with the environment. In this case, the self-driving car is the agent.

We discussed earlier how the neural network predicts a number of actions from the perception data. But, choosing an appropriate action requires deep reinforcement learning (DRL). At the core of DRL, we have three important variables:

- State describes the current situation at a given time. In this case, it would be a position on the road.

- Action describes all the possible moves that the car can make.

- Reward is feedback that the car receives whenever it takes a certain action.

Generally, the agent is not told what to do or which actions to take. In supervised learning, as we have seen so far, the algorithm maps inputs to outputs. In DRL, the algorithm learns by exploring the environment, and each interaction yields a certain reward. The reward can be positive or negative. The goal of DRL is to maximize the cumulative reward.

In self-driving cars, the same procedure is followed: the network is trained on perception data, where it learns what decision it should make. Because CNNs are very good at extracting feature representations from the input, DRL algorithms can be trained on those representations. Training a DRL algorithm on these representations can yield good results because they transform higher-dimensional manifolds into simpler lower-dimensional manifolds. Training on lower-dimensional representations also yields the efficiency required at inference time.

One key point to remember is that self-driving cars can’t be trained on real-world roads, because doing so would be extremely dangerous. Instead, they are trained in a simulator, where mistakes put no one at risk.

Some open-source simulators are:

These cars (agents) are trained for thousands of epochs with highly difficult simulations before they’re deployed in the real world.

During training, the agent (the car) learns by taking a certain action in a certain state. Based on this state-action pair, it receives a reward. This process happens over and over again. Each time, the agent updates its memory of rewards. This is called the policy.

The policy is described as how the agent makes decisions. It’s a decision-making rule. The policy defines the behaviour of the agent at a given time.

For every negative decision the agent makes, the policy is changed. So in order to avoid the negative rewards, the agent checks the quality of a certain action. This is measured by the state-value function. State-value can be measured using the Bellman Expectation Equation.
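For a small, fully known MDP, the Bellman expectation equation can be applied repeatedly until the state values stop changing. The sketch below does this for a hypothetical two-state driving problem using the form v(s) = Σ_a π(a|s) [R(s, a) + γ Σ_s' P(s'|s, a) v(s')]. The transition probabilities, rewards, fixed policy, and discount factor γ = 0.9 are all made-up values for illustration.

```python
import numpy as np

def evaluate_policy(P, R, policy, gamma=0.9, tol=1e-8):
    """Iterate the Bellman expectation equation until the state values converge."""
    v = np.zeros(P.shape[0])
    while True:
        # q[s, a] = R[s, a] + gamma * sum over s' of P[s, a, s'] * v[s']
        q = R + gamma * (P @ v)
        # Average the action values according to the policy's action probabilities.
        v_new = np.sum(policy * q, axis=1)
        if np.max(np.abs(v_new - v)) < tol:
            return v_new
        v = v_new

# Hypothetical two-state MDP. States: 0 = safe gap, 1 = too close.
# Actions: 0 = keep speed, 1 = brake.
P = np.array([
    [[0.8, 0.2], [1.0, 0.0]],  # transition probabilities from state 0
    [[0.0, 1.0], [0.9, 0.1]],  # transition probabilities from state 1
])
R = np.array([
    [1.0, 0.5],     # rewards for each action in state 0
    [-10.0, -1.0],  # rewards for each action in state 1
])
policy = np.array([
    [1.0, 0.0],  # keep speed when the gap is safe
    [0.0, 1.0],  # brake when too close
])

values = evaluate_policy(P, R, policy)
print(values)
```

In a real self-driving setting the state space is far too large for a table like this, which is why DRL approximates the value function with a neural network.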

The Bellman expectation equation, along with the Markov Decision Process (MDP), makes up the two core concepts of DRL. But when it comes to self-driving cars, we have to keep in mind that the car never sees the underlying state directly: actions have to be chosen from observations derived from the perception data, not from the true state. This is where a Partially Observable Markov Decision Process (POMDP) is required, which can make decisions based on observations.
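One common way to act on observations in a POMDP is to maintain a belief, that is, a probability distribution over the hidden states, and update it after each observation. The sketch below shows a single Bayes-filter update for a hypothetical hidden variable (whether another driver will yield). The transition model `T`, observation model `O`, and all probabilities are assumptions for illustration.

```python
import numpy as np

def update_belief(belief, T, O, action, observation):
    """One Bayes-filter step: predict with the transition model, correct with the observation."""
    # Prediction: predicted[s'] = sum over s of belief[s] * T[action][s, s']
    predicted = belief @ T[action]
    # Correction: weight each state by how likely it makes the observation.
    unnormalized = O[action][:, observation] * predicted
    return unnormalized / unnormalized.sum()

# Hidden states: 0 = other driver will yield, 1 = other driver will not yield.
# Single action 0 = approach slowly. Observations: 0 = slowing, 1 = not slowing.
T = np.array([[[1.0, 0.0],
               [0.0, 1.0]]])  # the driver's intent does not change
O = np.array([[[0.8, 0.2],
               [0.3, 0.7]]])  # O[a][s', o] = P(observation o | state s')

belief = np.array([0.5, 0.5])  # start out undecided
belief = update_belief(belief, T, O, action=0, observation=0)
print(belief)
```

After seeing the other car slow down, the belief shifts towards "will yield", and a policy can then pick its action from this belief instead of from the unobservable true state.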

Partially Observable Markov Decision Process used for self-driving cars

The Markov Decision Process gives us a way to formalize sequential decision-making. When the agent interacts with the environment, it does so sequentially over time. At each step, the agent receives some representation of the environment’s state. Given this representation, the agent selects an action to take, as in the image below.

Once the action is taken, the environment transitions into some new state and the agent is given a reward. This process of observing a state, taking an action, changing states, and receiving a reward is repeated. Throughout the process, the agent’s goal is to maximize the total reward.
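The interaction loop just described can be sketched in plain Python. The environment here is a hypothetical one-dimensional lane-keeping toy in which the state is the car’s lateral offset from the lane centre. The policy is hand-written rather than learned, and the reward values are made up.

```python
import random

class LaneKeepingEnv:
    """Hypothetical toy environment: the state is the lateral offset from the lane centre."""

    def __init__(self):
        self.state = 0

    def reset(self):
        self.state = random.choice([-2, -1, 1, 2])
        return self.state

    def step(self, action):
        # action is -1 (steer left), 0 (hold), or +1 (steer right)
        self.state = max(-3, min(3, self.state + action))
        reward = 1.0 if self.state == 0 else -float(abs(self.state))
        done = abs(self.state) == 3  # the car has left the road
        return self.state, reward, done

def centering_policy(state):
    """Hand-written policy: steer back towards the lane centre."""
    if state > 0:
        return -1
    if state < 0:
        return 1
    return 0

def run_episode(env, policy, max_steps=10):
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # the agent observes the state and picks an action
        state, reward, done = env.step(action)  # the environment changes state and returns a reward
        total_reward += reward
        if done:
            break
    return total_reward

random.seed(0)
print(run_episode(LaneKeepingEnv(), centering_policy))
```

A learning agent would replace `centering_policy` with a policy that is adjusted from the rewards it collects, but the loop itself stays the same.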

Let’s get a more constructive idea of the whole process:

- At a given time t, the environment is in state S_t.

- The agent observes the current state S_t and selects an action A_t.

- The environment then transitions into a new state S_{t+1}, and at the same time the agent receives the reward R_t.