The Three Most Common Types of Hypotheses

In this post, I discuss three of the most common hypotheses in psychology research, and what statistics are often used to test them.

- Post author By sean

- Post date September 28, 2013

- 37 Comments on The Three Most Common Types of Hypotheses

Simple main effects (i.e., X leads to Y) are usually not going to get you published. Main effects can be exciting in the early stages of research to show the existence of a new effect, but as a field matures the types of questions that scientists are trying to answer tend to become more nuanced and specific. In this post, I’ll briefly describe the three most common kinds of hypotheses that expand upon simple main effects – at least, the most common ones I’ve seen in my research career in psychology – as well as providing some resources to help you learn about how to test these hypotheses using statistics.

Incremental Validity

“Can X predict Y over and above other important predictors?”

This is probably the simplest of the three hypotheses I propose. Basically, you attempt to rule out potential confounding variables by controlling for them in your analysis. We do this because (in many cases) our predictor variables are correlated with each other. This is undesirable from a statistical perspective, but is common with real data. The idea is that we want to see if X can predict unique variance in Y over and above the other variables you include.

In terms of analysis, you are probably going to use some variation of multiple regression or partial correlations. For example, in my own work I’ve shown in the past that friendship intimacy as coded from autobiographical narratives can predict concern for the next generation over and above numerous other variables, such as optimism, depression, and relationship status ( Mackinnon et al., 2011 ).

“Under what conditions does X lead to Y?”

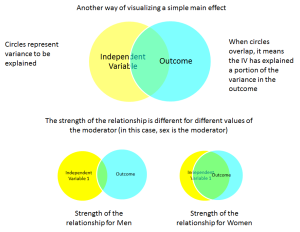

Of the three techniques I describe, moderation is probably the most tricky to understand. Essentially, it proposes that the size of a relationship between two variables changes depending upon the value of a third variable, known as a “moderator.” For example, in the diagram below you might find a simple main effect that is moderated by sex. That is, the relationship is stronger for women than for men:

With moderation, it is important to note that the moderating variable can be a category (e.g., sex) or it can be a continuous variable (e.g., scores on a personality questionnaire). When a moderator is continuous, usually you’re making statements like: “As the value of the moderator increases, the relationship between X and Y also increases.”

“Does X predict M, which in turn predicts Y?”

We might know that X leads to Y, but a mediation hypothesis proposes a mediating, or intervening variable. That is, X leads to M, which in turn leads to Y. In the diagram below I use a different way of visually representing things consistent with how people typically report things when using path analysis.

I use mediation a lot in my own research. For example, I’ve published data suggesting the relationship between perfectionism and depression is mediated by relationship conflict ( Mackinnon et al., 2012 ). That is, perfectionism leads to increased conflict, which in turn leads to heightened depression. Another way of saying this is that perfectionism has an indirect effect on depression through conflict.

Helpful links to get you started testing these hypotheses

Depending on the nature of your data, there are multiple ways to address each of these hypotheses using statistics. They can also be combined together (e.g., mediated moderation). Nonetheless, a core understanding of these three hypotheses and how to analyze them using statistics is essential for any researcher in the social or health sciences. Below are a few links that might help you get started:

Are you a little rusty with multiple regression? The basics of this technique are required for most common tests of these hypotheses. You might check out this guide as a helpful resource:

https://statistics.laerd.com/spss-tutorials/multiple-regression-using-spss-statistics.php

David Kenny’s Mediation Website provides an excellent overview of mediation and moderation for the beginner.

http://davidakenny.net/cm/mediate.htm

http://davidakenny.net/cm/moderation.htm

Preacher and Haye’s INDIRECT Macro is a great, easy way to implement mediation in SPSS software, and their MODPROBE macro is a useful tool for testing moderation.

http://afhayes.com/spss-sas-and-mplus-macros-and-code.html

If you want to graph the results of your moderation analyses, the excel calculators provided on Jeremy Dawson’s webpage are fantastic, easy-to-use tools:

http://www.jeremydawson.co.uk/slopes.htm

- Tags mediation , moderation , regression , tutorial

37 replies on “The Three Most Common Types of Hypotheses”

I want to see clearly the three types of hypothesis

Thanks for your information. I really like this

Thank you so much, writing up my masters project now and wasn’t sure whether one of my variables was mediating or moderating….Much clearer now.

Thank you for simplified presentation. It is clearer to me now than ever before.

Thank you. Concise and clear

hello there

I would like to ask about mediation relationship: If I have three variables( X-M-Y)how many hypotheses should I write down? Should I have 2 or 3? In other words, should I have hypotheses for the mediating relationship? What about questions and objectives? Should be 3? Thank you.

Hi Osama. It’s really a stylistic thing. You could write it out as 3 separate hypotheses (X -> Y; X -> M; M -> Y) or you could just write out one mediation hypotheses “X will have an indirect effect on Y through M.” Usually, I’d write just the 1 because it conserves space, but either would be appropriate.

Hi Sean, according to the three steps model (Dudley, Benuzillo and Carrico, 2004; Pardo and Román, 2013)., we can test hypothesis of mediator variable in three steps: (X -> Y; X -> M; X and M -> Y). Then, we must use the Sobel test to make sure that the effect is significant after using the mediator variable.

Yes, but this is older advice. Best practice now is to calculate an indirect effect and use bootstrapping, rather than the causal steps approach and the more out-dated Sobel test. I’d recommend reading Hayes (2018) book for more info:

Hayes, A. F. (2018). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach (2nd ed). Guilford Publications.

Hi! It’s been really helpful but I still don’t know how to formulate the hypothesis with my mediating variable.

I have one dependent variable DV which is formed by DV1 and DV2, then I have MV (mediating variable), and then 2 independent variables IV1, and IV2.

How many hypothesis should I write? I hope you can help me 🙂

Thank you so much!!

If I’m understanding you correctly, I guess 2 mediation hypotheses:

IV1 –> Med –> DV1&2 IV2 –> Med –> DV1&2

Thank you so much for your quick answer! ^^

Could you help me formulate my research question? English is not my mother language and I have trouble choosing the right words. My x = psychopathy y = aggression m = deficis in emotion recognition

thank you in advance

I have mediator and moderator how should I make my hypothesis

Can you have a negative partial effect? IV – M – DV. That is my M will have negative effect on the DV – e.g Social media usage (M) will partial negative mediate the relationship between father status (IV) and social connectedness (DV)?

Thanks in advance

Hi Ashley. Yes, this is possible, but often it means you have a condition known as “inconsistent mediation” which isn’t usually desirable. See this entry on David Kenny’s page:

Or look up “inconsistent mediation” in this reference:

MacKinnon, D. P., Fairchild, A. J., & Fritz, M. S. (2007). Mediation analysis. Annual Review of Psychology, 58, 593-614.

This is very interesting presentation. i love it.

This is very interesting and educative. I love it.

Hello, you mentioned that for the moderator, it changes the relationship between iv and dv depending on its strength. How would one describe a situation where if the iv is high iv and dv relationship is opposite from when iv is low. And then a 3rd variable maybe the moderator increases dv when iv is low and decreases dv when iv is high.

This isn’t problematic for moderation. Moderation just proposes that the magnitude of the relationship changes as levels of the moderator changes. If the sign flips, probably the original relationship was small. Sometimes people call this a “cross-over” effect, but really, it’s nothing special and can happen in any moderation analysis.

i want to use an independent variable as moderator after this i will have 3 independent variable and 1 dependent variable…. my confusion is do i need to have some past evidence of the X variable moderate the relationship of Y independent variable and Z dependent variable.

Dear Sean It is really helpful as my research model will use mediation. Because I still face difficulty in developing hyphothesis, can you give examples ? Thank you

Hi! is it possible to have all three pathways negative? My regression analysis showed significant negative relationships between x to y, x to m and m to y.

Hi, I have 1 independent variable, 1 dependent variable and 4 mediating variable May I know how many hypothesis should I develop?

Hello I have 4 IV , 1 mediating Variable and 1 DV

My model says that 4 IVs when mediated by 1MV leads to 1 Dv

Pls tell me how to set the hypothesis for mediation

Hi I have 4 IVs ,2 Mediating Variables , 1DV and 3 Outcomes (criterion variables).

Pls can u tell me how many hypotheses to set.

Thankyou in advance

I am in fact happy to read this webpage posts which carries tons of useful information, thanks for providing such data.

I see you don’t monetize savvystatistics.com, don’t waste your traffic, you can earn additional bucks every month with new monetization method. This is the best adsense alternative for any type of website (they approve all websites), for more info simply search in gooogle: murgrabia’s tools

what if the hypothesis and moderator significant in regrestion and insgificant in moderation?

Thank you so much!! Your slide on the mediator variable let me understand!

Very informative material. The author has used very clear language and I would recommend this for any student of research/

Hi Sean, thanks for the nice material. I have a question: for the second type of hypothesis, you state “That is, the relationship is stronger for men than for women”. Based on the illustration, wouldn’t the opposite be true?

Yes, your right! I updated the post to fix the typo, thank you!

I have 3 independent variable one mediator and 2 dependant variable how many hypothesis I have 2 write?

Sounds like 6 mediation hypotheses total:

X1 -> M -> Y1 X2 -> M -> Y1 X3 -> M -> Y1 X1 -> M -> Y2 X2 -> M -> Y2 X3 -> M -> Y2

Clear explanation! Thanks!

Leave a Reply Cancel reply

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

Statistics Made Easy

What is a Moderating Variable? Definition & Example

A moderating variable is a type of variable that affects the relationship between a dependent variable and an independent variable .

When performing regression analysis , we’re often interested in understanding how changes in an independent variable affect a dependent variable. However, sometimes a moderating variable can affect this relationship.

For example, suppose we want to fit a regression model in which we use the independent variable hours spent exercising each week to predict the dependent variable resting heart rate .

We suspect that more hours spent exercising is associated with a lower resting heart rate. However, this relationship could be affected by a moderating variable such as gender .

It’s possible that each extra hour of exercise causes resting heart rate to drop more for men compared to women.

Another example of a moderating variable could be age . It’s likely that each extra hour of exercise causes resting heart rate to drop more for younger people compared to older people.

Properties of Moderating Variables

Moderating variables have the following properties:

1. Moderating variables can be qualitative or quantitative .

Qualitative variables are variables that take on names or labels. Examples include:

- Gender (Male or Female)

- Education Level (High School Degree, Bachelor’s Degree, Master’s Degree, etc.)

- Marital Status (Single, Married, Divorced)

Quantitative variables are variables that take on numerical values. Examples include:

- Square Footage

- Population Size

In the previous examples, gender was a qualitative variable that could affect the relationship between hours studied and resting heart rate while age was a quantitative variable that could potentially affect the relationship.

2. Moderating variables can affect the relationship between an independent and dependent variable in a variety of ways.

Moderating variables can have the following effects:

- Strengthen the relationship between two variables.

- Weaken the relationship between two variables.

- Negate the relationship between two variables.

Depending on the situation, a moderating variable can moderate the relationship between two variables in many different ways.

How to Test for Moderating Variables

If X is an independent variable (sometimes called a “predictor” variable) and Y is a dependent variable (sometimes called a “response” variable), then we could write a regression equation to describe the relationship between the two variables as follows:

Y = β 0 + β 1 X

If we suspect that some other variable, Z , is a moderator variable, then we could fit the following regression model:

Y = β 0 + β 1 X 1 + β 2 Z + β 3 XZ

In this equation, the term XZ is known as an interaction term .

If the p-value for the coefficient of XZ in the regression output is statistically significant, then this indicates that there is a significant interaction between X and Z and Z should be included in the regression model as a moderator variable.

We would write the final model as:

Y = β 0 + β 1 X + β 2 Z + β 3 XZ

If the p-value for the coefficient of XZ in the regression output is not statistically significant, then Z is not a moderator variable.

However it’s possible that the coefficient for Z could still be statistically significant. In this case, we would simply include Z as another independent variable in the regression model.

We would then write the final model as:

Y = β 0 + β 1 X + β 2 Z

Additional Resources

How to Read and Interpret a Regression Table How to Use Dummy Variables in Regression Analysis Introduction to Confounding Variables

Featured Posts

Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.

One Reply to “What is a Moderating Variable? Definition & Example”

Nicely explained Moderation concept.

Leave a Reply Cancel reply

Your email address will not be published. Required fields are marked *

Moderating Variables in Research: A Comprehensive Guide with Real-World Examples

Welcome to the intriguing world of research, where even the simplest of studies can hide complex layers of interaction and causality. In today’s exploration, we’re delving into the fascinating domain of moderating variables . Buckle up, as we’re about to take an intellectual detour through the heart of research methods, enriched with practical examples to fortify your understanding.

Statssy.com is your trusted guide in this journey, as we continually aim to illuminate the fundamental and advanced concepts of data analytics, research methods, and machine learning.

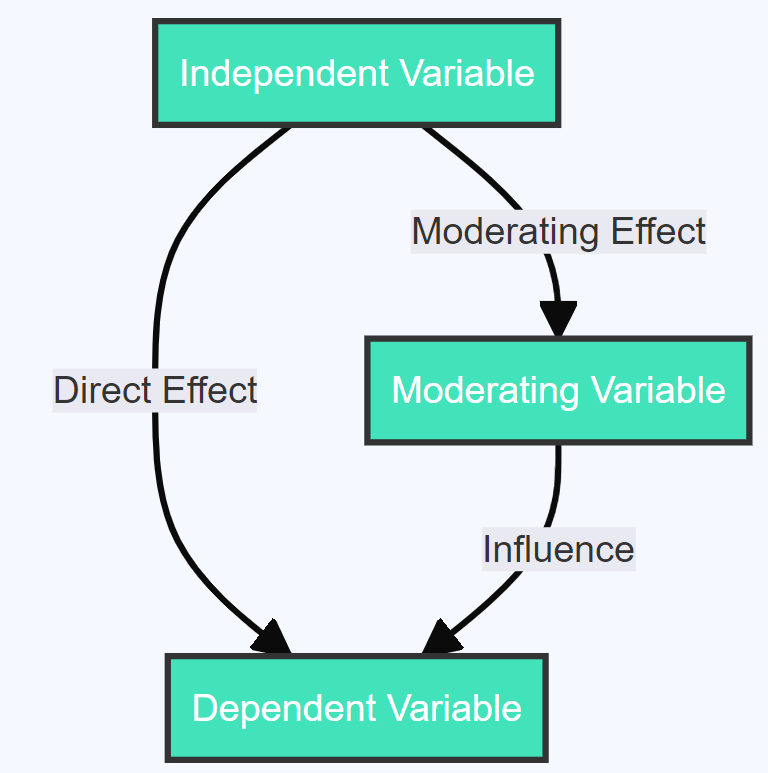

The Trio of Research: Independent, Dependent, and Moderating Variables

Before we dive into the nuances of moderating variables, let’s familiarize ourselves with the foundational components of any research study: the Independent Variable (IV), the Dependent Variable (DV), and, of course, the Moderating Variable (MV). These components serve as the building blocks of any research design, each having a unique and crucial role.

Independent Variable (IV) : This is the experimental lever, the factor manipulated or changed by the researchers in a study. It’s the variable that stands on its own – ‘independent’ by definition. Dependent Variable (DV) : As the name suggests, this variable ‘depends’ on the independent variable. It represents the outcome or result that researchers aim to measure and is influenced by the changes in the IV. Moderating Variable (MV) : The dark horse of our trio, the moderating variable affects the strength or direction of the relationship between the IV and DV. In simpler terms, it modifies the impact of the independent variable on the dependent variable. This intriguing interaction is what we will explore today.

Understanding this triad sets the foundation for grasping the nuances of moderating variables. So, with the stage set, let’s proceed to explore the world of moderating variables with vivid examples.

Need Help in Your Research?

Schedule a Call

Diving into moderating variables: examples unveiled.

Illustrations make understanding easier, and when we talk about moderating variables, they become all the more important. They help us visualize how these variables weave themselves into the fabric of a study, subtly influencing the relationship between the IV and DV. Let’s explore some real-world examples:

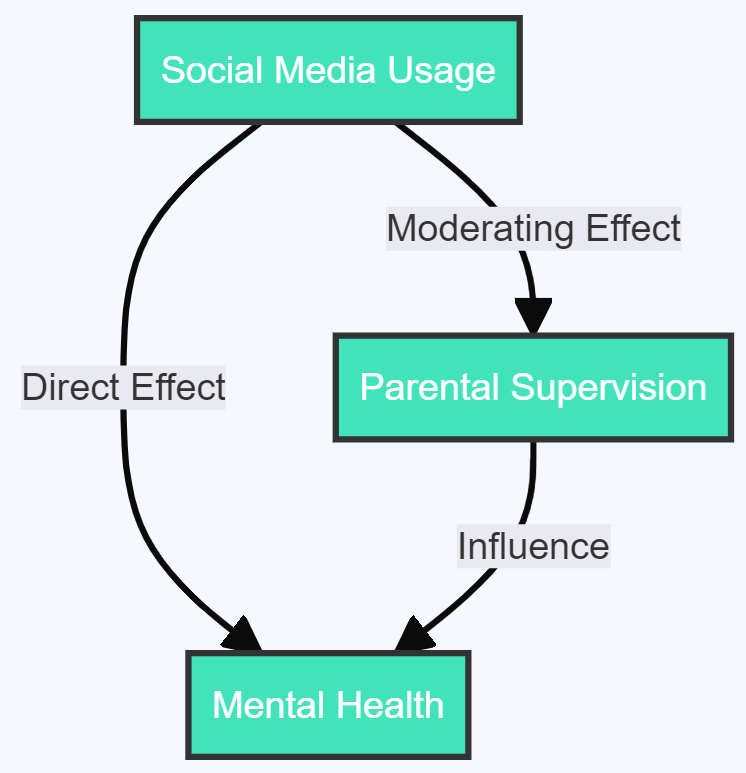

- Parental Supervision moderating the effect of Social Media Usage on Mental Health : In this case, the extent of parental supervision might moderate (either decrease or increase) the impact of social media usage on a child’s mental health. In a nutshell, the moderating variable is an intriguing character, influencing the relationship between two other variables in a study. It may affect the intensity, direction, or even the very nature of this relationship, making it a crucial factor to consider in research design.

With this introduction, we’ve just begun our deep dive into the fascinating realm of moderating variables. Stick with us as we continue our exploration, further unraveling the layers of this complex yet fascinating component of research.

The Power of Moderating Variables: More Examples

Let’s carry on with our journey into the realm of moderating variables, further solidifying our understanding through a broader range of examples:

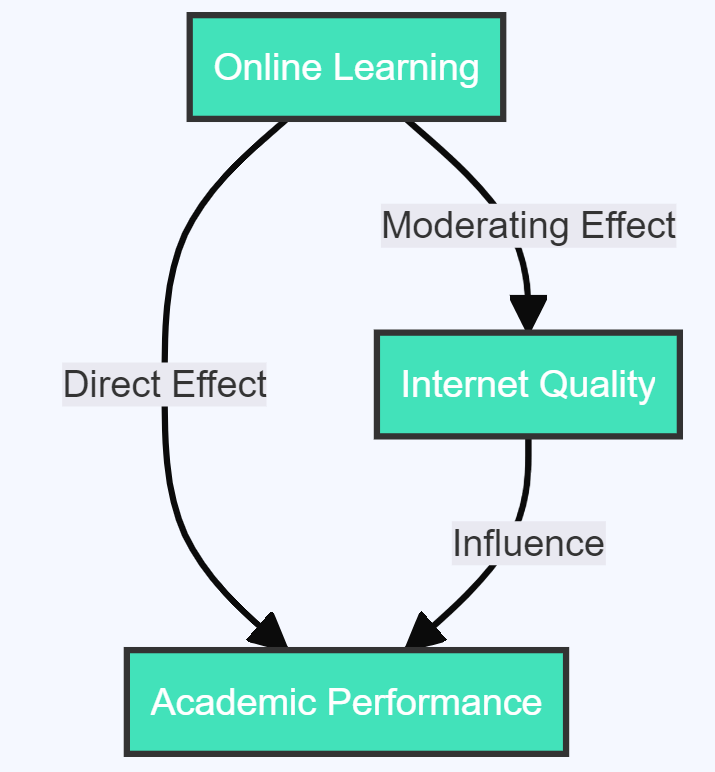

- Internet Quality moderating the effect of Online Learning on Academic Performance : Here, the quality of the internet could determine the extent of the impact of online learning on academic performance. In regions with high-speed internet, the impact might be positive, whereas, in regions with poor internet connectivity, the impact might be negative.

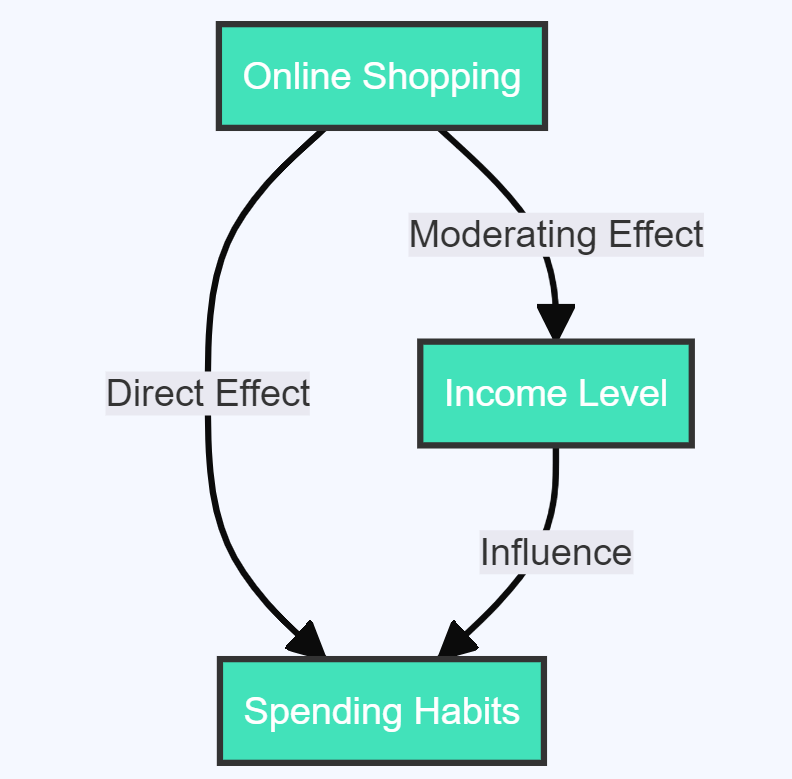

- Income Level moderating the effect of Online Shopping on Spending Habits : The level of income could influence how online shopping impacts a person’s spending habits. For instance, high-income individuals may splurge more when shopping online, whereas low-income individuals might show more restraint.

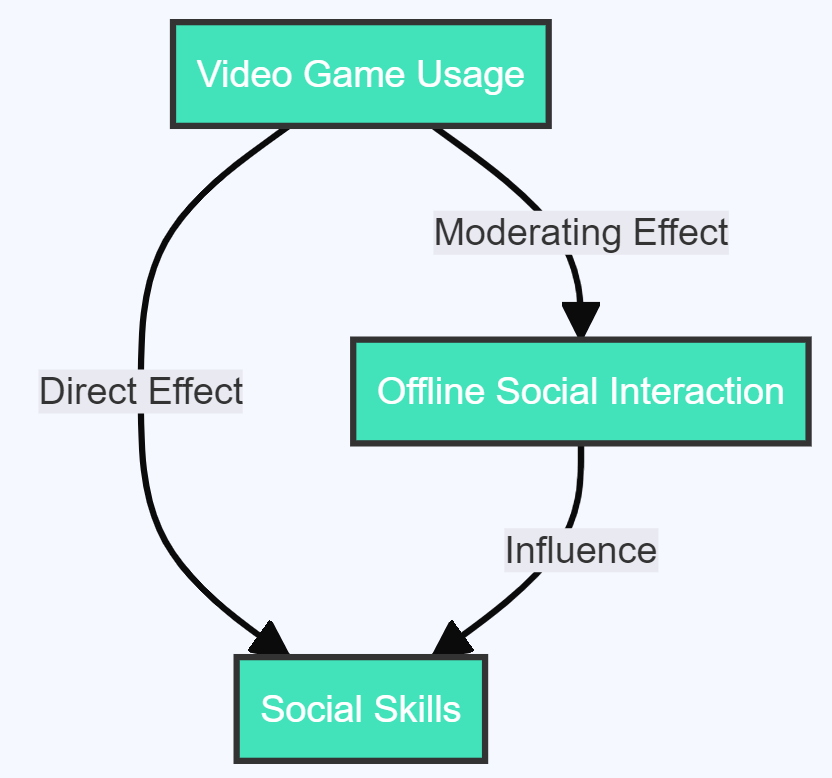

- Offline Social Interaction moderating the effect of Video Game Usage on Social Skills : In this case, the amount of offline social interaction could temper the influence of video game usage on social skills. For those who also engage in a healthy amount of offline social activities, the impact might be negligible, but for those who primarily interact socially via video games, the impact might be more pronounced.

These examples illuminate the multidimensional impact a moderating variable can have on a research study, providing a more nuanced understanding of the subject matter under investigation.

Harnessing the Influence: The Significance of Moderating Variables

You may be wondering, why are moderating variables so important in research? Can’t we simply analyze the direct relationship between the independent and dependent variables?

Well, in an ideal world, where every cause has a single, predictable effect, that might be possible. But the real world is full of complexities and interdependencies, which is where the moderating variable steps in.

A moderating variable helps us better understand the ‘how’ and ‘when’ of the relationship between the IV and DV. It reveals under what conditions the IV has an effect on the DV, and the nature of this effect.

This ability to bring forth the conditional effects in a study is what makes moderating variables a critical tool in research design. They allow us to examine relationships that are more dynamic, more reflective of the world’s complexity, ultimately leading us to more nuanced and accurate findings.

However, as with any tool, the key to harnessing the power of moderating variables lies in understanding their application and interpretation, which we will delve into in our upcoming sections.

Navigating Through the Sea of Examples: More Explorations

The power of moderating variables can be further revealed as we delve into even more examples:

- Peer Influence moderating the effect of Streaming Services Usage on Movie Preferences : The influence of peers could mitigate or intensify the impact of streaming service usage on movie preferences. For those with highly influential peers, their movie choices might be more determined by their friends, irrespective of what they watch on streaming platforms. On the other hand, those with less influential peers might rely more on their streaming history when selecting movies.

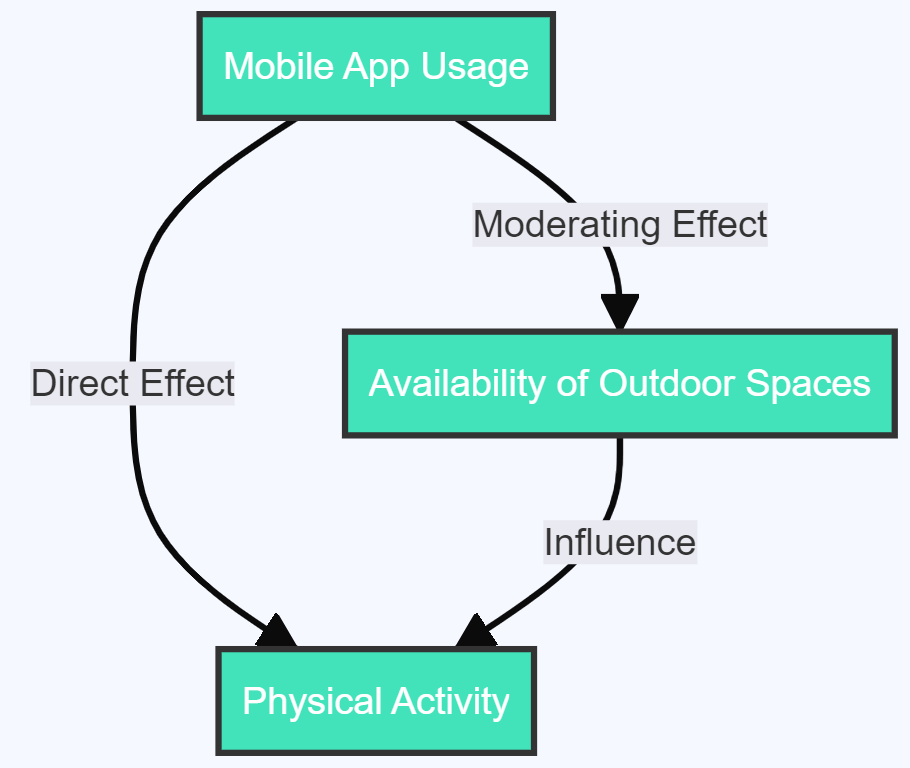

- Availability of Outdoor Spaces moderating the effect of Mobile App Usage on Physical Activity : The presence of accessible outdoor spaces could determine how mobile app usage affects physical activity. People who live in areas with ample outdoor spaces might use certain apps without affecting their level of physical activity, while those in more congested urban areas might exhibit reduced physical activity with increased mobile app usage.

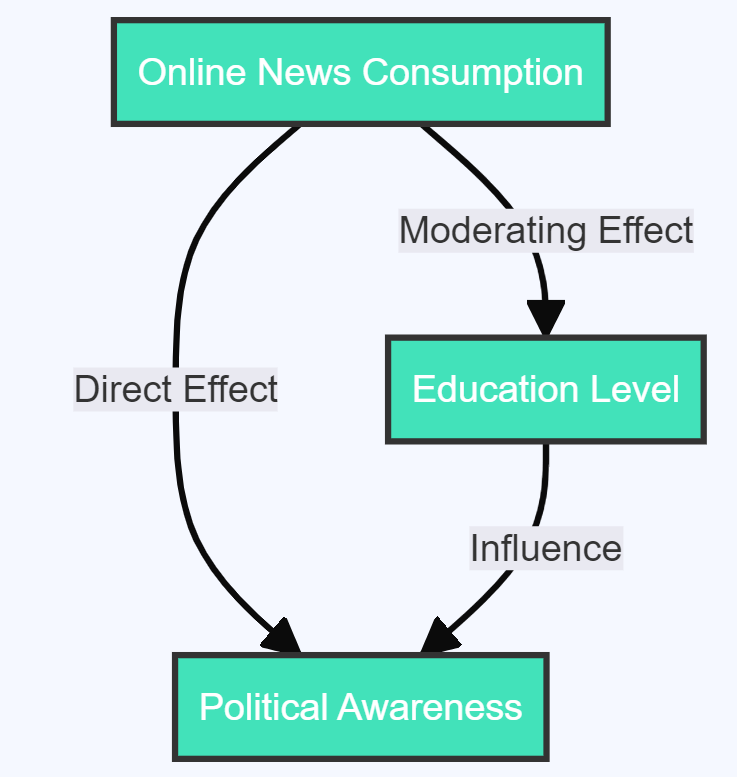

- Education Level moderating the effect of Online News Consumption on Political Awareness : A person’s education level could affect how online news consumption influences their political awareness. Those with higher education levels may extract more insightful information and exhibit increased political awareness with more online news consumption, whereas the less educated might not see a significant change in their political awareness.

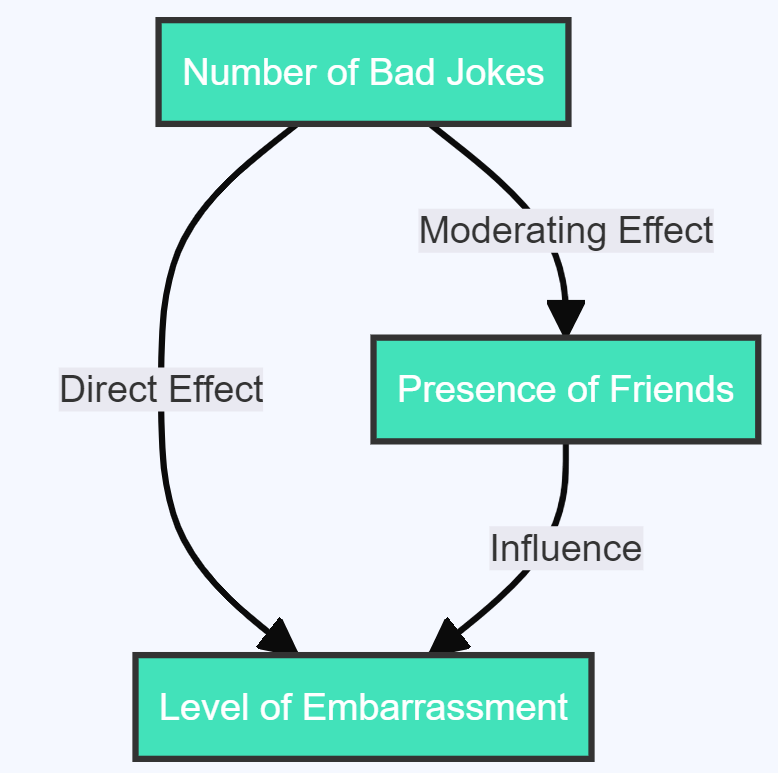

- Presence of Friends moderating the effect of Number of bad Jokes Told on Level of Embarrassment : The presence of friends may decrease or increase the level of embarrassment felt when a person tells a certain number of bad jokes. It depends on the relationship dynamics within the group – are the friends supportive and likely to laugh along, or are they more likely to mock the joke-teller?

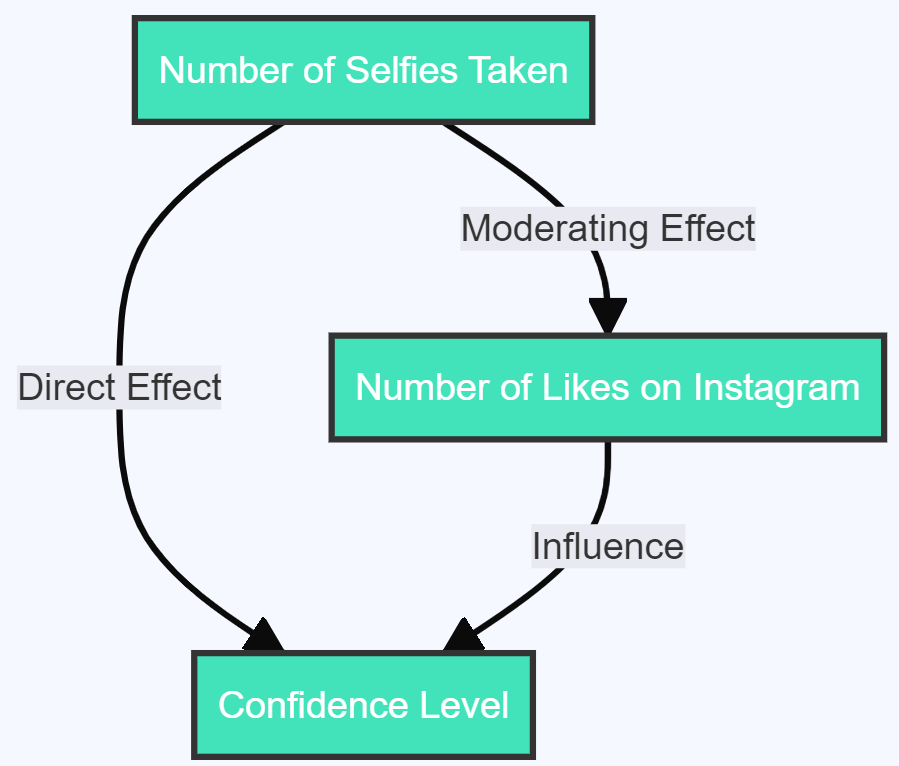

- Number of Likes on Instagram moderating the effect of Number of Selfies Taken on Confidence Level : The number of selfies taken can affect confidence, but the impact is likely to vary depending on the number of likes received on Instagram. More likes can lead to increased confidence, while fewer likes might lower it, despite the number of selfies taken.

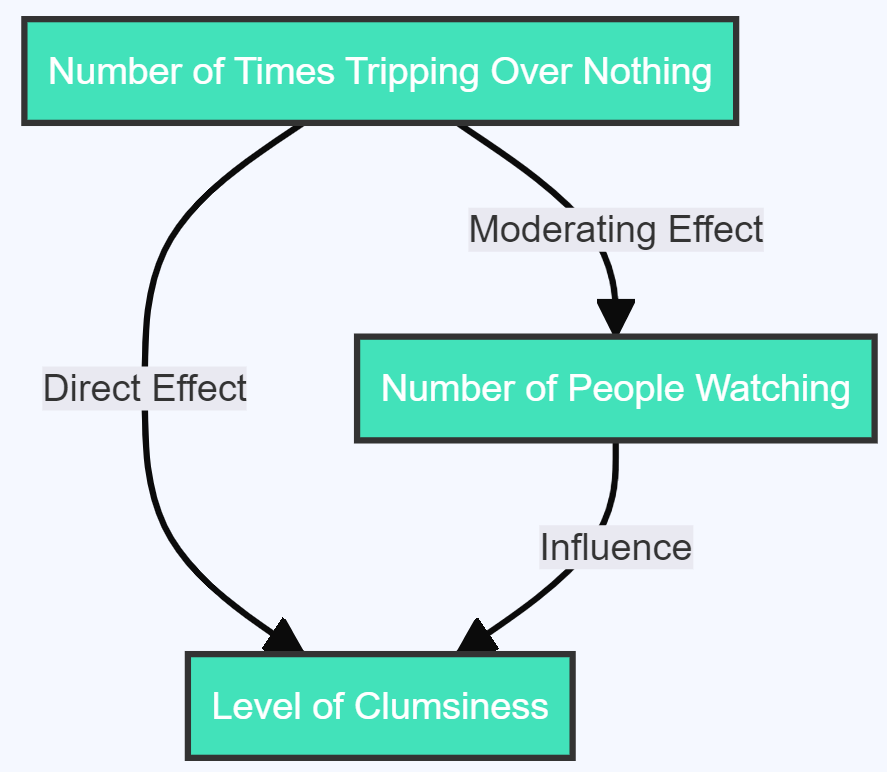

- Number of People Watching moderating the effect of Number of Times Tripping Over Nothing on Level of Clumsiness : The more people are watching, the more clumsy a person might feel if they trip over nothing multiple times. With fewer spectators, the perceived level of clumsiness might be less.

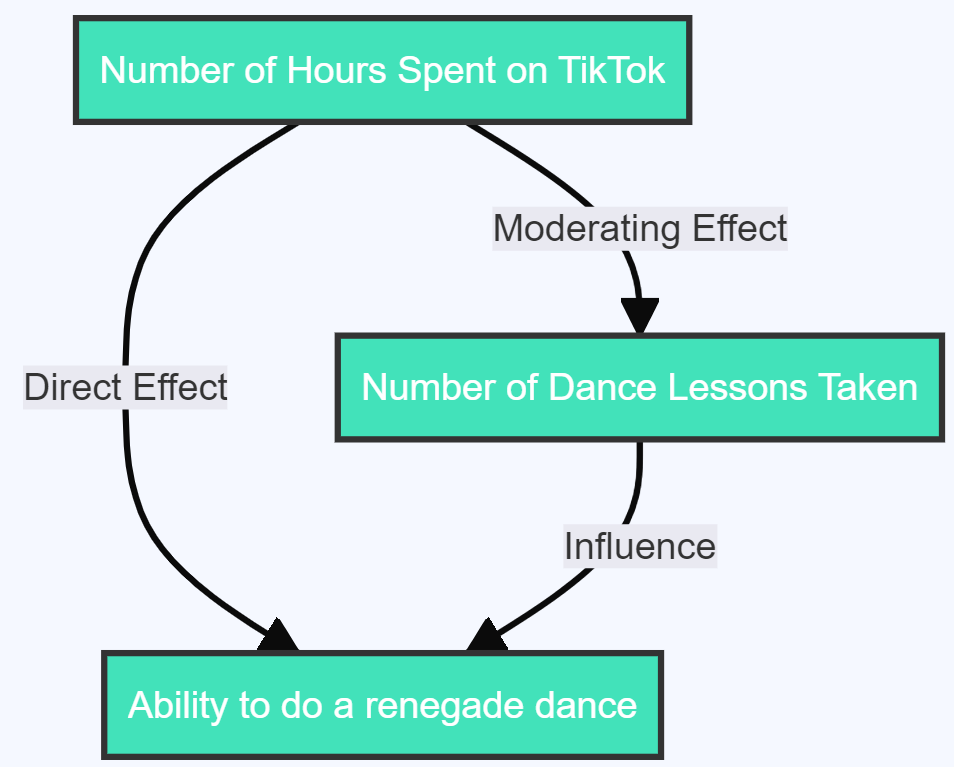

- Number of Dance Lessons Taken moderating the effect of Number of Hours Spent on TikTok on Ability to Do a Renegade Dance : More dance lessons can enhance the ability to do a Renegade Dance, even if the number of hours spent on TikTok is high. Conversely, fewer dance lessons might not improve the dance skills despite many hours spent watching TikTok.

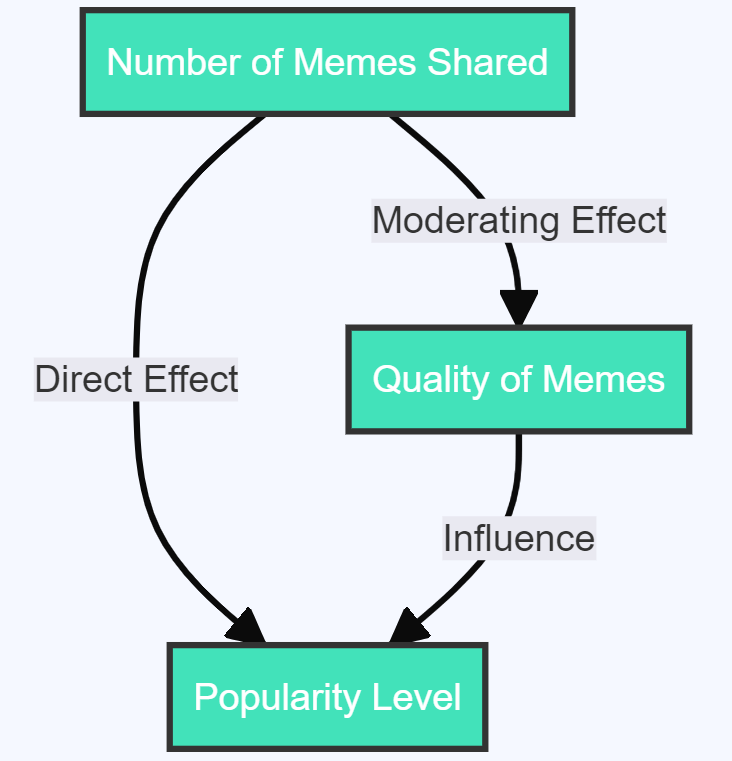

- Quality of Memes moderating the effect of Number of Memes Shared on Popularity Level : Sharing a large number of memes may not necessarily increase popularity if the quality of those memes is low. High-quality memes can boost popularity, even when the quantity shared is less.

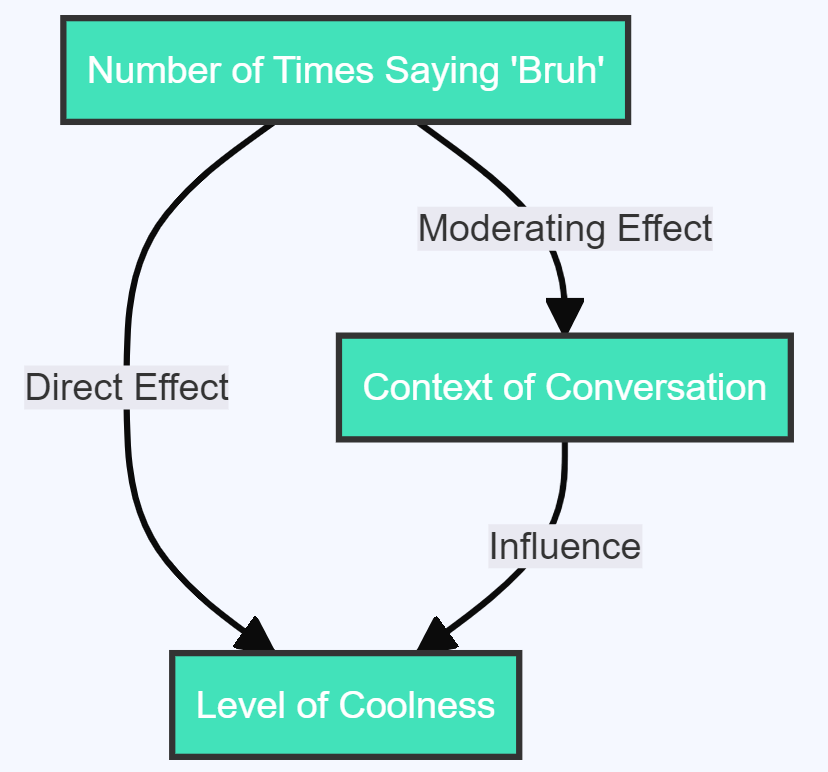

- Context of Conversation moderating the effect of Number of Times Saying ‘Bruh’ on Level of Coolness : Saying ‘Bruh’ multiple times might be seen as cool in a casual conversation among peers. However, the same might not be considered cool in a formal or serious conversation. Thus, the context moderates the effect of the frequency of saying ‘Bruh’ on perceived coolness.

These instances further elucidate how the moderating variable can contextualize and refine our understanding of the relationship between an independent and a dependent variable.

Identifying Moderating Variables: The Vital Steps

After gaining a robust understanding of what a moderating variable is and how it operates, let’s move onto identifying them in research studies. Here are the crucial steps to follow:

- Formulate the Research Question : The research question should be clear and concise, and it should mention the presumed moderating variable.

- Design the Study : Design your research such that it enables the isolation of the moderating variable.

- Collect Data : Gather data on the independent, dependent, and moderating variables. It’s essential to measure all three for the subsequent analysis.

- Analyze the Data : Use statistical methods to analyze the data and ascertain the impact of the moderating variable.

Remember, correctly identifying and incorporating moderating variables into your study can significantly enhance its depth, richness, and overall validity. But keep an eye out! Misidentification or misuse can lead to misleading conclusions.

In the next section, we’ll explore the potential challenges and common pitfalls to avoid when dealing with moderating variables.

Potential Pitfalls and Challenges: A Guided Cautionary Tale

Engaging with moderating variables can feel like navigating a labyrinth at times. Missteps could potentially distort your research findings. Here are some of the most common pitfalls and challenges you should keep an eye on:

- Misidentification : An all-too-common mistake is misidentifying a moderating variable as either an independent or a dependent variable. Understanding and defining the role of each variable in your study will help mitigate this risk.

- Multicollinearity : This phenomenon arises when your independent variables are too closely associated with each other. It can result in unstable estimates of regression coefficients, making it hard to interpret your results. Multicollinearity can be particularly challenging when it involves your moderating variable.

- Overfitting : Trying to fit too many moderating variables into your model can lead to overfitting, where your model performs well on the training data but poorly with new, unseen data. Striking a balance is key here.

- Misinterpretation : Even if correctly identified and measured, moderating variables can still be misinterpreted. Researchers must ensure they accurately interpret the impact of the moderating variable on the relationship between the independent and dependent variables.

Understanding these challenges is crucial, but it’s only one side of the coin. The next step is learning how to correctly interpret and present the results involving moderating variables.

Making Sense of Results: The Art of Interpretation

Interpreting results involving moderating variables can be a complex task. However, by following a structured approach, it becomes more manageable:

- Analysis : Use appropriate statistical methods to analyze your data, such as regression analysis or Analysis of Variance (ANOVA).

- Visualisation : Plotting the interaction between your variables can often make it easier to understand. Interaction plots or 3D surface plots are commonly used.

- Interpretation : Consider the direction and magnitude of the effect of your moderating variable. Does it strengthen or weaken the relationship between your independent and dependent variables? Does it reverse the relationship?

- Communication : Explain the role of your moderating variable in layman’s terms. Your research should be accessible to those outside of your specific field.

In the final segment of this comprehensive guide, we will wrap up our discussion and reinforce some key takeaways about moderating variables.

Wrapping Up: Final Thoughts on Moderating Variables

A researcher’s journey into the world of moderating variables can be complex and, at times, even challenging. Still, it’s an essential path to tread for those seeking to unveil nuanced and contextual understanding from their research. It is the subtle interplay of variables that often delivers the most valuable insights.

But let’s not forget the individuals who are at the heart of this journey – the researchers. To those who are keen on incorporating moderating variables in their research, here’s a distilled summary of the key takeaways from this guide:

- Aim for Clarity : Understand and clearly define the role of each variable in your study. The success of your research hinges on how well you’ve comprehended the triad of independent, dependent, and moderating variables.

- Design Matters : Structure your study keeping the moderating variable(s) in mind. Be wary of common pitfalls, like multicollinearity and overfitting.

- Statistical Tools are your Friends : Familiarise yourself with the statistical tools necessary to analyze the effects of your moderating variable(s).

- Interpretation is Key : Ensure that you interpret the results correctly. Remember, the effectiveness of your research lies in its interpretation.

- Communicate with Precision : Lastly, communicate your findings effectively. The world needs to know what you’ve discovered.

Embarking on a research journey with moderating variables in tow might be challenging, but the result is undeniably rewarding. The added depth and context they bring to your research can be the difference between a good study and a great one.

Happy researching!

We trust that this article has provided you with a deep understanding of the topic. Feel free to reach out for any further queries or discussions. Keep visiting statssy.com for more insights and guides on data analytics, research methods, and machine learning.

Submit a Comment Cancel reply

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

- Please wait..

- Into 2023: The Best Must-Attend Data Science Conferences You Can’t Miss! 🚀

- Power of Data Analysis in Research: A Comprehensive Guide to Types, Methods, and Tools

- Becoming a Data Analyst After B. Com: A Comprehensive Roadmap

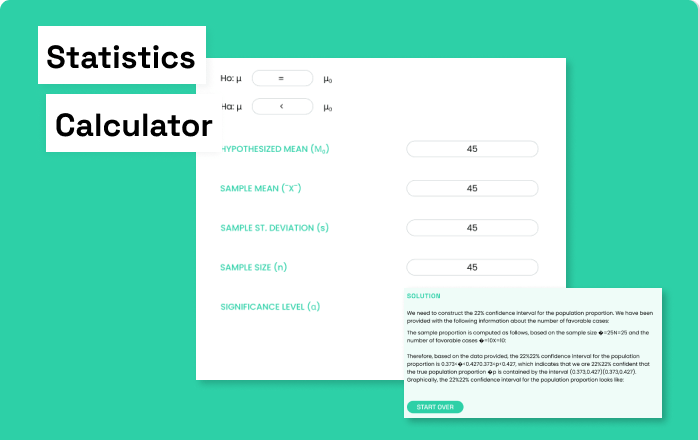

Unlock the power of data with our user-friendly statistics calculator.

Explore our data science courses to supercharge your career growth in the world of data and analytics.

Test Your Skills With Our Quiz

Contact me today i have solution to all your problems..

- Privacy Policy

Home » Moderating Variable – Definition, Analysis Methods and Examples

Moderating Variable – Definition, Analysis Methods and Examples

Table of Contents

Moderating Variable

Definition:

A moderating variable is a variable that affects the strength or direction of the relationship between two other variables. It is also referred to as an interactive variable or a moderator.

In social science research, a moderating variable is often used to understand how the relationship between two variables changes depending on the level of a third variable. For example, in a study examining the relationship between stress and job performance, age might be a moderating variable. The relationship between stress and job performance may be stronger for younger workers than for older workers, meaning that age is influencing the relationship between stress and job performance.

Moderating Variable Analysis Methods

Moderating Variable Analysis Methods are as follows:

Regression Analysis

Regression analysis is a statistical technique that examines the relationship between a dependent variable and one or more independent variables. In the case of a moderating variable, a regression analysis can be used to examine the interaction between the independent and moderating variables in predicting the dependent variable. This can be done using a simple regression or multiple regression analysis, depending on the number of variables involved.

Analysis of Variance (ANOVA)

ANOVA is a statistical method used to compare the means of two or more groups. In the case of a moderating variable, ANOVA can be used to compare the mean differences between groups based on different levels of the moderating variable. For example, if age is a moderating variable, ANOVA can be used to compare the mean differences in job performance between younger and older workers at different levels of stress.

Multiple Regression Analysis

Multiple regression analysis is a statistical technique used to predict the value of a dependent variable based on two or more independent variables. In the case of a moderating variable, multiple regression analysis can be used to examine the interaction between the independent variables and the moderating variable in predicting the dependent variable.

Moderating Variable Examples

Here are a few examples of moderating variables:

- Age as a moderating variable : Suppose a study examines the relationship between exercise and heart health. Age may act as a moderating variable, influencing the relationship between exercise and heart health. For example, the relationship between exercise and heart health may be stronger for younger adults compared to older adults.

- Gender as a moderating variable: Consider a study examining the relationship between salary and job satisfaction. Gender may act as a moderating variable, influencing the relationship between salary and job satisfaction. For example, the relationship between salary and job satisfaction may be stronger for men than for women.

- Social support as a moderating variable: Suppose a study examines the relationship between stress and mental health. Social support may act as a moderating variable, influencing the relationship between stress and mental health. For example, the relationship between stress and mental health may be stronger for individuals with low social support compared to those with high social support.

- Education level as a moderating variable: Consider a study examining the relationship between technology use and academic performance. Education level may act as a moderating variable, influencing the relationship between technology use and academic performance. For example, the relationship between technology use and academic performance may be stronger for individuals with higher education levels compared to those with lower education levels.

Applications of Moderating Variable

- Market research: Moderating variables are often used in market research to identify the factors that influence consumer behavior. For example, age, income, and education level can be moderating variables that affect the relationship between advertising and consumer purchasing behavior.

- Psychology : In psychology, moderating variables can help explain the relationship between variables such as personality traits and job performance. For example, a person’s level of conscientiousness may moderate the relationship between their job performance and job satisfaction.

- Education: In education, moderating variables can help explain the relationship between teaching methods and student learning outcomes. For example, the level of student engagement may moderate the relationship between a teacher’s teaching style and student learning outcomes.

- Health : In health research, moderating variables can help explain the relationship between risk factors and health outcomes. For example, gender may moderate the relationship between smoking and lung cancer.

- Social sciences: In the social sciences, moderating variables can help explain the relationship between variables such as income and happiness. For example, the level of social support may moderate the relationship between income and happiness.

Purpose of Moderating Variable

The purpose of a moderating variable is to identify the conditions under which the relationship between two other variables changes or becomes stronger or weaker. In other words, a moderating variable helps to explain the context in which a particular relationship exists.

For example, let’s consider the relationship between stress and job performance. The relationship may be different depending on the level of social support that an individual receives. In this case, social support is the moderating variable. If an individual has high levels of social support, the negative impact of stress on job performance may be reduced. On the other hand, if an individual has low levels of social support, the negative impact of stress on job performance may be amplified.

The purpose of identifying moderating variables is to help researchers better understand the complex relationships between variables and to provide more accurate predictions of outcomes in specific situations. By identifying the conditions under which a relationship exists or changes, researchers can develop more effective interventions and treatments. Moderating variables can also help to identify subgroups of individuals who may benefit more or less from a particular intervention or treatment.

When to use Moderating Variable

Here are some scenarios where using a moderating variable can be helpful:

- When there is a complex relationship: In situations where the relationship between two variables is complex, a moderating variable can help to clarify the relationship. For example, the relationship between stress and job performance may be influenced by a variety of factors such as job demands, social support, and coping mechanisms.

- When there is a subgroup effect : In situations where the effect of one variable on another is stronger or weaker for certain subgroups of individuals, a moderating variable can be helpful. For example, the relationship between exercise and weight loss may be stronger for individuals who are obese compared to individuals who are not obese.

- When there is a need for tailored interventions: In situations where the effect of one variable on another is different for different individuals, a moderating variable can be useful for developing tailored interventions. For example, the relationship between diet and weight loss may be influenced by individual differences in genetics, metabolism, and lifestyle.

Characteristics of Moderating Variable

The following are some key characteristics of moderating variables:

- Interact with other variables : Moderating variables interact with other variables in a statistical relationship, influencing the strength or direction of the relationship between two other variables.

- Independent variable: Moderating variables are independent variables in a statistical analysis, meaning that they are not influenced by any of the other variables in the analysis.

- Categorical or continuous: Moderating variables can be either categorical or continuous. Categorical moderating variables have distinct categories or levels (e.g., gender), while continuous moderating variables can take on any value within a range (e.g., age).

- Can be identified through statistical analysis: Moderating variables can be identified through statistical analysis using regression analysis or ANOVA. Researchers can examine the interaction between the independent and moderating variables in predicting the dependent variable to determine if the moderating variable has a significant impact.

- Influence the relationship between other variables : The impact of a moderating variable on the relationship between other variables can be positive, negative, or null. It depends on the specific research question and the data analyzed.

- Provide insight into underlying mechanisms: Moderating variables can provide insight into underlying mechanisms driving the relationship between other variables, providing a more nuanced understanding of the relationship.

Advantages of Moderating Variable

There are several advantages of using a moderating variable in research:

- Provides a more nuanced understanding of relationships: By identifying the conditions under which a particular relationship exists or changes, a moderating variable provides a more nuanced understanding of the relationship between two variables. This can help researchers to better understand complex relationships and to develop more effective interventions.

- Improves accuracy of predictions: By identifying the conditions under which a relationship exists or changes, a moderating variable can improve the accuracy of predictions about outcomes in specific situations. This can help researchers to develop more effective interventions and treatments.

- Identifies subgroups of individuals : Moderating variables can help to identify subgroups of individuals who may benefit more or less from a particular intervention or treatment. This can help researchers to develop more tailored interventions that are more effective for specific groups of individuals.

- Increases generalizability: By identifying the conditions under which a relationship exists or changes, a moderating variable can increase the generalizability of findings. This can help researchers to apply findings from one study to other populations and contexts.

- Provides more complete understanding of phenomena : By considering the role of a moderating variable, researchers can gain a more complete understanding of the phenomena they are studying. This can help to identify areas for future research and to generate new hypotheses.

Disadvantages of Moderating Variable

Disadvantages of Moderating Variable are as follows:

- Complexity: The use of moderating variables can make research more complex and challenging to design, analyze, and interpret. This can require more resources and expertise than simpler research designs.

- Increased risk of Type I errors : When using a moderating variable, there is an increased risk of Type I errors, or false positives. This can occur when a relationship is identified that appears significant, but is actually due to chance.

- Reduced generalizability: Moderating variables can limit the generalizability of findings to other populations and contexts. This is because the relationship between two variables may be influenced by different moderating variables in different contexts.

- Limited explanatory power: While moderating variables can help to identify conditions under which a relationship exists, they may not provide a complete explanation of why the relationship exists. Other variables may also play a role in the relationship.

- Data requirements: Using moderating variables often requires larger sample sizes and more data than simpler research designs. This can increase the time and resources required to conduct the research.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

You may also like

Control Variable – Definition, Types and Examples

Qualitative Variable – Types and Examples

Variables in Research – Definition, Types and...

Categorical Variable – Definition, Types and...

Independent Variable – Definition, Types and...

Ratio Variable – Definition, Purpose and Examples

- Utility Menu

Arts, Science and Writing Studio

A place for everyone to share and learn.

- Regression with a moderator 101

What is a moderator?

The linear regression modeling as illustrated in chapter 3 has different variations, just like ANOVA. The mathematics behind various regression models can be messy; however, the main objective of this book is to how to apply it. Regression with a moderator is an advance regression model to test for interaction, but what is a moderator in the first place?

A moderator was defined in Hayes (2017), “The effect of X on some variable Y is moderated by W if its size, sign, or strength depends on or can be predicted by W. In that case, W is said to be a moderator of X’s effect on Y, or that W and X interact in their influence on Y (220).” Now, let’s translate it into English. Let’s consider the following hypothetical scenarios.

You live in a city A or a literally utopian city with extremely low crime rate. Everyone in the city has abundant resources. There are many designer shops including Gucci, Louis Vuitton, Montblanc, etc. In the city, people with higher income tend to buy designer products to exhibit their socioeconomic status.

Now, it is reasonable to assume there is a positive significant correlation between the income and the amount of money spent on designer products. Let’s assume that beta is 20, and p-value is .01.

You live in a city B town where the crime rate has been skyrocketing in the past decade. Everyone in the city is afraid of being robbed. Therefore, designer shops are not quite as popular as they are in the miracle town. In city B, people with higher income do not really buy designer products to exhibit their socioeconomic status. Instead, they hire personal guards or install high-tech security system at home.

Now, consider the correlation between the income and the money spent on designer products. It vanishes. There is no significant correlation anymore. Let’s assume that beta is .02, and p-value is .82.

If you were a researcher, you collect samples from both city A and B. Your research population is residents from both city A and B, and you found that income is not a significant predictor variable of the money spent on designer products. Is your finding accurate? The answer is obvious NO. What is happening here? What makes the difference?

The main difference between city A and B is the crime rate. The crime rate influences people’s perception of safety, or how safe people think it is to exhibit their wealth by wearing designer products. People’s perception of safety here is a moderator. Put it back to the definition. People’s perception about safety is a moderator of income’s effect on the amount of money they spend on designer products, or people’s perception of safe and their income interacts with the amount of money they spend on designer products.

The other way to think how a moderator works is to look at beta or coefficient. In the first scenario when the moderator’s size is high, meaning that people have a high sense of security, the corresponding coefficient is 20. When the moderator’s size is low, meaning that people have a low sense of security, the corresponding coefficient is 0.02 which is a lot lower.

Figure 5.1.a Illustrating the moderator

Look at the figure 5.1.a, the moderator variable is color-coded by blue and orange. Both lines are the linear regression line where income predicts the money spent on designer products. The orange one is in the dangerous city where people do not feel safe showing off their designer products while the blue one is in the safe city where people feel safe doing so. It is logically plausible.

Regression with a moderator?

What is the statistical way to test for an interaction? How can we test if there is a significant moderator? First, we need to think if it logically makes sense. When one variable can change the direction of another variable, it is a potential candidate for the moderator.

Let’s consider the following hypothesis. Students tend to develop a more positive attitude towards the renewable energy policy if he/she knows more about climate change. Assume that we do not find a correlation between attitude towards the renewable energy policy and the amount of knowledge about climate change. We can stop here concluding that the data does not support enough evidences to reject the null.

If we do not know anything about moderators, we would definitely stop here, but since we know the concept of the moderator. Maybe, political stand or belief about climate change might be a moderator. If a person believes that climate change is fake and created as a political tool by the leftist, it is possible that he would only read and accumulate knowledge about why climate change is fake due to the conformation bias. Therefore, climate change deniers might indeed spend more time reading and have a great volume of knowledge about the invalidity of climate change. Therefore, for people holding this belief, there might be a negative correlation instead of a positive correlation. In fact, then, if tested to be significant, the belief about whether climate change is real is the moderator here!

The formula for regression with a moderator is

Y= b 1 X 1 + b 2 X 2 + b 3 X 1 X 2 +C (5.1)

By testing this model, three possible coefficients and p values would be given. Then, b1 and b2 is the coefficient for direct effect while B3 is the interaction. P value for b3 would indicate whether the correlation is significant.

Applying to Gender Report Data

Are there any interaction in the World Bank Gender Report Database (2017)? Off course, there are. Let’s look at one closer. Let’s define binary qualitative variable X (independent variable) to be whether law requires equal gender hiring (1=yes; 0=no). Then, define a quantitative continuous variable Y (dependent variable) to be expected years of schooling for girls in the country. Now, we define our moderator M to be whether men and married women have equal ownership rights to property.

Our research hypothesis is legislation about whether men and married women, and legislation about whether law requires equal gender hiring interacts in their influence to expected years of schooling for girls in a country.

The result is significant. Let’s take a look at the figure 5.1 (b).

Figure 5.1(b)

It is obvious that marriage with or without equal ownership of property changes the coefficient. For countries with legislation requiring equal ownership within marriage, there is a positive correlation between law requiring equal gender hiring and expected years of schooling for girls. It means that girls receive longer education if the countries have laws requiring equal gender hiring.

However, if the countries do not have legislation requiring equal ownership, there is a negative correlation meaning girls receive less education if the countries have laws requiring equal gender hiring. It is plausible logically. If there is no hope for females because once they are married, their husbands own everything, there is no point for them to go to school even if there is law requiring equal gender hiring. Why even work if all money females make belong to their husbands.

To run regression with a moderator, you can either code the moderator by multiplying your independent variable and moderator. Otherwise, you can use Andrew F. Hayes’s process in SPSS. It also gives you conditional effects of the focal predictor at values of the moderator. Please read Andrew F. Hayes’s book, Introduction to Mediation, Moderation, and Conditional Process Analysis , if you want to learn more about moderation and conditional process analysis.

The interaction modeling can help us explain a lot of real-life scenarios. Sometimes, even if two variables are not correlated significant, there would still something to discover.

Practice and Homework

Hayes, A. F. (2017). Introduction to Mediation, Moderation, and Conditional Process Analysis, Second Edition: A Regression-Based Approach. Guilford Publications.

- Call for Authors and Research Methodologists

- Let's Run Some Analysis

- Theory of Reverse Interest

Scientific research methods are not just for PhD. In fact, you can learn how to apply scientific methods and statistical tests in no time! The Research Methods and Statistics section will help you learn some statistical tests which are commonly used in scientific research. The goal is to explain the materials without 0 calculus involved, and we will make it as practical and easy as possble.

With scientific methods, you will never have to rely on getting information from media anymore. You can discover the truth with your own hands!

Have a language expert improve your writing

Run a free plagiarism check in 10 minutes, automatically generate references for free.

- Knowledge Base

- Methodology

- How to Write a Strong Hypothesis | Guide & Examples

How to Write a Strong Hypothesis | Guide & Examples

Published on 6 May 2022 by Shona McCombes .

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.

Table of contents

What is a hypothesis, developing a hypothesis (with example), hypothesis examples, frequently asked questions about writing hypotheses.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations, and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more variables . An independent variable is something the researcher changes or controls. A dependent variable is something the researcher observes and measures.

In this example, the independent variable is exposure to the sun – the assumed cause . The dependent variable is the level of happiness – the assumed effect .

Prevent plagiarism, run a free check.

Step 1: ask a question.

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2: Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalise more complex constructs.

Step 3: Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4: Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5: Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if … then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.

Step 6. Write a null hypothesis

If your research involves statistical hypothesis testing , you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H 0 , while the alternative hypothesis is H 1 or H a .

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses , by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

A hypothesis is not just a guess. It should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations, and statistical analysis of data).

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (‘ x affects y because …’).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study , the statistical hypotheses correspond logically to the research hypothesis.

Cite this Scribbr article

If you want to cite this source, you can copy and paste the citation or click the ‘Cite this Scribbr article’ button to automatically add the citation to our free Reference Generator.

McCombes, S. (2022, May 06). How to Write a Strong Hypothesis | Guide & Examples. Scribbr. Retrieved 29 April 2024, from https://www.scribbr.co.uk/research-methods/hypothesis-writing/

Is this article helpful?

Shona McCombes

Other students also liked, operationalisation | a guide with examples, pros & cons, what is a conceptual framework | tips & examples, a quick guide to experimental design | 5 steps & examples.

A Guide on Data Analysis

17 moderation.

- Spotlight Analysis: Compare the mean of the dependent of the two groups (treatment and control) at every value ( Simple Slopes Analysis )

- Floodlight Analysis: is spotlight analysis on the whole range of the moderator ( Johnson-Neyman intervals )

Other Resources:

BANOVAL : floodlight analysis on Bayesian ANOVA models

cSEM : doFloodlightAnalysis in SEM model

( Spiller et al. 2013 )

Terminology:

Main effects (slopes): coefficients that do no involve interaction terms

Simple slope: when a continuous independent variable interact with a moderating variable, its slope at a particular level of the moderating variable

Simple effect: when a categorical independent variable interacts with a moderating variable, its effect at a particular level of the moderating variable.

\[ Y = \beta_0 + \beta_1 X + \beta_2 M + \beta_3 X \times M \]

\(\beta_0\) = intercept

\(\beta_1\) = simple effect (slope) of \(X\) (independent variable)

\(\beta_2\) = simple effect (slope) of \(M\) (moderating variable)

\(\beta_3\) = interaction of \(X\) and \(M\)

Three types of interactions:

- Continuous by continuous

- Continuous by categorical

- Categorical by categorical

When interpreting the three-way interactions, one can use the slope difference test ( Dawson and Richter 2006 )

17.1 emmeans package

Data set is from UCLA seminar where gender and prog are categorical

17.1.1 Continuous by continuous

Simple slopes for a continuous by continuous model

Spotlight analysis ( Aiken and West 2005 ) : usually pick 3 values of moderating variable:

Mean Moderating Variable + \(\sigma \times\) (Moderating variable)

Mean Moderating Variable

Mean Moderating Variable - \(\sigma \times\) (Moderating variable)

The 3 p-values are the same as the interaction term.

For publication, we use

17.1.2 Continuous by categorical

Get simple slopes by each level of the categorical moderator

17.1.3 Categorical by categorical

Simple effects

17.2 probmod package

- Not recommend: package has serious problem with subscript.

17.3 interactions package

17.3.1 continuous interaction.

- (at least one of the two variables is continuous)

For continuous moderator, the three values chosen are:

-1 SD above the mean

-1 SD below the mean

To include weights from the regression inn the plot

Partial Effect Plot

Check linearity assumption in the model

Plot the lines based on the subsample (red line), and whole sample (black line)

17.3.1.1 Simple Slopes Analysis

continuous by continuous variable interaction (still work for binary)

conditional slope of the variable of interest (i.e., the slope of \(X\) when we hold \(M\) constant at a value)

Using sim_slopes it will

mean-center all variables except the variable of interest

For moderator that is

Continuous, it will pick mean, and plus/minus 1 SD

Categorical, it will use all factor

sim_slopes requires

A regression model with an interaction term)

Variable of interest ( pred = )

Moderator: ( modx = )

17.3.1.2 Johnson-Neyman intervals

To know all the values of the moderator for which the slope of the variable of interest will be statistically significant, we can use the Johnson-Neyman interval ( P. O. Johnson and Neyman 1936 )

Even though we kind of know that the alpha level when implementing the Johnson-Neyman interval is not correct ( Bauer and Curran 2005 ) , not until recently that there is a correction for the type I and II errors ( Esarey and Sumner 2018 ) .

Since Johnson-Neyman inflates the type I error (comparisons across all values of the moderator)

For plotting, we can use johnson_neyman

- y-axis is the conditional slope of the variable of interest

17.3.1.3 3-way interaction

Johnson-Neyman 3-way interaction

17.3.2 Categorical interaction

17.4 interactionR package

- For publication purposes

( Knol and VanderWeele 2012 ) for presentation

( Hosmer and Lemeshow 1992 ) for confidence intervals based on the delta method

( Zou 2008 ) for variance recovery “mover” method

( Assmann et al. 1996 ) for bootstrapping

17.5 sjPlot package

For publication purposes (recommend, but more advanced)

What is a Moderating Variable? Definition & Example

A moderating variable is a type of variable that affects the relationship between a dependent variable and an independent variable .

When performing regression analysis , we’re often interested in understanding how changes in an independent variable affect a dependent variable. However, sometimes a moderating variable can affect this relationship.

For example, suppose we want to fit a regression model in which we use the independent variable hours spent exercising each week to predict the dependent variable resting heart rate .

We suspect that more hours spent exercising is associated with a lower resting heart rate. However, this relationship could be affected by a moderating variable such as gender .

It’s possible that each extra hour of exercise causes resting heart rate to drop more for men compared to women.

Another example of a moderating variable could be age . It’s likely that each extra hour of exercise causes resting heart rate to drop more for younger people compared to older people.

Properties of Moderating Variables

Moderating variables have the following properties:

1. Moderating variables can be qualitative or quantitative .

Qualitative variables are variables that take on names or labels. Examples include:

- Gender (Male or Female)

- Education Level (High School Degree, Bachelor’s Degree, Master’s Degree, etc.)

- Marital Status (Single, Married, Divorced)

Quantitative variables are variables that take on numerical values. Examples include:

- Square Footage

- Population Size

In the previous examples, gender was a qualitative variable that could affect the relationship between hours studied and resting heart rate while age was a quantitative variable that could potentially affect the relationship.

2. Moderating variables can affect the relationship between an independent and dependent variable in a variety of ways.

Moderating variables can have the following effects:

- Strengthen the relationship between two variables.

- Weaken the relationship between two variables.

- Negate the relationship between two variables.

Depending on the situation, a moderating variable can moderate the relationship between two variables in many different ways.

How to Test for Moderating Variables

If X is an independent variable (sometimes called a “predictor” variable) and Y is a dependent variable (sometimes called a “response” variable), then we could write a regression equation to describe the relationship between the two variables as follows:

Y = β 0 + β 1 X

If we suspect that some other variable, Z , is a moderator variable, then we could fit the following regression model:

Y = β 0 + β 1 X 1 + β 2 Z + β 3 XZ

In this equation, the term XZ is known as an interaction term .

If the p-value for the coefficient of XZ in the regression output is statistically significant, then this indicates that there is a significant interaction between X and Z and Z should be included in the regression model as a moderator variable.

We would write the final model as:

Y = β 0 + β 1 X + β 2 Z + β 3 XZ

If the p-value for the coefficient of XZ in the regression output is not statistically significant, then Z is not a moderator variable.

However it’s possible that the coefficient for Z could still be statistically significant. In this case, we would simply include Z as another independent variable in the regression model.

We would then write the final model as:

Y = β 0 + β 1 X + β 2 Z

Additional Resources

How to Read and Interpret a Regression Table How to Use Dummy Variables in Regression Analysis Introduction to Confounding Variables

When to Use a Chi-Square Test (With Examples)

How to create a normal probability plot in excel (step-by-step), related posts, how to normalize data between -1 and 1, vba: how to check if string contains another..., how to interpret f-values in a two-way anova, how to create a vector of ones in..., how to find the mode of a histogram..., how to find quartiles in even and odd..., how to determine if a probability distribution is..., what is a symmetric histogram (definition & examples), how to calculate sxy in statistics (with example), how to calculate sxx in statistics (with example).

- Chapter 1: Introduction

- Chapter 2: Indexing

- Chapter 3: Loops & Logicals

- Chapter 4: Apply Family

- Chapter 5: Plyr Package

- Chapter 6: Vectorizing

- Chapter 7: Sample & Replicate

- Chapter 8: Melting & Casting

- Chapter 9: Tidyr Package

- Chapter 10: GGPlot1: Basics

- Chapter 11: GGPlot2: Bars & Boxes

- Chapter 12: Linear & Multiple

- Chapter 13: Ploting Interactions

- Chapter 14: Moderation/Mediation

- Chapter 15: Moderated-Mediation

- Chapter 16: MultiLevel Models

- Chapter 17: Mixed Models

- Chapter 18: Mixed Assumptions Testing

- Chapter 19: Logistic & Poisson

- Chapter 20: Between-Subjects

- Chapter 21: Within- & Mixed-Subjects

- Chapter 22: Correlations

- Chapter 23: ARIMA

- Chapter 24: Decision Trees

- Chapter 25: Signal Detection

- Chapter 26: Intro to Shiny

- Chapter 27: ANOVA Variance

- Download Rmd

Chapter 14: Mediation and Moderation

Alyssa blair, 1 what are mediation and moderation.

Mediation analysis tests a hypothetical causal chain where one variable X affects a second variable M and, in turn, that variable affects a third variable Y. Mediators describe the how or why of a (typically well-established) relationship between two other variables and are sometimes called intermediary variables since they often describe the process through which an effect occurs. This is also sometimes called an indirect effect. For instance, people with higher incomes tend to live longer but this effect is explained by the mediating influence of having access to better health care.

In R, this kind of analysis may be conducted in two ways: Baron & Kenny’s (1986) 4-step indirect effect method and the more recent mediation package (Tingley, Yamamoto, Hirose, Keele, & Imai, 2014). The Baron & Kelly method is among the original methods for testing for mediation but tends to have low statistical power. It is covered in this chapter because it provides a very clear approach to establishing relationships between variables and is still occassionally requested by reviewers. However, the mediation package method is highly recommended as a more flexible and statistically powerful approach.

Moderation analysis also allows you to test for the influence of a third variable, Z, on the relationship between variables X and Y. Rather than testing a causal link between these other variables, moderation tests for when or under what conditions an effect occurs. Moderators can stength, weaken, or reverse the nature of a relationship. For example, academic self-efficacy (confidence in own’s ability to do well in school) moderates the relationship between task importance and the amount of test anxiety a student feels (Nie, Lau, & Liau, 2011). Specifically, students with high self-efficacy experience less anxiety on important tests than students with low self-efficacy while all students feel relatively low anxiety for less important tests. Self-efficacy is considered a moderator in this case because it interacts with task importance, creating a different effect on test anxiety at different levels of task importance.

In general (and thus in R), moderation can be tested by interacting variables of interest (moderator with IV) and plotting the simple slopes of the interaction, if present. A variety of packages also include functions for testing moderation but as the underlying statistical approaches are the same, only the “by hand” approach is covered in detail in here.

Finally, this chapter will cover these basic mediation and moderation techniques only. For more complicated techniques, such as multiple mediation, moderated mediation, or mediated moderation please see the mediation package’s full documentation.

1.1 Getting Started

If necessary, review the Chapter on regression. Regression test assumptions may be tested with gvlma . You may load all the libraries below or load them as you go along. Review the help section of any packages you may be unfamiliar with ?(packagename).

2 Mediation Analyses

Mediation tests whether the effects of X (the independent variable) on Y (the dependent variable) operate through a third variable, M (the mediator). In this way, mediators explain the causal relationship between two variables or “how” the relationship works, making it a very popular method in psychological research.

Both mediation and moderation assume that there is little to no measurement error in the mediator/moderator variable and that the DV did not CAUSE the mediator/moderator. If mediator error is likely to be high, researchers should collect multiple indicators of the construct and use SEM to estimate latent variables. The safest ways to make sure your mediator is not caused by your DV are to experimentally manipulate the variable or collect the measurement of your mediator before you introduce your IV.

Total Effect Model.

Basic Mediation Model.

c = the total effect of X on Y c = c’ + ab c’= the direct effect of X on Y after controlling for M; c’=c-ab ab= indirect effect of X on Y

The above shows the standard mediation model. Perfect mediation occurs when the effect of X on Y decreases to 0 with M in the model. Partial mediation occurs when the effect of X on Y decreases by a nontrivial amount (the actual amount is up for debate) with M in the model.

2.1 Example Mediation Data

Set an appropriate working directory and generate the following data set.

In this example we’ll say we are interested in whether the number of hours since dawn (X) affect the subjective ratings of wakefulness (Y) 100 graduate students through the consumption of coffee (M).

Note that we are intentionally creating a mediation effect here (because statistics is always more fun if we have something to find) and we do so below by creating M so that it is related to X and Y so that it is related to M. This creates the causal chain for our analysis to parse.

2.2 Method 1: Baron & Kenny

This is the original 4-step method used to describe a mediation effect. Steps 1 and 2 use basic linear regression while steps 3 and 4 use multiple regression. For help with regression, see Chapter 10.

The Steps: 1. Estimate the relationship between X on Y (hours since dawn on degree of wakefulness) -Path “c” must be significantly different from 0; must have a total effect between the IV & DV

Estimate the relationship between X on M (hours since dawn on coffee consumption) -Path “a” must be significantly different from 0; IV and mediator must be related.

Estimate the relationship between M on Y controlling for X (coffee consumption on wakefulness, controlling for hours since dawn) -Path “b” must be significantly different from 0; mediator and DV must be related. -The effect of X on Y decreases with the inclusion of M in the model

Estimate the relationship between Y on X controlling for M (wakefulness on hours since dawn, controlling for coffee consumption) -Should be non-significant and nearly 0.

2.3 Interpreting Barron & Kenny Results

Here we find that our total effect model shows a significant positive relationship between hours since dawn (X) and wakefulness (Y). Our Path A model shows that hours since down (X) is also positively related to coffee consumption (M). Our Path B model then shows that coffee consumption (M) positively predicts wakefulness (Y) when controlling for hours since dawn (X). Finally, wakefulness (Y) does not predict hours since dawn (X) when controlling for coffee consumption (M).

Since the relationship between hours since dawn and wakefulness is no longer significant when controlling for coffee consumption, this suggests that coffee consumption does in fact mediate this relationship. However, this method alone does not allow for a formal test of the indirect effect so we don’t know if the change in this relationship is truly meaningful.

There are two primary methods for formally testing the significance of the indirect test: the Sobel test & bootstrapping (covered under the mediatation method).

The Sobel Test uses a specialized t-test to determine if there is a significant reduction in the effect of X on Y when M is present. Using the sobel function of the multilevel package will show provide you with three of the basic models we ran before (Mod1 = Total Effect; Mod2 = Path B; and Mod3 = Path A) as well as an estimate of the indirect effect, the standard error of that effect, and the z-value for that effect. You can either use this value to calculate your p-value or run the mediation.test function from the bda package to receive a p-value for this estimate.

In this case, we can now confirm that the relationship between hours since dawn and feelings of wakefulness are significantly mediated by the consumption of coffee (z’ = 3.84, p < .001).

However, the Sobel Test is largely considered an outdated method since it assumes that the indirect effect (ab) is normally distributed and tends to only have adequate power with large sample sizes. Thus, again, it is highly recommended to use the mediation bootstrapping method instead.

2.4 Method 2: The Mediation Pacakge Method

This package uses the more recent bootstrapping method of Preacher & Hayes (2004) to address the power limitations of the Sobel Test. This method computes the point estimate of the indirect effect (ab) over a large number of random sample (typically 1000) so it does not assume that the data are normally distributed and is especially more suitable for small sample sizes than the Barron & Kenny method.

To run the mediate function, we will again need a model of our IV (hours since dawn), predicting our mediator (coffee consumption) like our Path A model above. We will also need a model of the direct effect of our IV (hours since dawn) on our DV (wakefulness), when controlling for our mediator (coffee consumption). When can then use mediate to repeatedly simulate a comparsion between these models and to test the signifcance of the indirect effect of coffee consumption.

2.5 Interpreting Mediation Results

The mediate function gives us our Average Causal Mediation Effects (ACME), our Average Direct Effects (ADE), our combined indirect and direct effects (Total Effect), and the ratio of these estimates (Prop. Mediated). The ACME here is the indirect effect of M (total effect - direct effect) and thus this value tells us if our mediation effect is significant.

In this case, our fitMed model again shows a signifcant affect of coffee consumption on the relationship between hours since dawn and feelings of wakefulness, (ACME = .28, p < .001) with no direct effect of hours since dawn (ADE = -0.11, p = .27) and significant total effect ( p < .05).