
Module 2 Chapter 3: What is Empirical Literature & Where can it be Found?

In Module 1, you read about the problem of pseudoscience. Here, we revisit the issue in addressing how to locate and assess scientific or empirical literature. In this chapter you will read about:

- distinguishing between what IS and IS NOT empirical literature

- how and where to locate empirical literature for understanding diverse populations, social work problems, and social phenomena.

Probably the most important take-home lesson from this chapter is that one source is not sufficient for being well informed on a topic. It is important to locate multiple sources of information and to critically appraise the points of convergence and divergence in the information acquired from different sources. This is especially true in emerging and poorly understood topics, as well as in answering complex questions.

What Is Empirical Literature

Social workers often need to locate valid, reliable information concerning the dimensions of a population group or subgroup, a social work problem, or social phenomenon. They might also seek information about the way specific problems or resources are distributed among the populations encountered in professional practice. Or, social workers might be interested in finding out about the way that certain people experience an event or phenomenon. Empirical literature resources may provide answers to many of these types of social work questions. In addition, resources containing data regarding social indicators may also prove helpful. Social indicators are the “facts and figures” statistics that describe the social, economic, and psychological factors that have an impact on the well-being of a community or other population group. The United Nations (UN) and the World Health Organization (WHO) are examples of organizations that monitor social indicators at a global level: dimensions of population trends (size, composition, growth/loss), health status (physical, mental, behavioral, life expectancy, maternal and infant mortality, fertility/child-bearing, and diseases like HIV/AIDS), housing and quality of sanitation (water supply, waste disposal), education and literacy, and work/income/unemployment/economics, for example.

Three characteristics stand out in empirical literature compared to other types of information available on a topic of interest: systematic observation and methodology, objectivity, and transparency/replicability/reproducibility. Let’s look a little more closely at these three features.

Systematic Observation and Methodology. The hallmark of empiricism is “repeated or reinforced observation of the facts or phenomena” (Holosko, 2006, p. 6). In empirical literature, established research methodologies and procedures are systematically applied to answer the questions of interest.

Objectivity. Gathering “facts,” whatever they may be, drives the search for empirical evidence (Holosko, 2006). Authors of empirical literature are expected to report the facts as observed, whether or not these facts support the investigators’ original hypotheses. Research integrity demands that the information be provided in an objective manner, reducing sources of investigator bias to the greatest possible extent.

Transparency and Replicability/Reproducibility. Empirical literature is reported in such a manner that other investigators understand precisely what was done and what was found in a particular research study—to the extent that they could replicate the study to determine whether the findings are reproduced when repeated. The outcomes of an original and replication study may differ, but a reader could easily interpret the methods and procedures leading to each study’s findings.

What is NOT Empirical Literature

By now, it is probably obvious to you that literature based on “evidence” that is not developed in a systematic, objective, transparent manner is not empirical literature. On one hand, non-empirical types of professional literature may have great significance to social workers. For example, social work scholars may produce articles that are clearly identified as describing a new intervention or program without evaluative evidence, critiquing a policy or practice, or offering a tentative, untested theory about a phenomenon. These resources are useful in educating ourselves about possible issues or concerns. But, even if they are informed by evidence, they are not empirical literature. Here is a list of several sources of information that do not meet the standard of being called empirical literature:

- your course instructor’s lectures

- political statements

- advertisements

- newspapers & magazines (journalism)

- television news reports & analyses (journalism)

- many websites, Facebook postings, Twitter tweets, and blog postings

- the introductory literature review in an empirical article

You may be surprised to see the last two included in this list. Like the other sources of information listed, these sources also might lead you to look for evidence. But, they are not themselves sources of evidence. They may summarize existing evidence, but in the process of summarizing (like your instructor’s lectures), information is transformed, modified, reduced, condensed, and otherwise manipulated in such a manner that you may not see the entire, objective story. These are called secondary sources, as opposed to the original, primary source of evidence. In relying solely on secondary sources, you sacrifice your own critical appraisal and thinking about the original work—you are “buying” someone else’s interpretation and opinion about the original work, rather than developing your own interpretation and opinion. What if they got it wrong? How would you know if you did not examine the primary source for yourself? Consider the following as an example of “getting it wrong” being perpetuated.

Example: Bullying and School Shootings. One result of the heavily publicized April 1999 school shooting incident at Columbine High School (Colorado) was a heavy emphasis placed on bullying as a causal factor in these incidents (Mears, Moon, & Thielo, 2017), “creating a powerful master narrative about school shootings” (Raitanen, Sandberg, & Oksanen, 2017, p. 3). Naturally, with an identified cause, a great deal of effort was devoted to anti-bullying campaigns and interventions for enhancing resilience among youth who experience bullying. However important these strategies might be for promoting positive mental health, preventing poor mental health, and possibly preventing suicide among school-aged children and youth, it is a mistaken belief that this can prevent school shootings (Mears, Moon, & Thielo, 2017). Many times the accounts of the perpetrators having been bullied come from potentially inaccurate third-party accounts, rather than the perpetrators themselves; bullying was not involved in all instances of school shooting; a perpetrator’s perception of being bullied/persecuted is not necessarily accurate; many who experience severe bullying do not perpetrate these incidents; bullies are the least targeted shooting victims; perpetrators of the shooting incidents were often bullying others; and, bullying is only one of many important factors associated with perpetrating such an incident (Ioannou, Hammond, & Simpson, 2015; Mears, Moon, & Thielo, 2017; Newman & Fox, 2009; Raitanen, Sandberg, & Oksanen, 2017). While mass media reports deliver bullying as a means of explaining the inexplicable, the reality is not so simple: “The connection between bullying and school shootings is elusive” (Langman, 2014), and “the relationship between bullying and school shooting is, at best, tenuous” (Mears, Moon, & Thielo, 2017, p. 940).
The point is, when a narrative becomes this publicly accepted, it is difficult to sort out truth and reality without going back to original sources of information and evidence.

What May or May Not Be Empirical Literature: Literature Reviews

Investigators typically engage in a review of existing literature as they develop their own research studies. The review informs them about where knowledge gaps exist, methods previously employed by other scholars, limitations of prior work, and previous scholars’ recommendations for directing future research. These reviews may appear as a published article, without new study data being reported (see Fields, Anderson, & Dabelko-Schoeny, 2014 for example). Or, the literature review may appear in the introduction to their own empirical study report. These literature reviews are not considered to be empirical evidence sources themselves, although they may be based on empirical evidence sources. One reason is that the authors of a literature review may or may not have engaged in a systematic search process, identifying a full, rich, multi-sided pool of evidence reports.

There is, however, a type of review that applies systematic methods and is, therefore, considered to be more strongly rooted in evidence: the systematic review.

Systematic review of literature. A systematic review is a type of literature report where established methods have been systematically applied, objectively, in locating and synthesizing a body of literature. The systematic review report is characterized by a great deal of transparency about the methods used and the decisions made in the review process, and is replicable. Thus, it meets the criteria for empirical literature: systematic observation and methodology, objectivity, and transparency/reproducibility. We will work a great deal more with systematic reviews in the second course, SWK 3402, since they are important tools for understanding interventions. They are somewhat less common, but not unheard of, in helping us understand diverse populations, social work problems, and social phenomena.

Locating Empirical Evidence

Social workers have available a wide array of tools and resources for locating empirical evidence in the literature. These can be organized into four general categories.

Journal Articles. A number of professional journals publish articles where investigators report on the results of their empirical studies. However, it is important to know how to distinguish between empirical and non-empirical manuscripts in these journals. A key indicator, though not the only one, involves a peer review process. Many professional journals require that manuscripts undergo a process of peer review before they are accepted for publication. This means that the authors’ work is shared with scholars who provide feedback to the journal editor as to the quality of the submitted manuscript. The editor then makes a decision based on the reviewers’ feedback:

- Accept as is

- Accept with minor revisions

- Request that a revision be resubmitted (no assurance of acceptance)

When a “revise and resubmit” decision is made, the piece will go back through the review process to determine whether it is now acceptable for publication and whether all of the reviewers’ concerns have been adequately addressed. Editors may also reject a manuscript because it is a poor fit for the journal, based on its mission and audience, rather than sending it for review consideration.

Indicators of journal relevance. Journals are not all equally relevant to every type of question being asked of the literature. Journals may overlap to a great extent in terms of the topics they might cover; in other words, a topic might appear in multiple different journals, depending on how the topic was being addressed. For example, articles that might help answer a question about the relationship between community poverty and violence exposure might appear in several different journals, some with a focus on poverty, others with a focus on violence, and still others on community development or public health. Journal titles are sometimes a good starting point but may not give a broad enough picture of what they cover in their contents.

In focusing a literature search, it also helps to review a journal’s mission and target audience. For example, at least four different journals focus specifically on poverty:

- Journal of Children & Poverty

- Journal of Poverty

- Journal of Poverty and Social Justice

- Poverty & Public Policy

Let’s look at an example using the Journal of Poverty and Social Justice. Information about this journal is located on the journal’s webpage: http://policy.bristoluniversitypress.co.uk/journals/journal-of-poverty-and-social-justice . In the section headed “About the Journal” you can see that it is an internationally focused research journal, and that it addresses social justice issues in addition to poverty alone. The research articles are peer-reviewed (there appear to be non-empirical discussions published, as well). These descriptions about a journal are almost always available, sometimes listed as “scope” or “mission.” These descriptions also indicate the sponsorship of the journal. Sponsorship may be institutional (a particular university or agency, such as Smith College Studies in Social Work), a professional organization, such as the Council on Social Work Education (CSWE) or the National Association of Social Workers (NASW), or a publishing company (e.g., Taylor & Francis, Wiley, or Sage).

Indicators of journal caliber. Despite engaging in a peer review process, not all journals are equally rigorous. Some journals have very high rejection rates, meaning that many submitted manuscripts are rejected; others have fairly high acceptance rates, meaning that relatively few manuscripts are rejected. This is not necessarily the best indicator of quality, however, since newer journals may not be sufficiently familiar to authors with high quality manuscripts and some journals are very specific in terms of what they publish. Another index that is sometimes used is the journal’s impact factor. Impact factor is a number indicating how often, on average, articles published in the journal are cited in the reference lists of other journal articles. The statistic is calculated as the number of times articles published in a particular period were cited, divided by the number of articles published in that period (the number that could be cited). For example, the impact factor for the Journal of Poverty and Social Justice in our list above was 0.70 in 2017, and for the Journal of Poverty was 0.30. These are relatively low figures compared to a journal like the New England Journal of Medicine with an impact factor of 59.56! This means that articles published in that journal were, on average, cited more than 59 times in the next year or two.
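The arithmetic behind an impact factor can be sketched in a few lines of Python. This is only an illustration of the ratio described above; the function name and the citation and article counts are invented for the example and are not figures for any real journal.

```python
def impact_factor(citations: int, citable_articles: int) -> float:
    """Average citations per article: citations divided by articles that could be cited."""
    if citable_articles <= 0:
        raise ValueError("citable_articles must be a positive number")
    return citations / citable_articles


# Hypothetical counts, invented for illustration only:
# 200 articles published in the counting window, cited 140 times in total.
example = impact_factor(citations=140, citable_articles=200)
print(f"{example:.2f}")  # prints 0.70
```

Seen this way, an impact factor is simply an average, which is why a small number of very highly cited articles can raise a journal's figure considerably.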

Impact factors are not necessarily the best indicator of caliber, however, since many strong journals are geared toward practitioners rather than scholars, so they are less likely to be cited by other scholars but may have a large impact on a large readership. This may be the case for a journal like the one titled Social Work, the official journal of the National Association of Social Workers. It is distributed free to all members: over 120,000 practitioners, educators, and students of social work world-wide. The journal has a recent impact factor of 0.790. The journals with social work relevant content have impact factors in the range of 1.0 to 3.0 according to Scimago Journal & Country Rank (SJR), particularly when they are interdisciplinary journals (for example, Child Development, Journal of Marriage and Family, Child Abuse and Neglect, Child Maltreatment, Social Service Review, and British Journal of Social Work). Once upon a time, a reader could locate different indexes comparing the “quality” of social work-related journals. However, the concept of “quality” is difficult to systematically define. These indexes have mostly been replaced by impact ratings, which are not necessarily the best, most robust indicators on which to rely in assessing journal quality. For example, new journals addressing cutting edge topics have not been around long enough to have been evaluated using this particular tool, and it takes a few years for articles to begin to be cited in other, later publications.

Beware of pseudo-, illegitimate, misleading, deceptive, and suspicious journals. Another side effect of living in the Age of Information is that almost anyone can circulate almost anything and call it whatever they wish. This goes for “journal” publications, as well. With the advent of open-access publishing in recent years (electronic resources available without subscription), we have seen an explosion of what are called predatory or junk journals. These are publications calling themselves journals, often with titles very similar to legitimate publications and often with fake editorial boards. These “publications” lack the integrity of legitimate journals. This caution is reminiscent of the discussions earlier in the course about pseudoscience and “snake oil” sales. The predatory nature of many apparent information dissemination outlets has to do with how scientists and scholars may be fooled into submitting their work, often paying to have their work peer-reviewed and published. There exists a “thriving black-market economy of publishing scams,” and at least two “journal blacklists” exist to help identify and avoid these scam journals (Anderson, 2017).

This issue is important to information consumers, because it creates a challenge in terms of identifying legitimate sources and publications. The challenge is particularly important to address when information from on-line, open-access journals is being considered. Open-access is not necessarily a poor choice—legitimate scientists may pay sizeable fees to legitimate publishers to make their work freely available and accessible as open-access resources. On-line access is also not necessarily a poor choice—legitimate publishers often make articles available on-line to provide timely access to the content, especially when publishing the article in hard copy will be delayed by months or even a year or more. On the other hand, stating that a journal engages in a peer-review process is no guarantee of quality—this claim may or may not be truthful. Pseudo- and junk journals may engage in some quality control practices, but may lack attention to important quality control processes, such as managing conflict of interest, reviewing content for objectivity or quality of the research conducted, or otherwise failing to adhere to industry standards (Laine & Winker, 2017).

One resource designed to assist with the process of deciphering legitimacy is the Directory of Open Access Journals (DOAJ). The DOAJ is not a comprehensive listing of all possible legitimate open-access journals, and does not guarantee quality, but it does help identify legitimate sources of information that are openly accessible and meet basic legitimacy criteria. It also is about open-access journals, not the many journals published in hard copy.

An additional caution: Search for article corrections. Despite all of the careful manuscript review and editing, sometimes an error appears in a published article. Most journals have a practice of publishing corrections in future issues. When you locate an article, it is helpful to also search for updates. Here is an example where data presented in an article’s original tables were erroneous, and a correction appeared in a later issue.

- Marchant, A., Hawton, K., Stewart, A., Montgomery, P., Singaravelu, V., Lloyd, K., Purdy, N., Daine, K., & John, A. (2017). A systematic review of the relationship between internet use, self-harm and suicidal behaviour in young people: The good, the bad and the unknown. PLoS One, 12(8): e0181722. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5558917/

- Marchant, A., Hawton, K., Stewart, A., Montgomery, P., Singaravelu, V., Lloyd, K., Purdy, N., Daine, K., & John, A. (2018). Correction: A systematic review of the relationship between internet use, self-harm and suicidal behaviour in young people: The good, the bad and the unknown. PLoS One, 13(3): e0193937. http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0193937

Search Tools. In this age of information, it is all too easy to find items; the problem lies in sifting, sorting, and managing the vast numbers of items that can be found. For example, a simple Google® search for the topic “community poverty and violence” resulted in about 15,600,000 results! As a means of simplifying the process of searching for journal articles on a specific topic, a variety of helpful tools have emerged. One type of search tool has previously applied a filtering process for you: abstracting and indexing databases. These resources provide the user with the results of a search in which records have already passed through one or more filters. For example, PsycINFO is managed by the American Psychological Association and is devoted to peer-reviewed literature in behavioral science. It contains almost 4.5 million records and is growing every month. However, it may not be available to users who are not affiliated with a university library. Conducting a basic search for our topic of “community poverty and violence” in PsycINFO returned 1,119 articles. Still a large number, but far more manageable. Additional filters can be applied, such as limiting the range in publication dates, selecting only peer reviewed items, limiting the language of the published piece (English only, for example), and specifying types of documents (either chapters, dissertations, or journal articles only, for example). Adding the filters for English, peer-reviewed journal articles published between 2010 and 2017 resulted in 346 documents being identified.
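For readers who find it helpful to see the logic spelled out, the way stacked filters narrow a result list can be sketched in Python. This is not how PsycINFO or any other database is actually implemented; the `Record` fields, the `apply_filters` function, and the sample records are all hypothetical and invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One hypothetical database record; the field names are assumptions."""
    title: str
    year: int
    language: str
    peer_reviewed: bool
    doc_type: str


def apply_filters(records, language, doc_type, year_from, year_to,
                  peer_reviewed_only=True):
    """Keep only the records that pass every filter at once."""
    return [
        r for r in records
        if r.language == language
        and r.doc_type == doc_type
        and year_from <= r.year <= year_to
        and (r.peer_reviewed or not peer_reviewed_only)
    ]


# Invented sample records, for illustration only.
sample = [
    Record("Study A", 2012, "English", True, "journal article"),
    Record("Study B", 2008, "English", True, "journal article"),
    Record("Study C", 2015, "Spanish", True, "journal article"),
    Record("Study D", 2016, "English", False, "dissertation"),
]

kept = apply_filters(sample, "English", "journal article", 2010, 2017)
print([r.title for r in kept])  # prints ['Study A']
```

Each added filter can only shrink the list, which is why the search described above dropped from 1,119 articles to 346 once language, peer review, document type, and date range were all specified.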

Just as was the case with journals, not all abstracting and indexing databases are equivalent. There may be overlap between them, but none is guaranteed to identify all relevant pieces of literature. Here are some examples to consider, depending on the nature of the questions asked of the literature:

- Academic Search Complete—multidisciplinary index of 9,300 peer-reviewed journals

- AgeLine—multidisciplinary index of aging-related content for over 600 journals

- Campbell Collaboration—systematic reviews in education, crime and justice, social welfare, international development

- Google Scholar—broad search tool for scholarly literature across many disciplines

- MEDLINE/PubMed—National Library of Medicine, access to over 15 million citations

- Oxford Bibliographies—annotated bibliographies, each is discipline specific (e.g., psychology, childhood studies, criminology, social work, sociology)

- PsycINFO/PsycLIT—international literature on material relevant to psychology and related disciplines

- SocINDEX—publications in sociology

- Social Sciences Abstracts—multiple disciplines

- Social Work Abstracts—many areas of social work are covered

- Web of Science—a “meta” search tool that searches other search tools, multiple disciplines

Placing our search for information about “community violence and poverty” into the Social Work Abstracts tool with no additional filters resulted in a manageable 54-item list. Finally, abstracting and indexing databases are another way to determine journal legitimacy: if a journal is indexed in one of these systems, it is likely a legitimate journal. However, the converse is not necessarily true: the fact that a journal is not indexed does not mean it is an illegitimate or pseudo-journal.

Government Sources. A great deal of information is gathered, analyzed, and disseminated by various governmental branches at the international, national, state, regional, county, and city level. Searching websites that end in .gov is one way to identify this type of information, often presented in articles, news briefs, and statistical reports. These government sources gather information in two ways: they fund external investigations through grants and contracts and they conduct research internally, through their own investigators. Here are some examples to consider, depending on the nature of the topic for which information is sought:

- Agency for Healthcare Research and Quality (AHRQ) at https://www.ahrq.gov/

- Bureau of Justice Statistics (BJS) at https://www.bjs.gov/

- Census Bureau at https://www.census.gov

- Morbidity and Mortality Weekly Report of the CDC (MMWR-CDC) at https://www.cdc.gov/mmwr/index.html

- Child Welfare Information Gateway at https://www.childwelfare.gov

- Children’s Bureau/Administration for Children & Families at https://www.acf.hhs.gov

- Forum on Child and Family Statistics at https://www.childstats.gov

- National Institutes of Health (NIH) at https://www.nih.gov , including (not limited to):

  - National Institute on Aging (NIA) at https://www.nia.nih.gov

  - National Institute on Alcohol Abuse and Alcoholism (NIAAA) at https://www.niaaa.nih.gov

  - National Institute of Child Health and Human Development (NICHD) at https://www.nichd.nih.gov

  - National Institute on Drug Abuse (NIDA) at https://www.nida.nih.gov

  - National Institute of Environmental Health Sciences at https://www.niehs.nih.gov

  - National Institute of Mental Health (NIMH) at https://www.nimh.nih.gov

  - National Institute on Minority Health and Health Disparities at https://www.nimhd.nih.gov

- National Institute of Justice (NIJ) at https://www.nij.gov

- Substance Abuse and Mental Health Services Administration (SAMHSA) at https://www.samhsa.gov/

- United States Agency for International Development at https://usaid.gov

Each state and many counties or cities have similar data sources and analysis reports available, such as Ohio Department of Health at https://www.odh.ohio.gov/healthstats/dataandstats.aspx and Franklin County at https://statisticalatlas.com/county/Ohio/Franklin-County/Overview . Data are available from international/global resources (e.g., United Nations and World Health Organization), as well.

Other Sources. The Health and Medicine Division (HMD) of the National Academies, previously the Institute of Medicine (IOM), is a nonprofit institution that aims to provide government and private sector policy and other decision makers with objective analysis and advice for making informed health decisions. For example, in 2018 they produced reports on topics in substance use and mental health concerning the intersection of opioid use disorder and infectious disease, the legal implications of emerging neurotechnologies, and a global agenda concerning the identification and prevention of violence (see http://www.nationalacademies.org/hmd/Global/Topics/Substance-Abuse-Mental-Health.aspx ). The exciting aspect of this resource is that it addresses many topics that are current concerns because they are hoping to help inform emerging policy. The caution to consider with this resource is that the evidence is often still emerging, as well.

Numerous “think tank” organizations exist, each with a specific mission. For example, the RAND Corporation is a nonprofit organization that has offered research and analysis to address global issues since 1948. The institution’s mission is to help improve policy and decision making “to help individuals, families, and communities throughout the world be safer and more secure, healthier and more prosperous,” addressing issues of energy, education, health care, justice, the environment, international affairs, and national security (https://www.rand.org/about/history.html). And, for example, the Robert Wood Johnson Foundation is a philanthropic organization supporting research and research dissemination concerning health issues facing the United States. The foundation works to build a culture of health across systems of care (not only medical care) and communities (https://www.rwjf.org).

While many of these have a great deal of helpful evidence to share, they also may have a strong political bias. Objectivity is often lacking in what information these organizations provide: they provide evidence to support certain points of view. That is their purpose—to provide ideas on specific problems, many of which have a political component. Think tanks “are constantly researching solutions to a variety of the world’s problems, and arguing, advocating, and lobbying for policy changes at local, state, and federal levels” (quoted from https://thebestschools.org/features/most-influential-think-tanks/ ). Helpful information about what this one source identified as the 50 most influential U.S. think tanks includes identifying each think tank’s political orientation. For example, The Heritage Foundation is identified as conservative, whereas Human Rights Watch is identified as liberal.

While not the same as think tanks, many mission-driven organizations also sponsor or report on research. For example, the National Association for Children of Alcoholics (NACOA) in the United States is a registered nonprofit organization. Its mission, along with other partnering organizations, private-sector groups, and federal agencies, is to promote policy and program development in research, prevention and treatment to provide information to, for, and about children of alcoholics (of all ages). Based on this mission, the organization supports knowledge development and information gathering on the topic and disseminates information that serves the needs of this population. While this is a worthwhile mission, there is no guarantee that the information meets the criteria for evidence with which we have been working. Evidence reported by think tank and mission-driven sources must be utilized with a great deal of caution and critical analysis!

In many instances an empirical report has not appeared in the published literature, but in the form of a technical or final report to the agency or program providing the funding for the research that was conducted. One such example is presented by a team of investigators funded by the National Institute of Justice to evaluate a program for training professionals to collect strong forensic evidence in instances of sexual assault (Patterson, Resko, Pierce-Weeks, & Campbell, 2014): https://www.ncjrs.gov/pdffiles1/nij/grants/247081.pdf . Investigators may serve in the capacity of consultant to agencies, programs, or institutions, and provide empirical evidence to inform activities and planning. One such example is presented by Maguire-Jack (2014) as a report to a state’s child maltreatment prevention board: https://preventionboard.wi.gov/Documents/InvestmentInPreventionPrograming_Final.pdf .

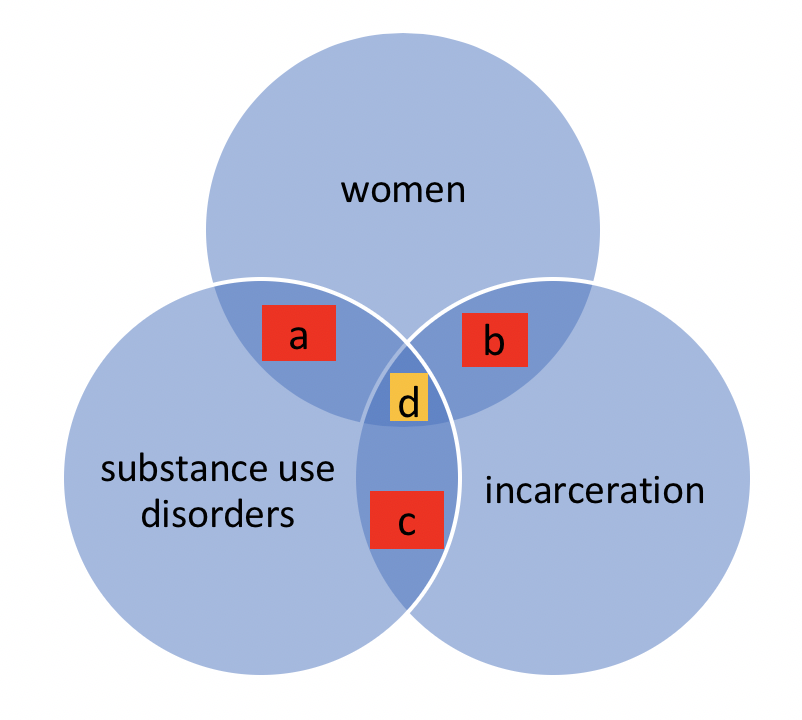

When Direct Answers to Questions Cannot Be Found. Sometimes social workers are interested in finding answers to complex questions or questions related to an emerging, not-yet-understood topic. This does not mean giving up on empirical literature. Instead, it requires a bit of creativity in approaching the literature. A Venn diagram might help explain this process. Consider a scenario where a social worker wishes to locate literature to answer a question concerning issues of intersectionality. Intersectionality is a social justice term applied to situations where multiple categorizations or classifications come together to create overlapping, interconnected, or multiplied disadvantage. For example, women with a substance use disorder and who have been incarcerated face a triple threat in terms of successful treatment for a substance use disorder: intersectionality exists between being a woman, having a substance use disorder, and having been in jail or prison. After searching the literature, little or no empirical evidence might have been located on this specific triple-threat topic. Instead, the social worker will need to seek literature on each of the threats individually, and possibly will find literature on pairs of topics (see Figure 3-1). There exists some literature about women’s outcomes for treatment of a substance use disorder (a), some literature about women during and following incarceration (b), and some literature about substance use disorders and incarceration (c). Despite not having a direct line on the center of the intersecting spheres of literature (d), the social worker can develop at least a partial picture based on the overlapping literatures.

Figure 3-1. Venn diagram of intersecting literature sets.
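The Venn diagram logic can also be expressed with set operations. The following is a minimal sketch, assuming a small catalog of search results in which each study has been tagged with the topics it addresses. The study identifiers, topic labels, and the helper `studies_covering` are hypothetical and serve only to illustrate the idea of falling back on pairwise overlaps when the center of the diagram is empty.

```python
from itertools import combinations

# Hypothetical search results: study ID -> topics it addresses (not real citations).
studies = {
    "study_01": {"women", "substance_use"},
    "study_02": {"women", "incarceration"},
    "study_03": {"substance_use", "incarceration"},
    "study_04": {"women"},
    "study_05": {"substance_use", "incarceration"},
}

TOPICS = ("women", "substance_use", "incarceration")


def studies_covering(required, catalog):
    """Return the sorted IDs of studies that address every topic in `required`."""
    return sorted(sid for sid, topics in catalog.items() if set(required) <= topics)


# Center of the Venn diagram (d): studies covering all three topics at once.
center = studies_covering(TOPICS, studies)

# Pairwise overlaps (a), (b), (c): the fallback when the center is empty.
pairwise = {pair: studies_covering(pair, studies) for pair in combinations(TOPICS, 2)}

print("All three topics:", center)
for pair, ids in pairwise.items():
    print(" + ".join(pair), "->", ids)
```

In this illustrative catalog no study covers all three topics, yet each pair of topics is covered by at least one study, which mirrors the partial picture described above.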


Social Work 3401 Coursebook Copyright © by Dr. Audrey Begun is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License , except where otherwise noted.


- Open access

- Published: 09 August 2022

A scoping review of frameworks in empirical studies and a review of dissemination frameworks

- Ana A. Baumann ORCID: orcid.org/0000-0002-4523-0147 1 ,

- Cole Hooley 2 ,

- Emily Kryzer 3 ,

- Alexandra B. Morshed 4 ,

- Cassidy A. Gutner 5 , 6 ,

- Sara Malone 7 ,

- Callie Walsh-Bailey 7 ,

- Meagan Pilar 8 ,

- Brittney Sandler 9 ,

- Rachel G. Tabak 7 &

- Stephanie Mazzucca 7

Implementation Science volume 17, Article number: 53 (2022)


The field of dissemination and implementation (D&I) research has grown immensely in recent years. However, the field of dissemination research has not coalesced to the same degree as the field of implementation research. To advance the field of dissemination research, this review aimed to (1) identify the extent to which dissemination frameworks are used in dissemination empirical studies, (2) examine how scholars define dissemination, and (3) identify key constructs from dissemination frameworks.

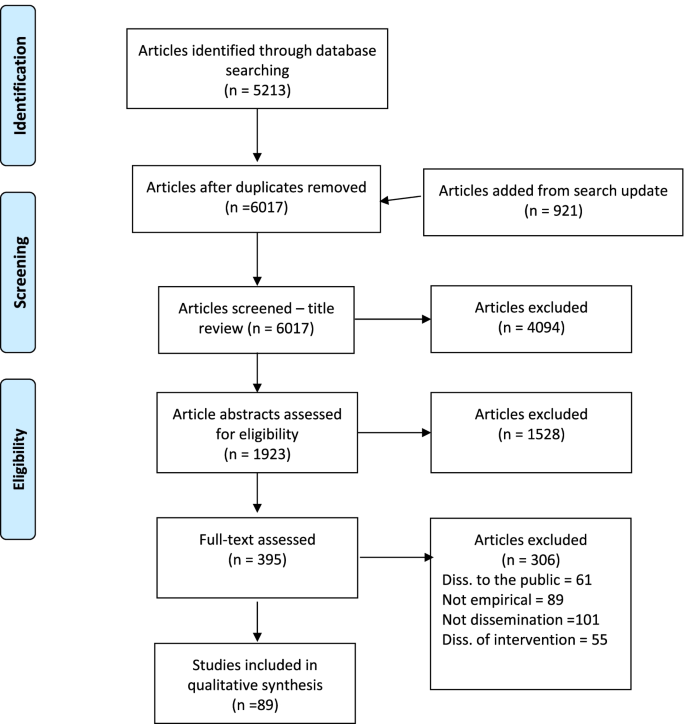

To achieve aims 1 and 2, we conducted a scoping review of dissemination studies published in D&I science journals. The search strategy included manuscripts published from 1985 to 2020. Articles were included if they were empirical quantitative or mixed methods studies about the dissemination of information to a professional audience. Studies were excluded if they were systematic reviews, commentaries or conceptual papers, scale-up or scale-out studies, qualitative or case studies, or descriptions of programs. To achieve aim 1, we compiled the frameworks identified in the empirical studies. To achieve aim 2, we compiled the definitions of dissemination from frameworks identified in aim 1 and from dissemination frameworks identified in a 2012 review (Tabak RG, Am J Prev Med 43:337-350, 2012). To achieve aim 3, we compiled the constructs and their definitions from the frameworks.

Out of 6017 studies, 89 studies were included for full-text extraction. Of these, 45 (51%) used a framework to guide the study. Across the 45 studies, 34 distinct frameworks were identified, out of which 13 (38%) defined dissemination. There is a lack of consensus on the definition of dissemination. Altogether, we identified 48 constructs, divided into 4 categories: process, determinants, strategies, and outcomes. Constructs in the frameworks are not well defined.

Implication for D&I research

This study provides a critical step in the dissemination research literature by offering suggestions on how to define dissemination research and by cataloging and defining dissemination constructs. Strengthening these definitions and distinctions between D&I research could enhance scientific reproducibility and advance the field of dissemination research.


Contributions to the literature

The field of dissemination research has not coalesced to the same degree as the field of implementation research. Clearly defining dissemination and identifying dissemination constructs will help enhance dissemination research.

In a review of 34 frameworks, we found a lack of consensus in the definition of dissemination and 48 constructs identified in the frameworks.

We provide a suggested definition of dissemination and a catalog of the constructs to advance the field of dissemination research.

The field of dissemination and implementation (D&I) research has grown extensively in the past years. While scholars from the field of implementation research have made substantial advances, the field of dissemination research has not coalesced to the same degree, limiting the ability to conduct rigorous, reproducible dissemination research. Dissemination research has broadly focused on examining how evidence-based information gets packaged into practices, policies, and programs. This information delivery is often targeted at providers in public health and clinical settings and policymakers to improve public health decision-making. Here, we use provider to refer to a person or group that provides something—in this case, information. The chasm between how evidence-based information is disseminated and how this information is used by providers and policymakers is well-documented [ 1 ] and further evidenced by the ongoing COVID-19 pandemic [ 2 , 3 ].

The definition of dissemination research has been modified over the years and is not consistent across various sources. Dissemination research could be advanced by further development of existing conceptual and theoretical work. In a previous review [ 4 ], 11 D&I science frameworks were categorized as “dissemination only” frameworks (i.e., the explicit focus of the framework was on the spread of information about evidence-based interventions to a target audience). Frameworks are important because they provide a systematic way to develop, plan, manage and evaluate a study [ 5 , 6 ]. The extent to which dissemination scholars are using frameworks to inform their studies, and which frameworks are used, is unclear.

Building on previous compilations of dissemination frameworks [ 7 ], this paper intends to advance the knowledge of dissemination research by examining dissemination frameworks reported in the empirical literature, cataloging the constructs across different frameworks, and providing definitions for these constructs. A scoping review is ideal at this stage of the dissemination research literature because it helps map the existing frameworks from a body of emerging literature and identifies gaps in the field [ 8 ].

Specifically, this study has three aims: (1) to conduct a scoping review of the empirical dissemination literature and identify the dissemination frameworks informing those studies, (2) to examine how scholars define dissemination, and (3) to catalog and define the constructs from the dissemination frameworks identified in aim 1 and the frameworks categorized as dissemination only by Tabak et al. [ 4 ].

The methods section is divided into the three aims of this study. First, we report the methods for our scoping review to identify the frameworks used in empirical dissemination studies. Second, we report on how we identified the definitions of dissemination. Third, we report the methods for abstracting the dissemination constructs from the frameworks identified in the empirical literature (aim 1) and from the frameworks categorized as “dissemination only” by Tabak et al. [ 4 ]. Tabak et al. [ 4 ] categorized models “on a continuum from dissemination to implementation” and acknowledge that “these divisions are intended to assist the reader in model selection, rather than to provide actual classifications for models.” For the current review, we selected only those categorized as dissemination-only because we aimed to examine whether there were any distinct components between the dissemination and implementation frameworks by coding the dissemination-only frameworks.

Scoping review of the literature

We conducted a scoping review to identify dissemination frameworks used in the empirical dissemination literature. A scoping review is appropriate as the goal of this work is to map the current state of the literature, not to evaluate evidence or provide specific recommendations as is the case with a systematic review [ 8 ]. We followed the method developed by Arksey and O’Malley [ 9 ] and later modified by Levac and colleagues [ 10 ]. In doing so, we first identified the research questions (i.e., “Which dissemination frameworks are used in the literature?” and “How are the dissemination constructs defined?”), identified relevant studies (see below), and charted the data to present a summary of our results.

We iteratively created a search strategy in Scopus with terms relevant to dissemination. We ran the search in 2017 and again in December 2020, using the following terms: TITLE-ABS-KEY (dissem* OR (knowledge AND trans*) OR diffuse* OR spread*) in the 20 most relevant journals for the D&I science field, identified by Norton et al. [ 11 ]. We ran an identical search at a second time point for several logistical reasons. This review was an unfunded project conducted by faculty and students who experienced numerous significant life transitions during the project period. We anticipated that the original search would be out of date by the time of submission for publication and thus wanted to provide the most up-to-date literature feasible given the time needed to complete the review steps. This approach is appropriate for systematic and scoping reviews [ 12 ].
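For readers who want to reproduce a search of this kind, the sketch below assembles a Scopus advanced-search string from the term block quoted above. Only the TITLE-ABS-KEY term block comes from the text. The use of the `SRCTITLE` and `PUBYEAR` field codes to express the journal and date restrictions is an assumption about how such limits might be written, since the authors do not report the full query, and the journal names are placeholders rather than the journals identified by Norton et al.

```python
# Term block quoted from the text.
TERMS = "dissem* OR (knowledge AND trans*) OR diffuse* OR spread*"

# Placeholder journal names (hypothetical); the review used 20 D&I journals
# that are not listed in this section.
PLACEHOLDER_JOURNALS = ["Journal A", "Journal B"]


def build_query(terms, journals, first_year):
    """Assemble an advanced-search string limited by source title and year.

    Assumption: the restrictions are expressed with SRCTITLE and PUBYEAR;
    the original query may have applied these limits differently.
    """
    journal_clause = " OR ".join(f'SRCTITLE("{name}")' for name in journals)
    return (
        f"TITLE-ABS-KEY({terms}) "
        f"AND PUBYEAR > {first_year - 1} "
        f"AND ({journal_clause})"
    )


query = build_query(TERMS, PLACEHOLDER_JOURNALS, 1985)
print(query)
```

Keeping the term block, the date limit, and the journal list as separate inputs makes it easier to rerun the identical search at a later time point, as the authors did.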

We included studies if they were (a) quantitative or mixed methods empirical studies, (b) about the dissemination of information (e.g., guidelines) to targeted professional audiences, and (c) published since 1985. Articles were excluded if they were (a) systematic reviews, commentaries, or other non-empirical articles; (b) qualitative studies; (c) scale-up studies (i.e., expanding a program into additional delivery settings); (d) case studies or descriptions of programs; and/or (e) about the dissemination of information to lay consumer audiences or the general public. Some of the exclusion criteria, specifically around distinguishing studies that were dissemination studies from scale-up or health communication studies, were refined as we reviewed the paper abstracts. In the “Definition of dissemination” section, we explain our rationale and process to distinguish these types of studies.

The screening procedures were piloted among all coders with a random sample of articles. AB, SaM, CH, CG, EK, and CWB screened titles for inclusion/exclusion independently, then met to ensure a shared understanding of the criteria and to generate consensus. The same coders then reviewed titles based on the above inclusion/exclusion criteria. Any unclear records were retained for abstract review. Consistent with the previously utilized methodology, the abstract review was conducted sequentially to the title review [ 13 , 14 ]. This approach can improve efficiency while maintaining accuracy [ 15 ]. In this round of review, abstracts were single-screened for inclusion/exclusion. Then, 26% of the articles were independently co-screened by pairs of coders; coding pairs met to generate consensus on disagreements.

Articles that passed to full-text review were independently screened by two coders (AB, CH, EK, and CWB). Coders met to reach a consensus and a third reviewer was consulted if the pair could not reach an agreement. From included records, coders extracted bibliometric information about the article (authors, journal, and year of publication) and the name of the framework used in the study (if a framework was used). Coders met regularly to discuss any discrepancies in coding and to generate consensus; final decisions were made by a third reviewer if necessary.

Review of definitions of dissemination

First, we compiled the list of frameworks identified in the empirical studies. Because some frameworks categorized as dissemination-only by the review of frameworks in Tabak et al. [ 4 ] were not present in our sample, we added those to our list of frameworks to review. From the articles describing these frameworks, we extracted dissemination definitions, constructs, and construct definitions. AB, SM, AM, and MP independently abstracted and compared the constructs’ definitions.

Review of dissemination constructs

Once constructs were identified, the frequency of the constructs was counted, and definitions were abstracted. We then organized the constructs into four categories: dissemination processes, determinants, strategies, and outcomes. These categories were organized based on themes by AB and reviewed by all authors. We presented different versions of these categories to groups of stakeholders along our process, including posters at the 2019 and 2021 Conferences on the Science of Dissemination and Implementation in Health, the Washington University Network for Dissemination and Implementation Researchers (WUNDIR), and our network of D&I research peers. During these presentations and among our internal authorship group, we received feedback that the categorization of the constructs was helpful.

We defined the constructs in the dissemination process category as those that relate to processes, stages, or events by which dissemination happens. The dissemination determinants category encompasses constructs that may facilitate or obstruct the dissemination process (i.e., barriers or facilitators). The dissemination strategies constructs are those that describe the approaches or actions of a dissemination process. Finally, dissemination outcomes are the identified dissemination outcomes in the frameworks (distinct from health service, clinical, or population health outcomes). These categories are subjective and defined by the study team. The tables in Additional file 1 include our suggested labels and definitions for the constructs within these four categories, the definitions as provided by the articles describing the frameworks, and the total frequency of each construct from the frameworks reviewed.

The PRISMA Extension for Scoping Reviews (PRISMA-ScR) flowchart is shown in Fig. 1 . The combined searches yielded 6017 unique articles. Of those, 5622 were excluded during the title and abstract screening. Of the 395 full-text articles, we retained 89 in our final sample.

Fig. 1 PRISMA chart

Papers were excluded during the full-text review for several reasons. Many papers ( n = 101, 33%) were excluded because they did not meet the coding definitions for dissemination studies. For example, some studies were focused on larger quality improvement initiatives without a clear dissemination component while other studies reported disseminating findings tangentially. Many ( n = 61; 20%) were excluded because they reported a study testing approaches to spread information to the general public or lay audiences instead of to a group of professionals (e.g., disseminating information about HIV perinatal transmission to mothers, not healthcare providers.) Several articles ( n = 55, 18%) were related to the scale up of interventions and not the dissemination of information.
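The screening counts reported above can be checked with a few lines of arithmetic. The sketch below uses only the numbers stated in the text. Treating the number of papers excluded at full-text review as the denominator for the percentages is an inference, although it reproduces the reported values, and the short reason labels are ours.

```python
# Counts reported in the text.
unique_records = 6017
excluded_at_title_abstract = 5622
included_in_final_sample = 89

# Records that moved on to full-text review, and those excluded at that stage.
full_text_reviewed = unique_records - excluded_at_title_abstract
excluded_at_full_text = full_text_reviewed - included_in_final_sample

# Exclusion reasons with counts as stated in the text (labels abbreviated by us).
reasons = {
    "not a dissemination study": 101,
    "lay or public audience": 61,
    "scale-up of interventions": 55,
}


def share(count, total):
    """Percentage of `total`, rounded to the nearest whole number."""
    return round(100 * count / total)


# Assumption: percentages are taken over all papers excluded at full text.
shares = {reason: share(n, excluded_at_full_text) for reason, n in reasons.items()}

print("Full-text articles reviewed:", full_text_reviewed)
print("Excluded at full text:", excluded_at_full_text)
for reason, pct in shares.items():
    print(f"{reason}: {pct}%")
```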

Frameworks identified

Table 1 shows the frameworks used in the included studies. We identified a total of 27 unique frameworks in the empirical studies. Out of the 27 frameworks identified, only three overlapped with the 11 frameworks cataloged as “dissemination only” in the Tabak et al. [ 4 ] review. Two frameworks identified in the empirical studies were cataloged by Tabak et al. [ 4 ] as “D = I,” one was cataloged as “D > I,” and one as “I only.” Additional file 1 : Table S1 shows all the frameworks, with frameworks 1–11 being “D only” from Tabak et al. [ 4 ] and frameworks 12–34 being the ones identified in our empirical sample. Rogers’ diffusion of innovation [ 16 ] was used most frequently (in 10 studies), followed by the Knowledge to Action Framework (in 4 studies) [ 17 ] and RE-AIM (in 3 studies) [ 18 ]. Dobbins’ Framework for the Dissemination and Utilization of Research for Health-Care Policy and Practice [ 19 ], the Interactive Systems Framework and Network Theory [ 20 ], and Kingdon’s Multiple Streams Framework [ 21 ] were each used by two studies. Thirty studies (33%) did not explicitly describe a dissemination framework that informed their work.

Definition of dissemination

Table 2 shows the definition of dissemination from the frameworks. Out of the 34 frameworks, only 13 (38%) defined dissemination. There is wide variability in the depth of the definitions, with some authors defining dissemination as a process of “transferring research to the users” [ 24 ] and others defining it as both a process and an outcome [ 19 , 23 ]. The definitions of dissemination varied among the 13 frameworks that defined dissemination; however, some shared characteristics were identified. In nine of the 13 frameworks, the definition of dissemination included language about the movement or spread of something, whether an idea, innovation, program, or research finding [ 16 , 23 , 24 , 25 , 26 , 27 , 28 , 31 , 32 ]. Seven of the frameworks described dissemination as active, intentional, or planned by those leading a dissemination effort [ 7 , 16 , 23 , 25 , 26 , 27 , 32 ]. Five frameworks specified some type of outcome as a result of dissemination (e.g., the adoption of an innovation or awareness of research results) [ 7 , 19 , 23 , 27 , 29 , 30 ]. Three of the frameworks’ definitions included the role of influential determinants of dissemination [ 19 , 27 , 29 , 30 ]. Only two frameworks highlighted dissemination as a process [ 23 , 25 ].

Definition of dissemination constructs

Below, we describe the results presented in Tables 3 , 4 , 5 , and 6 with constructs grouped by dissemination process, determinants, strategies, and outcomes. The definitions proposed for the constructs were based on a thematic review of the definitions provided in the articles, which can be found in the Additional file 1 : Tables S2-S5.

Table 3 shows the constructs that relate to the dissemination processes , i.e., the steps or processes through which dissemination happens. Seven constructs were categorized as processes: knowledge inquiry, knowledge synthesis, communication, interaction, persuasion, activation, and research transfer. That is, six frameworks suggest that the dissemination process starts with an inquiry of what type of information is needed to close the knowledge gap. Next, there is a process of gathering and synthesizing the information, including examining the context in which the information will be shared. After the information is identified and gathered, there is a process of communication, interaction with the information, and persuasion where the information is shared with the target users, where the users then engage with the information and activate towards action based on the information received. Finally, there is a process of research transfer, where the information sharing “becomes essentially independent of explicit intentional change activity.” [ 33 ]

Table 4 shows the 17 constructs categorized as dissemination determinants , which are constructs that reflect aspects that may facilitate or hinder the dissemination process. Determinants identified included content of the information, context, interpersonal networks, source of knowledge and audience, the medium of dissemination, opinion leaders, compatibility of the information with the setting, type of information, and capacity of the audience to adopt the innovation. Communication, the salience of communication, and users’ perceived attitudes towards the information were the most frequent constructs ( n = 14 each), followed by context ( n = 13), interpersonal networks ( n = 12), sources of knowledge, and audience ( n = 10 each).

Table 5 shows the nine constructs related to dissemination strategies , which are constructs that describe the approaches or actions to promote or support dissemination. Leeman and colleagues [ 34 ] conceptualize dissemination strategies as strategies that provide synthesis, translation, and support of information. The authors refer to dissemination as two broad strategies: developing materials and distributing materials. We identified several strategies related to the synthesis of information (e.g., identify the knowledge), translation of information (e.g., adapt information to context), and other constructs. Monitoring and evaluation were the most frequent constructs ( n = 10), with identify the quality gap and increase audience’s skills next ( n = 6).

Finally, Table 6 shows the dissemination outcomes , which are constructs related to the effects of the dissemination process. Fifteen constructs were categorized here, including awareness and changes in policy, decision and impact, adoption and cost, emotion reactions, knowledge gained, accountability, maintenance, persuasion, reception, confirmation, and fidelity. Knowledge utilization was the most cited construct across frameworks ( n = 11), followed by awareness and change in policy ( n = 8 each).
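As a quick consistency check, the construct counts reported for Tables 3 to 6 can be tallied against the overall total of 48 constructs. The sketch below uses only the counts given in the text. The dictionary keys are shorthand labels, and the rounded percentage shares are computed here for illustration and are not reported by the authors.

```python
# Construct counts per category as reported in the text (Tables 3 to 6).
constructs_per_category = {
    "processes": 7,
    "determinants": 17,
    "strategies": 9,
    "outcomes": 15,
}

total_constructs = sum(constructs_per_category.values())

# Share of all constructs in each category, as whole percentages
# (computed here for illustration; not reported in the source).
category_share = {
    name: round(100 * count / total_constructs)
    for name, count in constructs_per_category.items()
}

print("Total constructs:", total_constructs)
for name, pct in category_share.items():
    print(f"{name}: {constructs_per_category[name]} constructs ({pct}%)")
```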

The goals of this study were threefold. First, we conducted a scoping review of the empirical literature to catalog the dissemination frameworks informing dissemination studies. Second, we compiled the definition of dissemination, and third, we cataloged and defined the constructs from the dissemination frameworks. During our review process, we found that clearly identifying dissemination studies was more complicated than anticipated. Defining the sample of articles to code for this study was a challenge because of the large variability of studies that use the word “dissemination” in the titles but that are actually scale-up or health communication studies.

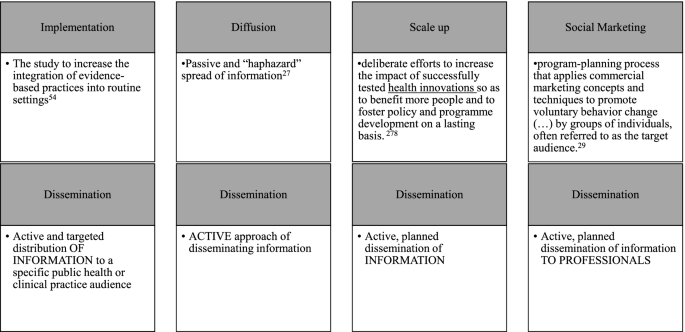

The high variability in the definition of dissemination poses a challenge for the field because if we do not clearly define what we are doing, we are unable to set boundaries to distinguish dissemination research from other fields. Among the identified frameworks that defined dissemination, the definitions highlighted that dissemination involved the spread of something, whether knowledge, an innovation, or a program. Distinct from diffusion, several definitions described dissemination as an active process, using intentional strategies. Few definitions described the role of determinants, whether dissemination is a process or a discrete event, and what strategies and outcomes may be pertinent. Future work is needed to unify these distinct conceptualizations into a comprehensive definition that dissemination researchers can use.

While it is clear that dissemination differs from diffusion, as the latter has been considered the passive and “haphazard” spread of information [ 35 ], the distinction between dissemination and scale-up, as shown in the definitions identified in this study, is less clear. Some articles from our search not included in the review conceptualized dissemination as similar to scale-up. To clarify the distinction between dissemination and scale-up in our review, we used the WHO’s definition of scale-up [ 36 ] as “deliberate efforts to increase the impact of successfully tested health innovations to benefit more people and to foster policy and program development on a lasting basis.” In other words, based on these definitions, our team considered scale-up as referencing active efforts to spread evidence-based interventions, whereas diffusion is the passive spread of information. Dissemination, therefore, can be conceptualized as the active and planned spread of information.

Another helpful component in distinguishing dissemination science from other sciences is related to the target audience. Brownson et al. [ 1 ] define dissemination as an “active approach of spreading evidence-based information to the target audience via determined channels using planned strategies” (p. 9). Defining the target audience in the context of dissemination is important because it may help distinguish the field from social marketing. Indeed, several studies we excluded involved sharing information with the public (e.g., increasing the awareness of the importance of sunscreen in public swimming pools). Grier and Bryant define social marketing as a “program-planning process that applies commercial marketing concepts and techniques to promote voluntary behavior change ( … ) by groups of individuals, often referred to as the target audience.” [ 37 ] The target audience in the context of social marketing, the authors explain, is usually considered consumers but can also be policymakers [ 37 ]. To attempt to delineate a distinction between these two fields, dissemination work has traditionally identified professionals (e.g., clinicians, public health practitioners, policymakers) as the target audience of dissemination efforts, whereas the target audience in social marketing is conceptualized as a broader audience. Figure 2 shows how we conceptualize the distinct components of dissemination research from other fields. Based on these distinctions, we propose the following coalesced definition for dissemination research to guide this review: the scientific study of the targeted distribution of information to a specific professional person or group of professionals. Clearly distinguishing dissemination from scale-up as well as health communication will help further advance the dissemination research field.

Fig. 2 Proposed distinction of definitions between diffusion, scale-up, and dissemination

Our results show that of the empirical papers identified in this review, 51% used a framework to guide their study. This finding mirrors the suboptimal use of frameworks in the field of implementation research [ 38 , 39 ], with scholars recently putting forth guidance on how to select and use frameworks to enhance their use in implementation research studies [ 6 ]. Similarly, we provide a catalog of dissemination frameworks and their constructs identified in dissemination studies. It is necessary to move the dissemination research field forward by embedding frameworks in dissemination-focused studies.

Some empirical papers included in our review used frameworks based on the knowledge translation literature. Knowledge translation, a field most prominent outside the USA, has been defined as “a dynamic and iterative process that includes the synthesis, dissemination, exchange and ethically sound application of knowledge to improve health, provide more effective health services and products, and strengthen the health care system” [ 40 ]. As such, it conceptualizes an interactive relationship between the creation and the application of knowledge. In the USA, however, researchers tend to conceptualize dissemination as a concept discrete from implementation and use the acronym “D&I” to identify these two fields.

While one could state that there is a distinct set of outcomes, methods, and frameworks between dissemination and implementation fields, previous scholars have cataloged [ 4 ] a continuum from “dissemination only” to “implementation only” frameworks. Consistent with this, our findings show that scholars have adapted implementation frameworks to fit dissemination outcomes (e.g., Klesges’ adaptation of RE-AIM [ 41 ]), while other frameworks have both dissemination and implementation components (e.g., integrated Promoting Action on Research Implementation in Health Services [i-PARIHS] [ 42 ]). Additionally, behavioral change frameworks (e.g., theory of planned behavior) were cataloged in our study as they were used in included articles. The use of implementation frameworks in studies identified here as dissemination studies highlights at least three potential hypotheses. One possibility is that the use of implementation frameworks in dissemination studies is due to the underdevelopment of the field of dissemination, as shown in the challenges that we found in the conceptual definition of dissemination. We hope that, by clearly outlining a definition of dissemination, scholars can start to empirically examine whether there are distinct components between implementation and dissemination outcomes and processes.

The second hypothesis is that we still do not have enough evidence in the dissemination or implementation fields to be dogmatic about the categorization of frameworks as either “dissemination” or “implementation.” Until we have more robust evidence about what is and what is not dissemination (or other continua along which frameworks may be categorized), we caution against holding too firm to characterizations of frameworks [ 38 , 43 , 44 , 45 ]. Frameworks evolve as more empirical evidence is gathered [ 43 , 45 , 46 , 47 , 48 , 49 ], and they are applied in different settings and contexts. We could hypothesize that it is less important, as of now, to categorize a framework as an implementation or dissemination framework and instead clearly explain why a specific framework was selected and how it is applied in the study.

Selection and application of frameworks in dissemination and implementation research is still a challenge, especially considering scholars may often select frameworks in a haphazard way [ 6 , 50 , 51 ]. While scholars have put forward some guidance to select implementation frameworks [ 6 , 52 ], the challenge in the dissemination and implementation research fields is likely not only in the selection of the frameworks but perhaps more so in the misuse or misapplication of frameworks, theories, or models. A survey indicated that there is little consensus on the process that scholars use to select frameworks and that scholars select frameworks based on several criteria, including familiarity with the framework [ 50 ]. As such, Birken et al. [ 52 ] offer other criteria for the selection of frameworks, such as (a) usability (i.e., whether the framework includes relevant constructs and whether the framework provides an explanation of how constructs influence each other), (b) applicability (i.e., how a method, such as an interview, can be used with the framework; whether the framework is generalizable to different contexts), and (c) testability (i.e., whether the framework proposes a testable hypothesis and whether it contributes to an evidence base or theory development). Moullin et al. [ 6 ] suggest that implementation frameworks should be selected based on their (a) purpose, (b) levels of analysis (e.g., provider, organizational, system), (c) degree of inclusion and depth of analysis or operationalization of implementation concepts, and (d) the framework’s orientation (e.g., setting and type of intervention).

More than one framework can be selected in one study, depending on the research question(s). The application of a framework can support a project in the planning stages (e.g., examining the determinants of a context, engaging with stakeholders), during the project (e.g., making explicit the mechanisms of action, tracking and exploring the process of change), and after the project is completed (e.g., use of the framework to report outcomes, to understand what happened and why) [ 6 , 51 , 53 ]. We believe that similar guidance can and should be applied to dissemination frameworks; further empirical work may be needed to help identify how to select and apply dissemination and/or implementation frameworks in dissemination research. The goal of this review is to support the advancement of the dissemination and implementation sciences by identifying constructs and frameworks that scholars can apply in their dissemination studies. Additional file 1 : Tables S6-S9 show the frequency of constructs per framework, and readers can see the variability in the frequency of constructs per framework to help in their selection of frameworks.

A third hypothesis is that the processes of dissemination and implementation are interrelated, may occur simultaneously, and perhaps support each other in the uptake of evidence-based interventions. For example, Leppin et al. [ 54 ] use the definition of implementation based on the National Institutes of Health: “the adoption and integration of evidence-based health interventions into clinical and community settings for the purposes of improving care delivery and efficiency, patient outcomes, and individual and population health” [ 55 ], and implementation research as the study of this process to develop a knowledge base about how interventions can be embedded in practices. In this sense, implementation aims to examine the “how” to normalize interventions in practices, to enhance uptake of these interventions, guidelines, or policies, whereas dissemination examines how to spread the information about these interventions, policies, and practices, intending to support their adoption (see Fig. 2 ). In other words, using Curran’s [ 56 ] simple terms, implementation is about adopting and maintaining “the thing” whereas dissemination is about intentionally spreading information to enable learning about “the thing.” As Leppin et al. argue, these two sciences [ 54 , 57 , 58 ], while separate, could co-occur in the process of supporting the uptake of evidence-based interventions. Future work may entail empirically understanding the role of these frameworks in dissemination research.

This review aimed to advance a critical step in the dissemination literature by defining and categorizing dissemination constructs. Constructs are subjective, socially constructed concepts [ 59 ], and therefore their definitions may be bounded by factors including, but not limited to, the researchers’ discipline and background, the research context, and time [ 60 ]. This is evident in the constructs’ lack of consistent, clear definitions (see Additional file 1 ). The inconsistency in the definitions of the constructs is problematic because it impairs measurement development and consequently validity and comparability across studies. The lack of clear definitions of the dissemination constructs may be due to the multidisciplinary nature of the D&I research field in general [ 61 , 62 ], which is a value of the field. However, not having consistency in terms and definitions makes it difficult to develop generalizable conclusions and synthesize scientific findings regarding dissemination research.

We identified a total of 48 constructs, which we separated into four categories: dissemination processes, determinants, strategies, and outcomes. By providing these categories, we hope to help advance the field of dissemination research and to promote rigor and consistency. Process constructs are important for guiding the critical steps and structure that scholars may need to follow when doing dissemination research. Of note, the processes identified in this study may not be unique to dissemination research but may instead be common to the research process in general. As the field of dissemination research advances, it will be interesting to examine whether any components of these process stages are unique to the dissemination field. In addition to the process, an examination of dissemination determinants (i.e., barriers and facilitators) is essential in understanding how contextual factors occurring at different levels (e.g., information recipient, organizational setting, policy environment) influence dissemination efforts and impede or improve dissemination success [ 7 ]. Understanding the essential determinants will help to guide the selection and design of strategies that can support dissemination efforts. Finally, the constructs in the dissemination outcomes category will help researchers identify which levels and processes to assess.

The categorization of the constructs was not without challenges. For example, persuasion was coded as a strategy (persuading) and as an outcome (persuasion). Likewise, the construct confirmation could be conceptualized as a stage [ 16 ] or as an outcome [ 19 ]. The constructs identified in this review provide an initial taxonomy for understanding and assessing dissemination outcomes, but more research and conceptualization are needed to fully describe dissemination processes, determinants, strategies, and outcomes. Given the recent interest in the dissemination literature [ 22 , 63 ], a future step for the field is examining the precise and coherent definition and operationalization of dissemination constructs, along with the identification or development of measures to assess them.

Limitations

A few limitations to this study should be noted. First, the search was limited to one bibliometric database and to journals publishing D&I studies in health. We limited our search to one database because we aimed to capture articles from Norton et al. [ 11 ], and therefore, our search methodology was focused on journals instead of on databases. Future work that learns from other fields and searches other databases more broadly could provide different perspectives. Second, we did not include terms such as research utilization, research translation, knowledge exchange, knowledge mobilization, or translation science in our search, limiting the scope and potential generalizability of our search. Translation science, however, has been defined as a science distinct from dissemination. Leppin et al. [ 54 ], for example, define translation science as the science that aims to identify and advance generalizable principles to expedite research translation, or the “process of turning observations into interventions that improve health” (see Fig. 1 ). Translation research, therefore, focuses on the determinants to achieve this end. Wilson et al. [ 7 ] used other terms in their search, including translation, diffusion of innovation, and knowledge mobilization, and found different frameworks in their review. Their analysis also differed from ours in that they aimed to examine the theoretical underpinnings of the frameworks they identified. Our study is different from theirs in that we offer a definition of dissemination and a compilation of constructs and their definitions. A future study could combine the frameworks identified by our study with the ones identified by Wilson and colleagues and detail the theoretical origins of the frameworks and the definitions of the constructs to support the selection of frameworks for dissemination studies.
Third, by being stringent in our inclusion criteria, we may have missed important work. Several articles were excluded from our scoping review because they examined the spread of an evidence-based intervention (scale-up) or the dissemination of information to the public (health communication). As noted above, however, clearly distinguishing dissemination from scale-up and from health communication will help further advance the dissemination research field and identify its mechanisms of action. Fourth, given the broad literatures in diffusion, dissemination, and social marketing, researchers may disagree with our definitions and how we conceptualized the constructs. Fifth, we did not code qualitative studies because, as this is a scoping study, we needed to set boundaries on its scope. Future studies could examine the application of frameworks in qualitative work. We hope that future research can build on this work to continue to define and test the dissemination constructs.

Conclusions

Based on the review of frameworks and the empirical literature, we defined dissemination research and outlined key constructs in the categories of dissemination processes, determinants, strategies, and outcomes. Our data indicate that the field of dissemination research could be advanced with a more explicit focus on methods and a common understanding of constructs. We hope that our review will help guide the field by providing a narrative taxonomy of dissemination constructs that promotes clarity and advances the dissemination research field. We also hope that future stages of the dissemination research field can examine specific measures and empirically test the mechanisms of action of the dissemination process.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

D&I: Dissemination and implementation

i-PARIHS: Integrated Promoting Action on Research Implementation in Health Services

RE-AIM: Reach, Effectiveness, Adoption, Implementation, Maintenance

CFIR: Consolidated Framework for Implementation Research

Brownson RC, Eyler AA, Harris JK, Moore JB, Tabak RG. Getting the Word Out: New Approaches for Disseminating Public Health Science. J Public Health Manag Pract. 2018;24(2):102-11. https://doi.org/10.1097/PHH.0000000000000673 .

Naeem SB, Bhatti R. The COVID-19 ‘infodemic’: a new front for information professionals. Health Inf Libr J. 2020;37(3):233–9. https://doi.org/10.1111/hir.12311 .

Narayan KMV, Curran JW, Foege WH. The COVID-19 pandemic as an opportunity to ensure a more successful future for science and public health. JAMA. 2021;325(6):525–6. https://doi.org/10.1001/jama.2020.23479 .

Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43(3):337–50. https://doi.org/10.1016/j.amepre.2012.05.024 .

Green L, Kreuter M. Health program planning: an educational and ecological approach: McGraw-Hill Education; 2005.

Moullin JC, Dickson KS, Stadnick NA, et al. Ten recommendations for using implementation frameworks in research and practice. Implement Sci Commun. 2020;1(1):42. https://doi.org/10.1186/s43058-020-00023-7 .

Wilson PM, Petticrew M, Calnan MW, Nazareth I. Disseminating research findings: what should researchers do? A systematic scoping review of conceptual frameworks. Implement Sci. 2010;5(1):1–16.

Munn Z, Peters MDJ, Stern C, Tufanaru C, McArthur A, Aromataris E. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Med Res Methodol. 2018;18(1):143. https://doi.org/10.1186/s12874-018-0611-x .

Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19–32. https://doi.org/10.1080/1364557032000119616 .

Levac D, Colquhoun H, O’Brien KK. Scoping studies: advancing the methodology. Implement Sci. 2010;5(1):69. https://doi.org/10.1186/1748-5908-5-69 .

Norton WE, Lungeanu A, Chambers DA, Contractor N. Mapping the growing discipline of dissemination and implementation science in health. Scientometrics. 2017;112(3):1367–90. https://doi.org/10.1007/s11192-017-2455-2 .

Bramer W, Bain P. Updating search strategies for systematic reviews using EndNote. J Med Libr Assoc. 2017;105(3):285–9. https://doi.org/10.5195/jmla.2017.183 .

Hickey MD, Odeny TA, Petersen M, et al. Specification of implementation interventions to address the cascade of HIV care and treatment in resource-limited settings: a systematic review. Implement Sci. 2017;12(1):102. https://doi.org/10.1186/s13012-017-0630-8 .

Hooley C, Amano T, Markovitz L, Yaeger L, Proctor E. Assessing implementation strategy reporting in the mental health literature: a narrative review. Adm Policy Ment Health Ment Health Serv Res. 2020;47(1):19–35. https://doi.org/10.1007/s10488-019-00965-8 .

Mateen FJ, Oh J, Tergas AI, Bhayani NH, Kamdar BB. Titles versus titles and abstracts for initial screening of articles for systematic reviews. Clin Epidemiol. 2013;5(1):89–95. https://doi.org/10.2147/CLEP.S43118 .

Rogers EM, Singhal A, Quinlan MM. Diffusion of innovations: Routledge; 2014.

Graham ID, Tetroe JM. The Knowledge to Action Framework. Models Frameworks Implement Evid Based Pract. 2010;207:222.

Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–7.

Dobbins M, Ciliska D, Cockerill R, Barnsley J, DiCenso A. A framework for the dissemination and utilization of research for health-care policy and practice. Worldviews Evid Based Nurs Presents Arch Online J Knowl Synth Nurs. 2002;9(1):149–60.

Wandersman A, Duffy J, Flaspohler P, et al. Bridging the gap between prevention research and practice: the interactive systems framework for dissemination and implementation. Am J Community Psychol. 2008;41(3):171–81.

Kingdon JW, Stano E. Agendas, alternatives, and public policies, vol. 45: Little, Brown Boston; 1984.

Winkler JD, Lohr KN, Brook RH. Persuasive communication and medical technology assessment. Arch Intern Med. 1985;145(2):314–7.

Scullion PA. Effective dissemination strategies. Nurse Res (through 2013). 2002;10(1):65–77.

Anderson M, Cosby J, Swan B, Moore H, Broekhoven M. The use of research in local health service agencies. Soc Sci Med. 1999;49(8):1007–19.

Elliott SJ, O’Loughlin J, Robinson K, et al. Conceptualizing dissemination research and activity: the case of the Canadian Heart Health Initiative. Health Educ Behav. 2003;30(3):267–82.

Owen N, Glanz K, Sallis JF, Kelder SH. Evidence-based approaches to dissemination and diffusion of physical activity interventions. Am J Prev Med. 2006;31(4):35–44.

Yuan CT, Nembhard IM, Stern AF, Brush JE Jr, Krumholz HM, Bradley EH. Blueprint for the dissemination of evidence-based practices in health care. Issue Brief (Commonw Fund). 2010;86:1–16.

Graham ID, Logan J, Harrison MB, et al. Lost in knowledge translation: time for a map? J Contin Educ Health Prof. 2006;26(1):13–24.

Oh CH, Rich RF. Explaining use of information in public policymaking. Knowledge Policy. 1996;9(1):3–35.

Landry R, Amara N, Lamari M. Utilization of social science research knowledge in Canada. Res Policy. 2001;30(2):333–49.

Birken SA, Lee SYD, Weiner BJ. Uncovering middle managers’ role in healthcare innovation implementation. Implement Sci. 2012;7(1):1–12.

Fairweather GW. Methods for experimental social innovation. 1967. Published online

Fairweather GW, Tornatzky LG. Experimental methods for social policy research. Pergamon Press; 1977.

Leeman J, Birken SA, Powell BJ, Rohweder C, Shea CM. Beyond “implementation strategies”: classifying the full range of strategies used in implementation science and practice. Implement Sci. 2017;12(1):125. https://doi.org/10.1186/s13012-017-0657-x .

Lomas J. Diffusion, dissemination, and implementation: who should do what? Ann N Y Acad Sci. 1993;703:226–35; discussion 235–7. https://doi.org/10.1111/j.1749-6632.1993.tb26351.x .

World Health Organization, ExpandNet. Nine steps for developing a scaling-up strategy. 2010.

Grier S, Bryant CA. Social marketing in public health. Annu Rev Public Health. 2005;26(1):319–39. https://doi.org/10.1146/annurev.publhealth.26.021304.144610 .

Moullin JC, Dickson KS, Stadnick NA, Rabin B, Aarons GA. Systematic review of the Exploration, Preparation, Implementation, Sustainment (EPIS) framework. Implement Sci. 2019;14(1):1. https://doi.org/10.1186/s13012-018-0842-6 .