MBA Knowledge Base

Case Study: Quality Management System at Coca Cola Company

Coca Cola’s history can be traced back to a man called Asa Candler, who bought a specific formula from a pharmacist named John Stith Pemberton. Two years later, Asa founded his business and started production of soft drinks based on the formula he had bought. Since then, the company has grown into one of the biggest producers of soft drinks, with more than five hundred brands sold and consumed in more than two hundred nations worldwide.

Although the company is said to be the biggest bottler of soft drinks, it does not bottle much itself. Instead, Coca Cola Company manufactures a syrup concentrate, which is bought by bottlers all over the world. This distribution system ensures the soft drink is bottled by these smaller firms according to the company’s standards and guidelines. Although this franchised model is the primary method of distribution, the parent company has a key bottler in America, Coca Cola Refreshments.

In addition to soft drinks, which are Coca Cola’s main products, the company also produces diet soft drinks. These are variations of the original soft drinks with improvements in nutritional value and reductions in sugar content. Saccharin replaced industrial sugar in 1963 so that the drinks could appeal to health-conscious consumers. A major cause for concern was inter-product competition, which saw sales of some products dwindle in favor of others.

Coca Cola started diversifying its products during the Second World War, when ‘Fanta’ was introduced. The head of Coca Cola’s operations in Nazi Germany decided to bring a new soft drink to market because, with the war ongoing, promoting an American brand in Germany was not acceptable. He therefore chose a new name, and ‘Fanta’ was born. The creation was successful and production continued even after the war. ‘Sprite’ followed later.

In the 1990s, health concerns among consumers of soft drinks forced manufacturers to consider altering the energy content of these products. ‘Minute Maid’ juices, ‘PowerAde’ sports drinks, and a few flavored tea variants were Coca Cola’s initial reactions to this new interest. Although most of these new products were well received, some did not perform as well. One example was the reduced-sugar cola Coca Cola C2.

Coca Cola Company has been a successful company for more than a century. This can be attributed partly to the nature of its products, since soft drinks will always appeal to people. In addition, Coca Cola has one of the best commercial and public relations programs in the world. The company’s products can be found on adverts in virtually every corner of the globe. This success has led to its support for a wide range of sporting activities. Soccer, baseball, ice hockey, athletics and basketball are some of the sports in which Coca Cola is involved.

The Quality Management System at Coca Cola

It is very important that each product Coca Cola produces meets a high quality standard, so that every unit is exactly the same. This matters because the company wants to meet customer requirements and expectations. With the brand having such a global presence, it is vital that these checks are applied consistently. The standardized bottle of Coca Cola has elements that need to be checked on the production line to make sure that this standard is being met. The most common checks cover ingredients, packaging and distribution. Much of the testing takes place during the production process, as machines and a small team of employees monitor progress. It is the responsibility of all of Coca Cola’s staff, from hygiene operators to product and packaging quality teams, to check quality. These constant checks require staff to be on the lookout for problems and to take responsibility for them, so that quality is maintained.

Coca-Cola uses inspection throughout its production process, especially in the testing of the Coca-Cola formula, to ensure that each product meets specific requirements. Inspection normally refers to sampling a product after production so that corrective action can be taken to maintain quality. Coca-Cola has incorporated this method into its organisational structure because it can eliminate mistakes and maintain high quality standards, thus reducing the chance of a product recall. It is also easy to implement and cost effective.

Coca-Cola uses both Quality Control (QC) and Quality Assurance (QA) throughout its production process. QC mainly focuses on the production line itself, whereas QA covers the entire operations process and related functions, addressing potential problems very quickly. In both QC and QA, state-of-the-art computers check all aspects of the production process: the consistency of the formula, the creation of the bottle (blowing), the fill level of each bottle and the labeling of each bottle. Automating these checks increases the speed of production and of quality checks, which helps ensure that product demand is met. QC and QA help reduce the risk of defective products reaching a customer, because problems are found and resolved in the production process; for example, bottles that are considered defective are placed in a waiting area for inspection. QA also covers the quality of goods supplied to Coca-Cola, for example sugar, which is supplied by Tate and Lyle. Coca-Cola reports that it has never had a problem with its suppliers. QA can also involve training staff so that employees understand how to operate machinery. Coca-Cola ensures that all members of staff receive training before they start work, so that they can operate machinery efficiently. Machinery is also under constant maintenance, which requires highly skilled engineers to fix problems and helps Coca-Cola maintain high output.

Every bottle is also checked to confirm that it is at the correct fill level and has the correct label. This is done by a computer which every bottle passes through during the production process. Any faulty products are taken off the main production line. Should the quality control measures find any errors, the production line is frozen up to the last good check that was made. The Coca Cola bottling plant also checks the utilization level of each production line using a scorecard system. This shows the percentage of the line that is being utilized and allows managers to increase the production levels of a line if necessary.
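The inline checks and the utilization scorecard described above can be illustrated with a minimal Python sketch. It is not Coca Cola's actual system: the target fill volume, the tolerance, the `Bottle` record and all function names are assumptions made up for illustration, since the article gives no real limits or software details.

```python
from dataclasses import dataclass

# Hypothetical specification values; the article does not state real limits.
TARGET_FILL_ML = 500.0
FILL_TOLERANCE_ML = 5.0


@dataclass
class Bottle:
    fill_ml: float  # measured fill volume
    label: str      # label detected on the bottle


def passes_inspection(bottle, expected_label):
    """Return True when both the fill level and the label match the spec."""
    fill_ok = abs(bottle.fill_ml - TARGET_FILL_ML) <= FILL_TOLERANCE_ML
    return fill_ok and bottle.label == expected_label


def sort_bottles(bottles, expected_label):
    """Split a run into accepted bottles and rejects taken off the line."""
    accepted, rejected = [], []
    for bottle in bottles:
        if passes_inspection(bottle, expected_label):
            accepted.append(bottle)
        else:
            rejected.append(bottle)
    return accepted, rejected


def utilization_percent(units_produced, line_capacity):
    """Share of a line's capacity in use, as a scorecard might report it."""
    if line_capacity <= 0:
        raise ValueError("line_capacity must be positive")
    return 100.0 * units_produced / line_capacity
```

For example, a run containing one under-filled bottle and one mislabeled bottle would return both as rejects, and a line producing 750 units against a capacity of 1,000 would show 75 percent utilization.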

Coca-Cola also uses Total Quality Management (TQM), which involves the management of quality at every level of the organisation, including suppliers, production and customers. This allows Coca-Cola to retain or regain competitiveness and achieve increased customer satisfaction. Coca-Cola uses this method to continuously improve the quality of its products. Teamwork is very important, and Coca-Cola ensures that every member of staff is involved in the production process, meaning that each employee understands their job and role, which improves morale and motivation and, overall, increases productivity. TQM practices can also increase customer involvement, as many organisations, including Coca-Cola, relish the opportunity to receive feedback and information from their consumers. Overall, reducing waste and costs provides Coca-Cola with a competitive advantage.

The Production Process

Before production starts on the line, cleaning tasks are performed to rinse internal pipelines, machines and equipment. This is often done during a switchover of lines, for example when changing from Coke to Diet Coke, to ensure that the taste stays consistent. This quality check is performed for both hygiene and product quality purposes. Once these checks are complete, the production process can begin.

Coca Cola uses a database system called Questar, which enables it to perform checks on the line. For example, all materials are coded and each line is issued with a bill of materials before the process starts. This ensures that the correct materials are put on the line. The check is designed to eliminate problems on the production line and is audited regularly. Without this system, product quality could not be assessed at this level. Other quality checks on the line include packaging and carbonation, which are monitored by an operator who notes down the values to ensure they meet standards.
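The bill-of-materials check described above amounts to comparing the coded materials loaded on a line with the codes the line was issued. The following Python sketch shows that comparison under stated assumptions: the material codes and function names are hypothetical, and nothing here reflects how Questar itself is implemented.

```python
def check_materials(bill_of_materials, loaded_codes):
    """Compare material codes loaded on a line against its bill of materials.

    Returns codes that should not be on the line and codes that are missing.
    """
    expected = set(bill_of_materials)
    loaded = set(loaded_codes)
    return {
        "unexpected": sorted(loaded - expected),
        "missing": sorted(expected - loaded),
    }


def line_may_start(report):
    """A line may start only when nothing is unexpected and nothing is missing."""
    return not report["unexpected"] and not report["missing"]


# Hypothetical codes for a line scheduled to run regular Coke.
bom = {"SYRUP-01", "CAP-RED", "LABEL-COKE"}
report = check_materials(bom, ["SYRUP-01", "CAP-RED", "LABEL-DIET"])
print(report)
print(line_may_start(report))
```

Keeping the result as two separate lists makes an audit trail straightforward, because each discrepancy can be logged by material code.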

To test product quality further, lab technicians carry out over 2000 spot checks a day to ensure quality and consistency. These checks can take place before or during production, and can involve taking a sample of bottles off the production line. Quality tests include CO2 and sugar values, micro testing, packaging quality and cap tightness. The tests are designed so that total quality management ideas can be put forward. For example, one way in which Coca Cola has improved its production process is at the wrapping stage at the end of the line. The machine performed revolutions around the products, wrapping them in plastic until the contents were secure. One initiative the company adopted meant that one less revolution was needed. This change did not affect the quality of the packaging or the product itself, and so saved large amounts of money on packaging costs. Continuous improvement can also be used to adhere to the environmental and social principles which the company has a responsibility to abide by. Continuous improvement opportunities are sometimes easy to identify but can lead to big changes within the organisation. The idea of continuous improvement is to reveal opportunities which could change the way something is performed. Any sources of waste, scrap or rework are potential improvement projects.
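The wrapping example above is simple arithmetic: removing one revolution saves one revolution's worth of film on every wrapped load. The short Python sketch below works that through. All figures in the usage note are invented for illustration, because the article gives no revolution counts, film lengths or volumes.

```python
def film_saved_m(film_per_revolution_m, loads_wrapped):
    """Metres of film saved when one revolution is removed from every load."""
    if film_per_revolution_m < 0 or loads_wrapped < 0:
        raise ValueError("inputs must be non-negative")
    return film_per_revolution_m * loads_wrapped


def saving_fraction(revolutions_before):
    """Fraction of wrapping film saved by dropping one revolution."""
    if revolutions_before < 2:
        raise ValueError("need at least two revolutions to remove one")
    return 1 / revolutions_before
```

Under the assumed figures of 10 revolutions per load, 4 metres of film per revolution and 1,000 loads, dropping one revolution would save 4,000 metres of film, or one tenth of the film used.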

The success of this system can be measured by assessing the consistency of product quality. Coca Cola says that ‘Our Company’s Global Product Quality Index rating has consistently reached averages near 94 since 2007, with a 94.3 in 2010, while our Company Global Package Quality Index has steadily increased since 2007 to a 92.6 rating in 2010, our highest value to date’. This indicates that the quality system is working well throughout the organisation. The rise in the index suggests that the consistency of the products is being recognized by consumers.

Total quality management: three case studies from around the world

With organisations to run and big orders to fill, it’s easy to see how some CEOs inadvertently sacrifice quality for quantity. By integrating a system of total quality management, it’s possible to have both.

There are few boardrooms in the world whose inhabitants don’t salivate at the thought of engaging in a little aggressive expansion. After all, there’s little room in a contemporary, fast-paced business environment for any firm whose leaders don’t subscribe to ambitions of bigger factories, healthier accounts and stronger turnarounds. Yet too often such tales of excess go hand-in-hand with complaints of a severe drop in quality.

Food and entertainment markets are riddled with cautionary tales, but service sectors such as health and education aren’t immune to the disappointing by-products of unsustainable growth either. As always, the first step in avoiding a catastrophic forsaking of quality is good management.

There are plenty of methods and models geared at managing the quality of a particular company’s goods or services. Yet very few of those models take into consideration the widely held belief that any company is only as strong as its weakest link. With that in mind, management consultant W. Edwards Deming developed an entirely new set of methods with which to address quality.

Deming, whose managerial work revolutionised the titanic Japanese manufacturing industry, perceived quality management to be more of a philosophy than anything else. Top-to-bottom improvement, he reckoned, required uninterrupted participation of all key employees and stakeholders. Thus, the total quality management (TQM) approach was born.

All in

Similar to the Six Sigma improvement process, TQM ensures long-term success by enforcing all-encompassing internal guidelines and process standards to reduce errors. By way of serious, in-depth auditing – as well as some well-orchestrated soul-searching – TQM ensures firms meet stakeholder needs and expectations efficiently and effectively, without forsaking ethical values.

By opting to reframe the way employees think about the company’s goals and processes, TQM allows CEOs to make sure certain things are done right from day one. According to Teresa Whitacre, of international consulting firm ASQ, proper quality management also boosts a company’s profitability.

“Total quality management allows the company to look at their management system as a whole entity — not just an output of the quality department,” she says. “Total quality means the organisation looks at all inputs, human resources, engineering, production, service, distribution, sales, finance, all functions, and their impact on the quality of all products or services of the organisation. TQM can improve a company’s processes and bottom line.”

Embracing the entire process sees companies strive to improve in several core areas, including: customer focus, total employee involvement, process-centred thinking, systematic approaches, good communication and leadership and integrated systems. Yet Whitacre is quick to point out that companies stand to gain very little from TQM unless they’re willing to go all-in.

“Companies need to consider the inputs of each department and determine which inputs relate to its governance system. Then, the company needs to look at the same inputs and determine if those inputs are yielding the desired results,” she says. “For example, ISO 9001 requires management reviews occur at least annually. Aside from minimum standard requirements, the company is free to review what they feel is best for them. While implementing TQM, they can add to their management review the most critical metrics for their business, such as customer complaints, returns, cost of products, and more.”

The customer knows best: AtlantiCare

TQM isn’t an easy management strategy to introduce into a business; in fact, many attempts tend to fall flat. More often than not, it’s because firms maintain natural barriers to full involvement. Middle managers, for example, tend to complain their authority is being challenged when boots on the ground are encouraged to speak up in the early stages of TQM. Yet in a culture of constant quality enhancement, the views of any given workforce are invaluable.

AtlantiCare in numbers

5,000 Employees

$280m Profits before quality improvement strategy was implemented

$650m Profits after quality improvement strategy

One firm that’s proven the merit of TQM is New Jersey-based healthcare provider AtlantiCare. Managing 5,000 employees at 25 locations, AtlantiCare is a serious business that’s boasted a respectable turnaround for nearly two decades. Yet in order to increase that margin further still, managers wanted to implement improvements across the board. Because patient satisfaction is the single most important aspect of the healthcare industry, engaging in a renewed campaign of TQM proved a natural fit. The firm chose to adopt a ‘plan-do-check-act’ cycle, revealing gaps in staff communication – which subsequently meant longer patient waiting times and more complaints. To tackle this, managers explored a sideways method of internal communications. Instead of information trickling down from top to bottom, all of the company’s employees were given freedom to provide vital feedback at each and every level.

AtlantiCare decided to ensure all new employees understood this quality culture from the outset. At orientation, staff now receive a crash course in the company’s performance excellence framework – a management system that organises the firm’s processes into five key areas: quality, customer service, people and workplace, growth and financial performance. As employees rise through the ranks, this emphasis on improvement follows, so managers can operate within the company’s tight-loose-tight process management style.

After creating benchmark goals for employees to achieve at all levels – including better engagement at the point of delivery, increasing clinical communication and identifying and prioritising service opportunities – AtlantiCare was able to thrive. The number of repeat customers at the firm tripled, and its market share hit a six-year high. Profits unsurprisingly followed. The firm’s revenues shot up from $280m to $650m after implementing the quality improvement strategies, and the number of patients being serviced dwarfed state numbers.

Hitting the right notes: Santa Cruz Guitar Co

For companies further removed from the long-term satisfaction of customers, it’s easier to let quality control slide. Yet there are plenty of ways in which growing manufacturers can pursue both quality and sales volumes simultaneously. Artisan instrument makers the Santa Cruz Guitar Co (SCGC) prove a salient example. Although the California-based company is still a small-scale manufacturing operation, SCGC has grown in recent years from a basement operation to a serious business.

SCGC in numbers

14 Craftsmen employed by SCGC

800 Custom guitars produced each year

Owner Dan Roberts now employs 14 expert craftsmen, who create over 800 custom guitars each year. In order to ensure the continued quality of his instruments, Roberts has created an environment that improves with each sale. To keep things efficient (as TQM must), the shop floor is divided into six workstations in which guitars are partially assembled and then moved to the next station. Each bench is manned by a senior craftsman, and no guitar leaves that builder’s station until he is 100 percent happy with its quality. This product quality is akin to a traditional assembly line; however, unlike a traditional, top-to-bottom factory, Roberts is intimately involved in all phases of instrument construction.

Utilising this doting method of quality management, it’s difficult to see how customers wouldn’t be satisfied with the artists’ work. Yet even if there were issues, Roberts and other senior management also spend much of their days personally answering web queries about the instruments. According to the managers, customers tend to be pleasantly surprised to find the company’s senior leaders are the ones answering their technical questions and concerns. While Roberts has no intentions of taking his manufacturing company to industrial heights, the quality of his instruments and high levels of customer satisfaction speak for themselves; the company currently boasts a lengthy backlog of orders.

A quality education: Ramaiah Institute of Management Studies

Although it may appear easier to find success with TQM at a boutique-sized endeavour, the philosophy’s principles hold true in virtually every sector. Educational institutions, for example, have utilised quality management in much the same way – albeit to tackle decidedly different problems.

The global financial crisis hit higher education harder than many might have expected, and nowhere have the odds stacked higher than in India. The nation plays home to one of the world’s fastest-growing markets for business education. Yet over recent years, the relevance of business education in India has come into question. A report by one recruiter recently asserted just one in four Indian MBAs were adequately prepared for the business world.

RIMS in numbers

9% Increase in test scores post total quality management strategy

22% Increase in number of recruiters hiring from the school

$20,000 Increase in the salary offered to graduates

$50,000 Rise in placement revenue

At the Ramaiah Institute of Management Studies (RIMS) in Bangalore, recruiters and accreditation bodies specifically called into question the quality of students’ education. Although the relatively small school has always struggled to compete with India’s renowned Xavier Labour Relations Institute, the faculty finally began to notice clear hindrances in the success of graduates. The RIMS board decided it was time for a serious reassessment of quality management.

The school nominated Chief Academic Advisor Dr Krishnamurthy to head a volunteer team that would audit, analyse and implement process changes that would improve quality throughout (all in a particularly academic fashion). The team was tasked with looking at three key dimensions: assurance of learning, research and productivity, and quality of placements. Each member underwent extensive training to learn about action plans, quality auditing skills and continuous improvement tools – such as the ‘plan-do-study-act’ cycle.

Once faculty members were trained, the team’s first task was to identify the school’s key stakeholders, processes and their importance at the institute. Unsurprisingly, the most vital processes were identified as student intake, research, knowledge dissemination, outcomes evaluation and recruiter acceptance. From there, Krishnamurthy’s team used a fishbone diagram to help identify potential root causes of the issues plaguing these vital processes. To illustrate just how bad things were at the school, the team selected control groups and administered domain-based knowledge tests.

The deficits were disappointing. RIMS students’ knowledge base was rated at just 36 percent, while students at Harvard rated 95 percent. Likewise, students’ critical thinking abilities rated nine percent, versus 93 percent at MIT. Worse yet, the mean salaries of graduating students averaged $36,000, versus $150,000 for students from Kellogg. Krishnamurthy’s team had their work cut out.

To tackle these issues, Krishnamurthy created an employability team, developed strategic architecture and designed pilot studies to improve the school’s curriculum and make it more competitive. In order to do so, he needed absolutely every employee and student on board – and there was some resistance at the outset. Yet the educator asserted it didn’t actually take long to convince the school’s stakeholders the changes were extremely beneficial.

“Once students started seeing the results, buy-in became complete and unconditional,” he says. Acceptance was also achieved by maintaining clearer levels of communication with stakeholders. The school started to provide stakeholders with detailed plans and projections. Then, it proceeded with a variety of new methods, such as incorporating case studies into the curriculum, which increased general test scores by almost 10 percent. Administrators also introduced a mandate saying students must be certified in English by the British Council – increasing scores from 42 percent to 51 percent.
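The score figures quoted above can be read in two ways, and the article does not say which is meant. A move from 42 percent to 51 percent is a gain of 9 percentage points, but a relative improvement of roughly 21 percent. The following Python sketch, with function names chosen here purely for illustration, shows both calculations.

```python
def point_change(before_pct, after_pct):
    """Change in percentage points between two percentage scores."""
    return after_pct - before_pct


def relative_change_percent(before, after):
    """Relative change from 'before' to 'after', expressed in percent."""
    if before == 0:
        raise ValueError("'before' must be non-zero")
    return 100.0 * (after - before) / before


# English certification scores reported in the article: 42 percent to 51 percent.
print(point_change(42, 51))                        # percentage points gained
print(round(relative_change_percent(42, 51), 1))   # relative improvement in percent
```

Stating which of the two measures is being reported avoids ambiguity when improvement figures are compared across programmes.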

By improving those test scores, the perceived quality of RIMS skyrocketed. The number of top 100 businesses recruiting from the school shot up by 22 percent, while the average salary offers graduates were receiving increased by $20,000. Placement revenue rose by an impressive $50,000, and RIMS has since skyrocketed up domestic and international education tables.

No matter the business, total quality management can and will work. Yet this philosophical take on quality control will only impact firms that are in it for the long haul. Every employee must be in tune with the company’s ideologies and desires to improve, and customer satisfaction must reign supreme.

Quality management

- Business management

- Process management

- Project management

Creating a Culture of Quality

- Ashwin Srinivasan

- Bryan Kurey

- From the April 2014 Issue

Reign of Zero Tolerance (HBR Case Study and Commentary)

- Janet Parker

- Eugene Volokh

- Jean Halloran

- Michael G. Cherkasky

- From the November 2006 Issue

The Culture to Cultivate

- George C. Halvorson

- From the July–August 2013 Issue

Power of Internal Guarantees

- Christopher W.L. Hart

- From the January–February 1995 Issue

Teaching Smart People How to Learn

- Chris Argyris

- From the May–June 1991 Issue

Benchmarking Your Staff

- Michael Goold

- David J. Collis

- From the September 2005 Issue

The CEO of Canada Goose on Creating a Homegrown Luxury Brand

- From the September–October 2019 Issue

Get Ahead by Betting Wrong

- J.P. Eggers

- From the July–August 2014 Issue

Made in U.S.A.: A Renaissance in Quality

- Joseph M. Juran

- July 01, 1993

Relentless Idealism for Tough Times

- Alice Waters

- From the June 2009 Issue

Develop Your Strengths, Not Your Weaknesses

- Scott K. Edinger

- February 28, 2012

Will Disruptive Innovations Cure Health Care? (HBR OnPoint Enhanced Edition)

- Clayton M. Christensen

- Richard Bohmer

- John Kenagy

- June 01, 2004

A Better Way to Onboard AI

- Boris Babic

- Daniel L. Chen

- Theodoros Evgeniou

- Anne-Laure Fayard

- From the July–August 2020 Issue

Fixing Health Care from the Inside, Today

- Steven J. Spear

- September 01, 2005

The IT Transformation Health Care Needs

- Nikhil Sahni

- Robert S. Huckman

- Anuraag Chigurupati

- David M. Cutler

- From the November–December 2017 Issue

Manage Your Human Sigma

- John H. Fleming

- Curt Coffman

- James K. Harter

- From the July–August 2005 Issue

Growth as a Process: The HBR Interview

- Jeffrey R. Immelt

- Thomas A. Stewart

- From the June 2006 Issue

Health Care Needs Real Competition

- Leemore S Dafny

- Thomas H. Lee M.D.

- From the December 2016 Issue

Six Sigma Pricing

- Manmohan S. Sodhi

- Navdeep S. Sodhi

- From the May 2005 Issue

Coming Commoditization of Processes

- Thomas H. Davenport

- From the June 2005 Issue

Pleasant Bluffs: Launching a Home-Based Hospital Program

- Laura Erskine

- April 07, 2016

Oberoi Hotels: Train Whistle in the Tiger Reserve

- Ryan W. Buell

- Ananth Raman

- Vidhya Muthuram

- January 09, 2015

Toyota Motor Manufacturing, U.S.A., Inc.

- Kazuhiro Mishina

- September 08, 1992

Xerox: Design for the Environment

- Richard H.K. Vietor

- Fiona E.S. Murray

- January 07, 1994

Impacore - A Disruptive Force in Consulting

- Dennis Campbell

- Emer Moloney

- June 15, 2016

Applying the Service Activity Sequence in the World of Culture

- Beatriz Munoz-Seca Fernandez-Cuesta

- Susana Llerena

- September 21, 2012

Six Sigma at Cintas Corporation

- P. Fraser Johnson

- Adam Bortolussi

- August 29, 2010

Danone: Changing the Food System

- David E. Bell

- Federica Gabrieli

- Daniela Beyersdorfer

- November 14, 2019

Koo Foundation Sun Yat-Sen Cancer Center: Breast Cancer Care in Taiwan

- Michael E. Porter

- Jennifer F. Baron

- C. Jason Wang

- December 08, 2009

Six Sigma at Academic Medical Hospital (C)

- Robert D. Landel

- September 28, 2003

Onnie Jewellers

- Larry Menor

- August 01, 2017

Wipro Technologies Europe (A)

- Gerry Yemen

- Martin N. Davidson

- Heather Wishik

- May 29, 2002

Health-Tech Strategy at KG Hospital Part A: Identification and Prioritization of Key Focus Areas

- Vijaya Sunder M

- Meghna Raman

- January 09, 2022

Nanxi Liu: Finding the Keys to Sales Success at Enplug

- Paul Orlando

- Megan Strawther

- October 12, 2016

GE: We Bring Good Things to Life (B)

- James L. Heskett

- January 20, 1999

Czech Mate: Jake and Dan's Marvelous Adventure

- Elliott N. Weiss

- Robert Collier

- Mike Kadish

- September 22, 2009

Quality Management in the Oil Industry: How BP Greases Its Machinery for Frictionless Sourcing

- Martin Lockstrom

- Shengrong Zhang

- March 28, 2012

Lean at Wipro Technologies

- Bradley R. Staats

- David M. Upton

- October 16, 2006

Operations Management Reading: Managing Quality with Process Control

- Roy D. Shapiro

- September 10, 2013

Samsung Electronics: Using Affinity Diagrams and Pareto Charts

- Jack Boepple

- February 08, 2013

Pleasant Bluffs: Launching a Home-Based Hospital Program, Teaching Note

Setting measures and targets that drive performance, a balanced scorecard reader.

- Balanced Scorecard Collaborative

- December 17, 2008

Making quality assurance smart

For decades, outside forces have dictated how pharmaceutical and medtech companies approach quality assurance. The most influential force remains regulatory requirements. Both individual interpretations of regulations and feedback received during regulatory inspections have shaped quality assurance systems and processes. At the same time, mergers and acquisitions, along with the proliferation of different IT solutions and quality software, have resulted in a diverse and complicated quality management system (QMS) landscape. Historically, the cost of consolidating and upgrading legacy IT systems has been prohibitively expensive. Further challenged by a scarcity of IT support, many quality teams have learned to rely on the processes and workflows provided by off-the-shelf software without questioning whether they actually fit their company’s needs and evolving regulatory requirements.

In recent years, however, several developments have enabled a better way. New digital and analytics technologies make it easier for quality teams to access data from different sources and in various formats, without replacing existing systems. Companies can now build dynamic user experiences in web applications at a fraction of the cost of traditional, enterprise desktop software; this development raises the prospect of more customized, user-friendly solutions. Moreover, regulators, such as the FDA, are increasingly focused on quality systems and process maturity (see the MDIC Case for Quality program). The FDA also identified the enablement of innovative technologies as a strategic priority, thereby opening the door for constructive dialogue about potential changes (see the FDA Technology Modernization Action Plan).

Smart quality at a glance

“Smart quality” is a framework that pharma and medtech companies can apply to redesign key quality assurance processes and create value for the organization.

Smart quality has explicit objectives:

- to perceive and deliver on multifaceted and ever-changing customer needs

- to deploy user-friendly processes built organically into business workflows, reimagined with leading-edge technologies

- to leapfrog existing quality management systems with breakthrough innovation, naturally fulfilling the spirit—not just the letter—of the regulations

The new ways in which smart quality achieves its objectives can be categorized in five building blocks (exhibit).

To learn more about smart quality and how leading companies are reimagining the quality function, please see “Smart quality: Reimagining the way quality works.”

The time has arrived for pharmaceutical and medtech companies to act boldly and reimagine the quality function. Through our work on large-scale quality transformation projects and our conversations with executives, we have developed a new approach we call “smart quality” (see sidebar, “Smart quality at a glance”). With this approach, companies can redesign key quality processes and enable design-thinking methodology (to make processes more efficient and user-friendly), automation and digitization (to deliver speed and transparency), and advanced analytics (to provide deep insights into process capability and product performance).

The quality assurance function thereby becomes a driver of value in the organization and a source of competitive advantage—improving patient safety and health outcomes while operating efficiently, effectively, and fully aligned with regulatory expectations. In our experience, companies applying smart quality principles to quality assurance can quickly generate returns that outweigh investments in new systems, including line-of-sight impact on profit; a 30 percent improvement in time to market; and a significant increase in manufacturing and supply chain reliability. Equally significant are improvements in customer satisfaction and employee engagement, along with reductions in compliance risk.

Revolutionizing quality assurance processes

The following four use cases illustrate how pharmaceutical and medtech companies can apply smart quality to transform core quality assurance processes—including complaints management, quality management review, deviations investigations, and supplier risk management, among others.

1. Complaint management

Responding swiftly and effectively to complaints is not only a compliance requirement but also a business necessity. Assessing and reacting to feedback from the market can have an immediate impact on patient safety and product performance. Today, a pharmaceutical or medtech company may believe it is handling complaints well if it has a single software deployed around the globe for complaint management, with some elements of automation (for example, flagging reportable malfunctions in medical devices) and several processing steps happening offshore (such as intake, triage, and regulatory reporting).

Yet, for most quality teams, the average investigation and closure cycle time hovers around 60 days—a few adverse events are reported late every month, and negative trends are addressed two or more months after the signals come in. It can take quality assurance teams even longer to identify complaints that collectively point to negative trends for a particular product or device. At the same time, less than 5 percent of incoming complaints are truly new events that have never been seen before. The remainder of complaints can usually be categorized into well-known issues, within expected limits; or previously investigated issues, in which root causes have been identified and are already being addressed.

The smart quality approach improves customer engagement and speed

By applying smart quality principles and the latest technologies, companies can reduce turnaround times and improve the customer experience. They can create an automated complaint management process that reduces costs yet applies the highest standards:

- For every complaint, the information required for a precise assessment is captured at intake, and the event is automatically categorized.

- High-risk issues are immediately escalated by the system, with autogenerated reports ready for submission.

- New types of complaints and out-of-trend problems are escalated and investigated quickly.

- Low-risk, known issues are automatically trended and closed if they are within expected limits or already being addressed.

- Customer responses and updates are automatically available.

- Trending reports are available in real time for any insights or analyses.

To transform the complaint management process, companies should start by defining a new process and ensuring it meets regulatory requirements. The foundation for the new process can lie in a structured event assessment that allows automated issue categorization based on the risk level defined in the company’s risk management documentation. A critical technological component is the automation of customer complaint intake; a dynamic front-end application can guide a customer through a series of questions (Exhibit 1). The application captures only information relevant to a specific complaint evaluation, investigation, and—if necessary—regulatory report. Real-time trending can quickly identify signals that indicate issues exceeding expected limits. In addition, companies can use machine learning to scan text and identify potential high-risk complaints. Finally, risk-tailored investigation pathways, automated reporting, and customer response solutions complete the smart quality process. Successful companies maintain robust procedures and documentation that clearly explain how the new process reliably meets specific regulatory requirements. Usually, a minimal viable product (MVP) for the new process can be built within two to four months for the first high-volume product family.
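The routing logic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Complaint` fields, the issue codes, and the per-issue expected limits are hypothetical placeholders, and a real process would take risk levels from the company's own risk management documentation.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    LOW = 1
    HIGH = 2


class Action(Enum):
    ESCALATE_AND_REPORT = "escalate_and_report"
    INVESTIGATE = "investigate"
    TREND_AND_CLOSE = "trend_and_close"


@dataclass
class Complaint:
    issue_code: str  # category assigned at intake (hypothetical coding scheme)
    risk: Risk       # risk level, assumed to come from risk management documentation


def triage(complaint, known_issues, period_counts, expected_limits):
    """Return a routing decision for one complaint.

    known_issues: set of issue codes that were already investigated
    period_counts: complaints seen per issue code in the current period
    expected_limits: expected maximum count per issue code (assumed values)
    """
    # High-risk issues are escalated immediately.
    if complaint.risk is Risk.HIGH:
        return Action.ESCALATE_AND_REPORT
    # New types of complaints need a human investigation.
    if complaint.issue_code not in known_issues:
        return Action.INVESTIGATE
    # Known issues that exceed their expected limit are out of trend.
    count = period_counts.get(complaint.issue_code, 0)
    limit = expected_limits.get(complaint.issue_code, 0)
    if count > limit:
        return Action.INVESTIGATE
    # Low-risk, known, within limits: trend and close automatically.
    return Action.TREND_AND_CLOSE
```

For instance, a low-risk complaint with a known issue code and a period count below its limit would be routed to `Action.TREND_AND_CLOSE`, while the same code above its limit would go to `Action.INVESTIGATE`.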

In our experience, companies that redesign the complaint management process can respond more swiftly (often within a few hours) to reduce patient risk and minimize the scale and impact of potential issues in the field. For example, one medtech company that adopted the new complaint management approach can now automatically assess all complaints and close more than 55 percent of them within 24 hours without human intervention. Few, if any, reportable events have missed submission deadlines. Subject matter experts are now free to focus on investigating new or high-risk issues, understanding root causes, and developing the most effective corrective and preventive actions. The company also reports that its customers prefer digital interfaces to paper forms and are pleased to be updated promptly on the status and resolution of their complaints.

2. Quality management review

Real-time performance monitoring is crucial to executive decision making at pharmaceutical and medtech companies. During a 2019 McKinsey roundtable discussion, 62 percent of quality assurance executives rated it as a high priority for the company, exceeding all other options.

For many companies today, the quality review process involves significant manual data collection and chart creation. Often, performance metrics focus on quality compliance outcomes and quality systems—such as deviation cycle times—at the expense of leading indicators and connection to culture and cost. Managers and executives frequently find themselves engaged in lengthy discussions, trying to interpret individual metrics and often missing the big picture.

Although many existing QMS solutions offer automated data-pull and visualization features, the interpretation of complex metric systems and trends remains largely a manual process. A team may quickly address one performance metric or trend, only to learn several months later that the change negatively affected another metric.

The smart quality approach speeds up decision making and action

By applying smart quality principles and the latest digital technologies, companies can get a comprehensive view of quality management in real time. This approach to performance monitoring allows companies to do the following:

- automatically collect, analyze, and visualize relevant leading indicators and outcomes on a simple and intuitive dashboard

- quickly identify areas of potential risk and emerging trends, as well as review their underlying metrics and connections to different areas

- rapidly make decisions to address existing or emerging issues and monitor the results

- adjust metrics and targets to further improve performance as goals are achieved

- view the entire value chain and create transparency for all functions, not just quality

To transform the process, companies should start by reimagining the design of the process and settling on a set of metrics that balances leading and lagging indicators. A key technical enabler of the system is establishing an interconnected metrics structure that automates data pull and visualization and digitizes analysis and interpretation (Exhibit 2). Key business processes, such as regular quality management reviews, may require changes to include a wider range of functional stakeholders and to streamline the review cascade.

Healthcare companies can use smart quality to redesign the quality management review process and see results quickly. At one pharmaceutical and medtech company, smart visualization of connected, cross-functional metrics significantly improved the effectiveness and efficiency of quality management review at all levels. Functions throughout the organization reported feeling better positioned to ascertain the quality situation quickly, support decision making, and take necessary actions. Because of connected metrics, management can not only see alarming trends but also link them to other metrics and quickly align on targeted improvement actions. For example, during a quarterly quality management review, the executive team linked late regulatory reporting challenges to an increase in delayed complaint submissions in some geographic regions. Following the review, commercial leaders raised attention to this issue in their respective regions, and in less than three months, late regulatory reporting was reduced to zero. Although the company is still in the process of fully automating data collection, it has already noticed a significant shift in its work. The quality team no longer spends the majority of its time on data processing but has pivoted to understanding, interpreting, and addressing complex and interrelated trends to reduce risks associated with quality and compliance.
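One way to picture connected metrics is as a small graph in which each metric lists the metrics that feed into it. The sketch below is a hypothetical illustration, not the company's actual system: the `drivers` mapping and the metric name `regional_intake_backlog` are assumptions, while the link between late regulatory reporting and delayed complaint submissions follows the example above.

```python
from collections import deque


def upstream_drivers(metric, drivers):
    """List every metric that directly or indirectly feeds into `metric`.

    drivers maps a metric name to the names of the metrics that influence it.
    Results are returned in breadth-first order, nearest drivers first.
    """
    found = []
    queue = deque(drivers.get(metric, []))
    while queue:
        current = queue.popleft()
        # Skip metrics already visited (guards against cycles).
        if current == metric or current in found:
            continue
        found.append(current)
        queue.extend(drivers.get(current, []))
    return found


# Hypothetical metric links for illustration only.
drivers = {
    "late_regulatory_reports": ["delayed_complaint_submissions"],
    "delayed_complaint_submissions": ["regional_intake_backlog"],
}
related = upstream_drivers("late_regulatory_reports", drivers)
```

When an alarming trend appears in one metric, a lookup like this points reviewers to the upstream metrics worth checking first.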


3. Deviation or nonconformance investigations

Deviation or nonconformance management is a critical topic for companies today because unaddressed issues can lead to product recalls and reputational damage. More often, deviations or nonconformances can affect a company’s product-release process, capacity, and lead times. As many quality teams can attest, the most challenging and time-consuming part of a deviation or nonconformance investigation is often the root cause analysis. In the best of circumstances, investigators use a tracking and trending system to identify similar occurrences. However, more often than not, these systems lack good classification of root causes and similarities. The systems search can become another hurdle for quality teams, resulting in longer lead times and ineffective root cause assessment. Not meeting the standards defined by regulators regarding deviation or nonconformance categorization and root cause analysis is one of the main causes of warning letters or consent decrees.

The smart quality approach improves effectiveness and reduces lead times

Our research shows companies that use smart quality principles to revamp the investigation process may reap these benefits:

- all pertinent information related to processes and equipment is easily accessible in a continuously updated data lake

- self-learning algorithms predict the most likely root cause of new deviations, thereby automating the review of process data and statements

In our experience, advanced analytics is the linchpin of transforming the investigation process. The most successful companies start by building a real-time data model from local and global systems that continuously refreshes and improves the model over time. Natural language processing can generate additional classifications of deviations or nonconformances to improve the quality and accuracy of insights. Digitization ensures investigators can easily access graphical interfaces that are linked to all data sources. With these tools in place, companies can readily identify the most probable root cause for deviation or nonconformance and provide a fact base for the decision. Automation also frees quality assurance professionals to focus on corrective and preventive action (Exhibit 3).

Pharmaceutical and medtech companies that apply these innovative technologies and smart quality principles can see significant results. Our work with several companies shows that identifying, explaining, and eliminating the root causes of recurring deviations and nonconformances can reduce the overall volume of issues by 65 percent. Companies that use the data and models to determine which unexpected factors in processes and products influence the end quality are able to control for them, thereby achieving product and process mastery. What’s more, by predicting the most likely root causes and their underlying drivers, these companies can reduce the investigation cycle time for deviations and nonconformances by 90 percent.

4. Supplier quality risk management

Drug and medical device supply chains have become increasingly global, complex, and opaque as more pharmaceutical and medtech companies outsource major parts of production to suppliers and contract manufacturing organizations (CMOs). More recently, the introduction of new, complex modalities, such as cell therapy and gene editing, has further increased pressure to ensure the quality of supplier products. Against this backdrop, it is critical to have a robust supplier quality program that can proactively identify and mitigate supplier risks or vulnerabilities before they become material issues.

Today, many companies conduct supplier risk management manually and at one specific point in time, such as at the beginning of a contract or annually. Typically, risk assessments are done in silos across the organization; every function completes individual reports and rarely looks at supplier risk as a whole. Because the results are often rolled up and individual risk signals can become diluted, companies focus more on increasing controls than addressing underlying challenges.

The smart quality approach reduces quality issues and optimizes resources

Companies that break down silos and apply a more holistic risk lens across the organization have a better chance of proactively identifying supplier quality risks. With smart quality assurance, companies can do the following:

- identify vulnerabilities by utilizing advanced analytics on a holistic set of internal and external supplier and product data

- ensure real-time updates and reviews to signal improvements in supplier quality and any changes that may pose an additional risk

- optimize resource allocation and urgency of action, based on the importance and risk level of the supplier or CMO

Current technologies make it simpler than ever to automatically collect meaningful data. They also make it possible to analyze the data, identify risk signals, and present information in an actionable format. Internal and supplier data can include financials, productivity, and compliance metrics. Such information can be further enhanced by publicly available external sources—such as regulatory reporting, financial statements, and press releases—that provide additional insights into supplier quality risks. For example, using natural language processing to search the web for negative press releases is a simple yet powerful method to identify risks.
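As a rough sketch of how such signals might be combined, the Python below computes a weighted risk score per supplier and maps it, together with supplier importance, to a review priority. The signal names, weights, and thresholds are all assumptions made for illustration; they are not taken from any company's program.

```python
def supplier_risk_score(signals, weights):
    """Weighted average of risk signals, each normalized to the range 0..1.

    signals: signal name -> normalized value (missing signals count as 0)
    weights: signal name -> relative weight (hypothetical values)
    """
    total_weight = sum(weights.values())
    weighted = sum(w * signals.get(name, 0.0) for name, w in weights.items())
    return weighted / total_weight


def review_priority(score, is_critical_supplier):
    """Map a risk score and supplier importance to an urgency tier.

    The 0.3 and 0.6 thresholds are placeholders, not recommended values.
    """
    if score >= 0.6 or (is_critical_supplier and score >= 0.3):
        return "immediate"
    if score >= 0.3:
        return "scheduled"
    return "routine"


# Hypothetical weights and signals for one supplier.
weights = {"audit_findings": 5, "late_deliveries": 3, "negative_press": 2}
signals = {"audit_findings": 1.0, "late_deliveries": 0.0, "negative_press": 0.5}
score = supplier_risk_score(signals, weights)
priority = review_priority(score, is_critical_supplier=False)
```

Recomputing the score whenever a signal changes is one simple way to approximate the continuous updates described above, rather than assessing suppliers only at a single point in time.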


Once a company has identified quality risks, it must establish a robust process for managing these risks. Mitigation actions can include additional monitoring with digital tools, supporting the supplier to address the sources of issues, or deciding to switch to a different supplier. In our experience, companies that have a deep understanding of the level of quality risk, as well as the financial exposure, have an easier time identifying the appropriate mitigation action. Companies that identify risks and proactively mitigate them are less likely to experience potentially large supply disruptions or compliance findings.

Many pharmaceutical and medtech companies have taken steps to improve visibility into supplier quality risks by using smart quality principles. For example, a large pharmaceutical company that implemented this data-driven approach eliminated in less than two years major CMO and supplier findings that were identified during audits. In addition, during the COVID-19 pandemic, a global medtech company was able to proactively prevent supply chain disruptions by drawing on insights derived from smart quality supplier risk management.

Getting started

Pharmaceutical and medtech companies can approach quality assurance redesign in multiple ways. In our experience, starting with two or three processes, codifying the approach, and then rolling it out to more quality systems accelerates the overall transformation and time to value.

Smart quality assurance starts with clean-sheet design. By deploying modern design techniques, organizations can better understand user needs and overcome constraints. To define the solution space, we encourage companies to draw upon a range of potential process, IT, and analytics solutions from numerous industries. In cases where the new process is substantially different from the legacy process, we find it beneficial to engage regulators in an open dialogue and solicit their early feedback to support the future-state design.

Once we arrive at an MVP that includes digital and automation elements, companies can test and refine new solutions in targeted pilots. Throughout the process, we encourage companies to remain mindful of training and transition planning. Plans should include details on ensuring uninterrupted operations and maintaining compliance during the transition period.

The examples in this article are not exceptions. We believe that any quality assurance process can be significantly improved by applying a smart quality approach and the latest technologies. Pharmaceutical and medtech companies that are willing to make the organizational commitment to rethink quality assurance can significantly reduce quality risks, improve their speed and effectiveness in handling issues, and see long-term financial benefits.

Note: The insights and concepts presented here have not been validated or independently verified, and future results may differ materially from any statements of expectation, forecasts, or projections. Recipients are solely responsible for all of their decisions, use of these materials, and compliance with applicable laws, rules, and regulations. Consider seeking advice of legal and other relevant certified/licensed experts prior to taking any specific steps.


- 11 Apr 2023

- Cold Call Podcast

A Rose by Any Other Name: Supply Chains and Carbon Emissions in the Flower Industry

Headquartered in Kitengela, Kenya, Sian Flowers exports roses to Europe. Because cut flowers have a limited shelf life and consumers want them to retain their appearance for as long as possible, Sian and its distributors used international air cargo to transport them to Amsterdam, where they were sold at auction and trucked to markets across Europe. But when the Covid-19 pandemic caused huge increases in shipping costs, Sian launched experiments to ship roses by ocean using refrigerated containers. The company reduced its costs and cut its carbon emissions, but is a flower that travels halfway around the world truly a “low-carbon rose”? Harvard Business School professors Willy Shih and Mike Toffel debate these questions and more in their case, “Sian Flowers: Fresher by Sea?”

- 17 Sep 2019

How a New Leader Broke Through a Culture of Accuse, Blame, and Criticize

Children’s Hospital & Clinics COO Julie Morath sets out to change the culture by instituting a policy of blameless reporting, which encourages employees to report anything that goes wrong or seems substandard, without fear of reprisal. Professor Amy Edmondson discusses getting an organization into the “High Performance Zone.”

- 27 Feb 2019

- Research & Ideas

The Hidden Cost of a Product Recall

Product failures create managerial challenges for companies but market opportunities for competitors, says Ariel Dora Stern. The stakes have only grown higher.

- 31 Mar 2018

- Working Paper Summaries

Expected Stock Returns Worldwide: A Log-Linear Present-Value Approach

Over the last 20 years, shortcomings of classical asset-pricing models have motivated research in developing alternative methods for measuring ex ante expected stock returns. This study evaluates the main paradigms for deriving firm-level expected return proxies (ERPs) and proposes a new framework for estimating them.

- 26 Apr 2017

Assessing the Quality of Quality Assessment: The Role of Scheduling

Accurate inspections enable companies to assess the quality, safety, and environmental practices of their business partners, and enable regulators to protect consumers, workers, and the environment. This study finds that inspectors are less stringent later in their workday and after visiting workplaces with fewer problems. Managers and regulators can improve inspection accuracy by mitigating these biases and their consequences.

- 23 Sep 2013

Status: When and Why It Matters

Status plays a key role in everything from the things we buy to the partnerships we make. Professor Daniel Malter explores when status matters most.

- 16 May 2011

What Loyalty? High-End Customers are First to Flee

Companies offering top-drawer customer service might have a nasty surprise awaiting them when a new competitor comes to town. Their best customers might be the first to defect. Research by Harvard Business School's Ryan W. Buell, Dennis Campbell, and Frances X. Frei. Key concepts include: Companies that offer high levels of customer service can't expect too much loyalty if a new competitor offers even better service. High-end businesses must avoid complacency and continue to proactively increase relative service levels when they're faced with even the potential threat of increased service competition. Even though high-end customers can be fickle, a company that sustains a superior service position in its local market can attract and retain customers who are more valuable over time. Firms rated lower in service quality are more or less immune from the high-end challenger.

- 08 Dec 2008

Thinking Twice About Supply-Chain Layoffs

Cutting the wrong employees can be counterproductive for retailers, according to research from Zeynep Ton. One suggestion: Pay special attention to staff who handle mundane tasks such as stocking and labeling. Your customers do.

- 01 Dec 2006

- What Do You Think?

How Important Is Quality of Labor? And How Is It Achieved?

A new book by Gregory Clark identifies "labor quality" as the major enticement for capital flows that lead to economic prosperity. By defining labor quality in terms of discipline and attitudes toward work, this argument minimizes the long-term threat of outsourcing to developed economies. By understanding labor quality, can we better confront anxieties about outsourcing and immigration?

- 20 Sep 2004

How Consumers Value Global Brands

What do consumers expect of global brands? Does it hurt to be an American brand? This Harvard Business Review excerpt co-written by HBS professor John A. Quelch identifies the three characteristics consumers look for to make purchase decisions.

Total Quality Management from Theory to Practice: A Case Study

International Journal of Quality & Reliability Management

ISSN : 0265-671X

Article publication date: 1 May 1993

Most quality professionals recommend a core set of attributes as the nucleus of any quality improvement process. These attributes include: (1) clarifying job expectations; (2) setting quality standards; (3) measuring quality improvement; (4) effective supervision; (5) listening by management; (6) feedback by management; and (7) effective training. Based on a survey of employees at a medium-sized manufacturing firm in the United States, it was found that management philosophy and actions can undermine even a proven total quality management (TQM) programme. For the many firms which hire outside consultants to set up a TQM programme, the article makes recommendations to management to ensure its successful implementation.

- MANAGEMENT PHILOSOPHY

- QUALITY ASSURANCE

- QUALITY MANAGEMENT

Longenecker, C.O. and Scazzero, J.A. (1993), "Total Quality Management from Theory to Practice: A Case Study", International Journal of Quality & Reliability Management, Vol. 10 No. 5. https://doi.org/10.1108/02656719310040114

Copyright © 1993, MCB UP Limited


Organizational approach to Total Quality Management: a case study

Related Papers

Afizan Amer

Management Science Letters

Yuni Pambreni

Assoc. Prof. Cross Ogohi Daniel

This study examines the impact of Total Quality Management (TQM) as an instrument for improving organisational performance. TQM is defined as a policy aimed at establishing and delivering high-quality products and services that meet all of a client's demands and achieve a high level of customer satisfaction. It is a management approach for firms focused on quality, based on the participation of all members, and it aims at long-term success through customer satisfaction and benefits to all members of the organisation and to society. Many organisations fail to implement TQM properly at all management levels, and difficulty organising frequent employee training has been a major problem. This research attempts to determine the effect of management involvement in TQM implementation, the challenges that hinder implementation, and the impact of employee training and the application of TQM standards on the achievement of organisational goals. The key findings indicate that the organisations practise TQM but have yet to implement it at the highest level, that of subscribing to a quality reward system; implementation is at the quality assurance level. Management inaction was found to undermine leadership commitment to quality and to render TQM practice ineffective. Data were collected from the organisations through questionnaires and oral interviews, with reference to journals, related books, and internet sources. The organisations agreed that TQM has an effect on organisational performance.

Proceedings of International Conference on Business Management

Dr. S.T.W.S. Yapa

Present-day customers are very conscious of the quality of products and services. They are ready to pay a higher price for a quality product or service. A company that meets such demands gains a competitive advantage in the market over its competitors. One of the best approaches to address this challenge is the implementation of Total Quality Management (TQM). TQM, a systematic management approach and a journey to meet competitive and technological challenges, has been accepted by both service and manufacturing organizations globally. It is commonly agreed that by adopting TQM, the overall effectiveness and performance of organizations can be improved. Although TQM offers numerous benefits, it is not easy to implement. Implementation is generally experienced as hard and painful because of certain barriers that inhibit it. Understanding the factors that are likely to obstruct TQM implementation enables managers to develop more ef...

International Public Management Journal

Teddy Lian Kok Fei , Hal Rainey

This research highlights the factors that have contributed to the implementation and impact of Total Quality Management (TQM) in Malaysian government agencies and compares agencies that have won quality awards with those that have not.

Quality and Quantity

Ahamad Bahari

Maged Awwad

In the current market economy, companies constantly struggle to achieve a sustained competitive advantage that will enable them to improve performance, which results in increased competitiveness and, of course, profit. Among the few competitive advantages that can become sustainable, quality plays a crucial role. Recent research shows that about 90% of buyers in the international market consider quality to be at least as important as price in making a purchase decision. In the opinion of some specialists in economic theory and practice, total quality refers to a holistic approach to quality, which means addressing all economic, social, and technical aspects of quality. The holistic, organisation-wide approach to quality involves a procedural approach to quality; this study focuses on the procedural approach, taking into account the strategic aspects of continuous quality improvement, which in fact means quality management. Total Quality Management is seen as a way to transform the economies of some countries to be more competitive than others. However, Total Quality Management does not and will not produce results overnight; it is not a panacea for all the problems facing the organization. It requires a change in organizational culture, which must focus on meeting customer expectations and increasing the involvement of all employees in meeting this objective, as an expression of the ethics of continuous improvement. In general, research on quality aims to identify why an organization should adopt the principles of total quality management, while attempts to identify why companies fail to implement those principles are less visible.
Companies' concerns about introducing quality management systems are becoming more pronounced. In this study, we therefore try to identify and present the main reasons that prevent achieving quality and implementing a total quality management system; in other words, we are interested in identifying barriers to the implementation and development of a quality management system.

Aliza Ramli

Haile Shitahun Mengistie

The main purpose of this paper was to investigate the effect of Total Quality Management practices on organizational performance in the case of Bahir Dar Textile SC. It adopted an explanatory research design. A sample of 71 respondents was drawn using a stratified random sampling technique. The correlation analysis showed that all constructs of total quality management (customer focus, employee empowerment, top management commitment, continuous improvement, supplier quality management, process approach) were positively and significantly related to organizational performance. The multiple regression analysis showed that the elements of total quality management practice account for 49.4% of the observed variation in organizational performance (adjusted R² = .494). The study also reveals that, of the six major elements of total quality management practice, customer focus, top management commitment, continuous improvement, employee empowerment, and supplier quality management have a positive effect on organizational performance, while process approach does not have a significant effect.
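
As a reading aid for the adjusted R² figure quoted above, the following minimal sketch applies the standard adjustment formula, adjusted R² = 1 − (1 − R²)(n − 1)/(n − p − 1). It assumes that all 71 respondents and all six constructs entered the regression, which the abstract does not state explicitly; the function names are illustrative only, and the resulting unadjusted R² is an implied value, not one reported by the study.

```python
def adjusted_r2(r2: float, n: int, p: int) -> float:
    """Adjusted R^2 for a linear model with n observations and p predictors."""
    if n - p - 1 <= 0:
        raise ValueError("need more observations than predictors plus one")
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


def r2_from_adjusted(adj_r2: float, n: int, p: int) -> float:
    """Invert the adjustment to recover the unadjusted R^2."""
    if n - p - 1 <= 0:
        raise ValueError("need more observations than predictors plus one")
    return 1.0 - (1.0 - adj_r2) * (n - p - 1) / (n - 1)


# Assumption (not stated in the abstract): n = 71 respondents, p = 6 predictors.
implied_r2 = r2_from_adjusted(0.494, n=71, p=6)
print(f"implied unadjusted R^2: {implied_r2:.3f}")
```

Because the adjustment penalizes every extra predictor, the unadjusted value is always at least as large as the adjusted one; the gap widens as the number of predictors approaches the sample size.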

Dr. Faisal Talib

Machine Learning and image analysis towards improved energy management in Industry 4.0: a practical case study on quality control

- Original Article

- Open access

- Published: 13 May 2024

- Volume 17, article number 48 (2024)

- Mattia Casini 1

- Paolo De Angelis 1

- Marco Porrati 2

- Paolo Vigo 1

- Matteo Fasano 1

- Eliodoro Chiavazzo 1

- Luca Bergamasco 1 (ORCID: orcid.org/0000-0001-6130-9544)

With the advent of Industry 4.0, Artificial Intelligence (AI) has created a favorable environment for the digitalization of manufacturing and processing, helping industries to automate and optimize operations. In this work, we focus on a practical case study of a brake caliper quality control operation, which is usually accomplished by human inspection and requires a dedicated handling system, resulting in a slow production rate and thus inefficient energy usage. We report on a Machine Learning (ML) methodology, based on Deep Convolutional Neural Networks (D-CNNs), that automatically extracts information from images in order to automate the process. A complete workflow has been developed on the target industrial test case. To find the best compromise between accuracy and computational demand, several D-CNN architectures have been tested. The results show that a judicious choice of the ML model, with proper training, allows fast and accurate quality control; thus, the proposed workflow could be implemented for an ML-powered version of the considered problem. This would eventually enable better management of the available resources, in terms of time consumption and energy usage.

Introduction

An efficient use of energy resources in industry is key for a sustainable future (Bilgen, 2014; Ocampo-Martinez et al., 2019). The advent of Industry 4.0 and of Artificial Intelligence has created a favorable context for the digitalisation of manufacturing processes. In this view, Machine Learning (ML) techniques have the potential to help industries make better and smarter use of the available data, and thus to automate and improve operations (Narciso & Martins, 2020; Mazzei & Ramjattan, 2022). For example, ML tools can be used to analyze sensor data from industrial equipment for predictive maintenance (Carvalho et al., 2019; Dalzochio et al., 2020), which allows potential failures to be identified in advance and thus maintenance operations to be better planned, with reduced downtime. Similarly, energy consumption optimization (Shen et al., 2020; Qin et al., 2020) can be achieved via ML-enabled analysis of available consumption data, with consequent adjustments of the operating parameters, schedules, or configurations to minimize energy consumption while maintaining optimal production efficiency. Energy consumption forecasting (Liu et al., 2019; Zhang et al., 2018) can also be improved, especially in industrial plants relying on renewable energy sources (Bologna et al., 2020; Ismail et al., 2021), by analyzing historical data on weather patterns and forecasts in order to optimize the usage of energy resources, avoid energy peaks, and leverage alternative energy sources or storage systems (Li & Zheng, 2016; Ribezzo et al., 2022; Fasano et al., 2019; Trezza et al., 2022; Mishra et al., 2023). Finally, ML tools can also serve for fault or anomaly detection (Angelopoulos et al., 2019; Md et al., 2022), which allows prompt corrective actions to optimize energy usage and prevent energy inefficiencies.
Within this context, ML techniques for image analysis (Casini et al., 2024) are also gaining increasing interest (Chen et al., 2023), for their application to, e.g., materials design and optimization (Choudhury, 2021), quality control (Badmos et al., 2020), process monitoring (Ho et al., 2021), or detection of machine failures by converting time series data from sensors to 2D images (Wen et al., 2017).
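
To make the last idea concrete, the sketch below shows one simple way to turn a one-dimensional sensor signal into a square grayscale image that a CNN could consume: a window of side × side samples is min-max scaled to the 0–255 range and laid out row by row. This is an illustrative assumption, not necessarily the exact procedure of the cited work; the function name `series_to_image` and the synthetic sine signal are hypothetical.

```python
import math
from typing import List, Sequence


def series_to_image(series: Sequence[float], side: int) -> List[List[int]]:
    """Map the first side*side samples of a signal to a side x side image.

    Values are min-max scaled to integer gray levels in [0, 255] and
    written row by row, so neighbouring samples become neighbouring pixels.
    """
    needed = side * side
    if len(series) < needed:
        raise ValueError(f"need at least {needed} samples, got {len(series)}")
    window = list(series[:needed])
    lo, hi = min(window), max(window)
    span = hi - lo
    if span == 0:
        pixels = [0] * needed  # constant signal: flat image
    else:
        pixels = [round((v - lo) / span * 255) for v in window]
    return [pixels[r * side:(r + 1) * side] for r in range(side)]


# Synthetic stand-in for a vibration or current sensor trace.
signal = [math.sin(0.1 * i) for i in range(64)]
image = series_to_image(signal, side=8)
for row in image:
    print(row)
```

Longer recordings would typically be cut into many such windows, each yielding one training image.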

Incorporating digitalisation and ML techniques into Industry 4.0 has led to significant energy savings (Maggiore et al., 2021; Nota et al., 2020). Projects adopting these technologies can achieve an average improvement of 15% to 25% in the energy efficiency of the processes where they are implemented (Arana-Landín et al., 2023). For instance, in predictive maintenance, ML can reduce energy consumption by optimizing the operation of machinery (Agrawal et al., 2023; Pan et al., 2024). In process optimization, ML algorithms can improve energy efficiency by 10% to 20% by analyzing and adjusting machine operations for optimal performance, thereby reducing unnecessary energy usage (Leong et al., 2020). Furthermore, the implementation of ML algorithms for optimal control can lead to energy savings of 30%, because these systems can make real-time adjustments to production lines, ensuring that machines operate at peak energy efficiency (Rahul & Chiddarwar, 2023).

In automotive manufacturing, ML-driven quality control can lead to energy savings by reducing the need to rework parts or run inefficient production cycles (Vater et al., 2019). In high-volume production environments such as consumer electronics, novel computer vision models that automatically detect damaged packages and distinguish them from intact ones can speed up operations and reduce waste (Shahin et al., 2023). In heavy industries like steel or chemical manufacturing, ML can optimize the energy consumption of large machinery: by predicting the optimal operating conditions and maintenance schedules, these systems can reduce energy costs (Mypati et al., 2023). Compressed air generation is one of the most energy-intensive processes in manufacturing. ML can optimize the performance of compressed air systems, potentially leading to energy savings by continuously monitoring and adjusting the air compressors for peak efficiency, avoiding energy losses due to leaks or inefficient operation (Benedetti et al., 2019). ML can also contribute to reducing energy consumption and minimizing incorrectly produced parts in polymer processing enterprises (Willenbacher et al., 2021).

Here we focus on a practical industrial case study of brake caliper processing. In detail, we focus on the quality control operation, which is typically accomplished by human visual inspection and requires a dedicated handling system. This implies a slower production rate and inefficient energy usage. We thus propose the integration of an ML-based system that performs the quality control operation automatically, without the need for a dedicated handling system and thus with reduced operation time. To this end, we rely on ML tools able to analyze and extract information from images, that is, deep convolutional neural networks, D-CNNs (Alzubaidi et al., 2021; Chai et al., 2021).

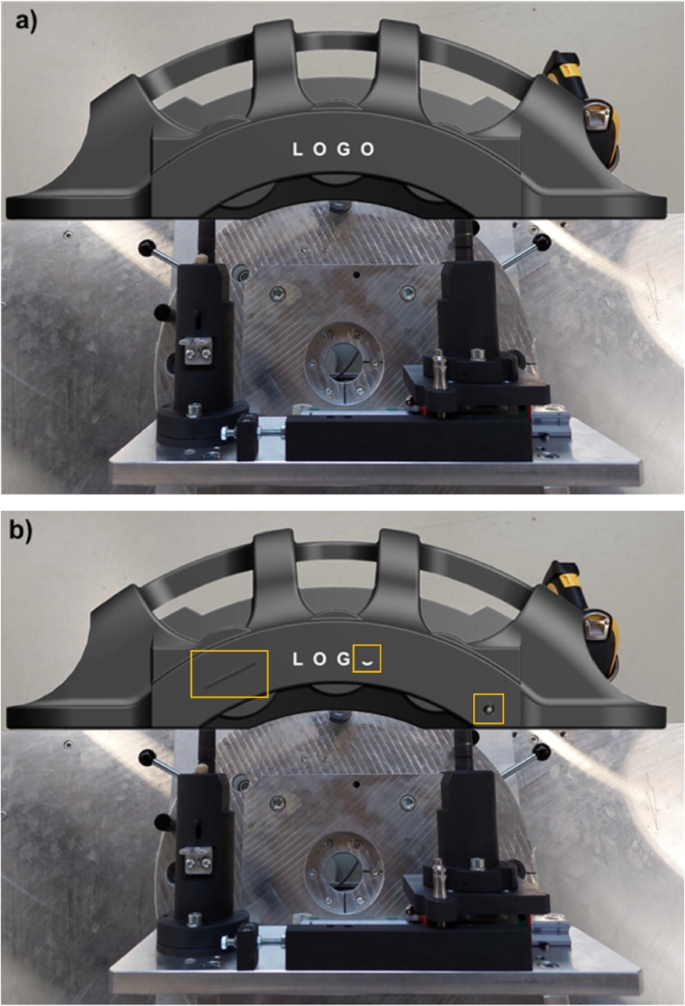

Sample 3D model (GrabCAD) of the considered brake caliper: (a) part without defects, and (b) part with three sample defects, namely a scratch, a partially missing letter in the logo, and a circular painting defect (shown by the yellow squares, from left to right respectively)

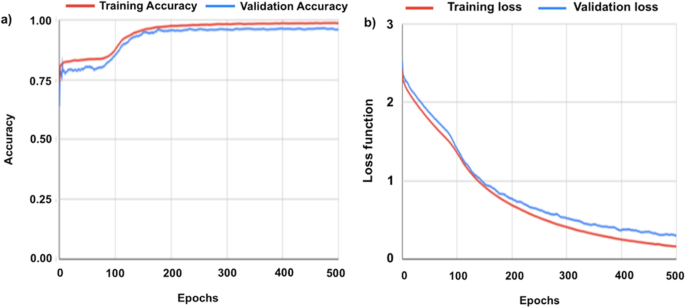

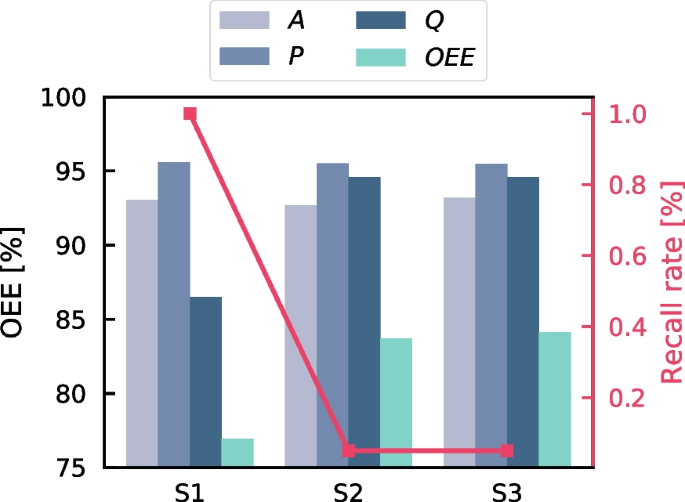

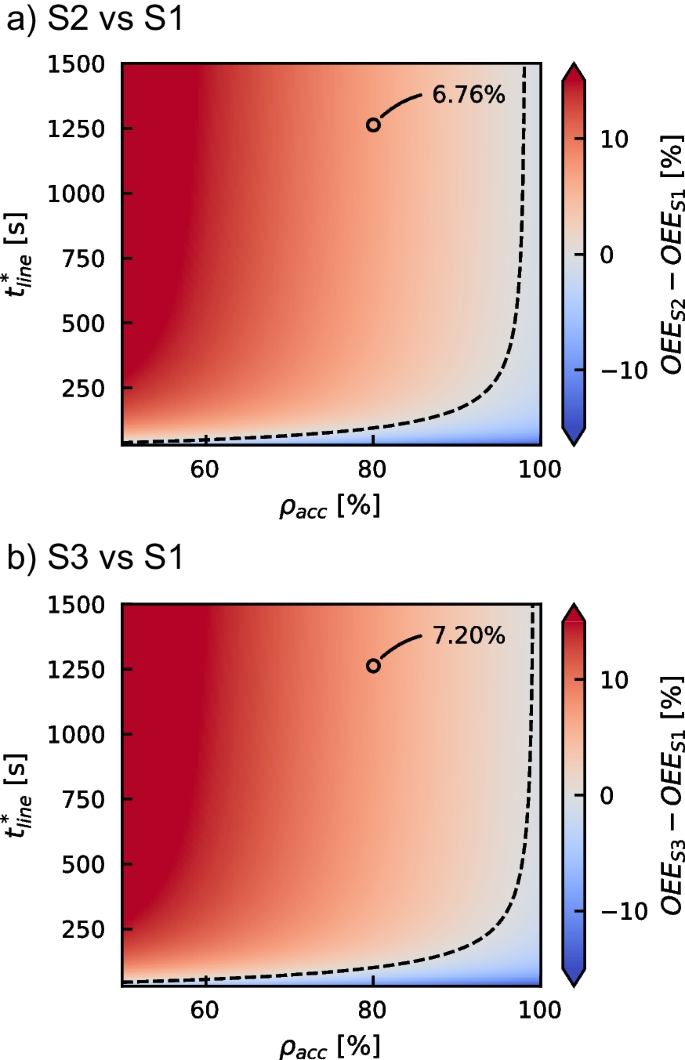

A complete workflow for this purpose has been developed and tested on a real industrial test case. It includes a dedicated pre-processing of the brake caliper images, their labelling and analysis using two dedicated D-CNN architectures (one for background removal, and one for defect identification), and the post-processing and analysis of the neural network output. Several different D-CNN architectures have been tested in order to find the best model in terms of accuracy and computational demand. The results show that a judicious choice of the ML model, with proper training, yields fast and accurate recognition of possible defects. Indeed, the best-performing models reach over 98% accuracy on the target criteria for quality control, and take only a few seconds to analyze each image. These results make the proposed workflow compliant with typical industrial expectations; therefore, in perspective, it could be implemented for an ML-powered version of the considered industrial problem. This would eventually allow better performance of the manufacturing process and, ultimately, better management of the available resources in terms of time consumption and energy expense.

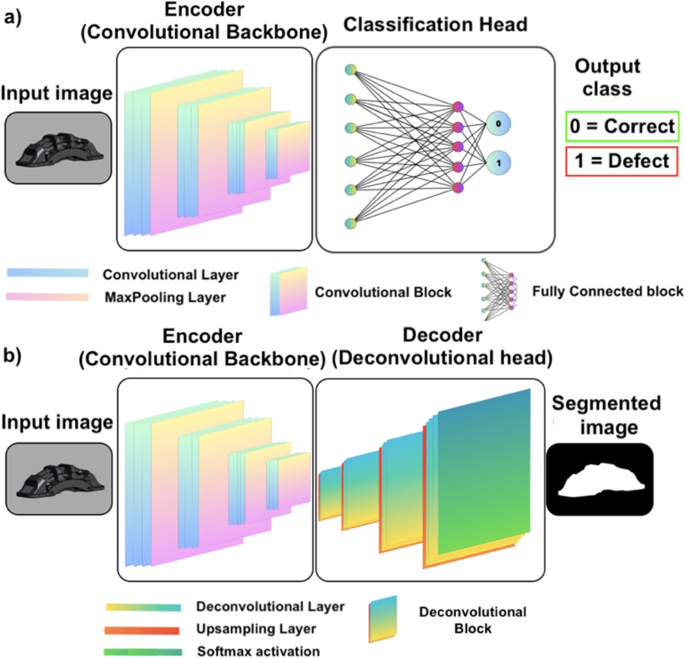

Different neural network architectures: convolutional encoder (a) and encoder-decoder (b)

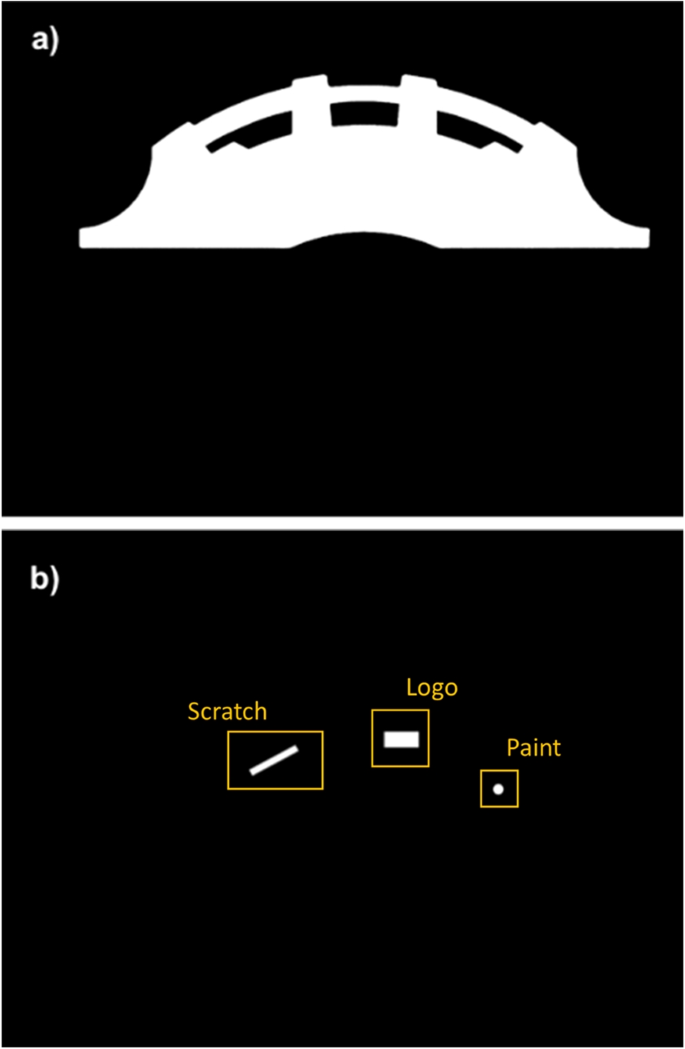

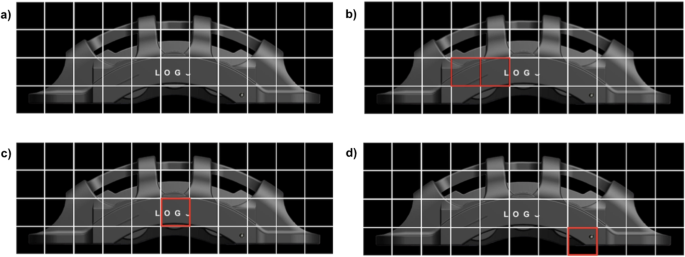

The industrial quality control process that we target is the visual inspection of manufactured components, to verify the absence of possible defects. For reasons of industrial confidentiality, a representative open-source 3D geometry (GrabCAD) of the considered parts, similar to the original one, is shown in Fig. 1. For illustrative purposes, the clean geometry without defects (Fig. 1(a)) is compared to the geometry with three possible sample defects, namely: a scratch on the surface of the brake caliper, a partially missing letter in the logo, and a circular painting defect (highlighted by the yellow squares, from left to right respectively, in Fig. 1(b)). Note that one or multiple defects may be present on the geometry, and that other types of defects may also be considered.

Within the industrial production line, this quality control is typically time-consuming, and requires a dedicated handling system with the associated slow production rate and energy inefficiencies. Thus, we developed a methodology to achieve an ML-powered version of the control process. The method relies on data analysis and, in particular, on information extraction from images of the brake calipers via Deep Convolutional Neural Networks, D-CNNs (Alzubaidi et al., 2021). The designed workflow for defect recognition is implemented in the following two steps: 1) removal of the background from the image of the caliper, in order to reduce noise and irrelevant features in the image, ultimately rendering the algorithms more flexible with respect to the background environment; 2) analysis of the geometry of the caliper to identify the different possible defects. These two serial steps are accomplished via two different and dedicated neural networks, whose architecture is discussed in the next section.
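
The serial structure of the two steps above can be sketched as follows. This is only a schematic illustration: the two trained networks are replaced by hypothetical placeholder callables (`toy_segment`, `toy_classify`) based on simple thresholds, the defect labels mirror the three sample defects of Fig. 1, and the 0.5 decision threshold is an assumption rather than a value taken from this work.

```python
from typing import Callable, Dict, List

Image = List[List[float]]  # grayscale image, pixel intensities in [0, 1]
Mask = List[List[bool]]    # True where the pixel belongs to the caliper


def apply_mask(image: Image, mask: Mask) -> Image:
    """Zero out every pixel that the mask marks as background."""
    return [[px if keep else 0.0 for px, keep in zip(row, mask_row)]
            for row, mask_row in zip(image, mask)]


def inspect(image: Image,
            segment: Callable[[Image], Mask],
            classify: Callable[[Image], Dict[str, float]],
            threshold: float = 0.5) -> dict:
    """Run the two serial steps: background removal, then defect analysis."""
    foreground = apply_mask(image, segment(image))   # step 1
    scores = classify(foreground)                    # step 2
    defects = [name for name, s in scores.items() if s >= threshold]
    return {"foreground": foreground, "defects": defects,
            "accepted": not defects}


# Placeholder "models" (assumptions): real use would wrap two trained D-CNNs.
def toy_segment(image: Image) -> Mask:
    return [[px > 0.2 for px in row] for row in image]


def toy_classify(image: Image) -> Dict[str, float]:
    bright = sum(px > 0.9 for row in image for px in row)
    return {"scratch": 1.0 if bright else 0.0,
            "missing_letter": 0.0,
            "paint_defect": 0.0}


result = inspect([[0.1, 0.5], [0.95, 0.1]], toy_segment, toy_classify)
print(result["defects"], result["accepted"])
```

Keeping the two networks behind separate callables means either one can be retrained or swapped for a different architecture without touching the other, which matches the serial, two-network design described in the text.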