Have a language expert improve your writing

Run a free plagiarism check in 10 minutes, generate accurate citations for free.

- Knowledge Base

Methodology

- Random Assignment in Experiments | Introduction & Examples

Random Assignment in Experiments | Introduction & Examples

Published on March 8, 2021 by Pritha Bhandari . Revised on June 22, 2023.

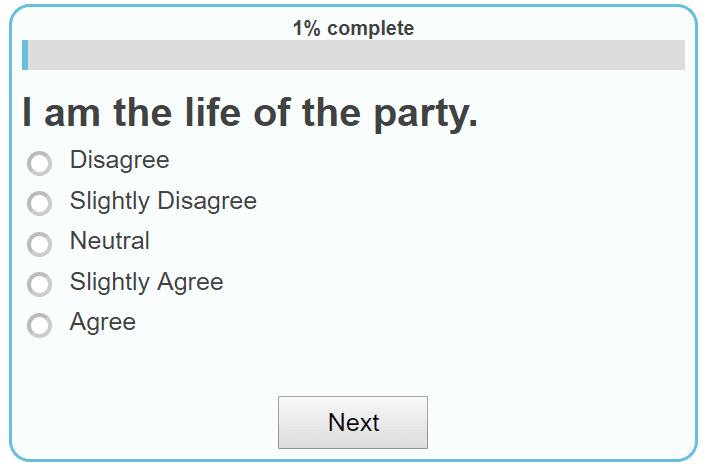

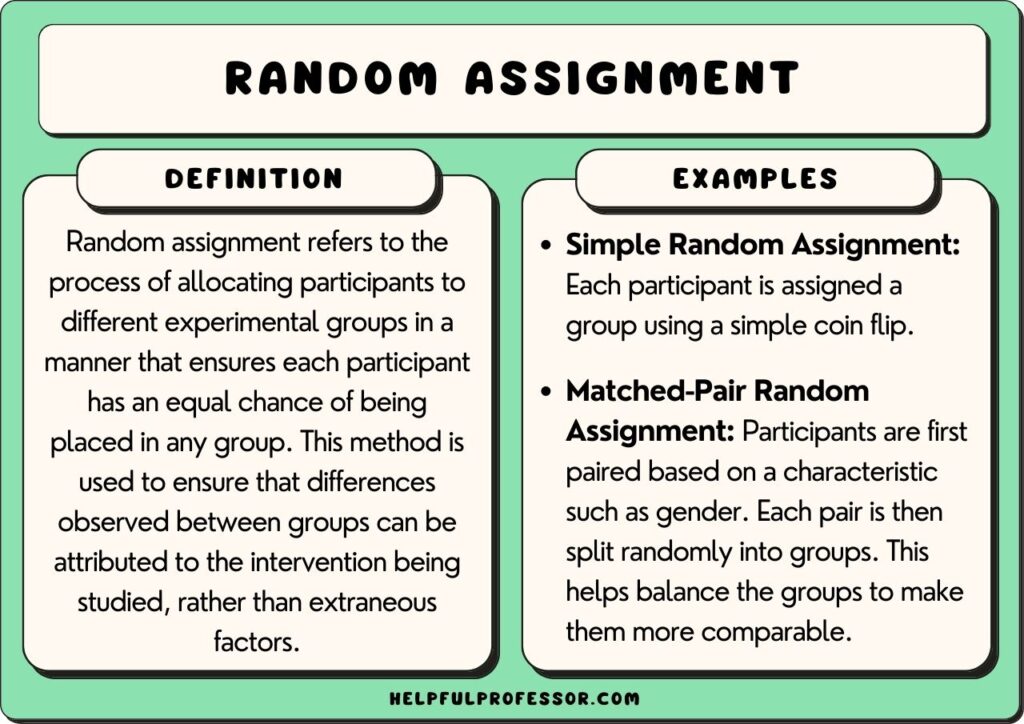

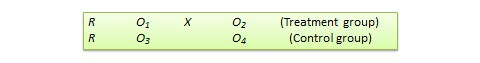

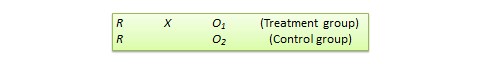

In experimental research, random assignment is a way of placing participants from your sample into different treatment groups using randomization.

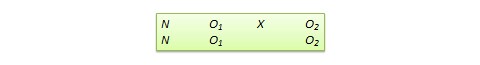

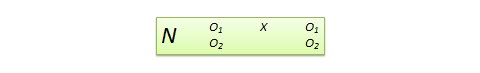

With simple random assignment, every member of the sample has a known or equal chance of being placed in a control group or an experimental group. Studies that use simple random assignment are also called completely randomized designs .

Random assignment is a key part of experimental design . It helps you ensure that all groups are comparable at the start of a study: any differences between them are due to random factors, not research biases like sampling bias or selection bias .

Table of contents

Why does random assignment matter, random sampling vs random assignment, how do you use random assignment, when is random assignment not used, other interesting articles, frequently asked questions about random assignment.

Random assignment is an important part of control in experimental research, because it helps strengthen the internal validity of an experiment and avoid biases.

In experiments, researchers manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. To do so, they often use different levels of an independent variable for different groups of participants.

This is called a between-groups or independent measures design.

You use three groups of participants that are each given a different level of the independent variable:

- a control group that’s given a placebo (no dosage, to control for a placebo effect ),

- an experimental group that’s given a low dosage,

- a second experimental group that’s given a high dosage.

Random assignment to helps you make sure that the treatment groups don’t differ in systematic ways at the start of the experiment, as this can seriously affect (and even invalidate) your work.

If you don’t use random assignment, you may not be able to rule out alternative explanations for your results.

- participants recruited from cafes are placed in the control group ,

- participants recruited from local community centers are placed in the low dosage experimental group,

- participants recruited from gyms are placed in the high dosage group.

With this type of assignment, it’s hard to tell whether the participant characteristics are the same across all groups at the start of the study. Gym-users may tend to engage in more healthy behaviors than people who frequent cafes or community centers, and this would introduce a healthy user bias in your study.

Although random assignment helps even out baseline differences between groups, it doesn’t always make them completely equivalent. There may still be extraneous variables that differ between groups, and there will always be some group differences that arise from chance.

Most of the time, the random variation between groups is low, and, therefore, it’s acceptable for further analysis. This is especially true when you have a large sample. In general, you should always use random assignment in experiments when it is ethically possible and makes sense for your study topic.

Here's why students love Scribbr's proofreading services

Discover proofreading & editing

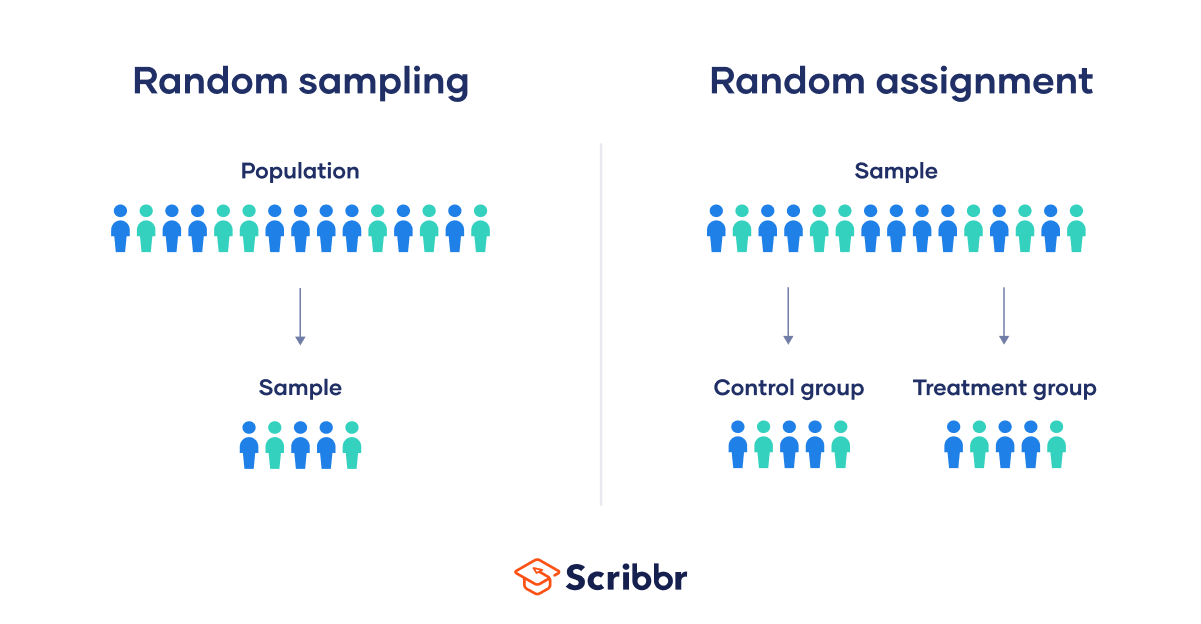

Random sampling and random assignment are both important concepts in research, but it’s important to understand the difference between them.

Random sampling (also called probability sampling or random selection) is a way of selecting members of a population to be included in your study. In contrast, random assignment is a way of sorting the sample participants into control and experimental groups.

While random sampling is used in many types of studies, random assignment is only used in between-subjects experimental designs.

Some studies use both random sampling and random assignment, while others use only one or the other.

Random sampling enhances the external validity or generalizability of your results, because it helps ensure that your sample is unbiased and representative of the whole population. This allows you to make stronger statistical inferences .

You use a simple random sample to collect data. Because you have access to the whole population (all employees), you can assign all 8000 employees a number and use a random number generator to select 300 employees. These 300 employees are your full sample.

Random assignment enhances the internal validity of the study, because it ensures that there are no systematic differences between the participants in each group. This helps you conclude that the outcomes can be attributed to the independent variable .

- a control group that receives no intervention.

- an experimental group that has a remote team-building intervention every week for a month.

You use random assignment to place participants into the control or experimental group. To do so, you take your list of participants and assign each participant a number. Again, you use a random number generator to place each participant in one of the two groups.

To use simple random assignment, you start by giving every member of the sample a unique number. Then, you can use computer programs or manual methods to randomly assign each participant to a group.

- Random number generator: Use a computer program to generate random numbers from the list for each group.

- Lottery method: Place all numbers individually in a hat or a bucket, and draw numbers at random for each group.

- Flip a coin: When you only have two groups, for each number on the list, flip a coin to decide if they’ll be in the control or the experimental group.

- Use a dice: When you have three groups, for each number on the list, roll a dice to decide which of the groups they will be in. For example, assume that rolling 1 or 2 lands them in a control group; 3 or 4 in an experimental group; and 5 or 6 in a second control or experimental group.

This type of random assignment is the most powerful method of placing participants in conditions, because each individual has an equal chance of being placed in any one of your treatment groups.

Random assignment in block designs

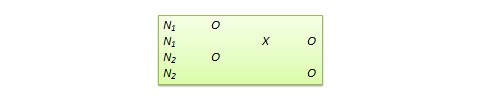

In more complicated experimental designs, random assignment is only used after participants are grouped into blocks based on some characteristic (e.g., test score or demographic variable). These groupings mean that you need a larger sample to achieve high statistical power .

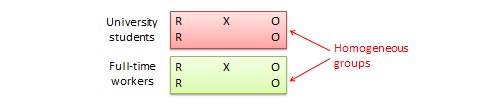

For example, a randomized block design involves placing participants into blocks based on a shared characteristic (e.g., college students versus graduates), and then using random assignment within each block to assign participants to every treatment condition. This helps you assess whether the characteristic affects the outcomes of your treatment.

In an experimental matched design , you use blocking and then match up individual participants from each block based on specific characteristics. Within each matched pair or group, you randomly assign each participant to one of the conditions in the experiment and compare their outcomes.

Sometimes, it’s not relevant or ethical to use simple random assignment, so groups are assigned in a different way.

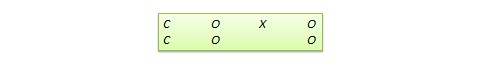

When comparing different groups

Sometimes, differences between participants are the main focus of a study, for example, when comparing men and women or people with and without health conditions. Participants are not randomly assigned to different groups, but instead assigned based on their characteristics.

In this type of study, the characteristic of interest (e.g., gender) is an independent variable, and the groups differ based on the different levels (e.g., men, women, etc.). All participants are tested the same way, and then their group-level outcomes are compared.

When it’s not ethically permissible

When studying unhealthy or dangerous behaviors, it’s not possible to use random assignment. For example, if you’re studying heavy drinkers and social drinkers, it’s unethical to randomly assign participants to one of the two groups and ask them to drink large amounts of alcohol for your experiment.

When you can’t assign participants to groups, you can also conduct a quasi-experimental study . In a quasi-experiment, you study the outcomes of pre-existing groups who receive treatments that you may not have any control over (e.g., heavy drinkers and social drinkers). These groups aren’t randomly assigned, but may be considered comparable when some other variables (e.g., age or socioeconomic status) are controlled for.

Prevent plagiarism. Run a free check.

If you want to know more about statistics , methodology , or research bias , make sure to check out some of our other articles with explanations and examples.

- Student’s t -distribution

- Normal distribution

- Null and Alternative Hypotheses

- Chi square tests

- Confidence interval

- Quartiles & Quantiles

- Cluster sampling

- Stratified sampling

- Data cleansing

- Reproducibility vs Replicability

- Peer review

- Prospective cohort study

Research bias

- Implicit bias

- Cognitive bias

- Placebo effect

- Hawthorne effect

- Hindsight bias

- Affect heuristic

- Social desirability bias

In experimental research, random assignment is a way of placing participants from your sample into different groups using randomization. With this method, every member of the sample has a known or equal chance of being placed in a control group or an experimental group.

Random selection, or random sampling , is a way of selecting members of a population for your study’s sample.

In contrast, random assignment is a way of sorting the sample into control and experimental groups.

Random sampling enhances the external validity or generalizability of your results, while random assignment improves the internal validity of your study.

Random assignment is used in experiments with a between-groups or independent measures design. In this research design, there’s usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable.

In general, you should always use random assignment in this type of experimental design when it is ethically possible and makes sense for your study topic.

To implement random assignment , assign a unique number to every member of your study’s sample .

Then, you can use a random number generator or a lottery method to randomly assign each number to a control or experimental group. You can also do so manually, by flipping a coin or rolling a dice to randomly assign participants to groups.

Cite this Scribbr article

If you want to cite this source, you can copy and paste the citation or click the “Cite this Scribbr article” button to automatically add the citation to our free Citation Generator.

Bhandari, P. (2023, June 22). Random Assignment in Experiments | Introduction & Examples. Scribbr. Retrieved April 15, 2024, from https://www.scribbr.com/methodology/random-assignment/

Is this article helpful?

Pritha Bhandari

Other students also liked, guide to experimental design | overview, steps, & examples, confounding variables | definition, examples & controls, control groups and treatment groups | uses & examples, "i thought ai proofreading was useless but..".

I've been using Scribbr for years now and I know it's a service that won't disappoint. It does a good job spotting mistakes”

Random Assignment in Psychology: Definition & Examples

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.

Learn about our Editorial Process

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

In psychology, random assignment refers to the practice of allocating participants to different experimental groups in a study in a completely unbiased way, ensuring each participant has an equal chance of being assigned to any group.

In experimental research, random assignment, or random placement, organizes participants from your sample into different groups using randomization.

Random assignment uses chance procedures to ensure that each participant has an equal opportunity of being assigned to either a control or experimental group.

The control group does not receive the treatment in question, whereas the experimental group does receive the treatment.

When using random assignment, neither the researcher nor the participant can choose the group to which the participant is assigned. This ensures that any differences between and within the groups are not systematic at the onset of the study.

In a study to test the success of a weight-loss program, investigators randomly assigned a pool of participants to one of two groups.

Group A participants participated in the weight-loss program for 10 weeks and took a class where they learned about the benefits of healthy eating and exercise.

Group B participants read a 200-page book that explains the benefits of weight loss. The investigator randomly assigned participants to one of the two groups.

The researchers found that those who participated in the program and took the class were more likely to lose weight than those in the other group that received only the book.

Importance

Random assignment ensures that each group in the experiment is identical before applying the independent variable.

In experiments , researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. Random assignment increases the likelihood that the treatment groups are the same at the onset of a study.

Thus, any changes that result from the independent variable can be assumed to be a result of the treatment of interest. This is particularly important for eliminating sources of bias and strengthening the internal validity of an experiment.

Random assignment is the best method for inferring a causal relationship between a treatment and an outcome.

Random Selection vs. Random Assignment

Random selection (also called probability sampling or random sampling) is a way of randomly selecting members of a population to be included in your study.

On the other hand, random assignment is a way of sorting the sample participants into control and treatment groups.

Random selection ensures that everyone in the population has an equal chance of being selected for the study. Once the pool of participants has been chosen, experimenters use random assignment to assign participants into groups.

Random assignment is only used in between-subjects experimental designs, while random selection can be used in a variety of study designs.

Random Assignment vs Random Sampling

Random sampling refers to selecting participants from a population so that each individual has an equal chance of being chosen. This method enhances the representativeness of the sample.

Random assignment, on the other hand, is used in experimental designs once participants are selected. It involves allocating these participants to different experimental groups or conditions randomly.

This helps ensure that any differences in results across groups are due to manipulating the independent variable, not preexisting differences among participants.

When to Use Random Assignment

Random assignment is used in experiments with a between-groups or independent measures design.

In these research designs, researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables.

There is usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable at the onset of the study.

How to Use Random Assignment

There are a variety of ways to assign participants into study groups randomly. Here are a handful of popular methods:

- Random Number Generator : Give each member of the sample a unique number; use a computer program to randomly generate a number from the list for each group.

- Lottery : Give each member of the sample a unique number. Place all numbers in a hat or bucket and draw numbers at random for each group.

- Flipping a Coin : Flip a coin for each participant to decide if they will be in the control group or experimental group (this method can only be used when you have just two groups)

- Roll a Die : For each number on the list, roll a dice to decide which of the groups they will be in. For example, assume that rolling 1, 2, or 3 places them in a control group and rolling 3, 4, 5 lands them in an experimental group.

When is Random Assignment not used?

- When it is not ethically permissible: Randomization is only ethical if the researcher has no evidence that one treatment is superior to the other or that one treatment might have harmful side effects.

- When answering non-causal questions : If the researcher is just interested in predicting the probability of an event, the causal relationship between the variables is not important and observational designs would be more suitable than random assignment.

- When studying the effect of variables that cannot be manipulated: Some risk factors cannot be manipulated and so it would not make any sense to study them in a randomized trial. For example, we cannot randomly assign participants into categories based on age, gender, or genetic factors.

Drawbacks of Random Assignment

While randomization assures an unbiased assignment of participants to groups, it does not guarantee the equality of these groups. There could still be extraneous variables that differ between groups or group differences that arise from chance. Additionally, there is still an element of luck with random assignments.

Thus, researchers can not produce perfectly equal groups for each specific study. Differences between the treatment group and control group might still exist, and the results of a randomized trial may sometimes be wrong, but this is absolutely okay.

Scientific evidence is a long and continuous process, and the groups will tend to be equal in the long run when data is aggregated in a meta-analysis.

Additionally, external validity (i.e., the extent to which the researcher can use the results of the study to generalize to the larger population) is compromised with random assignment.

Random assignment is challenging to implement outside of controlled laboratory conditions and might not represent what would happen in the real world at the population level.

Random assignment can also be more costly than simple observational studies, where an investigator is just observing events without intervening with the population.

Randomization also can be time-consuming and challenging, especially when participants refuse to receive the assigned treatment or do not adhere to recommendations.

What is the difference between random sampling and random assignment?

Random sampling refers to randomly selecting a sample of participants from a population. Random assignment refers to randomly assigning participants to treatment groups from the selected sample.

Does random assignment increase internal validity?

Yes, random assignment ensures that there are no systematic differences between the participants in each group, enhancing the study’s internal validity .

Does random assignment reduce sampling error?

Yes, with random assignment, participants have an equal chance of being assigned to either a control group or an experimental group, resulting in a sample that is, in theory, representative of the population.

Random assignment does not completely eliminate sampling error because a sample only approximates the population from which it is drawn. However, random sampling is a way to minimize sampling errors.

When is random assignment not possible?

Random assignment is not possible when the experimenters cannot control the treatment or independent variable.

For example, if you want to compare how men and women perform on a test, you cannot randomly assign subjects to these groups.

Participants are not randomly assigned to different groups in this study, but instead assigned based on their characteristics.

Does random assignment eliminate confounding variables?

Yes, random assignment eliminates the influence of any confounding variables on the treatment because it distributes them at random among the study groups. Randomization invalidates any relationship between a confounding variable and the treatment.

Why is random assignment of participants to treatment conditions in an experiment used?

Random assignment is used to ensure that all groups are comparable at the start of a study. This allows researchers to conclude that the outcomes of the study can be attributed to the intervention at hand and to rule out alternative explanations for study results.

Further Reading

- Bogomolnaia, A., & Moulin, H. (2001). A new solution to the random assignment problem . Journal of Economic theory , 100 (2), 295-328.

- Krause, M. S., & Howard, K. I. (2003). What random assignment does and does not do . Journal of Clinical Psychology , 59 (7), 751-766.

- Bipolar Disorder

- Therapy Center

- When To See a Therapist

- Types of Therapy

- Best Online Therapy

- Best Couples Therapy

- Best Family Therapy

- Managing Stress

- Sleep and Dreaming

- Understanding Emotions

- Self-Improvement

- Healthy Relationships

- Student Resources

- Personality Types

- Guided Meditations

- Verywell Mind Insights

- 2023 Verywell Mind 25

- Mental Health in the Classroom

- Editorial Process

- Meet Our Review Board

- Crisis Support

The Definition of Random Assignment According to Psychology

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

:max_bytes(150000):strip_icc():format(webp)/IMG_9791-89504ab694d54b66bbd72cb84ffb860e.jpg)

Emily is a board-certified science editor who has worked with top digital publishing brands like Voices for Biodiversity, Study.com, GoodTherapy, Vox, and Verywell.

:max_bytes(150000):strip_icc():format(webp)/Emily-Swaim-1000-0f3197de18f74329aeffb690a177160c.jpg)

Materio / Getty Images

Random assignment refers to the use of chance procedures in psychology experiments to ensure that each participant has the same opportunity to be assigned to any given group in a study to eliminate any potential bias in the experiment at the outset. Participants are randomly assigned to different groups, such as the treatment group versus the control group. In clinical research, randomized clinical trials are known as the gold standard for meaningful results.

Simple random assignment techniques might involve tactics such as flipping a coin, drawing names out of a hat, rolling dice, or assigning random numbers to a list of participants. It is important to note that random assignment differs from random selection .

While random selection refers to how participants are randomly chosen from a target population as representatives of that population, random assignment refers to how those chosen participants are then assigned to experimental groups.

Random Assignment In Research

To determine if changes in one variable will cause changes in another variable, psychologists must perform an experiment. Random assignment is a critical part of the experimental design that helps ensure the reliability of the study outcomes.

Researchers often begin by forming a testable hypothesis predicting that one variable of interest will have some predictable impact on another variable.

The variable that the experimenters will manipulate in the experiment is known as the independent variable , while the variable that they will then measure for different outcomes is known as the dependent variable. While there are different ways to look at relationships between variables, an experiment is the best way to get a clear idea if there is a cause-and-effect relationship between two or more variables.

Once researchers have formulated a hypothesis, conducted background research, and chosen an experimental design, it is time to find participants for their experiment. How exactly do researchers decide who will be part of an experiment? As mentioned previously, this is often accomplished through something known as random selection.

Random Selection

In order to generalize the results of an experiment to a larger group, it is important to choose a sample that is representative of the qualities found in that population. For example, if the total population is 60% female and 40% male, then the sample should reflect those same percentages.

Choosing a representative sample is often accomplished by randomly picking people from the population to be participants in a study. Random selection means that everyone in the group stands an equal chance of being chosen to minimize any bias. Once a pool of participants has been selected, it is time to assign them to groups.

By randomly assigning the participants into groups, the experimenters can be fairly sure that each group will have the same characteristics before the independent variable is applied.

Participants might be randomly assigned to the control group , which does not receive the treatment in question. The control group may receive a placebo or receive the standard treatment. Participants may also be randomly assigned to the experimental group , which receives the treatment of interest. In larger studies, there can be multiple treatment groups for comparison.

There are simple methods of random assignment, like rolling the die. However, there are more complex techniques that involve random number generators to remove any human error.

There can also be random assignment to groups with pre-established rules or parameters. For example, if you want to have an equal number of men and women in each of your study groups, you might separate your sample into two groups (by sex) before randomly assigning each of those groups into the treatment group and control group.

Random assignment is essential because it increases the likelihood that the groups are the same at the outset. With all characteristics being equal between groups, other than the application of the independent variable, any differences found between group outcomes can be more confidently attributed to the effect of the intervention.

Example of Random Assignment

Imagine that a researcher is interested in learning whether or not drinking caffeinated beverages prior to an exam will improve test performance. After randomly selecting a pool of participants, each person is randomly assigned to either the control group or the experimental group.

The participants in the control group consume a placebo drink prior to the exam that does not contain any caffeine. Those in the experimental group, on the other hand, consume a caffeinated beverage before taking the test.

Participants in both groups then take the test, and the researcher compares the results to determine if the caffeinated beverage had any impact on test performance.

A Word From Verywell

Random assignment plays an important role in the psychology research process. Not only does this process help eliminate possible sources of bias, but it also makes it easier to generalize the results of a tested sample of participants to a larger population.

Random assignment helps ensure that members of each group in the experiment are the same, which means that the groups are also likely more representative of what is present in the larger population of interest. Through the use of this technique, psychology researchers are able to study complex phenomena and contribute to our understanding of the human mind and behavior.

Lin Y, Zhu M, Su Z. The pursuit of balance: An overview of covariate-adaptive randomization techniques in clinical trials . Contemp Clin Trials. 2015;45(Pt A):21-25. doi:10.1016/j.cct.2015.07.011

Sullivan L. Random assignment versus random selection . In: The SAGE Glossary of the Social and Behavioral Sciences. SAGE Publications, Inc.; 2009. doi:10.4135/9781412972024.n2108

Alferes VR. Methods of Randomization in Experimental Design . SAGE Publications, Inc.; 2012. doi:10.4135/9781452270012

Nestor PG, Schutt RK. Research Methods in Psychology: Investigating Human Behavior. (2nd Ed.). SAGE Publications, Inc.; 2015.

By Kendra Cherry, MSEd Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

Random Assignment in Psychology (Definition + 40 Examples)

Have you ever wondered how researchers discover new ways to help people learn, make decisions, or overcome challenges? A hidden hero in this adventure of discovery is a method called random assignment, a cornerstone in psychological research that helps scientists uncover the truths about the human mind and behavior.

Random Assignment is a process used in research where each participant has an equal chance of being placed in any group within the study. This technique is essential in experiments as it helps to eliminate biases, ensuring that the different groups being compared are similar in all important aspects.

By doing so, researchers can be confident that any differences observed are likely due to the variable being tested, rather than other factors.

In this article, we’ll explore the intriguing world of random assignment, diving into its history, principles, real-world examples, and the impact it has had on the field of psychology.

History of Random Assignment

Stepping back in time, we delve into the origins of random assignment, which finds its roots in the early 20th century.

The pioneering mind behind this innovative technique was Sir Ronald A. Fisher , a British statistician and biologist. Fisher introduced the concept of random assignment in the 1920s, aiming to improve the quality and reliability of experimental research .

His contributions laid the groundwork for the method's evolution and its widespread adoption in various fields, particularly in psychology.

Fisher’s groundbreaking work on random assignment was motivated by his desire to control for confounding variables – those pesky factors that could muddy the waters of research findings.

By assigning participants to different groups purely by chance, he realized that the influence of these confounding variables could be minimized, paving the way for more accurate and trustworthy results.

Early Studies Utilizing Random Assignment

Following Fisher's initial development, random assignment started to gain traction in the research community. Early studies adopting this methodology focused on a variety of topics, from agriculture (which was Fisher’s primary field of interest) to medicine and psychology.

The approach allowed researchers to draw stronger conclusions from their experiments, bolstering the development of new theories and practices.

One notable early study utilizing random assignment was conducted in the field of educational psychology. Researchers were keen to understand the impact of different teaching methods on student outcomes.

By randomly assigning students to various instructional approaches, they were able to isolate the effects of the teaching methods, leading to valuable insights and recommendations for educators.

Evolution of the Methodology

As the decades rolled on, random assignment continued to evolve and adapt to the changing landscape of research.

Advances in technology introduced new tools and techniques for implementing randomization, such as computerized random number generators, which offered greater precision and ease of use.

The application of random assignment expanded beyond the confines of the laboratory, finding its way into field studies and large-scale surveys.

Researchers across diverse disciplines embraced the methodology, recognizing its potential to enhance the validity of their findings and contribute to the advancement of knowledge.

From its humble beginnings in the early 20th century to its widespread use today, random assignment has proven to be a cornerstone of scientific inquiry.

Its development and evolution have played a pivotal role in shaping the landscape of psychological research, driving discoveries that have improved lives and deepened our understanding of the human experience.

Principles of Random Assignment

Delving into the heart of random assignment, we uncover the theories and principles that form its foundation.

The method is steeped in the basics of probability theory and statistical inference, ensuring that each participant has an equal chance of being placed in any group, thus fostering fair and unbiased results.

Basic Principles of Random Assignment

Understanding the core principles of random assignment is key to grasping its significance in research. There are three principles: equal probability of selection, reduction of bias, and ensuring representativeness.

The first principle, equal probability of selection , ensures that every participant has an identical chance of being assigned to any group in the study. This randomness is crucial as it mitigates the risk of bias and establishes a level playing field.

The second principle focuses on the reduction of bias . Random assignment acts as a safeguard, ensuring that the groups being compared are alike in all essential aspects before the experiment begins.

This similarity between groups allows researchers to attribute any differences observed in the outcomes directly to the independent variable being studied.

Lastly, ensuring representativeness is a vital principle. When participants are assigned randomly, the resulting groups are more likely to be representative of the larger population.

This characteristic is crucial for the generalizability of the study’s findings, allowing researchers to apply their insights broadly.

Theoretical Foundation

The theoretical foundation of random assignment lies in probability theory and statistical inference .

Probability theory deals with the likelihood of different outcomes, providing a mathematical framework for analyzing random phenomena. In the context of random assignment, it helps in ensuring that each participant has an equal chance of being placed in any group.

Statistical inference, on the other hand, allows researchers to draw conclusions about a population based on a sample of data drawn from that population. It is the mechanism through which the results of a study can be generalized to a broader context.

Random assignment enhances the reliability of statistical inferences by reducing biases and ensuring that the sample is representative.

Differentiating Random Assignment from Random Selection

It’s essential to distinguish between random assignment and random selection, as the two terms, while related, have distinct meanings in the realm of research.

Random assignment refers to how participants are placed into different groups in an experiment, aiming to control for confounding variables and help determine causes.

In contrast, random selection pertains to how individuals are chosen to participate in a study. This method is used to ensure that the sample of participants is representative of the larger population, which is vital for the external validity of the research.

While both methods are rooted in randomness and probability, they serve different purposes in the research process.

Understanding the theories, principles, and distinctions of random assignment illuminates its pivotal role in psychological research.

This method, anchored in probability theory and statistical inference, serves as a beacon of reliability, guiding researchers in their quest for knowledge and ensuring that their findings stand the test of validity and applicability.

Methodology of Random Assignment

Implementing random assignment in a study is a meticulous process that involves several crucial steps.

The initial step is participant selection, where individuals are chosen to partake in the study. This stage is critical to ensure that the pool of participants is diverse and representative of the population the study aims to generalize to.

Once the pool of participants has been established, the actual assignment process begins. In this step, each participant is allocated randomly to one of the groups in the study.

Researchers use various tools, such as random number generators or computerized methods, to ensure that this assignment is genuinely random and free from biases.

Monitoring and adjusting form the final step in the implementation of random assignment. Researchers need to continuously observe the groups to ensure that they remain comparable in all essential aspects throughout the study.

If any significant discrepancies arise, adjustments might be necessary to maintain the study’s integrity and validity.

Tools and Techniques Used

The evolution of technology has introduced a variety of tools and techniques to facilitate random assignment.

Random number generators, both manual and computerized, are commonly used to assign participants to different groups. These generators ensure that each individual has an equal chance of being placed in any group, upholding the principle of equal probability of selection.

In addition to random number generators, researchers often use specialized computer software designed for statistical analysis and experimental design.

These software programs offer advanced features that allow for precise and efficient random assignment, minimizing the risk of human error and enhancing the study’s reliability.

Ethical Considerations

The implementation of random assignment is not devoid of ethical considerations. Informed consent is a fundamental ethical principle that researchers must uphold.

Informed consent means that every participant should be fully informed about the nature of the study, the procedures involved, and any potential risks or benefits, ensuring that they voluntarily agree to participate.

Beyond informed consent, researchers must conduct a thorough risk and benefit analysis. The potential benefits of the study should outweigh any risks or harms to the participants.

Safeguarding the well-being of participants is paramount, and any study employing random assignment must adhere to established ethical guidelines and standards.

Conclusion of Methodology

The methodology of random assignment, while seemingly straightforward, is a multifaceted process that demands precision, fairness, and ethical integrity. From participant selection to assignment and monitoring, each step is crucial to ensure the validity of the study’s findings.

The tools and techniques employed, coupled with a steadfast commitment to ethical principles, underscore the significance of random assignment as a cornerstone of robust psychological research.

Benefits of Random Assignment in Psychological Research

The impact and importance of random assignment in psychological research cannot be overstated. It is fundamental for ensuring the study is accurate, allowing the researchers to determine if their study actually caused the results they saw, and making sure the findings can be applied to the real world.

Facilitating Causal Inferences

When participants are randomly assigned to different groups, researchers can be more confident that the observed effects are due to the independent variable being changed, and not other factors.

This ability to determine the cause is called causal inference .

This confidence allows for the drawing of causal relationships, which are foundational for theory development and application in psychology.

Ensuring Internal Validity

One of the foremost impacts of random assignment is its ability to enhance the internal validity of an experiment.

Internal validity refers to the extent to which a researcher can assert that changes in the dependent variable are solely due to manipulations of the independent variable , and not due to confounding variables.

By ensuring that each participant has an equal chance of being in any condition of the experiment, random assignment helps control for participant characteristics that could otherwise complicate the results.

Enhancing Generalizability

Beyond internal validity, random assignment also plays a crucial role in enhancing the generalizability of research findings.

When done correctly, it ensures that the sample groups are representative of the larger population, so can allow researchers to apply their findings more broadly.

This representative nature is essential for the practical application of research, impacting policy, interventions, and psychological therapies.

Limitations of Random Assignment

Potential for implementation issues.

While the principles of random assignment are robust, the method can face implementation issues.

One of the most common problems is logistical constraints. Some studies, due to their nature or the specific population being studied, find it challenging to implement random assignment effectively.

For instance, in educational settings, logistical issues such as class schedules and school policies might stop the random allocation of students to different teaching methods .

Ethical Dilemmas

Random assignment, while methodologically sound, can also present ethical dilemmas.

In some cases, withholding a potentially beneficial treatment from one of the groups of participants can raise serious ethical questions, especially in medical or clinical research where participants' well-being might be directly affected.

Researchers must navigate these ethical waters carefully, balancing the pursuit of knowledge with the well-being of participants.

Generalizability Concerns

Even when implemented correctly, random assignment does not always guarantee generalizable results.

The types of people in the participant pool, the specific context of the study, and the nature of the variables being studied can all influence the extent to which the findings can be applied to the broader population.

Researchers must be cautious in making broad generalizations from studies, even those employing strict random assignment.

Practical and Real-World Limitations

In the real world, many variables cannot be manipulated for ethical or practical reasons, limiting the applicability of random assignment.

For instance, researchers cannot randomly assign individuals to different levels of intelligence, socioeconomic status, or cultural backgrounds.

This limitation necessitates the use of other research designs, such as correlational or observational studies , when exploring relationships involving such variables.

Response to Critiques

In response to these critiques, people in favor of random assignment argue that the method, despite its limitations, remains one of the most reliable ways to establish cause and effect in experimental research.

They acknowledge the challenges and ethical considerations but emphasize the rigorous frameworks in place to address them.

The ongoing discussion around the limitations and critiques of random assignment contributes to the evolution of the method, making sure it is continuously relevant and applicable in psychological research.

While random assignment is a powerful tool in experimental research, it is not without its critiques and limitations. Implementation issues, ethical dilemmas, generalizability concerns, and real-world limitations can pose significant challenges.

However, the continued discourse and refinement around these issues underline the method's enduring significance in the pursuit of knowledge in psychology.

By being careful with how we do things and doing what's right, random assignment stays a really important part of studying how people act and think.

Real-World Applications and Examples

Random assignment has been employed in many studies across various fields of psychology, leading to significant discoveries and advancements.

Here are some real-world applications and examples illustrating the diversity and impact of this method:

- Medicine and Health Psychology: Randomized Controlled Trials (RCTs) are the gold standard in medical research. In these studies, participants are randomly assigned to either the treatment or control group to test the efficacy of new medications or interventions.

- Educational Psychology: Studies in this field have used random assignment to explore the effects of different teaching methods, classroom environments, and educational technologies on student learning and outcomes.

- Cognitive Psychology: Researchers have employed random assignment to investigate various aspects of human cognition, including memory, attention, and problem-solving, leading to a deeper understanding of how the mind works.

- Social Psychology: Random assignment has been instrumental in studying social phenomena, such as conformity, aggression, and prosocial behavior, shedding light on the intricate dynamics of human interaction.

Let's get into some specific examples. You'll need to know one term though, and that is "control group." A control group is a set of participants in a study who do not receive the treatment or intervention being tested , serving as a baseline to compare with the group that does, in order to assess the effectiveness of the treatment.

- Smoking Cessation Study: Researchers used random assignment to put participants into two groups. One group received a new anti-smoking program, while the other did not. This helped determine if the program was effective in helping people quit smoking.

- Math Tutoring Program: A study on students used random assignment to place them into two groups. One group received additional math tutoring, while the other continued with regular classes, to see if the extra help improved their grades.

- Exercise and Mental Health: Adults were randomly assigned to either an exercise group or a control group to study the impact of physical activity on mental health and mood.

- Diet and Weight Loss: A study randomly assigned participants to different diet plans to compare their effectiveness in promoting weight loss and improving health markers.

- Sleep and Learning: Researchers randomly assigned students to either a sleep extension group or a regular sleep group to study the impact of sleep on learning and memory.

- Classroom Seating Arrangement: Teachers used random assignment to place students in different seating arrangements to examine the effect on focus and academic performance.

- Music and Productivity: Employees were randomly assigned to listen to music or work in silence to investigate the effect of music on workplace productivity.

- Medication for ADHD: Children with ADHD were randomly assigned to receive either medication, behavioral therapy, or a placebo to compare treatment effectiveness.

- Mindfulness Meditation for Stress: Adults were randomly assigned to a mindfulness meditation group or a waitlist control group to study the impact on stress levels.

- Video Games and Aggression: A study randomly assigned participants to play either violent or non-violent video games and then measured their aggression levels.

- Online Learning Platforms: Students were randomly assigned to use different online learning platforms to evaluate their effectiveness in enhancing learning outcomes.

- Hand Sanitizers in Schools: Schools were randomly assigned to use hand sanitizers or not to study the impact on student illness and absenteeism.

- Caffeine and Alertness: Participants were randomly assigned to consume caffeinated or decaffeinated beverages to measure the effects on alertness and cognitive performance.

- Green Spaces and Well-being: Neighborhoods were randomly assigned to receive green space interventions to study the impact on residents’ well-being and community connections.

- Pet Therapy for Hospital Patients: Patients were randomly assigned to receive pet therapy or standard care to assess the impact on recovery and mood.

- Yoga for Chronic Pain: Individuals with chronic pain were randomly assigned to a yoga intervention group or a control group to study the effect on pain levels and quality of life.

- Flu Vaccines Effectiveness: Different groups of people were randomly assigned to receive either the flu vaccine or a placebo to determine the vaccine’s effectiveness.

- Reading Strategies for Dyslexia: Children with dyslexia were randomly assigned to different reading intervention strategies to compare their effectiveness.

- Physical Environment and Creativity: Participants were randomly assigned to different room setups to study the impact of physical environment on creative thinking.

- Laughter Therapy for Depression: Individuals with depression were randomly assigned to laughter therapy sessions or control groups to assess the impact on mood.

- Financial Incentives for Exercise: Participants were randomly assigned to receive financial incentives for exercising to study the impact on physical activity levels.

- Art Therapy for Anxiety: Individuals with anxiety were randomly assigned to art therapy sessions or a waitlist control group to measure the effect on anxiety levels.

- Natural Light in Offices: Employees were randomly assigned to workspaces with natural or artificial light to study the impact on productivity and job satisfaction.

- School Start Times and Academic Performance: Schools were randomly assigned different start times to study the effect on student academic performance and well-being.

- Horticulture Therapy for Seniors: Older adults were randomly assigned to participate in horticulture therapy or traditional activities to study the impact on cognitive function and life satisfaction.

- Hydration and Cognitive Function: Participants were randomly assigned to different hydration levels to measure the impact on cognitive function and alertness.

- Intergenerational Programs: Seniors and young people were randomly assigned to intergenerational programs to study the effects on well-being and cross-generational understanding.

- Therapeutic Horseback Riding for Autism: Children with autism were randomly assigned to therapeutic horseback riding or traditional therapy to study the impact on social communication skills.

- Active Commuting and Health: Employees were randomly assigned to active commuting (cycling, walking) or passive commuting to study the effect on physical health.

- Mindful Eating for Weight Management: Individuals were randomly assigned to mindful eating workshops or control groups to study the impact on weight management and eating habits.

- Noise Levels and Learning: Students were randomly assigned to classrooms with different noise levels to study the effect on learning and concentration.

- Bilingual Education Methods: Schools were randomly assigned different bilingual education methods to compare their effectiveness in language acquisition.

- Outdoor Play and Child Development: Children were randomly assigned to different amounts of outdoor playtime to study the impact on physical and cognitive development.

- Social Media Detox: Participants were randomly assigned to a social media detox or regular usage to study the impact on mental health and well-being.

- Therapeutic Writing for Trauma Survivors: Individuals who experienced trauma were randomly assigned to therapeutic writing sessions or control groups to study the impact on psychological well-being.

- Mentoring Programs for At-risk Youth: At-risk youth were randomly assigned to mentoring programs or control groups to assess the impact on academic achievement and behavior.

- Dance Therapy for Parkinson’s Disease: Individuals with Parkinson’s disease were randomly assigned to dance therapy or traditional exercise to study the effect on motor function and quality of life.

- Aquaponics in Schools: Schools were randomly assigned to implement aquaponics programs to study the impact on student engagement and environmental awareness.

- Virtual Reality for Phobia Treatment: Individuals with phobias were randomly assigned to virtual reality exposure therapy or traditional therapy to compare effectiveness.

- Gardening and Mental Health: Participants were randomly assigned to engage in gardening or other leisure activities to study the impact on mental health and stress reduction.

Each of these studies exemplifies how random assignment is utilized in various fields and settings, shedding light on the multitude of ways it can be applied to glean valuable insights and knowledge.

Real-world Impact of Random Assignment

Random assignment is like a key tool in the world of learning about people's minds and behaviors. It’s super important and helps in many different areas of our everyday lives. It helps make better rules, creates new ways to help people, and is used in lots of different fields.

Health and Medicine

In health and medicine, random assignment has helped doctors and scientists make lots of discoveries. It’s a big part of tests that help create new medicines and treatments.

By putting people into different groups by chance, scientists can really see if a medicine works.

This has led to new ways to help people with all sorts of health problems, like diabetes, heart disease, and mental health issues like depression and anxiety.

Schools and education have also learned a lot from random assignment. Researchers have used it to look at different ways of teaching, what kind of classrooms are best, and how technology can help learning.

This knowledge has helped make better school rules, develop what we learn in school, and find the best ways to teach students of all ages and backgrounds.

Workplace and Organizational Behavior

Random assignment helps us understand how people act at work and what makes a workplace good or bad.

Studies have looked at different kinds of workplaces, how bosses should act, and how teams should be put together. This has helped companies make better rules and create places to work that are helpful and make people happy.

Environmental and Social Changes

Random assignment is also used to see how changes in the community and environment affect people. Studies have looked at community projects, changes to the environment, and social programs to see how they help or hurt people’s well-being.

This has led to better community projects, efforts to protect the environment, and programs to help people in society.

Technology and Human Interaction

In our world where technology is always changing, studies with random assignment help us see how tech like social media, virtual reality, and online stuff affect how we act and feel.

This has helped make better and safer technology and rules about using it so that everyone can benefit.

The effects of random assignment go far and wide, way beyond just a science lab. It helps us understand lots of different things, leads to new and improved ways to do things, and really makes a difference in the world around us.

From making healthcare and schools better to creating positive changes in communities and the environment, the real-world impact of random assignment shows just how important it is in helping us learn and make the world a better place.

So, what have we learned? Random assignment is like a super tool in learning about how people think and act. It's like a detective helping us find clues and solve mysteries in many parts of our lives.

From creating new medicines to helping kids learn better in school, and from making workplaces happier to protecting the environment, it’s got a big job!

This method isn’t just something scientists use in labs; it reaches out and touches our everyday lives. It helps make positive changes and teaches us valuable lessons.

Whether we are talking about technology, health, education, or the environment, random assignment is there, working behind the scenes, making things better and safer for all of us.

In the end, the simple act of putting people into groups by chance helps us make big discoveries and improvements. It’s like throwing a small stone into a pond and watching the ripples spread out far and wide.

Thanks to random assignment, we are always learning, growing, and finding new ways to make our world a happier and healthier place for everyone!

Related posts:

- 19+ Experimental Design Examples (Methods + Types)

- Cluster Sampling vs Stratified Sampling

- 41+ White Collar Job Examples (Salary + Path)

- 47+ Blue Collar Job Examples (Salary + Path)

- McDonaldization of Society (Definition + Examples)

Reference this article:

About The Author

Free Personality Test

Free Memory Test

Free IQ Test

PracticalPie.com is a participant in the Amazon Associates Program. As an Amazon Associate we earn from qualifying purchases.

Follow Us On:

Youtube Facebook Instagram X/Twitter

Psychology Resources

Developmental

Personality

Relationships

Psychologists

Serial Killers

Psychology Tests

Personality Quiz

Memory Test

Depression test

Type A/B Personality Test

© PracticalPsychology. All rights reserved

Privacy Policy | Terms of Use

If you're seeing this message, it means we're having trouble loading external resources on our website.

If you're behind a web filter, please make sure that the domains *.kastatic.org and *.kasandbox.org are unblocked.

To log in and use all the features of Khan Academy, please enable JavaScript in your browser.

AP®︎/College Statistics

Course: ap®︎/college statistics > unit 6.

- Statistical significance of experiment

Random sampling vs. random assignment (scope of inference)

- Conclusions in observational studies versus experiments

- Finding errors in study conclusions

- (Choice A) Just the residents involved in Hilary's study. A Just the residents involved in Hilary's study.

- (Choice B) All residents in Hilary's town. B All residents in Hilary's town.

- (Choice C) All residents in Hilary's country. C All residents in Hilary's country.

- (Choice A) Yes A Yes

- (Choice B) No B No

- (Choice A) Just the residents in Hilary's study. A Just the residents in Hilary's study.

Want to join the conversation?

- Upvote Button navigates to signup page

- Downvote Button navigates to signup page

- Flag Button navigates to signup page

Apply to be a D-Lab Data Science Fellow! Applications are due May 5.

Introduction to Field Experiments and Randomized Controlled Trials

Have you ever been curious about the methods researchers employ to determine causal relationships among various factors, ultimately leading to significant breakthroughs and progress in numerous fields? In this article, we offer an overview of field experimentation and its importance in discerning cause and effect relationships. We outline how randomized experiments represent an unbiased method for determining what works. Furthermore, we discuss key aspects of experiments, such as intervention, excludability, and non-interference. To illustrate these concepts, we present a hypothetical example of a randomized controlled trial evaluating the efficacy of an experimental drug called Covi-Mapp.

Why experiments?

Every day, we find ourselves faced with questions of cause and effect. Understanding the driving forces behind outcomes is crucial, ranging from personal decisions like parenting strategies to organizational challenges such as effective advertising. This blog aims to provide a systematic introduction to experimentation, igniting enthusiasm for primary research and highlighting the myriad of experimental applications and opportunities available.

The challenge for those who seek to answer causal questions convincingly is to develop a research methodology that doesn't require identifying or measuring all potential confounders. Since no planned design can eliminate every possible systematic difference between treatment and control groups, random assignment emerges as a powerful tool for minimizing bias. In the contentious world of causal claims, randomized experiments represent an unbiased method for determining what works. Random assignment means participants are assigned to different groups or conditions in a study purely by chance. Basically, each participant has an equal chance to be assigned to a control group or a treatment group.

Field experiments, or randomized studies conducted in real-world settings, can take many forms. While experiments on college campuses are often considered lab studies, certain experiments on campus – such as those examining club participation – may be regarded as field experiments, depending on the experimental design. Ultimately, whether a study is considered a field experiment hinges on the definition of "the field."

Researchers may employ two main scenarios for randomization. The first involves gathering study participants and randomizing them at the time of the experiment. The second capitalizes on naturally occurring randomizations, such as the Vietnam draft lottery.

Intervention, Excludability, and Non-Interference

Three essential features of any experiment are intervention, excludability, and non-interference. In a general sense, the intervention refers to the treatment or action being tested in an experiment. The excludability principle is satisfied when the only difference between the experimental and control groups is the presence or absence of the intervention. The non-interference principle holds when the outcome of one participant in the study does not influence the outcomes of other participants. Together, these principles ensure that the experiment is designed to provide unbiased and reliable results, isolating the causal effect of the intervention under study.

Omitted Variables and Non-Compliance

To ensure unbiased results, researchers must randomize as much as possible to minimize omitted variable bias. Omitted variables are factors that influence the outcome but are not measured or are difficult to measure. These unmeasured attributes, sometimes called confounding variables or unobserved heterogeneity, must be accounted for to guarantee accurate findings.

Non-compliance can also complicate experiments. One-sided non-compliance occurs when individuals assigned to a treatment group don't receive the treatment (failure to treat), while two-sided non-compliance occurs when some subjects assigned to the treatment group go untreated or individuals assigned to the control group receive the treatment. Addressing these issues at the design level by implementing a blind or double-blind study can help mitigate potential biases.

Achieving Precision through Covariate Balance

To ensure the control and treatment groups are comparatively similar in all relevant aspects, particularly when the sample size (n) is small, it is essential to achieve covariate balance. Covariance measures the association between two variables, while a covariate is a factor that influences the outcome variable. By balancing covariates, we can more accurately isolate the effects of the treatment, leading to improved precision in our findings.

Fictional Example of Randomized Controlled Trial of Covi-Mapp for COVID-19 Management

Let's explore a fictional example to better understand experiments: a one-week randomized controlled trial of the experimental drug Covi-Mapp for managing Covid. In this case, the control group receives the standard care for Covid patients, while the treatment group receives the standard care plus Covi-Mapp. The outcome of interest is whether patients have cough symptoms on day 7, as subsidizing cough symptoms is an encouraging sign in Covid recovery. We'll measure the presence of cough on day 0 and day 7, as well as temperature on day 0 and day 7. Gender is also tracked. The control represents the standard care for COVID-19 patients, while the treatment includes standard care plus the experimental drug.

In this Covi-Mapp example, the intervention is the Covi-Mapp drug, the excludability principle is satisfied if the only difference in patient care between the groups is the drug administration, and the non-interference principle holds if one patient's outcome doesn't affect another's.

First, let's assume we have a dataset containing the relevant information for each patient, including cough status on day 0 and day 7, temperature on day 0 and day 7, treatment assignment, and gender. We'll read the data and explore the dataset:

Simple treatment effect of the experimental drug

Without any covariates, let's first look at the estimated effect of the treatment on the presence of cough on day 7. The estimated proportion of patients with a cough on day 7 for the control group (not receiving the experimental drug) is 0.847458. In other words, about 84.7% of patients in the control group are expected to have a cough on day 7, all else being equal. The estimated effect of the experimental drug on the presence of cough on day 7 is -0.23. This means that, on average, receiving the experimental drug reduces the proportion of patients with a cough on day 7 by 23.8% compared to the control group.

We know that a patient's initial condition would affect the final outcome. If the patient has a cough and a fever on day 0, they might not fare well with the treatment. To better understand the treatment's effect, let's add these covariates:

The output shows the results of a linear regression model, estimating the effect of the experimental drug (treat_covid_mapp) on the presence of cough on day 7, adjusting for cough on day 0 and temperature on day 0. The experimental drug significantly reduces the presence of cough on day 7 by approximately 16.6% compared to the control group (p-value = 0.046242). The presence of cough on day 0 does not significantly predict the presence of cough on day 7 (p-value = 0.717689). A one-unit increase in temperature on day 0 is associated with a 20.6% increase in the presence of cough on day 7, and this effect is statistically significant (p-value = 0.009859).

Should we add day 7 temperature as a covariate? By including it, we might find that the treatment is no longer statistically significant since the temperature on day 7 could be affected by the treatment itself. It is a post-treatment variable, and by including it, the experiment loses value as we used something that was affected by intervention as our covariate.

However, we'd like to investigate if the treatment affects men or women differently. Since we collected gender as part of the study, we could check for Heterogeneous Treatment Effect (HTE) for male vs. female. The experimental drug has a marginally significant effect on the outcome variable for females, reducing it by approximately 23.1% (p-value = 0.05391).

Which group, those coded as male == 0 or male == 1, have better health outcomes (cough) in control? What about in treatment? How does this help to contextualize any heterogeneous treatment effect that might have been estimated?

Stargazer is a popular R package that enables users to create well-formatted tables and reports for statistical analysis results.

Looking at this regression report, we see that males in control have a temperature of 102; females in control have a temperature of 98.6 (which is very nearly a normal temperature). So, in control, males are worse off. In treatment, males have a temperature of 102 - 2.59 = 99.41. While this is closer to a normal temperature, this is still elevated. Females in treatment have a temperature of 98.5 - .32 = 98.18, which is slightly lower than a normal temperature, and is better than an elevated temperature. It appears that the treatment is able to have a stronger effect among male participants than females because males are *more sick* at baseline.

In conclusion, experimentation offers a fascinating and valuable avenue for primary research, allowing us to address causal questions and enhance our understanding of the world around us. Covariate control helps to isolate the causal effect of the treatment on the outcome variable, ensuring that the observed effect is not driven by confounding factors. Proper control of covariates enhances the internal validity of the study and ensures that the estimated treatment effect is an accurate representation of the true causal relationship. By exploring and accounting for sub groups in data, researchers can identify whether the treatment has different effects on different groups, such as men and women or younger and older individuals. This information can be critical for making informed policy decisions and developing targeted interventions that maximize the benefits for specific groups. The ongoing investigation of experimental methodologies and their potential applications represents a compelling and significant area of inquiry.

Gerber, A. S., & Green, D. P. (2012). Field Experiments: Design, Analysis, and Interpretation . W. W. Norton.

“DALL·E 2.” OpenAI , https://openai.com/product/dall-e-2

“Data Science 241. Experiments and Causal Inference.” UC Berkeley School of Information , https://www.ischool.berkeley.edu/courses/datasci/241

What Is Random Assignment in Psychology?

Categories Research Methods

Sharing is caring!

Random assignment means that every participant has the same chance of being chosen for the experimental or control group. It involves using procedures that rely on chance to assign participants to groups. Doing this means that every participant in a study has an equal opportunity to be assigned to any group.

For example, in a psychology experiment, participants might be assigned to either a control or experimental group. Some experiments might only have one experimental group, while others may have several treatment variations.

Using random assignment means that each participant has the same chance of being assigned to any of these groups.

Table of Contents

How to Use Random Assignment

So what type of procedures might psychologists utilize for random assignment? Strategies can include:

- Flipping a coin

- Assigning random numbers

- Rolling dice

- Drawing names out of a hat

How Does Random Assignment Work?

A psychology experiment aims to determine if changes in one variable lead to changes in another variable. Researchers will first begin by coming up with a hypothesis. Once researchers have an idea of what they think they might find in a population, they will come up with an experimental design and then recruit participants for their study.

Once they have a pool of participants representative of the population they are interested in looking at, they will randomly assign the participants to their groups.

- Control group : Some participants will end up in the control group, which serves as a baseline and does not receive the independent variables.

- Experimental group : Other participants will end up in the experimental groups that receive some form of the independent variables.

By using random assignment, the researchers make it more likely that the groups are equal at the start of the experiment. Since the groups are the same on other variables, it can be assumed that any changes that occur are the result of varying the independent variables.

After a treatment has been administered, the researchers will then collect data in order to determine if the independent variable had any impact on the dependent variable.

Random Assignment vs. Random Selection

It is important to remember that random assignment is not the same thing as random selection , also known as random sampling.

Random selection instead involves how people are chosen to be in a study. Using random selection, every member of a population stands an equal chance of being chosen for a study or experiment.

So random sampling affects how participants are chosen for a study, while random assignment affects how participants are then assigned to groups.

Examples of Random Assignment

Imagine that a psychology researcher is conducting an experiment to determine if getting adequate sleep the night before an exam results in better test scores.

Forming a Hypothesis

They hypothesize that participants who get 8 hours of sleep will do better on a math exam than participants who only get 4 hours of sleep.

Obtaining Participants

The researcher starts by obtaining a pool of participants. They find 100 participants from a local university. Half of the participants are female, and half are male.

Randomly Assign Participants to Groups

The researcher then assigns random numbers to each participant and uses a random number generator to randomly assign each number to either the 4-hour or 8-hour sleep groups.

Conduct the Experiment

Those in the 8-hour sleep group agree to sleep for 8 hours that night, while those in the 4-hour group agree to wake up after only 4 hours. The following day, all of the participants meet in a classroom.

Collect and Analyze Data

Everyone takes the same math test. The test scores are then compared to see if the amount of sleep the night before had any impact on test scores.

Why Is Random Assignment Important in Psychology Research?

Random assignment is important in psychology research because it helps improve a study’s internal validity. This means that the researchers are sure that the study demonstrates a cause-and-effect relationship between an independent and dependent variable.

Random assignment improves the internal validity by minimizing the risk that there are systematic differences in the participants who are in each group.

Key Points to Remember About Random Assignment

- Random assignment in psychology involves each participant having an equal chance of being chosen for any of the groups, including the control and experimental groups.

- It helps control for potential confounding variables, reducing the likelihood of pre-existing differences between groups.

- This method enhances the internal validity of experiments, allowing researchers to draw more reliable conclusions about cause-and-effect relationships.

- Random assignment is crucial for creating comparable groups and increasing the scientific rigor of psychological studies.

5.2 Experimental Design

Learning objectives.

- Explain the difference between between-subjects and within-subjects experiments, list some of the pros and cons of each approach, and decide which approach to use to answer a particular research question.

- Define random assignment, distinguish it from random sampling, explain its purpose in experimental research, and use some simple strategies to implement it

- Define several types of carryover effect, give examples of each, and explain how counterbalancing helps to deal with them.

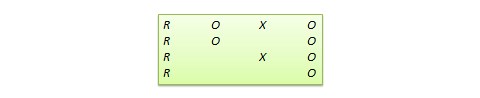

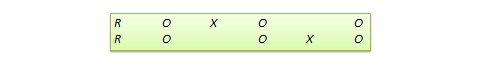

In this section, we look at some different ways to design an experiment. The primary distinction we will make is between approaches in which each participant experiences one level of the independent variable and approaches in which each participant experiences all levels of the independent variable. The former are called between-subjects experiments and the latter are called within-subjects experiments.

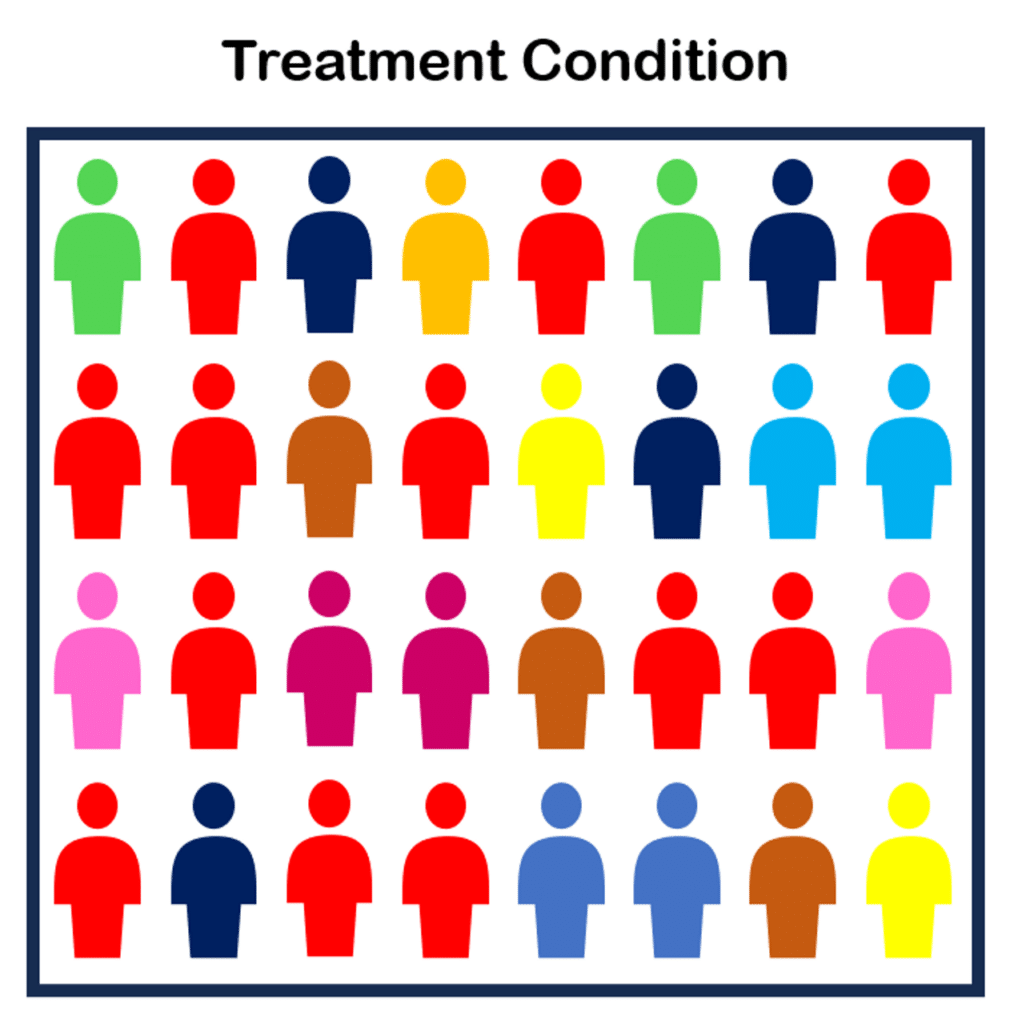

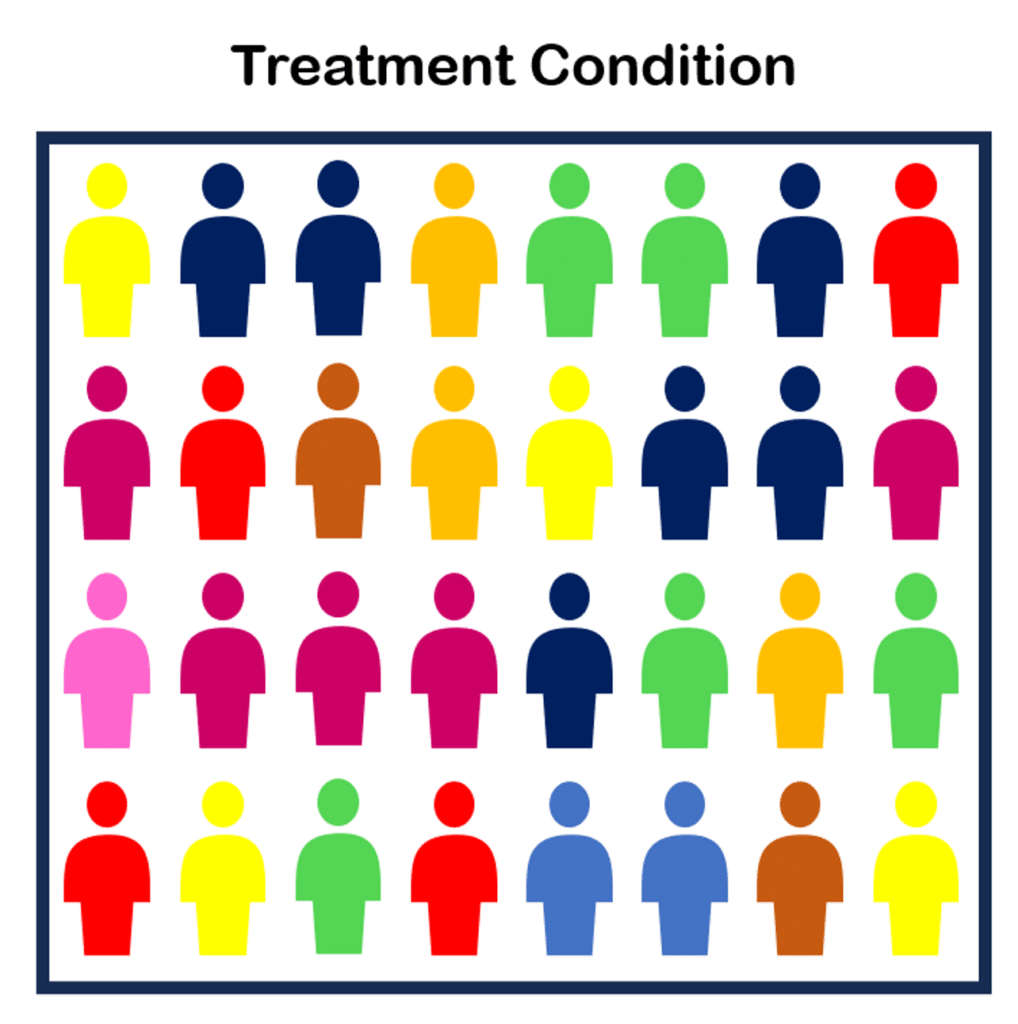

Between-Subjects Experiments

In a between-subjects experiment , each participant is tested in only one condition. For example, a researcher with a sample of 100 university students might assign half of them to write about a traumatic event and the other half write about a neutral event. Or a researcher with a sample of 60 people with severe agoraphobia (fear of open spaces) might assign 20 of them to receive each of three different treatments for that disorder. It is essential in a between-subjects experiment that the researcher assigns participants to conditions so that the different groups are, on average, highly similar to each other. Those in a trauma condition and a neutral condition, for example, should include a similar proportion of men and women, and they should have similar average intelligence quotients (IQs), similar average levels of motivation, similar average numbers of health problems, and so on. This matching is a matter of controlling these extraneous participant variables across conditions so that they do not become confounding variables.

Random Assignment