13 Best Free Speech-to-Text Open Source Engines, APIs, and AI Models


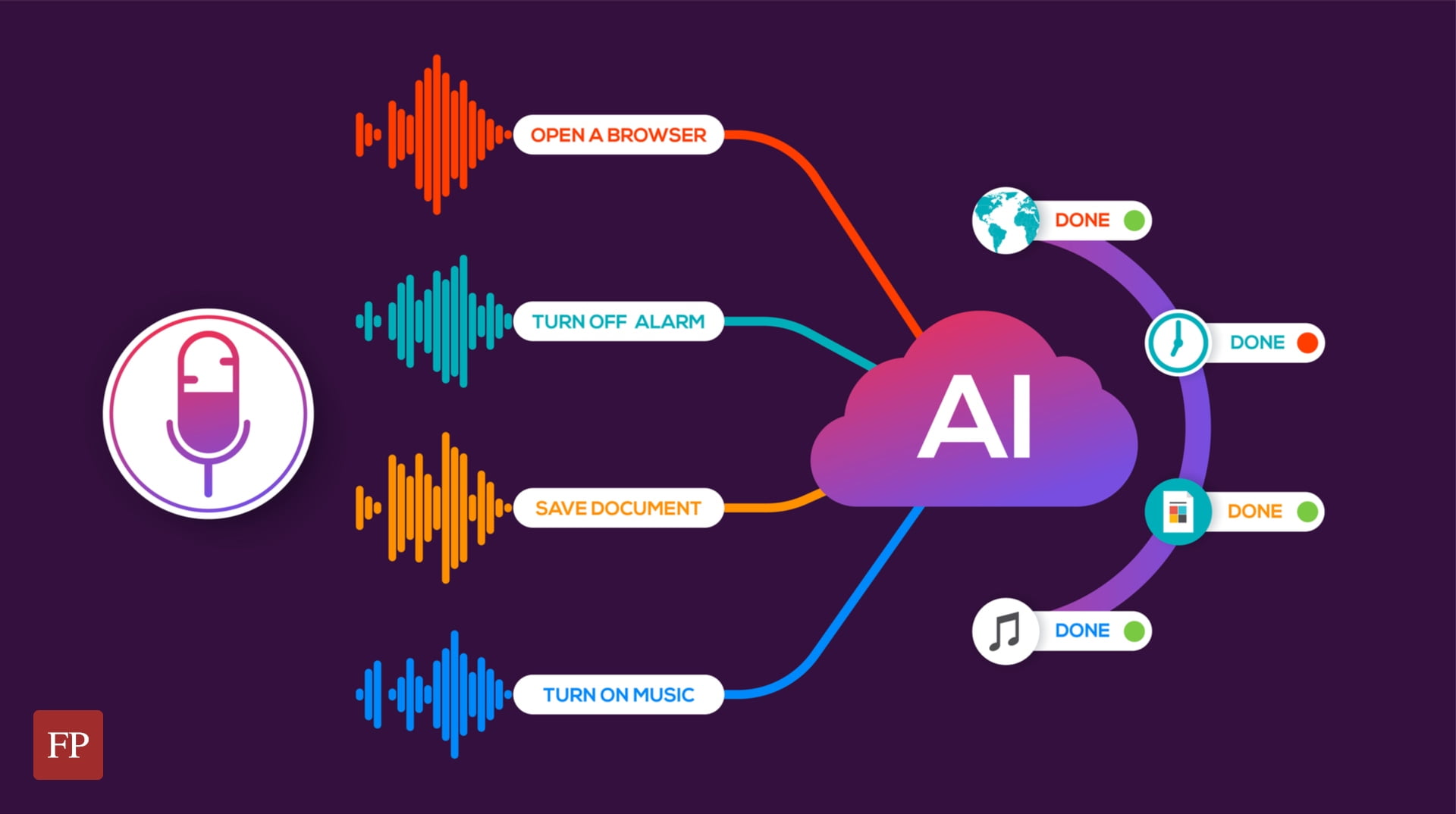

Automatic speech-to-text recognition converts an audio file into editable text. Computer algorithms do this in four steps: analyze the audio, break it down into parts, convert those parts into a computer-readable format, and match that representation to readable text.
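As a rough illustration of the "break it down into parts" step, the sketch below splits a signal into short overlapping frames. The 25 ms window and 10 ms hop are common conventions assumed here for illustration, not values taken from any particular engine in this list, and `frame_signal` is a hypothetical helper name.

```python
def frame_signal(samples, sample_rate, frame_ms=25, hop_ms=10):
    """Split a list of audio samples into overlapping fixed-length frames."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop_len = int(sample_rate * hop_ms / 1000)
    frames = []
    # Slide a window over the signal; a trailing partial frame is dropped.
    for start in range(0, len(samples) - frame_len + 1, hop_len):
        frames.append(samples[start:start + frame_len])
    return frames


# One second of silence at 16 kHz (an assumed, commonly used sample rate).
frames = frame_signal([0.0] * 16000, sample_rate=16000)
print(len(frames), len(frames[0]))
```

Each frame would then be converted into features (the "computer-readable format") before a model matches it to text.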

In the past, this task was reserved for proprietary systems. That put users at a disadvantage: licensing and usage fees were high, features were limited, and there was little transparency.

As more people researched these tools, it became possible to create your own language processing models with the help of open-source voice recognition systems. These systems, made by the community for the community, are easy to customize, cheap to use, and transparent, giving the user control over their data.

Best 13 Open-Source Speech Recognition Systems

An open-source speech recognition system is a library or framework consisting of the source code of a speech recognition system. These community-based projects are made available to the public under an open-source license. Users can contribute to these tools, customize them, or even tailor them to their needs.

Here are the top open-source speech recognition engines you can start with:

1. Whisper

Whisper is OpenAI’s newest brainchild, offering transcription and translation services. Released in September 2022, this AI tool is one of the most accurate automatic speech recognition models. It stands out from other tools on the market because of the scale of its training data: 680 thousand hours of audio collected from the internet. This diverse range of data makes the tool more robust, bringing it close to human-level robustness.

You can run Whisper from Python or from the command line. Five model sizes are available, each with different capabilities: tiny, base, small, medium, and large. The larger the model, the more accurate the transcription tends to be, but the slower it runs. To get the most out of the larger models, you need a capable CPU and GPU.
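A minimal sketch of the Python route, assuming the `openai-whisper` package and `ffmpeg` are installed. `transcribe_file` is a hypothetical wrapper written for this example; `whisper.load_model` and `model.transcribe` are the library calls it relies on.

```python
MODEL_SIZES = ("tiny", "base", "small", "medium", "large")


def transcribe_file(audio_path, model_size="base"):
    """Transcribe one audio file with Whisper and return the text."""
    if model_size not in MODEL_SIZES:
        raise ValueError(f"unknown model size: {model_size!r}")
    # Imported lazily so this module still loads where Whisper is not installed.
    import whisper

    model = whisper.load_model(model_size)  # downloads the weights on first use
    result = model.transcribe(audio_path)
    return result["text"]


# Example call ("meeting.mp3" is a placeholder path):
#   print(transcribe_file("meeting.mp3", model_size="small"))
```

Starting with a small model and moving up only if accuracy is insufficient keeps hardware requirements manageable.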

On LibriSpeech, one of the most common speech recognition benchmarks, Whisper falls short of models specialized for that benchmark. However, when measured zero-shot across diverse datasets, it makes 50% fewer errors than those same models.

It supports content formats such as MP3, MP4, M4A, MPEG, MPGA, WEBM, and WAV.

It can transcribe 99 languages and translate them all into English.

The tool is free to use.

The larger the model, the more GPU resources it consumes, which can be costly.

It will cost you time and resources to install and use the tool.

It does not provide real-time transcription.

2. Project DeepSpeech

Project DeepSpeech is an open-source speech-to-text engine by Mozilla. This voice-to-text command and library is released under the Mozilla Public License (MPL). Its model follows the Baidu Deep Speech research paper, making it end-to-end trainable and capable of transcribing audio in several languages. It is also trained and implemented using Google’s TensorFlow.

To use it, download the source code from GitHub and install it in your Python environment. The tool comes pre-trained on an English model. However, you can still train the model with your own data. Alternatively, you can take a pre-trained model and improve it using custom data.

DeepSpeech is easy to customize since it’s a code-native solution.

It provides wrappers for Python, C, .NET Framework, and JavaScript, allowing you to use the tool regardless of your programming language.

It can function on various gadgets, including a Raspberry Pi device.

Its per-word error rate is remarkably low at 7.5%.

Mozilla takes a serious approach to privacy concerns.

Mozilla is reportedly ending the development of DeepSpeech. This means there will be less support in case of bugs and implementation problems.
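Word error rate, the metric behind figures like the 7.5% quoted above, is the number of word substitutions, deletions, and insertions needed to turn the transcript into the reference, divided by the number of reference words. The sketch below is a plain edit-distance implementation written for illustration; `word_error_rate` is a hypothetical helper, not part of DeepSpeech.

```python
def word_error_rate(reference, hypothesis):
    """Return WER: word-level edit distance divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from transcript
            insertion = curr[j - 1] + 1  # extra word in transcript
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

One wrong word out of six gives a WER of roughly 17%, so a 7.5% rate corresponds to fewer than one error in every thirteen words.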

3. Kaldi

Kaldi is a speech recognition toolkit created for speech recognition researchers. It’s written in C++ and released under the Apache 2.0 license, one of the least restrictive licenses. Unlike tools such as Whisper and DeepSpeech, which focus on deep learning, Kaldi primarily focuses on classical, well-proven speech recognition models. These include HMMs (Hidden Markov Models), GMMs (Gaussian Mixture Models), and FSTs (Finite State Transducers).
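To give a feel for how a Hidden Markov Model is decoded, here is a toy Viterbi sketch. It is not Kaldi's implementation; the two states, the "low"/"high" energy observations, and all probabilities are invented for illustration.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state sequence for the observations."""
    # best[t][s] = (probability of the best path ending in s at step t, previous state)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            column[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s][obs], p) for p in states
            )
        best.append(column)
    # Trace back from the most probable final state.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for column in reversed(best[1:]):
        state = column[state][1]
        path.append(state)
    return path[::-1]


# Hypothetical toy model: is each frame silence or speech, given its energy?
states = ("sil", "speech")
start_p = {"sil": 0.6, "speech": 0.4}
trans_p = {"sil": {"sil": 0.7, "speech": 0.3}, "speech": {"sil": 0.4, "speech": 0.6}}
emit_p = {"sil": {"low": 0.9, "high": 0.1}, "speech": {"low": 0.2, "high": 0.8}}

print(viterbi(["low", "high", "high", "low"], states, start_p, trans_p, emit_p))
```

Real recognizers apply the same idea over far larger state spaces, with acoustic models supplying the emission probabilities.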

Kaldi is very reliable. Its code is thoroughly tested and verified.

Although its focus is not on deep learning, it has some models that can help with transcription services.

It is perfect for academic and industry-related research, allowing users to test their models and techniques.

It has an active forum that provides the right amount of support.

There are also resources and documentation available to help users address any issues.

Being open-source, users with privacy or security concerns can inspect the code to understand how it works.

Its classical approach to models may limit its accuracy levels.

Kaldi is not user-friendly since it operates through a command-line interface.

It's quite complex to use, so it suits only users with technical experience.

You need lots of computation power to use the toolkit.

4. SpeechBrain

SpeechBrain is an open-source toolkit that facilitates the research and development of speech-related tech. It supports a variety of tasks, including speech recognition, enhancement, separation, speaker diarization, and microphone signal processing. SpeechBrain uses PyTorch as its foundation, taking advantage of its flexibility and ease of use. Developers and researchers can also benefit from PyTorch’s extensive ecosystem and support to build and train their neural networks.

Users can choose between traditional and deep-learning-based ASR models.

It's easy to customize a model to adapt to your needs.

Its integration with PyTorch makes it easier to use.

There are available pre-trained models users can use to get started with speech-to-text tasks.

The SpeechBrain documentation is not as extensive as that of Kaldi.

Its pre-trained models are limited.

You may need particular expertise to use the tool. Without it, you may face a steep learning curve.

5. Coqui

Coqui is an advanced deep learning toolkit suited to training and deploying STT models. It is licensed under the Mozilla Public License 2.0, and you can use it to generate multiple transcripts, each with a confidence score. It provides pre-trained models alongside example audio files you can use to test the engine and help with further fine-tuning. It also has detailed documentation and resources to help you use it and solve any problems that arise.

The STT models it provides are highly trained with high-quality data.

The models support multiple languages.

There is a friendly support community where you can ask questions and get any details relating to STT.

It supports real-time transcription with very low latency.

Developers can customize the models to various use cases, from transcription to acting as voice assistants.

Coqui stopped maintaining the STT project to focus on its text-to-speech toolkit. This means you may have to solve any problems that arise by yourself, without help from support.

6. Julius

Julius is one of the oldest speech-to-text projects, dating back to 1997, with roots in Japan. It is available under the BSD-3 license, making it accessible to developers. It has strong support for Japanese ASR, but as a language-independent program it can process multiple languages, including English, Slovenian, French, Thai, and others. Transcription accuracy largely depends on having the right language and acoustic models. The project is written in C, a widely used language, allowing it to run on Windows, Linux, Android, and macOS.

Julius can perform real-time speech-to-text transcription with low memory usage.

It has an active community that can help with ASR problems.

The models trained in English are readily available on the web for download.

It does not need internet access for speech recognition, making it suitable for users needing privacy.

Like most open-source programs, it takes technical experience to make it work.

It has a steep learning curve.

7. Flashlight ASR (Formerly Wav2Letter++)

Flashlight ASR is an open-source speech recognition toolkit designed by the Facebook AI Research team. It stands out for its speed, efficiency, and ability to handle large datasets. The speed comes from using only convolutional neural networks for language modeling, machine translation, and speech synthesis.

Most speech recognition engines use both convolutional and recurrent neural networks to understand and model language. However, recurrent networks can demand a lot of computation power, which slows the engine down.

Flashlight ASR is written in modern C++, which makes efficient use of your device’s CPU and GPU. It’s also built on Flashlight, a stand-alone library for machine learning.

It's one of the fastest machine learning speech-to-text systems.

You can adapt its use to various languages and dialects.

The model does not consume a lot of GPU and CPU resources.

It does not provide any pre-trained language models, including English.

You need to have deep coding expertise to operate the tool.

It has a steep learning curve for new users.

8. PaddleSpeech (Formerly DeepSpeech2)

This open-source speech-to-text toolkit is available on the PaddlePaddle platform and provided under the Apache 2.0 license. PaddleSpeech is one of the most versatile toolkits, capable of performing speech recognition, speech-to-text conversion, keyword spotting, translation, and audio classification. Its quality earned it the NAACL 2022 Best Demo Award.

This speech-to-text engine supports various language models but prioritizes Chinese and English models. The Chinese model, in particular, features text normalization and pronunciation to make it adapt to the rules of the Chinese language.

The toolkit delivers high-end and ultra-lightweight models that use the best technology in the market.

The speech-to-text engine provides both command-line and server options, making it user-friendly to adopt.

It is convenient for both developers and researchers.

Its source code is written in Python, one of the most commonly used languages.

Its focus on Chinese leads to the limitation of resources and support for other languages.

It has a steep learning curve.

You need to have certain expertise to integrate and use the tool.

9. OpenSeq2Seq

As its name suggests, OpenSeq2Seq is an open-source speech-to-text toolkit that helps train different types of sequence-to-sequence models. Developed by Nvidia, this toolkit is released under the Apache 2.0 license, meaning it's free for everyone. It trains language models that perform transcription, translation, automatic speech recognition, and sentiment analysis tasks.

You can use the default models or train your own, depending on your needs. OpenSeq2Seq performs best when training is spread across many graphics cards and machines simultaneously. It works best on Nvidia-powered devices.

The tool has multiple functions, making it very versatile.

It can work with the most recent Python, TensorFlow, and CUDA versions.

Developers and researchers can access the tool, collaborate, and make their innovations.

Beneficial to users with Nvidia-powered devices.

It can consume significant computer resources due to its parallel processing capability.

Community support has reduced over time as Nvidia paused the project development.

Users without access to Nvidia hardware can be at a disadvantage.

10. Vosk

One of the most compact and lightweight speech-to-text engines today is Vosk. This open-source toolkit works offline on multiple devices, including Android, iOS, and Raspberry Pi. It supports over 20 languages and dialects, including English, Chinese, Portuguese, Polish, and German.

Vosk provides small language models that take up little space, typically around 50MB. However, a few large models can take up to 1.4GB. The tool responds quickly and can convert speech to text continuously.
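Continuous conversion means feeding audio to the recognizer in small chunks and collecting text as each utterance is finalized. The sketch below shows that loop with the standard-library `wave` module. `stream_transcribe` is a hypothetical helper; it accepts any object that exposes Vosk's `AcceptWaveform`, `Result`, and `FinalResult` methods, and the file and model paths in the comments are placeholders.

```python
import json
import wave


def stream_transcribe(wav_path, recognizer, chunk_frames=4000):
    """Feed a WAV file to a recognizer in chunks and return the joined text."""
    pieces = []
    with wave.open(wav_path, "rb") as wav:
        while True:
            data = wav.readframes(chunk_frames)
            if not data:
                break
            # AcceptWaveform returns True once an utterance has been finalized.
            if recognizer.AcceptWaveform(data):
                pieces.append(json.loads(recognizer.Result())["text"])
    # Flush whatever audio is still buffered inside the recognizer.
    pieces.append(json.loads(recognizer.FinalResult())["text"])
    return " ".join(p for p in pieces if p)


# With Vosk installed and a model downloaded (assumed 16 kHz mono PCM audio):
#   from vosk import Model, KaldiRecognizer
#   recognizer = KaldiRecognizer(Model("model"), 16000)
#   print(stream_transcribe("speech.wav", recognizer))
```

The same loop works for a live microphone if the chunks come from an audio stream instead of a file.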

It can work with various programming languages such as Java, Python, C++, Kotlin, and Shell, making it a versatile addition for developers.

It has various use cases, from transcriptions to developing chatbots and virtual assistants.

It has a fast response time.

The engine's accuracy can vary depending on the language and accent.

You need coding expertise to integrate and use the tool.

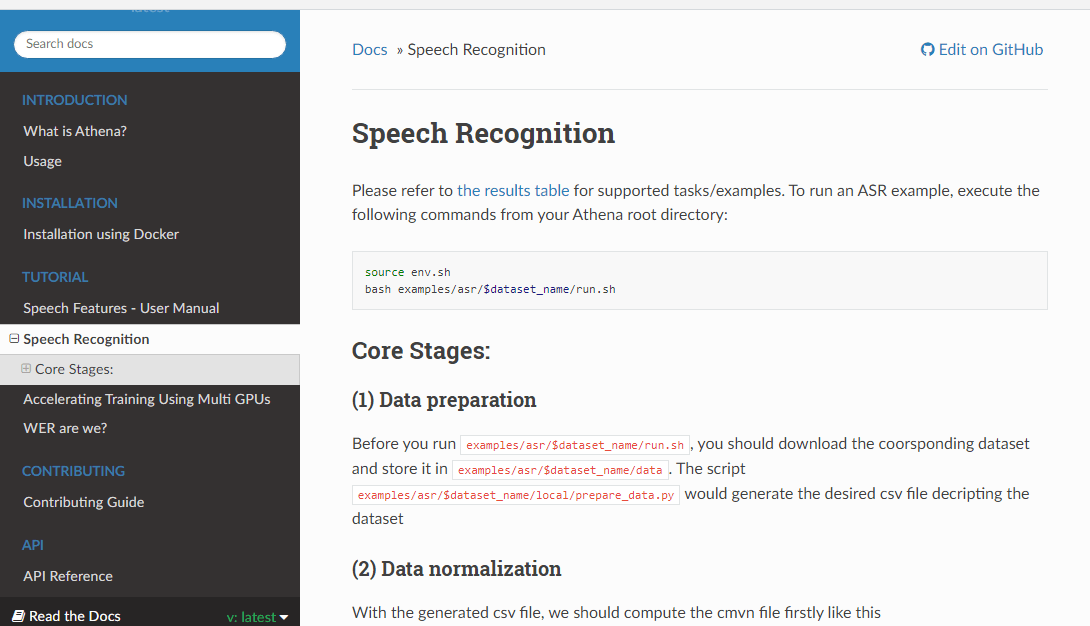

11. Athena

Athena is another sequence-to-sequence-based open-source speech-to-text engine, released under the Apache 2.0 license. This toolkit suits researchers and developers with end-to-end speech processing needs. Tasks the models can handle include automatic speech recognition (ASR), speech synthesis, voice detection, and keyword spotting. All the language models are implemented on TensorFlow, making the toolkit accessible to more developers.

Athena is versatile in its use, from transcription services to speech synthesis.

It does not depend on Kaldi since it has its own Pythonic feature extractor.

The tool is well maintained with regular updates and new features.

It is open source, free to use, and available to various users.

It has a steep learning curve for new users.

Although it has a WeChat group for community support, it limits the accessibility to only those who can access the platform.

12. ESPnet

ESPnet is an open-source speech-to-text toolkit released under the Apache 2.0 license. It provides end-to-end speech processing capabilities covering tasks that include ASR, translation, speech synthesis, enhancement, and diarization. The toolkit stands out for using PyTorch as its deep learning framework and following the Kaldi data processing style. As a result, you get comprehensive recipes for various language-processing tasks. The tool is also multilingual. Use it with the readily available pre-trained models or create your own according to your needs.

The toolkit delivers a stand-out performance compared to other speech-to-text software.

It can process audio in real time, making it suitable for live transcription services.

Suitable for use by researchers and developers.

It is one of the most versatile tools to deliver various speech-processing tasks.

It can be complex to integrate and use for new users.

You must be familiar with PyTorch and Python to run the toolkit.

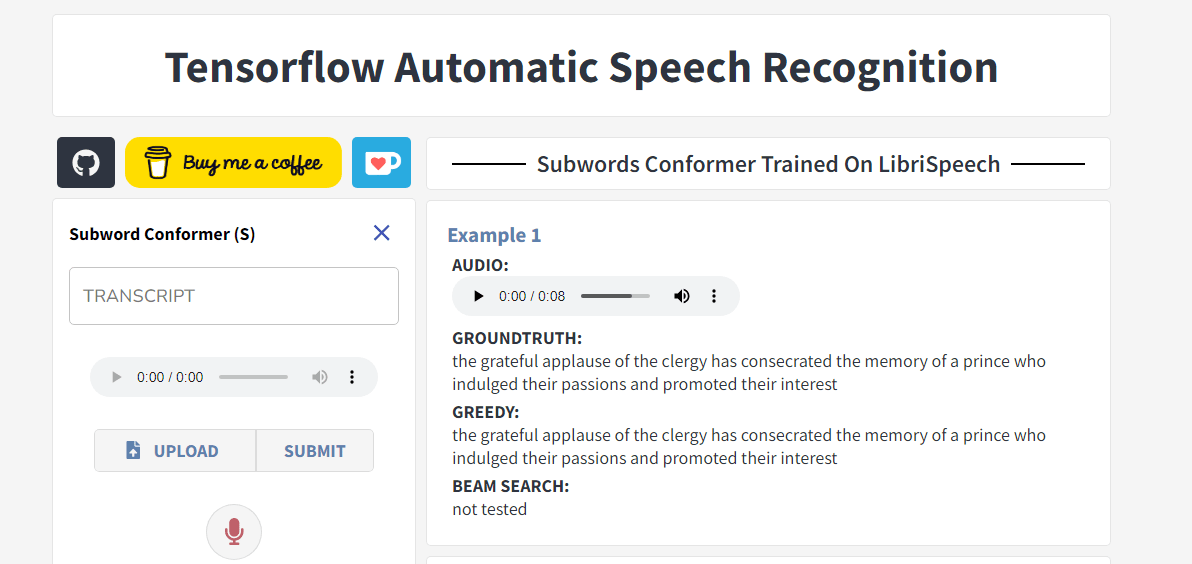

13. TensorFlow ASR

Our last feature on this list of free speech-to-text open-source engines is TensorFlow ASR. This GitHub project is released under the Apache 2.0 license and uses TensorFlow 2.0 as the deep learning framework to implement various speech processing models.

TensorFlow ASR has a high accuracy rate, with the author describing it as an almost ‘state-of-the-art’ model. It’s also a well-maintained tool that receives regular updates to improve its functionality. For example, the toolkit now supports training on TPUs (specialized hardware accelerators).

TensorFlow ASR also supports specific models such as Conformer, ContextNet, DeepSpeech2, and Jasper. You can choose the model depending on the tasks you intend to handle. For example, for general tasks, consider DeepSpeech2, but for precision, use Conformer.

The language models are accurate and highly efficient when processing speech-to-text.

You can convert the models to a TFlite format to make it lightweight and easy to deploy.

It can deliver on various speech-to-text-related tasks.

It supports multiple languages and provides pre-trained English, Vietnamese, and German models.

The installation process can be quite complex for beginners. Users need to have a particular expertise.

There is a learning curve to using advanced models.

Testing on TPUs is not supported, which limits the tool's capabilities.

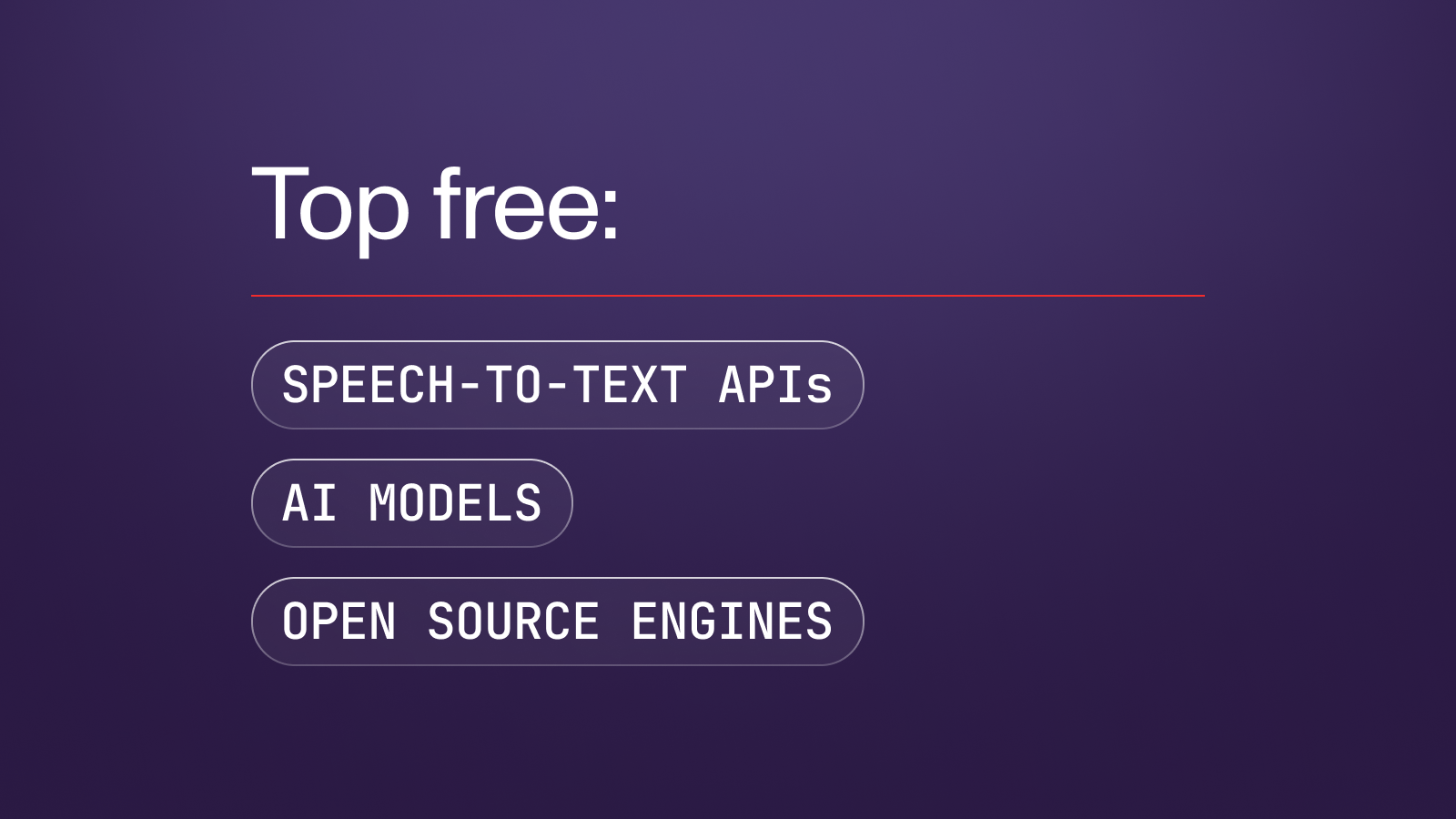

Top 3 Speech-to-Text APIs and AI Models

A speech-to-text API or AI model is a tech solution that helps users convert their speech or audio files into text. Most of these solutions are cloud-based: you need internet access and must make an API request to use them. The decision to use APIs, AI models, or open-source engines largely depends on your needs. An API or AI model is the better choice for small-scale tasks that are needed quickly. However, for large-scale use, consider using an open-source engine.

Several other differences exist between speech-to-text APIs/AI models and open-source engines. Let's take a look at the most common in the table below:

After considerable research, here are our top three speech-to-text API and AI models:

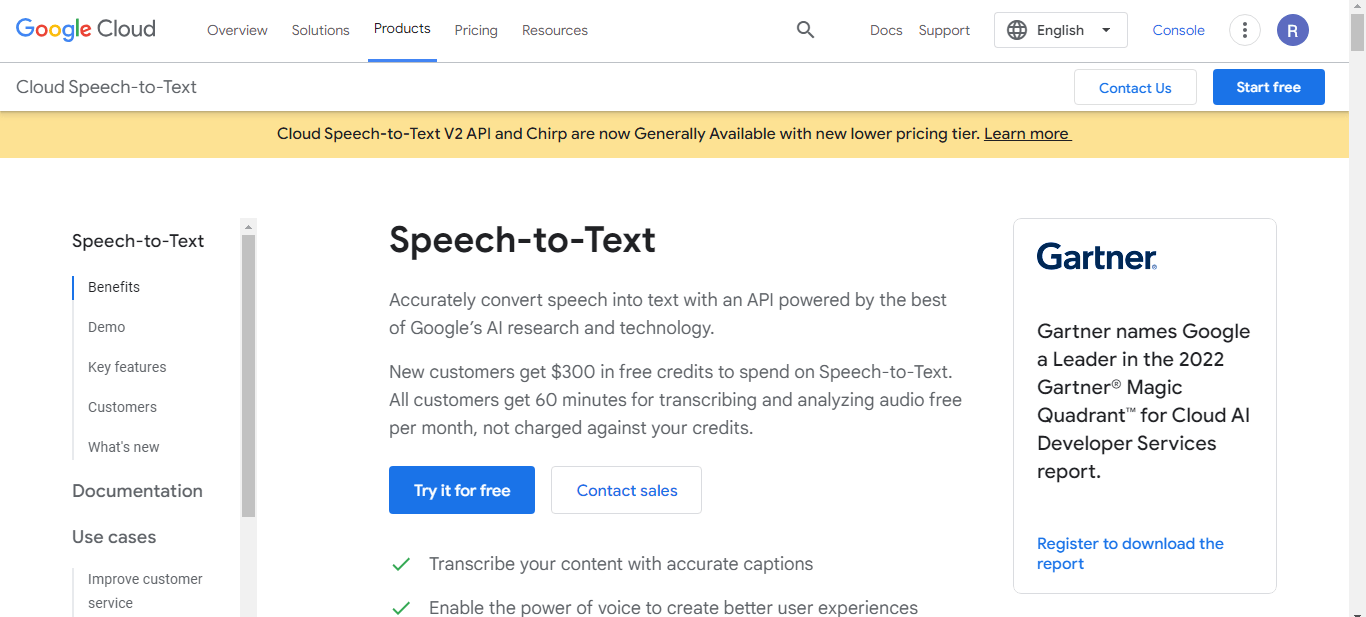

1. Google Cloud Speech-to-Text API

The Google Cloud Speech-to-Text API is one of the most common speech recognition technologies for developers looking to integrate the service into their applications. It automatically detects and converts audio to text using neural network models. Initially, this toolkit was intended for Google’s home voice assistant, so its focus is on short command and response applications. Although its accuracy is not the highest, it transcribes with minimal errors. However, transcript quality depends on the audio quality.

The Google Cloud Speech-to-Text API uses pay-as-you-go pricing based on the amount of audio processed per month, measured in seconds. Users get 60 free transcription minutes plus Google Cloud hosting credits worth $300 for the first 90 days. Any audio over 60 minutes costs an additional $0.006 per 15 seconds.
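To see what that pricing means in practice, here is a small estimate based only on the figures quoted above. It assumes partial 15-second increments are rounded up, which is an assumption of this sketch rather than something stated here, and `monthly_cost` is a hypothetical helper.

```python
import math
from decimal import Decimal

FREE_SECONDS = 60 * 60                  # 60 free minutes per month
INCREMENT_SECONDS = 15                  # billing increment quoted above
RATE_PER_INCREMENT = Decimal("0.006")   # USD per 15 seconds


def monthly_cost(total_seconds):
    """Estimate the monthly bill in USD for a given amount of audio."""
    billable = max(0, total_seconds - FREE_SECONDS)
    # Assumption: a partial increment is billed as a full 15 seconds.
    increments = math.ceil(billable / INCREMENT_SECONDS)
    return increments * RATE_PER_INCREMENT


print(monthly_cost(90 * 60))  # 90 minutes of audio in one month
```

Under these assumptions, each hour beyond the free allowance costs $1.44, since an hour contains 240 increments of 15 seconds.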

The API can transcribe more than 125 languages and variants.

You can deploy the tool in the cloud and on-premise.

It provides automatic language transcription and translation services.

You can configure it to transcribe your phone and video conversations.

It is not free to use.

It has a limited vocabulary builder.

2. AWS Transcribe

AWS Transcribe is an on-demand voice-to-text API that lets users generate transcriptions of audio. If you have heard of the Alexa voice assistant, this is the technology behind it. Unlike most consumer-oriented transcription tools, the AWS API has a fairly good accuracy level. It can also distinguish speakers in a conversation and add timestamps to the transcript. This tool supports 37 languages, including English, German, Hebrew, Japanese, and Turkish.

Integrating it into an existing AWS ecosystem is effortless.

It is one of the best options for short audio commands and responses.

It is highly scalable.

It has a reasonably good accuracy level.

It is expensive to use.

It only supports cloud deployment.

It has limited support.

The tool can be slow at times.

3. AssemblyAI

AssemblyAI API is one of the best solutions for users looking to transcribe speech without many technical terms, jargon, or accents. This API model automatically detects audio, transcribes it, and even creates a summary. It also provides services such as speaker diarization, sentiment analysis, topic detection, content moderation, and entity detection.

AssemblyAI has a simple and open pricing model, where you pay for only what you use. For example, you may need to pay $0.650016 per hour to get the core transcription service, while real-time transcription costs $0.75024 per hour.

It is not expensive to use.

Accuracy levels are high for non-technical language.

It provides helpful documentation.

The toolkit is easy to set up, even for beginners.

Its deployment speed is slow.

Its accuracy levels drop when dealing with technical terms.

What is the Best Open Source Speech Recognition System?

As you can see above, every tool on this list has benefits and disadvantages. Choosing the best open-source speech recognition system depends on your needs and available resources. For example, if you are looking for a lightweight toolkit compatible with almost every device, Vosk and Julius beat the rest of the tools in this list. You can use them on Android, iOS, and even Raspberry Pi. Moreover, they don’t consume much space.

Users who want to train their own models can use toolkits such as Whisper, OpenSeq2Seq, Flashlight ASR, and Athena.

The best approach to choosing an open-source voice recognition software is to review its documentation to understand the necessary resources and test it to see if it works for your case.

Introducing the Notta AI Model

As shown above, AI models differ from open-source engines. They are fast, more efficient, easy to use, and can deliver high accuracy. Moreover, their use is not only limited to users with experience. Anyone can operate the tools and generate transcripts in minutes.

Here is where we come in. Notta is one of the leading speech-to-text AI models that can transcribe and summarize your audio and video recordings. This AI tool supports 58 languages and can deliver transcripts with an impressive accuracy rate of 98.86%. The tool is available for use both on mobile and web.

Notta is easy to set up and use.

It supports multiple video and audio formats.

Its transcription speed is lightning-fast.

It adopts rigorous security protocols to protect user data.

It's free to use.

There is a limit to the file size you can upload to transcribe.

The free version supports only a limited number of transcriptions per month.

The advancement of speech recognition technology has been impressive over the years. What was once a world of proprietary software has shifted to one led by open-source toolkits and APIs/AI.

It's too early to say which is the clear winner, as they are all improving. You can, however, take advantage of their services, which include transcription, translation, dictation, speech synthesis, keyword spotting, diarization, and language enhancement.

There is no right or wrong tool in the options above. Every one of them has its strengths and weaknesses. Carefully assess your needs and resources before choosing a tool to make an informed decision.


The top free Speech-to-Text APIs, AI Models, and Open Source Engines


Growth at AssemblyAI

Choosing the best Speech-to-Text API , AI model, or open-source engine to build with can be challenging. You need to compare accuracy, model design, features, support options, documentation, security, and more.

This post examines the best free Speech-to-Text APIs and AI models on the market today, including ones that have a free tier, to help you make an informed decision. We’ll also look at several free open-source Speech-to-Text engines and explore why you might choose an API or AI model vs. an open-source library, or vice versa.


Free Speech-to-Text APIs and AI Models

APIs and AI models are more accurate, easier to integrate, and come with more out-of-the-box features than open-source options. However, large-scale use of APIs and AI models can come with a higher cost than open-source options.

If you’re looking to use an API or AI model for a small project or a trial run, many of today’s Speech-to-Text APIs and AI models have a free tier. This means that the API or model is free for anyone to use up to a certain volume per day, per month, or per year.

Let’s compare three of the most popular Speech-to-Text APIs and AI models with a free tier: AssemblyAI, Google, and AWS Transcribe.

AssemblyAI is an API platform that offers AI models that accurately transcribe and understand speech, and enable users to extract insights from voice data. AssemblyAI offers cutting-edge AI models such as Speaker Diarization, Topic Detection, Entity Detection, Automated Punctuation and Casing, Content Moderation, Sentiment Analysis, Text Summarization, and more. These AI models help users get more out of voice data, with continuous improvements being made to accuracy.

AssemblyAI also offers LeMUR, which enables users to leverage Large Language Models (LLMs) to pull valuable information from their voice data, including answering questions, generating summaries and action items, and more.

The company offers up to 100 free transcription hours for audio files or video streams, with a concurrency limit of 5, before transitioning to an affordable paid tier.

Its high accuracy and diverse collection of AI models built by AI experts make AssemblyAI a sound option for developers looking for a free Speech-to-Text API. The API also supports virtually every audio and video file format out-of-the-box for easier transcription.

AssemblyAI has expanded the languages it supports to include English, Spanish, French, German, Japanese, Korean, and many more, with additional languages being released monthly. See the full list here.

AssemblyAI’s easy-to-use models also allow for quick set-up and transcription in any programming language. You can copy/paste code examples in your preferred language directly from the AssemblyAI Docs or use the AssemblyAI Python SDK or another one of its ready-to-use integrations.

- Free to test in the AI playground, plus 100 free hours of asynchronous transcription with an API sign-up

- Speech-to-Text – $0.37 per hour

- Real-time Transcription – $0.47 per hour

- Audio Intelligence – varies, $0.01 to $0.15 per hour

- LeMUR – varies

- Enterprise pricing is also available

See the full pricing list here.

- High accuracy

- Breadth of AI models available, built by AI experts

- Continuous model iteration and improvement

- Developer-friendly documentation and SDKs

- Enterprise-grade support and security

- Models are not open-source

Google Speech-to-Text is a well-known speech transcription API. Google gives users 60 minutes of free transcription, with $300 in free credits for Google Cloud hosting.

Google only supports transcribing files already in a Google Cloud Bucket, so the free credits won’t get you very far. Google also requires you to sign up for a GCP account and project — whether you're using the free tier or paid.

With good accuracy and 125+ languages supported, Google is a decent choice if you’re willing to put in some initial work.

- 60 minutes of free transcription

- $300 in free credits for Google Cloud hosting

- Decent accuracy

- Multi-language support

- Only supports transcription of files in a Google Cloud Bucket

- Difficult to get started

- Lower accuracy than other similarly-priced APIs

AWS Transcribe

AWS Transcribe offers one hour free per month for the first 12 months of use.

Like Google, you must create an AWS account first if you don’t already have one. AWS also has lower accuracy compared to alternative APIs and only supports transcribing files already in an Amazon S3 bucket.

However, if you’re looking for a specific feature, like medical transcription, AWS has some options. Its Transcribe Medical API is a medical-focused ASR option that is available today.

- One hour free per month for the first 12 months of use

- Tiered pricing, based on usage, ranges from $0.02400 to $0.00780

- Integrates into existing AWS ecosystem

- Medical language transcription

- Difficult to get started from scratch

- Only supports transcribing files already in an Amazon S3 bucket

Open-Source Speech Transcription engines

An alternative to APIs and AI models, open-source Speech-to-Text libraries are completely free, with no limits on use. Some developers also see data security as a plus, since your data doesn’t have to be sent to a third party or the cloud.

There is work involved with open-source engines, so you must be comfortable putting in a lot of time and effort to get the results you want, especially if you are trying to use these libraries at scale. Open-source Speech-to-Text engines are typically less accurate than the APIs discussed above.

If you want to go the open-source route, here are some options worth exploring:

DeepSpeech is an open-source embedded Speech-to-Text engine designed to run in real-time on a range of devices, from high-powered GPUs to a Raspberry Pi 4. The DeepSpeech library uses end-to-end model architecture pioneered by Baidu.

DeepSpeech also has decent out-of-the-box accuracy for an open-source option and is easy to fine-tune and train on your own data.

- Easy to customize

- Can use it to train your own model

- Can be used on a wide range of devices

- Lack of support

- No model improvement outside of individual custom training

- Heavy lift to integrate into production-ready applications

Kaldi is a speech recognition toolkit that has been widely popular in the research community for many years.

Like DeepSpeech, Kaldi has good out-of-the-box accuracy and supports the ability to train your own models. It’s also been thoroughly tested—a lot of companies currently use Kaldi in production and have used it for a while—making more developers confident in its application.

- Can use it to train your own models

- Active user base

- Can be complex and expensive to use

- Uses a command-line interface

Flashlight ASR (formerly Wav2Letter)

Flashlight ASR, formerly Wav2Letter, is Facebook AI Research’s Automatic Speech Recognition (ASR) Toolkit. It is also written in C++ and uses the ArrayFire tensor library.

Like DeepSpeech, Flashlight ASR is decently accurate for an open-source library and is easy to work with on a small project.

- Customizable

- Easier to modify than other open-source options

- Processing speed

- Very complex to use

- No pre-trained libraries available

- Need to continuously source datasets for training and model updates, which can be difficult and costly

SpeechBrain

SpeechBrain is a PyTorch-based transcription toolkit. The platform releases open implementations of popular research works and offers a tight integration with Hugging Face for easy access.

Overall, the platform is well-defined and constantly updated, making it a straightforward tool for training and finetuning.

- Integration with Pytorch and Hugging Face

- Pre-trained models are available

- Supports a variety of tasks

- Even its pre-trained models take a lot of customization to make them usable

- Lack of extensive docs makes it not as user-friendly, except for those with extensive experience

Coqui is another deep learning toolkit for Speech-to-Text transcription. Coqui is used in over twenty languages for projects and also offers a variety of essential inference and productionization features.

The platform also releases custom-trained models and has bindings for various programming languages for easier deployment.

- Generates confidence scores for transcripts

- Large support community

- No longer updated and maintained by Coqui

Whisper by OpenAI, released in September 2022, is comparable to other current state-of-the-art open-source options.

Whisper can be used either in Python or from the command line and can also be used for multilingual translation.

Whisper has five different models of varying sizes and capabilities, depending on the use case, including v3 released in November 2023.

However, you’ll need a fairly large computing power and access to an in-house team to maintain, scale, update, and monitor the model to run Whisper at a large scale, making the total cost of ownership higher compared to other options.

As of March 2023, Whisper is also available via API. On-demand pricing starts at $0.006/minute.
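As a rough illustration of what the on-demand rate above means in practice, the following sketch estimates cost from audio duration. It assumes that billing scales linearly with duration and ignores any rounding, minimum charges, or volume discounts, none of which are described here. The function name is made up for this example.

```python
PRICE_PER_MINUTE_USD = 0.006  # on-demand rate quoted above


def transcription_cost(duration_seconds: float,
                       price_per_minute: float = PRICE_PER_MINUTE_USD) -> float:
    """Estimate the API cost in USD, assuming purely linear per-minute billing."""
    if duration_seconds < 0:
        raise ValueError("duration_seconds must be non-negative")
    return duration_seconds / 60.0 * price_per_minute


if __name__ == "__main__":
    # Print the estimated cost for a few audio volumes.
    for hours in (1, 10, 100):
        cost = transcription_cost(hours * 3600)
        print(f"{hours:>3} h of audio: ${cost:.2f}")
```

Under these assumptions, an hour of audio costs 60 × $0.006 = $0.36, which is a useful baseline when comparing against the hardware and staffing cost of self-hosting.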

- Multilingual transcription

- Can be used in Python

- Five models are available, each with different sizes and capabilities

- Need an in-house research team to maintain and update

- Costly to run

Which free Speech-to-Text API, AI model, or Open Source engine is right for your project?

The best free Speech-to-Text API, AI model, or open-source engine will depend on your project. Do you want something that is easy to use, has high accuracy, and has additional out-of-the-box features? If so, one of these APIs might be right for you:

Alternatively, you might want a completely free option with no data limits—if you don’t mind the extra work it will take to tailor a toolkit to your needs. If so, you might choose one of these open-source libraries:

Whichever you choose, make sure you find a product that can continually meet the needs of your project now and what your project may develop into in the future.



DeepSpeech 0.6: Mozilla’s Speech-to-Text Engine Gets Fast, Lean, and Ubiquitous

The Machine Learning team at Mozilla continues work on DeepSpeech, an automatic speech recognition (ASR) engine which aims to make speech recognition technology and trained models openly available to developers. DeepSpeech is a deep learning-based ASR engine with a simple API. We also provide pre-trained English models.

Our latest release, version v0.6, offers the highest quality, most feature-packed model so far. In this overview, we’ll show how DeepSpeech can transform your applications by enabling client-side, low-latency, and privacy-preserving speech recognition capabilities.

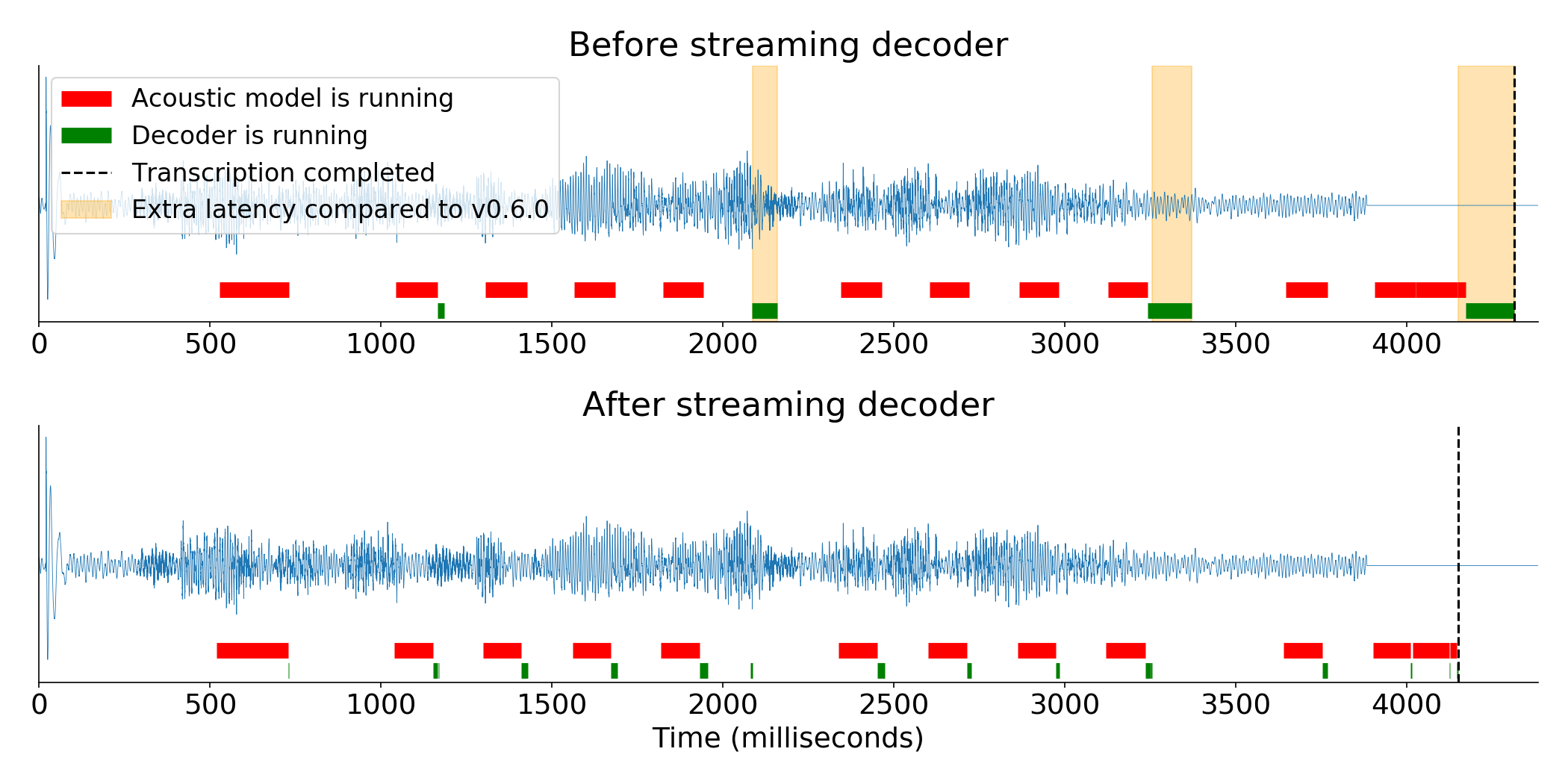

Consistent low latency

DeepSpeech v0.6 includes a host of performance optimizations, designed to make it easier for application developers to use the engine without having to fine tune their systems. Our new streaming decoder offers the largest improvement, which means DeepSpeech now offers consistent low latency and memory utilization, regardless of the length of the audio being transcribed. Application developers can obtain partial transcripts without worrying about big latency spikes.

DeepSpeech is composed of two main subsystems: an acoustic model and a decoder. The acoustic model is a deep neural network that receives audio features as inputs, and outputs character probabilities. The decoder uses a beam search algorithm to transform the character probabilities into textual transcripts that are then returned by the system.
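To make the decoder's role concrete, here is a minimal beam search over per-timestep character probabilities. It assumes a CTC-style output in which index 0 is a "blank" symbol and repeated characters are merged unless a blank separates them. That output convention, the toy alphabet, and all names are assumptions of this sketch. The actual DeepSpeech decoder is more elaborate, so treat this as an illustration of the idea rather than its implementation.

```python
from collections import defaultdict

BLANK = 0  # index of the blank symbol (an assumption of this sketch)


def ctc_beam_search(probs, alphabet, beam_width=3):
    """Decode per-timestep probabilities into text with a prefix beam search.

    probs: list of timesteps, each a list of probabilities where index 0 is
           the blank and index i > 0 corresponds to alphabet[i - 1].
    """
    # Each prefix tracks (probability of ending in blank, of ending in non-blank).
    beams = {(): (1.0, 0.0)}
    for step in probs:
        candidates = defaultdict(lambda: [0.0, 0.0])
        for prefix, (p_blank, p_non_blank) in beams.items():
            total = p_blank + p_non_blank
            for idx, p in enumerate(step):
                if idx == BLANK:
                    # A blank keeps the prefix unchanged.
                    candidates[prefix][0] += total * p
                elif prefix and prefix[-1] == idx:
                    # Same character again: merges unless a blank came between.
                    candidates[prefix][1] += p_non_blank * p
                    candidates[prefix + (idx,)][1] += p_blank * p
                else:
                    # A different character always extends the prefix.
                    candidates[prefix + (idx,)][1] += total * p
        # Keep only the most probable prefixes.
        ranked = sorted(candidates.items(),
                        key=lambda item: item[1][0] + item[1][1],
                        reverse=True)
        beams = {prefix: (pb, pnb) for prefix, (pb, pnb) in ranked[:beam_width]}
    best = max(beams.items(), key=lambda item: item[1][0] + item[1][1])[0]
    return "".join(alphabet[i - 1] for i in best)


if __name__ == "__main__":
    toy_probs = [
        [0.1, 0.8, 0.1],  # most likely 'a'
        [0.1, 0.8, 0.1],  # most likely 'a' again (merged with the previous one)
        [0.8, 0.1, 0.1],  # most likely blank
        [0.1, 0.1, 0.8],  # most likely 'b'
    ]
    print(ctc_beam_search(toy_probs, alphabet="ab"))
```

Because each step only extends and prunes the current prefixes, a search of this shape can be run incrementally as new audio arrives, which is the property a streaming decoder relies on.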

In a previous blog post, I discussed how we made the acoustic model streamable. With both systems now capable of streaming, there’s no longer any need for carefully tuned silence detection algorithms in applications. dabinat, a long-term volunteer contributor to the DeepSpeech code base, contributed this feature. Thanks!

In the following diagram, you can see the same audio file being processed in real time by DeepSpeech, before and after the decoder optimizations. The program requests an intermediate transcription roughly every second while the audio is being transcribed. The dotted black line marks when the program has received the final transcription. Then, the distance from the end of the audio signal to the dotted line represents how long a user must wait after they’ve stopped speaking until the final transcript is computed and the application is able to respond.

In this case, the latest version of DeepSpeech provides the transcription 260ms after the end of the audio, which is 73% faster than before the streaming decoder was implemented. This difference would be even larger for a longer recording. The intermediate transcript requests at seconds 2 and 3 of the audio file are also returned in a fraction of the time.

Maintaining low latency is crucial for keeping users engaged and satisfied with your application. DeepSpeech enables low-latency speech recognition services regardless of network conditions, as it can run offline, on users’ devices.

TensorFlow Lite, smaller models, faster start-up times

We have added support for TensorFlow Lite, a version of TensorFlow that’s optimized for mobile and embedded devices. This has reduced the DeepSpeech package size from 98 MB to 3.7 MB. It has reduced our English model size from 188 MB to 47 MB. We did this via post-training quantization, a technique to compress model weights after training is done. TensorFlow Lite is designed for mobile and embedded devices, but we found that for DeepSpeech it is even faster on desktop platforms. And so, we’ve made it available on Windows, macOS, and Linux as well as Raspberry Pi and Android. DeepSpeech v0.6 with TensorFlow Lite runs faster than real time on a single core of a Raspberry Pi 4.
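The drop from 188 MB to 47 MB is a factor of four, which is what one would expect if 32-bit floating point weights were stored as 8-bit integers. The sketch below shows one simple form of post-training quantization (symmetric, with a single scale per weight list) in plain Python. It is an assumption that this resembles what was applied here: the exact scheme is not described in this post and may differ. All names are illustrative.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from their int8 representation."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.42, -1.30, 0.07, 0.0, 0.98]
    quantized, scale = quantize_int8(weights)
    restored = dequantize(quantized, scale)
    for original, q, approx in zip(weights, quantized, restored):
        print(f"{original:+.4f} -> {q:+4d} -> {approx:+.4f}")
    # Storage per weight: 4 bytes as float32 versus 1 byte as int8.
    print("size ratio:", 4 / 1)
```

The rounding error per weight is at most half of `scale`, which is why quantization is typically applied after training, when the weight range is known.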

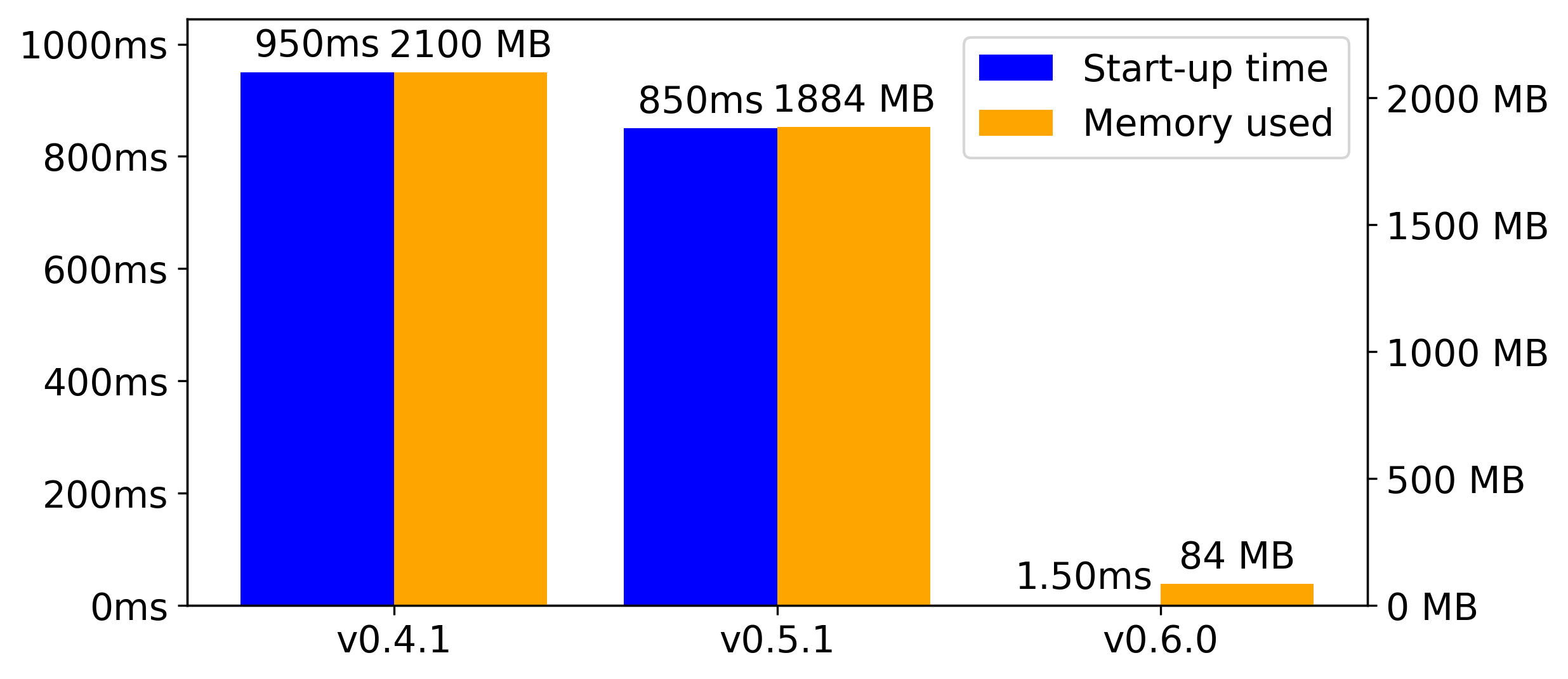

The following diagram compares the start-up time and peak memory utilization for DeepSpeech versions v0.4.1, v0.5.1, and our latest release, v0.6.0.

We now use 22 times less memory and start up over 500 times faster. Together with the optimizations we’ve applied to our language model, a complete DeepSpeech package including the inference code and a trained English model is now more than 50% smaller.

Confidence value and timing metadata in the API

In addition, the new decoder exposes timing and confidence metadata, providing new possibilities for applications. We now offer an extended set of functions in the API, not just the textual transcript. You also get metadata timing information for each character in the transcript, and a per-sentence confidence value.

The example below shows the timing metadata extracted from DeepSpeech from a sample audio file. The per-character timing returned by the API is grouped into word timings.
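Grouping per-character timing into word timings can be sketched as follows. The `CharTiming` structure here is a hypothetical stand-in for whatever the API returns, not the actual DeepSpeech metadata type, and the sketch assumes that words are separated by space characters.

```python
from dataclasses import dataclass


@dataclass
class CharTiming:
    """Hypothetical per-character metadata: the character and its start time."""
    char: str
    start_time: float  # seconds from the start of the audio


def group_into_words(chars):
    """Return a list of (word, start_time) pairs from per-character timings."""
    words = []
    letters, word_start = [], None
    for item in chars:
        if item.char == " ":
            # A space closes the current word, if there is one.
            if letters:
                words.append(("".join(letters), word_start))
                letters, word_start = [], None
        else:
            if not letters:
                word_start = item.start_time  # first letter marks the word start
            letters.append(item.char)
    if letters:
        words.append(("".join(letters), word_start))
    return words


if __name__ == "__main__":
    text = "hello world"
    timings = [CharTiming(c, round(i * 0.1, 1)) for i, c in enumerate(text)]
    for word, start in group_into_words(timings):
        print(f"{start:.1f}s  {word}")
```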

Te Hiku Media are using DeepSpeech to develop and deploy the first Te reo Māori automatic speech recognizer. They have been exploring the use of the confidence metadata in our new decoder to build a digital pronunciation helper for Te reo Māori. Recently, they received a $13 million NZD investment from New Zealand’s Strategic Science Investment Fund to build Papa Reo, a multilingual language platform. They are starting with New Zealand English and Te reo Māori.

Windows/.NET support

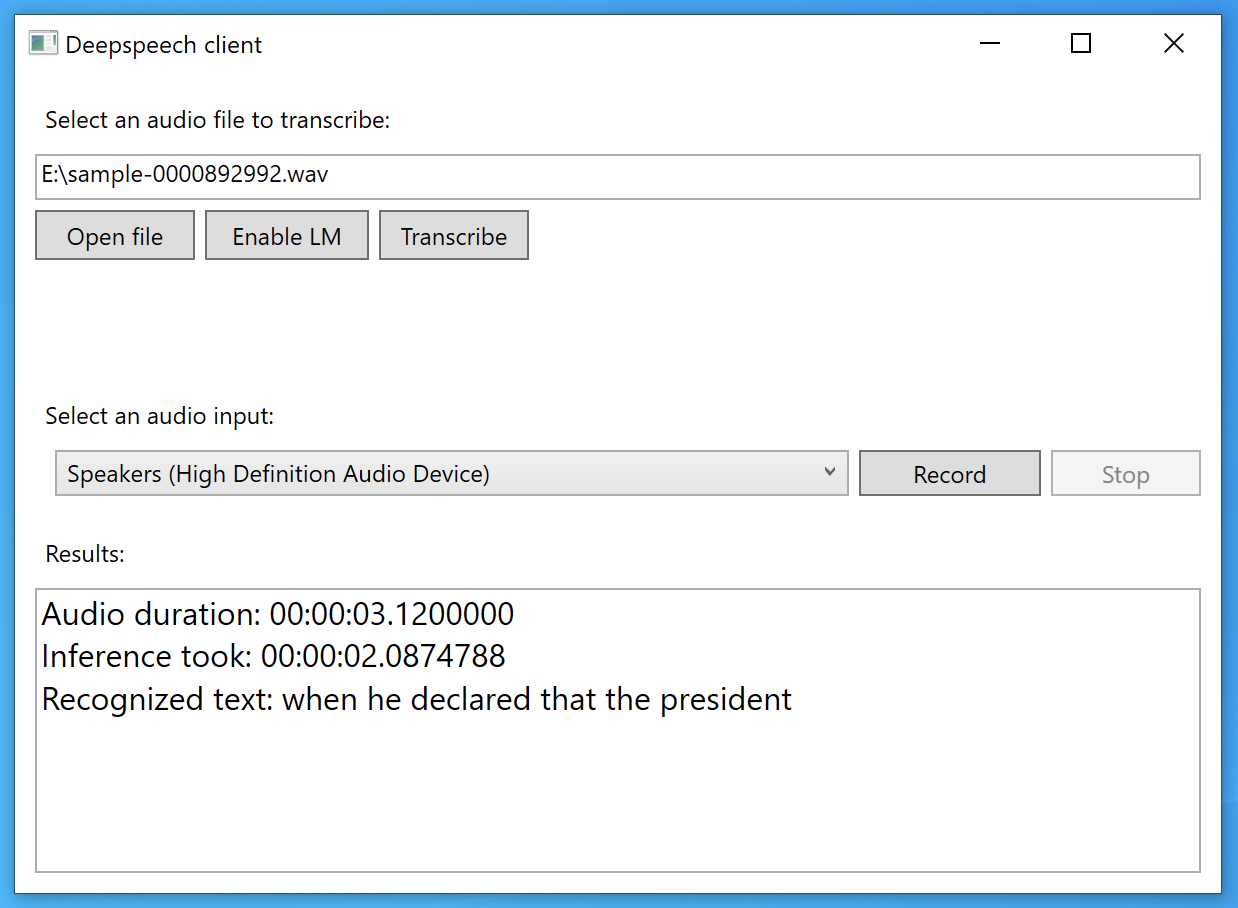

DeepSpeech v0.6 now offers packages for Windows, with .NET, Python, JavaScript, and C bindings. Windows support was a much-requested feature that was contributed by Carlos Fonseca, who also wrote the .NET bindings and examples. Thanks Carlos!

You can find more details about our Windows support by looking at the WPF example (pictured below). It uses the .NET bindings to create a small UI around DeepSpeech. Our .NET package is available in the NuGet Gallery. You can install it directly from Visual Studio.

You can see the WPF example that’s available in our repository. It contains code demonstrating transcription from an audio file, and also from a microphone or other audio input device.

Centralized documentation

We have centralized the documentation for all our language bindings in a single website, deepspeech.readthedocs.io. You can find the documentation for C, Python, .NET, Java and NodeJS/Electron packages. Given the variety of language bindings available, we wanted to make it easier to locate the correct documentation for your platform.

Improvements for training models

With the upgrade to TensorFlow 1.14, we now leverage the CuDNN RNN APIs for our training code. This change gives us around 2x faster training times, which means faster experimentation and better models.

Along with faster training, we now also support online feature augmentation, as described in Google’s SpecAugment paper. This feature was contributed by Iara Health, a Brazilian startup providing transcription services for health professionals. Iara Health has used online augmentation to improve their production DeepSpeech models.

The video above shows a customer using the Iara Health system. By using voice commands and dictation, the user instructs the program to load a template. Then, while looking at results of an MRI scan, they dictate their findings. The user can complete the report without typing. Iara Health has trained their own Brazilian Portuguese models for this specialized use case.
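Online augmentation means that training examples are perturbed on the fly each time they are read, rather than stored in augmented form. The sketch below shows the masking part of a SpecAugment-style augmentation on a spectrogram represented as a list of time frames. The representation, parameter names, and default mask widths are assumptions for illustration, time warping is left out, and this is not the code used in DeepSpeech.

```python
import random


def spec_augment(spectrogram, max_freq_mask=2, max_time_mask=3, rng=None):
    """Return a copy of the spectrogram with one frequency band and one
    time span set to zero.

    spectrogram: list of time frames, each a list of frequency-bin values.
    """
    rng = rng or random.Random()
    num_frames = len(spectrogram)
    num_bins = len(spectrogram[0])
    masked = [list(frame) for frame in spectrogram]  # leave the input untouched

    # Frequency mask: zero a random band of bins in every frame.
    freq_width = rng.randint(0, min(max_freq_mask, num_bins))
    freq_start = rng.randint(0, num_bins - freq_width)
    for frame in masked:
        for b in range(freq_start, freq_start + freq_width):
            frame[b] = 0.0

    # Time mask: zero a random run of consecutive frames.
    time_width = rng.randint(0, min(max_time_mask, num_frames))
    time_start = rng.randint(0, num_frames - time_width)
    for t in range(time_start, time_start + time_width):
        masked[t] = [0.0] * num_bins

    return masked


if __name__ == "__main__":
    features = [[1.0] * 8 for _ in range(10)]  # 10 frames, 8 frequency bins
    for frame in spec_augment(features, rng=random.Random(0)):
        print(" ".join("#" if value else "." for value in frame))
```

Calling the function with a fresh random state on every training step gives the model a slightly different view of the same utterance each time, which is the point of doing the augmentation online.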

Finally, we have also removed all remaining points where we assumed a known sample rate of 16kHz. DeepSpeech is now fully capable of training and deploying models at different sample rates. For example, you can now more easily train and use DeepSpeech models with telephony data, which is typically recorded at 8kHz.
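When models can be trained at different sample rates, it becomes worth checking that incoming audio matches the rate a given model expects. The following sketch uses only Python's standard `wave` module. The 8 kHz constant stands for a hypothetical telephony model, and the helper names are made up for this example. It does not use the DeepSpeech API.

```python
import io
import wave

MODEL_SAMPLE_RATE = 8000  # hypothetical model trained on telephony audio


def make_silent_wav(sample_rate, seconds=0.1):
    """Build a short mono 16-bit WAV file in memory, for demonstration only."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 16-bit samples
        wav.setframerate(sample_rate)
        wav.writeframes(b"\x00\x00" * int(sample_rate * seconds))
    return buffer.getvalue()


def wav_sample_rate(wav_bytes):
    """Read the sample rate from the header of a WAV file held in memory."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
        return wav.getframerate()


def matches_model_rate(wav_bytes, model_rate=MODEL_SAMPLE_RATE):
    """Report whether the audio can be fed to the model without resampling."""
    return wav_sample_rate(wav_bytes) == model_rate


if __name__ == "__main__":
    for rate in (8000, 16000):
        audio = make_silent_wav(rate)
        print(rate, "Hz matches model:", matches_model_rate(audio))
```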

Try out DeepSpeech v0.6

The DeepSpeech v0.6 release includes our speech recognition engine as well as a trained English model. We provide binaries for six platforms and, as mentioned above, have bindings to various programming languages, including Python, JavaScript, Go, Java, and .NET.

The included English model was trained on 3816 hours of transcribed audio coming from Common Voice English, LibriSpeech, Fisher, and Switchboard. The model also includes around 1700 hours of transcribed WAMU (NPR) radio shows. It achieves a 7.5% word error rate on the LibriSpeech test clean benchmark, and is faster than real time on a single core of a Raspberry Pi 4.
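For readers unfamiliar with the metric, word error rate is commonly computed as the word-level edit distance (substitutions, insertions, and deletions) between the reference transcript and the recognizer output, divided by the number of reference words. A minimal sketch follows, assuming whitespace tokenization and no text normalization. Benchmark tooling typically normalizes text first, so this is not the exact evaluation procedure behind the figure above.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref_words = reference.split()
    hyp_words = hypothesis.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")

    # previous[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    previous = list(range(len(hyp_words) + 1))
    for i, ref_word in enumerate(ref_words, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp_words, start=1):
            substitution = previous[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref_words)


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    hypothesis = "the cat sat on mat"
    print(f"WER: {word_error_rate(reference, hypothesis):.3f}")
```

A 7.5% word error rate therefore corresponds to roughly one word-level error for every 13 reference words.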

DeepSpeech v0.6 includes our best English model yet. However, most of the data used to train it is American English. For this reason, it doesn’t perform as well as it could on other English dialects and accents. A lack of publicly available voice data in other languages and dialects is part of why Common Voice was created. We want to build a future where a speaker of Welsh or Basque or Scottish English has access to speech technology with the same standard of quality as is currently available for speakers of languages with big markets like American English, German, or Mandarin.

Want to participate in Common Voice? You can donate your voice by reading small text fragments. Or validate existing recordings in 40 different languages, with more to come. Currently, Common Voice represents the world’s largest public domain transcribed voice dataset. The dataset consists of nearly 2,400 hours of voice data with 29 languages represented, including English, French, German, Spanish and Mandarin Chinese, but also for example Welsh and Kabyle.

The v0.6 release is now available on GitHub as well as on your favorite package manager. You can download our pre-trained model and start using DeepSpeech in minutes. If you’d like to know more, you can find detailed release notes in the GitHub release, and installation and usage explanations in our README. If that doesn’t cover what you’re looking for, you can also use our discussion forum.

Reuben Morais is a Senior Research Engineer working on the Machine Learning team at Mozilla. He is currently focused on bridging the gap between machine learning research and real world applications, bringing privacy preserving speech technologies to users.


19 comments

Hi, this looks really awesome. Is there somewhere an online demo of the new version?

We don’t have an online demo, as the focus has been on client-side recognition. We experimented with some options to run it on the browser but the technology wasn’t there yet.

Have you experimented with tensorflow.js or WebAssembly? Wasm has experimental support for threads and SIMD in some browsers. https://github.com/mozilla/DeepSpeech/issues/2233

We tried it a long time ago but it was still very rough, we couldn’t get anything working. I should take a look at it some time.

Would really want to see this! Thanks for all the awesome work you do!

Hey, thanks a lot for doing this! Your git repo lists cuda as the only GPU backend. AFAIK there is also an AMD version for tensorflow and it seems to work quite well ( people claim a Radeon VII being about as fast as 2080ti c.f. https://github.com/ROCmSoftwarePlatform/tensorflow-upstream/issues/362 ). Did you have the chance to test it with DeepSpeech?

We don’t explicitly target CUDA, it’s just a consequence of using TensorFlow. In addition, our native client is optimized for low latency. The use case optimized for is the software running locally on the user’s machine and transcribing a single stream of audio (likely from a microphone) while it’s being recorded. Our model is already faster than real time on CPUs, so there’s no need to do extensive GPU optimization. We build and publish GPU packages so people can experiment and so we don’t accidentally break GPU support, but there’s no major optimization push happening there.

Hello Reuben Morais. Tell me where you can read in detail about the principles of recognition on which Deep Speech is based. Maybe there is a video where it is told in detail in steps. For example, I am developing my own project for voice recognition on a small microcontroller with 16kB RAM – ERS VCRS. And in my video everything is shown from beginning to end.

DeepSpeech is not applicable to that hardware, the model is too big for 16kB of RAM. You can read more about it here: https://arxiv.org/abs/1412.5567

When you speak about client-side capabilities it’s not yet runnable client side on javascript in webbrowsers, right?

We’re working towards Firefox integration, but nothing concrete to share yet. People have deployed it client-side interacting with a web front-end, but currently it requires an additional component running on the machine.

hi, i’m really glad to see a graphical interface being built so also less technical users can start using deepSpeech (as opposed to google and apple products etc). however, even after 3 hours of googling and trying out, i couldn’t understand how to make the DeepSpeechWPF run. i found this code https://deepspeech.readthedocs.io/en/v0.6.0/DotNet-contrib-examples.html and this repo https://github.com/mozilla/DeepSpeech/tree/v0.6.0/examples/net_framework/DeepSpeechWPF but PLEASE, publish some instructions that are understandable to less technical users, as i am assuming that we are who need the graphical interface most. best wishes ida

Hello, The WPF example is not meant for less technical users, it’s meant for Windows developers to have an example that uses frameworks they’re familiar with. I don’t know of any graphical interfaces for DeepSpeech that target less technical users. It’d be good to have something like that, I agree.

My primary interest in DeepSpeech is to use it in an open source home automation system that doesn’t require my voice data to leave my local network / create potential security issues. Have you done anything with DeepSpeech to integrate it into programs like MQTT? Since various open source solutions can easily use MQTT as a gateway into multiple other systems, I am wondering if there is any intentions of trying to create a simple interface between DeepSpeech and MQTT.

I don’t know of any MQTT integration.

The integration may not be all that hard if an intermediate application was written to take the output of DeepSpeech and piped it into MQTT. Might even be able to work that one out myself. Is there any way to have DeepSpeech listen to you without the need for converting it to an audio file first? Along the lines of Alexa with a key word to trigger it. Audio files just add another lay of complexity on the input side that make using DeepSpeech less useful than some of the cloud solutions.

DeepSpeech has no dependency on audio files. The API receives audio samples as input, they can come from a file or a microphone or a network stream, we don’t care.


Speech to text

An AI Speech feature that accurately transcribes spoken audio to text.

Make spoken audio actionable

Quickly and accurately transcribe audio to text in more than 100 languages and variants. Customize models to enhance accuracy for domain-specific terminology. Get more value from spoken audio by enabling search or analytics on transcribed text or facilitating action—all in your preferred programming language.

High-quality transcription

Get accurate audio to text transcriptions with state-of-the-art speech recognition.

Customizable models

Add specific words to your base vocabulary or build your own speech-to-text models.

Flexible deployment

Run Speech to Text anywhere—in the cloud or at the edge in containers.

Production-ready

Access the same robust technology that powers speech recognition across Microsoft products.

Accurately transcribe speech from various sources

Convert audio to text from a range of sources, including microphones, audio files, and blob storage. Use speaker diarisation to determine who said what and when. Get readable transcripts with automatic formatting and punctuation.

Customize speech models to your needs

Tailor your speech models to understand organization- and industry-specific terminology. Overcome speech recognition barriers such as background noise, accents, or unique vocabulary. Customize your models by uploading audio data and transcripts. Automatically generate custom models using Office 365 data to optimize speech recognition accuracy for your organization.

Deploy anywhere

Run Speech to Text wherever your data resides. Build speech applications that are optimized for robust cloud capabilities and on-premises using containers.


Comprehensive privacy and security

AI Speech, part of Azure AI Services, is certified by SOC, FedRAMP, PCI DSS, HIPAA, HITECH, and ISO.

View and delete your custom speech data and models at any time. Your data is encrypted while it's in storage.

Your data remains yours. Your audio input and transcription data aren't logged during audio processing.

Backed by Azure infrastructure, AI Speech offers enterprise-grade security, availability, compliance, and manageability.

Comprehensive security and compliance, built in

Microsoft invests more than $1 billion annually on cybersecurity research and development.

We employ more than 3,500 security experts who are dedicated to data security and privacy.

Azure has more certifications than any other cloud provider. View the comprehensive list.

Flexible pricing gives you the control you need

With Speech to Text, pay as you go based on the number of hours of audio you transcribe, with no upfront costs.

Get started with an Azure free account

After your credit, move to pay as you go to keep building with the same free services. Pay only if you use more than your free monthly amounts.

Documentation and resources

Get started.

Browse the documentation

Create an AI Speech service with the Microsoft Learn course

Explore code samples

Check out our sample code

See customization resources

Explore and customize your voice-to-text solution with Speech Studio. No code required.

Frequently asked questions about Speech to Text

What is Speech to Text?

It is a feature within the Speech service that accurately and quickly transcribes audio to text.

What are Azure AI Services?

AI Services are a collection of customizable, prebuilt AI models that can be used to add AI to applications. There are a variety of domains, including Speech, Decision, Language, and Vision. Speech to Text is one feature within the Speech service. Other Speech related features include Text to Speech, Speech Translation, and Speaker Recognition. An example of a Decision service is Personalizer, which allows you to deliver personalized, relevant experiences. Examples of AI Languages include Language Understanding, Text Analytics for natural language processing, QnA Maker for FAQ experiences, and Translator for language translation.

Start building with AI Services

Top 11 Open Source Speech Recognition/Speech-to-Text Systems

Last Updated on: May 15, 2024

A speech-to-text (STT) system, sometimes called automatic speech recognition (ASR), is what its name implies: a way of transforming spoken words into textual data that can be used later for any purpose.

Speech recognition technology is extremely useful. It can be used for many applications, such as automating transcription, writing books and texts by voice alone, and enabling complex analysis of the generated text files.

In the past, speech-to-text technology was dominated by proprietary software and libraries. Open source speech recognition alternatives didn’t exist or existed with extreme limitations and no community around them.

This is changing: today there are a lot of open source speech-to-text tools and libraries that you can use right now.

Table of Contents:

- What is an open source speech recognition library

- What are the benefits of using open source speech recognition

- 1. Project DeepSpeech

- 4. Flashlight ASR (formerly Wav2Letter++)

- 5. PaddleSpeech (formerly DeepSpeech2)

- 6. OpenSeq2Seq

- 10. Whisper

- 11. StyleTTS2

- What is the best open source speech recognition system

What is a Speech Recognition Library/System?

It is the software engine responsible for transforming voice into text.

It is not meant to be used by end users. Developers first have to adapt these libraries and use them to create computer programs that bring speech recognition to users.

Some of them come with pre-trained models that recognize speech in one language and generate the corresponding text, while others provide only the engine, and developers have to train the models themselves. This can be a complex task, as it requires a deep understanding of machine learning and data handling.

You can think of them as the underlying engines of speech recognition programs.

If you are an ordinary user looking for speech recognition, then none of these will be suitable for you, as they are meant for development use only.

What is an Open Source Speech Recognition Library?

The difference between proprietary and open source speech recognition is that an open source library must be licensed under one of the known open source licenses, such as GPL, MIT and others.

Microsoft and IBM, for example, have their own speech recognition toolkits that they offer to developers, but they are not open source, simply because they are not licensed under one of the open source licenses.

What are the Benefits of Using Open Source Speech Recognition?

Mainly, you get few or no restrictions on commercial usage of your application, as open source speech recognition libraries allow you to use them for whatever use case you may need.

Also, most – if not all – open source speech recognition toolkits in the market are also free of charge, saving you tons of money instead of using the proprietary ones.

The benefits of using open source speech recognition toolkits are indeed too many to be summarized in one article.

Top Open Source Speech Recognition Systems

In this article we’ll look at several of them, their pros and cons, and when each should be used.

1. Project DeepSpeech

This project is made by Mozilla, the organization behind the Firefox browser.

It’s a 100% free and open source speech-to-text library that uses machine learning, built on the TensorFlow framework. In other words, you can use it to train your own models to improve the underlying speech-to-text technology and get better results, or even to bring it to other languages if you want.

You can also easily integrate it into other machine learning projects you have on TensorFlow. Sadly, the project currently appears to support only English by default. It also has bindings for several programming languages, such as Python (3.6).

However, after the recent Mozilla restructure, the future of the project is unknown, as it may be shut down (or not) depending on what Mozilla decides.

You may visit its Project DeepSpeech homepage to learn more.

2. Kaldi

Kaldi is open source speech recognition software written in C++ and released under the Apache public license.

It works on Windows, macOS and Linux. Its development started back in 2009. Kaldi’s main advantage over some other speech recognition software is that it’s extendable and modular: the community provides tons of third-party modules that you can use for your tasks.

Kaldi also supports deep neural networks, and offers excellent documentation on its website. While the code is mainly written in C++, it’s “wrapped” by Bash and Python scripts.

So if you are looking just for the basic usage of converting speech to text, then you’ll find it easy to accomplish that via either Python or Bash. You may also wish to check Kaldi Active Grammar, which is a pre-built Python engine with trained English models ready for use.

Learn more about Kaldi speech recognition from its official website.

3. Julius

Julius is probably one of the oldest speech recognition programs, as its development started in 1991 at the University of Kyoto, and it became an independent project in 2005. A lot of open source applications use it as their engine (think of KDE Simon).

Julius’s main features include real-time STT processing, low memory usage (less than 64MB for 20,000 words), N-best/word-graph output, the ability to work as a server unit and a lot more.

This software was mainly built for academic and research purposes. It is written in C, and works on Linux, Windows, macOS and even Android (on smartphones). Currently it supports English and Japanese only.

The software is probably available from your Linux distribution’s repository; just search for the julius package in your package manager.

You can access the Julius source code from GitHub.

4. Flashlight ASR (formerly Wav2Letter++)

If you are looking for something modern, then this one is worth considering.

Flashlight ASR is open source speech recognition software released by Facebook’s AI Research team. The code is written in C++ and released under the MIT license.

Facebook described its library as “the fastest state-of-the-art speech recognition system available” as of 2018.

The concepts on which this tool is built make it optimized for performance by default. Facebook’s machine learning library Flashlight is used as the underlying core of Flashlight ASR. The software requires that you first train a model for the language you want before you can run speech recognition.

No pre-built support for any language (including English) is available. It’s just a machine-learning-driven tool to convert speech to text.

You can learn more about it from the following link.

5. PaddleSpeech (formerly DeepSpeech2)

Researchers at the Chinese giant Baidu are also working on their own speech recognition toolkit, called PaddleSpeech.

The speech toolkit is built on the PaddlePaddle deep learning framework, and provides many features such as:

- Speech-to-Text support.

- Text-to-Speech support.

- State-of-the-art performance in audio transcription; it even won the NAACL2022 Best Demo Award.

- Support for many large language models (LLMs), mainly for English and Chinese languages.

The engine can be trained on any model and for any language you desire.

PaddleSpeech’s source code is written in Python, so it should be easy for you to get familiar with it if that’s the language you use.

6. OpenSeq2Seq

OpenSeq2Seq was developed by NVIDIA for training sequence-to-sequence models.

While it can be used for way more than just speech recognition, it is a good engine nonetheless for this use case. You can either train your own models for it, or use the models shipped by default. It supports parallel processing using multiple GPUs or multiple CPUs, along with strong support for NVIDIA technologies like CUDA and NVIDIA’s graphics cards.

As of 2021 the project is archived; it can still be used, but it is no longer under active development.

Check its speech recognition documentation page for more information, or visit its official source code page.

7. Vosk

Vosk is one of the newest open source speech recognition systems, as its development only started in 2020.

Unlike other systems in this list, Vosk is quite ready to use after installation, as it supports 10 languages (English, German, French, Turkish…) with portable 50MB-sized models already available for users (there are larger models up to 1.4GB if you need them).

It also works on Raspberry Pi, iOS and Android devices, and provides a streaming API which allows you to connect to it to do your speech recognition tasks online. Vosk has bindings for Java, Python, JavaScript, C# and NodeJS.

Learn more about Vosk from its official website.

8. Athena

Athena is an end-to-end automatic speech recognition (ASR) engine.

It is written in Python, licensed under the Apache 2.0 license, and built on top of TensorFlow. It supports unsupervised pre-training and multi-GPU training on the same or multiple machines.

A large model is available for both English and Chinese.

Visit the Athena source code.

9. ESPnet

ESPnet is written in Python on top of PyTorch.

It also supports end-to-end ASR. It follows the Kaldi style for data processing, so it is easier to migrate from Kaldi to ESPnet. The main selling point of ESPnet is the state-of-the-art performance it achieves in many benchmarks, and its support for other speech and language processing tasks such as text-to-speech (TTS), machine translation (MT) and speech translation (ST).

It is licensed under the Apache 2.0 license.

You can access ESPnet from the following link.

10. Whisper

Whisper is the newest speech recognition toolkit in the family, developed by OpenAI (the same company behind ChatGPT).

The main selling point of Whisper is that it does not specialize in training datasets for specific languages only; instead, it can be used with any suitable model and for any language. It was trained on 680 thousand hours of audio, one third of which was non-English.

It supports speech-to-text and speech translation. The company claims that its toolkit makes 50% fewer errors than other toolkits on the market.

Learn more about Whisper from its official website.

11. StyleTTS2

StyleTTS2 is the newest library on the list, released in mid-November 2023. Note that it is a text-to-speech (TTS) model rather than a speech recognizer. It employs diffusion techniques together with training on large speech language models (SLMs) in order to achieve more advanced results than other models.

The makers of the model published it along with a research paper, where they make the following claim about their work:

This work achieves the first human-level TTS synthesis on both single and multispeaker datasets, showcasing the potential of style diffusion and adversarial training with large SLMs.

It is written in Python, and has some Jupyter notebooks shipped with it to demonstrate how to use it. The model is licensed under the MIT license.

There is an online demo where you can see different benchmarks of the model: https://styletts2.github.io/

What is the Best Open Source Speech Recognition System?

If you are building a small application that you want to be portable everywhere, then Vosk is your best option, as it is written in Python and works on iOS, Android and Raspberry Pi too, and supports up to 10 languages. It also provides a large model if you need it, and a smaller one for portable applications.

If, however, you want to train and build your own models for much more complex tasks, then any of PaddleSpeech, Whisper and Athena should be more than enough for your needs, as they are the most modern state-of-the-art toolkits.

As for Mozilla’s DeepSpeech, it lags behind its competitors in this list in features, and isn’t cited as often in academic speech recognition research as the others. Its future is also uncertain after the recent Mozilla restructure, so one would want to stay away from it for now.

Traditionally, Julius and Kaldi are also very much cited in the academic literature.

Alternatively, you may try these open source speech recognition libraries to see how they work for you in your use case.

The speech recognition category is starting to become mainly driven by open source technologies, a situation that seemed to be very far-fetched a few years ago.

Current open source speech recognition software is modern and cutting-edge, and you can use it for most purposes instead of depending on Microsoft’s or IBM’s toolkits.

If you have any other recommendations for this list, or comments in general, we’d love to hear them below!

With a B.Sc and M.Sc in Computer Science & Engineering, Hanny brings more than a decade of experience with Linux and open-source software. He has developed Linux distributions, desktop programs, web applications and much more, all of which attracted tens of thousands of users over many years. He also maintains other open-source platforms to promote open source in his local communities.

Hanny is the founder of FOSS Post.

Originally published on August 23, 2020, Last Updated on May 15, 2024 by M.Hanny Sabbagh

The 5 Best Open Source Speech Recognition Engines & APIs

Video content is taking over many spaces online – in fact, more than 80% of online traffic today consists of video. Video is a tool for brands to showcase their latest and greatest products, shoot amateur creators to the tops of the charts, and even help people connect with friends and family all over the world.

With this much video out in the world, it becomes more and more important to ensure that you’re meeting all accessibility requirements and making sure that your video can be viewed and understood by all – even if they’re not able to listen to the sound included within your content.

In this article, we provide a breakdown of five of the best free-to-use open source speech recognition services along with details on how you can get started.

1. Mozilla DeepSpeech

DeepSpeech is a GitHub project created by Mozilla, the open source organization behind the Firefox web browser. Its model is based on the Baidu Deep Speech research paper and is implemented using TensorFlow (which we’ll talk about later).

Pros of Mozilla DeepSpeech

- They provide a pre-trained English model, which means you can use it without sourcing your own data. However, if you do have your own data, you can train your own model, or take their pre-trained model and use transfer learning to fine tune it on your own data.

- DeepSpeech is a code-native solution, not an API. That means you can tweak it according to your own specifications, providing the highest level of customization.

- DeepSpeech also provides wrappers into the model in a number of different programming languages, including Python, Java, JavaScript, C, and the .NET framework. It can also be compiled onto a Raspberry Pi device, which is great if you’re looking to target that platform for applications.

Cons of Mozilla DeepSpeech

- Due to some layoffs and changes in organization priorities, Mozilla is winding down development on DeepSpeech and shifting its focus towards applications of the tech. This could mean much less support when bugs arise in the software and issues need to be addressed.

- The fact that DeepSpeech is provided solely as a Git repo means that it’s very bare bones. In order to integrate it into a larger application, your company’s developers would need to build an API around its inference methods and generate other pieces of utility code for handling various aspects of interfacing with the model.
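To give a sense of the glue code this involves, here is a minimal sketch of an HTTP wrapper around an inference call, using only the Python standard library. `fake_transcribe` is a hypothetical stand-in for a real model's inference method, and the `/transcribe` route and port are arbitrary choices for the example, not part of DeepSpeech.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def fake_transcribe(audio_bytes: bytes) -> str:
    """Hypothetical stand-in for a real model's inference call."""
    return f"<transcript of {len(audio_bytes)} bytes of audio>"


def handle_audio(audio_bytes: bytes, transcribe=fake_transcribe):
    """Validate the upload and return (HTTP status, JSON-serializable body)."""
    if not audio_bytes:
        return 400, {"error": "empty request body"}
    return 200, {"text": transcribe(audio_bytes)}


class TranscriptionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Only one route is served in this sketch.
        if self.path != "/transcribe":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_audio(self.rfile.read(length))
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8000) -> None:
    """Start the server on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), TranscriptionHandler).serve_forever()
```

Keeping the request logic in `handle_audio`, separate from the HTTP handler, makes it possible to test it without starting a server. A production service would also need authentication, audio format validation, batching, and error handling around the model call.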

2. Wav2Letter++

The Wav2Letter++ speech engine was created in December 2018 by the team at Facebook AI Research. They advertise it as the first speech recognition engine written entirely in C++ and among the fastest ever.

Pros of Wav2Letter++

- It is the first ASR system which utilizes only convolutional layers, not recurrent ones. Recurrent layers are common to nearly every modern speech recognition engine as they are particularly useful for language modeling and other tasks which contain long-range dependencies.

- Within Wav2Letter++ the code allows you to either train your own model or use one of their pretrained models. They also have recipes for matching results from various research papers, so you can mix and match components in order to fit your desired results and application.
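One way to see how a convolution-only model can still cover long stretches of audio is to compute the receptive field of a stack of 1D convolutions, that is, how many input frames influence a single output frame. The sketch below uses the standard receptive-field recurrence; the layer shapes in the example are hypothetical and are not the actual Wav2Letter++ architecture.

```python
def receptive_field(layers):
    """Return how many input frames one output frame can see.

    `layers` is a list of (kernel_size, stride, dilation) tuples, applied in order.
    """
    rf = 1    # frames seen by a single output position so far
    jump = 1  # distance, in input frames, between neighbouring positions
    for kernel_size, stride, dilation in layers:
        rf += (kernel_size - 1) * dilation * jump
        jump *= stride
    return rf


# Hypothetical stack: one strided layer followed by nine ordinary layers,
# all with a kernel that spans 13 frames.
example_stack = [(13, 2, 1)] + [(13, 1, 1)] * 9
frames = receptive_field(example_stack)
```

Assuming a 10 ms frame shift (a common choice, not something stated in the article), the receptive field in seconds is `frames * 0.010`. Each added layer widens the context, which is how stacked convolutions can stand in for recurrence over moderately long ranges.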

Cons of Wav2Letter++

- The downsides of Wav2Letter++ are much the same as with DeepSpeech. While you get a very fast and powerful model, this power comes with a lot of complexity. You’ll need to have deep coding and infrastructure knowledge in order to be able to get things set up and working on your system.

3. Kaldi

Kaldi is an open-source speech recognition engine written in C++, which is a bit older and more mature than some of the others in this article. This maturity has both benefits and drawbacks.

Pros of Kaldi

- Kaldi is not primarily focused on deep learning, so you won’t see many of those models here. It does have a few, but deep learning is not the project’s bread and butter. Instead, it focuses on classical speech recognition models such as hidden Markov models (HMMs), finite-state transducers (FSTs), and Gaussian mixture models (GMMs).

- Kaldi methods are very lightweight, fast, and portable.

- The code has been around a long time, so you can be assured that it’s very thoroughly tested and reliable.

- The project has good support, including helpful forums, mailing lists, and GitHub issue trackers which are frequented by the project developers.

- Kaldi can be compiled to work on some alternative devices such as Android.
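For readers unfamiliar with the classical models mentioned above, the following toy sketch shows Viterbi decoding over a two-state HMM, the basic idea behind finding the most likely hidden state sequence for a series of acoustic observations. It is an illustration only and is not Kaldi's decoder, which works on weighted finite-state transducers; the states, observation symbols, and probabilities are invented for the example.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (probability, path) of the most likely state sequence."""
    # best[s] = (probability of the best path ending in state s, that path)
    best = {s: (start_p[s] * emit_p[s][observations[0]], [s]) for s in states}
    for obs in observations[1:]:
        new_best = {}
        for s in states:
            # Pick the predecessor state that maximizes the path probability.
            prob, path = max(
                ((best[prev][0] * trans_p[prev][s], best[prev][1]) for prev in states),
                key=lambda item: item[0],
            )
            new_best[s] = (prob * emit_p[s][obs], path + [s])
        best = new_best
    return max(best.values(), key=lambda item: item[0])


# Toy model: is each frame silence or speech, given how loud it is?
states = ["sil", "speech"]
start_p = {"sil": 0.8, "speech": 0.2}
trans_p = {
    "sil": {"sil": 0.7, "speech": 0.3},
    "speech": {"sil": 0.2, "speech": 0.8},
}
emit_p = {
    "sil": {"quiet": 0.9, "loud": 0.1},
    "speech": {"quiet": 0.3, "loud": 0.7},
}
probability, path = viterbi(["quiet", "loud", "loud", "quiet"], states, start_p, trans_p, emit_p)
```

Real systems work with log probabilities to avoid numerical underflow on long utterances and use GMMs or neural networks to produce the emission scores.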

Cons of Kaldi

- Because Kaldi is not focused on deep learning, you are unlikely to get the same accuracy that you would using a deep learning method.

4. Open Seq2Seq

Open Seq2Seq is an open-source project created at Nvidia. It is a bit more general in that it focuses on any type of seq2seq model, including those used for tasks such as machine translation, language modeling, and image classification. However, it also has a robust subset of models dedicated to speech recognition.

The project is somewhat more up-to-date than Mozilla’s DeepSpeech in that it supports three different speech recognition models: Jasper DR 10×5, Baidu’s DeepSpeech2, and Facebook’s Wav2Letter++.

Pros of Seq2Seq

- The best of these models, Jasper DR 10×5, has a word error rate of just 3.61%.

- Note that the models do take a fair amount of computational power to train. They estimate that training DeepSpeech2 should take about a day using a GPU with 12 GB of memory.
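Word error rate (WER), the metric quoted above, is the number of word substitutions, deletions, and insertions needed to turn the system's output into the reference transcript, divided by the number of words in the reference. A minimal sketch of the computation, using whitespace tokenization and no text normalization (both simplifying assumptions):

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference words processed so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution or exact match
            ))
        prev = curr
    return prev[-1] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over six words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
```

Published WER figures usually normalize case and punctuation before scoring, so numbers from different sources are only comparable when the test set and normalization match. Note that WER can exceed 100% when the output contains many inserted words.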

Cons of Seq2Seq

- One negative with Open Seq2Seq is that the project has been marked as archived on GitHub, meaning that development has most likely stopped. Any errors that arise in the code will therefore be up to users to solve individually, as bug fixes are not being merged into the main codebase.

5. Tensorflow ASR

Tensorflow ASR is a speech recognition project on GitHub that implements a variety of speech recognition models using TensorFlow. While it is not as well known as the other projects, it seems more up to date, with its most recent release as recent as May 2021.

The author describes it as “almost state of the art” speech recognition and implements many recent models including DeepSpeech 2, Conformer Transducer, Context Net, and Jasper. The models can be deployed using TFLite and they will likely integrate nicely into any existing machine-learning system which uses Tensorflow. It also contains pretrained models for a couple of foreign languages including Vietnamese and German.

What Makes Rev AI Different

While open-source speech recognition systems give you access to great models for free, they also undeniably make things complicated. This is simply because speech recognition is complicated. Even when using an open-source pre-trained model, it takes a lot of work to get the model fine-tuned on your data, hosted on a server, and to write APIs to interface with it. Then you have to worry about keeping the system running smoothly and handling bugs and crashes when they inevitably do occur.

The great thing about using a paid provider such as Rev is that they handle all those headaches for you. You get a system with guaranteed 99.9+% uptime with a callable API that you can easily hook your product into. In the unlikely event that something does go wrong, you also get direct access to Rev’s development team and fantastic client support.

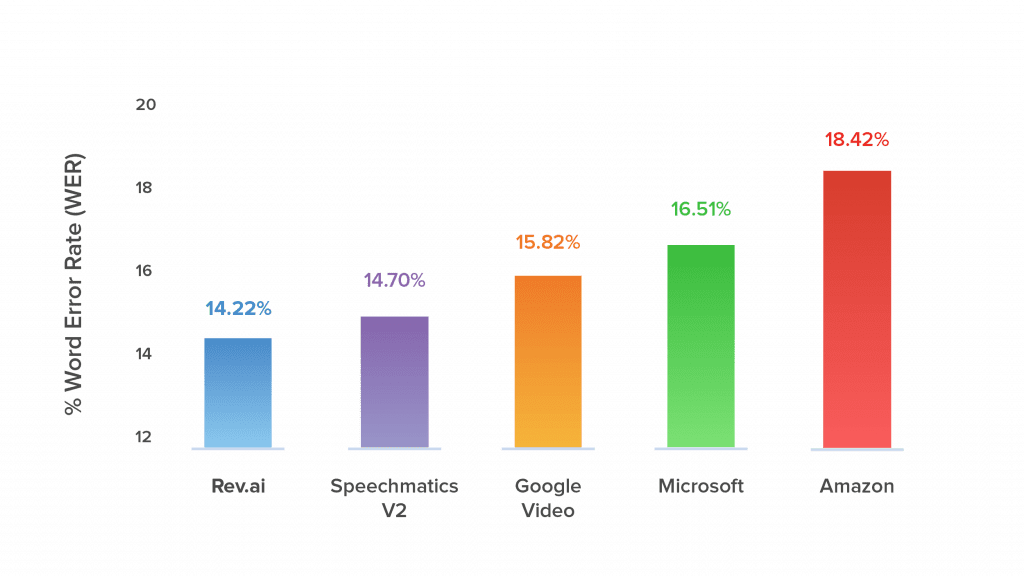

Another advantage Rev claims is accuracy. According to Rev, its system has been benchmarked against those of the other major industry players such as Amazon, Google, and Microsoft, and it achieved the lowest average word error rate across multiple real-world datasets.

Finally, when you use a third-party solution such as Rev, you can get up and running immediately. You don’t have to wait around to hire a development team, to train models, or to get everything hosted on a server. Using a few simple API calls you can hook your frontend right into Rev’s ASR system and be ready to go that very same day. This ultimately saves you money and likely more than recoups the low cost that Rev charges.

An opensource text-to-speech (TTS) voice building tool

google/voice-builder

Disclaimer: This is not an official Google product.

Voice Builder

Voice Builder is an open-source text-to-speech (TTS) voice building tool that focuses on simplicity, flexibility, and collaboration. Our tool allows anyone with basic computer skills to run voice training experiments and listen to the resulting synthesized voice.

We hope that this tool will reduce the barrier for creating new voices and accelerate TTS research by making experimentation faster and interdisciplinary collaboration easier. We believe that our tool can help improve TTS research, especially for low-resourced languages, where more experimentation is often needed to get the most out of the limited data.

Publication - https://ai.google/research/pubs/pub46977

Prerequisites